The LDP subsystems and their relationships to other subsystems are illustrated in Figure 49. This illustration shows the interaction of the LDP subsystem with other subsystems, including memory management, label management, service management, SNMP, interface management, and RTM. In addition, debugging capabilities are provided through the logger.

The router uses a single consistent interface to configure all protocols and services. CLI commands are translated to SNMP requests and are handled through an agent-LDP interface. LDP can be instantiated or deleted through SNMP. Also, LDP targeted sessions can be set up to specific endpoints. Targeted-session parameters are configurable.

LDP activity in the operating system is limited to service-related signaling. Therefore, the configurable parameters are restricted to system-wide parameters, such as hello and keepalive timeouts.

config>router>ldp>interface-parameters>interface>enable-bfd [ipv4] [ipv6]

config>router>interface>bfd

The BGP TTL Security Hack (BTSH) was originally designed to protect the BGP infrastructure from CPU utilization-based attacks. It relies on the fact that the vast majority of ISP eBGP peerings are established between adjacent routers. Since TTL spoofing is considered nearly impossible, a mechanism based on an expected TTL value can provide a simple and reasonably robust defense against infrastructure attacks based on forged BGP packets.

The TSH implementation supports the ability to configure TTL security per BGP/LDP peer and evaluate (in hardware) the incoming TTL value against the configured TTL value. If the incoming TTL value is less than the configured TTL value, the packets are discarded and a log is generated.
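As a minimal sketch of the comparison described above, the check reduces to discarding a packet whose received TTL is below the configured value and logging the event. The function and logger names are hypothetical, and the real check is performed in hardware, not in software like this.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ttl-security")  # hypothetical logger name

def accept_packet(peer: str, received_ttl: int, configured_ttl: int) -> bool:
    """Return True if the packet passes the TTL security check.

    Packets whose TTL is lower than the configured value are discarded
    and a log entry is generated.
    """
    if received_ttl < configured_ttl:
        log.info("TTL security: discarding packet from %s (TTL %d < %d)",
                 peer, received_ttl, configured_ttl)
        return False
    return True

# An adjacent peer sending with TTL 255 is received with TTL 255; a packet
# that crossed additional hops arrives with a lower TTL.
print(accept_packet("192.0.2.1", 255, 255))  # True
print(accept_packet("192.0.2.1", 250, 255))  # False (and a log line is emitted)
```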

Note, however, that the fec-originate command has been extended to accept an interface name, since an unnumbered interface does not have an IP address of its own. The user can also specify the interface name for numbered interfaces.

The fec-originate command is supported when the next-hop is over an unnumbered interface.

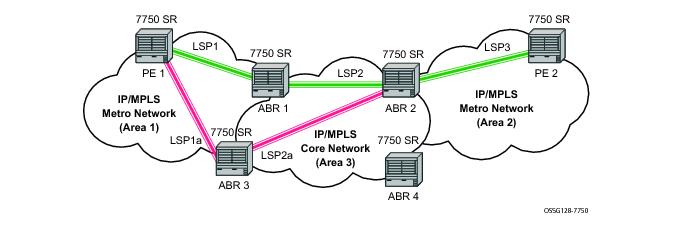

The network displayed in Figure 51 consists of two metro areas, Area 1 and Area 2, and a core area, Area 3. Each area uses TE LSPs to provide connectivity between the edge routers. To enable services between PE1 and PE2 across the three areas, LSP1, LSP2, and LSP3 are set up using RSVP-TE. Six LSPs are actually required for bidirectional operation, but each bidirectional LSP is referred to by a single name, for example, LSP1. A targeted LDP (T-LDP) session is associated with each of these bidirectional LSP tunnels. That is, a T-LDP adjacency is created between PE1 and ABR1 and is associated with LSP1 at each end. The same is done for the LSP tunnel between ABR1 and ABR2, and finally between ABR2 and PE2. The loopback address of each of these routers is advertised using T-LDP. Similarly, backup bidirectional LDP-over-RSVP tunnels, LSP1a and LSP2a, are configured by way of ABR3.

The design in Figure 51 allows a service provider to build and expand each area independently without requiring a full mesh of RSVP LSPs between PEs across the three areas.

To participate in a VPRN service, PE1 and PE2 perform the autobind to LDP. The LDP label, which represents the target PE loopback address, is used below the RSVP LSP label. Therefore, a three-label stack is required: the RSVP LSP label on top, the LDP label below it, and the VPRN service label at the bottom.
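The ordering of the stack that PE1 pushes can be pictured with a small sketch. The function name and label values are hypothetical; only the ordering (RSVP LSP label outermost, LDP label for the remote PE loopback in the middle, VPRN service label innermost) reflects the description above.

```python
from typing import List

def pe1_label_stack(service_label: int, ldp_label: int, rsvp_label: int) -> List[int]:
    """Build PE1's label stack for a VPRN over LDP-over-RSVP, top of stack first.

    The RSVP LSP label (LSP1 toward ABR1) is outermost, the T-LDP label for
    the remote PE loopback sits below it, and the VPRN service label is innermost.
    """
    return [rsvp_label, ldp_label, service_label]

# Hypothetical label values, for illustration only.
stack = pe1_label_stack(service_label=131070, ldp_label=131071, rsvp_label=131069)
print(len(stack), stack)  # 3 [131069, 131071, 131070]
```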

This implementation supports a variation of the application in Figure 51, in which Area 1 is an LDP area. In that case, PE1 pushes a two-label stack, while ABR1 swaps the LDP label and pushes the RSVP label, as illustrated in Figure 52. LDP-over-RSVP tunnels can also be used as IGP shortcuts.

In Figure 53, assume that the user wants to use LDP over RSVP between router A and destination “Dest”. First, either OSPF or IS-IS performs an SPF calculation, resulting in an SPF tree. This tree specifies the lowest possible cost to the destination. In the example shown, the destination “Dest” is reachable at the lowest cost through router X. The SPF tree has the following path: A>C>E>G>X.
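A minimal Dijkstra sketch of the SPF step follows. The topology and link costs are invented for illustration and are not taken from Figure 53; they are simply chosen so that the lowest-cost path from A to Dest runs A>C>E>G>X, matching the example.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, Dict[str, int]]

def spf(graph: Graph, source: str, dest: str) -> Tuple[int, List[str]]:
    """Return (cost, path) of the lowest-cost path using Dijkstra's algorithm."""
    dist = {source: 0}
    prev: Dict[str, str] = {}
    heap = [(0, source)]
    while heap:
        cost, node = heapq.heappop(heap)
        if cost > dist.get(node, float("inf")):
            continue  # stale heap entry
        if node == dest:
            break
        for nbr, link_cost in graph.get(node, {}).items():
            new_cost = cost + link_cost
            if new_cost < dist.get(nbr, float("inf")):
                dist[nbr] = new_cost
                prev[nbr] = node
                heapq.heappush(heap, (new_cost, nbr))
    if dest not in dist:
        raise ValueError(f"{dest} unreachable from {source}")
    path = [dest]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[dest], path[::-1]

# Hypothetical symmetric topology (costs invented for illustration).
links = [("A", "B", 20), ("A", "C", 10), ("B", "D", 10), ("C", "E", 10),
         ("D", "X", 30), ("E", "G", 10), ("E", "F", 20), ("F", "X", 20),
         ("G", "X", 10), ("X", "Dest", 10)]
topology: Graph = {}
for a, b, c in links:
    topology.setdefault(a, {})[b] = c
    topology.setdefault(b, {})[a] = c

print(spf(topology, "A", "Dest"))  # (50, ['A', 'C', 'E', 'G', 'X', 'Dest'])
```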

ECMP for LDP over RSVP is supported (also see ECMP Support for LDP). If ECMP applies, all LSP endpoints found over the ECMP IGP paths are installed in the routing table by the IGP for consideration by LDP. Note that the IGP costs to each endpoint may differ, because the IGP selects the farthest endpoint per ECMP path.

Note that if the user specifies LSP names under the tunneling option, these LSPs are not directly used by LDP when the rsvp-shortcut option is enabled. With IGP shortcuts, the set of tunnel next-hops is always provided by IGP in RTM. Consequently, the class-based forwarding rules described below do not apply to this set of named LSPs unless they were populated by IGP in RTM as next-hops for a prefix.

Note also that prefer-tunnel-in-tunnel must be disabled for class-based forwarding to apply to LDP prefixes that are the endpoints of the tunnels.

These two commands can also be configured in the lsp-template context, so that LSPs created from that template have the assigned Class-Based Forwarding (CBF) configuration.


Similarly, multiple LSPs can have the default-lsp configuration assigned. Only a single one will be designated to be the Default LSP. That LSP is the one with the lowest tunnel-id amongst those with the default-lsp option assigned.
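The Default LSP designation rule above (lowest tunnel-id among the LSPs with default-lsp assigned) can be sketched as follows; the `Lsp` type and LSP names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Lsp:
    name: str
    tunnel_id: int
    default_lsp: bool = False  # True if the default-lsp option is assigned

def designate_default_lsp(lsps: List[Lsp]) -> Optional[Lsp]:
    """Pick the Default LSP: lowest tunnel-id among LSPs with default-lsp set."""
    candidates = [lsp for lsp in lsps if lsp.default_lsp]
    return min(candidates, key=lambda lsp: lsp.tunnel_id, default=None)

lsps = [Lsp("to-X-1", 7, True), Lsp("to-X-2", 3, True), Lsp("to-X-3", 1)]
print(designate_default_lsp(lsps).name)  # to-X-2
```

Note that `to-X-3` has the lowest tunnel-id overall but is not a candidate, because default-lsp is not assigned to it.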


Therefore, under normal conditions, LDP prefix packets are sprayed over a set of ECMP tunnel next-hops by selecting either the LSP to which the forwarding class of the packets is assigned, if one exists, or the Default LSP, if one does not. However, if a tunnel in the set becomes unusable, CBF is suspended until LDP downloads a new consistent set of tunnel next-hops for the FEC. For example, if the IOM detects that the LSP to which a forwarding class is assigned is not usable, it switches the forwarding of packets classified to that forwarding class to the Default LSP, and if the IOM detects that the Default LSP is not usable, it reverts to regular ECMP spraying across all tunnels in the set of ECMP tunnel next-hops.
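The fallback order described above can be expressed as a small decision function: the LSP assigned to the packet's forwarding class, then the Default LSP, then plain ECMP spraying over the whole set. This is a sketch only. The function name, the usability map, and the LSP names are hypothetical, and the IOM's actual data structures are not modeled.

```python
from typing import Dict, List, Optional

def cbf_tunnels(fc: str, ecmp_set: List[str], fc_to_lsp: Dict[str, str],
                default_lsp: Optional[str], usable: Dict[str, bool]) -> List[str]:
    """Return the tunnel next-hop(s) used for a packet of forwarding class `fc`."""
    assigned = fc_to_lsp.get(fc)
    if assigned is not None and usable.get(assigned, False):
        return [assigned]          # LSP to which this forwarding class is assigned
    if default_lsp is not None and usable.get(default_lsp, False):
        return [default_lsp]       # no usable FC-specific LSP: use the Default LSP
    return list(ecmp_set)          # regular ECMP spraying across all tunnels in the set

ecmp = ["lsp-a", "lsp-b", "lsp-c"]          # hypothetical ECMP tunnel next-hops
fc_map = {"ef": "lsp-a"}                    # forwarding class "ef" assigned to lsp-a
state = {t: True for t in ecmp}
print(cbf_tunnels("ef", ecmp, fc_map, "lsp-b", state))  # ['lsp-a']
print(cbf_tunnels("be", ecmp, fc_map, "lsp-b", state))  # ['lsp-b']
state["lsp-a"] = False
print(cbf_tunnels("ef", ecmp, fc_map, "lsp-b", state))  # ['lsp-b']
```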

config>router>isis>loopfree-alternate

config>router>ospf>loopfree-alternate

config>router>ldp>fast-reroute

config>router>interface>ldp-sync-timer seconds

config>router>isis>level>loopfree-alternate-exclude

config>router>ospf>area>loopfree-alternate-exclude

Note that if IGP shortcuts are also enabled in LFA SPF, as explained in Section 5.3.2, LSPs with a destination address in that IS-IS level or OSPF area are also not included in the LFA SPF calculation.

config>router>isis>interface>loopfree-alternate-exclude

config>router>ospf>area>interface>loopfree-alternate-exclude

config>service>vprn>ospf>area>loopfree-alternate-exclude

config>service>vprn>ospf>area>interface>loopfree-alternate-exclude

LDP FEC resolution when LDP FRR is not enabled operates as follows. When LDP receives a FEC-label binding for a prefix, it resolves it by checking whether the exact prefix (or, when the aggregate-prefix-match option is enabled in LDP, a longest-match prefix) exists in the routing table and is resolved against a next-hop that is an address belonging to the LDP peer that advertised the binding, as identified by its LSR-id. When the next-hop is no longer available, LDP deactivates the FEC and de-programs the NHLFE in the data path. LDP also immediately withdraws the labels it advertised for this FEC and deletes the ILM in the data path, unless the user configured the label-withdrawal-delay option to delay this operation. Traffic that is received while the ILM is still in the data path is dropped. When routing computes and populates the routing table with a new next-hop for the prefix, LDP resolves the FEC again and programs the data path accordingly.
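The resolution check above (exact match by default, longest match with aggregate-prefix-match, and a next-hop that must belong to the advertising peer) can be sketched with Python's `ipaddress` module. The routing-table dictionary and the set of peer addresses are hypothetical stand-ins for RTM and for the addresses the peer advertised. Label withdrawal and the label-withdrawal-delay timer are not modeled.

```python
import ipaddress
from typing import Dict, Optional, Set

# Hypothetical routing table: prefix -> next-hop address.
Rtm = Dict[ipaddress.IPv4Network, ipaddress.IPv4Address]

def resolve_fec(fec: str, rtm: Rtm, peer_addresses: Set[ipaddress.IPv4Address],
                aggregate_prefix_match: bool = False) -> Optional[ipaddress.IPv4Address]:
    """Return the next-hop used to resolve `fec`, or None if it stays unresolved."""
    prefix = ipaddress.ip_network(fec)
    if aggregate_prefix_match:
        # Longest routing-table prefix that contains the FEC prefix.
        matches = [p for p in rtm if prefix.subnet_of(p)]
        route = max(matches, key=lambda p: p.prefixlen, default=None)
    else:
        route = prefix if prefix in rtm else None  # exact match only
    if route is None:
        return None
    next_hop = rtm[route]
    # The next-hop must be an address of the LDP peer that advertised the binding.
    return next_hop if next_hop in peer_addresses else None

rtm: Rtm = {
    ipaddress.ip_network("10.0.0.0/8"): ipaddress.ip_address("192.0.2.2"),
    ipaddress.ip_network("10.1.1.1/32"): ipaddress.ip_address("192.0.2.6"),
}
peer_addrs = {ipaddress.ip_address("192.0.2.2")}
print(resolve_fec("10.2.2.2/32", rtm, peer_addrs))                               # None
print(resolve_fec("10.2.2.2/32", rtm, peer_addrs, aggregate_prefix_match=True))  # 192.0.2.2
print(resolve_fec("10.1.1.1/32", rtm, peer_addrs))                               # None
```

The last case shows that an exact match is not enough: the /32 route exists, but its next-hop does not belong to the advertising peer.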

When LDP FRR is enabled and an LFA backup next-hop exists for the FEC prefix in RTM (or, when the aggregate-prefix-match option is enabled in LDP, for the longest prefix that the FEC prefix matches), LDP resolves the FEC as above but programs the data path with both a primary NHLFE and a backup NHLFE for each next-hop of the FEC.
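A minimal sketch of a FEC next-hop programmed with both a primary and a backup NHLFE follows. A single state bit per interface selects which NHLFE is used, so flipping one bit moves every FEC that shares the failed interface to its backup at once. The types, interface names, and labels are hypothetical, and the sketch keys the bit by interface only (the text also allows a neighbor/next-hop).

```python
from dataclasses import dataclass
from typing import Dict

@dataclass
class Nhlfe:
    out_interface: str
    out_label: int

@dataclass
class FecNextHop:
    primary: Nhlfe
    backup: Nhlfe  # LFA backup NHLFE programmed alongside the primary

# Hypothetical per-interface state bit (True = interface failed).
interface_failed: Dict[str, bool] = {}

def active_nhlfe(nh: FecNextHop) -> Nhlfe:
    """Return the NHLFE used for forwarding: the backup once the primary's bit is set."""
    if interface_failed.get(nh.primary.out_interface, False):
        return nh.backup
    return nh.primary

# Two FECs share the primary interface "to-R3" (names and labels are illustrative).
fec_a = FecNextHop(Nhlfe("to-R3", 1001), Nhlfe("to-R2", 2001))
fec_b = FecNextHop(Nhlfe("to-R3", 1002), Nhlfe("to-R4", 4002))
print(active_nhlfe(fec_a).out_interface, active_nhlfe(fec_b).out_interface)  # to-R3 to-R3
interface_failed["to-R3"] = True  # a single flip moves both FECs to their backups
print(active_nhlfe(fec_a).out_interface, active_nhlfe(fec_b).out_interface)  # to-R2 to-R4
```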

When any of the following events occurs, LDP instructs the IOM, in the fast path, to enable the backup NHLFE for each FEC next-hop impacted by the event. The IOM does this by simply flipping a single state bit associated with the failed interface or neighbor/next-hop:

Also note that when the system ECMP value is set to ecmp=1 or to no ecmp (which is equivalent and is the default value), SPF is able to use the overflow ECMP links as LFA next-hops in both cases.

Note that when the user enables the lfa-only option for an RSVP LSP, as described in Loop-Free Alternate Calculation in the Presence of IGP shortcuts, such an LSP is not used by LDP to tunnel an LDP FEC, even when IGP shortcuts are disabled but LDP-over-RSVP is enabled in IGP.

Figure 54 illustrates a simple network topology with point-to-point (P2P) interfaces and highlights three routes to reach router R5 from router R1.

• Rule 1: Link-protect LFA backup next-hop (primary next-hop R1-R3 is a P2P interface):

Distance_opt(R2, R5) < Distance_opt(R2, R1) + Distance_opt(R1, R5)

and

Distance_opt(R2, R5) >= Distance_opt(R2, R3) + Distance_opt(R3, R5)

• Rule 2: Node-protect LFA backup next-hop (primary next-hop R1-R3 is a P2P interface):

Distance_opt(R2, R5) < Distance_opt(R2, R1) + Distance_opt(R1, R5)

and

Distance_opt(R2, R5) < Distance_opt(R2, R3) + Distance_opt(R3, R5)

• Rule 3: Link-protect LFA backup next-hop (primary next-hop R1-R3 is a broadcast interface):

Distance_opt(R2, R5) < Distance_opt(R2, R1) + Distance_opt(R1, R5)

and

Distance_opt(R2, R5) < Distance_opt(R2, PN) + Distance_opt(PN, R5)

where PN stands for the pseudo-node of the R1-R3 link.
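The three rules share the loop-free inequality and differ only in the second condition, so they fit in one small function. In this sketch the Distance_opt values are plain inputs that SPF is assumed to have already computed. The function name and the sample distances are hypothetical.

```python
from typing import Optional

def classify_lfa(d_r2_r5: int, d_r2_r1: int, d_r1_r5: int,
                 d_r2_x: int, d_x_r5: int, broadcast: bool = False) -> Optional[str]:
    """Classify neighbor R2 as a backup next-hop for R1 -> R5 per Rules 1-3.

    X is R3 when the primary R1-R3 link is P2P, or the pseudo-node PN when
    it is a broadcast link. Returns None when no rule is satisfied.
    """
    # Common condition: R2 must not send traffic for R5 back through R1.
    if not d_r2_r5 < d_r2_r1 + d_r1_r5:
        return None
    via_x = d_r2_x + d_x_r5
    if broadcast:
        return "link-protect" if d_r2_r5 < via_x else None       # Rule 3
    return "node-protect" if d_r2_r5 < via_x else "link-protect"  # Rule 2 / Rule 1

print(classify_lfa(20, 10, 20, 20, 10))                  # node-protect
print(classify_lfa(20, 10, 20, 10, 10))                  # link-protect
print(classify_lfa(30, 10, 20, 20, 10))                  # None
print(classify_lfa(20, 10, 20, 10, 10, broadcast=True))  # None
```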

config>router>mpls>lsp>igp-shortcut [lfa-protect | lfa-only]

The lfa-protect option allows an LSP to be included in both the main SPF and the LFA SPFs. For a given prefix, the LSP can be used either as a primary next-hop or as an LFA next-hop but not both. If the main SPF computation selected a tunneled primary next-hop for a prefix, the LFA SPF will not select an LFA next-hop for this prefix and the protection of this prefix will rely on the RSVP LSP FRR protection. If the main SPF computation selected a direct primary next-hop, then the LFA SPF will select an LFA next-hop for this prefix but will prefer a direct LFA next-hop over a tunneled LFA next-hop.

The lfa-only option allows an LSP to be included in the LFA SPFs only, so that the introduction of IGP shortcuts does not impact the main SPF decision. For a given prefix, the main SPF always selects a direct primary next-hop. The LFA SPF selects an LFA next-hop for this prefix but prefers a direct LFA next-hop over a tunneled LFA next-hop.
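The behavior of the two options can be sketched as two helpers. The first shows which SPF computations may use the LSP. The second picks an LFA next-hop, preferring direct over tunneled and selecting none when the primary is itself tunneled. This is a sketch under stated assumptions: the candidates are hypothetical and are assumed to be already loop-free and ordered by preference.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

@dataclass
class NextHop:
    name: str
    tunneled: bool  # True when the next-hop is an RSVP LSP used as an IGP shortcut

def spf_runs_using_lsp(option: str) -> Set[str]:
    """Which SPF computations may use an LSP configured with the given option."""
    return {"lfa-protect": {"main", "lfa"}, "lfa-only": {"lfa"}}[option]

def pick_lfa(primary: NextHop, loop_free_candidates: List[NextHop]) -> Optional[NextHop]:
    """Pick an LFA next-hop, preferring a direct one over a tunneled one."""
    if primary.tunneled:
        return None  # protection of this prefix relies on RSVP LSP FRR instead
    # An LSP is used either as primary or as LFA for a prefix, never both.
    others = [nh for nh in loop_free_candidates if nh != primary]
    direct = [nh for nh in others if not nh.tunneled]
    pool = direct or others
    return pool[0] if pool else None

lsp7, r2 = NextHop("LSP-7", True), NextHop("R2", False)
print(pick_lfa(NextHop("R3", False), [lsp7, r2]).name)  # R2
print(pick_lfa(NextHop("LSP-9", True), [r2]))           # None
```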

Without the new include-ldp-prefix argument, only core IPv4 routes learned from RTM are advertised as BGP labeled routes to this neighbor, and the stitching of the LDP FEC to the BGP labeled route is not performed for this neighbor, even if the same prefix was learned from LDP.

Note that the ‘from protocol’ statement has an effect only when the protocol value is ldp. Policy entries with protocol values of rsvp, bgp, or any value other than ldp are ignored at the time the policy is applied to LDP.
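The filtering rule above can be sketched as a list filter. The `PolicyEntry` type is hypothetical. Keeping entries that have no ‘from protocol’ statement at all is an assumption of this sketch, because the text covers only entries that do have one.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class PolicyEntry:
    entry_id: int
    from_protocol: Optional[str]  # value of the 'from protocol' statement, or None if absent
    action: str                   # e.g. "accept" or "reject"

def entries_applied_to_ldp(entries: List[PolicyEntry]) -> List[PolicyEntry]:
    """Drop entries whose 'from protocol' value is anything other than ldp.

    Entries without a 'from protocol' statement are kept (an assumption).
    """
    return [e for e in entries if e.from_protocol is None or e.from_protocol == "ldp"]

policy = [PolicyEntry(10, "ldp", "accept"), PolicyEntry(20, "rsvp", "accept"),
          PolicyEntry(30, "bgp", "reject"), PolicyEntry(40, None, "reject")]
print([e.entry_id for e in entries_applied_to_ldp(policy)])  # [10, 40]
```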

The template comes up in the no shutdown state and as such it takes effect immediately. Once a template is in use, the user can change any of the parameters on the fly without shutting down the template. In this case, all targeted Hello adjacencies are.

• User configuration of the peer, with the targeted session parameters either inherited from the top-level config>router>ldp>targeted-session>ipv4 context or explicitly configured for this peer in the config>router>ldp>targeted-session>peer context, which overrides the top-level parameters shared by all targeted peers. The top-level configuration context is referred to as the global context. Note that some parameters exist only in the global context; their values are therefore always inherited by all targeted peers, regardless of which event triggered it.

• The triggering event caused a change to the local-lsr-id parameter value. In this case, the Hello adjacency is brought down, which also causes the LDP session to be brought down if this is the last Hello adjacency associated with the session. A new Hello adjacency and LDP session are then established to the peer using the new value of the local LSR ID.

• The triggering event caused the targeted peer shutdown option to be enabled. In this case, the Hello adjacency is brought down, which also causes the LDP session to be brought down if this is the last Hello adjacency associated with the session.

Finally, the value of any LDP parameter which is specific to the LDP/TCP session to a peer is inherited from the config>router>ldp>session-parameters>peer context. This includes MD5 authentication, LDP prefix per-peer policies, label distribution mode (DU or DOD), etc.
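The targeted-session inheritance described above can be sketched as a dictionary merge: a value configured for the peer overrides the global value, and parameters that exist only in the global context always come from the global context. The parameter names are hypothetical placeholders, loosely modeled on the fields of the show output, and are not SR OS command names.

```python
from typing import Dict

# Hypothetical names of parameters that exist only in the global context.
GLOBAL_ONLY = {"global_only_param"}

def effective_params(global_ctx: Dict[str, int], peer_ctx: Dict[str, int]) -> Dict[str, int]:
    """Merge targeted-session parameters: peer values override global ones,
    except for parameters that exist only in the global context."""
    merged = dict(global_ctx)
    for name, value in peer_ctx.items():
        if name not in GLOBAL_ONLY:
            merged[name] = value
    return merged

global_ctx = {"hold_time": 45, "keepalive_timeout": 40, "global_only_param": 1}
peer_ctx = {"hold_time": 30}
print(effective_params(global_ctx, peer_ctx))
# {'hold_time': 30, 'keepalive_timeout': 40, 'global_only_param': 1}
```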

Figure 57 illustrates wholesale video distribution over a P2MP LDP LSP. Static IGMP entries on the edge are bound to the P2MP LDP LSP tunnel interface for multicast video traffic distribution.

In order to make use of the ECMP next-hop, the user must configure the ecmp value in the system to at least two (2) using the following command:

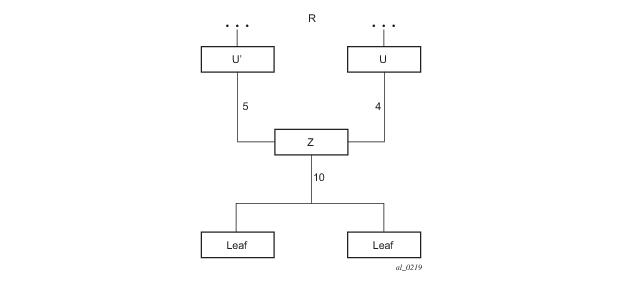

Upstream LSR U in Figure 58 is the primary next-hop for the root LSR R of the P2MP FEC. This is also referred to as the primary upstream LSR. Upstream LSR U’ is an ECMP or LFA backup next-hop for the root LSR R of the same P2MP FEC. This is referred to as the backup upstream LSR. Downstream LSR Z sends a label mapping message to both upstream LSR nodes and programs the primary ILM on the interface to LSR U and a backup ILM on the interface to LSR U’. The labels for the primary and backup ILMs must be different. LSR Z thus attracts traffic from both of them. However, LSR Z blocks the ILM on the interface to LSR U’ and accepts traffic only from the ILM on the interface to LSR U.

In case of a failure of the link to LSR U, or of LSR U itself, causing the LDP session to LSR U to go down, LSR Z detects it, reverses the ILM blocking state, and immediately starts receiving traffic from LSR U’ until IGP converges and provides a new primary next-hop and ECMP or LFA backup next-hop, which may or may not be on the interface to LSR U’. At that point, LSR Z updates the primary and backup ILMs in the data path.
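The primary/backup ILM behavior at LSR Z can be sketched as a small state object. Both ILMs are programmed with different labels, the backup is normally blocked, and the blocking state is reversed when the session to the primary upstream LSR goes down. The class and label values are hypothetical, and IGP reconvergence and the subsequent ILM update are not modeled.

```python
from dataclasses import dataclass

@dataclass
class P2mpIlmPair:
    primary_upstream: str
    backup_upstream: str
    primary_label: int
    backup_label: int
    backup_blocked: bool = True  # normally only the primary ILM accepts traffic

    def __post_init__(self) -> None:
        if self.primary_label == self.backup_label:
            raise ValueError("primary and backup ILM labels must be different")

    def accepts_from(self, upstream: str) -> bool:
        """True if traffic received from `upstream` is accepted (not blocked)."""
        if upstream == self.primary_upstream:
            return self.backup_blocked
        if upstream == self.backup_upstream:
            return not self.backup_blocked
        return False

    def on_session_down(self, upstream: str) -> None:
        """Reverse the blocking state when the session to the primary upstream fails."""
        if upstream == self.primary_upstream:
            self.backup_blocked = False

z = P2mpIlmPair("U", "U'", primary_label=1001, backup_label=1002)
print(z.accepts_from("U"), z.accepts_from("U'"))  # True False
z.on_session_down("U")
print(z.accepts_from("U"), z.accepts_from("U'"))  # False True
```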

2. The following hash is performed: H = (CRC32(Opaque Value)) modulo N, where N is the number of upstream LSRs. The Opaque Value is the field identified in the P2MP FEC Element right after the 'Opaque Length' field. The 'Opaque Length' indicates the size of the opaque value used in this calculation.
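The hash above can be computed with `zlib.crc32`, which implements the common IEEE CRC-32. That this matches the exact CRC variant used by the implementation is an assumption. The candidate ordering is also an assumption here: this sketch numbers upstream LSRs from lower to higher IP address, the ordering RFC 6388 uses, because the preceding step is not reproduced in this section. The opaque value is treated as raw bytes.

```python
import zlib
from ipaddress import ip_address
from typing import List

def select_upstream_lsr(opaque_value: bytes, upstream_lsrs: List[str]) -> str:
    """Pick an upstream LSR using H = CRC32(Opaque Value) mod N."""
    if not upstream_lsrs:
        raise ValueError("no candidate upstream LSRs")
    ordered = sorted(upstream_lsrs, key=ip_address)  # assumed: lower to higher IP address
    h = zlib.crc32(opaque_value) % len(ordered)
    return ordered[h]

# b"123456789" is the standard CRC-32 check string (CRC = 0xCBF43926).
print(select_upstream_lsr(b"123456789", ["10.0.0.3", "10.0.0.1", "10.0.0.2"]))  # 10.0.0.3
```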

For the system to perform a fast switchover to the backup ILM in the fast path, LDP applies to the primary ILM uniform FRR failover procedures similar in concept to the ones applied to an NHLFE in the existing implementation of LDP FRR for unicast FECs. There are, however, important differences. LDP associates a unique Protect Group ID (PG-ID) with all mLDP FECs that have their primary ILM on any LDP interface pointing to the same upstream LSR. This PG-ID is assigned per upstream LSR, regardless of the number of LDP interfaces configured to this LSR. As such, this PG-ID is different from the one associated with unicast FECs, which is assigned to each downstream LDP interface and next-hop. If, however, a failure causes an interface to go down and also causes the LDP session to the upstream peer to go down, both PG-IDs have their state updated in the IOM, and the uniform FRR procedures are therefore triggered both for the unicast LDP FECs forwarding packets toward the upstream LSR and for the mLDP FECs receiving packets from the same upstream LSR.
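The difference in PG-ID granularity can be sketched with two hypothetical state tables. mLDP protect groups are keyed by upstream LSR, and unicast protect groups are keyed by (interface, next-hop). In this sketch, the mLDP PG-ID is updated only when the LDP session to the upstream LSR also goes down, which is an interpretation of the text rather than a documented rule.

```python
from typing import Dict, Set, Tuple

# Hypothetical IOM-side protect-group state: True means "switch to backup".
mldp_pg_failed: Dict[str, bool] = {}                 # one PG-ID per upstream LSR
unicast_pg_failed: Dict[Tuple[str, str], bool] = {}  # one PG-ID per (interface, next-hop)

def handle_failure(interface: str, next_hop: str, upstream_lsr: str,
                   session_down: bool) -> Set[str]:
    """Update protect-group state and return the FEC families whose FRR is triggered."""
    unicast_pg_failed[(interface, next_hop)] = True
    triggered = {"unicast"}
    if session_down:
        # The mLDP PG-ID covers every interface to this upstream LSR.
        mldp_pg_failed[upstream_lsr] = True
        triggered.add("mldp")
    return triggered

print(sorted(handle_failure("to-U-1", "10.0.1.1", "U", session_down=True)))  # ['mldp', 'unicast']
```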

LDP shortcuts for BGP next-hop resolution allow the deployment of a ‘route-less core’ infrastructure. Many service providers have either removed or intend to remove the IBGP mesh from their network core, retaining only the mesh between routers connected to areas of the network that require routing to external routes.

Shortcuts are implemented by utilizing Layer 2 tunnels (i.e., MPLS LSPs) as next hops for prefixes that are associated with the far end termination of the tunnel. By tunneling through the network core, the core routers forwarding the tunnel have no need to obtain external routing information and are immune to attack from external sources.

The tunnel table contains all available tunnels indexed by remote destination IP address. LSPs derived from received LDP /32 route FECs will automatically be installed in the table associated with the advertising router-ID when IGP shortcuts are enabled.

If a higher priority shortcut is not available or is not configured, a lower priority shortcut is evaluated. When no shortcuts are configured or available, the IGP next-hop is always used. Shortcut and next-hop determination is event driven based on dynamic changes in the tunneling mechanisms and routing states.
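The fallback logic above can be sketched as a walk through tunnel types in priority order, ending at the IGP next-hop. The priority order and tunnel table are inputs to this hypothetical function. The sample order that places RSVP before LDP is for illustration only and is not a statement of the actual SR OS priorities.

```python
from typing import Dict, List

def resolve_next_hop(destination: str, shortcut_order: List[str],
                     tunnel_table: Dict[str, Dict[str, str]], igp_next_hop: str) -> str:
    """Return the highest-priority available shortcut, else the IGP next-hop.

    shortcut_order lists tunnel types from highest to lowest priority;
    tunnel_table maps a tunnel type to {remote destination IP: tunnel name}.
    """
    for tunnel_type in shortcut_order:
        tunnel = tunnel_table.get(tunnel_type, {}).get(destination)
        if tunnel is not None:
            return tunnel  # highest-priority shortcut that exists
    return igp_next_hop    # no shortcut configured or available

# Hypothetical tunnel table and priority order, for illustration only.
table = {"rsvp": {"10.20.1.3": "lsp-to-10.20.1.3"},
         "ldp": {"10.20.1.3": "ldp-10.20.1.3/32", "10.20.1.4": "ldp-10.20.1.4/32"}}
order = ["rsvp", "ldp"]
for dest in ("10.20.1.3", "10.20.1.4", "10.20.1.5"):
    print(dest, "->", resolve_next_hop(dest, order, table, "192.0.2.1"))
```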

Refer to the 7750 SR OS Routing Protocols Guide for details on the use of LDP FEC and RSVP LSP for BGP Next-Hop Resolution.

When an IPv4 packet is received on an ingress network interface, a subscriber IES interface, or a regular IES interface, the lookup of the packet by the ingress forwarding engine results in the packet being sent labeled with the label stack corresponding to the NHLFE of the LDP LSP when the preferred RTM entry corresponds to an LDP shortcut.

config>router>ldp>[no] shortcut-transit-ttl-propagate

config>router>ldp>[no] shortcut-local-ttl-propagate

When the no form of the above command is enabled for local packets, TTL propagation is disabled on all locally generated IP packets, including ICMP Ping, traceroute, and OAM packets that are destined to a route that is resolved to the LSP shortcut. In this case, a TTL of 255 is programmed onto the pushed label stack. This is referred to as pipe mode.

Similarly, when the no form is enabled for transit packets, TTL propagation is disabled on all IP packets received on any IES interface and destined to a route that is resolved to the LSP shortcut. In this case, a TTL of 255 is programmed onto the pushed label stack.
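The effect of the two forms on the pushed label stack reduces to a single choice. With propagation enabled, the IP TTL is copied into the label. With the no form (pipe mode), a fixed TTL of 255 is used. The function is a hypothetical sketch, and it assumes that any IP TTL decrement has already been applied before the copy.

```python
def pushed_label_ttl(ip_ttl: int, propagate: bool) -> int:
    """TTL written into the label stack pushed for an LDP shortcut.

    propagate=True copies the IP TTL into the label (assumption: any
    decrement of the IP TTL has already been applied); propagate=False
    corresponds to the `no` form (pipe mode) and uses 255.
    """
    if not 0 <= ip_ttl <= 255:
        raise ValueError("TTL must be in 0..255")
    return ip_ttl if propagate else 255

print(pushed_label_ttl(64, True), pushed_label_ttl(64, False))  # 64 255
```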

This feature introduces a new TLV referred to as LSR Overload Status TLV. This TLV is encoded using vendor proprietary TLV encoding as per RFC 5036. It uses a TLV type value of 0x3E02 and the Timetra OUI value of 0003FA.

where:

U-bit: Unknown TLV bit, as described in RFC 5036. The value MUST be 1, which means that if the TLV is unknown to the receiver, the receiver should ignore it.

F-bit: Forward unknown TLV bit, as described in RFC 5036. The value of this bit MUST be 0, since an LSR Overload TLV is sent only between two immediate LDP peers and is not forwarded.

S-bit: The State Bit. It indicates whether the sender is setting the LSR Overload Status ON or OFF. The State Bit value is used as follows:

1 - The TLV is indicating LSR overload status as ON.

0 - The TLV is indicating LSR overload status as OFF.
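The first 16 bits of the TLV follow the RFC 5036 TLV header (U bit, F bit, 14-bit type), so with U=1, F=0, and type 0x3E02 the header word is 0xBE02. F is set to 0 here because, per RFC 5036, an F-bit of 0 means an unknown TLV is not forwarded, which matches the point that the TLV is exchanged only between immediate peers. The layout of the value field is a hypothetical assumption made only for illustration, because the figure defining it is not reproduced here: a 4-octet vendor ID field carrying the OUI, followed by one octet whose most significant bit is the S-bit.

```python
import struct

LSR_OVERLOAD_TLV_TYPE = 0x3E02  # TLV type value given in the text
TIMETRA_OUI = 0x0003FA          # Timetra OUI value given in the text

def tlv_first_word(u_bit: int, f_bit: int, tlv_type: int) -> int:
    """RFC 5036 TLV header word: U (1 bit) | F (1 bit) | Type (14 bits)."""
    if tlv_type >> 14:
        raise ValueError("TLV type must fit in 14 bits")
    return (u_bit << 15) | (f_bit << 14) | tlv_type

def encode_lsr_overload_tlv(overload_on: bool) -> bytes:
    """Encode the TLV; the value layout below is a hypothetical assumption."""
    # Assumed value: 4-octet vendor ID field carrying the OUI, then one
    # octet whose most significant bit is the S-bit (1 = overload ON).
    value = struct.pack("!I", TIMETRA_OUI) + bytes([0x80 if overload_on else 0x00])
    header = struct.pack("!HH", tlv_first_word(1, 0, LSR_OVERLOAD_TLV_TYPE), len(value))
    return header + value

print(encode_lsr_overload_tlv(True).hex())  # be020005000003fa80
```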

Where:

U and F bits: MUST be 1 and 0 respectively, as per section 3 of LDP Capabilities [RFC5561].

S-bit: MUST be 1 (indicates that the capability is being advertised).

show router ldp status

===============================================================================

LDP Status for LSR ID 110.20.1.110

===============================================================================

Admin State : Up Oper State : Up

Created at : 07/17/13 21:27:41 Up Time : 0d 01:00:41

Oper Down Reason : n/a Oper Down Events : 1

Last Change : 07/17/13 21:27:41 Tunn Down Damp Time : 20 sec

Label Withdraw Del*: 0 sec Implicit Null Label : Enabled

Short. TTL Prop Lo*: Enabled Short. TTL Prop Tran*: Enabled

Import Policies : Export Policies :

Import-LDP Import-LDP

External External

Tunl Exp Policies :

from-proto-bgp

Aggregate Prefix : False Agg Prefix Policies : None

FRR : Enabled Mcast Upstream FRR : Disabled

Dynamic Capability : False P2MP Capability : True

MP MBB Capability : True MP MBB Time : 10

Overload Capability: True <---- //Local Overload Capability

Active Adjacencies : 0 Active Sessions : 0

Active Interfaces : 2 Inactive Interfaces : 4

Active Peers : 62 Inactive Peers : 10

Addr FECs Sent : 0 Addr FECs Recv : 0

Serv FECs Sent : 0 Serv FECs Recv : 0

P2MP FECs Sent : 0 P2MP FECs Recv : 0

Attempted Sessions : 458

No Hello Err : 0 Param Adv Err : 0

Max PDU Err : 0 Label Range Err : 0

Bad LDP Id Err : 0 Bad PDU Len Err : 0

Bad Mesg Len Err : 0 Bad TLV Len Err : 0

Unknown TLV Err : 0

Malformed TLV Err : 0 Keepalive Expired Err: 4

Shutdown Notif Sent: 12 Shutdown Notif Recv : 5

===============================================================================

show router ldp session detail

===============================================================================

LDP Sessions (Detail)

===============================================================================

-------------------------------------------------------------------------------

Session with Peer 10.8.100.15:0, Local 110.20.1.110:0

-------------------------------------------------------------------------------

Adjacency Type : Targeted State : Nonexistent

Up Time : 0d 00:00:00

Max PDU Length : 4096 KA/Hold Time Remaining : 0

Link Adjacencies : 0 Targeted Adjacencies : 1

Local Address : 110.20.1.110 Peer Address : 10.8.100.15

Local TCP Port : 0 Peer TCP Port : 0

Local KA Timeout : 40 Peer KA Timeout : 40

Mesg Sent : 0 Mesg Recv : 1

FECs Sent : 0 FECs Recv : 0

Addrs Sent : 0 Addrs Recv : 0

GR State : Capable Label Distribution : DU

Nbr Liveness Time : 0 Max Recovery Time : 0

Number of Restart : 0 Last Restart Time : Never

P2MP : Not Capable MP MBB : Not Capable

Dynamic Capability : Not Capable LSR Overload : Not Capable <---- //Peer OverLoad Capab.

Advertise : Address/Servi*

Addr FEC OverLoad Sent : No Addr FEC OverLoad Recv : No

Mcast FEC Overload Sent: No Mcast FEC Overload Recv: No

Serv FEC Overload Sent : No Serv FEC Overload Recv : No

-------------------------------------------------------------------------------

- [show router ldp interface resource-failures]

- [show router ldp targ-peer resource-failures]

show router ldp interface resource-failures

===============================================================================

LDP Interface Resource Failures

===============================================================================

srl srr

sru4 sr4-1-5-1

===============================================================================

show router ldp targ-peer resource-failures

===============================================================================

LDP Peers Resource Failures

===============================================================================

10.20.1.22 110.20.1.3

===============================================================================

16 2013/07/17 14:21:38.06 PST MINOR: LDP #2003 Base LDP Interface Admin State

"Interface instance state changed - vRtrID: 1, Interface sr4-1-5-1, administrative state: inService, operational state: outOfService"

13 2013/07/17 14:15:24.64 PST MINOR: LDP #2003 Base LDP Interface Admin State

"Interface instance state changed - vRtrID: 1, Peer 10.20.1.22, administrative state: inService, operational state: outOfService"

- [show router ldp interface detail]

- [show router ldp targ-peer detail]

show router ldp interface detail

===============================================================================

LDP Interfaces (Detail)

===============================================================================

-------------------------------------------------------------------------------

Interface "sr4-1-5-1"

-------------------------------------------------------------------------------

Admin State : Up Oper State : Down

Oper Down Reason : noResources <----- //link LDP resource exhaustion handled

Hold Time : 45 Hello Factor : 3

Oper Hold Time : 45

Hello Reduction : Disabled Hello Reduction *: 3

Keepalive Timeout : 30 Keepalive Factor : 3

Transport Addr : System Last Modified : 07/17/13 14:21:38

Active Adjacencies : 0

Tunneling : Disabled

Lsp Name : None

Local LSR Type : System

Local LSR : None

BFD Status : Disabled

Multicast Traffic : Enabled

-------------------------------------------------------------------------------

show router ldp discovery interface "sr4-1-5-1" detail

===============================================================================

LDP Hello Adjacencies (Detail)

===============================================================================

-------------------------------------------------------------------------------

Interface "sr4-1-5-1"

-------------------------------------------------------------------------------

Local Address : 223.0.2.110 Peer Address : 224.0.0.2

Adjacency Type : Link State : Down

===============================================================================

show router ldp targ-peer detail

===============================================================================

LDP Peers (Detail)

===============================================================================

-------------------------------------------------------------------------------

Peer 10.20.1.22

-------------------------------------------------------------------------------

Admin State : Up Oper State : Down

Oper Down Reason : noResources <----- // T-LDP resource exhaustion handled

Hold Time : 45 Hello Factor : 3

Oper Hold Time : 45

Hello Reduction : Disabled Hello Reduction Fact*: 3

Keepalive Timeout : 40 Keepalive Factor : 4

Passive Mode : Disabled Last Modified : 07/17/13 14:15:24

Active Adjacencies : 0 Auto Created : No

Tunneling : Enabled

Lsp Name : None

Local LSR : None

BFD Status : Disabled

Multicast Traffic : Disabled

-------------------------------------------------------------------------------

show router ldp discovery peer 10.20.1.22 detail

===============================================================================

LDP Hello Adjacencies (Detail)

===============================================================================

-------------------------------------------------------------------------------

Peer 10.20.1.22

-------------------------------------------------------------------------------

Local Address : 110.20.1.110 Peer Address : 10.20.1.22

Adjacency Type : Targeted State : Down <----- //T-LDP resource exhaustion handled

===============================================================================

{...... snip......}

Num OLoad Interfaces: 4 <----- //#LDP interfaces resource in exhaustion

Num Targ Sessions: 72 Num Active Targ Sess: 62

Num OLoad Targ Sessions: 7 <----- //#T-LDP peers in resource exhaustion

Num Addr FECs Rcvd: 0 Num Addr FECs Sent: 0

Num Addr Fecs OLoad: 1 <----- //# of local/remote unicast FECs in Overload

Num Svc FECs Rcvd: 0 Num Svc FECs Sent: 0

Num Svc FECs OLoad: 0 <----- // # of local/remote service Fecs in Overload

Num mcast FECs Rcvd: 0 Num Mcast FECs Sent: 0

Num mcast FECs OLoad: 0 <----- // # of local/remote multicast Fecs in Overload

{...... snip......}

23 2013/07/17 15:35:47.84 PST MINOR: LDP #2002 Base LDP Resources Exhausted "Instance state changed - vRtrID: 1, administrative state: inService, operational state: inService"

- [clear router ldp resource-failures]

- [clear router ldp interface ifName]

- [clear router ldp peer peerAddress]

- [show router ldp session detail]

show router ldp session 110.20.1.1 detail

-------------------------------------------------------------------------------

Session with Peer 110.20.1.1:0, Local 110.20.1.110:0

-------------------------------------------------------------------------------

Adjacency Type : Both State : Established

Up Time : 0d 00:05:48

Max PDU Length : 4096 KA/Hold Time Remaining : 24

Link Adjacencies : 1 Targeted Adjacencies : 1

Local Address : 110.20.1.110 Peer Address : 110.20.1.1

Local TCP Port : 51063 Peer TCP Port : 646

Local KA Timeout : 30 Peer KA Timeout : 45

Mesg Sent : 442 Mesg Recv : 2984

FECs Sent : 16 FECs Recv : 2559

Addrs Sent : 17 Addrs Recv : 1054

GR State : Capable Label Distribution : DU

Nbr Liveness Time : 0 Max Recovery Time : 0

Number of Restart : 0 Last Restart Time : Never

P2MP : Capable MP MBB : Capable

Dynamic Capability : Not Capable LSR Overload : Capable

Advertise : Address/Servi* BFD Operational Status : inService

Addr FEC OverLoad Sent : Yes Addr FEC OverLoad Recv : No <---- // this LSR sent overLoad for unicast FEC type to peer

Mcast FEC Overload Sent: No Mcast FEC Overload Recv: No

Serv FEC Overload Sent : No Serv FEC Overload Recv : No

-------------------------------------------------------------------------------

show router ldp session 110.20.1.110 detail

-------------------------------------------------------------------------------

Session with Peer 110.20.1.110:0, Local 110.20.1.1:0

-------------------------------------------------------------------------------

Adjacency Type : Both State : Established

Up Time : 0d 00:08:23

Max PDU Length : 4096 KA/Hold Time Remaining : 21

Link Adjacencies : 1 Targeted Adjacencies : 1

Local Address : 110.20.1.1 Peer Address : 110.20.1.110

Local TCP Port : 646 Peer TCP Port : 51063

Local KA Timeout : 45 Peer KA Timeout : 30

Mesg Sent : 3020 Mesg Recv : 480

FECs Sent : 2867 FECs Recv : 16

Addrs Sent : 1054 Addrs Recv : 17

GR State : Capable Label Distribution : DU

Nbr Liveness Time : 0 Max Recovery Time : 0

Number of Restart : 0 Last Restart Time : Never

P2MP : Capable MP MBB : Capable

Dynamic Capability : Not Capable LSR Overload : Capable

Advertise : Address/Servi* BFD Operational Status : inService

Addr FEC OverLoad Sent : No Addr FEC OverLoad Recv : Yes <---- // this LSR received overLoad for unicast FEC type from peer

Mcast FEC Overload Sent: No Mcast FEC Overload Recv: No

Serv FEC Overload Sent : No Serv FEC Overload Recv : No

===============================================================================

70002 2013/07/17 16:06:59.46 PST MINOR: LDP #2008 Base LDP Session State Change "Session state is operational. Overload Notification message is sent to/from peer 110.20.1.1:0 with overload state true for fec type prefixes"

Num Entities OLoad (FEC: Address Prefix ): Sent: 7 Rcvd: 0 <----- // # of session in OvLd for fec-type=unicast

Num Entities OLoad (FEC: PWE3 ): Sent: 0 Rcvd: 0 <----- // # of session in OvLd for fec-type=service

Num Entities OLoad (FEC: GENPWE3 ): Sent: 0 Rcvd: 0 <----- // # of session in OvLd for fec-type=service

Num Entities OLoad (FEC: P2MP ): Sent: 0 Rcvd: 0 <----- // # of session in OvLd for fec-type=MulticastP2mp

Num Entities OLoad (FEC: MP2MP UP ): Sent: 0 Rcvd: 0 <----- // # of session in OvLd for fec-type=MulticastMP2mp

Num Entities OLoad (FEC: MP2MP DOWN ): Sent: 0 Rcvd: 0 <----- // # of session in OvLd for fec-type=MulticastMP2mp

Num Active Adjacencies: 9

Num Interfaces: 6 Num Active Interfaces: 6

Num OLoad Interfaces: 0 <----- // link LDP interfaces in resource exhaustion should be zero when Overload Protection Capability is supported

Num Targ Sessions: 72 Num Active Targ Sess: 67

Num OLoad Targ Sessions: 0 <----- // T-LDP peers in resource exhaustion should be zero if Overload Protection Capability is supported

Num Addr FECs Rcvd: 8667 Num Addr FECs Sent: 91

Num Addr Fecs OLoad: 1 <----- // # of local/remote unicast Fecs in Overload

Num Svc FECs Rcvd: 3111 Num Svc FECs Sent: 0

Num Svc FECs OLoad: 0 <----- // # of local/remote service Fecs in Overload

Num mcast FECs Rcvd: 0 Num Mcast FECs Sent: 0

Num mcast FECs OLoad: 0 <----- // # of local/remote multicast Fecs in Overload

Num MAC Flush Rcvd: 0 Num MAC Flush Sent: 0

69999 2013/07/17 16:06:59.21 PST MINOR: LDP #2002 Base LDP Resources Exhausted "Instance state changed - vRtrID: 1, administrative state: inService, operational state: inService"
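When you review status output like the example above, the counters to watch are the ones whose name contains OLoad. The Python sketch below is not an SR OS tool. It flags any non-zero overload counters in saved show output and makes these assumptions:

- The annotation comments (<----- // ...) have been removed.
- Each counter of interest appears on its own line in the form Name: value.

The regular expression and the function name are illustrative only.

```python
import re

# Matches single-value counters such as "Num OLoad Interfaces: 0" or
# "Num Addr Fecs OLoad: 1". Multi-value lines such as
# "Num Entities OLoad (FEC: Address Prefix ): Sent: 7 Rcvd: 0" do not match.
OLOAD_COUNTER = re.compile(r"^(Num [^:]*OLoad[^:]*):\s*(\d+)$")


def nonzero_overload_counters(show_output: str) -> dict[str, int]:
    """Return {counter name: value} for every overload counter above zero."""
    flagged = {}
    for line in show_output.splitlines():
        match = OLOAD_COUNTER.match(line.strip())
        if match and int(match.group(2)) > 0:
            flagged[match.group(1).strip()] = int(match.group(2))
    return flagged


sample = """Num OLoad Interfaces: 0
Num OLoad Targ Sessions: 0
Num Addr Fecs OLoad: 1
Num Svc FECs OLoad: 0
Num Entities OLoad (FEC: Address Prefix ): Sent: 7 Rcvd: 0"""

print(nonzero_overload_counters(sample))  # {'Num Addr Fecs OLoad': 1}
```

The per-FEC-type Sent/Rcvd lines are left out on purpose. They would need a separate pattern.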

LDP IPv6 can be enabled on the SR OS interface. Figure 61 shows the LDP adjacency and session over an IPv6 interface.

The SR OS LDP IPv6 implementation uses a 128-bit LSR-ID as defined in draft-pdutta-mpls-ldp-v2-00. See LDP Process Overview for more information about interoperability of this implementation with the 32-bit LSR-ID defined in draft-ietf-mpls-ldp-ipv6-14.

The user can configure the local-lsr-id option on the interface and change the value of the LSR-ID to either the local interface or another interface name, loopback or not. The global unicast IPv6 address corresponding to the primary IPv6 address of that interface is used as the LSR-ID. If the user specifies an interface that does not have a global unicast IPv6 address in the configuration of the transport address or of the local-lsr-id option, the session will not come up and an error message will be displayed.

The user can configure the local-lsr-id option on the targeted session and change the value of the LSR-ID to either the local interface or another interface name, loopback or not. The global unicast IPv6 address corresponding to the primary IPv6 address of that interface is used as the LSR-ID. If the user specifies an interface that does not have a global unicast IPv6 address in the configuration of the transport address or of the local-lsr-id option, the session will not come up and an error message will be displayed. In all cases, the transport address for the LDP session and the source IP address of the targeted Hello message are updated to the new LSR-ID value.
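The Python sketch below shows the LSR-ID selection rule described above. It is a simplified model, not the router's implementation, and it makes these assumptions:

- The Interface class and the function names are hypothetical.
- The primary IPv6 address is the first address in the list.
- Global unicast means any address that is not link-local, multicast, loopback, or unspecified. The router's exact test is not documented here.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class Interface:
    """Hypothetical interface model: only what LSR-ID selection needs."""
    name: str
    ipv6_addresses: list[str] = field(default_factory=list)  # index 0 = primary


def is_global_unicast(addr: ipaddress.IPv6Address) -> bool:
    # Assumption: anything that is not link-local, multicast, loopback,
    # or unspecified is treated as a global unicast address.
    return not (addr.is_link_local or addr.is_multicast
                or addr.is_loopback or addr.is_unspecified)


def select_lsr_id(intf: Interface) -> ipaddress.IPv6Address:
    """Return the 128-bit LSR-ID taken from the interface's primary IPv6 address."""
    if not intf.ipv6_addresses:
        raise ValueError(f"{intf.name}: no IPv6 address; session will not come up")
    primary = ipaddress.IPv6Address(intf.ipv6_addresses[0])
    if not is_global_unicast(primary):
        raise ValueError(f"{intf.name}: primary IPv6 address is not global unicast")
    return primary


def targeted_session_addresses(intf: Interface) -> dict[str, ipaddress.IPv6Address]:
    """For a targeted session, the transport address and Hello source follow the LSR-ID."""
    lsr_id = select_lsr_id(intf)
    return {"lsr_id": lsr_id, "transport_address": lsr_id, "hello_source": lsr_id}


print(select_lsr_id(Interface("loopback1", ["2001:db8::1"])))  # 2001:db8::1
```

Raising an error, rather than falling back to another address, mirrors the text: the session does not come up when no suitable global unicast address exists.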

Adjacency-level FEC-type capability advertisement is defined in draft-pdutta-mpls-ldp-adj-capability. By default, all FEC types supported by the LSR are advertised in the LDP IPv4 or IPv6 session initialization; see LDP Session Capabilities for more information. If a given FEC type is enabled at the session level, it can be disabled over a given LDP interface at the IPv4 or IPv6 adjacency level for all IPv4 or IPv6 peers over that interface. If a given FEC type is disabled at the session level, FECs of that type will not be advertised, and enabling that FEC type at the adjacency level will have no effect. The LDP adjacency capability can be configured on link Hello adjacencies only and does not apply to targeted Hello adjacencies.

These commands, when applied for the P2MP FEC, deprecate the existing command multicast-traffic {enable | disable} under the interface. Unlike the session-level capability, these commands can disable the multicast FEC for IPv4 and IPv6 separately.
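The Python sketch below shows how the session-level and adjacency-level FEC-type capabilities described above combine. It is an illustration, not SR OS code. The class name, the method name, and FEC type labels such as "p2mp-ipv6" are hypothetical. Adjacency-level settings are modelled only as per-family disables on link adjacencies.

```python
from dataclasses import dataclass, field


@dataclass
class FecCapabilityConfig:
    """Hypothetical model of session- and adjacency-level FEC-type capabilities.

    FEC type names such as "prefix-ipv6" or "p2mp-ipv4" are illustrative labels.
    """
    session_enabled: set[str]
    # (interface name, "ipv4" | "ipv6") -> FEC types disabled on that adjacency
    adjacency_disabled: dict[tuple[str, str], set[str]] = field(default_factory=dict)

    def advertises(self, fec_type: str, interface: str, family: str,
                   targeted: bool = False) -> bool:
        if fec_type not in self.session_enabled:
            # Disabled at the session level: adjacency settings have no effect.
            return False
        if targeted:
            # Adjacency capability applies to link Hello adjacencies only.
            return True
        disabled = self.adjacency_disabled.get((interface, family), set())
        return fec_type not in disabled


cfg = FecCapabilityConfig(
    session_enabled={"prefix-ipv4", "prefix-ipv6", "p2mp-ipv4", "p2mp-ipv6"},
    adjacency_disabled={("to-pe2", "ipv6"): {"p2mp-ipv6"}},
)
print(cfg.advertises("p2mp-ipv6", "to-pe2", "ipv6"))  # False
print(cfg.advertises("p2mp-ipv4", "to-pe2", "ipv4"))  # True
```

The example disables the P2MP FEC for IPv6 on one interface while IPv4 P2MP stays enabled. This is the per-family control that the older multicast-traffic command lacked.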

By default, IPv6 address distribution is determined by whether the Dual-stack capability TLV, which is defined in draft-ietf-mpls-ldp-ipv6, is present in the Hello message from the peer. This coupling is introduced because of interoperability issues found with existing third-party LDP IPv4 implementations.

4. When all LDP IPv4 sessions have dynamic capabilities enabled, with per-peer FEC capabilities for IPv6 FECs disabled, the GLOBAL IMPORT policy can be removed.

This command provides the flexibility required when the user does not need to track both the Hello adjacency and the next-hops of FECs. For example, if the user configures bfd-enable ipv6 only, to save on the number of BFD sessions, LDP will track the IPv6 Hello adjacency and the next-hops of IPv6 prefix FECs, but not the next-hops of IPv4 prefix FECs resolved over the same LDP IPv6 adjacency. If the IPv4 data plane encounters errors while the IPv6 Hello adjacency is unaffected and remains up, traffic for the IPv4 prefix FECs resolved over that IPv6 adjacency will be black-holed. If the BFD session tracking the IPv6 Hello adjacency times out, all IPv4 and IPv6 prefix FECs will be updated.
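The Python sketch below models the bfd-enable tracking behavior described above. It is a simplified illustration, not router logic, and it makes these assumptions:

- The function name and its string arguments are hypothetical.
- The case where BFD tracks FEC next-hops of one family but not the Hello adjacency is not covered by the text, so it is a guess. The code comment marks it as such.

```python
def prefix_fecs_updated(adjacency_family: str, bfd_families: set[str],
                        failed_data_plane: str) -> set[str]:
    """Which prefix-FEC families LDP updates after a data-plane failure.

    adjacency_family: family of the Hello adjacency the FECs resolve over.
    bfd_families: families configured with bfd-enable on the interface.
    failed_data_plane: "ipv4" or "ipv6", the data plane that broke.
    """
    if failed_data_plane not in bfd_families:
        # No BFD session detects the failure; the adjacency stays up and
        # traffic for FECs of that family is black-holed.
        return set()
    if failed_data_plane == adjacency_family:
        # BFD tracking the Hello adjacency times out: all prefix FECs are updated.
        return {"ipv4", "ipv6"}
    # Assumption (not stated in the text): only FECs of the failed family are
    # updated when BFD tracks their next-hops but not the Hello adjacency.
    return {failed_data_plane}


# bfd-enable ipv6 only, over an LDP IPv6 adjacency:
print(prefix_fecs_updated("ipv6", {"ipv6"}, "ipv4"))  # set()
print(sorted(prefix_fecs_updated("ipv6", {"ipv6"}, "ipv6")))  # ['ipv4', 'ipv6']
```

The first call shows the black-holing case from the text. The second shows the adjacency timeout that updates every prefix FEC.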

The SDP of type LDP with far-end and tunnel-far-end options using IPv6 addresses is supported. Note that the addresses need not be of the same family (IPv6 or IPv4) for the SDP configuration to be allowed. The user can have an SDP with an IPv4 (or IPv6) control plane for the T-LDP session and an IPv6 (or IPv4) LDP FEC as the tunnel.
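The Python sketch below shows the family rules for an LDP SDP. It covers two rules from this section:

- The far-end and tunnel families may differ.
- IPv6 is allowed only on an LDP-only SDP, because IPv6 LSPs are supported only with LDP.

It is a configuration check written for illustration, not the CLI's validator. The function name and the lsp_types labels ("ldp", "rsvp", "bgp") are hypothetical.

```python
import ipaddress
from typing import Optional


def validate_ldp_sdp(far_end: str, lsp_types: set[str],
                     tunnel_far_end: Optional[str] = None) -> None:
    """Raise ValueError if this hypothetical SDP configuration is not allowed.

    far_end: address of the T-LDP control plane peer.
    tunnel_far_end: optional address selecting the LDP FEC used as the tunnel.
    lsp_types: transport types on the SDP, e.g. {"ldp"} or {"ldp", "rsvp"}.
    """
    control = ipaddress.ip_address(far_end)
    tunnel = ipaddress.ip_address(tunnel_far_end) if tunnel_far_end else control
    # The control plane and tunnel families are deliberately not compared:
    # IPv4 T-LDP over an IPv6 LDP FEC (or the reverse) is allowed.
    if 6 in (control.version, tunnel.version) and lsp_types != {"ldp"}:
        raise ValueError("IPv6 far-end or tunnel requires an LDP-only SDP")


validate_ldp_sdp("2001:db8::2", {"ldp"}, tunnel_far_end="192.0.2.2")  # accepted
```

An IPv6 far-end combined with an RSVP or BGP LSP raises an error. So does an IPv6 LDP tunnel mixed with an IPv4 LSP of another type.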

Because IPv6 LSP is only supported with LDP, the use of a far-end IPv6 address will not be allowed with a BGP or RSVP/MPLS LSP. In addition, the CLI will not allow an SDP with a combination of an IPv6 LDP LSP and an IPv4 LSP of a different control plane. As a result, the following commands are blocked within the SDP configuration context when the far-end is an IPv6 address:

Services that use the LDP control plane (such as T-LDP VPLS and R-VPLS, VLL, and IES/VPRN spoke interfaces) will have the spoke-SDP (PW) signaled with an IPv6 T-LDP session when the far-end option is configured to an IPv6 address. The spoke-SDP for these services binds by default to an SDP that uses an LDP IPv6 FEC, which prefix-matches the far-end address. The spoke-SDP can use a different LDP IPv6 FEC or an LDP IPv4 FEC as the tunnel by configuring the tunnel-far-end option. In addition, the IPv6 PW control word is supported with both data plane packets and VCCV OAM packets. Hash label is also supported with the above services, including the signaling and negotiation of hash label support using T-LDP (Flow sub-TLV) with the LDP IPv6 control plane. Finally, network domains are supported in VPLS.
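The Python sketch below shows how a spoke-SDP might pick its LDP FEC tunnel: by default it follows the far-end address, and tunnel-far-end overrides that. The text says only that the FEC "prefix matches" the far-end address. Modelling that as a longest-prefix match is an assumption, and the function name is hypothetical.

```python
import ipaddress
from typing import Optional, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def select_tunnel_fec(far_end: str, ldp_prefix_fecs: list[str],
                      tunnel_far_end: Optional[str] = None) -> Optional[Network]:
    """Pick the LDP prefix FEC used as the tunnel for a spoke-SDP.

    Assumption: "prefix matches" is modelled as a longest-prefix match of
    tunnel_far_end (if set) or far_end against the available LDP FECs.
    """
    target = ipaddress.ip_address(tunnel_far_end or far_end)
    matches = [net for net in map(ipaddress.ip_network, ldp_prefix_fecs)
               if target in net]  # containment is False across families
    return max(matches, key=lambda net: net.prefixlen, default=None)


fecs = ["2001:db8::/64", "2001:db8::2/128", "192.0.2.2/32"]
print(select_tunnel_fec("2001:db8::2", fecs))  # 2001:db8::2/128
print(select_tunnel_fec("2001:db8::2", fecs, tunnel_far_end="192.0.2.2"))  # 192.0.2.2/32
```

The second call shows an IPv6 T-LDP control plane riding over an LDP IPv4 FEC, which the text explicitly allows.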

• The sdp-id must match an SDP that uses an LDP IPv6 FEC.

• The far-end ip-address command is not supported with an LDP IPv6 transport tunnel. The user must reference a spoke-SDP using an LDP IPv6 SDP coming from the mirror source node.

• In the spoke-sdp sdp-id:vc-id command, vc-id should match that of the spoke-sdp configured in the mirror-destination context at the mirror source node.

• Configuring ingress-vc-label is optional; both static and t-ldp are supported.

far-end ip-address [vc-id vc-id] [ing-svc-label ingress-vc-label | tldp] [icb]

config>router>static-route-entry ip-prefix/prefix-length [mcast]

The MPLS OAM tools lsp-ping and lsp-trace are updated to operate with LDP IPv6 and support the following:

2. The src-ip-address option is extended to accept the IPv6 address of the sender node. If the user does not enter a source IP address, the system IPv6 address is used. If the user enters a source IP address of a different family than the LDP target FEC stack TLV, an error is returned and the test command is aborted.

4. For lsp-trace, the downstream information in DSMAP/DDMAP is encoded in the same family as the LDP control plane of the link LDP or targeted LDP session to the downstream peer.
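The Python sketch below shows the two lsp-ping and lsp-trace rules listed above: how the source address is chosen and checked, and which family the downstream mapping uses. It is an illustration only, and it makes these assumptions:

- The function names are hypothetical.
- When no source is given for an IPv4 FEC, the system IPv4 address is used. The text does not state this.

```python
import ipaddress
from typing import Optional, Union

Address = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]


def lsp_ping_source(target_fec: str, system_ipv4: str, system_ipv6: str,
                    src_ip_address: Optional[str] = None) -> Address:
    """Choose the source address for a hypothetical LDP lsp-ping test."""
    fec_family = ipaddress.ip_network(target_fec).version
    if src_ip_address is None:
        # IPv6 FEC: system IPv6 address, as described above.
        # IPv4 FEC: system IPv4 address (an assumption for this sketch).
        return ipaddress.ip_address(system_ipv6 if fec_family == 6 else system_ipv4)
    source = ipaddress.ip_address(src_ip_address)
    if source.version != fec_family:
        raise ValueError("source and target FEC families differ; test aborted")
    return source


def downstream_map_family(downstream_session_address: str) -> int:
    """lsp-trace: DSMAP/DDMAP use the family of the LDP session to the downstream peer."""
    return ipaddress.ip_address(downstream_session_address).version


print(lsp_ping_source("2001:db8::9/128", "192.0.2.1", "2001:db8::1"))  # 2001:db8::1
```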

Finally, vccv-ping and vccv-trace for a single-hop PW are updated to support IPv6 PW FEC 128 and FEC 129 as per RFC 6829. In addition, the PW OAM control word is supported with VCCV packets when the control-word option is enabled on the spoke-SDP configuration. The Channel Type field is set to 0x57, which indicates that the Associated Channel carries an IPv6 packet, as per RFC 4385.
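The Python sketch below builds the 4-byte PW Associated Channel Header that precedes an IPv6 VCCV packet when the control word is in use. It follows the RFC 4385 layout: a first nibble of 0001, a 4-bit version, 8 reserved bits, and a 16-bit Channel Type. The function name is hypothetical. The sketch builds only the header, not a full VCCV packet.

```python
import struct

# Channel Type for an IPv6 payload in the PW Associated Channel (RFC 4385).
CHANNEL_TYPE_IPV6 = 0x0057


def pw_ach(channel_type: int, version: int = 0) -> bytes:
    """Build the 4-byte PW Associated Channel Header.

    Layout: first nibble 0001, 4-bit version, 8 reserved bits (zero),
    16-bit Channel Type, all in network byte order.
    """
    first_byte = (0b0001 << 4) | (version & 0x0F)
    return struct.pack("!BBH", first_byte, 0, channel_type)


print(pw_ach(CHANNEL_TYPE_IPV6).hex())  # 10000057
```

The leading 0001 nibble is what lets the receiver tell the associated channel apart from PW data. That data starts with a 0000 control word.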

The SR OS implementation uses a 128-bit LSR-ID as defined in draft-pdutta-mpls-ldp-v2 to establish an LDP IPv6 session with a peer LSR. This allows a routable system IPv6 address to be used by default to bring up the LDP task on the router and to establish link LDP and T-LDP sessions to other LSRs, as is common practice with LDP IPv4 in existing customer deployments. More importantly, because each session uses a unique LSR-ID (32-bit for IPv4 and 128-bit for IPv6), two LSRs can establish separate, control-plane-independent LDP IPv4 and LDP IPv6 sessions over the same interface or set of interfaces.
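The Python sketch below shows why a family-specific LSR-ID allows two sessions between the same pair of routers: the sessions are keyed by different LSR-IDs, so they never collide. This is a conceptual model, not the SR OS session table. The key function is hypothetical, and requiring both LSR-IDs of a session to be of the same family is an assumption of the sketch.

```python
import ipaddress
from typing import Union

Address = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]


def session_key(local_lsr_id: str, peer_lsr_id: str) -> tuple[Address, Address]:
    """Key an LDP session by its local and peer LSR-IDs (both of one family)."""
    local = ipaddress.ip_address(local_lsr_id)
    peer = ipaddress.ip_address(peer_lsr_id)
    if local.version != peer.version:
        raise ValueError("LSR-IDs of one session must be of the same family")
    return (local, peer)


# Two routers with system addresses in both families.
sessions = {
    session_key("192.0.2.1", "192.0.2.2"): "LDP IPv4 session (32-bit LSR-ID)",
    session_key("2001:db8::1", "2001:db8::2"): "LDP IPv6 session (128-bit LSR-ID)",
}
print(len(sessions))  # 2
```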

The SR OS LDP implementation does not interoperate with an implementation that uses a 32-bit LSR-ID, as defined in draft-ietf-mpls-ldp-ipv6, to establish an IPv6 LDP session. The latter specifies that an LSR can send both IPv4 and IPv6 Hellos over an interface so that it can establish either an IPv4 or an IPv6 LDP session with LSRs on the same subnet. It therefore does not allow separate LDP IPv4 and LDP IPv6 sessions between two routers.

The SR OS LDP implementation complies with all other aspects of draft-ietf-mpls-ldp-ipv6, including support for the dual-stack capability TLV in the Hello message. An LSR uses this TLV to inform its peer that it is capable of establishing either an LDP IPv4 or an LDP IPv6 session, and to convey its IP family preference for the LDP Hello adjacency and thus for the resulting LDP session. This is required because the implementation described in draft-ietf-mpls-ldp-ipv6 allows only a single session between two LSRs. When both IPv4 and IPv6 Hellos are exchanged, both LSRs must agree whether that session is brought up over IPv4 or IPv6. The SR OS implementation has a separate session for each IP family between two LSRs. As such, it uses this TLV to indicate its family preference and to indicate that it supports resolving IPv6 FECs over an IPv4 LDP session.

This implementation supports advertising and resolving IPv6 prefix FECs over an LDP IPv4 session using a 32-bit LSR-ID, in compliance with draft-ietf-mpls-ldp-ipv6. When an SR OS-based LSR is introduced in a LAN with a broadcast interface, it can peer with third-party LSR implementations that support draft-ietf-mpls-ldp-ipv6 as well as with LSRs that do not. When it peers over the IPv4 LDP control plane with a third-party LSR implementation that does not support the draft, advertising IPv6 addresses or IPv6 FECs to that peer may cause the peer to bring down the IPv4 LDP session.

In other words, some deployed third-party LDP implementations are compliant with RFC 5036 for LDP IPv4 but are not compliant with RFC 5036 in handling IPv6 addresses or IPv6 FECs over an LDP IPv4 session. To address this issue, draft-ietf-mpls-ldp-ipv6 modifies RFC 5036. Implementations that comply with draft-ietf-mpls-ldp-ipv6 must check for the dual-stack capability TLV in the IPv4 Hello message from the peer. If the peer does not advertise this TLV, an LSR must not send IPv6 addresses and FECs to that peer. The SR OS implementation implements this change.
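The Python sketch below shows the advertisement rule just described. Over an LDP IPv4 session, IPv6 addresses and IPv6 prefix FECs are sent only if the peer's IPv4 Hello carried the dual-stack capability TLV. It models only that decision, not TLV parsing. The function name and the boolean flag are hypothetical.

```python
import ipaddress


def addresses_and_fecs_to_send(prefixes: list[str],
                               peer_hello_has_dual_stack_tlv: bool) -> list[str]:
    """Filter what is advertised to a peer over an LDP IPv4 session.

    Without the dual-stack capability TLV in the peer's IPv4 Hello, IPv6
    addresses and IPv6 prefix FECs must not be sent to that peer.
    """
    if peer_hello_has_dual_stack_tlv:
        return list(prefixes)
    return [p for p in prefixes if ipaddress.ip_network(p).version == 4]


to_advertise = ["192.0.2.1/32", "2001:db8::1/128", "198.51.100.0/24"]
print(addresses_and_fecs_to_send(to_advertise, peer_hello_has_dual_stack_tlv=False))
# ['192.0.2.1/32', '198.51.100.0/24']
```

Filtering on the sending side protects peers that follow RFC 5036 for IPv4 only. Such peers never see the IPv6 content that could make them bring down the session.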

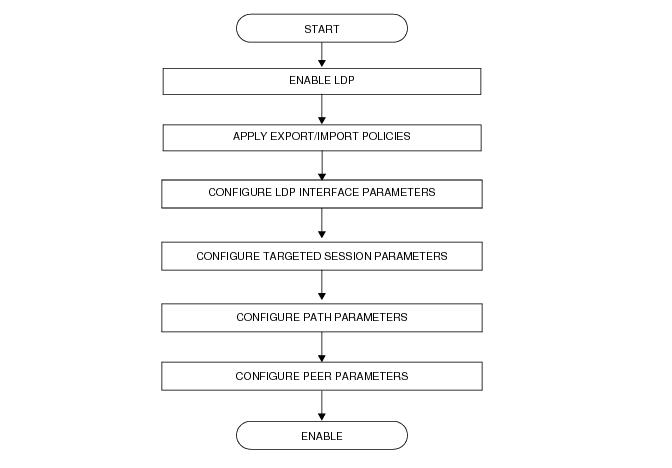

Figure 64 displays the process to provision basic LDP parameters.