Classification, queueing and scheduling

Flow classification

The flow classifier determines into which flow each incoming packet is mapped. On customer-role ingress ports, a number of flows can be defined, based on port, user priority, VLAN ID, IP-ToS field and destination MAC address. For each flow a rate controller can be specified (CIR/PIR value).

Apart from these flows based on input criteria, a default flow is defined for packets that do not fulfil any of the specified criteria for the flows, e.g. untagged packets that have no user priority field. Thus, untagged traffic is classified per port. All such traffic on a certain port is treated equally and is assigned a configurable default port user priority value, which maps the traffic to the appropriate queues.

A default user priority can be specified on port level to be added to each packet in the default flow (see Default user priority). Furthermore, the rate controller behaviour for the default flow can be specified. The same fixed mapping table from user priority to traffic class to egress queue is applied to packets in the default flow as to packets in the specified flows.
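The classification rule described above can be illustrated with a minimal sketch. The class names, the first-match evaluation order and the use of `None` as a wildcard are assumptions made for illustration only; they are not taken from the product.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Packet:
    port: int
    user_priority: Optional[int] = None  # None for untagged frames
    vlan_id: Optional[int] = None
    ip_tos: Optional[int] = None
    dst_mac: Optional[str] = None


@dataclass(frozen=True)
class FlowDescriptor:
    """Hypothetical flow descriptor; None means 'do not match on this field'."""
    name: str
    port: int
    user_priority: Optional[int] = None
    vlan_id: Optional[int] = None
    ip_tos: Optional[int] = None
    dst_mac: Optional[str] = None

    def matches(self, pkt: Packet) -> bool:
        if pkt.port != self.port:
            return False
        for field in ("user_priority", "vlan_id", "ip_tos", "dst_mac"):
            wanted = getattr(self, field)
            if wanted is not None and getattr(pkt, field) != wanted:
                return False
        return True


def classify(pkt: Packet, flows: List[FlowDescriptor],
             default_user_priority: Dict[int, int]) -> Tuple[str, Optional[int]]:
    """Return (flow name, user priority used for the queue mapping)."""
    for flow in flows:  # assumption: the first matching descriptor wins
        if flow.matches(pkt):
            return flow.name, pkt.user_priority
    # No descriptor matched: default flow with the port's default user priority
    # (0 if nothing was provisioned for the port).
    return "default", default_user_priority.get(pkt.port, 0)
```

For example, with a single descriptor matching user priority 5 on port 1, an untagged frame arriving on port 1 falls into the default flow and receives the default user priority provisioned for that port.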

Provided that Quality of Service - Classification, Queueing and Scheduling (QoS CQS, cf. Quality of Service configuration options) is enabled, each flow can be assigned a traffic class by using QoS profiles (see Quality of Service provisioning).

Each traffic class is associated with a certain egress queue (see Traffic class to queue assignment).

Ingress direction for network-role ports

For network-role ports, two cases need to be differentiated:

- On I-NNI ports, the flow identification (flow configuration) cannot be provisioned explicitly. I-NNI ports always have the default QoS profile assigned. On an I-NNI port, the only purpose of the flow classifier is to evaluate the traffic class. The traffic class determines the egress queue.

- E-NNI trunk ports may be split into so-called virtual ports, which can be provisioned by means of virtual port descriptors (VPDs). Explicit provisioning of the flow identification (flow configuration) enables the DiffServEdge function for this fraction of the network-role port. Ingress rate control of these virtual ports is the same as for customer-role ports.

Ingress rate control

Ingress rate control is a means to limit a user's access to the network in case the available bandwidth is too small to handle all offered ingress packets.

A rate controller has two parameters: a provisionable committed information rate (CIR; or PIR if CIR = 0, see below) and a committed burst size (CBS). The committed burst size is the committed information rate multiplied by 0.11 seconds (CBS = 0.11 seconds × CIR).
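As a worked example of the CBS formula, the following sketch computes the burst size for a given CIR. The unit of the CIR (bit/s) and the rounding down to an integer are assumptions; the text does not state them.

```python
def committed_burst_size(cir_bps: int) -> int:
    """Return CBS = 0.11 s x CIR in bits, assuming CIR is given in bit/s.

    Integer arithmetic is used to avoid floating-point rounding;
    fractional bits are rounded down (an assumption).
    """
    return cir_bps * 11 // 100


# A CIR of 10 Mbit/s gives a CBS of 1.1 Mbit, i.e. 137 500 bytes.
cbs_bits = committed_burst_size(10_000_000)
cbs_bytes = cbs_bits // 8
```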

Rate control is supported for every ingress flow on every customer-role port. There is one rate controller per flow. A “color unaware one-rate two-color marker” is supported, which can be seen as a degenerate case of the two-rate three-color marker. “Color unaware” means that the rate controller ignores and overwrites any dropping precedence given by an upstream network element (network-role ports with DiffServEdge function (E-NNI) only).

The rate controller is accurate within 5% of the rates specified for the CIR and PIR. The rate metering comprises the whole Ethernet MAC frame. Products may deviate from this and count only the IP packet size. The rate controller measurement accuracy is optimized for long frame traffic. Shorter frames are underestimated. Thus, it is recommended to dimension the transporting network so that it always has a headroom of at least 10% bandwidth compared to the committed information rate (CIR) provisioned.

A two-rate three-color marker is defined by three colors, specifying the dropping precedence, and two rates as delimiters between the colors. The marker marks each packet with a certain color, depending on the rate of arriving packets and the amount of credits in the token bucket. The size of the token bucket determines for how long and by how much a data burst may exceed the rate before the packets are marked with a higher dropping precedence.

The three colors indicate:

The two rates mean:

| Rate | Meaning |
|---|---|
| Committed Information Rate (CIR) | The committed information rate is the delimiter between green and yellow packets. If the information rate is less than the committed information rate, all frames will be admitted to the egress queues. These frames will be marked “green”, and have a low probability to be dropped at the egress queues. |
| Peak Information Rate (PIR) | The peak information rate is the delimiter between yellow and red packets. If the information rate is greater than the committed information rate (CIR), but less than the peak information rate (PIR), the frames will be admitted to the egress queues. They will be marked “yellow” and have a high probability to be dropped (“high dropping precedence”). If the information rate is greater than the PIR, the frames will be marked “red” and dropped immediately. For the LAN-VPN (M-LAN) operation mode the relationship between CIR and PIR is determined by the rate control mode. For the IEEE 802.1Q STP virtual switch mode and the provider bridge mode the relationship is as specified in the assigned QoS profile. Note that on the LKA4 unit, any PIR is interpreted as infinite (unless CIR = 0 or CIR = PIR). |
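The coloring rule described by the two rates can be sketched with two token buckets. This is a generic color-unaware two-rate three-color marker in the style of RFC 2698, not the TransLAN® implementation; the peak burst size `pbs`, the byte-based units and the class name are assumptions introduced for illustration.

```python
class TwoRateThreeColorMarker:
    """Color-unaware marker sketch; rates in bytes/s, bucket sizes in bytes."""

    def __init__(self, cir: float, pir: float, cbs: float, pbs: float):
        self.cir, self.pir = cir, pir
        self.cbs, self.pbs = cbs, pbs
        self.tc, self.tp = cbs, pbs  # both buckets start full
        self.last = 0.0              # time of the previous packet, in seconds

    def mark(self, now: float, size: int) -> str:
        # Refill both buckets for the time elapsed since the last packet.
        elapsed = now - self.last
        self.last = now
        self.tc = min(self.cbs, self.tc + elapsed * self.cir)
        self.tp = min(self.pbs, self.tp + elapsed * self.pir)
        if self.tp < size:   # above PIR: red, dropped immediately
            return "red"
        self.tp -= size
        if self.tc < size:   # between CIR and PIR: yellow
            return "yellow"
        self.tc -= size      # within CIR: green
        return "green"
```

Setting `pir` equal to `cir` and `pbs` equal to `cbs` makes the yellow band disappear, which corresponds to the degenerate one-rate two-color case mentioned above.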

Important! Provisioning of rate controllers does not apply to network-role ports (see Quality of Service (QoS) overview).

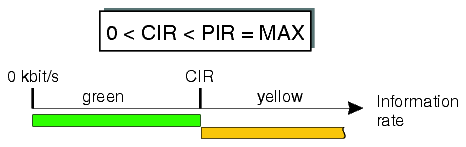

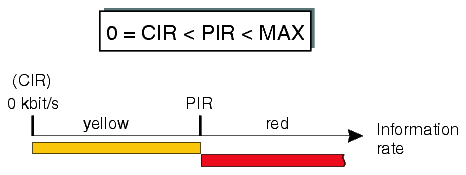

In general, the behavior of the rate controller is characterized as follows:

In case oversubscription support is disabled (QoS_osub = disabled), then the provisioning of the PIR is ignored and system-internally the value of the CIR is taken instead. This leads to a strict policing of all flows entering at a customer-role port of this VS.

Rate control modes

The rate controller can operate in two different modes:

1. Strict policing mode (CIR = PIR)

The strict policing mode allows each user to subscribe to a minimum committed SDH WAN bandwidth, or CIR (committed information rate). This mode will guarantee the bandwidth up to CIR but will drop any additional incoming frames at the ingress LAN port that would exceed the CIR.

All packets below CIR are marked green; all packets above PIR (= CIR) are marked red and dropped.

2. Oversubscription mode (CIR < PIR)

The oversubscription mode allows users to burst their data flow up to the maximum WAN bandwidth available at a given instant. When PIR is set equal to the maximum of the physical network port bandwidth, then a user is allowed to send more data than the specified CIR. The additional data flow above CIR has a higher dropping probability.

The following two cases can be differentiated in oversubscription mode.

Provisioning the rate control mode

The desired rate control mode can be chosen by enabling/disabling oversubscription support (QoS_osub = enabled/disabled), and by setting the CIR and PIR values. CIR and PIR values can be set by means of QoS profiles (see Quality of Service provisioning).

The setting of the QoS_osub configuration parameter in combination with the relationship between CIR and PIR determines which rate control mode becomes effective. If, for example, oversubscription support is enabled, and the relationship between CIR and PIR is CIR = PIR ≤ MAX, then the rate controller is operated in strict policing mode.
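The interaction between the QoS_osub parameter and the CIR/PIR relationship can be summarized in a short sketch. The function name and the rejection of PIR < CIR are assumptions; the text only covers CIR = PIR and CIR < PIR.

```python
from typing import Tuple


def effective_rate_control(qos_osub_enabled: bool, cir: int, pir: int) -> Tuple[str, int]:
    """Return (effective rate control mode, effective PIR)."""
    if not qos_osub_enabled:
        # The provisioned PIR is ignored; the CIR value is used instead.
        pir = cir
    if pir < cir:
        # Assumption: this combination is treated as a provisioning error.
        raise ValueError("PIR must not be smaller than CIR")
    mode = "strict policing" if cir == pir else "oversubscription"
    return mode, pir
```

With oversubscription support disabled, a profile with CIR = 10 and PIR = 50 therefore behaves as strict policing at a rate of 10.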

Important!

- Which of the rate control modes can actually be configured depends on the mode of operation (see Quality of Service configuration options).

- As a general rule it is recommended to use the oversubscription mode for TCP/IP applications, especially in case of meshed or ring network topologies where multiple end-users share the available bandwidth.

Dropper/Marker

Based on the indication of the rate controller, and the rate control mode for the flow, the dropper/marker will do the following:

| | No rate control | Oversubscription mode | Strict policing mode |
|---|---|---|---|
| Incoming rate < CIR | mark “green” | mark “green” | mark “green” |
| Incoming rate > CIR | mark “green” | mark “yellow” | drop |
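The decision table above can be written as a simple lookup. The mode strings and the boolean `exceeds_cir` input are illustrative names, not product parameters.

```python
# Action per rate control mode, keyed by whether the incoming rate exceeds CIR.
DROPPER_MARKER_ACTION = {
    "no rate control":  {False: "green", True: "green"},
    "oversubscription": {False: "green", True: "yellow"},
    "strict policing":  {False: "green", True: "drop"},
}


def dropper_marker_action(mode: str, exceeds_cir: bool) -> str:
    """Look up the dropper/marker action for a flow."""
    return DROPPER_MARKER_ACTION[mode][exceeds_cir]
```

The lookup only covers the CIR threshold shown in the table; in oversubscription mode, frames above the PIR are additionally marked red and dropped, as described for the rate controller.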

The dropper function decides whether to drop or forward a packet. TransLAN® cards implement deterministic tail dropping when the queue is full. Packets that are marked red are always dropped. If WAN Ethernet link congestion occurs, frames are dropped. Yellow packets are always dropped before any of the green packets are dropped. This is the only dependency on queue occupation and packet color that is currently present in the dropper function. No provisioning is needed.

Default user priority

A default user priority can be configured for each customer-role port. Possible values are 0 (lowest priority) … 7 (highest priority) in steps of 1. The default setting is 0.

Provisioning of the default user priority does not apply to network-role ports.

The default user priority is treated differently depending on the tagging mode:

- Incoming frames without a user priority encoding (untagged frames) are treated as if they had the default user priority.

- IEEE 802.1Q VLAN tagging mode and provider bridge mode

  Incoming frames without a user priority encoding (untagged frames) get a default user priority assigned. This C-UP may be equal to a user priority given by one of the provisioned flow descriptors. The subsequent traffic class assignment for this flow, however, will overwrite these C-UP bits again.

Traffic classes

At each ingress port, the traffic class (TC) for each frame is determined. At customer-role ports, this is done via the flow identification and the related provisioned traffic class. At network-role ports, the traffic class is directly derived from the p-bits of the outermost VLAN tag.

Depending on the operation mode, these traffic classes exist:

|

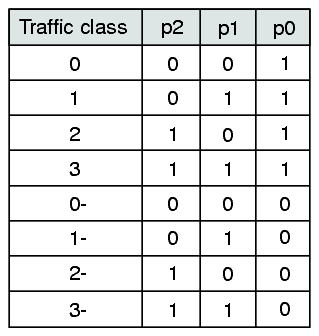

Provider bridge mode and IEEE 802.1Q VLAN tagging mode with encoding of the dropping precedence |

The traffic class is encoded in the user priority bits using p2 and p1. Thus, 4 traffic classes are defined: 0, 1, 2, 3. |

|

IEEE 802.1Q VLAN tagging mode without encoding of the dropping precedence |

The traffic class is encoded in the user priority bits using p2, p1, and p0. Thus, 8 traffic classes are defined: 0, 0-, 1, 1-, 2, 2-, 3, 3-. The “n” traffic classes differ from the “n-” traffic classes in the value of the p0 bit. |

Notes:

The support of dropping precedence encoding and evaluation can be enabled or disabled per virtual switch by means of the QoS_osub configuration parameter (QoS_osub = enabled/disabled). All virtual switches belonging to the same TransLAN® network must be provisioned equally for their TPID and this QoS_osub configuration parameter. These tables show the traffic class encoding in the user priority bits:

With oversubscription:

Without oversubscription:

For the IEEE 802.1Q VLAN tagging mode with oversubscription support (QoS_osub = enabled) it is recommended not to use the n- classes, otherwise all frames will always be marked yellow (i.e. they will have a higher dropping precedence; p0 = 0). In the provider bridge mode, any assignment of an n- class will be recognized as the related n class (tolerant system behavior for inconsistent provisioning).
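The encoding described in this section can be sketched as a decoder for the three user priority bits. The mapping of p0 = 0 to the “n-” classes and to “yellow” is inferred from the note above (an n- class reads as yellow, p0 = 0, when oversubscription support is enabled); treat it as an assumption rather than a confirmed bit layout.

```python
from typing import Optional, Tuple


def decode_pbits(pbits: int, qos_osub_enabled: bool) -> Tuple[str, Optional[str]]:
    """Return (traffic class, color) for user priority bits p2 p1 p0."""
    if not 0 <= pbits <= 7:
        raise ValueError("user priority must be in the range 0..7")
    tc = pbits >> 1  # p2 and p1 carry the traffic class 0..3
    p0 = pbits & 1
    if qos_osub_enabled:
        # p0 carries the dropping precedence (assumed: 0 = yellow, 1 = green).
        return str(tc), "yellow" if p0 == 0 else "green"
    # Without dropping precedence encoding, p0 separates "n" from "n-"
    # (assumed: p0 = 0 denotes the "n-" class).
    return (str(tc) if p0 else f"{tc}-"), None
```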

Traffic class to queue assignment

The assignment of the traffic classes to the egress queues is as follows:

| Transparent tagging | | IEEE 802.1Q VLAN tagging and IEEE 802.1ad VLAN tagging (provider bridge mode) | |
|---|---|---|---|
| Traffic class | Queue | Traffic class | Queue |
| 3, and Internal use | 4 | Internal use | 3 |
| 2 | 3 | 3 (and 3-) | 4 |
| 1 | 2 | 2 (and 2-) | 2 |
| 0 | 1 | 1 (and 1-) and 0 (and 0-) | 1 |

Notes:

“Internal use” means that the queue is used for network management traffic (spanning tree BPDUs or GVRP PDUs, for example).
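The assignment table can be captured as a lookup. The keys `"transparent"`, `"vlan"` and `"internal"` are illustrative labels chosen for this sketch.

```python
# Egress queue per traffic class, following the assignment table above.
QUEUE_BY_TRAFFIC_CLASS = {
    "transparent": {"internal": 4, "3": 4, "2": 3, "1": 2, "0": 1},
    # IEEE 802.1Q VLAN tagging and IEEE 802.1ad (provider bridge mode)
    "vlan": {"internal": 3, "3": 4, "2": 2, "1": 1, "0": 1},
}


def egress_queue(tagging_mode: str, traffic_class: str) -> int:
    """Return the egress queue; an 'n-' class uses the same queue as 'n'."""
    return QUEUE_BY_TRAFFIC_CLASS[tagging_mode][traffic_class.rstrip("-")]
```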

Queueing

The egress treatment is the same for customer-role and network-role ports.

Every port has four associated egress queues. The queues 1 and 2 are to be used for delay-insensitive traffic (for instance file transfer); the queues 3 and 4 are to be used for delay-sensitive traffic (for instance voice or video).

Please refer to Traffic class to queue assignment for the assignment of the traffic classes to the egress queues.

Repeater mode

In the repeater mode, there is no queueing process as described above. All frames go through the same queue.

Scheduler

The preceding functional blocks assure that all packets are mapped into one of the egress queues, and that no further packets need to be dropped.

The scheduler determines the order in which packets from the four queues are forwarded. Each of the four queues can be scheduled in one of two operational modes, strict priority or weighted bandwidth. Any combination of queues in either of the two modes is allowed. When exactly one queue is in weighted bandwidth mode, it is interpreted as a strict priority queue with the lowest priority.

Provided that Quality of Service - Classification, Queueing and Scheduling (QoS CQS, cf. Quality of Service configuration options) is enabled, the queue scheduling method can be configured as follows:

| Queue scheduling method | |
|---|---|
| Strict priority | The packets in strict priority queues are forwarded strictly according to the queue ranking. The queue with the highest ranking will be served first. A queue with a certain ranking will only be served when the queues with a higher ranking are empty. The strict priority queues are always served before the weighted bandwidth queues. |
| Weighted bandwidth | The weights of the weighted bandwidth queues will be summed up; each queue gets a portion relative to its weight divided by this summed weight, the so-called normalized weight. The packets in the weighted bandwidth queues are handled in a Round-Robin order according to their normalized weight. |
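The two scheduling methods can be combined in a small selection routine. This is a sketch only: it counts packets rather than bytes and uses a generic smooth weighted round-robin scheme, which is an assumption and not necessarily the algorithm used on the card. The configuration format (queue number mapped to a method and a weight) is also hypothetical.

```python
from typing import Dict, Iterable, Optional, Tuple


class Scheduler:
    def __init__(self, config: Dict[int, Tuple[str, int]]):
        # config: queue number -> ("strict" | "weighted", weight)
        self.config = config
        self.credit = {q: 0 for q, (m, _) in config.items() if m == "weighted"}

    def next_queue(self, non_empty: Iterable[int]) -> Optional[int]:
        """Return the queue to serve next, or None if all queues are empty."""
        queues = sorted(non_empty)
        strict = [q for q in queues if self.config[q][0] == "strict"]
        if strict:
            # Strict priority queues always go first, highest ranking first.
            return max(strict, key=lambda q: self.config[q][1])
        weighted = [q for q in queues if self.config[q][0] == "weighted"]
        if not weighted:
            return None
        # Smooth weighted round robin: each queue earns its weight per round,
        # the queue with the most credit is served and pays the summed weight.
        total = sum(self.config[q][1] for q in weighted)
        for q in weighted:
            self.credit[q] += self.config[q][1]
        chosen = max(weighted, key=lambda q: self.credit[q])
        self.credit[chosen] -= total
        return chosen
```

In this sketch a single weighted bandwidth queue is simply served after all strict priority queues, which matches its interpretation as the lowest-priority strict queue.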

Each of the two modes has its well-known advantages and drawbacks. Strict priority queues will always be served before weighted bandwidth queues. So with strict priority, starvation of the lower priority queues cannot be excluded. Starvation should be avoided by assuring that upstream policing is configured such that the queue is only allowed to occupy some fraction of the output link's capacity. This can be done by setting the strict policing rate control mode for the flows that map into this queue, and specifying an appropriate value for the CIR. The strict priority scheme can be used for low-latency traffic such as Voice over IP and internal protocol data such as spanning tree BPDUs or GVRP PDUs.

Weighted bandwidth queues are useful to assign a guaranteed bandwidth to each of the queues. The bandwidth can of course only be guaranteed if concurrent strict priority queues are appropriately rate-limited.

Usually the queue with the lowest number also has the lowest ranking order, but the ranking order of the strict priority queues can be redefined.

Important! It is recommended not to change the mode and ranking of the queue which is used by protocol packets like spanning tree BPDUs and GVRP PDUs (queue 3 or queue 4, respectively; cf. Traffic class to queue assignment).

Weight

A weight can be assigned to each port’s egress queue in order to define the ranking of the queue.

The weight of a strict priority queue has a significance compared to the weight of other strict priority queues only.

The weight of a weighted bandwidth queue has a significance compared to the weight of other weighted bandwidth queues only.

The weights of the weighted bandwidth queues are normalized to 100%, whereas the normalized weights of the strict priority queues indicate just ordering.

Example

The following table shows an example of a scheduler table:

| Queue | Queue scheduling method | Weight | Normalized weight |
|---|---|---|---|
| 1 | Weighted bandwidth | 5 | 50% |
| 2 | Strict priority | 9 | 1 |
| 3 | Weighted bandwidth | 5 | 50% |
| 4 | Strict priority | 15 | 2 |

The strict priority queues are served before the weighted bandwidth queues. The strict priority queue with the highest weight is served first, queue 4 in this example.

In this example, after serving the strict priority queues 4 and 2, the remaining bandwidth is evenly divided over queues 1 and 3.
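The normalized weights in the example can be reproduced with a short calculation. The function name and the input format are assumptions; the handling of equal weights among strict priority queues is not specified in the text and is left to the sort order here.

```python
from typing import Dict, Tuple, Union


def normalized_weights(config: Dict[int, Tuple[str, int]]) -> Dict[int, Union[int, float]]:
    """config: queue number -> ("strict" | "weighted", weight).

    Strict priority queues get a rank (1 = lowest ranking); weighted
    bandwidth queues get their share of the summed weight in percent.
    """
    result: Dict[int, Union[int, float]] = {}
    strict = sorted((q for q, (m, _) in config.items() if m == "strict"),
                    key=lambda q: config[q][1])
    for rank, q in enumerate(strict, start=1):
        result[q] = rank
    weighted = [q for q, (m, _) in config.items() if m == "weighted"]
    total = sum(config[q][1] for q in weighted)
    for q in weighted:
        result[q] = 100 * config[q][1] / total
    return result


example = {1: ("weighted", 5), 2: ("strict", 9), 3: ("weighted", 5), 4: ("strict", 15)}
print(normalized_weights(example))
```

For the example table this yields rank 1 for queue 2, rank 2 for queue 4, and 50% each for queues 1 and 3.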

Depending on the mode of operation, queue 3 or queue 4 is used for network management traffic, for instance for the spanning tree protocol (see Traffic class to queue assignment). Hindering this traffic can influence Ethernet network stability.

Default settings

These are the default settings of the queue scheduling method and weight:

| Queue | Queue scheduling method | Weight |
|---|---|---|
| 1 | Strict priority | 1 |
| 2 | Strict priority | 2 |
| 3 | Strict priority | 3 |
| 4 | Strict priority | 239 |

Note: To ensure network stability (internal (r)STP BPDUs must arrive), queue 4 is served with strict priority scheduling with a fixed priority of 239.

Alcatel-Lucent – Proprietary

Use pursuant to applicable agreements