What are the NFM-P system redundancy models?

Overview


CAUTION Service Disruption

It is recommended that you deploy the primary main server and the primary database in the same geographical location and LAN, which increases NFM-P system performance and fault tolerance.

A redundant NFM-P system provides greater fault tolerance by ensuring that there is no single point of software failure in the NFM-P management network. A redundant system consists of the following components:

-

primary main server

-

standby main server

-

primary database

-

standby database

The current state of a component defines the primary or standby role of the component. The primary main server actively manages the network and the primary database is open in read/write mode. When a standby component detects a primary component failure, it automatically changes roles from standby to primary. You can also change the role of a component using the NFM-P client GUI or a CLI script.

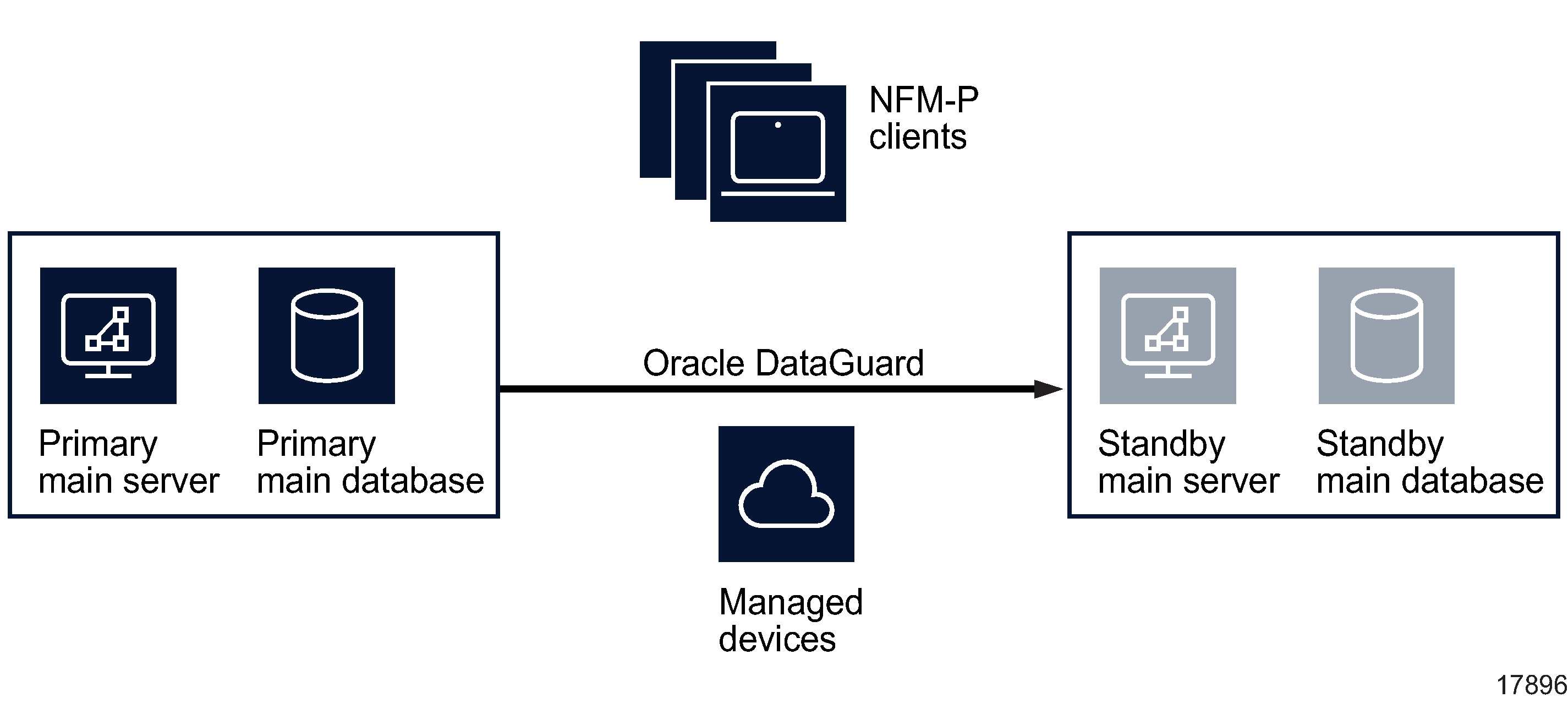

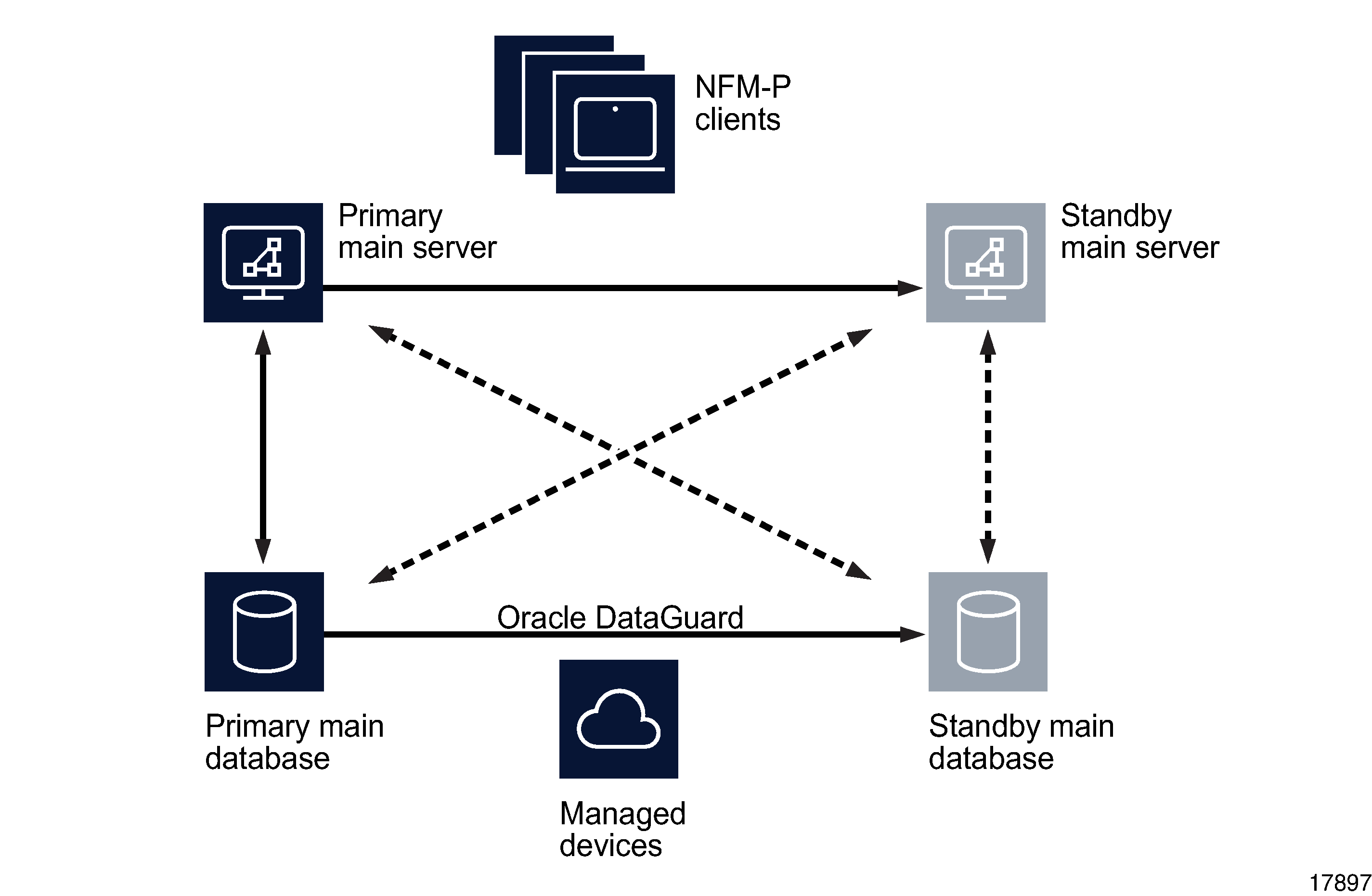

The NFM-P supports collocated and distributed system redundancy. A collocated system requires two stations that each host a main server and database. A distributed system requires four stations that each host a main server or database. Each main server and database is logically independent, regardless of the deployment type.

The primary and standby main servers communicate with the redundant databases and periodically verify server redundancy. If the standby main server fails to reach the primary main server within 60 seconds, the standby main server becomes the primary main server. See How do I respond to redundancy failures? for information about various NFM-P redundancy failure scenarios.
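The following Python sketch illustrates the takeover rule described above. It assumes a simple timestamp-based check on the standby side; the `MainServer` class and its methods are hypothetical and are not part of the NFM-P software, which the text describes only in terms of the 60-second threshold.

```python
from dataclasses import dataclass

# Threshold stated in the text: a standby main server takes over if it
# cannot reach the primary main server within 60 seconds.
TAKEOVER_TIMEOUT_S = 60.0


@dataclass
class MainServer:
    name: str
    role: str                    # "primary" or "standby"
    last_contact_s: float = 0.0  # time of the last successful peer check

    def record_peer_contact(self, now_s: float) -> None:
        """Note a successful redundancy check with the peer main server."""
        self.last_contact_s = now_s

    def check_peer(self, now_s: float) -> bool:
        """Promote this server if it is the standby and the peer has been
        unreachable for the takeover timeout; return True on a role change."""
        if self.role == "standby" and now_s - self.last_contact_s >= TAKEOVER_TIMEOUT_S:
            self.role = "primary"  # a server activity switch
            return True
        return False


standby = MainServer("server-b", "standby")
standby.record_peer_contact(100.0)
print(standby.check_peer(130.0), standby.role)  # False standby
print(standby.check_peer(160.0), standby.role)  # True primary
```

In this model, only a server in the standby role can be promoted, so a primary main server never changes its own role because of the timeout.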

A main database uses the Oracle DataGuard function to maintain redundancy. During a redundant NFM-P installation or upgrade, the Oracle DataGuard synchronization level is set to real-time apply, which ensures that the primary and standby databases are synchronized.

Figure 17-1: Collocated redundant NFM-P deployment

Figure 17-2: Distributed redundant NFM-P deployment

A main server role change is called a server activity switch. An automatic database role change is called a failover; a manual database role change is called a switchover.

In a typical redundant NFM-P system, the primary main server and database are in one facility, and the standby main server and database are in a geographically separate facility. To ensure that the primary components are in the same LAN after an activity switch or failover, you can configure automatic database realignment during a main server installation or upgrade. See Automatic database realignment for more information.
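The realignment goal can be sketched as a check that the primary main server and the primary database share a facility, followed by a database role change when they do not. This is a minimal model only: the `Component` class, the `site` field, and the `realign` function are hypothetical, and the sketch does not reflect how the NFM-P performs automatic database realignment internally.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Component:
    name: str
    site: str  # hypothetical facility/LAN identifier
    role: str  # "primary" or "standby"


def needs_realignment(servers: List[Component], databases: List[Component]) -> bool:
    """True if the primary main server and primary database are in different facilities."""
    primary_server = next(s for s in servers if s.role == "primary")
    primary_db = next(d for d in databases if d.role == "primary")
    return primary_server.site != primary_db.site


def realign(servers: List[Component], databases: List[Component]) -> bool:
    """Swap the roles of the two databases when they are misaligned; return True if a change occurred."""
    if not needs_realignment(servers, databases):
        return False
    for db in databases:
        db.role = "standby" if db.role == "primary" else "primary"
    return True


# After a server activity switch, the primary server is at site-b but the
# primary database is still at site-a.
servers = [Component("server-a", "site-a", "standby"), Component("server-b", "site-b", "primary")]
databases = [Component("db-a", "site-a", "primary"), Component("db-b", "site-b", "standby")]
print(realign(servers, databases))  # True
print([(d.name, d.role) for d in databases])  # [('db-a', 'standby'), ('db-b', 'primary')]
```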

The NFM-P GUI, browser, and XML API clients must always communicate with the current primary main server. After a server activity switch or switchover:

-

The GUI clients automatically connect to the new primary main server, which is the former standby.

-

The XML API and browser clients do not automatically connect to the new primary main server; you must redirect each browser and XML API client to the new primary main server.

The following general conditions apply to NFM-P system redundancy:

-

The main servers and databases must each be redundant. For example, you cannot have redundant servers and a standalone database.

-

The network that contains a redundant NFM-P system must meet the latency and bandwidth requirements described in the NSP NFM-P Planning Guide.

Note: To provide hardware fault tolerance in addition to software redundancy, it is recommended that you use redundant physical links between the primary and standby servers and databases to ensure there is no single point of network or hardware failure.

-

The server and database stations require the same OS version and patch level.

-

The server stations require identical disk layouts and partitioning.

-

The database stations require identical disk layouts and partitioning.

-

Only the nsp user on a main server station can perform a server activity switch.

-

The following users can perform a database switchover:

Auxiliary server redundancy

NFM-P auxiliary servers are optional servers that extend the network management processing engine by distributing server functions among multiple stations. An NFM-P main server controls task scheduling and sends task requests to auxiliary servers. Each auxiliary server is installed on a separate station, and responds to processing requests only from the current primary main server in a redundant system.

When an auxiliary server cannot connect to the primary main server or database, it re-initializes and continues trying to connect until it succeeds or, in the case of a database failover, until the main server directs it to the peer database.
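A minimal sketch of the auxiliary server reconnection behavior, assuming that a redirect from the main server can be polled before each attempt. The function and callback names are hypothetical, and the `max_attempts` bound exists only so that the sketch terminates; the text describes the auxiliary server as retrying until it succeeds.

```python
from typing import Callable, Optional


def connect_loop(try_connect: Callable[[str], bool],
                 target: str,
                 poll_redirect: Callable[[], Optional[str]],
                 max_attempts: int) -> Optional[str]:
    """Retry a connection, switching to a new target if the main server
    issues a redirect (for example, to the peer database after a failover).

    Returns the target that accepted the connection, or None if every
    attempt failed. max_attempts bounds the sketch only."""
    for _ in range(max_attempts):
        redirect = poll_redirect()
        if redirect is not None:
            target = redirect
        if try_connect(target):
            return target
        # A real auxiliary server re-initializes here before retrying.
    return None


# db-a has failed; on the third attempt the main server redirects to db-b.
redirects = iter([None, None, "db-b"])
print(connect_loop(lambda t: t == "db-b", "db-a", lambda: next(redirects, None), 10))  # db-b
```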

After startup, an auxiliary server waits for initialization information from a main server. An auxiliary server restarts if it does not receive all required initialization information within five minutes.
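The five-minute initialization rule can be expressed as a deadline check. The initialization item names in the usage example are hypothetical; the text does not list the initialization information that a main server sends.

```python
from typing import Dict, Iterable

# Timeout stated in the text.
INIT_TIMEOUT_S = 5 * 60


def init_outcome(required: Iterable[str], received_at: Dict[str, float], start_s: float) -> str:
    """Return "ready" if every required item arrived within five minutes of
    start_s, otherwise "restart". received_at maps item name -> arrival time (s)."""
    deadline = start_s + INIT_TIMEOUT_S
    for item in required:
        arrival = received_at.get(item)
        if arrival is None or arrival > deadline:
            return "restart"
    return "ready"


required = {"topology", "schedule"}  # hypothetical item names
print(init_outcome(required, {"topology": 10.0, "schedule": 200.0}, 0.0))  # ready
print(init_outcome(required, {"topology": 10.0}, 0.0))                     # restart
```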

Note: NFM-P system performance may degrade when a main server loses contact with a number of auxiliary servers that exceeds the number of Preferred auxiliary servers.

When an auxiliary server fails to respond to a primary main server, the main server tries repeatedly to establish communication before it generates an alarm. The alarm clears when the communication is re-established.
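The alarm behavior resembles a failure counter with a threshold. The text does not state how many attempts the main server makes before it raises the alarm, so `retries_before_alarm` is a hypothetical parameter, and the class is an illustration rather than NFM-P code.

```python
from dataclasses import dataclass


@dataclass
class AuxServerMonitor:
    retries_before_alarm: int  # hypothetical; the text gives no value
    failures: int = 0
    alarm_raised: bool = False

    def on_poll(self, responded: bool) -> None:
        """Record one communication attempt with the auxiliary server."""
        if responded:
            # Communication re-established: reset the counter and clear the alarm.
            self.failures = 0
            self.alarm_raised = False
        else:
            self.failures += 1
            if self.failures >= self.retries_before_alarm:
                self.alarm_raised = True


monitor = AuxServerMonitor(retries_before_alarm=3)
for responded in (False, False, False):
    monitor.on_poll(responded)
print(monitor.alarm_raised)  # True
monitor.on_poll(True)
print(monitor.alarm_raised)  # False
```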

Auxiliary server types

Each main server configuration specifies the auxiliary servers in the NFM-P system. For each auxiliary server, the configuration includes the address and the auxiliary server type, which is one of the following:

-

Preferred—processes requests from the main server during normal operation

-

Reserved—processes requests when a Preferred auxiliary server is unavailable

-

Remote Standby—unused by the main server; processes requests only from the peer main server, and only when the peer main server is operating as the primary main server

If a Preferred auxiliary server is unresponsive, the main server directs the requests to another Preferred auxiliary server, if available, or to a Reserved auxiliary server. When the unresponsive Preferred auxiliary server returns to service, the main server reverts to the Preferred auxiliary server and stops sending requests to any Reserved auxiliary server that had assumed the Preferred workload.
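The request-routing order described above can be sketched as a stateless selection function. Because the function re-evaluates availability on every request, requests return to a Preferred auxiliary server as soon as that server is available again, which models the revertive behavior. The `AuxServer` class, the `route` function, and the addresses are hypothetical; the sketch also simplifies load distribution to picking the first available server.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AuxServer:
    address: str
    aux_type: str  # "Preferred", "Reserved", or "Remote Standby"
    available: bool = True


def route(intended: str, configured: List[AuxServer]) -> Optional[str]:
    """Choose the auxiliary server for a request normally handled by the
    Preferred server at `intended`. Remote Standby servers are never chosen."""
    by_address = {a.address: a for a in configured}
    if by_address[intended].available:
        return intended
    # Fall back to another available Preferred server, then to a Reserved one.
    for wanted_type in ("Preferred", "Reserved"):
        for aux in configured:
            if aux.aux_type == wanted_type and aux.available:
                return aux.address
    return None


aux_servers = [
    AuxServer("192.0.2.1", "Preferred"),
    AuxServer("192.0.2.2", "Reserved"),
    AuxServer("192.0.2.3", "Remote Standby"),
]
aux_servers[0].available = False
print(route("192.0.2.1", aux_servers))  # 192.0.2.2
aux_servers[0].available = True
print(route("192.0.2.1", aux_servers))  # 192.0.2.1
```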

An auxiliary server that is specified as a Remote Standby auxiliary server is a Preferred or Reserved auxiliary server of the peer main server. The Remote Standby designation of an auxiliary server in a main server configuration ensures that the main server does not use the auxiliary server under any circumstances. Such a configuration may be required when the network latency between the primary and standby main servers is high, for example, when the NFM-P system is geographically dispersed.

Alternatively, if all main and auxiliary servers are in the same physical facility and the network latency between components is not a concern, no Remote Standby designation is required, and you can apply the Preferred and Reserved designations based on your requirements. For example, you may choose to configure a Preferred auxiliary server of one main server as the Reserved auxiliary server of the peer main server, and a Reserved auxiliary server of one main server as the Preferred auxiliary server of the peer main server.

Auxiliary database geographic redundancy

A geographically redundant, or geo-redundant, auxiliary database has one cluster of one, or three or more, auxiliary database stations in each NSP data center. The cluster that is designated the active cluster processes transactions; the other cluster acts as a warm standby in the event of an active cluster failure.

The active cluster replicates incremental database content changes to the standby cluster every 30 minutes. Because updates to the active cluster are not replicated immediately, some data loss may occur during a failover or switchover.
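As a worked example of the replication interval, the following sketch returns the changes at risk when the active cluster fails. It assumes that replication runs on a fixed 30-minute schedule starting at time 0 and completes instantly. Under those assumptions, up to 30 minutes of changes can be lost. The function name and the minute-based timestamps are hypothetical.

```python
from typing import List

# Interval stated in the text.
REPLICATION_INTERVAL_MIN = 30


def changes_at_risk(change_times: List[float], failure_time: float) -> List[float]:
    """Return the change timestamps (minutes) made after the last completed
    replication and before the active cluster failed."""
    last_replication = (failure_time // REPLICATION_INTERVAL_MIN) * REPLICATION_INTERVAL_MIN
    return [t for t in change_times if last_replication < t <= failure_time]


# Replications complete at 0, 30, 60, ... minutes; the active cluster fails at 45.
print(changes_at_risk([5, 29, 31, 44], 45))  # [31, 44]
```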

An analytics server always uses the active auxiliary database as a data source. In the event of an active cluster failure and a resulting failover to the standby cluster, no operator intervention is required to redirect an analytics server. After an auxiliary database failover, an analytics server automatically uses the auxiliary database cluster that has the active role.

An auxiliary database failover is non-revertive; a manual switchover is required to restore the former cluster roles when the failed cluster returns to service.
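The non-revertive role behavior can be modeled as a small state machine. A failure of the active cluster swaps the roles, a cluster that returns to service stays in the standby role, and only a manual switchover restores the original roles. The data center names are hypothetical, and the rule that a switchover requires both clusters to be in service is an assumption of this sketch. The `active()` method stands in for the analytics server's choice of data source.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class AuxDbPair:
    roles: Dict[str, str] = field(default_factory=lambda: {"dc1": "active", "dc2": "standby"})
    in_service: Dict[str, bool] = field(default_factory=lambda: {"dc1": True, "dc2": True})

    def active(self) -> str:
        """The cluster an analytics server would use as its data source."""
        return next(dc for dc, role in self.roles.items() if role == "active")

    def fail(self, dc: str) -> None:
        """A failure of the active cluster triggers an automatic failover."""
        self.in_service[dc] = False
        if self.roles[dc] == "active":
            self._swap()

    def restore(self, dc: str) -> None:
        """Non-revertive: the restored cluster keeps the standby role."""
        self.in_service[dc] = True

    def switchover(self) -> None:
        """Manual role swap; assumed to require both clusters in service."""
        if all(self.in_service.values()):
            self._swap()

    def _swap(self) -> None:
        for dc in self.roles:
            self.roles[dc] = "standby" if self.roles[dc] == "active" else "active"


pair = AuxDbPair()
pair.fail("dc1")
pair.restore("dc1")
print(pair.active())  # dc2
pair.switchover()
print(pair.active())  # dc1
```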

See How do I perform an auxiliary database switchover? for information about switching auxiliary database cluster roles.

IPDR file transfer redundancy

The NFM-P can forward collected AA accounting and AA Cflowd statistics in IPDR format to redundant target servers for retrieval by OSS applications. For additional redundancy, you can configure multiple NSP Flow Collectors to forward AA Cflowd statistics data to the target servers. Such a configuration provides a high degree of fault tolerance in the event of an NFM-P component failure.

AA accounting statistics collection

An NFM-P main or auxiliary server forwards AA accounting statistics files to the target servers specified in an IPDR file transfer policy. An IPDR file transfer policy also specifies the file transfer type and user credentials, and the destination directory on the target server. See How do I configure the IPDR file-transfer policy? for configuration information.

Each IPDR file is transferred as it is closed. A file that cannot be transferred is retained and an error is logged. Corrupt files, and files that cannot be created, are stored in a directory named “bad” below the specified destination directory on the server.

Note: A main or auxiliary server does not retain successfully transferred IPDR files; each successfully transferred file is deleted after the transfer.

After you configure the IPDR file transfer policy, the main or auxiliary server that collects AA accounting statistics forwards the statistics files to the primary transfer target named in the policy. If the server is unable to perform a file transfer, for example, because of an unreachable target, invalid user credentials, or a disk-capacity issue, the main or auxiliary server attempts to transfer the files to the alternate target, if one is specified in the policy. Statistics data is sent to only one target; no statistics data is duplicated on the target servers.
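The target-selection logic for a single closed IPDR file can be sketched as follows. The callbacks for transferring, deleting, and logging are hypothetical placeholders for the actual file transfer mechanism. The sketch models per-file fallback to the alternate target, which is a simplification of the policy behavior described above.

```python
from typing import Callable, List, Optional


def forward_file(path: str,
                 primary: str,
                 alternate: Optional[str],
                 transfer: Callable[[str, str], bool],
                 delete: Callable[[str], None],
                 log_error: Callable[[str], None]) -> Optional[str]:
    """Send one closed IPDR file to a single target.

    Tries the primary target, then the alternate target if one is set.
    Returns the target that received the file, or None if it was retained."""
    targets: List[str] = [primary] if alternate is None else [primary, alternate]
    for target in targets:
        if transfer(path, target):
            delete(path)   # successfully transferred files are not retained
            return target  # data goes to one target only
    log_error(f"transfer of {path} failed; file retained")
    return None


deleted: List[str] = []
errors: List[str] = []
# The primary target is unreachable, so the file goes to the alternate.
print(forward_file("acct-001.ipdr", "target-1", "target-2",
                   lambda p, t: t == "target-2", deleted.append, errors.append))  # target-2
```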

AA Cflowd statistics collection

In an NFM-P system that uses NSP Flow Collectors, each collector transfers AA Cflowd statistics files to a target server for OSS retrieval.

You can specify the same or a different target for each NSP Flow Collector. For example, to ensure minimal data loss in the event of a collector or target failure, you can configure two collectors to:

-

collect the same AA Cflowd statistics

-

transfer the statistics files to different targets

In such a configuration, if files are absent from a target, or the target is unreachable, the files are available from the other target.
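The benefit of the two-collector configuration can be illustrated from the OSS side as the union of the data held on every reachable target. Because two collectors may name their files differently, the sketch keys the data by a hypothetical collection-interval identifier rather than by file name. A target value of `None` represents an unreachable target.

```python
from typing import Dict, Optional, Set


def covered_intervals(targets: Dict[str, Optional[Set[int]]]) -> Set[int]:
    """Union of collection intervals available on all reachable targets.
    A value of None marks an unreachable target."""
    covered: Set[int] = set()
    for intervals in targets.values():
        if intervals is not None:
            covered |= intervals
    return covered


# Target 1 is missing interval 3, and target 2 is missing interval 2.
print(sorted(covered_intervals({"target-1": {1, 2, 4}, "target-2": {1, 3, 4}})))  # [1, 2, 3, 4]
# Target 2 is unreachable; target 1 still provides its intervals.
print(sorted(covered_intervals({"target-1": {1, 2, 3, 4}, "target-2": None})))  # [1, 2, 3, 4]
```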

See the NSP Installation and Upgrade Guide for information about configuring NSP Flow Collector functions.