QoS policies

Overview

Policies group and manage the QoS elements that determine how traffic is treated on the device. The following policy types are available:

- Access ingress policies specify how QoS marking is interpreted, how customer traffic is mapped into queues, and how queues are classified.

- Access egress policies specify how customer traffic is mapped into queues, as well as queue classification, queue parameters, and QoS marking.

- Network policies specify QoS marking to forwarding class mapping on ingress and forwarding class to QoS marking mapping on egress.

- Network queue policies specify CIR, PIR, and burst sizes for each queue. Forwarding class to queue mapping is not configurable.

- Scheduler policies specify custom settings and a hierarchical structure of virtual schedulers to replace the default hardware schedulers on the device.

- Port scheduler policies specify bandwidth allocation at the egress port level.

- HSMDA scheduler policies specify schedulers that define egress port and ingress scheduler behavior on an HSMDA.

- Slope policies specify WRED settings, applied to daughter cards or ports, that customize how in-profile and out-of-profile traffic is processed in hardware buffers.

- HSMDA slope policies specify settings that control how the depth of HSMDA queues is managed.

- ATM QoS policies specify settings that customize ATM traffic parameters, including service category and shaping.

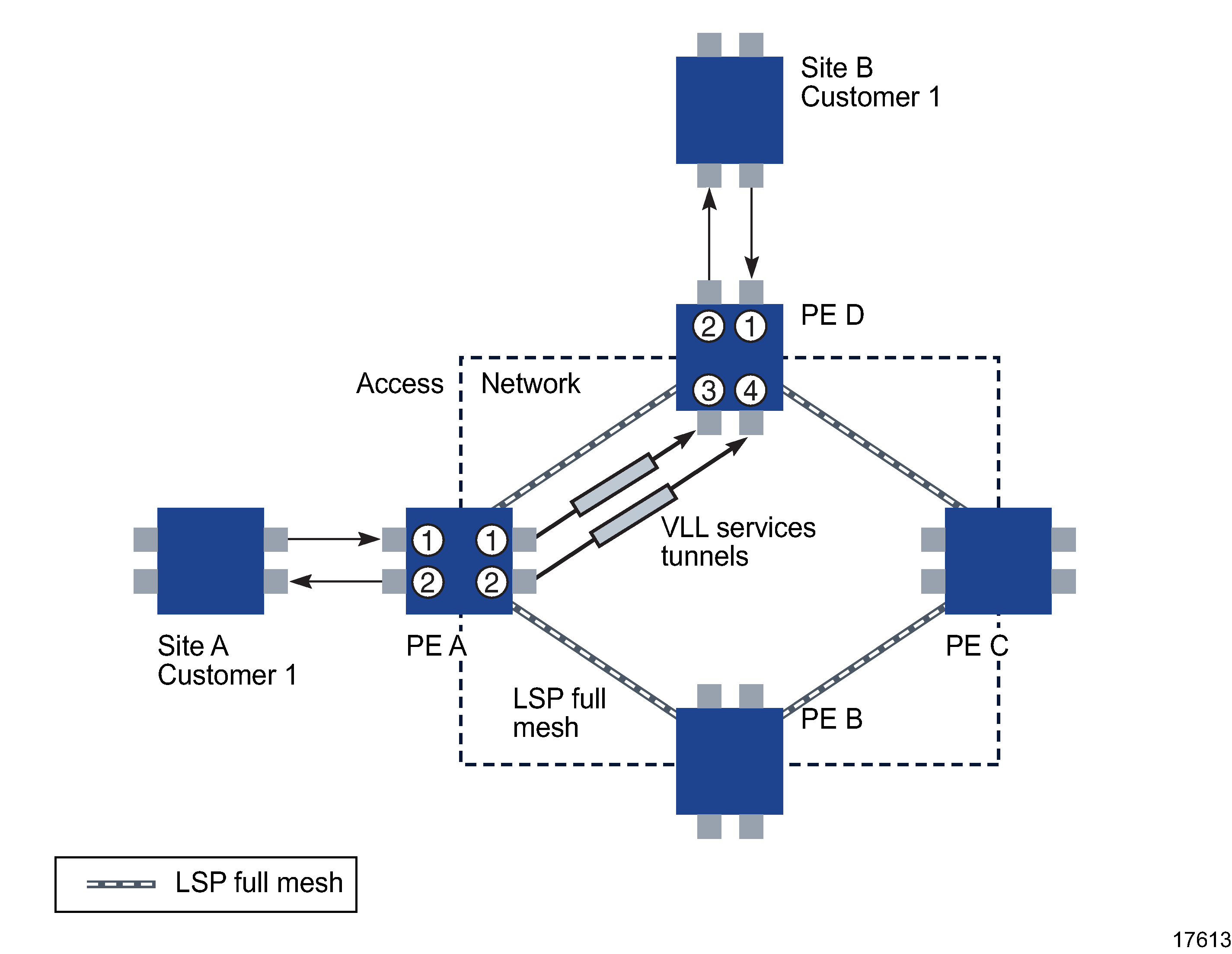

Figure 70-6 shows where QoS policies are applied at the service level.

Figure 70-6: Service-level view of policies

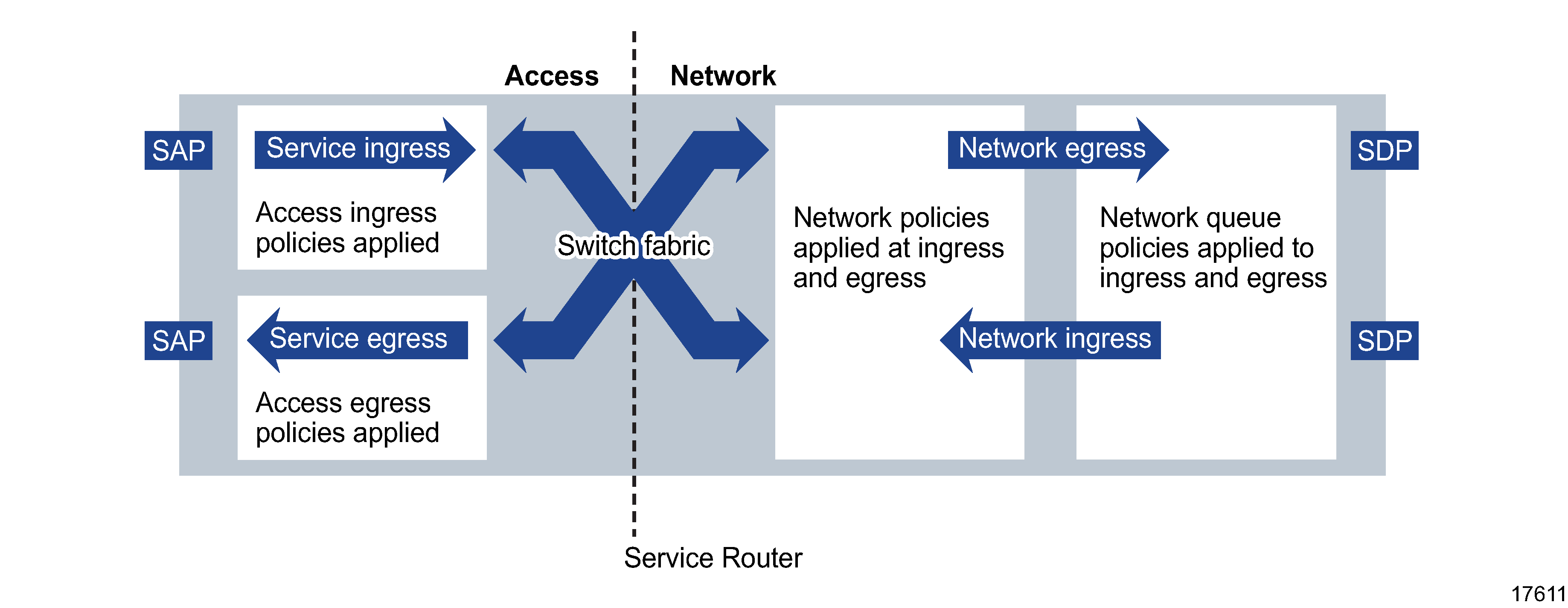

Figure 70-7 shows where policies are applied on a device with respect to access and network ingress and egress traffic.

Figure 70-7: Types of traffic on a device and applied policies

SAP access ingress policies

Access ingress policies are applied to access interfaces and specify QoS characteristics on ingress. Participation in access ingress policies is defined when access interfaces are configured or modified.

Access ingress policies include:

- mapping of QoS marking (such as dot1p, DSCP, and precedence) and IP/MAC address information to forwarding classes

Figure 70-8: Access ingress policy elements

See SAP access ingress policies in QoS policy types for more information about access ingress policies.
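The classification step described above can be sketched in Python. This is an illustrative model only, not NFM-P or 7750 SR code: the `AccessIngressPolicy` and `Packet` classes, the mapping values, and the precedence order (IP match criteria over DSCP over dot1p over the default FC) are assumptions made for the example. The FC names follow the usual SR OS forwarding class names.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical access ingress policy; every mapping value is invented for illustration.
@dataclass
class AccessIngressPolicy:
    default_fc: str = "be"
    dot1p_map: dict = field(default_factory=dict)    # dot1p priority -> FC
    dscp_map: dict = field(default_factory=dict)     # DSCP name -> FC
    ip_criteria: list = field(default_factory=list)  # (source prefix, FC), first match wins

@dataclass
class Packet:
    dot1p: Optional[int] = None
    dscp: Optional[str] = None
    src_ip: Optional[str] = None

def classify(policy: AccessIngressPolicy, pkt: Packet) -> str:
    """Return the FC for pkt. Assumed precedence, lowest to highest:
    default FC, dot1p, DSCP, IP match criteria."""
    fc = policy.default_fc
    if pkt.dot1p in policy.dot1p_map:
        fc = policy.dot1p_map[pkt.dot1p]
    if pkt.dscp in policy.dscp_map:
        fc = policy.dscp_map[pkt.dscp]
    if pkt.src_ip is not None:
        addr = ipaddress.ip_address(pkt.src_ip)
        for prefix, criteria_fc in policy.ip_criteria:
            if addr in ipaddress.ip_network(prefix):
                fc = criteria_fc
                break
    return fc

policy = AccessIngressPolicy(
    dot1p_map={5: "ef", 3: "af"},
    dscp_map={"cs6": "nc"},
    ip_criteria=[("10.1.0.0/16", "h2")],
)
print(classify(policy, Packet(dot1p=5, dscp="cs6")))  # nc
```

With this policy, a frame marked only with dot1p 5 is classified as `ef`; adding DSCP `cs6` moves it to `nc`, and a source address in 10.1.0.0/16 overrides both and yields `h2`.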

SAP access egress policies

Access egress policies are applied to access egress interfaces and specify QoS characteristics on egress. Participation in access egress policies is defined when access interfaces are configured or modified.

Access egress policies include:

Figure 70-9: Access egress policy elements

In Figure 70-9, Access egress policy elements, each packet already carries a forwarding class (FC), assigned in one of two ways:

- by the access ingress policy, if the packet was received on the same device

- in the tunnel transport encapsulation, if the packet was received through a service tunnel

See SAP access egress policies in QoS policy types for more information.
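The following Python sketch shows the two ways an FC reaches the access egress policy, followed by a simple FC-to-dot1p re-marking step. The EXP-to-FC and FC-to-dot1p tables and the function names are hypothetical and do not reflect device defaults.

```python
from typing import Optional

# Hypothetical EXP-to-FC table for service-tunnel traffic (not device defaults).
TUNNEL_EXP_TO_FC = {0: "be", 1: "l2", 2: "af", 3: "l1", 4: "h2", 5: "ef", 6: "h1", 7: "nc"}

# Hypothetical access egress re-marking table: FC -> dot1p written on egress.
FC_TO_DOT1P = {"be": 0, "l2": 1, "af": 2, "l1": 3, "h2": 4, "ef": 5, "h1": 6, "nc": 7}

def egress_fc(local_ingress_fc: Optional[str], tunnel_exp: Optional[int]) -> str:
    """Return the FC that the access egress policy acts on."""
    if local_ingress_fc is not None:
        # Received on this device: reuse the FC set by the access ingress policy.
        return local_ingress_fc
    if tunnel_exp is not None:
        # Received through a service tunnel: recover the FC from the encapsulation.
        return TUNNEL_EXP_TO_FC[tunnel_exp]
    raise ValueError("packet carries no forwarding class information")

def egress_dot1p(fc: str) -> int:
    """Marking applied by the (hypothetical) access egress policy."""
    return FC_TO_DOT1P[fc]

print(egress_fc("af", None), egress_dot1p(egress_fc(None, 5)))  # af 5
```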

Network policies

Network policies define egress QoS markings and ingress QoS interpretation for traffic on core network IP interfaces.

A network policy defines:

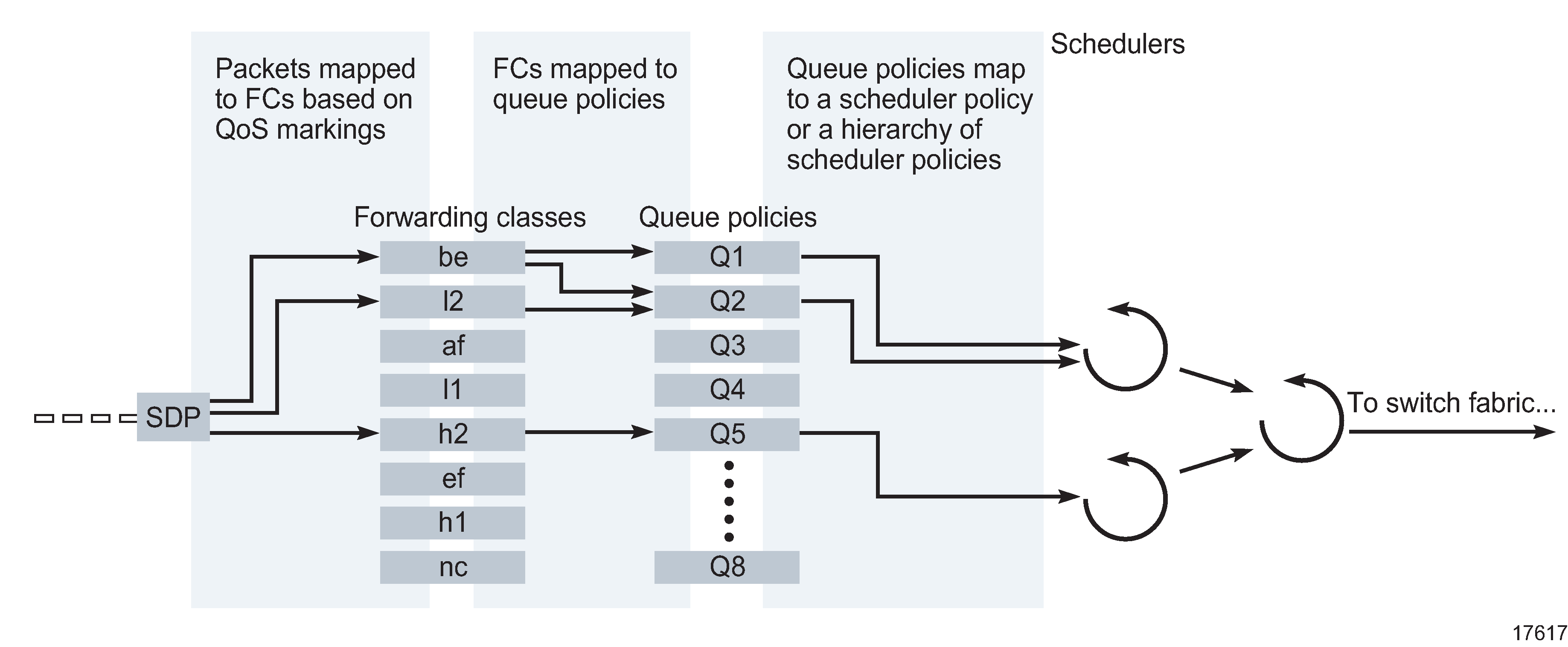

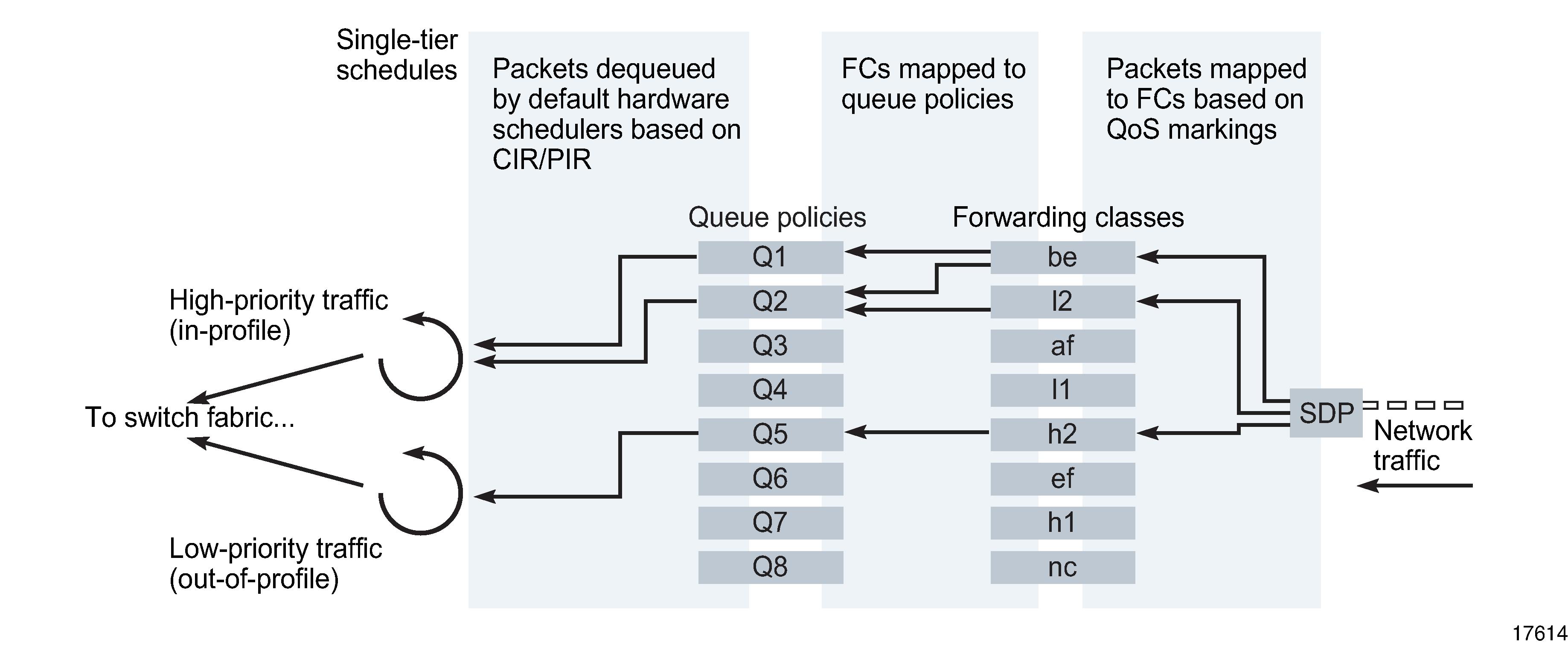

Figure 70-10 shows the sequence in which the elements of network and network queue policies are applied on ingress.

Figure 70-10: Network and network queue policy elements on ingress

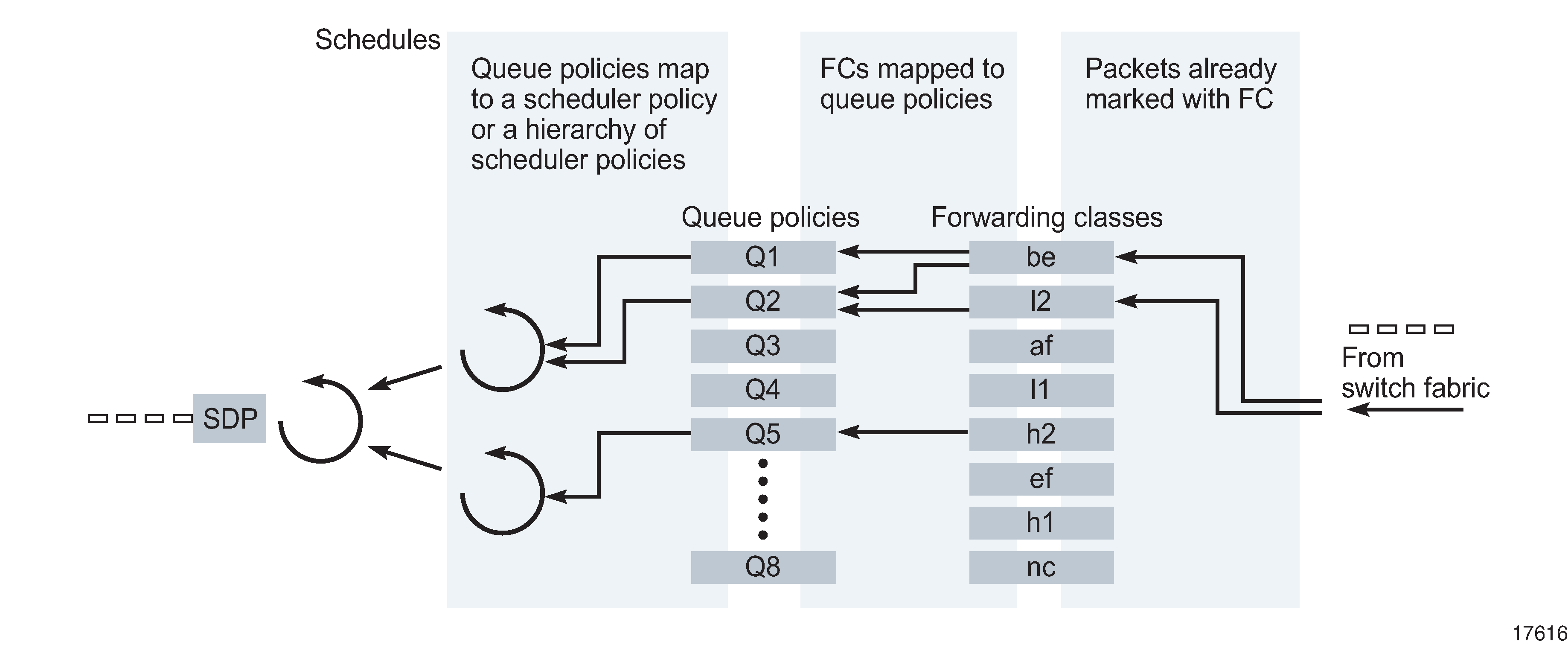

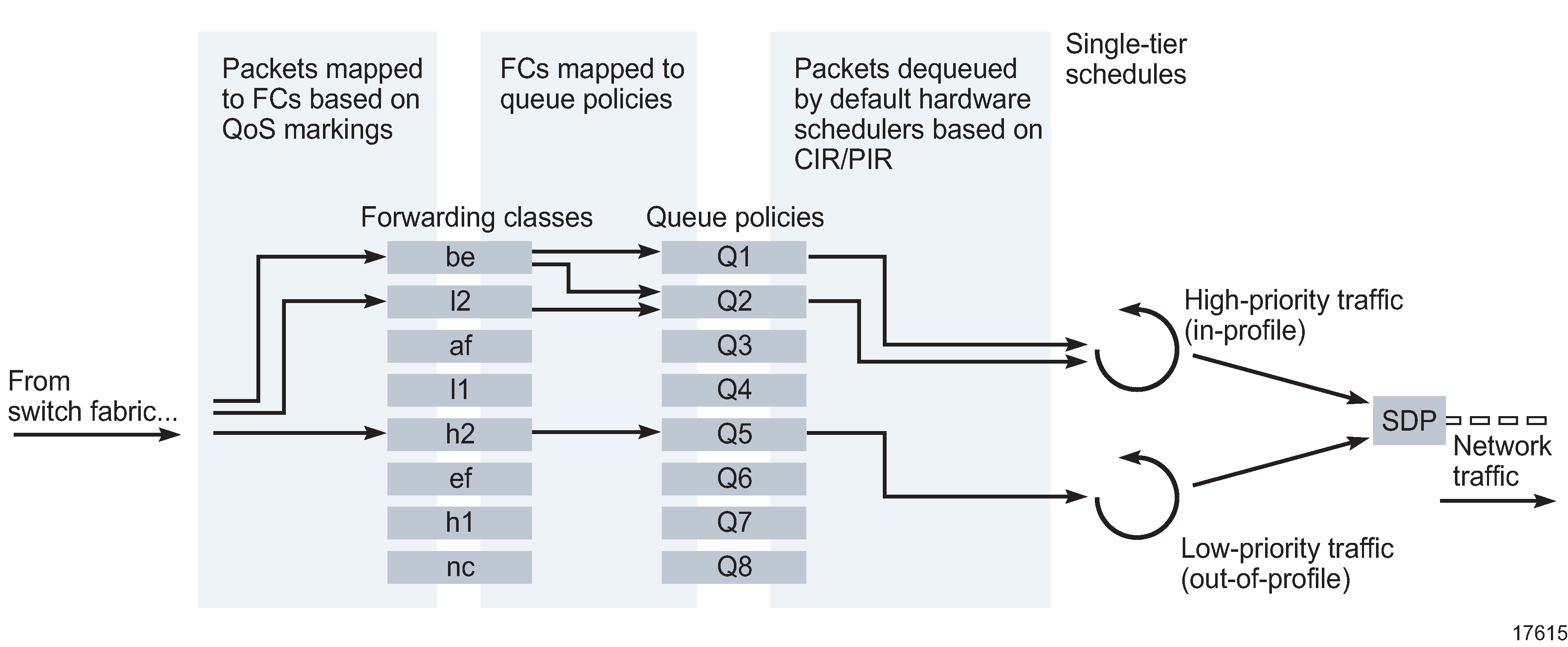

Figure 70-11 shows the sequence in which the elements of network and network queue policies are applied on egress.

Figure 70-11: Network and network queue policy elements on egress

See Network policies in QoS policy types for more information.
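A network policy can be thought of as a pair of lookup tables: one that interprets received markings on ingress and one that writes markings on egress. The sketch below is a hypothetical Python model; the `NetworkPolicy` class, the DSCP values chosen, and the `in`/`out` profile labels are assumptions for illustration only.

```python
from dataclasses import dataclass

# Hypothetical network policy for a core IP interface. Ingress interprets the
# received DSCP as an FC and profile; egress marks the FC and profile back into DSCP.
@dataclass
class NetworkPolicy:
    ingress_dscp_to_fc: dict           # DSCP value -> (FC, profile)
    egress_fc_to_dscp: dict            # (FC, profile) -> DSCP value
    default_fc: tuple = ("be", "out")  # used for unmapped DSCP values

    def ingress(self, dscp: int) -> tuple:
        return self.ingress_dscp_to_fc.get(dscp, self.default_fc)

    def egress(self, fc: str, profile: str) -> int:
        return self.egress_fc_to_dscp[(fc, profile)]

policy = NetworkPolicy(
    ingress_dscp_to_fc={46: ("ef", "in"), 10: ("af", "in"), 12: ("af", "out"), 48: ("nc", "in")},
    egress_fc_to_dscp={("ef", "in"): 46, ("af", "in"): 10, ("af", "out"): 12,
                       ("nc", "in"): 48, ("be", "out"): 0},
)

# With consistent tables, every mapped DSCP value is re-marked unchanged at the next hop.
for dscp in policy.ingress_dscp_to_fc:
    assert policy.egress(*policy.ingress(dscp)) == dscp
```

Keeping the ingress and egress tables consistent in this way is one design choice; a policy could also deliberately re-mark traffic between hops.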

Network queue policies

Network queue policies define the network forwarding class queue characteristics for core egress network ports and for ingress on MDAs.

See Figure 70-10, Network and network queue policy elements on ingress, and Figure 70-11, Network and network queue policy elements on egress, for the sequence in which the elements of network and network queue policies are applied on ingress and on egress.

The queued packets are serviced by single-tier schedulers on the device and are forwarded to a single destination switch fabric port or a network interface.

See Network queue policies in QoS policy types for more information about network queue policies.

Scheduling

Scheduling defines the order and method by which packets that are enqueued in different queues are dequeued. Ingress schedulers control the data transfer between the ingress queues and the switch fabric. Egress schedulers control the data transfer between the egress queues and the egress ports. Packets are not actually forwarded to schedulers; they are forwarded from the queues directly to the switch fabric on ingress and to the egress interfaces on egress. Participation in scheduler policies is defined when access interfaces are configured or modified.

There are two types of scheduling:

- Single-tier scheduling is the hardware-based default method for scheduling queues on the device. There are no configurable parameters for single-tier schedulers. When no scheduler policy is specified for an access interface, rate limiting is determined by the values configured on the queue, and scheduling is performed by the default hardware scheduler on the device. On the 7750 SR, single-tier scheduling consists of a pair of scheduling priority loops that base scheduling on the CIR and PIR set in the queue policy. One loop schedules high-priority (in-profile) traffic, and the other schedules low-priority (out-of-profile) traffic.

- Virtual hierarchical (multi-tier) scheduling can provide more flexible scheduling for access ingress and egress interfaces and determines how queues are scheduled. Virtual schedulers are defined using a scheduler policy and can be configured to override default hardware scheduling. You can create up to three tiers of virtual schedulers.
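The two-loop behavior of the single-tier scheduler can be approximated with a simple bandwidth-allocation model. This Python sketch is not the hardware algorithm: it assumes a fluid model over a single interval, and it assumes that queues served by the same loop share bandwidth equally (max-min fair). The `Queue`, `fair_share`, and `single_tier` names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Queue:
    name: str
    cir: float     # committed rate (Mb/s); traffic up to this rate is in-profile
    pir: float     # peak rate (Mb/s); the queue is never served above this
    demand: float  # offered load (Mb/s) during the interval

def fair_share(limits: dict, capacity: float) -> dict:
    """Split capacity equally (max-min fair), capping each entry at its limit."""
    alloc = {k: 0.0 for k in limits}
    active = {k for k, v in limits.items() if v > 0}
    while active and capacity > 1e-9:
        share = capacity / len(active)
        for k in sorted(active):
            give = min(share, limits[k] - alloc[k])
            alloc[k] += give
            capacity -= give
        active = {k for k in active if limits[k] - alloc[k] > 1e-9}
    return alloc

def single_tier(queues: list, capacity: float) -> dict:
    # High-priority loop: serve every queue up to its CIR (in-profile traffic).
    high = fair_share({q.name: min(q.demand, q.cir) for q in queues}, capacity)
    capacity = max(0.0, capacity - sum(high.values()))
    # Low-priority loop: leftover capacity serves traffic above CIR, up to PIR.
    low = fair_share(
        {q.name: max(0.0, min(q.demand, q.pir) - high[q.name]) for q in queues}, capacity
    )
    return {q.name: high[q.name] + low[q.name] for q in queues}

queues = [
    Queue("A", cir=30, pir=80, demand=90),
    Queue("B", cir=20, pir=100, demand=100),
    Queue("C", cir=10, pir=10, demand=5),
]
print(single_tier(queues, capacity=100))  # {'A': 52.5, 'B': 42.5, 'C': 5.0}
```

In this example, all three queues are served up to their CIR in the high-priority loop; only the remaining 45 Mb/s is then divided between A and B in the low-priority loop, each capped at its PIR.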

Aggregation schedulers are used to share a scheduler policy across a number of ports or daughter cards. This can be useful when a number of ports or cards are dedicated to the same customer. See Scheduler policies in QoS policy types for more information about configuring aggregation schedulers.
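Virtual hierarchical scheduling can be modeled as a tree in which each virtual scheduler caps the bandwidth it receives and passes it to its children in priority order. The Python sketch below is an illustrative assumption, not the device's scheduler policy semantics: the `Node` fields, the rule of strict priority between levels with an equal share within a level, and the three-tier check are chosen for the example.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    """A virtual scheduler (has children) or a queue (leaf); hypothetical model."""
    name: str
    rate: float = float("inf")  # cap on the bandwidth this node may receive
    priority: int = 1           # priority within its parent; higher is served first
    demand: float = 0.0         # offered load, used by queues only
    children: list = field(default_factory=list)

    def wanted(self) -> float:
        own = sum(c.wanted() for c in self.children) if self.children else self.demand
        return min(self.rate, own)

def tiers(node: Node) -> int:
    """Number of scheduler tiers at and below node; queues do not count."""
    return 0 if not node.children else 1 + max(tiers(c) for c in node.children)

def fair_share(limits: dict, capacity: float) -> dict:
    """Split capacity equally (max-min fair), capping each entry at its limit."""
    alloc = {k: 0.0 for k in limits}
    active = {k for k, v in limits.items() if v > 0}
    while active and capacity > 1e-9:
        share = capacity / len(active)
        for k in sorted(active):
            give = min(share, limits[k] - alloc[k])
            alloc[k] += give
            capacity -= give
        active = {k for k in active if limits[k] - alloc[k] > 1e-9}
    return alloc

def allocate(node: Node, offered: float, out: dict) -> None:
    grant = min(offered, node.wanted())
    if not node.children:
        out[node.name] = grant
        return
    remaining = grant
    # Strict priority between levels; equal share among children at the same level.
    for prio in sorted({c.priority for c in node.children}, reverse=True):
        level = [c for c in node.children if c.priority == prio]
        shares = fair_share({c.name: c.wanted() for c in level}, remaining)
        for c in level:
            allocate(c, shares[c.name], out)
            remaining = max(0.0, remaining - shares[c.name])

root = Node("root", rate=100, children=[
    Node("gold", rate=60, priority=2, children=[Node("q1", demand=50), Node("q2", demand=30)]),
    Node("bronze", priority=1, children=[Node("q3", demand=80)]),
])
if tiers(root) > 3:
    raise ValueError("at most three tiers of virtual schedulers")
result = {}
allocate(root, root.rate, result)
print(result)  # {'q1': 30.0, 'q2': 30.0, 'q3': 40.0}
```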

Port scheduler policies

Port scheduler policies define the bandwidth allocation based on the available bandwidth at the egress port level. A port scheduler policy manages a bandwidth allocation algorithm that represents a virtual multi-tier scheduling hierarchy.

The port scheduler allocates bandwidth to each service or subscriber that is associated with an egress port. Egress queues on the service may have a child association with a scheduler policy on the SAP or multi-service site. All queues must compete for bandwidth from an egress port.

There are two methods of bandwidth allocation on the egress access port:

- association of a service or subscriber queue with a port scheduler through a scheduler on a SAP or multi-service site

A service or subscriber queue is associated with a scheduler on the L2 access interface or multi-service site, and the service-level scheduler policy is associated with a port-level scheduler.

- direct association of a service or subscriber queue with a port scheduler

A service or subscriber queue is associated with a port scheduler. The port scheduler hierarchy allocates bandwidth at each priority level to each service or subscriber queue.

See Port scheduler policies in QoS policy types for more information about configuring port scheduler policies.
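The following Python sketch models a port scheduler that allocates port bandwidth across eight priority levels. It is loosely based on a two-pass approach: first the traffic within CIR at each level, from level 8 down to level 1, then the traffic above CIR in the same order. Treat the pass structure, the `PortQueue` fields, and the equal sharing within a level as assumptions rather than the exact device algorithm.

```python
from dataclasses import dataclass

@dataclass
class PortQueue:
    name: str
    level: int     # port scheduler priority level, 1 (lowest) to 8 (highest)
    cir: float     # Mb/s
    pir: float     # Mb/s
    demand: float  # offered load in Mb/s

def fair_share(limits: dict, capacity: float) -> dict:
    """Split capacity equally (max-min fair), capping each entry at its limit."""
    alloc = {k: 0.0 for k in limits}
    active = {k for k, v in limits.items() if v > 0}
    while active and capacity > 1e-9:
        share = capacity / len(active)
        for k in sorted(active):
            give = min(share, limits[k] - alloc[k])
            alloc[k] += give
            capacity -= give
        active = {k for k in active if limits[k] - alloc[k] > 1e-9}
    return alloc

def port_schedule(queues: list, port_rate: float) -> dict:
    alloc = {q.name: 0.0 for q in queues}
    remaining = port_rate
    # Pass 1 serves traffic within CIR; pass 2 serves traffic above CIR, up to PIR.
    for within_cir in (True, False):
        for level in range(8, 0, -1):  # highest priority level first
            members = [q for q in queues if q.level == level]
            caps = {q.name: max(0.0, min(q.demand, q.cir if within_cir else q.pir) - alloc[q.name])
                    for q in members}
            for name, bw in fair_share(caps, remaining).items():
                alloc[name] += bw
                remaining = max(0.0, remaining - bw)
    return alloc

queues = [
    PortQueue("voice", level=8, cir=20, pir=20, demand=20),
    PortQueue("data", level=3, cir=30, pir=100, demand=100),
    PortQueue("bulk", level=1, cir=10, pir=100, demand=100),
]
print(port_schedule(queues, port_rate=100))  # {'voice': 20.0, 'data': 70.0, 'bulk': 10.0}
```

Although the bulk queue is at the lowest level, it still receives its CIR in the first pass, before the data queue receives any bandwidth above its CIR.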

HSMDA scheduler policies

The port-based scheduler manages forwarding for each egress port on the HSMDA. Each port-based scheduler maintains up to eight forwarding levels. Each queue that is assigned to the port scheduler belongs to one of eight scheduling classes, and membership in a scheduling class is defined by the queue identifier.

The port-based scheduler supports a port-based shaper that is used to create a sub-rate condition on the port. Each scheduling level can be configured with a shaping rate to limit the amount of bandwidth allowed for that level.

The port-based scheduler allows two weighted groups (group 1 and group 2) to be created. Each group can be populated with three consecutive scheduling classes.

See HSMDA scheduler policies in QoS policy types for more information about configuring HSMDA scheduler policies.
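The HSMDA port-based scheduler can be sketched as a walk over the eight scheduling classes, limited by per-class shaping rates and the port shaper, with weighted groups sharing bandwidth by weight. Several details here are assumptions for illustration: class 8 is served first, a weighted group is served at the position of its highest class, and a group holds up to three consecutive classes. The function names are hypothetical.

```python
from typing import Dict

INF = float("inf")

def weighted_share(limits: Dict[int, float], weights: Dict[int, float],
                   capacity: float) -> Dict[int, float]:
    """Split capacity in proportion to weights, capping each class at its limit."""
    alloc = {k: 0.0 for k in limits}
    active = {k for k in limits if limits[k] > 0}
    while active and capacity > 1e-9:
        unit = capacity / sum(weights[k] for k in active)
        for k in sorted(active):
            give = min(unit * weights[k], limits[k] - alloc[k])
            alloc[k] += give
            capacity -= give
        active = {k for k in active if limits[k] - alloc[k] > 1e-9}
    return alloc

def validate_groups(groups: Dict[int, Dict[int, float]]) -> None:
    """groups maps a group ID (1 or 2) to {scheduling class: weight}."""
    used = set()
    for gid, members in groups.items():
        classes = sorted(members)
        if gid not in (1, 2):
            raise ValueError(f"unknown weighted group {gid}")
        if not classes or len(classes) > 3 or classes != list(range(classes[0], classes[0] + len(classes))):
            raise ValueError(f"group {gid} must hold up to three consecutive classes")
        if used & set(classes):
            raise ValueError(f"group {gid} overlaps another group")
        used |= set(classes)

def hsmda_port_schedule(port_shaper, class_rate, demand, groups):
    """Allocate port bandwidth to scheduling classes 1-8 (assumed order: 8 first)."""
    validate_groups(groups)
    group_of = {c: gid for gid, members in groups.items() for c in members}
    remaining = port_shaper  # the port shaper creates the sub-rate limit
    alloc = {}
    for cls in range(8, 0, -1):
        if cls in alloc:
            continue  # already served as part of its weighted group
        if cls in group_of:
            members = groups[group_of[cls]]
            limits = {c: min(demand.get(c, 0.0), class_rate.get(c, INF)) for c in members}
            shares = weighted_share(limits, members, remaining)
        else:
            shares = {cls: min(demand.get(cls, 0.0), class_rate.get(cls, INF), remaining)}
        alloc.update(shares)
        remaining = max(0.0, remaining - sum(shares.values()))
    return alloc

alloc = hsmda_port_schedule(
    port_shaper=100,
    class_rate={5: 60},                        # class 5 shaped to 60
    demand={8: 10, 5: 80, 4: 80, 1: 50},
    groups={1: {4: 1, 5: 3}},                  # weighted group 1: classes 4 and 5
)
print(alloc[8], alloc[5], alloc[4], alloc[1])  # 10 60.0 30.0 0.0
```

Class 5 is held to its 60 shaping rate, so class 4 in the same weighted group receives the rest of the group's bandwidth, and class 1 receives nothing once the port shaper is exhausted.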

Slope policies

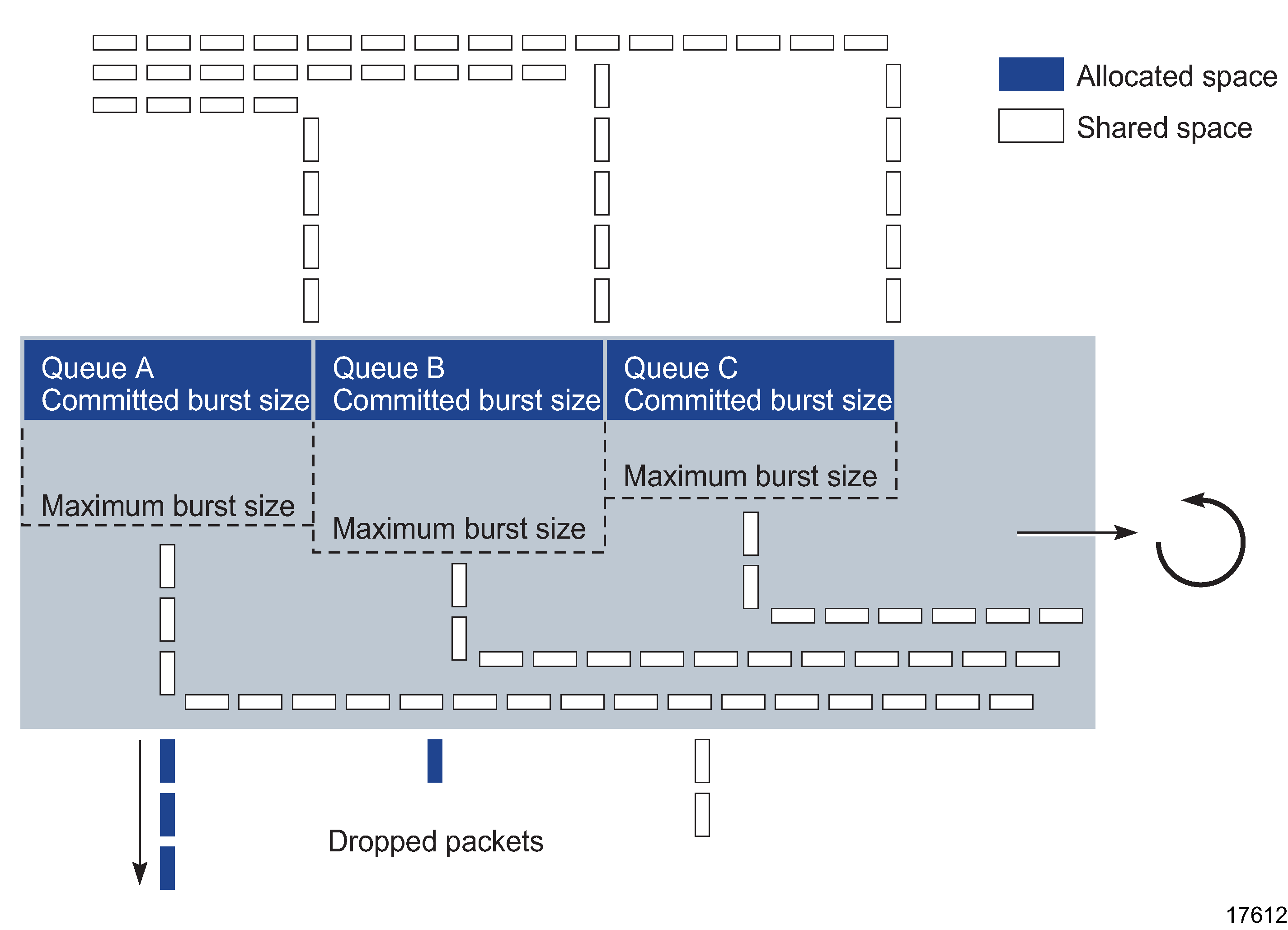

Slope policies manage how shared buffers are utilized on the SR. When traffic is queued, the WRED slope parameters in the slope policy determine how the traffic is buffered for dequeuing, as shown in Figure 70-12.

Figure 70-12: Slope policy characteristics

When a queue exceeds its CBS, it contends with all other queues for shared buffer space, and it can grow up to its MBS when space is available in the shared buffer. The WRED parameters determine whether a packet is discarded and, as a result, whether the packet is admitted to the queue for dequeuing. When shared buffer utilization approaches or exceeds the maximum percentage defined by the WRED configuration, packets are discarded.
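The WRED decision for a queue that has grown past its CBS can be sketched as a linear slope over average shared-buffer utilization. The parameter names `start_avg`, `max_avg`, `max_prob`, and `time_average_factor` are modeled on common slope-policy settings and are assumptions here, as is the exponentially weighted average; this is not the device implementation.

```python
import random
from dataclasses import dataclass

@dataclass
class Slope:
    start_avg: float  # average shared-buffer utilization (%) where discards begin
    max_avg: float    # utilization (%) where the drop probability reaches max_prob
    max_prob: float   # drop probability (0..1) reached at max_avg

    def drop_probability(self, avg_util: float) -> float:
        if avg_util < self.start_avg:
            return 0.0
        if avg_util >= self.max_avg:
            return 1.0  # at or beyond max_avg, every packet is discarded
        span = self.max_avg - self.start_avg
        return self.max_prob * (avg_util - self.start_avg) / span

def update_average(avg: float, current: float, time_average_factor: int) -> float:
    """Exponentially weighted average of shared-buffer utilization (assumed form)."""
    weight = 1.0 / 2 ** time_average_factor
    return avg + (current - avg) * weight

def admit(slope: Slope, avg_util: float, depth: int, cbs: int, mbs: int,
          rng=random.random) -> bool:
    """Decide whether an arriving packet is enqueued."""
    if depth < cbs:
        return True   # within CBS: reserved buffers, no WRED check
    if depth >= mbs:
        return False  # the queue may not grow past its MBS
    return rng() >= slope.drop_probability(avg_util)

slope = Slope(start_avg=50, max_avg=75, max_prob=0.8)
avg = update_average(avg=0.0, current=100.0, time_average_factor=1)  # 50.0
print(slope.drop_probability(avg), admit(slope, avg, depth=10, cbs=20, mbs=100))
```

Averaging the utilization smooths out short bursts, so a brief spike in shared-buffer use does not immediately trigger discards.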

By using two independent slope policy configurations, one for in-profile traffic and one for out-of-profile traffic, you can configure in-profile traffic to receive preferential treatment over out-of-profile traffic.
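To illustrate the preferential treatment, the sketch below evaluates the same average utilization against two hypothetical slopes, one for in-profile traffic and one for out-of-profile traffic. The slope values and names are invented for the example.

```python
def drop_probability(avg_util, start_avg, max_avg, max_prob):
    """Linear WRED slope over average shared-buffer utilization (%)."""
    if avg_util < start_avg:
        return 0.0
    if avg_util >= max_avg:
        return 1.0
    return max_prob * (avg_util - start_avg) / (max_avg - start_avg)

# Hypothetical settings: the out-of-profile slope starts discarding at a lower
# utilization than the in-profile slope, so out-of-profile traffic is dropped first.
IN_PROFILE_SLOPE = {"start_avg": 70.0, "max_avg": 90.0, "max_prob": 0.8}
OUT_OF_PROFILE_SLOPE = {"start_avg": 50.0, "max_avg": 75.0, "max_prob": 0.8}

def slope_drop_probability(in_profile: bool, avg_util: float) -> float:
    slope = IN_PROFILE_SLOPE if in_profile else OUT_OF_PROFILE_SLOPE
    return drop_probability(avg_util, **slope)

for util in (40.0, 60.0, 80.0):
    print(f"{util:.0f}% used: in-profile {slope_drop_probability(True, util):.2f}, "
          f"out-of-profile {slope_drop_probability(False, util):.2f}")
```

At 60% utilization, only out-of-profile traffic is subject to discards; at 80%, out-of-profile traffic is discarded entirely while in-profile traffic is still mostly admitted.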

See Slope policies in QoS policy types for more information about configuring slope policies.

HSMDA slope policies

HSMDA slope policies control the HSMDA queues. Each queue references an HSMDA slope policy through an index into the HSMDA slope policy table. Each policy in the table consists of two RED slopes, one for high-priority traffic and one for low-priority traffic, to manage queue congestion. HSMDA RED slopes operate on the instantaneous depth of the queue.

A packet that attempts to enter a queue triggers a check to see whether the packet is allowed based on queue congestion conditions. The packet contains a congestion-priority flag that indicates whether the HSMDA is to use the high or low slope. The slope policy containing the slope is derived from the policy index in the queue configuration parameters on the HSMDA.
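The lookup described above (queue configuration to policy index, policy index to slope policy, congestion-priority flag to high or low slope) can be sketched as follows. The table contents, the depths in bytes, and the class and function names are hypothetical; the RED slope is modeled as linear over the instantaneous queue depth.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RedSlope:
    start_depth: int  # instantaneous queue depth (bytes) where discards begin
    max_depth: int    # depth at or above which every packet is discarded
    max_prob: float   # drop probability just below max_depth

    def drop_probability(self, depth: int) -> float:
        if depth < self.start_depth:
            return 0.0
        if depth >= self.max_depth:
            return 1.0
        return self.max_prob * (depth - self.start_depth) / (self.max_depth - self.start_depth)

@dataclass(frozen=True)
class HsmdaSlopePolicy:
    high: RedSlope  # used when the packet's congestion-priority flag is high
    low: RedSlope   # used when the flag is low

# Hypothetical slope policy table and per-queue policy index.
SLOPE_POLICY_TABLE = {
    1: HsmdaSlopePolicy(high=RedSlope(60_000, 100_000, 0.5),
                        low=RedSlope(30_000, 60_000, 0.9)),
}
QUEUE_SLOPE_INDEX = {"queue-3": 1}

def select_slope(queue_id: str, congestion_priority_high: bool) -> RedSlope:
    policy = SLOPE_POLICY_TABLE[QUEUE_SLOPE_INDEX[queue_id]]
    return policy.high if congestion_priority_high else policy.low

depth = 45_000  # current (instantaneous) depth of queue-3 in bytes
print(select_slope("queue-3", True).drop_probability(depth))
print(select_slope("queue-3", False).drop_probability(depth))
```

At the same queue depth, a high-priority packet is admitted while a low-priority packet faces a 45% drop probability.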

RED slope discard decisions are based on the current queue depth before a packet is admitted to the queue. Therefore, depending on the size of the admitted packet, a queue may consume more buffer space than its configured MBS. After the maximum depth is reached, packets that arrive for the queue are discarded. When the scheduler removes packets from the queue, the queue depth decreases and eventually falls below the threshold again.
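The following minimal Python sketch shows why a queue can exceed its MBS: the admission check looks at the depth before the packet is added. It models only the hard maximum (set equal to the MBS for simplicity), not the probabilistic part of the RED slope; `HsmdaQueue` is a hypothetical name.

```python
class HsmdaQueue:
    """Hypothetical model of an HSMDA queue's hard discard threshold."""

    def __init__(self, mbs: int):
        self.mbs = mbs   # maximum buffer size in bytes (used as the hard limit here)
        self.depth = 0   # current depth in bytes

    def offer(self, packet_len: int) -> bool:
        # The check uses the depth *before* the packet is added, so an admitted
        # packet can push the depth past the MBS by up to its own length.
        if self.depth >= self.mbs:
            return False
        self.depth += packet_len
        return True

    def dequeue(self, nbytes: int) -> None:
        # Scheduler service drains the queue, lowering the depth below the limit again.
        self.depth = max(0, self.depth - nbytes)

q = HsmdaQueue(mbs=10_000)
q.depth = 9_800
print(q.offer(1_500), q.depth)  # True 11300
print(q.offer(64), q.depth)     # False 11300
q.dequeue(2_000)
print(q.offer(64), q.depth)     # True 9364
```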

See HSMDA WRED slope policies in QoS policy types for more information about configuring HSMDA slope policies.