What are the best practices for telemetry data collection?

Data collection prerequisites

All DCA functions, including baselines, indicators, and OAM testing, depend on telemetry data. Telemetry data collection must be configured before any function in the Data Collection and Analysis views can be used.

NSP has the following prerequisites for telemetry data collection:

- The NEs from which you want to collect data must be discovered, managed, and reachable by the NSP.
- Mediation policies must be configured in the discovery rules used to discover and manage the NEs. See “Device discovery” in the NSP Device Management Guide for more information about NE discovery and mediation.
- If you want to use a telemetry subscription to manage MIB statistics from classic NEs, statistics collection must be configured in the NFM-P.
- Cloud native telemetry requires the gnmiTelemetry installation option.
- All required NE adaptors and telemetry custom resources (CRs) must be installed on the NSP server:
  - For the list of required telemetry artifacts for an NE family, see the artifact guide for the NE adaptor artifact bundle.
  - For information on installing the telemetry CRs, see “How do I install telemetry artifacts?”
- If you want to use statistics for Analytics reports with granularity other than raw data, you need aggregation rules for the telemetry types you want to collect; see the Telemetry tutorial on the Network Developer Portal.
- You need to configure the aggregation time zone.

Accounting telemetry

Accounting telemetry requires retrieval of accounting file information from NEs. The NSP polls the NE directories specified in the device helper custom resource for accounting file updates. The file on the NE is transformed to JSON format, compressed, and saved in the NSP file server.

CAUTION: All accounting files found on the NE are transferred to NSP, processed, then deleted from the NE. After deletion, the files are no longer available for collection by NFM-P or any other external application. This applies to both classic and model-driven NEs.

If communication with the NE is lost, files that were stored on the NE during the loss of communication are retrieved when communication is reestablished, provided the files have not been purged by the NE.
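The transform-and-compress step described earlier in this section can be pictured with a short sketch. It is illustrative only and does not represent the NSP implementation: the function names, the sample record fields, and the choice of gzip are all assumptions. The source states only that the file is transformed to JSON format and compressed.

```python
import gzip
import json


def to_compressed_json(records):
    """Serialize parsed accounting records to JSON, then gzip the bytes.

    `records` is a hypothetical list of dicts standing in for parsed
    accounting file content; the real file layout is NE-specific.
    """
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return gzip.compress(payload)


def from_compressed_json(blob):
    """Reverse the step above, for example to inspect a stored file."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical record; field names are placeholders, not NSP names.
    sample = [{"policy-id": 10, "octets": 123456}]
    blob = to_compressed_json(sample)
    print(from_compressed_json(blob) == sample)
```

The round-trip helper is included only to show that the compressed output remains ordinary JSON once decompressed.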

The following prerequisites apply for accounting telemetry:

- A file transfer mediation policy must be part of the discovery rule used to discover the NE. If the unified discovery rule includes both a file transfer mediation policy for MDM and a classic discovery rule, the classic file transfer policy is used for accounting telemetry for classic NEs, and the MDM file transfer policy is used for model-driven NEs.
- The NE must be configured to allow parallel SSH sessions; see the NE documentation.
- An accounting policy must be configured on the NE. You can configure the accounting policy using the CLI (see the NE documentation) or in the Device Management, Device Configuration views using the icm-file-accounting intent type (see the NSP Device Management Guide).
- A file purge policy in the NSP is recommended; see “How do I configure file purge policies?” in the NSP System Administrator Guide.

Accounting telemetry catchup

If accounting files are already stored on the NE when NSP starts accounting telemetry collection, NSP performs a data backfill to pull and process these files.

If accounting statistics are output to the database, Kafka, or both during data backfill, catch-up may be delayed or data may be lost.

You can monitor the data backfill process in Grafana.

Use the GRAFANA button on the System Health dashboard to open Grafana, or access the tool at the following URL:

https://NSP_address/grafana

where NSP_address is the public NSP address

In Grafana, open the Telemetry Accounting Processor Metrics dashboard. When the Skipped File Pulls metric is zero, the data backfill is complete.
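As a small sketch of the two rules above (how the Grafana URL is formed, and when backfill counts as complete), the following helpers may be useful in a runbook script. The function names and the example host are hypothetical. The sketch does not query Grafana: it assumes you have already read the Skipped File Pulls value from the dashboard.

```python
def grafana_url(nsp_address):
    """Build the Grafana URL from the public NSP address."""
    return f"https://{nsp_address}/grafana"


def backfill_complete(skipped_file_pulls):
    """Return True when the Skipped File Pulls metric reads zero."""
    return skipped_file_pulls == 0


if __name__ == "__main__":
    # "nsp.example.com" is a placeholder, not a real NSP deployment.
    print(grafana_url("nsp.example.com"))
    print(backfill_complete(0))
```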

It is highly recommended that you enable only the file output option in accounting telemetry subscriptions during the data backfill phase; see “How do I manage subscriptions?” After backfill is complete, you can edit the subscription to add other output options as needed.

Data available for collection

When NSP collects device telemetry, only data in statistics containers is available for collection. Furthermore, NSP may make only a subset of the attributes in the statistics containers available in its telemetry types. If you cannot find the counter you are looking for in the NSP telemetry types, verify that it is available in a statistics container in the relevant NE state YANG path.

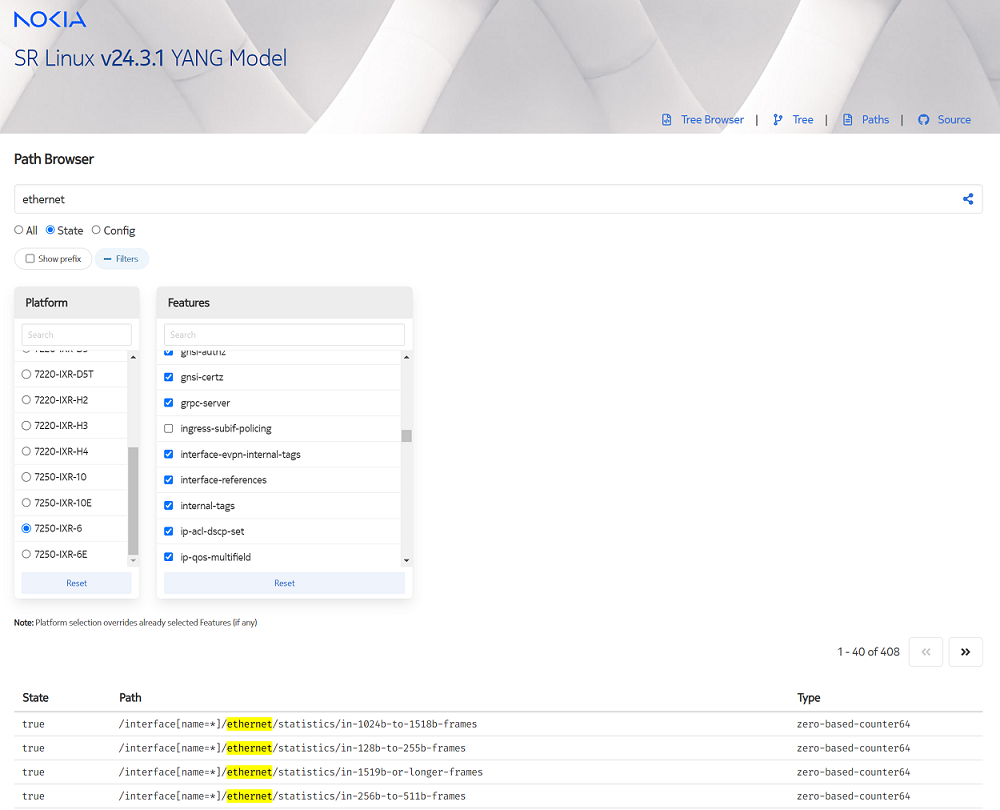

For example, the SR Linux search tool can be found here: (https://yang.srlinux.dev/). Click on the NE release of interest and filter for the paths you need, as shown in the following example:

For more information about the supported telemetry attributes for your NE family, see the artifact guide for the NE adaptor artifact bundle. For more information about telemetry definitions, see the Telemetry collection API documentation on the Network Developer Portal.

Automatic subscriptions

Subscriptions are automatically created for Baseline Analytics, NSP Indicators, and OAM testing. To collect statistics for another purpose, create a subscription and set its state to enabled.

RESTCONF APIs are available for telemetry collection and aggregation; see the Network Assurance API documentation on the Network Developer Portal.

Data storage

You can enable database storage for MDM-managed NEs as part of subscription creation. By default, the collected data is stored in Postgres. If an auxiliary database is enabled, all collected data is stored in the auxiliary database instead. For NFM-P-collected SNMP or accounting statistics, the database parameter in the subscription is ignored.
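The storage rules in the preceding paragraph amount to a short decision sequence, sketched below. The function name, parameter names, and return labels are hypothetical and exist only to make the order of the checks explicit. This is not an NSP API.

```python
def storage_target(collected_by_nfmp, database_output_enabled, aux_db_enabled):
    """Return a label for where subscription data is stored.

    Assumptions: all three inputs are plain booleans supplied by the
    caller; the returned strings are illustrative labels only.
    """
    if collected_by_nfmp:
        # NFM-P-collected SNMP or accounting statistics: the
        # subscription's database parameter has no effect.
        return "database parameter ignored"
    if not database_output_enabled:
        return "not stored in a database"
    # MDM-managed NE with database storage enabled.
    return "auxiliary database" if aux_db_enabled else "Postgres"


if __name__ == "__main__":
    print(storage_target(False, True, False))
    print(storage_target(False, True, True))
    print(storage_target(True, True, True))
```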

Historical data, that is, data stored in a database, is retained according to the age-out policy; see “How do I edit an age-out policy?”

Note: For statistics to be available to the Analytics application for aggregated reports, they must be stored in the auxiliary database.

The Telemetry data API also provides the functionality to subscribe, stream, and plot historical and real-time data; see Use Case 5 in the Telemetry Collection tutorial on the Network Developer Portal.