Redundancy mechanisms

Redundancy options per NSP deployment type

NSP provides redundancy options through disaster recovery (DR) and high availability (HA). The following redundancy options are available depending on the NSP deployment type:

- Basic: production deployment with a single-node cluster (no HA) and an option for DR

- Medium: production deployment with redundancy options for DR and HA (DR only, HA only, or HA plus DR)

- Standard: production deployment with redundancy options for DR and HA (DR only, HA only, or HA plus DR)

- Enhanced: production deployment that is always deployed in a multi-node cluster for HA, with an option for DR

Enhanced is the only deployment type that always includes HA, because the deployment type is inherently multi-node. All non-lab deployments have the option of DR, in which NSP is deployed in identical redundant clusters in geographically separate data centers. Special deployments that include only the NRC-P Simulation tool are standalone, with no option for DR or HA.
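The options per deployment type can be summarized as a small lookup. The following Python sketch is illustrative only and is not part of the NSP software: the dictionary keys, the `is_supported` function, and the "simulation" label are hypothetical names chosen for this example. It also assumes that Medium and Standard deployments may be deployed with neither HA nor DR, since the text describes both as options.

```python
# Illustrative lookup of the redundancy options described above.
# All names here are hypothetical; this is not an NSP API.
# For each deployment type: the permitted values of the HA and DR flags.
REDUNDANCY_RULES = {
    "basic": {"ha": {False}, "dr": {False, True}},
    # Assumption: HA and DR are both optional for medium and standard.
    "medium": {"ha": {False, True}, "dr": {False, True}},
    "standard": {"ha": {False, True}, "dr": {False, True}},
    # Enhanced is always multi-node, so HA is always present.
    "enhanced": {"ha": {True}, "dr": {False, True}},
    # Deployments that include only the NRC-P Simulation tool.
    "simulation": {"ha": {False}, "dr": {False}},
}


def is_supported(deployment_type: str, ha: bool, dr: bool) -> bool:
    """Return True if the HA/DR combination fits the deployment type."""
    rules = REDUNDANCY_RULES.get(deployment_type.lower())
    if rules is None:
        raise ValueError(f"unknown deployment type: {deployment_type}")
    return ha in rules["ha"] and dr in rules["dr"]
```

For example, `is_supported("basic", ha=True, dr=False)` returns `False` because a Basic deployment is a single-node cluster, while `is_supported("enhanced", ha=True, dr=True)` returns `True`.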

Disaster recovery

A DR NSP deployment consists of identical primary and standby NSP clusters and ancillary components in separate, geographically distributed data centers, or “sites”. One cluster holds the primary role and processes all client requests.

The standby NSP cluster in a DR deployment operates in warm standby mode. If a primary cluster failure is detected, the standby automatically initializes as the primary, and fully assumes the primary role.

Note: In a DR deployment, it is strongly recommended that all primary components are in the same physical facility. An NSP administrator can align the NSP component roles, as required.

NSP Role Manager

In a DR NSP deployment, the Role Manager runs in an NSP cluster and acts as a Kubernetes controller. The Role Manager monitors the Kubernetes objects for changes, and updates the objects as required based on the current primary or standby site role.

The Role Manager has the following operation modes:

- standalone: The Role Manager sets the cluster mode to 'active' at initialization time, and does nothing more.

- DR: The Role Manager negotiates the local role with the DR peer, determining which cluster will run in 'active' and which in 'standby' mode.

The Role Manager uses the configuration in the dr section of the NSP configuration file to identify the local and peer sites.

The NSP monitors the following NSP base services in a DR deployment:

DR fault conditions

If any base service in a DR deployment is unavailable for more than three minutes, or two instances of a service in an HA+DR deployment are unavailable for more than three minutes, the NSP responds as follows:

- An activity switch occurs; consequently, the peer NSP cluster assumes the primary role.

- An alarm is raised against the service or containing pod to indicate that the service or pod is down.

  Note: Depending on the circumstances, a base service disruption may not generate such an alarm.

- A major ActivitySwitch alarm is raised against the former active site, which is now the standby site.
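The trigger condition above can be expressed as a simple check. The following Python sketch is an illustration of the stated rule only, not the NSP implementation: the function name, the input structure, and the service name used in the example are hypothetical. It assumes that the caller already knows how long each unavailable instance of a service has been down.

```python
from datetime import timedelta

# Threshold stated in the text: unavailable for more than three minutes.
OUTAGE_THRESHOLD = timedelta(minutes=3)


def activity_switch_required(outages, ha_enabled):
    """Decide whether the stated DR fault condition is met.

    outages: dict mapping a service name to a list of outage durations,
             one timedelta per currently unavailable instance (hypothetical
             input format for this sketch).
    ha_enabled: True for an HA+DR deployment, False for DR only.
    """
    # DR only: one unavailable service instance is enough.
    # HA+DR: two instances of the same service must be unavailable.
    required_instances = 2 if ha_enabled else 1
    for durations in outages.values():
        long_outages = [d for d in durations if d > OUTAGE_THRESHOLD]
        if len(long_outages) >= required_instances:
            return True
    return False
```

For example, with a hypothetical service named `example-service` that has one instance down for four minutes, the check returns `True` for a DR-only deployment and `False` for an HA+DR deployment, where a second instance of the same service would also need to exceed the threshold.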

The following are the alarms that the NSP raises against the NmsSystem object in response to such a failure:

Note: If you clear an alarm while the failure condition is still present, the NSP does not raise the alarm again.

The following example describes an alarm condition in a simple DR deployment.

- An activity switch occurs; the standby site consequently assumes the primary role.

- A major ActivitySwitch alarm is raised against the former primary site, which is now the standby site.

Integrated component redundancy

In a DR NSP deployment, each integrated component of the system must also be redundant. For example, if a DR NSP deployment includes classic management, the NFM-P must also be deployed in a redundant configuration.

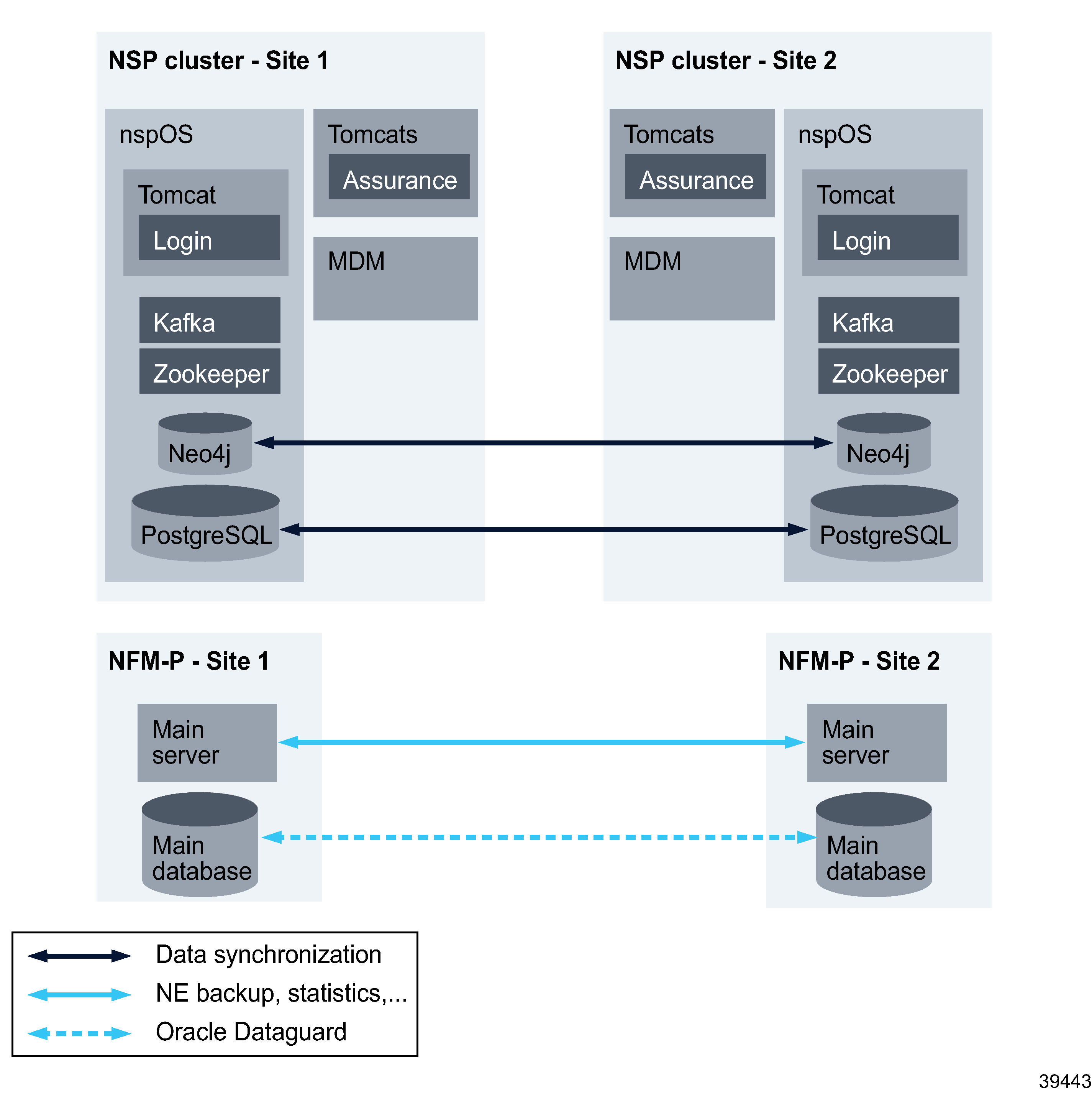

The following figure shows a simple NSP DR deployment.

Figure 8-1: NSP DR deployment with integrated NFM-P

High availability

An NSP cluster deployment supports high availability of critical services through replica pods in a container environment. Specific pods are deployed with multiple replicas.

The containerized NSP cluster VMs support HA deployment.

Note: In an enhanced/HA deployment, if node4 were to go down due to an ungraceful shutdown (such as a power outage), a switchover would be triggered.

High availability and NSP file service

When an NSP cluster is deployed with HA and the active nsp-file-service pod restarts, or when a switchover to the standby pod occurs, the NSP is not immediately available to service incoming file service requests.

The NSP file service requires several minutes to recover from a pod restart or switchover. Until the primary pod is fully initialized, the NSP rejects incoming file-service requests, which must be retried when the primary pod is available.
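Because rejected requests must be retried, a client that calls the file service may benefit from a retry loop with increasing delays. The following Python sketch shows one possible client-side approach and is not taken from the NSP documentation: the `FileServiceUnavailable` exception, the `call_with_retry` function, and the default delays are hypothetical, and the `request` callable stands in for whatever call the client actually makes.

```python
import time


class FileServiceUnavailable(Exception):
    """Hypothetical error raised when a file-service request is rejected."""


def call_with_retry(request, attempts=10, initial_delay=5.0,
                    max_delay=60.0, sleep=time.sleep):
    """Call request() until it succeeds or the attempts are exhausted.

    The delay doubles after each rejected attempt, up to max_delay, so
    that retries span the several minutes the pod may need to recover.
    """
    delay = initial_delay
    for attempt in range(1, attempts + 1):
        try:
            return request()
        except FileServiceUnavailable:
            if attempt == attempts:
                raise  # Give up and surface the last rejection.
            sleep(delay)
            delay = min(delay * 2, max_delay)
```

With the defaults shown, the waits between the ten attempts add up to 375 seconds, which is an arbitrary choice for this sketch; the values should be tuned to the recovery time observed in a given deployment.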

In the event of an NSP file-service pod switchover, the NSP raises the following alarm: