NSP deployment

NSP deployment types

An NSP deployment in a data center consists of the following:

- container environment in which a Kubernetes orchestration layer co-ordinates NSP service deployment in a group of virtual machines called the NSP cluster. Each VM in the cluster is deployed as a Kubernetes node.
- RPM-based components on separate stations or VMs outside the NSP cluster

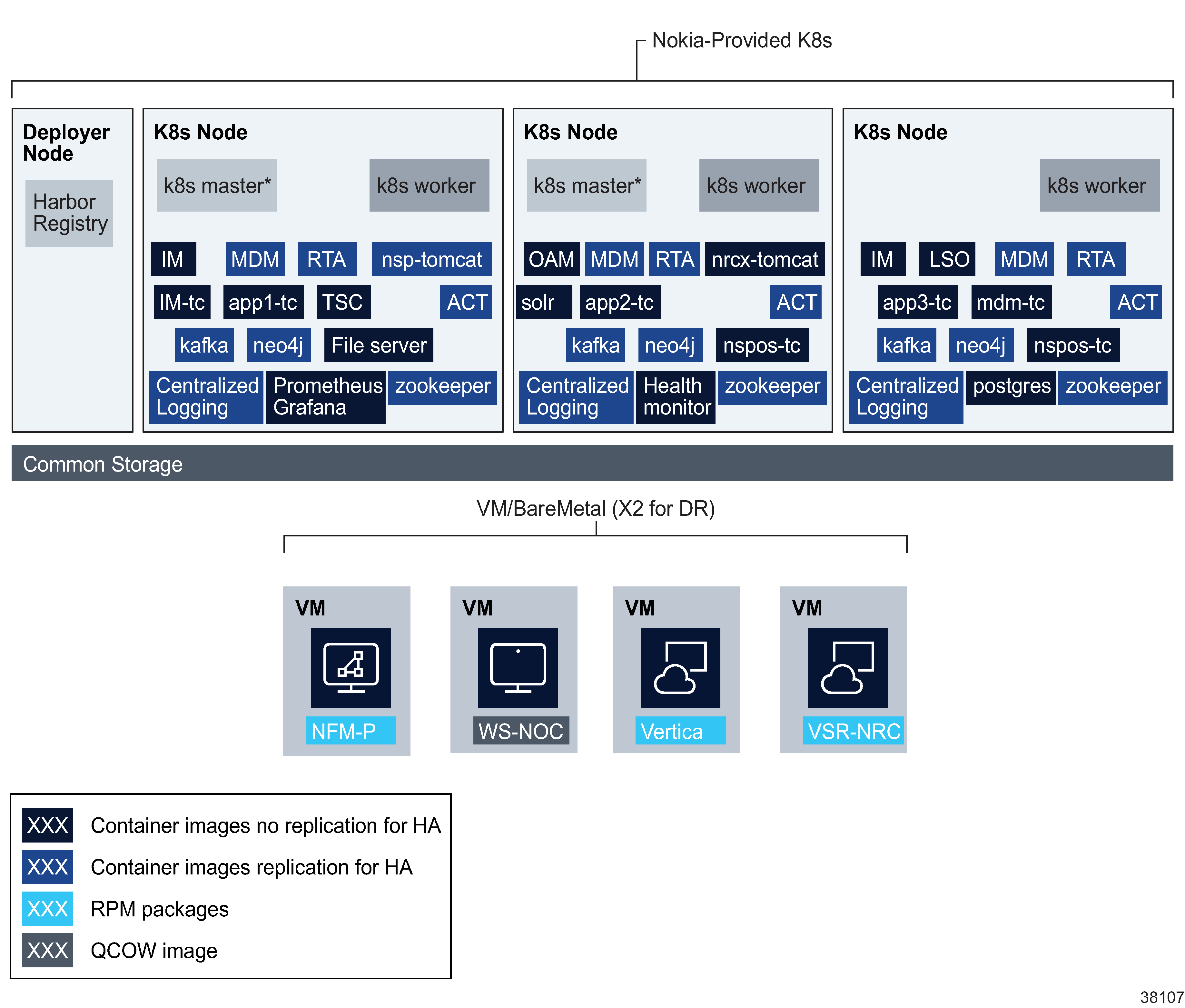

Production deployment

Figure 1-1, NSP system, production deployment shows an example three-node NSP cluster and a number of external RPM-based components. Depending on the specified NSP deployment profile and installation options, a cluster may have additional nodes.

Figure 1-1: NSP system, production deployment

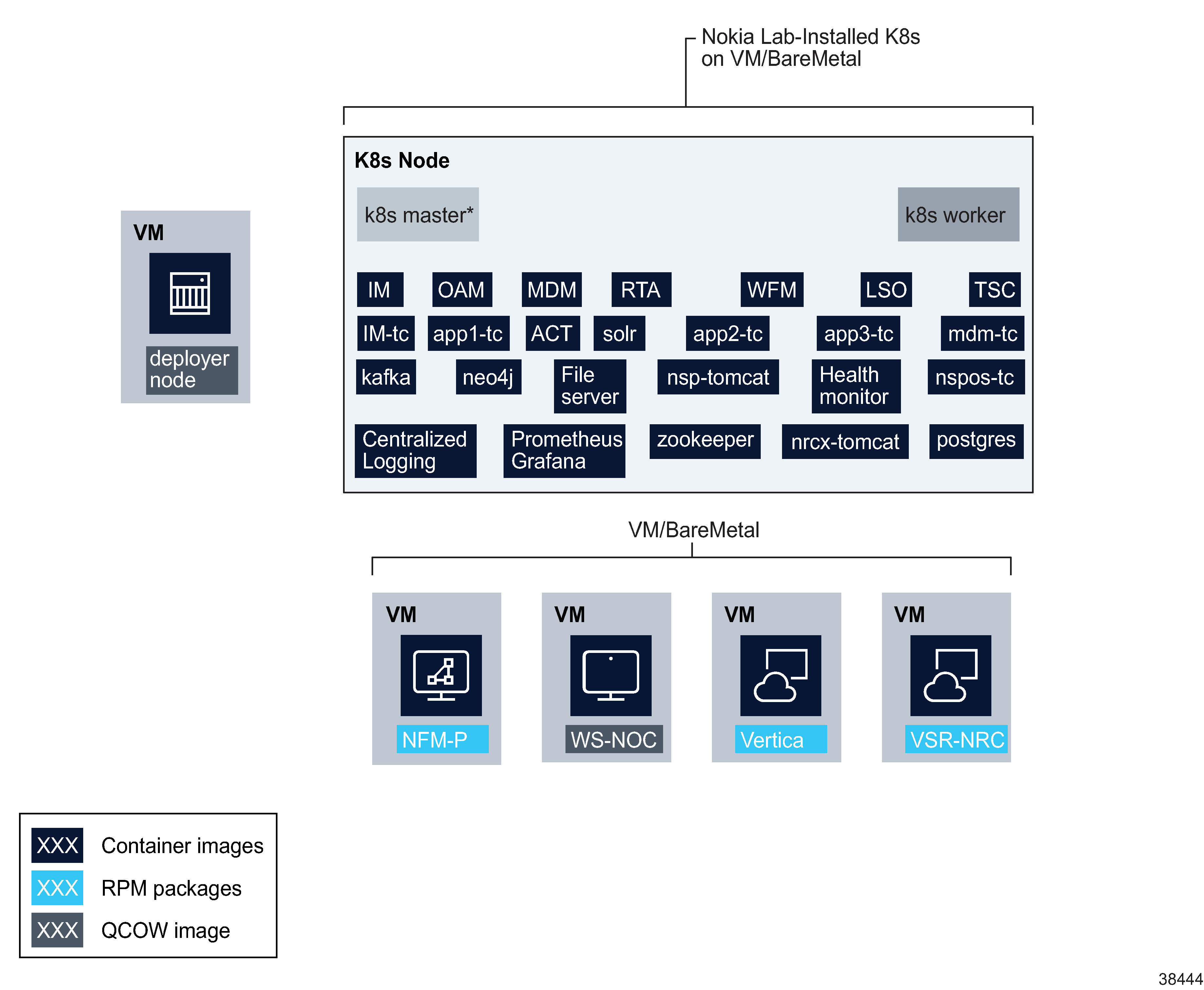

Lab deployment

A one-node NSP cluster is used in a lab deployment, as shown in Figure 1-2, NSP system, lab deployment.

Figure 1-2: NSP system, lab deployment

NSP system redundancy

NSP installations can provide redundancy through two mechanisms:

- High-availability (HA) is a local fault recovery mechanism within a multi-node NSP cluster. HA uses Kubernetes pod replicas to ensure minimal downtime in the event of the failure of a pod that provides critical services to the cluster, avoiding a system switchover to a redundant DR data center. Single-node NSP deployments cannot benefit from the inherent fault tolerance provided by HA.
- Disaster recovery (DR) is a redundancy option available to any deployment size where two identical NSP installations exist in geographically separate data centers, providing fundamental network resiliency through geographical redundancy. Failover times for DR deployments are significantly longer than HA fault recovery and result in a brief interruption of services. In a DR deployment, the geographically dispersed installations must be identical in terms of NSP cluster components and redundancy models.

You must ensure that your NSP deployment has the redundancy that your use case requires. Nokia recommends combining HA and DR for the most robust redundancy solution.

An NSP deployment can include the NFM-P and WS-NOC, which also support redundant deployment. An NSP deployment can have a mixed redundancy model when the NSP cluster is deployed as an enhanced (HA) deployment type. In this scenario, the NSP cluster can be standalone, while the NFM-P or WS-NOC components can be deployed with DR redundancy.

Note: A redundant NSP deployment supports classic HA and fast-HA WS-NOC deployment; see the NSP 24.8 Release Notice for compatibility information.

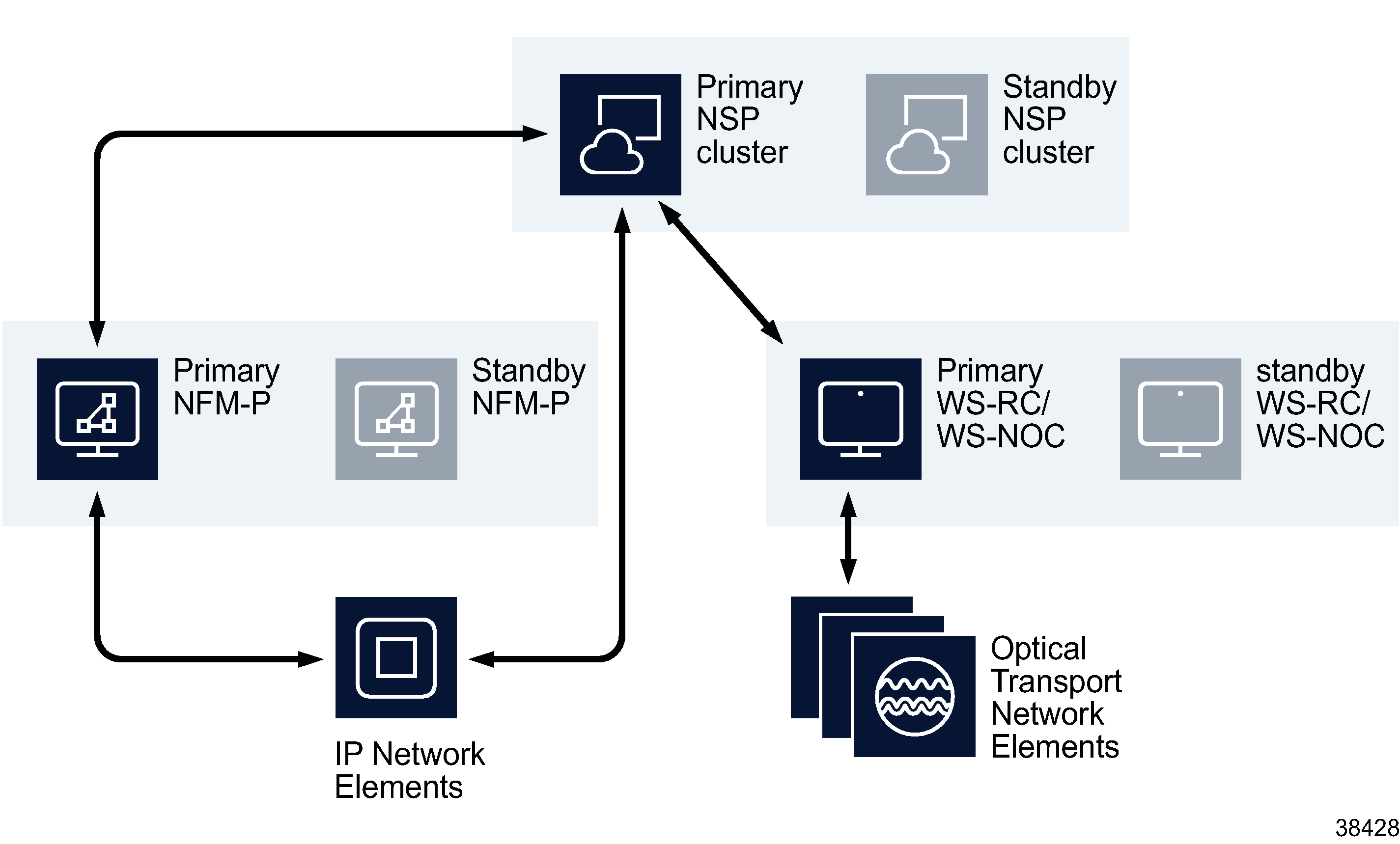

In a DR deployment of NSP that includes the NFM-P and WS-NOC, the primary NSP cluster operates independently of the primary NFM-P and WS-NOC; if the NSP cluster undergoes an activity switch, the new primary cluster connects to the primary NFM-P and WS-NOC. Similarly, if the NFM-P or WS-NOC undergoes an activity switch, the primary NSP cluster connects to the new primary NFM-P or WS-NOC.
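The independence of the activity switches described above can be illustrated with a minimal sketch. The `RedundantSystem` class, the `current_connection` helper, and the site names `dc1` and `dc2` are hypothetical and exist only for illustration; they are not part of any NSP interface.

```python
from dataclasses import dataclass


@dataclass
class RedundantSystem:
    """A redundant component with one instance per data center (hypothetical model)."""
    name: str
    primary_site: str
    standby_site: str

    def activity_switch(self) -> None:
        """Swap the primary and standby roles of this component only."""
        self.primary_site, self.standby_site = self.standby_site, self.primary_site


def current_connection(nsp: RedundantSystem, peer: RedundantSystem) -> str:
    """Describe the active link: the primary NSP always talks to the primary peer."""
    return f"{nsp.name}@{nsp.primary_site} -> {peer.name}@{peer.primary_site}"


# Site names are assumptions made for this example.
nsp = RedundantSystem("NSP", "dc1", "dc2")
nfmp = RedundantSystem("NFM-P", "dc1", "dc2")

print(current_connection(nsp, nfmp))  # NSP@dc1 -> NFM-P@dc1
nsp.activity_switch()                 # NSP cluster switches; NFM-P is unaffected
print(current_connection(nsp, nfmp))  # NSP@dc2 -> NFM-P@dc1
nfmp.activity_switch()                # NFM-P switches; NSP stays where it is
print(current_connection(nsp, nfmp))  # NSP@dc2 -> NFM-P@dc2
```

In this model each component tracks only its own roles, so a switch in one never forces a switch in the other.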

Figure 1-3, DR NSP deployment including NFM-P and WS-NOC shows a fully redundant NSP deployment that includes the NFM-P and WS-NOC.

Figure 1-3: DR NSP deployment including NFM-P and WS-NOC

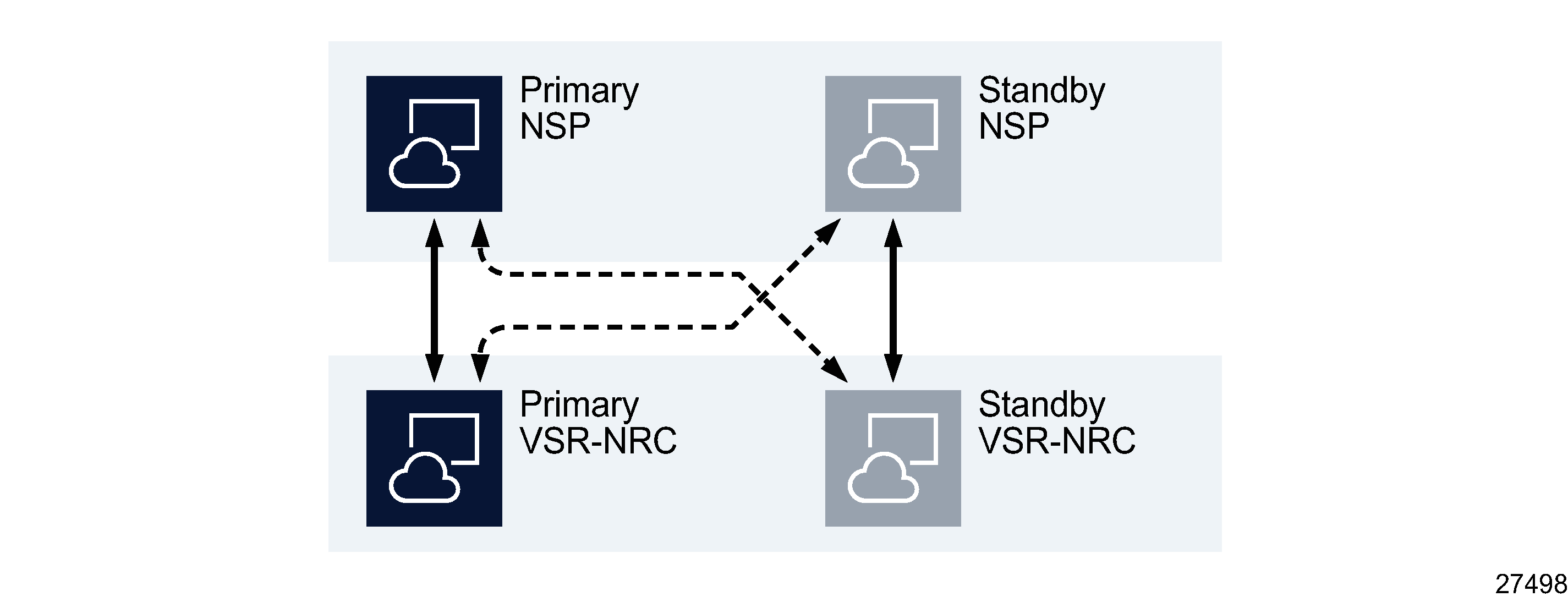

VSR-NRC redundancy

The VSR-NRC also supports redundant deployment. A standalone NSP can be deployed with a standalone or redundant VSR-NRC. When the NSP is installed with a redundant VSR-NRC and the communication channel between the NSP and the primary VSR-NRC fails, the NSP switches communication to the standby VSR-NRC.

In a DR deployment of NSP, a redundant deployment of VSR-NRC is required. The primary NSP communicates with NEs through the primary VSR-NRC; if the communication channel to the primary VSR-NRC fails, the primary NSP can switch to the standby VSR-NRC.

Figure 1-4: Redundant NSP deployment with redundant VSR-NRC

When an activity switch takes place between redundant NSP clusters, the new active NSP cluster communicates with IP NEs through its corresponding VSR-NRC instance.
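The VSR-NRC rules in this section can be summarized as a small planning check. The sketch below is illustrative only: the function names `check_vsr_nrc_plan` and `pick_vsr_nrc` and their parameters are assumptions, not NSP configuration options or APIs.

```python
from typing import Optional


def check_vsr_nrc_plan(nsp_is_dr: bool, vsr_nrc_is_redundant: bool) -> None:
    """Raise ValueError for the one combination the text rules out."""
    if nsp_is_dr and not vsr_nrc_is_redundant:
        raise ValueError("a DR NSP deployment requires a redundant VSR-NRC")


def pick_vsr_nrc(primary_channel_up: bool, vsr_nrc_is_redundant: bool) -> Optional[str]:
    """Return which VSR-NRC the NSP would use, or None if none is reachable."""
    if primary_channel_up:
        return "primary"
    if vsr_nrc_is_redundant:
        # Channel to the primary VSR-NRC failed: fall back to the standby.
        return "standby"
    return None


check_vsr_nrc_plan(nsp_is_dr=False, vsr_nrc_is_redundant=False)  # allowed
check_vsr_nrc_plan(nsp_is_dr=True, vsr_nrc_is_redundant=True)    # allowed
print(pick_vsr_nrc(primary_channel_up=False, vsr_nrc_is_redundant=True))  # standby
```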

MDM redundancy and HA

The MDM is deployed within the NSP cluster. A maximum of two MDM pods can be deployed on each node in an NSP cluster; for example, a three-node cluster can deploy a maximum of six MDM pods. In a DR deployment, each NSP cluster has the same number of nodes and MDM instances.

In an HA NSP cluster, the MDM pods are deployed in an N+M configuration (where N+M equals the number of nodes in the cluster), with N active MDM instances and M standby instances. If an active MDM instance fails, a standby MDM instance in the active cluster takes over management of the nodes that were managed by the failed instance. When the failed instance recovers, it becomes a standby instance; it does not automatically revert to active. If more than M active MDM instances fail simultaneously, a manual activity switch to the standby NSP cluster is required. Each NSP cluster must have the same N+M MDM configuration.
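The N+M behavior described above can be modeled with a short sketch. This is a toy model under stated assumptions, not the MDM implementation: the `MdmPool` class, its methods, and the instance names `mdm-0` to `mdm-2` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MdmPool:
    """Toy model of N active and M standby MDM instances in one NSP cluster."""
    active: list[str]
    standby: list[str]
    failed: list[str] = field(default_factory=list)

    def fail(self, *instances: str) -> bool:
        """Fail the given active instances simultaneously.

        Returns True if a standby took over for every failed instance, and
        False if more instances failed than standbys were available (the case
        in which the text calls for a manual activity switch).
        """
        for name in instances:
            self.active.remove(name)  # raises ValueError if not active
            self.failed.append(name)
        covered = True
        for _ in instances:
            if self.standby:
                self.active.append(self.standby.pop(0))
            else:
                covered = False
        return covered

    def recover(self, name: str) -> None:
        """A recovered instance rejoins as standby; it does not revert to active."""
        self.failed.remove(name)
        self.standby.append(name)


# A 2+1 pool: two active instances and one standby (names are assumptions).
pool = MdmPool(active=["mdm-0", "mdm-1"], standby=["mdm-2"])
print(pool.fail("mdm-0"))           # True: mdm-2 takes over
pool.recover("mdm-0")               # mdm-0 returns as standby
print(pool.active, pool.standby)    # ['mdm-1', 'mdm-2'] ['mdm-0']
print(pool.fail("mdm-1", "mdm-2"))  # False: two failures, only one standby
```

The last call fails two active instances while only one standby exists, which corresponds to the "more than M" case in the text.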