PBB-Epipe

This chapter provides information about Provider Backbone Bridging (PBB) Ethernet Virtual Leased Line (Epipe) services in an MPLS-based network, as applicable to SR OS.

Applicability

This chapter was initially written for SR OS Release 7.0.R5. The CLI in the current edition corresponds to SR OS Release 20.10.R2. There are no specific prerequisites.

Overview

RFC 7041, Extensions to VPLS PE model for Provider Backbone Bridging, describes the PBB-VPLS model supported by SR OS. This model expands the VPLS PE model to support PBB as defined by IEEE 802.1ah.

The PBB model is organized around a B-component (backbone instance) and an I-component (customer instance). In Nokia’s implementation of the PBB model, an Epipe can be used as the I-component for point-to-point services. Multiple I-VPLS and Epipe services can all be mapped to the same B-VPLS (backbone VPLS instance).

Using an Epipe scales the E-Line services because no MAC switching, learning, or replication is required to deliver the point-to-point service. All packets ingressing the customer SAP are PBB-encapsulated and unicast through the B-VPLS tunnel using the backbone destination MAC of the remote PBB PE. All packets egressing the B-VPLS destined for the Epipe are PBB de-encapsulated and forwarded to the customer SAP.
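The encapsulation step described above can be sketched in a few lines of Python. This is only an illustration of the 802.1ah header layout, not SR OS code: it assumes no B-TAG is present, leaves the priority and flag bits of the I-TAG at zero, and the function names are hypothetical.

```python
import struct
from typing import Tuple

PBB_ETYPE = 0x88E7  # default I-TAG Ethertype defined by IEEE 802.1ah


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'xx:xx:xx:xx:xx:xx' into 6 raw bytes."""
    return bytes(int(octet, 16) for octet in mac.split(":"))


def pbb_encapsulate(customer_frame: bytes, b_da: str, b_sa: str, isid: int) -> bytes:
    """Prepend B-DA, B-SA, the PBB Ethertype, and a 4-byte I-TAG to a customer frame."""
    if not 0 <= isid <= 0xFFFFFF:
        raise ValueError("ISID must fit in 24 bits")
    i_tag = struct.pack("!I", isid)  # upper 8 bits (priority and flags) stay zero
    header = mac_to_bytes(b_da) + mac_to_bytes(b_sa) + struct.pack("!H", PBB_ETYPE) + i_tag
    return header + customer_frame


def pbb_decapsulate(pbb_frame: bytes) -> Tuple[int, bytes]:
    """Return (isid, customer_frame) for a PBB frame that carries no B-TAG."""
    etype, i_tci = struct.unpack("!HI", pbb_frame[12:18])
    if etype != PBB_ETYPE:
        raise ValueError("not a PBB frame")
    return i_tci & 0xFFFFFF, pbb_frame[18:]
```

The ingress node only needs the configured backbone destination MAC, its own source B-MAC, and the ISID; the customer frame is carried unchanged, which is why no customer MAC learning is involved.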

Some use cases for PBB-Epipe are:

Get a more efficient and scalable solution for point-to-point services:

Up to 8K VPLS services per box are supported (including I-VPLS or B-VPLS). Using an I-VPLS for point-to-point services consumes VPLS resources and performs unnecessary customer MAC learning. A better solution is to connect a PBB-Epipe to a B-VPLS instance, where there is no customer MAC switching/learning.

Take advantage of the pseudowire aggregation in the M:1 model:

Many Epipe services may use only a single service and set of pseudowires over the backbone.

Have a uniform provisioning model for both point-to-point (Epipe) and multipoint (VPLS) services.

Using the PBB-Epipe, the core MPLS/pseudowire infrastructure does not need to be modified: the new Epipe inherits the existing pseudowire and MPLS structure already configured on the B-VPLS and there is no need for configuring new tunnels or pseudowire switching instances at the core.

Knowledge of the PBB-VPLS architecture and functionality on the service router family is assumed throughout this section. For additional information, see the relevant Nokia user documentation.

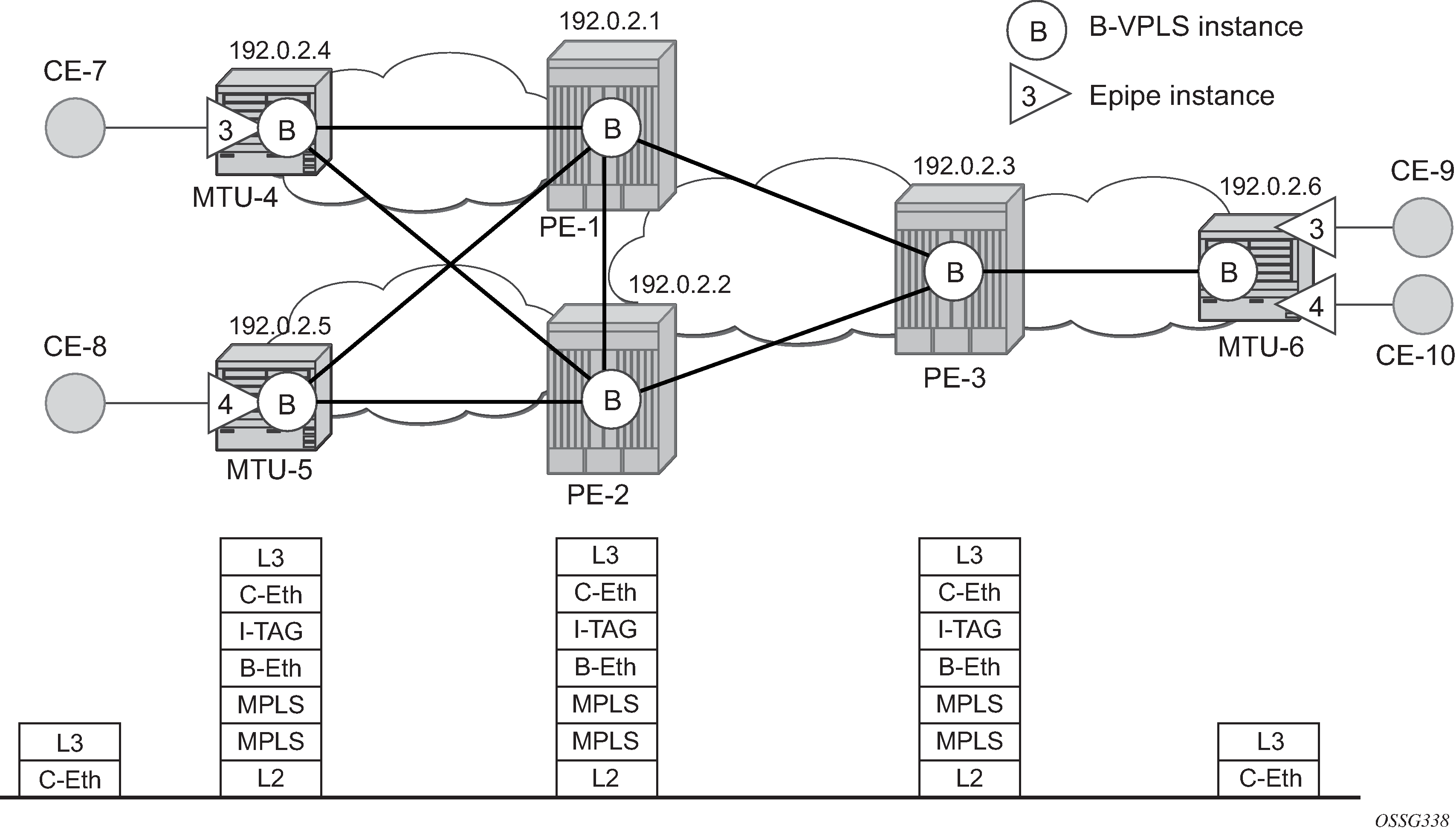

Example topology shows the topology that is used throughout the rest of the chapter.

The setup consists of three SR OS routers in the core (PE-1, PE-2, and PE-3) and three Multi-Tenant Unit (MTU) nodes connected to the core. A backbone VPLS instance (B-VPLS 101) will be defined on all six nodes, whereas two Epipe services will be defined as illustrated in Example topology (Epipe 3 in nodes MTU-4 and MTU-6, Epipe 4 in nodes MTU-5 and MTU-6). Those Epipe services will be multiplexed into the common B-VPLS 101, using the I-Service ID (ISID) field within the I-TAG as the demultiplexer field required at the egress MTU to differentiate each specific customer. I-VPLS and Epipe services can be mapped to the same B-VPLS.

The B-VPLS domain constitutes an H-VPLS network itself, with spoke-SDPs from the MTUs to the core PE layer. The MTUs can connect to the PEs using either active/standby (A/S) spoke-SDPs (as on MTU-4 and MTU-5) or a single non-redundant spoke-SDP (as on MTU-6).
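As a rough illustration of the demultiplexing role of the ISID, the following Python sketch models the egress lookup on MTU-6 for this topology. The table layout and function name are hypothetical; the SAP and ISID values are the ones configured later in this chapter, and how a real node treats an unknown ISID is not modelled.

```python
# Hypothetical egress table on MTU-6: (B-VPLS, ISID) -> local Epipe and SAP.
ISID_TABLE = {
    (101, 3): "epipe 3, sap 1/1/1:9",
    (101, 4): "epipe 4, sap 1/1/1:10",
}


def demultiplex(b_vpls: int, isid: int):
    """Return the local service for a received ISID, or None if no service uses it."""
    return ISID_TABLE.get((b_vpls, isid))
```

Both Epipes share B-VPLS 101, so the ISID is the only field that tells the egress node which customer SAP a frame belongs to.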

The protocol stack being used along the path between the CEs is represented in Example topology.

Configuration

This section describes all the relevant PBB-Epipe configuration tasks for the setup shown in Example topology. The appropriate B-VPLS and associated IP/MPLS configuration is out of the scope of this document. In this particular example, the following protocols will be configured beforehand in the core:

ISIS-TE as IGP with all the interfaces being level-2. Alternatively, OSPF could have been used.

RSVP-TE as the MPLS protocol to signal the transport tunnels.

LSPs between core PEs will be fast re-route protected (facility bypass tunnels) whereas LSP tunnels between MTUs and PEs will not be protected.

The protection between MTU-4, MTU-5 and PE-1, PE-2 will be based on the A/S pseudowire protection configured in the B-VPLS.

BGP is configured for auto-discovery (BGP-AD, Layer 2 VPN family), because FEC 129 will be used to establish the pseudowires between PEs in the core (FEC 128 is used between MTU and PE nodes).

Once the IP/MPLS infrastructure is up and running, the service configuration tasks described in the following sections can be implemented.

PBB Epipe service configuration

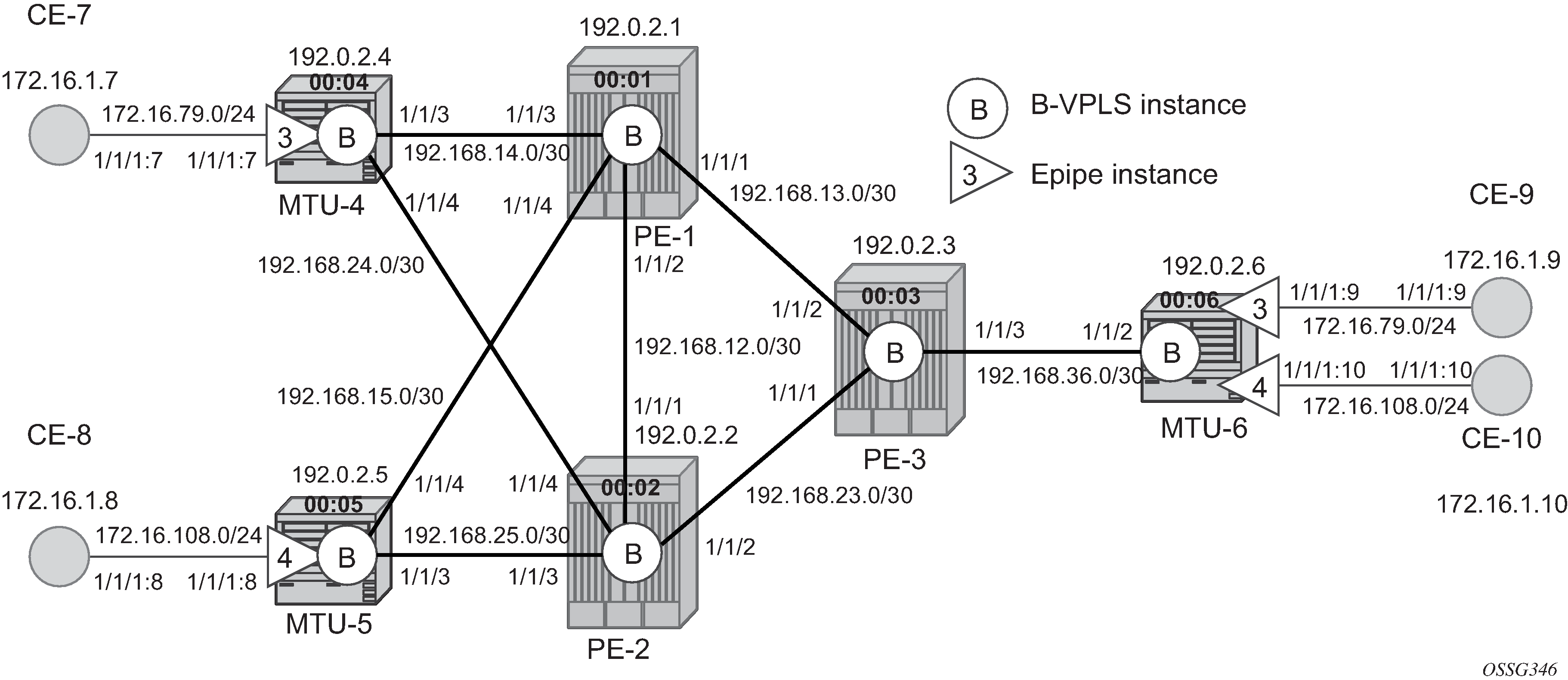

Setup detailed view shows an example where the Epipes 3 and 4 are using the B-VPLS 101 in the core. The same B-VPLS which is multiplexing the Epipe services into a common service provider infrastructure can also be used to connect the I-VPLS instances existing in the network for multipoint services.

B-VPLS and PBB configuration

First, configure the B-VPLS instance that will carry the PBB traffic. There is no specific requirement on the B-VPLS to support Epipes. The following shows the B-VPLS configuration on MTU-4 and PE-1.

# on MTU-4:

configure

service

vpls 101 name "B-VPLS 101" customer 1 b-vpls create

service-mtu 2000

pbb

source-bmac 00:04:04:04:04:04

exit

endpoint "core" create

no suppress-standby-signaling

exit

spoke-sdp 41:101 endpoint "core" create

precedence primary

exit

spoke-sdp 42:101 endpoint "core" create

exit

no shutdown

exit

# on PE-1:

configure

service

pw-template 1 use-provisioned-sdp create

split-horizon-group "CORE"

exit

exit

vpls 101 name "B-VPLS 101" customer 1 b-vpls create

service-mtu 2000

pbb

source-bmac 00:01:01:01:01:01

exit

bgp

route-target export target:65000:101 import target:65000:101

pw-template-binding 1

exit

exit

bgp-ad

vpls-id 65000:101

no shutdown

exit

spoke-sdp 14:101 create

exit

spoke-sdp 15:101 create

exit

no shutdown

exit

The relevant B-VPLS commands are in bold.

The keyword b-vpls is given at creation time and therefore it cannot be added to an existing regular VPLS instance. Besides the b-vpls keyword, the B-VPLS is a regular VPLS instance in terms of configuration, with the following exceptions:

The B-VPLS service MTU must be at least 18 bytes greater than the service MTU of the multiplexed Epipe instances. In this example, the Epipe instances have the default service MTU (1514 bytes); therefore, an MTU equal to or greater than 1532 bytes must be configured. In this particular example, an MTU of 2000 bytes is configured in the B-VPLS instance throughout the network.

The source B-MAC is the MAC that will be used as a source when the PBB traffic is originated from that node. It is possible to configure a source B-MAC per B-VPLS instance (if there is more than one B-VPLS) or a common source B-MAC that will be shared by all the B-VPLS instances in the node. A common B-MAC is configured as follows:

# on MTU-4:

configure service pbb source-bmac 00:04:04:04:04:04

# on MTU-5:

configure service pbb source-bmac 00:05:05:05:05:05

# on MTU-6:

configure service pbb source-bmac 00:06:06:06:06:06
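The service MTU rule stated above (B-VPLS MTU at least 18 bytes larger than the MTU of any multiplexed service) is simple arithmetic and can be checked with a small helper. The sketch below is an offline planning aid with hypothetical function names; the breakdown of the 18 bytes in the comment is an assumption based on the 802.1ah header without a B-TAG.

```python
# 18 bytes as required by the rule above; assumed breakdown:
# B-DA (6) + B-SA (6) + PBB Ethertype (2) + I-TAG (4).
PBB_OVERHEAD = 18


def min_bvpls_mtu(service_mtus):
    """Smallest B-VPLS service MTU that can carry every multiplexed service."""
    return max(service_mtus) + PBB_OVERHEAD


def bvpls_mtu_ok(bvpls_mtu, service_mtus):
    """True if the configured B-VPLS MTU satisfies the 18-byte rule."""
    return bvpls_mtu >= min_bvpls_mtu(service_mtus)
```

With the default Epipe service MTU of 1514 bytes, `min_bvpls_mtu([1514])` gives 1532, so the 2000 bytes configured in this example leave ample headroom.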

The following considerations will be taken into account when configuring the B-VPLS:

B-VPLS SAPs:

Ethernet null, dot1q, and qinq encapsulations are supported.

Default SAP types are blocked in the CLI for the B-VPLS SAP.

B-VPLS SDPs:

For MPLS, both mesh and spoke-SDPs with split-horizon groups are supported.

Similar to a regular pseudowire, the outgoing PBB frame on an SDP (that is, a B-pseudowire) contains a BVID q-tag only if the pseudowire type is Ethernet VLAN (vc-type=vlan). If the pseudowire type is Ethernet (vc-type=ether), the BVID q-tag is stripped before the frame goes out.

BGP-AD is supported in the B-VPLS, therefore, spoke-SDPs in the B-VPLS can be signaled using FEC 128 or FEC 129. In this example, BGP-AD and FEC 129 are used. A split-horizon group has been configured to emulate the behavior of mesh SDPs in the core.

While Multiple MAC Registration Protocol (MMRP) is useful to optimize the flooding in the B-VPLS domain and build a flooding tree on a per I-VPLS basis, it does not have any effect for Epipes because the destination B-MAC used for Epipes is always the destination B-MAC configured in the Epipe and never the group B-MAC corresponding to the ISID.

If a local Epipe instance is associated with the B-VPLS, local frames originated or terminated on local Epipe(s) are PBB encapsulated or de-encapsulated using the PBB Etype provisioned under the related port or SDP component.

By default, the PBB Etype is 0x88e7 (which is the standard one defined in the 802.1ah, indicating that there is an I-TAG in the payload) but this PBB Etype can be changed if required due to interoperability reasons. This is the way to change it at port and/or SDP level:

A:MTU-4# configure port 1/1/3 ethernet pbb-etype

- pbb-etype <0x0600..0xffff>

- no pbb-etype

<0x0600..0xffff> : [1536..65535] - accepts in decimal or hex

A:MTU-4# configure service sdp 41 pbb-etype

- no pbb-etype [<0x0600..0xffff>]

- pbb-etype <0x0600..0xffff>

<0x0600..0xffff> : [1536..65535] - accepts in decimal or hex

The following commands are useful to check the actual PBB Etype.

A:MTU-4# show service sdp 41 detail | match PBB

Bw BookingFactor : 100 PBB Etype : 0x88e7

A:MTU-4# show port 1/1/3 | match PBB

PBB Ethertype : 0x88e7
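The CLI help above states that the PBB Etype is accepted in decimal or hex within 0x0600..0xffff. A pre-check for a provisioning script could look like the following Python sketch; the function name is hypothetical and the parsing rules are those of Python's `int()`, not of the SR OS parser.

```python
PBB_ETYPE_MIN = 0x0600
PBB_ETYPE_MAX = 0xFFFF


def parse_pbb_etype(text: str) -> int:
    """Parse a decimal or 0x-prefixed hex Etype and enforce the documented range."""
    value = int(text, 0)  # base 0 accepts '0x88e7' as well as '35047'
    if not PBB_ETYPE_MIN <= value <= PBB_ETYPE_MAX:
        raise ValueError(f"pbb-etype {text} is outside 0x0600..0xffff")
    return value
```

Both `parse_pbb_etype("0x88e7")` and `parse_pbb_etype("35047")` return the default value, since 0x88e7 is 35047 in decimal.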

Before configuring the Epipe itself, the operator can optionally configure MAC names under the PBB context. MAC names simplify the Epipe provisioning later on: if the MAC address of a remote node changes, only one configuration modification is required, as opposed to one change per affected Epipe (potentially thousands of Epipes terminated on the same remote node). The MAC names are configured in the service PBB CLI context:

*A:MTU-4# configure service pbb mac-name

- mac-name <name> <ieee-address>

- no mac-name <name>

<name> : 32 char max

<ieee-address> : xx:xx:xx:xx:xx:xx or xx-xx-xx-xx-xx-xx

# on all nodes:

configure

service

pbb

mac-name "MTU-4" 00:04:04:04:04:04

mac-name "MTU-5" 00:05:05:05:05:05

mac-name "MTU-6" 00:06:06:06:06:06

It is not required to configure a node with its own MAC address, so on MTU-4, the line defining the mac-name MTU-4 can be omitted.
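The benefit of MAC names is a single level of indirection, which the following Python sketch mimics. It is a model for illustration only: the dictionary stands in for the `mac-name` entries above, and the helper names are hypothetical.

```python
import re

MAC_NAMES = {
    "MTU-4": "00:04:04:04:04:04",
    "MTU-5": "00:05:05:05:05:05",
    "MTU-6": "00:06:06:06:06:06",
}

_MAC_RE = re.compile(
    r"(?:[0-9a-f]{2}:){5}[0-9a-f]{2}|(?:[0-9a-f]{2}-){5}[0-9a-f]{2}", re.IGNORECASE
)


def normalize_mac(mac: str) -> str:
    """Accept xx:xx:xx:xx:xx:xx or xx-xx-xx-xx-xx-xx and return the lowercase colon form."""
    if not _MAC_RE.fullmatch(mac):
        raise ValueError(f"invalid MAC address: {mac}")
    return mac.replace("-", ":").lower()


def resolve_bda(value: str, names=MAC_NAMES) -> str:
    """Resolve a backbone-dest-mac argument that is either a MAC name or an address."""
    if value in names:
        return normalize_mac(names[value])
    return normalize_mac(value)
```

If the B-MAC of MTU-6 changes, updating the one `MAC_NAMES["MTU-6"]` entry changes the result for every tunnel that refers to the name, which is the same effect the `mac-name` command has on the Epipes.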

Epipe configuration

Once the common B-VPLS is configured, the next step is the provisioning of the customer Epipe instances. For PBB-Epipes, the I-component or Epipe is composed of an I-SAP and a PBB tunnel endpoint which points to the backbone destination MAC address (B-DA).

The following outputs show the relevant CLI configuration for the two Epipe instances represented in Setup detailed view. The Epipe instances are configured on the MTU devices, whereas the core PEs are kept as customer-unaware nodes.

Epipes 3 and 4 are configured on MTU-6 as follows:

# on MTU-6:

configure

service

epipe 3 name "Epipe 3" customer 1 create

description "pbb epipe number 3"

pbb

tunnel 101 backbone-dest-mac "MTU-4" isid 3

exit

sap 1/1/1:9 create

exit

no shutdown

exit

epipe 4 name "Epipe 4" customer 1 create

description "pbb epipe number 4"

pbb

tunnel 101 backbone-dest-mac "MTU-5" isid 4

exit

sap 1/1/1:10 create

exit

no shutdown

exit

# on MTU-4:

configure

service

epipe 3 name "Epipe 3" customer 1 create

description "pbb epipe number 3"

pbb

tunnel 101 backbone-dest-mac "MTU-6" isid 3

exit

sap 1/1/1:7 create

exit

no shutdown

exit

# on MTU-5:

configure

service

epipe 4 name "Epipe 4" customer 1 create

description "pbb epipe number 4"

pbb

tunnel 101 backbone-dest-mac "MTU-6" isid 4

exit

sap 1/1/1:8 create

exit

no shutdown

exit

All Ethernet SAPs supported by a regular Epipe are also supported in the PBB Epipe. Spoke-SDPs are not supported in PBB-Epipes; that is, no spoke-SDP is allowed when PBB tunnels are configured on the Epipe.

The PBB tunnel links the SAP configured to the B-VPLS 101 existing in the core. The following parameters are accepted in the PBB tunnel configuration:

A:MTU-5# configure service epipe 4 pbb tunnel

- no tunnel

- tunnel <service-id> backbone-dest-mac <mac-name> isid <ISID>

- tunnel <service-id> backbone-dest-mac <ieee-address> isid <ISID>

<service-id> : [1..2148007978]|<svc-name:64 char max>

<mac-name> : 32 char max

<ieee-address> : xx:xx:xx:xx:xx:xx or xx-xx-xx-xx-xx-xx

<ISID> : [0..16777215]

Where:

The service-id matches the B-VPLS ID.

The backbone-dest-mac can be given by a MAC name (as in this configuration example) or the MAC address itself. It is recommended to use MAC names, as explained in the previous section.

The ISID must be specified.
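For operators who generate or audit these lines with scripts, the following Python sketch parses a `tunnel` line and enforces the ISID range shown in the CLI help. The class and function names are hypothetical, and the parser covers only the three-parameter form shown above.

```python
import shlex
from dataclasses import dataclass

ISID_MAX = 16777215  # upper bound taken from the CLI help above


@dataclass(frozen=True)
class PbbTunnel:
    b_vpls: str             # B-VPLS service ID or name
    backbone_dest_mac: str  # MAC name or IEEE address
    isid: int


def parse_tunnel(line: str) -> PbbTunnel:
    """Parse 'tunnel <service-id> backbone-dest-mac <mac> isid <ISID>'."""
    tokens = shlex.split(line)  # shlex removes the quotes around MAC names
    if (len(tokens) != 6 or tokens[0] != "tunnel"
            or tokens[2] != "backbone-dest-mac" or tokens[4] != "isid"):
        raise ValueError(f"unexpected tunnel syntax: {line}")
    isid = int(tokens[5])
    if not 0 <= isid <= ISID_MAX:
        raise ValueError("ISID out of range")
    return PbbTunnel(tokens[1], tokens[3], isid)
```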

Flood avoidance in PBB-Epipes

As already discussed in the previous section, when provisioning a PBB Epipe, the remote backbone-dest-mac must be explicitly configured on the PBB tunnel so that the ingress PBB node can build the 802.1ah encapsulation.

If the configured remote backbone destination MAC address is not known in the local FDB, the Epipe customer frames will be 802.1ah encapsulated and flooded into the B-VPLS until the MAC is learned. As previously stated, MMRP does not help to minimize the flooding because the PBB Epipes always use the configured backbone-destination-mac for flooding traffic as opposed to the group B-MAC derived from the ISID.
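The difference between the two destination addresses can be made concrete with a short Python sketch. It assumes the standard 802.1ah convention in which the group B-MAC is formed from the prefix 01:1e:83 followed by the 24-bit ISID; the function names are hypothetical.

```python
GROUP_PREFIX = bytes([0x01, 0x1E, 0x83])  # assumed 802.1ah group address prefix


def group_bmac(isid: int) -> str:
    """Group B-MAC derived from the ISID (the address MMRP registrations refer to)."""
    if not 0 <= isid <= 0xFFFFFF:
        raise ValueError("ISID must fit in 24 bits")
    raw = GROUP_PREFIX + isid.to_bytes(3, "big")
    return ":".join(f"{byte:02x}" for byte in raw)


def flood_bmac(service_type: str, isid: int, configured_bda: str = "") -> str:
    """Destination B-MAC used for traffic that has to be flooded into the B-VPLS."""
    if service_type == "ivpls":
        return group_bmac(isid)
    if service_type == "epipe":
        if not configured_bda:
            raise ValueError("an Epipe needs a configured backbone-dest-mac")
        return configured_bda.lower()
    raise ValueError(f"unknown service type: {service_type}")
```

For ISID 3, an I-VPLS would flood towards `01:1e:83:00:00:03`, whereas Epipe 3 on MTU-4 keeps using `00:06:06:06:06:06`. Because the Epipe never sends to the group address, pruning based on that address cannot limit its flooding.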

Flooding could be indefinitely prolonged in the following cases:

The backbone-destination-mac is misconfigured. The service will not work, but the operator will not detect the mistake, because the customer traffic is not dropped at the source node. Every frame is turned into an unknown unicast PBB frame and is therefore flooded into the B-VPLS domain.

The source B-MAC (source-bmac) is changed in the remote PE B-VPLS instance.

There is only unidirectional traffic in the Epipe service. In this case, the backbone-dest-mac will never be learned in the local FDB and the frames will always be flooded into the B-VPLS domain.

The remote node owning the backbone-destination-mac simply goes down.

In any of those cases, the operator can easily check whether the PBB Epipe is flooding into the B-VPLS domain, just by looking at the flood flag in the following command output:

*A:MTU-4# show service id 3 base

===============================================================================

Service Basic Information

===============================================================================

Service Id : 3 Vpn Id : 0

Service Type : Epipe

---snip---

-------------------------------------------------------------------------------

Service Access & Destination Points

-------------------------------------------------------------------------------

Identifier Type AdmMTU OprMTU Adm Opr

-------------------------------------------------------------------------------

sap:1/1/1:7 q-tag 1518 1518 Up Up

-------------------------------------------------------------------------------

PBB Tunnel Point

-------------------------------------------------------------------------------

B-vpls Backbone-dest-MAC Isid AdmMTU OperState Flood Oper-dest-MAC

-------------------------------------------------------------------------------

101 MTU-6 3 2000 Up Yes 00:06:06:06:06:06

-------------------------------------------------------------------------------

Last Status Change: 01/05/2021 16:03:03

Last Mgmt Change : 01/05/2021 16:03:03

===============================================================================

In this particular example, the PBB Epipe 3 is flooding into the B-VPLS 101, as the flood flag indicates. The operator can also confirm that the operational destination B-MAC for the PBB tunnel, MTU-6, has not been learned in the B-VPLS FDB:

*A:MTU-4# show service id 101 fdb pbb

=======================================================================

Forwarding Database, b-Vpls Service 101

=======================================================================

MAC Source-Identifier iVplsMACs Epipes Type/Age

-----------------------------------------------------------------------

No Matching Entries

=======================================================================
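The relationship between the empty FDB and the Flood flag can be modelled with a minimal learning table. The Python sketch below is a simplification for illustration: the class is hypothetical, the SDP names are placeholders, and split-horizon and active/standby rules are ignored.

```python
class BVplsFdb:
    """Toy B-VPLS forwarding database: B-MAC -> SDP on which it was learned."""

    def __init__(self):
        self._entries = {}

    def learn(self, src_bmac: str, sdp: str) -> None:
        """Record the source B-MAC of a received PBB frame (data or CCM)."""
        self._entries[src_bmac.lower()] = sdp

    def forward(self, dest_bmac: str, all_sdps):
        """Return ('unicast', [sdp]) if the B-DA is known, else ('flood', all SDPs)."""
        sdp = self._entries.get(dest_bmac.lower())
        if sdp is not None:
            return "unicast", [sdp]
        return "flood", list(all_sdps)
```

With no entry for 00:06:06:06:06:06, every Epipe 3 frame takes the `flood` branch. The entry appears only when a frame sourced from that B-MAC is received, which never happens if the customer traffic is unidirectional.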

In small B-VPLS environments (up to 20 B-VPLSs, each with 10 MC-LAGs), it is possible to configure the PBB B-VPLS MAC notification mechanism to send notification messages at regular intervals (using the renotify parameter), rather than being only event-driven. This can avoid flooding into the B-VPLS.

Flooding cases 1 and 2 — Wrong backbone-dest-mac

Flooding cases 1 and 2 should be fixed after detecting the flooding (see previous commands) and checking the FDBs and PBB tunnel configurations.

Flooding case 3 — Unidirectional traffic: virtual MEP and CCM configuration

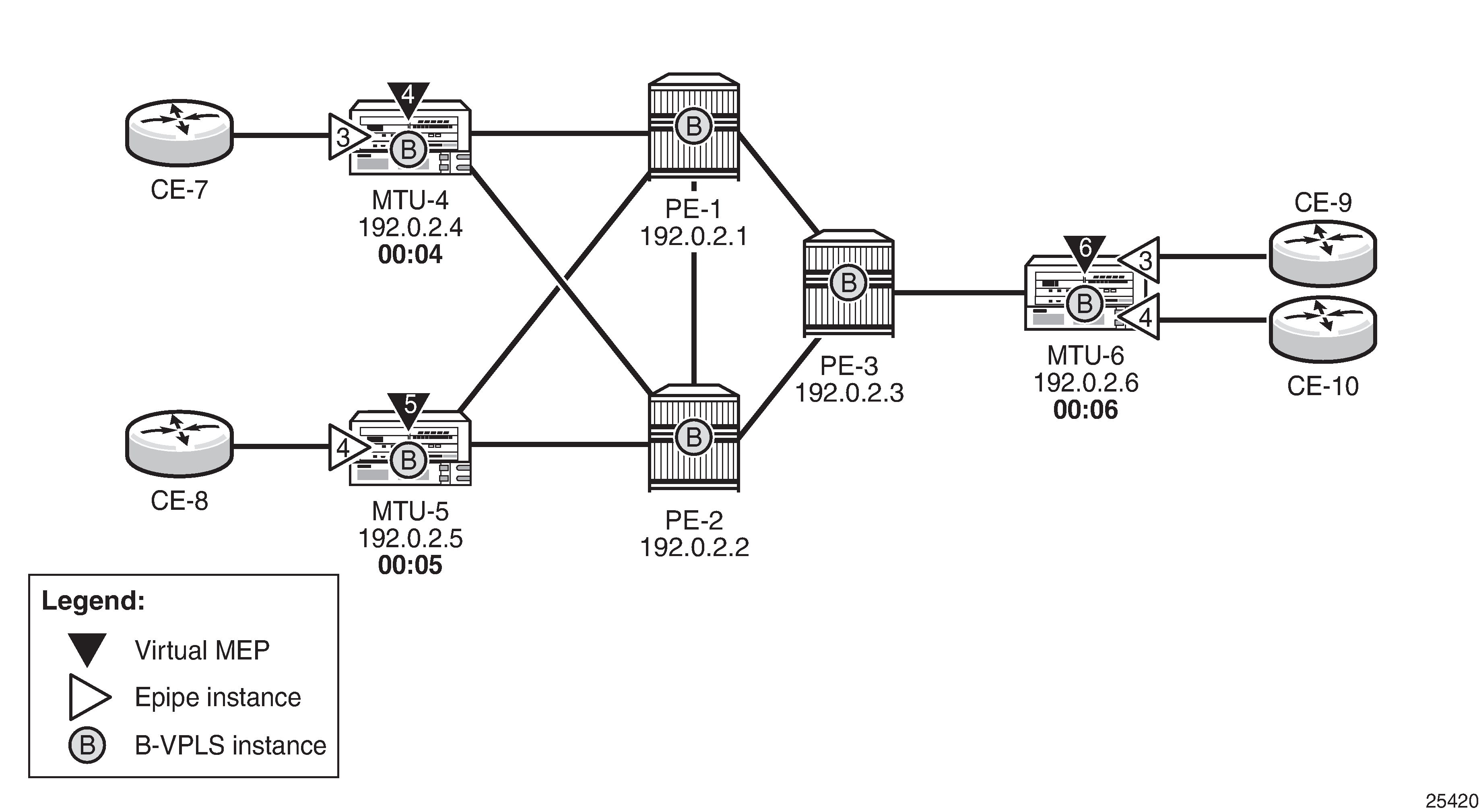

For flooding case 3 (unidirectional traffic), Nokia recommends the use of ETH-CFM (802.1ag/Y.1731 Connectivity Fault Management) virtual Maintenance End Points (MEPs). By defining a virtual MEP per node terminating a PBB-Epipe, configuring the MEP MAC address to be the source-bmac value and activating continuity check messages (CCM), a twofold effect is achieved:

The pbb-tunnel backbone-destination-mac will always be learned in the local FDB, as long as the remote virtual MEP is active and sending CC messages. As a result, there will be no flooding even when the traffic is unidirectional.

An automatic proactive OAM mechanism exists to detect failures on remote nodes, which ultimately cause unnecessary flooding in the B-VPLS domain.

Virtual MEPs for flooding avoidance shows an example where the virtual MEPs MEP4, MEP5, and MEP6 are configured in B-VPLS 101:

The following configuration example uses MTU-4. First, the general ETH-CFM configuration is made:

# on MTU-4:

configure

eth-cfm

domain 1 format none level 3 admin-name "domain 1"

association 1 format icc-based name "B-VPLS-000101" admin-name "assoc-1"

bridge-identifier 101

exit

remote-mepid 5

remote-mepid 6

exit

exit

exit

Then the actual virtual MEP configuration is made:

# on MTU-4:

configure

service

vpls 101

eth-cfm

mep 4 domain 1 association 1

ccm-enable

mac-address 00:04:04:04:04:04

no shutdown

exit

exit

exit

The MAC address configured for the MEP4 matches the MAC address configured as the source-bmac on MTU-4, which is the backbone-destination-mac configured on the Epipe 3 PBB tunnel on MTU-6. The source-BMAC address on MTU-4 is 00:04:04:04:04:04, as follows:

# on MTU-4:

configure

service

pbb

source-bmac 00:04:04:04:04:04

mac-name "MTU-4" 00:04:04:04:04:04

mac-name "MTU-5" 00:05:05:05:05:05

mac-name "MTU-6" 00:06:06:06:06:06

exit

The backbone destination MAC address configured on MTU-6 uses MAC name "MTU-4", which corresponds to MAC address 00:04:04:04:04:04, as follows:

# on MTU-6:

configure

service

pbb

source-bmac 00:06:06:06:06:06

mac-name "MTU-4" 00:04:04:04:04:04

mac-name "MTU-5" 00:05:05:05:05:05

mac-name "MTU-6" 00:06:06:06:06:06

exit

epipe 3 name "Epipe 3" customer 1 create

description "pbb epipe number 3"

pbb

tunnel 101 backbone-dest-mac "MTU-4" isid 3

exit

sap 1/1/1:9 create

exit

no shutdown

exit
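The flood-avoidance mechanism depends on three values being identical: the backbone-dest-mac of the local PBB tunnel, the source-bmac of the remote node, and the MAC address of the remote virtual MEP. A consistency check over exported configuration data might look like the following Python sketch; the function name and the way the values are obtained are assumptions.

```python
def check_flood_avoidance(tunnel_dest_mac: str, remote_source_bmac: str, remote_mep_mac: str):
    """Return a list of problems that would defeat CCM-based B-MAC learning."""
    problems = []
    if tunnel_dest_mac.lower() != remote_source_bmac.lower():
        problems.append("backbone-dest-mac does not match the remote source-bmac")
    if remote_mep_mac.lower() != remote_source_bmac.lower():
        problems.append("remote MEP mac-address differs from the remote source-bmac")
    return problems
```

For Epipe 3 on MTU-6, all three values are 00:04:04:04:04:04, so the check returns an empty list.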

Once MEP4 has been configured, check that MTU-6 is receiving CC messages from MEP4 with the following command:

*A:MTU-6# show eth-cfm mep 6 domain 1 association 1 all-remote-mepids

=============================================================================

Eth-CFM Remote-Mep Table

=============================================================================

R-mepId AD Rx CC RxRdi Port-Tlv If-Tlv Peer Mac Addr CCM status since

-----------------------------------------------------------------------------

4 True False Absent Absent 00:04:04:04:04:04 01/05/2021 16:05:12

5 True False Absent Absent 00:05:05:05:05:05 01/05/2021 16:05:12

=============================================================================

Entries marked with a 'T' under the 'AD' column have been auto-discovered.

As a result of the CC messages coming from MEP4, the MTU-4 MAC is permanently learned in the B-VPLS 101 FDB on node MTU-6 and no flooding takes place. The following output shows that the flooding flag is not set.

*A:MTU-6# show service id 3 base

===============================================================================

Service Basic Information

===============================================================================

Service Id : 3 Vpn Id : 0

Service Type : Epipe

---snip---

-------------------------------------------------------------------------------

Service Access & Destination Points

-------------------------------------------------------------------------------

Identifier Type AdmMTU OprMTU Adm Opr

-------------------------------------------------------------------------------

sap:1/1/1:9 q-tag 1518 1518 Up Up

-------------------------------------------------------------------------------

PBB Tunnel Point

-------------------------------------------------------------------------------

B-vpls Backbone-dest-MAC Isid AdmMTU OperState Flood Oper-dest-MAC

-------------------------------------------------------------------------------

101 MTU-4 3 2000 Up No 00:04:04:04:04:04

-------------------------------------------------------------------------------

Last Status Change: 01/05/2021 16:03:16

Last Mgmt Change : 01/05/2021 16:03:16

===============================================================================
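When many Epipes have to be audited, the Flood column can be extracted from the captured output of `show service id <id> base`. The following Python sketch assumes the seven-column PBB Tunnel Point layout shown in this chapter and a MAC name without spaces; the function name is hypothetical.

```python
def pbb_tunnel_flooding(show_output: str) -> dict:
    """Map (b_vpls, isid) -> True when the Flood column of the tunnel row says 'Yes'."""
    result = {}
    in_section = False
    for line in show_output.splitlines():
        fields = line.split()
        if fields[:2] == ["B-vpls", "Backbone-dest-MAC"]:
            in_section = True  # header of the PBB Tunnel Point table
            continue
        if in_section and len(fields) == 7 and fields[0].isdigit():
            b_vpls, _dest, isid, _mtu, _oper, flood, _oper_mac = fields
            result[(int(b_vpls), int(isid))] = flood == "Yes"
    return result
```

Applied to the MTU-6 output above, the function reports `False` for B-VPLS 101 and ISID 3, matching the Flood value of No.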

Flooding case 4 — Remote node failure

If the node owner of the backbone-dest-mac fails or gets isolated, the node where the PBB Epipe is initiated will not detect the failure; that is, if MTU-4 fails, the Epipe 3 remote end will also fail but MTU-6 will not detect the failure and as a result of that, MTU-6 will flood the traffic to the network (flooding will occur after MTU-4 MAC is removed from the B-VPLS FDBs, due to either the B-VPLS flushing mechanisms or aging).

In order to avoid/reduce flooding in this case, the following mechanisms are recommended:

Provision virtual MEPs in the B-VPLS instances terminating PBB Epipes, as already explained. This will guarantee there is no unknown B-MAC unicast being flooded under normal operation.

CCM timers should be provisioned based on how long the service provider is willing to accept flooding.

*A:MTU-6# configure eth-cfm domain 1 association 1 ccm-interval

- ccm-interval <interval>

- no ccm-interval

<interval> : {10ms|100ms|1|10|60|600} - default 10 seconds

It is possible to provision discard-unknown in the B-VPLS, so that flooded traffic due to the destination MAC being unknown in the B-VPLS is discarded immediately. This can be configured on the PEs and the MTUs. On the MTUs, it is important to configure this in conjunction with the CC messages from the virtual MEPs to ensure that the remote B-MACs are learned in both directions. If, for any reason, the remote B-MACs are not in the MTU B-VPLS, no traffic will be forwarded at all on the PBB-Epipe.

# on all nodes:

configure service vpls 101 discard-unknown

As soon as the MTU node recovers, it will start sending CC messages and the backbone MAC address will be learned on the backbone nodes and MTU nodes again.

With the recommended configuration in place, if MTU-4 fails, the backbone-dest-mac configured on the PBB tunnel for Epipe 3 on MTU-6 will be removed from the B-VPLS 101 FDB on all the nodes (either by MAC flush mechanisms on the B-VPLS or by aging). From that point on, traffic originated from CE-9 will be discarded at MTU-6 and will not be flooded further.

As soon as MTU-4 comes back up, MEP4 will start sending CCM and as such the MTU-4 MAC will be learned throughout the B-VPLS 101 domain and in particular in PE-1, PE-3, and MTU-6 (CCM PDUs use a multicast address). From the moment MTU-4 MAC is known on the backbone nodes and MTU-6, the traffic will not be discarded any more, but forwarded to MTU-4.
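When choosing a CCM interval, it can help to translate each supported value into the time a MEP needs to notice that a remote MEP has gone silent. The Python sketch below assumes the standard 802.1ag behaviour of declaring a remote CCM defect after 3.5 intervals without a CCM; the function names are hypothetical, and the real duration of flooding or discarding also depends on MAC flush and aging, which are not modelled.

```python
# Supported ccm-interval values from the CLI help, converted to seconds.
CCM_INTERVALS_S = {"10ms": 0.01, "100ms": 0.1, "1": 1.0, "10": 10.0, "60": 60.0, "600": 600.0}
LOSS_MULTIPLIER = 3.5  # assumed 802.1ag remote-MEP timeout, in CCM intervals


def ccm_loss_detection_s(interval: str) -> float:
    """Seconds without CCMs before the remote MEP is declared lost."""
    return CCM_INTERVALS_S[interval] * LOSS_MULTIPLIER


def slowest_interval_within(budget_s: float):
    """Largest supported interval whose detection time fits the budget, or None."""
    fitting = [name for name, secs in CCM_INTERVALS_S.items()
               if secs * LOSS_MULTIPLIER <= budget_s]
    return max(fitting, key=CCM_INTERVALS_S.get) if fitting else None
```

With the default interval of 10 seconds, the detection time under this assumption is 35 seconds. An operator who wants detection within 5 seconds would need the 1-second interval.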

PBB-Epipe show commands

The following commands help to check the PBB Epipe configuration and its related parameters.

For the B-VPLS service:

*A:MTU-4# show service id 101 base

===============================================================================

Service Basic Information

===============================================================================

Service Id : 101 Vpn Id : 0

Service Type : b-VPLS

MACSec enabled : no

Name : B-VPLS 101

Description : (Not Specified)

Customer Id : 1 Creation Origin : manual

Last Status Change: 01/05/2021 16:00:57

Last Mgmt Change : 01/05/2021 16:08:54

Etree Mode : Disabled

Admin State : Up Oper State : Up

MTU : 2000

SAP Count : 0 SDP Bind Count : 2

Snd Flush on Fail : Disabled Host Conn Verify : Disabled

SHCV pol IPv4 : None

Propagate MacFlush: Disabled Per Svc Hashing : Disabled

Allow IP Intf Bind: Disabled

Fwd-IPv4-Mcast-To*: Disabled Fwd-IPv6-Mcast-To*: Disabled

Mcast IPv6 scope : mac-based

Temp Flood Time : Disabled Temp Flood : Inactive

Temp Flood Chg Cnt: 0

SPI load-balance : Disabled

TEID load-balance : Disabled

Src Tep IP : N/A

Vxlan ECMP : Disabled

MPLS ECMP : Disabled

VSD Domain : <none>

Oper Backbone Src : 00:04:04:04:04:04

Use SAP B-MAC : Disabled

i-Vpls Count : 0

Epipe Count : 1

Use ESI B-MAC : Disabled

-------------------------------------------------------------------------------

Service Access & Destination Points

-------------------------------------------------------------------------------

Identifier Type AdmMTU OprMTU Adm Opr

-------------------------------------------------------------------------------

sdp:41:101 S(192.0.2.1) Spok 8000 8000 Up Up

sdp:42:101 S(192.0.2.2) Spok 8000 8000 Up Up

===============================================================================

* indicates that the corresponding row element may have been truncated.

For the Epipe service:

*A:MTU-4# show service id 3 base

===============================================================================

Service Basic Information

===============================================================================

Service Id : 3 Vpn Id : 0

Service Type : Epipe

MACSec enabled : no

Name : Epipe 3

Description : pbb epipe number 3

Customer Id : 1 Creation Origin : manual

Last Status Change: 01/05/2021 16:03:03

Last Mgmt Change : 01/05/2021 16:03:03

Test Service : No

Admin State : Up Oper State : Up

MTU : 1514

Vc Switching : False

SAP Count : 1 SDP Bind Count : 0

Per Svc Hashing : Disabled

Vxlan Src Tep Ip : N/A

Force QTag Fwd : Disabled

Oper Group : <none>

-------------------------------------------------------------------------------

Service Access & Destination Points

-------------------------------------------------------------------------------

Identifier Type AdmMTU OprMTU Adm Opr

-------------------------------------------------------------------------------

sap:1/1/1:7 q-tag 1518 1518 Up Up

-------------------------------------------------------------------------------

PBB Tunnel Point

-------------------------------------------------------------------------------

B-vpls Backbone-dest-MAC Isid AdmMTU OperState Flood Oper-dest-MAC

-------------------------------------------------------------------------------

101 MTU-6 3 2000 Up No 00:06:06:06:06:06

-------------------------------------------------------------------------------

Last Status Change: 01/05/2021 16:03:03

Last Mgmt Change : 01/05/2021 16:03:03

===============================================================================

The following command shows all the Epipe instances multiplexed into a particular B-VPLS and their status.

*A:MTU-4# show service id 101 epipe

===============================================================================

Related Epipe services for b-Vpls service 101

===============================================================================

Epipe SvcId Oper ISID Admin Oper

-------------------------------------------------------------------------------

3 3 Up Up

-------------------------------------------------------------------------------

Number of Entries : 1

-------------------------------------------------------------------------------

===============================================================================

To check the virtual MEP information, the following command shows the local virtual MEPs configured on the node:

*A:MTU-4# show eth-cfm cfm-stack-table all-virtuals

===============================================================================

CFM Stack Table Defect Legend:

R = Rdi, M = MacStatus, C = RemoteCCM, E = ErrorCCM, X = XconCCM

A = AisRx, L = CSF LOS Rx, F = CSF AIS/FDI rx, r = CSF RDI rx

G = receiving grace PDU (MCC-ED or VSM) from at least one peer

===============================================================================

CFM Virtual Stack Table

===============================================================================

Service Lvl Dir Md-index Ma-index MepId Mac-address Defect G

-------------------------------------------------------------------------------

101 3 U 1 1 4 00:04:04:04:04:04 ------- -

===============================================================================

The following command shows all the information related to the remote MEPs configured in the association, that is, the remote virtual MEPs configured in MTU-5 and MTU-6:

*A:MTU-4# show eth-cfm mep 4 domain 1 association 1 all-remote-mepids

=============================================================================

Eth-CFM Remote-Mep Table

=============================================================================

R-mepId AD Rx CC RxRdi Port-Tlv If-Tlv Peer Mac Addr CCM status since

-----------------------------------------------------------------------------

5 True False Absent Absent 00:05:05:05:05:05 01/05/2021 16:04:56

6 True False Absent Absent 00:06:06:06:06:06 01/05/2021 16:04:56

=============================================================================

Entries marked with a 'T' under the 'AD' column have been auto-discovered.

The following command shows the detailed information and status of the local virtual MEP configured in MTU-4:

*A:MTU-4# show eth-cfm mep 4 domain 1 association 1

===============================================================================

Eth-Cfm MEP Configuration Information

===============================================================================

Md-index : 1 Direction : Up

Ma-index : 1 Admin : Enabled

MepId : 4 CCM-Enable : Enabled

SvcId : 101

Description : (Not Specified)

FngAlarmTime : 0 FngResetTime : 0

FngState : fngReset ControlMep : False

LowestDefectPri : macRemErrXcon HighestDefect : none

Defect Flags : None

Mac Address : 00:04:04:04:04:04 Collect LMM Stats : disabled

LMM FC Stats : None

LMM FC In Prof : None

TxAis : noTransmit TxGrace : noTransmit

Facility Fault : disabled

CcmLtmPriority : 7 CcmPaddingSize : 0 octets

CcmTx : 47 CcmSequenceErr : 0

CcmTxIfStatus : Absent CcmTxPortStatus : Absent

CcmTxRdi : False CcmTxCcmStatus : transmit

CcmIgnoreTLVs : (Not Specified)

Fault Propagation: disabled FacilityFault : n/a

MA-CcmInterval : 10 MA-CcmHoldTime : 0ms

MA-Primary-Vid : Disabled

Eth-1Dm Threshold: 3(sec) MD-Level : 3

Eth-1Dm Last Dest: 00:00:00:00:00:00

Eth-Dmm Last Dest: 00:00:00:00:00:00

Eth-Ais : Disabled

Eth-Ais Tx defCCM: allDef

Eth-Tst : Disabled

Eth-CSF : Disabled

Eth-Cfm Grace Tx : Enabled Eth-Cfm Grace Rx : Enabled

Eth-Cfm ED Tx : Disabled Eth-Cfm ED Rx : Enabled

Eth-Cfm ED Rx Max: 0

Eth-Cfm ED Tx Pri: CcmLtmPri (7)

Eth-BNM Receive : Disabled Eth-BNM Rx Pacing : 5

Redundancy:

MC-LAG State : n/a

CcmLastFailure Frame:

None

XconCcmFailure Frame:

None

===============================================================================

When there is a failure on a remote Epipe node, as described, the source node keeps sending traffic. The 802.1ag/Y.1731 virtual MEP configured can help to detect and troubleshoot the problem. For instance, when a failure happens in MTU-6 (node goes down or the B-VPLS instance is disabled), the virtual MEP show commands will show the following information:

# on MTU-6:

configure

service

vpls 101

shutdown

*A:MTU-4# show eth-cfm mep 4 domain 1 association 1

===============================================================================

Eth-Cfm MEP Configuration Information

===============================================================================

Md-index : 1 Direction : Up

Ma-index : 1 Admin : Enabled

MepId : 4 CCM-Enable : Enabled

SvcId : 101

Description : (Not Specified)

FngAlarmTime : 0 FngResetTime : 0

FngState : fngDefectReported ControlMep : False

LowestDefectPri : macRemErrXcon HighestDefect : defRemoteCCM

Defect Flags : bDefRDICCM bDefRemoteCCM

Mac Address : 00:04:04:04:04:04 Collect LMM Stats : disabled

LMM FC Stats : None

LMM FC In Prof : None

TxAis : noTransmit TxGrace : noTransmit

Facility Fault : disabled

CcmLtmPriority : 7 CcmPaddingSize : 0 octets

CcmTx : 70 CcmSequenceErr : 0

CcmTxIfStatus : Absent CcmTxPortStatus : Absent

CcmTxRdi : True CcmTxCcmStatus : transmit

CcmIgnoreTLVs : (Not Specified)

Fault Propagation: disabled FacilityFault : n/a

MA-CcmInterval : 10 MA-CcmHoldTime : 0ms

MA-Primary-Vid : Disabled

Eth-1Dm Threshold: 3(sec) MD-Level : 3

Eth-1Dm Last Dest: 00:00:00:00:00:00

Eth-Dmm Last Dest: 00:00:00:00:00:00

Eth-Ais : Disabled

Eth-Ais Tx defCCM: allDef

Eth-Tst : Disabled

Eth-CSF : Disabled

Eth-Cfm Grace Tx : Enabled Eth-Cfm Grace Rx : Enabled

Eth-Cfm ED Tx : Disabled Eth-Cfm ED Rx : Enabled

Eth-Cfm ED Rx Max: 0

Eth-Cfm ED Tx Pri: CcmLtmPri (7)

Eth-BNM Receive : Disabled Eth-BNM Rx Pacing : 5

Redundancy:

MC-LAG State : n/a

CcmLastFailure Frame:

None

XconCcmFailure Frame:

None

===============================================================================

The bDefRemoteCCM defect flag clearly shows that there is a remote MEP in the association which has stopped sending CCMs. In order to find out which node is affected, see the following output:

*A:MTU-4# show eth-cfm mep 4 domain 1 association 1 all-remote-mepids

=============================================================================

Eth-CFM Remote-Mep Table

=============================================================================

R-mepId AD Rx CC RxRdi Port-Tlv If-Tlv Peer Mac Addr CCM status since

-----------------------------------------------------------------------------

5 True True Absent Absent 00:05:05:05:05:05 01/05/2021 16:04:56

6 False False Absent Absent 00:00:00:00:00:00 01/05/2021 16:14:28

=============================================================================

Entries marked with a 'T' under the 'AD' column have been auto-discovered.

CCMs are no longer received from virtual MEP 6 (the one defined in MTU-6) since 01/05/2021 16:14:28. This conveys which node has failed and when it failed.
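The same conclusion can be drawn automatically from captured output. The following Python sketch scans the remote-MEP table for rows whose Rx CC value is False. It assumes the column layout shown above, with the AD column either empty or holding a single T; the function name is hypothetical.

```python
def failed_remote_meps(table_text: str):
    """Return [(mep_id, 'date time')] for remote MEPs that no longer send CCMs."""
    failed = []
    for line in table_text.splitlines():
        fields = line.split()
        if len(fields) == 9 and fields[1] == "T":
            del fields[1]  # drop the auto-discovery marker so columns line up
        if len(fields) == 8 and fields[0].isdigit():
            mep_id, rx_cc, _rdi, _port_tlv, _if_tlv, _mac, date, time = fields
            if rx_cc == "False":
                failed.append((int(mep_id), f"{date} {time}"))
    return failed
```

For the output above, the function returns MEP 6 together with the timestamp 01/05/2021 16:14:28, which identifies MTU-6 as the failed node.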

Conclusion

Point-to-point Ethernet services can use the same operational model followed by PBB VPLS for multipoint services. In other words, Epipes can be linked to the same B-VPLS domain being used by I-VPLS instances and use the existing H-VPLS network infrastructure in the core. The use of PBB Epipes dramatically reduces the number of services and pseudowires in the core and therefore allows the service provider to scale the number of E-Line services in the network.

The example used in this chapter shows the configuration of the PBB Epipes as well as all the related features which are required for this environment. Show commands have also been suggested so that the operator can verify and troubleshoot the service.