OpenStack ML2 plugin

EDA Cloud Connect integrates with OpenStack to provide fabric-level application networks for OpenStack virtual machines. The EDA Cloud Connect integration leverages the OpenStack Neutron architecture to support managing the fabric directly from OpenStack and allows the fabric to dynamically respond to the networking needs of the application.

It provides the following advantages and capabilities:

- Direct integration into the network management workflow of OpenStack.

- Use of the common ML2 plugins used by enterprise applications and VNFs, such as OVS, OVS-DPDK, and SR-IOV.

- Automatic provisioning of the fabric based on where the virtual machines need connectivity.

- Support for advanced workflows through EDA, including VNF use cases with features like QoS, ACLs, and BGP PE-CE.

- Interconnectivity between different cloud environments, allowing for flexible network configurations.

Supported versions

Currently, the OpenStack plugin is only supported with the Nokia CBIS 24 OpenStack distribution.

Architecture

The OpenStack plugin deploys several components into the OpenStack environment to allow the management of the SR Linux-based fabric through OpenStack. The following sections give an overview of these components.

Connect ML2 plugin

The Connect ML2 plugin is the heart of the integration between OpenStack and EDA. This plugin integrates with OpenStack Neutron and reacts to the creation of networks, network segments, and VM ports.

When a network segment is created in OpenStack Neutron, a matching BridgeDomain is created in EDA.

When a VM port is created inside a Neutron subnet and the VM is started on an OpenStack compute node, the ML2 plugin learns on which compute node the VM is deployed and, using the internal topology, ensures that the necessary ConnectInterfaces are configured in EDA.

This topology is learned from the L2 Agent extension, which stores the mapping of Neutron networks to physical interfaces in the Neutron database. This information is then provided to the Connect service, which, together with the LLDP information of the fabric, determines which downlinks in the fabric need to be configured.
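As an illustration of the mapping just described, the following Python sketch shows how fabric downlinks could be derived for a VM port: only VLAN segments are offloaded, and the host NICs that carry the segment's physnet on the VM's compute node (the NIC-mapping topology) are joined with the LLDP neighbor table of the fabric. This is a minimal sketch under stated assumptions, not the plugin's implementation; the `Segment` type, the `nic_mapping` and `lldp_neighbors` dictionaries, and the function names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Segment:
    """Hypothetical view of a Neutron network segment."""
    network_type: str              # for example "vlan", "vxlan", "flat"
    physnet: Optional[str]
    segmentation_id: Optional[int]


def needs_bridge_domain(segment: Segment) -> bool:
    # Only VLAN segments are offloaded to the fabric and get a BridgeDomain.
    return segment.network_type == "vlan"


def downlinks_for_port(
    host: str,
    segment: Segment,
    nic_mapping: Dict[Tuple[str, str], List[str]],
    lldp_neighbors: Dict[Tuple[str, str], Tuple[str, str]],
) -> List[Tuple[str, str, int]]:
    """Return sorted (switch, switch_interface, vlan_id) downlinks to configure.

    nic_mapping:    (physnet, host) -> host NICs carrying that physnet (hypothetical)
    lldp_neighbors: (host, host_nic) -> (switch, switch_interface) seen via LLDP
    """
    if not needs_bridge_domain(segment):
        return []
    downlinks = []
    for nic in nic_mapping.get((segment.physnet, host), []):
        neighbor = lldp_neighbors.get((host, nic))
        if neighbor is None:
            continue  # NIC not seen by the fabric: nothing to configure
        switch, switch_interface = neighbor
        downlinks.append((switch, switch_interface, segment.segmentation_id))
    return sorted(downlinks)
```

For example, if physnet1 is carried by two NICs on compute-1 and LLDP reports those NICs on two different leaf switches, a port on a VLAN 100 segment yields one downlink per leaf, while a port on a VXLAN segment yields none.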

Connect L2 Agent Extensions

The Connect L2 Agent Extensions extend the existing L2 Agent that is present on every OpenStack compute node. These extensions are responsible for mapping the relationship between the physical NICs and the different networking constructs set up for the Neutron networks.

Deployment

This section describes how to prepare for the deployment of the OpenStack plugin and how to prepare and install the CBIS extension.

CBIS extension preparation

This section describes how to prepare for the installation of the CBIS extension for the EDA ML2 plugin.

The EDA ML2 plugin creates a ConnectPlugin within the Connect service using the name of the CBIS cloud deployment, as provisioned at installation time. Before the CBIS deployment, ensure that no ConnectPlugin with that name exists.
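A pre-deployment check for a name clash could look like the following Python sketch. It assumes you have exported the existing ConnectPlugin resources as a standard Kubernetes List in JSON (for example, with kubectl get ... -o json); the exact resource name to pass to kubectl is not given in this document and is left to you. The function name is hypothetical.

```python
import json


def connect_plugin_name_in_use(list_json: str, cloud_name: str) -> bool:
    """Return True if a ConnectPlugin named cloud_name already exists.

    list_json is assumed to be a Kubernetes List object in JSON form:
    {"items": [{"metadata": {"name": ...}}, ...]}
    """
    items = json.loads(list_json).get("items", [])
    names = {item.get("metadata", {}).get("name") for item in items}
    return cloud_name in names
```

If the function returns True for the cloud name you plan to use, choose a different name before starting the CBIS deployment.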

Creating a service account and token

Creating a service account

The EDA Connect OpenStack plugin uses a ServiceAccount resource in the EDA Kubernetes cluster to create the necessary resources in the EDA cluster for the integration to work properly.

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: openstack-plugin
  namespace: eda-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: openstack-plugin
subjects:
  - kind: ServiceAccount
    name: openstack-plugin
    namespace: eda-system
roleRef:
  kind: ClusterRole
  # This cluster role is assumed to be already installed by the Connect app.
  name: eda-connect-plugin-cluster-role
  apiGroup: rbac.authorization.k8s.io

This service account must be created in the eda-system namespace.

Creating a service account token

The plugin uses a service account token to connect to the EDA Kubernetes cluster. Create the token by applying the following manifest on the EDA Kubernetes cluster.

---
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: openstack-plugin
  namespace: eda-system
  annotations:
    kubernetes.io/service-account.name: openstack-plugin

Retrieve the token and the CA certificate from the Secret in the eda-system namespace using the following commands.

kubectl get secrets/openstack-plugin -n eda-system --template='{{.data.token}}' | base64 --decode
kubectl get secrets/openstack-plugin -n eda-system --template='{{index .data "ca.crt"}}' | base64 --decode

When using the OpenStack plugin in production, create one service account per plugin. This way, tokens can be revoked on a per-plugin basis.
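If you prefer to extract both values in one step, the following Python sketch decodes them from the JSON form of the Secret (kubectl get secret openstack-plugin -n eda-system -o json). It relies on standard Kubernetes behavior: Secret values are base64-encoded, and the token controller populates service-account-token Secrets with the token, ca.crt, and namespace keys. The function name is hypothetical.

```python
import base64
import json
from typing import Tuple


def extract_token_and_ca(secret_json: str) -> Tuple[str, str]:
    """Return (token, ca_cert) decoded from a service-account-token Secret.

    secret_json is the output of:
      kubectl get secret openstack-plugin -n eda-system -o json
    """
    data = json.loads(secret_json)["data"]
    # Secret values are base64-encoded; the CA certificate is stored under "ca.crt".
    token = base64.b64decode(data["token"]).decode("utf-8")
    ca_cert = base64.b64decode(data["ca.crt"]).decode("utf-8")
    return token, ca_cert
```

The decoded token is the value for the API Bearer Token field, and the decoded certificate (PEM text) is the value for the EDA Server CA Certificate field in the CBIS Manager UI.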

Deploying the CBIS extension

The following procedure is used to install the CBIS extension.

-

Extract the tarball.

Untar the integration tarball and put the extracted content under /root/cbis-extensions inside the CBIS Manager VM.

This creates the /root/cbis-extensions/eda_connect folder.

-

Refresh the CBIS manager UI.

The CBIS Manager should now detect /root/cbis-extensions/eda_connect as an SDN extension.

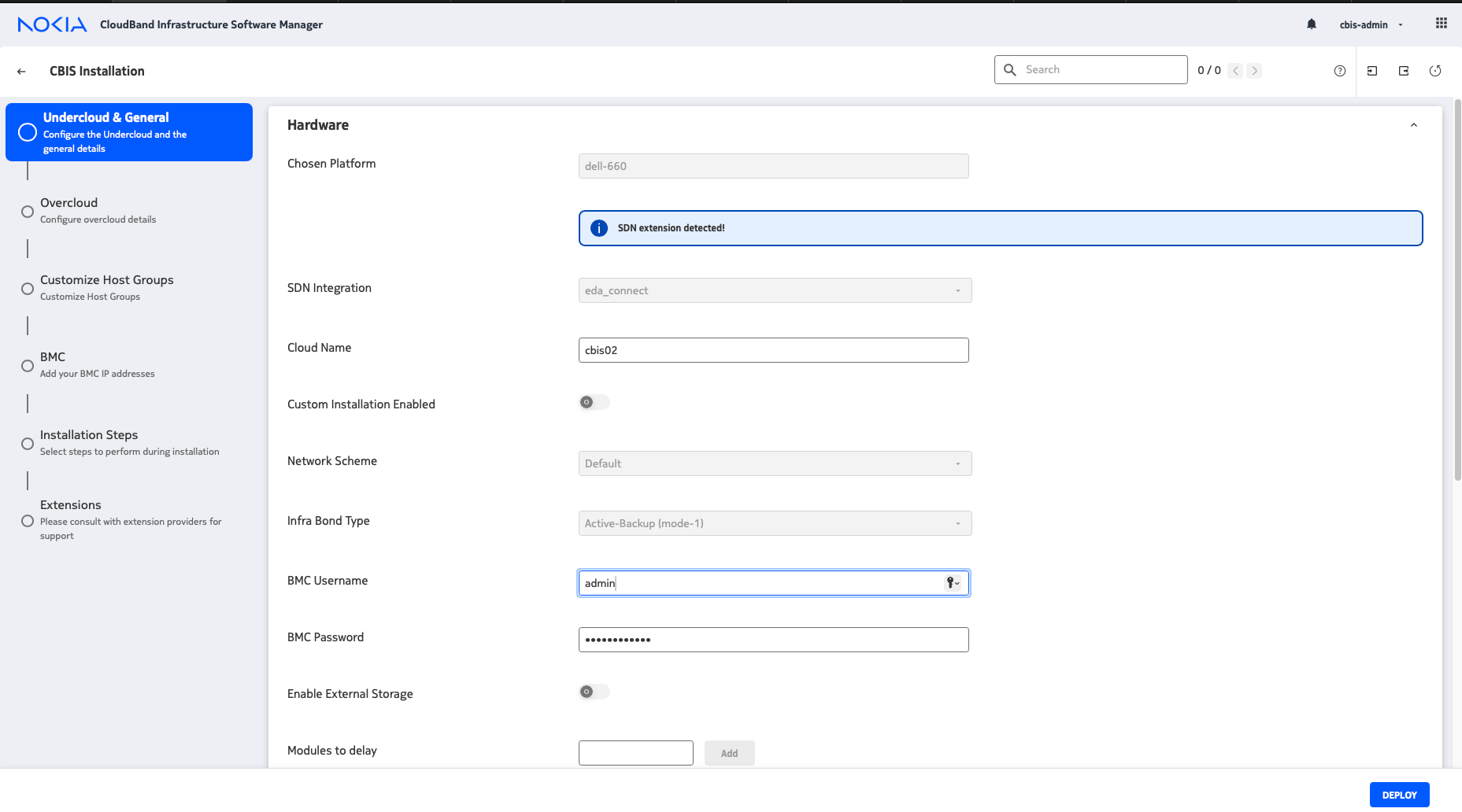

Figure 2. Detection of SDN extension by CBIS Manager

-

Add the extension configuration in CBIS manager UI.

Choose a unique name for each cloud. If there is another cloud name registered to the same EDA environment, the CBIS deployment will fail before undercloud deployment. An error message indicates that a ConnectPlugin exists with the same name.

-

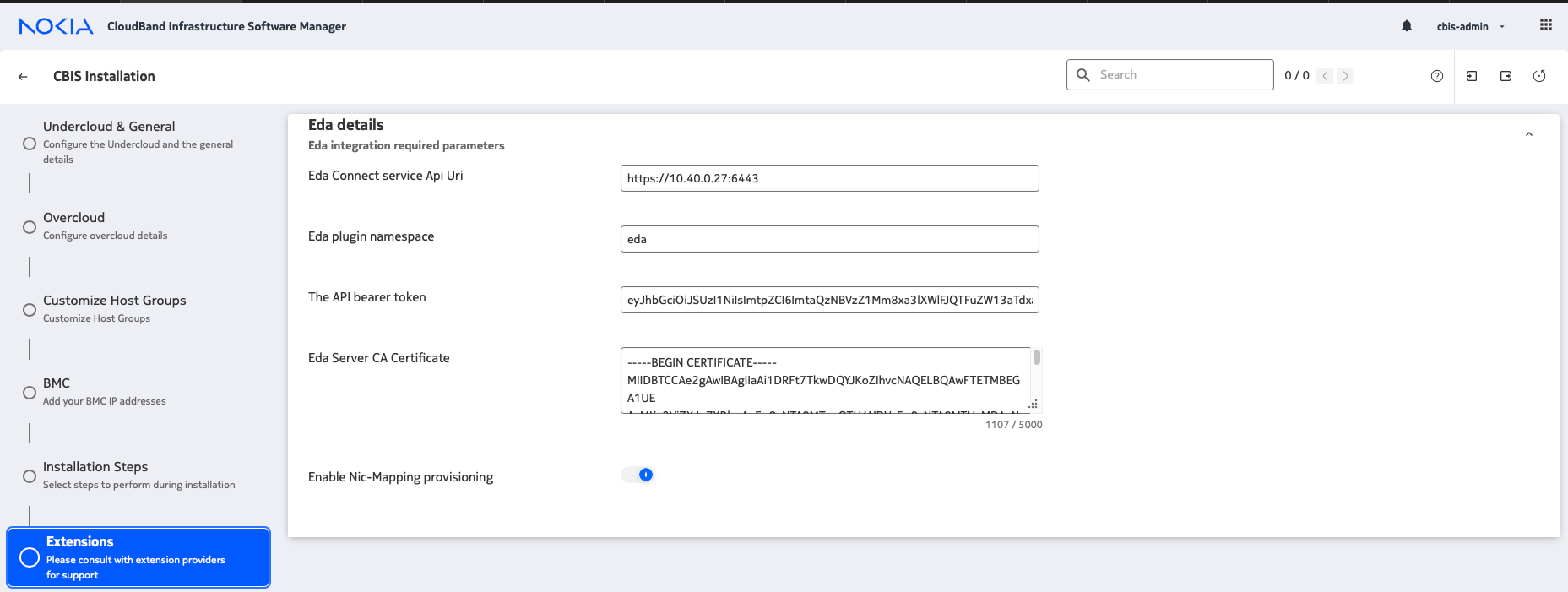

Enter the EDA environment details.

Figure 3. EDA environment details

The Connect OpenStack plugin communicates with EDA using the Kubernetes API.

- EDA Connect service API URI

URI of the Kubernetes API hosting EDA. This can be found in the kubeconfig file for your cluster.

- EDA plugin namespace

The namespace in which the nodes for this cloud are deployed.

- API Bearer Token

The bearer token needed to communicate with the Kubernetes API. This can be extracted from the service account token created in a previous step.

- EDA Server CA Certificate

The self-signed CA certificate of the Kubernetes API. This can be extracted from the service account token created in a previous step.

- Nic-Mapping provisioning

Allows manual creation of NIC-mapping entries.

- EDA Connect service API URI
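Before entering these values, you might verify that the API URI, bearer token, and CA certificate fit together. The following Python sketch (hypothetical helper names, standard library only) sends an authenticated GET to the Kubernetes /version endpoint and verifies the server certificate against the CA file. A 200 response indicates that TLS verification and token authentication succeed; it does not check the RBAC permissions granted by eda-connect-plugin-cluster-role.

```python
import ssl
import urllib.request


def build_version_request(api_uri: str, token: str) -> urllib.request.Request:
    """GET request for the Kubernetes /version endpoint, carrying the bearer token."""
    url = api_uri.rstrip("/") + "/version"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def check_api(api_uri: str, token: str, ca_file: str, timeout: float = 5.0) -> int:
    """Return the HTTP status of an authenticated /version call.

    The server certificate is verified against ca_file. urlopen raises
    urllib.error.HTTPError for error statuses (for example 401 when the token
    is rejected) and urllib.error.URLError when the connection or TLS
    verification fails.
    """
    context = ssl.create_default_context(cafile=ca_file)
    request = build_version_request(api_uri, token)
    with urllib.request.urlopen(request, context=context, timeout=timeout) as response:
        return response.status
```

Save the decoded CA certificate to a local PEM file first and pass its path as ca_file.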

Updating the bearer token

Use the following procedure to update the bearer token after installation.

1. Update the plugin .ini file with the new token.

The configuration file can be found at /etc/neutron/plugins/ml2/ml2_conf_eda_connect.ini.

[ml2_eda_connect]
# Api host of the Connect service
#api_host = None
# Api bearer authentication token
api_token = <new api token>
# CA certificate file
ca_cert_path = /opt/stack/data/eda.pem
# Verify SSL
#verify_ssl = True
# Used as an identifier of the Connect plugin
#plugin_name = openstack
# Plugin heartbeat interval min=3, max=60 to Connect service
# in seconds.
#heartbeat_interval = 10

If you also need to update the certificate information for connecting to the EDA Kubernetes API, replace the file referred to in ca_cert_path.

2. Restart Neutron.
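Updating the token in the configuration file can also be scripted. The following Python sketch rewrites the api_token line in the [ml2_eda_connect] section line by line, so that the comments in the file are preserved (Python's configparser discards comments when it writes a file back). The function name is hypothetical; you still need to write the result back to /etc/neutron/plugins/ml2/ml2_conf_eda_connect.ini and restart Neutron afterwards.

```python
import re

SECTION_RE = re.compile(r"^\s*\[([^\]]+)\]\s*$")
TOKEN_RE = re.compile(r"^\s*api_token\s*=")  # commented-out "#api_token" does not match


def set_api_token(ini_text: str, new_token: str, section: str = "ml2_eda_connect") -> str:
    """Return ini_text with api_token in [section] set to new_token."""
    out = []
    in_section = seen_section = replaced = False
    for line in ini_text.splitlines():
        match = SECTION_RE.match(line)
        if match:
            if in_section and not replaced:
                # Leaving the target section without a token line: add one.
                out.append(f"api_token = {new_token}")
                replaced = True
            in_section = match.group(1).strip() == section
            seen_section = seen_section or in_section
        elif in_section and TOKEN_RE.match(line):
            line = f"api_token = {new_token}"
            replaced = True
        out.append(line)
    if not seen_section:
        raise ValueError(f"section [{section}] not found")
    if not replaced:
        # Target section was the last one and had no token line.
        out.append(f"api_token = {new_token}")
    return "\n".join(out) + "\n"
```

A typical use is to read the file, pass its contents and the new token to set_api_token, write the returned text back, and then restart Neutron.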

Neutron ML2 plugin configurations

The EDA Connect OpenStack plugin and the wider Neutron ML2 framework support a range of configurations that fine-tune their behavior.

Virtualization and network types

Network types:

- VLAN

The plugin orchestrates on VLAN Neutron networks, programming the EDA Cloud Connect service for fabric-offloaded forwarding.

- VXLAN and GRE

The plugin does not orchestrate on VXLAN or GRE Neutron networks, but is designed to tolerate other Neutron ML2 mechanism drivers.

When using another ML2 mechanism driver to provision these networks, create the relevant VNET or BridgeDomain and VLAN resources in EDA, because the plugin does not create them automatically. Typically, these networks use the untagged VLAN to communicate between the nodes.

Virtualization types:

- VIRTIO

- SRIOV

- DPDK

Networking models

The ML2 plugin supports the following networking models:

- OpenStack managed networking

- EDA managed networking

Forwarding capabilities

- L2 forwarding: fabric-offloaded

- L3 forwarding:
  - Using the EDA managed networking model: fabric-offloaded
  - Using the OpenStack managed networking model: virtualized routing on the OpenStack controller nodes

Bonding

- VIRTIO and SRIOV (Linux bonds)

- DPDK (OVS bonds)

VIRTIO and SRIOV (Linux bonds)

Active/Backup for VIRTIO and SRIOV ports is supported.

For Active/Backup, do not configure a LAG in the corresponding Interfaces in EDA.

Active/Active for VIRTIO and SRIOV ports is supported by the ML2 plugin, but not by CBIS.

DPDK (OVS bonds)

LACP-based Active/Active is supported.

For Active/Active, configure a LAG in the corresponding Interfaces in EDA, with the matching LACP configuration.

Active/Standby is not supported by the ML2 plugin for DPDK ports.

Because both the infra bonds and the tenant bonds on CBIS SRIOV compute nodes are managed by the SRIOV agent, the fabric must be wired accordingly, with all NICs wired into the fabric.

If required, a simplified setup can be created, in which only the tenant bond NICs are wired into the fabric. The following table shows an example of this setup.

| Port name | Number of VFs on port | Enable trust on port | Port to Physnet Mapping | Allow untagged traffic in VGT | Allow infra VLANs in VGT |
|---|---|---|---|---|---|
| nic_1_port_1 | 45 | false | none | false | false |
| nic_1_port_2 | 45 | false | none | false | false |
| nic_2_port_1 | 45 | false | physnet1 | false | false |
| nic_2_port_2 | 45 | false | physnet2 | false | false |

Trunking

The network trunk service allows multiple networks to be connected to an instance using a single virtual NIC (vNIC). Multiple networks can be presented to an instance by connecting it to a single port.

For details about the configuration and operation of the network trunk service, see the OpenStack Neutron Documentation.

Trunking is supported for VIRTIO, DPDK, and SRIOV.

- For vnic_type=normal ports (VIRTIO/DPDK), trunking is supported through an upstream openvswitch trunk driver.

- For vnic_type=direct ports (SRIOV), trunking is supported by the EDA Connect ML2 plugin trunk driver.

Using trunks with SRIOV has some limitations compared with the upstream OVS trunk model:

- Both the parent port of the trunk and all subports must be created with vnic_type=direct.

- To avoid the need for QinQ on switches, trunks for SRIOV instances must be created with a parent port belonging to a flat (untagged) network.

- If multiple projects within a deployment must be able to use trunks, this flat Neutron network must be created as shared (using the --share attribute).

- When adding subports to a trunk, their segmentation-type must be specified as VLAN and their segmentation-id must be equal to the segmentation-id of the Neutron network that the subport belongs to.
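The constraints above can be expressed as a pre-flight check. The following Python sketch validates a planned SRIOV trunk against them; the Network, Subport, and Trunk classes are hypothetical stand-ins for the corresponding Neutron attributes (port vnic_type, network type, shared flag, and subport segmentation fields), not an OpenStack API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Network:
    network_type: str                      # for example "flat" or "vlan"
    segmentation_id: Optional[int] = None
    shared: bool = False


@dataclass
class Subport:
    vnic_type: str
    network: Network
    segmentation_type: str
    segmentation_id: int


@dataclass
class Trunk:
    parent_vnic_type: str
    parent_network: Network
    subports: List[Subport] = field(default_factory=list)


def sriov_trunk_problems(trunk: Trunk, multi_project: bool = False) -> List[str]:
    """Return a list of violated SRIOV trunk constraints (empty if none)."""
    problems = []
    if trunk.parent_vnic_type != "direct":
        problems.append("parent port must use vnic_type=direct")
    if trunk.parent_network.network_type != "flat":
        problems.append("parent port must be on a flat (untagged) network")
    if multi_project and not trunk.parent_network.shared:
        problems.append("parent network must be shared (--share) for multi-project use")
    for i, sub in enumerate(trunk.subports):
        if sub.vnic_type != "direct":
            problems.append(f"subport {i} must use vnic_type=direct")
        if sub.segmentation_type != "vlan":
            problems.append(f"subport {i} must use segmentation-type vlan")
        if sub.segmentation_id != sub.network.segmentation_id:
            problems.append(f"subport {i} segmentation-id must equal its network's")
    return problems
```

For example, a trunk whose parent port sits on a VLAN network, or whose subport uses segmentation-id 200 on a network with segmentation ID 100, is reported with one problem per violated rule.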

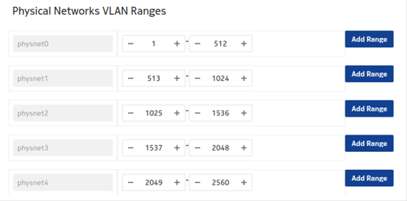

Network VLAN segmentation and segregation

The ML2 plugin only acts on VLAN Neutron networks. To use VLAN networking, configure provider_network_type = vlan.

CBIS applies an interconnected bridge model on the OpenStack controller nodes to support multi-physnet host interfaces and to use physical NICs more economically. As a result, when VLAN networks with overlapping segmentation IDs across physnets are used, ensure that no overlapping segmentation IDs are wired on the same host interface. Such a configuration is not supported by the SR Linux fabric (or any other fabric).

A typical case is when DHCP is enabled on subnets created on networks with overlapping segmentation IDs: the Neutron DHCP agent on the OpenStack controller nodes then effectively ends up in this conflicting state. This can be avoided by disabling DHCP on those subnets, that is, by defining the subnet with enable_dhcp = false. The conflict occurs whenever DHCP is enabled on the subnets, even if no VNF deployed in the related networks actually consumes DHCP.
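Because the interconnected bridge model can place networks from different physnets on the same host interface, the check has to be done per host interface rather than per physnet. The following Python sketch finds such conflicts in a hypothetical list of (host, interface, network, segmentation ID) tuples; how you collect that wiring data, for example from the NIC mappings, is an assumption left open here.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def overlapping_vlans(
    wiring: Iterable[Tuple[str, str, str, int]],
) -> Dict[Tuple[str, str, int], List[str]]:
    """Report segmentation IDs carried by more than one network on one host interface.

    wiring: (host, interface, network, segmentation_id) tuples, regardless of physnet.
    Returns {(host, interface, segmentation_id): sorted network names}.
    """
    networks_per_vlan = defaultdict(set)
    for host, interface, network, seg_id in wiring:
        networks_per_vlan[(host, interface, seg_id)].add(network)
    return {key: sorted(nets) for key, nets in networks_per_vlan.items() if len(nets) > 1}
```

An empty result means that no host interface carries the same segmentation ID for two different networks.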

Usage and managed networks

The Connect-based OpenStack solution is fully compatible with OpenStack. Connect also supports networking managed by EDA itself.

OpenStack managed networks

The Connect-based OpenStack solution is fully compatible with OpenStack. The standard API commands for creating networks and subnets remain valid.

When mapping to Connect:

- The network in OpenStack is created within the user's project. If the network is created by an administrator, the administrator can optionally select the project to which the network belongs. For each network segment, a BridgeDomain resource is created.

- Only VLAN-based networks (where segmentation_type == vlan) are handled by the EDA ML2 plugin, meaning only VLAN networks are HW-offloaded to the fabric managed by EDA. Neutron defines the default segmentation type for tenant networks. If you want non-administrators to create their own networks that are offloaded to the EDA fabric, the segmentation type must be set to vlan. However, if only administrators need this capability, the segmentation type can be left at its default, because administrators can specify the segmentation type as part of network creation.

- The plugin only supports Layer 2 EDA fabric-offloaded networking. When a Layer 3 model is defined in OpenStack (using routers and router attachments), this model works, but Layer 3 forwarding is software-based according to the native OpenStack implementation. Layer 3-associated features, like floating IPs, also work, but these are also software-based according to the native OpenStack implementation. This has been tested with the traditional non-Distributed Virtual Routing (DVR) implementation only. DVR has not been tested and might not work.

- MTU provisioning is not supported.

EDA managed networks

In addition to the OpenStack managed networking model, Connect supports networking managed by EDA itself. In this case, VNET or BridgeDomain resources are first created directly in EDA and then consumed in OpenStack.

The eda_bridge_domain extension supports EDA managed networking. It refers to the name of the BridgeDomain that you want to link the network to.

1. Using the EDA REST API, the Kubernetes interface, or the UI, create a VNET with a BridgeDomain, or a standalone BridgeDomain.

2. Obtain the name of the resources.

The Connect OpenStack ML2 plugin can only see resources in its own namespace; cross-namespace referencing is not supported.

3. Create the OpenStack resources.

The following is an example workflow.

- In OpenStack, create the consuming network and link it to the pre-created entity:

openstack network create --eda-bridge-domain xyz os-network-1

Since BridgeDomains are actually mapped at the network segment level, you can also specify --eda-bridge-domain when creating a network segment:

openstack network segment create --network os-network-1 --network-type vlan --eda-bridge-domain xyz os-network-segment-1

- Create a subnet within this network using the standard API syntax. For example:

openstack subnet create --network os-network-1 --subnet-range 10.10.1.0/24 os-subnet-1

- Create a Neutron port and a Nova server within this network and subnet, also using the standard APIs.

The OpenStack ML2 plugin handles the creation of VLAN and ConnectInterface resources as in the OpenStack managed use case. However, the BridgeDomain is fully owned by the operator. Possible use cases for this are fabric-based L3 or BGP peering.

Edge topology introspection

Automated Edge Topology Introspection

When the NIC-mapping agent extension is enabled, it persists the relation between each physnet and the corresponding (compute node, interface) pairs in the Neutron database, so that Neutron ports can be wired properly in the fabric.

The known interface mappings can be consulted using the openstack eda interface mapping list CLI command.

Automated LLDP Provisioning

When deploying using the CBIS integration, LLDP introspection is automatically enabled for all computes.

Audits with HA OpenStack deployments

On highly available (HA) OpenStack deployments, when multiple audit requests are created concurrently, they may be processed concurrently by different Neutron instances. This can lead to multiple processing instances competing to correct the same discrepancy, yielding unpredictable results. Before creating a new Audit request, Nokia recommends ensuring that no Audits in Connect are in the `InProgress` state.
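The recommended check can be wrapped in a small polling helper, sketched below in Python. The list_audit_states callable is a hypothetical placeholder for however you read the state of the Audit resources in Connect; only the `InProgress` state name comes from this document. Checking and then creating is not atomic, so this reduces the chance of concurrent audits rather than eliminating it.

```python
import time
from typing import Callable, Iterable


def wait_for_no_audit_in_progress(
    list_audit_states: Callable[[], Iterable[str]],
    timeout: float = 300.0,
    poll_interval: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll until no Audit reports the InProgress state.

    Returns True when it looks safe to create a new Audit, False on timeout.
    sleep and clock are injectable so the helper can be exercised without waiting.
    """
    deadline = clock() + timeout
    while True:
        if "InProgress" not in set(list_audit_states()):
            return True
        if clock() >= deadline:
            return False
        sleep(poll_interval)
```

Create the new Audit request only when the helper returns True.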