ML2 configurations

The Fabric Services System ML2 plugin supports a range of configurations that fine-tune its behavior.

Virtualization and network types

The Fabric Services System ML2 plugin supports the following network segmentation types:

- VLAN: the plugin orchestrates VLAN Neutron networks, programming the Fabric Services System for SRL fabric-offloaded forwarding.

- VXLAN and GRE: the plugin does not orchestrate VXLAN or GRE Neutron networks, but it is designed to be tolerant of other Neutron ML2 mechanism drivers.

The ML2 plugin also supports the following virtualization types:

- VIRTIO

- SRIOV

- DPDK

Networking models

The Fabric Services System ML2 plugin supports:

- OpenStack managed networking

- Fabric Services System managed networking

Forwarding capabilities

The Fabric Services System ML2 plugin supports:

- L2 forwarding, SRL fabric-offloaded

- L3 forwarding:

  - Using the Fabric Services System managed networking model: SRL fabric-offloaded

  - Using the OpenStack managed networking model: virtualized routing on OpenStack controller nodes (DVR is not supported)

Bonding

The Fabric Services System ML2 plugin supports the following CBIS-supported bonding models:

- VIRTIO and SRIOV (linuxbonds): Active/Backup

Note: Active/Active is supported by the ML2 plugin as well, but not by CBIS.

Note: Do not set up a LAG in the Fabric Services System or the SR Linux fabric for these bonds.

- DPDK (OVS bonds): LACP based Active/Active (*no* support for Active/Backup).

Note: A corresponding LAG must be set up in Fabric Services System or the SRL fabric.
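The bonding rules above can be summarized as a small lookup table. The following is a minimal sketch only: the dictionary layout, the key names, and the `fabric_lag_required` helper are hypothetical and are not part of the ML2 plugin or CBIS.

```python
# Hypothetical summary of the bonding rules listed above.
# Key names and mode strings are illustrative, not plugin or CBIS settings.
BONDING_RULES = {
    "VIRTIO": {"bond_type": "linuxbond", "mode": "active-backup", "fabric_lag": False},
    "SRIOV": {"bond_type": "linuxbond", "mode": "active-backup", "fabric_lag": False},
    "DPDK": {"bond_type": "ovs-bond", "mode": "lacp-active-active", "fabric_lag": True},
}


def fabric_lag_required(virt_type: str) -> bool:
    """Return True if a LAG must be configured in the fabric for this type."""
    try:
        return BONDING_RULES[virt_type.upper()]["fabric_lag"]
    except KeyError:
        raise ValueError(f"unknown virtualization type: {virt_type!r}") from None
```

A pre-deployment check could call `fabric_lag_required("DPDK")` to decide whether a corresponding LAG has to exist before the compute node is wired in.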

Trunking

The network trunk service allows multiple networks to be connected to an instance using a single virtual NIC (vNIC). Multiple networks can be presented to an instance by connecting it to a single port.

For details about the configuration and operation of the network trunk service, see https://docs.openstack.org/neutron/train/admin/config-trunking.html.

Trunking is supported for VIRTIO, DPDK, and SRIOV.

- For vnic_type=normal ports (VIRTIO/DPDK), trunking is supported through an upstream openvswitch trunk driver.

- For vnic_type=direct ports (SRIOV), trunking is supported by the Fabric Services System ML2 plugin trunk driver.

Using trunks with SRIOV has some limitations with regard to the upstream OVS trunk model:

- Both the parent port of the trunk and all subports must be created with vnic_type 'direct'.

- To avoid the need for QinQ on switches, trunks for the SRIOV instance must be created with the parent port belonging to a flat (untagged) network.

- If multiple projects within a deployment must be able to use trunks, the Neutron network above must be created as shared (using the --share attribute).

- When adding subports to a trunk, their segmentation-type must be specified as VLAN and their segmentation-id must be equal to a segmentation-id of the Neutron network that the subport belongs to.
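The SRIOV trunk limitations above lend themselves to a pre-flight check before the trunk is created. The sketch below is illustrative only: the `Network`, `Port`, and `Subport` classes and the `validate_sriov_trunk` function are hypothetical stand-ins for data that would normally come from Neutron, and they do not represent the plugin's own trunk driver.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Network:
    name: str
    network_type: str                      # "flat" or "vlan"
    segmentation_id: Optional[int] = None  # None for flat networks
    shared: bool = False


@dataclass
class Port:
    name: str
    vnic_type: str                         # "direct" for SRIOV
    network: Network


@dataclass
class Subport:
    port: Port
    segmentation_type: str
    segmentation_id: int


def validate_sriov_trunk(parent: Port, subports: List[Subport],
                         multi_project: bool = False) -> List[str]:
    """Return a list of rule violations; an empty list means the trunk is valid."""
    problems = []
    if parent.vnic_type != "direct":
        problems.append(f"parent port {parent.name}: vnic_type must be 'direct'")
    if parent.network.network_type != "flat":
        problems.append(f"parent port {parent.name}: network must be flat (untagged)")
    if multi_project and not parent.network.shared:
        problems.append(f"network {parent.network.name}: must be shared for multi-project use")
    for sp in subports:
        if sp.port.vnic_type != "direct":
            problems.append(f"subport {sp.port.name}: vnic_type must be 'direct'")
        if sp.segmentation_type != "vlan":
            problems.append(f"subport {sp.port.name}: segmentation-type must be vlan")
        if sp.segmentation_id != sp.port.network.segmentation_id:
            problems.append(f"subport {sp.port.name}: segmentation-id must match its network")
    return problems
```

Returning all violations at once, rather than stopping at the first, makes it easier to correct a trunk definition in a single pass.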

Bonding architecture

For DPDK bonds, the ML2 plugin only supports LACP Active/Active bonding. Corresponding LAGs must be set up in the Fabric Services System environment, and must be active in the SR Linux fabric.

In simplified setups, such as one in which only the tenant bond NICs are wired into the fabric, take care to reflect the configuration in the Port-to-Physnet mapping. The example below shows such a configuration, excluding the infra bond NICs.

| Port name | Number of VFs on port | Enable trust on port | Port to Physnet mapping | Allow untagged traffic in VGT | Allow infra VLANs in VGT |

|---|---|---|---|---|---|

| nic_1_port_1 | 45 | false | none | false | false |

| nic_1_port_2 | 45 | false | none | false | false |

| nic_2_port_1 | 45 | false | physnet1 | false | false |

| nic_2_port_2 | 45 | false | physnet2 | false | false |

Network VLAN segmentation and VLAN segregation

The Fabric Services System ML2 plugin only acts on VLAN Neutron networks. To use VLAN networking, configure provider_network_type = vlan.

CBIS applies an interconnected bridge model on the OpenStack controller nodes to support multi-physnet host interfaces and to make more economical use of the physical NICs. As a result, when VLAN networks with overlapping segmentation IDs across physnets are used, take care that no overlapping segmentation IDs are wired on the same host interface. Such a configuration would not be supported by the SR Linux fabric (or any other fabric).

A typical case in which this arises is when DHCP is enabled on the subnets created on networks with overlapping segmentation IDs, because the Neutron DHCP agent on the OpenStack controller nodes would effectively end up in the conflicting state described above. This can be avoided by disabling DHCP on the subnets, that is, by defining the subnet with dhcp_enable = False. Even when DHCP is not actually consumed by any VNF deployed in the related networks, the conflict still occurs as long as DHCP is enabled on the subnets.
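The conflict described above can be detected ahead of time by scanning the planned networks. The following is a rough sketch under stated assumptions: the input is a hypothetical list of dictionaries (keys `name`, `physnet`, `segmentation_id`, `dhcp_enabled`), not a Neutron API object, and it assumes that a conflict requires at least two DHCP-enabled networks sharing a segmentation ID on different physnets.

```python
from collections import defaultdict


def find_dhcp_vlan_conflicts(networks):
    """Map each conflicting segmentation ID to the sorted names of the networks involved.

    A segmentation ID is reported when DHCP-enabled networks on more than
    one physnet use it (an assumption of this sketch).
    """
    physnets_by_vlan = defaultdict(set)
    names_by_vlan = defaultdict(list)
    for net in networks:
        if not net["dhcp_enabled"]:
            continue  # networks without DHCP are not wired by the DHCP agent
        vlan = net["segmentation_id"]
        physnets_by_vlan[vlan].add(net["physnet"])
        names_by_vlan[vlan].append(net["name"])
    return {
        vlan: sorted(names_by_vlan[vlan])
        for vlan, physnets in physnets_by_vlan.items()
        if len(physnets) > 1
    }
```

An empty result means that no segmentation ID is shared by DHCP-enabled networks on different physnets in the supplied list.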

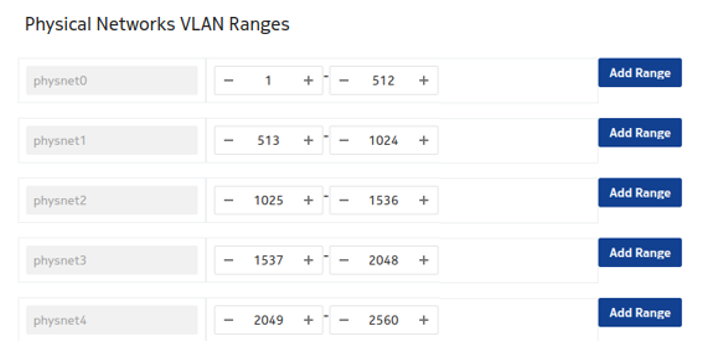

If the deployment use cases demand this wiring (for example, some of the deployed VNFs rely on DHCP), the system's VLAN ranges must be segregated per physical network at CBIS installation time. An example configuration is shown below.