Port features

Multilink point-to-point protocol

MLPPP overview

Multilink point-to-point protocol (MLPPP) is a method of splitting, recombining, and sequencing packets across multiple logical data links. MLPPP is defined in RFC 1990, The PPP Multilink Protocol (MP).

MLPPP allows multiple PPP links to be bundled together, providing a single logical connection between two routers. Data can be distributed across the multiple links within a bundle to achieve high bandwidth. As well, MLPPP allows for a single frame to be fragmented and transmitted across multiple links. This capability allows for lower latency and also for a higher maximum receive unit (MRU).

Multilink protocol is negotiated during the initial LCP option negotiations of a standard PPP session. A system indicates to its peer that it is willing to perform MLPPP by sending the MP option as part of the initial LCP option negotiation.

Sending the MP option indicates that the system has the following capabilities:

The system offering the option is capable of combining multiple physical links into one logical link.

The system is capable of receiving upper layer protocol data units (PDUs) that are fragmented using the MP header and then reassembling the fragments back into the original PDU for processing.

The system is capable of receiving PDUs of size N octets, where N is specified as part of the option, even if N is larger than the maximum receive unit (MRU) for a single physical link.

When MLPPP has been successfully negotiated, the sending system is free to send PDUs encapsulated and/or fragmented with the MP header.

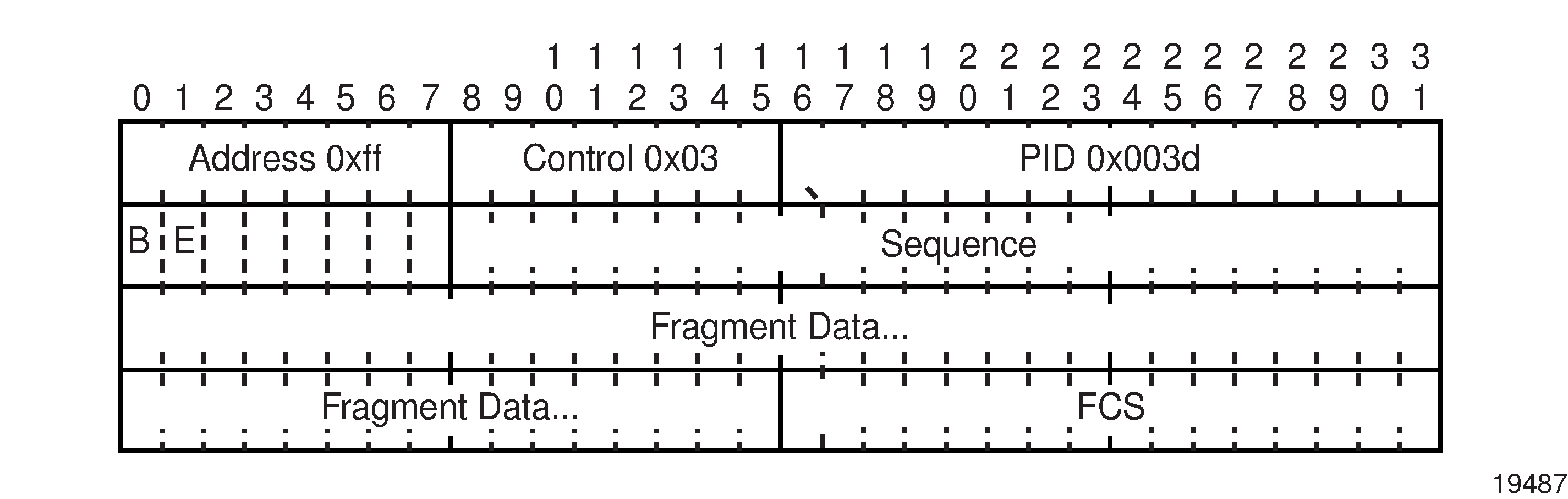

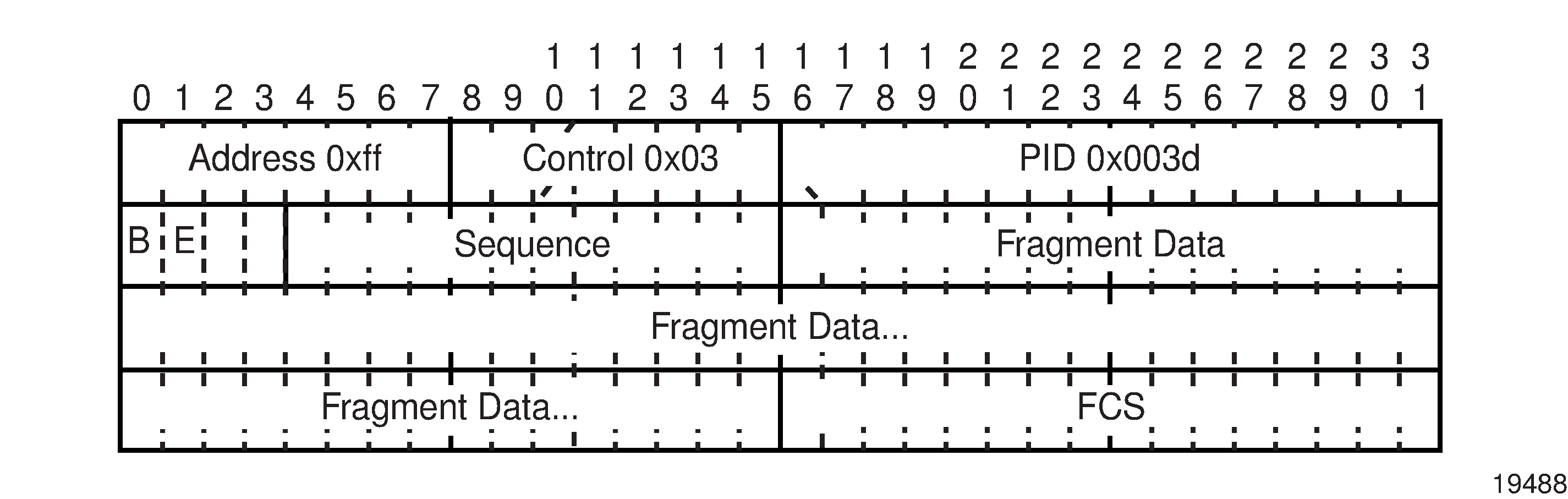

MP introduces a new protocol type with a protocol ID (PID) of 0x003d. MLPPP 24-bit fragment format and MLPPP 12-bit fragment format show the MLPPP fragment frame structure. Framing to indicate the beginning and end of the encapsulation is the same as that used by PPP and described in RFC 1662, PPP in HDLC-like Framing.

MP frames use the same HDLC address and control pair value as PPP: Address – 0xFF and Control – 0x03. The 2-octet protocol field is also structured the same way as in PPP encapsulation.

The required and default format for MP is the 24-bit format. During the LCP state, the 12-bit format can be negotiated. The 7705 SAR is capable of supporting and negotiating the alternate 12-bit frame format.

The maximum differential delay supported for MLPPP is 25 ms.

Protocol field

The protocol field (PID) is 2 octets. Its value identifies the datagram encapsulated in the Information field of the packet. For MP, the PID also identifies the presence of a 4-octet MP header (or 2-octet, if negotiated).

A PID of 0x003d identifies the packet as MP data with an MP header.
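The header layout described above can be sketched in a few lines. This is a minimal illustration, not the 7705 SAR implementation: it assumes the RFC 1990 bit layout (B and E in the two most significant bits, followed by zero padding and the sequence number), and the function names `pack_mp_header` and `parse_mp_header` are hypothetical.

```python
import struct

PID_MP = 0x003D  # PPP protocol ID that identifies MP data


def pack_mp_header(begin: bool, end: bool, seq: int, short: bool = False) -> bytes:
    """Return the 2-octet PID followed by the MP header.

    Long format: 4 octets (B, E, six zero bits, 24-bit sequence number).
    Short format: 2 octets (B, E, two zero bits, 12-bit sequence number).
    """
    flags = (0x80 if begin else 0) | (0x40 if end else 0)
    if short:
        if not 0 <= seq < 1 << 12:
            raise ValueError("short format carries a 12-bit sequence number")
        header = struct.pack("!H", (flags << 8) | seq)
    else:
        if not 0 <= seq < 1 << 24:
            raise ValueError("long format carries a 24-bit sequence number")
        header = struct.pack("!I", (flags << 24) | seq)
    return struct.pack("!H", PID_MP) + header


def parse_mp_header(data: bytes, short: bool = False):
    """Return (begin, end, seq, fragment_data) for data that starts at the PID."""
    (pid,) = struct.unpack_from("!H", data, 0)
    if pid != PID_MP:
        raise ValueError("not an MP packet")
    if short:
        (word,) = struct.unpack_from("!H", data, 2)
        return bool(word & 0x8000), bool(word & 0x4000), word & 0x0FFF, data[4:]
    (word,) = struct.unpack_from("!I", data, 2)
    return bool(word & 0x80000000), bool(word & 0x40000000), word & 0xFFFFFF, data[6:]
```

The HDLC address and control octets, byte stuffing, and the FCS are left out so that only the PID and MP header are shown.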

The LCP packets and protocol states of the MLPPP session follow those defined by PPP in RFC 1661. The options used during the LCP state for creating an MLPPP NCP session are described in the sections that follow.

B&E bits

The B&E bits are used to indicate the start and end of a packet. Ingress packets to the MLPPP process have an MTU, which may or may not be larger than the maximum received reconstructed unit (MRRU) of the MLPPP network. The B&E bits manage the fragmentation of ingress packets when the packet exceeds the MRRU.

The B-bit indicates the first (or beginning) fragment of a given packet. The E-bit indicates the last (or ending) fragment of a packet. If there is no fragmentation of the ingress packet, both B&E bits are set to true (=1).

Sequence number

Sequence numbers can be either 12 or 24 bits long. The sequence number is 0 for the first fragment on a newly constructed bundle and increments by one for each fragment sent on that bundle. The receiver keeps track of the incoming sequence numbers on each link in a bundle and reconstructs the required unbundled flow through processing of the received sequence numbers and B&E bits. For a detailed description of the algorithm, see RFC 1990.
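As a rough illustration of how the B&E bits and sequence numbers work together, the following sketch fragments packets and rebuilds them from fragments that arrive out of order across member links. It is deliberately simplified and is not the RFC 1990 algorithm: it assumes no fragment loss and no sequence number wraparound within the received set, and the `Fragment` type and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass(frozen=True)
class Fragment:
    seq: int      # bundle-wide sequence number
    begin: bool   # B-bit: first fragment of a packet
    end: bool     # E-bit: last fragment of a packet
    data: bytes


def fragment(packet: bytes, size: int, first_seq: int, seq_bits: int = 24) -> List[Fragment]:
    """Split one packet into fragments of at most `size` octets."""
    chunks = [packet[i:i + size] for i in range(0, len(packet), size)] or [b""]
    mask = (1 << seq_bits) - 1
    last = len(chunks) - 1
    return [
        Fragment((first_seq + i) & mask, i == 0, i == last, chunk)
        for i, chunk in enumerate(chunks)
    ]


def reassemble(fragments: Iterable[Fragment]) -> Iterator[bytes]:
    """Yield packets from fragments that are already in sequence order."""
    buffer = None
    for frag in fragments:
        if frag.begin:
            buffer = bytearray()
        if buffer is None:
            continue  # fragment without a preceding B-bit: discard
        buffer += frag.data
        if frag.end:
            yield bytes(buffer)
            buffer = None


# Fragments of two packets arrive interleaved over different links.
first = fragment(b"abcdefghij", size=4, first_seq=0)
second = fragment(b"xy", size=4, first_seq=3)
received = [first[2], second[0], first[0], first[1]]
packets = list(reassemble(sorted(received, key=lambda f: f.seq)))
```

An unfragmented packet, such as the second one here, travels as a single fragment with both bits set.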

Information field

The Information field is 0 or more octets. The Information field contains the datagram for the protocol specified in the protocol field.

The MRRU has the same default value as the MTU for PPP. The MRRU is always negotiated during LCP.

Padding

On transmission, the Information field of the ending fragment may be padded with an arbitrary number of octets up to the MRRU. It is the responsibility of each protocol to distinguish padding octets from real information. Padding must only be added to the last fragment (E-bit set to true).

FCS

The FCS field of each MP packet is inherited from the normal framing mechanism from the member link on which the packet is transmitted. There is no separate FCS applied to the reconstituted packet as a whole if it is transmitted in more than one fragment.

LCP

The link control protocol (LCP) is used to establish the connection through an exchange of configure packets. This exchange is complete, and the LCP opened state entered, once a Configure-Ack packet has been both sent and received.

LCP allows for the negotiation of multiple options in a PPP session. MP is somewhat different from PPP, and therefore the following options are set for MP and are not negotiated:

no async control character map

no magic number

no link quality monitoring

address and control field compression

protocol field compression

no compound frames

no self-describing padding

Any non-LCP packets received during this phase must be silently discarded.

T1/E1 link hold timers

T1/E1 link hold timers (or MLPPP link flap dampening) guard against the node reporting excessive interface transitions. Timers can be set to determine when link up and link down events are advertised; that is, up-to-down and down-to-up transitions of the interface are not advertised to upper layer protocols (are dampened) until the configured timer has expired.
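The dampening behavior can be pictured with a small state tracker. This is only a sketch under stated assumptions: the class name `HoldTimer` is hypothetical, timer values are in seconds, and the sketch assumes that a pending transition is cancelled if the link returns to the advertised state before the timer expires.

```python
class HoldTimer:
    """Advertise a link state change only after it has persisted for the hold time."""

    def __init__(self, hold_up: float, hold_down: float, state: bool = False):
        self.hold = {True: hold_up, False: hold_down}
        self.advertised = state      # state currently seen by upper layers
        self.pending_since = None    # time at which the raw state changed

    def update(self, raw_up: bool, now: float) -> bool:
        """Feed the raw link state at time `now`; return the advertised state."""
        if raw_up == self.advertised:
            self.pending_since = None          # flap ended before the timer expired
        elif self.pending_since is None:
            self.pending_since = now           # start the hold timer
        if self.pending_since is not None and now - self.pending_since >= self.hold[raw_up]:
            self.advertised = raw_up           # timer expired: advertise the change
            self.pending_since = None
        return self.advertised
```

With `hold_down=2`, a one-second outage is never reported to upper layer protocols, while an outage that lasts two seconds or longer is.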

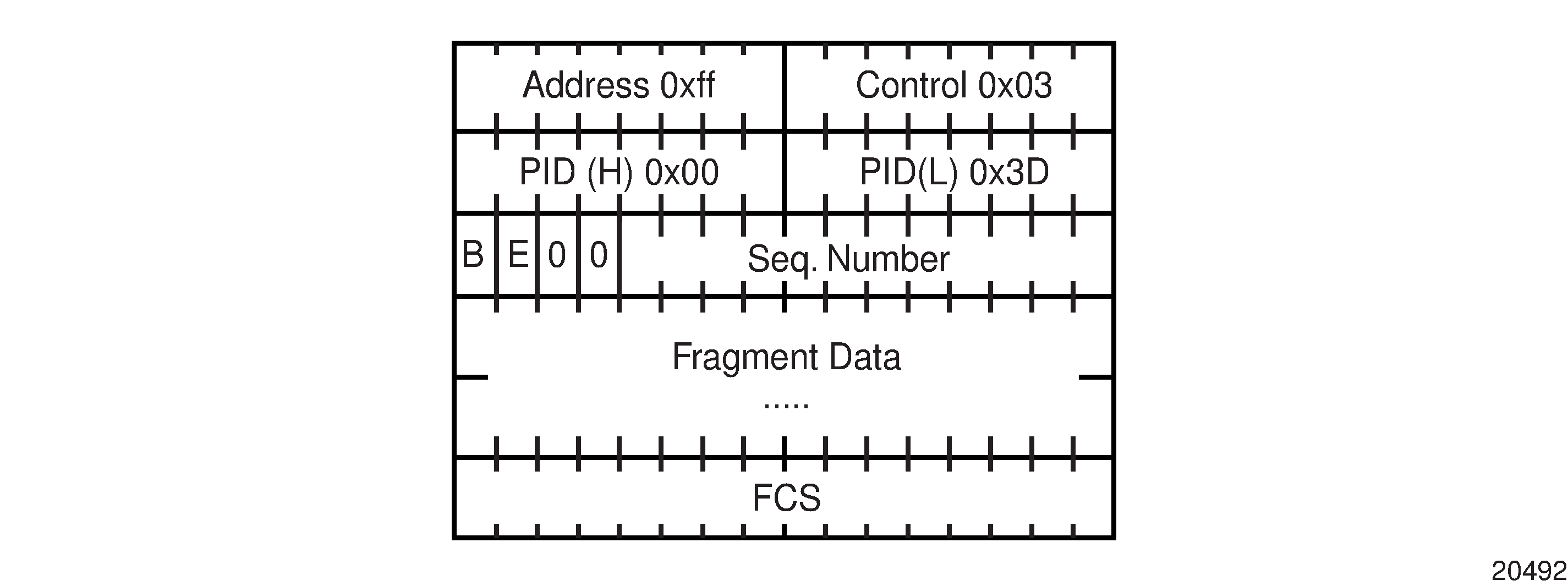

Multiclass MLPPP

The 7705 SAR supports multiclass MLPPP (MC-MLPPP) to address end-to-end delay caused by low-speed links transporting a mix of small and large packets. With MC-MLPPP, large, low-priority packets are fragmented to allow opportunities to send high-priority packets. QoS for MC-MLPPP is described in QoS in MC-MLPPP.

MC-MLPPP allows for the prioritization of multiple types of traffic flowing over MLPPP links, such as traffic between the cell site routers and the mobile operator’s aggregation routers. MC-MLPPP, as defined in RFC 2686, The Multi-Class Extension to Multi-Link PPP, is an extension of the MLPPP standard. MC-MLPPP is supported on access ports wherever PPP/MLPPP is supported, except on the 2-port OC3/STM1 Channelized Adapter card. It allows multiple classes of fragments to be transmitted over an MLPPP bundle, with each class representing a different priority level mapped to a forwarding class. The highest-priority traffic is transmitted over the MLPPP bundle with minimal delay regardless of the order in which packets are received.

Original MLPPP header format shows the original MLPPP header format that allowed only two implied classes. The two classes were created by transmitting two interleaving flows of packets: one with MLPPP headers and one without. This resulted in two levels of priority sent over the physical link, even without the implementation of multiclass support.

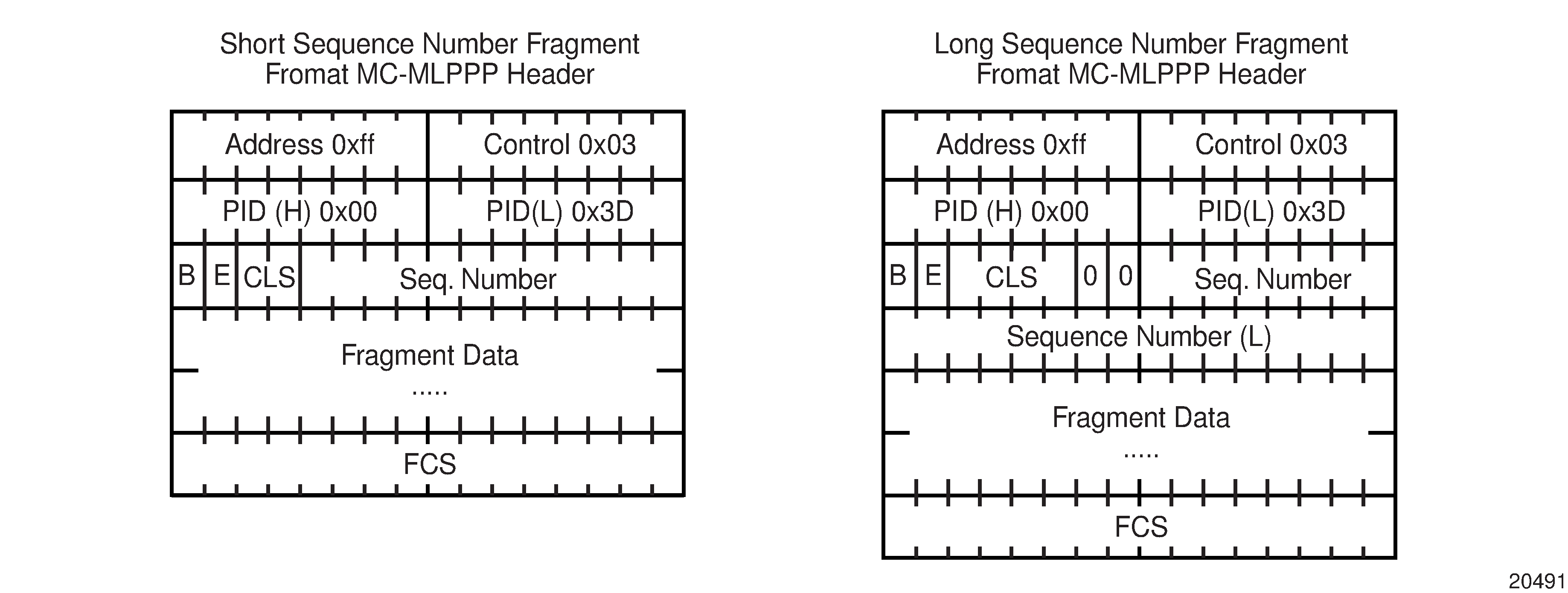

MC-MLPPP header format shows the short and long sequence number fragment format MC-MLPPP headers. The short sequence number fragment format header includes two class bits to allow for up to four classes of service. Four class bits are available in the long sequence number fragment format header, but a maximum of four classes are still supported. This extension to the MLPPP header format is detailed in RFC 2686.

The new MC-MLPPP header format uses the previously unused bits before the sequence number as the class identifier to allow four distinct classes of service to be identified.
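The position of the class bits can be shown with two small helpers. This sketch assumes the RFC 2686 layouts (four class bits followed by two zero bits in the long format, two class bits in the short format) and limits the class to 0 through 3, matching the four supported classes; the function names are hypothetical.

```python
def mc_first_octet_long(begin: bool, end: bool, mlppp_class: int) -> int:
    """First octet of the long-format header: B, E, four class bits, two zero bits."""
    if not 0 <= mlppp_class <= 3:
        raise ValueError("only classes 0 to 3 are used")
    return (int(begin) << 7) | (int(end) << 6) | (mlppp_class << 2)


def mc_header_short(begin: bool, end: bool, mlppp_class: int, seq: int) -> int:
    """16-bit short-format header: B, E, two class bits, 12-bit sequence number."""
    if not 0 <= mlppp_class <= 3:
        raise ValueError("only classes 0 to 3 are used")
    return (int(begin) << 15) | (int(end) << 14) | (mlppp_class << 12) | (seq & 0x0FFF)
```

In both layouts the class occupies bits that the original MLPPP header left as zero padding, so a class 0 header is bit-for-bit identical to a header without the multiclass extension.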

QoS in MC-MLPPP

MC-MLPPP on the 7705 SAR supports scheduling based on multiclass implementation. Instead of the standard profiled queue-type scheduling, an MC-MLPPP encapsulated access port performs class-based traffic servicing. The four MC-MLPPP classes are scheduled in a strict priority fashion, as shown in the following table.

| MC-MLPPP class | Priority |
|---|---|
| 0 | Priority over all other classes |
| 1 | Priority over classes 2 and 3 |
| 2 | Priority over class 3 |
| 3 | No priority |

For example, if a packet is sent to an MC-MLPPP class 3 queue and all other queues are empty, the 7705 SAR fragments the packet according to the configured fragment size and begins sending the fragments. If a new packet arrives at an MC-MLPPP class 2 queue while the class 3 fragment is still being serviced, the 7705 SAR finishes sending any fragments of the class 3 packet that are on the wire, then holds back the remaining fragments in order to service the higher-priority packet.

The fragments of the first packet remain at the top of the class 3 queue. For packets of the same class, MC-MLPPP class queues operate on a first-in, first-out basis.
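The interleaving described in this example can be reproduced with a toy scheduler. This is a simplified model rather than the actual scheduler: it assumes one fragment is transmitted per time tick, and the function name `schedule` and the tuple layout of `arrivals` are hypothetical.

```python
from collections import deque


def schedule(arrivals, fragment_size, num_classes=4):
    """Strict-priority service of per-class FIFO fragment queues.

    arrivals: list of (tick, mlppp_class, name, length_in_octets).
    Returns the transmit order as (mlppp_class, name, fragment_index) tuples.
    """
    queues = [deque() for _ in range(num_classes)]
    pending = sorted(arrivals)
    sent = []
    tick = 0
    while pending or any(queues):
        # Enqueue every packet that has arrived by this tick, already fragmented.
        while pending and pending[0][0] <= tick:
            _, cls, name, length = pending.pop(0)
            count = -(-length // fragment_size)  # ceiling division
            queues[cls].extend((name, i) for i in range(count))
        # Serve the lowest-numbered (highest-priority) non-empty class.
        for cls, queue in enumerate(queues):
            if queue:
                sent.append((cls, *queue.popleft()))
                break
        tick += 1
    return sent


order = schedule([(0, 3, "big", 400), (1, 2, "voice", 100)], fragment_size=100)
```

Here the class 2 packet is sent after the first class 3 fragment, and the remaining class 3 fragments follow in their original order.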

The user configures the required number of MLPPP classes to use on a bundle. The forwarding class of the packet, as determined by the ingress QoS classification, is used to determine the MLPPP class for the packet. The mapping of forwarding class to MLPPP class is a function of the user-configurable number of MLPPP classes. The mapping for 4-class, 3-class, and 2-class MLPPP bundles is shown in the following table.

| FC ID | FC name | MLPPP class 4-class bundle | MLPPP class 3-class bundle | MLPPP class 2-class bundle |
|---|---|---|---|---|
| 7 | NC | 0 | 0 | 0 |
| 6 | H1 | 0 | 0 | 0 |
| 5 | EF | 1 | 1 | 1 |
| 4 | H2 | 1 | 1 | 1 |
| 3 | L1 | 2 | 2 | 1 |
| 2 | AF | 2 | 2 | 1 |
| 1 | L2 | 3 | 2 | 1 |
| 0 | BE | 3 | 2 | 1 |

If one or more forwarding classes are mapped to a queue, the scheduling priority of the queue is based on the lowest forwarding class mapped to it. For example, if forwarding classes 0 and 7 are mapped to a queue, the queue is serviced by MC-MLPPP class 3 in a 4-class bundle model.
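The mapping table and the lowest-forwarding-class rule can be expressed as a lookup. This is an illustrative sketch; the names `FC_TO_MLPPP_CLASS` and `queue_mlppp_class` are hypothetical and do not correspond to any CLI or API.

```python
# fc_id: (class in 4-class bundle, class in 3-class bundle, class in 2-class bundle)
FC_TO_MLPPP_CLASS = {
    7: (0, 0, 0),  # NC
    6: (0, 0, 0),  # H1
    5: (1, 1, 1),  # EF
    4: (1, 1, 1),  # H2
    3: (2, 2, 1),  # L1
    2: (2, 2, 1),  # AF
    1: (3, 2, 1),  # L2
    0: (3, 2, 1),  # BE
}


def queue_mlppp_class(fc_ids, bundle_classes):
    """MLPPP class that services a queue, based on the lowest FC mapped to it."""
    column = {4: 0, 3: 1, 2: 2}[bundle_classes]
    return FC_TO_MLPPP_CLASS[min(fc_ids)][column]
```

For example, `queue_mlppp_class([0, 7], 4)` evaluates to 3, which matches the case described above.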

cHDLC

The 7705 SAR supports Cisco HDLC, which is an encapsulation protocol for information transfer. Cisco HDLC is a bit-oriented synchronous data-link layer protocol that specifies a data encapsulation method on synchronous serial links using frame characters and checksums.

Cisco HDLC monitors line status on a serial interface by exchanging keepalive request messages with peer network devices. The protocol also allows routers to discover IP addresses of neighbors by exchanging SLARP address-request and address-response messages with peer network devices.

The basic frame structure of a cHDLC frame is shown in the following table.

| Flag | Address | Control | Protocol | Information | FCS |
|---|---|---|---|---|---|
| 0x7E | 0x0F, 0x8F | 0x00 | 0x0800, 0x8035 | — | 16 or 32 bit |

The fields in the cHDLC frame have the following characteristics:

Address field – supports unicast (0x0F) and broadcast (0x8F) addresses

Control field – always set to 0x00

Protocol field – supports IP (0x0800) and SLARP (0x8035; see SLARP for information about limitations)

Information field – the length can be 0 to 9 kB

FCS field – can be 16 or 32 bits. The default is 16 bits for ports with a speed equal to or lower than OC3, and 32 bits for all other ports. The FCS for cHDLC is calculated with the same method and same polynomial as PPP.
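To make the frame layout concrete, the following sketch builds the octets between the flags for a frame with a 16-bit FCS. It assumes the PPP FCS-16 computation from RFC 1662 (reflected polynomial 0x8408, initial value 0xFFFF, final complement, least significant octet sent first); flag insertion and bit stuffing are omitted, and the function names are hypothetical.

```python
import struct


def fcs16(data: bytes) -> int:
    """PPP 16-bit FCS, computed bit by bit for clarity rather than speed."""
    fcs = 0xFFFF
    for byte in data:
        fcs ^= byte
        for _ in range(8):
            fcs = (fcs >> 1) ^ 0x8408 if fcs & 1 else fcs >> 1
    return fcs ^ 0xFFFF


def chdlc_frame(payload: bytes, protocol: int = 0x0800, broadcast: bool = False) -> bytes:
    """Return address, control, protocol, information, and FCS fields."""
    address = 0x8F if broadcast else 0x0F
    body = struct.pack("!BBH", address, 0x00, protocol) + payload
    return body + struct.pack("<H", fcs16(body))  # least significant octet first
```

A table-driven FCS would be the usual choice in production code; the loop form is used here only because it is short.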

SLARP

The 7705 SAR supports only the SLARP keepalive protocol.

For the SLARP keepalive protocol, each system sends the other a keepalive packet at a user-configurable interval. The default interval is 10 seconds. Both systems must use the same interval to ensure reliable operation.

Each system assigns sequence numbers to the keepalive packets it sends, starting with zero, independent of the other system. These sequence numbers are included in the keepalive packets sent to the other system. Also included in each keepalive packet is the sequence number of the last keepalive packet received from the other system, as assigned by the other system. This number is called the returned sequence number. Each system keeps track of the last returned sequence number it has received.

Immediately before sending a keepalive packet, the system compares the sequence number of the packet it is about to send with the returned sequence number in the last keepalive packet it has received. If the two differ by 3 or more, it considers the line to have failed, and does not route higher-level data across it until an acceptable keepalive response is received.
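The sequence number comparison can be sketched as follows. This is an illustration only: the class name `SlarpKeepalive` is hypothetical, the packet is reduced to a tuple, and the 32-bit wraparound of sequence numbers is an assumption.

```python
SEQ_MOD = 1 << 32  # assumption: sequence numbers wrap at 32 bits


class SlarpKeepalive:
    def __init__(self, threshold: int = 3):
        self.my_seq = 0         # sequence number of the next keepalive to send
        self.peer_seq = 0       # last sequence number received from the peer
        self.returned_seq = 0   # last of our sequence numbers echoed by the peer
        self.threshold = threshold

    def on_receive(self, peer_seq: int, returned_seq: int) -> None:
        """Record the contents of a keepalive received from the peer."""
        self.peer_seq = peer_seq
        self.returned_seq = returned_seq

    def send(self):
        """Return (my_seq, returned_seq_for_peer, line_up) for the next keepalive."""
        line_up = (self.my_seq - self.returned_seq) % SEQ_MOD < self.threshold
        packet = (self.my_seq, self.peer_seq, line_up)
        self.my_seq = (self.my_seq + 1) % SEQ_MOD
        return packet
```

If the peer stops responding, the fourth keepalive sent (sequence number 3 against a returned value of 0) is the first one for which the line is considered failed.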

IMA

Inverse Multiplexing over ATM (IMA) is a cell-based protocol where an ATM cell stream is inverse-multiplexed and demultiplexed in a cyclical fashion among ATM-supporting channels to form a higher bandwidth logical link. This logical link is called an IMA group. By grouping channels into an IMA group, customers gain bandwidth management capability at in-between rates (for example, between DS1 and DS3 or between E1 and E3) through the addition or removal of channels to or from the IMA group. The 7705 SAR supports the IMA protocol as specified by the Inverse Multiplexing for ATM (IMA) Specification version 1.1.

In the ingress direction, traffic coming over multiple ATM channels configured as part of a single IMA group is converted into a single ATM stream and passed for further processing to the ATM layer, where service-related functions (for example, Layer 2 traffic management or feeding into a pseudowire) are applied. In the egress direction, a single ATM stream (after service functions are applied) is distributed over all paths that are part of an IMA group after ATM layer processing takes place.

An IMA group interface compensates for differential delay and allows for only a minimal cell delay variation. The maximum differential delay supported for IMA is 75 ms on the 16-port T1/E1 ASAP Adapter card and 32-port T1/E1 ASAP Adapter card and 50 ms on the 2-port OC3/STM1 Channelized Adapter card.

The interface deals with links that are added or deleted, or that fail. The higher layers see only an IMA group and not individual links; therefore, service configuration and management are done using IMA groups, and not the individual links that are part of them.

The IMA protocol uses an IMA frame as the unit of control. An IMA frame consists of a series of 128 consecutive cells. In addition to ATM cells received from the ATM layer, the IMA frame contains IMA OAM cells. Two types of cells are defined: IMA Control Protocol (ICP) cells and IMA filler cells. ICP cells carry information used by the IMA protocol at both ends of an IMA group (for example, IMA frame sequence number, link stuff indication, status and control indication, IMA ID, Tx and Rx test patterns, version of the IMA protocol). A single ICP cell is inserted at the ICP cell offset position (the offset may be different on each link of the group) of each frame. Filler cells are used by the transmitting side to fill up each IMA frame in case there are not enough ATM stream cells from the ATM layer, so a continuous stream of cells is presented to the physical layer. Those cells are then discarded by the receiving end. IMA frames are transmitted simultaneously on all paths of an IMA group, and when they are received out of sync at the other end of the IMA group link, the receiver compensates for differential link delays among all paths.
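The frame structure can be illustrated with a toy model of one IMA frame period. This sketch is heavily simplified and makes several assumptions: cells are distributed round-robin one cell at a time, cell stuffing and differential delay compensation are not modeled, and the names `build_ima_frames` and `receive` are hypothetical.

```python
from collections import deque

FRAME_LEN = 128  # cells per IMA frame, as described above


def build_ima_frames(cells, icp_offsets):
    """Fill one IMA frame per link; icp_offsets holds the ICP cell offset of each link."""
    queue = deque(cells)
    frames = [[None] * FRAME_LEN for _ in icp_offsets]
    for position in range(FRAME_LEN):
        for link, offset in enumerate(icp_offsets):
            if position == offset:
                frames[link][position] = "ICP"            # one ICP cell per frame per link
            elif queue:
                frames[link][position] = queue.popleft()  # next cell from the ATM layer
            else:
                frames[link][position] = "FILLER"         # keep the cell stream continuous
    return frames


def receive(frames):
    """Recombine the ATM cell stream, discarding ICP and filler cells."""
    stream = []
    for position in range(FRAME_LEN):
        for frame in frames:
            if frame[position] not in ("ICP", "FILLER"):
                stream.append(frame[position])
    return stream


frames = build_ima_frames(list(range(10)), icp_offsets=[0, 5])
```

With two links, each frame period carries at most 2 × 127 ATM cells, because one position per link is taken by the ICP cell.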

Network synchronization on ports and circuits

The 7705 SAR provides network synchronization on the following ports and CES circuits:

Network synchronization on T1/E1 and Ethernet ports

Line timing mode provides physical layer timing (Layer 1) that can be used as an accurate reference for nodes in the network. This mode is immune to any packet delay variation (PDV) occurring on a Layer 2 or Layer 3 link. Physical layer timing provides the best synchronization performance through a synchronization distribution network.

On the 7705 SAR-A variant with T1/E1 ports, line timing is supported on T1/E1 ports. Line timing is also supported on all synchronous Ethernet ports on both 7705 SAR-A variants. Synchronous Ethernet is supported on the XOR ports (1 to 4), configured as either RJ45 ports or SFP ports. Synchronous Ethernet is also supported on SFP ports 5 to 8. Ports 9 to 12 do not support synchronous Ethernet and therefore do not support line timing.

On the 7705 SAR-Ax, line timing is supported on all Ethernet ports.

On the 7705 SAR-H, line timing is supported on:

all Ethernet ports

T1/E1 ports on a chassis equipped with a 4-port T1/E1 and RS-232 Combination module

On the 7705 SAR-Hc, line timing is supported on all Ethernet ports.

On the 7705 SAR-M variants with T1/E1 ports, line timing is supported on T1/E1 ports. Line timing is also supported on all RJ45 Ethernet ports and SFP ports on all 7705 SAR-M variants.

In addition, line timing is supported on the following 7705 SAR-M modules:

2-port 10GigE (Ethernet) module

6-port SAR-M Ethernet module

On the 7705 SAR-Wx, line timing is supported on:

RJ45 Ethernet ports and optical SFP ports (these ports support synchronous Ethernet and IEEE 1588v2 PTP)

On the 7705 SAR-X, line timing is supported on T1/E1 ports and Ethernet ports.

On the 7705 SAR-8 Shelf V2 and 7705 SAR-18, line timing is supported on:

16-port T1/E1 ASAP Adapter card

32-port T1/E1 ASAP Adapter card

6-port Ethernet 10Gbps Adapter card

8-port Gigabit Ethernet Adapter card (dual-rate and copper SFPs do not support synchronous Ethernet)

2-port 10GigE (Ethernet) Adapter card

10-port 1GigE/1-port 10GigE X-Adapter card (supported on the 7705 SAR-18 only)

4-port DS3/E3 Adapter card

2-port OC3/STM1 Channelized Adapter card

4-port OC3/STM1 / 1-port OC12/STM4 Adapter card

4-port OC3/STM1 Clear Channel Adapter card

Packet Microwave Adapter card on ports that support synchronous Ethernet and on ports that support PCR

Synchronous Ethernet is a variant of line timing and is automatically enabled on ports and SFPs that support it. The operator can select a synchronous Ethernet port as a candidate for the timing reference. The recovered timing from this port is then used to time the system. This ensures that any of the system outputs are locked to a stable, traceable frequency source.

Network synchronization on SONET/SDH ports

Each SONET/SDH port can be independently configured to be loop-timed (recovered from an Rx line) or node-timed (recovered from the SSU in the active CSM).

A SONET/SDH port’s receive clock rate can be used as a synchronization source for the node.

Network synchronization on DS3/E3 ports

Each clear channel DS3/E3 port on a 4-port DS3/E3 Adapter card can be independently configured to be loop-timed (recovered from an Rx line), node-timed (recovered from the SSU in the active CSM), or differential-timed (derived from the comparison of a common clock to the received RTP timestamp in TDM pseudowire packets). When a DS3 port is channelized, each DS1 or E1 channel can be independently configured to be loop-timed, node-timed, or differential-timed (differential timing on DS1/E1 channels is supported only on the first three ports of the card). When not configured for differential timing, a DS3/E3 port can be configured to be a timing source for the node.

Network synchronization on DS3 CES circuits

Each DS3 CES circuit on a 2-port OC3/STM1 Channelized Adapter card can be loop-timed (recovered from an Rx line) or free-run (timing source is from its own clock). A DS3 circuit can be configured to be a timing source for the node.

Network synchronization on T1/E1 ports and circuits

Each T1/E1 port can be independently configured for loop-timing (recovered from an Rx line) or node-timing (recovered from the SSU in the active CSM).

In addition, T1/E1 CES circuits on the following can be independently configured for adaptive timing (clocking is derived from incoming TDM pseudowire packets):

16-port T1/E1 ASAP Adapter card

32-port T1/E1 ASAP Adapter card

7705 SAR-M (variants with T1/E1 ports)

7705 SAR-X

7705 SAR-A (variant with T1/E1 ports)

T1/E1 ports on the 4-port T1/E1 and RS-232 Combination module

T1/E1 CES circuits on the following can be independently configured for differential timing (recovered from RTP in TDM pseudowire packets):

16-port T1/E1 ASAP Adapter card

32-port T1/E1 ASAP Adapter card

4-port OC3/STM1 / 1-port OC12/STM4 Adapter card (DS1/E1 channels)

4-port DS3/E3 Adapter card (DS1/E1 channels on DS3 ports; E3 ports cannot be channelized); differential timing on DS1/E1 channels is supported only on the first three ports of the card

7705 SAR-M (variants with T1/E1 ports)

7705 SAR-X

7705 SAR-A (variant with T1/E1 ports)

T1/E1 ports on the 4-port T1/E1 and RS-232 Combination module

A T1/E1 port can be configured to be a timing source for the node.

Node synchronization from GNSS receiver ports

The GNSS receiver port on the 7705 SAR-Ax, 7705 SAR-Wx, or 7705 SAR-H GPS Receiver module, and the GNSS Receiver card installed in a 7705 SAR-8 Shelf V2 or 7705 SAR-18, can provide a synchronization clock to the SSU in the router with the corresponding QL for SSM. This frequency can then be distributed to the rest of the router from the SSU as configured with the ref-order and ql-selection commands; see the 7705 SAR Basic System Configuration Guide for information. The GNSS reference is qualified only if the GNSS receiver port is operational, has sufficient satellites locked, and has a frequency successfully recovered. A PTP master/boundary clock can also use this frequency reference with PTP peers.

In the event of GNSS signal loss or jamming resulting in the unavailability of timing information, the GNSS receiver automatically prevents output of clock or synchronization data to the system, and the system can revert to alternate timing sources.

A 7705 SAR using GNSS or IEEE 1588v2 PTP for time of day/phase recovery can perform high-accuracy OAM timestamping and measurements. See the 7705 SAR Basic System Configuration Guide for information about node timing sources.

Flow control on Ethernet ports

IEEE 802.3x flow control, which is the process of pausing the transmission based on received pause frames, is supported on Fast Ethernet, Gigabit Ethernet, and 10-Gigabit Ethernet (SFP+) ports. In the transmit direction, the Ethernet ports generate pause frames if the buffer occupancy reaches critical values or if port FIFO buffers are overloaded. Pause frame generation is automatically handled by the Ethernet Adapter card when the system-wide constant thresholds are exceeded. The generation of pause frames ensures that newly arriving frames can still be processed and queued, mainly to maintain SLAs.

If autonegotiation is on for an Ethernet port, enabling and disabling of IEEE 802.3x flow control is autonegotiated for receive and transmit directions separately. If autonegotiation is turned off, the reception and transmission of IEEE 802.3x flow control is enabled by default and cannot be disabled.

Ingress flow control for the 6-port SAR-M Ethernet module is Ethernet link-based and not port-based. When IEEE 802.3x flow control is enabled on the 6-port SAR-M Ethernet module, pause frames are multicast to all ports on the Ethernet link. There are two Ethernet links on the 6-port SAR-M Ethernet module: one for ports 1, 3, and 5, and one for ports 2, 4, and 6. Pause frames are sent to either ports 1, 3, and 5, or to ports 2, 4, and 6, depending on the link on which the pause frame originates.

Ethernet OAM

For more information about Ethernet OAM, see the 7705 SAR OAM and Diagnostics Guide, “Ethernet OAM capabilities”.

Ethernet OAM overview

802.3ah Clause 57 (EFM OAM) defines the operations, administration, and maintenance (OAM) sublayer, which is a link level Ethernet OAM. It provides mechanisms for monitoring link operations such as remote fault indication and remote loopback control.

Ethernet OAM gives network operators the ability to monitor the status of Ethernet links and quickly determine the location of failing links or fault conditions.

Because some of the sites where the 7705 SAR will be deployed will only have Ethernet uplinks, this OAM functionality is mandatory. For example, mobile operators must be able to request remote loopbacks from the peer router at the Ethernet layer in order to debug any connectivity issues. EFM OAM provides this capability.

EFM OAM is supported on network and access Ethernet ports and is configured at the port level. The access ports can be configured to tunnel the OAM traffic originated by the far-end devices.

EFM OAM has the following characteristics:

All EFM OAM functions, including loopbacks, operate on point-to-point links only.

EFM loopbacks are always line loopbacks (line Rx to line Tx).

When a port is in loopback, all frames (except EFM frames) are discarded. If dynamic signaling and routing is used (dynamic LSPs, OSPF, IS-IS, or BGP routing), all services also go down. If all signaling and routing protocols are static (static routes, LSPs, and service labels), the frames are discarded but services stay up.

The following EFM OAM functions are supported:

OAM capability discovery

configurable transmit interval with an Information OAMPDU

active or passive mode

OAM loopback

OAMPDU tunneling and termination (for Epipe service)

dying gasp at network and access ports

non-zero vendor-specific information field – the 32-bit field is encoded using the format 00:PP:CC:CC and references TIMETRA-CHASSIS-MIB

00 – must be zeros

PP – the platform type from tmnxHwEquippedPlatform

CC:CC – the chassis type index value from tmnxChassisType that is indexed in tmnxChassisTypeTable. The table identifies the specific chassis backplane.

The value 00:00:00:00 is sent for all releases that do not support the non-zero value or are unable to identify the required elements. There is no decoding of the peer or local vendor information fields on the network element. The hexadecimal value is included in the show port port-id ethernet efm-oam output.
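Because the network element does not decode this field, an operator may want to interpret the hexadecimal value offline. The following sketch packs and unpacks the 00:PP:CC:CC format; the function names are hypothetical, and the platform and chassis values in the usage note are made-up numbers, not real MIB values.

```python
def encode_vendor_info(platform: int, chassis_type: int) -> str:
    """Format the 32-bit vendor-specific field as 00:PP:CC:CC."""
    if not 0 <= platform <= 0xFF or not 0 <= chassis_type <= 0xFFFF:
        raise ValueError("platform is 8 bits and chassis type is 16 bits")
    value = (platform << 16) | chassis_type
    return ":".join(f"{octet:02x}" for octet in value.to_bytes(4, "big"))


def decode_vendor_info(text: str):
    """Return (platform, chassis_type) from a 00:PP:CC:CC string."""
    raw = bytes.fromhex(text.replace(":", ""))
    if len(raw) != 4 or raw[0] != 0:
        raise ValueError("expected four octets with a leading zero octet")
    return raw[1], int.from_bytes(raw[2:], "big")
```

For instance, `decode_vendor_info("00:0a:01:02")` yields platform 10 and chassis type index 258, which would then be looked up in the TIMETRA-CHASSIS-MIB objects named above.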

When ignore-efm-state is not configured (the default), if the EFM OAM protocol cannot negotiate a peer session or an established session fails, the port enters the link up state. The link up state is used by many protocols to indicate that the port is administratively up and there is physical connectivity but a protocol (such as EFM OAM) has caused the port operational state to be down. The show port slot/mda/port command output includes a Reason Down field to indicate if the protocol is the underlying reason for the link up state. For EFM OAM, the Reason Down code is efmOamDown. This is shown in the following command output example, where port 1/1/3 is in a link up state.

*A:ALU-1># show port

===============================================================================

Ports on Slot 1

===============================================================================

Port Admin Link Port Cfg Oper LAG/ Port Port Port C/QS/S/XFP/

Id State State MTU MTU Bndl Mode Encp Type MDIMDX

-------------------------------------------------------------------------------

1/1/1 Down No Down 1578 1578 - netw null xcme

1/1/2 Down No Down 1578 1578 - netw null xcme

1/1/3 Up Yes Link Up 1522 1522 - accs qinq xcme

1/1/4 Down No Down 1578 1578 - netw null xcme

1/1/5 Down No Down 1578 1578 - netw null xcme

1/1/6 Down No Down 1578 1578 - netw null xcme

*A:ALU-1># show port 1/1/3

===============================================================================

Ethernet Interface

===============================================================================

Description : 10/100/Gig Ethernet SFP

Interface : 1/1/3 Oper Speed : N/A

Link-level : Ethernet Config Speed : 1 Gbps

Admin State : up Oper Duplex : N/A

Oper State : down Config Duplex : full

Reason Down : efmOamDown

Physical Link : Yes MTU : 1522

Single Fiber Mode : No Min Frame Length : 64 Bytes

IfIndex : 35749888 Hold time up : 0 seconds

Last State Change : 12/18/2012 15:58:29 Hold time down : 0 seconds

Last Cleared Time : N/A DDM Events : Enabled

Phys State Chng Cnt: 1

......

The EFM OAM protocol can be decoupled from the port state and operational state. In cases where an operator wants to remove the protocol, monitor only the protocol, migrate, or make changes, the ignore-efm-state command can be configured under the config>port>ethernet>efm-oam context.

When the ignore-efm-state command is configured on a port, the protocol behavior is normal. However, any failure in the EFM protocol state (discovery, configuration, time-out, loops, and so on) will not affect the port. Only a protocol warning message will be raised to indicate issues with the protocol. When the ignore-efm-state command is not configured on a port, the default behavior is that the port state will be affected by any EFM OAM protocol fault or clear conditions.

Enabling and disabling this command immediately affects the port state and operating state based on the active configuration, and this is displayed in the show port command output. For example, if the ignore-efm-state command is configured on a port that is exhibiting a protocol error, that protocol error does not affect the port state or operational state and there is no Reason Down code in the output. If the ignore-efm-state command is disabled on a port with an existing EFM OAM protocol error, the port will transition to port state link up, operational state down with reason code efmOamDown.

If the port is a member of a microwave link, the ignore-efm-state command must be enabled before the EFM OAM protocol can be activated. This restriction is required because EFM OAM is not compatible with microwave links.

CRC monitoring

Cyclic redundancy check (CRC) errors typically occur when Ethernet links are compromised due to optical fiber degradation, weak optical signals, bad optical connections, or problems on a third-party networking element. As well, higher-layer OAM options such as EFM and BFD may not detect errors and trigger appropriate alarms and switchovers if the errors are intermittent, since this does not affect the continuous operation of other OAM functions.

CRC error monitoring on Ethernet ports allows degraded links to be alarmed or failed in order to detect network infrastructure issues, trigger necessary maintenance, or switch to redundant paths. This is achieved through monitoring ingress error counts and comparing them to the configured error thresholds. The rate at which CRC errors are detected on a port can trigger two alarm states. Crossing the configured signal degrade (SD) threshold (sd-threshold) causes an event to be logged and an alarm to be raised, which alerts the operator to a potential issue on a link. Crossing the configured signal failure (SF) threshold (sf-threshold) causes the affected port to enter the operationally down state, and causes an event to be logged and an alarm to be raised.

The CRC error rates are calculated as M✕10E-N (that is, M × 10^-N), which is the permitted ratio of errored frames to total frames received. The operator can configure both the threshold (N) and a multiplier (M). If the multiplier is not configured, the default multiplier (1) is used.

For example, setting the SD threshold to 3 results in a signal degrade error rate threshold of 1✕10E-3 (1 errored frame per 1000 frames). Changing the configuration to an SD threshold of 3 and a multiplier of 5 results in a signal degrade error rate threshold of 5✕10E-3 (5 errored frames per 1000 frames). The signal degrade error rate threshold must be lower than the signal failure error rate threshold because it is used to notify the operator that the port is operating in a degraded but not failed condition.

A sliding window (window-size) is used to calculate a statistical average of CRC error statistics collected every second. Each second, the oldest statistics are dropped from the calculation. For example, if the default 10-s sliding window is configured, at the 11th second the oldest second of statistical data is dropped and the 11th second is included. This sliding average is compared against the configured SD and SF thresholds to determine if the error rate over the window exceeds one or both of the thresholds, which will generate an alarm and log event.
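The threshold arithmetic and the sliding window can be combined in a short model. This is a sketch under several assumptions: the window average is taken as total errored frames over total frames in the window, a threshold counts as crossed when the rate is greater than or equal to it, and the SF shutdown and SD self-clearing behavior are not modeled. The class name `CrcMonitor` and its parameter names are hypothetical.

```python
from collections import deque


class CrcMonitor:
    def __init__(self, sd_threshold, sf_threshold,
                 sd_multiplier=1, sf_multiplier=1, window_size=10):
        self.sd_rate = sd_multiplier * 10.0 ** -sd_threshold  # M x 10^-N
        self.sf_rate = sf_multiplier * 10.0 ** -sf_threshold
        if self.sd_rate >= self.sf_rate:
            raise ValueError("SD error rate must be lower than SF error rate")
        self.samples = deque(maxlen=window_size)  # one (errored, total) pair per second

    def add_second(self, errored: int, total: int) -> str:
        """Add one second of counters; the oldest second drops out of a full window."""
        self.samples.append((errored, total))
        errors = sum(e for e, _ in self.samples)
        frames = sum(t for _, t in self.samples)
        rate = errors / frames if frames else 0.0
        if rate >= self.sf_rate:
            return "SF"
        if rate >= self.sd_rate:
            return "SD"
        return "OK"


monitor = CrcMonitor(sd_threshold=3, sf_threshold=2, sd_multiplier=5)
clean = [monitor.add_second(0, 1000) for _ in range(10)]
first_burst = monitor.add_second(60, 1000)   # 60 errors in 10 000 frames
second_burst = monitor.add_second(60, 1000)  # 120 errors in 10 000 frames
```

In this run the SD rate is 5 × 10^-3 and the SF rate is 1 × 10^-2, so the first burst crosses only the SD threshold and the second burst crosses the SF threshold.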

When a port enters the failed condition as a result of crossing an SF threshold, the port is not automatically returned to service. Because the port is operationally down without a physical link, error monitoring stops. The operator can enable the port by using the shutdown and no shutdown port commands or by using other port transition functions such as clearing the MDA (clear mda command) or removing the cable. A port that is down due to crossing an SF threshold can also be re-enabled by changing or disabling the SD threshold. The SD state is self-clearing, and it clears if the error rate drops below 1/10th of the configured SD rate.

Remote loopback

EFM OAM provides a link-layer frame loopback mode, which can be controlled remotely.

To initiate a remote loopback, the local EFM OAM client sends a loopback control OAMPDU by enabling the OAM remote loopback command. After receiving the loopback control OAMPDU, the remote OAM client puts the remote port into local loopback mode.

OAMPDUs are slow protocol frames that contain appropriate control and status information used to monitor, test, and troubleshoot OAM-enabled links.

To exit a remote loopback, the local EFM OAM client sends a loopback control OAMPDU by disabling the OAM remote loopback command. After receiving the loopback control OAMPDU, the remote OAM client puts the port back into normal forwarding mode.

When a port is in local loopback mode (the far end requested an Ethernet OAM loopback), any packets received on the port will be looped back, except for EFM OAMPDUs. No data will be transmitted from the node; only data that is received on the node will be sent back out.

When the node is in remote loopback mode, local data from the CSM is transmitted, but any data received on the node is dropped, except for EFM OAMPDUs.

Remote loopbacks should be used with caution; if dynamic signaling and routing protocols are used, all services go down when a remote loopback is initiated. If only static signaling and routing is used, the services stay up. On the 7705 SAR, the Ethernet port can be configured to accept or reject the remote-loopback command.

802.3ah OAMPDU tunneling and termination for Epipe service

Customers who subscribe to Epipe service may have customer equipment running 802.3ah at both ends. The 7705 SAR can be configured to tunnel EFM OAMPDUs received from a customer device to the other end through the existing network using MPLS or GRE, or to terminate received OAMPDUs at a network or an access Ethernet port.

While tunneling offers the ability to terminate and process the OAM messages at the head-end, termination on the first access port at the cell site can be used to detect immediate failures or can be used to detect port failures in a timelier manner. The user can choose either tunneling or termination, but not both at the same time.

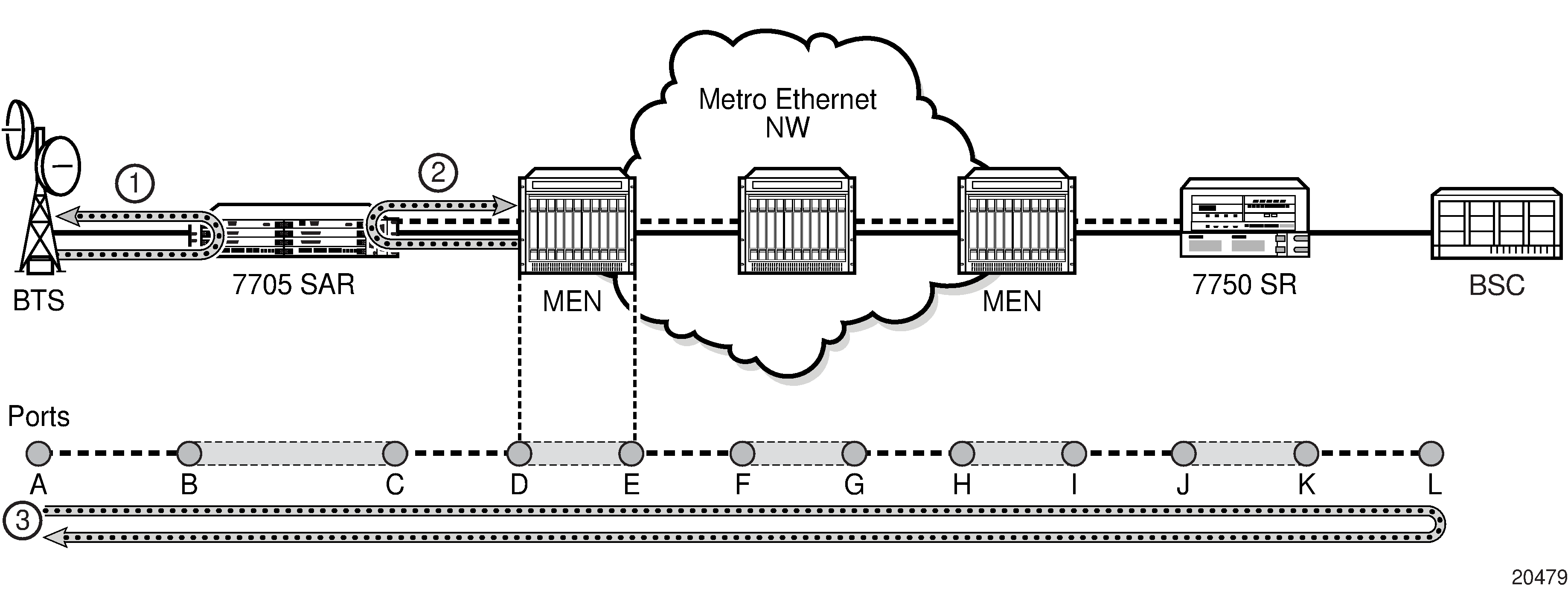

In the following figure, scenario 1 shows the termination of received EFM OAMPDUs from a customer device on an access port, while scenario 2 shows the same termination on a network port. Scenario 3 shows tunneling of EFM OAMPDUs through the associated Ethernet PW. To configure termination (scenario 1), use the config>port>ethernet>efm-oam>no shutdown command.

Dying gasp

Dying gasp is used to notify the far end that EFM-OAM is disabled or shut down on the local port. The dying gasp flag is set on the OAMPDUs that are sent to the peer. The far end can then take immediate action and inform upper layers that EFM-OAM is down on the port.

When a dying gasp is received from a peer, the node logs the event and generates an SNMP trap to notify the operator.

Ethernet loopbacks

The following loopbacks are supported on Ethernet ports:

timed network line loopback

timed and untimed access line loopbacks

timed and untimed access internal loopbacks

persistent access line loopback

persistent access internal loopback

MAC address swapping

CFM loopback on network and access ports

CFM loopback on ring ports and v-port

Line and internal Ethernet loopbacks

A line loopback loops frames received on the corresponding port back toward the transmit direction. Line loopbacks are supported on ports configured for access or network mode.

Similarly, a line loopback with MAC addressing loops frames received on the corresponding port back toward the transmit direction, and swaps the source and destination MAC addresses before transmission. See MAC swapping for more information.

An internal loopback loops frames from the local router back to the framer. This is usually referred to as an equipment loopback. The transmit signal is looped back and received by the interface. Internal loopbacks are supported on ports configured in access mode.

If a loopback is enabled on a port, the port mode cannot be changed until the loopback has been disabled.

A port can support only one loopback at a time. If a loopback exists on a port, it must be disabled or the timer must expire before another loopback can be configured on the same port. EFM-OAM cannot be enabled on a port that has an Ethernet loopback enabled on it. Similarly, an Ethernet loopback cannot be enabled on a port that has EFM-OAM enabled on it.

When an internal loopback is enabled on a port, autonegotiation is turned off silently. This is to allow an internal loopback when the operational status of a port is down. Any user modification to autonegotiation on a port configured with an internal Ethernet loopback will not take effect until the loopback is disabled.

The loopback timer can be configured from 30 s to 86400 s. All non-zero timed loopbacks are turned off automatically under the following conditions: an adapter card reset, an activity switch, or timer expiry. Line or internal loopback timers can also be configured as a latched loopback by setting the timer to 0 s, or as a persistent loopback with the persistent keyword. Latched and persistent loopbacks are enabled indefinitely until turned off by the user. Latched loopbacks survive adapter card resets and activity switches, but are lost if there is a system restart. Persistent loopbacks survive adapter card resets and activity switches and can survive a system restart if the admin save or admin save detail command was executed before the restart. Latched loopbacks (untimed) and persistent loopbacks can be enabled only on Ethernet access ports.
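
The timer rules above can be summarized in a small model. This is a sketch only, not CLI syntax; the names are hypothetical, and treating a timed loopback as lost on a system restart is an assumption (the text states this explicitly only for latched loopbacks).

```python
from dataclasses import dataclass
from typing import Optional

MIN_TIMER_S = 30
MAX_TIMER_S = 86400
EVENTS = ("card_reset", "activity_switch", "system_restart")


@dataclass(frozen=True)
class Loopback:
    kind: str          # "timed", "latched", or "persistent"
    timer_s: int = 0   # only meaningful for timed loopbacks


def make_loopback(timer_s: Optional[int] = None, persistent: bool = False) -> Loopback:
    """Classify a loopback request by its timer value or persistent keyword."""
    if persistent:
        return Loopback("persistent")
    if timer_s == 0:
        return Loopback("latched")
    if timer_s is not None and MIN_TIMER_S <= timer_s <= MAX_TIMER_S:
        return Loopback("timed", timer_s)
    raise ValueError("timer must be 0 or between 30 and 86400 seconds")


def survives(loopback: Loopback, event: str, config_saved: bool = False) -> bool:
    """Return True if the loopback remains enabled after the given event."""
    if event not in EVENTS:
        raise ValueError(f"unknown event: {event}")
    if loopback.kind == "timed":
        return False  # assumption: also lost on system restart
    if event == "system_restart":
        # Only a persistent loopback saved with admin save survives a restart.
        return loopback.kind == "persistent" and config_saved
    return True


print(survives(make_loopback(0), "card_reset"))
print(survives(make_loopback(persistent=True), "system_restart", config_saved=True))
```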

Persistent loopbacks are the only Ethernet loopbacks saved to the database by the admin save and admin save detail commands.

An Ethernet port loopback may interact with other features. See Interaction of Ethernet port loopback with other features for more information.

MAC swapping

Typically, an Ethernet port loopback only echoes back received frames. That is, the received source and destination MAC addresses are not swapped. However, not all Ethernet equipment supports echo mode, where the original sender of the frame must support receiving its own port MAC address as the destination MAC address.

The MAC swapping feature on the 7705 SAR is an optional feature that will swap the received destination MAC address with the source MAC address when an Ethernet port is in loopback mode. After the swap, the FCS is recalculated to ensure the validity of the Ethernet frame and to ensure that the frame is not dropped by the original sender due to a CRC error.
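
The swap-and-recalculate step can be illustrated on a raw frame. This is a minimal sketch, not the router's implementation: it assumes the frame is held as bytes with the 4-byte FCS as its trailer, stored least-significant byte first, and it relies on the Ethernet FCS using the same CRC-32 that Python's zlib.crc32 computes. The function name is hypothetical.

```python
import struct
import zlib


def swap_mac_and_refresh_fcs(frame: bytes) -> bytes:
    """Swap destination and source MAC addresses, then recompute the FCS."""
    # 6 (dst) + 6 (src) + 2 (Ethertype) + 4 (FCS) is the smallest layout handled.
    if len(frame) < 18:
        raise ValueError("frame too short")
    dst, src, rest = frame[0:6], frame[6:12], frame[12:-4]
    body = src + dst + rest
    # The old FCS covered the original address order, so it is no longer valid.
    fcs = struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)
    return body + fcs


dst = bytes.fromhex("0011223344aa")
src = bytes.fromhex("0011223344bb")
body = dst + src + b"\x08\x00" + b"payload" * 8
frame = body + struct.pack("<I", zlib.crc32(body) & 0xFFFFFFFF)
looped = swap_mac_and_refresh_fcs(frame)
print(looped[0:6].hex(), looped[6:12].hex())
```

Because the FCS covers the address fields, a looped frame that kept its original FCS after the swap would fail the CRC check at the original sender.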

Interaction of Ethernet port loopback with other features

EFM OAM and line loopback are mutually exclusive. If one of these functions is enabled, it must be disabled before the other can be used.

A line loopback takes precedence over dot1x behavior. That is, if the port is already dot1x-authenticated, it remains so. If it is not, EAP authentication fails.

Ethernet port-layer line loopback and Ethernet port-layer internal loopback can be enabled on the same port with the down-when-looped feature. EFM OAM cannot be enabled on the same port with the down-when-looped feature. For more information, see Ethernet port down-when-looped.

CFM loopbacks for OAM on Ethernet ports

This section contains information about the following topics:

CFM loopback overview

Connectivity fault management (CFM) loopback support for loopback messages (LBMs) on Ethernet ports allows operators to run standards-based Layer 1 and Layer 2 OAM tests on ports receiving unlabeled packets.

The 7705 SAR supports CFM MEPs associated with different endpoints (that is, Up and Down SAP MEPs, Up and Down spoke SDP MEPs, Up and Down mesh SDP MEPs, and network interface facility Down MEPs). In addition, for traffic received from an uplink (network ingress), the 7705 SAR supports CFM LBM for both labeled and unlabeled packets. CFM loopbacks are applied to the Ethernet port.

See the 7705 SAR OAM and Diagnostics Guide, ‟Ethernet OAM Capabilities”, for information about CFM MEPs.

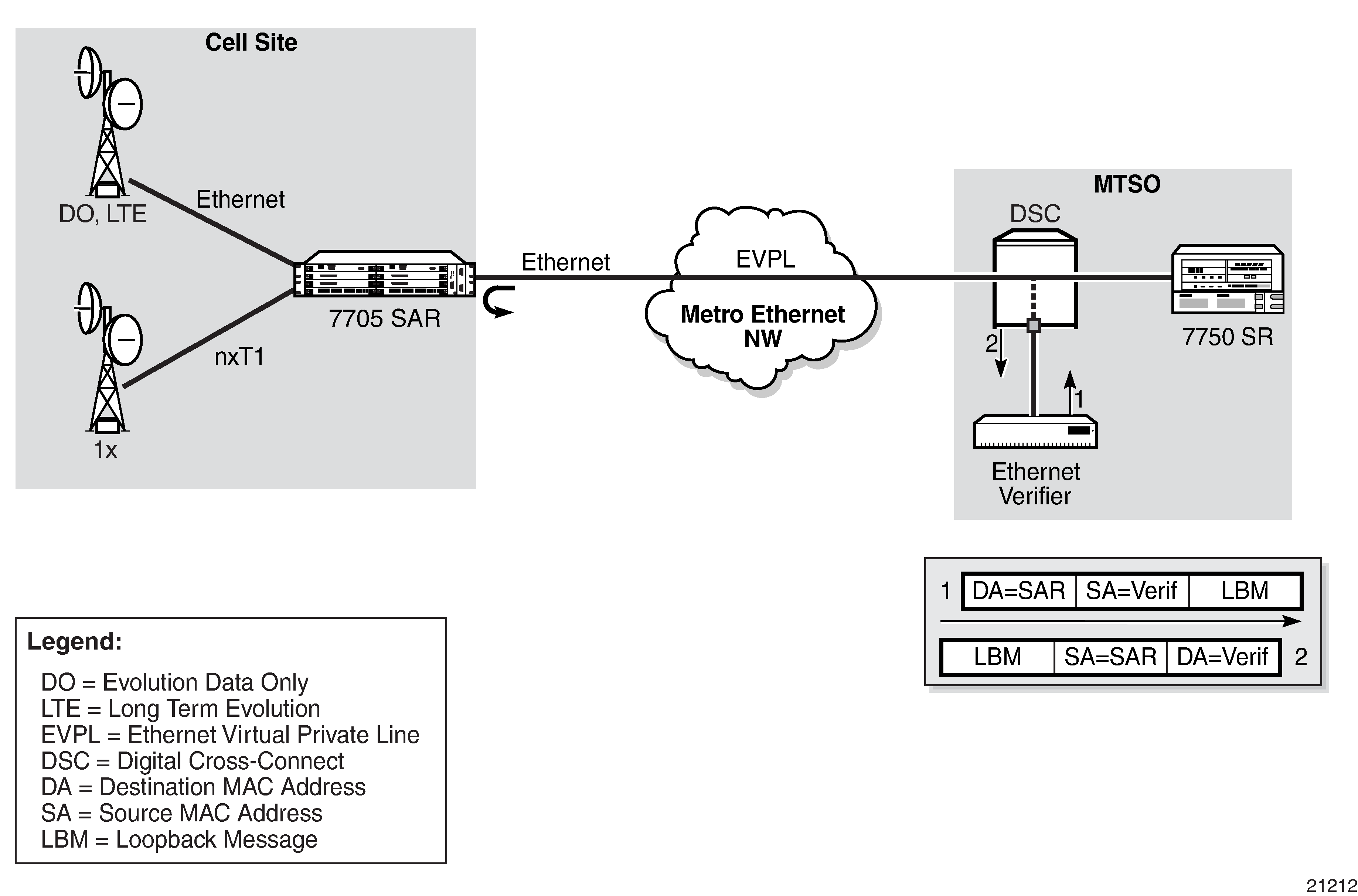

The following figure shows an application where an operator leases facilities from a transport network provider in order to transport traffic from a cell site to their MTSO. The operator leases a certain amount of bandwidth between the two endpoints (the cell site and the MTSO) from the transport provider, who offers Ethernet Virtual Private Line (EVPL) or Ethernet Private Line (EPL) PTP service. Before the operator offers services on the leased bandwidth, the operator runs OAM tests to verify the SLA. Typically, the transport provider (MEN provider) requires that the OAM tests be run in the direction of (toward) the first Ethernet port that is connected to the transport network. This is done to eliminate the potential effect of queuing, delay, and jitter that may be introduced by an SDP or SAP.

The figure shows an Ethernet verifier at the MTSO that is directly connected to the transport network (in front of the 7750 SR). Therefore, the Ethernet OAM frames are not label-encapsulated. Because Ethernet verifiers do not support label operations and the transport provider mandates that OAM tests be run between the two hand-off Ethernet ports, the verifier cannot be relocated behind the 7750 SR node at the MTSO. Therefore, CFM loopback frames received are not MPLS-encapsulated, but are simple Ethernet frames where the type is set to CFM (dot1ag or Y.1731).

CFM loopback mechanics

The following are important facts to consider when working with CFM loopbacks:

CFM loopbacks can be enabled on a per-port basis, and:

the port can be in access or network mode

when enabled on a port, all received LBM frames are processed, regardless of the VLAN and the service that the VLAN or SAP is bound to

there is no associated MEP creation involved with this feature; therefore, no domain, association, or similar checks are performed on the received frame

upon finding a destination address MAC match, the LBM frame is sent to the CFM process

CFM loopback support on a physical ring port on the 2-port 10GigE (Ethernet) Adapter card or 2-port 10GigE (Ethernet) module differs from other Ethernet ports. For these ports, cfm-loopback is configured, optionally, using dot1p and match-vlan to create a list of up to 16 VLANs. The null VLAN is always applied. The CFM loopback message will be processed if it does not contain a VLAN header or if it contains a VLAN header with a VLAN ID that matches one in the configured match-vlan list.

received LBM frames undergo no queuing or scheduling in the ingress direction

at egress, loopback reply (LBR) frames are stored in their own queue; that is, a separate new queue is added exclusively for LBR frames

users can configure the way a response frame is treated among other user traffic stored in network queues; the configuration options are high-priority, low-priority, or dot1p, where dot1p applies only to physical ring ports

for network egress or access egress, where 4-priority scheduling is enabled:

high-priority – either cir = port_speed, which applies to all frames that are scheduled via an expedited in-profile scheduler, or RR for all other (network egress queue) frames that reside in expedited queues and are in an in-profile state

low-priority – either cir = 0, pir = port_speed, which applies to all frames that are scheduled via a best effort out-of-profile scheduler, or RR for all other frames that reside in best-effort queues and are in an out-of-profile state

for the 8-port Gigabit Ethernet Adapter card, the 10-port 1GigE/1-port 10GigE X-Adapter card, and the v-port on the 2-port 10GigE (Ethernet) Adapter card and 2-port 10GigE (Ethernet) module, for network egress, where 16-priority scheduling is enabled:

high-priority – has higher priority than any user frames

low-priority – has lower priority than any user frames

for the physical ring ports on the 2-port 10GigE (Ethernet) Adapter card and 2-port 10GigE (Ethernet) module, which can only operate as network egress, the priority of the LBR frame is derived from the dot1p setting of the received LBM frame. Based on the assigned ring-type network queue policy, dot1p-to-queue mapping is handled using the same mapping rule that applies to all other user frames.

the above queue parameters and scheduler mappings are all preconfigured and cannot be altered. The desired QoS treatment is selected by enabling the CFM loopback and specifying high-priority, low-priority, or dot1p.
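
The VLAN matching rule for physical ring ports described above can be sketched as a simple predicate. This is an illustration only; the function name is hypothetical, and representing an untagged frame as None is an assumption of the sketch.

```python
from typing import Iterable, Optional

MAX_MATCH_VLANS = 16


def ring_port_accepts_lbm(vlan_id: Optional[int], match_vlans: Iterable[int]) -> bool:
    """Decide whether a received LBM is processed on a physical ring port.

    vlan_id is None for a frame without a VLAN header.
    """
    vlans = set(match_vlans)
    if len(vlans) > MAX_MATCH_VLANS:
        raise ValueError("the match-vlan list holds at most 16 VLANs")
    if vlan_id is None:
        return True  # the null VLAN is always applied
    return vlan_id in vlans


print(ring_port_accepts_lbm(None, []))
print(ring_port_accepts_lbm(100, [100, 200]))
print(ring_port_accepts_lbm(300, [100, 200]))
```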

Ethernet port down-when-looped

Newly provisioned circuits are often put into loopback with a physical loopback cable for testing and to ensure the ports meet the SLA. If loopbacks are not cleared, or physically removed, by the operator when the testing is completed, they can adversely affect the performance of all other SDPs and customer interfaces (SAPs). This is especially problematic for point-to-multipoint services such as VPLS, since Ethernet does not support TTL, which is essential in terminating loops.

The down-when-looped feature is used on the 7705 SAR to detect loops within the network and to ensure continued operation of other ports. When the down-when-looped feature is activated, a keepalive loop PDU is transmitted periodically toward the network. The Ethernet port then listens for returning keepalive loop PDUs. In unicast mode, a loop is detected if any of the received PDUs have an Ethertype value of 0x9000, which indicates a loopback (Configuration Test Protocol), and the source (SRC) and destination (DST) MAC addresses are identical to the MAC address of the Ethernet port. In broadcast mode, a loop is detected if any of the received PDUs have an Ethertype value of 0x9000 and the SRC MAC address matches the MAC address of the Ethernet port and the DST MAC address matches the broadcast MAC address. When a loop is detected, the Ethernet port is immediately brought down.
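
The unicast and broadcast detection rules can be expressed as a single predicate. This is a minimal sketch of the matching logic only, using the Configuration Test Protocol Ethertype 0x9000; the function name and the string values for the mode are hypothetical.

```python
ETHERTYPE_CTP = 0x9000  # Configuration Test Protocol (loopback)
BROADCAST_MAC = bytes([0xFF] * 6)


def is_loop_detected(ethertype: int, src_mac: bytes, dst_mac: bytes,
                     port_mac: bytes, mode: str) -> bool:
    """Return True if a received PDU indicates a loop on this port."""
    if ethertype != ETHERTYPE_CTP:
        return False
    if src_mac != port_mac:
        return False  # both modes require the port's own MAC as the source
    if mode == "unicast":
        return dst_mac == port_mac
    if mode == "broadcast":
        return dst_mac == BROADCAST_MAC
    raise ValueError(f"unknown mode: {mode}")


port = bytes.fromhex("001122334455")
print(is_loop_detected(0x9000, port, port, port, "unicast"))
print(is_loop_detected(0x9000, port, BROADCAST_MAC, port, "broadcast"))
```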

Ethernet port-layer line loopbacks and the down-when-looped feature can be enabled on the same port. The keepalive loop PDU is still transmitted; however, if the port receives its own keepalive loop PDU, the keepalive PDU is extracted and processed to avoid infinite looping.

Ethernet port-layer internal loopbacks and the down-when-looped feature can also be enabled on the same port. When the keepalive PDU is internally looped back, it is extracted and processed as usual. If the SRC MAC address matches the port MAC address, the port is disabled due to detection of a loop. If the SRC MAC address is a broadcast MAC address because the swap-src-dst-mac option in the loopback command is enabled, then there is no change to port status and it remains operationally up.

EFM OAM and down-when-looped cannot be enabled on the same port.

Ethernet ring (adapter card and module)

The 2-port 10GigE (Ethernet) Adapter card can be installed in a 7705 SAR-8 Shelf V2 or 7705 SAR-18 chassis and the 2-port 10GigE (Ethernet) module can be installed in a 7705 SAR-M to connect to and from access rings carrying a high concentration of traffic. For the maximum number of cards or modules supported per chassis, see Maximum number of cards/modules supported in each chassis.

A number of 7705 SAR nodes in a ring typically aggregate traffic from customer sites, map the traffic to a service, and connect to an SR node. The SR node acts as a gateway point out of the ring. A 10GigE ring allows for higher bandwidth services and aggregation on a per-7705 SAR basis. The 2-port 10GigE (Ethernet) Adapter card/module increases the capacity of backhaul networks by providing 10GigE support on the aggregation nodes, thus increasing the port capacity.

In a deployment of a 2-port 10GigE (Ethernet) Adapter card/module, each 7705 SAR node in the ring is connected to the east and west side of the ring over two different 10GigE ports. If 10GigE is the main uplink, the following are required for redundancy:

two cards per 7705 SAR-8 Shelf V2

two cards per 7705 SAR-18

two 7705 SAR-M nodes, each equipped with 2-port 10GigE (Ethernet) module

With two cards per 7705 SAR-8 Shelf V2 or 7705 SAR-18 node, for example, east and west links of the ring can be terminated on two different adapter cards, reducing the impact of potential hardware failure.

The physical ports on the 2-port 10GigE (Ethernet) Adapter card/module boot up in network mode and this network setting cannot be disabled or altered. At boot-up, the MAC address of the virtual port (v-port) is programmed automatically for efficiency and security reasons.

There is native built-in Ethernet bridging among the ring ports and the v-port. Bridging destinations for traffic received from one of the ring ports include the 10GigE ring port and the network interfaces on the v-port. Bridging destinations for traffic received from the v-port include one or both of the 10GigE ring ports.

With bridging, broadcast and multicast frames are forwarded over all ports except the received one. Unknown frames are forwarded to both 10GigE ports if received from the v-port or forwarded to the other 10GigE port only if received from one of the 10GigE ports (the local v-port MAC address is always programmed).

The bridge traffic of the physical 10GigE ports is based on learned and programmed MAC addresses.
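
The bridging rules above can be sketched as a forwarding function. This is an illustrative model only; the port labels, the frame classification strings, and the choice to drop a known-unicast frame whose learned port equals the ingress port are assumptions of the sketch.

```python
from typing import List, Optional

RING_PORTS = ("ring-1", "ring-2")  # hypothetical labels for the two 10GigE ports
V_PORT = "v-port"
ALL_PORTS = RING_PORTS + (V_PORT,)


def egress_ports(ingress: str, frame_kind: str,
                 learned_port: Optional[str] = None) -> List[str]:
    """Return the ports a frame is bridged to.

    frame_kind is 'broadcast', 'multicast', 'unknown', or 'known'.
    """
    if ingress not in ALL_PORTS:
        raise ValueError(f"unknown port: {ingress}")
    if frame_kind in ("broadcast", "multicast"):
        # Forwarded over all ports except the one the frame arrived on.
        return [p for p in ALL_PORTS if p != ingress]
    if frame_kind == "unknown":
        # From the v-port: both ring ports. From a ring port: the other ring port only.
        return [p for p in RING_PORTS if p != ingress]
    if frame_kind == "known":
        # Learned or programmed MAC address; assumed dropped if it points back.
        return [learned_port] if learned_port and learned_port != ingress else []
    raise ValueError(f"unknown frame kind: {frame_kind}")


print(egress_ports("v-port", "unknown"))
print(egress_ports("ring-1", "unknown"))
print(egress_ports("ring-1", "broadcast"))
```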

MTU configuration guidelines

This section contains information about the following topics:

MTU configuration overview

Because of the services overhead (that is, pseudowire/VLL, MPLS tunnel, dot1q/qinq and dot1p overhead), it is crucial that configurable variable frame size be supported for end-to-end service delivery.

Observe the following general rules when planning your service and physical maximum transmission unit (MTU) configurations:

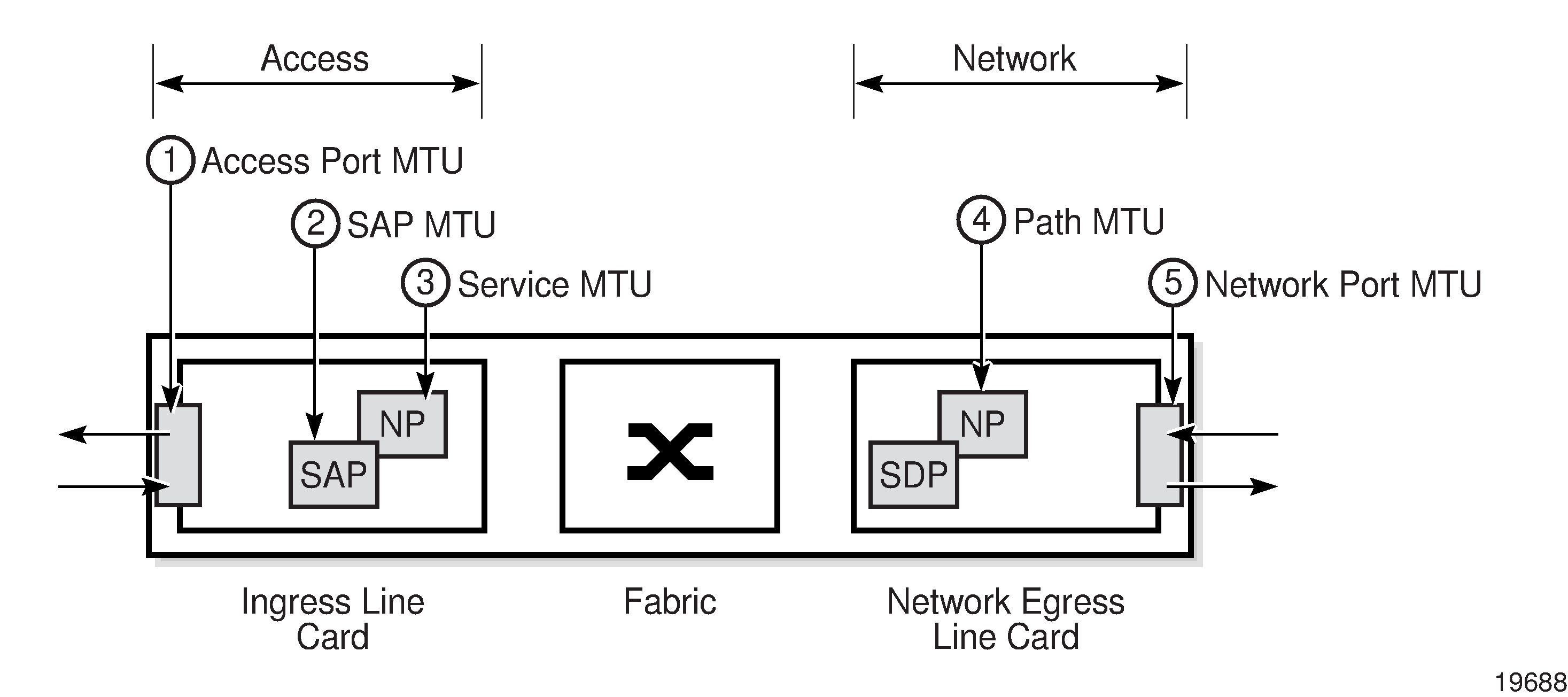

The 7705 SAR must contend with MTU limitations at many service points. The physical (access and network) port, service, and SDP MTU values must be individually defined. MTU points on the 7705 SAR identifies the various MTU points on the 7705 SAR.

The ports that will be designated as network ports intended to carry service traffic must be identified.

MTU values should not be modified frequently.

MTU values must conform to both of the following conditions:

the service MTU must be less than or equal to the SDP path MTU

the service MTU must be less than or equal to the access port (SAP) MTU

When the allow-fragmentation command is enabled on an SDP, the current MTU algorithm is overwritten with the configured path MTU. The administrative MTU and operational MTU both show the specified MTU value. If the path MTU is not configured or available, the operational MTU is set to 2000 bytes, and the administrative MTU displays a value of 0. When allow-fragmentation is disabled, the operational MTU reverts to the previous value.
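
The two MTU conditions and the allow-fragmentation behavior can be checked with a short planning helper. This is a sketch under the rules stated above, not a reproduction of the router's MTU algorithm; the function names are hypothetical.

```python
from typing import Optional, Tuple

FALLBACK_OPER_MTU = 2000  # used when no path MTU is configured or available


def service_mtu_is_valid(service_mtu: int, sdp_path_mtu: int, sap_port_mtu: int) -> bool:
    """The service MTU must not exceed the SDP path MTU or the access port (SAP) MTU."""
    return service_mtu <= sdp_path_mtu and service_mtu <= sap_port_mtu


def sdp_mtu_with_allow_fragmentation(path_mtu: Optional[int]) -> Tuple[int, int]:
    """Return (administrative MTU, operational MTU) when allow-fragmentation is enabled."""
    if path_mtu:
        return (path_mtu, path_mtu)
    return (0, FALLBACK_OPER_MTU)


print(service_mtu_is_valid(1514, sdp_path_mtu=1550, sap_port_mtu=1514))
print(sdp_mtu_with_allow_fragmentation(None))
```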

For more information, see the ‟MTU Settings” section in the 7705 SAR Services Guide. To configure various MTU points, use the following commands:

port MTUs are set with the mtu command, under the config>port context, where the port type can be Ethernet, TDM, serial, or SONET/SDH

service MTUs are set in the appropriate config>service context

path MTUs are set with the path-mtu command under the config>service>sdp context

Frame size configuration is supported for an Ethernet port configured as an access or a network port.

For an Ethernet adapter card that does not support jumbo frames, all frames received at an ingress network or access port are policed against 1576 bytes (1572 + 4 bytes of FCS), regardless of the port MTU. Any frames longer than 1576 bytes are discarded and the ‟Too Long Frame” and ‟Error Stats” counters in the port statistics display are incremented. See Jumbo frames for more information.

At network egress, Ethernet frames are policed against the configured port MTU. If the frame exceeds the configured port MTU, the ‟Interface Out Discards” counter in the port statistics is incremented.

When the network group encryption (NGE) feature is used, additional bytes due to NGE packet overhead must be considered. See the ‟NGE Packet Overhead and MTU Considerations” section in the 7705 SAR Services Guide for more information.

IP fragmentation

IP fragmentation is used to fragment a packet that is larger than the MTU of the egress interface, so that the packet can be transported over that interface.

For IPv4, the router fragments or discards the IP packets based on whether the DF (Do not fragment) bit is set in the IP header. If the packet that exceeds the MTU cannot be fragmented, the packet is discarded and an ICMP message ‟Fragmentation Needed and Don’t Fragment was Set” is sent back to the source IP address.

For IPv6, the router cannot fragment the packet and must discard it. An ICMP message ‟Packet too big” is sent back to the source node.
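
The IPv4 and IPv6 handling of an oversized packet can be summarized as a decision function. This is a minimal sketch of the rules described above; the enum and function names are hypothetical.

```python
from enum import Enum


class Action(Enum):
    FORWARD = "forward unchanged"
    FRAGMENT = "fragment and forward"
    DISCARD_FRAG_NEEDED = "discard; ICMP Fragmentation Needed and Don't Fragment was Set"
    DISCARD_TOO_BIG = "discard; ICMP Packet too big"


def handle_packet(ip_version: int, packet_len: int, egress_mtu: int,
                  df_bit: bool = False) -> Action:
    """Decide what a transit router does with a packet at the egress interface."""
    if packet_len <= egress_mtu:
        return Action.FORWARD
    if ip_version == 4:
        # The DF bit decides between fragmenting and discarding.
        return Action.DISCARD_FRAG_NEEDED if df_bit else Action.FRAGMENT
    if ip_version == 6:
        # Routers do not fragment IPv6 packets in transit.
        return Action.DISCARD_TOO_BIG
    raise ValueError("ip_version must be 4 or 6")


print(handle_packet(4, 1600, 1500, df_bit=True).value)
print(handle_packet(6, 1600, 1500).value)
```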

As a source of self-generated traffic, the 7705 SAR can perform packet fragmentation.

Fragmentation can be enabled for GRE tunnels. See the ‟GRE Fragmentation” section in the 7705 SAR Services Guide for more information.

Jumbo frames

Jumbo frames are supported on all Ethernet ports.

The maximum MTU size for a jumbo frame on the 7705 SAR is 9732 bytes. The maximum MTU for a jumbo frame may vary depending on the Ethernet encapsulation type, as shown in the following table. The calculations of the other MTU values (service MTU, path MTU, and so on) are based on the port MTU. The values in the table are also maximum receive unit (MRU) values. MTU values are user-configured values. The MRU value is the maximum MTU value that a user can configure on an adapter card that supports jumbo frames.

| Encapsulation | Maximum MTU (bytes) |
|---|---|
| Null | 9724 |
| Dot1q | 9728 |
| QinQ | 9732 |

For an Ethernet adapter card, all frames received at an ingress network or access port are policed against the MRU for the ingress adapter card, regardless of the configured MTU. Any frames larger than the MRU are discarded and the ‟Too Long Frame” and ‟Error Stats” counters in the port statistics display are incremented.

At network egress, frames are checked against the configured port MTU. If the frame exceeds the configured port MTU and the DF bit is set, then the ‟MTU Exceeded” discard counter will be incremented on the ingress IP interface statistics display, or on the MPLS interface statistics display if the packet is an MPLS packet.

For example, on adapter cards that do not support an MTU greater than 2106 bytes, fragmentation is not supported for frames greater than the maximum supported MTU for that card (that is, 2106 bytes). If the maximum supported MTU is exceeded, the following occurs:

An appropriate ICMP reply message (Destination Unreachable) is generated by the 7705 SAR. The router ensures that the ICMP-generated message cannot be used as a DoS attack (that is, the router paces the ICMP message).

The appropriate statistics are incremented.

Jumbo frames offer better utilization of an Ethernet link: because the per-frame overhead is constant, packing more payload into each Ethernet frame reduces the ratio of overhead to payload.

From the traffic management perspective, large payloads may cause long delays, so a balance between link utilization and delay must be found. For example, for ATM VLLs, concatenating a large number of ATM cells when the MTU is set to a very high value could generate a 9-kB ATM VLL frame. Transmitting a frame that large would take more than 23 ms on a 3-Mb/s policed Ethernet uplink.
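
The delay figure in this example follows from simple serialization arithmetic. The sketch below assumes that 9 kB means 9000 bytes and ignores preamble, interframe gap, and queuing; the function name is hypothetical.

```python
def serialization_delay_ms(frame_bytes: int, link_rate_bps: int) -> float:
    """Time to clock a frame onto a link, in milliseconds."""
    return frame_bytes * 8 * 1000 / link_rate_bps


# A 9000-byte frame on a 3-Mb/s policed uplink.
print(serialization_delay_ms(9000, 3_000_000))  # 24.0
```

A 1514-byte frame on the same uplink takes about 4 ms, which shows how much additional delay a single jumbo frame can add ahead of delay-sensitive traffic.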

Behavior of adapter cards not supporting jumbo frames

The 7705 SAR-8 Shelf V2 and the 7705 SAR-18 do not support ingress fragmentation, and this also applies to jumbo frames. Therefore, any jumbo frame packet that gets routed to an adapter card that does not have Ethernet ports and therefore does not support jumbo frame MTU (for example, a 16-port T1/E1 ASAP Adapter card or a 4-port OC3/STM1 / 1-port OC12/STM4 Adapter card) is discarded if the packet size is greater than the TDM port’s maximum supported MTU. If the maximum supported MTU is exceeded, the following occurs:

An ICMP reply message (Destination Unreachable) is generated by the 7705 SAR. The router ensures that the ICMP-generated message cannot be used as a DoS attack (that is, the router paces the ICMP message).

The port statistics show IP or MPLS Interface MTU discards, for IP or MPLS traffic, respectively. MTU Exceeded Packets and Bytes counters exist separately for IPv4/6 and MPLS under the IP interface hierarchy for all discarded packets where ICMP Error messages are not generated.

For example, if a packet arrives on an 8-port Gigabit Ethernet Adapter card and is to be forwarded to a 16-port T1/E1 ASAP Adapter card with a maximum port MTU of 2090 bytes and a channel group configured for PPP with the port MTU of 1000 bytes, the following may occur:

If the arriving packet is 800 bytes, forward the packet.

If the arriving packet is 1400 bytes, forward the packet, which will be fragmented by the egress adapter card.

If the arriving packet is fragmented and the fragments are 800 bytes, forward the packet.

If the arriving packet is 2500 bytes, send an ICMP error message (because the egress adapter card has a maximum port MTU of 2090 bytes).

If the arriving packet is fragmented and the fragment size is 2500 bytes, there is an ICMP error.
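
The cases listed above reduce to two size comparisons, applied to each arriving packet or fragment. This is a sketch of that example only, using its values (2090 and 1000 bytes) as defaults; the function name and return strings are hypothetical, and the DF bit is not modeled because the example does not mention it.

```python
def forward_decision(size_bytes: int, card_max_mtu: int = 2090,
                     port_mtu: int = 1000) -> str:
    """Classify an arriving packet (or fragment) destined for a TDM adapter card."""
    if size_bytes > card_max_mtu:
        # Larger than the egress card can accept: discard and signal the source.
        return "icmp-error"
    if size_bytes > port_mtu:
        return "forward; fragmented by egress adapter card"
    return "forward"


for size in (800, 1400, 2500):
    print(size, forward_decision(size))
```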

Jumbo frame behavior on the fixed platforms

The 7705 SAR-A, 7705 SAR-Ax, 7705 SAR-H, 7705 SAR-Hc, 7705 SAR-M, 7705 SAR-Wx, and 7705 SAR-X are able to fragment packets between Ethernet ports (which support jumbo frames) and TDM ports (which do not support jumbo frames). In this case, when a packet arrives from a port that supports jumbo frames and is routed to a port that does not support jumbo frames (that is, a TDM port), the packet is fragmented to the port MTU of the TDM port.

For example, if a packet arrives on a 7705 SAR-A and is to be forwarded to a TDM port that has a maximum port MTU of 2090 bytes and a channel group configured for PPP with the port MTU of 1000 bytes (PPP port MTU), the following may occur:

If the arriving packet is 800 bytes, forward the packet.

If the arriving packet is 1400 bytes and the DF bit is 0, forward the packet, which will be fragmented to the PPP port MTU size.

If the arriving packet is 2500 bytes and the DF bit is 0, forward the packet, which will be fragmented to the PPP port MTU size.

Multicast support for jumbo frames

Jumbo frames are supported in a multicast configuration as long as all adapter cards in the multicast group support jumbo frames. If an adapter card that does not support jumbo frames is present in the multicast group, the replicated multicast jumbo frame packet will be discarded by the fabric because of an MRU error of the fabric port (Rx).

The multicast group replicates the jumbo frame for all adapter cards, regardless of whether they support jumbo frames, only when forwarding the packet through the fabric. The replicated jumbo frame packet is discarded on adapter cards that do not support jumbo frames.

PMC jumbo frame support

For the Packet Microwave Adapter card (PMC), ensure that the microwave hardware installed with the card supports the corresponding jumbo frame MTU. If the microwave hardware does not support the jumbo frame MTU, it is recommended that the MTU of the PMC port be set to the maximum frame size that is supported by the microwave hardware.

Default port MTU values

The following table displays the default and maximum port MTU values that are dependent upon the port type, mode, and encapsulation type.

| Port type | Mode | Encap type | Default (bytes) | Max MTU (bytes) |
|---|---|---|---|---|
| 10/100 Ethernet ¹ | Access/Network | null | 1514 | 9724 ² |
| | | dot1q | 1518 | 9728 ² |
| | | qinq ³ | 1522 (access only) | 9732 (access only) ² |
| GigE SFP ¹ and 10-GigE SFP+ | Access/Network | null | 1514 (access), 1572 (network) | 9724 (access and network) |
| | | dot1q | 1518 (access), 1572 (network) | 9728 (access and network) |
| | | qinq ³ | 1522 (access only) | 9732 (access only) |
| Ring port | Network | null | 9728 (fixed) | 9728 (fixed) |
| v-port (on Ring adapter card) | Network | null | 1572 | 9724 |
| | | dot1q | 1572 | 9728 |
| TDM (PW) | Access | cem | 1514 | 1514 |
| TDM (ATM PW) | Access | atm | 1524 | 1524 |
| TDM (FR PW) | Access | frame-relay | 1514 | 2090 |
| TDM (HDLC PW) | Access | hdlc | 1514 | 2090 |
| TDM (IW PW) | Access | cisco-hdlc | 1514 | 2090 |
| TDM (PPP/MLPPP) | Access | ipcp | 1502 | 2090 |
| | Network | ppp-auto | 1572 | 2090 |
| Serial V.35 or X.21 (FR PW) ⁴ | Access | frame-relay | 1514 | 2090 |
| Serial V.35 or X.21 (HDLC PW) ⁴ | Access | hdlc | 1514 | 2090 |
| Serial V.35 or X.21 (IW PW) ⁴ | Access | frame-relay | 1514 | 2090 |
| | | ipcp | 1502 | 2090 |
| | | cisco-hdlc | 1514 | 2090 |
| SONET/SDH | Access | atm | 1524 | 1524 |
| SONET/SDH | Network | ppp-auto | 1572 | 2090 |

Notes:

1. The maximum MTU value is supported only on cards that have buffer chaining enabled.

2. On the Packet Microwave Adapter card, the MWA ports support 4 bytes less than the Ethernet ports. MWA ports support a maximum MTU of 9720 bytes (null) or 9724 bytes (dot1q). MWA ports do not support QinQ.

3. QinQ is supported only on access ports.

4. For X.21 serial ports at super-rate speeds.

For more information, see the ‟MTU Settings” section in the 7705 SAR Services Guide.

LAG

This section contains information about the following topics:

LAG overview

The 7705 SAR supports link aggregation groups (LAGs) based on the IEEE 802.1AX standard (formerly 802.3ad). Link aggregation provides:

increased bandwidth by combining multiple links into one logical link (in active/active mode)

load sharing by distributing traffic across multiple links (in active/active mode)

redundancy and increased resiliency between devices by having a standby link to act as backup if the active link fails (in active/standby mode)

In the 7705 SAR implementation, all links must operate at the same speed.

Packet sequencing must be maintained for any given session. The hashing algorithm deployed by Nokia routers is based on the type of traffic transported to ensure that all traffic in a flow remains in sequence while providing effective load sharing across the links in the LAG. See LAG and ECMP hashing for more information.

LAGs must be statically configured or formed dynamically with Link Aggregation Control Protocol (LACP). See LACP and active/standby operation for information about LACP.

All Ethernet-based supported services can benefit from LAG, including:

network interfaces and SDPs

spoke SDPs, mesh SDPs, and EVPN endpoints

IES and VPRN interfaces and SAPs

Ethernet and IP pseudowire SAPs

routed VPLS (r-VPLS) SAPs

LAGs are supported on access, network, and hybrid ports. A LAG can be in active/active mode or in active/standby mode for access, network, or hybrid ports. Active/standby mode is a subset of active/active mode if subgroups are enabled.

LAGs are supported on access ports on the following:

8-port Gigabit Ethernet Adapter card

10-port 1GigE/1-port 10GigE X-Adapter card (10-port GigE mode)

6-port Ethernet 10Gbps Adapter card

4-port SAR-H Fast Ethernet module

6-port SAR-M Ethernet module

Packet Microwave Adapter card (for ports not in a microwave link)

all fixed platforms

LAGs are supported on network ports on the following:

8-port Gigabit Ethernet Adapter card

10-port 1GigE/1-port 10GigE X-Adapter card

6-port Ethernet 10Gbps Adapter card

4-port SAR-H Fast Ethernet module

6-port SAR-M Ethernet module

Packet Microwave Adapter card (for ports not in a microwave link and ports in a 1+0 network microwave link; LAGs are not supported on ports in a 1+1 HSB microwave link)

all fixed platforms

LAGs are supported on hybrid ports on the following:

8-port Gigabit Ethernet Adapter card

10-port 1GigE/1-port 10GigE X-Adapter card (10-port GigE mode)

6-port Ethernet 10Gbps Adapter card

6-port SAR-M Ethernet module

Packet Microwave Adapter card (for ports not in a microwave link)

all fixed platforms

On access ports, a LAG supports active/active and active/standby operation. For active/standby operation the links must be in different subgroups. Links can be on the same platform or adapter card/module or distributed over multiple components. Load sharing is supported among the active links in a LAG group.

On network ports, a LAG supports active/active and active/standby operation. For active/standby operation the links must be in different subgroups. Links can be on the same platform or adapter card/module or distributed over multiple components. Load sharing is supported among the active links in a LAG group. Any tunnel type (for example, IP, GRE, or MPLS) transporting any service type, any IP traffic, or any labeled traffic (LER, LSR) can use the LAG load-sharing, active/active, and active/standby functionality.

LAGs are supported on network 1+0 microwave links. Ports that are in a microwave link can be added to the same LAG as ports that are not in a microwave link. Ports belonging to a microwave link must have limited autonegotiation enabled before the link can be added to a LAG.

A LAG that contains ports in a microwave link must have LACP enabled for active/standby operation. Static LAG configuration (without LACP) is not supported for active/standby LAGs with microwave-enabled ports.

On hybrid ports, a LAG supports active/active and active/standby operation. For active/standby operation the links must be in different subgroups. Links can be on the same platform or adapter card/module or distributed over multiple components. Load sharing is supported among the active links in a LAG group.

A LAG group with assigned members can be converted from one mode to another provided that the number of member ports is supported in the new mode, all of the ports support the new mode, none of the members belongs to a microwave link, and the LAG group is not associated with a network interface or a SAP.

A subgroup is a group of links within a LAG. On access, network, or hybrid ports, a LAG can have a maximum of four subgroups and a subgroup can have links up to the maximum number supported on the LAG. The LAG is active/active if there is only one subgroup and is active/standby if there is more than one subgroup.
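The relationship between subgroups and the LAG operating mode can be sketched as follows. The function name `lag_mode` and the dictionary layout are hypothetical. Only the limit of four subgroups and the one-subgroup versus multiple-subgroup rule come from the text above.

```python
MAX_SUBGROUPS = 4  # limit stated for access, network, and hybrid ports


def lag_mode(link_to_subgroup: dict[str, int]) -> str:
    """Derive the LAG mode from the subgroup assignment of its links."""
    subgroups = set(link_to_subgroup.values())
    if not subgroups:
        raise ValueError("LAG has no member links")
    if len(subgroups) > MAX_SUBGROUPS:
        raise ValueError("a LAG supports at most four subgroups")
    # One subgroup: all links share traffic. More than one: only one
    # subgroup carries traffic and the others stand by.
    return "active/active" if len(subgroups) == 1 else "active/standby"


print(lag_mode({"1/1/1": 1, "1/1/2": 1}))  # active/active
print(lag_mode({"1/1/1": 1, "1/2/1": 2}))  # active/standby
```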

When configuring a LAG, most port features (port commands) can only be configured on the primary member port. The configuration, or any change to the configuration, is automatically propagated to any remaining ports within the same LAG. Operators cannot modify the configurations on non-primary ports. For more information, see Configuring LAG parameters.

If the LAG has one member link on a second-generation (Gen-2) Ethernet adapter card and the other link on a third-generation (Gen-3) Ethernet adapter card or platform, a mix-and-match scenario exists for traffic management on the LAG SAP. In this case, all QoS parameters for the LAG SAP are configured but only those parameters applicable to the active member link are used. See LAG support on mixed-generation hardware for more information.

Configuring a multiservice site (MSS) aggregate rate can restrict the use of LAG SAPs. For more information, see the “MSS and LAG interaction on the 7705 SAR-8 Shelf V2 and 7705 SAR-18” section in the 7705 SAR Quality of Service Guide.

LACP and active/standby operation

On access, network, and hybrid ports, where multiple links in a LAG can be active at the same time, normal operation is that all non-failing links are active and traffic is load-balanced across all the active links. In some cases, however, it is desirable to have only some of the links active and the other links kept in standby mode. The Link Aggregation Control Protocol (LACP) is used to make the selection of the active links in a LAG predictable and compatible with any vendor equipment. The mechanism is based on the IEEE 802.1ax standard so that interoperability is ensured.

LACP is disabled by default and therefore must be enabled on the LAG if required. LACP can be used in either active mode or passive mode. The mode must be compatible with the mode configured on the connected CE devices for proper operation. For example, if the LAG on the 7705 SAR end is configured to be active, the CE end must be passive.

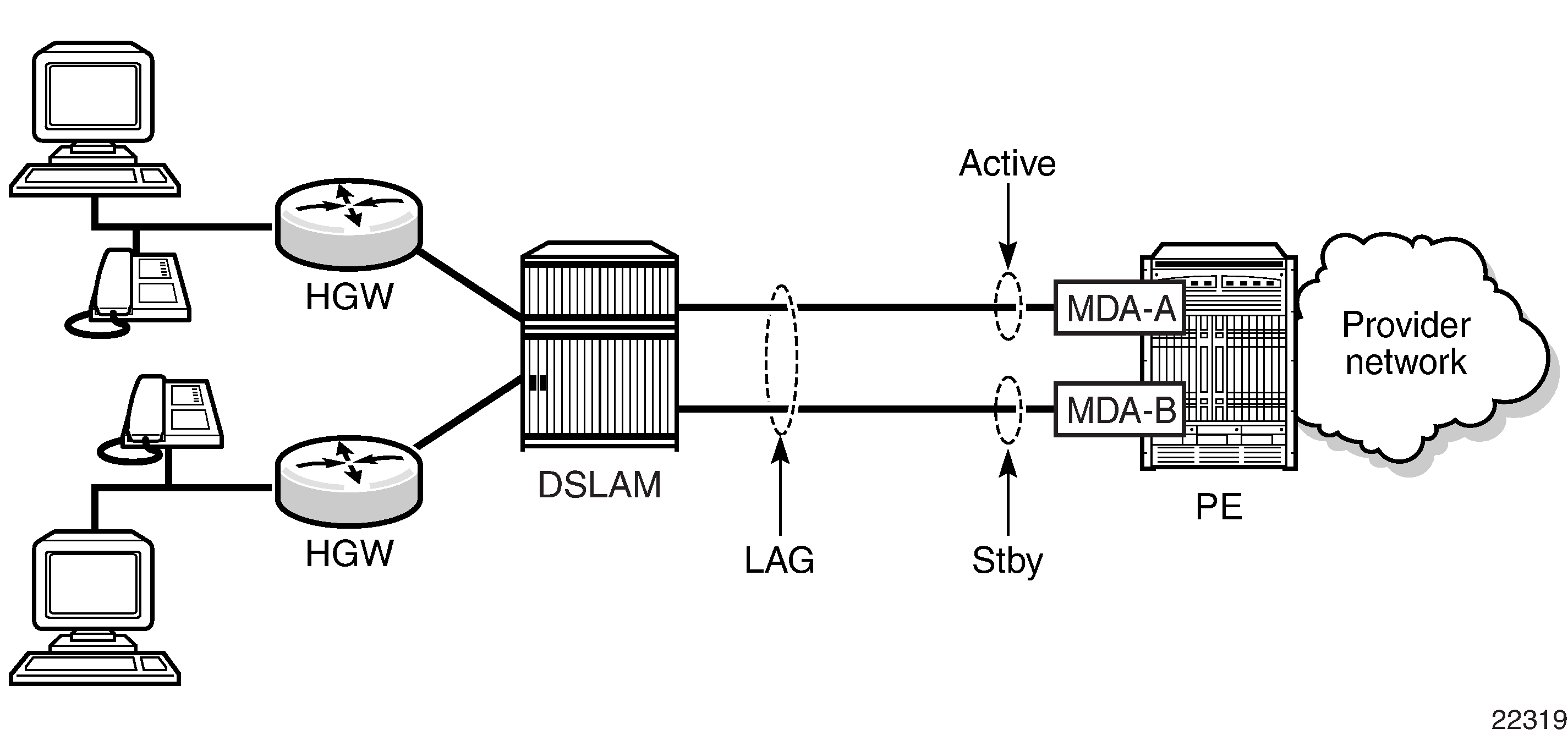

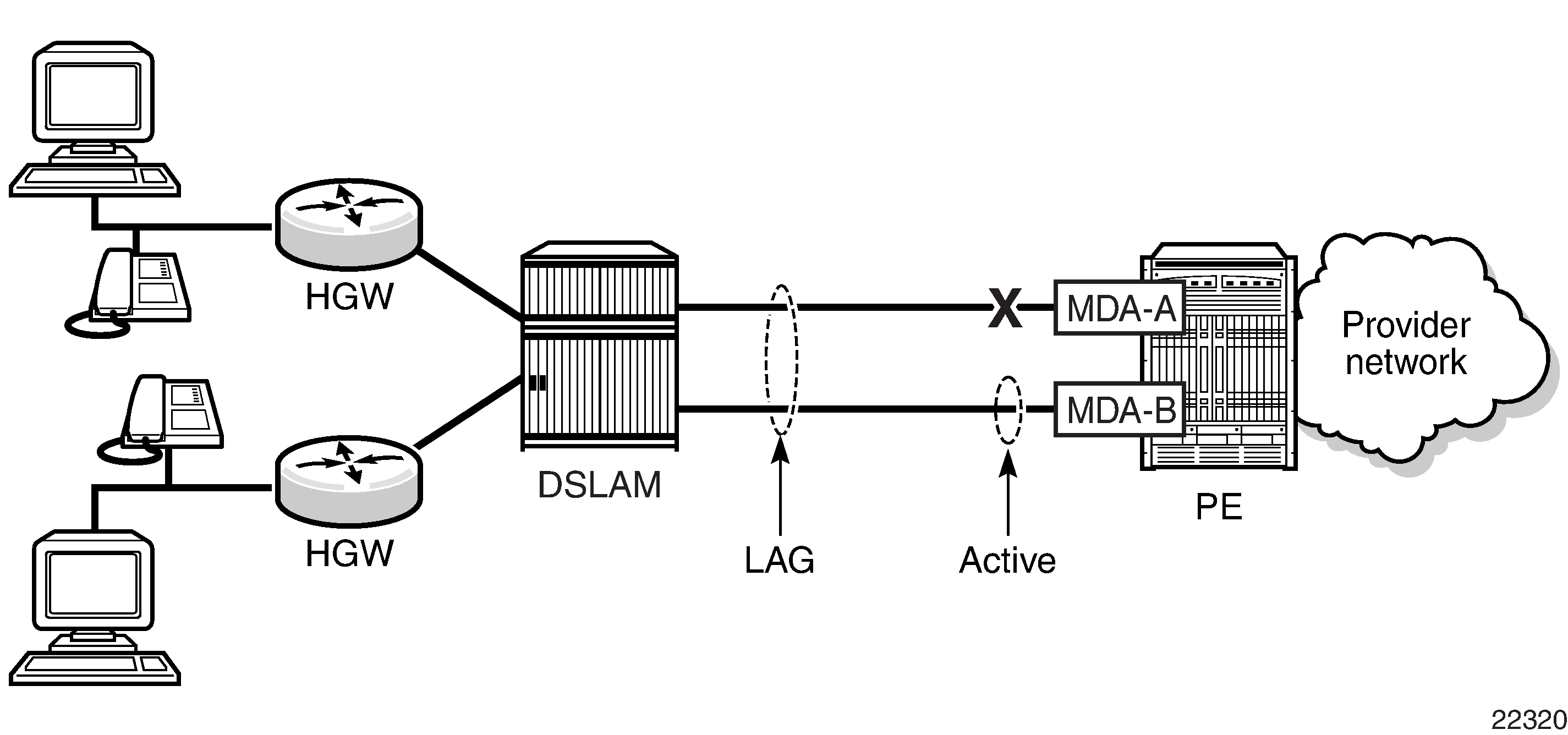

The following figure shows the interconnection between a DSLAM and a LAG aggregation node. In this configuration, LAG is used to protect against hardware failure. If the active link goes down, the link on standby takes over (see LAG on access failure switchover). The links are distributed across two different adapter cards to eliminate a single point of failure.

LACP handles active/standby operation of LAG subgroups as follows:

Each link in a LAG is assigned to a subgroup. On access, network, and hybrid ports, a LAG can have a maximum of four subgroups and a subgroup can have up to the maximum number of links supported for the LAG. The selection algorithm implemented by LACP ensures that only one subgroup in a LAG is selected as active.

The algorithm selects the active subgroup as follows:

If multiple subgroups satisfy the selection criteria, the subgroup currently active remains active. Initially, the subgroup containing the highest-priority (lowest value) eligible link is selected as active.

An eligible member is a link that can potentially become active. This means it is operationally up, and if the slave-to-partner flag is set, the remote system did not disable its use (by signaling standby).

The selection algorithm works in a revertive mode (for details, see the IEEE 802.1ax standard). This means that every time the configuration or status of a subgroup changes, the selection algorithm reruns. If multiple subgroups satisfy the selection criteria, the subgroup currently active remains active. This behavior does not apply if the selection-criteria hold-time parameter is set to infinite.
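The selection rules above can be approximated with a short sketch. It makes several assumptions: port priority is the only selection criterion, ties on first selection are broken by the lowest subgroup ID, and the selection-criteria hold-time is not modeled. The `Link` class, its field names, and `select_active_subgroup` are hypothetical and do not reflect the actual implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Link:
    port: str
    subgroup: int
    priority: int                  # lower value = higher priority
    oper_up: bool
    partner_standby: bool = False  # remote system signaled standby


def eligible(link: Link, slave_to_partner: bool) -> bool:
    """A link is eligible if it is up and not disabled by the partner."""
    return link.oper_up and not (slave_to_partner and link.partner_standby)


def select_active_subgroup(links: list[Link], current: Optional[int],
                           slave_to_partner: bool = False) -> Optional[int]:
    best: dict[int, int] = {}  # subgroup -> best priority of its eligible links
    for link in links:
        if eligible(link, slave_to_partner):
            best[link.subgroup] = min(best.get(link.subgroup, link.priority),
                                      link.priority)
    if not best:
        return None  # no eligible link in any subgroup
    top = min(best.values())
    candidates = sorted(sg for sg, prio in best.items() if prio == top)
    # If several subgroups qualify, the currently active one stays active.
    if current in candidates:
        return current
    return candidates[0]  # assumed tie-break: lowest subgroup ID
```

Because the function is rerun whenever link status or configuration changes, a higher-priority subgroup that recovers is selected again, which corresponds to the revertive behavior described above.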

Log events and traps are generated at both the LAG and link level to indicate any LACP changes. See the TIMETRA-LAG-MIB for details.

QoS adaptation for LAG on access

QoS on access port LAGs (access ports and hybrid ports in access mode) is handled differently from QoS on network port LAGs (see QoS for LAG on network). Based on the configured hashing, traffic on a SAP can be sent over multiple LAG ports or can use a single port of a LAG. There are two user-selectable adaptive QoS modes (distribute and link) that allow the user to determine how the configured QoS rate is distributed to each of the active LAG port SAP queue schedulers, SAP schedulers (H-QoS), and MSS schedulers. These modes are:

adapt-qos distribute

For SAP queue schedulers, SAP schedulers (H-QoS), and SAP egress MSS schedulers, distribute mode divides the QoS rates (as specified by the SLA) equally among the active LAG links (ports). For example, if a SAP queue PIR and CIR are configured on an active/active LAG SAP to be 200 Mb/s and 100 Mb/s respectively, and there are four active LAG ports, the SAP queue on each LAG port will be configured with a PIR of 50 Mb/s (200/4) and a CIR of 25 Mb/s (100/4).

For the SAP ingress MSS scheduler, the scheduler rate is configured on an MDA basis. Distributive adaptive QoS divides the QoS rates (as specified by the SLA) among the active link MDAs proportionally to the number of active links on each MDA.

For example, if an MSS shaper group with an aggregate rate of 200 Mb/s and a CIR of 100 Mb/s is assigned to an active/active LAG SAP where the LAG has two ports on MDA 1 and three ports on MDA 2, the MSS shaper group on MDA 1 will have an aggregate rate of 80 Mb/s (200 ✕ 2/5 of the SLA) and a CIR of 40 Mb/s (100 ✕ 2/5 of the SLA). MDA 2 will have an aggregate rate of 120 Mb/s (200 ✕ 3/5) and a CIR of 60 Mb/s (100 ✕ 3/5).

adapt-qos link (default)

For SAP queue schedulers, SAP schedulers (H-QoS), and SAP egress MSS schedulers, link mode forces the full QoS rates (as specified by the SLA) to be configured on each of the active LAG links. For example, if a SAP queue PIR and CIR are configured on an active/active LAG SAP to be 200 Mb/s and 100 Mb/s respectively, and there are two active LAG ports, the SAP queue on each LAG port will be configured to the full SLA, which is a PIR of 200 Mb/s and a CIR of 100 Mb/s.

For the SAP ingress MSS scheduler, the scheduler rate is configured on an MDA basis. In LAG link mode, each active LAG link MDA MSS shaper scheduler is configured with the full SLA. For example, if an MSS shaper group is configured with an aggregate rate of 200 Mb/s and CIR of 100 Mb/s and is assigned to an active/active LAG SAP with three ports on MDA 1 and two ports on MDA 2, the MSS shaper group on MDA 1 and MDA 2 are each configured with the full SLA of 200 Mb/s for the aggregate rate and 100 Mb/s for the CIR.

The following table shows examples of rate and bandwidth distributions based on the adapt-qos mode configuration.

| Scheduler | Distribute | Link |
|---|---|---|
| SAP queue scheduler | Rate distributed = rate / number of active links | 100% rate configured on each LAG SAP queue |
| SAP scheduler (H-QoS) | Rate distributed = rate / number of active links | 100% rate configured on each SAP scheduler |
| SAP egress MSS scheduler | Rate distributed = rate / number of active links | 100% rate configured on each port’s MSS scheduler |
| SAP ingress MSS scheduler | Rate distributed per active LAG MDA = rate ✕ (number of active links on MDA / total number of active links) | 100% rate configured on each active LAG MDA MSS scheduler |
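The arithmetic in the table can be checked with a small sketch. The function names `per_link_rate` and `per_mda_rate` are hypothetical helpers written for this illustration and are not part of any 7705 SAR interface. The formulas and the sample values are those given in the text above.

```python
from collections import Counter


def per_link_rate(rate_mbps: float, active_links: int, mode: str = "link") -> float:
    """Rate applied per active link for SAP queue, H-QoS, and egress MSS schedulers."""
    if active_links < 1:
        raise ValueError("at least one active link is required")
    if mode == "distribute":
        return rate_mbps / active_links
    if mode == "link":
        return rate_mbps
    raise ValueError(f"unknown adapt-qos mode: {mode}")


def per_mda_rate(rate_mbps: float, active_link_mdas: list[str],
                 mode: str = "link") -> dict[str, float]:
    """Rate applied per MDA for the SAP ingress MSS scheduler.

    active_link_mdas holds one entry per active link, naming the MDA it is on.
    """
    counts = Counter(active_link_mdas)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("at least one active link is required")
    if mode == "distribute":
        return {mda: rate_mbps * n / total for mda, n in counts.items()}
    if mode == "link":
        return {mda: float(rate_mbps) for mda in counts}
    raise ValueError(f"unknown adapt-qos mode: {mode}")


# PIR of 200 Mb/s over four active links in distribute mode
print(per_link_rate(200, 4, "distribute"))  # 50.0
# Aggregate rate of 200 Mb/s, two links on MDA 1 and three on MDA 2
print(per_mda_rate(200, ["1", "1", "2", "2", "2"], "distribute"))
# {'1': 80.0, '2': 120.0}
```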

The following restrictions apply to ingress MSS LAG adaptive QoS (distribute mode):

A unique MSS shaper group must be used per LAG when a non-default ingress MSS shaper group is assigned to a LAG SAP using adaptive QoS.

When a shaper group is assigned to a LAG SAP using adaptive QoS, all ports in the LAG group must have their MDAs assigned to the same shaper policy.

The following restrictions apply to egress MSS LAG:

The shaper policy for all LAG ports in a LAG must be the same and can only be configured on the primary LAG port member.

The following limitations apply to adaptive QoS (distribute mode):

The QoS rates for an ingress LAG using adaptive QoS are only distributed among the active links when a non-default shaper group is used. If a default shaper group is used, the full QoS rates are configured for each port in the LAG as if link mode is being used.

The QoS rates for an ingress or egress LAG using adaptive QoS will not be distributed among the active links when a user sets the PIR/CIR on a SAP queue, or aggregate rate/CIR on a SAP scheduler or MSS scheduler, to the default values (max and 0).

Adaptive QoS examples (distribute mode)

The following examples can be used as guidelines for configuring adapt-qos distribute.

SLA distribution for SAP queue-level PIR/CIR configuration

Configure a qos sap-ingress policy with a queue ID of 2, a PIR of 200 Mb/s, and a CIR of 100 Mb/s. Assign it to an active/active LAG SAP with five active ports.

For each port, the PIR/CIR configuration of SAP queue 2 is calculated so that the PIR = 40 Mb/s and CIR = 20 Mb/s.

If one link goes down, the PIR/CIR configuration of SAP queue 2 on each active port is recalculated so that the PIR = 50 Mb/s and CIR = 25 Mb/s.
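The recalculation in this example can be modeled as a sketch in which the per-port rates are recomputed whenever the set of active ports changes. The class `DistributedSapQueue` and the port names are assumptions made for illustration. The sketch only reproduces the arithmetic of the example and does not describe how the router applies the rates internally.

```python
class DistributedSapQueue:
    """Tracks the per-port PIR/CIR of one SAP queue in distribute mode."""

    def __init__(self, pir_mbps: float, cir_mbps: float, ports: list[str]):
        self.pir_mbps = pir_mbps
        self.cir_mbps = cir_mbps
        self.active = set(ports)

    def link_down(self, port: str) -> None:
        self.active.discard(port)

    def link_up(self, port: str) -> None:
        self.active.add(port)

    def per_port(self) -> dict[str, tuple[float, float]]:
        """Return {port: (PIR, CIR)} with the SLA split equally across active ports."""
        n = len(self.active)
        if n == 0:
            return {}
        return {p: (self.pir_mbps / n, self.cir_mbps / n) for p in sorted(self.active)}


# Hypothetical port names for the five-port LAG in the example
queue2 = DistributedSapQueue(200, 100, ["1/1/1", "1/1/2", "1/1/3", "1/1/4", "1/1/5"])
print(queue2.per_port()["1/1/1"])  # (40.0, 20.0)
queue2.link_down("1/1/5")
print(queue2.per_port()["1/1/1"])  # (50.0, 25.0)
```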

SLA distribution for ingress/egress (H-QoS)

Create a LAG SAP with two different ports (for example, port 1/1/1 and port 1/1/2) in a LAG subgroup.

Configure a LAG SAP aggregate rate of 200 Mb/s and a CIR of 100 Mb/s.

To maintain the SLA, the SAP aggregate rate and CIR must be divided by the number of operational links in the LAG group.