QoS policies

QoS overview

Routers are designed with Quality of Service (QoS) mechanisms on both ingress and egress to support multiple customers and multiple services per physical interface. The routers can classify, police, shape, and mark traffic.

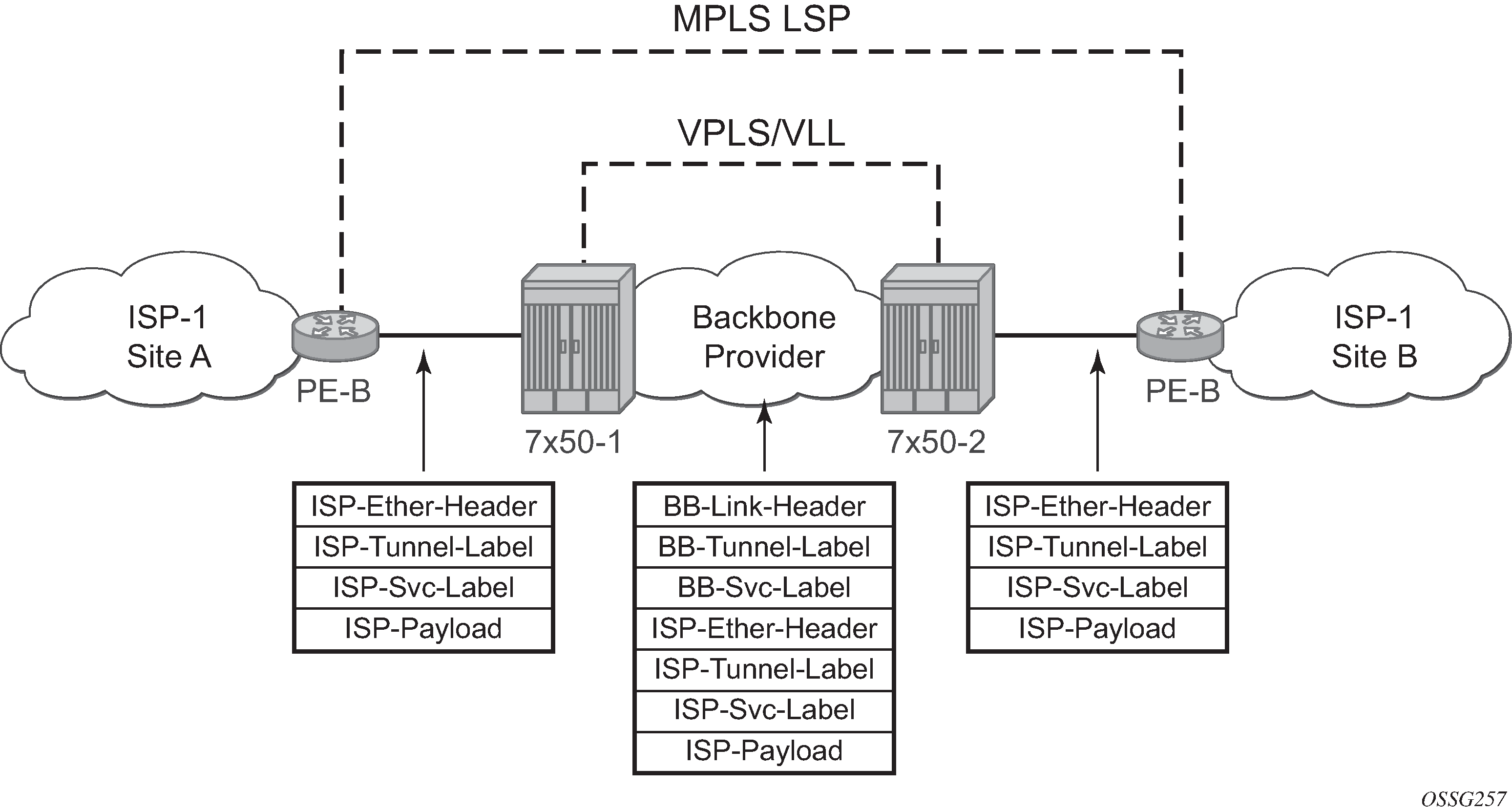

In the Nokia service router service model, a service is provisioned on the provider-edge (PE) equipment. Service data is encapsulated, then sent in a service tunnel to the far-end Nokia service router where the service data is delivered.

The operational theory of a service tunnel is that the encapsulation of the data between the two Nokia service routers (such as the 7950 XRS, 7750 SR, 7750 SR MG, and 7450 ESS) appears like a Layer 2 path to the service data although it is really traversing an IP or IP/MPLS core. The tunnel from one edge device to the other edge device is provisioned with an encapsulation and the services are mapped to the tunnel that most appropriately supports the service needs.

The router supports eight forwarding classes internally named:

Network-Control

High-1

Expedited

High-2

Low-1

Assured

Low-2

Best-Effort

The forwarding classes are described in more detail in the Forwarding classes section.

Router QoS policies control how QoS is handled at distinct points in the service delivery model within the device. There are different types of QoS policies that cater to the different QoS needs at each point in the service delivery model. QoS policies are defined in a global context in the router and only take effect when the policy is applied to a relevant entity.

QoS policies are uniquely identified with a policy ID number or name. Policy ID 1, or the policy name "default", is reserved for the default policy that is used if no policy is explicitly applied.

The QoS policies within the router can be divided into three main types:

QoS policies are used for classification, defining queuing attributes, and marking.

Slope policies define default buffer allocations and WRED slope definitions.

Scheduler policies determine how queues are scheduled.

Forwarding classes

Routers support multiple forwarding classes and class-based queuing, so the concept of forwarding classes is common to all QoS policies.

Each forwarding class, also called Class of Service (CoS), is important only in relation to the other forwarding classes. A forwarding class provides network elements a method to weigh the relative importance of one packet over another in a different forwarding class.

Queues are created for a specific forwarding class to determine how the queue output is scheduled into the switch fabric. The forwarding class of the packet, along with the profile state, determines how the packet is queued and handled (the Per Hop Behavior (PHB)) at each hop along its path to a destination egress point. Routers support eight forwarding classes.

The Forwarding classes table lists the default definitions for the forwarding classes. The forwarding class behavior, in terms of ingress marking interpretation and egress marking, can be changed by network QoS policies. All forwarding class queues support the concept of in-profile and out-of-profile and, at egress only, inplus-profile and exceed-profile.

| FC-ID | FC name | FC designation | DiffServ name | Class type | Notes |
|---|---|---|---|---|---|
| 7 | Network-Control | NC | NC2 | High-Priority | Intended for network control traffic |
| 6 | High-1 | H1 | NC1 | High-Priority | Intended for a second network control class or delay/jitter sensitive traffic |
| 5 | Expedited | EF | EF | High-Priority | Intended for delay/jitter sensitive traffic |
| 4 | High-2 | H2 | AF4 | High-Priority | Intended for delay/jitter sensitive traffic |
| 3 | Low-1 | L1 | AF2 | Assured | Intended for assured traffic. Also the default priority for network management traffic. |
| 2 | Assured | AF | AF1 | Assured | Intended for assured traffic |
| 1 | Low-2 | L2 | CS1 | Best Effort | Intended for BE traffic |
| 0 | Best-Effort | BE | BE | Best Effort | Intended for BE traffic |

The forwarding classes can be classified into three class types:

High-priority or Premium

Assured

Best-Effort
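As an illustration only, the default forwarding class IDs and their class types can be captured in a small lookup table. The Python names used here (`ForwardingClass`, `CLASS_TYPE`, `class_type`) are hypothetical and are not part of any router API; the IDs and groupings are taken from the forwarding class definitions in this section.

```python
from enum import IntEnum


class ForwardingClass(IntEnum):
    """Default forwarding classes keyed by FC-ID (illustrative names)."""
    BE = 0  # Best-Effort
    L2 = 1  # Low-2
    AF = 2  # Assured
    L1 = 3  # Low-1
    H2 = 4  # High-2
    EF = 5  # Expedited
    H1 = 6  # High-1
    NC = 7  # Network-Control


# Class type of each forwarding class, as described in this section.
CLASS_TYPE = {
    ForwardingClass.NC: "high-priority",
    ForwardingClass.H1: "high-priority",
    ForwardingClass.EF: "high-priority",
    ForwardingClass.H2: "high-priority",
    ForwardingClass.L1: "assured",
    ForwardingClass.AF: "assured",
    ForwardingClass.L2: "best-effort",
    ForwardingClass.BE: "best-effort",
}


def class_type(fc: ForwardingClass) -> str:
    """Return the class type for a forwarding class."""
    return CLASS_TYPE[fc]
```

For example, `class_type(ForwardingClass.EF)` returns `"high-priority"`.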

High-priority classes

The high-priority forwarding classes are Network-Control (nc), Expedited (ef), High-1 (h1), and High-2 (h2). High-priority forwarding classes are always serviced at congestion points over other forwarding classes; this behavior is determined by the router queue scheduling algorithm. See Virtual hierarchical scheduling for more information.

With a strict PHB at each network hop, service latency is mainly affected by the amount of high-priority traffic at each hop. These classes are intended to be used for network control traffic or for delay- or jitter-sensitive services.

If the service core network is oversubscribed, a mechanism to engineer a path through the core network and to reserve bandwidth must be used to apply strict control over the delay and bandwidth requirements of high-priority traffic. In the router, RSVP-TE can be used to create a path defined by an MPLS LSP through the core. Premium services are then mapped to the LSP, with care to not oversubscribe the reserved bandwidth.

If the core network has sufficient bandwidth, it is possible to effectively support the delay and jitter characteristics of high-priority traffic without using traffic engineered paths, as long as the core treats high-priority traffic with the correct PHB.

Assured classes

The assured forwarding classes are Assured (af) and Low-1 (l1). Assured forwarding classes provide services with a committed rate and a peak rate, much like Frame Relay. Packets transmitted through the queue at or below the committed transmission rate are marked in-profile. If the core service network has sufficient bandwidth along the path for the assured traffic, all aggregate in-profile service packets reach the service destination.

Packets transmitted from the service queue that are above the committed rate are marked out-of-profile. When an assured out-of-profile service packet is received at a congestion point in the network, it is discarded before in-profile assured service packets.

Multiple assured classes are supported with relative weighting between them. In DiffServ, the code points for the various Assured classes are AF4, AF3, AF2, and AF1. Typically, AF4 has the highest weight of the four and AF1 the lowest. The Assured and Low-1 classes are differentiated based on the default DSCP mappings. All DSCP and EXP mappings can be modified by the user.

Best-effort classes

The best-effort classes are Low-2 (l2) and Best-Effort (be). The best-effort forwarding classes have no delivery guarantees. All packets within these classes are treated by default as out-of-profile assured service packets.

Queue parameters

This section describes the queue parameters provisioned on access and network queues for QoS.

Queue ID

The queue ID is used to uniquely identify the queue. The queue ID is only unique within the context of the QoS policy within which the queue is defined.

Unicast or multipoint queue

Unicast queues are used for all services and routing when the traffic is forwarded to a single destination.

Multipoint ingress queues are used by VPLS services for multicast, broadcast, and unknown traffic and by IES and VPRN services for multicast traffic when IGMP, MLD, or PIM is enabled on the service interface.

Queue type

The type of a queue dictates how it is scheduled relative to other queues at the hardware level. Being able to define the scheduling properties of a queue is important because a single queue allows support for multiple forwarding classes.

The queue type defines the relative priority of the queue and can be configured as expedited (higher priority) or best-effort (lower priority). However, the instantaneous scheduling priority of a queue changes dynamically depending on its current scheduling rate compared to its operational Committed Information Rate (CIR) and Fair Information Rate (FIR) (see Queue scheduling). Parent virtual schedulers can be defined for the queue using scheduler policies, which enforce how the queue competes for bandwidth with other queues associated with the same scheduler hierarchy (see Scheduler policies).

The queue type of SAP ingress and egress queues, network queue policy queues, and ingress queue group template queues is defined at queue creation time. The queue type of egress queue group template queues and shared-queue policy queues can be modified after the queue has been created.

The default behavior for SAP ingress and egress queues, network queue policy queues, and shared-queue policy queues is to automatically choose the expedited or best-effort nature of the queue based on the forwarding classes mapped to it. This is achieved by configuring the queue type as auto-expedite. As long as all forwarding classes mapped to the queue are expedited (nc, ef, h1, or h2), the queue is treated as an expedited queue by the hardware schedulers. When any best-effort forwarding class (be, af, l1, or l2) is mapped to the queue, the queue is treated as best-effort by the hardware schedulers.

The default queue type for ingress queue group template and egress queue group template queues is best-effort.
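The automatic selection described above can be sketched as follows. This is a minimal illustration, not router code: the function name `effective_queue_type` and the type strings are assumptions chosen for readability, and the behavior of a queue with no forwarding classes mapped is not covered by the text, so the sketch rejects that case.

```python
from typing import Iterable

EXPEDITED_FCS = {"nc", "ef", "h1", "h2"}
BEST_EFFORT_FCS = {"be", "af", "l1", "l2"}


def effective_queue_type(configured_type: str, mapped_fcs: Iterable[str]) -> str:
    """Return how the hardware schedulers treat a queue (hypothetical helper)."""
    # An explicitly configured type is used as-is.
    if configured_type in ("expedite", "best-effort"):
        return configured_type
    if configured_type != "auto-expedite":
        raise ValueError(f"unknown queue type: {configured_type}")

    fcs = set(mapped_fcs)
    if not fcs:
        # Assumption: the text does not describe an empty FC mapping.
        raise ValueError("no forwarding classes mapped to the queue")
    unknown = fcs - EXPEDITED_FCS - BEST_EFFORT_FCS
    if unknown:
        raise ValueError(f"unknown forwarding classes: {sorted(unknown)}")

    # Expedited only while every mapped forwarding class is expedited.
    return "expedite" if fcs <= EXPEDITED_FCS else "best-effort"
```

With this sketch, mapping `ef` and `h1` to an auto-expedite queue yields `"expedite"`, while adding `af` to the same queue yields `"best-effort"`.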

Queue scheduling

Packets are scheduled from queues by hardware schedulers based on the type of the queue (see Queue type) and the current scheduling rate of the queue compared to its operational CIR and FIR. This applies to unicast queues at both ingress and egress, and multipoint queues at ingress, but not to HSQ IOM queues.

The queue type should be chosen based on the kind of traffic in the forwarding classes mapped to the queue.

The hardware scheduler services queues in strict priority order, as follows.

Ingress queues, in priority order:

- Expedited queues where the queue’s current scheduling rate is below its operational FIR
- Best effort queues where the queue’s current scheduling rate is below its operational FIR
- Expedited queues where the queue’s current scheduling rate is below its operational CIR
- Best effort queues where the queue’s current scheduling rate is below its operational CIR
- Expedited queues where the queue’s current scheduling rate is above both its operational FIR and CIR
- Best effort queues where the queue’s current scheduling rate is above both its operational FIR and CIR

Egress queues, in priority order:

- Expedited queues where the queue’s current scheduling rate is below its operational FIR
- Best effort queues where the queue’s current scheduling rate is below its operational FIR
- Expedited queues where the queue’s current scheduling rate is below its operational CIR
- Best effort queues where the queue’s current scheduling rate is below its operational CIR
- Expedited queues where the queue’s current scheduling rate is above both its operational FIR and CIR
- Best effort queues where the queue’s current scheduling rate is above both its operational FIR and CIR
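The six-level priority order listed above can be expressed as a simple ranking function. The sketch below is illustrative only: `QueueState`, `priority_level`, and `service_order` are hypothetical names, rates are assumed to be in kb/s, and a queue running exactly at its FIR or CIR is assumed not to be "below" that rate.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QueueState:
    name: str
    expedited: bool  # True for an expedited queue, False for best effort
    rate_kbps: int   # current scheduling rate
    fir_kbps: int    # operational FIR
    cir_kbps: int    # operational CIR


def priority_level(q: QueueState) -> int:
    """Return 0 (served first) through 5 (served last)."""
    if q.rate_kbps < q.fir_kbps:
        band = 0  # below FIR
    elif q.rate_kbps < q.cir_kbps:
        band = 1  # below CIR
    else:
        band = 2  # at or above both FIR and CIR
    # Within each band, expedited queues precede best-effort queues.
    return band * 2 + (0 if q.expedited else 1)


def service_order(queues: List[QueueState]) -> List[str]:
    """Return queue names in the order the scheduler would prefer them."""
    return [q.name for q in sorted(queues, key=priority_level)]
```

Note that a best-effort queue operating below its FIR ranks ahead of an expedited queue that is only below its CIR, which is the purpose of the FIR priority levels.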

Peak information rate

The Peak Information Rate (PIR) defines the maximum rate at which packets are allowed to exit the queue. The PIR does not specify the maximum rate at which packets may enter the queue; this is governed by the queue's ability to absorb bursts and is defined by its maximum burst size (MBS).

The actual transmission rate of a service queue depends on more than just its PIR. Each queue is competing for transmission bandwidth with other queues. Each queue's PIR, CIR, FIR, and the queue type (see Queue type) all combine to affect a queue's ability to transmit packets, as described in Queue scheduling.

The PIR is provisioned on ingress and egress queues within service ingress and egress QoS policies, network queue policies, ingress and egress queue group templates, and shared queue policies.

When defining the PIR for a queue, the value specified is the administrative PIR for the queue. The router has a number of native rates in hardware that it uses to determine the operational PIR for the queue. The user has some control over how the administrative PIR is converted to an operational PIR should the hardware not support the exact PIR value specified. The interpretation of the administrative PIR is discussed in Adaptation rule.

Committed information rate

The Committed Information Rate (CIR) for a queue performs two distinct functions:

- profile marking by service ingress queues

  Service ingress queues (configured in SAP ingress QoS policies or ingress queue group templates) mark packets in-profile or out-of-profile based on the CIR. For each packet in a service ingress queue, the CIR is compared to the current transmission rate of the queue. If the current rate is at or below the CIR threshold, the transmitted packet is internally marked in-profile. If the current rate is above the threshold, the transmitted packet is internally marked out-of-profile. This operation can be overridden by configuring cir-non-profiling under the queue. The queue scheduling priority is then still based on whether the queue is below or above its CIR, but packets are not re-profiled according to that state when they are scheduled. Instead, a packet's profile state remains out-of-profile unless the packet was explicitly classified as in-profile or out-of-profile, in which case it keeps that explicit profile.

- scheduler queue priority

  The scheduler serving a group of service ingress or egress queues prioritizes individual queues based on their current FIR, CIR, and PIR states. See Queue scheduling for more information about queue scheduling.
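The profile marking rule can be sketched as a small function. This is a simplified illustration, not the router's implementation: `ingress_profile` and its parameters are hypothetical, and because the text describes explicit classification only in the cir-non-profiling case, the sketch ignores `explicit_profile` otherwise.

```python
from typing import Optional


def ingress_profile(
    current_rate_kbps: int,
    cir_kbps: int,
    cir_non_profiling: bool = False,
    explicit_profile: Optional[str] = None,
) -> str:
    """Return "in" or "out" for a packet leaving a service ingress queue."""
    if explicit_profile not in (None, "in", "out"):
        raise ValueError(f"invalid explicit profile: {explicit_profile}")

    if cir_non_profiling:
        # No re-profiling: keep an explicit classification if there is one,
        # otherwise the packet stays out-of-profile.
        return explicit_profile or "out"

    # Default behavior: compare the queue's current rate to its CIR.
    return "in" if current_rate_kbps <= cir_kbps else "out"
```

A queue running at exactly its CIR still marks packets in-profile, because the comparison is "at or below".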

All router queues support the concept of in-profile and out-of-profile, together with inplus-profile and exceed-profile at egress only. The network QoS policy applied at network egress determines how or if the profile state is marked in packets transmitted into the service core network. If the profile state is marked in the service core packets, the packets are dropped preferentially at congestion points in the core in the following order, with the first listed dropped first:

exceed-profile

out-of-profile

in-profile

inplus-profile

When defining the CIR for a queue, the value specified is the administrative CIR for the queue. The router has a number of native rates in hardware that it uses to determine the operational CIR for the queue. The user has some control over how the administrative CIR is converted to an operational CIR if the hardware does not support the exact CIR specified. See Adaptation rule for more information about the interpretation of the administrative CIR.

Although the router is flexible in how the CIR can be configured, there are conventional ranges for the CIR based on the forwarding class of a queue. A service queue associated with the high-priority class normally has the CIR threshold equal to the PIR rate, although the router allows the CIR to be provisioned to any rate below the PIR if this behavior is required. If the service queue is associated with a best-effort class, the CIR threshold is normally set to zero; however, this is flexible.

The CIR for a service queue is provisioned in ingress and egress service queues within service ingress QoS policies and service egress QoS policies respectively. CIRs for network queues are defined within network queue policies. CIRs for queue group instance queues are defined within ingress and egress queue group templates.

Fair information rate

A Fair Information Rate (FIR) can be configured on a queue to use two additional scheduling priorities (expedited queue within FIR and best-effort queue within FIR). The queues which operate below their FIR are always served before the queues operating at or above their FIR. See Queue scheduling for more information about queue scheduling.

The FIR does not affect the queue packet profiling operation which is dependent only on the queue's CIR rate at the time that the packet is scheduled; the FIR is purely a scheduling mechanism.

When defining the FIR for a queue, the value specified is the administrative FIR for the queue. The router has a number of native rates in hardware that it uses to determine the operational FIR for the queue. The user has some control over how the administrative FIR is converted to an operational FIR if the hardware does not support the exact FIR specified. See Adaptation rule for more information about the interpretation of the administrative FIR.

The FIR is supported in SAP ingress QoS policies (for ingress SAP and subscriber queues), ingress queue group templates (for access ingress queue group queues), and network queue policies (for ingress and egress network queues). The FIR can also be configured on system-created ingress shared queues. The FIR is configured in kb/s in a SAP ingress QoS policy and an ingress queue group template, and as a percent in a SAP ingress QoS policy, network queue policy, and ingress shared queue. The default FIR is zero (0) with an adaptation rule of closest.

FIR is only supported on FP4-based or later hardware and is ignored when the related policy is applied to FP3-based hardware.

The FIR rate is not used within virtual hierarchical scheduling (see Virtual hierarchical scheduling), but it is capped by the resulting operational PIR.

An FIR should not be configured in a SAP ingress QoS policy, ingress queue group template, or network queue policy associated with a LAG that spans both FP4-based or later hardware and FP3-based hardware, because the resulting operation could differ depending on which hardware type the traffic uses.

Adaptation rule

The adaptation rule provides the QoS provisioning system with the ability to adapt specific FIR-, CIR-, and PIR-defined administrative rates to the underlying capabilities of the hardware that the queue is created on to derive the operational rates. The administrative FIR, CIR, and PIR rates are translated to operational rates enforced by the hardware queue. The adaptation rule provides a constraint used when the exact rate is not available because of hardware implementation trade-offs.

For the FIR, CIR, and PIR parameters individually, the system attempts to find the best operational rate depending on the defined constraint. The supported constraints are:

- minimum: finds the hardware-supported rate that is equal to or higher than the specified rate
- maximum: finds the hardware-supported rate that is equal to or lower than the specified rate
- closest: finds the hardware-supported rate that is closest to the specified rate

The operational FIR, CIR, and PIR settings used by the queue depend on the hardware on which the queue is provisioned, specifically on the method that the hardware uses to implement and represent the mechanisms that enforce the FIR, CIR, and PIR rates.

Because the hardware has a very granular set of rates, Nokia’s recommended method for determining which hardware rate is used for a queue is to configure the queue rates with the associated adaptation rule and then use the show pools command output to display the rate achieved.

To illustrate how the adaptation rule constraints (minimum, maximum, and closest) are evaluated in determining the operational CIR or PIR rates, assume there is a queue on an IOM3-XP where the administrative CIR and PIR rates are 401 Mb/s and 403 Mb/s, respectively.

The following output shows the operating CIR and PIR rates achieved for the different adaptation rule settings:

*A:PE# # queue using default adaptation-rule=closest

*A:PE# show qos sap-egress 10 detail | match expression "Queue-Id|CIR Rule"

Queue-Id : 1 Queue-Type : auto-expedite

PIR Rule : closest CIR Rule : closest

*A:PE#

*A:PE# show pools 1/1/1 access-egress service 1 | match expression "PIR|CIR"

Admin PIR : 403000 Oper PIR : 403200

Admin CIR : 401000 Oper CIR : 401600

*A:PE#

*A:PE# configure qos sap-egress 10 queue 1 adaptation-rule pir max cir max

*A:PE#

*A:PE# show qos sap-egress 10 detail | match expression "Queue-Id|CIR Rule"

Queue-Id : 1 Queue-Type : auto-expedite

PIR Rule : max CIR Rule : max

*A:PE#

*A:PE# show pools 1/1/1 access-egress service 1 | match expression "PIR|CIR"

Admin PIR : 403000 Oper PIR : 401600

Admin CIR : 401000 Oper CIR : 400000

*A:PE#

*A:PE# configure qos sap-egress 10 queue 1 adaptation-rule pir min cir min

*A:PE#

*A:PE# show qos sap-egress 10 detail | match expression "Queue-Id|CIR Rule"

Queue-Id : 1 Queue-Type : auto-expedite

PIR Rule : min CIR Rule : min

*A:PE#

*A:PE# show pools 1/1/1 access-egress service 1 | match expression "PIR|CIR"

Admin PIR : 403000 Oper PIR : 403200

Admin CIR : 401000 Oper CIR : 401600

*A:PE#
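The three constraints can be reproduced with a short calculation. The sketch below assumes, purely for illustration, that the hardware rates near 400 Mb/s are spaced 1600 kb/s apart; this step is inferred only from the operational values in the preceding output (400000, 401600, 403200) and is not a documented property of the hardware. The function name `operational_rate` is hypothetical, and the tie-break toward the lower rate for closest is an arbitrary choice.

```python
def operational_rate(admin_rate_kbps: int, rule: str, step_kbps: int = 1600) -> int:
    """Pick an operational rate from an assumed uniform grid of hardware rates."""
    # Nearest assumed hardware rates at or below and at or above the admin rate.
    lower = (admin_rate_kbps // step_kbps) * step_kbps
    upper = lower if lower == admin_rate_kbps else lower + step_kbps

    if rule == "max":
        return lower  # equal to or lower than the administrative rate
    if rule == "min":
        return upper  # equal to or higher than the administrative rate
    if rule == "closest":
        # Assumption: an exact tie resolves to the lower rate.
        if admin_rate_kbps - lower <= upper - admin_rate_kbps:
            return lower
        return upper
    raise ValueError(f"unknown adaptation rule: {rule}")
```

Under this assumption, an administrative PIR of 403000 kb/s gives 403200 for closest and min, and 401600 for max, matching the output shown above.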

Committed burst size

The Committed Burst Size (CBS) parameter specifies the size of buffer that can be drawn from the reserved buffer portion of the queue buffer pool. When the reserved buffers for a specific queue have been used, the queue competes with other queues for additional buffer resources up to the MBS.

The CBS is provisioned on ingress and egress service queues within service ingress QoS policies and service egress QoS policies, respectively. The CBS for a queue is specified in kbytes.

The CBS for a network queue is defined within network queue policies based on the forwarding class. The CBS for the queue for the forwarding class is defined as a percentage of buffer space for the pool.

Maximum burst size

The Maximum Burst Size (MBS) parameter specifies the maximum queue depth to which a queue can grow. This parameter ensures that a customer that is massively or continuously over-subscribing the PIR of a queue does not consume all the available buffer resources. For high-priority forwarding class service queues, the MBS can be relatively smaller than the other forwarding class queues because the high-priority service packets are scheduled with priority over other service forwarding classes.

The MBS is provisioned on ingress and egress service queues within service ingress QoS policies and service egress QoS policies, respectively. The MBS for a service queue is specified in bytes or kbytes.

The MBSs for network queues are defined within network queue policies based on the forwarding class. The MBSs for the queues for the forwarding class are defined as a percentage of buffer space for the pool.

Queue drop tails

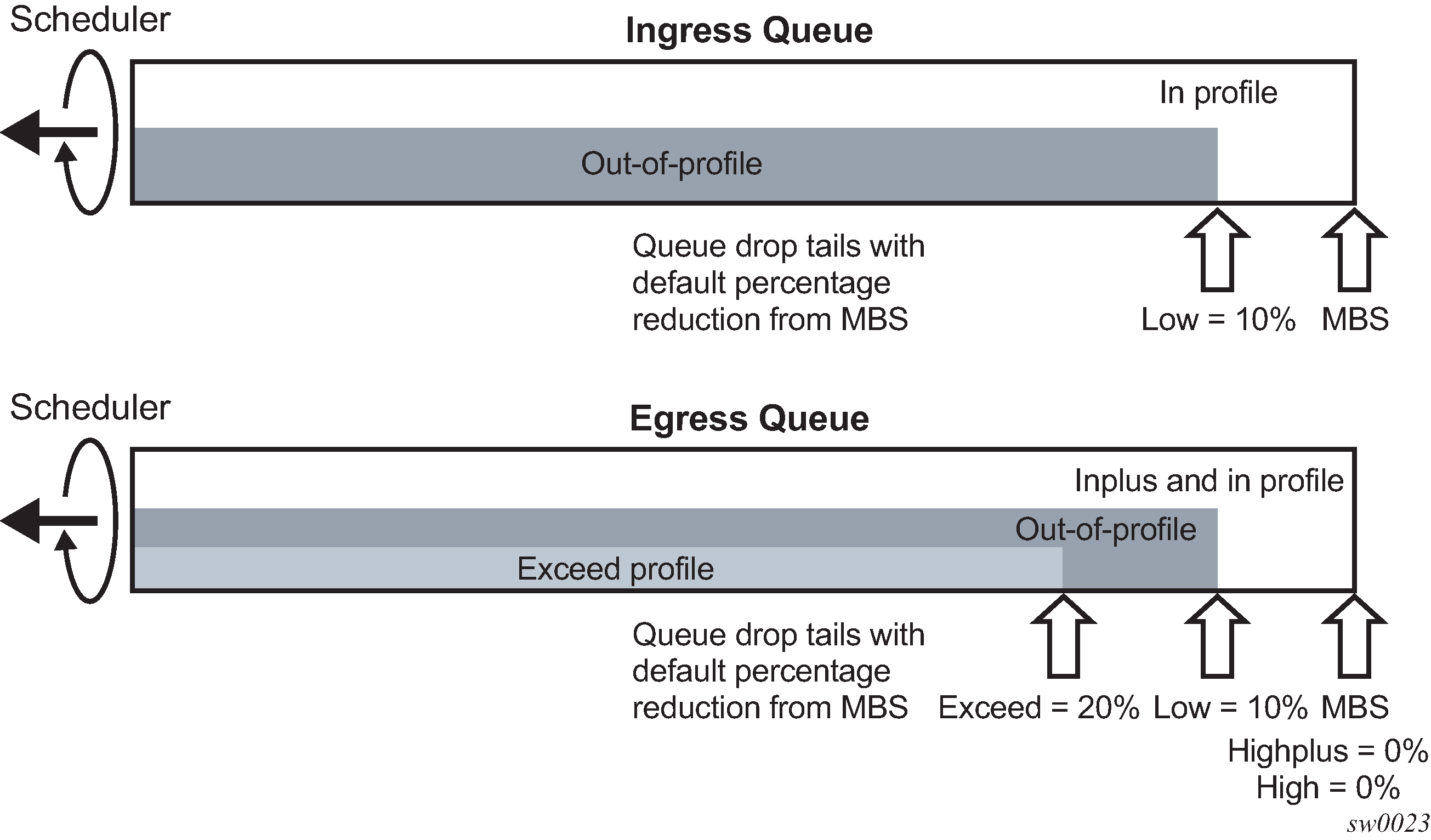

The MBS determines the maximum queue depth after which no additional packets are accepted into the queue. Additional queue drop tails are available for the different packet profiles to allow preferential access to the queue's buffers which allows higher priority packets to be accepted into a queue when there is congestion for lower priority packets.

At ingress there is a low drop tail in addition to the MBS. High enqueuing priority packets (for ingress SAP priority mode queues) and in-profile packets (for ingress SAP profile mode queues, network, and shared queues) are allowed to fill the queue up to the MBS. However, low enqueuing priority packets (for ingress SAP priority mode queues) and out-of-profile packets (for ingress SAP profile mode queues, network, and shared queues) can only fill the queue up to the queue's low drop tail setting.

At egress there are four drop tails in addition to the MBS, one for each profile state:

an exceed drop tail for exceed-profile packets

a low drop tail for out-of-profile packets

a high drop tail for in-profile packets

a highplus drop tail for inplus-profile packets

Each profile type can only fill the queue up to its corresponding drop tail. The figure Ingress and egress queue drop tails shows the drop tails at ingress and egress.

At both ingress and egress, the drop tails are configured as a percentage reduction from the MBS (specifying 10% places the drop tail at 90% of the MBS) and consequently all are limited by the queue's MBS.

The default low drop tail for ingress SAP, queue group, and shared queues is a 10% reduction from the MBS.

The default drop tails for egress SAP, queue group, and network queues are the following percentage reductions from the MBS:

exceed = 20%

low = 10%

high = 0%

highplus = 0%

The exceed, high, and highplus drop tails are not configurable for network queues; however, the exceed drop tail is set to 10% in addition to the low drop tail, and is capped by the MBS.

The four drop tails can be configured in any order within egress SAP and queue group queues, however it is logical to order them (from shortest to longest) as exceed, then low, then high, then highplus.

The low drop tail configuration can be overridden for ingress and egress SAP and queue group queues, and for network egress queues. It is also possible to override the low drop tail for subscriber queues within an SLA profile using the keyword high-prio-only.

When there is congestion, the drop tail ordering gives preferential access to the queue's buffers. For example, if the drop tails on an egress SAP queue are configured as exceed = 20%, low = 10%, high = 5%, and highplus = 0%, all profile packet types are accepted into the queue while the queue depth is below 80%. If the depth increases above 80%, exceed-profile packets are no longer accepted and are therefore dropped, while out-of-profile, in-profile, and inplus-profile packets are still accepted into the queue (giving them preference over the exceed-profile packets). If the queue depth goes beyond 90%, the out-of-profile packets are also dropped. Similarly, if the queue depth goes beyond 95%, the in-profile packets are dropped. Only when the MBS has been reached are the inplus-profile packets dropped. This example assumes that the pool in which the queue exists is not congested.
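The egress drop tail example can be checked with a short sketch. The names `PROFILE_TO_DROP_TAIL` and `accepted_profiles` are hypothetical, queue depth is expressed as a percentage of the MBS, and a packet arriving when the depth is exactly at a drop tail is assumed to be dropped (the text only describes depths below or above each threshold).

```python
from typing import Dict, Set

# Which drop tail applies to each packet profile at egress.
PROFILE_TO_DROP_TAIL = {
    "exceed": "exceed",
    "out": "low",
    "in": "high",
    "inplus": "highplus",
}


def accepted_profiles(depth_pct: float, drop_tails: Dict[str, float]) -> Set[str]:
    """Return the packet profiles still accepted at a given queue depth.

    drop_tails holds each drop tail as a percentage reduction from the MBS,
    so a value of 10 places that drop tail at 90% of the MBS.
    """
    accepted = set()
    for profile, tail in PROFILE_TO_DROP_TAIL.items():
        threshold_pct = 100 - drop_tails[tail]
        if depth_pct < threshold_pct:
            accepted.add(profile)
    return accepted
```

With `drop_tails = {"exceed": 20, "low": 10, "high": 5, "highplus": 0}`, a depth of 85% accepts only out-of-profile, in-profile, and inplus-profile packets, and a depth of 97% accepts only inplus-profile packets.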

WRED per queue

Egress SAP, subscriber, and network queues by default use drop tails within the queues and WRED slopes applied to the pools in which the queues reside to apply congestion control to the traffic in those queues. An alternative to this is to apply the WRED slopes directly to the egress queue using WRED per queue. See WRED-per-queue congestion control summary.

WRED per queue is supported for SAP egress QoS policy queues (and therefore to egress SAP and subscriber queues) and for queues within an egress queue group template. There are two modes available:

Native, which is supported on FP3- and higher-based hardware

Pool-per-queue

| | Inplus-profile traffic | In-profile traffic | Out-of-profile traffic | Exceed-profile traffic | WRED-queue mode | Slope usage | Comments |
|---|---|---|---|---|---|---|---|
| FP3- and higher-based queues | Drop tail (MBS) used | Drop tail (MBS) used | Low slope used | Exceed slope used | Native | Exceed-low | If slope shutdown, MBS is used. Highplus and high slope not used. |
| Pool-per-queue | Highplus slope | High slope | Low slope | Exceed slope | Pool-per-queue | Default | If slope shutdown, MBS is used |
| Pool/megapool | Highplus slope | High slope | Low slope | Exceed slope | N/A | N/A | If slope shutdown, total pool size is used |

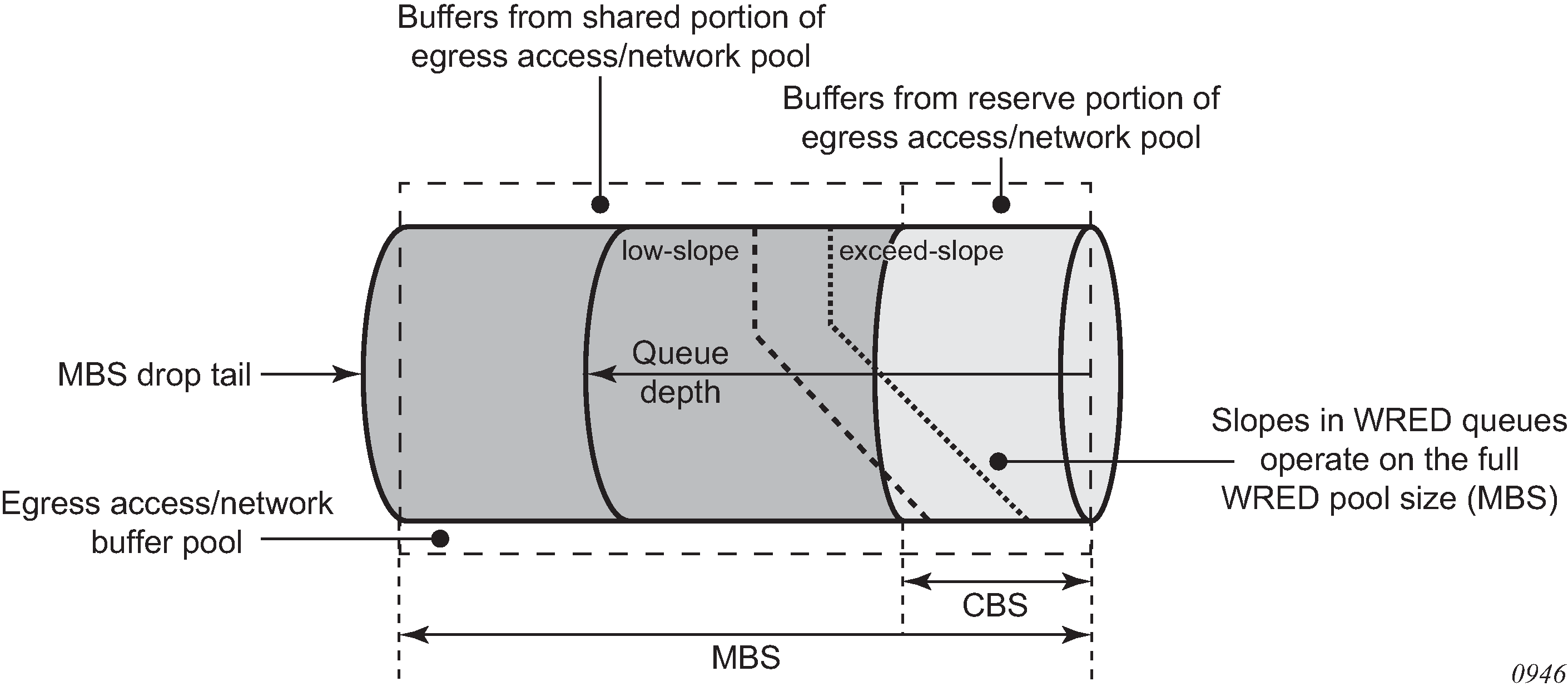

Native queue mode

When an egress queue is configured for native mode, it uses the native WRED capabilities of the forwarding plane queue. This is supported on FP3- and higher-based hardware.

Congestion control within the queue uses the low and exceed slopes from the applied slope policy together with the MBS drop tail. The queue continues to take buffers from its associated egress access or network buffer pool, on which WRED can also be enabled. This is shown in WRED queue: native mode.

To configure a native WRED queue, the wred-queue command is used under queue in a SAP egress QoS policy or egress queue group template with the mode set to native as follows:

    qos
        queue-group-templates
            egress
                queue-group <queue-group-name> create
                    queue <queue-id> create
                        wred-queue [policy <slope-policy-name>] mode native slope-usage exceed-low
                    exit
                exit
            exit
        exit
        sap-egress <policy-id> create
            queue <queue-id> [<queue-type>] [create]
                wred-queue [policy <slope-policy-name>] mode native slope-usage exceed-low
            exit
        exit

Congestion control is provided by both the slope policy applied to the queue and the MBS drop tail.

The slope-usage defines the mapping of the traffic profile to the WRED slope and only exceed-low is allowed with a native mode queue. The slope mapping is shown in the following example and requires the low and exceed slopes to be no shutdown in the applied slope policy (otherwise traffic uses the MBS drop tail or a pool slope):

Out-of-profile maps to the low slope.

Exceed-profile maps to the exceed slope.

The instantaneous queue depth is used against the slopes when native mode is configured; consequently, the time-average-factor within the slope policy is ignored.

The inplus and in-profile traffic uses the MBS drop tail for congestion control (the highplus or high slopes are not used with a native mode queue).

If a queue is configured to use native mode WRED per queue on hardware earlier than FP3, the queue operates as a regular queue.
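The native mode mapping of packet profile to congestion control mechanism can be summarized in a small function. This is a sketch under stated assumptions: `native_mode_control` and the returned labels are hypothetical, and when a slope is shut down the sketch reports the MBS drop tail, without modeling any WRED slopes that may also be active on the pool that the queue draws buffers from.

```python
def native_mode_control(
    profile: str,
    low_slope_enabled: bool = True,
    exceed_slope_enabled: bool = True,
) -> str:
    """Return the mechanism applied to a packet in a native mode WRED queue."""
    if profile in ("inplus", "in"):
        # The highplus and high slopes are not used in native mode.
        return "mbs-drop-tail"
    if profile == "out":
        return "low-slope" if low_slope_enabled else "mbs-drop-tail"
    if profile == "exceed":
        return "exceed-slope" if exceed_slope_enabled else "mbs-drop-tail"
    raise ValueError(f"unknown profile: {profile}")
```

For instance, exceed-profile traffic maps to the exceed slope only while that slope is no shutdown in the applied slope policy.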

For example, the following SAP egress QoS policy is applied to a SAP on FP3- or higher-based hardware:

    sap-egress 10 create
        queue 1 create
            wred-queue policy "slope1" mode native slope-usage exceed-low
        exit
    exit

The details of both the pool and queue can then be shown using the following command:

*A:PE# show pools 1/1/1 access-egress service 1

===============================================================================

Pool Information

===============================================================================

Port : 1/1/1

Application : Acc-Egr Pool Name : default

CLI Config. Resv CBS : 30%(default)

Resv CBS Step : 0% Resv CBS Max : 0%

Amber Alarm Threshold: 0% Red Alarm Threshold : 0%

-------------------------------------------------------------------------------

Queue-Groups

-------------------------------------------------------------------------------

Queue-Group:Instance : policer-output-queues:1

-------------------------------------------------------------------------------

Utilization State Start-Avg Max-Avg Max-Prob

-------------------------------------------------------------------------------

HiPlus-Slope Down 85% 100% 80%

High-Slope Down 70% 90% 80%

Low-Slope Down 50% 75% 80%

Exceed-Slope Down 30% 55% 80%

Time Avg Factor : 7

Pool Total : 192000 KB

Pool Shared : 134400 KB Pool Resv : 57600 KB

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

Current Resv CBS Provisioned Rising Falling Alarm

%age all Queues Alarm Thd Alarm Thd Color

-------------------------------------------------------------------------------

30% 0 KB NA NA Green

Pool Total In Use : 0 KB

Pool Shared In Use : 0 KB Pool Resv In Use : 0 KB

WA Shared In Use : 0 KB

HiPlus-Slope Drop Pr*: 0 Hi-Slope Drop Prob : 0

Lo-Slope Drop Prob : 0 Excd-Slope Drop Prob : 0

===============================================================================

Queue Information

===============================================================================

===============================================================================

Queue : 1->1/1/1->1

===============================================================================

FC Map : be l2 af l1 h2 ef h1 nc

Dest Tap : not-applicable Dest FP : not-applicable

Admin PIR : 10000000 Oper PIR : Max

Admin CIR : 0 Oper CIR : 0

Admin MBS : 12000 KB Oper MBS : 12000 KB

High-Plus Drop Tail : not-applicable High Drop Tail : not-applicable

Low Drop Tail : not-applicable Exceed Drop Tail : not-applicable

CBS : 0 KB Depth : 0

Slope : slope1

WRED Mode : native Slope Usage : exceed-low

-------------------------------------------------------------------------------

HighPlus Slope Information

-------------------------------------------------------------------------------

State : disabled

Start Average : 0 KB Max Average : 0 KB

Max Probability : 0 % Curr Probability : 0 %

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

High Slope Information

-------------------------------------------------------------------------------

State : disabled

Start Average : 0 KB Max Average : 0 KB

Max Probability : 0 % Curr Probability : 0 %

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

Low Slope Information

-------------------------------------------------------------------------------

State : enabled

Start Average : 6960 KB Max Average : 8880 KB

Max Probability : 80 % Curr Probability : 0 %

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

Exceed Slope Information

-------------------------------------------------------------------------------

State : enabled

Start Average : 4680 KB Max Average : 6600 KB

Max Probability : 80 % Curr Probability : 0 %

-------------------------------------------------------------------------------

===============================================================================

===============================================================================

===============================================================================

* indicates that the corresponding row element may have been truncated.

*A:PE#

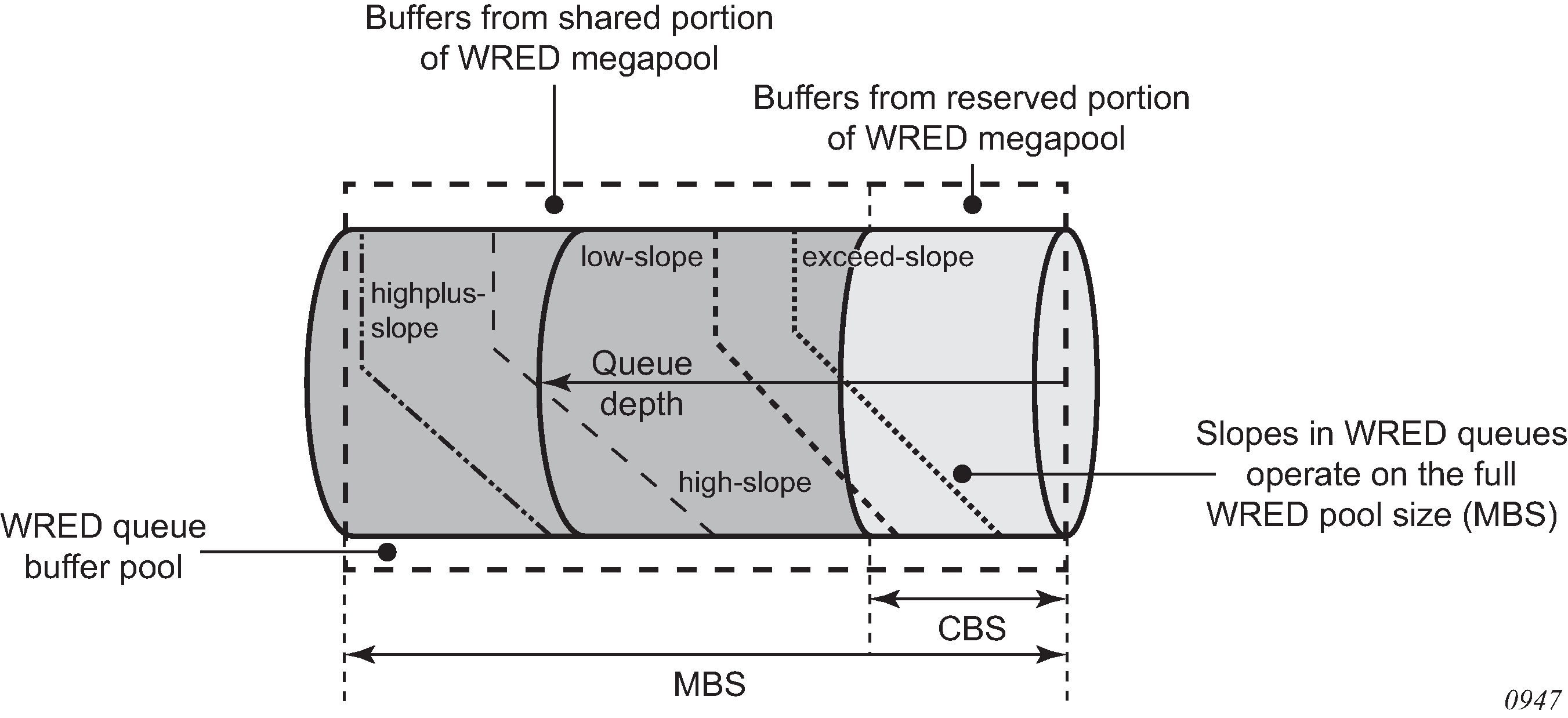

Pool per queue mode

When pool per queue mode is used, the queue resides in its own pool that is located in the forwarding plane egress megapool. The size of the pool is the same as the size of the queue (based on the MBS); consequently, the WRED slopes operating on the pool's buffer utilization are reacting to the congestion depth of the queue. The size of the reserved CBS portion of the buffer pool is dictated by the queue's CBS parameter. This is shown in WRED queue: pool-per-queue mode.

The queue pools take buffers from the WRED egress megapool that must be enabled per FP; if this megapool is not enabled, the queue operates as a regular queue. By default, only the ingress normal and egress normal megapools exist on an FP. The egress WRED megapool is configured using the following commands:

configure

card <slot-number>

fp <fp-number>

egress

wred-queue-control

[no] buffer-allocation min <percentage> max <percentage>

[no] resv-cbs min <percentage> max <percentage>

[no] shutdown

[no] slope-policy <slope-policy-name>

The buffer-allocation command determines how much of the egress normal megapool is allocated to the egress WRED megapool, and resv-cbs defines the amount of reserved buffer space in the egress WRED megapool. In both cases, the min and max values must be equal. The slope-policy defines the WRED slope parameters and the time average factor used on the megapool to handle congestion as the megapool buffers are used. The no shutdown command enables the megapool. The megapools on card 5 fp 1 can be shown as follows:

*A:PE# show megapools 5 fp 1

===============================================================================

MegaPool Summary

===============================================================================

Type Slot-FP App. Pool Name Actual ResvCBS PoolSize

Admin ResvCBS

-------------------------------------------------------------------------------

fp 5-1 Egress normal n/a 282624

n/a

fp 5-1 Ingress normal n/a 374784

n/a

fp 5-1 Egress wred 24576 93696

25%

===============================================================================

*A:PE#

To configure a pool per queue, the wred-queue command is used under queue in a SAP egress QoS policy or egress queue group template with the mode set to pool-per-queue as follows:

qos

queue-group-templates

egress

queue-group <queue-group-name> create

queue <queue-id> create

wred-queue [policy <slope-policy-name>] mode pool-per-queue

slope-usage default

exit

exit

exit

exit

sap-egress <policy-id> create

queue <queue-id> [<queue-type>] [create]

wred-queue [policy <policy-name>] mode pool-per-queue

slope-usage default

exit

exit

Congestion control is provided by the slope policy applied to the queue. Each slope to be used must be enabled with no shutdown; otherwise, traffic uses the MBS drop tail or a megapool slope. The slope-usage defines the mapping of the traffic profile to the WRED slope. Only default is allowed with pool-per-queue, which gives the following mapping:

Inplus-profile maps to the highplus slope.

In-profile maps to the high slope.

Out-of-profile maps to the low slope.

Exceed-profile maps to the exceed slope.
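The mapping above can be written as a small lookup. This is a minimal Python sketch for illustration only; the dictionary and function names are hypothetical and are not part of the router CLI.

```python
# Profile-to-slope mapping for slope-usage default in pool-per-queue mode,
# as listed in the text above. Names are illustrative only.
DEFAULT_SLOPE_USAGE = {
    "inplus": "highplus",
    "in": "high",
    "out": "low",
    "exceed": "exceed",
}


def slope_for_profile(profile: str) -> str:
    """Return the WRED slope applied to a packet with the given profile."""
    try:
        return DEFAULT_SLOPE_USAGE[profile]
    except KeyError:
        raise ValueError(f"unknown profile: {profile!r}") from None


print(slope_for_profile("out"))  # low
```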

For example, the following SAP egress QoS policy is applied to SAP on an FP with the egress WRED megapool enabled:

sap-egress 10 create

queue 1 create

wred-queue policy "slope1" mode pool-per-queue

slope-usage default

exit

exit

The details of both the megapool and queue pool usage can then be shown using the detailed version of the show megapools command:

*A:PE# show megapools 5 fp 1 wred service-id 1

===============================================================================

MegaPool Information

===============================================================================

Slot : 5 FP : 1

Application : Egress Pool Name : wred

Resv CBS : 25%

-------------------------------------------------------------------------------

Utilization State Start-Avg Max-Avg Max-Prob

-------------------------------------------------------------------------------

HiPlus-Slope Down 85% 100% 80%

High-Slope Down 70% 90% 80%

Low-Slope Down 50% 75% 80%

Exceed-Slope Down 30% 55% 80%

Time Avg Factor : 7

Pool Total : 93696 KB

Pool Shared : 69120 KB Pool Resv : 24576 KB

Pool Total In Use : 0 KB

Pool Shared In Use : 0 KB Pool Resv In Use : 0 KB

WA Shared In Use : 0 KB

HiPlus-Slope Drop Pr*: 0 Hi-Slope Drop Prob : 0

Lo-Slope Drop Prob : 0 Excd-Slope Drop Prob : 0

===============================================================================

Queue : 1->5/1/1:1->1

===============================================================================

FC Map : be l2 af l1 h2 ef h1 nc

Dest Tap : not-applicable Dest FP : not-applicable

Admin PIR : 10000000 Oper PIR : Max

Admin CIR : 0 Oper CIR : 0

Admin MBS : 12288 KB Oper MBS : 12288 KB

High-Plus Drop Tail : not-applicable High Drop Tail : not-applicable

Low Drop Tail : not-applicable Exceed Drop Tail : not-applicable

CBS : 0 KB Depth : 0

Slope : slope1

WRED Mode : pool-per-queue Slope Usage : default

Time Avg Factor : 7

-------------------------------------------------------------------------------

HighPlus Slope Information

-------------------------------------------------------------------------------

State : enabled

Start Average : 10752 KB Max Average : 12288 KB

Max Probability : 80 % Curr Probability : 0 %

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

High Slope Information

-------------------------------------------------------------------------------

State : enabled

Start Average : 9408 KB Max Average : 10944 KB

Max Probability : 80 % Curr Probability : 0 %

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

Low Slope Information

-------------------------------------------------------------------------------

State : enabled

Start Average : 6144 KB Max Average : 9216 KB

Max Probability : 80 % Curr Probability : 0 %

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

Exceed Slope Information

-------------------------------------------------------------------------------

State : enabled

Start Average : 3648 KB Max Average : 6720 KB

Max Probability : 80 % Curr Probability : 0 %

-------------------------------------------------------------------------------

===============================================================================

===============================================================================

* indicates that the corresponding row element may have been truncated.

*A:PE#
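To relate the Start Average, Max Average, and Max Probability fields in the output above to a drop decision, the following Python sketch applies the textbook WRED ramp. It assumes a drop probability of zero below the start average, a linear increase up to the maximum probability at the max average, and 100% at or beyond the max average. The function name is hypothetical and the sketch does not model the time average factor.

```python
def wred_drop_probability(avg_kb: float, start_kb: float,
                          max_kb: float, max_prob_pct: float) -> float:
    """Textbook WRED ramp (an assumption, not taken from router internals).

    Returns the drop probability in percent for a given average
    buffer utilization in KB.
    """
    if max_kb < start_kb:
        raise ValueError("max average must not be below start average")
    if avg_kb < start_kb:
        return 0.0          # below the slope: no WRED drops
    if avg_kb >= max_kb:
        return 100.0        # at or beyond the slope: everything is dropped
    # Linear ramp between the start and max averages.
    return max_prob_pct * (avg_kb - start_kb) / (max_kb - start_kb)


# Low slope values from the output above: 6144 KB, 9216 KB, 80 %.
print(wred_drop_probability(7680, 6144, 9216, 80))  # 40.0
```

With the Low slope values shown, an average utilization halfway between the two thresholds gives half of the maximum probability.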

Each WRED pool-per-queue uses a WRED pool resource on the FP. The resource usage is displayed in the tools dump resource-usage card [slot-num] fp [fp-number] command output under the Dynamic Q2 WRED Pools header.

Packet markings

Typically, customer markings placed on packets are not treated as trusted from an in-profile or out-of-profile perspective. This allows the use of the ingress buffering to absorb bursts over PIR from a customer and only perform marking as packets are scheduled out of the queue (as opposed to using a hard-policing function that operates on the received rate from the customer). The resulting profile (in or out) based on ingress scheduling into the switch fabric is used by network egress for tunnel marking and egress congestion management.

The high/low priority feature allows a provider to offer a customer the ability to have some packets treated with a higher priority when buffered to the ingress queue. If the queue is configured with a non-zero low drop tail setting, a portion of the ingress queue’s allowed buffers is reserved for high-priority traffic. An access ingress packet must match an ingress QoS action for the ingress forwarding plane to treat the packet as high priority (the default is low priority).

If the ingress queue for the packet is above the low drop tail setting, the packet is discarded unless it has been classified as high priority. The priority of the packet is not retained after the packet is placed into the ingress queue. After the packet is scheduled out of the ingress queue, the packet is considered in-profile or out-of-profile based on the dynamic rate of the queue relative to the queue’s CIR parameter.

At access ingress, the priority of a packet has no effect on which packets are scheduled first. Only the first buffering decision is affected.
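The buffering and profiling behavior described above can be sketched in Python as two independent decisions. This is a simplified model under stated assumptions: queue depth is compared in KB without accounting for packet size, the low drop tail is expressed as a percentage reduction from MBS, and the function names are hypothetical.

```python
def accept_into_queue(depth_kb: float, mbs_kb: float,
                      low_drop_tail_pct: float, high_priority: bool) -> bool:
    """Buffering decision at access ingress (simplified)."""
    # Depth above which only high-priority packets are still accepted.
    low_threshold_kb = mbs_kb * (100 - low_drop_tail_pct) / 100
    if depth_kb >= mbs_kb:
        return False                      # queue is full for everyone
    if depth_kb > low_threshold_kb and not high_priority:
        return False                      # remaining space reserved for high priority
    return True


def profile_at_schedule(queue_rate_kbps: float, cir_kbps: float) -> str:
    """Profile assigned when the packet leaves the queue; priority plays no role."""
    return "in" if queue_rate_kbps <= cir_kbps else "out"


print(accept_into_queue(950, 1000, 10, high_priority=False))  # False
print(accept_into_queue(950, 1000, 10, high_priority=True))   # True
print(profile_at_schedule(4000, 5000))                        # in
```

The priority only influences the first function; the profile returned by the second depends solely on the queue rate relative to the CIR.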

At ingress and egress, the current dynamic rate of the queue relative to the queue’s CIR and FIR (where supported) does affect the scheduling priority between queues going to the same destination (either the switch fabric tap or egress port). See Queue scheduling for information about the strict operating priority for queues.

For access ingress, the CIR controls both dynamic scheduling priority and marking threshold (unless cir-non-profiling is configured). At network ingress, the queue’s CIR affects the scheduling priority but does not provide a profile marking function as the network ingress policy trusts the received marking of the packet, based on the network QoS policy.

At egress, the profile of a packet is only important for egress queue buffering decisions and egress marking decisions, not for scheduling priority. The egress queue’s CIR determines the dynamic scheduling priority but does not affect the packet’s ingress determined profile.

Queue counters

The router maintains counters for queues within the system for granular billing and accounting. Each queue maintains the following counters:

counters for packets and octets accepted into the queue

counters for packets and octets rejected at the queue

counters for packets and octets transmitted in-profile

counters for packets and octets transmitted out-of-profile

Color aware profiling

The normal handling of SAP ingress access packets applies an in-profile or out-of-profile state (associated with the colors green and yellow, respectively) to each packet relative to the dynamic rate of the queue while the packet is forwarded toward the egress side of the system. When the queue rate is within or equal to the configured CIR, the packet is considered in-profile. When the queue rate is above the CIR, the packet is considered out-of-profile (this applies when the packet is scheduled out of the queue, not when the packet is buffered into the queue).

Egress queues use the profile marking of packets to preferentially buffer in-profile packets during congestion events. When a packet has been marked in-profile or out-of-profile by the ingress access SLA enforcement, the packet is tagged with an in-profile or out-of-profile marking allowing congestion management in subsequent hops toward the packet’s ultimate destination. Each hop to the destination must have an ingress table that determines the in-profile or out-of-profile nature of a packet, based on its QoS markings.

Color aware profiling adds the ability to selectively treat packets received on a SAP as in-profile or out-of-profile regardless of the queue forwarding rate. This allows a customer or access device to color a packet out-of-profile with the intention of preserving in-profile bandwidth for higher priority packets. The customer or access device may also color the packet in-profile, but this is rarely done as the original packets are usually already marked with the in-profile marking.

Each ingress access forwarding class may have one or multiple subclass associations for SAP ingress classification purposes. Each subclass retains the chassis-wide behavior defined to the parent class while providing expanded ingress QoS classification actions. Subclasses are created to provide a match association that enforces actions different than the parent forwarding class. These actions include explicit ingress remarking decisions and color aware functions.

All non-profiled and profiled packets are forwarded through the same ingress access queue to prevent out-of-sequence forwarding. Profiled packets in-profile are counted against the total packets flowing through the queue that are marked in-profile. This reduces the amount of CIR available to non-profiled packets causing fewer to be marked in-profile. Profiled packets out-of-profile are not counted against the total packets flowing through the queue that are marked in-profile. This ensures that the amount of non-profiled packets marked in-profile is not affected by the profiled out-of-profile packet rate.
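The accounting rule in the previous paragraph can be illustrated with a toy Python model. It assumes a fixed in-profile budget counted in packets for one measurement interval, which is a deliberate simplification of the rate-based behavior of a real queue; the function name and the packet-count budget are hypothetical.

```python
from typing import Iterable, List, Optional


def mark_profiles(hints: Iterable[Optional[str]], cir_budget: int) -> List[str]:
    """Assign in/out profile to packets sharing one ingress queue.

    hints: "in" or "out" for color-aware (profiled) packets, None for
    non-profiled packets. cir_budget: number of packets that may be
    marked in-profile in this interval (toy stand-in for the CIR).
    """
    in_count = 0
    result = []
    for hint in hints:
        if hint == "in":
            in_count += 1            # explicit in-profile counts against the CIR
            result.append("in")
        elif hint == "out":
            result.append("out")     # explicit out-of-profile is not counted
        elif hint is None:
            if in_count < cir_budget:
                in_count += 1
                result.append("in")
            else:
                result.append("out")
        else:
            raise ValueError(f"unexpected hint: {hint!r}")
    return result


print(mark_profiles([None, "in", "out", None, None], cir_budget=2))
print(mark_profiles([None, "out", "out", None, None], cir_budget=2))
```

In the first call the explicit in-profile packet uses up budget, so both later non-profiled packets are marked out-of-profile. In the second call the explicit out-of-profile packets leave the budget untouched, so one later non-profiled packet is still marked in-profile.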

QoS policies overview

Service ingress, service egress, and network QoS policies are defined with a scope of either template or exclusive. Template policies can be applied to multiple SAPs or IP interfaces; exclusive policies can only be applied to a single entity.

On most systems, the number of configurable SAP ingress and egress QoS policies per system is larger than the maximum number that can be applied per FP. The tools>dump>resource-usage>card>fp output displays the number of policies applied on an FP (the default SAP ingress policy is always applied once for internal use). The tools>dump>resource-usage>system output displays the usage of the policies at a system level. The show>qos>sap-ingress and show>qos>sap-egress commands can be used to show the number of policies configured.

One service ingress QoS policy and one service egress QoS policy can be applied to a specific SAP. One network QoS policy can be applied to a specific IP interface. A network QoS policy defines both ingress and egress behavior.

Router QoS policies are applied on service ingress, service egress, and network interfaces and define the following:

classification rules for how traffic is mapped to queues

the number of forwarding class queues

the queue parameters used for policing, shaping, and buffer allocation

QoS marking/interpretation

The router supports thousands of queues. The exact numbers depend on the hardware being deployed.

There are several types of QoS policies:

service ingress

service egress

network (for ingress and egress)

network queue (for ingress and egress)

scheduler

shared queue

slope

Service ingress QoS policies are applied to the customer-facing SAPs and map traffic to forwarding class queues on ingress. The mapping of traffic to queues can be based on combinations of customer QoS marking (IEEE 802.1p bits, DSCP, and ToS precedence), IP criteria, and MAC criteria.

The characteristics of the forwarding class queues are defined within the policy as to the number of forwarding class queues for unicast traffic and the queue characteristics. There can be up to eight unicast forwarding class queues in the policy; one for each forwarding class. A service ingress QoS policy also defines up to three queues per forwarding class to be used for multipoint traffic for multipoint services.

In the case of VPLS, four types of forwarding are supported (not to be confused with forwarding classes): unicast, multicast, broadcast, and unknown. Multicast, broadcast, and unknown types are flooded to all destinations within the service while the unicast forwarding type is handled in a point-to-point manner within the service.

Service egress QoS policies are applied to SAPs and map forwarding classes to service egress queues for a service. Up to eight queues per service can be defined for the eight forwarding classes. A service egress QoS policy also defines how to remark the forwarding class to IEEE 802.1p bits in the customer traffic.

Network QoS policies are applied to IP interfaces. On ingress, the policy applied to an IP interface maps incoming DSCP and EXP values to forwarding class and profile state for the traffic received from the core network. On egress, the policy maps forwarding class and profile state to DSCP and EXP values for traffic to be transmitted into the core network.

Network queue policies are applied on egress to network ports and channels and on ingress to FPs. The policies define the forwarding class queue characteristics for these entities.

If no QoS policy is explicitly applied to a SAP or IP interface, a default QoS policy is applied.

A summary of the major functions performed by the QoS policies is listed in QoS policy types and descriptions.

Policy type |

Applied at… |

Description |

See |

|---|---|---|---|

Service Ingress |

SAP ingress |

Defines up to 32 forwarding class queues and queue parameters for traffic classification Defines up to 31 multipoint service queues for broadcast, multicast, and destination unknown traffic in multipoint services Defines match criteria to map flows to the queues based on combinations of customer QoS (IEEE 802.1p/DE bits, DSCP, TOS precedence), IP criteria, or MAC criteria |

|

Service Egress |

SAP egress |

Defines up to eight forwarding class queues and queue parameters for traffic classification Maps one or more forwarding classes to the queues |

|

Network |

Router interface |

Used for classification/marking of IP and MPLS packets At ingress, defines DSCP, dot1p MPLS LSP-EXP, and IP criteria classification to FC mapping At ingress, defines FC to policer/queue-group queue mapping At egress, defines DSCP or precedence FC mapping At egress, defines FC to policer/queue-group queue mapping At egress, defines DSCP, MPLS LSP-EXP, and dot1p/DE marking |

|

Network Queue |

Network ingress FP and egress port |

Defines forwarding class mappings to network queues and queue characteristics for the queues |

|

Slope |

Ports |

Enables or disables the WRED slope parameters within an egress queue, an ingress pool, or an egress megapool |

|

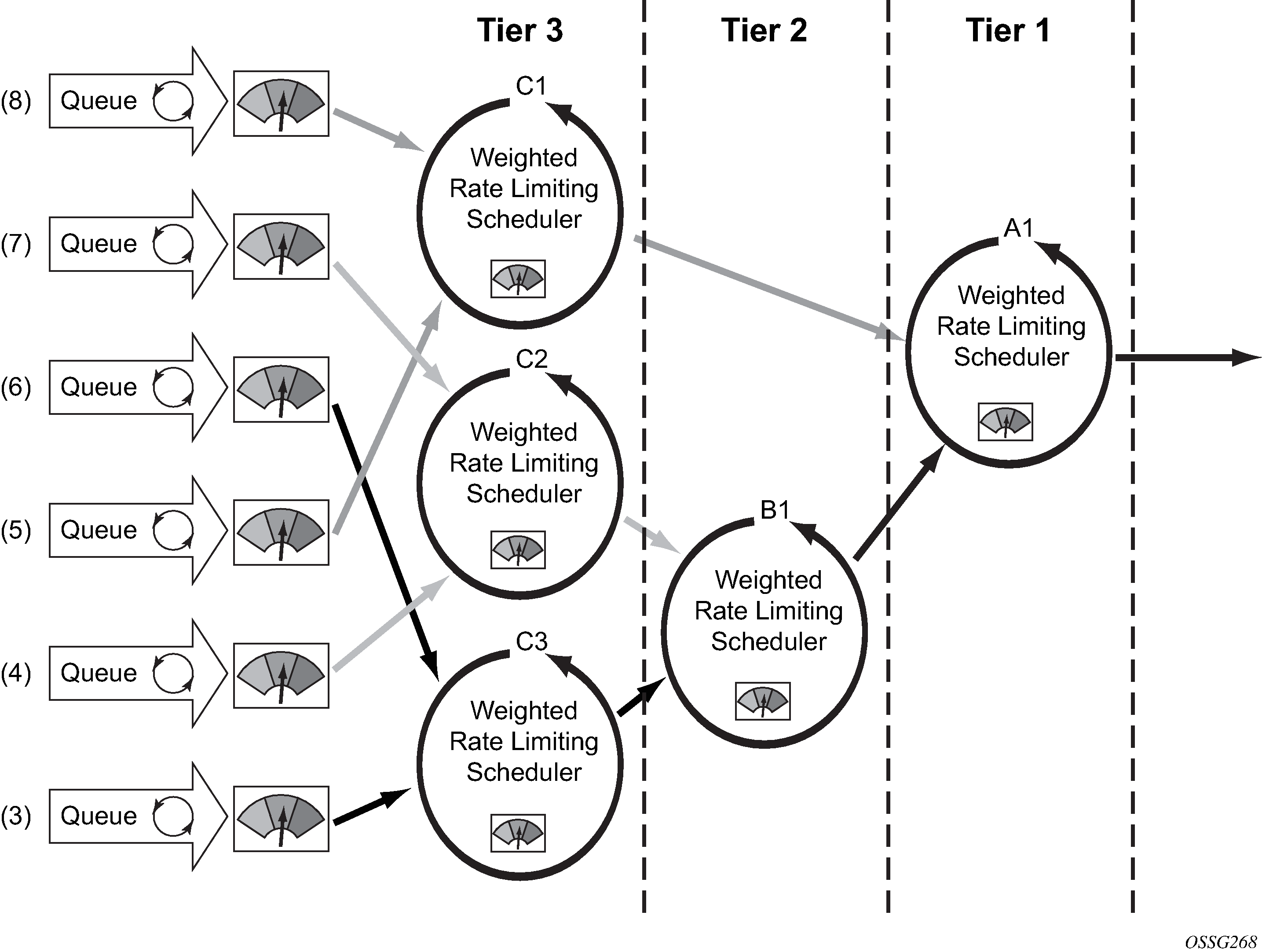

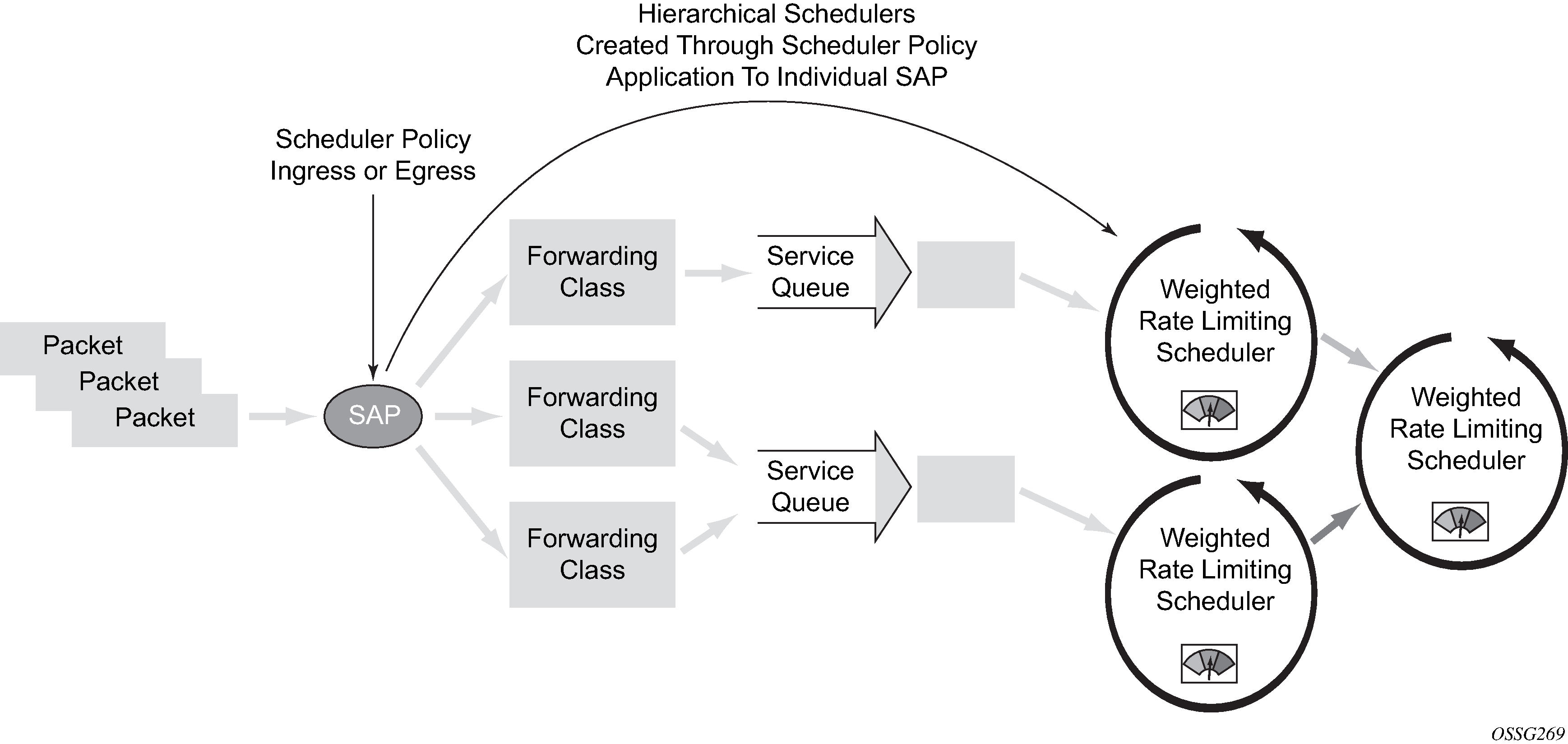

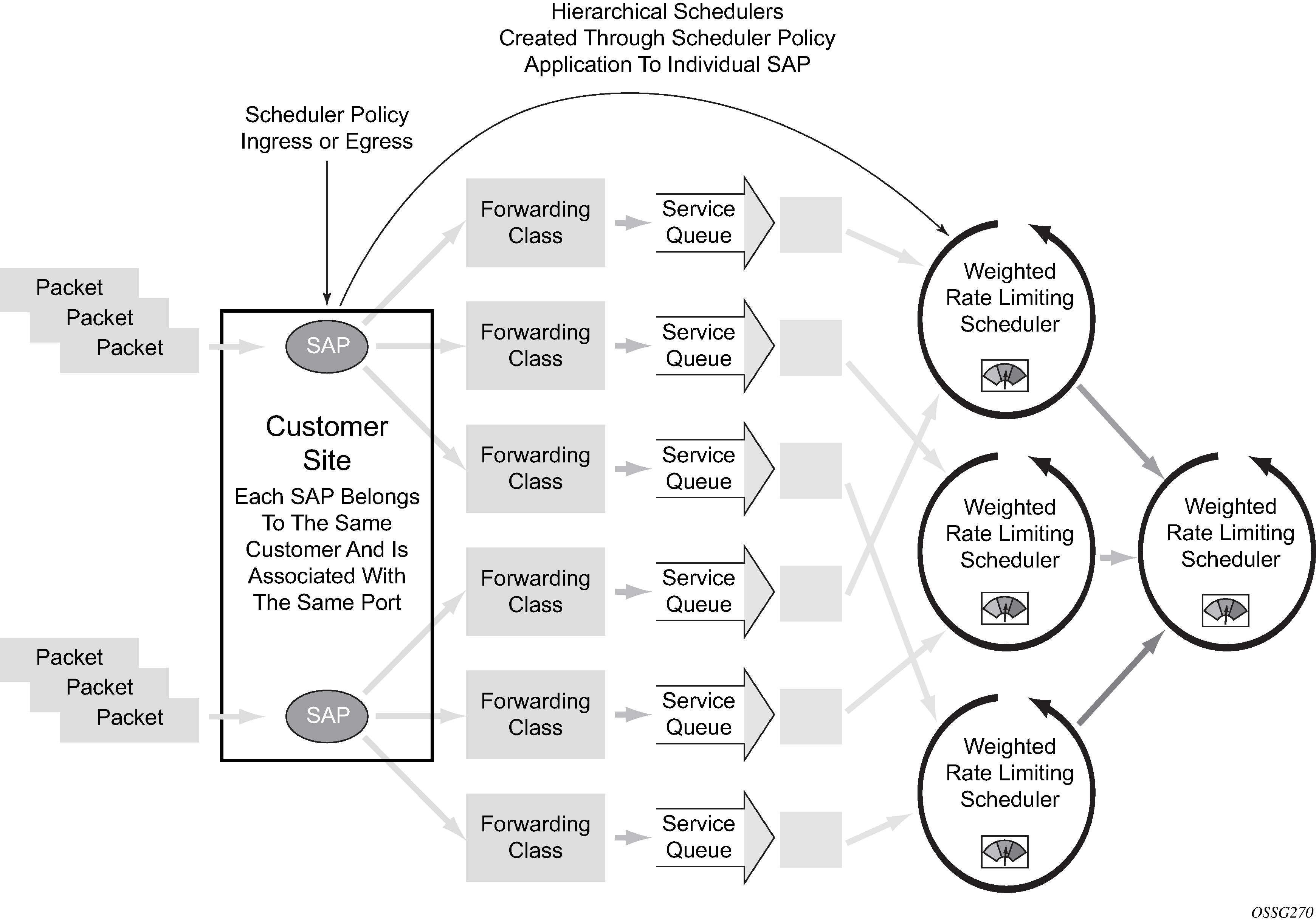

Scheduler |

Customer multiservice site Service SAP |

Defines the hierarchy and parameters for each scheduler Defined in the context of a tier that is used to place the scheduler within the hierarchy Three tiers of virtual schedulers are supported |

|

Shared Queue |

SAP ingress |

Shared queues can be implemented to mitigate the queue consumption on an MDA |

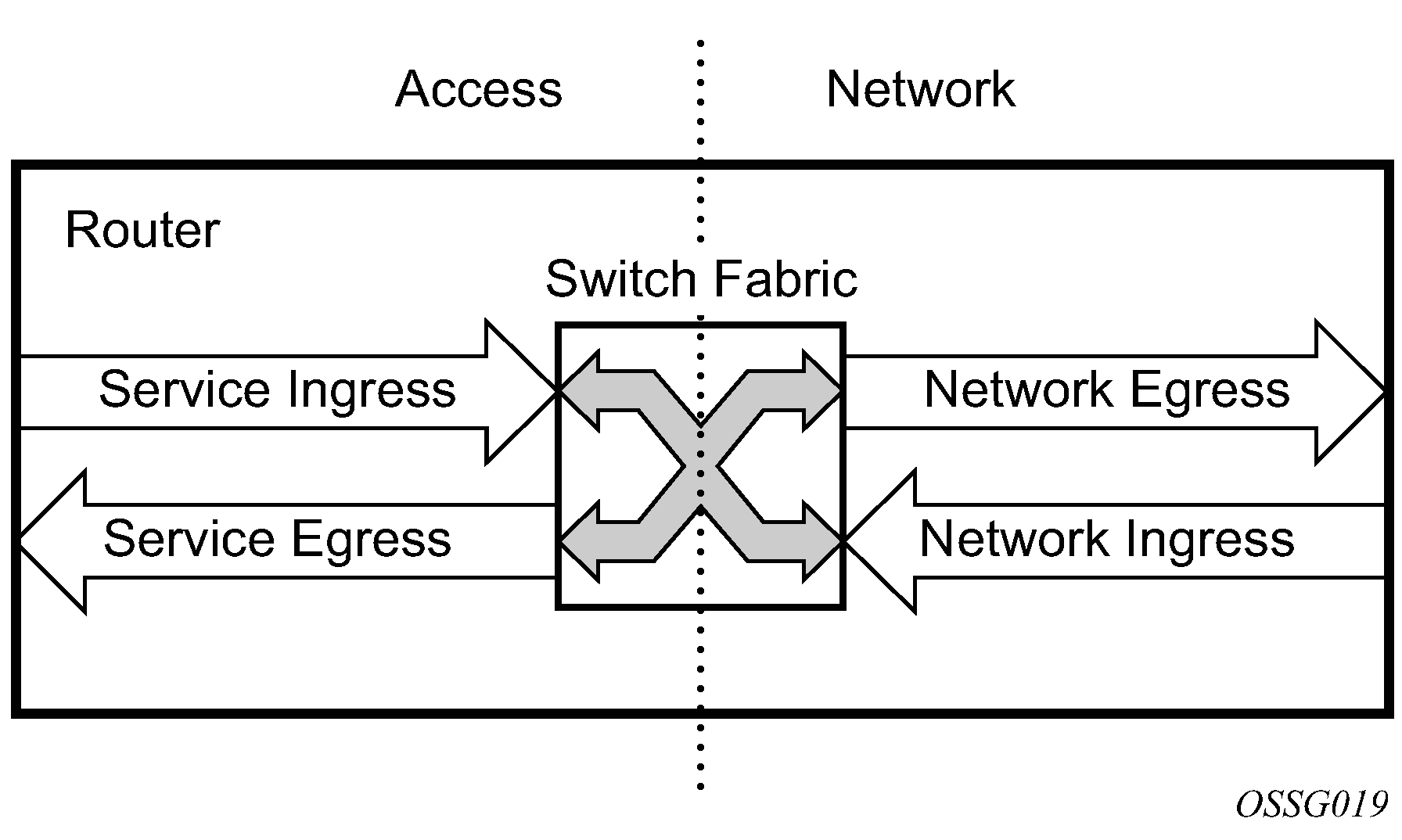

Service versus network QoS

The QoS mechanisms within the routers are specialized for the type of traffic on the interface. For customer interfaces, there is service ingress and egress traffic, and for network core interfaces, there is network ingress and network egress traffic, as shown in Service vs network traffic types.

The router uses QoS policies applied to a SAP for a service or to a network FP/port to define the queuing, queue attributes, and QoS marking/interpretation.

The router supports four types of service and network QoS policies:

service ingress QoS policies

service egress QoS policies

network QoS policies

network queue QoS policies

QoS policy entities

Services are configured with default QoS policies. Additional policies must be explicitly created and associated. There is one default service ingress QoS policy, one default service egress QoS policy, and one default network QoS policy. Only one ingress QoS policy and one egress QoS policy can be applied to a SAP or network entity.

When a new QoS policy is created, default values are provided for most parameters with the exception of the policy ID and queue ID values, descriptions, and the default action queue assignment. Each policy has a scope, default action, description, and at least one queue. The queue is associated with a forwarding class.

Service types and applicable service QoS policies lists which service QoS policies are supported for each service type.

| Service type | QoS policies |
|---|---|
| Epipe | Both ingress and egress policies are supported on an Epipe SAP. |
| VPLS | Both ingress and egress policies are supported on a VPLS SAP. |
| IES | Both ingress and egress policies are supported on an IES SAP. |
| VPRN | Both ingress and egress policies are supported on a VPRN SAP. |

Network QoS policies can be applied to the following entities:

network interfaces

Cpipe/Epipe/Ipipe and VPLS spoke-SDPs

VPLS spoke- and mesh-SDPs

IES and VPRN interface spoke-SDPs

VPRN network ingress

VXLAN network ingress

Network queue policies can be applied to:

ingress FPs

egress ports

Default QoS policies map all traffic with equal priority and allow an equal chance of transmission (Best Effort (be) forwarding class) and an equal chance of being dropped during periods of congestion. QoS prioritizes traffic according to the forwarding class and uses congestion management to control access ingress, access egress, and network traffic with queuing according to priority.

Network QoS policies

Network QoS policies define egress QoS marking and ingress QoS interpretation for traffic on core network IP interfaces. The router automatically creates egress queues for each of the forwarding classes on network IP interfaces.

A network QoS policy defines both the ingress and egress handling of QoS on the IP interface. The following functions are defined:

ingress

defines DSCP, Dot1p, and IP criteria mappings to forwarding classes

defines LSP EXP value mappings to forwarding classes

egress

defines DSCP and IP precedence mappings to forwarding classes

defines forwarding class to DSCP value markings

defines forwarding class to Dot1p/DE value markings

defines forwarding class to LSP EXP value markings

enables/disables remarking of QoS

defines FC to policer/queue-group queue mapping

The required elements to be defined in a network QoS policy are:

a unique network QoS policy ID

egress forwarding class to DSCP value mappings for each forwarding class

egress forwarding class to Dot1p value mappings for each forwarding class

egress forwarding class to LSP EXP value mappings for each forwarding class

enabling/disabling of egress QoS remarking

a default ingress forwarding class and in-profile/out-of-profile state

Optional network QoS policy elements include:

DSCP name-to-forwarding class and profile state mappings for all DSCP values received

LSP EXP value-to-forwarding class and profile state mappings for all EXP values received

ingress FC fp-redirect-group policer mapping

egress FC port-redirect-group queue/policer mapping

Network policy ID 1 is reserved as the default network QoS policy. The default policy cannot be deleted or changed.

The default network QoS policy is applied to all network interfaces that do not have another network QoS policy explicitly assigned. Default network QoS policy egress marking describes the default network QoS policy egress marking.

| FC-ID | FC name | FC label | DiffServ name | Egress DSCP marking, in-profile name | Egress DSCP marking, out-of-profile name | Egress LSP EXP marking, in-profile | Egress LSP EXP marking, out-of-profile |
|---|---|---|---|---|---|---|---|
| 7 | Network Control | nc | NC2 | nc2 111000 - 56 | nc2 111000 - 56 | 111 - 7 | 111 - 7 |
| 6 | High-1 | h1 | NC1 | nc1 110000 - 48 | nc1 110000 - 48 | 110 - 6 | 110 - 6 |
| 5 | Expedited | ef | EF | ef 101110 - 46 | ef 101110 - 46 | 101 - 5 | 101 - 5 |
| 4 | High-2 | h2 | AF4 | af41 100010 - 34 | af42 100100 - 36 | 100 - 4 | 100 - 4 |
| 3 | Low-1 | l1 | AF2 | af21 010010 - 18 | af22 010100 - 20 | 011 - 3 | 010 - 2 |
| 2 | Assured | af | AF1 | af11 001010 - 10 | af12 001100 - 12 | 011 - 3 | 010 - 2 |
| 1 | Low-2 | l2 | CS1 | cs1 001000 - 8 | cs1 001000 - 8 | 001 - 1 | 001 - 1 |
| 0 | Best Effort | be | BE | be 000000 - 0 | be 000000 - 0 | 000 - 0 | 000 - 0 |
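The default egress marking can be expressed as a lookup keyed on forwarding class label and profile state. The Python sketch below copies the values from the table above; the data structure and function name are hypothetical and only illustrate how the in-profile and out-of-profile columns are selected.

```python
# FC label -> ((DSCP name, DSCP value) in-profile, (name, value) out-of-profile,
#              LSP EXP in-profile, LSP EXP out-of-profile)
DEFAULT_EGRESS_MARKING = {
    "nc": (("nc2", 0b111000), ("nc2", 0b111000), 7, 7),
    "h1": (("nc1", 0b110000), ("nc1", 0b110000), 6, 6),
    "ef": (("ef", 0b101110), ("ef", 0b101110), 5, 5),
    "h2": (("af41", 0b100010), ("af42", 0b100100), 4, 4),
    "l1": (("af21", 0b010010), ("af22", 0b010100), 3, 2),
    "af": (("af11", 0b001010), ("af12", 0b001100), 3, 2),
    "l2": (("cs1", 0b001000), ("cs1", 0b001000), 1, 1),
    "be": (("be", 0b000000), ("be", 0b000000), 0, 0),
}


def egress_marking(fc: str, in_profile: bool):
    """Return (DSCP name, DSCP decimal value, LSP EXP) for a packet."""
    dscp_in, dscp_out, exp_in, exp_out = DEFAULT_EGRESS_MARKING[fc]
    name, value = dscp_in if in_profile else dscp_out
    return name, value, exp_in if in_profile else exp_out


print(egress_marking("h2", in_profile=False))  # ('af42', 36, 4)
print(egress_marking("l1", in_profile=True))   # ('af21', 18, 3)
```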

For network ingress, Default network QoS policy DSCP to forwarding class mappings and Forwarding class and enqueuing priority classification hierarchy based on rule type list the default mapping of DSCP name and LSP EXP values to forwarding class and profile state for the default network QoS policy.

| Ingress DSCP name | Ingress DSCP value (binary - decimal) | FC ID | FC name | FC label | Profile state |
|---|---|---|---|---|---|
| Default | | 0 | Best-Effort | be | Out |
| ef | 101110 - 46 | 5 | Expedited | ef | In |
| nc1 | 110000 - 48 | 6 | High-1 | h1 | In |
| nc2 | 111000 - 56 | 7 | Network Control | nc | In |
| af11 | 001010 - 10 | 2 | Assured | af | In |
| af12 | 001100 - 12 | 2 | Assured | af | Out |
| af13 | 001110 - 14 | 2 | Assured | af | Out |
| af21 | 010010 - 18 | 3 | Low-1 | l1 | In |
| af22 | 010100 - 20 | 3 | Low-1 | l1 | Out |
| af23 | 010110 - 22 | 3 | Low-1 | l1 | Out |
| af31 | 011010 - 26 | 3 | Low-1 | l1 | In |
| af32 | 011100 - 28 | 3 | Low-1 | l1 | Out |
| af33 | 011110 - 30 | 3 | Low-1 | l1 | Out |
| af41 | 100010 - 34 | 4 | High-2 | h2 | In |
| af42 | 100100 - 36 | 4 | High-2 | h2 | Out |
| af43 | 100110 - 38 | 4 | High-2 | h2 | Out |
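As an illustration of how the ingress table is consulted, the following Python sketch maps a received DSCP value to a forwarding class label and profile state. It contains only the rows listed in the table above and treats every other value as the Default row, which is an assumption of this sketch; the names are hypothetical.

```python
# DSCP decimal value -> (FC label, profile state), from the table above.
DEFAULT_DSCP_TO_FC = {
    46: ("ef", "in"),
    48: ("h1", "in"),
    56: ("nc", "in"),
    10: ("af", "in"), 12: ("af", "out"), 14: ("af", "out"),
    18: ("l1", "in"), 20: ("l1", "out"), 22: ("l1", "out"),
    26: ("l1", "in"), 28: ("l1", "out"), 30: ("l1", "out"),
    34: ("h2", "in"), 36: ("h2", "out"), 38: ("h2", "out"),
}
DEFAULT_ENTRY = ("be", "out")  # the Default row


def classify_dscp(dscp: int):
    """Return (FC label, profile state) for a 6-bit DSCP value."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0 to 63")
    return DEFAULT_DSCP_TO_FC.get(dscp, DEFAULT_ENTRY)


print(classify_dscp(0b100010))  # ('h2', 'in')
print(classify_dscp(0))         # ('be', 'out')
```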

Network queue QoS policies

Network queue policies define the network forwarding class queue characteristics. Network queue policies are applied on egress on core network ports or channels and on ingress FPs. Network queue policies can be configured to use as many queues as needed. This means that the number of queues can vary. Not all policies use the same number of queues as the default network queue policy. The multicast queues are only used at ingress.

The queue characteristics that can be configured on a per-forwarding class basis are:

CBS as a percentage of the buffer pool

MBS as a percentage of the buffer pool

low drop tail as a percentage reduction from MBS

PIR as a percentage of the FP ingress capacity or egress port bandwidth

CIR as a percentage of the FP ingress capacity or egress port bandwidth

FIR as a percentage of the FP ingress capacity or egress port bandwidth
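Because these parameters are percentages, the absolute values depend on the buffer pool size and on the FP ingress capacity or egress port bandwidth. The Python sketch below shows the arithmetic for a subset of the parameters under hypothetical inputs; the class, function, and example numbers are illustrative and do not reflect defaults of any policy.

```python
from dataclasses import dataclass


@dataclass
class NetworkQueuePercentages:
    cbs_pct: float            # percentage of the buffer pool
    mbs_pct: float            # percentage of the buffer pool
    low_drop_tail_pct: float  # percentage reduction from MBS
    pir_pct: float            # percentage of FP capacity or port bandwidth
    cir_pct: float            # percentage of FP capacity or port bandwidth


def resolve(params: NetworkQueuePercentages, pool_kb: float, rate_base_kbps: float) -> dict:
    """Convert percentage parameters to absolute values."""
    mbs_kb = pool_kb * params.mbs_pct / 100
    return {
        "cbs_kb": pool_kb * params.cbs_pct / 100,
        "mbs_kb": mbs_kb,
        # Depth above which low-priority traffic is tail dropped.
        "low_drop_tail_kb": mbs_kb * (100 - params.low_drop_tail_pct) / 100,
        "pir_kbps": rate_base_kbps * params.pir_pct / 100,
        "cir_kbps": rate_base_kbps * params.cir_pct / 100,
    }


# Hypothetical example: 100000 KB pool, 10 Gb/s port.
example = NetworkQueuePercentages(cbs_pct=1, mbs_pct=50, low_drop_tail_pct=10,
                                  pir_pct=100, cir_pct=25)
print(resolve(example, pool_kb=100_000, rate_base_kbps=10_000_000))
```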

Network queue policies are identified with a unique policy name that conforms to the standard router alphanumeric naming conventions.

The system default network queue policy is named default and cannot be edited or deleted. For information about the default network queue policy, see Network queue QoS policies.

Service ingress QoS policies

Service ingress QoS policies define ingress service forwarding class queues and map flows to those queues. When a service ingress QoS policy is created by default, it always has two queues defined that cannot be deleted: one for the default unicast traffic and one for the default multipoint traffic. These queues exist within the definition of the policy. The queues only get instantiated in hardware when the policy is applied to a SAP. In the case where the service does not have multipoint traffic, the multipoint queues are not instantiated.

In the simplest service ingress QoS policy, all traffic is treated as a single flow and mapped to a single queue, and all flooded traffic is treated with a single multipoint queue. The required elements to define a service ingress QoS policy are:

a unique service ingress QoS policy ID

a QoS policy scope of template or exclusive

at least one default unicast forwarding class queue. The parameters that can be configured for a queue are discussed in Queue parameters.

at least one multipoint forwarding class queue

Optional service ingress QoS policy elements include:

additional unicast queues up to a total of 32

additional multipoint queues up to 31

QoS policy match criteria to map packets to a forwarding class

To facilitate more forwarding classes, subclasses are supported. Each forwarding class can have one or multiple subclass associations for SAP ingress classification purposes. Each subclass retains the chassis-wide behavior defined to the parent class while providing expanded ingress QoS classification actions.

There can be up to 64 classes and subclasses combined in a sap-ingress policy. With the extra 56 values, the size of the forwarding class space is more than sufficient to handle the various combinations of actions.

Forwarding class expansion is accomplished through the explicit definition of sub-forwarding classes within the SAP ingress QoS policy. The CLI mechanism that creates forwarding class associations within the SAP ingress policy is also used to create subclasses. A portion of the subclass definition directly ties the subclass to a parent, chassis-wide forwarding class. The subclass is only used as a SAP ingress QoS classification tool; the subclass association is lost when ingress QoS processing is finished.

When configured with this option, the forwarding class and drop priority of incoming traffic are determined by the mapping result of the EXP bits in the top label. Forwarding class and enqueuing priority classification hierarchy based on rule type lists the classification hierarchy based on rule type.

| # | Rule | Forwarding class | Enqueuing priority | Comments |
|---|---|---|---|---|
| 1 | default-fc | Set the policy’s default forwarding class. | Set to policy default | All packets match the default rule. |
| 2 | dot1p dot1p-value | Set when an fc-name exists in the policy. Otherwise, preserve from the previous match. | Set when the priority parameter is high or low. Otherwise, preserve from the previous match. | Each dot1p-value must be explicitly defined. Each packet can only match a single dot1p rule. |
| 3 | lsp-exp exp-value | Set when an fc-name exists in the policy. Otherwise, preserve from the previous match. | Set when the priority parameter is high or low. Otherwise, preserve from the previous match. | Each exp-value must be explicitly defined. Each packet can only match a single lsp-exp rule. This rule can only be applied on Ethernet L2 SAP. |
| 4 | prec ip-prec-value | Set when an fc-name exists in the policy. Otherwise, preserve from the previous match. | Set when the priority parameter is high or low. Otherwise, preserve from the previous match. | Each ip-prec-value must be explicitly defined. Each packet can only match a single prec rule. |
| 5 | dscp dscp-name | Set when an fc-name exists in the policy. Otherwise, preserve from the previous match. | Set when the priority parameter is high or low in the entry. Otherwise, preserve from the previous match. | Each dscp-name that defines the DSCP value must be explicitly defined. Each packet can only match a single DSCP rule. |
| 6 | IP criteria: multiple entries per policy; multiple criteria per entry | Set when an fc-name exists in the entry’s action. Otherwise, preserve from the previous match. | Set when the priority parameter is high or low in the entry action. Otherwise, preserve from the previous match. | When IP criteria is specified, entries are matched based on ascending order until first match, then processing stops. A packet can only match a single IP criteria entry. |
| 7 | MAC criteria: multiple entries per policy; multiple criteria per entry | Set when an fc-name exists in the entry’s action. Otherwise, preserve from the previous match. | Set when the priority parameter is specified as high or low in the entry action. Otherwise, preserve from the previous match. | When MAC criteria is specified, entries are matched based on ascending order until first match, then processing stops. A packet can only match a single MAC criteria entry. |
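The "set, otherwise preserve from the previous match" behavior in the hierarchy can be illustrated with a short Python model. This is a minimal sketch, not router code: the `Rule` class, the `classify` function, and the sample forwarding class names are hypothetical, and it assumes the caller passes in only the rules that the packet actually matched, in hierarchy order.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    """One matched classification rule (hypothetical model).

    A value of None means the rule does not set that attribute,
    so the result of the previous match is preserved.
    """
    name: str
    fc: Optional[str] = None
    priority: Optional[str] = None  # "high" or "low"


def classify(default_fc, default_priority, matched_rules):
    """Walk the matched rules in hierarchy order.

    Rule 1 (default-fc) always matches and seeds the result; each later
    rule overrides only the attributes it explicitly sets.
    """
    fc, priority = default_fc, default_priority
    for rule in matched_rules:
        if rule.fc is not None:
            fc = rule.fc
        if rule.priority is not None:
            priority = rule.priority
    return fc, priority


# A dot1p rule sets only the forwarding class; a later dscp rule sets
# only the enqueuing priority, so the forwarding class is preserved.
matched = [Rule("dot1p 5", fc="af"), Rule("dscp ef", priority="high")]
result = classify("be", "low", matched)
```

In this example the packet ends up in forwarding class `af` with enqueuing priority `high`, because each rule changes only the attribute it defines.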

FC mapping based on EXP bits at VLL/VPLS SAP

To accommodate backbone ISPs that want to provide VPLS/VLL to small ISPs as a site-to-site interconnection service, small ISP routers can connect to Ethernet Layer 2 SAPs. The traffic is encapsulated in a VLL/VPLS SDP. These small ISP routers are typically PE routers. To provide appropriate QoS, the routers support a classification option that is based on the received MPLS EXP bits. Example configuration — carrier application shows a sample configuration.

The lsp-exp command is supported in the SAP ingress QoS policy. This option can only be applied on Ethernet Layer 2 SAPs.

Forwarding class classification based on rule type lists forwarding class behavior by rule type.

| # | Rule | Forwarding class | Comments |
|---|---|---|---|
| 1 | default-fc | Set the policy’s default forwarding class. | All packets match the default rule. |
| 2 | IP criteria: | Set when an fc-name exists in the entry’s action. Otherwise, preserve from the previous match. | When IP criteria is specified, entries are matched based on ascending order until first match, then processing stops. A packet can only match a single IP criteria entry. |
| 3 | MAC criteria: | Set when an fc-name exists in the entry’s action. Otherwise, preserve from the previous match. | When MAC criteria is specified, entries are matched based on ascending order until first match, then processing stops. A packet can only match a single MAC criteria entry. |

The enqueuing priority is specified as part of the classification rule and is set to ‟high” or ‟low”. The enqueuing priority relates to the forwarding class queue’s low drop tail where only packets with a high enqueuing priority are accepted into the queue when the queue’s depth reaches the defined threshold. See Queue drop tails.
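The admission behavior described above can be sketched as a simple check. This is an illustrative model only: the function name and its parameters are hypothetical, and the `max_depth` limit is an assumption added so that the model has an upper bound; the text above defines only the low drop tail threshold.

```python
def accept(queue_depth, low_drop_tail, max_depth, priority):
    """Return True if a packet would be admitted to the queue.

    queue_depth   -- current queue depth (same unit as the thresholds)
    low_drop_tail -- depth at which low enqueuing priority packets are refused
    max_depth     -- assumed hard limit of the queue (not taken from the text)
    priority      -- "high" or "low" enqueuing priority
    """
    if queue_depth >= max_depth:
        return False  # assumed: a full queue accepts nothing
    if queue_depth >= low_drop_tail and priority != "high":
        return False  # at or past the threshold, only high priority gets in
    return True
```

Below the threshold both priorities are admitted; once the depth reaches the threshold, only packets with a high enqueuing priority are accepted.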

The mapping of IEEE 802.1p bits, IP Precedence, and DSCP values to forwarding classes is optional as is specifying IP and MAC criteria.

The IP and MAC match criteria can be very basic or quite detailed. IP and MAC match criteria are constructed from policy entries. An entry is identified by a unique, numerical entry ID. A single entry cannot contain more than one match value for each match criterion. Each match entry has a queuing action that specifies:

the forwarding class of packets that match the entry

the enqueuing priority (high or low) for matching packets

The entries are evaluated in numerical order based on the entry ID from the lowest to highest ID value. The first entry that matches all match criteria has its action performed.
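The evaluation order can be modeled in a few lines of Python. This is a sketch under stated assumptions: the entry layout, the field names (`dst_prefix`, `protocol`, `dst_ip`), and the forwarding class names are hypothetical and are not router syntax. It shows only the two properties described above: entries are tried in ascending entry ID order, and an entry matches only if every one of its criteria matches.

```python
from ipaddress import ip_address, ip_network

# Hypothetical entries keyed by entry ID, deliberately listed out of order.
entries = {
    20: {"match": {"dst_prefix": "10.1.0.0/16"}, "fc": "af", "priority": "low"},
    10: {"match": {"dst_prefix": "10.1.2.0/24", "protocol": "udp"},
         "fc": "ef", "priority": "high"},
}


def entry_matches(match, packet):
    """An entry matches only when all of its criteria match the packet."""
    for field, wanted in match.items():
        if field == "dst_prefix":
            if ip_address(packet["dst_ip"]) not in ip_network(wanted):
                return False
        elif packet.get(field) != wanted:
            return False
    return True


def first_match(entries, packet):
    """Evaluate entries from lowest to highest ID and stop at the first match."""
    for entry_id in sorted(entries):
        entry = entries[entry_id]
        if entry_matches(entry["match"], packet):
            return entry_id, entry["fc"], entry["priority"]
    return None  # no entry matched; earlier classification results stand
```

A UDP packet to 10.1.2.5 is classified by entry 10, even though entry 20 also covers that address, because entry 10 has the lower ID. A TCP packet to the same address fails the protocol criterion of entry 10 and falls through to entry 20.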

The supported service ingress QoS policy IP match criteria are:

destination IP address/prefix

destination port/range

IP fragment

protocol type (TCP, UDP, and so on)

source port/range

source IP address/prefix

DSCP value

The supported service ingress QoS policy MAC match criteria are:

IEEE 802.2 LLC SSAP value/mask

IEEE 802.2 LLC DSAP value/mask

IEEE 802.3 LLC SNAP OUI zero or non-zero value

IEEE 802.3 LLC SNAP PID value

IEEE 802.1p value/mask

source MAC address/mask

destination MAC address/mask

EtherType value

The MAC match criteria that can be used for an Ethernet frame depend on the frame format. MAC match Ethernet frame types describes the frame formats.

| Frame format | Description |
|---|---|
| 802dot3 | IEEE 802.3 Ethernet frame. Only the source MAC, destination MAC, and IEEE 802.1p value are compared for match criteria. |
| 802dot2-llc | IEEE 802.3 Ethernet frame with an 802.2 LLC header. |
| 802dot2-snap | IEEE 802.3 Ethernet frame with an 802.2 SNAP header. |
| ethernet-II | Ethernet type II frame where the 802.3 length field is used as an Ethernet type (Etype) value. Etype values are 2-byte values greater than 0x5FF (1535 decimal). |

The 802dot3 frame format matches across all Ethernet frame formats where only the source MAC, destination MAC, and IEEE 802.1p value are compared. The other Ethernet frame types match those field values in addition to fields specific to the frame format. MAC match criteria frame type dependencies lists the criteria that can be matched for the various MAC frame types.

| Frame format | Source MAC | Dest MAC | IEEE 802.1p value | Etype value | LLC header SSAP/DSAP value/mask | SNAP-OUI zero/non-zero value | SNAP-PID value |
|---|---|---|---|---|---|---|---|
| 802dot3 | Yes | Yes | Yes | No | No | No | No |
| 802dot2-llc | Yes | Yes | Yes | No | Yes | No | No |
| 802dot2-snap | Yes | Yes | Yes | No | No¹ | Yes | Yes |
| ethernet-II | Yes | Yes | Yes | Yes | No | No | No |
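The frame type dependencies above lend themselves to a lookup table, for example to check a planned set of MAC criteria before configuring an entry. The following Python sketch encodes the table as sets; the criterion labels (`src-mac`, `etype`, and so on) and the function name are hypothetical shorthand, not CLI keywords.

```python
# Criteria that can be matched for each frame format, per the table above.
MATCHABLE = {
    "802dot3": {"src-mac", "dst-mac", "dot1p"},
    "802dot2-llc": {"src-mac", "dst-mac", "dot1p", "llc-ssap-dsap"},
    "802dot2-snap": {"src-mac", "dst-mac", "dot1p", "snap-oui", "snap-pid"},
    "ethernet-II": {"src-mac", "dst-mac", "dot1p", "etype"},
}


def unsupported_criteria(frame_format, criteria):
    """Return the requested criteria that the frame format cannot match."""
    return sorted(set(criteria) - MATCHABLE[frame_format])
```

For instance, requesting an Etype match together with a source MAC match on an `802dot2-llc` frame reports `etype` as unsupported, while the same request on `ethernet-II` reports nothing.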

Service ingress QoS policy ID 1 is reserved for the default service ingress policy. The default policy cannot be deleted or changed.

The default service ingress policy is implicitly applied to all SAPs that do not explicitly have another service ingress policy assigned. Default service ingress policy ID 1 definition lists the characteristics of the default policy.

| Characteristic | Item | Definition |
|---|---|---|
| Queues | Queue 1 | One queue for all unicast traffic: |
| | Queue 11 | One queue for all multipoint traffic: |
| Flows | Default Forwarding Class | One flow defined for all traffic; all traffic mapped to best-effort (be) with a low priority. |

Egress forwarding class override

Egress forwarding class override provides additional QoS flexibility by allowing the use of a different forwarding class at egress than was used at ingress.

The ingress QoS processing classifies traffic into a forwarding class (or subclass) and by default the same forwarding class is used for this traffic at the access or network egress. The ingress forwarding class or subclass can be overridden so that the traffic uses a different forwarding class at the egress. This can be configured for the main forwarding classes and for subclasses, allowing each to use a different forwarding class at the egress.

The buffering, queuing, policing, and remarking operations at the ingress and egress remain unchanged. Egress reclassification is possible. The profile processing is completely unaffected by overriding the forwarding class.

When used in conjunction with QoS Policy Propagation Using BGP (QPPB), a QPPB assigned forwarding class takes precedence over both the normal ingress forwarding class classification rules and any egress forwarding class overrides.

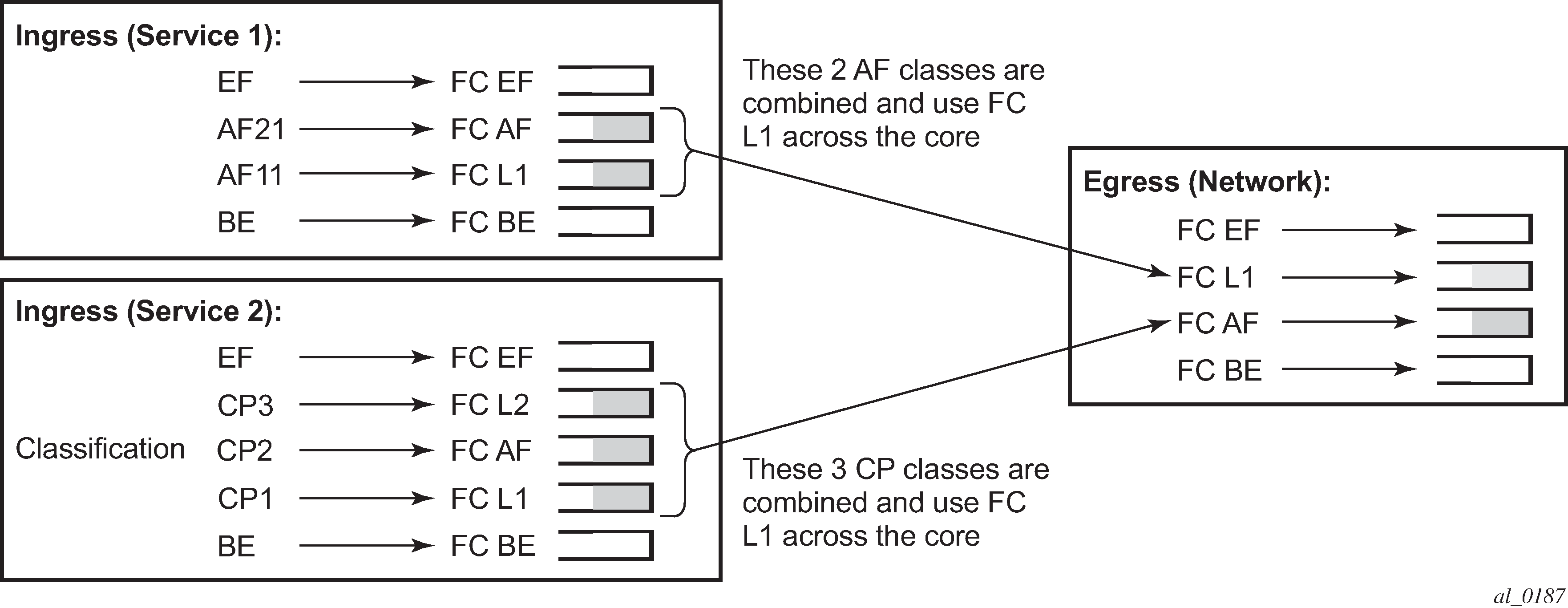

Egress forwarding class override shows the ingress service 1 using forwarding classes AF and L1 that are overridden to L1 for the network egress, while it also shows ingress service 2 using forwarding classes L1, AF, and L2 that are overridden to AF for the network egress.
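The two overrides in the figure can be expressed as a per-service mapping from ingress forwarding class to egress forwarding class. This Python sketch is illustrative only; the service labels, the lowercase forwarding class names, and the `egress_fc` function are hypothetical, and a forwarding class without an override is assumed to keep its ingress value, as described above.

```python
# Per-service overrides: ingress forwarding class -> egress forwarding class.
# Classes that already equal the target need no entry.
overrides = {
    "service-1": {"af": "l1"},              # AF and L1 both leave as L1
    "service-2": {"l1": "af", "l2": "af"},  # L1, AF, and L2 all leave as AF
}


def egress_fc(service, ingress_fc):
    """Return the forwarding class used at egress for this service."""
    return overrides.get(service, {}).get(ingress_fc, ingress_fc)
```

With this mapping, service 1 traffic classified as AF at ingress uses L1 at the network egress, and any service without an override keeps its ingress forwarding class.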

Service egress QoS policies

Service egress queues are implemented at the transition from the service core network to the service access network. The advantages of per-service queuing before transmission into the access network are:

per-service egress subrate capabilities especially for multipoint services

more granular, fairer scheduling per-service into the access network

per-service statistics for forwarded and discarded service packets

The subrate capabilities and per-service scheduling control are required to make multiple services per physical port possible. Without egress shaping, it is impossible to support more than one service per port, because there is no way to prevent service traffic from bursting to the available port bandwidth and starving other services.

For accounting purposes, per-service statistics can be logged. When statistics from service ingress queues are compared with service egress queues, the ability to conform to per-service QoS requirements within the service core can be measured. The service core statistics are a major asset to core provisioning tools.

Service egress QoS policies define egress queues and map forwarding class flows to queues. In the simplest service egress QoS policy, all forwarding classes are treated like a single flow and mapped to a single queue. To define a basic egress QoS policy, the following are required:

a unique service egress QoS policy ID

a QoS policy scope of template or exclusive

at least one defined default queue

Optional service egress QoS policy elements include:

additional queues up to a total of eight separate queues (unicast)

dot1p/DE, DSCP, and IP precedence remarking based on forwarding class

Each queue in a policy is associated with one of the forwarding classes. Each queue can have its own queue parameters, allowing individual rate shaping of the forwarding classes mapped to the queue.

More complex service queuing models are supported in the router where each forwarding class is associated with a dedicated queue.
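The difference between the simplest policy and a more complex one comes down to the forwarding-class-to-queue mapping. The Python sketch below is a hypothetical model, not policy syntax: the short forwarding class names are assumed abbreviations of the eight classes listed in the overview, and `build_fc_to_queue` is an illustrative helper.

```python
# Assumed short names for the eight forwarding classes.
FORWARDING_CLASSES = ("nc", "h1", "ef", "h2", "l1", "af", "l2", "be")


def build_fc_to_queue(fc_queue_overrides=None, default_queue=1):
    """Map every forwarding class to a queue.

    Classes without an explicit mapping fall back to the default queue,
    which reproduces the single-queue behavior of the simplest policy.
    """
    fc_queue_overrides = fc_queue_overrides or {}
    unknown = set(fc_queue_overrides) - set(FORWARDING_CLASSES)
    if unknown:
        raise ValueError(f"unknown forwarding classes: {sorted(unknown)}")
    mapping = {fc: default_queue for fc in FORWARDING_CLASSES}
    mapping.update(fc_queue_overrides)
    return mapping


# Simplest policy: one flow, one queue.
simple = build_fc_to_queue()

# Dedicated queue per forwarding class (queue IDs chosen arbitrarily).
dedicated = build_fc_to_queue(
    {fc: queue_id for queue_id, fc in enumerate(FORWARDING_CLASSES, start=1)}
)
```

In the `simple` mapping every class shares queue 1; in the `dedicated` mapping the eight classes use eight distinct queues, so each can be shaped independently.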

The forwarding class of each service egress packet is determined either at ingress or at egress. If the packet ingressed the service on the same router, the service ingress classification rules determine the forwarding class of the packet. If the packet is received on a network interface, the forwarding class is marked in the tunnel transport encapsulation. In each case, the packet can be reclassified into a different forwarding class at service egress.

Service egress QoS policy ID 1 is reserved as the default service egress policy. The default policy cannot be deleted or changed. The default service egress policy is applied to all SAPs that do not have another service egress policy explicitly assigned. Default service egress policy ID 1 definition lists the characteristics of the default policy.

| Characteristic | Item | Definition |
|---|---|---|
| Queues | Queue 1 | One queue defined for all traffic classes: |
| Flows | Default Action | One flow defined for all traffic classes; all traffic mapped to queue 1 with no marking. |

Slope policies

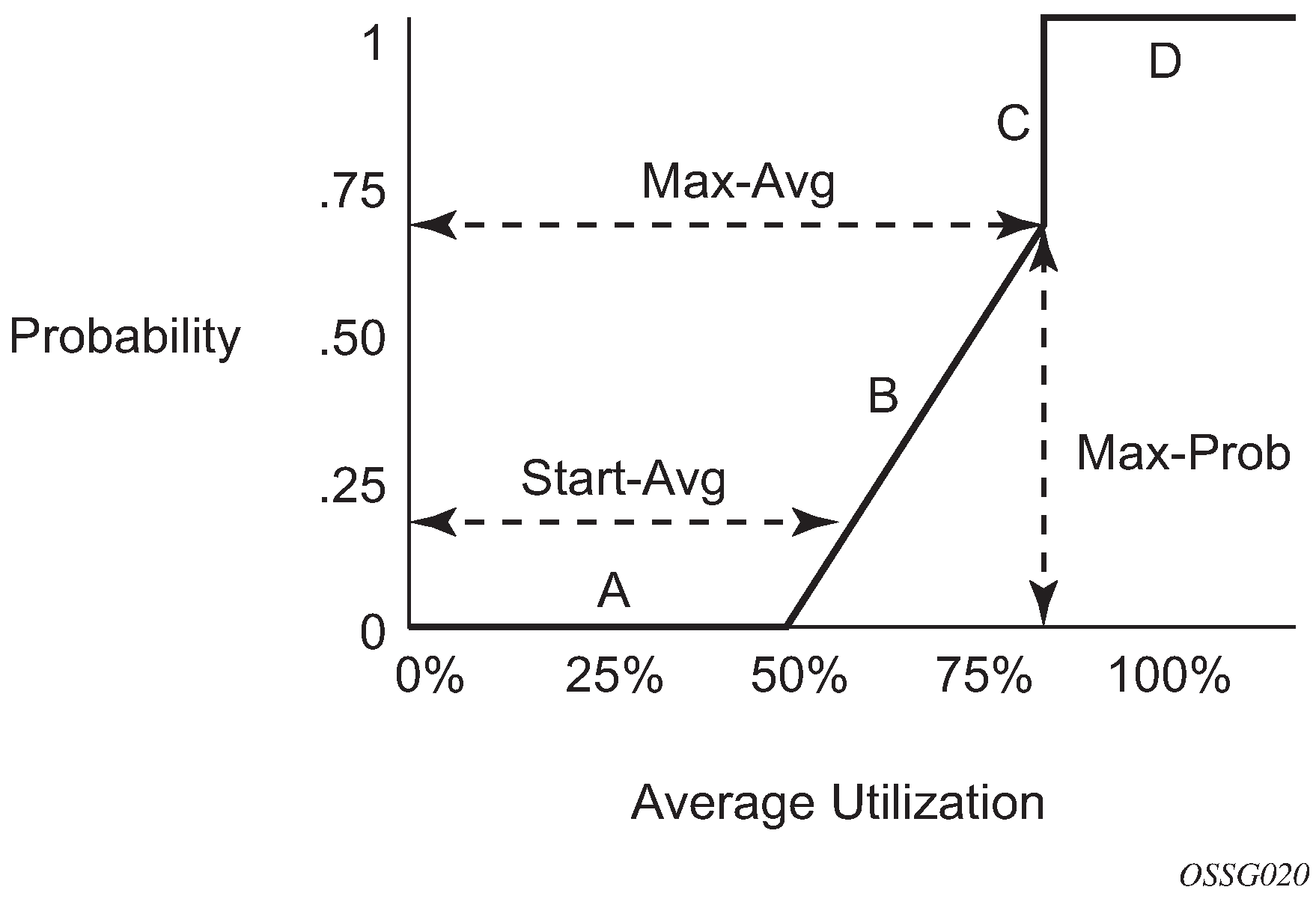

Slope policies are used to define the RED slope characteristics as a percentage of the shared pool size for the pool on which the policy is applied.