Virtual Private Routed Network service

VPRN service overview

RFC 2547b is an extension to the original RFC 2547, BGP/MPLS VPNs, which details a method of distributing routing information using BGP and MPLS forwarding data to provide a Layer 3 Virtual Private Network (VPN) service to end customers.

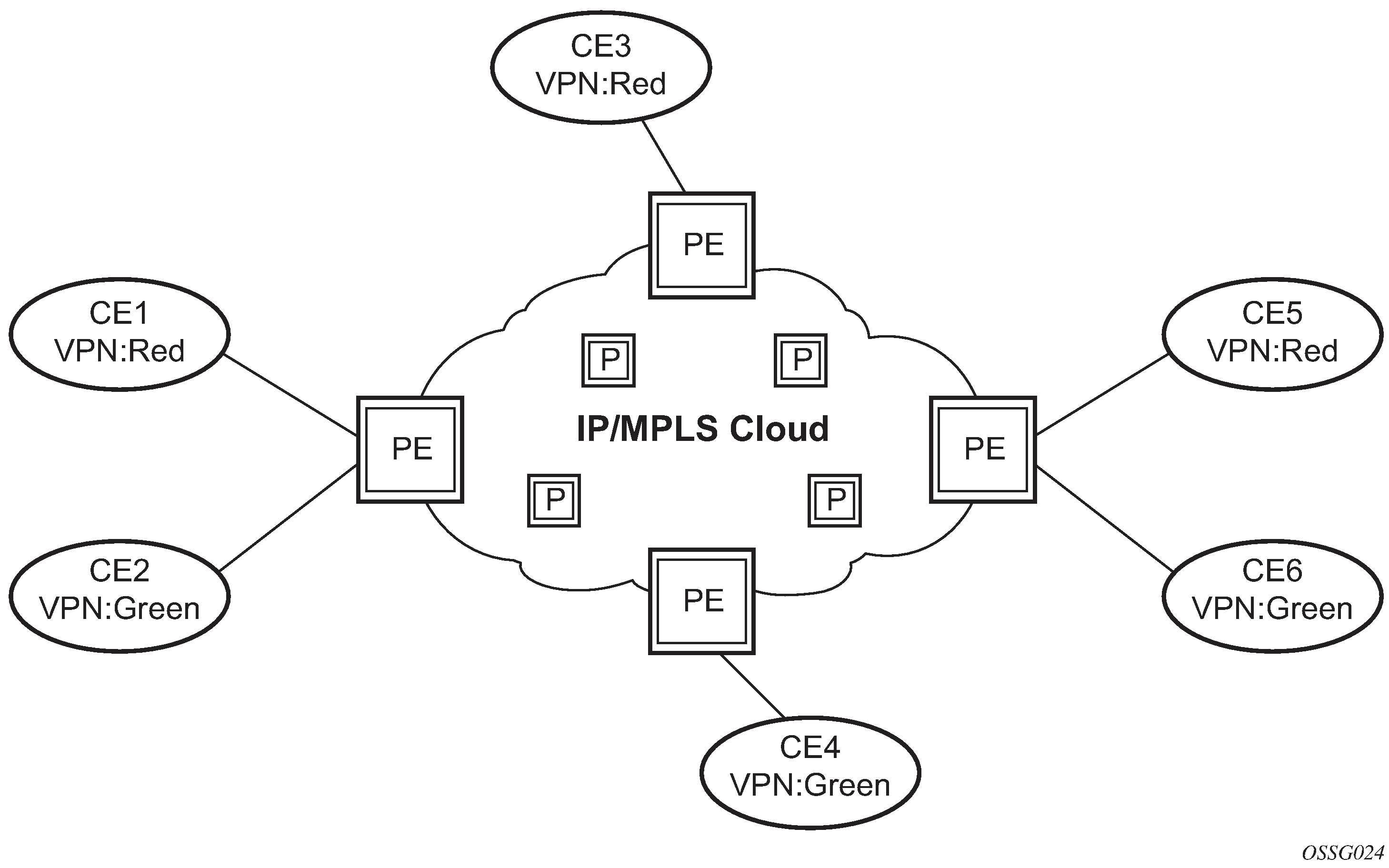

Each Virtual Private Routed Network (VPRN) consists of a set of customer sites connected to one or more PE routers. Each associated PE router maintains a separate IP forwarding table for each VPRN. Additionally, the PE routers exchange the routing information configured or learned from all customer sites via MP-BGP peering. Each route exchanged via the MP-BGP protocol includes a Route Distinguisher (RD), which identifies the VPRN association and handles the possibility of IP address overlap.

The service provider uses BGP to exchange the routes of a particular VPN among the PE routers that are attached to that VPN. This is done in a way which ensures that routes from different VPNs remain distinct and separate, even if two VPNs have an overlapping address space. The PE routers peer with locally connected CE routers and exchange routes with other PE routers to provide end-to-end connectivity between CEs belonging to a specific VPN. Because the CE routers do not peer with each other there is no overlay visible to the CEs.

When BGP distributes a VPN route it also distributes an MPLS label for that route. On an SR series router, the method of allocating a label to a VPN route depends on the VPRN label mode and the configuration of the VRF export policy. SR series routers support three label allocation methods: label per VRF, label per next hop, and label per prefix.

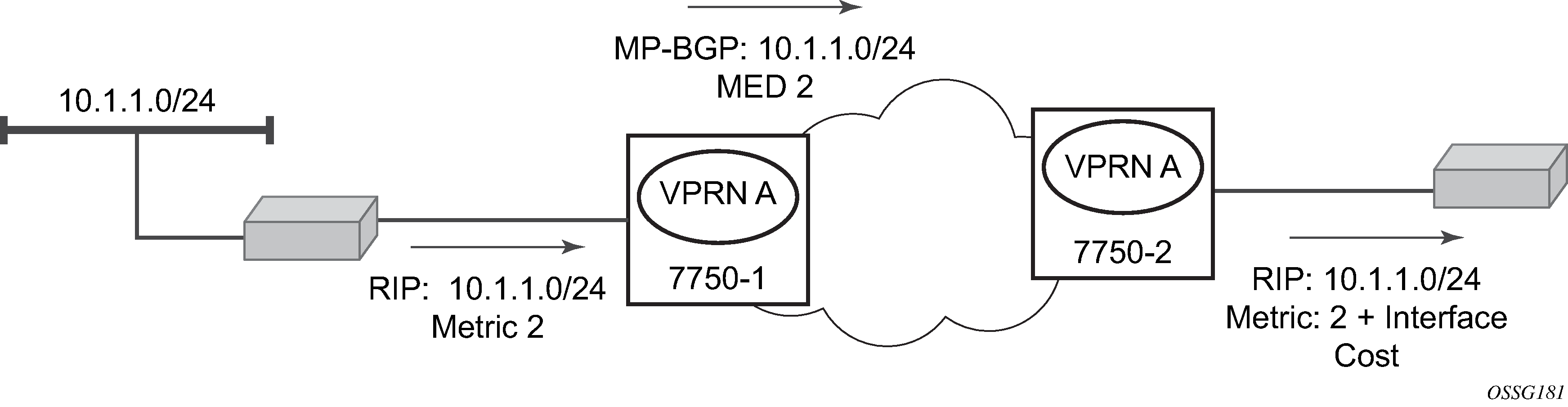

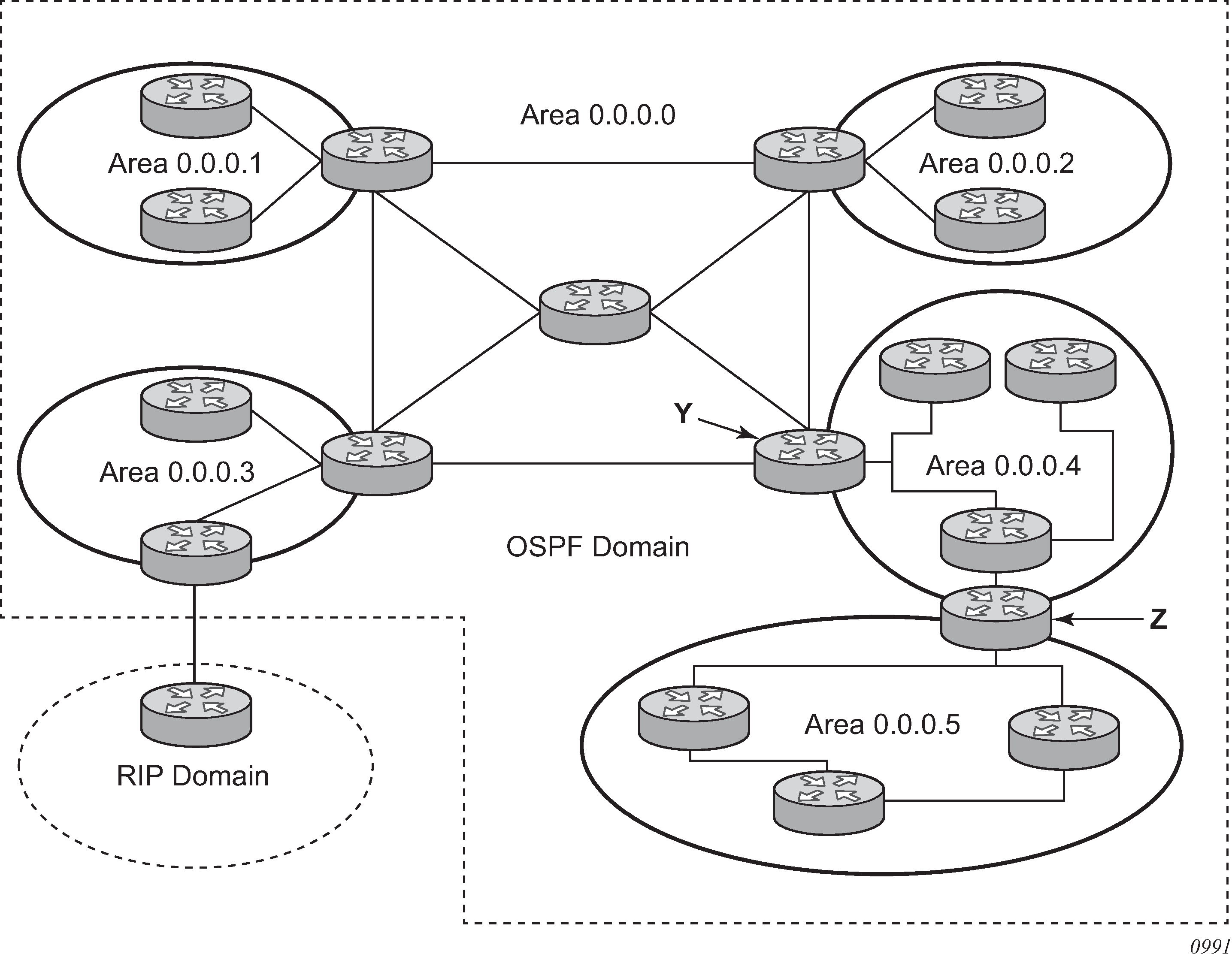

Before a customer data packet travels across the service provider's backbone, it is encapsulated with the MPLS label that corresponds, in the customer's VPN, to the route that best matches the packet's destination address. The MPLS packet is further encapsulated with one or more additional MPLS labels or a GRE tunnel header so that it is tunneled across the backbone to the correct PE router. Each route exchanged by the MP-BGP protocol includes a route distinguisher (RD), which identifies the VPRN association. Thus the backbone core routers do not need to know the VPN routes. Virtual Private Routed Network displays a VPRN network diagram example.

Routing prerequisites

RFC 4364 requires the following features:

multi-protocol extensions to BGP

extended BGP community support

BGP capability negotiation

Tunneling protocol options are as follows:

Label Distribution Protocol (LDP)

MPLS RSVP-TE tunnels

Generic Routing Encapsulation (GRE) tunnels

BGP route tunnel (RFC 8277)

Core MP-BGP support

BGP is used with BGP extensions mentioned in Routing prerequisites to distribute VPRN routing information across the service provider’s network.

BGP was initially designed to distribute IPv4 routing information. Therefore, multi-protocol extensions and the use of a VPN-IP address were created to extend BGP’s ability to carry overlapping routing information. A VPN-IPv4 address is a 12-byte value consisting of the 8-byte route distinguisher (RD) and the 4-byte IPv4 IP address prefix. A VPN-IPv6 address is a 24-byte value consisting of the 8-byte RD and 16-byte IPv6 address prefix. Service providers typically assign one or a small number of RDs per VPN service network-wide.

Route distinguishers

The route distinguisher (RD) is an 8-byte value consisting of two major fields, the Type field and Value field. The Type field determines how the Value field should be interpreted. The 7750 SR and 7950 XRS implementation supports the three (3) Type values as defined in the standard.

The three Type values are:

- Type 0: the Value field consists of an Administrator subfield (2 bytes) and an Assigned number subfield (4 bytes).

  The administrator field must contain an AS number (using private AS numbers is discouraged). The Assigned field contains a number assigned by the service provider.

- Type 1: the Value field consists of an Administrator subfield (4 bytes) and an Assigned number subfield (2 bytes).

  The administrator field must contain an IP address (using private IP address space is discouraged). The Assigned field contains a number assigned by the service provider.

- Type 2: the Value field consists of an Administrator subfield (4 bytes) and an Assigned number subfield (2 bytes).

  The administrator field must contain a 4-byte AS number (using private AS numbers is discouraged). The Assigned field contains a number assigned by the service provider.
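The byte layouts above can be illustrated with a minimal Python sketch. It assumes the Type field occupies the first 2 bytes of the RD (the remainder of the 8 bytes once the 6-byte Value field is accounted for) and that all fields are in network byte order. The function names are hypothetical and exist only for this illustration; they are not part of any router API.

```python
import ipaddress
import struct


def encode_rd_type0(asn: int, assigned: int) -> bytes:
    """Type 0 RD: 2-byte AS number followed by a 4-byte assigned number."""
    return struct.pack("!HHI", 0, asn, assigned)


def encode_rd_type1(ip: str, assigned: int) -> bytes:
    """Type 1 RD: 4-byte IPv4 address followed by a 2-byte assigned number."""
    return struct.pack("!H4sH", 1, ipaddress.IPv4Address(ip).packed, assigned)


def encode_rd_type2(asn: int, assigned: int) -> bytes:
    """Type 2 RD: 4-byte AS number followed by a 2-byte assigned number."""
    return struct.pack("!HIH", 2, asn, assigned)


def vpn_ipv4_address(rd: bytes, prefix: str) -> bytes:
    """12-byte VPN-IPv4 address: the 8-byte RD followed by the 4-byte IPv4 prefix."""
    if len(rd) != 8:
        raise ValueError("a route distinguisher is always 8 bytes")
    return rd + ipaddress.IPv4Address(prefix).packed


# Two VPNs may reuse 10.1.1.0; different RDs keep the VPN-IPv4 addresses distinct.
vpn_a = vpn_ipv4_address(encode_rd_type0(64496, 100), "10.1.1.0")
vpn_b = vpn_ipv4_address(encode_rd_type0(64496, 200), "10.1.1.0")
```

Because the RD is prepended to the prefix, `vpn_a` and `vpn_b` differ even though the customer prefix is identical, which is how overlapping address space is kept separate in MP-BGP.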

eiBGP load balancing

eiBGP load balancing allows a route to have multiple next hops of different types, using both IPv4 next hops and MPLS LSPs simultaneously.

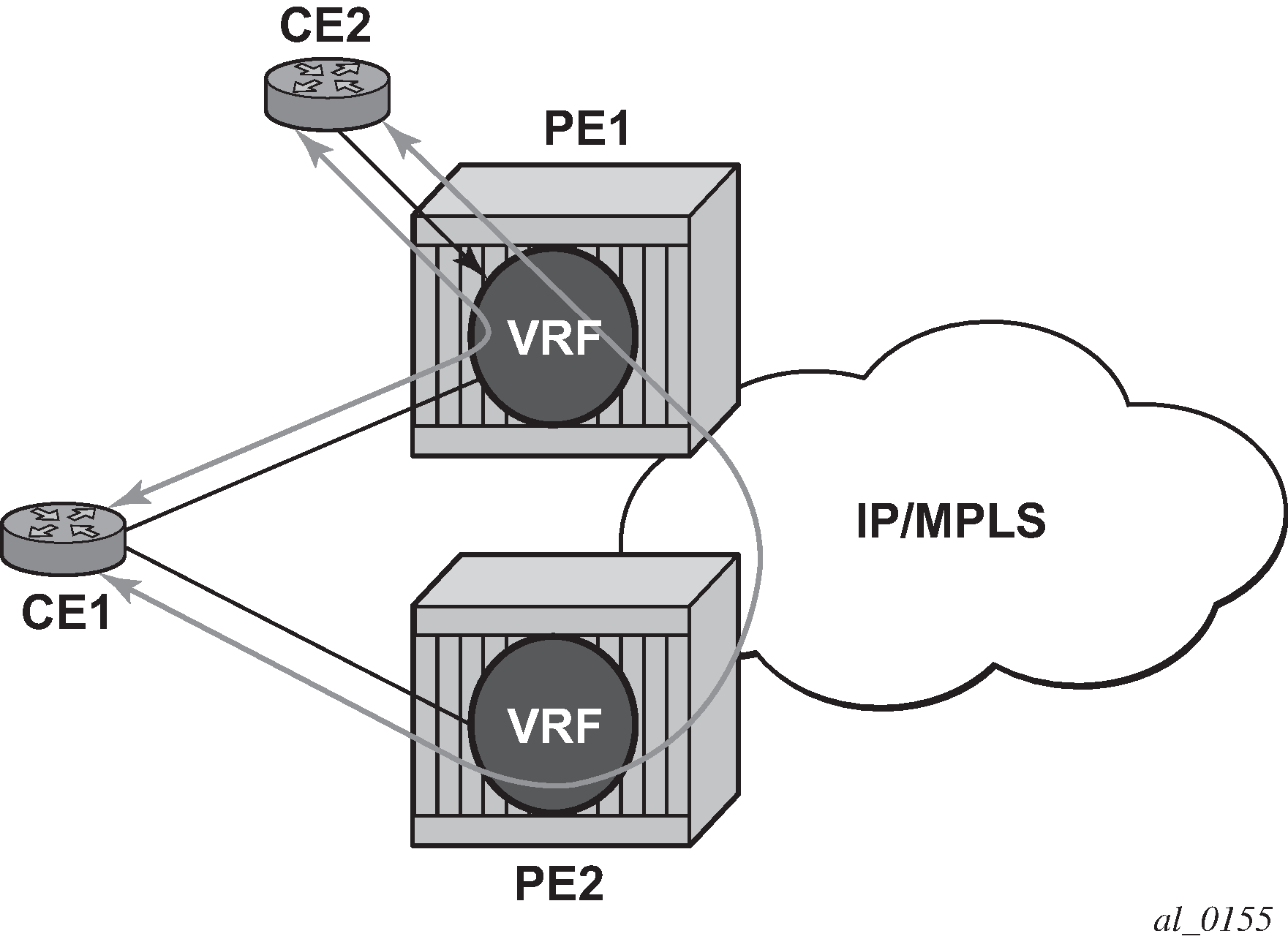

Basic eiBGP topology displays a basic topology that could use eiBGP load balancing. In this topology, CE1 is dual-homed and therefore reachable through two separate PE routers. CE2 (a site in the same VPRN) is also attached to PE1. With eiBGP load balancing, PE1 uses its own local IPv4 next hop as well as the route advertised by PE2 through MP-BGP.

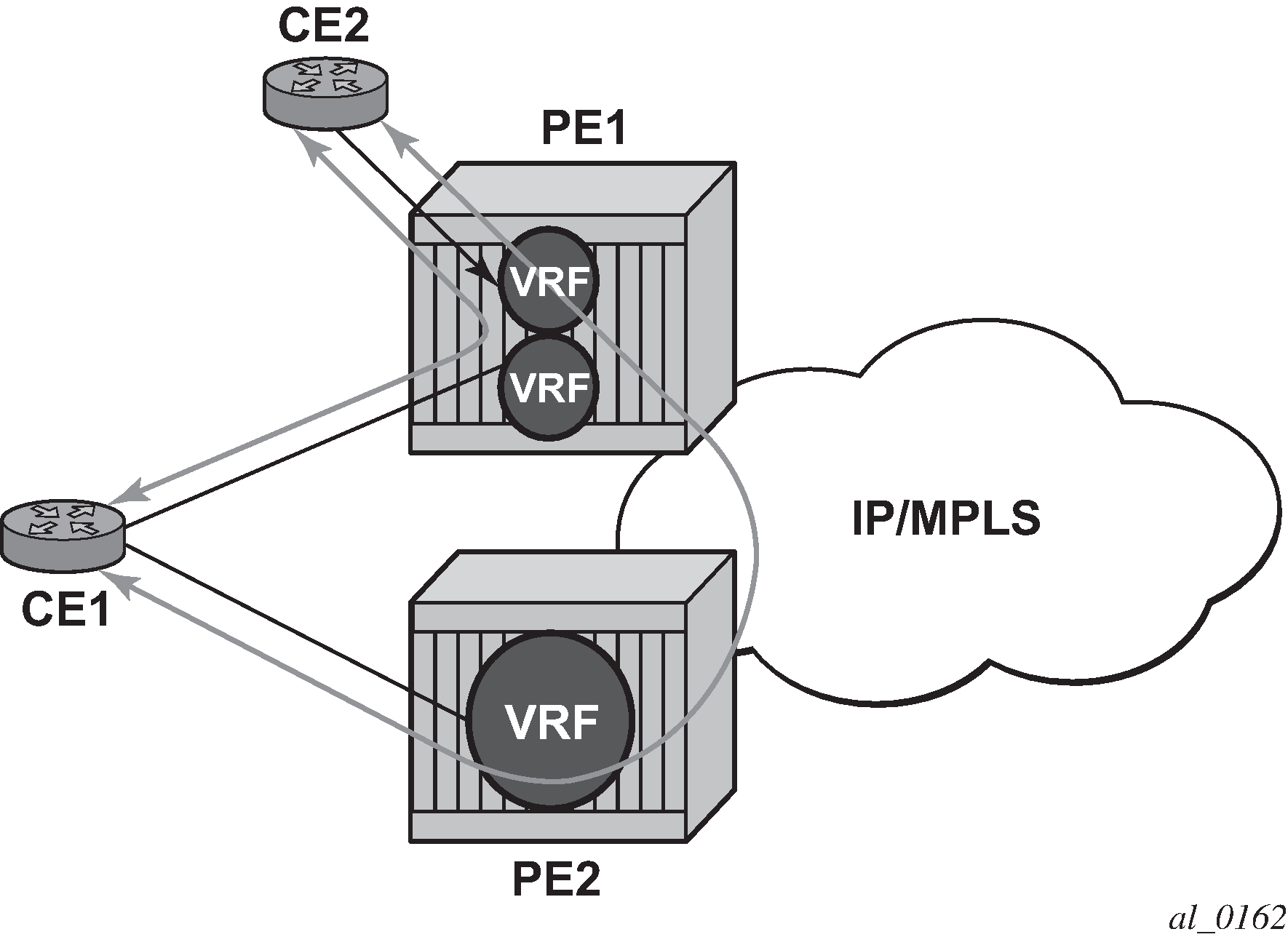

Another example, displayed in Extranet load balancing, shows an extranet VPRN (VRF). The traffic ingressing the PE that should be load balanced is part of a second VPRN, while the routes over which the load balancing is to occur are part of a separate VPRN instance and are leaked into the second VPRN by route policies.

Here, both routes can have a source protocol of VPN-IPv4 but one still has an IPv4 next hop and the other can have a VPN-IPv4 next hop pointing out a network interface. Traffic is still load balanced (if eiBGP is enabled) as if only a single VRF was involved.

Traffic is load balanced across both the IPv4 and VPN-IPv4 next hops. This helps to use all available bandwidth to reach a dual-homed VPRN.

Route reflector

The use of Route Reflectors is supported in the service provider core. Multiple sets of route reflectors can be used for different types of BGP routes, including IPv4 and VPN-IPv4 as well as multicast and IPv6 (multicast and IPv6 apply to the 7750 SR only).

CE to PE route exchange

Routing information between the Customer Edge (CE) and Provider Edge (PE) can be exchanged by the following methods:

Static Routes

EBGP

RIP

OSPF

OSPFv3

Each protocol provides controls to limit the number of routes learned from each CE router.

Route redistribution

Routing information learned from the CE-to-PE routing protocols and configured static routes should be injected in the associated local VPN routing/forwarding (VRF). In the case of dynamic routing protocols, there may be protocol specific route policies that modify or reject specific routes before they are injected into the local VRF.

Route redistribution from the local VRF to CE-to-PE routing protocols is to be controlled via the route policies in each routing protocol instance, in the same manner that is used by the base router instance.

The advertisement or redistribution of routing information from the local VRF to or from the MP-BGP instance is specified per VRF and is controlled by VRF route target associations or by VRF route policies.

- MD-CLI

  configure policy-options policy-statement entry from protocol name

- classic CLI

  configure router policy-options policy-statement entry from protocol

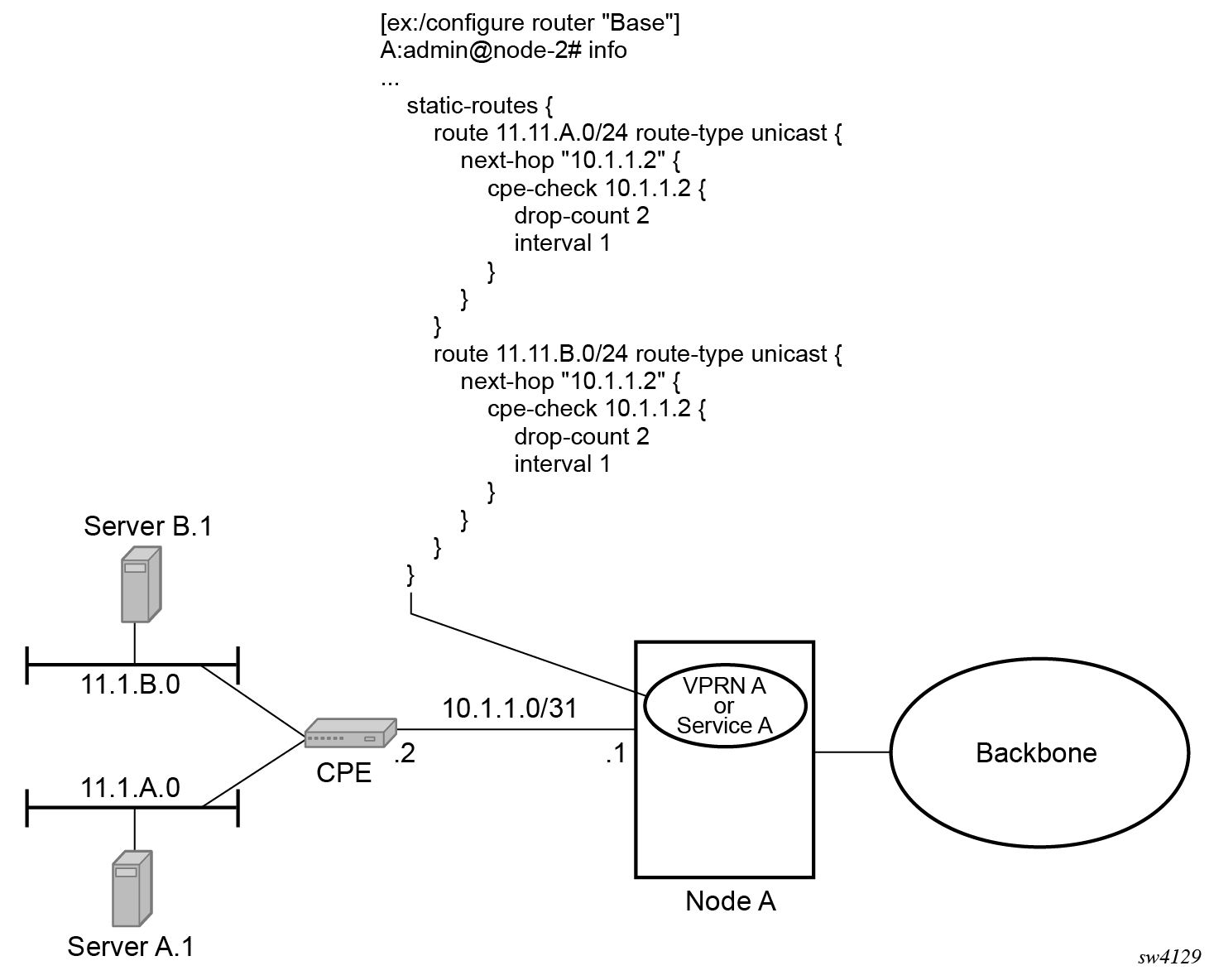

CPE connectivity check

Static routes are used within many IES services and VPRN services. Unlike dynamic routing protocols, there is no way to change the state of routes based on availability information for the associated CPE. CPE connectivity check adds flexibility so that unavailable destinations are dynamically removed from the VPRN routing tables, which minimizes wasted bandwidth.

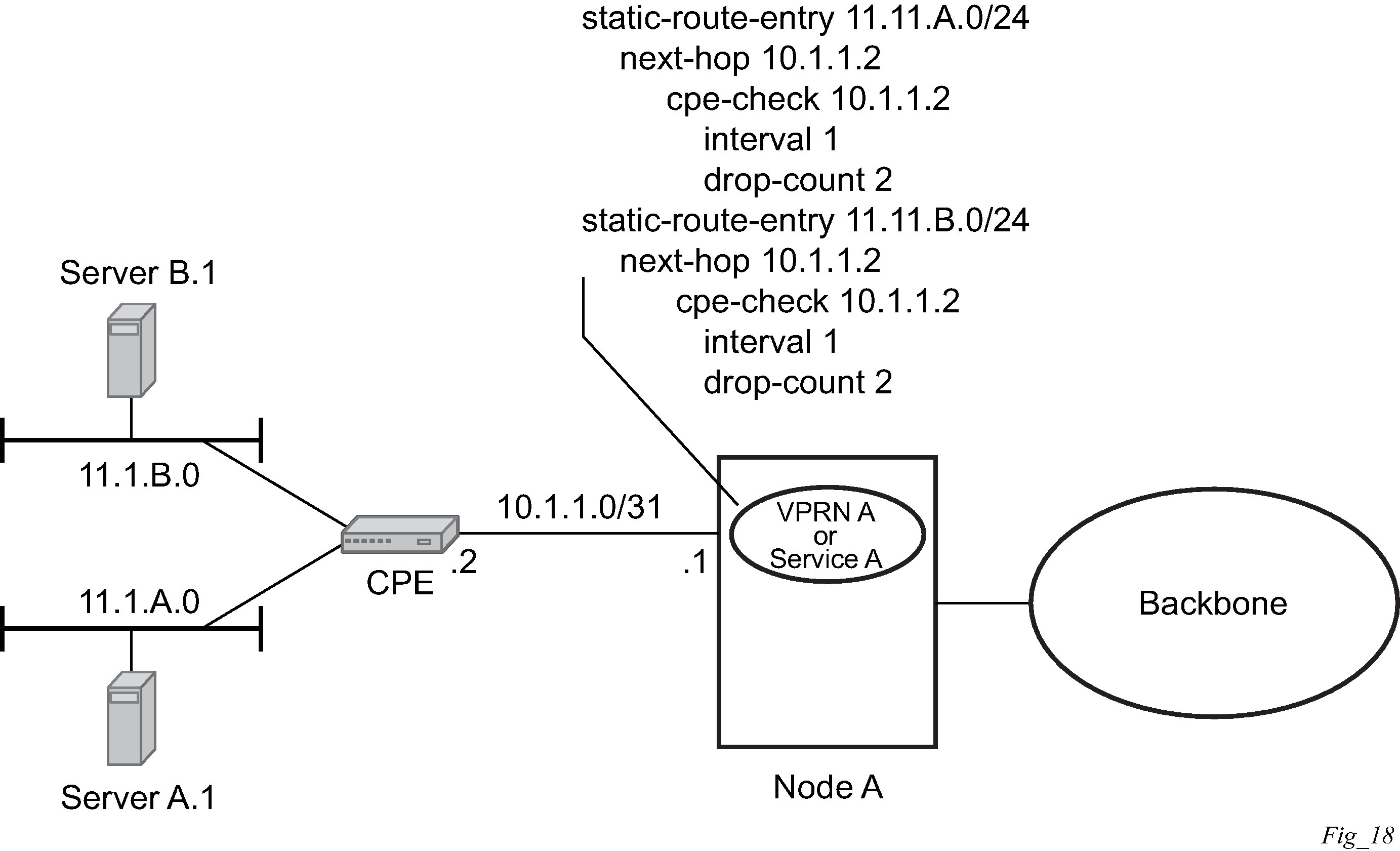

The following figure shows a setup with a directly connected IP target.

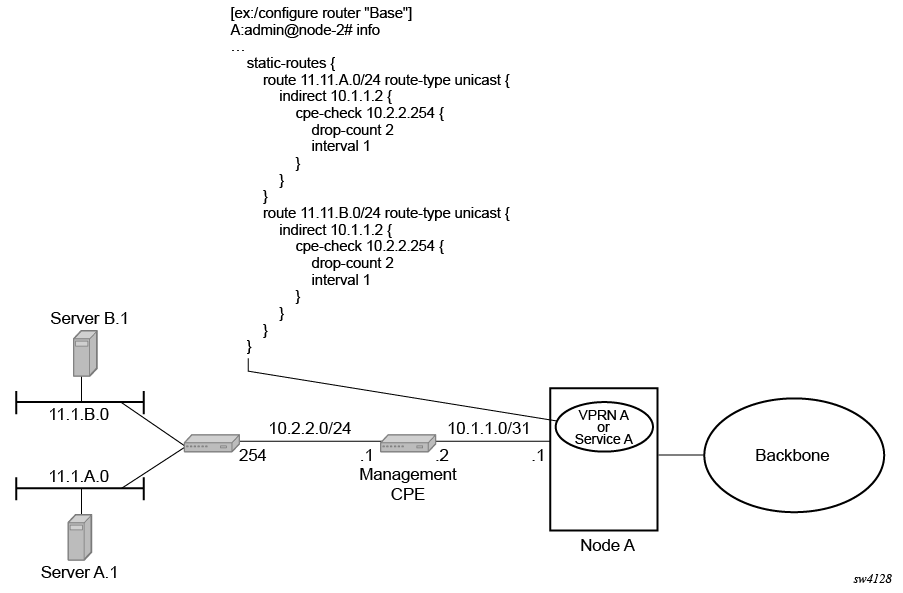

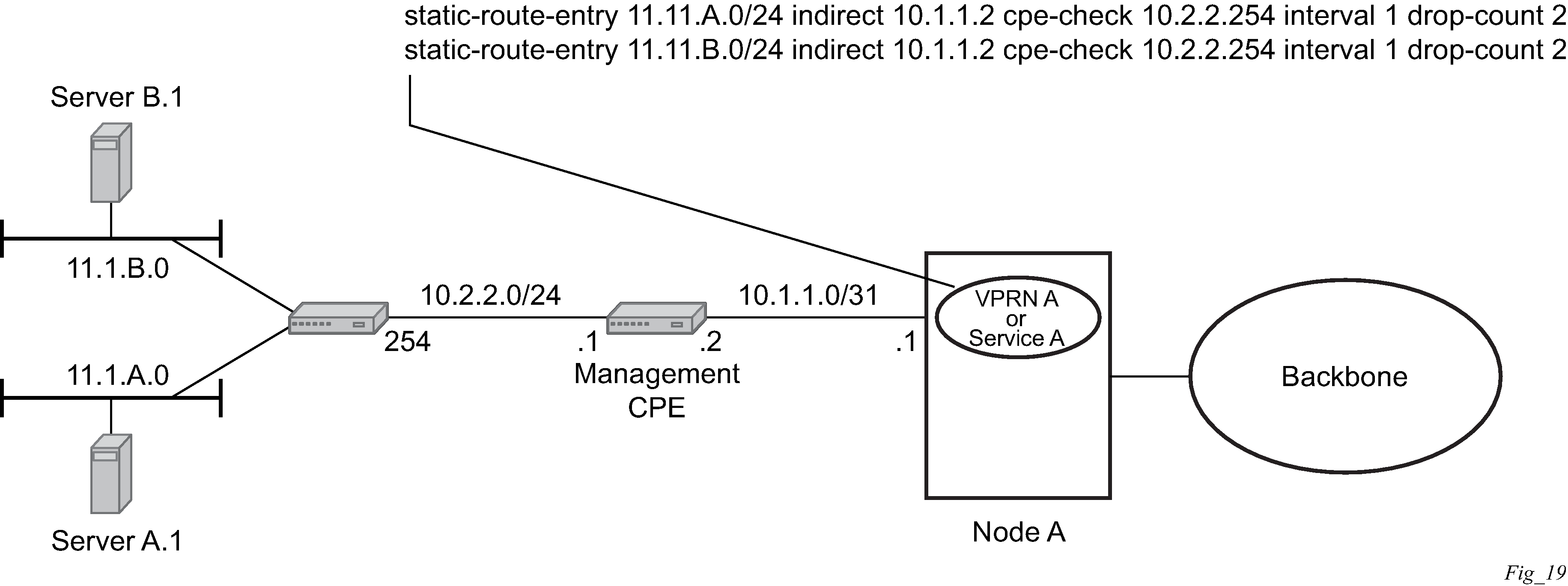

The following figure shows the setup with multiple hops to an IP target.

The availability of the far-end static route is monitored through periodic polling. The polling period is configurable. If a specified number of consecutive polls fail, the static route is marked as inactive.

Either the ICMP ping or the unicast ARP mechanism can be used to test the connectivity. ICMP ping is preferred.

If the connectivity check fails and the static route is deactivated, the router continues to send polls and reactivates any routes that are restored.
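The polling behavior described above can be modeled as a small state machine. The following Python sketch is illustrative only: the class name `CpeCheck` and the parameter `drop_count` are hypothetical names chosen for this example, and the sketch assumes that a single successful poll is enough to reactivate a route.

```python
from dataclasses import dataclass


@dataclass
class CpeCheck:
    """Tracks one static route whose target is polled periodically."""

    drop_count: int        # consecutive failed polls before the route goes inactive
    active: bool = True    # current state of the static route
    failures: int = 0      # consecutive failures seen so far

    def record_poll(self, success: bool) -> bool:
        """Feed one poll result and return whether the route is active."""
        if success:
            # Polling continues while inactive, so a success restores the route.
            self.failures = 0
            self.active = True
        else:
            self.failures += 1
            if self.failures >= self.drop_count:
                self.active = False
        return self.active


check = CpeCheck(drop_count=3)
states = [check.record_poll(ok) for ok in (False, False, False, True)]
```

In this run the route stays active for the first two failed polls, is deactivated on the third, and is reactivated by the successful fourth poll.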

RT Constraint

Constrained VPN Route Distribution based on route targets

Constrained Route Distribution (or RT Constraint) is a mechanism that allows a router to advertise Route Target membership information to its BGP peers to indicate interest in receiving only VPN routes tagged with specific Route Target extended communities. Upon receiving this information, peers restrict the advertised VPN routes to only those requested, minimizing control plane load in terms of protocol traffic and possibly also RIB memory.

The Route Target membership information is carried using MP-BGP, using an AFI value of 1 and SAFI value of 132. In order for two routers to exchange RT membership NLRI they must advertise the corresponding AFI/SAFI to each other during capability negotiation. The use of MP-BGP means RT membership NLRI are propagated, loop-free, within an AS and between ASes using well-known BGP route selection and advertisement rules.

ORF can also be used for RT-based route filtering, but ORF messages have a limited scope of distribution (to direct peers) and therefore do not automatically create pruned inter-cluster and inter-AS route distribution trees.

Configuring the route target address family

RT Constraint is supported only by the base router BGP instance. When the family command at the BGP router group or neighbor CLI context includes the route-target command option, the RT Constraint capability is negotiated with the associated set of EBGP and IBGP peers.

ORF and RT Constraint are mutually exclusive on a particular BGP session. The CLI does not attempt to block this configuration, but if both capabilities are enabled on a session, the ORF capability is not included in the OPEN message sent to the peer.

Originating RT constraint routes

When the base router has one or more RTC peers (BGP peers with which the RT Constraint capability has been successfully negotiated), one RTC route is created for each RT extended community imported into a locally-configured L2 VPN or L3 VPN service. Use the following command to configure these imported route targets.

configure service vprn

configure service vprn mvpn

By default, these RTC routes are automatically advertised to all RTC peers, without the need for an export policy to explicitly ‟accept” them. Each RTC route has a prefix, a prefix length and path attributes. The prefix value is the concatenation of the origin AS (a 4-byte value representing the 2- or 4-octet AS of the originating router, as configured using the configure router autonomous-system command) and 0 or 16-64 bits of a route target extended community encoded in one of the following formats: 2-octet AS specific extended community, IPv4 address specific extended community, or 4-octet AS specific extended community.
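As a rough illustration of the prefix layout just described, the following Python sketch concatenates a 4-byte origin AS with the leading bits of an 8-byte route target extended community. The function name `rtc_prefix` and its parameters are hypothetical, and the sketch assumes network byte order and that unused trailing bits of the last byte are zeroed.

```python
import struct
from typing import Tuple


def rtc_prefix(origin_as: int, route_target: bytes = b"", rt_bits: int = 0) -> Tuple[bytes, int]:
    """Return (prefix bytes, prefix length in bits) for an RTC route.

    rt_bits is the number of leading route-target bits to include:
    either 0 or a value from 16 to 64, as stated in the text.
    """
    if rt_bits != 0 and not 16 <= rt_bits <= 64:
        raise ValueError("rt_bits must be 0 or in the range 16-64")
    if rt_bits and len(route_target) != 8:
        raise ValueError("a route target extended community is 8 bytes")
    nbytes = (rt_bits + 7) // 8
    rt = bytearray(route_target[:nbytes])
    spare = nbytes * 8 - rt_bits
    if spare:
        # Clear the bits of the last byte that fall outside the prefix length.
        rt[-1] &= (0xFF << spare) & 0xFF
    return struct.pack("!I", origin_as) + bytes(rt), 32 + rt_bits


# Route target 64496:100 as a 2-octet AS specific extended community (type 0x0002).
rt_64496_100 = bytes.fromhex("0002fbf000000064")
full_prefix, full_len = rtc_prefix(64496, rt_64496_100, 64)
```

With all 64 route-target bits included, the resulting prefix length is 96 bits (32 bits of origin AS plus 64 bits of route target).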

Use the following commands to configure a router to send the default RTC route to any RTC peer.

- MD-CLI

  configure router bgp group default-route-target true

  configure router bgp group neighbor default-route-target true

- classic CLI

  configure router bgp group default-route-target

  configure router bgp group neighbor default-route-target

The default RTC route is a special type of RTC route that has zero prefix length. Sending the default RTC route to a peer conveys a request to receive all VPN routes (regardless of route target extended community) from that peer. The default RTC route is typically advertised by a route reflector to its clients. The advertisement of the default RTC route to a peer does not suppress other more specific RTC routes from being sent to that peer.

Receiving and re-advertising RT Constraint routes

All received RTC routes that are deemed valid are stored in the RIB-IN. An RTC route is considered invalid and treated as withdrawn, if any of the following applies:

The prefix length is 1-31.

The prefix length is 33-47.

The prefix length is 48-96 and the 16 most-significant bits are not 0x0002, 0x0102 or 0x0202.
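The validity rules above can be expressed as a short check. This Python sketch is a reading of the listed conditions, not a description of the router implementation. It assumes that "the 16 most-significant bits" refers to the route-target portion of the prefix (the two bytes that follow the 4-byte origin AS), and it additionally treats prefix lengths above 96 as invalid, which the text does not state explicitly. The function name is hypothetical.

```python
VALID_RT_TYPES = {0x0002, 0x0102, 0x0202}


def is_valid_rtc_route(prefix_len: int, prefix: bytes) -> bool:
    """Apply the prefix-length and route-target type checks to a received RTC route."""
    if 1 <= prefix_len <= 31 or 33 <= prefix_len <= 47:
        return False
    if 48 <= prefix_len <= 96:
        if len(prefix) < 6:
            return False  # not enough bytes to carry the route-target type
        # Assumption: the type/subtype is the 16 bits right after the origin AS.
        rt_type = int.from_bytes(prefix[4:6], "big")
        return rt_type in VALID_RT_TYPES
    # Lengths 0 (default RTC route) and 32 (origin AS only) remain.
    # Assumption: anything longer than 96 bits is rejected.
    return prefix_len in (0, 32)


ok = is_valid_rtc_route(96, bytes.fromhex("0000fbf00002fbf000000064"))
bad_type = is_valid_rtc_route(96, bytes.fromhex("0000fbf00003fbf000000064"))
```

Here `ok` is true because the route-target type is 0x0002, while `bad_type` is false because 0x0003 is not one of the three accepted types.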

If multiple RTC routes are received for the same prefix value then standard BGP best path selection procedures are used to determine the best of these routes.

The best RTC route per prefix is re-advertised to RTC peers based on the following rules:

- The best path for a default RTC route (prefix-length 0, origin AS only with prefix-length 32, or origin AS plus 16 bits of an RT type with prefix-length 48) is never propagated to another peer.

- A PE with only IBGP RTC peers that is neither a route reflector nor an ASBR does not re-advertise the best RTC route to any RTC peer because of standard IBGP split horizon rules.

- A route reflector that receives its best RTC route for a prefix from a client peer re-advertises that route (subject to export policies) to all of its client and non-client IBGP peers (including the originator), per standard RR operation. When the route is re-advertised to client peers, the RR:

  - sets the ORIGINATOR_ID to its own router ID

  - modifies the NEXT_HOP to be its local address for the sessions (for example, system IP)

- A route reflector that receives its best RTC route for a prefix from a non-client peer re-advertises that route (subject to export policies) to all of its client peers, per standard RR operation. If the RR has a non-best path for the prefix from any of its clients, it advertises the best of the client-advertised paths to all non-client peers.

- An ASBR that is neither a PE nor a route reflector that receives its best RTC route for a prefix from an IBGP peer re-advertises that route (subject to export policies) to its EBGP peers. It modifies the NEXT_HOP and AS_PATH of the re-advertised route per standard BGP rules. No aggregation of RTC routes is supported.

- An ASBR that is neither a PE nor a route reflector that receives its best RTC route for a prefix from an External Border Gateway Protocol (EBGP) peer re-advertises that route (subject to export policies) to its EBGP and IBGP peers. When re-advertised routes are sent to EBGP peers, the ASBR modifies the NEXT_HOP and AS_PATH per standard BGP rules. No aggregation of RTC routes is supported.

These advertisement rules do not handle hierarchical RR topologies properly. This is a limitation of the current RT constraint standard.

Using RT Constraint routes

In general (ignoring IBGP-to-IBGP rules, Add-Path, Best-external, and so on), the best VPN route for every prefix/NLRI in the RIB is sent to every peer supporting the VPN address family, but export policies may be used to prevent some prefix/NLRI from being advertised to specific peers. These export policies may be configured statically or created dynamically based on use of ORF or RT constraint with a peer. ORF and RT Constraint are mutually exclusive on a session.

When RT Constraint is configured on a session that also supports VPN address families using route targets (that is, VPN-IPv4, VPN-IPv6, L2-VPN, MVPN-IPv4, MVPN-IPv6, Mcast-VPN-IPv4, or EVPN), the advertisement of the VPN routes is affected as follows:

- When the session comes up, the advertisement of the VPN routes is delayed for a short while to allow RTC routes to be received from the peer.

- After the initial delay, the received RTC routes are analyzed and acted upon. If S1 is the set of routes previously advertised to the peer and S2 is the set of routes that should be advertised based on the most recently received RTC routes, the following applies:

  - The set of routes in S1 but not in S2 should be withdrawn immediately (subject to MRAI).

  - The set of routes in S2 but not in S1 should be advertised immediately (subject to MRAI).

- If a default RTC route is received from a peer P1, the VPN routes that are advertised to P1 are the set that meets all of the following requirements:

  - The set is eligible for advertisement to P1 per BGP route advertisement rules.

  - The set has not been rejected by manually configured export policies.

  - The set has not been advertised to the peer.

  In this context, a default RTC route is any of the following:

  - a route with NLRI length = zero

  - a route with NLRI value = origin AS and NLRI length = 32

  - a route with NLRI value = {origin AS+0x0002 | origin AS+0x0102 | origin AS+0x0202} and NLRI length = 48

- If an RTC route for prefix A (origin-AS = A1, RT = A2/n, n > 48) is received from an IBGP peer I1 in autonomous system A1, the VPN routes that are advertised to I1 are the set that meets all of the following requirements:

  - The set is eligible for advertisement to I1 per BGP route advertisement rules.

  - The set has not been rejected by manually configured export policies.

  - The set carries at least one route target extended community with value A2 in the n most significant bits.

  - The set has not been advertised to the peer.

  Note: This applies whether I1 advertised the best route for A or not.

- If the best RTC route for a prefix A (origin-AS = A1, RT = A2/n, n > 48) is received from an IBGP peer I1 in autonomous system B, the VPN routes that are advertised to I1 are the set that meets all of the following requirements:

  - The set is eligible for advertisement to I1 per BGP route advertisement rules.

  - The set has not been rejected by manually configured export policies.

  - The set carries at least one route target extended community with value A2 in the n most significant bits.

  - The set has not been advertised to the peer.

  Note: This applies only if I1 advertised the best route for A.

- If the best RTC route for a prefix A (origin-AS = A1, RT = A2/n, n > 48) is received from an EBGP peer E1, the VPN routes that are advertised to E1 are the set that meets all of the following requirements:

  - The set is eligible for advertisement to E1 per BGP route advertisement rules.

  - The set has not been rejected by manually configured export policies.

  - The set carries at least one route target extended community with value A2 in the n most significant bits.

  - The set has not been advertised to the peer.

  Note: This applies only if E1 advertised the best route for A.
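The route-target match and the S1/S2 comparison described above can be sketched in Python as follows. This is a simplified model under stated assumptions: candidate routes are taken to be already eligible for advertisement and already accepted by export policy, route targets are 8-byte extended communities, and `rt_bits` is the number of leading route-target bits to compare (how the n in the text maps to this count, in particular whether the 32 origin-AS bits are included in n, is left to the caller). All names are hypothetical.

```python
from typing import Dict, Iterable, List, Set, Tuple


def rt_matches(route_rts: Iterable[bytes], wanted_rt: bytes, rt_bits: int) -> bool:
    """True if any route target on the route equals wanted_rt in its leading rt_bits bits."""
    if not 1 <= rt_bits <= 64:
        raise ValueError("rt_bits must be between 1 and 64")
    shift = 64 - rt_bits
    want = int.from_bytes(wanted_rt.ljust(8, b"\x00"), "big") >> shift
    return any(int.from_bytes(rt, "big") >> shift == want for rt in route_rts)


def routes_for_peer(candidates: Dict[str, List[bytes]], wanted_rt: bytes, rt_bits: int) -> Set[str]:
    """Build S2: the routes that should be advertised for one received RTC route."""
    return {name for name, rts in candidates.items() if rt_matches(rts, wanted_rt, rt_bits)}


def advertisement_delta(s1: Set[str], s2: Set[str]) -> Tuple[Set[str], Set[str]]:
    """Return (routes to withdraw, routes to advertise) given previous set S1 and desired set S2."""
    return s1 - s2, s2 - s1


rt_a = bytes.fromhex("0002fbf000000064")  # 64496:100
rt_b = bytes.fromhex("0002fbf0000000c8")  # 64496:200
candidates = {"r1": [rt_a], "r2": [rt_b], "r3": [rt_a, rt_b]}

s2 = routes_for_peer(candidates, rt_a, 64)
withdraw, advertise = advertisement_delta({"r1", "r2"}, s2)
```

In this example the peer asked for routes carrying 64496:100, so `r2` is withdrawn, `r3` is newly advertised, and `r1` is left untouched because it is in both sets.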

BGP fast reroute in a VPRN

BGP fast reroute is a feature that brings together indirection techniques in the forwarding plane and pre-computation of BGP backup paths in the control plane to support fast reroute of BGP traffic around unreachable/failed next-hops. In a VPRN context BGP fast reroute is supported using unlabeled IPv4, unlabeled IPv6, VPN-IPv4, and VPN-IPv6 VPN routes. The supported VPRN scenarios are described in BGP fast reroute scenarios (VPRN context).

BGP fast reroute information specific to the base router BGP context is described in the BGP Fast Reroute section of the 7450 ESS, 7750 SR, 7950 XRS, and VSR Unicast Routing Protocols Guide.

| Ingress packet | Primary route | Backup route | Prefix independent convergence |
|---|---|---|---|
| IPv4 (ingress PE) | IPv4 route with next-hop A resolved by an IPv4 route | IPv4 route with next-hop B resolved by an IPv4 route | Yes |
| IPv4 (ingress PE) | VPN-IPv4 route with next-hop A resolved by a GRE, LDP, RSVP or BGP tunnel | VPN-IPv4 route with next-hop B resolved by a GRE, LDP, RSVP or BGP tunnel | Yes |
| MPLS (egress PE) | IPv4 route with next-hop A resolved by an IPv4 route | IPv4 route with next-hop B resolved by an IPv4 route | Yes |
| MPLS (egress PE) | IPv4 route with next-hop A resolved by an IPv4 route | VPN-IPv4 route* with next-hop B resolved by a GRE, LDP, RSVP or BGP tunnel | Yes |
| IPv6 (ingress PE) | IPv6 route with next-hop A resolved by an IPv6 route | IPv6 route with next-hop B resolved by an IPv6 route | Yes |
| IPv6 (ingress PE) | VPN-IPv6 route with next-hop A resolved by a GRE, LDP, RSVP or BGP tunnel | VPN-IPv6 route with next-hop B resolved by a GRE, LDP, RSVP or BGP tunnel | Yes |
| MPLS (egress) | IPv6 route with next-hop A resolved by an IPv6 route | IPv6 route with next-hop B resolved by an IPv6 route | Yes |
| MPLS (egress) | IPv6 route with next-hop A resolved by an IPv6 route | | Yes |

*VPRN label mode must be VRF. VPRN must export its VPN-IP routes with RD ≠ y. For the best performance the backup next-hop must advertise the same VPRN label value with all routes (per VRF label).

BGP fast reroute in a VPRN configuration

In a VPRN context, BGP fast reroute is optional and must be enabled. Fast reroute can be applied to all IPv4 prefixes, all IPv6 prefixes, all IPv4 and IPv6 prefixes, or to a specific set of IPv4 and IPv6 prefixes.

If all IP prefixes require backup-path protection, use a combination of the following BGP instance-level and VPRN-level commands:

- MD-CLI

  configure router bgp backup-path ipv4 true

  configure router bgp backup-path ipv6 true

  configure service vprn bgp-vpn-backup ipv4 true

  configure service vprn bgp-vpn-backup ipv6 true

- classic CLI

  configure router bgp backup-path

  configure service vprn enable-bgp-vpn-backup

Use the following commands to enable BGP fast reroute for all IPv4 prefixes or all IPv6 prefixes, or both, that have a best path through a VPRN BGP peer:

- MD-CLI

  configure service vprn bgp backup-path ipv4 true

  configure service vprn bgp backup-path ipv6 true

  configure service vprn bgp backup-path label-ipv4 true

  configure service vprn bgp backup-path label-ipv6 true

- classic CLI

  configure service vprn bgp backup-path

When BGP VPN backup is enabled at the VPRN level, BGP fast reroute is enabled for all IPv4 prefixes or all IPv6 prefixes, or both, that have a best path through a remote PE peer.

If only some IP prefixes require backup path protection, use the following commands in the route policy to apply the install-backup-path action to the best paths of the IP prefixes requiring protection:

- MD-CLI

  configure policy-options policy-statement default-action install-backup-path

  configure policy-options policy-statement action install-backup-path

- classic CLI

  configure router policy-options policy-statement default-action install-backup-path

  configure router policy-options policy-statement entry action install-backup-path

See the ‟BGP Fast Reroute” section of the 7450 ESS, 7750 SR, 7950 XRS, and VSR Unicast Routing Protocols Guide for more information.

Export of inactive VPRN BGP routes

A BGP route learned from a VPRN BGP peer is exportable as a VPN-IP route, only if it is the best route for the prefix and is installed in the route table of the VPRN. Use the following command to relax this rule and allow the best inactive VPRN BGP route to be exportable as a VPN-IP route, provided that the active installed route for the prefix is an imported VPN-IP route.

configure service vprn export-inactive-bgp

Use the following command to further relax the preceding rule and allow the best inactive VPRN BGP route (best amongst all routes received from all CEs) to be exportable as a VPN-IP route, regardless of the route type of the active installed route.

configure service vprn export-inactive-bgp-enhanced

The export-inactive-bgp command (or the export-inactive-bgp-enhanced command, which is a superset of the functionality) is useful in a scenario where two or more PE routers connect to a multihomed site, and the failure of one of the PE routers or a PE-CE link must be handled by rerouting the traffic over the alternate paths. The traffic failover time in this situation can be reduced if all PE routers have prior knowledge of the potential backup paths and do not have to wait for BGP route advertisements or withdrawals to reprogram their forwarding tables. Achieving this can be challenging with normal BGP procedures. A PE router is not allowed to advertise a BGP route that it has learned from a connected CE device to other PE routers, if that route is not its active route for the destination in the route table. If the multihoming scenario requires all traffic destined for an IP prefix to be carried over a preferred primary path (passing through PE1-CE1, for example), all other PE routers (PE2, PE3, and so on) have that VPN route set as their active route for the destination and are unable to advertise their own routes for the same IP prefix.

For the best inactive BGP route to be exported, it must be matched and accepted by the VRF export policy or there must be an equivalent VRF target configuration.
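The export rules in this section can be summarized as a small decision function. The Python sketch below is a simplified reading of the text, not the router's actual logic. The function and parameter names are hypothetical, `mode` is a made-up string standing in for which command (if any) is configured, and the sketch assumes that VRF export acceptance (by policy or equivalent VRF target) is required in every case.

```python
def exportable_as_vpn_ip(
    is_best_bgp_path: bool,
    is_active: bool,
    active_route_is_imported_vpn_ip: bool,
    mode: str,
    accepted_by_vrf_export: bool,
) -> bool:
    """Decide whether a route learned from a VPRN BGP peer may be exported as VPN-IP.

    mode is one of "default", "export-inactive-bgp",
    or "export-inactive-bgp-enhanced" (labels invented for this sketch).
    """
    if not (is_best_bgp_path and accepted_by_vrf_export):
        return False
    if is_active:
        # Default rule: best route for the prefix and installed in the route table.
        return True
    if mode == "export-inactive-bgp":
        # Relaxed rule: allowed only when the active route is an imported VPN-IP route.
        return active_route_is_imported_vpn_ip
    # Enhanced rule: allowed regardless of the active route's type.
    return mode == "export-inactive-bgp-enhanced"


# PE2 holds an inactive CE-BGP route while the VPN-IP route through PE1 is active.
pe2_default = exportable_as_vpn_ip(True, False, True, "default", True)
pe2_relaxed = exportable_as_vpn_ip(True, False, True, "export-inactive-bgp", True)
```

With the default behavior PE2 cannot advertise its backup path, whereas with export-inactive-bgp it can, which is the multihoming case described above.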

When a VPN-IP route is advertised because either the export-inactive-bgp command or the export-inactive-bgp-enhanced command is enabled, the label carried in the route is either a per next-hop label corresponding to the next-hop IP address of the CE-BGP route, or a per-prefix label. This helps to avoid packet looping issues caused by unsynchronized IP FIBs.

When a PE router that advertised an inactive VPRN BGP route for an IP prefix receives a withdrawal for the VPN-IP route that was the active primary route, the inactive backup path may be promoted to the primary path; that is, the CE-BGP route may become the active route for the destination. In this case, the PE router is required to readvertise the VPN-IP route with a per-VRF label, if that is the behavior specified by the default allocation policy, and there is no label-per-prefix policy override. In the time it takes for the new VPN-IP route to reach all ingress routers and for the ingress routers to update their forwarding tables, traffic continues to be received with the old per next-hop label. The egress PE drops the in-flight traffic, unless the following command is used to configure label retention.

configure router mpls-labels bgp-labels-hold-timer

The bgp-labels-hold-timer command configures a delay (in seconds) between the withdrawal of a VPN-IP route with a per next-hop label and the deletion of the corresponding label forwarding entry in the IOM. The value of the bgp-labels-hold-timer command must be large enough to account for the propagation delay of the route withdrawal to all ingress routers.

VPRN features

This section describes various VPRN features and any special capabilities or considerations as they relate to VPRN services.

IP interfaces

VPRN customer IP interfaces can be configured with most of the same options found on the core IP interfaces. The advanced configuration options supported are as follows:

VRRP

Cflowd

secondary IP addresses

ICMP options

QoS Policy Propagation Using BGP

This section discusses QPPB as it applies to VPRN, IES, and router interfaces. See the QoS Policy Propagation Using BGP (QPPB) section and the IP Router Configuration section in the 7450 ESS, 7750 SR, 7950 XRS, and VSR Router Configuration Guide.

The QoS Policy Propagation Using BGP (QPPB) feature applies only to the 7450 ESS and 7750 SR.

QoS policy propagation using BGP (QPPB) is a feature that allows a route to be installed in the routing table with a forwarding-class and priority so that packets matching the route can receive the associated QoS. The forwarding-class and priority associated with a BGP route are set using BGP import route policies. In the industry, this feature is called QPPB. Even though the feature name refers to BGP specifically, on SR OS QPPB is supported for BGP (IPv4, IPv6, VPN-IPv4, VPN-IPv6), RIP, and static routes.

SAP ingress and network QoS policies can achieve the same end result as QPPB, that is, assigning a packet arriving on a particular IP interface to a specific forwarding-class and priority/profile based on the source IP address or destination IP address of the packet. However, the effort involved in creating those QoS policies, keeping them up-to-date, and applying them across many nodes is much greater than with QPPB. In a typical application of QPPB, a BGP route is advertised with a BGP community attribute that conveys a particular QoS. Routers that receive the advertisement accept the route into their routing table and set the forwarding-class and priority of the route from the community attribute.

QPPB applications

The typical applications of QPPB are as follows:

- coordination of QoS policies between different administrative domains

- traffic differentiation within a single domain, based on route characteristics

Inter-AS coordination of QoS policies

The user of an administrative domain A can use QPPB to signal to a peer administrative domain B that traffic sent to specific prefixes advertised by domain A should receive a particular QoS treatment in domain B. More specifically, an ASBR of domain A can advertise a prefix XYZ to domain B and include a BGP community attribute with the route. The community value implies a particular QoS treatment, as agreed by the two domains (in their peering agreement or service level agreement, for example). When the ASBR and other routers in domain B accept and install the route for XYZ into their routing table, they apply a QoS policy on selected interfaces that classifies traffic toward network XYZ into the QoS class implied by the BGP community value.

QPPB may also be used to request that traffic sourced from specific networks receive appropriate QoS handling in downstream nodes that may span different administrative domains. This can be achieved by advertising the source prefix with a BGP community, as discussed above. However, in this case other approaches are equally valid, such as marking the DSCP or other CoS fields based on source IP address so that downstream domains can take action based on a common understanding of the QoS treatment implied by different DSCP values.

In the above examples, coordination of QoS policies using QPPB could be between a business customer and its IP VPN service provider, or between one service provider and another.

Traffic differentiation based on route characteristics

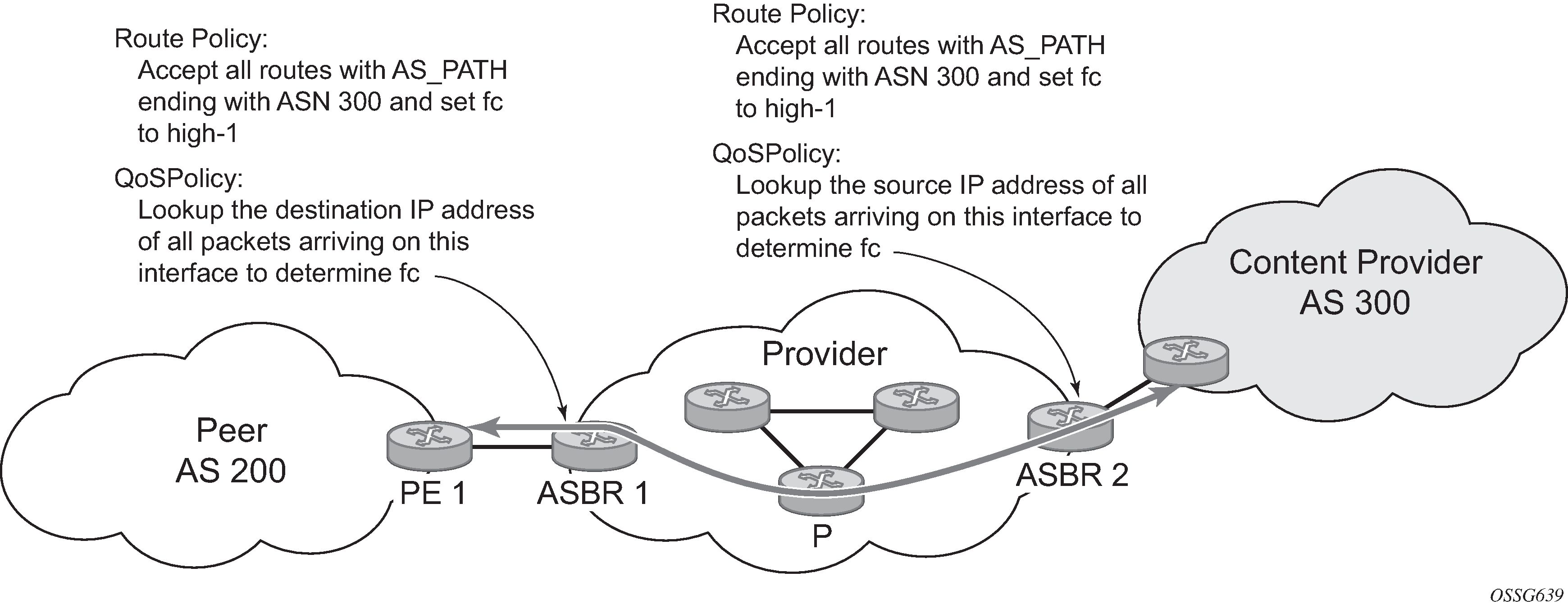

There may be times when a network user wants to provide differentiated service to certain traffic flows within its network, and these traffic flows can be identified with known routes. For example, the user of an ISP network may want to give priority to traffic originating in a particular ASN (the ASN of a content provider offering over-the-top services to the ISP’s customers), following a specific AS_PATH, or destined for a particular next-hop (remaining on-net vs. off-net).

Use of QPPB to differentiate traffic in an ISP network shows an example of an ISP that has an agreement with the content provider managing AS300 to provide traffic sourced and terminating within AS300 with differentiated service appropriate to the content being transported. In this example we presume that ASBR1 and ASBR2 mark the DSCP of packets terminating and sourced, respectively, in AS300 so that other nodes within the ISP’s network do not need to rely on QPPB to determine the correct forwarding class to use for the traffic. The DSCP or other CoS markings could be left unchanged in the ISP’s network and QPPB used on every node.

QPPB

There are two main aspects of the QPPB feature on the 7450 ESS and 7750 SR:

the ability to associate a forwarding class and priority with specific routes in the routing table

the ability to classify an IP packet arriving on a particular IP interface to the forwarding class and priority associated with the route that best matches the packet
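The two aspects listed above can be pictured with a toy route table in Python. This is only a sketch of the idea: the class `QppbTable`, its methods, and the default forwarding class and priority are hypothetical, and the lookup is a plain longest-prefix match over a dictionary rather than anything resembling a real forwarding path. Whether the source or the destination address is passed to the lookup is left to the caller.

```python
import ipaddress
from typing import Dict, Tuple

Qos = Tuple[str, str]  # (forwarding class, priority)


class QppbTable:
    """Routes annotated with a forwarding class and priority."""

    def __init__(self) -> None:
        self._routes: Dict[object, Qos] = {}

    def add_route(self, prefix: str, fc: str, priority: str) -> None:
        """Associate an FC and priority with a route (first aspect)."""
        self._routes[ipaddress.ip_network(prefix)] = (fc, priority)

    def classify(self, address: str, default: Qos = ("be", "low")) -> Qos:
        """Return the QoS of the longest-prefix-match route for an address (second aspect)."""
        addr = ipaddress.ip_address(address)
        matches = [
            net for net in self._routes
            if net.version == addr.version and addr in net
        ]
        if not matches:
            return default  # assumed fallback when no annotated route matches
        best = max(matches, key=lambda net: net.prefixlen)
        return self._routes[best]


table = QppbTable()
table.add_route("10.0.0.0/8", "af", "low")
table.add_route("10.1.0.0/16", "h1", "high")
result = table.classify("10.1.2.3")
```

The address 10.1.2.3 matches both routes, and the more specific 10.1.0.0/16 route determines the result.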

Associating an FC and priority with a route

Use the following commands to set the forwarding class and optionally the priority associated with routes accepted by a route-policy entry:

- MD-CLI

  configure policy-options policy-statement entry action forwarding-class fc

  configure policy-options policy-statement entry action forwarding-class priority

- classic CLI

  configure router policy-options policy-statement entry action fc priority

The following example shows the configuration of the forwarding class and priority.

MD-CLI

[ex:/configure policy-options]

A:admin@node-2# info

community "gold" {

member "300:100" { }

}

policy-statement "qppb_policy" {

entry 10 {

from {

community {

name "gold"

}

protocol {

name [bgp]

}

}

action {

action-type accept

forwarding-class {

fc h1

priority high

}

}

}

}

classic CLI

A:node-2>config>router>policy-options# info

----------------------------------------------

community "gold"

members "300:100"

exit

policy-statement "qppb_policy"

entry 10

from

protocol bgp

community "gold"

exit

action accept

fc h1 priority high

exit

exit

exit

----------------------------------------------

The fc command is supported with all existing from and to match conditions in a route policy entry and with any action other than reject; that is, it is supported with the next-entry, next-policy, and accept actions. If a next-entry or next-policy action results in multiple matching entries, the last entry with a QPPB action determines the forwarding class and priority.
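The "last matching entry wins" rule can be illustrated with a minimal Python sketch. This is not the router's implementation: the `Entry` class, the `evaluate` function, the flattening of next-entry and next-policy into one ordered list, and the accept-by-default fallthrough are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

QoS = Tuple[str, str]  # (forwarding class, priority)


@dataclass
class Entry:
    """Hypothetical model of one route policy entry."""
    match: Callable[[dict], bool]   # predicate over route attributes
    action: str                     # "accept", "reject", "next-entry" or "next-policy"
    qos: Optional[QoS] = None       # set only if the entry has a QPPB (fc) action


def evaluate(entries: List[Entry], route: dict) -> Tuple[bool, Optional[QoS]]:
    """Return (accepted, qos) after walking the entries in order."""
    qos = None
    for entry in entries:
        if not entry.match(route):
            continue
        if entry.action == "reject":
            return False, None      # fc is not supported together with reject
        if entry.qos is not None:
            qos = entry.qos         # a later matching entry overrides an earlier one
        if entry.action == "accept":
            return True, qos
        # next-entry / next-policy: keep evaluating the remaining entries
    return True, qos                # assumption: fall through means accept


entries = [
    Entry(lambda r: "300:100" in r["communities"], "next-entry", ("h1", "high")),
    Entry(lambda r: r["protocol"] == "bgp", "accept", ("h2", "low")),
]
print(evaluate(entries, {"communities": ["300:100"], "protocol": "bgp"}))
```

In this example both entries match the BGP route, so the forwarding class and priority of the second entry are the ones retained.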

A route policy that includes the fc command in one or more entries can be used in any import or export policy, but the fc command has no effect except in the following types of policies:

- MD-CLI

  - VRF import policies

        configure service vprn bgp-evpn mpls vrf-import
        configure service vprn bgp-ipvpn mpls vrf-import
        configure service vprn bgp-ipvpn srv6 vrf-import
        configure service vprn mvpn vrf-import

  - BGP import policies

        configure router bgp import
        configure router bgp group import
        configure router bgp neighbor import
        configure service vprn bgp import
        configure service vprn bgp group import
        configure service vprn bgp neighbor import

  - RIP import policies

        configure router rip import-policy
        configure router rip group import-policy
        configure router rip group neighbor import-policy
        configure service vprn rip import-policy
        configure service vprn rip group import-policy
        configure service vprn rip group neighbor import-policy

- classic CLI

  - VRF import policies

        configure service vprn bgp-evpn mpls vrf-import
        configure service vprn bgp-ipvpn mpls vrf-import
        configure service vprn bgp-ipvpn segment-routing-v6 vrf-import
        configure service vprn mvpn vrf-import

  - BGP import policies

        configure router bgp import
        configure router bgp group import
        configure router bgp group neighbor import
        configure service vprn bgp import
        configure service vprn bgp group import
        configure service vprn bgp group neighbor import

  - RIP import policies

        configure router rip import
        configure router rip group import
        configure router rip group neighbor import
        configure service vprn rip import
        configure service vprn rip group import
        configure service vprn rip group neighbor import

The QPPB route policies support routes learned from RIP and BGP neighbors of a VPRN as well as routes learned from RIP and BGP neighbors of the base/global routing instance.

QPPB is supported for BGP routes belonging to any of the address families listed below:

- IPv4 (AFI=1, SAFI=1)
- IPv6 (AFI=2, SAFI=1)
- VPN-IPv4 (AFI=1, SAFI=128)
- VPN-IPv6 (AFI=2, SAFI=128)

A VPN-IP route may match both a VRF import policy entry and a BGP import policy entry (if vpn-apply-import is configured in the base router BGP instance). In this case the VRF import policy is applied first and then the BGP import policy, so the QPPB QoS is based on the BGP import policy entry.

This feature also introduces the ability to associate a forwarding class and optionally priority with IPv4 and IPv6 static routes. This is achieved by specifying the forwarding class within the static route entry using the next-hop or indirect commands.

Priority is optional when specifying the forwarding class of a static route, but when configured it can only be deleted and returned to unspecified by deleting the entire static route.

Displaying QoS information associated with routes

Use the commands in the following contexts to show the forwarding class and priority associated with the displayed routes.

show router route-table

show router fib

show router bgp routes

show router rip database

show router static-route

Use the following command with the qos command option to show an additional line per route entry that displays the forwarding class and priority of the route. If a route has no forwarding class and priority information, the third line is blank.

show router route-table 10.1.5.0/24 qos

===============================================================================

Route Table (Router: Base)

===============================================================================

Dest Prefix Type Proto Age Pref

Next Hop[Interface Name] Metric

QoS

-------------------------------------------------------------------------------

10.1.5.0/24 Remote BGP 15h32m52s 0

PE1_to_PE2 0

h1, high

-------------------------------------------------------------------------------

No. of Routes: 1

===============================================================================

Enabling QPPB on an IP interface

To enable QoS classification of ingress IP packets on an interface based on the QoS information associated with the routes that best match the packets, configure the qos-route-lookup command in the IP interface. The qos-route-lookup command has command options to indicate whether the QoS result is based on lookup of the source or destination IP address in every packet. There are separate qos-route-lookup commands for the IPv4 and IPv6 packets on an interface, which allows QPPB to be enabled for IPv4 only, IPv6 only, or both IPv4 and IPv6. Currently, QPPB based on a source IP address is not supported for IPv6 packets or for ingress subscriber management traffic on a group interface.

The qos-route-lookup command is supported on the following types of IP interfaces:

- Base router network interfaces

      configure router interface

- VPRN SAP and spoke SDP interfaces

      configure service vprn interface

- VPRN group interfaces

      configure service vprn subscriber-interface group-interface

- IES SAP and spoke SDP interfaces

      configure service ies interface

- IES group interfaces

      configure service ies subscriber-interface group-interface

When the qos-route-lookup command with the destination command option is applied to an IP interface and the destination address of an incoming IP packet matches a route with QoS information, the packet is classified to the FC and priority associated with that route. The command overrides the FC and priority/profile determined from the SAP ingress or network QoS policy associated with the IP interface (see section 5.7 for more information). If the destination address of the incoming packet matches a route with no QoS information, the FC and priority of the packet remain as determined by the sap-ingress or network qos policy.

Similarly, when the qos-route-lookup command with the source command option is applied to an IP interface and the source address of an incoming IP packet matches a route with QoS information, the packet is classified to the FC and priority associated with that route. The command overrides the FC and priority/profile determined from the SAP ingress or network QoS policy associated with the IP interface. If the source address of the incoming packet matches a route with no QoS information, the FC and priority of the packet remain as determined by the SAP ingress or network QoS policy.
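The classification behavior described above can be sketched in Python. This is an illustrative model only: the `best_match` and `classify` functions, the dictionary-based route table, and the example prefixes are assumptions, and a real forwarding plane does not perform a linear scan.

```python
import ipaddress
from typing import Dict, Optional, Tuple

QoS = Tuple[str, str]  # (forwarding class, priority)


def best_match(routes: Dict[str, Optional[QoS]], address: str) -> Optional[str]:
    """Return the longest prefix in `routes` that contains `address`, if any."""
    addr = ipaddress.ip_address(address)
    best = None
    for prefix in routes:
        net = ipaddress.ip_network(prefix)
        if net.version != addr.version or addr not in net:
            continue
        if best is None or net.prefixlen > ipaddress.ip_network(best).prefixlen:
            best = prefix
    return best


def classify(routes: Dict[str, Optional[QoS]], policy_result: QoS,
             src: str, dst: str, lookup: str) -> QoS:
    """Apply QPPB on top of the SAP ingress or network QoS policy result.

    `lookup` is "source" or "destination", mirroring the command option.
    """
    key = dst if lookup == "destination" else src
    prefix = best_match(routes, key)
    qos = routes[prefix] if prefix is not None else None
    # A best-match route without QoS information leaves the policy result as is.
    return qos if qos is not None else policy_result


routes = {"10.1.0.0/16": None, "10.1.5.0/24": ("h1", "high")}
print(classify(routes, ("be", "low"), "192.0.2.1", "10.1.5.9", "destination"))
```

Note that only the best-matching route is consulted: a packet to 10.1.9.9 matches 10.1.0.0/16, which carries no QoS information, so the policy result is kept.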

Currently, QPPB is not supported for ingress MPLS traffic on CsC PE or CsC CE interfaces or on network interfaces under the following context.

configure service vprn network-interface

QPPB when next hops are resolved by QPPB routes

In some circumstances (IP VPN inter-AS model C, Carrier Supporting Carrier, indirect static routes, and so on) an IPv4 or IPv6 packet may arrive on a QPPB-enabled interface and match a route A1 whose next-hop N1 is resolved by a route A2 with next-hop N2 and perhaps N2 is resolved by a route A3 with next-hop N3, and so on. The QPPB result is based only on the forwarding-class and priority of route A1. If A1 does not have a forwarding-class and priority association then the QoS classification is not based on QPPB, even if routes A2, A3, and so on have forwarding-class and priority associations.
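As a small illustration of this rule, the following Python sketch models a chain of resolving routes. The route names A1 to A3 come from the text; the dictionary layout and the `qppb_result` function are hypothetical.

```python
from typing import Dict, Optional, Tuple

QoS = Tuple[str, str]  # (forwarding class, priority)

# Each route maps to (name of the route that resolves its next hop, QoS info).
Routes = Dict[str, Tuple[Optional[str], Optional[QoS]]]


def qppb_result(routes: Routes, first_match: str) -> Optional[QoS]:
    """Return the QPPB result for a packet whose best match is `first_match`."""
    _resolving_route, qos = routes[first_match]
    # The resolving chain (A2, A3, ...) is deliberately not consulted.
    return qos


routes: Routes = {
    "A1": ("A2", None),
    "A2": ("A3", ("h1", "high")),
    "A3": (None, ("h2", "low")),
}
print(qppb_result(routes, "A1"))  # A1 has no QoS information, so no QPPB result
```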

QPPB and multiple paths to a destination

When ECMP is enabled some routes may have multiple equal-cost next-hops in the forwarding table. When an IP packet matches such a route the next-hop selection is typically based on a hash algorithm that tries to load balance traffic across all the next-hops while keeping all packets of a specific flow on the same path. The QPPB configuration model described in Associating an FC and priority with a route allows different QoS information to be associated with the different ECMP next hops of a route. The forwarding-class and priority of a packet matching an ECMP route is based on the particular next-hop used to forward the packet.

When BGP FRR is enabled some BGP routes may have a backup next-hop in the forwarding table in addition to the one or more primary next-hops representing the equal-cost best paths allowed by the ECMP/multipath configuration. When an IP packet matches such a route a reachable primary next-hop is selected (based on the hash result) but if all the primary next-hops are unreachable then the backup next-hop is used. The QPPB configuration model described in Associating an FC and priority with a route allows the forwarding-class and priority associated with the backup path to be different from the QoS characteristics of the equal-cost best paths. The forwarding class and priority of a packet forwarded on the backup path is based on the forwarding class and priority configured for the backup route.
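The per-next-hop behavior for ECMP and BGP FRR can be sketched as follows. This is a simplified model under stated assumptions: the CRC32 hash stands in for the router's load-balancing hash, and the dictionary layout, next-hop names, and function names are hypothetical.

```python
import zlib
from typing import Optional, Set


def select_next_hop(route: dict, flow_key: str, reachable: Set[str]) -> Optional[dict]:
    """Pick a reachable primary next hop by hash, else fall back to the backup."""
    primaries = [nh for nh in route["primary"] if nh["name"] in reachable]
    if primaries:
        # Same flow key -> same hash -> same next hop while the set is stable.
        index = zlib.crc32(flow_key.encode()) % len(primaries)
        return primaries[index]
    backup = route.get("backup")
    if backup is not None and backup["name"] in reachable:
        return backup
    return None


def qppb_for_packet(route: dict, flow_key: str, reachable: Set[str]):
    """Return the (fc, priority) of the next hop actually used, if any."""
    next_hop = select_next_hop(route, flow_key, reachable)
    return None if next_hop is None else next_hop["qos"]


route = {
    "primary": [
        {"name": "pe2", "qos": ("h1", "high")},
        {"name": "pe3", "qos": ("h2", "low")},
    ],
    "backup": {"name": "pe4", "qos": ("l1", "low")},
}
print(qppb_for_packet(route, "10.0.0.1->10.1.5.9", {"pe4"}))  # backup path QoS
```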

QPPB and policy-based routing

When an IPv4 or IPv6 packet with destination address X arrives on an interface with both QPPB and policy-based-routing enabled:

There is no QPPB classification if the IP filter action redirects the packet to a directly connected interface, even if X is matched by a route with a forwarding-class and priority.

QPPB classification is based on the forwarding-class and priority of the route matching IP address Y if the IP filter action redirects the packet to the indirect next-hop IP address Y, even if X is matched by a route with a forwarding class and priority.
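The two cases above can be summarized in a short Python sketch. The `qppb_with_pbr` function and the tuple encoding of the filter action are hypothetical; `route_qos` stands for any lookup that returns the forwarding class and priority of the route best matching an address.

```python
from typing import Callable, Optional, Tuple

QoS = Tuple[str, str]  # (forwarding class, priority)


def qppb_with_pbr(route_qos: Callable[[str], Optional[QoS]], dst: str,
                  redirect: Optional[Tuple[str, str]] = None) -> Optional[QoS]:
    """Return the QPPB result for a packet to `dst`.

    `redirect` is None (no PBR match), ("direct", interface name) or
    ("indirect", next-hop IP address Y).
    """
    if redirect is None:
        return route_qos(dst)        # ordinary QPPB on destination X
    kind, target = redirect
    if kind == "direct":
        return None                  # no QPPB classification at all
    return route_qos(target)         # classification follows the route matching Y


table = {"X": ("h1", "high"), "Y": ("l1", "low")}
print(qppb_with_pbr(table.get, "X", ("indirect", "Y")))
```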

QPPB and GRT lookup

Source-address based QPPB is not supported on any SAP or spoke SDP interface of a VPRN configured with the grt-lookup command.

QPPB interaction with SAP ingress QoS policy

When QPPB is enabled on a SAP IP interface, the forwarding class of a packet may change from fc1, the original forwarding class determined by the SAP ingress QoS policy, to fc2, the new forwarding class determined by QPPB. In the ingress datapath, SAP ingress QoS policies are applied in the first P chip and route lookup/QPPB occurs in the second P chip. This has the following implications:

Ingress remarking (based on profile state) is always based on the original fc (fc1) and sub-class (if defined).

The profile state of a SAP ingress packet that matches a QPPB route depends on the configuration of fc2 only. If the de-1-out-profile flag is enabled in fc2 and fc2 is not mapped to a priority mode queue then the packet is marked out of profile if its DE bit = 1. If the profile state of fc2 is explicitly configured (in or out) and fc2 is not mapped to a priority mode queue, then the packet is assigned this profile state. In both cases there is no consideration of whether fc1 was mapped to a priority mode queue.

The priority of a SAP ingress packet that matches a QPPB route depends on several factors. If the de-1-out-profile flag is enabled in fc2 and the DE bit is set in the packet then priority is low regardless of the QPPB priority or fc2 mapping to profile mode queue, priority mode queue or policer. If fc2 is associated with a profile mode queue then the packet priority is based on the explicitly configured profile state of fc2 (in profile = high, out profile = low, undefined = high), regardless of the QPPB priority or fc1 configuration. If fc2 is associated with a priority mode queue or policer then the packet priority is based on QPPB (unless DE=1), but if no priority information is associated with the route then the packet priority is based on the configuration of fc1 (if fc1 mapped to a priority mode queue then it is based on DSCP/IP prec/802.1p and if fc1 mapped to a profile mode queue then it is based on the profile state of fc1).

QPPB interactions with SAP ingress QoS summarizes these interactions.

| Original FC object mapping | New FC object mapping | Profile | Priority (drop preference) | DE=1 override | In/out of profile marking |
|---|---|---|---|---|---|
| Profile mode queue | Profile mode queue | From new base FC unless overridden by DE=1 | From QPPB, unless packet is marked in or out of profile in which case follows profile. Default is high priority. | From new base FC | From original FC and sub-class |
| Priority mode queue | Priority mode queue | Ignored | If DE=1 override then low otherwise from QPPB. If no DEI or QPPB overrides then from original dot1p/exp/DSCP mapping or policy default. | From new base FC | From original FC and sub-class |
| Policer | Policer | From new base FC unless overridden by DE=1 | If DE=1 override then low otherwise from QPPB. If no DEI or QPPB overrides then from original dot1p/exp/DSCP mapping or policy default. | From new base FC | From original FC and sub-class |
| Priority mode queue | Policer | From new base FC unless overridden by DE=1 | If DE=1 override then low otherwise from QPPB. If no DEI or QPPB overrides then from original dot1p/exp/DSCP mapping or policy default. | From new base FC | From original FC and sub-class |
| Policer | Priority mode queue | Ignored | If DE=1 override then low otherwise from QPPB. If no DEI or QPPB overrides then from original dot1p/exp/DSCP mapping or policy default. | From new base FC | From original FC and sub-class |
| Profile mode queue | Priority mode queue | Ignored | If DE=1 override then low otherwise from QPPB. If no DEI or QPPB overrides then follows original FC’s profile mode rules. | From new base FC | From original FC and sub-class |
| Priority mode queue | Profile mode queue | From new base FC unless overridden by DE=1 | From QPPB, unless packet is marked in or out of profile in which case follows profile. Default is high priority. | From new base FC | From original FC and sub-class |
| Profile mode queue | Policer | From new base FC unless overridden by DE=1 | If DE=1 override then low otherwise from QPPB. If no DEI or QPPB overrides then follows original FC’s profile mode rules. | From new base FC | From original FC and sub-class |
| Policer | Profile mode queue | From new base FC unless overridden by DE=1 | From QPPB, unless packet is marked in or out of profile in which case follows profile. Default is high priority. | From new base FC | From original FC and sub-class |
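The priority rules for a SAP ingress packet that matches a QPPB route can be condensed into a small decision function. The following Python sketch follows the prose rules in this section only; the function name, the string values used for the mappings and profile states, and the `fc1_priority` argument (the priority that the fc1 configuration would have produced) are assumptions for illustration.

```python
from typing import Optional


def sap_ingress_priority(fc2_mapping: str, de1_out_profile: bool, de_bit: int,
                         fc2_profile: Optional[str],
                         qppb_priority: Optional[str],
                         fc1_priority: str) -> str:
    """Return "high" or "low" for a packet reclassified from fc1 to fc2.

    fc2_mapping: "profile-queue", "priority-queue" or "policer".
    fc2_profile: "in", "out" or None when the profile state is undefined.
    """
    # DE=1 with de-1-out-profile enabled in fc2 forces low priority.
    if de1_out_profile and de_bit == 1:
        return "low"
    # Profile mode queue: priority follows the explicit profile state of fc2.
    if fc2_mapping == "profile-queue":
        return "low" if fc2_profile == "out" else "high"
    # Priority mode queue or policer: QPPB priority if the route has one,
    # otherwise whatever the fc1 configuration determined.
    return qppb_priority if qppb_priority is not None else fc1_priority


print(sap_ingress_priority("policer", False, 0, None, None, "low"))
```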

Object grouping and state monitoring

This feature introduces a generic operational group object which associates different service endpoints (pseudowires and SAPs) located in the same or in different service instances. The operational group status is derived from the status of the individual components using rules specific to the application using the concept. A number of other service entities, the monitoring objects, can be configured to monitor the operational group status and to perform specific actions as a result of status transitions. For example, if the operational group goes down, the monitoring objects are brought down.

VPRN IP interface applicability

This concept is used by an IPv4 VPRN interface to affect the operational state of the IP interface monitoring the operational group. Individual SAP and spoke SDPs are supported as monitoring objects.

The following rules apply:

- An object can only belong to one group at a time.
- An object that is part of a group cannot monitor the status of a group.
- An object that monitors the status of a group cannot be part of a group.
- An operational group may contain any combination of member types: SAP or spoke SDPs.
- An operational group may contain members from different VPLS service instances.
- Objects from different services may monitor the operational group.

The following steps enable the functionality:

- Identify a set of objects whose forwarding state should be considered as a whole group, then group them under an operational group using the oper-group command.
- Associate the IP interface to the operational group using the monitor-oper-group command.

The status of the operational group is dictated by the status of one or more members according to the following rules:

- The operational group goes down if all the objects in the operational group go down. The operational group comes up if at least one of the components is up.
- An object in the group is considered down if it is not forwarding traffic in at least one direction. That could be because the operational state is down or the direction is blocked through some validation mechanism.
- If a group is configured but no members are specified yet, its status is considered up.
- As soon as the first object is configured the status of the operational group is dictated by the status of the provisioned members.
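The status rules above can be modeled in a few lines of Python. This is a sketch of the stated rules only; the `Member` and `OperGroup` classes and their field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Member:
    """A SAP or spoke SDP that belongs to an operational group."""
    name: str
    forwards_ingress: bool = True
    forwards_egress: bool = True

    @property
    def up(self) -> bool:
        # Down if traffic is not forwarded in at least one direction.
        return self.forwards_ingress and self.forwards_egress


@dataclass
class OperGroup:
    name: str
    members: List[Member] = field(default_factory=list)

    @property
    def up(self) -> bool:
        if not self.members:
            return True                      # no members yet: considered up
        return any(m.up for m in self.members)


g1 = OperGroup("g1")
print(g1.up)                                 # empty group
g1.members.append(Member("1/1/1:2001", forwards_egress=False))
print(g1.up)                                 # only member is blocked in one direction
```

A monitoring object, such as an IP interface, would then derive its own operational state from `g1.up`.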

The following configuration shows the operational group g1, the VPLS SAP that is mapped to it and the IP interfaces in IES service 2001 monitoring the operational group g1. This example uses an R‑VPLS context.

Operational group g1 has a single SAP (1/1/1:2001) mapped to it and the IP interfaces in the IES service 2001 derive their state from the state of operational group g1.

MD-CLI

In the MD-CLI, the VPLS instance includes the name v1. The IES interface links to the VPLS using the vpls command.

    [ex:/configure service]
    A:admin@node-2# info
        oper-group "g1" {
        }
        ies "2001" {
            customer "1"
            interface "i2001" {
                monitor-oper-group "g1"
                vpls "v1" {
                }
                ipv4 {
                    primary {
                        address 192.168.1.1
                        prefix-length 24
                    }
                }
            }
        }
        vpls "v1" {
            admin-state enable
            service-id 1
            customer "1"
            routed-vpls {
            }
            stp {
                admin-state disable
            }
            sap 1/1/1:2001 {
                oper-group "g1"
                eth-cfm {
                    mep md-admin-name "1" ma-admin-name "1" mep-id 1 {
                    }
                }
            }
            sap 1/1/2:2001 {
                admin-state enable
            }
            sap 1/1/3:2001 {
                admin-state enable
            }
        }

classic CLI

In the classic CLI, the VPLS instance includes the allow-ip-int-bind command and the name v1. The IES interface links to the VPLS using the vpls command.

    A:node-2>config>service# info
    ----------------------------------------------
        oper-group "g1" create
        exit
        vpls 1 name "v1" customer 1 create
            allow-ip-int-bind
            exit
            stp
                shutdown
            exit
            sap 1/1/1:2001 create
                oper-group "g1"
                eth-cfm
                    mep 1 domain 1 association 1 direction down
                        ccm-enable
                        no shutdown
                    exit
                exit
            exit
            sap 1/1/2:2001 create
                no shutdown
            exit
            sap 1/1/3:2001 create
                no shutdown
            exit
            no shutdown
        exit
        ies 2001 name "2001" customer 1 create
            shutdown
            interface "i2001" create
                monitor-oper-group "g1"
                address 192.168.1.1/24
                vpls "v1"
                exit
            exit
        exit
    ----------------------------------------------

Subscriber interfaces

Subscriber interfaces are composed of a combination of two key technologies, subscriber interfaces and group interfaces. While the subscriber interface defines the subscriber subnets, the group interfaces are responsible for aggregating the SAPs.

Subscriber interfaces apply only to the 7450 ESS and 7750 SR.

subscriber interface

This is an interface that allows the sharing of a subnet among one or many group interfaces in the routed CO model.

group interface

This interface aggregates multiple SAPs on the same port.

redundant interface

This is a special spoke-terminated Layer 3 interface. It is used in a Layer 3 routed CO dual-homing configuration to shunt downstream (network to subscriber) traffic to the active node for a specific subscriber.

SAPs

Encapsulations

The following SAP encapsulations are supported on the 7750 SR and 7950 XRS VPRN service:

- Ethernet null
- Ethernet dot1q
- QinQ
- LAG
- Tunnel (IPsec or GRE)

Pseudowire SAPs

Pseudowire SAPs are supported on VPRN interfaces for the 7750 SR in the same way as on IES interfaces.

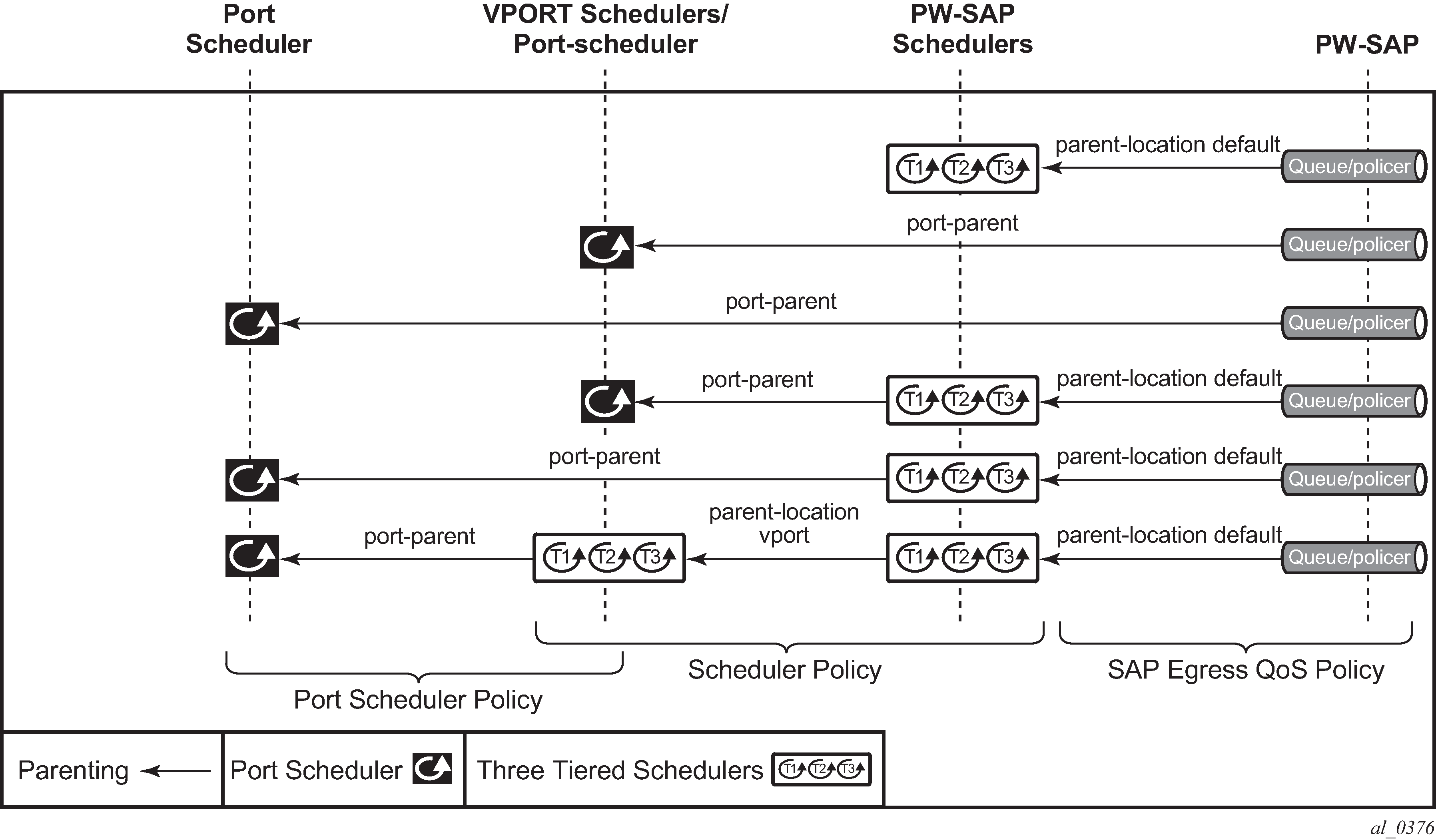

QoS policies

When applied to a VPRN SAP, service ingress QoS policies only create the unicast queues defined in the policy if PIM is not configured on the associated IP interface; if PIM is configured, the multipoint queues are applied as well.

With VPRN services, service egress QoS policies function as with other services where the class-based queues are created as defined in the policy.

Both Layer 2 and Layer 3 criteria can be used in the QoS policies for traffic classification in a VPRN.

Filter policies

Ingress and egress IPv4 and IPv6 filter policies can be applied to VPRN SAPs.

DSCP marking

Specific DSCP, forwarding class, and Dot1P command options can be specified to be used by every protocol packet generated by the VPRN. This enables prioritization or de-prioritization of every protocol (as required). The markings effect a change in behavior on ingress when queuing. For example, if OSPF is not enabled, then traffic can be de-prioritized to best effort (be) DSCP. This change de-prioritizes OSPF traffic to the CPU complex.

DSCP marking for internally generated control and management traffic is configured by setting the DSCP value to be used for the specific application. This can be configured per routing instance. For example, OSPF packets can carry a different DSCP marking for the base instance than for a VPRN service. ISIS and ARP traffic is not IP-generated traffic, so its DSCP marking is not configurable. See DSCP/FC marking.

When an application is configured to use a specified DSCP value then the MPLS EXP, Dot1P bits are marked in accordance with the network or access egress policy as it applies to the logical interface the packet is egressing.

The DSCP value can be set per application. This setting is forwarded to the egress line card. The egress line card does not alter the coded DSCP value and marks the LSP-EXP and IEEE 802.1p (Dot1P) bits according to the appropriate network or access QoS policy.

| Protocol | IPv4 | IPv6 | DSCP marking | Dot1P marking | Default FC |
|---|---|---|---|---|---|
| ARP | - | - | - | Yes | NC |
| BGP | Yes | Yes | Yes | Yes | NC |
| BFD | Yes | - | Yes | Yes | NC |
| RIP | Yes | Yes | Yes | Yes | NC |
| PIM (SSM) | Yes | Yes | Yes | Yes | NC |
| OSPF | Yes | Yes | Yes | Yes | NC |
| SMTP | Yes | - | - | - | AF |
| IGMP/MLD | Yes | Yes | Yes | Yes | AF |
| Telnet | Yes | Yes | Yes | Yes | AF |
| TFTP | Yes | - | Yes | Yes | AF |
| FTP | Yes | - | - | - | AF |
| SSH (SCP) | Yes | Yes | Yes | Yes | AF |
| SNMP (get, set, and so on) | Yes | Yes | Yes | Yes | AF |
| SNMP trap/log | Yes | Yes | Yes | Yes | AF |
| syslog | Yes | Yes | Yes | Yes | AF |
| OAM ping | Yes | Yes | Yes | Yes | AF |
| ICMP ping | Yes | Yes | Yes | Yes | AF |
| Traceroute | Yes | Yes | Yes | Yes | AF |
| TACPLUS | Yes | Yes | Yes | Yes | AF |
| DNS | Yes | Yes | Yes | Yes | AF |
| SNTP/NTP | Yes | - | - | - | AF |
| RADIUS | Yes | - | - | - | AF |
| Cflowd | Yes | - | - | - | AF |
| DHCP (7450 ESS and 7750 SR only) | Yes | Yes | Yes | Yes | AF |
| Bootp | Yes | - | - | - | AF |
| IPv6 Neighbor Discovery | Yes | - | - | - | NC |

Default DSCP mapping table

| DSCP name | DSCP value (decimal) | DSCP value (hexadecimal) | DSCP value (binary) | Label |
|---|---|---|---|---|
| Default | 0 | 0x00 | 0b000000 | be |
| nc1 | 48 | 0x30 | 0b110000 | h1 |
| nc2 | 56 | 0x38 | 0b111000 | nc |
| ef | 46 | 0x2e | 0b101110 | ef |
| af11 | 10 | 0x0a | 0b001010 | assured |
| af12 | 12 | 0x0c | 0b001100 | assured |
| af13 | 14 | 0x0e | 0b001110 | assured |
| af21 | 18 | 0x12 | 0b010010 | l1 |
| af22 | 20 | 0x14 | 0b010100 | l1 |
| af23 | 22 | 0x16 | 0b010110 | l1 |
| af31 | 26 | 0x1a | 0b011010 | l1 |
| af32 | 28 | 0x1c | 0b011100 | l1 |
| af33 | 30 | 0x1e | 0b011110 | l1 |
| af41 | 34 | 0x22 | 0b100010 | h2 |
| af42 | 36 | 0x24 | 0b100100 | h2 |
| af43 | 38 | 0x26 | 0b100110 | h2 |
| default* | 0 | | | |

\* The default forwarding class mapping is used for all DSCP names or values for which there is no explicit forwarding class mapping.
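The mapping can be expressed as a simple lookup with a fallback, which also makes it easy to check that the decimal, hexadecimal, and binary columns agree for a given value. The following Python sketch is illustrative only; the `FC_BY_DSCP` dictionary, the function names, and the choice of `be` as the fallback forwarding class (taken from the Default row) are assumptions.

```python
# DSCP decimal value -> forwarding class label, from the default mapping table.
FC_BY_DSCP = {
    48: "h1", 56: "nc", 46: "ef",
    10: "assured", 12: "assured", 14: "assured",
    18: "l1", 20: "l1", 22: "l1",
    26: "l1", 28: "l1", 30: "l1",
    34: "h2", 36: "h2", 38: "h2",
}
DEFAULT_FC = "be"  # assumption: unmapped values fall back to best effort


def fc_for_dscp(value: int) -> str:
    """Return the forwarding class for a 6-bit DSCP value."""
    if not 0 <= value <= 63:
        raise ValueError("DSCP is a 6-bit field (0-63)")
    return FC_BY_DSCP.get(value, DEFAULT_FC)


def render(value: int) -> str:
    """Format a DSCP value as decimal, hexadecimal and 6-bit binary."""
    return f"{value} {value:#04x} 0b{value:06b}"


print(render(46), fc_for_dscp(46))
```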

Configuration of TTL propagation for VPRN routes

This feature allows the separate configuration of TTL propagation for in transit and CPM generated IP packets, at the ingress LER within a VPRN service context. Use the following commands to configure TTL propagation.

configure router ttl-propagate vprn-local

configure router ttl-propagate vprn-transit

You can enable TTL propagation behavior separately as follows:

for locally generated packets by CPM (using the vprn-local command)

for user and control packets in transit at the node (using the vprn-transit command)

The following command options can be specified:

all – enables TTL propagation from the IP header into all labels in the stack, for VPN-IPv4 and VPN-IPv6 packets forwarded in the context of all VPRN services in the system.

vc-only – reverts to the default behavior by which the IP TTL is propagated into the VC label but not to the transport labels in the stack. You can explicitly set the default behavior by configuring the vc-only value.

none – disables the propagation of the IP TTL to all labels in the stack, including the VC label. This is needed for a transparent operation of UDP traceroute in VPRN inter-AS Option B such that the ingress and egress ASBR nodes are not traced.

In the classic CLI, the vprn-local command does not use a no version.

In the MD-CLI, use the delete command to remove the configuration.

Use the following commands to override the global configuration within each VPRN instance.

configure service vprn ttl-propagate local

configure service vprn ttl-propagate transit

The default behavior for a VPRN instance is to inherit the global configuration for the same command. You can explicitly set the default behavior by configuring the inherit value.

In the classic CLI, the local and transit commands do not have no versions.

In the MD-CLI, use the delete command to remove the configuration.

The commands do not apply when the VPRN packet is forwarded over a GRE transport tunnel.
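The interaction between the global setting, the per-VPRN override, and the three command options can be sketched in Python. This is a simplified model, not the router's behavior in every case: the function names are hypothetical, GRE transport is not modeled, and the value 255 written into labels that do not receive the IP TTL is an assumption of this sketch.

```python
from typing import List


def effective_mode(vprn_setting: str, global_setting: str) -> str:
    """Resolve the per-VPRN value ("inherit", "all", "vc-only", "none")."""
    return global_setting if vprn_setting == "inherit" else vprn_setting


def label_ttls(ip_ttl: int, transport_labels: int, mode: str,
               unpropagated_ttl: int = 255) -> List[int]:
    """Return the TTLs of the label stack, transport labels first, VC label last.

    unpropagated_ttl is an assumed value for labels that do not copy the IP TTL.
    """
    if mode not in ("all", "vc-only", "none"):
        raise ValueError(f"unknown mode: {mode}")
    transport = ip_ttl if mode == "all" else unpropagated_ttl
    vc = unpropagated_ttl if mode == "none" else ip_ttl
    return [transport] * transport_labels + [vc]


mode = effective_mode("inherit", "vc-only")
print(mode, label_ttls(64, 2, mode))
```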

If a packet is received in a VPRN context and a lookup is done in the Global Routing Table (GRT) (for example, when leaking to the GRT is enabled), the behavior of the TTL propagation is governed by the LSP shortcut configuration as follows:

- when the matching route is an RSVP LSP shortcut

      configure router mpls shortcut-transit-ttl-propagate

- when the matching route is an LDP LSP shortcut

      configure router ldp shortcut-transit-ttl-propagate

When the matching route is an RFC 8277 label route or a 6PE route, TTL propagation is governed by the BGP label route configuration.

When a packet is received on one VPRN instance and is redirected using Policy Based Routing (PBR) to be forwarded in another VPRN instance, the TTL propagation is governed by the configuration of the outgoing VPRN instance.

Packets that are forwarded in different contexts can use different TTL propagation over the same BGP tunnel, depending on the TTL configuration of each context. An example of this may be VPRN using a BGP tunnel and an IPv4 packet forwarded over a BGP label route of the same prefix as the tunnel.

CE to PE routing protocols

The 7750 SR and 7950 XRS VPRN supports the following PE to CE routing protocols:

BGP

Static

RIP

OSPF

PE to PE tunneling mechanisms

The 7750 SR and 7950 XRS support multiple mechanisms to provide transport tunnels for the forwarding of traffic between PE routers within the 2547bis network.

The 7750 SR and 7950 XRS VPRN implementation supports the use of:

RSVP-TE protocol to create tunnel LSPs between PE routers

LDP protocol to create tunnel LSPs between PE routers

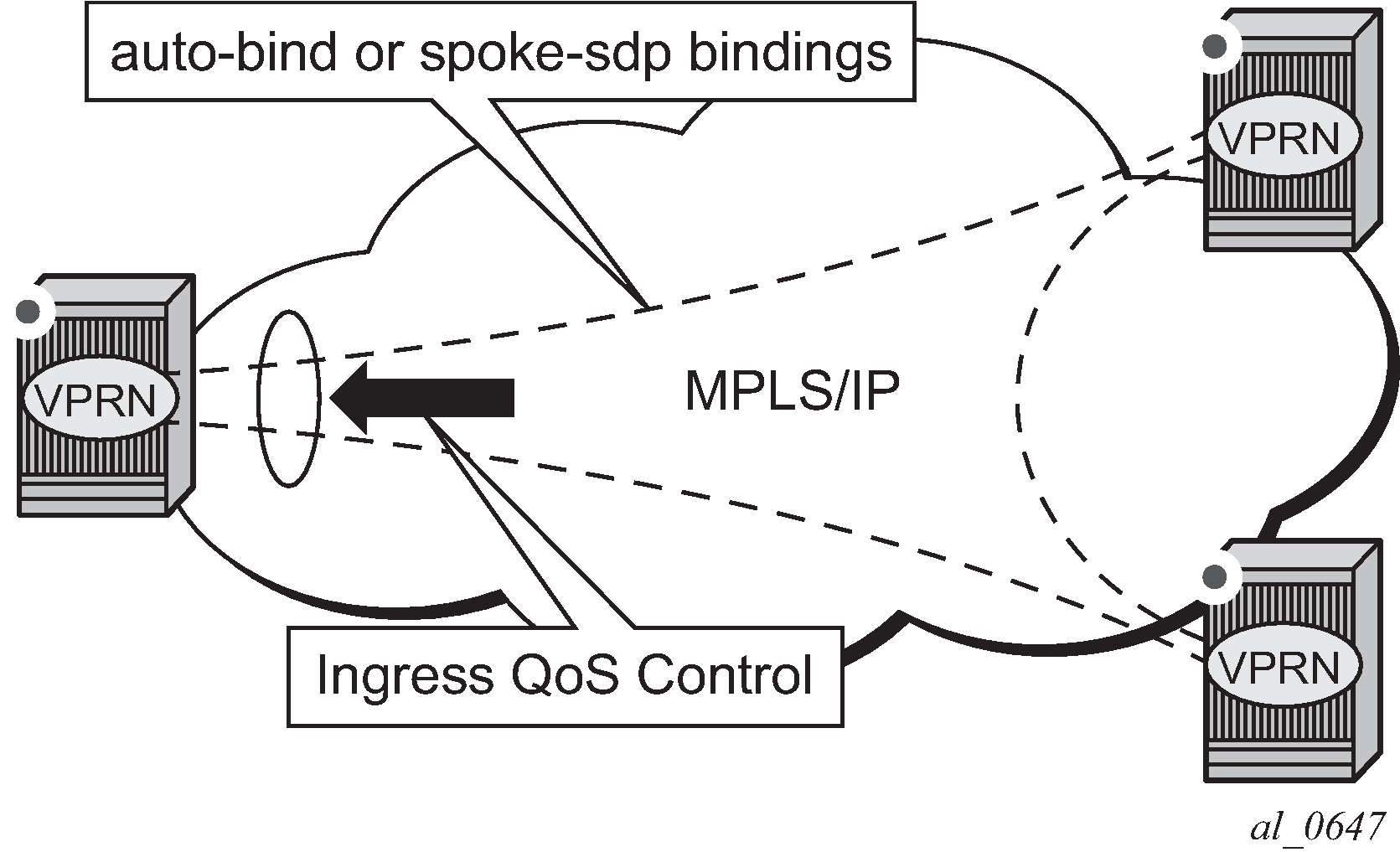

GRE tunnels between PE routers

These transport tunnel mechanisms provide the flexibility of using dynamically created LSPs where the service tunnels are automatically bound (the autobind feature) and the ability to provide specific VPN services with their own transport tunnels by explicitly binding SDPs if needed. When autobind is used, all services traverse the same LSPs; autobind does not allow alternate tunneling mechanisms (like GRE) or the ability to craft sets of LSPs with bandwidth reservations for specific customers, as is available with explicit SDPs for the service.

Per VRF route limiting

The 7750 SR and 7950 XRS allow setting the maximum number of routes that can be accepted in the VRF for a VPRN service. There are options to specify a percentage threshold at which to generate an event that the VRF table is near full and an option to disable additional route learning when full or only generate an event.
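The route-limit behavior can be sketched as a small admission check. This Python example is illustrative only: the function name, the event names, and the `log_only` flag are hypothetical, and the sketch raises the threshold event on every addition at or above the threshold rather than once per crossing.

```python
from typing import List, Tuple


def on_route_add(current: int, maximum: int, threshold_pct: int,
                 log_only: bool) -> Tuple[bool, List[str]]:
    """Decide whether one more route is accepted into a VRF.

    Returns (accepted, events) for a VRF that already holds `current` routes.
    """
    events: List[str] = []
    if current >= maximum:
        events.append("vrf-full")
        # log_only: still learn the route, only generate the event.
        return log_only, events
    new_count = current + 1
    if new_count * 100 >= maximum * threshold_pct:
        events.append("vrf-near-full")
    return True, events


print(on_route_add(79, 100, 80, log_only=False))
```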

Spoke SDPs

Distributed services use service distribution points (SDPs) to direct traffic to another router via service tunnels. SDPs are created on each participating router and then bound to a specific service. SDPs can be created as either GRE or MPLS. See the 7450 ESS, 7750 SR, 7950 XRS, and VSR Services Overview Guide for information about configuring SDPs.

This feature provides the ability to cross-connect traffic entering on a spoke SDP, used for Layer 2 services (VLLs or VPLS), on to an IES or VPRN service. From a logical point of view, the spoke SDP entering on a network port is cross-connected to the Layer 3 service as if it entered by a service SAP. The main exception to this is traffic entering the Layer 3 service by a spoke SDP is handled with network QoS policies and not access QoS policies.

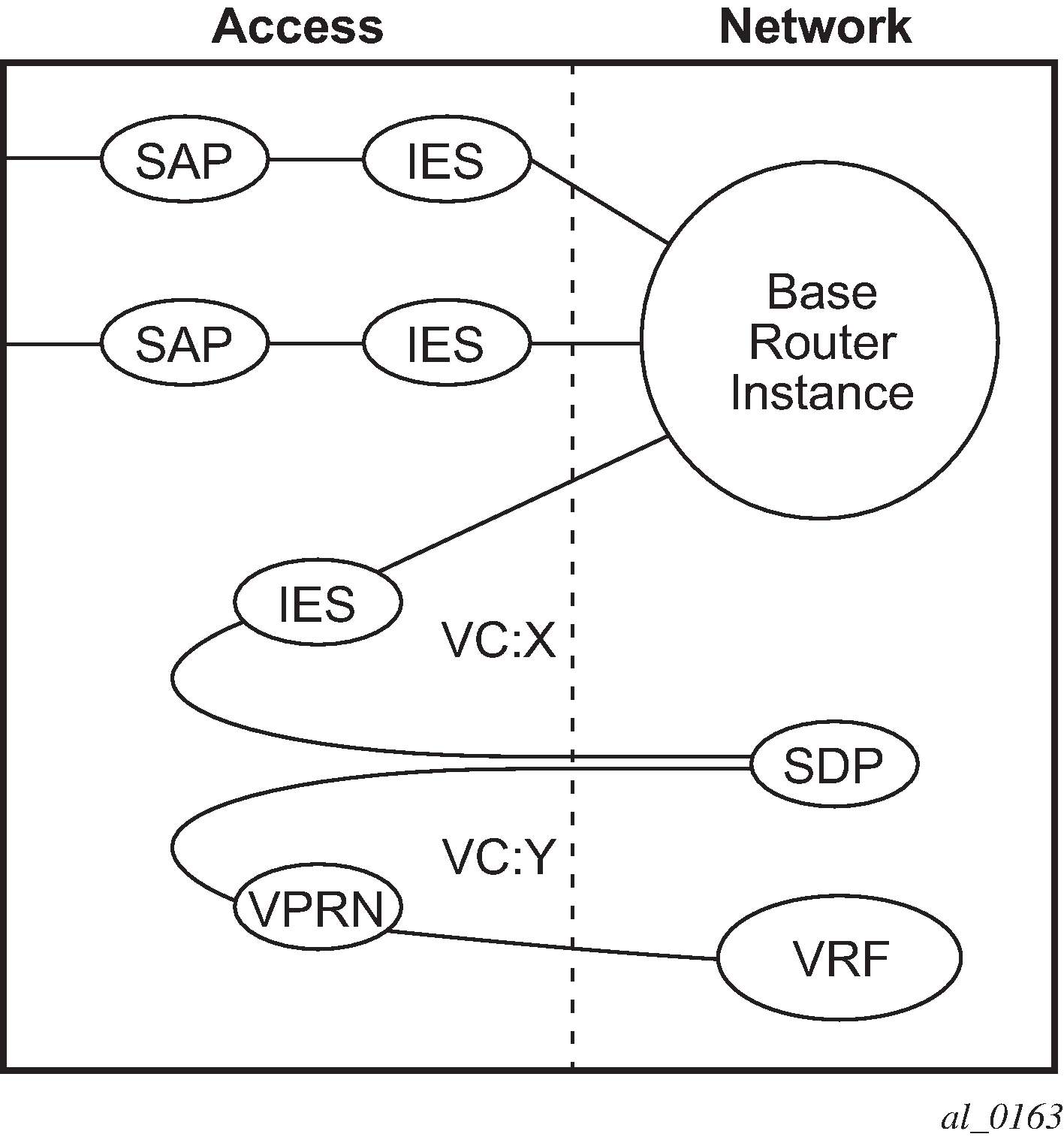

SDP-ID and VC label service identifiers depicts traffic terminating on a specific IES or VPRN service that is identified by the SDP ID and VC label present in the service packet.

See ‟VCCV BFD support for VLL, Spoke SDP Termination on IES and VPRN, and VPLS Services” in the 7450 ESS, 7750 SR, 7950 XRS, and VSR Layer 2 Services and EVPN Guide for information about using VCCV BFD in spoke-SDP termination.

show router ldp bindings services

T-LDP status signaling for spoke-SDPs terminating on IES/VPRN

T-LDP status signaling and PW active/standby signaling capabilities are supported on Ipipe and Epipe spoke SDPs.

Spoke SDP termination on an IES or VPRN provides the ability to cross-connect traffic entering on a spoke SDP, used for Layer 2 services (VLLs or VPLS), on to an IES or VPRN service. From a logical point of view the spoke SDP entering on a network port is cross-connected to the Layer 3 service as if it had entered using a service SAP. The main exception to this is traffic entering the Layer 3 service using a spoke SDP is handled with network QoS policies instead of access QoS policies.

When a SAP down or SDP binding down status message is received by the PE on which the Ipipe or Ethernet spoke SDP is terminated on an IES or VPRN interface, the interface is brought down and all associated routes are withdrawn, in the same way as when the spoke SDP goes down locally. The same actions are taken when the standby T-LDP status message is received by the IES/VPRN PE.

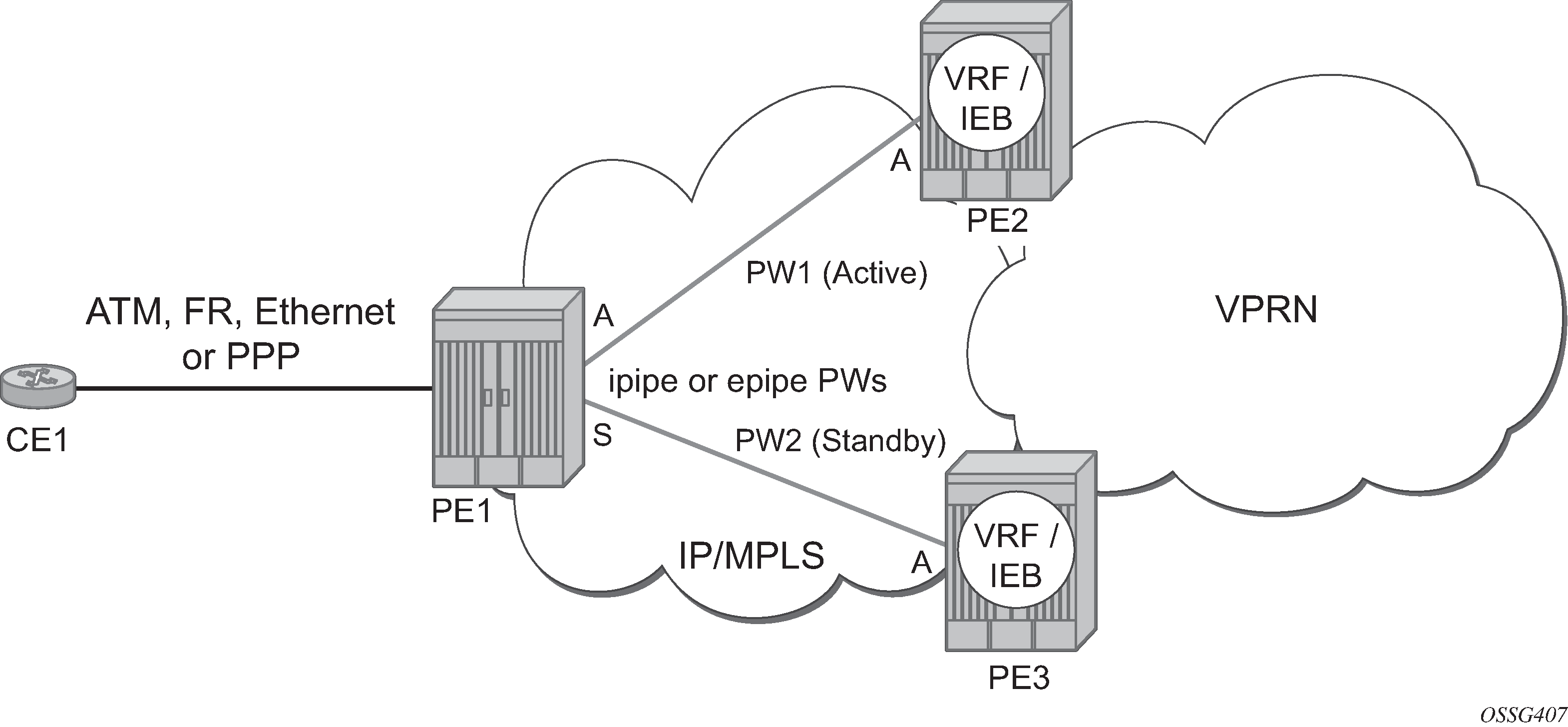

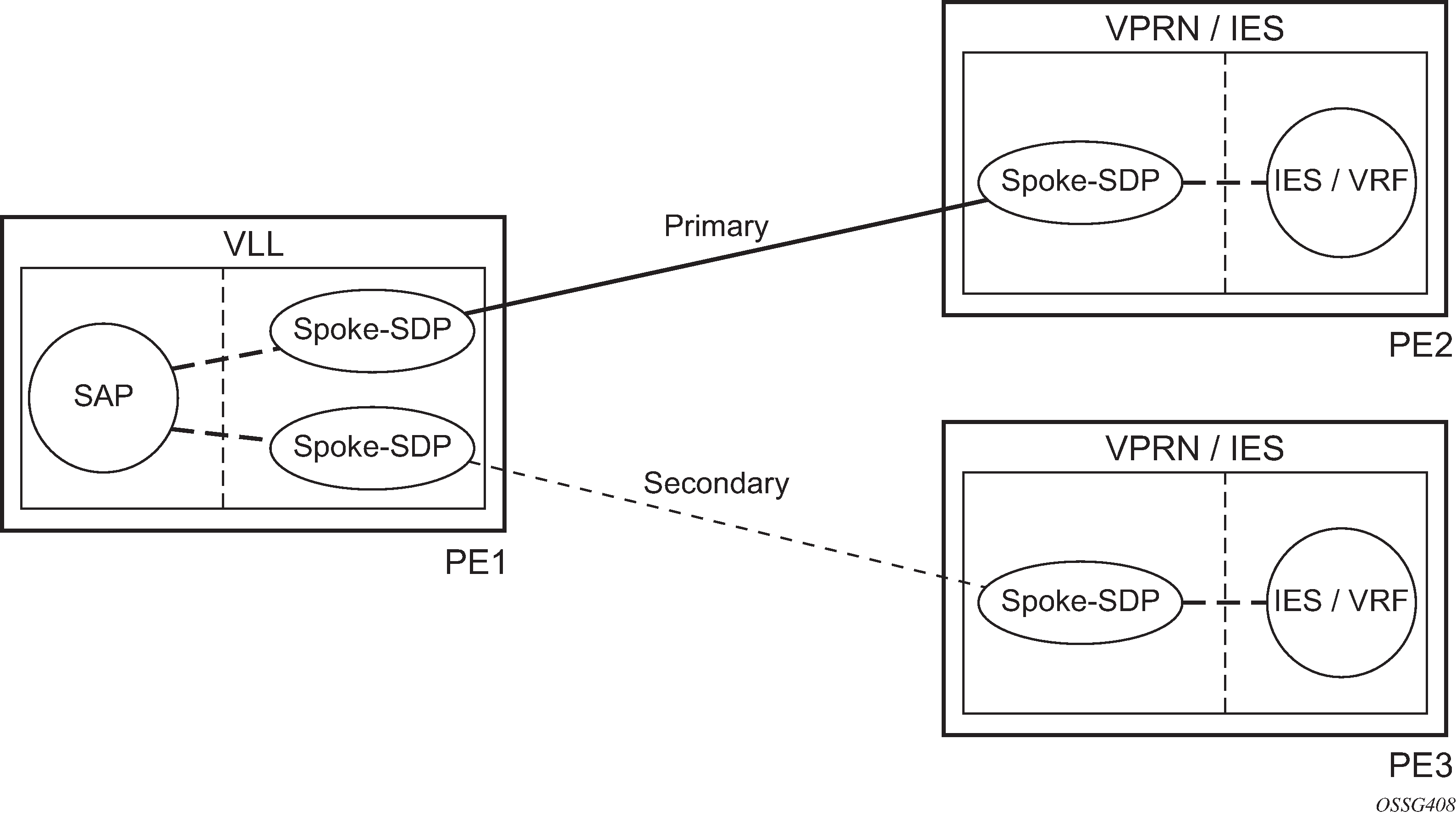

This feature can be used to provide redundant connectivity to a VPRN or IES from a PE providing a VLL service, as shown in Active/standby VRF using resilient Layer 2 circuits.

Spoke SDP redundancy into IES/VPRN

This feature can be used to provide redundant connectivity to a VPRN or IES from a PE providing a VLL service, as shown in Active/standby VRF using resilient Layer 2 circuits, using either Epipe or Ipipe spoke-SDPs. This feature is supported on the 7450 ESS and 7750 SR only.

In Active/standby VRF using resilient Layer 2 circuits, PE1 terminates two spoke SDPs that are bound to one SAP connected to CE1. PE1 chooses to forward traffic on one of the spoke SDPs (the active spoke-SDP), while blocking traffic on the other spoke SDP (the standby spoke SDP) in the transmit direction. PE2 and PE3 take any spoke SDPs for which PW forwarding standby has been signaled by PE1 to an operationally down state.

The 7450 ESS, 7750 SR, and 7950 XRS routers are expected to fulfill both functions (VLL and VPRN/IES PE), while the 7705 SAR must be able to fulfill the VLL PE function. Spoke SDP redundancy model illustrates the model for spoke SDP redundancy into a VPRN or IES.

Weighted ECMP for spoke-SDPs terminating on IES/VPRN and R-VPLS interfaces

ECMP and weighted ECMP into RSVP-TE and SR-TE LSPs are supported for Ipipe and Epipe spoke SDPs terminating on IP interfaces in an IES or VPRN, or for spoke SDP termination on a routed VPLS. They are also supported for SDPs using LDP over RSVP tunnels. The following example shows the configuration of weighted ECMP under the SDP used by the service.

MD-CLI

    [ex:/configure service]
    A:admin@node-2# info
    ...
        sdp 1 {
            delivery-type mpls
            weighted-ecmp true
        }

classic CLI

    A:node-2>config>service# info
    ----------------------------------------------
            sdp 1 mpls create
                shutdown
                weighted-ecmp
            exit

When a service uses a provisioned SDP on which weighted ECMP is configured, a path is selected based on the configured hash. Paths are then load balanced across the LSPs used by the SDP according to normalized LSP load-balancing weights. If one or more LSPs in the ECMP set to a specific next hop have no load-balancing-weight value configured, regular ECMP spraying is used.
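The selection logic above can be illustrated with a short sketch. It is not the router's hashing algorithm: the function name `select_lsp`, the tuple layout, and the use of a plain integer as the flow hash are assumptions made for illustration. Normalization is implicit, because each LSP receives a share of the hash space equal to its weight divided by the sum of all weights.

```python
from typing import Optional, Sequence, Tuple

# (LSP name, load-balancing weight or None when no weight is configured)
Lsp = Tuple[str, Optional[int]]


def select_lsp(lsps: Sequence[Lsp], flow_hash: int) -> str:
    """Pick an LSP for a flow, given a precomputed non-negative flow hash."""
    if not lsps:
        raise ValueError("the ECMP set is empty")

    # If any LSP lacks a weight, fall back to regular (unweighted) ECMP.
    if any(weight is None for _, weight in lsps):
        return lsps[flow_hash % len(lsps)][0]

    # Weighted ECMP: map the hash onto a range split in proportion to weights.
    total = sum(weight for _, weight in lsps)
    point = flow_hash % total
    for name, weight in lsps:
        if point < weight:
            return name
        point -= weight
    raise AssertionError("unreachable when all weights are positive")
```

With weights 1 and 3, hash values 0 through 3 map to the first LSP once and to the second LSP three times, so the second LSP carries about three quarters of the flows. In practice the hash would be derived from packet fields, for example `zlib.crc32(flow_key)`.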

IP-VPNs

Using OSPF in IP-VPNs

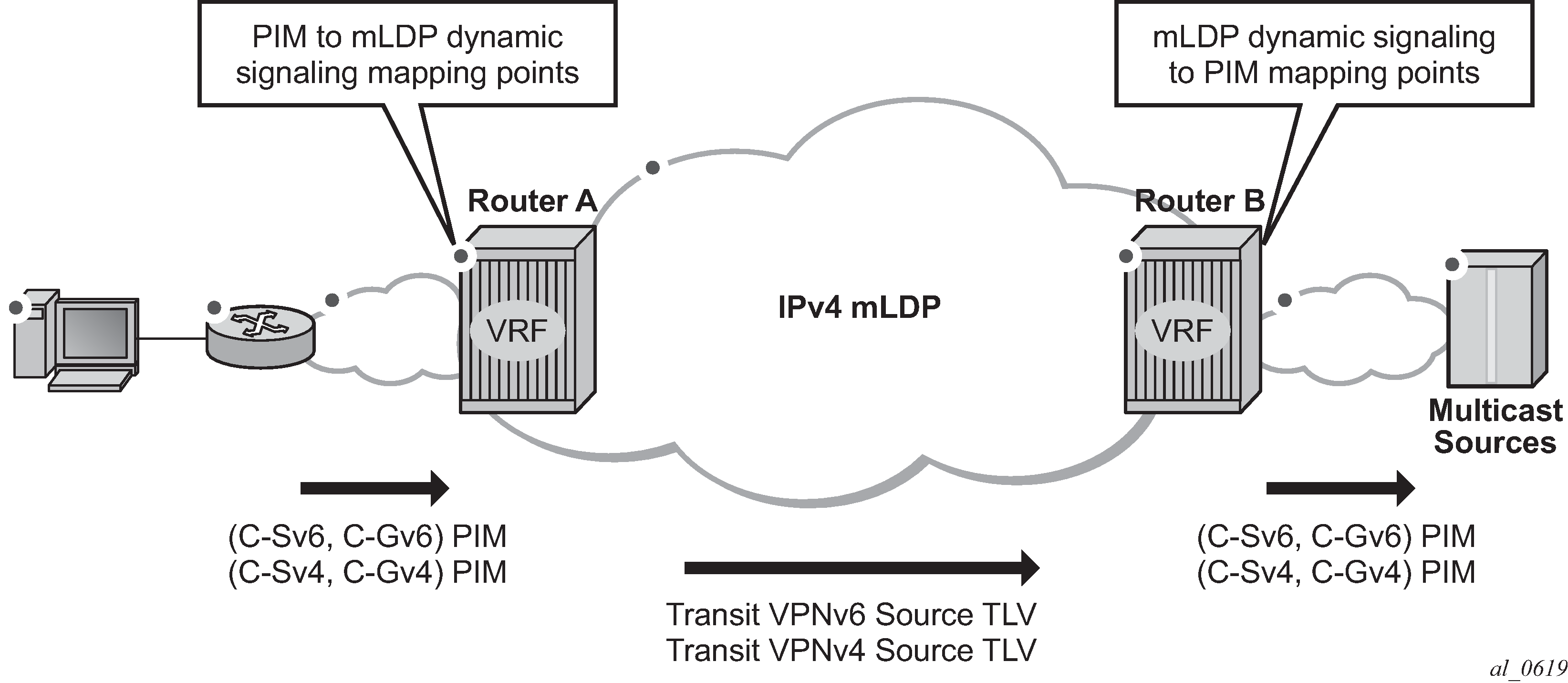

Using OSPF as the CE-to-PE routing protocol allows a network that currently runs OSPF as its IGP to migrate to an IP-VPN backbone without changing the IGP, introducing BGP as the CE-PE protocol, or relying on static routes to distribute routes into the service provider's IP-VPN. The following features are supported:

Advertisement/redistribution of BGP-VPN routes as summary (type 3) LSAs flooded to CE neighbors of the VPRN OSPF instance. This occurs if the OSPF route type (in the OSPF route type BGP extended community attribute carried with the VPN route) is not external (or NSSA) and the locally configured domain-id matches the domain-id carried in the OSPF domain ID BGP extended community attribute carried with the VPN route.

OSPF sham links; a sham link is a logical PE-to-PE unnumbered point-to-point interface that essentially rides over the PE-to-PE transport tunnel. A sham link can be associated with any area and can therefore appear as an intra-area link to CE routers attached to different PEs in the VPN.
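The condition for advertising a BGP-VPN route as a summary (type 3) LSA can be written as a simple predicate. This is a sketch under stated assumptions: the `VpnRoute` class and the function name are hypothetical, and the numeric route type values (5 for external, 7 for NSSA) follow the OSPF route type extended community defined in RFC 4577. The sketch only answers whether the type 3 condition holds; it does not model what happens to routes that fail it.

```python
from dataclasses import dataclass

# OSPF route type values from RFC 4577: 5 = external, 7 = NSSA.
EXTERNAL_ROUTE_TYPES = frozenset({5, 7})


@dataclass(frozen=True)
class VpnRoute:
    """Hypothetical view of the OSPF attributes carried with a VPN route."""
    prefix: str
    ospf_route_type: int
    ospf_domain_id: str


def advertise_as_summary_lsa(route: VpnRoute, local_domain_id: str) -> bool:
    """True if the route qualifies for advertisement as a type 3 LSA."""
    not_external = route.ospf_route_type not in EXTERNAL_ROUTE_TYPES
    same_domain = route.ospf_domain_id == local_domain_id
    return not_external and same_domain
```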

IPCP subnet negotiation

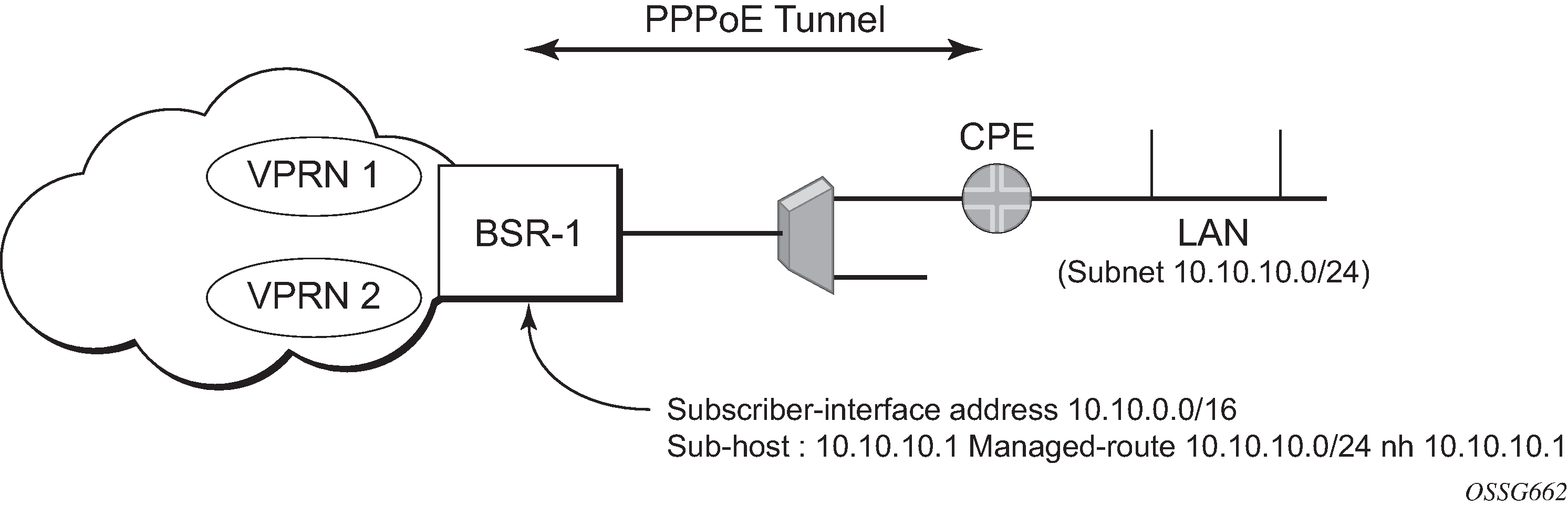

This feature enables negotiation between the Broadband Network Gateway (BNG) and the customer premises equipment (CPE) so that the CPE is allocated both an IP address and an associated subnet.

Some CPEs use the network uplink in PPPoE mode and perform the DHCP server function for all ports on the LAN side. Instead of wasting one subnet on the point-to-point uplink, these CPEs use the allocated subnet for the LAN portion, as shown in CPEs network up-link mode.

From a BNG perspective, the specific PPPoE host is allocated a subnet (instead of /32) by RADIUS, external DHCP server, or local user database. Locally, the host is associated with a managed route. This managed route is a subset of the subscriber-interface subnet (on a 7450 ESS or 7750 SR), and also, the subscriber host IP address is from the managed-route range. The negotiation between BNG and CPE allows CPE to be allocated both an IP address and associated subnet.
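The two containment rules above (the managed route lies within the subscriber-interface subnet, and the host address lies within the managed route) can be checked with Python's standard `ipaddress` module. This is an illustrative sketch only; the function name and the example addresses are assumptions, not values taken from the router.

```python
import ipaddress


def validate_managed_route(subscriber_subnet: str, managed_route: str, host_ip: str) -> bool:
    """Check that the managed route is inside the subscriber-interface subnet
    and that the subscriber host address is inside the managed route."""
    subnet = ipaddress.ip_network(subscriber_subnet)
    route = ipaddress.ip_network(managed_route)
    host = ipaddress.ip_address(host_ip)
    return route.subnet_of(subnet) and host in route
```

For instance, a host at 10.10.1.1 with managed route 10.10.1.0/29 passes the check against a subscriber-interface subnet of 10.10.0.0/16, while a managed route of 10.11.1.0/29 fails it.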

Cflowd for IP-VPNs

The cflowd feature allows service providers to collect IP flow data within the context of a VPRN. This data can be used to monitor the types and general proportions of traffic traversing a VPRN context. This data can also be shared with the VPN customer, who can see the types of traffic traversing the VPN and use the data for traffic engineering.

This feature should not be used for billing purposes. Existing queue counters are designed for this purpose and provide very accurate per bit accounting records.

Inter-AS VPRNs

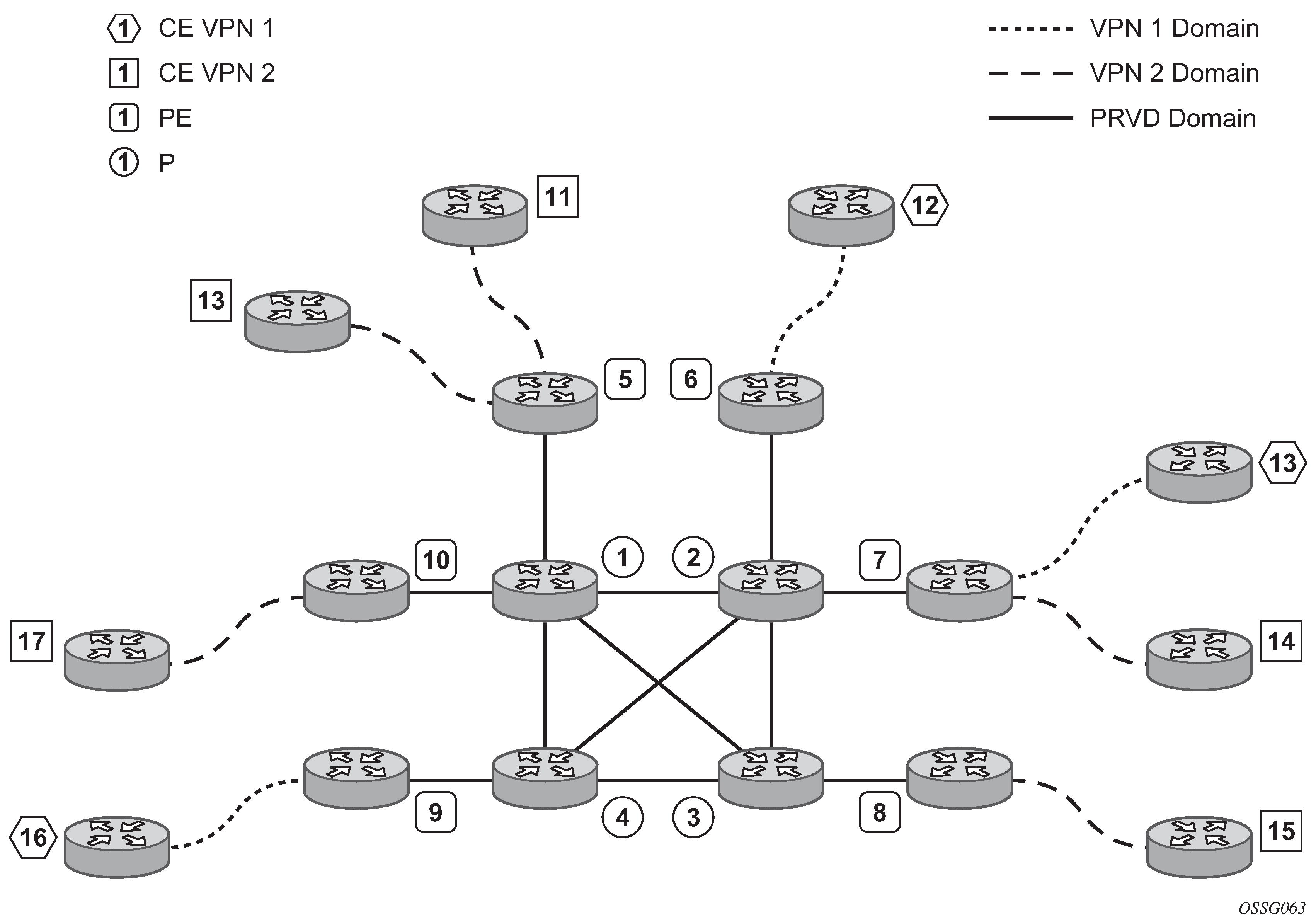

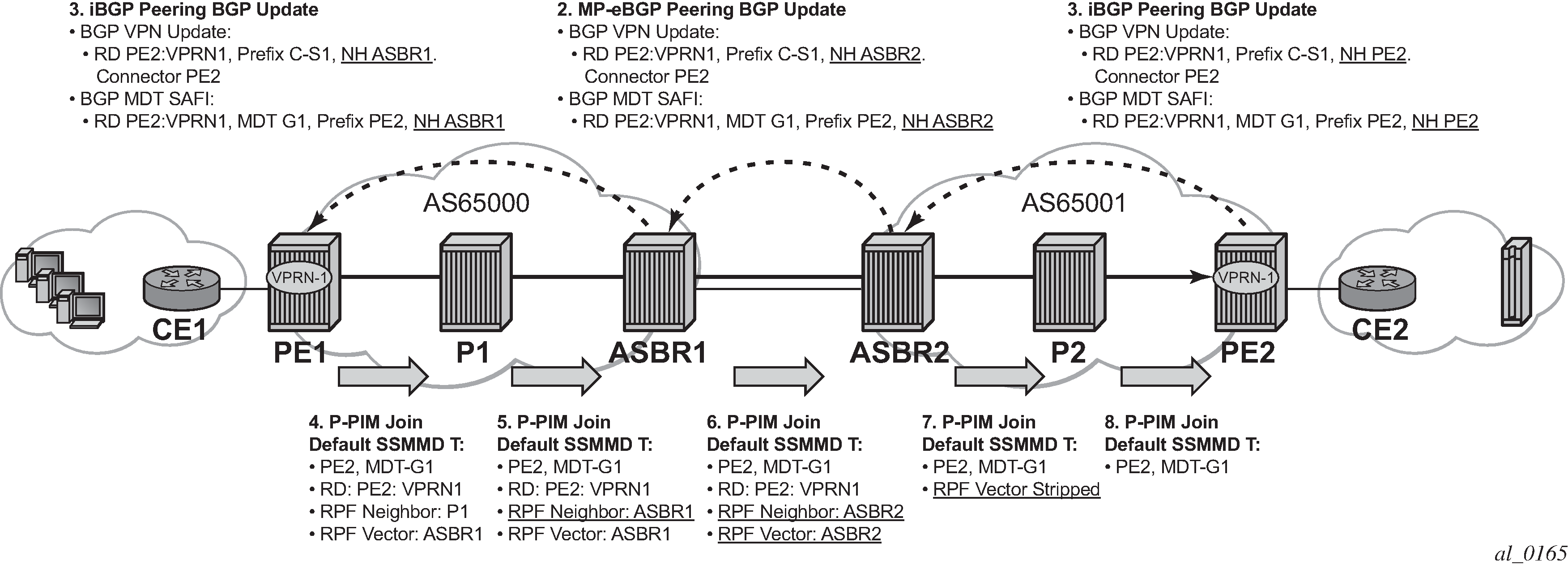

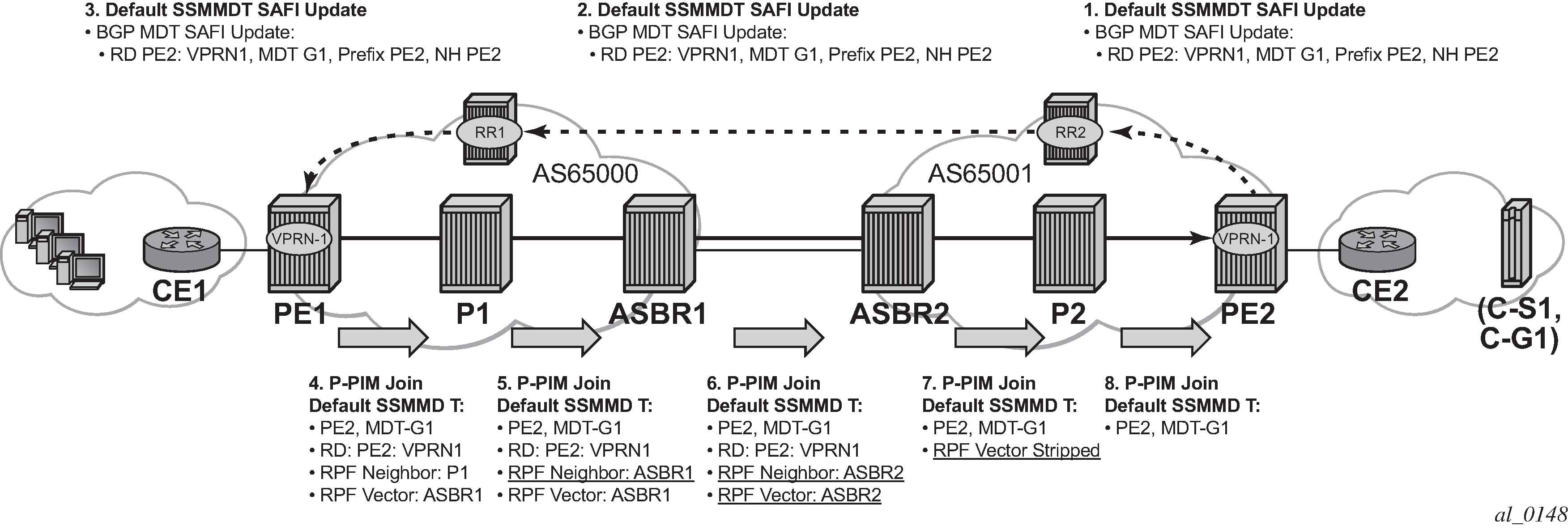

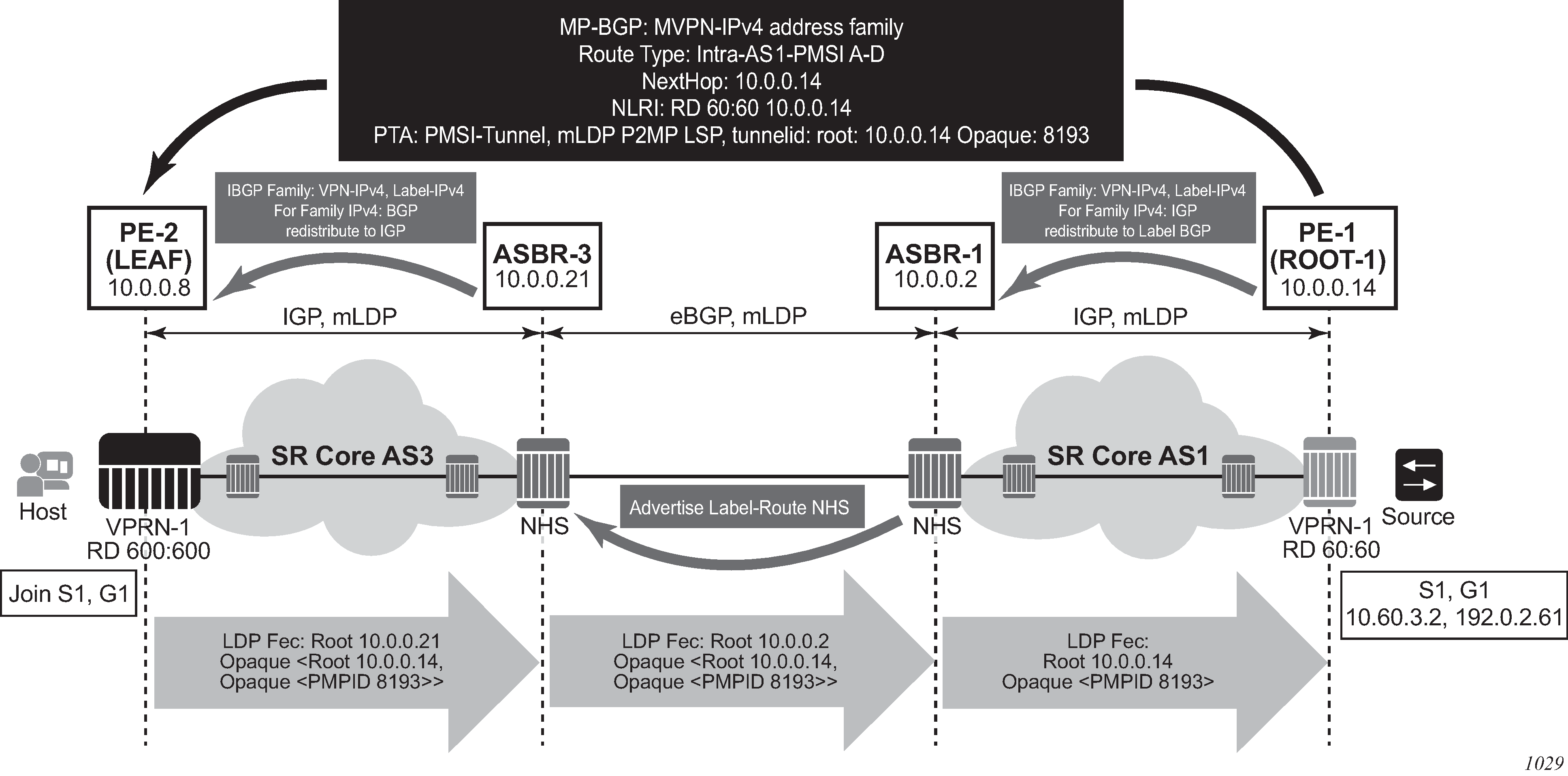

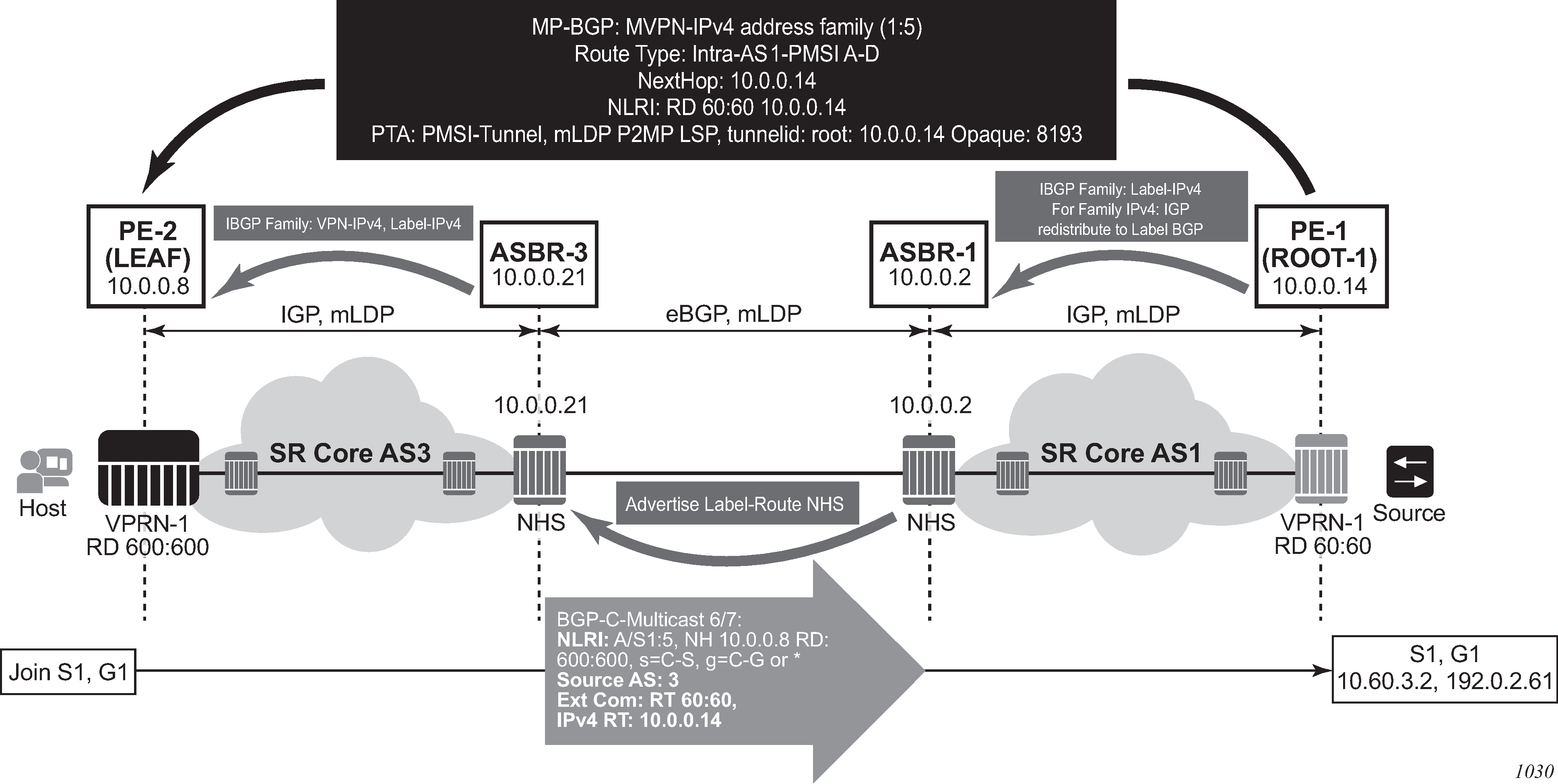

Inter-AS IP-VPN services have been driven by the popularity of IP services and service provider expansion beyond the borders of a single Autonomous System (AS) or the requirement for IP VPN services to cross the AS boundaries of multiple providers. Three options for supporting inter-AS IP-VPNs are described in RFC 4364, BGP/MPLS IP Virtual Private Networks (VPNs).

This feature applies to the 7450 ESS and 7750 SR only.

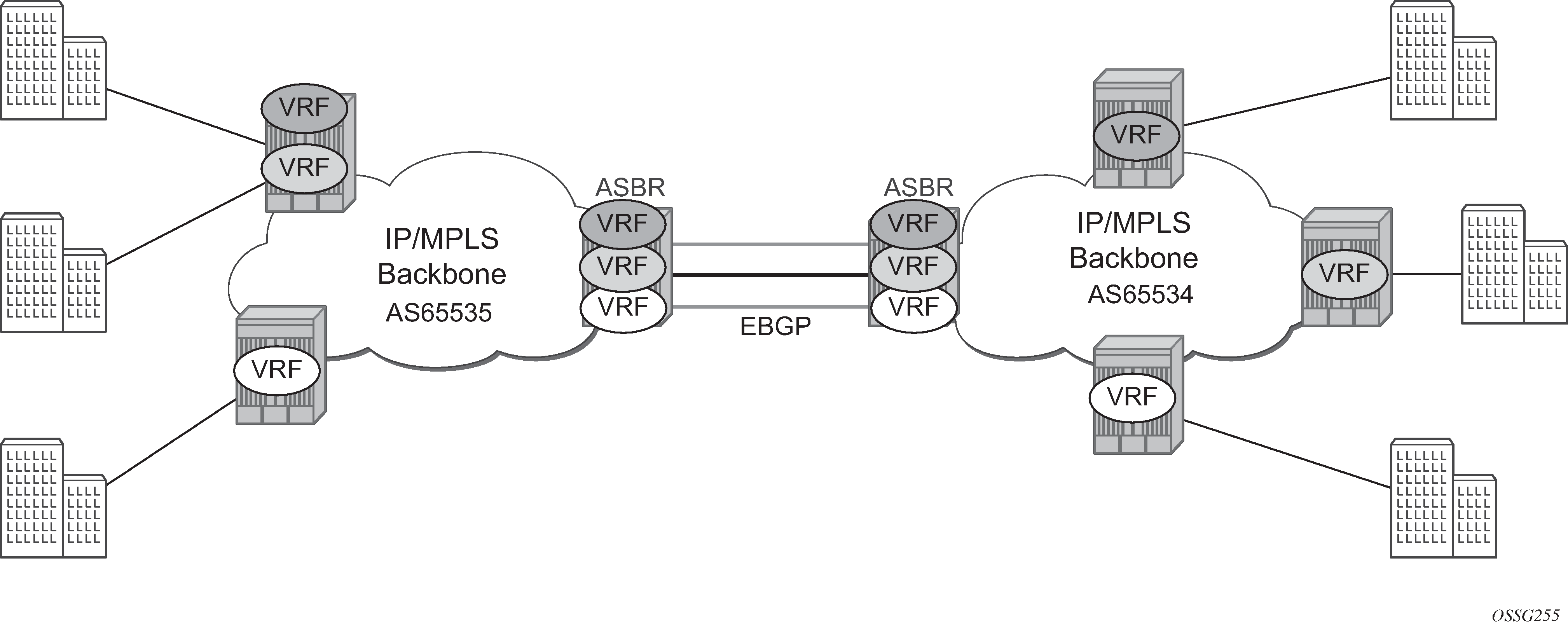

The first option, referred to as Option-A (Inter-AS Option-A: VRF-to-VRF model), is considered inherent in any implementation. This method uses a back-to-back connection between separate VPRN instances in each AS. As a result, each VPRN instance views the inter-AS connection as an external interface to a remote VPRN customer site. The back-to-back VRF connections between the ASBR nodes require individual sub-interfaces, one per VRF.

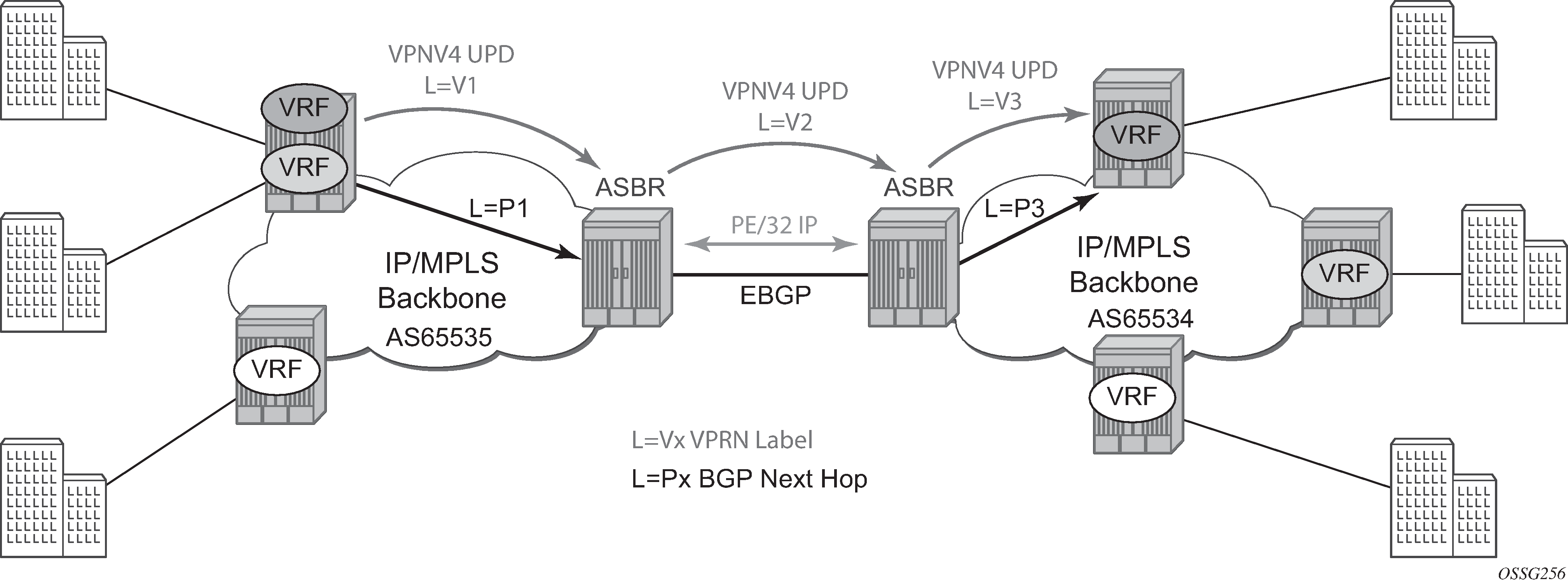

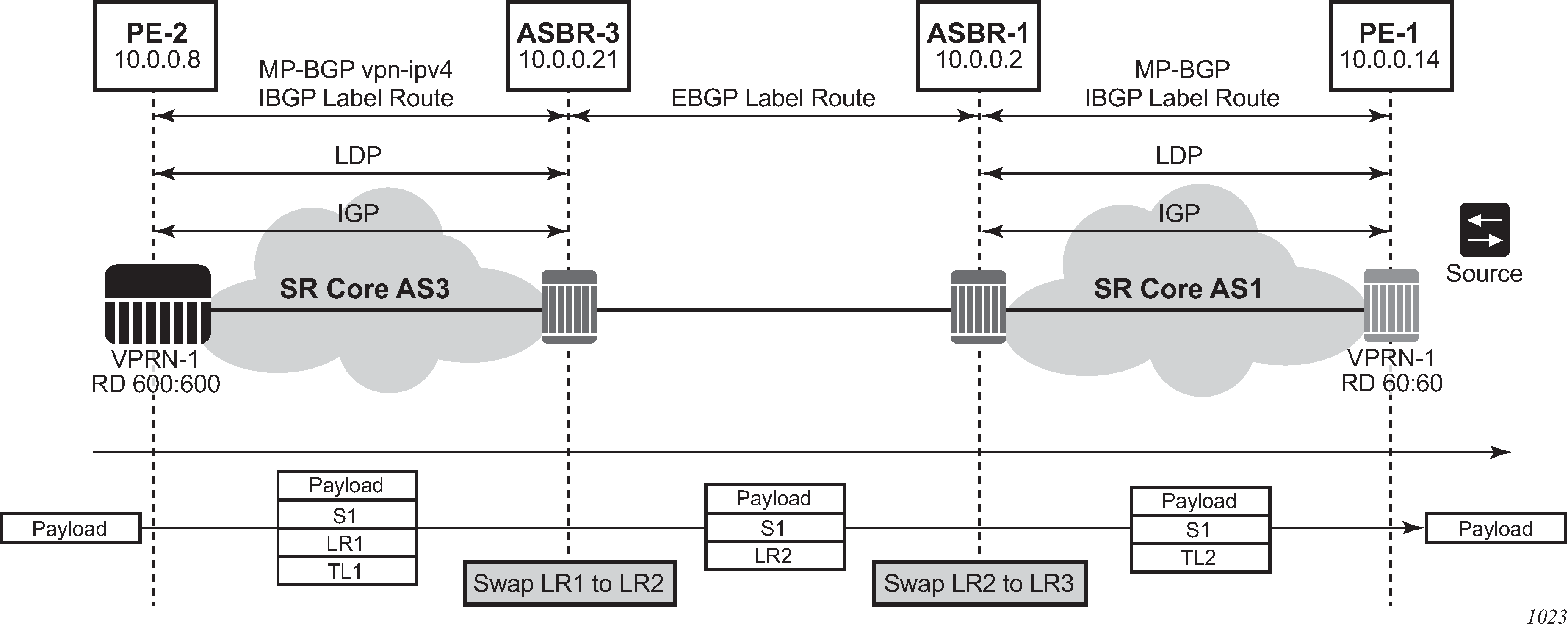

The second option, referred to as Option-B (Inter-AS Option-B), relies heavily on the AS Boundary Routers (ASBRs) as the interface between the autonomous systems. This approach enhances the scalability of the EBGP VRF-to-VRF solution by eliminating the need for per-VPRN configuration on the ASBRs. However, it requires that the ASBRs provide a control plane and forwarding plane connection between the autonomous systems. Each ASBR is connected to the PE nodes in its local autonomous system using Interior Border Gateway Protocol (IBGP), either directly or through route reflectors. This means that the ASBR receives all the VPRN information and forwards these VPN-IPv4 updates to all its EBGP peers (the remote ASBRs), using itself as the next hop. It also changes the label associated with each route. The ASBR must therefore maintain a mapping between the labels received and the labels issued for those routes. The peer ASBRs in turn forward those updates to all local IBGP peers.

With this form of inter-AS VPRN, the ASBRs perform all necessary mapping functions, and the PE routers do not need to perform any functions beyond those required in a non-inter-AS VPRN.
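The next-hop rewrite and label mapping performed by an Option-B ASBR can be sketched as follows. This is a simplified model, not the router's implementation: the `VpnUpdate` and `Asbr` classes, the label allocation scheme (a simple counter starting at an arbitrary value), and the example addresses are all assumptions made for illustration.

```python
from dataclasses import dataclass, replace
from itertools import count


@dataclass(frozen=True)
class VpnUpdate:
    """Hypothetical VPN-IPv4 update: RD-qualified prefix, next hop, and label."""
    rd_prefix: str
    next_hop: str
    label: int


class Asbr:
    def __init__(self, address: str, first_label: int = 1000):
        self.address = address
        self._labels = count(first_label)  # assumed allocator, for illustration
        self._issued = {}     # received (prefix, next hop, label) -> issued label
        self.swap_table = {}  # issued label -> (received next hop, received label)

    def readvertise(self, update: VpnUpdate) -> VpnUpdate:
        """Re-advertise an update with this ASBR as next hop and a new label."""
        key = (update.rd_prefix, update.next_hop, update.label)
        if key not in self._issued:
            issued = next(self._labels)
            self._issued[key] = issued
            # Remember how to swap the issued label back to the received one.
            self.swap_table[issued] = (update.next_hop, update.label)
        return replace(update, next_hop=self.address, label=self._issued[key])
```

A packet arriving with an issued label is looked up in `swap_table` to recover the original next hop and label, which is the mapping of received and issued labels that the ASBR has to maintain.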

On the 7750 SR, this form of inter-AS VPRNs does not require instances of the VPRN to be created on the ASBR, as in Option-A. As a result, there is less management overhead. This is also the most common form of Inter-AS VPRNs used between different service providers as all routes advertised between autonomous systems can be controlled by route policies on the ASBRs by using the following command.

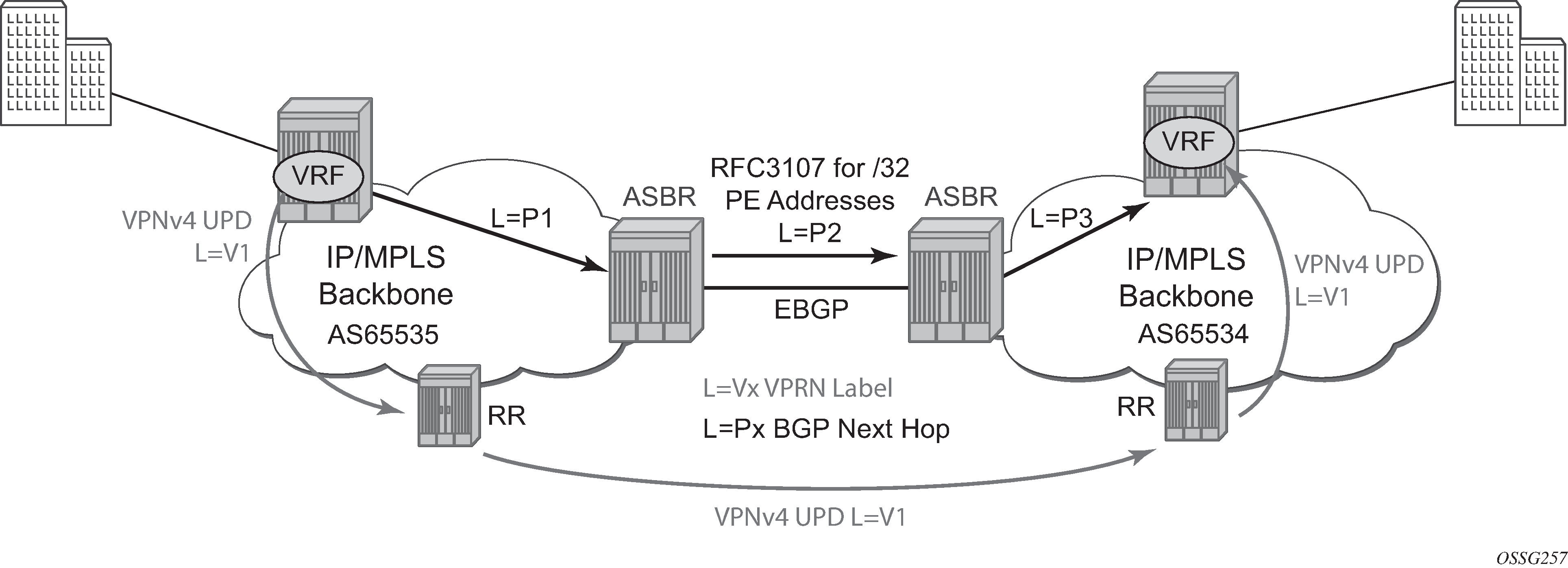

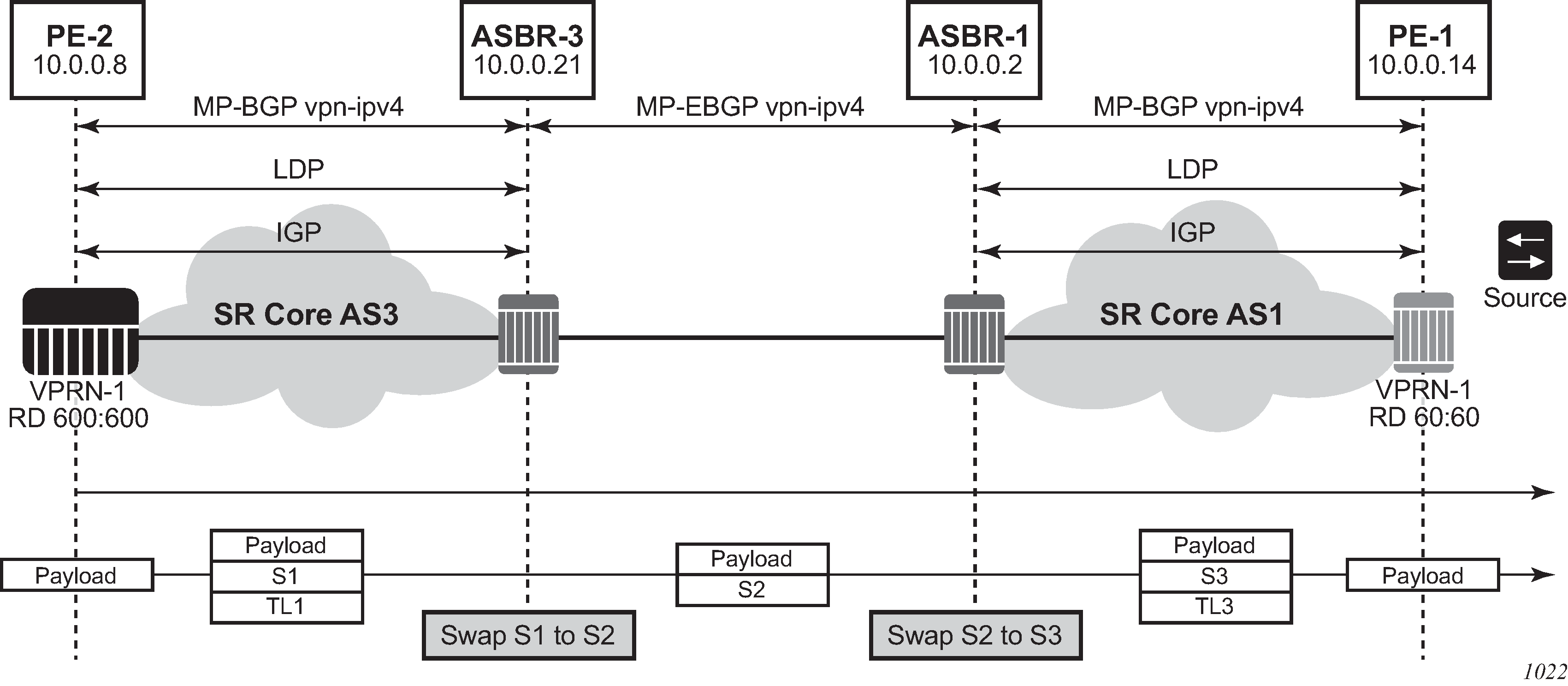

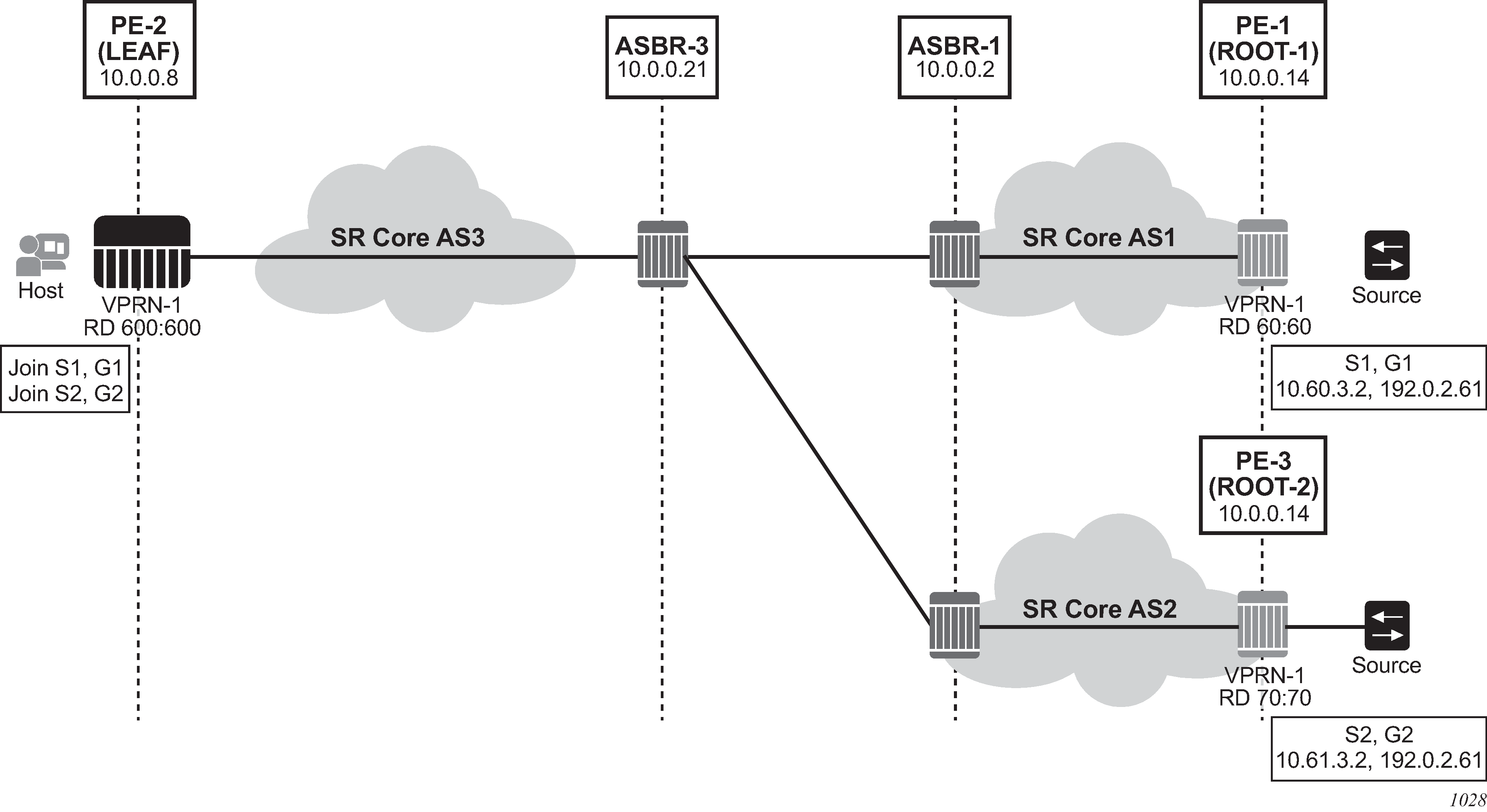

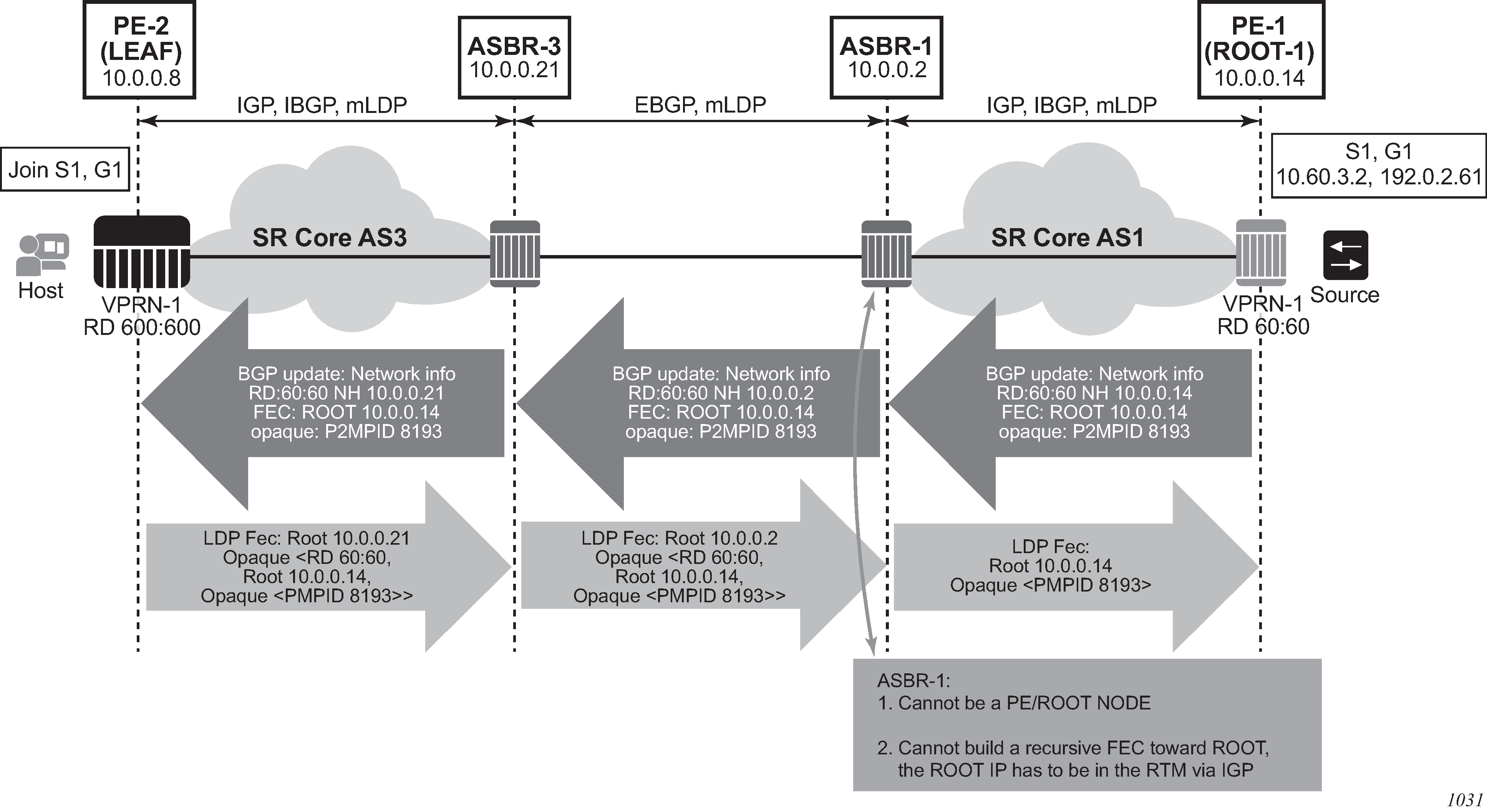

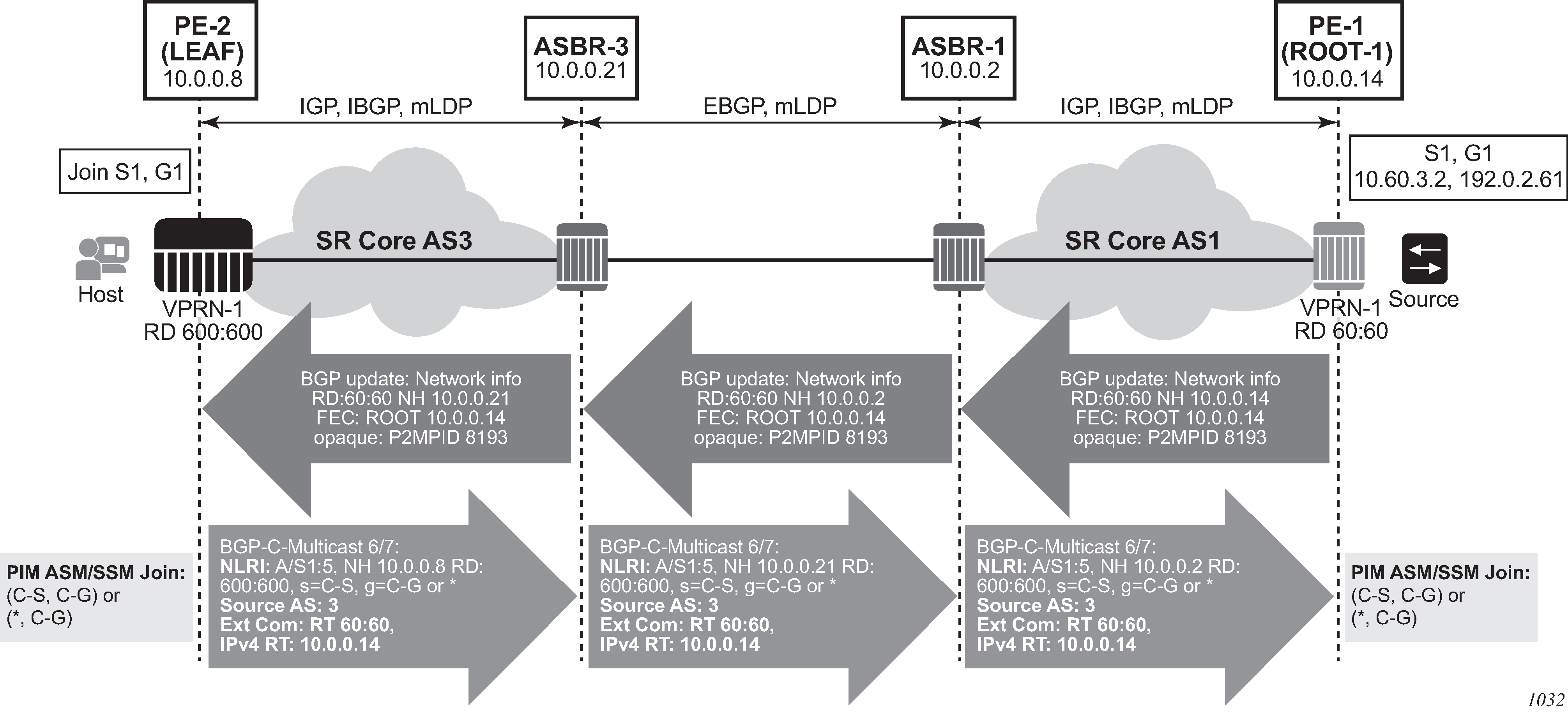

configure router bgp next-hop-resolution labeled-routes transport-tunnel

The third option, referred to as Option-C (Option-C example), allows for a higher scale of VPRNs across AS boundaries but also expands the trust model between ASNs. As a result, this model is typically used within a single company that may have multiple ASNs for various reasons. This model differs from Option-B in that, in Option-B, all direct knowledge of the remote AS is contained in and limited to the ASBR. As a result, in Option-B the ASBR performs all necessary mapping functions, and the PE routers do not need to perform any functions beyond those required in a non-inter-AS VPRN.

With Option-C, knowledge from the remote AS is distributed throughout the local AS. This distribution allows for higher scalability but also requires all PEs and ASBRs involved in the Inter-AS VPRNs to participate in the exchange of inter-AS routing information.

In Option-C, the ASBRs distribute reachability information only for the system IP addresses of remote PEs. This is done between the ASBRs by exchanging MP-EBGP labeled routes, using RFC 8277, Using BGP to Bind MPLS Labels to Address Prefixes. Either an RSVP-TE or an LDP LSP can be selected to resolve the next hop for multi-hop EBGP peering by using the following command.

configure router bgp next-hop-resolution labeled-routes transport-tunnel

Distribution of VPRN routing information is handled either by direct MP-BGP peering between PEs in the different ASNs or, more likely, by one or more route reflectors in the ASN.

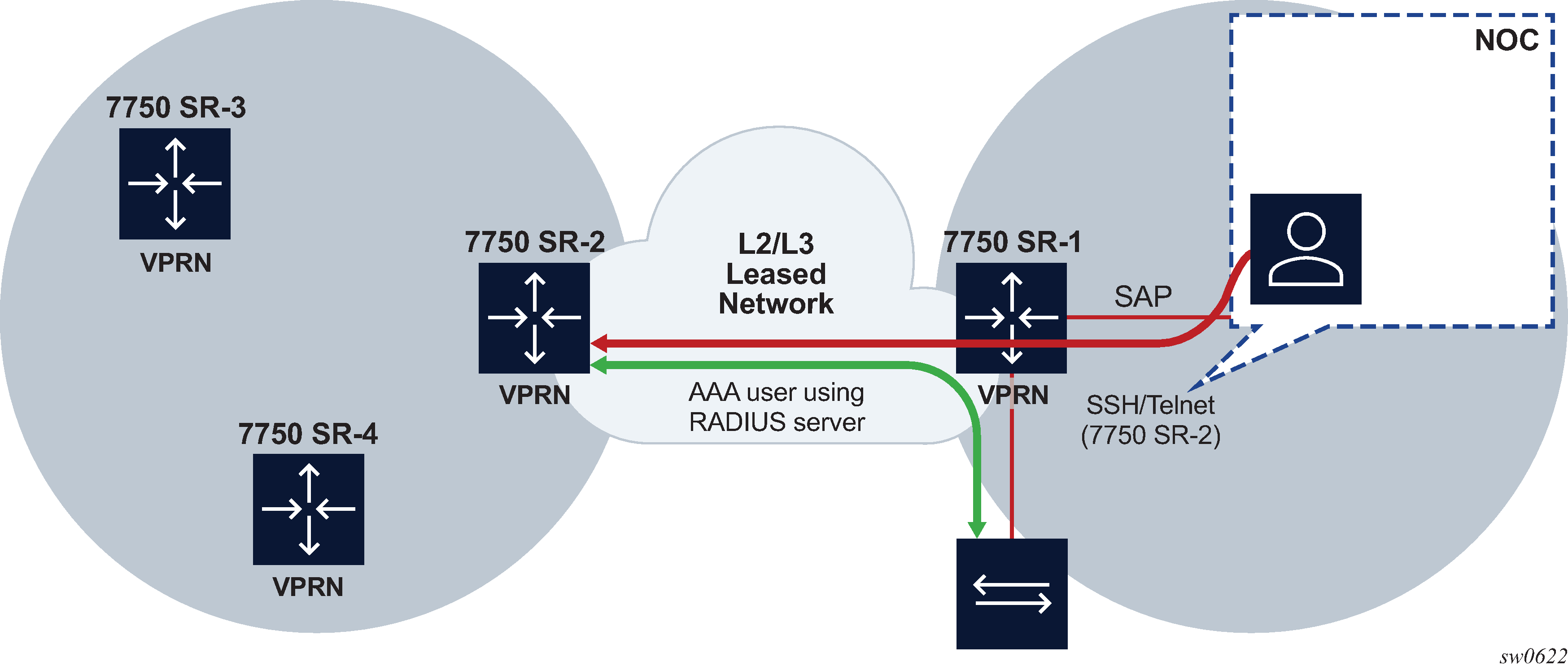

VPRN label security at inter-AS boundary

This feature allows the user to enforce security at an inter-AS boundary and to configure a router, acting in a PE role or in an ASBR role, or both, to accept packets of VPRN prefixes only from direct EBGP neighbors to which it advertised a VPRN label.

Feature configuration

To use this feature, network IP interfaces that can have the feature enabled must first be identified. Participating interfaces are identified as having the untrusted state. The router supports a maximum of 15 network interfaces that can participate in this feature.

Use the following command to configure the state of untrusted for a network IP interface.

configure router interface untrusted

Normally, the user applies the untrusted command to an inter-AS interface and PIP keeps track of the untrusted status of each interface. In the datapath, an inter-AS interface that is flagged by PIP causes the default forwarding to be set to the value of the default-forwarding option (forward or drop).

For backward compatibility, default-forwarding on the interface is set to the forward command option. This means that labeled packets are checked in the normal way against the table of programmed ILMs to decide whether they should be dropped or forwarded in a GRT, a VRF, or a Layer 2 service context.

If the user sets the default-forwarding argument to the drop command option, all labeled packets received on that interface are dropped. For details, see Datapath forwarding behavior.

This feature sets the default behavior for an untrusted interface in the data path and for all ILMs. For the data path to make an exception to this default forwarding behavior for VPRN ILMs, BGP must flag those ILMs to the data path.

Use the following command to enable the exceptional ILM forwarding behavior, on a per-VPN-family basis.

configure router bgp neighbor-trust vpn-ipv4

configure router bgp neighbor-trust vpn-ipv6

At a high level, BGP tracks each direct EBGP neighbor that is reached over an untrusted interface and to which it sent a VPRN prefix label. For each of those VPRN prefixes, BGP programs a bit map in the ILM that indicates, on a per-untrusted interface basis, whether the matching packets must be forwarded or dropped. For details, see CPM behavior.

CPM behavior

This feature affects PIP behavior for management of network IP interfaces and in BGP for the resolution of BGP VPN-IPv4 and VPN-IPv6 prefixes.

The following are characteristics of CPM behavior related to PIP and the VPRN label security at inter-AS boundary feature:

PIP manages the status of an untrusted interface based on the user configuration on the interface, as described in Feature configuration. It programs the interface record in the data path using a 4-bit untrusted interface identification number. A trusted interface has no untrusted record.

BGP determines the status of trusted or untrusted of an EBGP neighbor by checking the untrusted record provided by PIP for the index of the interface used by the EBGP session to the neighbor.

BGP only tracks the status of trusted or untrusted for directly connected EBGP neighbors. The neighbor address and the local address must be on the same local subnet.

BGP includes the neighbor status of trusted or untrusted in the tribe criteria. For example, if a group consists of two untrusted EBGP neighbors and one trusted EBGP neighbor and all three neighbors have the same local-AS, neighbor-AS, and export policy, then the result is two different tribes.

As a result, if the interface status changes from trusted to untrusted or untrusted to trusted, the EBGP neighbors on that interface bounce.

When the feature is enabled for a specified VPN family and BGP advertises a label for one or more resolved VPN prefixes to a group of trusted and untrusted EBGP neighbors, it creates a 16-bit map in the ILM record. In this map, it sets the bit position corresponding to the identification number of each untrusted interface used by an EBGP session to a neighbor to which it sent the label.

A bit in the ILM record bit-map is referred to as the untrusted interface forwarding bit. The bit position corresponding to the identification number of any other untrusted interface is left clear.

For details on the data path of the ILM bit-map record, see Datapath forwarding behavior.
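The ILM bit map described above can be illustrated with a short sketch. It is a simplified model under several assumptions and does not replace the rules in Datapath forwarding behavior: identification numbers are assumed to run from 1 to 15 (a 4-bit value with zero unused, which matches the stated limit of 15 interfaces), an ILM that BGP has not flagged is represented by `None`, and both function names are hypothetical.

```python
from typing import Iterable, Optional

MAX_UNTRUSTED_ID = 15  # assumed range 1..15 for the 4-bit identification number


def build_ilm_bitmap(untrusted_ids: Iterable[int]) -> int:
    """Set one bit per untrusted interface whose EBGP neighbor was sent the label."""
    bitmap = 0
    for interface_id in untrusted_ids:
        if not 1 <= interface_id <= MAX_UNTRUSTED_ID:
            raise ValueError(f"invalid untrusted interface id: {interface_id}")
        bitmap |= 1 << interface_id
    return bitmap


def forward_labeled_packet(
    ingress_untrusted_id: Optional[int],
    ilm_bitmap: Optional[int],
    default_forwarding: str = "forward",
) -> bool:
    """Assumed decision: True to process the packet normally, False to drop it."""
    if ingress_untrusted_id is None:
        return True  # trusted interface: normal ILM processing
    if ilm_bitmap is not None:
        # ILM flagged by BGP: the untrusted interface forwarding bit decides.
        return bool(ilm_bitmap & (1 << ingress_untrusted_id))
    # ILM not flagged by BGP: the interface default-forwarding option applies.
    return default_forwarding == "forward"
```

For example, if the label was advertised to neighbors on untrusted interfaces 1 and 3, the bit map is `0b1010`; a packet with that label arriving on untrusted interface 2 is dropped in this model, even when default-forwarding is set to forward.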