Shared-queue QoS policies

Overview

Shared-queue QoS policies can be implemented to reduce hardware queue consumption on the router. They are especially useful when VPLS, IES, and VPRN services are scaled to very high numbers. Instead of allocating multiple hardware queues for each unicast queue defined in a SAP ingress QoS policy, a SAP with shared queuing enabled allocates only one hardware queue for each SAP ingress QoS policy unicast queue.

However, as a trade-off, the total ingress throughput of the node is reduced because ingress packets serviced by a shared-queuing SAP are recirculated for further processing. Shared queuing can also add latency. Network planners should consider these restrictions when scaling services on the router.

Multipoint shared queuing

Multipoint shared queuing is supported to minimize the number of multipoint queues created for ingress VPLS, IES, or VPRN SAPs or ingress subscriber SLA profiles. Normally, ingress multipoint packets are handled by multipoint queues created for each SAP or subscriber SLA profile instance. In some instances, the number of SAPs or SLA profile instances is large enough that the in-use multipoint queues amount to many thousands of queues on an ingress forwarding plane. If multipoint shared queuing is enabled for the SAPs or SLA profile instances on the forwarding plane, the multipoint queues are not created. Instead, the ingress multipoint packets are handled by the unicast queue mapped to the forwarding class of the multipoint packet.

Functionally, multipoint shared queuing is a superset of shared queuing. With shared queuing on a SAP or SLA profile instance, only unicast packets are processed twice: one time for the initial service-level queuing and a second time for switch fabric destination queuing. Shared queuing does not affect multipoint packet handling; multipoint packets are handled the same way in normal (service) queuing mode and in shared queuing mode. When multipoint shared queuing is enabled, shared queuing for unicast packets is automatically enabled.

Ingress queuing modes of operation

Three modes of ingress SAP queuing are supported for multipoint services (IES, VPLS, and VPRN): service, shared, and multipoint shared. The same ingress queuing options are available for IES and VPLS subscriber SLA profile instance queuing.

Ingress service queuing

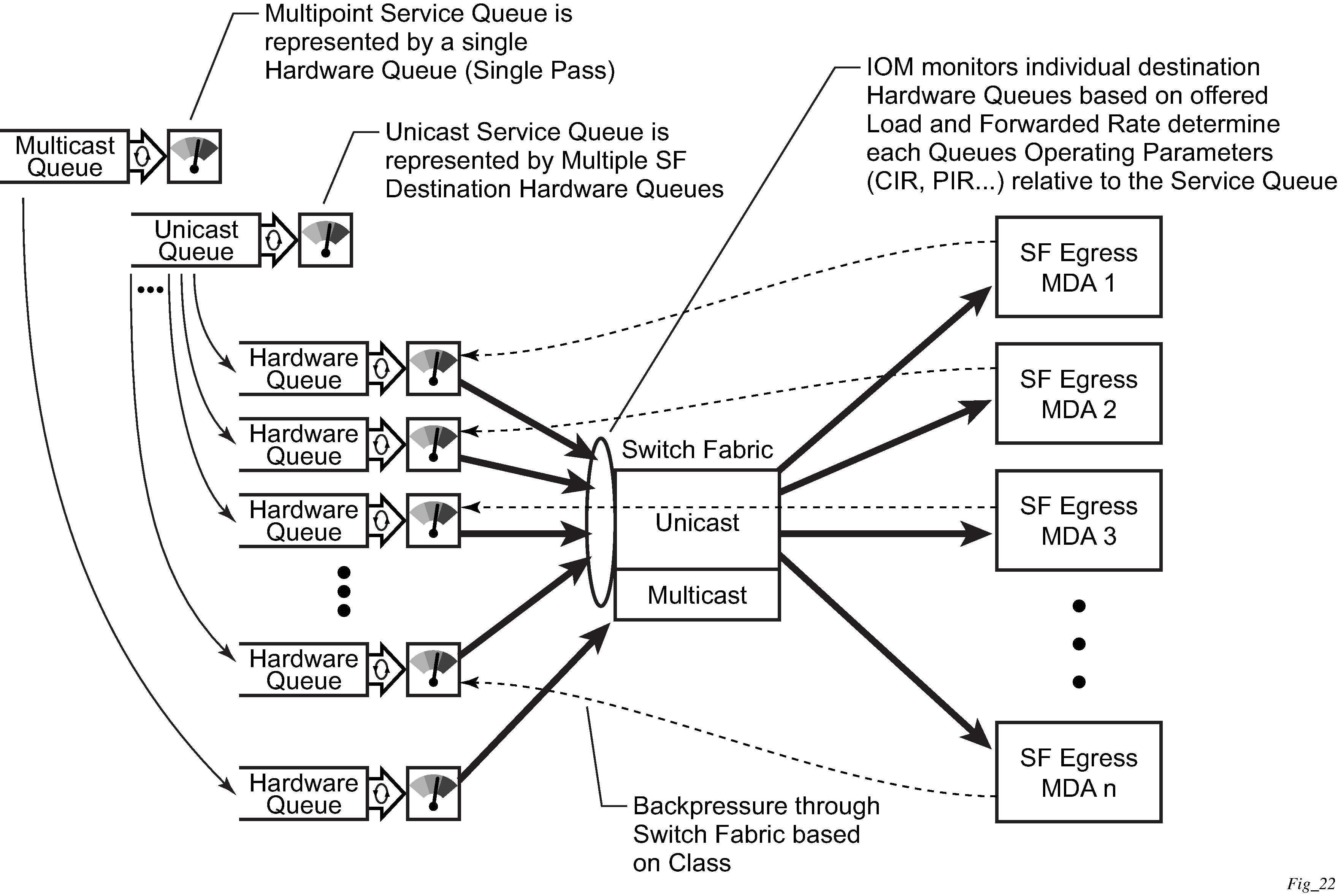

Normal or service queuing is the default mode of operation for SAP ingress queuing. Service queuing preserves ingress forwarding bandwidth by allowing a service queue defined in an ingress SAP QoS policy to be represented by a group of hardware queues. A hardware queue is created for each switch fabric destination to which the logical service queue must forward packets. For a VPLS SAP with two ingress unicast service queues, two hardware queues are used for each destination forwarding engine the VPLS SAP is forwarding to. If three switch fabric destinations are involved, six hardware queues are allocated (2 unicast service queues multiplied by 3 destination forwarding complexes). The figure "Unicast service queue mapping to multiple destination-based hardware queues" shows unicast hardware queue expansion. Service multipoint queues in the ingress SAP QoS policy are not expanded to multiple hardware queues; each service multipoint queue defined on the SAP equates to a single hardware queue to the switch fabric.
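The queue arithmetic in this paragraph can be sketched as a small calculation. The function name and parameters below are illustrative only and are not part of any router API.

```python
def service_queuing_hw_queues(unicast_queues: int,
                              multipoint_queues: int,
                              destinations: int) -> int:
    """Hardware queues used by one SAP under normal (service) queuing."""
    # Each unicast service queue expands to one hardware queue per switch
    # fabric destination; multipoint service queues are not expanded.
    return unicast_queues * destinations + multipoint_queues


# The VPLS SAP from the text: 2 unicast service queues, 3 destinations.
print(service_queuing_hw_queues(unicast_queues=2,
                                multipoint_queues=0,
                                destinations=3))  # 6
```

Adding one multipoint service queue to the same SAP raises the total by one, not by three, because multipoint queues map to a single hardware queue.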

When multiple hardware queues represent a single logical service queue, the system automatically monitors the offered load and forwarding rate of each hardware queue. Based on the monitored state of each hardware queue, the system imposes an individual CIR and PIR rate for each queue that provides an overall aggregate CIR and PIR reflective of what is provisioned on the service queue.
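The text does not describe how the system derives the per-queue rates, so the following is only a sketch of the constraint it states: the individual rates must add up to the rate provisioned on the service queue. The proportional-to-offered-load policy and the name `split_rate` are assumptions made for illustration, not the router's actual algorithm.

```python
def split_rate(provisioned: float, offered_loads: list[float]) -> list[float]:
    """Split a provisioned rate across hardware queues.

    Hypothetical policy: share the rate in proportion to each queue's
    offered load, falling back to an equal split when nothing is offered.
    """
    if not offered_loads:
        return []
    total = sum(offered_loads)
    if total == 0:
        return [provisioned / len(offered_loads)] * len(offered_loads)
    return [provisioned * load / total for load in offered_loads]


# One service queue provisioned with a PIR of 100 units, represented by
# three hardware queues with different offered loads.
print(split_rate(100.0, [30.0, 10.0, 0.0]))  # [75.0, 25.0, 0.0]
```

Whatever policy is actually used, the property that matters is that the per-queue rates sum to the aggregate provisioned on the service queue.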

Ingress shared queuing

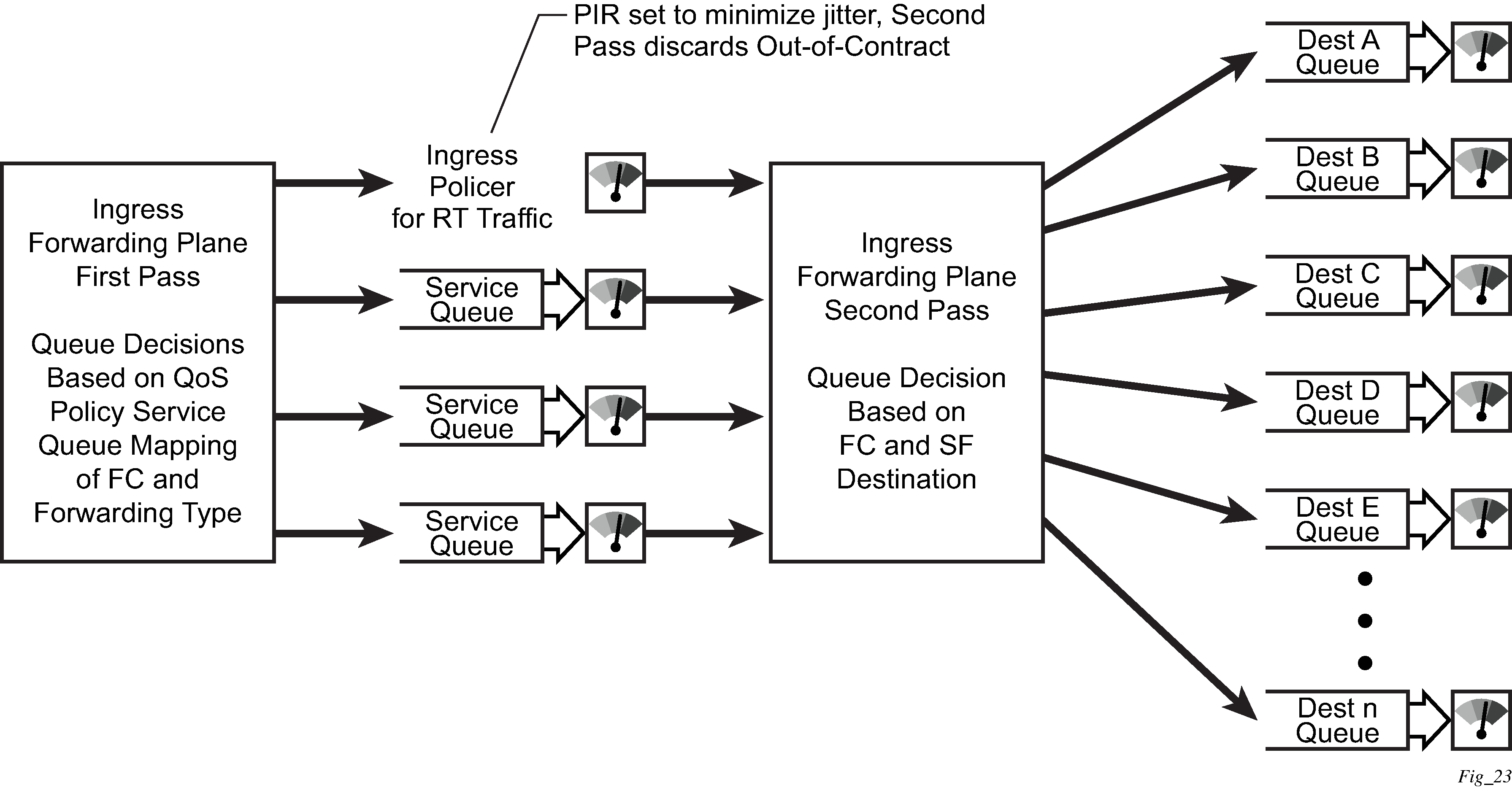

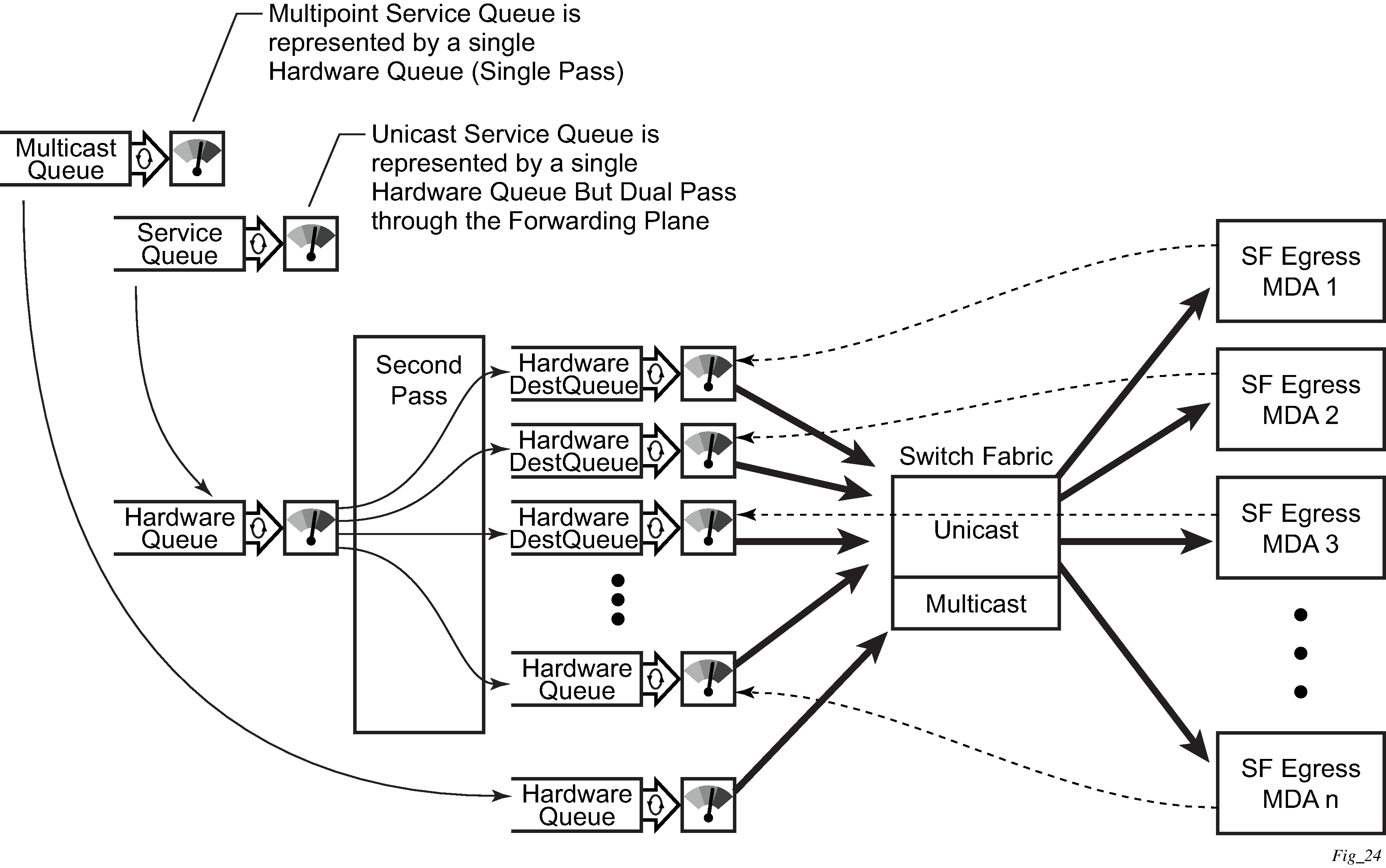

Shared queuing is only supported on FP1- and FP2-based hardware. To avoid the hardware queue expansion issues associated with normal service-based queuing, the system allows an ingress logical service queue to map to a single hardware queue when shared queuing is enabled. Shared queuing uses two passes through the ingress forwarding plane to separate ingress per-service queuing from the destination switch fabric queuing. In the case of shared queuing, ingress unicast service queues are created one-for-one relative to hardware queues. Each hardware queue representing a service queue is mapped to a special destination in the traffic manager that "forwards" the packet back to the ingress forwarding plane, allowing a second pass through the traffic manager. In the second pass, the packet is placed into a "shared" queue for the destination forwarding plane. The shared queues are used by all services configured for shared queuing.

When the first SAP or SLA profile instance is configured for shared queuing on an ingress forwarding plane, the system allocates eight hardware queues per available destination forwarding plane, one queue per forwarding class. Twenty-four hardware queues are also allocated for multipoint shared traffic; these are discussed in the following section. The shared queue parameters that define the relative operation of the forwarding class queues are derived from the shared queue policy defined in the QoS CLI node. The figure "Unicast service queuing with shared queuing enabled" shows shared unicast queuing. SAP or SLA profile instance multipoint queuing is not affected by enabling shared queuing.

Multipoint queues are still created as defined in the ingress SAP QoS policy and ingress multipoint packets only traverse the ingress forwarding plane a single time, as shown in the figure "Multipoint queue behavior with shared queuing enabled".
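As a rough illustration of the allocation described above, the size of the shared queue pool on one ingress forwarding plane can be estimated as follows. The sketch assumes the 24 multipoint shared queues are allocated once per ingress forwarding plane rather than per destination, which is how the paragraph reads; the names are illustrative.

```python
FORWARDING_CLASSES = 8         # one shared unicast queue per forwarding class
MULTIPOINT_SHARED_QUEUES = 24  # assumed to be allocated once, not per destination


def shared_pool_hw_queues(destinations: int) -> int:
    """Shared hardware queues allocated when shared queuing is first enabled."""
    return FORWARDING_CLASSES * destinations + MULTIPOINT_SHARED_QUEUES


# Three available destination forwarding planes.
print(shared_pool_hw_queues(3))  # 48
```

This pool is a one-time cost per ingress forwarding plane; it does not grow as more SAPs or SLA profile instances are configured for shared queuing.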

Enabling shared queuing may affect ingress performance because of double packet processing through the service and shared queues.

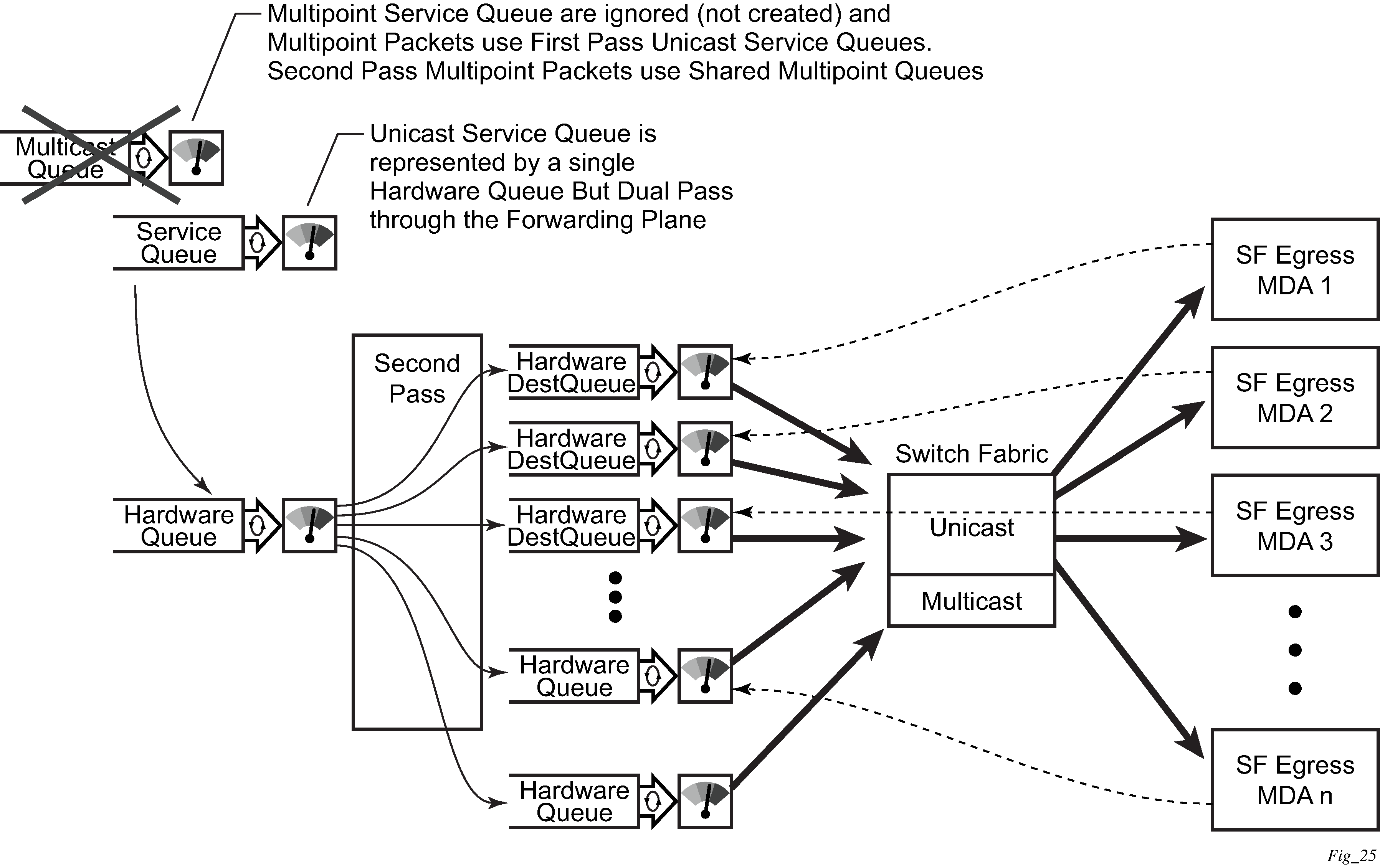

Ingress multipoint shared queuing

Shared queuing is only supported on FP1- and FP2-based hardware. Ingress multipoint shared queuing is a variation of the unicast shared queuing defined in Ingress shared queuing. With ingress multipoint shared queuing, ingress unicast service queues are mapped one-for-one with hardware queues and unicast packets traverse the ingress forwarding plane twice. In addition, the multipoint queues defined in the ingress SAP QoS policy are not created. Instead, multipoint packets (broadcast, multicast, and unknown unicast-destined) are subject to the same dual-pass ingress forwarding plane processing as unicast packets. In the first pass, the forwarding plane uses the unicast queue mappings for each forwarding plane. The second pass uses the multipoint shared queues to forward the packet to the switch fabric for special replication to all egress forwarding planes that need to process the packet.

The benefit of defining multipoint shared queuing is the savings of the multipoint queues per service. By using the unicast queues in the first pass, then the aggregate shared queues in the second pass, per-service multipoint queues are not required. The predominant scenario where multipoint shared queuing may be required is with subscriber-managed QoS environments using a subscriber-per-SAP model. Usually, ingress multipoint traffic is minimal per subscriber and the extra multipoint queues for each subscriber reduce the overall subscriber density on the ingress forwarding plane. Multipoint shared queuing eliminates the multipoint queues, sparing hardware queues for better subscriber density. The figure "Multipoint shared queuing using first pass unicast queues" shows multipoint shared queuing.

One restriction of enabling multipoint shared queuing is that multipoint packets are no longer managed per service (although the unicast forwarding queues may provide limited benefit in this area). Multipoint packets in a multipoint service (VPLS, IES, and VPRN) use significant resources in the system, consuming ingress forwarding plane multicast bandwidth and egress replication bandwidth. Usually, the per-service unicast forwarding queues are not rate limited to a degree that allows adequate management of multipoint packets traversing them when multipoint shared queuing is enabled. It is possible to minimize the amount of aggregate multipoint bandwidth by setting restrictions on the multipoint queue parameters in the shared queue policy in the QoS node. Aggregate multipoint traffic can be managed per forwarding class for each of the three forwarding types: broadcast, multicast, and unknown unicast (broadcast and unknown unicast are only used by VPLS).
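The density benefit can be made concrete by comparing the hardware queues that a single SAP consumes in each of the three ingress queuing modes. The mode strings and the example queue counts below are hypothetical values chosen for illustration; the shared queue pool itself is a separate, one-time allocation and is not counted here.

```python
def per_sap_hw_queues(mode: str, unicast_queues: int,
                      multipoint_queues: int, destinations: int) -> int:
    """Hardware queues one SAP consumes in a given ingress queuing mode."""
    if mode == "service":
        # Unicast queues expand per destination; multipoint queues do not.
        return unicast_queues * destinations + multipoint_queues
    if mode == "shared":
        # Unicast queues map one-for-one; multipoint queues still exist.
        return unicast_queues + multipoint_queues
    if mode == "multipoint-shared":
        # Multipoint queues are not created at all.
        return unicast_queues
    raise ValueError(f"unknown queuing mode: {mode}")


# Hypothetical SAP: 2 unicast queues, 3 multipoint queues, 3 destinations.
for mode in ("service", "shared", "multipoint-shared"):
    print(mode, per_sap_hw_queues(mode, 2, 3, 3))
# service 9
# shared 5
# multipoint-shared 2
```

Multiplying the per-SAP figure by the number of subscribers shows why removing even a few multipoint queues per SAP matters in a subscriber-per-SAP model.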

Another restriction for multipoint shared queuing is that multipoint traffic now consumes double the ingress forwarding plane bandwidth because of dual-pass ingress processing.
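The bandwidth cost of each mode follows from the number of passes each traffic type makes through the ingress forwarding plane. The sketch below simply multiplies offered traffic by the pass count described in this section; it is a simplification that ignores any other overhead, and the traffic figures are made-up inputs.

```python
# Passes through the ingress forwarding plane, per queuing mode and traffic type.
PASSES = {
    "service": {"unicast": 1, "multipoint": 1},
    "shared": {"unicast": 2, "multipoint": 1},
    "multipoint-shared": {"unicast": 2, "multipoint": 2},
}


def ingress_plane_load(mode: str, unicast: float, multipoint: float) -> float:
    """Forwarding-plane bandwidth consumed by the offered traffic (same units)."""
    passes = PASSES[mode]
    return unicast * passes["unicast"] + multipoint * passes["multipoint"]


# Hypothetical offered load: 8 units of unicast and 1 unit of multipoint.
for mode in PASSES:
    print(mode, ingress_plane_load(mode, 8.0, 1.0))
# service 9.0
# shared 17.0
# multipoint-shared 18.0
```

When multipoint traffic is small relative to unicast, moving from shared to multipoint shared queuing adds little extra load, which matches the subscriber scenario described earlier.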

Multipoint shared queuing cannot be enabled on the following services:

- Epipe
- Ipipe
- Routed CO

For information about the tasks and commands necessary to access the CLI and to configure and maintain the router, see the Entering CLI Commands chapter in the 7450 ESS, 7750 SR, 7950 XRS, and VSR Classic CLI Command Reference Guide.

Basic configurations

The default shared queue QoS policy conforms to the following:

- There is only one default shared queue policy in the system.
- The default shared queue policy has fixed forwarding classes, queues, and FC-queue mapping, which cannot be modified, added, or deleted.
- The only configurable entities in the default shared queue policy are the queue attributes, queue priority, and the description string. The queue priority for a shared queue can be changed to expedite, best-effort, or auto-expedite.

Modifying the default shared-queue policy

The only configurable entities in the default shared queue policy are the queue attributes, queue priority, and the description string. Changes are applied immediately to all services where this policy is applied. Use the following CLI syntax to modify a shared-queue policy:

    config>qos#
        shared-queue name
            description description-string
            queue queue-id [queue-type] [multipoint]
                cbs percent
                drop-tail low percent-reduction-from-mbs percent
                mbs percent
                rate percent [cir percent]

The following displays a shared-queue policy configuration example:

    A:ALA-48>config>qos>shared-queue# info
    ----------------------------------------------
            description "test1"
            queue 1 create
                cbs 2
                drop-tail
                    low
                        percent-reduction-from-mbs 20
                    exit
                exit
            exit
    ----------------------------------------------
    A:ALA-48>config>qos>shared-queue#

Applying shared-queue policies

The default shared queue policy is applied at the SAP level just as sap-ingress and sap-egress QoS policies are specified. If the shared-queuing keyword is not specified in the qos policy-id command, then the SAP is assumed to use single-pass queuing.

Apply shared-queue policies to the following entities:

Epipe services

    config>service# epipe service-id [customer customer-id]
        sap sap-id
            ingress
                qos policy-id [shared-queuing]

The following output displays an Epipe service configuration with SAP ingress policy 100 applied to the SAP with shared-queuing enabled.

    A:SR>config>service# info
    ----------------------------------------------
            epipe 6 customer 6 vpn 6 create
                description "Distributed Epipe to west coast"
                sap 1/1/10:0 create
                    ingress
                        qos 100 shared-queuing
                    exit
                exit
                no shutdown
            exit
    ----------------------------------------------
    A:SR>config>service#

IES services

    config>service# ies service-id
        interface interface-name
            sap sap-id
                ingress
                    qos policy-id [shared-queuing | multipoint-shared]

The following output displays an IES service configuration with SAP ingress policy 100 applied to the SAP with multipoint shared queuing enabled.

    A:SR>config>service# info
    ----------------------------------------------
            ies 88 customer 8 vpn 88 create
                interface "Sector A" create
                    sap 1/1/1.2.2 create
                        ingress
                            qos 100 multipoint-shared
                        exit
                    exit
                exit
                no shutdown
            exit
    ----------------------------------------------
    A:SR>config>service#

VPLS services

    config>service# vpls service-id [customer customer-id]
        sap sap-id
            ingress
                qos policy-id [shared-queuing | multipoint-shared]

The following output displays a VPLS service configuration with SAP ingress policy 100 applied to the SAP with multipoint shared queuing enabled.

    A:SR>config>service# info
    ----------------------------------------------
            vpls 700 customer 7 vpn 700 create
                description "test"
                sap 1/1/9:0 create
                    ingress
                        qos 100 multipoint-shared
                    exit
                exit
            exit
    ----------------------------------------------
    A:SR>config>service#

VPRN services

    config>service# vprn service-id [customer customer-id]
        interface ip-int-name
            sap sap-id
                ingress
                    qos policy-id [shared-queuing | multipoint-shared]

The following output displays a VPRN service configuration. The default SAP ingress policy was not modified, but multipoint shared queuing was enabled.

    A:SR7>config>service# info
    ----------------------------------------------
            vprn 1 customer 1 create
                interface "to-ce1" create
                    address 192.168.0.0/24
                    sap 1/1/10:1 create
                        ingress
                            qos 1 multipoint-shared
                        exit
                    exit
                exit
                no shutdown
            exit
    ----------------------------------------------
    A:SR7>config>service#
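The rules for the optional keyword on the qos policy-id command can be summarized in a short lookup. This is an illustrative model of the behavior described in this document (no keyword means single-pass service queuing, and multipoint shared queuing is not available on Epipe, Ipipe, or Routed CO); the function name and the lowercase service labels are assumptions, not CLI elements.

```python
# Queuing mode selected by the optional keyword on "qos policy-id".
MODE_BY_KEYWORD = {
    None: "service",  # no keyword: single-pass (service) queuing
    "shared-queuing": "shared",
    "multipoint-shared": "multipoint-shared",
}

# Services on which multipoint shared queuing cannot be enabled.
NO_MULTIPOINT_SHARED = {"epipe", "ipipe", "routed co"}


def queuing_mode(service_type, keyword=None):
    """Return the ingress queuing mode, or raise ValueError if not allowed."""
    if keyword not in MODE_BY_KEYWORD:
        raise ValueError(f"unknown keyword: {keyword}")
    if keyword == "multipoint-shared" and service_type.lower() in NO_MULTIPOINT_SHARED:
        raise ValueError(f"multipoint-shared is not supported on {service_type}")
    return MODE_BY_KEYWORD[keyword]


print(queuing_mode("vpls"))                       # service
print(queuing_mode("epipe", "shared-queuing"))    # shared
print(queuing_mode("vprn", "multipoint-shared"))  # multipoint-shared
```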

Default shared queue policy values

The shared queue policies listed in Default shared queue policies are created by the system and cannot be deleted.

| Policy-ID | Description |
|---|---|
| default | Default Shared Queue Policy |
| policer-output-queues | Default Policer Output Shared Queue Policy |
| egress-pbr-ingress-queues | Egress PBR Ingress Shared Queue Policy |

Creating additional shared queue policies is not permitted.

The following output displays the default configuration:

    *A:ian1>config>qos>shared-queue# info detail
    ----------------------------------------------
            description "Default Shared Queue Policy"
            queue 1 auto-expedite create
                rate 100 cir 0 fir 0
                mbs 50
                cbs 1
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 2 auto-expedite create
                rate 100 cir 25 fir 0
                mbs 50
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 3 auto-expedite create
                rate 100 cir 25 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 4 auto-expedite create
                rate 100 cir 25 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 5 auto-expedite create
                rate 100 cir 100 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 6 auto-expedite create
                rate 100 cir 100 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 7 auto-expedite create
                rate 100 cir 10 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 8 auto-expedite create
                rate 100 cir 10 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 9 auto-expedite multipoint create
                rate 100 cir 0 fir 0
                mbs 50
                cbs 1
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 10 auto-expedite multipoint create
                rate 100 cir 25 fir 0
                mbs 50
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 11 auto-expedite multipoint create
                rate 100 cir 25 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 12 auto-expedite multipoint create
                rate 100 cir 25 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 13 auto-expedite multipoint create
                rate 100 cir 100 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 14 auto-expedite multipoint create
                rate 100 cir 100 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 15 auto-expedite multipoint create
                rate 100 cir 10 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 16 auto-expedite multipoint create
                rate 100 cir 10 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 17 auto-expedite multipoint create
                rate 100 cir 0 fir 0
                mbs 50
                cbs 1
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 18 auto-expedite multipoint create
                rate 100 cir 25 fir 0
                mbs 50
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 19 auto-expedite multipoint create
                rate 100 cir 25 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 20 auto-expedite multipoint create
                rate 100 cir 25 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 21 auto-expedite multipoint create
                rate 100 cir 100 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 22 auto-expedite multipoint create
                rate 100 cir 100 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 23 auto-expedite multipoint create
                rate 100 cir 10 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 24 auto-expedite multipoint create
                rate 100 cir 10 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 25 auto-expedite multipoint create
                rate 100 cir 0 fir 0
                mbs 50
                cbs 1
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 26 auto-expedite multipoint create
                rate 100 cir 25 fir 0
                mbs 50
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 27 auto-expedite multipoint create
                rate 100 cir 25 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 28 auto-expedite multipoint create
                rate 100 cir 25 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 29 auto-expedite multipoint create
                rate 100 cir 100 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 30 auto-expedite multipoint create
                rate 100 cir 100 fir 0
                mbs 50
                cbs 10
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 31 auto-expedite multipoint create
                rate 100 cir 10 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            queue 32 auto-expedite multipoint create
                rate 100 cir 10 fir 0
                mbs 25
                cbs 3
                drop-tail
                    low
                        percent-reduction-from-mbs default
                    exit
                exit
            exit
            fc af create
                queue 3
                multicast-queue 11
                broadcast-queue 19
                unknown-queue 27
            exit
            fc be create
                queue 1
                multicast-queue 9
                broadcast-queue 17
                unknown-queue 25
            exit
            fc ef create
                queue 6
                multicast-queue 14
                broadcast-queue 22
                unknown-queue 30
            exit
            fc h1 create
                queue 7
                multicast-queue 15
                broadcast-queue 23
                unknown-queue 31
            exit
            fc h2 create
                queue 5
                multicast-queue 13
                broadcast-queue 21
                unknown-queue 29
            exit
            fc l1 create
                queue 4
                multicast-queue 12
                broadcast-queue 20
                unknown-queue 28
            exit
            fc l2 create
                queue 2
                multicast-queue 10
                broadcast-queue 18
                unknown-queue 26
            exit
            fc nc create
                queue 8
                multicast-queue 16
                broadcast-queue 24
                unknown-queue 32
            exit
    ----------------------------------------------
    *A:PE>config>qos>shared-queue#
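The forwarding class mappings in the default policy follow a regular pattern: each forwarding class has one unicast queue (1 to 8), and its multicast, broadcast, and unknown queues are that number plus 8, 16, and 24. The lookup below captures the mapping shown in the output above; the function and dictionary names are illustrative only.

```python
# Unicast queue for each forwarding class in the default shared queue policy.
UNICAST_QUEUE = {"be": 1, "l2": 2, "af": 3, "l1": 4,
                 "h2": 5, "ef": 6, "h1": 7, "nc": 8}

# Offset of each forwarding type's queue from the unicast queue number.
TYPE_OFFSET = {"unicast": 0, "multicast": 8, "broadcast": 16, "unknown": 24}


def shared_queue_id(fc: str, forwarding_type: str = "unicast") -> int:
    """Queue ID used by a forwarding class for a given forwarding type."""
    return UNICAST_QUEUE[fc] + TYPE_OFFSET[forwarding_type]


print(shared_queue_id("af", "broadcast"))  # 19
print(shared_queue_id("nc", "unknown"))    # 32
```

The three offsets account for the 24 multipoint queues (9 to 32) in the default policy, eight for each of the multicast, broadcast, and unknown forwarding types.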