Point-to-Point Protocol over Ethernet management

PPPoE

A Broadband Remote Access Server (BRAS) is a device that terminates PPPoE sessions. The Point-to-Point Protocol (PPP) is used for communications between a client and a server. Point-to-Point Protocol over Ethernet (PPPoE) is a network protocol used to encapsulate PPP frames inside Ethernet frames.

Ethernet networks are packet-based and unaware of connections or circuits. Using PPPoE, Nokia users can dial from one router to another over an Ethernet network, then establish a point-to-point connection and transport data packets over the connection. In this application, subscriber hosts can connect to the router using a PPPoE tunnel. Two commands are available under PPPoE to limit the number of PPPoE hosts: one sets a limit that is applied on each SAP of the group interface, and one sets the limit per group interface.

PPPoE is commonly used in subscriber DSL networks to provide point-to-point connectivity to subscriber clients running the PPP protocol encapsulated in Ethernet. IP packets are tunneled over PPP using Ethernet ports to provide the client’s software or RG the ability to dial into the provider network. Most DSL networks were built with the use of PPPoE clients as a natural upgrade path from using PPP over dial-up connections. Because PPP packets were retained, much of the client software was reusable, while enhancements allowed the client to use an Ethernet port in much the same way as it had used a serial port. The protocol is defined by RFC 2516, A Method for Transmitting PPP Over Ethernet (PPPoE).

PPPoE has two phases, the discovery phase and the session phase.

Discovery: The client identifies the available servers. To complete the phase the client and server must communicate a session-id. During the discovery phase all packets are delivered to the PPPoE control plane (CPM or MDA). The IOM identifies these packets by their Ethertype (0x8863).

PPPoE Active Discovery Initiation (PADI)

This broadcast packet is used by the client to search for an active server (Access Concentrator) providing access to a service.

PPPoE Active Discovery Offer (PADO)

If the access server can provide the service it should respond with a unicast PADO to signal the client it may request connectivity. Multiple servers may respond and the client may choose a server to connect to.

PPPoE Active Discovery Request (PADR)

After the client receives a PADO it uses this unicast packet to connect to a server and request service.

PPPoE Active Discovery Session-confirmation (PADS)

A server may respond to the client with this unicast packet to establish the session and provide the session-id. After the PADS is provided, the PPP phase begins.

Session

After the session ID is established connectivity is available for the duration of the session, using Ethertype 0x8864. Either client or server can terminate a session.

During the life of the session the packets may be uniquely identified by the client’s MAC address and session-id. The session can terminate either by PADT sent by the client or server or by an LCP Terminate-Request packet.
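The two phases can be told apart on the wire by Ethertype and PPPoE code. The following is a minimal sketch, assuming an untagged Ethernet frame (no VLAN header) and using the code values from RFC 2516; the function name `classify_pppoe` is hypothetical and not part of any product API.

```python
import struct

ETHERTYPE_DISCOVERY = 0x8863
ETHERTYPE_SESSION = 0x8864

# Discovery-stage code values as defined in RFC 2516.
DISCOVERY_CODES = {0x09: "PADI", 0x07: "PADO", 0x19: "PADR", 0x65: "PADS", 0xA7: "PADT"}


def classify_pppoe(frame):
    """Return (phase, packet_name, session_id), or None if the frame is not PPPoE.

    Assumes an untagged frame: 14-byte Ethernet header, then the 6-byte PPPoE header.
    """
    if len(frame) < 20:
        return None
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype not in (ETHERTYPE_DISCOVERY, ETHERTYPE_SESSION):
        return None
    # PPPoE header: version/type (1 byte), code (1 byte), session ID (2), length (2).
    ver_type, code, session_id, _length = struct.unpack("!BBHH", frame[14:20])
    if ver_type != 0x11:  # version 1, type 1
        return None
    if ethertype == ETHERTYPE_DISCOVERY:
        return ("discovery", DISCOVERY_CODES.get(code, "unknown"), session_id)
    return ("session", "data", session_id)


# A broadcast PADI from the client MAC used elsewhere in this section.
padi = (b"\xff" * 6 + bytes.fromhex("0000646b0107")
        + bytes.fromhex("8863") + bytes.fromhex("110900000000"))
print(classify_pppoe(padi))  # ('discovery', 'PADI', 0)
```

During the session phase, the returned session ID together with the source MAC address is what identifies the session.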

During session creation, the following occurs:

PADI (control packet upstream)

This packet is delivered to the control plane. The control plane checks the service tag for service name. In the case multiple nodes are in the same broadcast domain the service tag can be used to decide whether to respond to the client. A relay tag can also be present.

PADO (control packet downstream)

The packet is generated by the control plane as response to the PADI message. The packet is forwarded to the client using the unicast packet directed at the client’s MAC address. The node populates the AC-name tag and service tag. The packet sources the forwarding Ethernet MAC address of the node. If SRRP is used on the interface, it uses the gateway address as the source MAC. When in a backup state, the packet is not generated.

PADR (control packet upstream)

This packet is delivered to the control plane. The packet is destined for the node’s MAC address. The control plane then generates the PADS to create the session for this request.

PADS (control packet downstream)

The control plane prepares for the session creation and sends it to the client using the client’s MAC address. The session-id (16-bit value) is unique per client. The session-id is populated in the response. After a session-id is generated, the client uses it in all packets. When the server does not agree with the client’s populated service tags, the PADS can be used to send a service error tag with a zero session-id to indicate the failure.

PADT (control packet upstream/downstream)

The packet is used to terminate a session. It can be generated by either the control plane or the client. The session-id must be populated. The packet is a unicast packet.
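The service tag, AC-name tag, and relay tag mentioned above are carried as type-length-value fields in the discovery payload. The sketch below shows how such a payload could be encoded and walked, using tag type values from RFC 2516; `build_tag` and `parse_tags` are hypothetical helper names, and the service and AC names are made up for illustration.

```python
import struct

# Tag types from RFC 2516 (subset).
TAG_NAMES = {
    0x0000: "End-Of-List",
    0x0101: "Service-Name",
    0x0102: "AC-Name",
    0x0110: "Relay-Session-Id",
    0x0201: "Service-Name-Error",
}


def build_tag(tag_type, value=b""):
    """Encode one tag as TAG_TYPE (2 bytes), TAG_LENGTH (2 bytes), TAG_VALUE."""
    return struct.pack("!HH", tag_type, len(value)) + value


def parse_tags(payload):
    """Return a list of (tag_name, value) pairs from a discovery payload."""
    tags = []
    offset = 0
    while offset + 4 <= len(payload):
        tag_type, tag_len = struct.unpack("!HH", payload[offset:offset + 4])
        if tag_type == 0x0000:  # End-Of-List
            break
        value = payload[offset + 4:offset + 4 + tag_len]
        if len(value) < tag_len:
            raise ValueError("truncated tag")
        tags.append((TAG_NAMES.get(tag_type, hex(tag_type)), value))
        offset += 4 + tag_len
    return tags


# Example PADO payload: the requested service echoed back plus the AC name.
pado_payload = build_tag(0x0101, b"internet") + build_tag(0x0102, b"bras-1")
print(parse_tags(pado_payload))
```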

PPP session creation supports the LCP authentication phase.

During a session, the following forwarding actions occur:

Upstream, in the PPPoE before PPP phase, there is no anti-spoofing. All packets are sent to the CPM. During anti-spoof lookup with IP and MAC addressing, regular filtering, QoS and routing in context continue. All unicast packets are destined for the node’s MAC address. Only control packets (broadcast) are sent to the control plane. Keep-alive packets are handled by the CPM.

Downstream, packets are matched in the subscriber lookup table. The subscriber information provides queue and filter resources. The subscriber information also provides PPPoE information, such as the dest-mac-address and session-id, to build the packet sent to the client.

PPPoE-capable interfaces can be created in a subscriber interface in both IES and VPRN services (VPRN is supported on the 7750 SR only). Each SAP can support one or more PPPoE sessions depending on the configuration. A SAP can simultaneously have static hosts, DHCP leases and PPPoE sessions. See Limiting subscribers, hosts, and sessions for a detailed description of the configuration options to limit the number of PPPoE sessions per SAP, per group-interface, per SLA profile instance, or per subscriber.

RADIUS can be used for authentication. IP addresses can be provided by both RADIUS and the local IP pool, with the possibility of choosing the IP pool through RADIUS.

DHCP clients and PPPoE clients are allowed on a single SAP or group interface. If DHCP clients are not allowed, the operator should not enable lease-populate and similarly if PPPoE clients are not allowed, the operator should not enable the PPPoE node.

The DHCP lease-populate is for DHCP leases only. A similar command host-limit is made available under PPPoE for limits on the number of PPPoE hosts. The existing per sla-profile instance host limit is for combined DHCP and PPPoE hosts for that instance.

For authentication, local and RADIUS are supported.

RADIUS is supported through an existing policy. A username attribute has been added.

For PAP/CHAP, a local user database is supported and must be referenced from the interface configuration.

The host configuration can come directly from the local user database or from the RADIUS or DHCP server. A local host configuration is allowed through a local DHCP server with a local user database.

IP information can be obtained from the local user database, RADIUS, a DHCP server, or a local DHCP server.

If IP information is returned from a DHCP server, PPPoE options such as the DNS name are retrieved from the DHCP ACK and provided to the PPPoE client. An open authentication option is maintained for compatibility with existing DHCP-based infrastructure.

The DHCP server can be configured to run on a loopback address with a relay defined in the subscriber or group interfaces. The DHCP proxy functionality that is provided by the DHCP relay (getting information from RADIUS, lease-split, option 82 rewriting) cannot be used for requests for PPPoE clients.

PPPoE authentication and authorization

General flow

When a new PPPoE session is set up, the authentication policy assigned to the group interface is examined to determine how the session should be authenticated.

If no authentication policy is assigned to the group interface or the pppoe-access-method is set to none, the local user database assigned to the PPPoE node under the group interface is queried either during the PADI phase or during the LCP authentication phase, depending on whether the match-list of the local user database contains the requirement to match on username. If the match-list does not contain the username option, PADI authentication is performed and can specify an authentication policy in the local user database host for an extra RADIUS PAP-CHAP authentication point.

If an authentication policy is assigned and the pppoe-access-method is set to PADI, the RADIUS server is queried for authenticating the session based on the information available when the PADI packet is received (any PPP username and password are not known here). When it is set to PAP-CHAP, the RADIUS server is queried during the LCP authentication phase and the PPP username and password are used for authentication instead of the username and password configured in the authentication policy.

If this authentication is successful, the data returned by RADIUS or the local user database is examined. If no IP address was returned, the DHCP server is now queried for an IP address and possibly other information, such as other DHCP options and ESM strings.

The final step consists of complementing the available information with configured default values (ESM data), after which the host is created if sufficient information is available to instantiate it in subscriber management (at least subscriber ID, subscriber profile, SLA profile, and IP address).

The information that needs to be gathered is divided into three groups: subscriber ID, ESM strings, and IP data. When one of the data sources has offered data for one of these groups, the other sources are no longer allowed to overwrite this data (except for the default ESM data). For example, if RADIUS provides an SLA profile but no subscriber ID and IP address, the data coming from the DHCP server (either through Python or directly from the DHCP option) can no longer overwrite any ESM string, only the subscriber ID and IP data. However, after the DHCP data is processed, a configured default subscriber profile is added to the data before instantiating the host.
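The precedence rule can be sketched as a merge in which the first source that offers any field of a group takes ownership of the whole group. This is an illustrative model only: the field names, the grouping of fields, and the function names are assumptions made for the example, not the system's internal data model.

```python
# Hypothetical field-to-group mapping for illustration.
GROUPS = {
    "subscriber_id": ("sub_id",),
    "esm_strings": ("sub_profile", "sla_profile"),
    "ip_data": ("ip_address",),
}
# Minimum data needed to instantiate a host, as listed in the text.
REQUIRED = ("sub_id", "sub_profile", "sla_profile", "ip_address")


def merge_host_data(sources, defaults):
    """Merge ordered (name, data) sources; the first source offering a group owns it."""
    result, owner = {}, {}
    for name, data in sources:
        for group, fields in GROUPS.items():
            if group in owner:
                continue  # an earlier source already supplied this group
            offered = {f: data[f] for f in fields if f in data}
            if offered:
                owner[group] = name
                result.update(offered)
    # Configured defaults only fill fields that are still missing.
    for field, value in defaults.items():
        result.setdefault(field, value)
    return result, owner


def can_instantiate(host):
    """True when all data required to create the host is present."""
    return all(field in host for field in REQUIRED)


# The example from the text: RADIUS returns only an SLA profile.
radius = {"sla_profile": "sla-gold"}
dhcp = {"sub_id": "sub-1", "ip_address": "10.0.0.5", "sub_profile": "from-dhcp"}
host, owner = merge_host_data([("radius", radius), ("dhcp", dhcp)],
                              {"sub_profile": "default-sub"})
print(host["sub_profile"], owner["esm_strings"], can_instantiate(host))
```

In this run the subscriber profile offered by DHCP is ignored because RADIUS already owns the ESM strings group, and the configured default fills the gap.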

RADIUS

See the 7450 ESS, 7750 SR, and VSR RADIUS Attributes Reference Guide for attributes that are applicable in RADIUS authentication of a PPPoE session.

Local user database directly assigned to PPPoE node

The following are relevant settings for a local user database directly assigned to PPPoE node:

Host identification parameters (user name only)

Username

Password

Address

DNS servers (under DHCP options)

NBNS servers (under DHCP options)

Identification (ESM) strings

Incoming PPPoE connections are always authenticated through the PPPoE tree in the local user database.

The match list for a local user database that is assigned directly to the PPPoE node under the group interface is always user-name, independent of the match list setting in the database.

For username matching, the incoming username (user[@domain]) is always first split on the first @-sign into a user component and a domain component. If the no-domain parameter is specified for the username, the user component must equal the specified username. If the domain-only parameter is specified, the domain component must equal the specified username. If no extra parameter is specified, the user and domain components are concatenated again and compared to the specified username.
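The matching rule can be expressed as a short function. This is a sketch of the rule as described in the text, not the system's implementation; the function name and the `mode` argument values are chosen for illustration.

```python
def username_matches(incoming, configured, mode=None):
    """Compare an incoming PPP username with a configured host username.

    mode is None, "no-domain", or "domain-only" (illustrative names that
    mirror the parameters described in the text).
    """
    # Split on the first @-sign only; later @-signs stay in the domain part.
    user, sep, domain = incoming.partition("@")
    if mode == "no-domain":
        return user == configured
    if mode == "domain-only":
        return domain == configured
    # No extra parameter: compare the re-concatenated user and domain.
    return user + sep + domain == configured


print(username_matches("user-1@csp.net", "user-1", "no-domain"))     # True
print(username_matches("user-1@csp.net", "csp.net", "domain-only"))  # True
print(username_matches("user-1@csp.net", "user-1"))                  # False
```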

The option number for the identification strings is not used if the local user database is assigned directly to the PPPoE node (it is only necessary if it is connected to a local DHCP server). Any valid value may be chosen in this case (if omitted, the default value chosen is 254).

If a pool name is specified for the address, this pool name is sent to the DHCP server in a vendor-specific sub-option of Option 82 to indicate from which pool the server should take the address. If the gi-address option is specified for the address, this is interpreted as if no address was provided.

PPP policy override parameters

The group interface PPP policy parameters apply when a PPPoE session is created in the system. At PPPoE session authentication, it is possible to override the following PPP policy parameters such that they can have different values per session or group of sessions.

pado-delay

An override of the pado-delay command applies to PADI authentication only in the following circumstances:

- via local user database authentication

MD-CLI

configure subscriber-mgmt local-user-db "ludb-1" ppp host "user-1@csp.net" { pado-delay 2 }

classic CLI

A:node-2>config>subscr-mgmt# info
    local-user-db "ludb-1" create
        ppp
            host "user-1@csp.net" create
                pado-delay 2
            exit
        exit
    exit

The system ignores the pado-delay command in the local user database when not applicable, such as during PAP or CHAP authentication.

- via RADIUS authentication, using the 26.6527.34 Alc-PPPoE-PADO-Delay VSA in an Access-Accept

max-sessions-per-mac

An override of the max-sessions-per-mac command applies to PADI authentication only in the following circumstance:

- via local user database authentication

MD-CLI

configure subscriber-mgmt local-user-db "ludb-1" ppp host "user-1@csp.net" { ppp-policy-parameters { max-sessions-per-mac 1 } }

classic CLI

A:node-2>config>subscr-mgmt# info
    local-user-db "ludb-1" create
        ppp
            host "user-1@csp.net" create
                ppp-policy-parameters
                    max-sessions-per-mac 1
                exit
            exit
        exit
    exit

The system ignores the max-sessions-per-mac command in the local user database when not applicable, such as during PAP or CHAP authentication.

LCP keepalive interval and hold-up-multiplier

An override of the LCP keepalive interval and hold-up-multiplier commands applies to both PADI and PAP or CHAP authentication in the following circumstances:

- via local user database authentication

MD-CLI

configure subscriber-mgmt local-user-db "ludb-1" ppp host "user-1@csp.net" { ppp-policy-parameters { keepalive { hold-up-multiplier 2 interval 15 } } }

classic CLI

host "user-1@csp.net" create
    ppp-policy-parameters
        keepalive 15 hold-up-multiplier 2
    exit
exit

- via RADIUS authentication, by including the 241.26.6527.92 Alc-PPPoE-LCP-Keepalive-Interval and 241.26.6527.93 Alc-PPPoE-LCP-Keepalive-Multiplier VSAs in an Access-Accept

- via Diameter Gx policy management, by including the 92 Alc-PPPoE-LCP-Keepalive-Interval and 93 Alc-PPPoE-LCP-Keepalive-Multiplier Nokia-specific AVPs in the Charging-Rule-Definition AVP of a CCA message at initial PPPoE session setup

Charging-Rule-Install ::= <AVP Header: 1001>
  +-- Charging-Rule-Definition <AVP Header: 1003>
      +-- Charging-Rule-Name <AVP Header: 1005> = “LCP keepalive”
      +-- Alc-PPPoE-LCP-Keepalive-Interval <AVP Header; vendor 6527; 92>
      +-- Alc-PPPoE-LCP-Keepalive-Multiplier <AVP Header; vendor 6527; 93>

Both LCP keepalive interval and hold-up multiplier override parameters must be specified when overriding.

The active LCP keepalive interval and hold-up multiplier values for a PPPoE session are available in the output of the following CLI commands.

show service id pppoe session detail

show service id ppp session detail

LCP keepalive parameter overrides apply to PPPoE PTA sessions and L2TP LNS sessions.

Subscriber per PPPoE session index

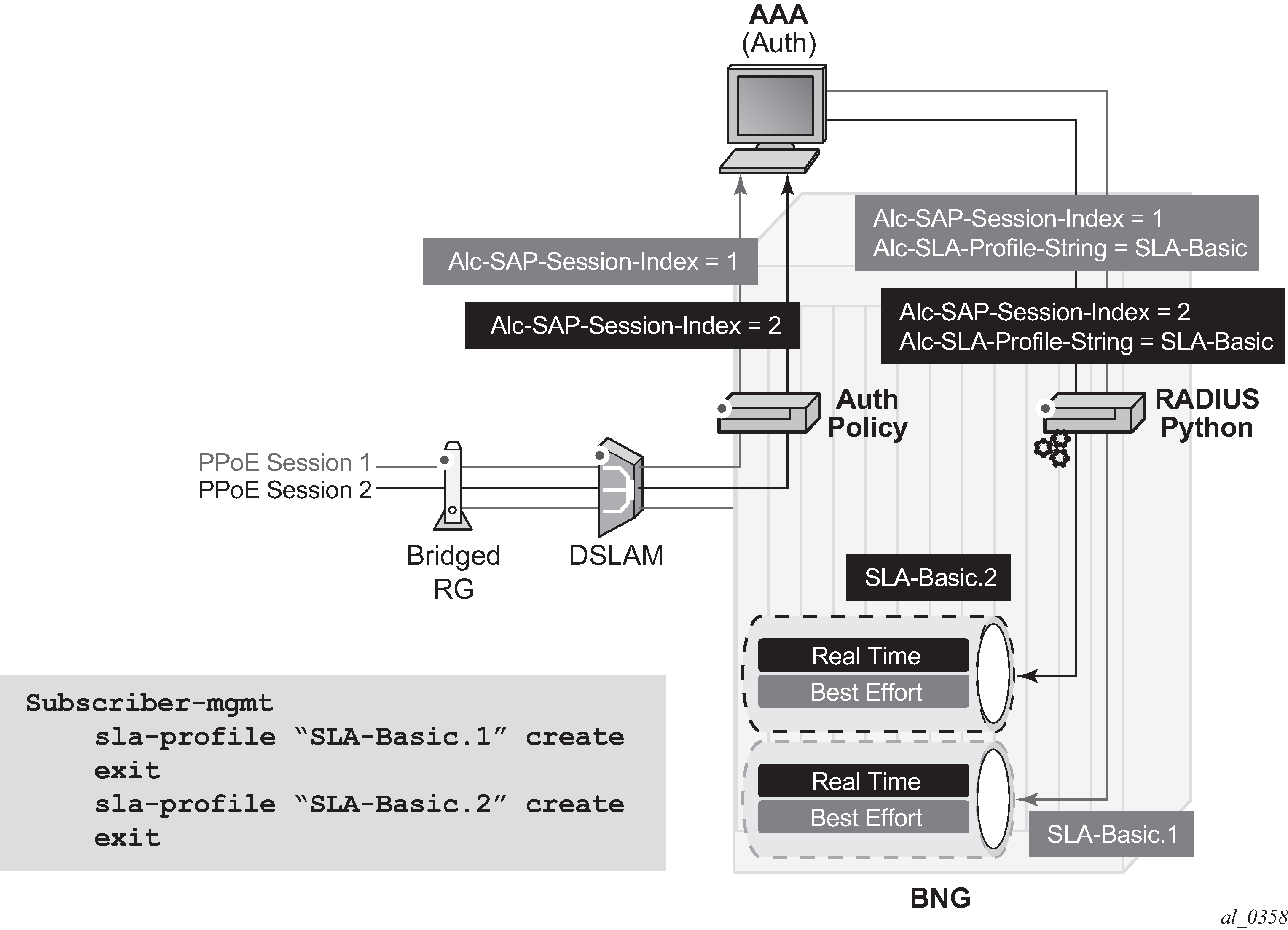

The system keeps track of the number of PPPoE sessions active on a specific SAP and assigns a per-SAP session index to each session, such that the lowest free index is always assigned to the next active PPPoE session. When PAP/CHAP RADIUS authentication is used, the PPPoE SAP session index can be sent to, and received from, the RADIUS server using the following VSA:

ATTRIBUTE Alc-SAP-Session-Index 180 integer

This is supported for all PPPoE sessions, including those using LAC and LNS, but is not supported in a dual-homing topology. It should only be used in a subscriber per VLAN model as the session index is per SAP.
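The lowest-free-index behavior can be modeled with a small allocator. This is a sketch only: the class and method names are hypothetical, and the first index value of 1 is an assumption, since the text does not state where numbering starts.

```python
class SapSessionIndex:
    """Track per-SAP session indexes, always handing out the lowest free one."""

    def __init__(self, first_index=1):  # starting value is an assumption
        self._first = first_index
        self._used = {}  # SAP identifier -> set of indexes in use

    def allocate(self, sap):
        used = self._used.setdefault(sap, set())
        index = self._first
        while index in used:
            index += 1
        used.add(index)
        return index

    def release(self, sap, index):
        self._used.get(sap, set()).discard(index)


indexes = SapSessionIndex()
sap = "1/1/4:100"
first, second, third = (indexes.allocate(sap) for _ in range(3))
indexes.release(sap, second)   # the session holding the second index ends
print(indexes.allocate(sap))   # the freed index is reused before a new one
```

Because a freed index is reused by the next session on the same SAP, a fixed set of pre-provisioned SLA profiles stays sufficient for the maximum number of concurrent sessions on that SAP.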

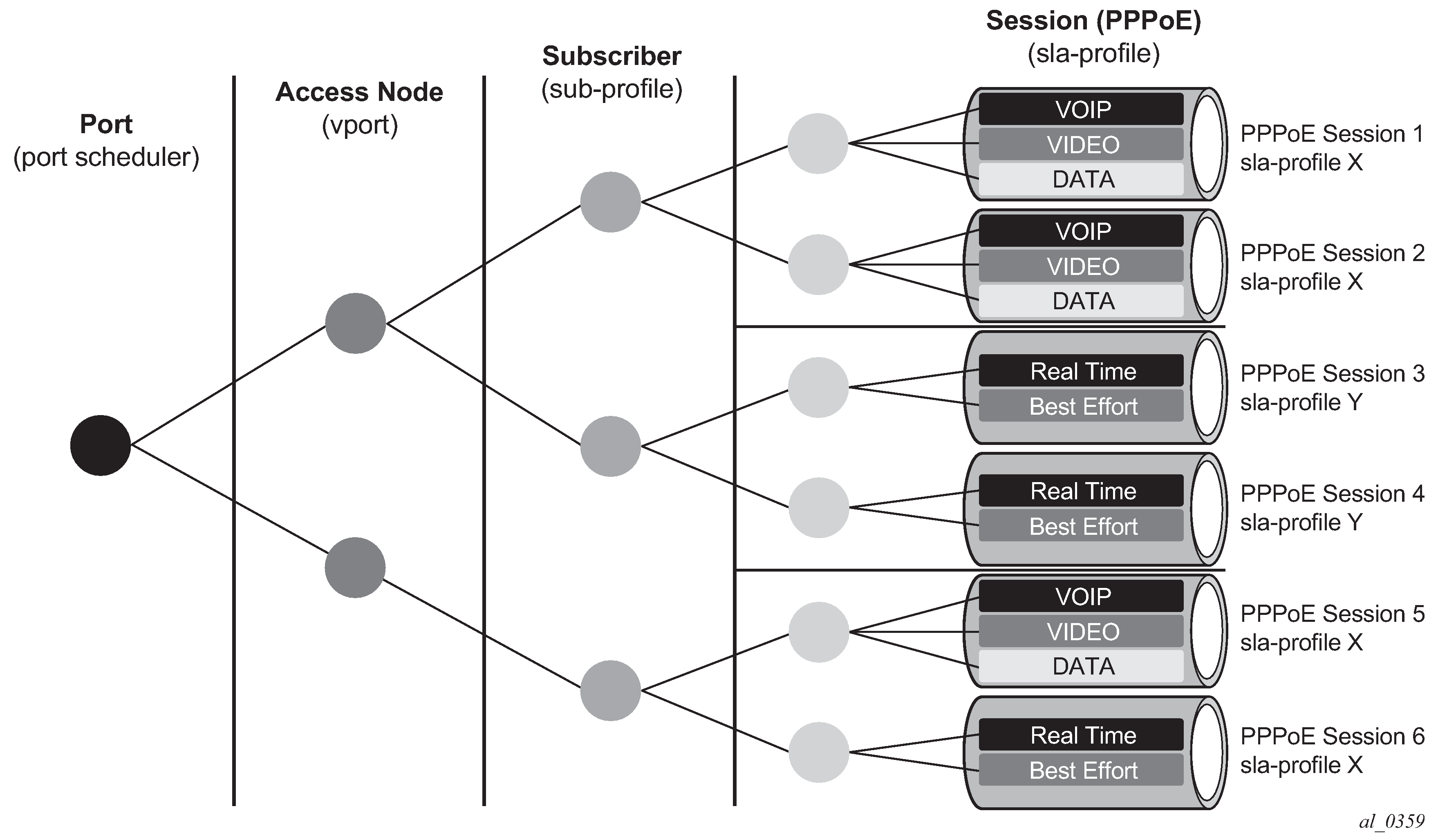

The SAP session index allows PPPoE sessions to have their own set of queues for QoS and accounting purposes when using the same SLA profile name as that received from a RADIUS server. An example of this with multiple levels of HQoS egress scheduling is shown in Egress QoS per PPPoE session. Alternatively, this can be achieved by configuring per-session SPI sharing in the SLA profile as described in SLA Profile Instance Sharing.

This requires a set of identical SLA profiles to be configured which only differ by an index being, for example, appended to their name. The SAP session index must be sent to RADIUS in the Access-Request message, which is achieved by configuring the RADIUS authentication policy to include it as follows:

Example:

configure subscriber-mgmt authentication-policy name

include-radius-attribute

[no] sap-session-index

The RADIUS server must then reflect the SAP session index back to the system in the RADIUS Access-Accept message together with the SLA profile name.

A Python script processes the RADIUS Access-Accept message to append the SAP session index to the SLA profile name to create the unique SLA profile name, in this example with the format:

sla-profile sla-profile-name.suffix

The exact format (for example, the separator used) is not fixed and merely needs to match the pre-provisioned SLA profiles, while not exceeding 16 characters. This ensures that each PPPoE session is provided its own SLA profile and consequently its own set of queues.

This processing is shown in Per PPPoE session SLA profile selection.

Below is an example Python script for this purpose:

import alc
import struct
from alc import radius
from alc import sub_svc

PROXY_STATE = 33
ALU = 6527
SLA_PROF_STR = 13
SAP_SESSION_INDEX = 180

##################################
## QoS for Multiple PPPoE Sessions
# This script checks if a sap-session-index (sid) is included in the
# authentication accept. If present, the sla-profile-string (sla) is
# adapted to "sla.sid"
if alc.radius.attributes.isVSASet(ALU, SLA_PROF_STR):
    sla = alc.radius.attributes.getVSA(ALU, SLA_PROF_STR)
    if alc.radius.attributes.isVSASet(ALU, SAP_SESSION_INDEX):
        ssi = alc.radius.attributes.getVSA(ALU, SAP_SESSION_INDEX)
        # Zero-pad each byte to two hex digits so that multi-byte index
        # values are converted correctly.
        suffix = "".join(["%02x" % ord(x) for x in ssi])
        alc.radius.attributes.setVSA(ALU, SLA_PROF_STR, sla + '.' + "%d" % int(suffix, 16))
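The name construction performed by the script can be checked off-box with a standalone helper that does not depend on the alc module. This sketch assumes the VSA value arrives as a 4-byte big-endian integer (the usual RADIUS integer encoding) and enforces the 16-character limit stated earlier; the function name and the profile names are hypothetical.

```python
import struct

MAX_SLA_NAME_LEN = 16  # limit on the resulting SLA profile name stated in the text


def sla_name_with_index(sla_name, index_bytes, separator="."):
    """Append the SAP session index to an SLA profile name.

    index_bytes is assumed to be a 4-byte big-endian integer.
    """
    (index,) = struct.unpack("!I", index_bytes)
    name = "%s%s%d" % (sla_name, separator, index)
    if len(name) > MAX_SLA_NAME_LEN:
        raise ValueError("SLA profile name %r exceeds %d characters"
                         % (name, MAX_SLA_NAME_LEN))
    return name


print(sla_name_with_index("sla-gold", b"\x00\x00\x00\x02"))  # sla-gold.2
```

Checking the length before returning makes a mismatch with the pre-provisioned profile names visible during testing rather than at session setup.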

To use a CoA to change the SLA profile used, the new SLA profile name must be constructed with the same suffix (in this example) as that used for the current SLA profile. This is necessary to ensure unique use of a specifically provisioned SLA profile. This mandates that the SAP session index is included in the CoA information. Two options are proposed to achieve this:

The CoA can specify a new SLA profile name and include the SAP session index. A Python script would then process the CoA and construct the new SLA profile name to be used by appending the suffix in the same way as was done with the RADIUS Access-Accept.

The CoA could be using a RADIUS proxy which may make the first option unattractive. An alternative solution would be to use a Python script to append the suffix to the acct-session-id in all messages sent so that the suffix can be identified when a CoA is received that uses the acct-session-id(+suffix) for session identification. This would need to be performed for all messages sent that include the acct-session-id. CoAs would reference the session using the acct-session-id+suffix. A Python script would be required to remove the suffix and append it to the new SLA profile name. All messages received with the acct-session-id+suffix would be processed by the Python script to remove the suffix before sending the acct-session-id to the system.

To ensure that the acct-session-id sent in RADIUS accounting messages is updated with the suffix, the user must configure include-radius-attribute sla-profile in the RADIUS accounting policy to be applied. The Python script needs to remove the suffix from the SLA profile and add it to the acct-session-id for all messages sent. Clearly, the acct-session-id used by any external server would then be different to that seen on the system.

Local DHCP server with local user database

If a DHCP server is queried for IP or ESM information, the following information is sent in the DHCP request:

Option 82 sub-options 1 and 2 (Circuit-ID and Remote-ID)

These options contain the circuit-ID and remote-ID that were inserted as tags in the PPPoE packets.

Option 82 sub-option 9 vendor-id 6527 VSO 6 (client type)

This value is set to 1 to indicate that this information is requested for a PPPoE client. The local DHCP server uses this information to match a host in the list of PPPoE users and not in the DHCP list.

Option 82 sub-option 6 (Subscriber-ID)

This option contains the username that was used if PAP/CHAP authentication was performed on the PPPoE connection.

Option 82 sub-option 13 (DHCP pool)

This option indicates to the DHCP server that the address from the client should be taken from the specified pool. The local DHCP server only honors this request if the use-pool-from-client option is configured in the server configuration.

Option 82 sub-option 14 (PPPoE Service-Name)

This option contains the service name that was used in the PADI packet during PPPoE setup.

Option 60 (Vendor class ID)

This option contains the string “ALU7XXXSBM” to identify the DHCP client vendor.

Option 61 (Client Identifier) (optional)

When client-id mac-pppoe-session-id is configured in the config>service>vprn>sub-if>grp-if>pppoe>dhcp-client or config>service>ies>sub-if>grp-if>pppoe>dhcp-client context, the client identifier option is included and contains a type-value with type set to zero and value set to a concatenation of the PPPoE client MAC address and the PPPoE session ID.

The WT-101 access loop options are not sent for PPPoE clients.

Local user database settings relevant to PPPoE hosts when their information is retrieved from the local DHCP server using this database:

Host identification parameters (including username)

address

DNS servers (under DHCP options)

NBNS servers (under DHCP options)

identification (ESM) strings

For username matching, the incoming username (user[@domain]) is always first split on the first @-sign into a user component and a domain component. If the no-domain parameter is provided for the username, the user component must equal the specified username. If the domain-only parameter is provided, the domain component must equal the specified username. If no extra parameter is provided, the user and domain components are concatenated again and compared to the specified username.

To prevent load problems, if DHCP lease times of less than 10 minutes are returned, these are not accepted by the PPPoE server.

PPPoE session ID allocation

The following two parameters in the PPP policy control the PPPoE session ID allocation method in the discovery phase.

config>subscr-mgmt>ppp-policy>sid-allocation {sequential | random}

config>subscr-mgmt>ppp-policy>unique-sid-per-sap [per-msap]

The following list describes the various combinations of these two parameters:

The session ID range is 1 to 8191.

sid-allocation sequential and no unique-sid-per-sap (the default allocation method)

Each first PPPoE session with a specific client MAC address and active on a specific SAP or MSAP is configured with a session ID value of 1. The session ID for subsequent PPPoE sessions with the same client MAC address and active on the same SAP or MSAP is allocated in sequentially-increasing order.

The PPPoE session ID is unique per (client MAC address, SAP) and per (client MAC address, MSAP).

sid-allocation sequential and unique-sid-per-sap

Each PPPoE session that is active on a specific SAP is assigned a unique, sequentially-increasing session ID starting with a session ID value of 1.

A unique, sequentially-increasing session ID starting with a session ID value of 1 is assigned per capture SAP: PPPoE sessions with the same or different client MAC address and that are active on the same or different MSAP have a unique session ID per capture SAP.

The PPPoE session ID is unique per SAP and per capture SAP.

With unique-sid-per-sap configured, the maximum number of PPPoE sessions per SAP or per capture SAP is 8191. This limit is enforced across all derived MSAPs.

sid-allocation sequential and unique-sid-per-sap per-msap

Each PPPoE session that is active on a specific SAP is assigned a unique, sequentially-increasing session ID starting with a session ID value of 1.

A unique, sequentially-increasing session ID starting with a session ID value of 1 is assigned per capture SAP. PPPoE sessions with the same or different client MAC address and that are active on the same MSAP have a unique session ID.

The PPPoE session ID is unique per SAP and per MSAP.

With unique-sid-per-sap per-msap configured, the maximum number of PPPoE sessions per SAP or per MSAP is 8191.

sid-allocation random and no unique-sid-per-sap

Each PPPoE session with the same client MAC address and active on the same SAP or MSAP is assigned a unique, randomly-assigned session ID.

The PPPoE session ID is unique per client MAC address and SAP and per client MAC address and MSAP.

sid-allocation random and unique-sid-per-sap

Each PPPoE session that is active on a specific SAP is assigned a unique, randomly-assigned session ID.

A unique session ID is randomly-assigned per capture SAP: PPPoE sessions with the same or different client MAC address and that are active on the same or different MSAP have a unique session ID per capture SAP.

The PPPoE session ID is unique per SAP and per capture SAP.

With unique-sid-per-sap configured, the maximum number of PPPoE sessions per SAP or per capture SAP is 8191. This limit is enforced across all derived MSAPs.

sid-allocation random and unique-sid-per-sap per-msap

Each PPPoE session that is active on a specific SAP or MSAP is assigned a unique, randomly-assigned session ID.

The PPPoE session ID is unique per SAP and per MSAP.

With unique-sid-per-sap per-msap configured, the maximum number of PPPoE sessions per SAP or per MSAP is 8191.
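The combinations above differ mainly in the scope within which a session ID must be unique. The following sketch models that scope selection under stated assumptions: the class, its arguments, and the string values "sap" and "per-msap" are hypothetical, the sketch has no release path, and it does not claim to reproduce the exact allocation order used by the system.

```python
import random

SID_MIN, SID_MAX = 1, 8191  # session ID range stated in the text


class SidAllocator:
    """Illustrative model of session ID allocation scopes."""

    def __init__(self, allocation="sequential", unique_sid=None, rng=None):
        # unique_sid: None (no unique-sid-per-sap), "sap", or "per-msap"
        self.allocation = allocation
        self.unique_sid = unique_sid
        self._rng = rng or random.Random()
        self._used = {}  # scope key -> set of session IDs in use

    def _scope(self, mac, sap, capture_sap):
        if self.unique_sid is None:
            return (mac, sap)        # unique per (client MAC, SAP or MSAP)
        if self.unique_sid == "sap" and capture_sap is not None:
            return (capture_sap,)    # unique across all MSAPs of a capture SAP
        return (sap,)                # unique per SAP or per MSAP

    def allocate(self, mac, sap, capture_sap=None):
        used = self._used.setdefault(self._scope(mac, sap, capture_sap), set())
        free = [sid for sid in range(SID_MIN, SID_MAX + 1) if sid not in used]
        if not free:
            raise RuntimeError("no free session ID in this scope")
        sid = free[0] if self.allocation == "sequential" else self._rng.choice(free)
        used.add(sid)
        return sid


default = SidAllocator()
print(default.allocate("00:00:64:6b:01:07", "1/1/4:100"))  # 1
print(default.allocate("00:00:64:6b:01:07", "1/1/4:100"))  # 2
print(default.allocate("00:00:64:6b:01:08", "1/1/4:100"))  # 1 (different MAC)
```

With `unique_sid="sap"`, two sessions on different MSAPs derived from the same capture SAP draw from one pool, which is why the 8191 limit then applies across all derived MSAPs.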

Multiple Sessions per MAC Address

To support MAC-concentrating network equipment, which translates the original MAC address of a number of subscribers to a single MAC address toward the PPPoE service, the SR OS supports up to 8191 PPPoE sessions per MAC address. Each of these sessions is identified by a unique combination of MAC address and PPPoE session ID.

To set up multiple sessions per MAC, the following limits should be set sufficiently high:

Maximum sessions per MAC in the PPPoE policy

The PPPoE interface session limit in the PPPoE node of the group interface

The PPPoE SAP session limit in the PPPoE node of the group interface

The multiple-subscriber-sap limit in the subscriber management node of the SAP

If host information is retrieved from a local DHCP server, care must be taken that, although a host can be identified by MAC address, circuit ID, remote ID or username, a lease in the DHCP server is, by default, only indexed by MAC address and circuit ID. For example, multiple sessions per MAC address are only supported in this scenario if every host with the same MAC address has a unique Circuit-ID value.

To enable IPv4 address allocation using the internal DHCPv4 client for multiple PPPoE sessions on a single SAP and having the same MAC address and circuit-ID, the optional CLI parameter allow-same-circuit-id-for-dhcp should be added to the max-sessions-per-mac configuration in the PPP policy. The SR OS local DHCP server detects the additional vendor-specific options inserted by the internal DHCPv4 client and uses an extended unique key for lease allocation.

Session limit per circuit ID

The config>subscr-mgmt>ppp-policy max-sessions-per-cid command limits the number of PPPoE sessions with the same Agent Circuit ID that can be active on the same SAP or MSAP.

The limit is enforced in the discovery phase, before PAP or CHAP authentication based on the Agent Circuit ID sub-option that is present in the vendor-specific PPPoE access loop identification tag added in PADI and PADR messages by a PPPoE intermediate agent.

By default, PPPoE PADI messages without the Agent Circuit ID sub-option are dropped when a max-sessions-per-cid limit is configured.

3502 2020/06/16 11:02:57.949 UTC MINOR: SVCMGR #2214 Base Managed SAP creation failure
"The system could not create Managed SAP:1/1/4:2111.100, MAC:00:00:64:6b:01:07, Capturing SAP:1/1/4:*.*, Service:10. Description: Agent Circuit ID sub-option missing in PADI and per circuit id limit configured"

The default behavior can be overruled with the optional allow-sessions-without-cid keyword. PPPoE sessions without an Agent Circuit ID can then be established on a SAP with a max-sessions-per-cid limit configured. The max-sessions-per-cid limit is not applied to these sessions.

When the max-sessions-per-cid limit is not configured, no limit is placed on the number of PPPoE sessions with the same Agent Circuit ID on the same SAP or MSAP and PPPoE sessions with or without an Agent Circuit ID can be established.
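The admission rules for the per-circuit-ID limit can be summarized as a decision function. This is a sketch of the described behavior only; the function name, its arguments, and the reason strings are hypothetical.

```python
def admit_padi(active_per_cid, circuit_id, max_sessions_per_cid=None,
               allow_sessions_without_cid=False):
    """Decide whether a PADI is admitted on one SAP or MSAP.

    active_per_cid maps an Agent Circuit ID to its active session count.
    circuit_id is None when the PADI carries no Agent Circuit ID sub-option.
    Returns (admitted, reason).
    """
    if max_sessions_per_cid is None:
        return True, "no per-circuit-ID limit configured"
    if circuit_id is None:
        if allow_sessions_without_cid:
            return True, "no circuit ID; limit not applied"
        return False, "Agent Circuit ID sub-option missing"
    if active_per_cid.get(circuit_id, 0) >= max_sessions_per_cid:
        return False, "per-circuit-ID limit reached"
    return True, "within limit"


active = {"dslam-1 eth 1/1": 2}
print(admit_padi(active, "dslam-1 eth 1/1", max_sessions_per_cid=2))
print(admit_padi(active, None, max_sessions_per_cid=2))
print(admit_padi(active, None, max_sessions_per_cid=2,
                 allow_sessions_without_cid=True))
```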

PPP session re-establishment

The re-establish-session command allows a host to re-establish a PPP session while the previous PPP session has not yet been terminated. Without this command, the only way for such a host to reconnect is to wait for the health check to fail or for the operator to terminate the session manually. To allow a faster reconnect, the feature allows a PADI request to terminate the host's previous session so that the host can establish a new PPP session. When the old PPP session terminates, its accounting record is also stopped. The new PPP session starts a new accounting record, which ensures that the subscriber is not charged for the unused time in the previous PPP session.

Subscribers that use credit-control-policy along with PPP re-establish-session can experience a longer attempt at re-establishing a PPP connection.

When a PPP subscriber closes an L2TP connection, the terminate cause in the accounting record shows “user-request”. When PPP re-establishment is enabled, stale connections are closed without a request, and the terminate cause in the accounting record shows “loss of service”.

Private retail subnets

IPoE and PPPoE are commonly used in residential networks and have expanded into business applications.

Both IPoE and PPPoE subscriber hosts terminate in a retail VPRN. It is possible for the subscriber to connect to one or more 7750 SRs for dual homing purposes. When the subscriber is dual-homed, routing between the two BNGs is required and can be performed with either a direct spoke SDP between the two nodes or by MP-BGP route learning.

For PPPoE, both PADI and PAP/CHAP authentication are supported. The PPPoE session is negotiated with the parameters defined by the retail VPRN interface. Because the IP address space of the sub-mgmt host may overlap between VPRN services, the node must anti-spoof the packets at access ingress with the session ID.

When the config>service>vprn>sub-if>private-retail-subnets command is enabled on the subscriber interface, the retail IP subnets are not pushed into the wholesale context. This allows IP addresses to overlap between different retail VPRNs (those VPRNs can have IPoE or PPPoE sessions). If an operator requires both residential and business services, two VPRNs connected to the same wholesaler can be created and use the flag in only one of them.

To update a subscriber attribute (such as an SLA-profile or subscriber-profile), either perform a RADIUS CoA or enter the tools>perform>subscriber-mgmt>coa command. To identify a subscriber for a CoA, the subscriber IP address can be used as part of the subscriber's key. Because it is possible for different subscribers to use the same IP address in multiple private retail VPRNs, additional parameters are required in addition to the subscriber IP address. When performing a CoA on a retail subscriber for PPPoE hosts using a private retail subnet, the following conditions apply:

If NAS-port-ID is used, the VSA Alc-Retail-Serv-Id or Alc-Retail-Serv-Name must be included. The subscriber IP address and prefix must also be included.

If Alc-Serv-Id or Alc-Serv-Name is used, the subscriber retail service ID or name must be referenced. The subscriber IP address and prefix must also be included.

When performing a CoA on a retail subscriber for IPoE hosts using a private retail subnet, the following conditions apply:

If NAS-port-ID is used, the VSA Alc-Client-Hardware-Addr referencing the subscriber MAC and Alc-Retail-Serv-Name must be included. The VSA Alc-Retail-Serv-Id must not be included. The subscriber IP address and prefix must also be included.

If Alc-Serv-Id or Alc-Serv-Name is used, it must reference the subscriber wholesale service ID or name. The VSA Alc-Client-Hardware-Addr and the subscriber IP address and prefix must also be included.
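The CoA conditions above can be summarized as a small pre-check. This is only an illustrative sketch, not router or RADIUS server code: the function name, the dictionary layout, the exact spelling of the attribute keys, and the placeholder key `subscriber-ip-prefix` (standing in for the subscriber IP address and prefix) are all assumptions. The sketch cannot verify whether a service ID refers to the retail or the wholesale service; that remains the operator's responsibility.

```python
def coa_key_problems(host_type, attrs):
    """List violations of the private-retail-subnet CoA rules described above.

    host_type: "pppoe" or "ipoe".
    attrs: dict of attribute name -> value (key spellings are assumptions).
    """
    if host_type not in ("pppoe", "ipoe"):
        raise ValueError("host_type must be 'pppoe' or 'ipoe'")

    problems = []
    # Required in every case listed in the text.
    if "subscriber-ip-prefix" not in attrs:  # hypothetical placeholder key
        problems.append("subscriber IP address and prefix required")

    by_port = "NAS-Port-Id" in attrs

    if host_type == "pppoe":
        if by_port and not ({"Alc-Retail-Serv-Id", "Alc-Retail-Serv-Name"} & attrs.keys()):
            problems.append("Alc-Retail-Serv-Id or Alc-Retail-Serv-Name required")
    else:  # ipoe
        # The MAC address is required for both the NAS-port and service-keyed cases.
        if "Alc-Client-Hardware-Addr" not in attrs:
            problems.append("Alc-Client-Hardware-Addr required")
        if by_port:
            if "Alc-Retail-Serv-Name" not in attrs:
                problems.append("Alc-Retail-Serv-Name required")
            if "Alc-Retail-Serv-Id" in attrs:
                problems.append("Alc-Retail-Serv-Id must not be included")
    return problems
```

For example, an IPoE CoA keyed on the NAS port that carries Alc-Retail-Serv-Id would be flagged by this check, while the same request carrying Alc-Retail-Serv-Name and the MAC address would pass.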

To perform a DHCP force renew on IPoE hosts, enter the tools>perform>subscriber-mgmt>forcerenew command. To identify between subscribers within a private retail VPRN where overlapping IP addresses are possible, the MAC address must be used instead of the IP address in the tools command.

Private retail subnets are supported on both numbered and unnumbered subscriber interfaces. RADIUS host creation is possible for IPoEv4 hosts.

When an IPoE or PPPoE session terminates in the retail VPRN, the node must learn the retail VPRN service ID. This can be provided by the LUDB or RADIUS. If the local user database is used, the host configuration provides a reference to the VPRN service ID. If RADIUS is used, RADIUS can return the service ID or name VSA. The retail subscriber interface must also reference the subscriber interface in the wholesale service.

The following are not supported on private retail subnets:

- ARP hosts

- static hosts

ESM multicast is not supported for IPoE hosts that use overlapping IP addresses between private retail VPRNs. ESM multicast is only supported for IPoE hosts that have unique IP addresses in the system. An IPoE subscriber can contain both types of hosts; in that case, only the hosts with overlapping private IP addresses lack ESM multicast support.

IPCP subnet negotiation

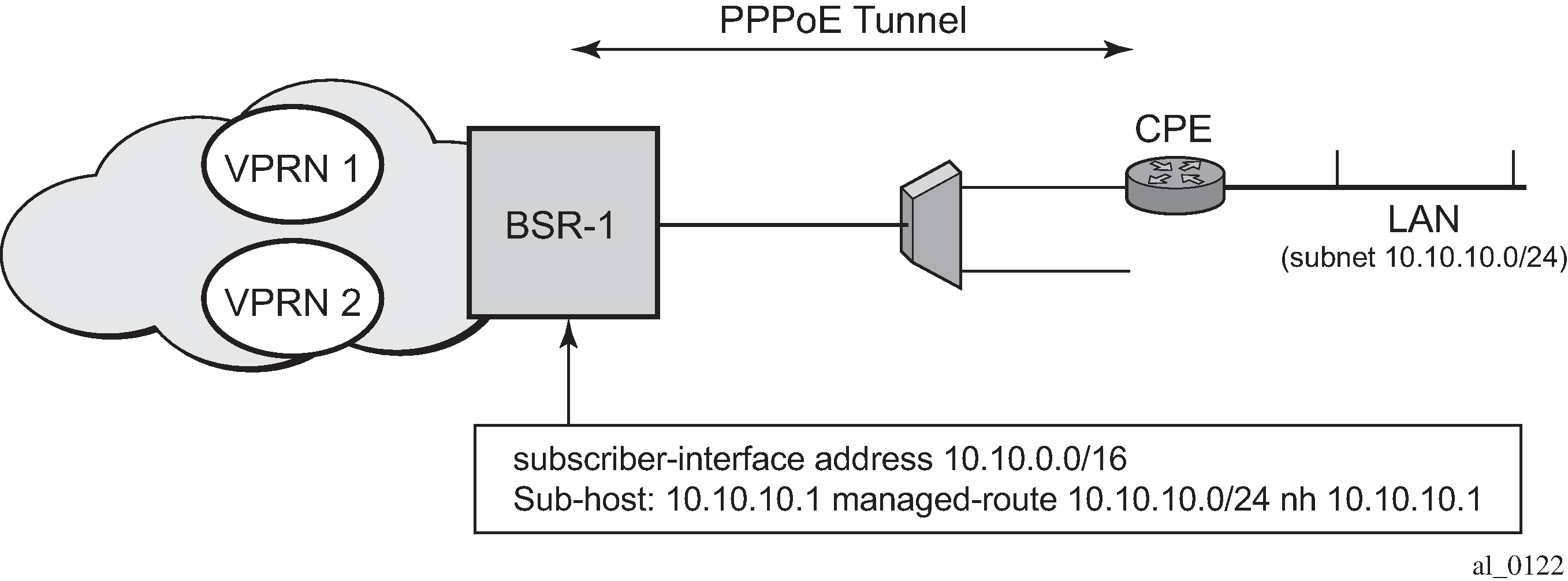

This feature enables negotiation between the Broadband Network Gateway (BNG) and the customer premises equipment (CPE) so that the CPE is allocated both an IP address and an associated subnet.

Some CPEs use the network uplink in PPPoE mode and perform the DHCP server function for all ports on the LAN side. Instead of wasting one subnet on the point-to-point uplink, these CPEs use the allocated subnet for the LAN portion, as shown in CPEs network up-link mode.

From a BNG perspective, the specified PPPoE host is allocated a subnet (instead of a /32) by RADIUS, an external DHCP server, or the local user database. Locally, the host is associated with a managed route. This managed route is a subset of the subscriber-interface subnet, and the subscriber-host IP address is taken from the managed-route range. The negotiation between the BNG and the CPE allows the CPE to be allocated both an IP address and an associated subnet.

Numbered WAN support for Layer 3 RGs

Numbered WAN interfaces on RGs are useful for managing v6-capable RGs. Dual-stack RGs can be managed through IPv4. However, v6-only RGs, or dual-stack RGs with only a private v4 address, require a globally routable v6 WAN prefix (or address) for management. This feature provides support for assigning a WAN prefix to a PPP-based Layer 3 RG using SLAAC. The feature also adds a RADIUS VSA (Alc-PPP-Force-IPv6CP, Boolean) to control the triggering of IPv6CP on completion of PPP LCP. RA messages are sent as soon as IPv6CP goes into the open state; the restriction to hold off sending RAs until DHCP6-PD is complete for a dual-stack PPP session no longer applies.

IES as retail service for PPPoE host

In this application, the PPPoE subscriber host terminates in a retail IES service. The IES service ID or name can be obtained by the Alc-Retail-Serv-Id or Alc-Retail-Serv-Name attribute in the RADIUS Access-Accept packet.

Alc-Retail-Serv-Name takes precedence over Alc-Retail-Serv-Id if both are specified. The Access-Accept packet for initial authentication can contain both Alc-Retail-Serv-Id and Alc-Retail-Serv-Name, but the Access-Accept packet for re-authentication and the CoA can contain only the one AVP that the subscriber session is using.

If MSAP is used, the SAP is created in the wholesale VPRN.

The PPPoE session is negotiated with the command options defined by the wholesale VPRN group interface. The connectivity to the retailer is performed using the linkage between the two interfaces.

Because of the nature of IES service, there is no IP address overlap between different IES retail services; therefore, the private-retail-subnets flag is not needed in this case.

Unnumbered PPPoE

Unlike regular IP routes, which are mainly concerned with next-hop information, subscriber hosts are associated with an extensive set of parameters related to filtering, QoS, stateful state (PPPoE/DHCP), anti-spoofing, and so on. The Forwarding Database (FDB) is not suitable for maintaining all this information. Instead, each subscriber host record is maintained in a separate set of subscriber-host tables.

By pre-provisioning the IP prefix (IPv4 and IPv6) under the subscriber-interface and sub-if>ipv6 CLI hierarchy, only a single prefix aggregating the subscriber host entries is installed in the FDB. This FDB entry points to the corresponding subscriber-host tables that contain subscriber-host records.

When an IPv4 or IPv6 prefix is not pre-provisioned, or a subscriber host falls outside the pre-provisioned prefix, each subscriber host is installed in the FDB. The result of the subscriber-host FDB lookup points to the corresponding subscriber-host record in the subscriber-host table. This scenario is referred to as unnumbered subscriber-interfaces.

Unnumbered does not mean that the subscriber hosts do not have an IP address or prefix assigned. It only means that the IP address range out of which the address or prefix is assigned to the host does not have to be known in advance through configuration under the subscriber-interface or sub-if>ipv6 node.

An IPv6 example would be:

configure
    router/service
        subscriber-interface <name>
            ipv6
                [no] allow-unmatching-prefixes
                delegated-prefix-length <bits>
                subscriber-prefixes

This CLI indicates the following:

There is no need for any indication of anticipated IPv6 prefixes in CLI.

However, the delegated-prefix-length (DPL) command is required. The DPL (or the length of the prefix assigned to each Residential Gateway) must be known in advance. All Residential Gateways (or subscribers) under the same subscriber-interface share this pre-configured DPL.

The DPL range is 48 to 64.

If the prefix length in the received PD (through the DHCP server, RADIUS or LUDB) and the DPL do not match, the host creation fails.

If the IP prefix or address assigned to the host (by the DHCP server, RADIUS, or LUDB) falls outside the CLI-defined prefixes and the allow-unmatching-prefixes command is configured, the new address and prefix are automatically installed in the FDB.
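The rules above can be sketched as a short decision function. This is an illustration only, assuming the delegated prefix arrives as a string and the pre-provisioned prefixes as a list of strings; the function name and return strings are hypothetical. The text does not describe what happens when the prefix falls outside the configured prefixes and allow-unmatching-prefixes is not configured, so the sketch reports that case as undescribed instead of guessing.

```python
import ipaddress


def classify_delegated_prefix(prefix, dpl, configured_prefixes, allow_unmatching):
    """Apply the DPL rules described above to one delegated IPv6 prefix."""
    if not 48 <= dpl <= 64:
        raise ValueError("DPL must be in the range 48 to 64")

    net = ipaddress.ip_network(prefix)
    if net.prefixlen != dpl:
        # Received PD length differs from the configured DPL.
        return "host creation fails"

    for configured in configured_prefixes:
        if net.subnet_of(ipaddress.ip_network(configured)):
            # Covered by the single aggregate FDB entry for the subscriber interface.
            return "covered by pre-provisioned prefix"

    if allow_unmatching:
        return "installed in FDB per host"
    return "not described in the text"
```

With a DPL of 56 and a pre-provisioned prefix of 2001:db8:1::/48, a delegated 2001:db8:1:100::/56 is covered by the aggregate, while 2001:db8:2::/56 is installed individually only if allow-unmatching-prefixes is set.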

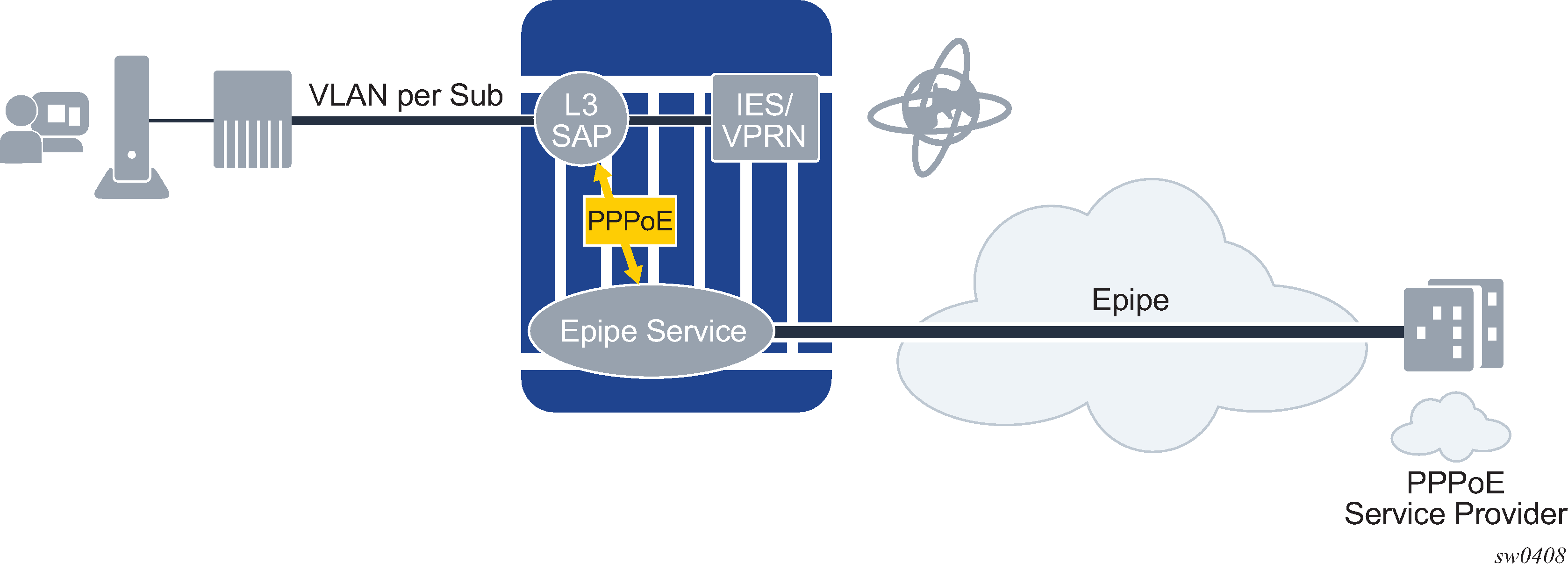

Selective backhaul of PPPoE traffic using an Epipe service

The router supports the redirection of PPPoE packets on ingress to a Layer 3 static subscriber SAP and PW-SAPs (for example, bound to an IES or VPRN service) in the upstream direction toward an Epipe service. The Epipe, which is bound to a specific SAP on a group interface of a Layer 3 service, is used to backhaul the PPPoE traffic toward a remote destination, such as a wholesale service provider. Other, non-PPPoE packets are still forwarded to the group interface on the IES or VPRN.

There is a one-to-one mapping from backhaul Epipe to the subscriber. A single backhaul Epipe service per subscriber SAP is supported. Only static configuration of subscriber SAPs is supported.

In the downstream direction, all traffic arriving on the backhaul Epipe is forwarded to the subscriber SAP and merged with IPoE traffic.

This architecture is shown in Redirection of traffic to Epipe for backhaul.

If the SAP on the Layer 3 service is configured to indicate PPPoE redirection, in addition to ESM anti-spoofing, then the following processing occurs in the upstream direction:

All PPPoE traffic, that is, traffic with Ethertype 0x8863 or 0x8864, skips the anti-spoof lookup and is forwarded to the configured Epipe service.

All non-PPPoE traffic is subject to the anti-spoofing lookup. If the anti-spoofing lookup fails, the packet is dropped. Otherwise, processing continues using the host configuration.
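The upstream decision described above can be sketched as follows. This is a simplified model, not the data-path implementation: the function name and the returned action strings are hypothetical, and the anti-spoof lookup is reduced to a boolean supplied by the caller.

```python
PPPOE_DISCOVERY = 0x8863  # PPPoE discovery (control) frames
PPPOE_SESSION = 0x8864    # PPPoE session frames


def upstream_action(ethertype, anti_spoof_ok):
    """Return the action for a frame received on a SAP with PPPoE redirection."""
    if ethertype in (PPPOE_DISCOVERY, PPPOE_SESSION):
        # PPPoE frames bypass the anti-spoof lookup entirely.
        return "redirect-to-epipe"
    if not anti_spoof_ok:
        return "drop"
    return "process-with-host-configuration"
```

Note that the anti-spoof result is ignored for PPPoE frames, which mirrors the statement that such traffic skips the lookup.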

The fwd-wholesale context in the Layer 3 SAP is used to configure the forwarding of PPPoE packets toward a specified Epipe service:

config
    service
        ies | vprn
            subscriber-interface
                group-interface
                    sap 1/1/1:10.20
                        anti-spoof ...
                        fwd-wholesale
                            pppoe 10
                        exit

The PPPoE option specifies that only packets matching Ethertype 0x8863 and 0x8864 are redirected to the Epipe of service ID '10'. This includes all PPPoE control plane packets. The service IDs specified under fwd-wholesale cannot refer to a vc-switching Epipe service.

When fwd-wholesale is configured to an Epipe with a specified service ID, the system ensures that the Epipe exists and meets all the requirements to participate in the PPPoE redirect. There is no need for additional configuration under the Epipe. For the previous example, only the following configuration is required for the Epipe:

epipe 10 name "10" customer 1 create
    service-mtu 1400
    spoke-sdp 10:9 create
        no shutdown
    exit
    no shutdown
exit

The system generates a CLI error if a user tries to configure additional SAPs on an Epipe that is already referenced from a fwd-wholesale context.

In the upstream direction, subscriber queues are used for IPoE packets destined for a local host and for PPPoE backhaul traffic. However, CPM traffic does not consume subscriber queue resources; QoS profiles are instantiated while single-sub-parameters profiled-traffic-only is enabled.

In the downstream direction, PPPoE traffic arriving on the Epipe is merged back into the subscriber SAP referencing the Epipe service. ESM traffic from the host uses the subscriber queues that the host is configured to use, and the backhaul traffic uses the SAP queues. If profiled-traffic-only is configured, all traffic uses the SAP queues.

The operational status of the Epipe with a matching service ID can only be up if the corresponding Layer 3 SAP's operational status is up. The operational or administrative status of the Epipe does not affect the status of the Layer 3 service SAP. If the Layer 3 service SAP is up, but the backhaul Epipe is down, then the system continues to redirect packets to the Epipe, but they are dropped by the Epipe service. The status of the Epipe service is indicated in the output of the show>service>service-using command.

PPPoE interoperability enhancements

Interoperability with non-conforming PPPoE client implementations is supported with the following behavior:

The PPPoE client continues to send IPCP renegotiation messages (such as ConfReq) after negotiation is complete. By default, the BNG terminates the PPP session; however, the following command allows the BNG to ignore the renegotiation messages:

Classic CLI:

config>subscr-mgmt>ppp-policy>ncp-renegotiation ignore

MD-CLI:

configure subscriber-mgmt ppp-policy ncp-renegotiation

The PPPoE client sets incorrect identifier values in the Echo-Reply messages that are sent to BNG. BNG discards such messages and the PPP session is terminated because of the Echo timeout. However, the following command instructs BNG to ignore the Identifier field of the Echo-Reply message to keep the PPP session up:

Classic CLI:

config>subscr-mgmt>ppp-policy>lcp-ignore-identifier

MD-CLI:

configure subscriber-mgmt ppp-policy lcp-ignore-identifier

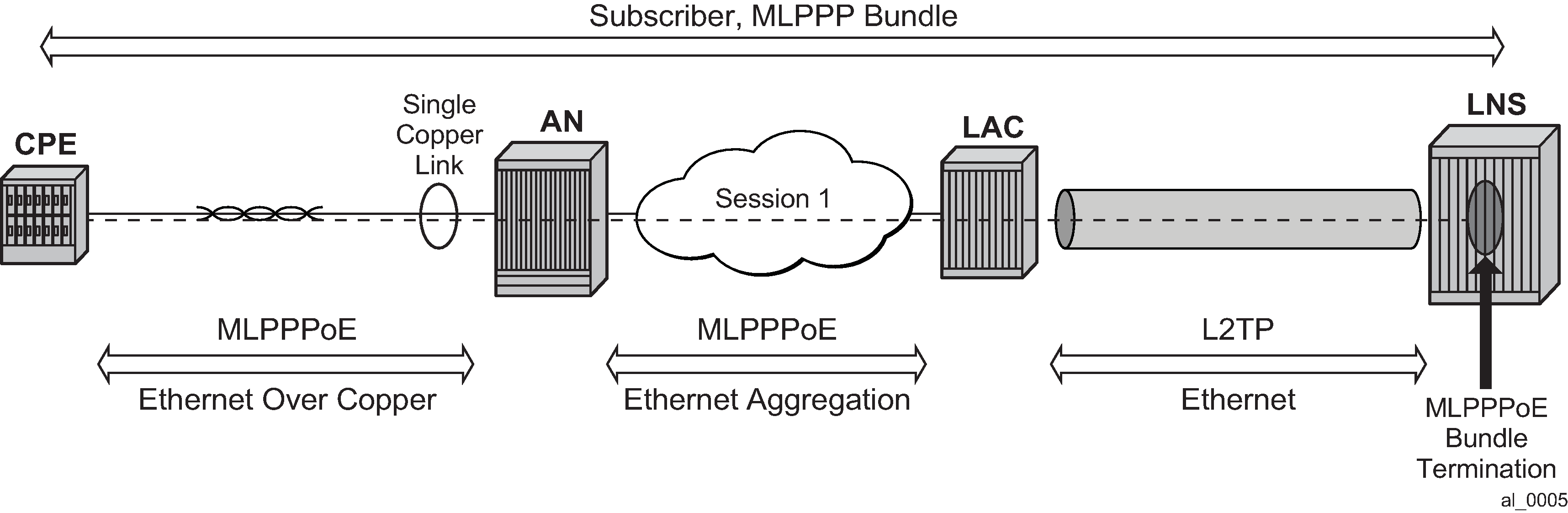

MLPPPoE with LFI on LNS

MLPPPoX is generally used to address bandwidth constraints in the last mile. The following are other uses for MLPPPoX:

To increase bandwidth in the access network by bundling multiple links and VCs together. For example, it is less expensive for a customer with E1 access to add another E1 link to increase the access bandwidth than to upgrade to the next circuit speed (E3).

LFI on a single link to prioritize small packet size traffic over traffic with large size packets. This is needed in the upstream and downstream direction.

PPPoE and PPPoEoA/PPPoA v4/v6 host types are supported.

Terminology

The term MLPPPoX is used to reference MLPPP sessions over ATM transport (oA), Ethernet over ATM transport (oEoA), or Ethernet transport (oE). Although MLPPP in the subscriber management context is not supported natively over PPP/HDLC links, the terms MLPPP and MLPPPoX can be used interchangeably. The reason is that link bundling, MLPPP encapsulation, fragmentation, and interleaving can, in a broader scope, be observed independently of the transport in the first mile. However, MLPPPoX terminology prevails in this section to distinguish MLPPP functionality on an ASAP MDA (outside of ESM) from MLPPPoX in the LNS (inside of ESM).

The terms speed and rate are interchangeably used throughout this section. Usually, speed refers to the speed of the link in general context (high or low) while rate quantitatively describes the link speed and associates it with the specific value in b/s.

LNS MLPPPoX

This functionality is supported through the LNS on the BB-ISA. LNS MLPPPoX can then be used as a workaround for PTA deployments, whereby the LAC and LNS run back-to-back in the same system (connected by an external loop or a VSM2 module) and therefore locally terminate PPP sessions.

MLPPPoX can:

Increase bandwidth in the last mile by bundling multiple links together.

Provide LFI and reassembly over a single MLPPPoX-capable link (plain PPP does not support LFI).

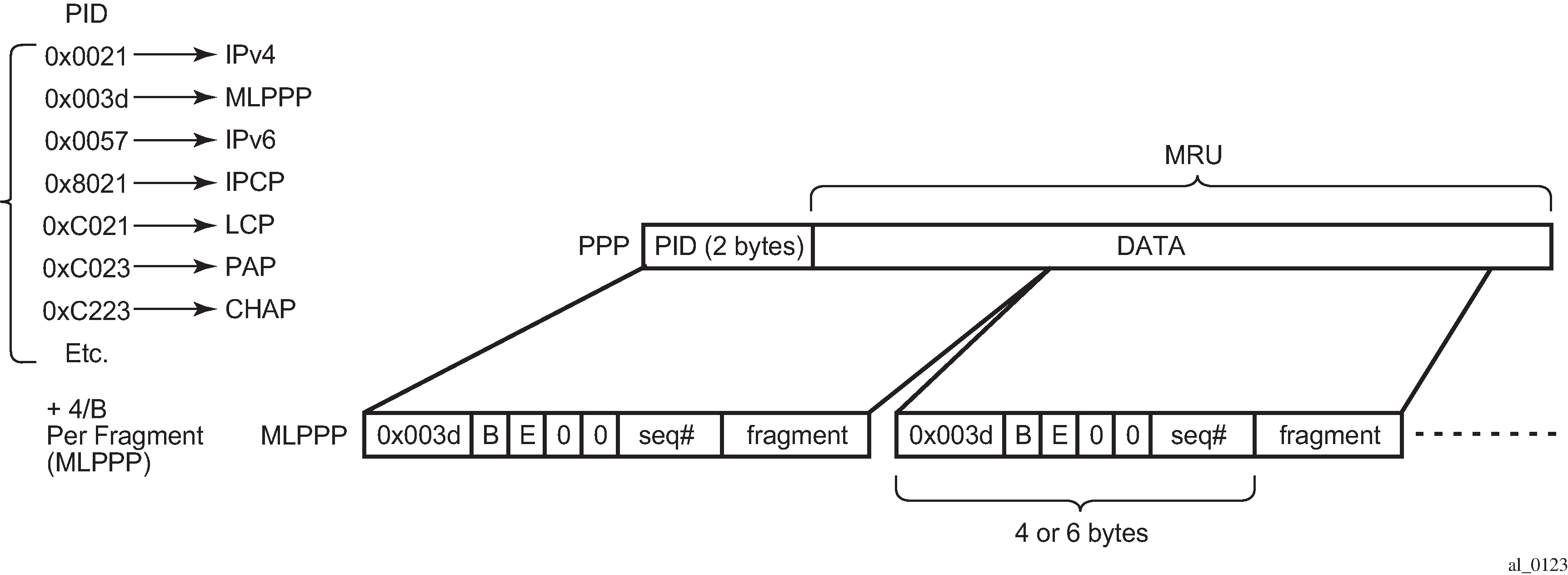

MLPPP encapsulation

After the MLPPP bundle is created in the 7750 SR, traffic can be transmitted by using MLPPP encapsulation. However, MLPPP encapsulation is not mandatory over an MLPPP bundle.

The MLPPP header is primarily required for sequencing the fragments. If a packet is not fragmented, it can be transmitted over the MLPPP bundle using either plain PPP encapsulation or MLPPP encapsulation. MLPPP encapsulation for fragmented traffic is shown in MLPPP encapsulation.

MLPPPoX negotiation

MLPPPoX is negotiated during the LCP session negotiation phase by the presence of the Maximum Received Reconstructed Unit (MRRU) option in the LCP ConfReq. The MRRU option is mandatory in MLPPPoX negotiation. It represents the maximum number of octets in the Information field (the Data part in MLPPP encapsulation) of a reassembled packet. The MRRU value negotiated in the LCP phase must be the same on all member links, and it can be greater or less than the PPP-negotiated MRU value of each member link. This means that the reassembled payload of the PPP packet can be greater than the transmission size limit imposed by individual member links within the MLPPPoX bundle. Packets are always fragmented so that the fragments are within the MRU size of each member link.

Another field that could be optionally present in an MLPPPoX LCP Conf Req is an Endpoint Discriminator (ED). Along with the authentication information, this field can be used to associate the link with the bundle.

The last MLPPPoX negotiated option is the Short Sequence Number Header Format option, which allows the sequence numbers in MLPPPoX encapsulated frames or fragments to be 12 bits long (instead of the default 24 bits).
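To make the two sequence number formats concrete, the following sketch builds the multilink header as laid out in RFC 1990: the PPP protocol field 0x003d, followed by the Beginning (B) and Ending (E) fragment bits and either a 24-bit or a 12-bit sequence number. The function name is hypothetical, and the sketch assumes an uncompressed two-byte protocol field. The resulting header lengths of 6 and 4 bytes are consistent with the 4-byte or 6-byte MLPPPoX encapsulation overhead mentioned elsewhere in this section.

```python
import struct

MP_PROTOCOL = 0x003D  # PPP protocol number for Multilink PPP (RFC 1990)


def mp_header(seq, begin, end, short_seq=False):
    """Build the PPP protocol field plus the multilink fragment header."""
    flags = (0x80 if begin else 0) | (0x40 if end else 0)  # B and E bits
    if short_seq:
        if not 0 <= seq < (1 << 12):
            raise ValueError("short sequence number must fit in 12 bits")
        # B, E, two reserved bits, then the 12-bit sequence number.
        return struct.pack("!HH", MP_PROTOCOL, (flags << 8) | seq)
    if not 0 <= seq < (1 << 24):
        raise ValueError("long sequence number must fit in 24 bits")
    # B, E, six reserved bits, then the 24-bit sequence number.
    return struct.pack("!HI", MP_PROTOCOL, (flags << 24) | seq)
```

An unfragmented packet sent with MLPPP encapsulation carries both B and E set, because it is simultaneously the first and last fragment.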

After the multilink capability is successfully negotiated by LCP, PPP sessions can be bundled together over MLPPPoX capable links.

The basic operational principles are:

LCP session is negotiated on each physical link with MLPPPoX capabilities between the two nodes.

Based on the ED and the authentication outcome, a bundle is created. A subsequent IPCP negotiation is conveyed over this bundle. User traffic is sent over the bundle.

If a new link tries to join the bundle by sending a new MLPPPoX LCP Conf Request, the LCP session is negotiated, authentication performed and the link is placed under the bundle containing the links with the same ED and authentication outcome.

IPCP and IPv6CP are negotiated only once in the whole process, over the bundle. This negotiation occurs at the beginning, when the first link is established and the MLPPPoX bundle is created. IPCP and IPv6CP messages are transmitted from the 7750 SR LNS without MLPPPoX encapsulation, while they can be received either MLPPPoX encapsulated or non-MLPPPoX encapsulated.

Enabling MLPPPoX

The lowest granularity at which MLPPPoX can be enabled is an L2TP tunnel. An MLPPPoX enabled tunnel is not limited to carrying only MLPPPoX sessions but can carry normal PPP(oE) sessions as well.

In addition to enabling MLPPPoX on the session terminating node LNS, MLPPPoX can also be enabled on the LAC by a PPP policy. The purpose of enabling MLPPPoX on the LAC is to negotiate MLPPPoX LCP parameters with the client. After the LAC receives the MRRU option from the client in the initial LCP ConfReq, it changes its tunnel selection algorithm so that all sessions of an MLPPPoX bundle are mapped into the same tunnel.

The LAC negotiates MLPPPoX LCP parameters regardless of the transport technology connected to it (ATM or Ethernet). LCP negotiated parameters are passed by the LAC to the LNS by Proxy LCP in an ICCN message. This way, the LNS has the option to accept the LCP parameters negotiated by the LAC or to reject them and restart the negotiation directly with the client.

The LAC transparently passes session traffic handed to it by the LNS in the downstream direction and by the MLPPPoX client in the upstream direction. The LNS and the MLPPPoX client perform all data processing functions related to MLPPPoX, such as fragmentation and interleaving.

After the LCP negotiation is completed and LCP transitions into the open state (configuration ACKs are sent and received), the Authentication phase on the LAC begins. When the L2TP parameters (L2TP group, tunnel, and so on) become known during the Authentication phase, the session is extended by the LAC to the LNS by L2TP. If the Authentication phase does not return L2TP parameters, the session is terminated because the 7750 SR does not support directly terminated MLPPPoX sessions.

If MLPPPoX is not enabled on the LAC, the LAC negotiates a plain PPP session with the client. If the client accepts plain PPP instead of MLPPPoX as offered by the LAC, when the session is extended to the LNS, the LNS renegotiates MLPPPoX LCP with the client on an MLPPPoX enabled tunnel. The LNS learns about the MLPPPoX capability of the client from a Proxy LCP message in the ICCN (the first ConfReq received from the client is also sent in a Proxy LCP). If there is no indication of the MLPPPoX capability of the client, the LNS establishes a plain PPP(oE) session with the client.

Link Fragmentation and Interleaving

The purpose of Link Fragmentation and Interleaving (LFI) is to ensure that short high priority packets are not delayed by the transmission delay of large low priority packets on slow links.

For example, it takes ~150ms to transmit a 5000B packet over a 256 kb/s link, while the same packet is transmitted in only 40us over a 1G link (~4000 times faster). To prevent a high priority packet from waiting in the queue while the large packet is being transmitted, the large packet can be segmented into smaller chunks. The high priority packet can then be interleaved with the smaller fragments. This approach can significantly reduce the delay of high priority packets.
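The figures in this example follow from the serialization delay, which is the packet size in bits divided by the link rate. The short sketch below reproduces them; the function name is hypothetical, and the calculation assumes that 256 kb/s means 256,000 b/s and ignores any encapsulation overhead.

```python
def tx_delay_seconds(size_bytes, rate_bps):
    """Serialization delay of one packet on a link, ignoring overhead."""
    return size_bytes * 8 / rate_bps


slow = tx_delay_seconds(5000, 256_000)        # about 0.156 s, quoted as ~150ms
fast = tx_delay_seconds(5000, 1_000_000_000)  # 40 microseconds
ratio = slow / fast                           # about 3900, quoted as ~4000
```

The same function gives roughly 3ms for a 100B packet on the 256 kb/s link, which is why small high priority packets benefit from being sent between fragments of a large packet.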

The interleaving functionality is only supported on MLPPPoX bundles with a single link. If more than one link is added into an interleaving capable MLPPPoX bundle, interleaving is internally disabled and the tmnxMlpppBundleIndicatorsChange trap is generated.

With interleaving enabled on an MLPPPoX enabled tunnel, the following session types are supported:

Multiple LCP sessions tied into a single MLPPPoX bundle. This scenario assumes multiple physical links on the client side. Theoretically, it would be possible to have multiple sessions running over the same physical link in the last mile. For example, two PPPoE sessions going over the same Ethernet link in the last mile, or two ATM VCs on the same last mile link. Whichever the case may be, the LAC/LNS is unaware of the physical topology in the last mile (single or multiple physical links). Interleaving functionality is internally disabled on such an MLPPPoX bundle.

A single LCP session (including dual stack) over the MLPPPoX bundle. This scenario assumes a single physical link on the client side. Interleaving is supported on such single session MLPPPoX bundle as long as the conditions for interleaving are met. Those conditions are governed by max-fragment-delay parameter and calculation of the fragment size as described in subsequent sections.

An LCP session (including dual stack) over a plain PPP/PPPoE session. This type of session is a regular PPP(oE) session outside of any MLPPPoX bundle and therefore its traffic is not MLPPPoX encapsulated.

Packets on an MLPPPoX bundle are MLPPPoX encapsulated unless they are classified as high priority packets when interleaving is enabled.

MLPPPoX fragmentation, MRRU and MRU considerations

MLPPPoX in the 7750 SR is concerned with two MTUs:

bundle-mtu determines the maximum length of the original IP packet that can be transmitted over the entire bundle (collection of links) before any MLPPPoX processing takes place on the transmitting side. This is also the maximum size of the IP packet that the receiving node can accept after it de-encapsulates and assembles received MLPPPoX fragments of the same packet. Bundle-mtu is relevant in the context of the collection of links.

link-mtu determines the maximum length of the payload before it is PPP encapsulated and transmitted over an individual link within the bundle. Link-mtu is relevant in the context of the single link within the bundle.

Assuming that the CPE advertised MRRU and MRU values are smaller than any configurable MTU on the MLPPPoX processing modules in the 7750 SR (carrier IOM and BB-ISA), the bundle-mtu and the link-mtu are based on the received MRRU and MRU values, respectively. For example, the bundle-mtu is set to the received MRRU value while the link-mtu is set to the MRU value minus the MLPPPoX encapsulation overhead (4 or 6 bytes).

In addition to mtu values, fragmentation requires a fragment length value for each MLPPP bundle on LNS. This fragment length value is internally calculated according to the following parameters:

Minimum transmission delay in the last mile.

Fragment ‟payload to encapsulation overhead” efficiency ratio.

Various MTU sizes in the 7750 SR dictated mainly by received MRU, received MRRU and configured PPP MTU under the following hierarchy:

configure subscriber-mgmt ppp-policy ppp-mtu (ignored on LNS)

configure service vprn l2tp group ppp mtu

configure service vprn l2tp group tunnel ppp mtu

configure router l2tp group ppp mtu

configure router l2tp group tunnel ppp mtu

The decision whether to fragment and encapsulate a packet in MLPPPoX depends on the mode of operation, the packet length and the packet priority as follows:

LFI

When Interleave is enabled in a bundle, low priority packets are always MLPPPoX encapsulated. If a low priority packet's length exceeds the internally calculated fragment length, the packet is MLPPPoX fragmented and encapsulated. High priority packets whose length is smaller than the link-mtu are PPP encapsulated and transmitted without MLPPP encapsulation.

Non-LFI

When Interleave is disabled in a bundle, all packets are MLPPPoX encapsulated. If a packet’s length exceeds the internally calculated fragment length, the packet is MLPPPoX fragmented and encapsulated.

A packet of the size greater than the link-mtu cannot be natively transmitted over an MLPPPoX bundle. This packet is MLPPPoX encapsulated and consequently fragmented. This is regardless of the priority of the packet in interleaving case or whether the fragmentation is enabled or disabled.
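The LFI and non-LFI rules above can be condensed into one decision function. This is a sketch under stated assumptions, not the BB-ISA algorithm: the function name and parameters are hypothetical, a packet exactly equal to the link-mtu is assumed to fit on the link, and a fragment length of None models fragmentation being disabled.

```python
def mlppp_treatment(length, high_priority, interleave, link_mtu, fragment_len=None):
    """Return (mlppp_encapsulated, fragmented) for one packet on a bundle."""
    if length > link_mtu:
        # Too large for a single link: always encapsulated and fragmented,
        # regardless of priority or whether fragmentation is enabled.
        return (True, True)
    if interleave and high_priority:
        # LFI mode: small high priority packets use plain PPP encapsulation.
        return (False, False)
    # Low priority packets in LFI mode, and all packets in non-LFI mode.
    fragmented = fragment_len is not None and length > fragment_len
    return (True, fragmented)
```

For instance, with a link-mtu of 1488 and a fragment length of 400, a 100B high priority packet in LFI mode is sent as plain PPP, while a 1000B low priority packet is encapsulated and fragmented.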

When MLPPPoX fragmentation is disabled with the no max-fragment-delay command, it is expected that packets are not MLPPPoX fragmented but rather only MLPPPoX encapsulated to be load balanced over multiple physical links in the last mile. However, even if MLPPPoX fragmentation is disabled, it is possible that fragmentation occurs under specific circumstances. This behavior is related to the calculation of the MTU values on an MLPPPoX bundle.

Consider an example where the MRRU value received from the CPE is 1500B while the received MRU is 1492B. In this case, the bundle-mtu is set to 1500B and the link-mtu is set to 1488B (or 1486B) to allow for the additional 4/6B of MLPPPoX encapsulation overhead. Consequently, an IP payload of 1500B can be transmitted over the bundle, but only 1488B can be transmitted over any individual link. If an IP packet with a size between 1489B and 1500B needs to be transmitted from the 7750 SR toward the CPE, this packet is MLPPPoX fragmented in the 7750 SR as dictated by the link-mtu. This is irrespective of whether MLPPPoX fragmentation is enabled or disabled (as set by the no max-fragment-delay command).

To entirely avoid MLPPPoX fragmentation in this case, the MRRU received from the CPE should be lower than the received MRU by the length of the MLPPPoX header (4 or 6 bytes). In this case, for IP packets larger than 1488B, IP fragmentation would occur (assuming that the DF flag in the IP header allows it) and MLPPPoX fragmentation would be avoided.
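The arithmetic in this example can be reproduced with a few lines. The sketch assumes, as the text does, that the CPE-advertised values are smaller than any configured MTU in the 7750 SR; the function names are hypothetical.

```python
def bundle_and_link_mtu(mrru, mru, mlppp_overhead):
    """Derive (bundle_mtu, link_mtu) from the values received from the CPE."""
    if mlppp_overhead not in (4, 6):
        raise ValueError("MLPPPoX overhead is 4 or 6 bytes")
    return mrru, mru - mlppp_overhead


def forces_mlppp_fragmentation(ip_len, bundle_mtu, link_mtu):
    """True if a packet admitted to the bundle cannot fit on a single link."""
    return link_mtu < ip_len <= bundle_mtu


# Example from the text: MRRU 1500, MRU 1492, 4-byte overhead.
bundle_mtu, link_mtu = bundle_and_link_mtu(1500, 1492, 4)

# Avoidance case: MRRU lowered to MRU minus the overhead.
safe_bundle_mtu, safe_link_mtu = bundle_and_link_mtu(1488, 1492, 4)
```

In the first case, packets of 1489B to 1500B are admitted to the bundle but exceed the link-mtu. In the second case, the bundle-mtu equals the link-mtu, so no admitted packet can exceed what a single link carries.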

On the 7750 SR side, it is not possible to set different advertised MRRU and MRU values with the ppp-mtu command. Both MRRU and MRU advertised values adhere to the same configured ppp mtu value.

LFI functionality implemented in LNS

As mentioned in the previous section, LFI on LNS is implemented only on MLPPPoX bundles with a single LCP session.

There are two major tasks associated with LFI on the LNS. Most of this also applies to the non-LFI case; the only difference is that no artificial delay is performed in the non-LFI case. The two tasks are:

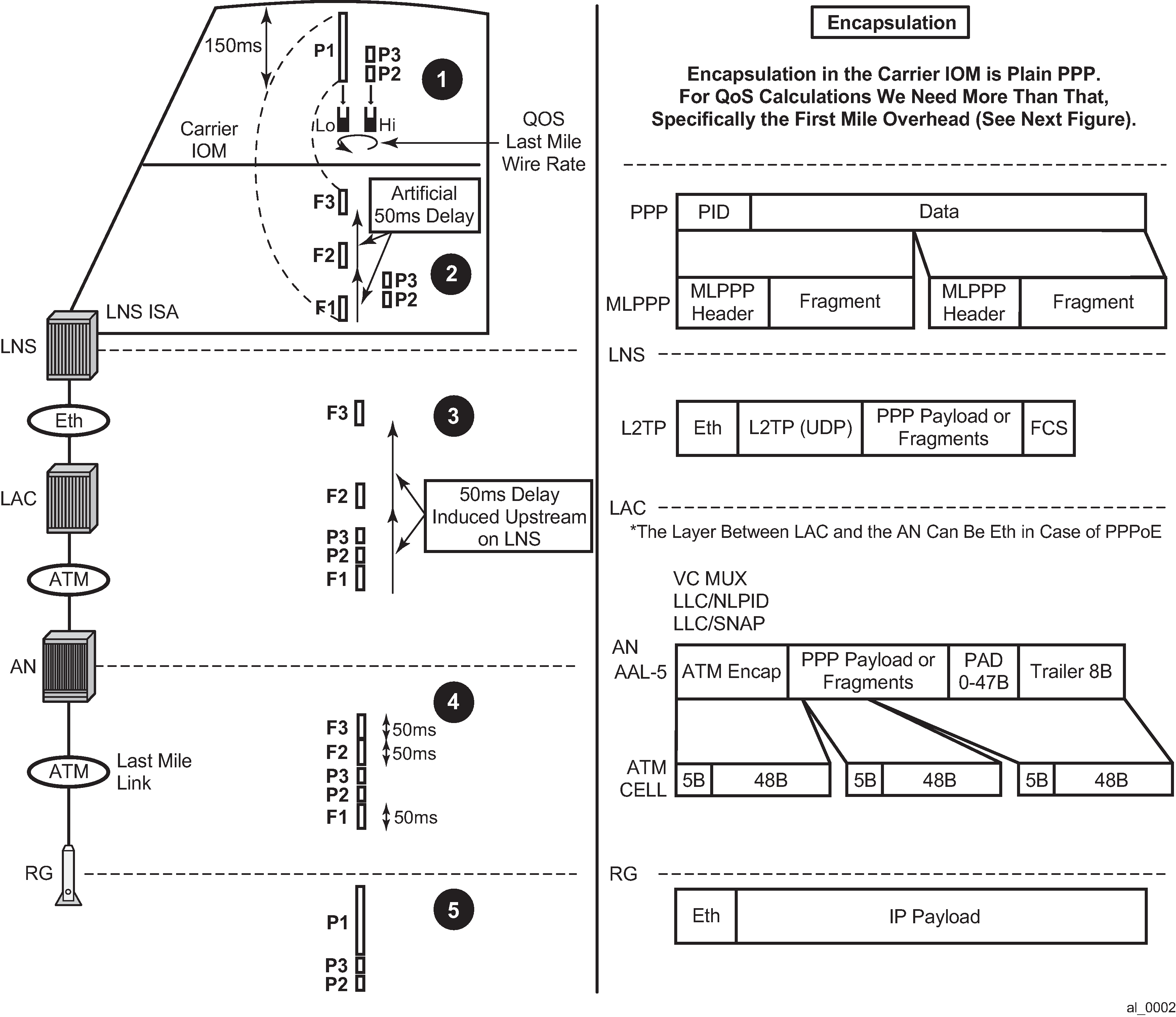

Executing subscriber QoS in the carrier IOM based on the last mile conditions. The subscriber QoS rates are the last mile on-the-wire rates. After traffic is QoS conditioned, it is sent to the BB-ISA for further processing.

Fragmentation and artificial delay (queuing) of the fragments so that high priority packets can be injected in-between low priority fragments (interleaved). This operation is performed by the BB-ISA.

The following example further clarifies the LFI functionality. The parameters, conditions, and requirements used in the example to describe the intended behavior are the following:

High priority packets must not be delayed for more than 50ms in the last mile because of the transmission delay of the large low priority packets. Considering that tolerated end-to-end VoIP delay must be under 150ms, limiting the transmission delay to 50ms on the last mile link is a reasonable option.

The link between the LNS and LAC is 1Gb/s Ethernet.

The last mile link rate is 256 kb/s.

Three packets arrive back-to-back on the network side of the LNS (in the downstream direction). The large 5000B low priority packet P1 arrives first, followed by two smaller high priority packets P2 and P3, each 100B.

Note: Packets P1, P2 and P3 can be originated by independent sources (PCs, servers, and so on) and therefore can theoretically arrive in the LNS from the network side back-to-back at the full network link rate (10Gb/s or 100Gb/s).

The transmission time on the internal 10G link between the BB-ISA and the carrier IOM for the large packet (5000B) is 4us while the transmission time for the small packet (100B) is 80ns.

The transmission time on the 1G link (LNS->LAC) for the large packet (5000B) is 40us while the transmission time for the small packet (100B) is 0.8us.

The transmission time in the last mile (256 kb/s) for the large packet is ~150ms while the transmission time for the small packet on the same link is ~3ms.

Last mile transport is ATM.

To satisfy the delay requirement for the high priority packets, the large packet is fragmented into three smaller fragments. The fragments are sized so that their individual transmission time in the last mile does not exceed 50ms. After the first 50ms interval, there is an opportunity to interleave the two smaller high priority packets.

This entire process is further clarified by the five points (1-5) in the packet route from the LNS to the Residential Gateway (RG) as depicted in Packet route from the LNS to the RG.

The five points are:

Figure 6. Packet route from the LNS to the RG

Last mile QoS awareness in the LNS

Implementing MLPPPoX in the LNS transfers the traffic treatment functions (QoS/LFI) of the last mile to a node (the LNS) that is multiple hops away.

The success of this operation depends on the accuracy at which the last mile conditions in the LNS can be simulated. The assumption is that the LNS is aware of the two most important parameters of the last mile:

The last mile encapsulation: This is needed for the accurate calculation of the overhead associated with the transport medium in the last mile for traffic shaping and interleaving.

The last mile link rate: This is crucial for the creation of artificial congestion and packet delay in the LNS.

The subscriber QoS in the LNS is implemented in the carrier IOM and is performed on a per-packet basis before the packet is handed over to the BB-ISA. Per-packet, instead of per-fragment, QoS processing ensures a more efficient utilization of network resources in the downstream direction. Discarding fragments in the LNS would have detrimental effects in the RG, because the RG would be unable to reconstruct a packet without all of its fragments.

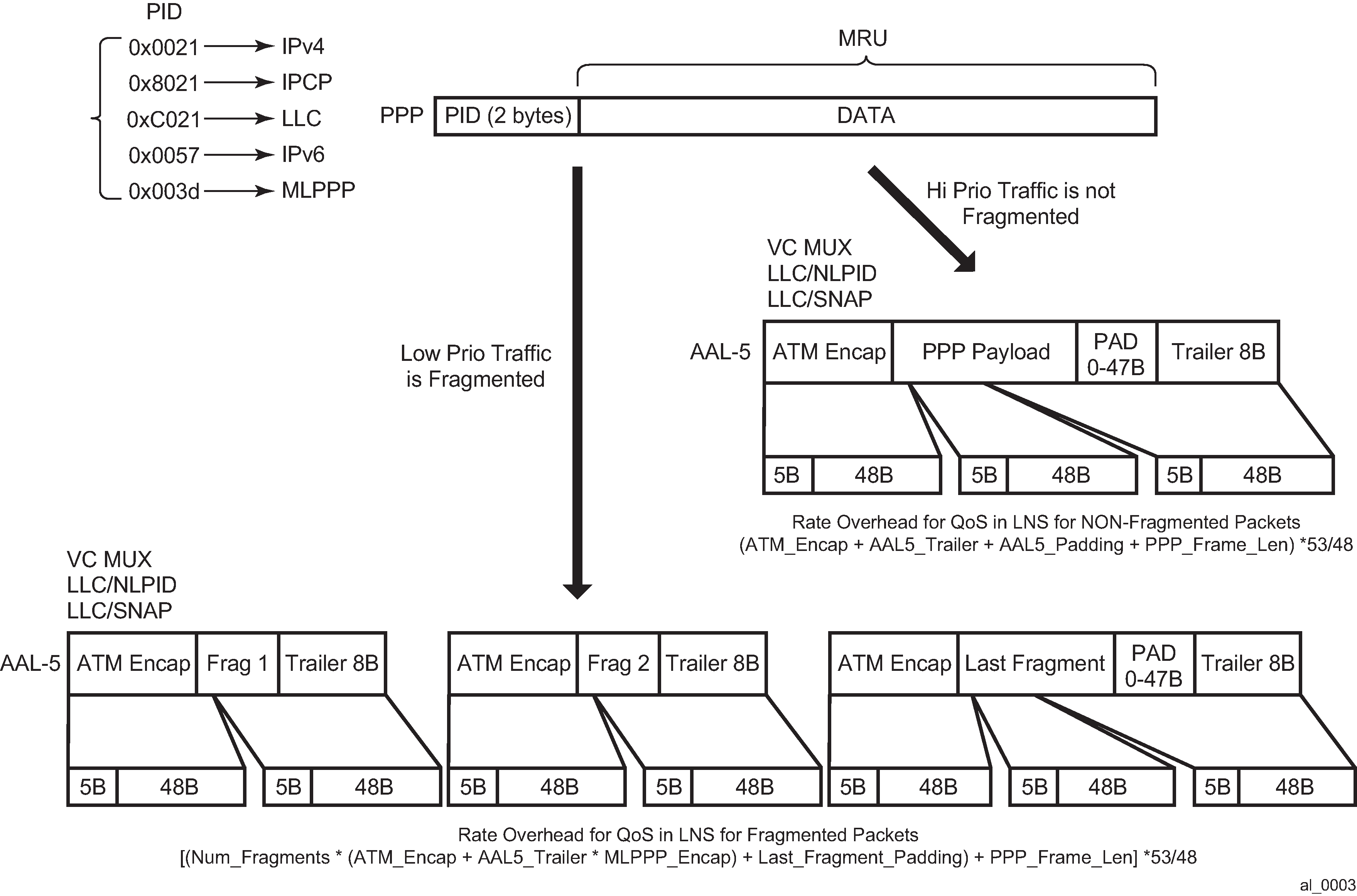

High priority traffic within the bundle is classified into the high priority queue. This type of traffic is not MLPPPoX encapsulated unless its packet size exceeds the link MTU as described in MLPPPoX fragmentation, MRRU and MRU considerations. Low priority traffic is classified into a low priority queue and is always MLPPPoX encapsulated. If the high priority traffic becomes MLPPPoX encapsulated or fragmented, the MLPPPoX processing module (BB-ISA) considers it as low priority. The assumption is that the high priority traffic is small in size and consequently MLPPPoX encapsulation or fragmentation and degradation in priority can be avoided. The aggregate rate of the MLPPPoX bundle is the on-the-wire rate of the last mile, as shown in Last mile encapsulation.

ATM on-the-wire overhead for non-MLPPPoX encapsulated high priority traffic includes:

ATM encapsulation (VC-MUX, LLC/NLPID, LLC/SNAP).

AAL5 trailer (8B).

AAL5 padding to 48B cell boundary (this makes the overhead dependent on the packet size).

Multiplication by 53/48 to account for the ATM cell headers.

For low priority traffic, which is always MLPPPoX encapsulated, an additional overhead related to MLPPPoX encapsulation and possibly fragmentation must be added. In other words, each fragment carries ATM+MLPPPoX overhead.
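The overhead components listed above can be combined into a per-packet (or per-fragment) on-the-wire size. The sketch below is an approximation for illustration: the function name is hypothetical, and the ATM encapsulation overhead (which differs between VC-MUX, LLC/NLPID, and LLC/SNAP) is passed in by the caller because its exact value is not given here. Padding to a whole number of 48B cell payloads followed by multiplication by 53/48 is equivalent to counting cells and multiplying by 53.

```python
def atm_wire_bytes(payload_len, encap_overhead, mlppp_overhead=0):
    """On-the-wire bytes for one AAL5 frame carried in ATM cells.

    payload_len:     bytes handed to the ATM encapsulation layer
    encap_overhead:  ATM encapsulation bytes (caller-supplied assumption)
    mlppp_overhead:  0 for non-MLPPPoX traffic, otherwise 4 or 6
    """
    AAL5_TRAILER = 8
    pdu = payload_len + mlppp_overhead + encap_overhead + AAL5_TRAILER
    cells = -(-pdu // 48)  # ceiling division: pad to the 48B cell boundary
    return cells * 53      # each cell adds a 5B header to 48B of payload
```

Because of the padding, the overhead depends on the packet size: a 40B payload with no encapsulation bytes fits exactly one cell (53B on the wire), while a 41B payload needs two cells (106B).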

For Ethernet in the last mile, the implementation ensures that the fragment size plus the encapsulation overhead is always greater than or equal to the minimum Ethernet packet length (64B).

BB-ISA processing

MLPPPoX encapsulation, fragmentation and interleaving are performed by the LNS in BB-ISA. According to the example, a large low priority packet (P1) is received by the BB-ISA, immediately followed by the two small high priority packets (P2 and P3). Because the requirement stipulates that there is no more than 50ms of transmission delay in the last mile (including on-the-wire overhead), the large packet must be fragmented into three smaller fragments each of which do not cause more than 50ms of transmission delay.

The BB-ISA would normally send packets or fragments to the carrier IOM at a rate of 10Gb/s. In other words, by default the three fragments of the low priority packet would be sent out of the BB-ISA back-to-back at this very high rate, before the high priority packets even arrive in the BB-ISA. To interleave, the BB-ISA must simulate the last mile conditions by delaying the transmission of the fragments. The fragments are paced out of the BB-ISA (and out of the box) at the rate of the last mile. High priority packets can be injected in front of the fragments while the fragments are being delayed.

In Packet route from the LNS to the RG (point 2), the first fragment F1 is sent out immediately (transmission delay at 10G is in the 1us range). The transmission of the next fragment F2 is delayed by 50ms. While the transmission of the second fragment F2 is being delayed, the two high priority packets (P2 and P3 in red) are received by the BB-ISA and are immediately transmitted ahead of fragments F2 and F3. This approach relies on the imperfection of the IOM shaper, which releases traffic in bursts (P2 and P3 right after F1). The burst size depends on the depth of the rate token bucket associated with the IOM shaper.
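The pacing behavior can be illustrated with a small timeline model. This is a sketch under stated assumptions, not the BB-ISA scheduler: the function name is hypothetical, fragments are released every 50ms starting at time 0, and the high priority packets are assumed to arrive 1ms and 2ms after the first fragment is sent (the text does not give exact arrival times).

```python
def interleave(fragments, frag_delay_ms, high_prio_arrivals):
    """Return (time_ms, name) tuples in the order they leave the BB-ISA.

    fragments:          fragment names, released one per frag_delay_ms
    high_prio_arrivals: (arrival_ms, name) pairs, sent as soon as they arrive
    """
    events = []
    for i, name in enumerate(fragments):
        # Rank 1: a paced fragment yields to a high priority packet on a tie.
        events.append((i * frag_delay_ms, 1, name))
    for arrival_ms, name in high_prio_arrivals:
        # Rank 0: high priority packets are never delayed in this model.
        events.append((arrival_ms, 0, name))
    return [(t, name) for t, _, name in sorted(events)]


# Assumed arrival times of 1ms and 2ms for P2 and P3.
timeline = interleave(["F1", "F2", "F3"], 50, [(1, "P2"), (2, "P3")])
order = [name for _, name in timeline]
```

With these inputs the resulting order is F1, P2, P3, F2, F3, which matches the sequence described for the LNS-LAC link.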

Although it is not central to this discussion, note that the LNS BB-ISA also adds the L2TP encapsulation to each packet or fragment. The L2TP encapsulation is removed in the LAC before the packet or fragment is transmitted toward the AN.

LNS-LAC link

This is the high rate link (1Gb/s) on which the first fragment F1 and the two consecutive high priority packets, P2 and P3, are sent back-to-back by the BB-ISA.

(BB-ISA->carrier IOM->egress IOM-> out-of-the-LNS).

The remaining fragments (F2 and F3) are still waiting in the BB-ISA to be transmitted. They are artificially delayed by 50ms each.

Additional QoS based on the L2TP header can be performed on the egress port in the LNS toward the LAC. This QoS is based on the classification fields inside the packet or fragment headers (DSCP, dot1p, EXP).

AN-RG link

Finally, this is the slow link of the last mile, the reason why LFI is performed in the first place. Assuming that LFI played its role in the network as designed, by the time the transmission of one fragment on this link is completed, the next fragment arrives just in time for unblocked transmission. In between the two fragments, there can be one or more small high priority packets waiting in the queue for the transmission to complete.

On the AN-RG link in Packet route from the LNS to the RG, packets P2 and P3 are ahead of fragments F2 and F3. Therefore the delay that low priority packets cause on this link is never greater than the transmission delay of the first fragment (50ms). The remaining two fragments, F2 and F3, can be queued and further delayed by the transmission time of packets P2 and P3 (which is normally small; in the example, 3ms each).

If many low priority packets are waiting in the queue, then they would have caused delay and would have further delayed the fragments that are in transit from the LNS to the LAC. This condition is normally caused by bursts and it should clear itself out over time.

Home link

High priority packets P2 and P3 are transmitted by the RG into the home network ahead of the packet P1 although the fragment F1 has arrived in the RG first. The reason for this is that the RG must wait for the fragments F2 and F3 before it can re-assemble packet P1.

Optimum fragment size calculation by LNS

Fragmentation in LFI is based on the optimal fragment size. The LNS implementation calculates two optimal fragment sizes, based on two different criteria:

Optimal fragment size based on the payload efficiency of the fragment, considering the fragmentation and transportation header overhead associated with the fragment (encapsulation-based fragment size).

Optimal fragment size based on the maximum transmission delay of the fragment set by configuration (delay-based fragment size).

At the end, only one optimal fragment size is selected. The actual fragment’s length is the optimal fragment size.

The parameters required to calculate the optimal fragment sizes are known to the LNS either through configuration or signaling. These parameters, known in advance, are:

Last mile maximum transmission delay (max-fragment-delay obtained by CLI)

Last mile ATM Encapsulation (in the example the last mile is ATM but in general it can be Ethernet for MLPPPoE)

MLPPP encapsulation length (depending on the fragment sequence number format)

The last mile on-the-wire rate for the MLPPPoX bundle

The following sections examine each of the two optimal fragment sizes more closely.

Encapsulation-based fragment size

Be mindful that fragmentation may cause low link utilization. In other words, during fragmentation a node may end up transporting mainly overhead bytes in the fragment as opposed to payload bytes. This would only intensify the problem that fragmentation is intended to solve, especially on ATM access links, which tend to carry larger encapsulation overhead.

To reduce the overhead associated with fragmentation, the following is enforced in the 7750 SR:

The minimum fragment payload size is at least 10 times greater than the overhead (MLPPP header, ATM Encapsulation and AAL5 trailer) associated with the fragment.

The optimal fragment length (including the MLPPP header, the ATM Encapsulation and the AAL5 trailer) is a multiple of 48B. Otherwise, the AAL5 layer would add an additional 48B boundary padding to each fragment which would unnecessarily expand the overhead associated with fragmentation. By aligning all-but-last fragments to a 48B boundary, only the last fragment potentially contains the AAL5 48B boundary padding which is no different from a non-fragmented packet. All fragments, except for the last fragment, are referred to as non-padded fragments. The last fragment is padded if it is not already natively aligned to a 48B boundary.

As an example, calculate the optimal fragment size based on the encapsulation criteria with a maximum fragment overhead of 22B. To achieve >10x transmission efficiency, the fragment payload size must be at least 220B (10*22B). To avoid the AAL5 padding, the entire fragment (overhead + payload = 242B) is rounded up to the next 48B boundary. The final fragment size is 288B [22B + 22B*10 + 48B_alignment].

In conclusion, an optimal fragment size was selected that carries the payload with at least 90% efficiency. The last fragment of the packet cannot be artificially aligned on a 48B boundary (it is a natural remainder), so it is padded by the AAL5 layer. Therefore, the efficiency of the last fragment is less than 90% in the example. In the extreme case, the efficiency of this last fragment may be only 2%.
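The arithmetic of this example can be reproduced with a few lines of code. This is a minimal sketch of the two rules as stated (payload at least 10 times the overhead, total length rounded up to a 48B multiple). The function name is hypothetical, and the worst-case figure at the end is one plausible reading of the 2% claim, assuming a last fragment with a single payload byte.

```python
CELL_PAYLOAD = 48  # AAL5 pads every PDU to a multiple of 48 bytes


def encap_based_fragment_size(overhead, efficiency_factor=10):
    """Return the smallest 48B-aligned fragment length whose payload is
    at least efficiency_factor times the per-fragment overhead."""
    min_payload = overhead * efficiency_factor
    unaligned = overhead + min_payload
    cells = -(-unaligned // CELL_PAYLOAD)   # round up to the next boundary
    return cells * CELL_PAYLOAD


OVERHEAD = 22                                   # bytes, from the example
size = encap_based_fragment_size(OVERHEAD)      # 22 + 220 = 242, aligned up
efficiency = (size - OVERHEAD) / size           # payload share of a full fragment

# Assumed worst case: last fragment with 1 payload byte, padded to one cell.
worst_case = 1 / (-(-(OVERHEAD + 1) // CELL_PAYLOAD) * CELL_PAYLOAD)
```

For the 22B overhead, the sketch yields a 288B fragment with roughly 92% payload efficiency, and about 2% efficiency for the assumed worst-case last fragment.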

For the Ethernet-based last mile, the CPM always makes sure that the fragment size plus encapsulation overhead is larger than or equal to the minimum Ethernet packet length of 64B.

Fragment size based on the maximum transmission delay

The first criterion in selecting the optimal fragment size based on the maximum transmission delay mandates that the transmission time for the fragment, including all overheads (MLPPP header, ATM encapsulation header, AAL5 overhead and ATM cell overhead) must be less than the configured max-fragment-delay time.

The second criterion mandates that each fragment, including the MLPPP header, the ATM encapsulation header, the AAL5 trailer and the ATM cellification overhead be a multiple of 48B. The fragment size is rounded down to the nearest 48B boundary during the calculations to minimize the transmission delay. Aligning the fragment on the 48B boundary eliminates the AAL5 padding and therefore reduces the overhead associated with the fragment. The overhead reduction improves the transmission time and also increases the efficiency of the fragment.

Using these two criteria along with the configuration parameters (ATM Encapsulation, MLPPP header length, max-fragment-delay time, rate in the last mile), the implementation calculates the optimal non-padded fragment length as well as the transmission time for this optimal fragment length.
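One way to picture this calculation is shown below. It is a sketch under stated assumptions rather than the actual algorithm: the function name is hypothetical, the byte budget is converted to whole 53B cells and then to a 48B-aligned length, and a fragment whose transmission time exactly equals the configured delay is treated as acceptable. The 1Mb/s rate in the example is illustrative only.

```python
CELL_PAYLOAD = 48
CELL_SIZE = 53


def delay_based_fragment_size(rate_bps, max_delay_ms):
    """Return (fragment_len, tx_time_ms) for the largest 48B-aligned fragment
    that fits in max_delay_ms at rate_bps.

    fragment_len includes the MLPPP header, ATM encapsulation and AAL5
    trailer; the 5-byte cell headers are accounted for in the cell count.
    A result of 0 means not even one cell fits within the delay budget.
    """
    budget_bytes = rate_bps * max_delay_ms // 8000   # bits/s * ms -> bytes
    cells = budget_bytes // CELL_SIZE                # round down to whole cells
    fragment_len = cells * CELL_PAYLOAD
    tx_time_ms = cells * CELL_SIZE * 8000 / rate_bps
    return fragment_len, tx_time_ms


# Illustrative values: 1Mb/s last mile rate, 50ms max-fragment-delay.
frag_len, tx_ms = delay_based_fragment_size(1_000_000, 50)
```

With these illustrative inputs, 117 cells fit in the 50ms budget, giving a 5616B fragment that takes about 49.6ms to transmit.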

Selection of the optimum fragment length

So far, the implementation has calculated the two optimum fragment lengths, one based on the length of the MLPPP/transport encapsulation overhead of the fragment, the other one based on the maximum transmission delay of the fragment. Both of them are aligned on a 48B boundary. The larger of the two is chosen and the BB-ISA performs LFI based on this selected optimal fragment length.
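The selection rule itself is simple enough to state in code. The sketch below uses a hypothetical function name and illustrative candidate values; only the "larger of the two" rule and the 48B alignment come from the text.

```python
CELL_PAYLOAD = 48


def select_fragment_length(encap_based, delay_based):
    """Pick the larger of the two 48B-aligned candidate fragment lengths."""
    for length in (encap_based, delay_based):
        if length % CELL_PAYLOAD:
            raise ValueError("candidate lengths must be 48B aligned")
    return max(encap_based, delay_based)


fast_link = select_fragment_length(288, 5616)   # delay-based candidate wins
slow_link = select_fragment_length(288, 48)     # encapsulation-based candidate wins
```

If this reading of the rule is correct, on a very slow link the encapsulation-based length acts as a floor, which suggests that a fragment may then take longer to transmit than max-fragment-delay.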

Upstream traffic considerations

Fragmentation and interleaving are implemented on the originating end of the traffic. In other words, in the upstream direction the CPE (or RG) fragments and interleaves traffic. There is no interleaving or fragmentation processing in the upstream direction in the 7750 SR. The 7750 SR is on the receiving end and is only concerned with the reassembly of the fragments arriving from the CPE. Fragments are buffered until the packet can be reconstructed. If not all fragments of a packet are received within a preconfigured time frame, the received fragments of the partial packet are discarded (a packet cannot be reconstructed without all of its fragments). This time-out and discard is necessary to prevent buffer starvation in the BB-ISA. Two values for the time-out can be configured: 100ms and 1s.
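The buffering and time-out behavior can be sketched as follows. This is an illustrative model only, not the BB-ISA implementation. The class and method names are hypothetical. It assumes each fragment carries a sequence number plus begin and end flags (as in the MLPPP fragment header), ignores sequence number wrap, and ages out fragments individually rather than per packet.

```python
class Reassembler:
    """Buffer MLPPP fragments and rebuild packets, discarding stale fragments."""

    def __init__(self, timeout_s=0.1):       # 100ms, one of the two values above
        self.timeout_s = timeout_s
        self.frags = {}                       # seq -> (arrival, begin, end, payload)

    def expire(self, now):
        """Drop fragments older than the time-out; return how many were dropped."""
        stale = [s for s, f in self.frags.items() if now - f[0] > self.timeout_s]
        for seq in stale:
            del self.frags[seq]
        return len(stale)

    def add(self, seq, begin, end, payload, now):
        """Store one fragment; return the full packet once it is complete."""
        self.expire(now)
        self.frags[seq] = (now, begin, end, payload)
        first = seq
        while not self.frags[first][1]:       # walk back to the begin fragment
            first -= 1
            if first not in self.frags:
                return None
        last = seq
        while not self.frags[last][2]:        # walk forward to the end fragment
            last += 1
            if last not in self.frags:
                return None
        return b"".join(self.frags.pop(s)[3] for s in range(first, last + 1))


r = Reassembler(timeout_s=0.1)
r.add(0, True, False, b"ab", now=0.00)
r.add(2, False, True, b"ef", now=0.01)        # arrives out of order
packet = r.add(1, False, False, b"cd", now=0.02)
```

In the usage above the packet is only returned when the missing middle fragment arrives. If it had not arrived within the time-out, the buffered fragments would have been dropped by `expire`.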

Multiple links MLPPPoX with no interleaving

Interleaving over MLPPPoX bundles with multiple links is not supported. However, fragmentation is supported.

To preserve packet order, all packets on an MLPPPoX bundle with multiple links are MLPPPoX encapsulated (monotonically increasing sequence numbers).

Multiclass MLPPP (RFC 2686, The Multi-Class Extension to Multi-Link PPP) is not supported.

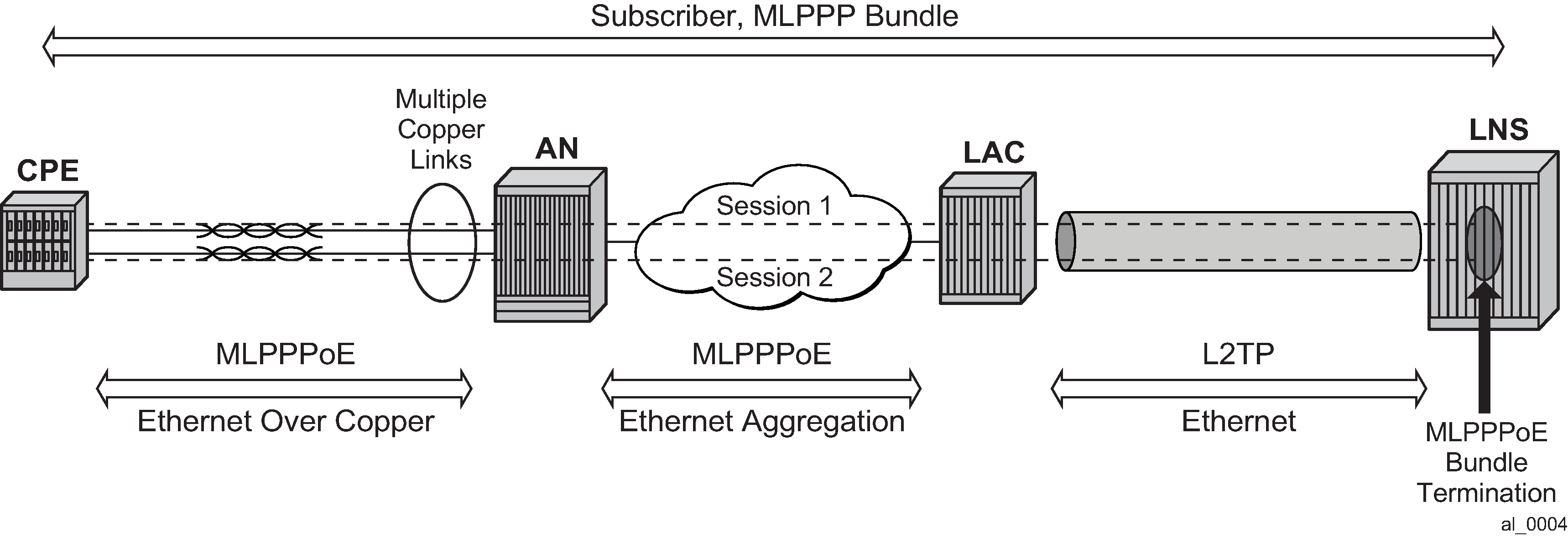

MLPPPoX session support

The following session types in the last mile are supported:

MLPPPoE

Single physical link or multilink. The last mile encapsulation is Ethernet over copper (this could be Ethernet over VDSL or HSDSL). The access rates (especially upstream) are still limited by the xDSL distance limitation, and therefore interleaving is required on a slow speed single link in the last mile. It is possible that the last mile encapsulation is Ethernet over fiber (FTTH), but in this case users would not be concerned with the link speed to the point where interleaving and link aggregation are required.

As shown in MLPPPoE — multiple physical links, packets P2 and P3 are ahead of fragments F2 and F3 on the AN-RG link. Therefore the delay that low priority packets cause on this link is never greater than the transmission delay of the first fragment (50ms). The remaining two fragments, F2 and F3, can be queued and further delayed by the transmission time of packets P2 and P3 (which is normally small; in the example, 3ms each).

MLPPP(oE)oA

Single physical link or multilink. The last mile encapsulation is ATM over xDSL.

Figure 10. MLPPP(oE)oA — multiple physical links

Figure 11. MLPPP(oE)oA — single physical link

Some other combinations are also possible (ATM in the last mile, Ethernet in the aggregation) but they all come down to one of the above models that are characterized by:

Ethernet or ATM in the last mile.

Ethernet or ATM access on the LAC.

MLPPP/PPPoE termination on the LNS.

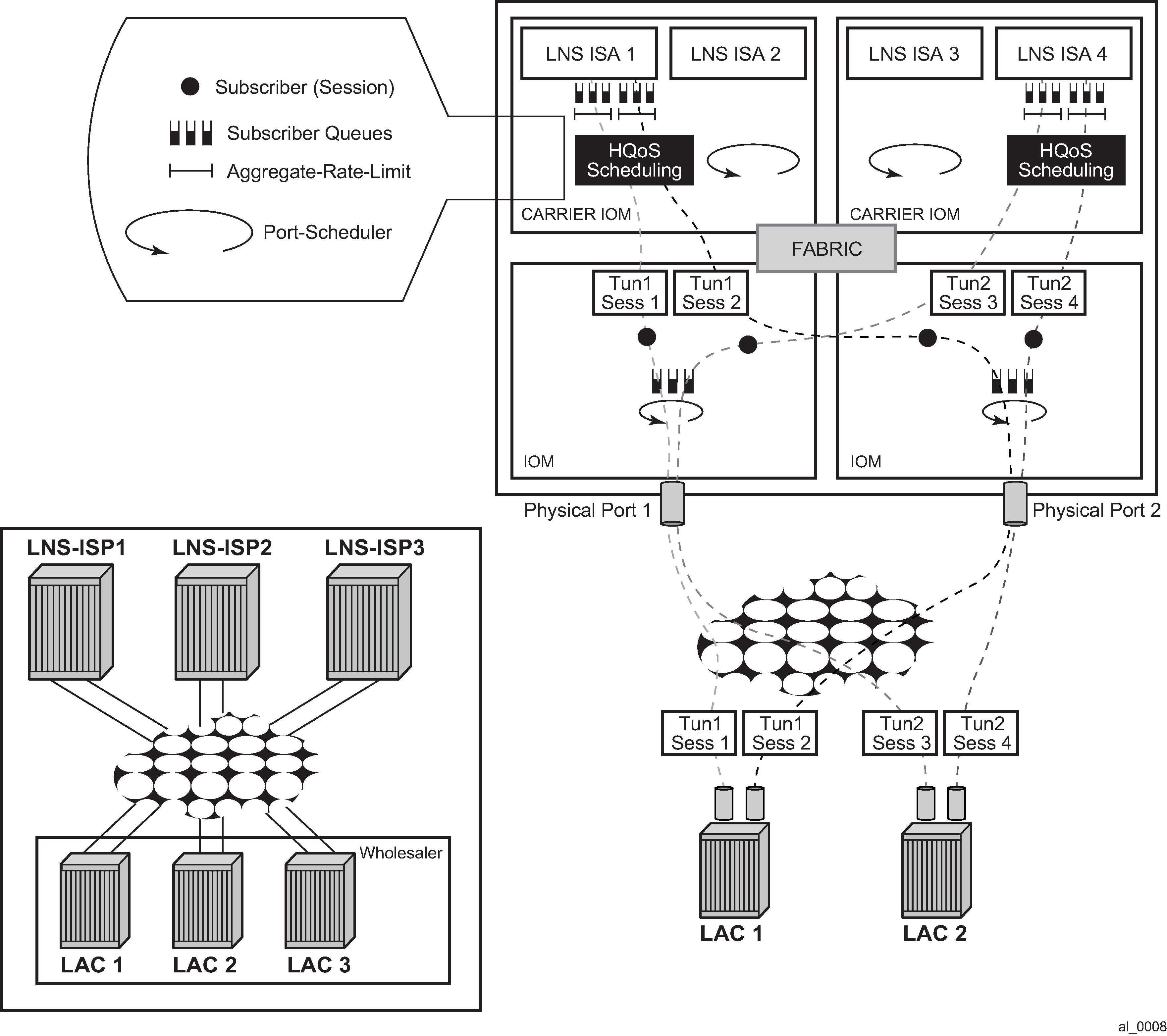

Session load balancing across multiple BB-ISAs

PPP/PPPoE sessions are by default load balanced across multiple BB-ISAs (max 6) in the same group. The load balancing algorithm considers the number of active sessions on each BB-ISA in the same group. The load balancing algorithm does not consider the number of queues consumed on the carrier IOM. Therefore, a session can be refused if queues are depleted on the carrier IOM even though the BB-ISA may be lightly loaded in terms of the number of sessions that it is hosting.

With MLPPPoX, it is important that multiple sessions per bundle be terminated on the same LNS BB-ISA. This can be achieved by per tunnel load balancing mode where all sessions of a tunnel are terminated in the same BB-ISA. Per tunnel load balancing mode is mandatory on LNS BB-ISAs that are in the group that supports MLPPPoX.

On the LAC side, all sessions in an MLPPPoX bundle are automatically assigned to the same tunnel. In other words, an MLPPPoX bundle is assigned to a tunnel. Multiple tunnels can be created between the same pair of LAC/LNS nodes.
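The difference between the default per-session mode and the per-tunnel mode can be sketched as follows. This is an illustrative model with hypothetical class and method names. The text states only that the default algorithm considers the active session count and that per-tunnel mode keeps all sessions of a tunnel on one BB-ISA. How the BB-ISA is chosen for a new tunnel is an assumption here (least loaded at the time of the first session).

```python
class IsaGroup:
    """Place L2TP sessions on the BB-ISAs of one group."""

    def __init__(self, isa_ids, per_tunnel=False):
        self.sessions = {isa: 0 for isa in isa_ids}   # active sessions per BB-ISA
        self.per_tunnel = per_tunnel
        self.tunnel_to_isa = {}                       # used in per-tunnel mode only

    def _least_loaded(self):
        # Ties are broken by the lowest BB-ISA id (an arbitrary choice).
        return min(self.sessions, key=lambda isa: (self.sessions[isa], isa))

    def place(self, tunnel_id):
        """Return the BB-ISA that terminates a new session of tunnel_id."""
        if self.per_tunnel:
            # Assumption: the tunnel is pinned when its first session arrives.
            if tunnel_id not in self.tunnel_to_isa:
                self.tunnel_to_isa[tunnel_id] = self._least_loaded()
            isa = self.tunnel_to_isa[tunnel_id]
        else:
            isa = self._least_loaded()
        self.sessions[isa] += 1
        return isa


per_session = IsaGroup([1, 2])
spread = [per_session.place("t1") for _ in range(3)]

pinned = IsaGroup([1, 2], per_tunnel=True)
same_isa = [pinned.place("t1"), pinned.place("t1"), pinned.place("t2"), pinned.place("t1")]
```

In per-session mode the sessions of one tunnel are spread across BB-ISAs, which would split the links of an MLPPPoX bundle. In per-tunnel mode they all land on the same BB-ISA, so the bundle can be processed in one place.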

BB-ISA hashing considerations