Hardware-assisted hierarchical QoS

Hardware-assisted HQoS is an alternative to traditional HQoS, which is described in Scheduler QoS policies.

This model improves reaction time and provides more accurate control of downstream bursts for aggregate rate enforcement. It is based on:

- hardware aggregate shapers (hw-agg-shaper)

- additional hardware scheduling priorities (six scheduling classes)

- a fair bandwidth-distribution algorithm

- a software-based algorithm for enforcing HQoS at the Vport level

Hardware aggregate shapers

Hardware-assisted HQoS is based on hardware aggregate shapers, which enforce aggregate rates for a group of queues in hardware. On FP4-based and later chipsets, hardware aggregate shapers are available at egress only.

Hardware aggregate shapers are controlled by four parameters:

- rate: sets the aggregate rate of the hardware shaper

- burst-limit: sets the aggregate burst for the specific hardware aggregate shaper

- adaptation-rule: sets how the administrative rates are translated into hardware operational values

- queue-set size: sets the number of queues allocated as a block to the hardware aggregate shaper
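As a rough mental model only, a shaper defined by a rate and a burst limit behaves like a token bucket: tokens accumulate at the configured rate up to the burst limit, and a frame may leave only when enough tokens are available. The Python sketch below is a generic token-bucket model, not a description of how the FP4 hardware implements hw-agg-shaper; the class name, the bits-per-second and byte units, and the `try_send` helper are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucketShaper:
    """Generic token-bucket model of an aggregate shaper (illustrative only).

    rate_bps:    sustained rate in bits per second (stands in for `rate`)
    burst_bytes: bucket depth in bytes (stands in for `burst-limit`)
    """
    rate_bps: float
    burst_bytes: float
    tokens: float = field(init=False, default=0.0)
    last_time: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        # Start with a full bucket so an idle shaper can absorb one burst.
        self.tokens = self.burst_bytes

    def try_send(self, now: float, frame_bytes: int) -> bool:
        """Return True if a frame of `frame_bytes` conforms at time `now` (s)."""
        elapsed = now - self.last_time
        self.last_time = now
        # Refill at rate/8 bytes per second, never beyond the burst limit.
        self.tokens = min(self.burst_bytes,
                          self.tokens + elapsed * self.rate_bps / 8)
        if self.tokens >= frame_bytes:
            self.tokens -= frame_bytes
            return True
        return False


shaper = TokenBucketShaper(rate_bps=8000, burst_bytes=1500)
print(shaper.try_send(0.0, 1500))  # True: the full bucket covers the burst
print(shaper.try_send(0.0, 1))     # False: bucket is empty
print(shaper.try_send(1.0, 1000))  # True: one second refills 1000 bytes
```

In a real shaper a nonconforming frame is held in its queue and sent later rather than dropped; the sketch only answers whether a frame conforms at a given instant.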

Traffic is scheduled from the queues in strict priority order, based on the hardware scheduling priority assigned to each queue. This priority is set with the sched-class command, and six scheduling classes are available.
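The strict priority rule can be sketched as follows. This assumes that a higher sched-class number means a higher priority, which matches the later example where sched-class 5 is served ahead of sched-class 3; the function name and the use of a single offered-load figure per class are hypothetical simplifications.

```python
def strict_priority_allocation(agg_rate: float,
                               offered: dict[int, float]) -> dict[int, float]:
    """Split an aggregate rate across scheduling classes in strict priority.

    `offered` maps a scheduling class to the load offered by the queues in
    that class. Higher class numbers are served first; each class gets what
    it asks for until the aggregate rate is used up, and lower classes get
    whatever is left.
    """
    remaining = agg_rate
    allocated: dict[int, float] = {}
    for sched_class in sorted(offered, reverse=True):
        grant = min(offered[sched_class], remaining)
        allocated[sched_class] = grant
        remaining -= grant
    return allocated


# 100 units of aggregate rate; class 5 offers 50, class 3 offers 80.
print(strict_priority_allocation(100.0, {3: 80.0, 5: 50.0}))  # prints {5: 50.0, 3: 50.0}
```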

Hardware aggregate shapers do not need software-based HQoS to modify the oper-PIR of the queues to enforce an aggregate rate. This results in a significantly faster reaction time compared to traditional HQoS based on virtual schedulers.

The following examples show the configuration of a hardware aggregate shaper on a subscriber profile. The configuration is similar to traditional QoS configuration.

MD-CLI

[ex:/configure subscriber-mgmt]
A:admin@node-2# info
    sub-profile "trialSub" {
        egress {
            qos {
                agg-rate {
                    rate 1500000
                    burst-limit auto
                }
            }
        }
    }
    sla-profile "trialSLA" {
        egress {
            qos {
                sap-egress {
                    policy-name "trial-egr"
                }
            }
        }
        ingress {
            qos {
                sap-ingress {
                    policy-name "trial-ingr"
                }
            }
        }
    }

Classic CLI

*A:node-2>config>subscr-mgmt# info
----------------------------------------------
        sla-profile "trialSLA" create
            ingress
                qos 2
                exit
            exit
            egress
                qos 2
                exit
            exit
        exit
        sub-profile "trialSub" create
            egress
                agg-rate-limit 1500000
            exit
        exit
----------------------------------------------

Fair bandwidth-distribution algorithm

Hardware-assisted HQoS introduces a new way to implement queue-weight-based bandwidth distribution.

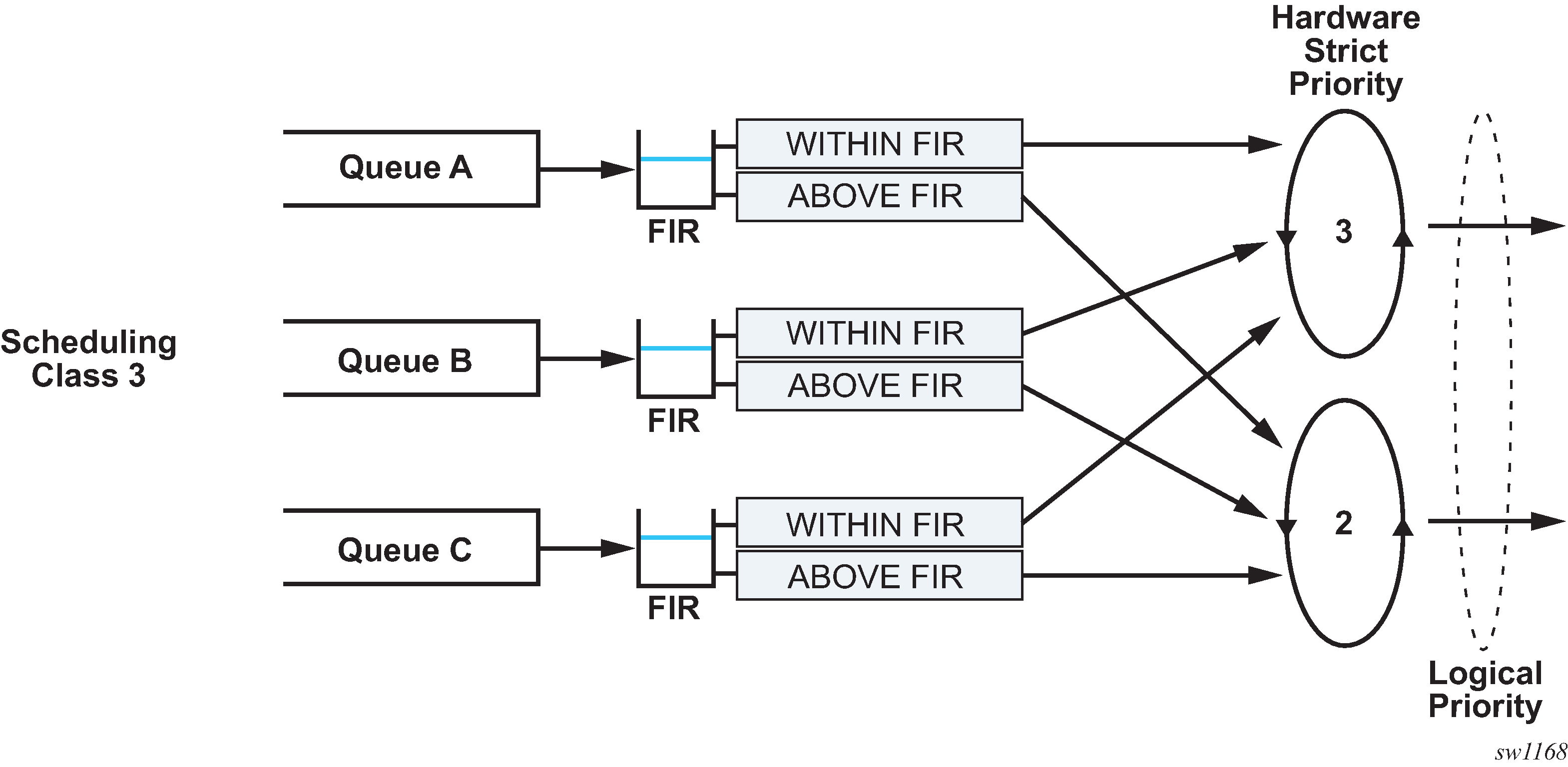

The hardware-enforced queue FIR is used to provide weighted fair access between queues assigned to the same hardware scheduling priority (scheduling class) within a hardware aggregate shaper. This is done by dynamically changing each queue's hardware scheduling priority, as shown in Hardware FIR enforcement for dynamic fairness control.

Every queue in a hardware aggregate shaper is described by the following parameters:

- scheduling class: sets the assignment to hardware priorities

- aggregate shaper weight: sets the queue's fair share of bandwidth at the specific scheduling-class level

- burst limit: sets the individual queue burst limit at PIR

- FIR burst limit: sets the individual queue burst limit at FIR

The aggregate shaper weight algorithm operates similarly to the virtual-scheduler HQoS algorithm, meaning that the offered load of the queues at the same scheduling class is weighted against the aggregate shaper weight to determine the FIR, which then controls hardware-based scheduling.
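One way to read "offered load ... weighted against the aggregate shaper weight" is weighted max-min fairness (water-filling): each queue gets bandwidth in proportion to its weight, but never more than it offers, and any unused share is redistributed to the remaining queues. The sketch below computes such per-queue shares for one scheduling class. It is an assumed model of the FIR calculation, not Nokia's published algorithm, and `weighted_fair_share` is a hypothetical name.

```python
def weighted_fair_share(capacity: float, offered: dict[str, float],
                        weights: dict[str, float]) -> dict[str, float]:
    """Weighted max-min fair split of `capacity` among queues.

    A queue never gets more than its offered load; bandwidth it leaves
    unused is redistributed to the other queues in proportion to weight.
    """
    share = {q: 0.0 for q in offered}
    active = {q for q in offered if offered[q] > 0 and weights[q] > 0}
    remaining = capacity
    while active and remaining > 1e-9:
        total_weight = sum(weights[q] for q in active)
        # Tentative grant for every still-hungry queue in this round.
        grant = {q: remaining * weights[q] / total_weight for q in active}
        satisfied = {q for q in active if share[q] + grant[q] >= offered[q]}
        if not satisfied:
            # Every active queue can absorb its full grant: we are done.
            for q in active:
                share[q] += grant[q]
            break
        # Cap satisfied queues at their offered load and recycle the rest.
        for q in satisfied:
            remaining -= offered[q] - share[q]
            share[q] = offered[q]
        active -= satisfied
    return share


# Queue 2 only needs 30, so queue 1 inherits the bandwidth it leaves unused.
print(weighted_fair_share(100.0, {"queue 1": 100.0, "queue 2": 30.0},
                          {"queue 1": 5, "queue 2": 15}))
# prints {'queue 1': 70.0, 'queue 2': 30.0}
```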

An FIR-based algorithm is more efficient and more responsive to dynamic changes in offered load. Because the PIR is not adjusted, aggregate-rate underruns are avoided when the incoming load of some queues changes suddenly.

When hardware aggregate shapers are enabled, the queue CIR does not influence the bandwidth allocation algorithm as it does in traditional HQoS. The CIR is still used to calculate the default CBS. The queue-frame-based-accounting setting is also ignored when hardware aggregate shaping is configured.

The following examples show a SAP egress policy configuration. In this example, weight-based bandwidth distribution applies only between queue 1 and queue 2. Both queues are assigned to the same scheduling class (sched-class 3), while queue 3 is assigned to a higher-priority scheduling class (sched-class 5) and is served with strict priority.

MD-CLI

*[ex:/configure qos]
A:admin@node-2# info
    sap-egress "trial-egr" {
        queue 1 {
            agg-shaper-weight 5
            burst-limit 500
            mbs 102400
            sched-class 3
        }
        queue 2 {
            agg-shaper-weight 15
            burst-limit 500
            mbs 102400
            sched-class 3
        }
        queue 3 {
            burst-limit 500
            mbs 102400
            sched-class 5
        }
        fc be {
            queue 1
        }
        fc af {
            queue 2
        }
        fc ef {
            queue 3
        }
    }

Classic CLI

*A:node-2>config>qos>sap-egress# info
----------------------------------------------
            queue 1 create
                mbs 100 kilobytes
                burst-limit 500 bytes
                agg-shaper-weight 5
                sched-class 3
            exit
            queue 2 create
                mbs 100 kilobytes
                burst-limit 500 bytes
                agg-shaper-weight 15
                sched-class 3
            exit
            queue 3 create
                mbs 100 kilobytes
                burst-limit 500 bytes
                sched-class 5
            exit
            fc af create
                queue 2
            exit
            fc be create
                queue 1
            exit
            fc ef create
                queue 3
            exit
----------------------------------------------


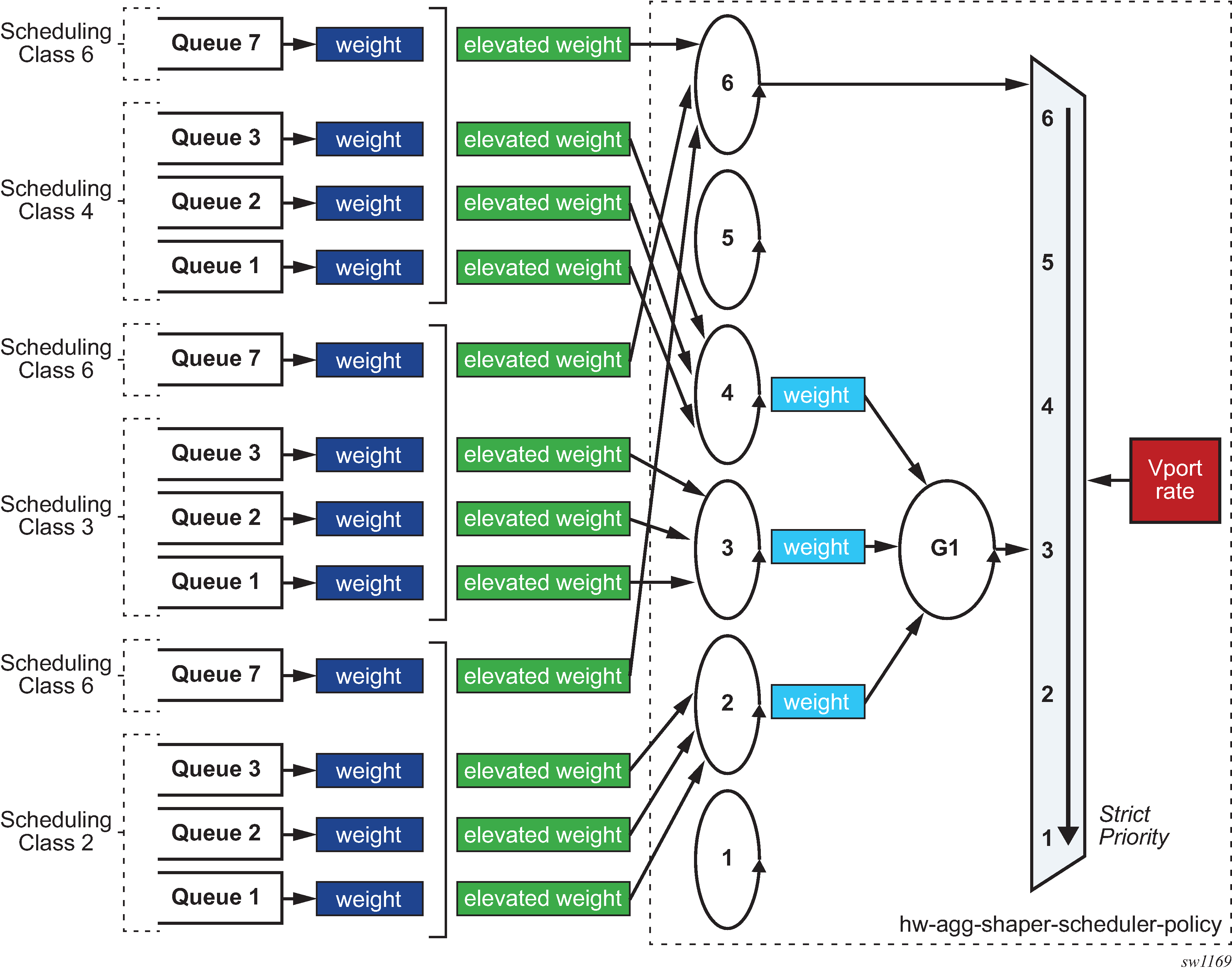

Hardware aggregate shaper scheduler policy

A hardware aggregate shaper scheduler policy is an alternative to a port scheduler. It can be assigned at the Vport level or at the port level, for subscribers or SAPs.

A virtual Vport scheduler is added to implement a bandwidth-distribution software algorithm to handle traffic oversubscription by subscriber or SAP hardware aggregate shapers at a Vport.

The Vport represents a downstream bandwidth constraint and, therefore, the algorithm enforces a maximum rate, which is an Ethernet on-the-wire rate. This algorithm also implements the logic to distribute the available bandwidth at the Vport to its child hardware aggregate shapers.

Similarly, at the port level, the scheduler enforces bandwidth distribution between the SAPs attached to the hardware aggregate shaper scheduler policy assigned to that port. For the bandwidth-distribution algorithm to operate effectively, all SAPs attached to it must have an explicit aggregate rate defined.

The algorithm performs a function similar to that of the existing H-QoS port scheduler; however, instead of modifying the oper-PIRs of queues, policers, or schedulers, it modifies the oper-PIRs of the hardware aggregate shapers connected to it and, if required, triggers recalculation of the individual queues' FIRs.
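A simplified view of the Vport pass might look like the following sketch. It assumes that each hardware aggregate shaper is summarized by an admin rate, an offered load, and a weight, that an uncongested Vport leaves every shaper at its admin rate, and that a congested Vport is shared by weighted water-filling. The function and tuple layout are hypothetical; a real implementation would also need headroom so that an idle shaper can ramp up, which this sketch omits.

```python
def vport_oper_pirs(vport_rate: float,
                    shapers: dict[str, tuple[float, float, float]]) -> dict[str, float]:
    """Hypothetical Vport pass: compute an oper-PIR per hw-agg-shaper.

    shapers maps a name to (admin_rate, offered_load, weight).
    """
    # A shaper never needs more than its admin rate or its offered load.
    demand = {n: min(admin, load) for n, (admin, load, _) in shapers.items()}
    if sum(demand.values()) <= vport_rate:
        # No oversubscription: leave every shaper at its admin rate.
        return {n: admin for n, (admin, _, _) in shapers.items()}
    oper = {n: 0.0 for n in shapers}
    active = {n for n in shapers if demand[n] > 0 and shapers[n][2] > 0}
    remaining = vport_rate
    while active and remaining > 1e-9:
        total = sum(shapers[n][2] for n in active)
        grant = {n: remaining * shapers[n][2] / total for n in active}
        capped = {n for n in active if oper[n] + grant[n] >= demand[n]}
        if not capped:
            for n in active:
                oper[n] += grant[n]
            break
        # Shapers whose demand is met keep only what they need.
        for n in capped:
            remaining -= demand[n] - oper[n]
            oper[n] = demand[n]
        active -= capped
    return oper


# Two subscribers with equal weight behind a congested 500-unit Vport.
print(vport_oper_pirs(500.0, {"sub-a": (600.0, 800.0, 1.0),
                              "sub-b": (600.0, 100.0, 1.0)}))
```

Here sub-b needs only 100, so sub-a is given the remaining 400 rather than an even 250.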

Vport or port bandwidth distribution shows the functionality of a hardware aggregate shaper scheduler, including all parameters which the user can set. This architecture allows the user full flexibility to define SLA models.

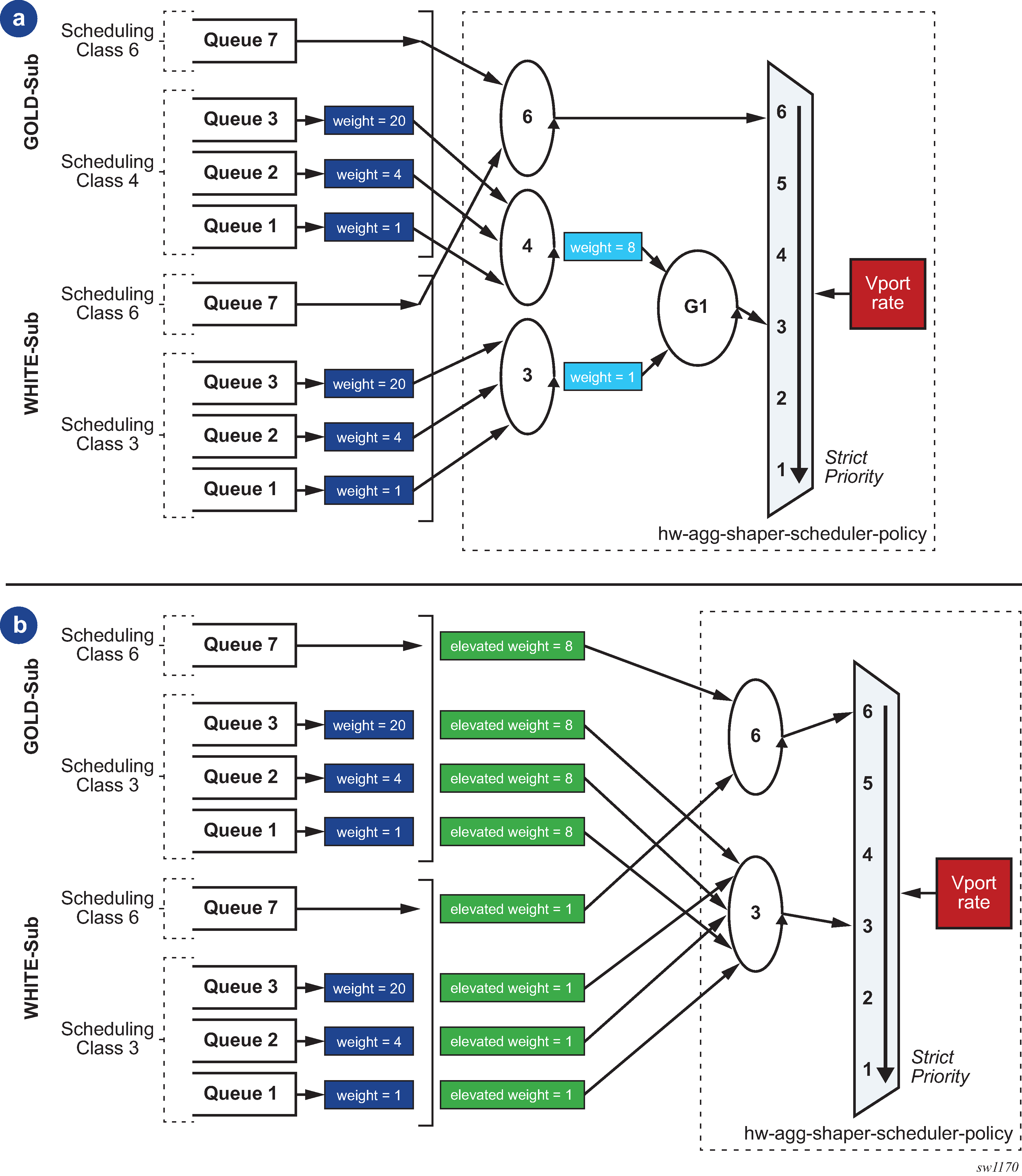

The generic design in Vport or port bandwidth distribution can be mapped into two SLA models, as shown in SLA models, using subscriber management as an example. Similar examples can be constructed using SAPs.

Both SLA models show two subscriber categories for simplicity, but this model can be extended to any number of subscriber categories.

SLA model (a) in SLA models distributes the overall Vport bandwidth between the gold and white categories in a ratio of 8:1, regardless of the number of subscribers in either category. It assumes that the traffic passing through queue 7 for both subscriber categories is delay-critical but not significant in volume.

SLA model (b) in SLA models guarantees that the ratio between any gold and any white subscriber is 8:1. However, the overall bandwidth distribution between the gold and white categories depends on the number of subscribers in each category.
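The difference between the two models is easiest to see with numbers. The sketch below assumes every subscriber is saturated, ignores the low-volume queue 7 traffic, and treats all subscribers in a category as identical; the function names and the 900-unit Vport rate are illustrative only.

```python
def model_a(vport_rate: float, n_gold: int, n_white: int,
            gold_weight: float = 8, white_weight: float = 1) -> tuple[float, float]:
    """SLA model (a): the 8:1 split is applied between the two categories.

    Returns the rate of one gold subscriber and one white subscriber.
    """
    total = gold_weight + white_weight
    gold_category = vport_rate * gold_weight / total
    white_category = vport_rate * white_weight / total
    return gold_category / n_gold, white_category / n_white


def model_b(vport_rate: float, n_gold: int, n_white: int,
            gold_weight: float = 8, white_weight: float = 1) -> tuple[float, float]:
    """SLA model (b): the 8:1 split is applied between individual subscribers."""
    unit = vport_rate / (n_gold * gold_weight + n_white * white_weight)
    return unit * gold_weight, unit * white_weight


# One gold and eight white subscribers sharing a 900-unit Vport.
print(model_a(900.0, 1, 8))  # (800.0, 12.5)
print(model_b(900.0, 1, 8))  # (450.0, 56.25)
```

In model (a) the categories keep their 8:1 split (800 versus 8 x 12.5 = 100) while each white subscriber is squeezed; in model (b) each gold subscriber gets eight times a white one, and the category totals (450 versus 450) follow from the subscriber counts.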

SLA models are design goals and must be tested to verify the expected behavior. SR OS configuration allows a user to construct other models by combining concepts and parameters in other ways. The resulting behavior depends on the specific configuration and offered load.

When alternative SLA models are configured, the following considerations apply:

- If a group is used to enforce weighting between different scheduling classes, all queues attached to a specific hardware aggregate shaper must have the same scheduling class. This is because the software algorithm enforcing the hardware aggregate shaper scheduler policy controls only the rate of the hardware aggregate shaper, not the rate of individual queues. If this guideline is not followed, weights enforced at the group level may contradict weights enforced between queues within a single hardware aggregate shaper.

- Elevation-weight and groups are mutually exclusive for any set of queues.

The hardware aggregate shaper scheduler policy implements a congestion threshold that is used to trigger software recalculation. If the overall offered load for a specific Vport is below this threshold (expressed as a percentage of the Vport aggregate rate), the software recalculation of all parameters is skipped to save CPU resources, because all offered traffic is assumed to pass through.
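The threshold test amounts to a single comparison. In the sketch, the function and parameter names are hypothetical (the CLI name of the congestion threshold is not shown in this section), and a load exactly at the threshold is treated as congested because the text only exempts loads below it.

```python
def recalculation_needed(offered_loads: list[float], vport_agg_rate: float,
                         congestion_threshold_pct: float) -> bool:
    """Return True when the Vport is busy enough to rerun the software pass.

    Below the threshold (a percentage of the Vport aggregate rate), all
    offered traffic is assumed to pass, so recalculation is skipped.
    """
    threshold = vport_agg_rate * congestion_threshold_pct / 100.0
    return sum(offered_loads) >= threshold


print(recalculation_needed([300.0, 200.0], 1000.0, 80.0))  # False: 500 < 800
print(recalculation_needed([500.0, 400.0], 1000.0, 80.0))  # True: 900 >= 800
```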

Like a port scheduler policy, a hardware aggregate shaper scheduler policy supports a monitor threshold that detects congestion. Its configuration and operation are identical to those described in Scheduler QoS policies.

The following example hardware aggregate shaper scheduler policy implements SLA model (a) from SLA models.

MD-CLI

[ex:/configure qos hw-agg-shaper-scheduler-policy "trial"]
A:admin@node-2# info
    max-percent-rate 80.0
    group "g1" { }
    sched-class 3 {
        group "g1"
        weight 1
    }
    sched-class 4 {
        group "g1"
        weight 8
    }

[ex:/configure port 1/1/1/c1/1 ethernet access egress]
A:admin@node-2# info
    virtual-port "dslam-a" {
        hw-agg-shaper-scheduler-policy "trial"
    }

Classic CLI

*A:node-2>config>qos>hw-agg-shap-sched-plcy# info
----------------------------------------------
            max-rate percent 80.00
            group "g1" create
            exit
            sched-class 3 group "g1" weight 1
            sched-class 4 group "g1" weight 8
----------------------------------------------
*A:node-2# /configure port 1/1/1/c1/1 ethernet access egress
*A:node-2>config>port>ethernet>access>egress# info
----------------------------------------------
            vport "dslam-a" create
                hw-agg-shaper-scheduler-policy "trial"
            exit
----------------------------------------------

Interactions with other QoS features

The FP4-based and later chipsets support all existing QoS features including software-driven HQoS and hardware-assisted HQoS. The following sections describe how these features interact.

Activating hardware aggregate shapers

Hardware aggregate shapers are applicable to Ethernet and PXC port subscriber management and to SAPs; for other objects, such as queue groups, only software-driven H-QoS can be used. Hardware aggregate shapers and software-driven H-QoS can coexist on the same FP.

- Use the following command to enable hardware aggregate shapers on a specific FP:

  - MD-CLI: configure qos fp-resource-policy aggregate-shapers queue-sets size allocation-weight auto-creation true
  - classic CLI: configure qos fp-resource-policy aggregate-shapers queue-sets size allocation-weight

  Seven queue-set sizes are available, ranging from two to eight queues. Queue-sets of different sizes are allowed. The distribution of hardware aggregate shapers between queue-sets of different sizes is controlled through the allocation-weight command. The default queue-set size is 8.


- Use the following command to configure the number of queues for use outside of the hardware aggregate shapers:

  - MD-CLI: configure qos fp-resource-policy aggregate-shapers reserved-non-shaper-queues
  - classic CLI: configure qos fp-resource-policy aggregate-shapers reserved-non-shaper-queues

  When a queue-set with fewer than eight queues is used, some queues are left unused by the hardware aggregate shapers. These queues can be used for objects that do not use hardware aggregate shapers, provided the reserved-non-shaper-queues command is configured. The minimum number of reserved non-shaper queues is 2048, which covers all system-related requirements.


- Use the following commands to configure a default queue-set for subscribers or SAPs:

  - MD-CLI:
    configure qos fp-resource-policy aggregate-shapers queue-sets default-size saps <size>
    configure qos fp-resource-policy aggregate-shapers queue-sets default-size subscribers
  - classic CLI:
    configure qos fp-resource-policy aggregate-shapers queue-sets default-size saps
    configure qos fp-resource-policy aggregate-shapers queue-sets default-size subscribers

  The default queue-set can be set to the queue-set size that has an allocation weight equal to 100. It can also be set to use non-shaper queues by using the reserved-non-shaper-queues keyword.

  This step may appear redundant because only a single queue-set is supported. However, it is important to configure it to ensure forward compatibility of the configuration file with future releases.


- Use the following command to enable hardware aggregate shapers.


- Use the following command to assign a specific queue-set size to a specified SAP or subscriber SLA profile. The specific queue-set size, or the use of non-shaper queues, is defined within the SAP egress policy as follows:

  - MD-CLI: configure qos sap-egress hw-agg-shaper-queues queue-set-size {number | non-shaper-queues}
  - classic CLI: configure qos sap-egress hw-agg-shaper-queues {queue-set-size number | non-shaper-queues}


After the preceding commands are applied to a specific FP, all subscriber hosts created on this FP use hardware aggregate shaping and any traditional H-QoS-related configuration is ignored.

SAP and queue group objects always use traditional H-QoS configuration whether they are created on the same or different ports as subscriber hosts. Any hardware aggregate shaping configuration associated with a SAP or queue group is ignored.

Hardware aggregate shapers and LAG

LAGs using link or port-fair adapt-qos modes support hardware aggregate shapers; LAGs in distribute mode do not.

When a LAG spans multiple FPs, only one set of subscriber queues is created per FP (on the elected port); consequently, there is only one hardware aggregate shaper created per FP for subscribers on a LAG. Egress subscriber traffic is hashed only to the active FP for that subscriber.

For SAPs, the queues and hardware aggregate shapers are instantiated on each member port. The operational hardware aggregate shaper rate is allocated to individual instances based on the LAG mode:

- in link mode all instances have the same rate

- in port-fair mode the rate is proportional to the port rates
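The two allocation rules can be sketched as a small function. It assumes that "the same rate" in link mode means each member-port instance receives the full operational rate, and it rejects distribute mode because such LAGs do not support hardware aggregate shapers; the port names and the function itself are hypothetical.

```python
def lag_instance_rates(oper_rate: float, port_rates: dict[str, float],
                       mode: str) -> dict[str, float]:
    """Operational hw-agg-shaper rate per LAG member-port instance (sketch)."""
    if mode == "link":
        # Assumption: every instance gets the full operational rate.
        return {port: oper_rate for port in port_rates}
    if mode == "port-fair":
        # Each instance gets a share proportional to its port rate.
        total = sum(port_rates.values())
        return {port: oper_rate * rate / total
                for port, rate in port_rates.items()}
    raise ValueError(f"adapt-qos mode {mode!r} does not support hw-agg-shapers")


members = {"1/1/1": 10.0, "2/1/1": 30.0}  # hypothetical ports and speeds
print(lag_instance_rates(1000.0, members, "link"))       # {'1/1/1': 1000.0, '2/1/1': 1000.0}
print(lag_instance_rates(1000.0, members, "port-fair"))  # {'1/1/1': 250.0, '2/1/1': 750.0}
```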

The Vport bandwidth distribution algorithm manages only the hardware aggregate shaper associated with the elected LAG port on the active FP. All hardware aggregate shapers not associated with the active FP have their oper-PIRs set to their admin-PIRs. This eliminates the need for the Vport bandwidth distribution algorithm to manage the nonactive hardware aggregate shapers and reduces the processing required.

Nokia recommends that, for LAGs spanning different FPs, all participating FPs be configured to use hardware aggregate shapers. However, the system does not check for or flag a configuration mismatch between member FPs.

Encapsulation offset

In traditional HQoS for subscribers, the subscriber aggregate rates can be adjusted to account for last-mile encapsulation using the following command:

- MD-CLI: configure subscriber-mgmt sub-profile egress qos encap-offset type

- classic CLI: configure subscriber-mgmt sub-profile egress encap-offset type

There are multiple types of encapsulations available.

This causes the offered-load measurements and operational-rate values to be normalized with respect to encap-offset and avg-frame-overhead values. Based on these values, the corresponding operational rates are calculated, and the adjustment is transparent to users.

For hardware aggregate shapers, the shapers and FIR distribution rely on hardware enforcement while the hardware aggregate shaper scheduler policy is software-based. Because of this, queue weights in FIR calculations are normalized with respect to the encap-offset value. Therefore, in show commands related to hardware aggregate shapers the queue weights may differ from the configured values due to such adjustments.

However, the weights in the hardware aggregate shaper scheduler policy remain as configured, because the offered-load and rate calculations in software account for last-mile overhead in the same way as in software-driven HQoS.

If no encap-offset is set for the hardware aggregate shaper, the on-the-wire rate is enforced. Because offered rates do not account for on-the-wire Ethernet overhead, the QoS software adjusts the aggregate rate of the shaper based on the average frame overhead calculated during the HQoS calculation.
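The adjustment relies on the ratio between frame bytes and on-the-wire bytes. Assuming the shaper meters frame bytes only, and that each Ethernet frame carries 20 bytes of on-the-wire overhead (7-byte preamble, 1-byte start-of-frame delimiter, and 12-byte inter-frame gap), the frame-byte rate corresponding to a given on-the-wire rate could be derived as in the sketch below. The function name and the use of a single average frame size are illustrative assumptions, not the SR OS calculation.

```python
# Preamble (7) + start-of-frame delimiter (1) + inter-frame gap (12), in bytes.
ETHERNET_WIRE_OVERHEAD = 20


def frame_rate_for_wire_rate(wire_rate: float, avg_frame_bytes: float,
                             overhead_bytes: float = ETHERNET_WIRE_OVERHEAD) -> float:
    """Frame-byte rate that occupies `wire_rate` once wire overhead is added."""
    return wire_rate * avg_frame_bytes / (avg_frame_bytes + overhead_bytes)


# Small 80-byte frames: a fifth of the wire rate is spent on overhead.
print(frame_rate_for_wire_rate(1000.0, 80.0))  # 800.0
```

The smaller the average frame, the larger the correction, which is why the adjustment has to be based on a measured average frame overhead rather than a fixed factor.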

Advanced QoS configuration policy

The following configuration parameters are not applicable when hardware aggregate shaping is used:

- configure qos adv-config-policy name child-control bandwidth-distribution

  - above-offered-allowance delta-consumed-agg-rate
  - above-offered-allowance delta-consumed-agg-rate percent
  - above-offered-allowance delta-consumed-higher-tier-rate
  - above-offered-allowance delta-consumed-higher-tier-rate percent
  - above-offered-allowance unconsumed-agg-rate
  - above-offered-allowance unconsumed-agg-rate percent
  - above-offered-allowance unconsumed-higher-tier-rate
  - above-offered-allowance unconsumed-higher-tier-rate percent
  - above-offered-cap percent
  - above-offered-cap rate
  - enqueue-on-pir-zero
  - granularity percent
  - granularity rate
  - internal-scheduler-weight-mode
  - limit-pir-zero-drain
  - lub-init-min-pir

- configure card 1 virtual-scheduler-adjustment

  - internal-scheduler-weight-mode