Scheduler QoS policies

Scheduler policies

Virtual schedulers are created within the context of a scheduler policy that is used to define the hierarchy and parameters for each scheduler. A scheduler is defined in the context of a tier that is used to place the scheduler within the hierarchy. Three tiers of virtual schedulers are supported. Root schedulers are often defined without a parent scheduler, meaning they do not obtain bandwidth from a higher-tier scheduler. A scheduler can optionally enforce a maximum rate of operation for all child queues, policers, and schedulers associated with it.

Because a scheduler is designed to arbitrate bandwidth between many inputs, a metric must be assigned to each child queue, policer, or scheduler vying for transmit bandwidth. This metric indicates whether the child is to be scheduled in a strict or weighted fashion and the level or weight the child has to other children.
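The strict-versus-weighted metric can be illustrated with a short Python sketch. This is a simplified model under stated assumptions, not the system's implementation: higher level numbers are served first (matching the level 8 to level 1 order used by the port scheduler), bandwidth within a level is shared in proportion to weight, and bandwidth that a child cannot use is offered again to the other children at the same level. The `Child` type and `distribute()` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Child:
    name: str
    level: int     # strict priority level; higher levels are served first
    weight: int    # relative weight among children at the same level
    demand: float  # bandwidth the child can currently use (Mb/s)


def distribute(children, available):
    """Serve levels in strict order and share each level by weight."""
    grants = {c.name: 0.0 for c in children}
    for level in sorted({c.level for c in children}, reverse=True):
        peers = [c for c in children if c.level == level]
        # Repeat the weighted split so bandwidth a satisfied child leaves
        # unused is redistributed to the other children at this level.
        while available > 1e-9:
            hungry = [c for c in peers
                      if c.weight > 0 and c.demand - grants[c.name] > 1e-9]
            if not hungry:
                break
            total_weight = sum(c.weight for c in hungry)
            pool = available
            for c in hungry:
                give = min(pool * c.weight / total_weight,
                           c.demand - grants[c.name])
                grants[c.name] += give
                available -= give
    return grants


kids = [Child("a", 8, 1, 30), Child("b", 5, 1, 10), Child("c", 5, 3, 100)]
print(distribute(kids, 110))
```

In the example, child a takes its full 30 Mb/s at level 8 before level 5 is served; b is capped at its 10 Mb/s demand, so c receives the remaining 70 Mb/s rather than only its 3:1 weighted share of the 80 Mb/s.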

Egress port-based schedulers

H-QoS root (top-tier) schedulers always assumed that the configured rate was available, regardless of egress port-level oversubscription and congestion. This meant that the aggregate bandwidth assigned to queues might not actually be available at the port level. When the H-QoS algorithm configures policers and queues with more bandwidth than is available on an egress port, the actual bandwidth distribution to the policers and queues on the port is based solely on the action of the hardware scheduler. This can result in a forwarding rate at each queue that is very different from the intended rate.

The port-based scheduler feature was introduced to allow H-QoS bandwidth allocation based on available bandwidth at the egress port level. The port-based scheduler works at the egress line rate of the port to which it is attached. Port-based scheduling bandwidth allocation automatically includes the Inter-Frame Gap (IFG) and preamble for packets forwarded on policers and queues servicing egress Ethernet ports. However, on PoS and SDH-based ports, the per-packet HDLC encapsulation overhead and other framing overhead are not known to the system. Instead of automatically determining the encapsulation overhead for SDH or SONET queues, the system provides a configurable frame encapsulation efficiency parameter that allows the user to select the average encapsulation efficiency for all packets forwarded out the egress queue.

A special port scheduler policy can be configured to define the virtual scheduling behavior for an egress port. The port scheduler is a software-based state machine managing a bandwidth allocation algorithm that represents the scheduling hierarchy shown in Port-level virtual scheduler bandwidth allocation based on priority and CIR.

The first tier of the scheduling hierarchy manages the total frame-based bandwidth that the port scheduler allocates to the eight priority levels.

The second tier receives bandwidth from the first tier in two priorities: a within-CIR loop and an above-CIR loop. The second-tier within-CIR loop provides bandwidth to the third-tier within-CIR loops, one for each of the eight priority levels. The second-tier above-CIR loop provides bandwidth to the third-tier above-CIR loops, one for each of the eight priority levels.

The within-CIR loop for each priority level on the third tier supports an optional rate limiter used to restrict the maximum amount of within-CIR bandwidth the priority level can receive. A maximum priority level rate limit is also supported that restricts the total amount of bandwidth the level can receive for both within-CIR and above-CIR. The amount of bandwidth consumed by each priority level for within-CIR and above-CIR depends on these rate limits and on the ability of each child queue, policer, or scheduler attached to the priority level to use the bandwidth.

The priority 1 above-CIR scheduling loop has a special two-tier strict-distribution function. The high-priority level 1 above-CIR distribution is weighted between all queues, policers, and schedulers attached to level 1 for above-CIR bandwidth. The low-priority distribution for level 1 above-CIR is reserved for all orphaned policers, queues, and schedulers on the egress port. Orphans are policers, queues, and schedulers that are not explicitly or indirectly attached to the port scheduler through normal parenting conventions. By default, all orphans receive bandwidth after all parented queues and schedulers and are allowed to consume whatever bandwidth is remaining. This default behavior for orphans can be overridden on each port scheduler policy by defining explicit orphan port parent association parameters.

Ultimately, any bandwidth allocated by the port scheduler is given to a child policer or queue. The bandwidth allocated to the policer or queue is converted to a value for the PIR (maximum rate) setting of the policer or queue. This way, the hardware schedulers operating at the egress port level only schedule bandwidth for all policers or queues on the port up to the limits prescribed by the virtual scheduling algorithm.

The following lists the bandwidth allocation sequence for the port virtual scheduler:

- Priority level 8 offered load up to priority CIR
- Priority level 7 offered load up to priority CIR
- Priority level 6 offered load up to priority CIR
- Priority level 5 offered load up to priority CIR
- Priority level 4 offered load up to priority CIR
- Priority level 3 offered load up to priority CIR
- Priority level 2 offered load up to priority CIR
- Priority level 1 offered load up to priority CIR
- Priority level 8 remaining offered load up to remaining priority rate limit
- Priority level 7 remaining offered load up to remaining priority rate limit
- Priority level 6 remaining offered load up to remaining priority rate limit
- Priority level 5 remaining offered load up to remaining priority rate limit
- Priority level 4 remaining offered load up to remaining priority rate limit
- Priority level 3 remaining offered load up to remaining priority rate limit
- Priority level 2 remaining offered load up to remaining priority rate limit
- Priority level 1 remaining offered load up to remaining priority rate limit
- Priority level 1 remaining orphan offered load up to remaining priority rate limit (default orphan behavior unless orphan behavior has been overridden in the port scheduler policy)
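The allocation sequence above can be modeled as three passes over the eight priority levels. The following Python sketch is a simplified, hypothetical model: it uses a single level and weight per child for both passes (the port-parent command allows separate cir-level and level settings), treats the optional per-level limits as plain dictionaries, and applies the default orphan behavior with equal sharing among orphans. Names such as `port_schedule()` and `level_rate` are illustrative only.

```python
from dataclasses import dataclass

INF = float("inf")


@dataclass
class Child:
    name: str
    level: int            # port priority level 1..8 (used for both passes here)
    weight: int           # weight within the level
    offered: float        # current offered load (Mb/s)
    cir: float = 0.0      # within-CIR limit; 0 means no within-CIR share
    pir: float = INF      # maximum rate
    orphan: bool = False  # default orphans are served last at level 1


def water_fill(kids, need, budget, weight):
    """Split budget in proportion to weight(k), never exceeding need[k.name]."""
    got = {k.name: 0.0 for k in kids}
    while budget > 1e-9:
        hungry = [k for k in kids
                  if weight(k) > 0 and need[k.name] - got[k.name] > 1e-9]
        if not hungry:
            break
        total = sum(weight(k) for k in hungry)
        pool = budget
        for k in hungry:
            give = min(pool * weight(k) / total, need[k.name] - got[k.name])
            got[k.name] += give
            budget -= give
    return got


def port_schedule(children, port_rate, level_cir=None, level_rate=None):
    level_cir = level_cir or {}    # optional within-CIR limit per level
    level_rate = level_rate or {}  # optional total rate limit per level
    alloc = {c.name: 0.0 for c in children}
    used = {lvl: 0.0 for lvl in range(1, 9)}
    budget = port_rate
    parented = [c for c in children if not c.orphan]

    def run(kids, lvl, cap, need, weight):
        nonlocal budget
        got = water_fill(kids, need, min(cap, budget), weight)
        for name, amount in got.items():
            alloc[name] += amount
            used[lvl] += amount
            budget -= amount

    # Pass 1: offered load up to CIR, priority level 8 down to 1.
    for lvl in range(8, 0, -1):
        kids = [c for c in parented if c.level == lvl]
        cap = min(level_cir.get(lvl, INF), level_rate.get(lvl, INF))
        run(kids, lvl, cap, {c.name: min(c.offered, c.cir) for c in kids},
            lambda c: c.weight)
    # Pass 2: remaining offered load up to the remaining level rate limit.
    for lvl in range(8, 0, -1):
        kids = [c for c in parented if c.level == lvl]
        run(kids, lvl, level_rate.get(lvl, INF) - used[lvl],
            {c.name: min(c.offered, c.pir) - alloc[c.name] for c in kids},
            lambda c: c.weight)
    # Pass 3: default orphans share what is left at level 1 equally.
    orphans = [c for c in children if c.orphan]
    run(orphans, 1, level_rate.get(1, INF) - used[1],
        {c.name: min(c.offered, c.pir) for c in orphans}, lambda c: 1)
    return alloc  # each value becomes the child's operational PIR


children = [
    Child("a", level=8, weight=1, offered=30, cir=20),
    Child("b", level=3, weight=1, offered=80, cir=40),
    Child("o", level=1, weight=0, offered=50, orphan=True),
]
print(port_schedule(children, port_rate=100))
```

With a 100 Mb/s port, the level 8 and level 3 children consume the whole port before the orphan is served, so the orphan's operational PIR is 0 until parented traffic drops or the port rate is raised.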

When a policer or queue is inactive or has an offered load below its fair share (the bandwidth it would receive if it were registering adequate activity), its operational PIR must still be set to a value that can accommodate an increase in the queue's offered load before the next iteration of the port virtual scheduling algorithm. If the operational PIR of an inactive policer or queue were set to zero (or near zero), the policer or queue would throttle its traffic until the next algorithm iteration. If the operational PIR were set to its configured rate, the result could overrun the expected aggregate rate of the port scheduler.

To accommodate inactive policers and queues, the system calculates a Minimum Information Rate (MIR) for each policer and queue. To calculate each policer or queue MIR, the system determines what the Fair Information Rate (FIR) of the queue or policer would be if that policer or queue had actually been active during the latest iteration of the virtual scheduling algorithm. For example, if three queues are active (1, 2, and 3) and two queues are inactive (4 and 5), the system first calculates the FIR for each active queue. Then, it recalculates the FIR for queue 4 assuming queue 4 was active with queues 1, 2, and 3, and uses the result as the queue’s MIR. The same is done for queue 5 using queues 1, 2, 3, and 5. The MIR for each inactive queue is used as the operational PIR for each queue.
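The MIR calculation can be sketched in Python. This hedged example assumes that the FIR is a weighted max-min fair share of a single capacity, and that an inactive queue would, if active, request its configured PIR; both assumptions are illustrative, and the function names are hypothetical.

```python
def fair_share(demands, weights, capacity):
    """Weighted max-min fair split of capacity, capped at each demand."""
    rate = {q: 0.0 for q in demands}
    left = capacity
    while left > 1e-9:
        hungry = [q for q in demands
                  if weights[q] > 0 and demands[q] - rate[q] > 1e-9]
        if not hungry:
            break
        total = sum(weights[q] for q in hungry)
        pool = left
        for q in hungry:
            give = min(pool * weights[q] / total, demands[q] - rate[q])
            rate[q] += give
            left -= give
    return rate


def operational_pirs(queues, capacity):
    """queues maps name -> (offered, configured_pir, weight); offered 0 = inactive."""
    active = {q: v for q, v in queues.items() if v[0] > 0}

    def fir(members):
        demands = {q: min(off, pir) for q, (off, pir, _) in members.items()}
        weights = {q: w for q, (_, _, w) in members.items()}
        return fair_share(demands, weights, capacity)

    result = fir(active)  # FIR of each active queue
    for q, (_, pir, w) in queues.items():
        if q not in active:
            # Pretend q is active at its configured PIR alongside the active
            # queues only; its share in that what-if run becomes its MIR.
            result[q] = fir({**active, q: (pir, pir, w)})[q]
    return result


queues = {1: (50, 100, 1), 2: (50, 100, 1), 3: (50, 100, 1),
          4: (0, 100, 1), 5: (0, 100, 1)}
print(operational_pirs(queues, capacity=100))
```

Queues 1 to 3 share the 100 Mb/s at about 33.3 Mb/s each, while queues 4 and 5 each receive an MIR of 25 Mb/s, the share either would get if it became active alongside queues 1, 2, and 3.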

H-QoS algorithm selection

The default port scheduler H-QoS algorithm distributes bandwidth to its members by performing two allocation passes, one within CIR and one above CIR. Within each pass, it loops through the bandwidth distribution logic for its members by allocating bandwidth that is either consumed or unconsumed by each member (based on the member offered rate).

A second algorithm allows user control of the amount of bandwidth in excess of the offered rate that is given to a queue. In this algorithm, the unconsumed bandwidth is only distributed at the end of each pass and not during the loops within the pass. The algorithm is configured within the port scheduler configuration as follows:

configure qos port-scheduler-policy hqos-algorithm above-offered-allowance-control

When the above-offered-allowance-control H-QoS algorithm is selected, the port scheduler is supported on both Ethernet Vports and Ethernet physical ports with queues and schedulers parented to the port scheduler.

The distribution of unconsumed bandwidth for parented queues and schedulers is determined by the following parameters in an advanced configuration policy (see Port scheduler above offered allowance control):

    configure
        qos
            adv-config-policy
                child-control
                    bandwidth-distribution
                        above-offered-allowance
                            unconsumed-agg-rate percent <percent-of-unconsumed-agg-rate>
                            delta-consumed-agg-rate percent <percent-of-delta-consumed-agg-rate>
                            unconsumed-higher-tier-rate percent <percent-of-unconsumed-higher-tier-rate>
                            delta-consumed-higher-tier-rate percent <percent-of-delta-consumed-high-tier-rate>

The distribution of unconsumed bandwidth can be configured separately for aggregate rates (applied at the egress of a SAP or subscriber profile) and higher-tier rates (Vport aggregate rate and port scheduler level, group, and maximum rates). This is useful because there are normally only a few members of an aggregate rate but likely more members of a Vport or port scheduler.

For the aggregate and all higher-tier rates, the algorithm uses:

- the percentage of the unconsumed bandwidth at that tier that can be given to the queue or scheduler. If there is unconsumed bandwidth at the end of a bandwidth distribution pass, then the configured percentage of this bandwidth is used in the subsequent processing. For the higher tiers, the value is the minimum of the unconsumed bandwidth at each tier. Configure higher percentages when a large portion of the aggregate rate and higher-tier rates are unconsumed.

- the percentage of the delta of consumed bandwidth at that tier that can be given to the queue or scheduler. The delta of consumed bandwidth is determined by subtracting the bandwidth consumed by the other members of the rate (not including that consumed by this queue or scheduler) at the beginning of the pass from that at the end of the pass. This aspect allows a queue or scheduler to be given additional operational PIR when the rate at the tier is mostly consumed but the queue or scheduler itself does not have a high offered rate compared to its maximum rate. In these cases, most of the available rate is being consumed so the configured percentages are low, particularly for the higher-tier rates with many members.

For the aggregate rate and for the higher-tier rates, the algorithm takes the maximum of the configured percentage of the unconsumed bandwidth and the configured percentage of the delta of consumed bandwidth. It then uses the minimum of these two results to set the queue or scheduler operational PIR. See Default values of the percentages for information about the default values for the percentages.
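The above-offered-allowance calculation can be expressed as a small Python function. This is a sketch under assumptions: the higher-tier delta of consumed bandwidth is passed in as a single value (the text specifies taking the minimum across tiers only for unconsumed bandwidth), and the resulting allowance is assumed to be added to the bandwidth allocated to the queue and capped at its configured PIR. The dictionary keys reuse the CLI parameter names; the function names and example values are hypothetical.

```python
def allowance(agg_unconsumed, agg_delta, tier_unconsumed, tier_delta, pct):
    """Extra bandwidth (above the offered rate) a queue or scheduler may get.

    agg_unconsumed, agg_delta: unconsumed and delta-consumed bandwidth at the
        aggregate rate.
    tier_unconsumed: unconsumed bandwidth at each higher tier (minimum used).
    tier_delta: delta of consumed bandwidth for the higher tiers.
    pct: the four configured percentages (0-100).
    """
    agg = max(agg_unconsumed * pct["unconsumed-agg-rate"] / 100,
              agg_delta * pct["delta-consumed-agg-rate"] / 100)
    higher = max(min(tier_unconsumed) * pct["unconsumed-higher-tier-rate"] / 100,
                 tier_delta * pct["delta-consumed-higher-tier-rate"] / 100)
    return min(agg, higher)


def operational_pir(allocated, extra, configured_pir):
    # Assumption: allowance is added to the allocation, capped at the PIR.
    return min(allocated + extra, configured_pir)


pct = {"unconsumed-agg-rate": 50, "delta-consumed-agg-rate": 100,
       "unconsumed-higher-tier-rate": 10, "delta-consumed-higher-tier-rate": 20}
extra = allowance(20, 10, [50, 30], 40, pct)
print(extra, operational_pir(25, extra, 100))
```

Here the aggregate rate would allow 10 Mb/s, but the higher tiers allow only 8 Mb/s (20% of a 40 Mb/s consumed delta beats 10% of the 30 Mb/s minimum unconsumed), so the higher tiers set the limit.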

Service/subscriber or multiservice site egress port bandwidth allocation

The port-based egress scheduler can be used to allocate bandwidth to each service or subscriber or multiservice site associated with the port. While egress policers and queues on the service can have a child association with a scheduler policy on the SAP or multiservice site, all policers and queues must vie for bandwidth from an egress port. Two methods are supported to allocate bandwidth to each service or subscriber or multiservice site queue:

- service or subscriber or multiservice site queue association with a scheduler on the SAP or multiservice site, either of which is associated with a port-level scheduler
- service or subscriber or multiservice site queue association directly with a port-level scheduler

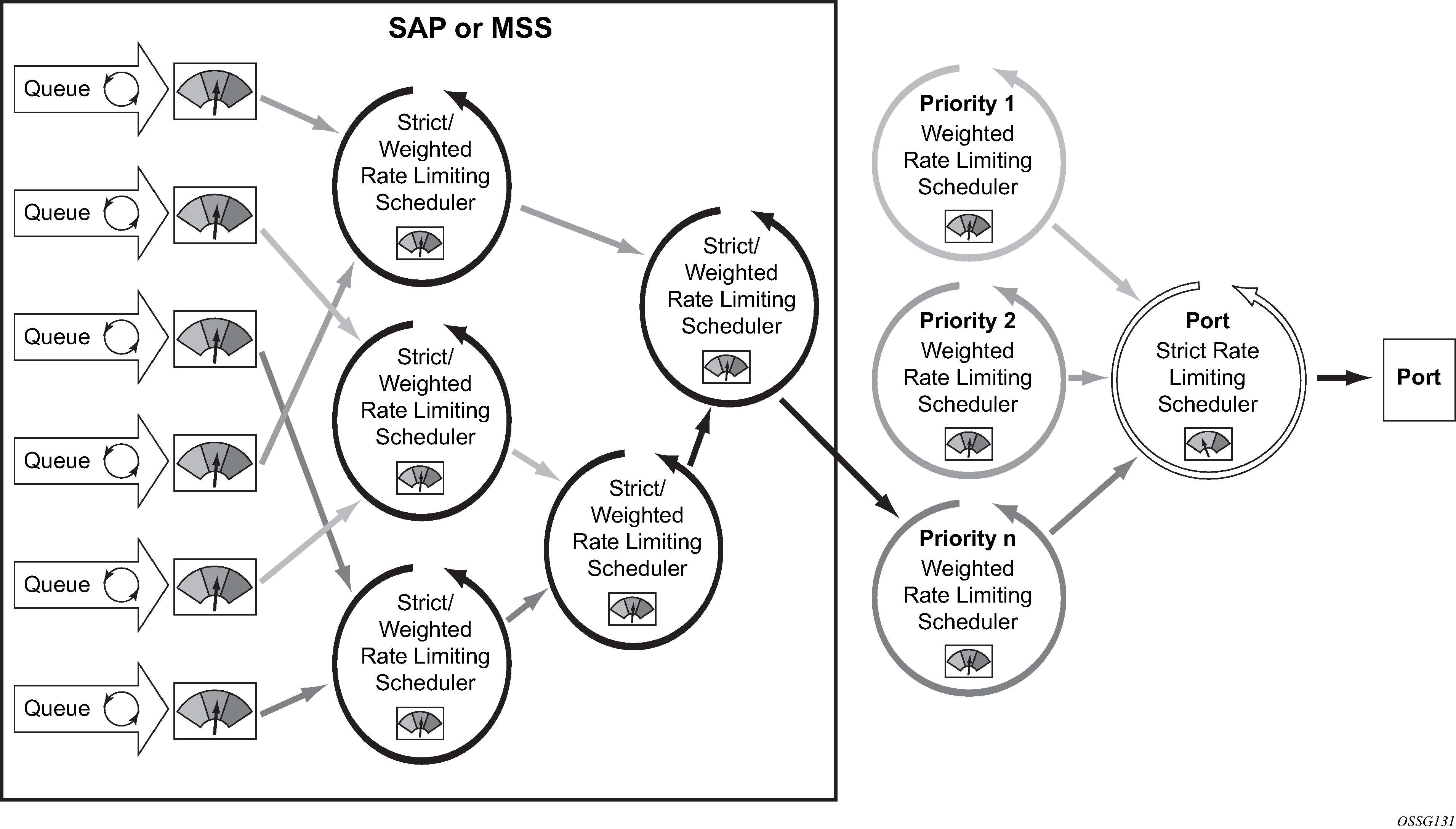

Figure 1. Port-level virtual scheduler bandwidth allocation based on priority and CIR

Service or subscriber or multiservice site scheduler child to port scheduler parent

The service or subscriber or multiservice site scheduler to port scheduler association model allows for multiple services or subscribers or multiservice sites to have independent scheduler policy definitions while the independent schedulers receive bandwidth from the scheduler at the port level. By using two-scheduler policies, available egress port bandwidth can be allocated fairly or unfairly depending on the needed behavior. Two-scheduler policy model for access ports shows this model.

When a two-scheduler policy model is defined, the bandwidth distribution hierarchy allocates the available port bandwidth to the port schedulers based on priority, weights, and rate limits. The service or subscriber or multiservice site level schedulers and the policers and queues they service become an extension of this hierarchy.

Because of the nature of the two-scheduler policy, bandwidth is allocated on a per-service, per-subscriber, or per-multiservice-site basis as opposed to a per-class basis. A common use of the two-policy model is a carrier-of-carriers business model, where a carrier provides segments of bandwidth to providers who purchase that bandwidth as services. While the carrier does not care about the interior services of the provider, it does care how congestion affects the bandwidth allocation to each provider’s service. As an added benefit, the two-policy approach lets the carrier preferentially allocate bandwidth within a service, subscriber, or multiservice site context through the service, subscriber, or multiservice site level policy, without affecting the overall bandwidth allocation to each service, subscriber, or multiservice site. Schedulers on SAP or multiservice site receive bandwidth from port priority levels shows a per-service bandwidth allocation using the two-scheduler policy model. While the figure shows services grouped by scheduling priority, many service models are expected to place the services in a common port priority and use weights to provide a weighted distribution between the service instances. Higher weights provide relatively more bandwidth.

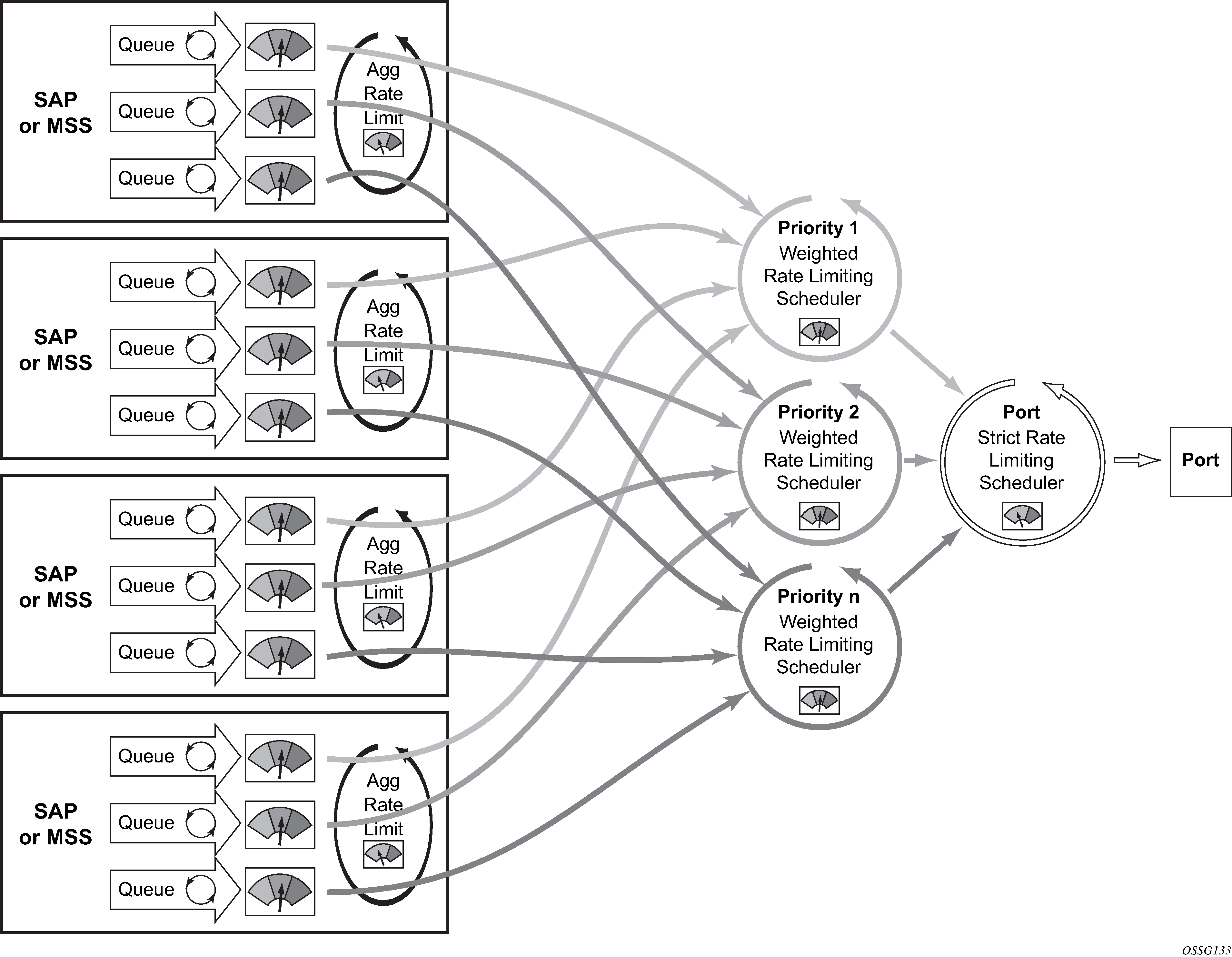

Direct service or subscriber or multiservice site queue association to port scheduler parents

The second model of bandwidth allocation on an egress access port is to directly associate a service or subscriber or multiservice site policer or queue to a port-level scheduler. This model allows the port scheduler hierarchy to allocate bandwidth on a per-class or per-priority basis to each service or subscriber or multiservice site policer or queue. This allows the provider to manage the available egress port bandwidth on a service tier basis, ensuring that during egress port congestion, deterministic behavior is possible from an aggregate perspective. While this provides an aggregate bandwidth allocation model, it does not inhibit per-service or per-subscriber or multiservice site queuing. Direct service or subscriber or multiservice site association to port scheduler model shows the single port scheduler policy model.

Direct service or subscriber or multiservice site association to port scheduler model also shows the optional aggregate rate limiter at the SAP, multiservice site, or subscriber level. The aggregate rate limiter is used to define a maximum aggregate bandwidth at which the child queues and policers, if used, can operate. While the port-level scheduler is allocating bandwidth to each child queue, the current sum of the bandwidth for the service, subscriber, or multiservice site is monitored. When the aggregate rate limit is reached, no more bandwidth is allocated to the children associated with the SAP, multiservice site, or subscriber. Aggregate rate limiting is restricted to the single scheduler policy model and is mutually exclusive with defining SAP, multiservice site, or subscriber scheduling policies.
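The aggregate rate limit check can be illustrated with a hypothetical Python helper that processes child requests in the order the port scheduler serves them and stops granting bandwidth to a SAP once its aggregate limit is reached. It deliberately ignores port capacity and priority ordering, which the port scheduler handles separately; the SAP and queue names are illustrative.

```python
def apply_agg_limits(requests, agg_limits):
    """requests: (sap, queue, amount) tuples in the order the port scheduler
    serves them; agg_limits: sap -> aggregate rate limit (Mb/s)."""
    used = {}    # running sum of bandwidth granted per SAP
    grants = {}
    for sap, queue, amount in requests:
        room = agg_limits.get(sap, float("inf")) - used.get(sap, 0.0)
        give = max(0.0, min(amount, room))  # nothing once the limit is hit
        grants[queue] = give
        used[sap] = used.get(sap, 0.0) + give
    return grants


grants = apply_agg_limits(
    [("sap-1", "q1", 30), ("sap-1", "q2", 30), ("sap-2", "q3", 30)],
    {"sap-1": 50},
)
print(grants)
```

Queue q2 receives only the 20 Mb/s left under sap-1's 50 Mb/s aggregate limit, while q3 on sap-2 (no limit configured here) receives its full request.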

The benefit of the single scheduler policy model is that the bandwidth is allocated per priority for all queues associated with the egress port. This allows a provider to preferentially allocate bandwidth to higher priority classes of service independent of service or subscriber or multiservice site instance. In many cases, a subscriber can purchase multiple services from a single site (VoIP, HSI, Video) and each service can have a higher premium value relative to other service types. If a subscriber has purchased a premium service class, that service class should get bandwidth before another subscriber’s best effort service class. When combined with the aggregate rate limit feature, the single port-level scheduler policy model provides a per-service instance or per-subscriber instance aggregate SLA and a class-based port bandwidth allocation function.

Frame and packet-based bandwidth allocation

A port-based bandwidth allocation mechanism must consider the effect of line encapsulation overhead on the bandwidth allocated per service, subscriber, or multiservice site. The service, subscriber, or multiservice site level bandwidth definition (at the queue level) operates on a packet accounting basis. For Ethernet, this includes the DLC header, the payload, and the trailing CRC, but not the IFG or the preamble. This means that an Ethernet packet consumes 20 bytes (a 12-byte IFG plus the 8-byte preamble and start frame delimiter) more bandwidth on the wire than the policer or queue accounts for. For HDLC-encoded PoS or SDH ports on the 7750 SR, the overhead is variable because of the stuffing applied to ‘7e’ flag patterns in the payload (and other TDM framing overhead). The HDLC and SONET/SDH frame overhead is not included for queues forwarding on PoS and SDH links.

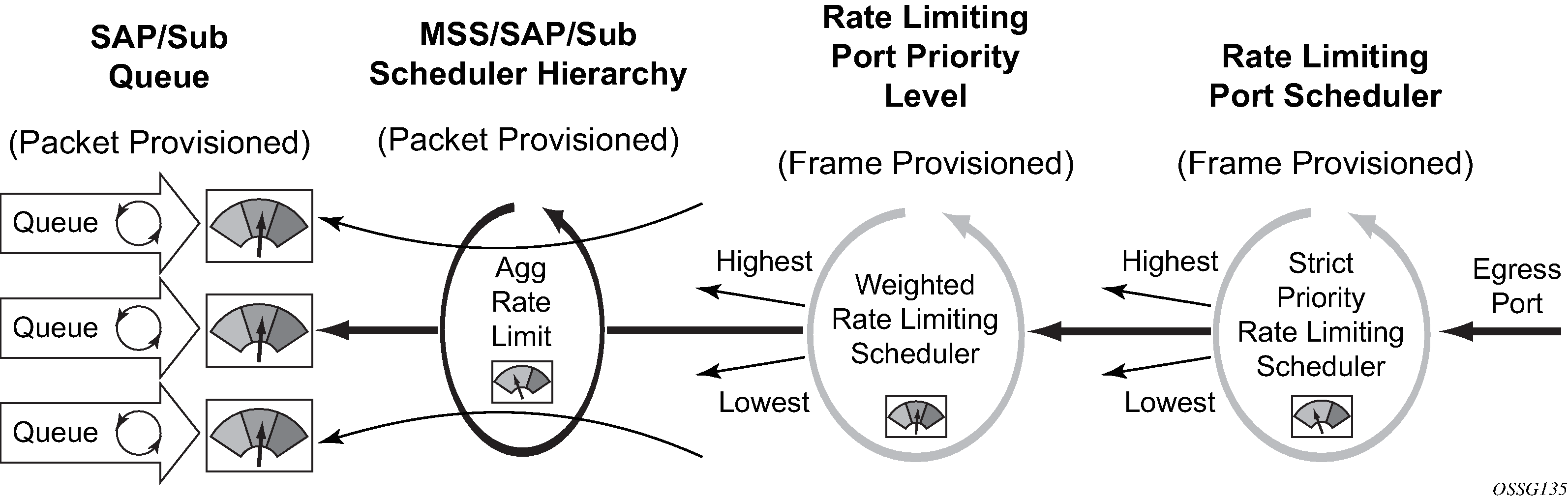

The port-based scheduler hierarchy must translate the frame-based accounting (on-the-wire bandwidth allocation) it performs to the packet-based accounting in the queues. When the port scheduler considers the maximum amount of bandwidth a queue should get, it must first determine how much bandwidth the policer or queue can use. This is based on the offered load the policer or queue is currently experiencing (how many octets are being offered). The offered load is compared to the configured CIR and PIR of the queue or policer. The CIR value determines how much of the offered load should be considered in the within-CIR bandwidth allocation pass. The PIR value determines how much of the remaining offered load (after within-CIR) should be considered for the above-CIR bandwidth allocation pass.

For Ethernet policers or queues (associated with an egress Ethernet port), the packet to frame conversion is relatively easy. The system multiplies the number of offered packets by 20 bytes and adds the result to the offered octets (offeredPackets x 20 + offeredOctets = frameOfferedLoad). This frame-offered-load value represents the amount of line rate bandwidth the policer or queue is requesting. The system computes the ratio of increase between the offered-load and frame-offered-load and calculates the current frame-based CIR and PIR. The frame-CIR and frame-PIR values are used as the limiting values in the within-CIR and above-CIR port bandwidth distribution passes.
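The Ethernet packet-to-frame conversion is simple arithmetic, shown here as a Python sketch. The function name is hypothetical, and rates can be in any consistent unit (kb/s in the example).

```python
IFG_AND_PREAMBLE = 20  # bytes per Ethernet packet: 12-byte IFG + 8-byte preamble/SFD


def frame_based_limits(offered_packets, offered_octets, cir, pir):
    # offeredPackets x 20 + offeredOctets = frameOfferedLoad
    frame_offered = offered_packets * IFG_AND_PREAMBLE + offered_octets
    # Ratio of increase from packet-based to frame-based accounting.
    ratio = frame_offered / offered_octets if offered_octets else 1.0
    return frame_offered, cir * ratio, pir * ratio


# 1000 packets of 100 bytes each.
print(frame_based_limits(1000, 100_000, cir=10_000, pir=50_000))
```

For 1000 packets of 100 bytes, frameOfferedLoad is 120 000 octets, a ratio of 1.2, so a 10 000 kb/s CIR becomes a 12 000 kb/s frame-CIR. Small packets are affected most, because the 20-byte overhead is fixed per packet.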

For PoS or SDH queues on the 7750 SR, the packet-to-frame conversion is more difficult to calculate dynamically because of the variable nature of HDLC encoding. Wherever a bit or byte pattern that could be mistaken for the ‘7e’ flag appears in the data stream, the framer performing the HDLC encoding must stuff (escape) it, which adds bits or bytes to the payload. Because this added HDLC encoding is unknown to the forwarding plane, the system provides an encapsulation overhead parameter that can be provisioned per queue, allowing for differences in encapsulation behavior between service flows in different queues. The system multiplies the offered load of the queue by the encapsulation-overhead parameter and adds the result to the offered load of the queue (offeredOctets * configuredEncapsulationOverhead + offeredOctets = frameOfferedLoad). The frame-offered-load value is used by the egress PoS/SDH port scheduler in the same manner as by the egress Ethernet port scheduler.
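The PoS/SDH formula can be written the same way. In this hypothetical sketch the encapsulation overhead is expressed as a fraction (0.04 for 4%), which is an assumption about the parameter's representation, and the frame-based CIR and PIR are scaled by the same ratio as for Ethernet.

```python
def pos_frame_offered_load(offered_octets, encap_overhead):
    # offeredOctets * configuredEncapsulationOverhead + offeredOctets
    return offered_octets * encap_overhead + offered_octets


def pos_frame_limits(offered_octets, encap_overhead, cir, pir):
    frame = pos_frame_offered_load(offered_octets, encap_overhead)
    ratio = frame / offered_octets if offered_octets else 1.0
    return frame, cir * ratio, pir * ratio


# Assumed 4% average stuffing and framing overhead on this queue.
print(pos_frame_limits(1_000_000, 0.04, cir=10_000, pir=50_000))
```

Unlike the Ethernet case, the overhead here scales with octets rather than packets, so it is the same for small and large packets unless the provisioned value differs per queue.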

From a provisioning perspective, queues, policers, and service-level (and subscriber-level) scheduler policies are always provisioned with packet-based parameters. The system converts these values to frame-based on-the-wire values for the purpose of port bandwidth allocation. However, the scheduler maximum rates and CIR values in a port scheduler policy are always interpreted as on-the-wire values and must be provisioned accordingly. Port bandwidth distribution for service and port scheduler hierarchies and Port bandwidth distribution for direct queue to port scheduler hierarchy show a logical view of bandwidth distribution from the port to the queue level, including whether packet-based or frame-based provisioning applies at each step.

Parental association scope

A port-parent command in the sap-egress and network-queue QoS policy policer or queue context defines the direct child/parent association between an egress policer or queue and a port scheduler priority level. The port-parent command is mutually exclusive with the existing scheduler-parent or parent command, which associates a policer or queue with a scheduler at the SAP, multiservice site, or subscriber profile level. It is possible to mix locally parented policers and queues (parented to a service, subscriber, or multiservice site level scheduler) and port-parented policers and queues with schedulers on the same egress port.

The port-parent command only accepts a child/parent association to the eight priority levels on a port scheduler hierarchy. Similar to the local parent command, two associations are supported: one for within-CIR bandwidth (cir-level) and a second one for above-CIR bandwidth (level). The within-CIR association is optional and can be disabled by using the default within-CIR weight value of 0. If a policer or queue with a defined port parent is on a port without a port scheduler policy applied, that policer or queue is considered orphaned. If a policer or queue with a scheduler-parent or parent command is defined on a port and the named scheduler is not found due to a missing scheduler policy or a missing scheduler of that name, the policer or queue is considered orphaned as well.

A queue or policer can be moved from a local parent (on the SAP, multiservice site, or subscriber profile) to a port parent priority level simply by executing the port-parent command. When the port-parent command is executed, any local parent information for the policer or queue is lost. The policer or queue can also be moved back to a local scheduler-parent or parent at any time by executing the scheduler-parent or parent command. Lastly, the local scheduler parent, parent, or port parent association can be removed at any time by using the no form of the appropriate parent command.

Service or subscriber or multiservice site-level scheduler parental association scope

The port-parent command in the scheduler-policy scheduler context (at all tier levels) allows a scheduler to be associated with a port scheduler priority level. The port-parent command, the scheduler-parent command, and the parent command for schedulers at tiers 2 and 3 within the scheduler policy are mutually exclusive. The port-parent command is the only parent command allowed for schedulers in tier 1.

The port-parent command only accepts a child/parent association to the eight priority levels on a port scheduler hierarchy. Similar to the normal local parent command, two associations are supported: one for within-CIR bandwidth (cir-level) and a second one for above-CIR bandwidth (level). The within-CIR association is optional and can be disabled by using the default within-CIR weight value of 0. If a scheduler with a port parent defined is on a port without a port scheduler policy applied, that scheduler is considered an orphaned scheduler.

A scheduler in tiers 2 and 3 can be moved from a local (within the policy) parent to a port parent priority level simply by executing the port-parent command. When the port-parent command is executed, any local scheduler-parent or parent information for the scheduler is lost. The schedulers at tiers 2 and 3 can also be moved back to a local scheduler-parent or parent at any time by executing the local scheduler-parent or parent command. Lastly, the local scheduler parent, parent, or port parent association can be removed at any time by using the no form of the appropriate parent command. A scheduler in tier 1 with a port parent definition can be added or removed at any time.

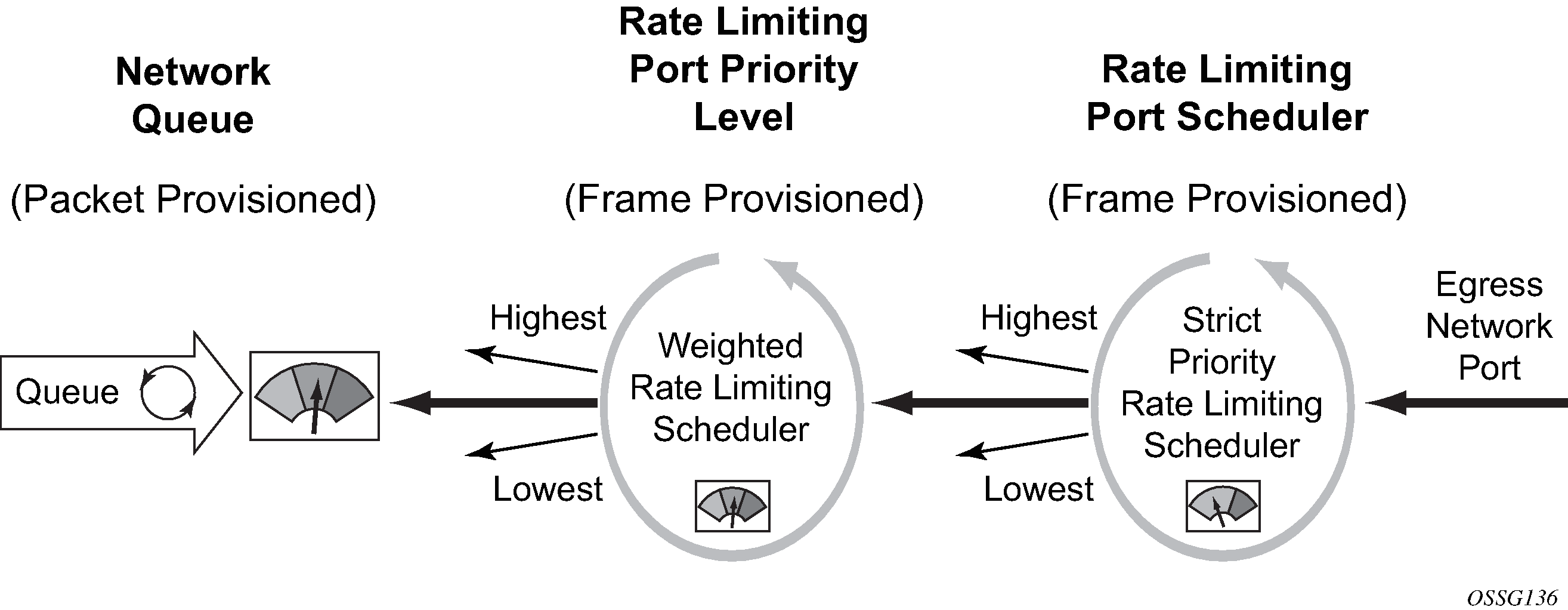

Network queue parent scheduler

Network queues support port scheduler parent priority-level associations. Using a port scheduler policy definition and mapping network queues to a port parent priority level, H-QoS functionality is supported providing eight levels of strict priority and weights within the same priority. A network queue’s bandwidth is allocated using the within-CIR and above-CIR scheme normal for port schedulers.

Queue CIR and PIR percentages when port-based schedulers are in effect are based on frame-offered-load calculations. Bandwidth distribution on network port with port-based scheduling shows port-based virtual scheduling bandwidth distribution.

A network queue with a port parent association that exists on a port without a scheduler policy defined is considered to be orphaned.

Foster parent behavior for orphaned queues and schedulers

All queues, policers, and schedulers on a port that has a port-based scheduler policy configured are subject to bandwidth allocation through the port-based schedulers. All queues, policers, and schedulers that are not configured with a scheduler parent are considered to be orphaned when port-based scheduling is in effect. This includes access and network queue schedulers at the SAP, multiservice site, subscriber, and port level.

By default, orphaned queues, policers, and schedulers are allocated bandwidth after all queues, policers, and schedulers in the parented hierarchy have had bandwidth allocated within-CIR and above-CIR. Therefore, an orphaned scheduler, policer, or queue can be considered as being foster parented by the port scheduler. Orphaned queues, policers, and schedulers have an inherent port scheduler association as shown below:

Within-CIR priority = 1

Within-CIR weight = 0

Above-CIR priority = 1

Above-CIR weight = 0

The above-CIR weight = 0 value is only used for orphaned policers, queues, and schedulers on port scheduler enabled egress ports. The system interprets weight = 0 as priority level 0 and only distributes bandwidth to level 0 when all other properly parented queues, policers, and schedulers have received bandwidth. Orphaned queues, policers, and schedulers all have equal priority to the remaining port bandwidth.

The default orphan behavior can be overridden for each port scheduler policy by using the orphan override command. The orphan override command accepts the same parameters as the port parent command. When the orphan override command is executed, all orphan queues, policers, and schedulers are treated in a similar fashion as other properly parented queues, policers, and schedulers based on the override parenting parameters.
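The parent-resolution rules for a single policer or queue can be summarized as a decision function. This Python sketch is hypothetical: it treats a missing local scheduler as an orphan condition, does not follow the chain upward when a local scheduler is itself orphaned, and assumes that without a port scheduler policy no foster parent applies. The default orphan association mirrors the inherent values listed above (within-CIR priority 1, weight 0; above-CIR priority 1, weight 0).

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class PortParent:
    level: int           # above-CIR priority level
    weight: int          # above-CIR weight
    cir_level: int = 1   # within-CIR priority level
    cir_weight: int = 0  # 0 disables the within-CIR association


# Inherent association used for orphans when no orphan override exists.
DEFAULT_ORPHAN = PortParent(level=1, weight=0, cir_level=1, cir_weight=0)


def resolve_parent(port_parent: Optional[PortParent],
                   local_parent: Optional[str],
                   local_schedulers: Set[str],
                   port_scheduler_applied: bool,
                   orphan_override: Optional[PortParent] = None):
    if port_parent is not None and port_scheduler_applied:
        return ("port", port_parent)
    if local_parent is not None and local_parent in local_schedulers:
        return ("local", local_parent)
    if not port_scheduler_applied:
        # Assumption: with no port scheduler policy there is no foster parent.
        return ("orphan", None)
    # Foster parented by the port scheduler, optionally via orphan override.
    return ("orphan", orphan_override or DEFAULT_ORPHAN)


print(resolve_parent(None, "sched-a", {"sched-b"}, True))
```

In the example, the named local scheduler does not exist, so the queue is orphaned and falls back to the default level 1, weight 0 association.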

It is expected that an orphan condition is not the wanted state for a queue, policer, or scheduler and is the result of a temporary configuration change or configuration error.

Congestion monitoring on egress port scheduler

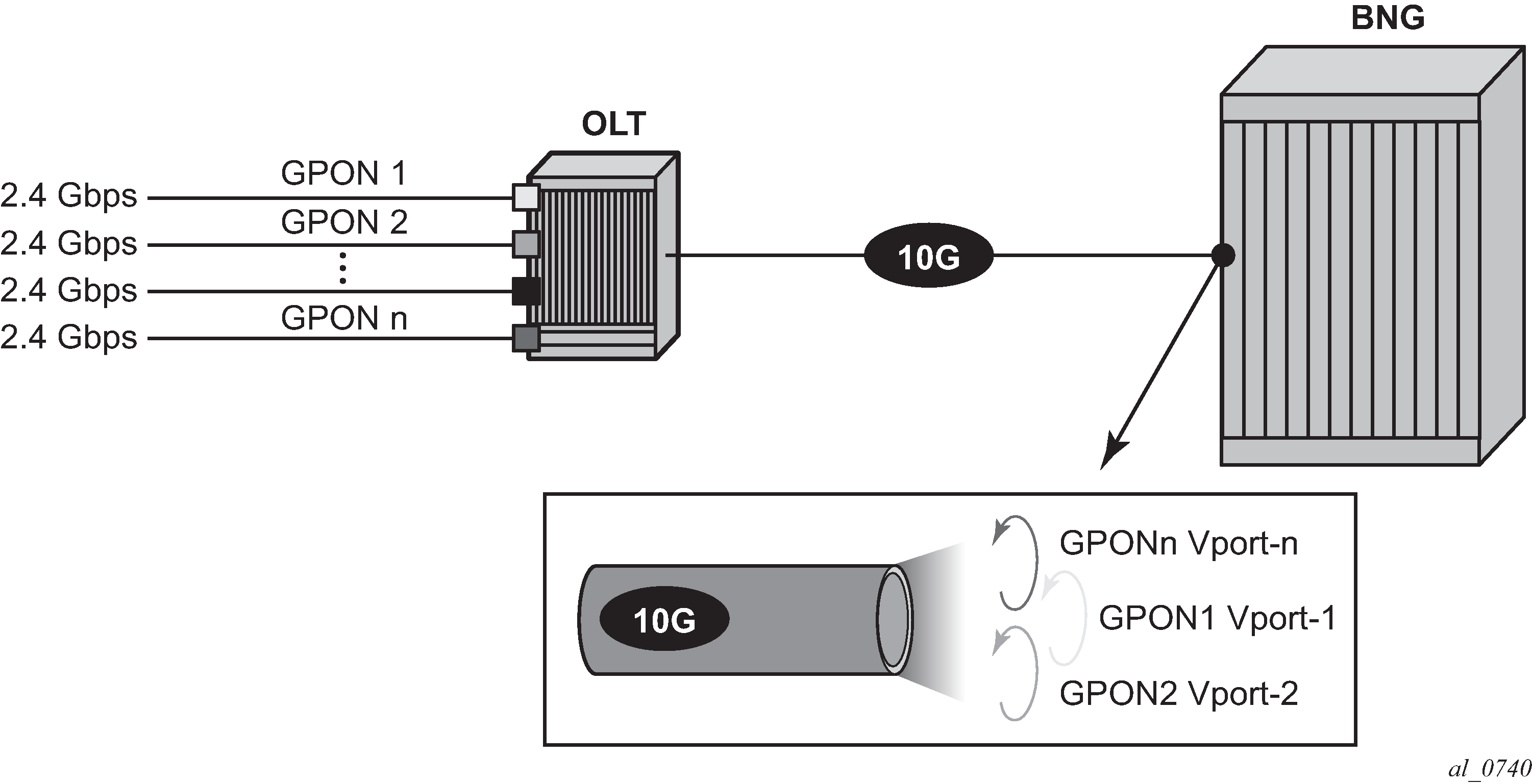

A typical example of congestion monitoring on an Egress Port Scheduler (EPS) is when the EPS is configured within a Vport. A Vport is a construct in an H-QoS hierarchy that can be used to control the bandwidth associated with an access network element (such as a GPON port, OLT, or DSLAM) or a retailer that has subscribers on an access node (among other retailers).

The example in GPON bandwidth control through Vport shows Vports representing GPON ports on an OLT. For capacity planning purposes, it is necessary to know if the GPON ports (Vports) are congested. Frequent and prolonged congestion on the Vport prompts the operator to increase the offered bandwidth to its subscribers by allocating additional GPON ports and subsequently moving the subscribers to the newly allocated GPON ports.

There are no forward/drop counters directly associated with the EPS. Instead, the counters are maintained on a per queue level. Consequently, any indication of the congestion level on the EPS is derived from the queue counters that are associated with the specific EPS.

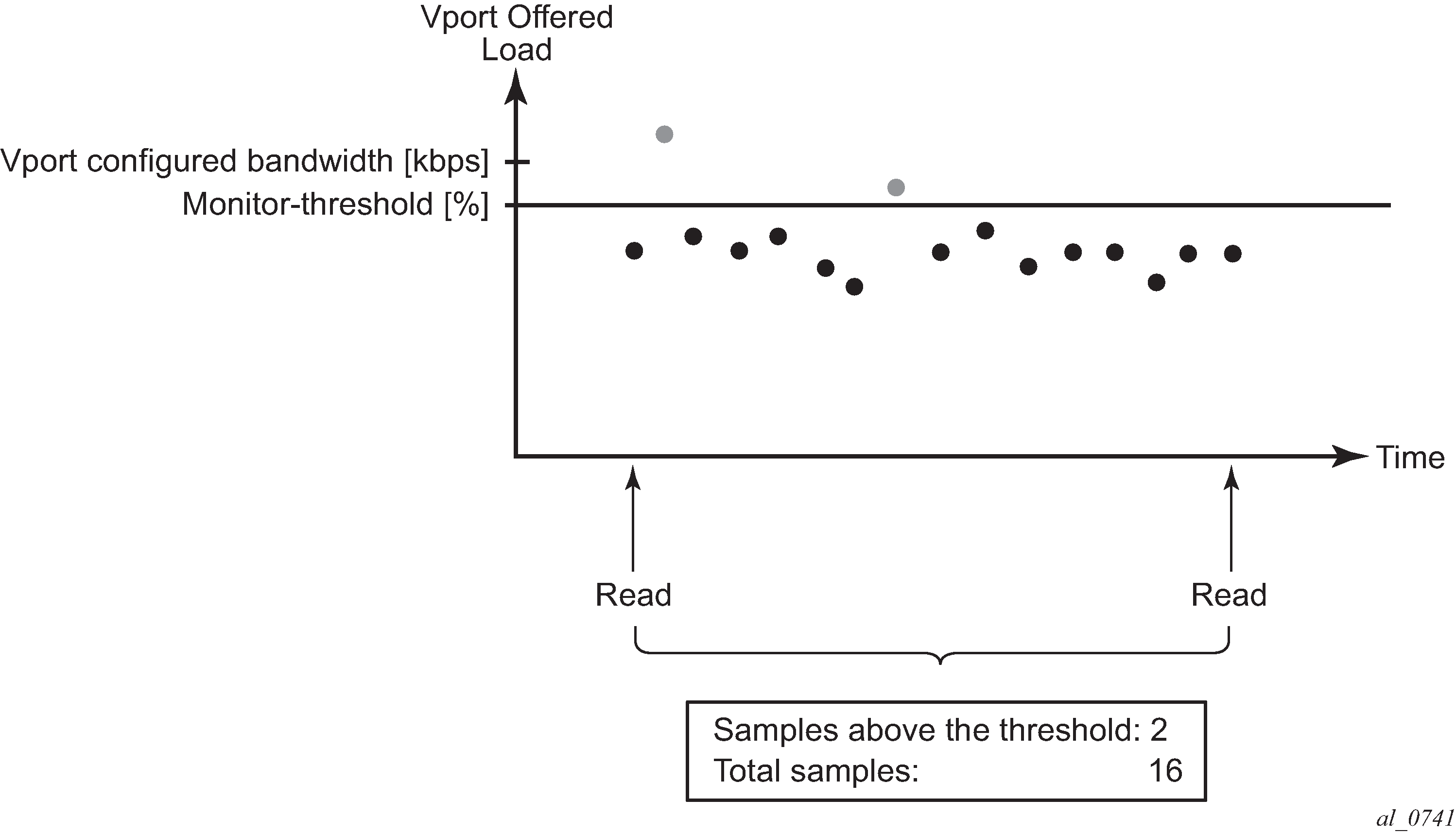

The EPS congestion monitoring capabilities rely on a counter that records the number of times that the offered EPS load (measured at the queue level) crossed the predefined bandwidth threshold levels within an operator-defined timeframe. This counter is called the exceed counter. The rate comparison calculation (offered rate vs threshold) is executed several times per second and the calculation interval cannot be influenced externally by the operator.

The monitoring threshold can be configured via CLI per aggregate EPS rate, EPS level or EPS group. The threshold is applicable to PIR rates.

To enable congestion monitoring on EPS, monitoring must be explicitly enabled under the Vport object itself or under the physical port when the EPS is attached directly to the physical port. In addition, the monitoring threshold within the EPS must be configured.

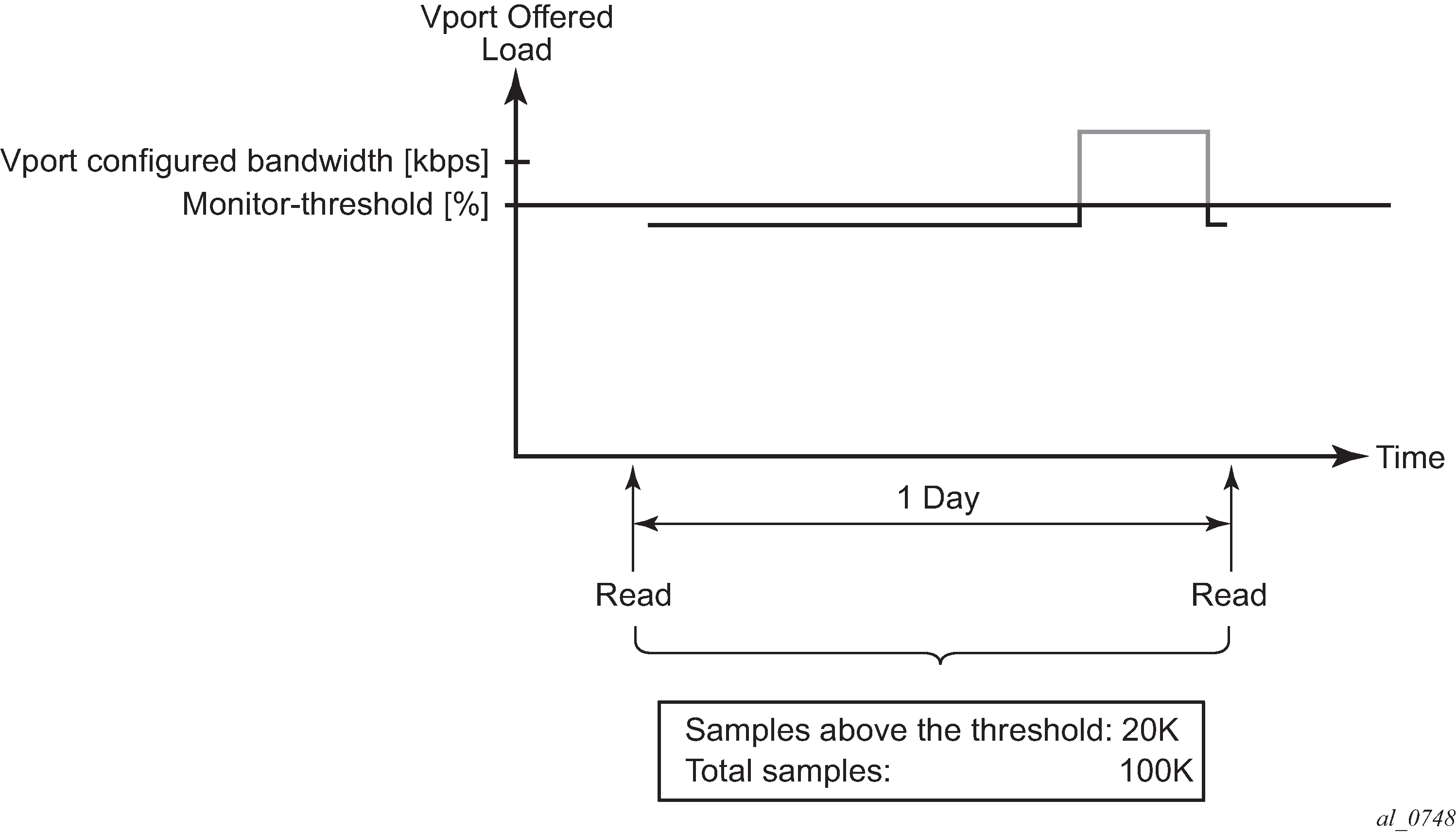

Two examples of congestion monitoring on an EPS that is configured under the Vport are shown in Exceed counts and Exceed counts (severe congestion). Exceed counts (severe congestion) shows more severe congestion than Exceed counts. The EPS exceed counter (the number of dots above the threshold line) can be obtained via a CLI show command or read directly via MIBs.

When the exceed counter value is obtained, the counter should be cleared, which resets the exceed counter and number of samples to zero. This is because the longer the interval between a clear and a show or read, the more diluted the congestion information becomes. For example, 100 threshold exceeds within a 5-minute interval depicts a more accurate congestion picture compared to 100 threshold exceeds within a 5-hour interval.
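The exceed counter and its clear operation can be modeled with a small hypothetical Python class. The strictly-greater-than comparison, the absolute-rate threshold, and the method names are assumptions for illustration; the real sampling runs in the system several times per second and is not operator controlled.

```python
class ExceedCounter:
    """Hypothetical model of an EPS exceed counter for one monitored rate."""

    def __init__(self, threshold):
        self.threshold = threshold  # configured threshold (Mb/s, assumed)
        self.exceeds = 0
        self.samples = 0

    def sample(self, offered_rate):
        # One periodic comparison of offered rate against the threshold.
        self.samples += 1
        if offered_rate > self.threshold:
            self.exceeds += 1

    def read_and_clear(self):
        # A show/read followed by a clear resets both counts to zero.
        reading = (self.exceeds, self.samples)
        self.exceeds = 0
        self.samples = 0
        return reading


counter = ExceedCounter(threshold=800)
for rate in (500, 900, 850, 700, 1000):
    counter.sample(rate)
exceeds, samples = counter.read_and_clear()
print(exceeds, samples, exceeds / samples)
```

Dividing exceeds by samples gives the fraction of samples that were above the threshold between the previous clear and this read (3 of 5, or 60%, in the example).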

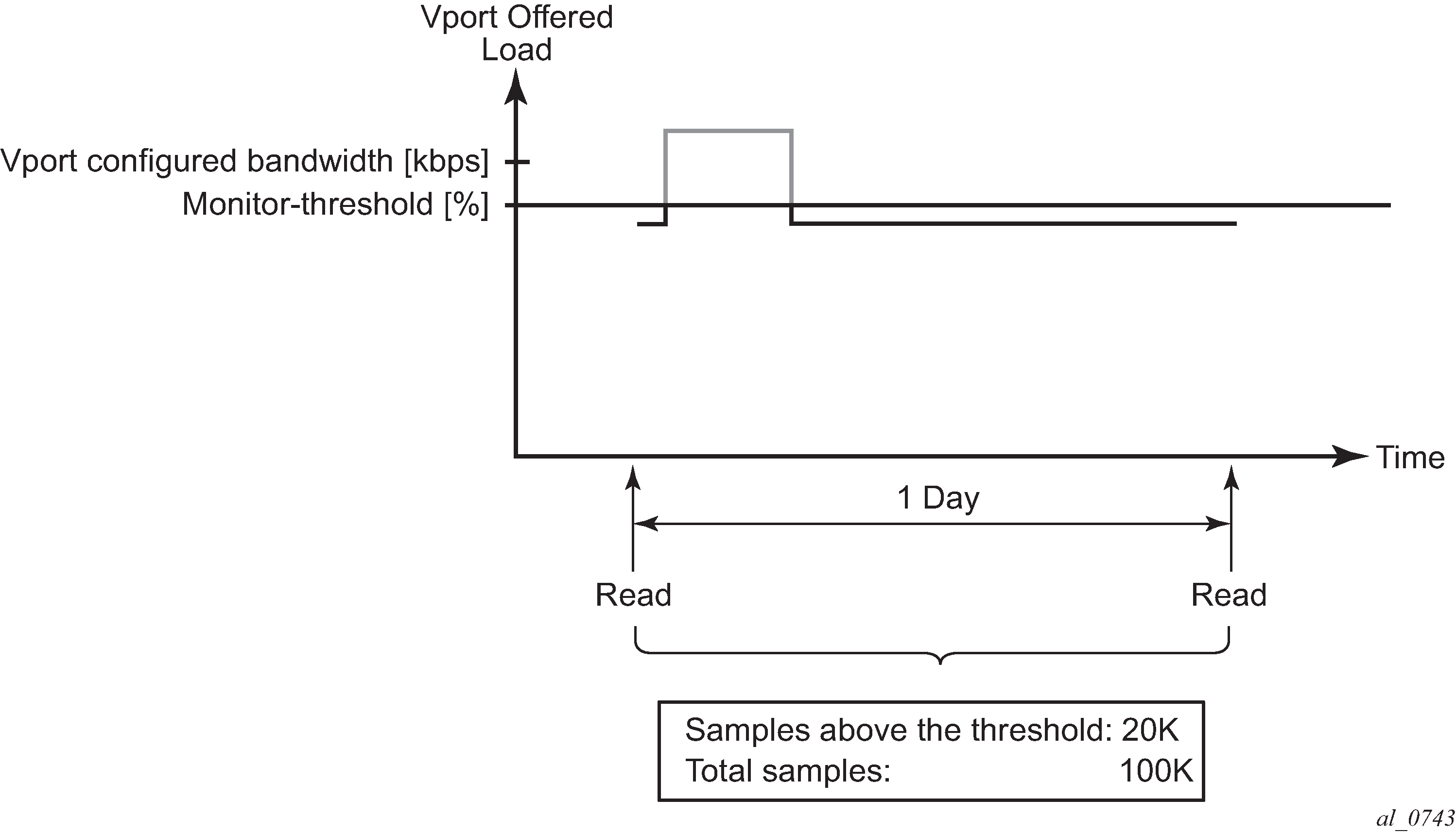

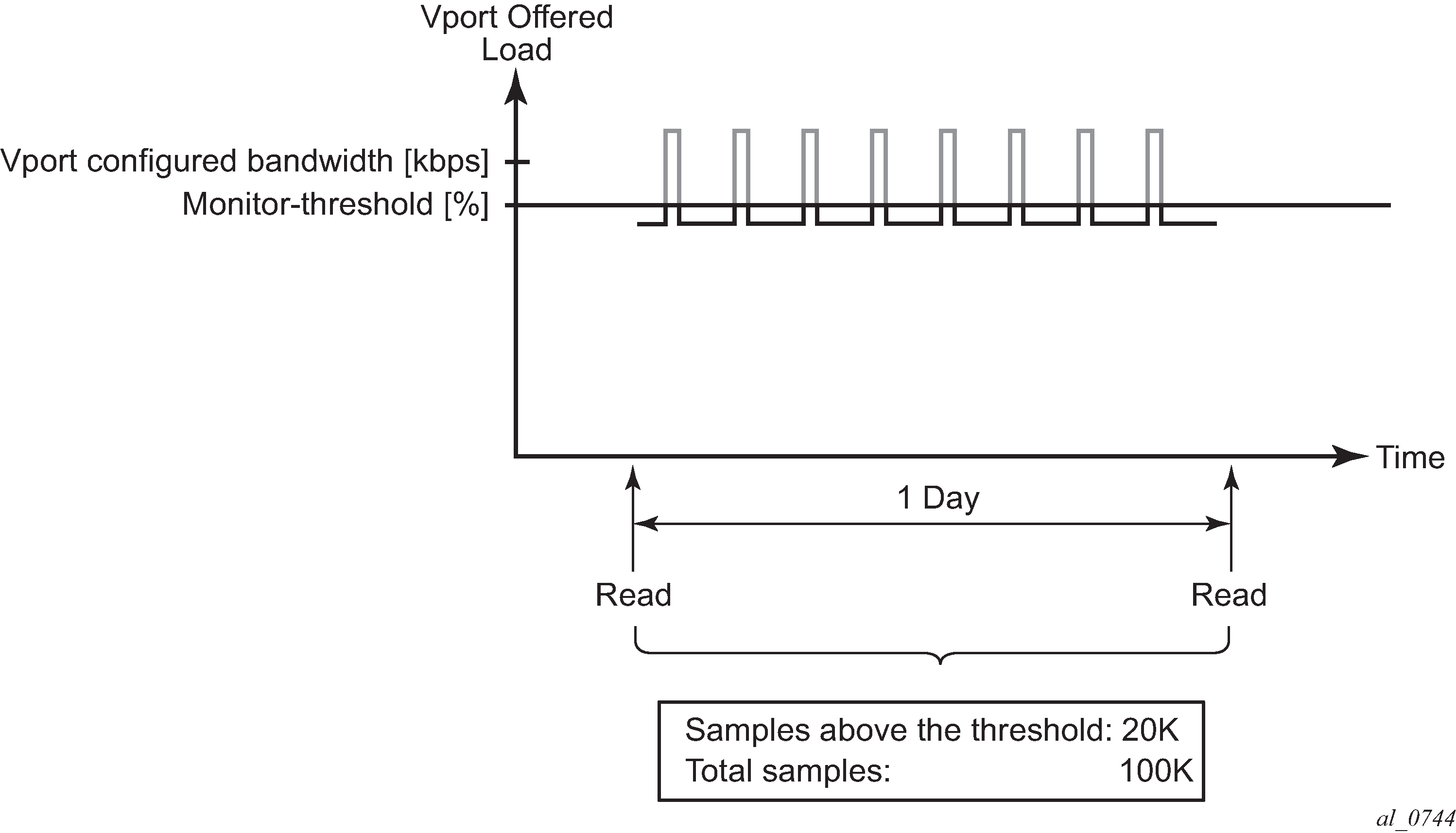

Determining the time of congestion (example 1), Determining the time of congestion (example 2), and Determining the time of congestion (example 3) show how a reading interval that is too long reduces the ability to determine when congestion occurred. The same reading in all three examples can represent different congestion patterns occurring at different times between two consecutive reads. Neither the congestion pattern nor the exact time of congestion can be determined from the reading itself; the reading only indicates how many times congestion occurred between the two consecutive readings. In these examples, an operator can determine that the link was congested 20% of the time during a one-day period, but cannot pinpoint when within that day the congestion occurred. To determine the time of the congestion more accurately, the operator must collect the information more frequently. For example, if the information is collected every 30 minutes, the operator can determine the part of the day during which congestion occurred to within 30 minutes.

Scalability, performance, and operation

Scalability and performance are driven by the number of entities for which congestion monitoring is enabled on each line card.

Each statistics-gathering operation requires a show or read followed by a clear. The shorter the interval between consecutive operations, the more accurately the results reflect the congestion state of the EPS.

If the clear operation is not executed after the show or read operation, the external statistics-gathering entity (external server) must perform additional operations, such as subtracting the statistics of two consecutive reads, to obtain the delta between them.
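When counters are not cleared, the external server has to difference consecutive reads itself. The following Python sketch shows one way to do that. EpsReading and interval_delta are hypothetical names, and treating a decrease in the sample count as evidence of an intervening clear is an assumption, not documented behavior.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EpsReading:
    exceed_count: int  # threshold exceeds accumulated since the last clear
    sample_count: int  # samples accumulated since the last clear


def interval_delta(prev: EpsReading, curr: EpsReading) -> EpsReading:
    """Statistics for the interval between two reads when no clear is issued."""
    if curr.sample_count < prev.sample_count:
        # The sample count went backwards, so (by assumption) a clear happened
        # between the two reads and the current reading is the interval value.
        return curr
    return EpsReading(
        exceed_count=curr.exceed_count - prev.exceed_count,
        sample_count=curr.sample_count - prev.sample_count,
    )


delta = interval_delta(EpsReading(10, 100), EpsReading(25, 160))
print(delta)
```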

The recommended minimum polling interval at a higher scale (high number of monitoring entities) is 15 minutes per monitoring entity.

If statistics are obtained via SNMP, the relevant MIB entries corresponding to the show command are:

tPortEgrVPortMonThrEntry

tPortEgrMonThrEntry

Clearing of the statistics can also be performed through a common MIB entry, corresponding to a clear command: tmnxClearEntry.

Restrictions

The scalability and performance are driven by the number of entities for which congestion monitoring is enabled on each line card.

EPS congestion monitoring is supported only on Ethernet ports.

If EPS is applied under Vports, the congestion monitoring mechanism does not provide any indication of whether the physical port was congested. For example, if the physical port is congested, it distributes less bandwidth to its Vports than it otherwise would. Therefore, the Vport offered load appears as less than it actually is, giving an impression of no congestion.

Changing EPS parameters dynamically does not automatically update the congestion monitoring statistics. Therefore, it is recommended that the congestion monitoring statistics be cleared after the EPS parameter values are changed.

If EPS is configured under a LAG, the failure of the active link causes an interruption in statistics collection.

Frame-based accounting

The standard accounting mechanism uses ‘packet-based’ rules that account for the DLC header, any existing tags, the Ethernet payload, and the 4-byte CRC. The Ethernet framing overhead, which includes the Inter-Frame Gap (IFG) and preamble (20 bytes total), is not included in packet-based accounting. When frame-based accounting is enabled, the 20-byte framing overhead is included in the queue or policer CIR, PIR, and scheduling operations, allowing these operations to take into account the on-wire bandwidth consumed by each Ethernet packet.
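To make the 20-byte difference concrete, the following Python sketch compares packet-based and frame-based byte counts for a few packet sizes. The helper accounted_bytes is hypothetical; it only shows why frame-based accounting weighs small packets much more heavily than large ones.

```python
ETH_FRAMING_OVERHEAD = 20  # bytes: 12-byte IFG + 7-byte preamble + 1-byte SFD


def accounted_bytes(packet_bytes: int, frame_based: bool) -> int:
    """Bytes charged against CIR/PIR for one packet.

    `packet_bytes` covers the DLC header, tags, payload, and 4-byte CRC, as in
    packet-based accounting; frame-based accounting adds the framing overhead.
    """
    return packet_bytes + ETH_FRAMING_OVERHEAD if frame_based else packet_bytes


for size in (64, 512, 1518):
    packet = accounted_bytes(size, frame_based=False)
    frame = accounted_bytes(size, frame_based=True)
    print(f"{size:>5}-byte packet: {frame} bytes on the wire, "
          f"{frame / packet - 1:.1%} more than packet-based accounting")
```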

Because the native queue accounting functions (stats, CIR, and PIR) are based on packet sizes and do not include Ethernet frame encapsulation overhead, the system must manage the conversion between packet-based and frame-based accounting. To accomplish this, the system requires that a policer or queue operating in frame-based accounting mode be managed by a virtual scheduler policy or by a port virtual scheduler policy. Egress policers or queues can use either port or service schedulers to accomplish frame-based accounting, but ingress queues are limited to service-based scheduling policies.

Frame-based accounting for a policer or queue is enabled either through a frame-based accounting command defined at the scheduling policy level associated with the policer or queue, or through a policer or queue frame-based accounting parameter on the aggregate rate limit command associated with the queue’s SAP, multiservice site, or subscriber or multiservice site context. Packet byte offset settings are not included in the applied rate when frame-based accounting is configured; however, the offsets are applied to the statistics.

Operational modifications

To add frame overhead to the existing QoS Ethernet packet handling functions, the system uses the already existing virtual scheduling capability of the system. The system currently monitors each queue included in a virtual scheduler to determine its offered load. This offered load value is interpreted based on the defined CIR and PIR threshold rates of the policer or queue to determine bandwidth offerings from the policer or queue virtual scheduler. Frame-based usage on the wire allows the port bandwidth to be accurately allocated to each child policer and queue on the port.

Existing egress port-based virtual scheduling

The port-based virtual scheduling mechanism takes the native packet-based accounting results from the policer or queue and adds 20 bytes to each packet to derive the frame-based offered load of the policer or queue. The ratio between the frame-based offered load and the packet-based offered load is then used to determine the effective frame-based CIR and frame-based PIR thresholds for the policer or queue. When the port virtual scheduler computes the amount of bandwidth allowed to the policer or queue (in a frame-based fashion), the bandwidth is converted back to a packet-based value and used as the operational PIR of the policer or queue. The native packet-based mechanisms of the policer or queue continue to function, but the maximum operational rate is governed by frame-based decisions.
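The conversion described above can be sketched in Python, assuming per-interval counts of packets and packet-based octets are available for the policer or queue. OfferedLoad, frame_ratio, frame_thresholds, and operational_pir are hypothetical names, and returning a ratio of 1.0 for an idle queue is an assumption made to avoid dividing by zero.

```python
from dataclasses import dataclass
from typing import Tuple

ETH_FRAMING_OVERHEAD = 20  # IFG + preamble bytes added per Ethernet packet


@dataclass
class OfferedLoad:
    packets: int  # packets offered during the measurement interval
    octets: int   # packet-based octets offered (no IFG or preamble)


def frame_ratio(load: OfferedLoad) -> float:
    """Ratio of frame-based to packet-based offered load."""
    if load.octets == 0:
        return 1.0  # assumption: with no traffic, treat the ratio as neutral
    return (load.octets + ETH_FRAMING_OVERHEAD * load.packets) / load.octets


def frame_thresholds(cir: float, pir: float, ratio: float) -> Tuple[float, float]:
    """Packet-based CIR and PIR expressed as frame-based thresholds."""
    return cir * ratio, pir * ratio


def operational_pir(frame_allocation: float, ratio: float) -> float:
    """Frame-based bandwidth from the port scheduler, converted back to packet-based."""
    return frame_allocation / ratio


load = OfferedLoad(packets=1000, octets=80_000)  # 80-byte packets on average
ratio = frame_ratio(load)                         # (80000 + 20000) / 80000
frame_cir, frame_pir = frame_thresholds(cir=20.0, pir=80.0, ratio=ratio)
op_pir = operational_pir(frame_allocation=50.0, ratio=ratio)  # Mb/s granted by port
```

With 80-byte packets the ratio is 1.25, so an 80 Mb/s packet-based PIR becomes a 100 Mb/s frame-based threshold, and a 50 Mb/s frame-based grant from the port scheduler becomes a 40 Mb/s packet-based operational PIR.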

Behavior modifications for frame-based accounting

The frame-based accounting feature extends this capability to allow the policer or queue CIR and PIR thresholds to be defined as frame-based values instead of packet-based values. The policer or queue continues to use its packet-based mechanisms internally, but the provisioned frame-based CIR and PIR values are continuously re-evaluated based on the ratio between the calculated frame-based offered load and the actual packet-based offered load. As a result, the operational packet-based CIR and PIR of the policer or queue are accurately modified during each iteration of the virtual scheduler to represent the provisioned frame-based CIR and PIR. Packet byte offset settings are not included in the applied rate when frame-based accounting is configured; however, the offsets are applied to the statistics.

Virtual scheduler rate and queue rate parameter interpretation

Normally, a scheduler policy contains rates that indicate packet-based accounting values. When the children associated with the policy are operating in frame-based accounting mode, the parent schedulers must also be governed by frame-based rates. Because either port-based or service-based virtual scheduling is required for queue or policer frame-based operation, enabling frame-based operation is configured at either the scheduling policy or aggregate rate limit command level. All policers and queues associated with the policy or the aggregate rate limit command inherit the frame-based accounting setting from the scheduling context.

When frame-based accounting is enabled, the policer and queue CIR and PIR settings are automatically interpreted as frame-based values. If a SAP ingress QoS policy is applied with a queue PIR set to 100 Mb/s on two different SAPs, one associated with a policy with frame-based accounting enabled and the other without frame-based accounting enabled, the 100 Mb/s rate is interpreted differently for each queue. The frame-based accounting queue adds 20 bytes to each packet received by the queue and limits the rate based on the extra overhead. The packet-based accounting queue does not add the 20 bytes per packet and therefore allows more packets through per second. Packet byte offset settings are not included in the applied rate when frame-based accounting is configured; however, the offsets are applied to the statistics.
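The different interpretation of the same 100 Mb/s PIR can be quantified with a short Python sketch. It assumes fixed 64-byte packets and ignores packet byte offsets; max_packet_rate is a hypothetical helper.

```python
ETH_FRAMING_OVERHEAD = 20  # IFG + preamble bytes per Ethernet packet


def max_packet_rate(pir_bps: float, packet_bytes: int, frame_based: bool) -> float:
    """Packets per second that fit under `pir_bps` for fixed-size packets."""
    accounted = packet_bytes + (ETH_FRAMING_OVERHEAD if frame_based else 0)
    return pir_bps / (accounted * 8)


pir = 100e6  # the same 100 Mb/s queue PIR applied on two SAPs
packet_mode = max_packet_rate(pir, 64, frame_based=False)
frame_mode = max_packet_rate(pir, 64, frame_based=True)
print(f"packet-based: {packet_mode:,.0f} pps, frame-based: {frame_mode:,.0f} pps")
```

The frame-based queue admits fewer packets per second because each 64-byte packet is charged as 84 bytes.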

Similarly, the rates defined in the scheduling policy with frame-based accounting enabled are automatically interpreted as frame-based rates.

The port-based scheduler aggregate rate limit command always interprets its configured rate limit value as a frame-based rate. Setting the frame-based accounting parameter on the aggregate rate limit command only affects the policers and queues managed by the aggregate rate limit and converts them from packet-based to frame-based accounting mode.

Virtual scheduling unused bandwidth distribution

The Hierarchical QoS (H-QoS) mechanism is designed to enforce a user-definable hierarchical shaping behavior on an arbitrary set of policers and queues. It does this by monitoring the offered rate of each policer and queue and using the result as an input to a virtual scheduler hierarchy defined by the user. The hierarchy consists of a number of virtual schedulers, each with a configurable maximum rate, and attachment parameters between them. These parameters consist of weights and priority levels used to distribute the available bandwidth top-down through the hierarchy, with the queues at the bottom. The bandwidth provided to each member policer and queue by the virtual schedulers is then configured as an operational PIR on the corresponding hardware policer or queue, which prevents that policer or queue from receiving more hardware scheduler bandwidth than dictated by the virtual scheduler.
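The top-down distribution described above can be illustrated for a single virtual scheduler with a simplified Python sketch. It assumes strict priority between levels (higher first) and weighted water-filling within a level, with each child's demand already capped by its admin PIR. It does not model CIR-based passes, multiple tiers, or the operational-PIR burst behavior described in the next section. Child and distribute are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Child:
    name: str
    level: int     # priority level; higher levels are served first
    weight: int    # weight relative to other children at the same level
    demand: float  # offered rate, already capped by the child's admin PIR


def distribute(rate: float, children: List[Child]) -> Dict[str, float]:
    """Split a scheduler rate: strict priority between levels, weighted within a level."""
    alloc = {c.name: 0.0 for c in children}
    remaining = rate
    for level in sorted({c.level for c in children}, reverse=True):
        active = [c for c in children if c.level == level and c.demand > 0]
        # Weighted water-filling: satisfy children whose demand fits in their
        # weighted share, then re-split what is left among the others.
        while remaining > 1e-9 and active:
            total_weight = sum(c.weight for c in active)
            if total_weight == 0:
                break  # only weight-0 children left; not modeled here
            share = remaining / total_weight
            satisfied = [c for c in active if c.demand - alloc[c.name] <= share * c.weight]
            if not satisfied:
                for c in active:
                    alloc[c.name] += share * c.weight
                remaining = 0.0
                break
            for c in satisfied:
                remaining -= c.demand - alloc[c.name]
                alloc[c.name] = c.demand
                active.remove(c)
    return alloc


children = [
    Child("voice", level=3, weight=1, demand=30.0),
    Child("data1", level=1, weight=3, demand=100.0),
    Child("data2", level=1, weight=1, demand=10.0),
]
alloc = distribute(100.0, children)
```

Here voice (level 3) is fully served first. At level 1, data2 needs less than its weighted share and is satisfied, and data1 receives the remaining 60.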

Default unused bandwidth distribution

The default behavior of H-QoS is to throttle only those active policers and queues that are currently exceeding the bandwidth allocated to them by their controlling virtual schedulers. A policer or queue that is currently operating below its share of bandwidth, including an inactive one, is allowed an operational PIR greater than its current rate. The operational PIR for a policer or queue is capped by its admin PIR and set to its fair share of the available bandwidth, based on its priority level in the H-QoS hierarchy and its weight within that priority level. As a result, between H-QoS iterations, a policer or queue below its share of bandwidth may burst to a higher rate and momentarily overrun the prescribed aggregate rate.

This default behavior works well in situations where an aggregate rate is being applied as a customer capping function to limit excessive use of network resources. However, in specific circumstances where an aggregate rate must be maintained because of limited downstream QoS abilities or because of downstream priority-unaware aggregate policing, a more conservative behavior is required. The following functions can be used to control the unused bandwidth distribution:

The above-offered-cap command within the adv-config-policy provides control of the operational PIR to prevent aggregate rate overrun of each policer or queue. This is accomplished by defining how much the operational PIR of a queue or policer is allowed to exceed its current allocated bandwidth.

The limit-unused-bandwidth (LUB) command.

Limit unused bandwidth

The limit-unused-bandwidth (LUB) command protects against exceeding the aggregated bandwidth by adding a LUB second-pass to the H-QoS function, which ensures that the aggregate fair-share bandwidth does not exceed the aggregate rate.

The command can be applied on any tier 1 scheduler within an egress scheduler policy or within any agg-rate node and affects all policers and queues controlled by the object.

When LUB is enabled, the LUB second pass is performed as part of the H-QoS algorithm. The order of operation between H-QoS and LUB is as follows:

- Policer or queue offered rate calculation.
- Offered rate modifications based on adv-config-policy offered-measurement parameters.
- H-QoS bandwidth determination based on the modified offered rates.
- LUB second pass, where LUB is enabled, to ensure aggregate rates are not exceeded.
- Bandwidth distribution modification based on adv-config-policy bandwidth-distribution parameters.
- Each policer or queue operational PIR is then modified.

When LUB is enabled on a scheduler rate or aggregate rate, a LUB context is created containing the rate and the associated policers and queues that the rate controls. Because a policer or queue may be controlled by multiple LUB enabled rates in a hierarchy, the policer or queue may be associated with multiple LUB contexts.

LUB is applied to the contexts where it is enabled. LUB first determines how much of the aggregate rate is left unused by the rates of its member policers and queues after the first pass of the H-QoS algorithm. This is the bandwidth currently available for distribution between the members. LUB then distributes this bandwidth to its members based on each member’s LUB-weight, which is determined as follows:

If a policer or queue is using all of its default H-QoS assigned rate, its LUB-weight is 0. It does not participate in the bandwidth distribution because it cannot accept more bandwidth.

If a policer or queue has accumulated work, its LUB-weight is set to 50. The work is determined by the queue having built up a depth of packets, or the offered rate of a policer or queue increasing since last sample period. The aim is to assign more of the unused bandwidth to policers and queues needing more capacity. No attempt is made to distribute based on the relative priority level or weight within the hierarchy of a policer or queue.

Otherwise, the LUB-weight of the policer or queue is 1.

The resulting operational PIRs are then set so that the scheduler or agg-rate rate is not exceeded. To achieve the best precision, policers and queues must be configured with adaptation-rule pir max cir max to prevent the actual rate used from exceeding the rate determined by LUB.
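A simplified Python sketch of one LUB context follows. It applies the three LUB-weight rules above and assumes that the unused bandwidth is the aggregate rate minus the rates the members currently use. It ignores policers and queues that belong to several LUB contexts, as well as the adv-config-policy adjustments. Member, lub_weight, and lub_pass are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Member:
    name: str
    hqos_rate: float     # rate assigned to the member by the first H-QoS pass
    current_rate: float  # rate the member is currently using
    has_work: bool       # queue depth built up, or offered rate increased


def lub_weight(m: Member) -> int:
    """LUB-weight per the three rules above."""
    if m.current_rate >= m.hqos_rate:
        return 0   # using all of its H-QoS rate: cannot accept more bandwidth
    if m.has_work:
        return 50  # accumulated work: favor it for the unused bandwidth
    return 1


def lub_pass(agg_rate: float, members: List[Member]) -> Dict[str, float]:
    """Split the unused part of agg_rate among members in proportion to LUB-weight."""
    unused = max(agg_rate - sum(m.current_rate for m in members), 0.0)
    weights = {m.name: lub_weight(m) for m in members}
    total = sum(weights.values())
    return {
        m.name: m.current_rate + (unused * weights[m.name] / total if total else 0.0)
        for m in members
    }


members = [
    Member("q1", hqos_rate=40.0, current_rate=40.0, has_work=False),
    Member("q2", hqos_rate=60.0, current_rate=20.0, has_work=True),
    Member("q3", hqos_rate=60.0, current_rate=0.0, has_work=False),
]
op_pir = lub_pass(100.0, members)
```

The resulting operational PIRs add up to the 100 Mb/s aggregate rate instead of each being allowed to burst up to it, and q2, which has accumulated work, receives most of the unused 40 Mb/s.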

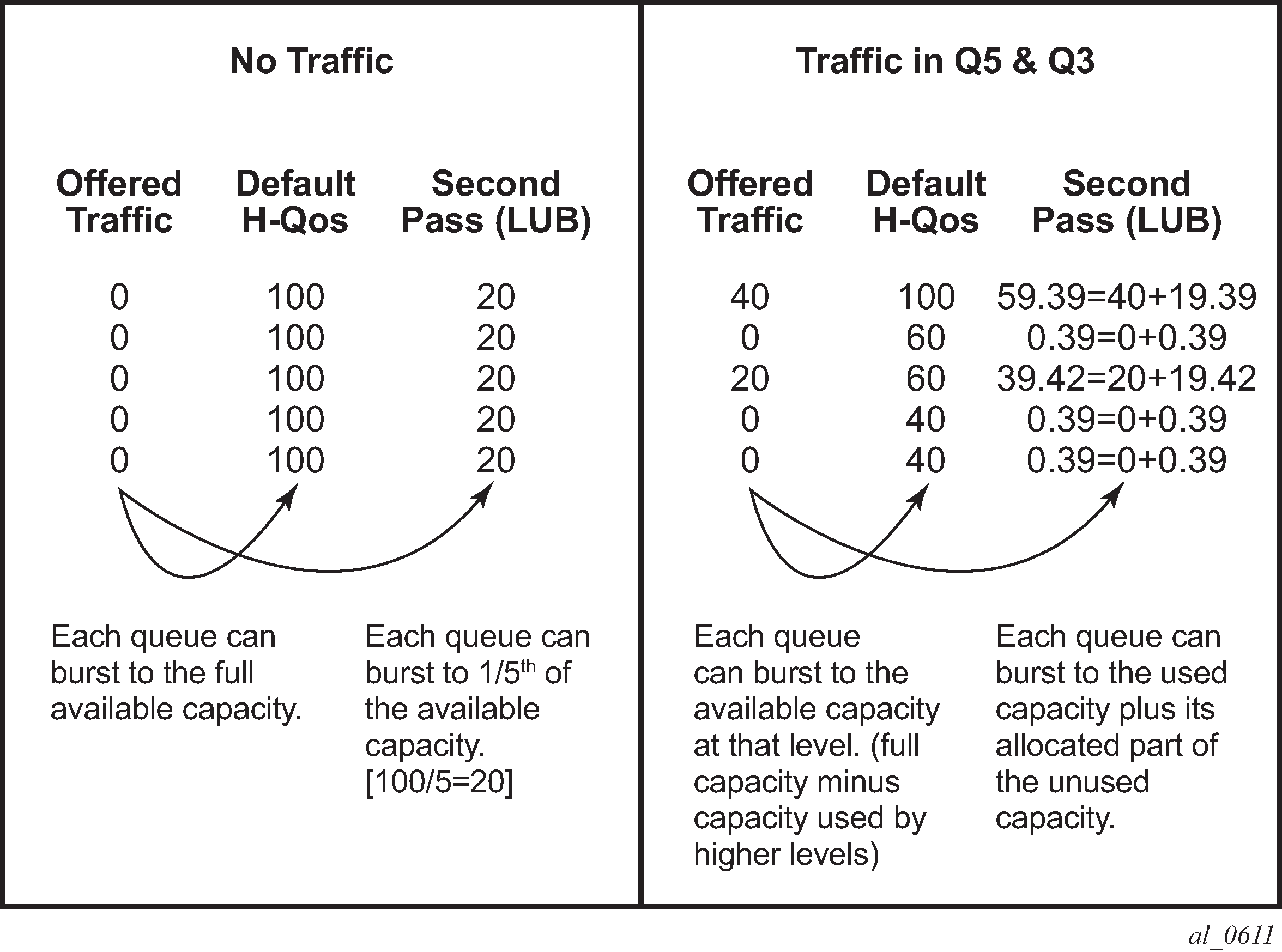

Example

Limit unused bandwidth example shows a simple scenario with five egress SAP queues, none of which has a configured rate, each parented to a different level of a parent scheduler with a rate of 100 Mb/s.

The resulting bandwidth distribution is shown in Resulting bandwidth distribution; first, when no traffic is being sent with and without LUB applied, then when 20 Mb/s and 40 Mb/s are sent on queues 3 and 5, respectively, again with and without LUB applied. As shown, the distribution of bandwidth in the case where traffic is sent and LUB is enabled is based on the LUB-weights.

Configuring port scheduler policies

Port scheduler structure

Every port scheduler supports eight strict priority levels with a two-pass bandwidth allocation mechanism for each priority level. Priority levels 8 through 1 (level 8 is the highest priority) are available for port-parent association for child queues, policers, and schedulers. Each priority level supports a maximum rate limit parameter that limits the amount of bandwidth that may be allocated to that level. A CIR parameter is also supported that limits the amount of bandwidth allocated to the priority level for the child queue or policer offered load, within their defined CIR. An overall maximum rate parameter defines the total bandwidth that is allocated to all priority levels.
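One plausible reading of the two-pass allocation is sketched below in Python. A first pass serves within-CIR load from level 8 down to level 1, limited by each level's CIR. A second pass serves the remaining load, limited by each level's rate, all within the overall max-rate. The per-level aggregation, the names LevelDemand and port_scheduler, and the exact pass semantics are assumptions for illustration, not the documented algorithm.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class LevelDemand:
    cir_load: float    # offered load of the level's children that is within their CIR
    total_load: float  # total offered load of the level's children
    cir: float         # CIR limit configured for the priority level
    rate: float        # maximum rate limit configured for the priority level


def port_scheduler(max_rate: float, levels: Dict[int, LevelDemand]) -> Dict[int, float]:
    """Two passes over priority levels 8..1 (strict priority, highest first)."""
    alloc = {lvl: 0.0 for lvl in levels}
    remaining = max_rate
    # Pass 1: within-CIR load, limited by each level's CIR and rate.
    for lvl in sorted(levels, reverse=True):
        d = levels[lvl]
        grant = min(d.cir_load, d.cir, d.rate, remaining)
        alloc[lvl] += grant
        remaining -= grant
    # Pass 2: remaining load, limited by each level's rate.
    for lvl in sorted(levels, reverse=True):
        d = levels[lvl]
        grant = max(0.0, min(d.total_load - alloc[lvl], d.rate - alloc[lvl], remaining))
        alloc[lvl] += grant
        remaining -= grant
    return alloc


levels = {
    8: LevelDemand(cir_load=10.0, total_load=10.0, cir=10.0, rate=20.0),
    3: LevelDemand(cir_load=30.0, total_load=80.0, cir=20.0, rate=100.0),
    1: LevelDemand(cir_load=20.0, total_load=50.0, cir=50.0, rate=100.0),
}
result = port_scheduler(100.0, levels)
```

In this example, level 1 keeps 20 of within-CIR bandwidth even though level 3 has more above-CIR load, because the CIR pass runs before the second pass.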

Special orphan queue and scheduler behavior

When a port scheduler is present on an egress port or channel, the system ensures that all policers, queues, and schedulers receive bandwidth from that scheduler to prevent free-running policers or queues that can cause the aggregate operational PIR of the port or channel to oversubscribe the bandwidth available. When the aggregate maximum rate for the policers and queues on a port or channel operates above the available line rate, the forwarding ratio between the policers and queues is affected by the hardware schedulers on the port and may not reflect the scheduling defined on the port or intermediate schedulers. Policers, queues, and schedulers that are either explicitly attached to the port scheduler using the port-parent command or are attached to an intermediate scheduler hierarchy that is ultimately attached to the port scheduler are managed through the normal eight priority levels. Queues, policers, and schedulers that are not attached directly to the port scheduler and are not attached to an intermediate scheduler that itself is attached to the port scheduler are considered orphaned and, by default, are tied to priority 1 with a weight of 0. All weight 0 policers, queues, and schedulers at priority level 1 are allocated bandwidth after all other children and each weight 0 child is given an equal share of the remaining bandwidth. This default orphan behavior may be overridden at the port scheduler policy by using the orphan-override command. The orphan-override command accepts the same parameters as the port-parent command. When the orphan-override command is executed, the parameters are used as the port parent parameters for all orphans associated with a port using the port scheduler policy.

Packet to frame bandwidth conversion

Another difference between the service-level scheduler-policy and the port-level port-scheduler-policy is in bandwidth allocation behavior. The port scheduler is designed to offer on-the-wire bandwidth. For Ethernet ports, this includes the IFG and the preamble for each frame and represents 20 bytes total per frame. The policers, queues, and intermediate service-level schedulers (a service-level scheduler is a scheduler instance at the SAP, multiservice site, or subscriber or multiservice site profile level) operate based on packet overhead that does not include the IFG or preamble on Ethernet packets. In order for the port-based virtual scheduling algorithm to function, it must convert the policer, queue, and service scheduler packet-based required bandwidth and bandwidth limiters (CIR and rate PIR) to frame-based values. This is accomplished by adding 20 bytes to each Ethernet frame offered at the queue or policer level to calculate a frame-based offered load. Then, the algorithm calculates the ratio increase between the packet-based offered load and the frame-based offered load and uses this ratio to adapt the CIR and rate PIR values for the policer or queue to frame-CIR and frame-PIR values. When a service-level scheduler hierarchy is between the policers, queues, and the port-based schedulers, the ratio between the average frame-offered-load and the average packet-offered-load is used to adapt the scheduler’s packet-based CIR and rate PIR to frame-based values. The frame-based values are then used to distribute the port-based bandwidth down to the policer and queue level.
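For a service-level scheduler between the queues and the port scheduler, the ratio is computed over the aggregate load of its children. A minimal Python sketch follows, assuming all children are measured over the same interval; scheduler_frame_ratio and frame_based_limits are hypothetical names.

```python
from typing import Iterable, Tuple

ETH_FRAMING_OVERHEAD = 20  # IFG + preamble bytes per Ethernet packet


def scheduler_frame_ratio(children: Iterable[Tuple[int, int]]) -> float:
    """Ratio of frame-offered-load to packet-offered-load across all children.

    Each child is a (packets, packet_octets) pair for the same interval, so the
    ratio of the average loads equals the ratio of the summed loads.
    """
    packets = octets = 0
    for child_packets, child_octets in children:
        packets += child_packets
        octets += child_octets
    if octets == 0:
        return 1.0  # assumption: no offered load, no adaptation
    return (octets + ETH_FRAMING_OVERHEAD * packets) / octets


def frame_based_limits(cir: float, pir: float, ratio: float) -> Tuple[float, float]:
    """Adapt a service scheduler's packet-based CIR and rate PIR to frame-based values."""
    return cir * ratio, pir * ratio


ratio = scheduler_frame_ratio([(800, 40_000), (200, 60_000)])  # 50- and 300-byte packets
frame_cir, frame_pir = frame_based_limits(cir=10.0, pir=50.0, ratio=ratio)
```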

Packet over SONET (PoS) and SDH queues on the 7450 ESS and 7750 SR also operate based on packet sizes and do not include on-the-wire frame overhead. However, the port-based virtual scheduler algorithm does not have access to all the frame encapsulation overhead occurring at the framer level. Instead of automatically calculating the difference between the packet-offered-load and the frame-offered-load, the system relies on a value provisioned at the queue level. This avg-frame-overhead parameter is used to calculate the difference between the packet-offered-load and the frame-offered-load; the difference is then added to the packet-offered-load to derive the frame-offered-load. This percentage value must be set correctly for bandwidth to be allocated correctly between queues and service schedulers. If a suitable value cannot be determined, another approach is to artificially lower the maximum rate of the port scheduler to account for the average port framing overhead. Together with a zero or low avg-frame-overhead value, this ensures that the allocated queue bandwidth, rather than the low-level hardware schedulers, controls forwarding behavior.
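The avg-frame-overhead arithmetic, and the alternative of lowering the port scheduler maximum rate, can be sketched in Python. Both helper names are hypothetical, the 0 to 100 percent range check is an assumption, and dividing the line rate by (1 + overhead) is one possible way to choose the lowered rate, not a documented formula.

```python
def pos_frame_offered_load(packet_offered_load: float, avg_frame_overhead_pct: float) -> float:
    """Frame-offered-load derived from a provisioned average overhead percentage."""
    if not 0.0 <= avg_frame_overhead_pct <= 100.0:
        raise ValueError("avg_frame_overhead_pct must be between 0 and 100")
    difference = packet_offered_load * avg_frame_overhead_pct / 100.0
    return packet_offered_load + difference


def lowered_port_max_rate(line_rate: float, framing_overhead_pct: float) -> float:
    """Alternative: shrink the port scheduler max-rate to absorb framing overhead."""
    return line_rate / (1.0 + framing_overhead_pct / 100.0)


frame_load = pos_frame_offered_load(100.0, 5.0)  # 5% overhead on 100 units of packet load
max_rate = lowered_port_max_rate(100.0, 25.0)    # 25% assumed average framing overhead
```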

Aggregate rate limits for directly attached queues

When all policers and queues for a SAP, multiservice site or subscriber or multiservice site instance are attached directly to the port scheduler (using the port-parent command), it is possible to configure an agg-rate limit for the queues. This is beneficial because the port scheduler does not provide a mechanism to enforce an aggregate SLA for a service or subscriber or multiservice site, and the agg-rate limit provides this ability. Queues and policers may be provisioned directly on the port scheduler when it is desirable to manage congestion at the egress port based on class priority instead of on a per-service-object basis.

The agg-rate limit is not supported when one or more policers or queues on the object are attached to an intermediate service scheduler. In this event, it is expected that the intermediate scheduler hierarchy is used to enforce the aggregate SLA. Attaching an agg-rate limit is mutually exclusive to attaching an egress scheduler policy at the SAP or subscriber or multiservice site profile level. When an aggregate rate limit is in effect, a scheduler policy cannot be assigned. When a scheduler policy is assigned on the egress side of a SAP or subscriber or multiservice site profile, an agg-rate limit cannot be assigned.

Because the sap-egress policy defines a policer or queue parent association before the policy is associated with a service SAP or subscriber or multiservice site profile, it is possible for the policy to either not define a port-parent association or define an intermediate scheduler parenting that does not exist. Policers and queues in this state are considered to be orphaned and automatically attached to port scheduler priority 1. Orphaned policers and queues are included in the aggregate rate limiting behavior on the SAP or subscriber or multiservice site instance they are created within.

SAP egress QoS policy queue parenting

A SAP-egress QoS policy policer or queue may be associated with either a port parent or an intermediate scheduler parent. The validity of the parent definition cannot be checked at the time that it is provisioned because the application of the QoS policy is not known until it is applied to an egress SAP, subscriber, or multiservice site profile. Port or intermediate parenting can be decided on a queue-by-queue or policer-by-policer basis, with some policers and queues tied directly to the port scheduler priorities while others are attached to intermediate schedulers.

Network queue QoS policy queue parenting

A network-queue policy only supports direct port parent priority association. Intermediate schedulers are not supported on network ports or channels.

Egress port scheduler overrides

When a port scheduler has been associated with an egress port, it is possible to override the following parameters:

the max-rate allowed for the scheduler

the maximum rate for each priority level 8 through 1

the CIR associated with each priority level 8 through 1

The orphan priority level (level 1) has no configuration parameters and cannot be overridden.

Applying a port scheduler policy to a virtual port

To represent a downstream network aggregation node in the local node scheduling hierarchy, a new scheduling node, referred to as a virtual port (Vport in the CLI), has been introduced. The Vport operates exactly like a port scheduler, except that multiple Vport objects can be configured on the egress context of an Ethernet port.

This feature applies to the 7450 ESS and 7750 SR only.

Applying a port scheduler policy to a Vport shows the use of the Vport on an Ethernet port of a Broadband Network Gateway (BNG). In this case, the Vport represents a specific downstream DSLAM.

The user adds a Vport to an Ethernet port using the following command:

config>port>ethernet>access>egress>vport vport-name create

The Vport is always configured at the port level even when a port is a member of a LAG. The Vport name is local to the port it is applied to but must be the same for all member ports of a LAG. However, it does not need to be unique globally on a chassis.

The user applies a port scheduler policy to a Vport using the following command:

config>port>ethernet>access>egress>vport>port-scheduler-policy port-scheduler-policy-name

A Vport cannot be parented to the port scheduler when the Vport is using a port scheduler policy. The user must ensure that the sum of the max-rate parameter values in the port scheduler policies of all Vport instances on a specific egress Ethernet port does not oversubscribe the port’s rate. If it does, the scheduling behavior degenerates to that of the H/W scheduler on that port. A Vport that uses an agg-rate or a scheduler-policy can be parented to a port scheduler. This is described in Applying aggregate rate limit to a Vport. The application of the agg-rate rate, port-scheduler-policy, and scheduler-policy commands under a Vport is mutually exclusive.
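A pre-provisioning check for this rule can be sketched in Python. vport_headroom is a hypothetical helper, the Vport names are examples, and the rates are assumed to be in kb/s. It simply sums the max-rate values of the port scheduler policies applied to the Vports on one port.

```python
from typing import Dict


def vport_headroom(port_rate: float, vport_max_rates: Dict[str, float]) -> float:
    """Port bandwidth left after the Vport max-rates; raise if they oversubscribe the port."""
    total = sum(vport_max_rates.values())
    if total > port_rate:
        raise ValueError(
            f"Vport max-rate sum {total} exceeds port rate {port_rate}: "
            "scheduling would degenerate to the hardware scheduler"
        )
    return port_rate - total


headroom = vport_headroom(10_000_000, {"dslam-1": 4_000_000, "dslam-2": 5_000_000})
```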

Each subscriber host policer or queue is port parented to the Vport that corresponds to the destination DSLAM using the existing port-parent command:

config>qos>sap-egress>queue>port-parent [weight weight] [level level] [cir-weight cir-weight] [cir-level cir-level]

config>qos>sap-egress>policer>port-parent [weight weight] [level level] [cir-weight cir-weight] [cir-level cir-level]

This command can parent the policer or queue to either a port or to a Vport. These operations are mutually exclusive in CLI. When parenting to a Vport, the parent Vport for a subscriber host policer or queue is not explicitly indicated in the command; it is determined indirectly. The determination of the parent Vport for a specific subscriber host queue is described in the 7450 ESS, 7750 SR, and VSR Triple Play Service Delivery Architecture Guide.

Subscriber host policers or queues, SLA profile schedulers, subscriber profile schedulers, and PW SAPs (in IES or VPRN services) can be parented to a Vport.

Applying aggregate rate limit to a Vport

The user can apply an aggregate rate limit to the Vport and apply a port scheduler policy to the port.

This model allows the user to oversubscribe the Ethernet port. The application of the agg-rate option and the application of a port scheduler policy, or a scheduler policy to a Vport are mutually exclusive.

When using this model, a subscriber host queue with the port-parent option enabled is scheduled within the context of the port’s port scheduler policy. More details are provided in the 7450 ESS, 7750 SR, and VSR Triple Play Service Delivery Architecture Guide.

Applying a scheduler policy to a Vport

The user can apply a scheduler policy to the Vport. This allows scheduling control of subscriber tier 1 schedulers in a scheduler policy applied to the egress of a subscriber or SLA profile, or to a PW SAP in an IES or VPRN service. This feature applies only to the 7450 ESS and 7750 SR.

The advantage of using a scheduler policy under a Vport, compared to the use of a port scheduler (with or without an agg-rate rate), is that it allows a port parent to be configured at the Vport level as well as allowing the user to oversubscribe the Ethernet port.

Bandwidth distribution from an egress port scheduler to a Vport configured with a scheduler policy can be performed based on the level/cir-level and weight/cir-weight configured under the scheduler’s port parent. This allows multiple Vports, for example, representing different DSLAMs, to share the port bandwidth capacity flexibly, under the control of the user.

The configuration of a scheduler policy under a Vport and the configuration of a port scheduler policy or an aggregate rate limit are mutually exclusive.

A scheduler policy is configured under a Vport as follows:

config>port>ethernet>access>egress>vport# scheduler-policy scheduler-policy-name

When using this model, a tier 1 scheduler in a scheduling policy applied to a subscriber profile or SLA profiles must be configured as follows:

config>qos>scheduler-policy>tier# parent-location vport

If the Vport exists, but the port does not have a port scheduler policy applied, then its schedulers are orphaned and no port-level QoS control can be enforced.

The following show/clear commands are available related to the Vport scheduler:

show qos scheduler-hierarchy port port-id vport name [scheduler scheduler-name] [detail]

show qos scheduler-stats port port-id vport name [scheduler scheduler-name] [detail]

clear qos scheduler-stats port port-id vport name [scheduler scheduler-name] [detail]

H-QoS adjustment and host tracking are not supported on schedulers that are configured in a scheduler policy on a Vport, so the configuration of a scheduler policy under a Vport and the configuration of the egress-rate-modify parameter are mutually exclusive.

ESM over MPLS pseudowires is not supported when a scheduler policy is configured on a Vport.

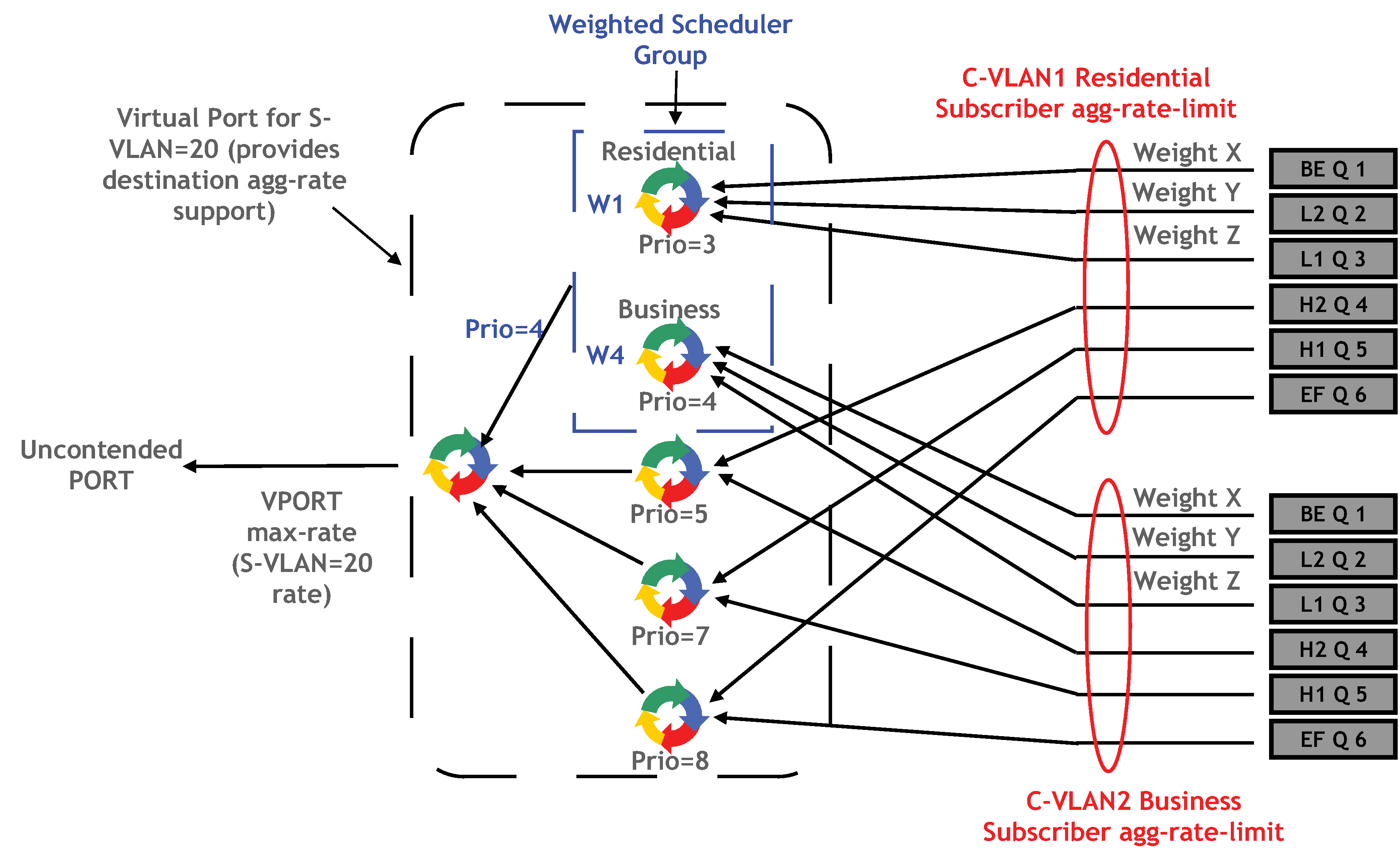

Weighted scheduler group in a port scheduler policy

The port scheduler policy defines a set of eight priority levels. To allow for the application of a scheduling weight to groups of queues competing at the same priority level of the port scheduler policy applied to the Vport, or to the Ethernet port, a group object is defined under the port scheduler policy, as follows:

config>qos>port-scheduler-policy>group group-name rate pir-rate [cir cir-rate]

Up to eight groups can be defined within each port scheduler policy. One or more levels can map to the same group. A group has a rate and, optionally, a cir-rate, and inherits the highest scheduling priority of its member levels. For example, the scheduler group shown in the Vport in Applying a port scheduler policy to a Vport (7450 ESS and 7750 SR) consists of priority levels 3 and 4. Therefore, it inherits priority 4 when competing for bandwidth with the standalone priority levels 8, 7, and 5.

A group receives bandwidth from the port or from the Vport and distributes it among its member levels according to the weight of each level within the group. Under congestion, each priority level competes for bandwidth within the group based on its weight. If there is no congestion, a priority level can achieve up to its rate (cir-rate) worth of bandwidth.
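Group priority inheritance and weighted sharing within a group can be sketched in Python. This is a simplified model: each member level's demand is assumed to be already capped by its level rate, levels with weight 0 or no demand get nothing, and CIR handling is omitted. group_priority and distribute_in_group are hypothetical names.

```python
from typing import Dict, Iterable, Tuple


def group_priority(member_levels: Iterable[int]) -> int:
    """A group competes at the highest priority among its member levels."""
    return max(member_levels)


def distribute_in_group(group_bw: float, group_rate: float,
                        members: Dict[int, Tuple[int, float]]) -> Dict[int, float]:
    """members maps level -> (weight in group, demand already capped by level rate)."""
    available = min(group_bw, group_rate)
    alloc = {lvl: 0.0 for lvl in members}
    # Assumption: weight-0 or idle levels receive nothing in this sketch.
    active = {lvl for lvl, (w, d) in members.items() if w > 0 and d > 0}
    while active and available > 1e-9:
        total_weight = sum(members[lvl][0] for lvl in active)
        share = available / total_weight
        done = {lvl for lvl in active if members[lvl][1] - alloc[lvl] <= share * members[lvl][0]}
        if not done:
            # Congestion: every remaining level is limited by its weighted share.
            for lvl in active:
                alloc[lvl] += share * members[lvl][0]
            break
        for lvl in done:
            available -= members[lvl][1] - alloc[lvl]
            alloc[lvl] = members[lvl][1]
        active -= done
    return alloc


congested = distribute_in_group(100.0, 100.0, {3: (3, 80.0), 4: (1, 80.0)})
uncongested = distribute_in_group(100.0, 100.0, {3: (3, 20.0), 4: (1, 10.0)})
```

With weights 3 and 1 and both levels offering 80, the group's 100 is split 75/25; when the levels offer only 20 and 10, each simply gets its demand.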

The mapping of a level to a group is performed as follows:

config>qos>port-scheduler-policy>level priority-level rate pir-rate [cir cir-rate] group group-name [weight weight-in-group]

The CLI enforces that the levels mapped to a group are contiguous. In other words, a user cannot add a priority level to a group unless the resulting set of priority levels is contiguous.
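The contiguity rule can be expressed as a small Python check. is_contiguous and can_add_level are hypothetical helpers that mirror the rule; they are not CLI behavior.

```python
from typing import Iterable


def is_contiguous(levels: Iterable[int]) -> bool:
    """True if the distinct priority levels form an unbroken run, such as 3, 4, 5."""
    distinct = sorted(set(levels))
    return bool(distinct) and distinct[-1] - distinct[0] + 1 == len(distinct)


def can_add_level(group_levels: Iterable[int], new_level: int) -> bool:
    """Whether adding `new_level` keeps the group's levels contiguous."""
    return is_contiguous(list(group_levels) + [new_level])
```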

When a level is not explicitly mapped to any group, it maps directly to the root of the port scheduler at its own priority like in existing behavior.

Basic configurations

A basic QoS scheduler policy must conform to the following:

Each QoS scheduler policy must have a unique policy ID.

A tier level 1 parent scheduler name cannot be configured.

A basic QoS port scheduler policy must conform to the following:

Each QoS port scheduler policy must have a unique policy name.

Creating a QoS scheduler policy

Configuring and applying QoS policies is optional. If no QoS policy is explicitly applied to a SAP or IP interface, a default QoS policy is applied.

To create a scheduler policy, define the following:

Define a scheduler policy ID value. The system does not dynamically assign a value.

Include a description. The description provides a brief overview of policy features.

Specify the tier level. A tier identifies the level of hierarchy that a group of schedulers are associated with.

Specify a scheduler name. A scheduler defines bandwidth controls that limit each child (other schedulers and queues) associated with the scheduler.

Specify a parent scheduler name to be associated with a level 1, 2, or 3 tier.

Optionally, modify the bandwidth that the scheduler can offer its child queues or schedulers. Otherwise, the scheduler is allowed to consume bandwidth without a scheduler-defined limit.

The following displays a scheduler policy configuration:

A:ALA-12>config>qos# info
#------------------------------------------
echo "QoS Policy Configuration"
#------------------------------------------
...
    scheduler-policy "SLA1" create
        description "NetworkControl(3), Voice(2) and NonVoice(1) have strict priorities"
        tier 1
            scheduler "All_traffic" create
                description "All traffic goes to this scheduler eventually"
                rate 11000
            exit
        exit
        tier 2
            scheduler "NetworkControl" create
                description "network control traffic within the VPN"
                parent All_traffic level 3 cir-level 3
                rate 100
            exit
            scheduler "NonVoice" create
                description "NonVoice of VPN and Internet traffic will be serviced by this scheduler"
                parent All_traffic cir-level 1
                rate 11000
            exit
            scheduler "Voice" create
                description "Any voice traffic from VPN and Internet use this scheduler"
                parent All_traffic level 2 cir-level 2
                rate 5500
            exit
        exit
        tier 3
            scheduler "Internet_be" create
                parent NonVoice cir-level 1
            exit
            scheduler "Internet_priority" create
                parent NonVoice level 2 cir-level 2
            exit
            scheduler "Internet_voice" create
                parent Voice
            exit
            scheduler "VPN_be" create
                parent NonVoice cir-level 1
            exit
            scheduler "VPN_nc" create
                parent NetworkControl
                rate 100 cir 36
            exit
            scheduler "VPN_priority" create
                parent NonVoice level 2 cir-level 2
            exit
            scheduler "VPN_reserved" create
                parent NonVoice level 3 cir-level 3
            exit
            scheduler "VPN_video" create
                parent NonVoice level 5 cir-level 5
                rate 1500 cir 1500
            exit
            scheduler "VPN_voice" create
                parent Voice
                rate 2500 cir 2500
            exit
        exit
    exit
    sap-ingress 100 create
        description "Used on VPN sap"
...
----------------------------------------------
A:ALA-12>config>qos#
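The parent relationships in policy SLA1 form a tree. A few lines of Python can walk that tree. The sketch below copies the tier, parent, and rate values from the listing above. The helper names are hypothetical. `rate_ceiling` takes the smallest configured rate on a scheduler's path to the root, which is only an upper bound on its bandwidth. It ignores contention from siblings, the CIR values, and the level settings.

```python
from typing import Dict, List, Optional, Tuple

# (tier, parent, rate) for each scheduler in policy "SLA1", copied from the
# listing above. None means "not configured" (no parent or no rate).
SLA1: Dict[str, Tuple[int, Optional[str], Optional[int]]] = {
    "All_traffic":       (1, None, 11000),
    "NetworkControl":    (2, "All_traffic", 100),
    "NonVoice":          (2, "All_traffic", 11000),
    "Voice":             (2, "All_traffic", 5500),
    "Internet_be":       (3, "NonVoice", None),
    "Internet_priority": (3, "NonVoice", None),
    "Internet_voice":    (3, "Voice", None),
    "VPN_be":            (3, "NonVoice", None),
    "VPN_nc":            (3, "NetworkControl", 100),
    "VPN_priority":      (3, "NonVoice", None),
    "VPN_reserved":      (3, "NonVoice", None),
    "VPN_video":         (3, "NonVoice", 1500),
    "VPN_voice":         (3, "Voice", 2500),
}


def path_to_root(name: str) -> List[str]:
    """Follow parent links from a scheduler up to its root scheduler."""
    path = [name]
    while SLA1[path[-1]][1] is not None:
        path.append(SLA1[path[-1]][1])
    return path


def children(parent: str) -> List[str]:
    """Schedulers whose parent is `parent`, sorted by name."""
    return sorted(n for n, (_, p, _) in SLA1.items() if p == parent)


def rate_ceiling(name: str) -> Optional[int]:
    """Smallest configured rate on the path to the root (an upper bound only)."""
    rates = [SLA1[n][2] for n in path_to_root(name) if SLA1[n][2] is not None]
    return min(rates) if rates else None


print(" -> ".join(path_to_root("VPN_video")))  # VPN_video -> NonVoice -> All_traffic
print(children("Voice"))                       # ['Internet_voice', 'VPN_voice']
print(rate_ceiling("Internet_voice"))          # 5500
```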

Applying scheduler policies

Scheduler policies can be applied to the entities described in the following sections.

Customer

Use the following CLI syntax to associate a scheduler policy with a customer’s multiservice site:

config>customer customer-id
    multiservice-site customer-site-name
        egress
            scheduler-policy scheduler-policy-name
        ingress
            scheduler-policy scheduler-policy-name

Epipe

Use the following CLI syntax to apply scheduler policies and QoS policies to the ingress, egress, or both, of Epipe SAPs:

config>service# epipe service-id [customer customer-id]
    sap sap-id
        egress
            scheduler-policy scheduler-policy-name
        ingress
            scheduler-policy scheduler-policy-name

config>service# epipe service-id [customer customer-id]
    sap sap-id
        egress
            qos sap-egress-policy-id
        ingress
            qos sap-ingress-policy-id

The following output displays an Epipe service configuration with scheduler policy SLA2 applied to the SAP ingress and egress.

A:SR>config>service# info
----------------------------------------------
    epipe 6 customer 6 vpn 6 create
        description "Distributed Epipe service to west coast"
        sap 1/1/10:0 create
            ingress
                scheduler-policy "SLA2"
                qos 100
            exit
            egress
                scheduler-policy "SLA2"
                qos 1010
            exit
        exit
...
----------------------------------------------
A:SR>config>service#

IES

Use the following CLI syntax to apply scheduler policies to the ingress, egress, or both, of IES SAPs:

config>service# ies service-id [customer customer-id]
    interface ip-int-name
        sap sap-id
            egress
                scheduler-policy scheduler-policy-name
            ingress
                scheduler-policy scheduler-policy-name

The following output displays an IES service configuration with scheduler policy SLA2 applied to the SAP ingress and egress.

A:SR>config>service# info
----------------------------------------------
    ies 88 customer 8 vpn 88 create
        interface "Sector A" create
            sap 1/1/1.2.2 create
                ingress
                    scheduler-policy "SLA2"
                    qos 101
                exit
                egress
                    scheduler-policy "SLA2"
                    qos 1020
                exit
            exit
        exit
        no shutdown
    exit
----------------------------------------------
A:SR>config>service#

VPLS

Use the following CLI syntax to apply scheduler policies to the ingress, egress, or both, of VPLS SAPs:

config>service# vpls service-id [customer customer-id]
    sap sap-id
        egress
            scheduler-policy scheduler-policy-name
        ingress
            scheduler-policy scheduler-policy-name

The following output displays a VPLS service configuration with scheduler policy SLA2 applied to the SAP ingress and egress.

A:SR>config>service# info
----------------------------------------------
...
    vpls 700 customer 7 vpn 700 create
        description "test"
        stp
            shutdown
        exit
        sap 1/1/9:0 create
            ingress
                scheduler-policy "SLA2"
                qos 100
            exit
            egress
                scheduler-policy "SLA2"
            exit
        exit
        spoke-sdp 2:222 create
        exit
        mesh-sdp 2:700 create
        exit
        no shutdown
    exit
...
----------------------------------------------
A:SR>config>service#

VPRN

Use the following CLI syntax to apply scheduler policies to ingress or egress VPRN SAPs on the 7750 SR and 7950 XRS:

config>service# vprn service-id [customer customer-id]
    interface ip-int-name
        sap sap-id
            egress
                scheduler-policy scheduler-policy-name
            ingress
                scheduler-policy scheduler-policy-name

The following output displays a VPRN service configuration with the scheduler policy SLA2 applied to the SAP ingress and egress.

A:SR7>config>service# info
----------------------------------------------
...
    vprn 1 customer 1 create
        ecmp 8
        autonomous-system 10000
        route-distinguisher 10001:1
        auto-bind-tunnel
            resolution-filter
            resolution-filter ldp
        vrf-target target:10001:1
        interface "to-ce1" create
            address 192.168.0.0/24
            sap 1/1/10:1 create
                ingress
                    scheduler-policy "SLA2"
                exit
                egress
                    scheduler-policy "SLA2"
                exit
            exit
        exit
        no shutdown
    exit
    epipe 6 customer 6 vpn 6 create
----------------------------------------------
A:SR7>config>service#

Creating a QoS port scheduler policy

Configuring and applying QoS port scheduler policies is optional. If no QoS port scheduler policy is explicitly applied to a SAP or IP interface, a default QoS policy is applied.

To create a port scheduler policy, define the following:

a port scheduler policy name

a description. The description provides a brief overview of policy features.

Use the following CLI syntax to create a QoS port scheduler policy.

The create keyword is included in the command syntax upon creation of a policy.

config>qos
    port-scheduler-policy scheduler-policy-name [create]
        description description-string
        level priority-level rate pir-rate [cir cir-rate]
        max-rate rate
        orphan-override [level priority-level] [weight weight] [cir-level priority-level] [cir-weight cir-weight]

The following displays a port scheduler policy configuration example:

*A:ALA-48>config>qos>port-sched-plcy# info
----------------------------------------------
    description "Test Port Scheduler Policy"
    orphan-override weight 50 cir-level 4 cir-weight 50
----------------------------------------------
*A:ALA-48>config>qos>port-sched-plcy#

Configuring port parent parameters

The port-parent command defines a child/parent association between an egress queue or policer and a port-based scheduler, or between an intermediate service scheduler and a port-based scheduler. The command may be issued in the following contexts:

sap-egress>queue queue-id

sap-egress>policer policer-id

cfg>qos>qgrps>egr>qgrp>queue queue-id

network-queue>queue queue-id

scheduler-policy>scheduler scheduler-name

The port-parent command allows for a set of within-CIR and above-CIR parameters that define the port priority levels and weights for the policer, queue, or scheduler. If the port-parent command is executed without any parameters, the default parameters are assumed.

Within-CIR priority level parameters

The within-CIR parameters define which port priority level the policer, queue, or scheduler is associated with when it receives bandwidth for its within-CIR offered load. The within-CIR offered load is the amount of bandwidth that the policer, queue, or scheduler could use and that is equal to or less than its defined or summed CIR value. The summed value applies only to schedulers and is the sum of the within-CIR offered loads of the children attached to the scheduler. The parameters that control within-CIR bandwidth allocation are the cir-level and cir-weight keywords of the port-parent command. The cir-level keyword defines the port priority level that the scheduler, policer, or queue uses to receive bandwidth for its within-CIR offered load. The cir-weight keyword is used when multiple queues, policers, or schedulers exist at the same port priority level for within-CIR bandwidth. The weight defines the relative ratio used to distribute bandwidth at the priority level when the within-CIR offered load exceeds the bandwidth available at that port priority level.

A cir-weight equal to zero (the default value) has a special meaning: it informs the system that the queue, policer, or scheduler does not receive bandwidth from the within-CIR distribution. Instead, all bandwidth for the queue, policer, or scheduler must be allocated in the port scheduler’s above-CIR pass.
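The within-CIR offered load described above can be computed recursively. A leaf takes the smaller of its offered load and its CIR. A scheduler sums the loads of its children. This Python sketch makes the following assumptions:

* `Node`, `within_cir_load`, and `within_cir_demand` are hypothetical names.
* A scheduler with an explicit CIR caps the summed value at that CIR.
* A leaf with no CIR is treated as having a CIR of 0.

The cir-weight 0 rule is modelled as zero demand in the within-CIR pass.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """A queue or policer (no children) or a scheduler (with children)."""
    name: str
    offered: float = 0.0         # offered load; used for leaves only
    cir: Optional[float] = None  # defined CIR; None on a scheduler means "sum"
    cir_weight: int = 0          # port-parent cir-weight (0 is the default)
    children: List["Node"] = field(default_factory=list)


def within_cir_load(node: Node) -> float:
    """Offered load that is equal to or less than the defined or summed CIR."""
    if not node.children:  # queue or policer
        return min(node.offered, node.cir or 0.0)
    summed = sum(within_cir_load(c) for c in node.children)
    # Assumption: an explicit scheduler CIR caps the summed value.
    return summed if node.cir is None else min(summed, node.cir)


def within_cir_demand(node: Node) -> float:
    """Demand presented in the port scheduler's within-CIR pass."""
    # cir-weight 0: nothing is requested here; all bandwidth comes from the above-CIR pass.
    return 0.0 if node.cir_weight == 0 else within_cir_load(node)


svc = Node("svc-sched", cir_weight=1, children=[
    Node("q1", offered=80, cir=50),
    Node("q2", offered=20, cir=40),
])
print(within_cir_load(svc))                               # 70: min(80, 50) + min(20, 40)
print(within_cir_demand(Node("q3", offered=10, cir=10)))  # 0.0: default cir-weight 0
```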

Above-CIR priority level parameters

The above-CIR parameters define which port priority level the policer, queue, or scheduler is associated with when it receives bandwidth for its above-CIR offered load. The above-CIR offered load is the amount of bandwidth that the queue, policer, or scheduler could use and that is equal to or less than its defined PIR value (based on the queue, policer, or scheduler rate command), less any bandwidth that was given to the queue, policer, or scheduler during the within-CIR scheduler pass. The parameters that control above-CIR bandwidth allocation are the level and weight keywords of the port-parent command. The level keyword defines the port priority level that the policer, scheduler, or queue uses to receive bandwidth for its above-CIR offered load. The weight keyword is used when multiple policers, queues, or schedulers exist at the same port priority level for above-CIR bandwidth. The weight defines the relative ratio used to distribute bandwidth at the priority level when the above-CIR offered load exceeds the bandwidth available at that port priority level.
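The two passes described above can be sketched as a simplified model. Bandwidth is handed out first to within-CIR offered load and then to above-CIR offered load. In each pass, levels are served from highest to lowest, and the bandwidth at a level is split by weight. This is not the hardware algorithm; it rests on these assumptions:

* There are 8 port priority levels, with 8 the highest.
* Unused share at a level is redistributed by weighted water-filling.
* The defaults are level 1, weight 1, and cir-level 0; the text confirms only the default cir-weight of 0.
* Per-level rate limits and max-rate are not modelled.

`Child`, `share`, and `port_schedule` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Child:
    """A queue, policer, or scheduler attached to the port scheduler via port-parent."""
    name: str
    offered: float       # current offered load
    cir: float           # CIR: caps the within-CIR offered load
    pir: float           # PIR from the rate command: caps the total
    level: int = 1       # above-CIR port priority level (assumed default)
    weight: int = 1      # above-CIR weight (assumed default)
    cir_level: int = 0   # within-CIR port priority level (assumed default)
    cir_weight: int = 0  # 0 = skip the within-CIR pass (default per the text)


def share(avail: float, need: Dict[str, float], weight: Dict[str, int]) -> Dict[str, float]:
    """Weighted water-filling of `avail` among children whose weight is > 0."""
    got = {c: 0.0 for c in need}
    active = {c for c in need if weight[c] > 0 and need[c] > 0}
    while active and avail > 1e-9:
        total_w = sum(weight[c] for c in active)
        done = {c for c in active if need[c] - got[c] <= avail * weight[c] / total_w}
        if not done:  # nobody can be fully satisfied: split what is left by weight
            for c in active:
                got[c] += avail * weight[c] / total_w
            break
        for c in done:  # satisfy the small consumers, then redistribute the rest
            avail -= need[c] - got[c]
            got[c] = need[c]
        active -= done
    return got


def port_schedule(children: List[Child], port_rate: float) -> Dict[str, float]:
    """Within-CIR pass, then above-CIR pass, each from level 8 (assumed highest) down to 1."""
    grant = {c.name: 0.0 for c in children}
    avail = port_rate
    for within_cir in (True, False):
        for lvl in range(8, 0, -1):
            if within_cir:
                group = [c for c in children if c.cir_level == lvl]
                need = {c.name: min(c.offered, c.cir) for c in group}
                weight = {c.name: c.cir_weight for c in group}
            else:
                group = [c for c in children if c.level == lvl]
                # Above-CIR offered load: PIR-capped load minus the within-CIR grant.
                need = {c.name: min(c.offered, c.pir) - grant[c.name] for c in group}
                weight = {c.name: c.weight for c in group}
            for name, bw in share(avail, need, weight).items():
                grant[name] += bw
                avail -= bw
    return grant


kids = [
    Child("voice", offered=300, cir=300, pir=500, level=3, cir_level=8, cir_weight=1),
    Child("data", offered=900, cir=200, pir=1000, cir_level=1, cir_weight=1),
    Child("best-effort", offered=500, cir=0, pir=1000),  # cir-weight 0: above-CIR only
]
print(port_schedule(kids, port_rate=1000))
# {'voice': 300.0, 'data': 450.0, 'best-effort': 250.0}
```

In this example, "data" and "best-effort" share level 1 equally in the above-CIR pass. "data" still ends up with more bandwidth because it already received 200 in the within-CIR pass.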

config>qos# scheduler-policy scheduler-policy-name
    tier {1 | 2 | 3}
        scheduler scheduler-name
            port-parent [level priority-level] [weight priority-weight] [cir-level cir-priority-level] [cir-weight cir-priority-weight]

config>qos#
    sap-egress sap-egress-policy-id [create]
        queue queue-id [{auto-expedite | best-effort | expedite}] [priority-mode | profile-mode] [create]
            port-parent [level priority-level] [weight priority-weight] [cir-level cir-priority-level] [cir-weight cir-priority-weight]
        policer policer-id [create]
            port-parent [level priority-level] [weight priority-weight] [cir-level cir-priority-level] [cir-weight cir-priority-weight]

config>qos#
    network-queue network-queue-policy-name [create]
    no network-queue network-queue-policy-name
        queue queue-id [multipoint] [{auto-expedite | best-effort | expedite}] [priority-mode | profile-mode] [create]
            port-parent [level priority-level] [weight priority-weight] [cir-level cir-priority-level] [cir-weight cir-priority-weight]

Configuring distributed LAG rate

The following output displays an example configuration and explanation with and without dist-lag-rate-shared.

*B:ALU-A>config>port# info
----------------------------------------------
    ethernet
        mode access
        egress-scheduler-policy "psp"
        autonegotiate limited
    exit
    no shutdown
----------------------------------------------
*B:ALU-A>config>port# /configure lag 30
*B:ALU-A>config>lag# info
----------------------------------------------
    description "Description For LAG Number 30"
    mode access
    port 2/1/6
    port 2/1/10
    port 3/2/1
    port 3/2/2
    no shutdown
*B:ALU-A>config>service>ies>if>sap# /configure qos port-scheduler-policy "psp"
*B:ALU-A>config>qos>port-sched-plcy# info
----------------------------------------------
    max-rate 413202

In this example, dist-lag-rate-shared is not yet enabled in port scheduler policy psp. The aggregate rate achieved is therefore twice the max-rate of 413202 kb/s, or about 826 Mb/s. This happens because the LAG has members on two different cards (slots 2 and 3).

Two port scheduler instances are created, one on each card, and each enforces a max-rate of 413202 kb/s. The following show output confirms this.

When dist-lag-rate-shared is enabled in the port scheduler policy, the max-rate is enforced across all members of the LAG combined rather than separately on each card.
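The rate arithmetic above can be checked with a short Python sketch. The function name is hypothetical. The sketch takes the card (slot) from the first field of each member port ID, for example 2 from 2/1/6, which matches the slot(2) shown in the output below. It models only the aggregate cap. It does not model how the shared rate is divided among the cards when dist-lag-rate-shared is enabled.

```python
from typing import Iterable


def lag_max_rate_kbps(max_rate_kbps: int, member_ports: Iterable[str], shared: bool) -> int:
    """Aggregate egress cap for a LAG whose port scheduler policy sets max-rate."""
    # One port scheduler instance per card: the slot is the first field of "slot/mda/port".
    cards = {port.split("/")[0] for port in member_ports}
    if shared:
        return max_rate_kbps           # dist-lag-rate-shared: one cap across all members
    return max_rate_kbps * len(cards)  # otherwise: each card enforces max-rate separately


members = ["2/1/6", "2/1/10", "3/2/1", "3/2/2"]  # LAG 30 members from the example
print(lag_max_rate_kbps(413202, members, shared=False))  # 826404
print(lag_max_rate_kbps(413202, members, shared=True))   # 413202
```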

*B:ALU-A>config>service>ies>if>sap# /show qos scheduler-hierarchy sap lag-30 egress detail
===============================================================================
Scheduler Hierarchy - Sap lag-30
===============================================================================
Egress Scheduler Policy :
-------------------------------------------------------------------------------
Legend :
(*) real-time dynamic value
(w) Wire rates
B Bytes
-------------------------------------------------------------------------------
Root (Egr)
| slot(2)
|--(S) : Tier0Egress:1->lag-30:0.0->1 (Port lag-30 Orphan)
| | AdminPIR:2000000 AdminCIR:0(sum)
| | Parent Limit Unused Bandwidth: not-found
| |
| | AvgFrmOv:101.65(*)
| | AdminPIR:2000000(w) AdminCIR:0(w)
| |
| | [Within CIR Level 0 Weight 0]
| | Assigned:0(w) Offered:0(w)
| | Consumed:0(w)
| |
| | [Above CIR Level 1 Weight 0]
| | Assigned:413202(w) Offered:2000000(w)   <---- without dist-lag-rate-shared, 413 Mb/s is assigned to slot 2
| | Consumed:413202(w)
| |
| |
| | TotalConsumed:413202(w)
| | OperPIR:406494
| |
| | [As Parent]
| | OperPIR:406494 OperCIR:0
| | ConsumedByChildren:406494
| |
| |
| |--(Q) : 1->lag-30(2/1/6)->1
| | | AdminPIR:1000000 AdminCIR:0