Filter policies

This chapter provides information about filter policies and management.

ACL filter policy overview

ACL filter policies, also referred to as Access Control Lists (ACLs) or just ‟filters”, are sets of ordered rule entries specifying packet match criteria and the actions to be performed on a packet upon a match. Filter policies are created with a unique filter ID and filter name. The filter name is assigned when the filter policy is created; if a name is not specified at creation time, SR OS assigns a string version of the filter ID as the name.

There are three main filter policy types: ip-filter for IPv4, ipv6-filter for IPv6, and mac-filter for MAC-level filtering. Additionally, the filter policy scope defines whether the policy can be reused between different interfaces, embedded in another filter policy, or applied at the system level:

-

exclusive filter

An exclusive filter defines policy rules explicitly for a single interface. An exclusive filter allows the highest level of customization but uses the most resources, because each exclusive filter consumes hardware resources on line cards on which the interface exists.

-

template filter

A template filter uses an identical set of policy rules across multiple interfaces. Template filters use a single set of resources per line card, regardless of how many interfaces use a specific template filter policy on that line card. Template filter policies used on access interfaces consume resources on line cards only if at least one access interface for a specific template filter policy is configured on a specific line card.

-

embedded filter

An embedded filter defines a common set of policy rules that can then be used (embedded) by other exclusive or template filters in the system. This allows optimized management of filter policies.

-

system filter

A system filter policy defines a common set of policy rules that can then be activated within other exclusive or template filters. It can be used, for example, as a system-level set of deny rules. This allows optimized management of common rules (similarly to embedded filters). However, active system filter policy entries are not duplicated inside each policy that activates the system policy (as is the case when embedding is used). The active system policy is downloaded once to line cards, and activated filter policies are chained to it.

After the filter policy is created, it must be associated with interfaces, services, subscribers, or other filter policies (if the created policy cannot be directly deployed on an interface, service, or subscriber), so that incoming or outgoing traffic can be subjected to the filter rules. Filter policies are associated with interfaces, services, or subscribers separately in the ingress and egress directions. The policies deployed in the ingress and egress directions can be the same or different. In general, Nokia recommends using different filter policies for the ingress and egress directions and different filter policies per service type, because filter policies support different match criteria and different actions for different directions and service contexts.

A filter policy is applied to a packet in the ascending rule entry order. When a packet matches all the command options specified in a filter entry’s match criteria, the system takes the action defined for that entry. If a packet does not match the entry command options, the packet is compared to the next higher numerical filter entry rule, and so on.

In classic CLI, if the packet does not match any of the entries, the system executes the default action specified in the filter policy: drop or forward.

In MD-CLI, if the packet does not match any of the entries, the system executes the default action specified in the filter policy: drop or accept.
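The ordered, first-match evaluation described above can be sketched as follows. This is a minimal Python model, not SR OS code: the `(entry_id, predicate, action)` tuple representation and the `apply_filter` function are hypothetical, and packets are modeled as plain dictionaries of header fields.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical entry representation: (entry ID, match predicate, action)
Entry = Tuple[int, Callable[[dict], bool], str]


def apply_filter(entries: Iterable[Entry], packet: dict, default_action: str) -> str:
    """Return the action of the first matching entry, or the policy default action."""
    # Entries are evaluated in ascending entry-ID order, not in configuration order.
    for _entry_id, predicate, action in sorted(entries, key=lambda e: e[0]):
        if predicate(packet):
            return action  # first match wins; higher-numbered entries are not evaluated
    # No entry matched: drop or accept (MD-CLI), drop or forward (classic CLI).
    return default_action


policy = [
    (20, lambda p: p.get("protocol") == "tcp", "accept"),
    (10, lambda p: p.get("dst_port") == 23, "drop"),
]
print(apply_filter(policy, {"protocol": "tcp", "dst_port": 23}, "drop"))  # drop
print(apply_filter(policy, {"protocol": "tcp", "dst_port": 80}, "drop"))  # accept
print(apply_filter(policy, {"protocol": "udp"}, "drop"))                  # drop
```

Because entry 10 is evaluated before entry 20, the packet to port 23 is dropped even though it also matches the broader TCP entry.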

For Layer 2, either an IPv4/IPv6 or a MAC filter policy can be applied. For Layer 3 and network interfaces, an IPv4/IPv6 policy can be applied. For R-VPLS service, a Layer 2 filter policy can be applied to Layer 2 forwarded traffic and a Layer 3 filter policy can be applied to Layer 3 routed traffic. For dual-stack interfaces, if both IPv4 and IPv6 filter policies are configured, the policy applied is based on the outer IP header of the packet. Non-IP packets are not evaluated by an IP filter policy, so the default action in the IP filter policy does not apply to these packets. Egress IPv4 QoS-based classification criteria are ignored when an egress MAC filter policy is configured on the same interface.

Additionally, platforms that support Network Group Encryption (NGE) can use IP exception filters. IP exception filters scan all outbound traffic entering an NGE domain and allow packets that match the exception filter criteria to transit the NGE domain unencrypted. See Router encryption exceptions using ACLs for information about IP exception filters supported by NGE nodes.

Filter policy basics

The following subsections describe the main functionality supported by filter policies.

Filter policy packet match criteria

This section defines the packet match criteria supported on SR OS for IPv4, IPv6, and MAC filters. Supported criteria types depend on the hardware platform and filter direction; contact your Nokia representative for more information.

General notes:

If multiple unique match criteria are specified in a single filter policy entry, all criteria must be met in order for the packet to be considered a match against that filter policy entry (logical AND).

Any match criterion not explicitly defined is ignored during matching.

An ACL filter policy entry with match criteria defined, but no action configured, is considered incomplete and inactive (an entry is not downloaded to the line card). A filter policy must have at least one entry active for the policy to be considered active.

An ACL filter entry with no match conditions defined matches all packets.

Because an ACL filter policy is an ordered list, entries should be configured (numbered) from the most explicit to the least explicit.
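The general notes above can be modeled in a few lines. In this sketch, which assumes exact-value matching for simplicity, `FilterEntry` and `policy_active` are hypothetical names: criteria are ANDed, an entry with no criteria matches everything, and an entry without an action is treated as inactive.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FilterEntry:
    """Hypothetical model of one ACL entry (not an SR OS API)."""
    criteria: dict = field(default_factory=dict)  # criterion name -> required value
    action: Optional[str] = None                  # None models an entry with no action

    @property
    def active(self) -> bool:
        # An entry without an action is incomplete and is not downloaded to the line card.
        return self.action is not None

    def matches(self, packet: dict) -> bool:
        # Logical AND across all configured criteria; criteria that are not
        # configured are simply not checked. With no criteria at all, all()
        # over an empty sequence is True, so the entry matches every packet.
        return all(packet.get(name) == value for name, value in self.criteria.items())


def policy_active(entries: List[FilterEntry]) -> bool:
    # A policy is active only if at least one of its entries is active.
    return any(entry.active for entry in entries)
```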

IPv4/IPv6 filter policy entry match criteria

This section describes the IPv4 and IPv6 match criteria supported by SR OS. The criteria are evaluated against the outer IPv4 or IPv6 header and a Layer 4 header that follows (if applicable). Support for match criteria may depend on hardware or filter direction. Nokia recommends not configuring a filter in a direction or on hardware where a match criterion is not supported because this may lead to unwanted behavior.

IPv4 and IPv6 filter policies support four filter types: normal, source MAC, packet length, and destination class, each supporting a different set of match criteria.

The match criteria available using the normal filter type are defined in this section. Layer 3 match criteria include:

DSCP

Match the specified DSCP command option against the Differentiated Services Code Point/Traffic Class field in the IPv4 or IPv6 packet header.

source IP, destination IP, or IP

Match the specified source or destination IPv4 or IPv6 address prefix against the IP address field in the IPv4 or IPv6 packet header. The user can optionally configure a mask to be used in a match. The ip command can be used to configure a single filter-policy entry that provides non-directional matching of either the source or destination (logical OR).

flow label

Match the specified flow label against the Flow label field in IPv6 packets. The user can optionally configure a mask to be used in a match. This operation is supported on ingress filters.

protocol

Match the specified protocol (for example, TCP, UDP, or IGMP) against the Protocol field in the outer IPv4 packet header. ‟*” can be used to specify a TCP or UDP upper-layer protocol match (logical OR).

-

Next Header

Match the specified upper-layer protocol (such as TCP, UDP, or IGMPv6) against the Next Header field of the IPv6 packet header. ‟*” can be used to specify a TCP or UDP upper-layer protocol match (logical OR).

Use the following command to match against up to six extension headers.

configure system ip ipv6-eh max

Use the following command to match against the Next Header value of the IPv6 header.

configure system ip ipv6-eh limited
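The source, destination, and directionless IP criteria can be sketched with Python's standard `ipaddress` module. This is an illustration only: `prefix_match` and `match_ip` are hypothetical helpers, and the mask is modeled as a prefix length rather than an arbitrary bit mask.

```python
import ipaddress
from typing import Optional


def prefix_match(address: str, prefix: str) -> bool:
    # False when the address families differ (an IPv4 address is never in an IPv6 prefix).
    return ipaddress.ip_address(address) in ipaddress.ip_network(prefix, strict=False)


def match_ip(src: str, dst: str, src_ip: Optional[str] = None,
             dst_ip: Optional[str] = None, ip: Optional[str] = None) -> bool:
    """src_ip and dst_ip are directional criteria; ip matches source OR destination."""
    if src_ip is not None and not prefix_match(src, src_ip):
        return False
    if dst_ip is not None and not prefix_match(dst, dst_ip):
        return False
    if ip is not None and not (prefix_match(src, ip) or prefix_match(dst, ip)):
        return False
    return True  # all configured criteria matched (logical AND)
```

A single directionless `ip` criterion of 192.0.2.0/24 therefore matches traffic both to and from that prefix, which otherwise takes two entries.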

Fragment match criteria

Match the presence of a fragmented packet. For IPv4, match against the MF bit or Fragment Offset field to determine whether the packet is a fragment. For IPv6, match against the Next Header field for the Fragment Extension Header value to determine whether the packet is a fragment. Up to six extension headers are examined to find the Fragment Extension Header.

IPv4 and IPv6 filters support matching against an initial fragment using first-only or against a non-initial fragment using non-first-only.

IPv4 match fragment true or false criteria are supported on both ingress and egress.

IPv4 match fragment first-only or non-first-only are supported on ingress only.
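The fragment criteria can be illustrated with the header arithmetic they rely on. The following is a sketch only: the function names are hypothetical, the IPv6 walk only follows the extension header types listed in the code, and the dictionary keys simply reuse the option names from the text.

```python
from typing import Dict, List

IPV6_FRAGMENT_HEADER = 44
# Extension headers this sketch walks through (IANA Next Header values):
# Hop-by-Hop 0, Routing 43, Fragment 44, AH 51, Destination Options 60.
IPV6_EXTENSION_HEADERS = {0, 43, 44, 51, 60}


def ipv4_fragment_match(flags_and_offset: int) -> Dict[str, bool]:
    """flags_and_offset is the 16-bit Flags/Fragment Offset word of the IPv4 header."""
    more_fragments = bool(flags_and_offset & 0x2000)  # MF bit
    offset = flags_and_offset & 0x1FFF                # offset in 8-byte units
    is_fragment = more_fragments or offset != 0
    return {
        "fragment": is_fragment,
        "first-only": is_fragment and offset == 0,
        "non-first-only": offset != 0,
    }


def ipv6_is_fragment(next_header_chain: List[int], max_headers: int = 6) -> bool:
    """next_header_chain[0] is the Next Header field of the fixed IPv6 header; each
    later value is the Next Header field of the preceding extension header."""
    # chain[i] identifies the (i+1)-th header after the fixed header; check up to six.
    for next_header in next_header_chain[:max_headers]:
        if next_header == IPV6_FRAGMENT_HEADER:
            return True
        if next_header not in IPV6_EXTENSION_HEADERS:
            return False  # reached the upper-layer protocol
    return False
```

A packet with only the DF bit set (0x4000) is not a fragment, while the last fragment of a datagram (MF clear, non-zero offset) still matches both fragment and non-first-only.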

Operational note for fragmented traffic

IPv4 and IPv6 filters defined to match TCP, UDP, ICMP, or SCTP criteria (such as source port, destination port, port, TCP ACK, TCP SYN, ICMP type, or ICMP code) with command options of zero or false also match non-first fragment packets if the other match criteria within the same filter entry are also met. This is because non-initial fragment packets do not contain a UDP, TCP, ICMP, or SCTP header.

IPv4 options match criteria

You can configure the following IPv4 options match criteria:

-

IP option

Matches the specified command option value in the first option of the IPv4 packet. A user can optionally configure a mask to be used in a match.

-

option present

Matches the presence of IP options in the IPv4 packet. Padding and EOOL are also considered as IP options. Up to six IP options are matched against.

-

multiple option

Matches the presence of multiple IP options in the IPv4 packet.

-

source route option

Matches the presence of IP Option 3 or 9 (Loose or Strict Source Route) in the first three IP options of the IPv4 packet. A packet also matches this rule if the packet has more than three IP options.
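The four option criteria can be sketched over a list of option-type octets. This model is hypothetical: the caller is assumed to have already parsed the options (counting EOOL and padding, as the text does), the result keys are descriptive labels rather than CLI names, and the six-option inspection limit is not modeled.

```python
from typing import Dict, List

SOURCE_ROUTE_OPTIONS = {3, 9}  # Loose (LSRR) and Strict (SSRR) Source Route


def option_number(option_type: int) -> int:
    # The option number is the low 5 bits of the option-type octet (RFC 791),
    # for example LSRR 0x83 -> 3 and SSRR 0x89 -> 9.
    return option_type & 0x1F


def ipv4_option_checks(option_types: List[int]) -> Dict[str, object]:
    """option_types lists the option-type octets in header order."""
    first_three = [option_number(t) for t in option_types[:3]]
    return {
        "first_option": option_types[0] if option_types else None,
        "option_present": len(option_types) > 0,
        "multiple_option": len(option_types) > 1,
        # Source route in the first three options, or more than three options.
        "source_route_option": bool(SOURCE_ROUTE_OPTIONS.intersection(first_three))
        or len(option_types) > 3,
    }
```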

IPv6 extension header match criteria

You can configure the following IPv6 Extension Header match criteria:

-

Authentication Header extension header

Matches the presence of the Authentication Header extension header in the IPv6 packet. This match criterion is supported on ingress only.

-

Encapsulating Security Payload extension header

Matches the presence of the Encapsulating Security Payload extension header in the IPv6 packet. This match criterion is supported on ingress only.

-

hop-by-hop options

Matches the presence of the hop-by-hop options extension header in the IPv6 packet. This match criterion is supported on ingress only.

-

Routing extension header type 0

Matches the presence of the Routing extension header type 0 in the IPv6 packet. This match criterion is supported on ingress only.

Upper-layer protocol match criteria

You can configure the following upper-layer protocol match criteria:

-

ICMP/ICMPv6 code field header

Matches the specified value against the code field of the ICMP or ICMPv6 header of the packet. This match is supported only for entries that also define protocol or next-header match for the ICMP or ICMPv6 protocol.

-

ICMP/ICMPv6 type field header

Matches the specified value against the type field of the ICMP or ICMPv6 header of the packet. This match is supported only for entries that also define the protocol or next-header match for the ICMP or ICMPv6 protocol.

-

source port number, destination port number, or port

Matches the specified port, port list, or port range against the source port number or destination port number of the UDP, TCP, or SCTP packet header. Matching either the source or the destination port (logical OR) in a single filter policy entry is supported by using the directionless port criterion. Source or destination port match is supported only for entries that also define a protocol or next-header match for TCP, UDP, SCTP, or TCP or UDP. Matching on SCTP source port, destination port, or port is supported on ingress filter policies.

-

TCP ACK, TCP CWR, TCP ECE, TCP FIN, TCP NS, TCP PSH, TCP RST, TCP SYN, TCP URG

Matches the presence or absence of the TCP flags defined in RFC 793, RFC 3168, and RFC 3540 in the TCP header of the packet. This match criterion also requires defining the protocol/next-header match as TCP in the filter entry. TCP CWR, TCP ECE, TCP FIN, TCP NS, TCP PSH, and TCP URG are supported on FP4 and FP5-based line cards only. TCP ACK, TCP RST, and TCP SYN are supported on all FP-based cards. When configured on other line cards, the bits for the unsupported TCP flags are ignored.

-

tcp-established

Matches the presence of the TCP flag ACK or RST in the TCP header of the packet. This match criterion requires defining the protocol/next-header match as TCP in the filter entry.
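The port and TCP flag criteria above can be sketched as bit and range checks. All names are hypothetical, port specifications are modeled as lists of single ports and inclusive `(low, high)` ranges, and the sketch assumes the entry's protocol or next-header match (TCP, UDP, or SCTP) has already been satisfied.

```python
from typing import Dict, List, Optional, Tuple, Union

PortSpec = List[Union[int, Tuple[int, int]]]  # single ports and inclusive (low, high) ranges

# TCP flag bits in the 16-bit word formed by header bytes 12-13: NS is the low bit
# of byte 12 (RFC 3540); CWR and ECE (RFC 3168) and the others (RFC 793) are in byte 13.
TCP_FLAGS = {"fin": 0x001, "syn": 0x002, "rst": 0x004, "psh": 0x008, "ack": 0x010,
             "urg": 0x020, "ece": 0x040, "cwr": 0x080, "ns": 0x100}


def port_in(port: int, spec: PortSpec) -> bool:
    for item in spec:
        low, high = item if isinstance(item, tuple) else (item, item)
        if low <= port <= high:
            return True
    return False


def match_ports(src_port: int, dst_port: int, src: Optional[PortSpec] = None,
                dst: Optional[PortSpec] = None, port: Optional[PortSpec] = None) -> bool:
    if src is not None and not port_in(src_port, src):
        return False
    if dst is not None and not port_in(dst_port, dst):
        return False
    # Directionless "port" criterion: source OR destination.
    return port is None or port_in(src_port, port) or port_in(dst_port, port)


def tcp_flags_match(flag_bits: int, required: Dict[str, bool]) -> bool:
    """required maps a flag name to True (must be set) or False (must be clear)."""
    return all(bool(flag_bits & TCP_FLAGS[name]) == want for name, want in required.items())


def tcp_established(flag_bits: int) -> bool:
    # ACK or RST present.
    return bool(flag_bits & (TCP_FLAGS["ack"] | TCP_FLAGS["rst"]))
```

For example, requiring SYN set and ACK clear selects only the initial segment of a TCP handshake, whereas a SYN-ACK (0x012) is rejected.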

Filter type match criteria

Additional match criteria for source MAC, packet length, and destination class are available using different filter types. See Filter policy type for more information.

IP prefixes, protocol numbers, TCP/UDP ports, and packet length values or ranges can also be grouped using the configure filter match-list command; see Filter policy advanced topics for more information.

MAC filter policy entry match criteria

MAC filter policies support three filter types: normal, ISID, and VID, each supporting a different set of match criteria.

The following list describes the MAC match criteria supported by SR OS for all types of MAC filters (normal, ISID, and VID). The criteria are evaluated against the Ethernet header of the Ethernet frame. Support for match criteria may depend on hardware or filter direction, as described in the following list. A match criterion is blocked if it is not supported by a specified frame type or MAC filter type. Nokia recommends not configuring a filter in a direction or on hardware where a match condition is not supported, because this may lead to unwanted behavior.

You can configure the following MAC filter policy entry match criteria:

-

frame format

Matches a specific frame format. For example, configuring frame-type ethernet_II matches only Ethernet II frames.

-

source MAC address

Matches the source MAC address of the frame. The user can optionally configure a mask to be used in a match.

-

destination MAC address

Matches the destination MAC address of the frame. The user can optionally configure a mask to be used in a match.

-

802.1p frames

Matches the 802.1p priority value of the frame. The user can optionally configure a mask to be used in a match.

-

Ethernet II frames

Matches the Ethernet type field of Ethernet II frames. The Ethernet type field is a two-byte field used to identify the protocol carried by the Ethernet frame.

-

source access point

Matches frames with a source access point on the network node designated in the source field of the packet. The user can optionally configure a mask to be used in a match.

-

destination access point

Matches frames with a destination access point on the network node designated in the destination field of the packet. The user can optionally configure a mask to be used in a match.

-

specified three-byte OUI

Matches frames with the specified three-byte OUI field.

-

specified two-byte protocol ID

Matches frames with the specified two-byte protocol ID that follows the three-byte OUI field.

-

ISID

Matches Ethernet frames with the specified 24-bit ISID value from the PBB I-TAG. This match criterion is mutually exclusive with all other match criteria under a specific MAC filter policy and is applicable to MAC filters of type ISID only. The resulting MAC filter can only be applied on a BVPLS SAP or PW in the egress direction.

-

inner-tag or outer-tag

Matches Ethernet frames with the non-service-delimiting tags, as described in the VID MAC filters section. This match criterion is mutually exclusive with all other match criteria under a specific MAC filter policy and is applicable to MAC filters of type VID only.
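The optional mask on the MAC address criteria can be illustrated as a bitwise comparison. This sketch assumes that only the bits set in the mask are compared, which is the usual meaning of an address mask; `mac_to_int` and `mac_matches` are hypothetical helpers, not SR OS functions.

```python
def mac_to_int(mac: str) -> int:
    # Accepts "00:11:22:33:44:55" or "00-11-22-33-44-55".
    return int(mac.replace(":", "").replace("-", ""), 16)


def mac_matches(frame_mac: str, value: str, mask: str = "ff:ff:ff:ff:ff:ff") -> bool:
    """Compare only the bits set in the mask; the default mask requires an exact match."""
    m = mac_to_int(mask)
    return (mac_to_int(frame_mac) & m) == (mac_to_int(value) & m)
```

With a mask of ff:ff:ff:00:00:00, for example, any source or destination MAC address that shares the first three bytes of the configured value matches.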

IP exception filters

An NGE node supports IPv4 exception filters. See Router encryption exceptions using ACLs for information about IP exception filters supported by NGE nodes.

Filter policy actions

The following actions are supported by ACL filter policies:

-

drop

Allows users to deny traffic to ingress or egress the system.

-

IPv4 packet-length and IPv6 payload-length conditional drop

Traffic can be dropped based on IPv4 packet length or IPv6 payload length by specifying a packet length or payload length value or range within the drop filter action (the IPv6 payload length field does not account for the size of the fixed IP header, which is 40 bytes).

This filter action is supported on ingress IPv4 and IPv6 filter policies only. If the filter is configured on an egress interface, the packet-length or payload-length match condition is always true.

This drop condition is a filter entry action evaluation, and not a filter entry match evaluation. Within this evaluation, the condition is checked after the packet matches the entry based on the specified filter entry match criteria.

Packets that match a filter policy entry match criteria and the drop packet-length-value or payload-length-value are dropped. Packets that match only the filter policy entry match criteria and do not match the drop packet-length-value or drop payload-length value are forwarded with no further matching in following filter entries.

Packets matching this filter entry and not matching the conditional criteria are not logged, counted, or mirrored.

IPv4 TTL and IPv6 hop limit conditional drop

Traffic can be dropped based on an IPv4 TTL or IPv6 hop limit by specifying a TTL or hop-limit value or range within the drop filter action.

This filter action is supported on ingress IPv4 and IPv6 filter policies only. If the filter is configured on an egress interface, the TTL or hop-limit match condition is always true.

This drop condition is a filter entry action evaluation, and not a filter entry match evaluation. Within this evaluation, the condition is checked after the packet matches the entry based on the specified filter entry match criteria.

Packets that match filter policy entry match criteria and the drop TTL or drop hop limit value are dropped. Packets that match only the filter policy entry match criteria and do not match the drop TTL value or drop hop limit value are forwarded with no further match in following filter entries.

Packets matching this filter entry and not matching the conditional criteria are not logged, counted, or mirrored.
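The conditional drop semantics (match first, then check the condition as part of the action) can be sketched as follows. The function names and the dictionary packet model are hypothetical; the sketch also shows the 40-byte adjustment that separates an IPv6 packet size from its Payload Length field.

```python
from typing import Callable, Optional, Tuple

IPV6_FIXED_HEADER_BYTES = 40


def ipv6_payload_length(ipv6_packet_bytes: int) -> int:
    # The IPv6 Payload Length field excludes the 40-byte fixed header.
    return ipv6_packet_bytes - IPV6_FIXED_HEADER_BYTES


def conditional_drop(packet: dict, entry_match: Callable[[dict], bool],
                     field_name: str, drop_range: Tuple[int, int]) -> Optional[str]:
    """Return None when the entry does not match (evaluation moves to the next entry);
    otherwise return the final action for this packet."""
    if not entry_match(packet):
        return None
    low, high = drop_range  # inclusive range, for example a TTL or packet-length range
    if low <= packet[field_name] <= high:
        return "drop"
    # Matched the entry but not the condition: forwarded with no further entries
    # evaluated, and (per the text above) not logged, counted, or mirrored.
    return "forward"
```

For example, an entry matching UDP with a drop TTL range of 0 to 1 drops UDP packets with TTL 1, forwards UDP packets with TTL 64 without consulting later entries, and leaves TCP packets to the next entry.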

-

pattern conditional drop

Traffic can be dropped based on a pattern found in the packet header or data payload. The pattern is defined by an expression, mask, offset type, and offset value matched in the first 256 bytes of a packet.

The pattern expression is up to 8 bytes long. The offset-type command identifies the starting point for the offset value and the supported offset-type command options are:

-

layer-3: layer 3 IP header

-

layer-4: layer 4 protocol header

-

data: data payload for TCP or UDP protocols

-

dns-qtype: DNS request or response query type

The content of the packet is compared with the expression/mask value found at the offset type and offset value as defined in the filter entry. For example, if the pattern is expression 0xAA11, mask 0xFFFF, offset-type data, offset-value 20, the filter entry compares the content of the first 2 bytes in the packet data payload found 20 bytes after the TCP/UDP header with 0xAA11.

This drop condition is a filter entry action evaluation, and not a filter entry match evaluation. Within this evaluation, the condition is checked after the packet matches the entry based on the specified filter entry match criteria.

Packets that match a filter policy's entry match criteria and the pattern are dropped. Packets that match only the filter policy's entry match criteria and do not match the pattern are forwarded without further matching in subsequent filter entries.

This filtering capability is supported on ingress IPv4 and IPv6 policies using FP4-based line cards and cannot be configured on egress. A filter entry using a pattern is not supported on FP2 or FP3-based line cards. If programmed, the pattern is ignored and the action is forward.

Packets matching this filter entry and not matching the conditional criteria are not logged, counted, or mirrored.
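The worked example above (expression 0xAA11, mask 0xFFFF, offset-type data, offset value 20) can be reproduced with a short sketch. It assumes the packet bytes start at an IPv4 header, covers only the layer-3, layer-4, and data offset types (dns-qtype is not modeled), and uses hypothetical function names and an explicit expression `length` parameter.

```python
from typing import Dict, Optional

INSPECTED_BYTES = 256  # only the first 256 bytes of the packet are examined
MAX_EXPRESSION_BYTES = 8


def ipv4_offsets(pkt: bytes) -> Dict[str, Optional[int]]:
    """Base offsets for each offset type, assuming pkt starts at the IPv4 header."""
    ihl = (pkt[0] & 0x0F) * 4  # IPv4 header length in bytes
    protocol = pkt[9]
    if protocol == 6:      # TCP: header length from the data-offset field (upper 4 bits of byte 12)
        l4_header_len = (pkt[ihl + 12] >> 4) * 4
    elif protocol == 17:   # UDP: fixed 8-byte header
        l4_header_len = 8
    else:
        l4_header_len = None  # the data offset is defined only for TCP or UDP
    data = None if l4_header_len is None else ihl + l4_header_len
    return {"layer-3": 0, "layer-4": ihl, "data": data}


def pattern_matches(pkt: bytes, expression: int, mask: int, length: int,
                    offset_type: str, offset_value: int) -> bool:
    base = ipv4_offsets(pkt)[offset_type]
    if base is None or length > MAX_EXPRESSION_BYTES:
        return False
    start = base + offset_value
    end = start + length
    if end > min(len(pkt), INSPECTED_BYTES):
        return False  # the pattern would fall outside the inspected bytes
    value = int.from_bytes(pkt[start:end], "big")
    return (value & mask) == (expression & mask)


# IPv4 header (20 bytes, protocol 17) + UDP header (8 bytes) + payload with 0xAA11 at offset 20
ip_header = bytes([0x45]) + bytes(8) + bytes([17]) + bytes(10)
packet = ip_header + bytes(8) + bytes(20) + b"\xaa\x11" + bytes(4)
print(pattern_matches(packet, 0xAA11, 0xFFFF, 2, "data", 20))  # True
print(pattern_matches(packet, 0xAA11, 0xFFFF, 2, "data", 19))  # False
```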

-

drop extracted traffic

Traffic extracted to the CPM can be dropped using ingress IPv4 and IPv6 filter policies based on filter match criteria. Any IP traffic extracted to the CPM is subject to this filter action, including routing protocols, snooped traffic, and TTL expired traffic.

Packets that match the filter entry match criteria and are extracted to the CPM are dropped. Packets that match only the filter entry match criteria and are not extracted to the CPM are forwarded with no further match in the subsequent filter entries.

Cflowd, log, mirror, and statistics apply to all traffic matching the filter entry, regardless of the drop or forward action.

-

forward

Allows users to accept traffic to ingress or egress the system and be subject to regular processing.

-

accept a conditional filter action

Allows users to accept traffic based on a condition that is evaluated as part of the filter action. Use the following commands to configure a conditional accept action:

-

MD-CLI

configure filter ip-filter entry action accept-when
configure filter ipv6-filter entry action accept-when

-

classic CLI

configure filter ip-filter entry action forward-when
configure filter ipv6-filter entry action forward-when

-

pattern conditional accept

Traffic can be accepted based on a pattern found in the packet header or data payload. The pattern is defined by an expression, mask, offset type, and offset value match in the first 256 bytes of a packet. The pattern expression is up to 8 bytes long. The offset type identifies the starting point for the offset value and the supported offset types are:

-

layer-3: Layer 3 IP header

-

layer-4: Layer 4 protocol header

-

data: data payload for TCP or UDP protocols

-

dns-qtype: DNS request or response query type

The content of the packet is compared with the expression/mask value found at the offset type and offset value defined in the filter entry. For example, if the pattern is expression 0xAA11, mask 0xFFFF, offset-type data, and offset-value 20, then the filter entry compares the content of the first 2 bytes in the packet data payload found 20 bytes after the TCP/UDP header with 0xAA11.

This accept condition is a filter entry action evaluation, and not a filter entry match evaluation. Within this evaluation, the condition is checked after the packet matches the entry based on the specified filter entry match criteria. Packets that match a filter policy's entry match criteria and the pattern are accepted. Packets that match only the filter policy's entry match criteria and do not match the pattern are dropped without further matching in subsequent filter entries.

This filtering capability is supported on ingress IPv4 and IPv6 policies using FP4-based line cards and cannot be configured on egress. A filter entry using a pattern is not supported on FP2 or FP3-based line cards. If programmed, the pattern is ignored and the action is drop.

Packets matching this filter entry and not matching the conditional criteria are not logged, counted, or mirrored.
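Conditional accept is the mirror image of conditional drop: the outcome when the condition is not met is drop rather than forward. A minimal sketch of the two outcome tables, using hypothetical names:

```python
from typing import Optional

# Final action for a packet that matches the entry: (condition met, condition not met)
CONDITIONAL_OUTCOMES = {
    "drop": ("drop", "forward"),    # conditional drop (pattern, packet length, TTL, ...)
    "accept": ("accept", "drop"),   # accept-when (MD-CLI) / forward-when (classic CLI)
}


def conditional_action(action: str, entry_matches: bool, condition_met: bool) -> Optional[str]:
    """Return None when the entry does not match, so evaluation continues with the
    next entry; otherwise the action is final and no further entries are evaluated."""
    if not entry_matches:
        return None
    when_met, when_not_met = CONDITIONAL_OUTCOMES[action]
    return when_met if condition_met else when_not_met
```

The FP2 and FP3 behavior described above, where the pattern is ignored and the action is drop for conditional accept or forward for conditional drop, corresponds to the condition-not-met column of this table.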

-

FC

Allows users to mark the forwarding class (FC) of packets. This command is supported on ingress IPv4 and IPv6 filter policies. This filter action can be combined with the rate-limit action.

Packets matching this filter entry bypass QoS FC marking and are still subject to QoS queuing, policing, and priority marking.

The QPPB forwarding class takes precedence over the filter FC marking action.

-

rate limit

This action allows users to rate limit traffic matching a filter entry match criteria using IPv4, IPv6, or MAC filter policies.

If multiple interfaces (including LAG interfaces) use the same rate-limit filter policy on different FPs, then the system allocates a rate limiter resource for each FP; an independent rate limit applies to each FP.

If multiple interfaces (including LAG interfaces) use the same rate-limit filter policy on the same FP, then the system allocates a single rate limiter resource to the FP; a common aggregate rate limit is applied to those interfaces.

Note that traffic extracted to the CPM is not rate limited by an ingress rate-limit filter policy while any traffic generated by the router can be rate limited by an egress rate-limit filter policy.

Rate-limit filter policy entries can coexist with cflowd, log, and mirror, regardless of the outcome of the rate limit. This filter action is not supported on egress on the 7750 SR-a.

Rate-limit policers are configured with the maximum burst size (MBS) equal to the committed burst size (CBS), both set to 10 ms of the rate, and with high-prio-only set to 0.

Interaction with QoS: Packets matching an ingress rate-limit filter policy entry bypass ingress QoS queuing or policing, and only the filter rate-limit policer is applied. Packets matching an egress rate-limit filter policy bypass egress QoS policing; normal egress QoS queuing still applies.
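The "10 ms of the rate" burst setting can be worked through numerically, and a token bucket gives a rough picture of how such a policer behaves. This is a simplified single-rate sketch under stated assumptions, not the FP hardware policer: `burst_bytes` and `RateLimiter` are hypothetical, and high-prio-only and per-FP allocation are not modeled.

```python
def burst_bytes(rate_kbps: int, window_ms: int = 10) -> int:
    """Bytes the rate allows in window_ms: kbps multiplied by ms gives bits; divide by 8."""
    return rate_kbps * window_ms // 8


class RateLimiter:
    """Token bucket sketch with depth = 10 ms of the rate (MBS = CBS)."""

    def __init__(self, rate_kbps: int):
        self.bytes_per_second = rate_kbps * 1000 / 8
        self.depth = burst_bytes(rate_kbps)
        self.tokens = float(self.depth)
        self.last_time = 0.0

    def allow(self, packet_bytes: int, now: float) -> bool:
        # Refill for the elapsed time, never above the bucket depth.
        elapsed = now - self.last_time
        self.tokens = min(self.depth, self.tokens + elapsed * self.bytes_per_second)
        self.last_time = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False  # out of profile: discarded by the rate limiter
```

For example, a 100 Mb/s (100,000 kb/s) rate limit gives 100,000 × 10 = 1,000,000 bits, or 125,000 bytes, of burst tolerance.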

- Kilobits-per-second and packets-per-second rate limit

The rate-limit action can be defined using kilobits per second or packets per second and is supported on both ingress and egress filter policies. The MBS value can also be configured using the kilobits-per-second policer.

The packets-per-second rate limit and kilobits-per-second MBS are not supported when using a MAC filter policy and are not supported on the 7750 SR-a.

- IPv4 packet-length and IPv6 payload-length conditional rate limit

Traffic can be rate limited based on the IPv4 packet length and IPv6 payload length by specifying a packet-length value or payload-length value or range within the rate-limit filter action. The IPv6 payload-length field does not account for the size of the fixed IP header, which is 40 bytes.

This filter action is supported on ingress IPv4 and IPv6 filter policies only and cannot be configured on egress access or network interfaces.

This rate-limit condition is part of a filter entry action evaluation, and not a filter entry match evaluation. It is checked after the packet is determined to match the entry based on the configured filter entry match criteria.

Packets that match a filter policy’s entry match criteria and the rate-limit packet-length value or rate-limit payload-length value are rate limited. Packets that match only the filter policy’s entry match criteria and do not match the rate-limit packet-length value or rate-limit payload-length value are forwarded with no further match in subsequent filter entries.

Cflowd, logging, and mirroring apply to all traffic matching the ACL entry regardless of the outcome of the rate limiter and regardless of the packet-length value or payload-length value.

-

IPv4 TTL and IPv6 hop-limit conditional rate limit

Traffic can be rate limited based on the IPv4 TTL or IPv6 hop-limit by specifying a TTL or hop-limit value or range within the rate-limit filter action using ingress IPv4 or IPv6 filter policies.

The match condition is part of the action evaluation (that is, it is checked after the packet is determined to match the entry based on the other configured match criteria). Packets that match a filter policy entry match criteria and the rate-limit ttl or hop-limit value are rate limited. Packets that match only the filter policy entry match criteria and do not match the rate-limit ttl or hop-limit value are forwarded with no further matching in the subsequent filter entries.

Cflowd, logging, and mirroring apply to all traffic matching the ACL entry regardless of the outcome of the rate-limit value and the ttl-value or hop-limit-value.

-

pattern conditional rate limit

Traffic can be rate limited based on a pattern found in the packet header or data payload. The pattern is defined by an expression, mask, offset type, and offset value matched in the first 256 bytes of a packet. The pattern expression is up to 8 bytes long. The offset-type command identifies the starting point for the offset value and the supported offset-type command options are:

-

layer-3: layer 3 IP header

-

layer-4: layer 4 protocol header

-

data: data payload for TCP or UDP protocols

-

dns-qtype: DNS request or response query type

The content of the packet is compared with the expression/mask value found at the offset-type command option and offset value defined in the filter entry. For example, if the pattern is expression 0xAA11, mask 0xFFFF, offset-type data, and offset value 20, then the filter entry compares the content of the first 2 bytes in the packet data payload found 20 bytes after the TCP/UDP header with 0xAA11.

This rate limit condition is a filter entry action evaluation, and not a filter entry match evaluation. Within this evaluation, the condition is checked after the packet matches the entry based on the specified filter entry match criteria.

Packets that match a filter policy's entry match criteria and the pattern are rate limited. Packets that match only the filter policy's entry match criteria and do not match the pattern are forwarded without further matching in subsequent filter entries.

This filtering capability is supported on ingress IPv4 and IPv6 policies using FP4 and FP5-based line cards and cannot be configured on egress. A filter entry using a pattern is not supported on FP2 or FP3-based line cards. If programmed, the pattern is ignored and the system forwards the packet.

Cflowd, logging, and mirroring apply to all traffic matching this filter entry regardless of the pattern value.

-

- extracted traffic conditional rate limit

Traffic extracted to the CPM can be rate limited using ingress IPv4 and IPv6 filter policies based on filter match criteria. Any IP traffic extracted to the CPM is subject to this filter action, including routing protocols, snooped traffic, and TTL expired traffic.

Packets that match the filter entry match criteria and are extracted to the CPM are rate limited by this filter action and not subject to distributed CPU protection policing.

Packets that match only the filter entry match criteria and are not extracted to the CPM are forwarded with no further match in the subsequent filter entries.

Cflowd, logging, and mirroring apply to all traffic matching the ACL entry regardless of the outcome of the rate limit or the extracted conditional match.

-

Forward Policy-based Routing and Policy-based Forwarding (PBR/PBF) actions

Allows users to accept ingress traffic but change the regular routing or forwarding that a packet would otherwise be subject to. PBR/PBF is applicable to unicast traffic only. The following PBR or PBF actions are supported (see Configuring filter policies with CLI for more information):

-

egress PBR

Enabling egress-pbr activates a PBR action on egress, while disabling egress-pbr activates a PBR action on ingress (default).

The following subset of the PBR actions described in this list can be activated on egress: redirect-policy, next-hop router, and ESI.

Egress PBR is supported in IPv4 and IPv6 filter policies for ESM only. Unicast traffic that is subject to slow-path processing on ingress (for example, IPv4 packets with options or IPv6 packets with a hop-by-hop extension header) does not match egress PBR entries. Filter logging, cflowd, and mirror source are mutually exclusive with configuring a filter entry with an egress PBR action. Configuring pbr-down-action-override, if supported with a specific PBR ingress action type, is also supported when the action is an egress PBR action. Processing defined by pbr-down-action-override does not apply if the action is deployed in the wrong direction. If a packet matches a filter PBR entry and the entry is not activated for the direction in which the filter is deployed, the system forwards the packet. Egress PBR cannot be enabled in system filters.
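The direction rule for egress PBR can be summarized in a small sketch. The function names and action strings are hypothetical labels, and pbr-down-action-override, ESM scoping, and slow-path exclusions are not modeled.

```python
# Subset of PBR actions that the text above lists as activatable on egress.
EGRESS_CAPABLE_PBR_ACTIONS = {"redirect-policy", "next-hop", "esi"}


def can_activate_on_egress(pbr_action: str) -> bool:
    return pbr_action in EGRESS_CAPABLE_PBR_ACTIONS


def pbr_outcome(pbr_action: str, egress_pbr: bool, deployed_direction: str) -> str:
    """Outcome for a packet that matches a PBR entry.
    egress_pbr=False (the default) activates the action on ingress."""
    active_direction = "egress" if egress_pbr else "ingress"
    if deployed_direction != active_direction:
        # Entry not activated for this direction: the packet is forwarded normally.
        return "forward"
    return pbr_action
```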

-

ESI

Forwards the incoming traffic, using a VXLAN tunnel resolved through the EVPN MP-BGP control plane, to the first service chain function identified by ESI (Layer 2) or ESI/SF-IP (Layer 3). Supported with VPLS (Layer 2) and IES/VPRN (Layer 3) services. If the service function forwarding cannot be resolved, traffic matches the entry and action forward is executed.

For VPLS, no cross-service PBF is supported; that is, the filter specifying ESI PBF entry must be deployed in the VPLS service where BGP EVPN control plane resolution takes place as configured for a specific ESI PBF action. The functionality is supported in filter policies deployed on ingress VPLS interfaces. BUM traffic that matches a filter entry with ESI PBF is unicast forwarded to the VTEP:VNI resolved through PBF forwarding.

For IES/VPRN, the outgoing R-VPLS interface can be in any VPRN service. The outgoing interface and VPRN service for BGP EVPN control plane resolution must again be configured as part of ESI PBR entry configuration. The functionality is supported in filter policies deployed on ingress IES/VPRN interfaces and in filter policies deployed on ingress and egress for ESM subscribers. Only unicast traffic is subject to ESI PBR; any other traffic matching a filter entry with Layer 3 ESI action is subjected to action forward.

When deployed in unsupported direction, traffic matching a filter policy ESI PBR/PBF entry is subject to action forward.

-

lsp

Forwards the incoming traffic onto the specified LSP. Supports RSVP-TE LSPs (type static or dynamic only), MPLS-TP LSPs, or SR-TE LSPs. Supported for ingress IPv4/IPv6 filter policies and only deployed on IES SAPs or network interfaces. If the configured LSP is down, traffic matches the entry and action forward is executed.

-

mpls-policy

Redirects the incoming traffic to the active instance of the MPLS forwarding policy specified by its endpoint. This policy is applicable on any ingress interface (egress is blocked). The traffic is subject to a plain forward if no policy matches the one specified, if the policy has no programmed instance, or if it is applied on a non-Layer 3 interface.

-

next-hop address

Changes the IP destination address used in routing from the address in the packet to the address configured in this PBR action. The user can configure whether the next-hop IP address must be direct (local subnet only) or indirect (any IP). In the indirect case, 0.0.0.0 (for IPv4) or :: (for IPv6) is allowed. Default routes may be different per VRF. This functionality is supported for ingress IPv4/IPv6 filter policies only, and is deployed on Layer 3 interfaces.

If the configured next hop is not reachable, traffic is dropped and an ‟ICMP destination unreachable” message is sent. If indirect is not specified but the IP address is a remote IP address, traffic is dropped.

-

redirect policy

Implements a PBR next-hop or PBR next-hop router action with the ability to select and prioritize multiple redirect targets and to monitor the specified redirect targets, so that the PBR action can be changed if the selected destination goes down. Supported for ingress IPv4 and IPv6 filter policies deployed on Layer 3 interfaces only. See Redirect policies for further details.

-

remark DSCP

Allows a user to remark the DiffServ Code Points (DSCP) of packets matching filter policy entry criteria. Packets are remarked regardless of QoS-based in-profile or out-of-profile classification, and QoS-based DSCP remarking is overridden. DSCP remarking is supported both as a main action and as an extended action. As a main action, this functionality applies to IPv4 and IPv6 filter policies of any scope and can only be applied at ingress on either access or network interfaces of Layer 3 services. Although the filter is applied on ingress, the DSCP remarking is effectively performed on egress. As an extended action, this functionality applies to IPv4 and IPv6 filter policies of any scope and can be applied at ingress on either access or network interfaces of Layer 3 services, or at egress on Layer 3 subscriber interfaces.

-

router

Changes the routing instance a packet is routed in from the incoming interface’s instance to the routing instance specified in the PBR action (supports both GRT and VPRN redirect). It is supported for ingress IPv4/IPv6 filter policies deployed on Layer 3 interfaces. The action can be combined with the next-hop action specifying a direct or indirect IPv4/IPv6 next hop. Packets are dropped if they cannot be routed in the configured routing instance. See section ‟Traffic Leaking to GRT” in the 7450 ESS, 7750 SR, 7950 XRS, and VSR Layer 3 Services Guide: IES and VPRN for more information.

-

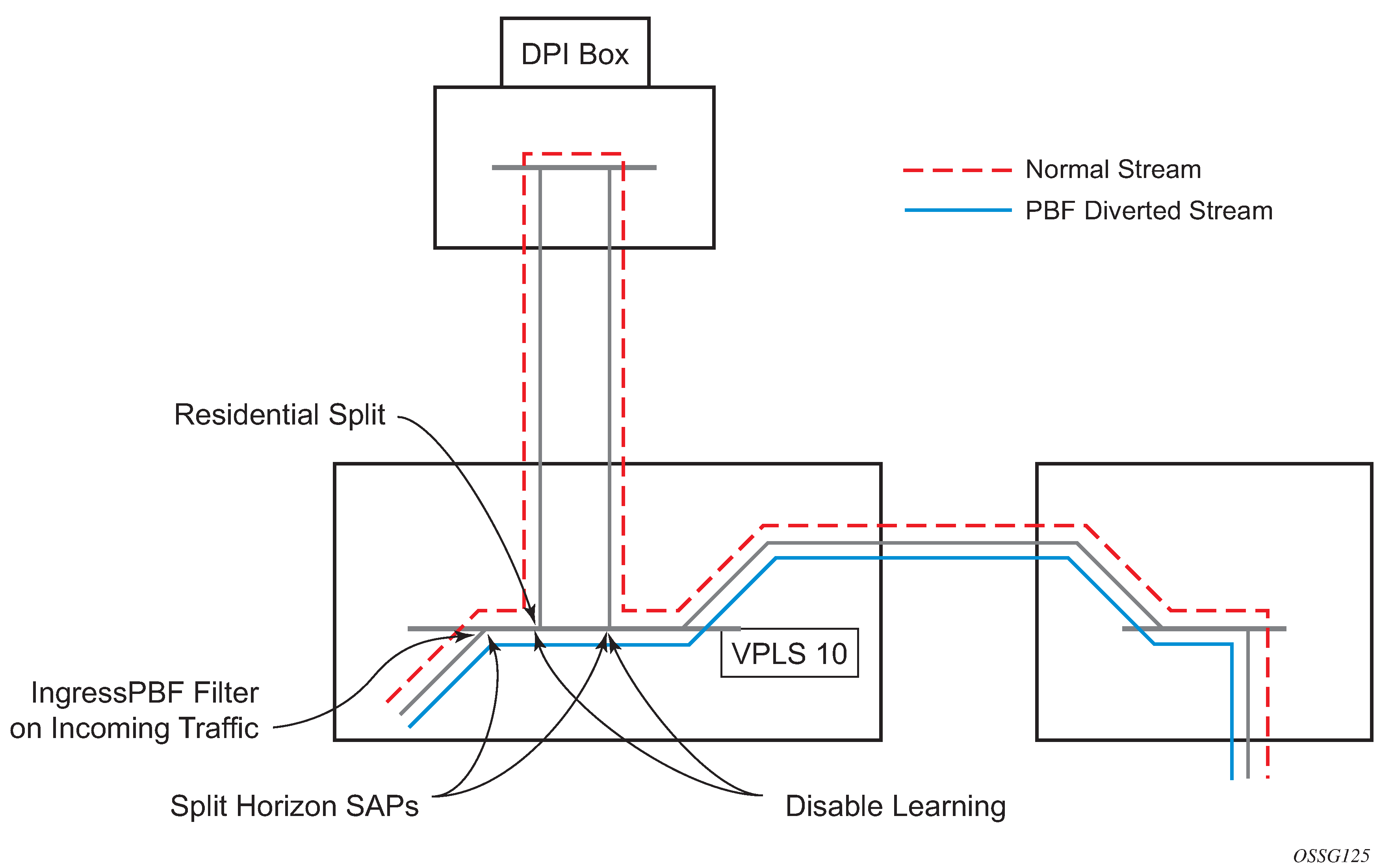

SAP

Forwards the incoming traffic onto the specified VPLS SAP. Supported for ingress IPv4/IPv6 and MAC filter policies deployed in VPLS service. The SAP that the traffic is to egress on must be in the same VPLS service as the incoming interface. If the configured SAP is down, traffic is dropped.

-

sdp

Forwards the incoming traffic onto the specified VPLS SDP. Supported for ingress IPv4/IPv6 and MAC filter policies deployed in VPLS service. The SDP that the traffic is to egress on must be in the same VPLS service as the incoming interface. If the configured SDP is down, traffic is dropped.

-

srte-policy

Redirects the incoming traffic to the active instance of the SR-TE forwarding policy specified by its endpoint and color. This policy is applicable on any ingress interface (egress is blocked). The traffic is subject to a plain forward if no policy matches the one specified, if the policy has no programmed instance, or if it is applied on a non-Layer 3 interface.

-

srv6-policy

Redirects the incoming traffic to the active candidate path of the srv6-policy identified by its endpoint and color. The user must specify a service SID that exists at the far end. The service SID does not need to be from the same context in which the rule is applied. This rule is applicable on any ingress interface and is blocked on egress. The traffic is subject to a simple forwarding process when:

-

no policy matches the specified policy

-

the policy has no programmed candidate path

-

the policy is applied on a non-Layer 3 interface

-

no SRv6 source address is configured

-

vprn-target

Redirects the incoming traffic in a similar manner to combined next-hop and LSP redirection actions, but with greater control and slightly different behavior. This action is supported for both IPv4 and IPv6 filter policies and is applicable on ingress of access interfaces of IES/VPRN services. See Filter policy advanced topics for further details.

Configuring a null endpoint is not blocked but not recommended.

-

-

ISA forward processing actions

ISA processing actions allow ingress traffic and send it for ISA processing according to the specified ISA action. See Configuring filter policies with CLI for command details. The following ISA actions are supported:

-

GTP local breakout

Forwards matching traffic to NAT instead of GTP-tunneling it to the mobile user’s PGW or GGSN. The action applies to GTP subscriber hosts. If the filter is deployed on other entities, action forward is applied. Supported for IPv4 ingress filter policies only. If ISAs performing NAT are down, traffic is dropped.

-

NAT

Forwards matching traffic for NAT. Supported for IPv4/IPv6 filter policies for Layer 3 services in GRT or VPRN. If ISAs performing NAT are down, traffic is dropped.

For classic CLI options, see the 7450 ESS, 7750 SR, 7950 XRS, and VSR Classic CLI Command Reference Guide.

For MD-CLI options, see the 7450 ESS, 7750 SR, 7950 XRS, and VSR MD-CLI Command Reference Guide.

-

reassemble

Forwards matching packets to the reassembly function. Supported for IPv4 ingress filter policies only. If ISAs performing reassembly are down, traffic is dropped.

-

TCP for MSS adjustment

Forwards matching packets (TCP SYN) to an ISA BB group for MSS adjustment. In addition to the IP filter, the user also needs to configure the MSS adjust group under the Layer 3 service to specify the group ID and the new segment-size.

-

-

HTTP redirect

Implements the HTTP redirect captive portal. HTTP GET requests are forwarded to the CPM card for captive portal processing by the router. See the HTTP redirect (captive portal) section for more information.

-

ignore match

This action allows the user to disable a filter entry; as a result, the entry is not programmed in hardware.

In addition to the preceding actions:

A user can select a default action for a filter policy. The default action is executed on packets subjected to an active filter when none of the filter’s active entries matches the packet. By default, the default action of a filter policy is drop, but the user can set it to forward instead.

-

A user can override the default action applied to packets matching a PBR/PBF entry when the PBR/PBF target is down by using pbr-down-action-override. Supported options are to drop the packet, forward the packet, or apply the same action as configured for the filter policy's default action. The override is supported for the following PBR/PBF actions. For the last three actions, the override is supported whether in redundancy mode or not.

-

forward ESI (Layer 2 or Layer 3)

-

forward SAP

-

forward SDP

-

forward next-hop indirect router

-

forward vprn-target

Default behavior when a PBR/PBF target is down (Table 1) lists the default behavior for packets matching a PBR/PBF filter entry when a target is down.

Table 1. Default behavior when a PBR/PBF target is down

| PBR/PBF action | Default behavior when down |
|---|---|
| Forward esi (any type) | Forward |
| Forward lsp | Forward |
| Forward mpls-policy | Forward |
| Forward next-hop (any type) | Drop |
| Forward redirect-policy | Forward when redirect policy is shutdown |
| Forward redirect-policy | Forward when destination tests are enabled and the best destination is not reachable |
| Forward redirect-policy | Drop when destination tests are not enabled and the best destination is not reachable |
| Forward sap | Drop |
| Forward sdp | Drop |
| Forward srte-policy | Forward |
| Forward router | Drop |
| Forward vprn-target | Forward |
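The following Python sketch is one way to model how the defaults in Table 1 combine with pbr-down-action-override. It is illustrative only: the action labels, `action_when_target_down`, and its parameters are hypothetical names, not SR OS commands. The override is modeled only for esi, sap, sdp, and vprn-target. Next-hop indirect router is omitted for brevity, and the sketch simply ignores an override on any other action.

```python
"""Sketch: action for packets matching a PBR/PBF entry whose target is down.

All names are hypothetical labels for illustration; they are not SR OS commands.
"""
from typing import Optional

# Default behavior when the target is down (Table 1), excluding redirect-policy.
DEFAULT_WHEN_DOWN = {
    "esi": "forward",
    "lsp": "forward",
    "mpls-policy": "forward",
    "next-hop": "drop",
    "sap": "drop",
    "sdp": "drop",
    "srte-policy": "forward",
    "router": "drop",
    "vprn-target": "forward",
}

# Actions for which this sketch honors pbr-down-action-override
# (next-hop indirect router is omitted to keep the model small).
OVERRIDABLE = {"esi", "sap", "sdp", "vprn-target"}


def redirect_policy_when_down(shutdown: bool, tests_enabled: bool) -> str:
    """Redirect-policy rows of Table 1 (best destination not reachable)."""
    if shutdown:
        return "forward"
    return "forward" if tests_enabled else "drop"


def action_when_target_down(
    action: str,
    filter_default: str = "drop",
    override: Optional[str] = None,
    shutdown: bool = False,
    tests_enabled: bool = False,
) -> str:
    """Return "forward" or "drop"; override is "drop", "forward", or "filter-default-action"."""
    if override is not None and action in OVERRIDABLE:
        # The override replaces the Table 1 default for supported actions.
        return filter_default if override == "filter-default-action" else override
    if action == "redirect-policy":
        return redirect_policy_when_down(shutdown, tests_enabled)
    return DEFAULT_WHEN_DOWN[action]


if __name__ == "__main__":
    print(action_when_target_down("sap"))                     # drop
    print(action_when_target_down("sap", override="forward")) # forward
```

The lookup table keeps the Table 1 defaults in one place. The override check runs first because, for the supported actions, it replaces those defaults.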

-

Viewing filter policy actions

A number of parameters determine the behavior of a packet after it has been matched to a defined criterion or set of criteria:

the action configured by the user

the context in which a filter policy is applied. For example, applying a filter policy in an unsupported context can result in simply forwarding the packet instead of applying the configured action.

external factors, such as the reachability (according to specific test criteria) of a target

Use the following commands to display how a packet is handled by the system.

show filter ip

show filter ipv6

show filter mac

This section describes the key information displayed as part of the output for the preceding show commands, and how to interpret the information.

From a configuration point of view, the show command output displays the main action (primary and secondary), as well as the extended action.

The ‟PBR Target Status” field shows the basic information that the system has of the target based on simple verification methods. This information is only shown for the filter entries which are configured in redundancy mode (that is, with both primary and secondary main actions configured), and for ESI redirections. Specifically, the target status in the case of redundancy depends on several factors; for example, on a match in the routing table for next-hop redirects, or on VXLAN tunnel resolution for ESI redirects.

The ‟Downloaded Action” field describes the action that the system performs on packets that match the criterion (or criteria). This typically depends on the context in which the filter has been applied (whether it is supported or not), but in the case of redundancy, it also depends on the target status. For example, the downloaded action is the secondary main action when the target associated with the primary action is down. In the nominal (that is, non-failure) case, the ‟Downloaded Action” reflects the behavior a packet is subject to. However, in transient cases (for example, during a failure), it may not capture what effectively happens to the packet.

The output also displays relevant information such as the default action when the target is down (see Default behavior when a PBR/PBF target is down) as well as the overridden default action when pbr‑down‑action‑override has been configured.
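As a rough mental model of the ‟Downloaded Action” field, the following Python sketch selects the action for a filter entry from two inputs: the deployment context and, in redundancy mode, the status of the primary target. The function and parameter names are hypothetical. The sketch assumes that an unsupported context always results in a plain forward, although the text only says this can happen. It does not model transient failure cases.

```python
from typing import Optional


def downloaded_action(
    primary: str,
    secondary: Optional[str] = None,
    primary_target_up: bool = True,
    supported_in_context: bool = True,
) -> str:
    """Hypothetical model of the action downloaded for one filter entry."""
    if not supported_in_context:
        # Assumption: an action applied in an unsupported context falls back to forward.
        return "forward"
    if secondary is not None and not primary_target_up:
        # Redundancy mode: the secondary main action is used while the primary target is down.
        return secondary
    return primary
```

For example, `downloaded_action("next-hop A", "next-hop B", primary_target_up=False)` returns the secondary action, `"next-hop B"`.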

Use the following commands to display the effective action.

show filter ip effective-action

show filter ipv6 effective-action

show filter mac effective-action

The effective-action command option takes into account the criteria for determining when a target is down. There is little ambiguity about whether a target is down when the target is local to the system performing the steering action, but the ambiguity is much greater when the target is remote. Because the effective-action command option triggers advanced tests, the action it displays can differ from the action displayed when the command option is not used. This can be the case, for example, for redundant actions.

Filter policy statistics

Filter policies support per-entry packet and byte match statistics. The cumulative matched packet and byte counters are available per ingress and per egress direction. Every packet arriving on an interface, service, or subscriber that uses a filter policy increments the ingress or egress (as applicable) matched packet and byte counters of the filter entry that the packet matches (if any). The increment occurs on the line card on which the packet ingresses or egresses. For each policy, the counters for all entries are collected from all line cards, summed, and made available to an operator.

Filter policies applied on access interfaces are downloaded only to line cards that have interfaces associated with those filter policies. If a filter policy is not downloaded to any line card, the statistics show 0. If a filter policy is being removed from any of the line cards the policy is currently downloaded to (as result of association change or when a filter becomes inactive), the associated statistics are reset to 0.

Downloading a filter policy to a new line card continues incrementing existing statistics.
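The aggregation described above can be sketched in Python as follows. The class and method names are hypothetical. The sketch keeps packet and byte counters per line card, direction, and entry, and sums them across line cards on request. As one interpretation of the text, it resets all counters of the policy when the policy is removed from any line card.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Key = Tuple[str, str, int]  # (line_card, direction, entry_id)


class FilterPolicyStats:
    """Hypothetical model of per-entry filter match statistics."""

    def __init__(self) -> None:
        self.counters: Dict[Key, List[int]] = defaultdict(lambda: [0, 0])
        self.downloaded: Set[str] = set()

    def download(self, line_card: str) -> None:
        # Downloading to a new line card keeps the existing statistics.
        self.downloaded.add(line_card)

    def remove(self, line_card: str) -> None:
        # Interpretation: removal from a line card resets the policy statistics to 0.
        self.downloaded.discard(line_card)
        self.counters.clear()

    def match(self, line_card: str, direction: str, entry_id: int, length: int) -> None:
        # Only line cards where the policy is downloaded can count matches.
        if line_card not in self.downloaded:
            return
        counter = self.counters[(line_card, direction, entry_id)]
        counter[0] += 1
        counter[1] += length

    def totals(self, direction: str, entry_id: int) -> Tuple[int, int]:
        """Sum matched packets and bytes for one entry across all line cards."""
        packets = bytes_ = 0
        for (_, d, e), (p, b) in self.counters.items():
            if d == direction and e == entry_id:
                packets += p
                bytes_ += b
        return packets, bytes_
```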

Operational notes:

Conditional action match criteria filter entries for ttl, hop-limit, packet-length, and payload-length support logging and statistics when the condition is met, allowing visibility of filter matched and action executed. If the condition is not met, packets are not logged and statistics against the entry are not incremented.

Filter policy logging

The SR OS supports logging of information from the packets that match a specific filter policy. Logging is configurable per filter policy entry by specifying a preconfigured filter log using the following command.

configure filter log

A filter log can be applied to ACL filters. Operators can configure multiple filter logs and specify the following:

memory allocated to a filter log destination

Syslog ID for a filter log destination

filter logging summarization

wrap-around behavior

The following are notes related to filter log summarization.

The implementation of the feature applies to filter logs with destination Syslog.

Summarization logging is the collection and summarization of log messages for one specific log ID within a period of time.

The summarization interval is 100 seconds.

-

Upon activation of a summary, a mini-table with a source and destination address and a count is created for each filter type (IPv4, IPv6, and MAC).

Every received log packet (according to filter match) is examined for source or destination address.

If the log packet (source or destination address) matches a source or destination address entry in the mini-table as a result of a packet previously received, the summary counter of the matching address is incremented.

If the source or destination address of the log messages does not match an entry already present in the table, the source or destination address is stored in a free entry in the mini-table.

When the mini-table has no more free entries, only the total counter is incremented.

Upon the expiry of the summarization interval, the mini-table for each type is flushed to the Syslog destination.
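The mini-table logic above can be sketched in Python as follows. This is a hypothetical model, not the SR OS implementation, and it rests on these assumptions:

- The table capacity is an assumed parameter, because the text does not give one.
- The key is whatever source or destination address the caller extracts from the logged packet.
- The total counter is incremented for every logged packet.
- `flush()` stands in for sending the summary to the Syslog destination at the end of each 100-second interval.

```python
from typing import Dict

SUMMARIZATION_INTERVAL_S = 100  # summarization interval stated in the text


class SummaryMiniTable:
    """Hypothetical per-filter-type (IPv4, IPv6, or MAC) summary mini-table."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity          # assumed number of free entries
        self.counts: Dict[str, int] = {}  # address -> summary counter
        self.total = 0                    # total counter

    def record(self, address: str) -> None:
        self.total += 1
        if address in self.counts:
            # Address already stored during this interval: increment its counter.
            self.counts[address] += 1
        elif len(self.counts) < self.capacity:
            # New address and a free entry is available: store it.
            self.counts[address] = 1
        # Otherwise the table is full and only the total counter is incremented.

    def flush(self) -> Dict[str, object]:
        """Return the interval summary and empty the table."""
        summary = {"entries": dict(self.counts), "total": self.total}
        self.counts.clear()
        self.total = 0
        return summary


# One mini-table per filter type.
tables = {ftype: SummaryMiniTable(capacity=2) for ftype in ("ipv4", "ipv6", "mac")}
```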

Operational note

Conditional action match criteria filter entries for TTL, hop limit, packet length, and payload length support logging and statistics when the condition is met, allowing visibility of filter matched and action executed. If the condition is not met, packets are not logged and statistics against the entry are not incremented.

Filter policy cflowd sampling

Filter policies can be used to control how cflowd sampling is performed on an IP interface. If an IP interface has cflowd sampling enabled, a user can exclude some flows from interface sampling by configuring filter policy rules that match the flows and by disabling interface sampling as part of the filter policy entry configuration. Use the following commands to disable interface sampling:

-

MD-CLI

configure filter ip-filter entry interface-sample false
configure filter ipv6-filter entry interface-sample false

-

classic CLI

configure filter ip-filter entry interface-disable-sample
configure filter ipv6-filter entry interface-disable-sample

If an IP interface has cflowd sampling disabled, a user can enable cflowd sampling on a subset of flows by configuring filter policy rules that match the flows and by enabling cflowd sampling as part of the filter policy entry configurations. Use the following commands to enable cflowd sampling on a subset of flows:

-

MD-CLI

configure filter ip-filter entry filter-sample true
configure filter ipv6-filter entry filter-sample true

-

classic CLI

configure filter ip-filter entry filter-sample
configure filter ipv6-filter entry filter-sample

The preceding cflowd filter sampling behavior is exclusively driven by match criteria. The sampling logic applies regardless of whether an action was executed (including evaluation of conditional action match criteria, for example, packet length or TTL).
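The sampling decision described above can be summarized in a short Python sketch. The `EntrySampling` fields mirror the MD-CLI interface-sample and filter-sample settings, and their defaults are assumed. The types and the function are hypothetical. The function takes only the matched entry as input, which reflects that sampling depends on match criteria and not on whether the action was executed. The text does not describe the case where interface sampling is enabled and filter-sample is true, and the sketch does not treat it specially.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EntrySampling:
    """Hypothetical per-entry sampling settings (defaults assumed)."""
    interface_sample: bool = True   # False excludes matching flows from interface sampling
    filter_sample: bool = False     # True samples matching flows when interface sampling is off


def is_cflowd_sampled(interface_sampling: bool, matched: Optional[EntrySampling]) -> bool:
    """Return True if a packet is sampled; matched is None when no entry matched."""
    if interface_sampling:
        return matched is None or matched.interface_sample
    return matched is not None and matched.filter_sample
```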

Filter policy management

Modifying an existing filter policy

There are several ways to modify an existing filter policy. A filter policy can be modified through a configuration change, or it can have entries populated through dynamic, policy-controlled interfaces; for example, RADIUS, OpenFlow, FlowSpec, or Gx. SR OS generally ensures that filter resources exist before a filter can be modified. However, because of the dynamic nature of the policy-controlled interfaces, a configuration that was accepted may not be applied in hardware because of a lack of resources. When that happens, an error is raised.

A filter policy can be modified directly (by changing, adding, or deleting entries in that filter policy) or indirectly. Examples of indirect changes to a filter policy include changing an entry of an embedded filter that this policy embeds (see the Filter policy scope and embedded filters section) or changing a redirect policy that this filter policy uses.

Finally, a filter policy deployed on a specific interface can be changed by changing the policy the interface is associated with.

All of the preceding changes can be done in service. A filter policy that is associated with service/interface cannot be deleted unless all associations are removed first.

For a large (complex) filter policy change, it may take a few seconds to load and initiate the filter policy configuration. Filter policy changes are downloaded to line cards immediately. Therefore, users should use filter policy copy or transactional CLI to ensure that a partially changed policy is not activated.

Filter policy copy

Perform bulk operations on filter policies by copying one filter’s entries to another filter. Either all entries or a specified entry of the source filter can be selected to copy. When entries are copied, entry order is preserved unless the destination filter’s entry ID is selected (applicable to single-entry copy).

Filter policy copy and renumbering in classic CLI

SR OS supports entry copy and entry renumbering operations to assist in filter policy management.

Use the following commands to copy and overwrite filter entries.

configure filter copy ip-filter

configure filter copy ipv6-filter

configure filter copy mac-filter

The copy command allows overwriting of the existing entries in the destination filter by specifying the overwrite command option when using the copy command. Copy can be used, for example, when creating new policies from existing policies or when modifying an existing filter policy (an existing source policy is copied to a new destination policy, the new destination policy is modified, then the new destination policy is copied back to the source policy with overwrite specified).

Entry renumbering allows you to change the relative order of a filter policy entry by changing the entry ID. Entry renumbering can also be used to move two entries closer together or further apart, thereby creating additional entry space for new entries.
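The copy-modify-copy-back workflow and entry renumbering can be modeled on plain Python dictionaries keyed by entry ID, as in the following sketch. The function names are hypothetical. The conflict handling is an assumption made for illustration: the sketch refuses to copy over existing entries without overwrite and refuses to renumber onto an existing entry ID. It also assumes that a whole-filter copy with overwrite replaces the destination entries.

```python
from typing import Dict, Optional

Filter = Dict[int, str]  # entry ID -> entry definition (simplified to a string)


def copy_filter(src: Filter, dst: Filter, entry_id: Optional[int] = None,
                dst_entry_id: Optional[int] = None, overwrite: bool = False) -> None:
    """Copy all entries, or a single entry, from src into dst."""
    if entry_id is None:
        if dst and not overwrite:
            raise ValueError("destination has entries; overwrite required")
        dst.clear()      # assumption: overwrite replaces the destination entries
        dst.update(src)  # entry IDs, and therefore entry order, are preserved
        return
    # Single-entry copy: keep the source entry ID unless a destination ID is given.
    target = entry_id if dst_entry_id is None else dst_entry_id
    if target in dst and not overwrite:
        raise ValueError(f"entry {target} exists; overwrite required")
    dst[target] = src[entry_id]


def renumber(flt: Filter, old_id: int, new_id: int) -> None:
    """Move an entry to a new entry ID, changing its evaluation order."""
    if new_id in flt:
        raise ValueError(f"entry {new_id} already exists")
    flt[new_id] = flt.pop(old_id)


prod: Filter = {10: "accept tcp 22", 20: "drop udp"}
work: Filter = {}
copy_filter(prod, work)              # copy the live policy to a working policy
renumber(work, 20, 30)               # make room for a new entry
work[20] = "accept udp 53"           # modify the working copy
copy_filter(work, prod, overwrite=True)  # copy the finished policy back
```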

Filter policy advanced topics

Match list for filter policies

The filter match lists ip-prefix-list, ipv6-prefix-list, protocol-list, port-list, ip-packet-length-list, and ipv6-packet-length-list define a list of IP prefixes, IP protocols, TCP-UDP ports, and packet-length values or ranges that can be used as match criteria for line card IP and IPv6 filters. Additionally, ip-prefix-list, ipv6-prefix-list, and port-list can also be used in CPM filters.

A match list simplifies the filter policy configuration with multiple prefixes, protocols, or ports that can be matched in a single filter entry instead of creating an entry for each.

The same match list can be used in one or many filter policies. A change in match list content is automatically propagated across all policies that use that list.
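A match list behaves like a shared, named object that several filter entries reference. The following Python sketch uses a hypothetical `prefix_lists` registry and `entry_matches` helper, and the addresses are documentation examples. It shows a single entry matching any of several prefixes, and how a change to the list is seen by every filter that references it.

```python
import ipaddress
from typing import Dict, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Hypothetical registry of named prefix lists shared by all filter policies.
prefix_lists: Dict[str, List[Network]] = {
    "servers": [ipaddress.ip_network("192.0.2.0/25"),
                ipaddress.ip_network("198.51.100.0/24")],
}

# Two entries in different filter policies reference the same list by name.
filter_a_entry = {"dst-ip": "servers", "action": "accept"}
filter_b_entry = {"dst-ip": "servers", "action": "drop"}


def entry_matches(entry: dict, dst: str) -> bool:
    """A single entry matches if the destination falls in any prefix of the list."""
    addr = ipaddress.ip_address(dst)
    return any(addr in net for net in prefix_lists[entry["dst-ip"]])
```

Appending a prefix to `prefix_lists["servers"]` changes what both entries match, without editing either entry.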

Apply-path

The router supports the autogeneration of IPv4 and IPv6 prefix list entries for BGP peers which are configured in the base router or in VPRN services. Use the following commands to configure the autogeneration of IPv6 or IPv4 prefix list entries.

configure filter match-list ip-prefix-list apply-path

configure filter match-list ipv6-prefix-list apply-path

This capability simplifies the management of CPM filters to allow BGP control traffic from trusted configured peers only. By using the apply-path filter, the user can:

specify one or more regular expression matches per match list, including wildcard matches (".*")

mix auto-generated entries with statically configured entries within a match list

Additional rules are applied when using apply-path as follows:

Operational and administrative states of a specific router configuration are ignored when auto-generating address prefixes.

Duplicates are not removed when populated by different auto-generation matches and static configuration.

Configuration fails if auto-generation of an address prefix results in the filter policy resource exhaustion on a filter entry, system, or line card level.
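The auto-generation rules above can be illustrated with the following Python sketch. It is a hypothetical model and not a description of the SR OS apply-path syntax. The following are assumptions for illustration:

- the `BgpPeer` fields
- matching each regular expression against the BGP group name with a full match
- generating host prefixes (/32 for IPv4, /128 for IPv6)

In line with the rules, peer state is ignored, and static and auto-generated entries are mixed without removing duplicates.

```python
import ipaddress
import re
from dataclasses import dataclass
from typing import List


@dataclass
class BgpPeer:
    router: str       # "Base" or a VPRN service name (hypothetical labels)
    group: str
    address: str
    admin_up: bool    # deliberately ignored: state does not affect auto-generation


def build_prefix_list(peers: List[BgpPeer], group_regexes: List[str],
                      static_prefixes: List[str], version: int = 4) -> List[str]:
    """Mix static entries with auto-generated host prefixes; duplicates are kept."""
    entries = list(static_prefixes)
    for pattern in group_regexes:
        for peer in peers:
            addr = ipaddress.ip_address(peer.address)
            # Only peers of the list's address family are added; admin_up is not checked.
            if addr.version == version and re.fullmatch(pattern, peer.group):
                entries.append(f"{addr}/{addr.max_prefixlen}")
    return entries
```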

Prefix-exclude

A prefix can be excluded from an IPv4 or IPv6 prefix list by using the prefix-exclude command.

For example, when the user needs to drop or forward traffic to 10.0.0.0/16 with the exception of 10.0.2.0/24, the following options are available.

By applying prefix-exclude, a single IP prefix list with two prefixes is configured:

MD-CLI

[ex:/configure filter match-list]
A:admin@node-2# info
    ip-prefix-list "list-1" {
        prefix 10.0.0.0/16 { }
        prefix-exclude 10.0.2.0/24 { }
    }

classic CLI

A:node-2>config>filter>match-list# info
----------------------------------------------
    ip-prefix-list "list-1" create
        prefix 10.0.0.0/16
        prefix-exclude 10.0.2.0/24
    exit
----------------------------------------------

Without applying prefix-exclude, all eight included subnets should be manually configured in the IP prefix list. The following example shows the manual configuration of an IP prefix list.

MD-CLI

[ex:/configure filter match-list]
A:admin@node-2# info
    ip-prefix-list "list-1" {
        prefix 10.0.0.0/23 { }
        prefix 10.0.3.0/24 { }
        prefix 10.0.4.0/22 { }
        prefix 10.0.8.0/21 { }
        prefix 10.0.16.0/20 { }
        prefix 10.0.32.0/19 { }
        prefix 10.0.64.0/18 { }
        prefix 10.0.128.0/17 { }
    }

classic CLI

A:node-2>config>filter>match-list# info
----------------------------------------------
    ip-prefix-list "list-1" create
        prefix 10.0.0.0/23
        prefix 10.0.3.0/24
        prefix 10.0.4.0/22
        prefix 10.0.8.0/21
        prefix 10.0.16.0/20
        prefix 10.0.32.0/19
        prefix 10.0.64.0/18
        prefix 10.0.128.0/17
    exit
----------------------------------------------

This is a time-consuming and error-prone task compared to using the prefix-exclude command.

The filter resources, consumed in hardware, are identical between the two configurations.

A filter match-list using prefix-exclude is mutually exclusive with apply-path, and is not supported as a match criterion in CPM filter.

Configured prefix-exclude prefixes are ignored when no overlapping larger subnet is configured in the prefix list. For example, prefix-exclude 1.1.1.0/24 is ignored if the only included subnet is 10.0.0.0/16.
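The arithmetic behind the eight manually configured subnets can be checked with Python's standard `ipaddress` module, as in the following sketch. `expand_prefix_list` is a hypothetical helper, not an SR OS function. It subtracts each excluded prefix from the included prefixes that contain it. Consistent with the preceding note, it ignores an excluded prefix that does not overlap any included prefix.

```python
import ipaddress
from typing import Iterable, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def expand_prefix_list(prefixes: Iterable[str], excludes: Iterable[str]) -> List[Network]:
    """Return an equivalent prefix list that needs no prefix-exclude entries."""
    result: List[Network] = [ipaddress.ip_network(p) for p in prefixes]
    for ex in map(ipaddress.ip_network, excludes):
        remaining: List[Network] = []
        for net in result:
            if net.version != ex.version:
                remaining.append(net)               # different address family
            elif ex.subnet_of(net):
                # Split net into the subnets that do not overlap ex.
                remaining.extend(net.address_exclude(ex))
            elif net.subnet_of(ex):
                continue                            # net is fully covered by the exclusion
            else:
                remaining.append(net)               # no overlap: exclusion ignored here
        result = remaining
    return sorted(result, key=lambda n: (n.version, n))


if __name__ == "__main__":
    # Prints the eight subnets from the manual example, from 10.0.0.0/23 to 10.0.128.0/17.
    for net in expand_prefix_list(["10.0.0.0/16"], ["10.0.2.0/24"]):
        print(net)
```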

Filter policy scope and embedded filters

The system supports four filter policy scopes:

-

scope template

-

scope exclusive

-

scope embedded

-

scope system

Each scope provides different characteristics and capabilities to deploy a filter policy on a single interface, multiple interfaces or optimize the use of system resources or the management of the filter policies when sharing a common set of filter entries.

Template and exclusive

A scope template filter policy can be reused across multiple interfaces. This filter policy uses a single set of resources per line card regardless of how many interfaces use it. Template filter policies used on access interfaces consume resources only on line cards where the access interfaces are configured. A scope template filter policy is the most common type of filter policy configured in a router.

A scope exclusive filter policy defines a filter dedicated to a single interface. An exclusive filter allows the highest level of customization but uses the most resources on the system line cards as it cannot be shared with other interfaces.

Embedded

To simplify the management of filters sharing a common set of filter entries, the user can create a scope embedded filter policy. This filter can then be included in (embedded into) a scope template, scope exclusive, or scope system filter.

Using a scope embedded filter, a common set of filter entries can be updated in a single place and deployed across multiple filter policies. The scope embedded is supported for IPv4 and IPv6 filter policies.

A scope embedded filter policy is not directly downloaded to a line card and cannot be directly referenced in an interface. However, this policy helps the network user provision a common set of rules across different filter policies.

The following rules apply when using a scope embedded filter policy:

-

The user explicitly defines the offset at which to insert a filter of scope embedded in a template, exclusive, or system filter. The embedded filter entry-id X becomes entry-id (X + offset) in the main filter.

-

Multiple filter policies of scope embedded can be included in (embedded into) a single filter policy of scope template, exclusive, or system.

-

The same scope embedded filter policy can be included in multiple filter policies of scope template, exclusive, or system.

-

Configuration modifications to embedded filter policy entries are automatically applied to all filter policies that embed this filter.

-

The system performs a resource management check when a filter policy of scope embedded is updated or embedded in a new filter. If resources are not available, the configuration is rejected. In rare cases, a filter policy resource check may pass but the filter policy can still fail to load because of a resource exhaustion on a line card (for example, when other filter policy entries are dynamically configured by applications like RADIUS in parallel). If that is the case, the embedded filter policy configured is deactivated (configuration is changed from activate to inactivate).

-

An embedded filter is never partially embedded in a single filter; resources must exist to embed all of its entries in a specific exclusive, template, or system filter. However, an embedded filter may be embedded in only a subset of the filters that reference it, namely those where sufficient resources are available.

-

Overlapping of filter entries between an embedded filter and a filter of scope template, exclusive or system filter can happen but should be avoided. It is recommended instead that network users use a large enough offset value and an appropriate filter entry-id in the main filter policy to avoid overlapping. In case of overlapping entries, the main filter policy entry overwrites the embedded filter entry.

-

Configuring a default action in a filter of scope embedded is not required as this information is not used to embed filter entries.

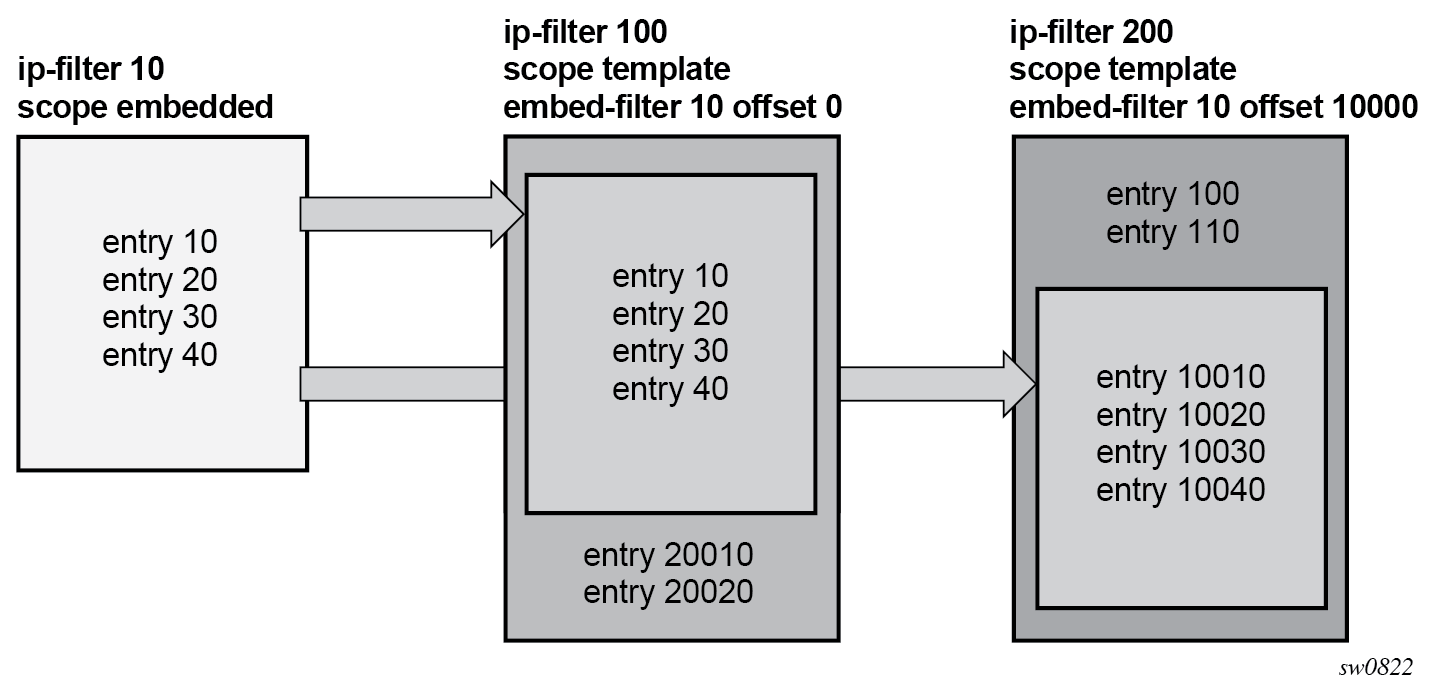

Embedded Filter Policy shows a configuration with two filter policies of scope template, filters 100 and 200, each of which embeds filter policy 10 at a different offset:

-

Filter policy 100 and 200 are of scope template.

-

Filter policy 10 of scope embedded is configured with 4 filter entries: entry-id 10, 20, 30, 40.

-

Filter policy 100 embeds filter 10 at offset 0 and includes two additional static entries with entry-id 20010 and 20020.

-

Filter policy 200 embeds filter 10 at offset 10000 and includes two additional static entries with entry-id 100 and 110.

-

As a result, filter 100 automatically creates entries 10, 20, 30, and 40, while filter 200 automatically creates entries 10010, 10020, 10030, and 10040. Filter policies 100 and 200 consume a total of 12 entries when both policies are installed on the same line card.

Scope embedded filter configuration (MD-CLI)

[ex:/configure filter]
A:admin@node-2# info
    ...
    ip-filter "10" {
        scope embedded
        entry 10 {
        }
        entry 20 {
        }
        entry 30 {
        }
        entry 40 {
        }
    }
    ip-filter "100" {
        scope template
        entry 20010 {
        }
        entry 20020 {
        }
        embed {
            filter "10" offset 0 {
            }
        }
    }
    ip-filter "200" {
        scope template
        entry 100 {
        }
        entry 110 {
        }
        embed {
            filter "10" offset 10000 {
            }
        }
    }

Scope embedded filter configuration (classic CLI)

A:node-2>config>filter# info
----------------------------------------------
    ip-filter 10 name "10" create
        scope embedded
        entry 10 create
        exit
        entry 20 create
        exit
        entry 30 create
        exit
        entry 40 create
        exit
    exit
    ip-filter 100 name "100" create
        scope template
        embed-filter 10
        entry 20010 create
        exit
        entry 20020 create
        exit
    exit
    ip-filter 200 name "200" create
        scope template
        embed-filter 10 offset 10000
        entry 100 create
        exit
        entry 110 create
        exit
    exit
----------------------------------------------
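The entry-ID arithmetic in the preceding embedded filter example can be reproduced with a short Python sketch. `effective_entries` is a hypothetical helper. It places each embedded entry X at X + offset and then applies the main filter's static entries, which overwrite any overlapping embedded entry as described in the embedding rules. The text does not cover overlaps between two embedded filters, and the sketch simply keeps whichever entry was written last.

```python
from typing import Dict, List, Tuple


def effective_entries(static_ids: List[int],
                      embeds: List[Tuple[List[int], int]]) -> Dict[int, str]:
    """Return entry ID -> origin ("embedded" or "static") for one main filter.

    embeds is a list of (embedded filter entry IDs, offset) pairs.
    """
    entries: Dict[int, str] = {}
    for embedded_ids, offset in embeds:
        for entry_id in embedded_ids:
            entries[entry_id + offset] = "embedded"  # entry X becomes X + offset
    for entry_id in static_ids:
        entries[entry_id] = "static"                 # main filter entry wins on overlap
    return dict(sorted(entries.items()))


filter_10 = [10, 20, 30, 40]                          # scope embedded
filter_100 = effective_entries([20010, 20020], [(filter_10, 0)])
filter_200 = effective_entries([100, 110], [(filter_10, 10000)])
```

Together, `filter_100` and `filter_200` hold the 12 entries stated in the example.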

System

The scope system filter policy provides the most optimized use of hardware resources by programming its filter entries once on the line cards, regardless of how many IPv4 or IPv6 filter policies of scope template or exclusive use this filter. The system filter policy entries are not duplicated inside each policy that uses it; instead, template or exclusive filter policies can be chained to the system filter using the chain-to-system-filter command.

When a template or exclusive filter policy is chained to the system filter, system filter rules are evaluated first, before any rules of the chaining filter (that is, the chaining filter's rules are only evaluated if no system filter match took place).

The system filter policy is intended primarily to deploy a common set of system-level deny rules and infrastructure-level filtering rules to allow, block, or rate limit traffic. Other actions, such as PBR actions or redirection to ISAs, should not be used unless the system filter policy is activated only in filters used by services that support such actions. The NAT action is not supported and should not be configured. Failure to observe these restrictions can lead to unwanted behavior, because system filter actions are not verified against the services for which the chaining filters are deployed. System filter policy entries also cannot be the sources of mirroring.

System filter policies can be populated using CLI, SNMP, NETCONF, OpenFlow and FlowSpec. System filter policy entries cannot be populated using RADIUS or Gx.

The following example shows the configuration of an IPv4 system filter:

- System filter policy 10 includes a single entry to rate limit NTP traffic to the Infrastructure subnets.
- Filter policy 100 of scope template is configured to use the system filter using the chain-to-system-filter command.

IPv4 system filter configuration (MD-CLI)

[ex:/configure filter]
A:admin@node-2# info
    ip-filter "10" {
        scope system
        entry 10 {
            description "Rate Limit NTP to the Infrastructure"
            match {
                protocol udp
                dst-ip {
                    ip-prefix-list "Infrastructure IPs"
                }
                dst-port {
                    eq 123
                }
            }
            action {
                accept
                rate-limit {
                    pir 2000
                }
            }
        }
    }
    ip-filter "100" {
        description "Filter scope template for network interfaces"
        chain-to-system-filter true
    }
    system-filter {
        ip "10" { }
    }

IPv4 system filter configuration (classic CLI)

A:node-2>config>filter# info
----------------------------------------------
        ip-filter 10 name "10" create
            scope system
            entry 10 create
                description "Rate Limit NTP to the Infrastructure"
                match protocol udp
                    dst-ip ip-prefix-list "Infrastructure IPs"
                    dst-port eq 123
                exit
                action
                    rate-limit 2000
                exit
            exit
        exit
        ip-filter 100 name "100" create
            chain-to-system-filter
            description "Filter scope template for network interfaces"
        exit
        system-filter
            ip 10
        exit
----------------------------------------------

Filter policy type

The filter policy type defines the list of match criteria available in a filter policy. It provides filtering flexibility by reallocating the line card CAM at the filter policy level, so that traffic can be filtered using additional match criteria that are not available with the normal filter type. The filter type is specific to the filter policy; it is not a system-wide or line card command option. You can configure different filter policy types on different interfaces of the same system and line card.

MAC filters support three filter types: normal, ISID, and VID.

IPv4 and IPv6 filters support four filter types: normal, source MAC, packet length, and destination class.

IPv4, IPv6 filter type source MAC

This filter policy type provides source MAC match criterion for IPv4 and IPv6 filters.

The following match criteria are not available for filter entries in a source MAC filter policy type:

- IPv4: source IP, DSCP, IP option, option present, multiple option, source-route option
- IPv6: source IP

For a QoS policy assigned to the same service or interface endpoint as a filter policy of type source MAC, QoS IP criteria cannot use source IP or DSCP and QoS IPv6 criteria cannot use source IP.

Filter type source MAC is available for egress filtering on VPLS services only. R-VPLS endpoints are not supported.

Dynamic filter entry embedding using OpenFlow, FlowSpec, and VSD is not supported with this filter type.

IPv4, IPv6 filter type packet-length

The following match criteria are available with the packet-length filter type, in addition to the match criteria available with the normal filter type:

- packet length: the total packet length, including both the IP header and the payload, for IPv4 and IPv6 ingress and egress filter policies
- TTL or hop limit: available for ingress filter policies on FP4-based cards; if configured on FP2- or FP3-based cards, the TTL or hop-limit part of the filter entry match criteria is not programmed on the line card
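As a reminder of what total packet length means for each address family, the following Python sketch derives it from the IP header fields. It relies only on the IPv4 and IPv6 header formats (RFC 791 and RFC 8200). The IPv4 Total Length field already covers both header and payload. The IPv6 Payload Length field excludes the 40-byte fixed header. This section does not describe how the line card computes the value, so `total_packet_length` is a hypothetical helper, not SR OS code.

```python
import struct

IPV6_FIXED_HEADER_LEN = 40  # bytes, per RFC 8200


def total_packet_length(header: bytes) -> int:
    """Return IP header + payload length in bytes, read from a raw IP header."""
    version = header[0] >> 4
    if version == 4:
        # Total Length (bytes 2-3) already includes the IPv4 header.
        (total_length,) = struct.unpack_from("!H", header, 2)
        return total_length
    if version == 6:
        # Payload Length (bytes 4-5) excludes the 40-byte fixed header.
        (payload_length,) = struct.unpack_from("!H", header, 4)
        return payload_length + IPV6_FIXED_HEADER_LEN
    raise ValueError(f"unsupported IP version {version}")


# Hand-built headers: a 20-byte IPv4 header declaring an 84-byte packet,
# and a 40-byte IPv6 header declaring a 64-byte payload.
ipv4_hdr = bytes([0x45, 0x00]) + struct.pack("!H", 84) + bytes(16)
ipv6_hdr = bytes([0x60, 0x00, 0x00, 0x00]) + struct.pack("!H", 64) + bytes(34)

print(total_packet_length(ipv4_hdr))  # 84
print(total_packet_length(ipv6_hdr))  # 104
```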

The following match criteria are not available for filter entries in a packet-length type filter policy:

- IPv4: DSCP, IP option, option present, multiple option, source-route option
- IPv6: flow label

For a QoS policy assigned on egress to the same service or interface endpoint as a packet-length type filter policy, QoS IP criteria cannot use DSCP match criteria. There is no such restriction on ingress.

This filter type is available for both ingress and egress on all service and router interface endpoints, with the exception of video ISA, service templates, and PW templates.

Dynamic filter entry embedding using OpenFlow and VSD is not supported using this filter type.

IPv4, IPv6 filter type destination-class

This filter policy type provides a BGP destination-class value match criterion for egress IPv4 and IPv6 filters, and is supported on network, IES, VPRN, and R-VPLS interfaces.

The following match criteria from the normal filter type are not available with the destination-class filter type:

- IPv4: DSCP, IP option, option present, multiple option, source-route option
- IPv6: flow label

Egress filtering on destination class requires the destination-class-lookup command to be enabled on the interface on which the packet ingresses. For a QoS policy or filter policy assigned to the same interface, the DSCP remarking action is performed only if no destination class was identified for the packet.

System filters, as well as dynamic filter embedding using OpenFlow, FlowSpec, and VSD, are not supported using this filter type.
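The match criteria restrictions listed for the source MAC, packet-length, and destination-class types can be summarized as a lookup table. The following Python sketch transcribes the lists from this section and checks a proposed set of entry criteria against them. The criterion names and the `unavailable_criteria` helper are hypothetical labels, not SR OS command keywords. The sketch covers only the restrictions, not the extra criteria each type adds (source MAC, packet length, TTL or hop limit, destination class).

```python
# Match criteria that are NOT available per (address family, filter type),
# transcribed from the lists in this section. Names are descriptive labels
# chosen for this sketch, not CLI keywords.
IPV4_DSCP_AND_OPTIONS = frozenset({
    "dscp", "ip-option", "option-present", "multiple-option", "source-route-option",
})

UNAVAILABLE = {
    ("ipv4", "normal"): frozenset(),
    ("ipv6", "normal"): frozenset(),
    ("ipv4", "src-mac"): IPV4_DSCP_AND_OPTIONS | {"src-ip"},
    ("ipv6", "src-mac"): frozenset({"src-ip"}),
    ("ipv4", "packet-length"): IPV4_DSCP_AND_OPTIONS,
    ("ipv6", "packet-length"): frozenset({"flow-label"}),
    ("ipv4", "destination-class"): IPV4_DSCP_AND_OPTIONS,
    ("ipv6", "destination-class"): frozenset({"flow-label"}),
}


def unavailable_criteria(family, filter_type, criteria):
    """Return the subset of `criteria` that the filter type does not support."""
    return set(criteria) & UNAVAILABLE[(family, filter_type)]


print(sorted(unavailable_criteria("ipv4", "src-mac", {"src-ip", "dst-ip", "protocol"})))
print(sorted(unavailable_criteria("ipv6", "packet-length", {"flow-label", "dst-port"})))
```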

IPv4 and IPv6 filter type and embedding

An IPv4 or IPv6 filter policy of scope embedded must have the same type as the main filter policy (of scope template, exclusive, or system) that embeds it:

- If this condition is not met, the filter cannot be embedded.
- When embedded, the main filter policy cannot change its filter type if one of the embedded filters is of a different type.
- When embedded, the embedded filter cannot change its filter type to one that does not match the main filter policy.

Similarly, the system filter type must be identical to the type of the template or exclusive filter to allow chaining with the chain-to-system-filter command.
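The type-matching rules for embedding and chaining reduce to equality checks on the filter type. The following Python sketch is a hypothetical model of these rules; the `FilterPolicy` class and the function names are illustrative, not SR OS APIs. It covers embedding, chaining, and changing the main filter's type. It does not model the symmetric check on the embedded filter's own type change.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FilterPolicy:
    name: str
    scope: str          # "template", "exclusive", "embedded", or "system"
    filter_type: str    # for example "normal", "src-mac", "packet-length"
    embedded: List["FilterPolicy"] = field(default_factory=list)
    system_filter: Optional["FilterPolicy"] = None


def embed(main: FilterPolicy, sub: FilterPolicy) -> None:
    # Template, exclusive, or system filters can embed; types must match.
    if main.scope not in ("template", "exclusive", "system"):
        raise ValueError(f"{main.name} cannot embed other filters")
    if sub.scope != "embedded" or sub.filter_type != main.filter_type:
        raise ValueError(f"cannot embed {sub.name} in {main.name}")
    main.embedded.append(sub)


def chain_to_system_filter(main: FilterPolicy, system: FilterPolicy) -> None:
    # Chaining requires a template or exclusive filter of the same type.
    if main.scope not in ("template", "exclusive") or system.scope != "system":
        raise ValueError(f"cannot chain {main.name} to {system.name}")
    if main.filter_type != system.filter_type:
        raise ValueError(f"type mismatch between {main.name} and {system.name}")
    main.system_filter = system


def change_type(main: FilterPolicy, new_type: str) -> None:
    # The main filter cannot move away from the type of its embedded filters.
    if any(sub.filter_type != new_type for sub in main.embedded):
        raise ValueError(f"{main.name}: type conflicts with an embedded filter")
    main.filter_type = new_type


emb = FilterPolicy("10", "embedded", "normal")
tmpl = FilterPolicy("100", "template", "normal")
sys_pl = FilterPolicy("20", "system", "packet-length")  # hypothetical system filter

embed(tmpl, emb)  # accepted: both filters are of type normal
for attempt in (lambda: change_type(tmpl, "packet-length"),
                lambda: chain_to_system_filter(tmpl, sys_pl)):
    try:
        attempt()
    except ValueError as err:
        print(err)
```

Both rejected operations leave filter 100 unchanged. It keeps type normal and is not chained to the packet-length system filter.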

Rate limit and shared policer

By default, when a user assigns a filter policy to a LAG endpoint, the system allocates the same user-configured rate limit policer value for each FP of the LAG.

The shared policer feature changes this default behavior. When it is set to true, the filter policy can only be assigned to endpoints of the same LAG, and the configured rate limit policer value is shared between the LAG complexes based on the number of active LAG ports on each complex.

The shared policer feature is supported for IPv4 and IPv6 filter policies with the template or exclusive scope, in ingress and egress directions.
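The following Python sketch illustrates how the shared rate might be split across complexes. It assumes the rate is divided in proportion to each complex's share of the LAG's active ports. The proportional formula, the kbps unit, and the FP labels are assumptions for illustration. This section does not specify the exact distribution algorithm SR OS uses.

```python
from fractions import Fraction


def shared_policer_rates(pir_kbps, active_ports_per_complex):
    """Split a filter rate-limit PIR across the complexes of a LAG.

    Assumption: each complex receives a share proportional to the number
    of active LAG ports it hosts; complexes with no active ports get 0.
    """
    total = sum(active_ports_per_complex.values())
    if total == 0:
        return {fp: Fraction(0) for fp in active_ports_per_complex}
    return {
        fp: Fraction(pir_kbps * ports, total)
        for fp, ports in active_ports_per_complex.items()
    }


# Hypothetical LAG with two active ports on one FP and one on another.
rates = shared_policer_rates(3000, {"card1/fp1": 2, "card2/fp1": 1})
print({fp: int(rate) for fp, rate in rates.items()})
```

With the default (non-shared) behavior, each of these two FPs would instead be programmed with the full 3000 kbps. The LAG as a whole could then pass up to twice the configured rate.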

Filter policies and dynamic policy-driven interfaces

Filter policy entries can be statically configured using CLI, SNMP, or NETCONF, or dynamically created using BGP FlowSpec, OpenFlow, VSD (XMPP), or RADIUS/Diameter for ESM subscribers.

Dynamic filter entries for FlowSpec, OpenFlow, and VSD can be inserted into an IPv4 or IPv6 filter policy. The filter policy must be either exclusive or a template. Additionally, FlowSpec embedding is supported when using a filter policy that defines system-wide filter rules.

BGP FlowSpec

BGP FlowSpec routes are associated with a specific routing instance (based on the AFI/SAFI and possibly VRF import policies) and can be used to create filter entries in a filter policy dynamically.

Configure FlowSpec embedding using the following contexts:

- MD-CLI
    configure filter ip-filter embed flowspec
    configure filter ipv6-filter embed flowspec
- classic CLI
    configure filter ip-filter embed-filter flowspec
    configure filter ipv6-filter embed-filter flowspec

The following rules apply to FlowSpec embedding:

- The user explicitly defines both the offset at which to insert FlowSpec filter entries and the router instance to which the FlowSpec routes belong. The embedded FlowSpec filter entry ID is chosen by the system, in accordance with RFC 5575, Dissemination of Flow Specification Rules.
  Note: These entry IDs are not necessarily sequential and do not necessarily follow the order in which rules are received.
- The user can configure the maximum number of FlowSpec filter entries in a specific filter policy at the router or VPRN level using the ip-filter-max-size and ipv6-filter-max-size commands. This limit defines the boundary for FlowSpec embedding in a filter policy (the offset and maximum number of IPv4 or IPv6 FlowSpec routes).
- When the user configures a template or exclusive filter policy, the router instance defined in the dynamic filter entry for FlowSpec must match the router instance of the interface to which the filter policy is applied.
- When using a filter policy that defines system-wide rules, embedding FlowSpec entries from different router instances is allowed, and the entries can be applied to any router interface.
- See section IPv4/IPv6 filter policy entry match criteria on embedded filter scope for recommendations on filter entry ID spacing and overlapping of entries.
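The router-instance rules above amount to a check that depends on the filter scope. The following Python sketch is a hypothetical model of that check. The function name, parameter names, and the VPRN name "vprn-1" are illustrative, not SR OS configuration commands.

```python
def flowspec_embedding_allowed(filter_scope, flowspec_router_instance,
                               interface_router_instance):
    """Return True if FlowSpec entries from `flowspec_router_instance` may be
    applied through a filter on an interface of `interface_router_instance`."""
    if filter_scope == "system":
        # System-wide rules: entries from any router instance may be embedded
        # and applied to any router interface.
        return True
    if filter_scope in ("template", "exclusive"):
        # The FlowSpec router instance must match the interface's instance.
        return flowspec_router_instance == interface_router_instance
    # FlowSpec embedding is described only for template, exclusive, and
    # system filters.
    return False


print(flowspec_embedding_allowed("template", "Base", "Base"))
print(flowspec_embedding_allowed("exclusive", "Base", "vprn-1"))
print(flowspec_embedding_allowed("system", "vprn-1", "Base"))
```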

The FlowSpec configuration example that follows is set up as described below:

- The maximum number of FlowSpec routes in the base router instance is set to 50,000 entries using the ip-filter-max-size command.
- Filter policy 100 (scope template) is configured to embed FlowSpec routes from the base router instance at offset 100,000. The offset chosen in this example avoids overlap with statically defined entries in the same policy. In this case, static entries can use the entry ID ranges 1-99999 and 150000-2M, before or after the FlowSpec filter entries.

The following example shows the FlowSpec configuration.

FlowSpec configuration (MD-CLI)

[ex:/configure router "Base"]
A:admin@node-2# info
    flowspec {
        ip-filter-max-size 50000
    }

[ex:/configure filter ip-filter "100"]
A:admin@node-2# info
...
    ip-filter "100" {
        embed {
            flowspec offset 100000 {
                router-instance "Base"
            }
        }
    }

FlowSpec configuration (classic CLI)

A:node-2>config>router# info
----------------------------------------------
        flowspec
            ip-filter-max-size 50000
        exit
----------------------------------------------

A:7750>config>filter# info
----------------------------------------------
        ip-filter 100 name "100" create
            embed-filter flowspec router "Base" offset 100000
        exit
----------------------------------------------
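The entry ID arithmetic behind this example can be checked with a short Python sketch. It assumes that the FlowSpec block starts at the configured offset and spans at most ip-filter-max-size entry IDs. That assumption is consistent with the lower static range of 1-99999 stated for this example. The helper name `flowspec_id_block` is hypothetical.

```python
def flowspec_id_block(offset, max_size):
    """Return (first, last) entry IDs reserved for embedded FlowSpec entries.

    Assumption: the block starts at `offset` and spans `max_size` IDs.
    """
    return offset, offset + max_size - 1


first, last = flowspec_id_block(offset=100_000, max_size=50_000)
print(first, last)                        # 100000 149999
print("static below: 1 -", first - 1)     # static below: 1 - 99999
print("static above from:", last + 1)     # static above from: 150000
```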

OpenFlow

The embedded filter infrastructure is used to insert OpenFlow rules into an existing filter policy. See Hybrid OpenFlow switch for more information. Policy-controlled auto-created filters are re-created on system reboot. Policy-controlled filter entries are lost on system reboot and must be reprogrammed.

VSD

VSD filters are created dynamically using XMPP and managed using a Python script so rules can be inserted into or removed from the correct VSD template or embedded filters. XMPP messages received by the 7750 SR are passed transparently to the Python module to generate the appropriate CLI. See the 7450 ESS, 7750 SR, 7950 XRS, and VSR Layer 2 Services and EVPN Guide for more information about VSD filter provisioning, automation, and Python scripting details.

RADIUS or Diameter for subscriber management

The user can assign filter policies or filter entries used by a subscriber within a preconfigured filter entry range defined for RADIUS or Diameter. See the 7450 ESS, 7750 SR, and VSR Triple Play Service Delivery Architecture Guide and filter RADIUS-related commands for more information.