Port Cross-Connect

PXC terminology

The following describes Port Cross-Connect (PXC) terminology:

- Port Cross-Connect (PXC)

  PXC is a software concept representing a pair of logical ports interconnecting egress and ingress forwarding paths within the same forwarding complex.

  The physical underpinning of a PXC can be either of the following:

  - a faceplate (physical) port in a loopback mode

    The PXC is referred to as a port-based PXC. Multiple PXCs can be created on a single faceplate port.

  - a loopback configuration in the MAC chip

    The PXC is referred to as an internal or MAC-based PXC. Multiple PXCs can be created on a single MAC loopback.

- PXC sub-port

  A PXC sub-port is a logical port that is created under the PXC. Two interconnected PXC sub-ports are created per PXC. This is further described in Port-based PXC.

- Forwarding Complex (FC)

  An FC is a chipset connected to a set of faceplate ports that processes traffic in the ingress direction (the ingress path) and the egress direction (the egress path). A line card can contain multiple FCs for increased throughput; however, a single FC cannot be distributed over multiple line cards.

The terms cross-connect and loopback can be used interchangeably.

Overview

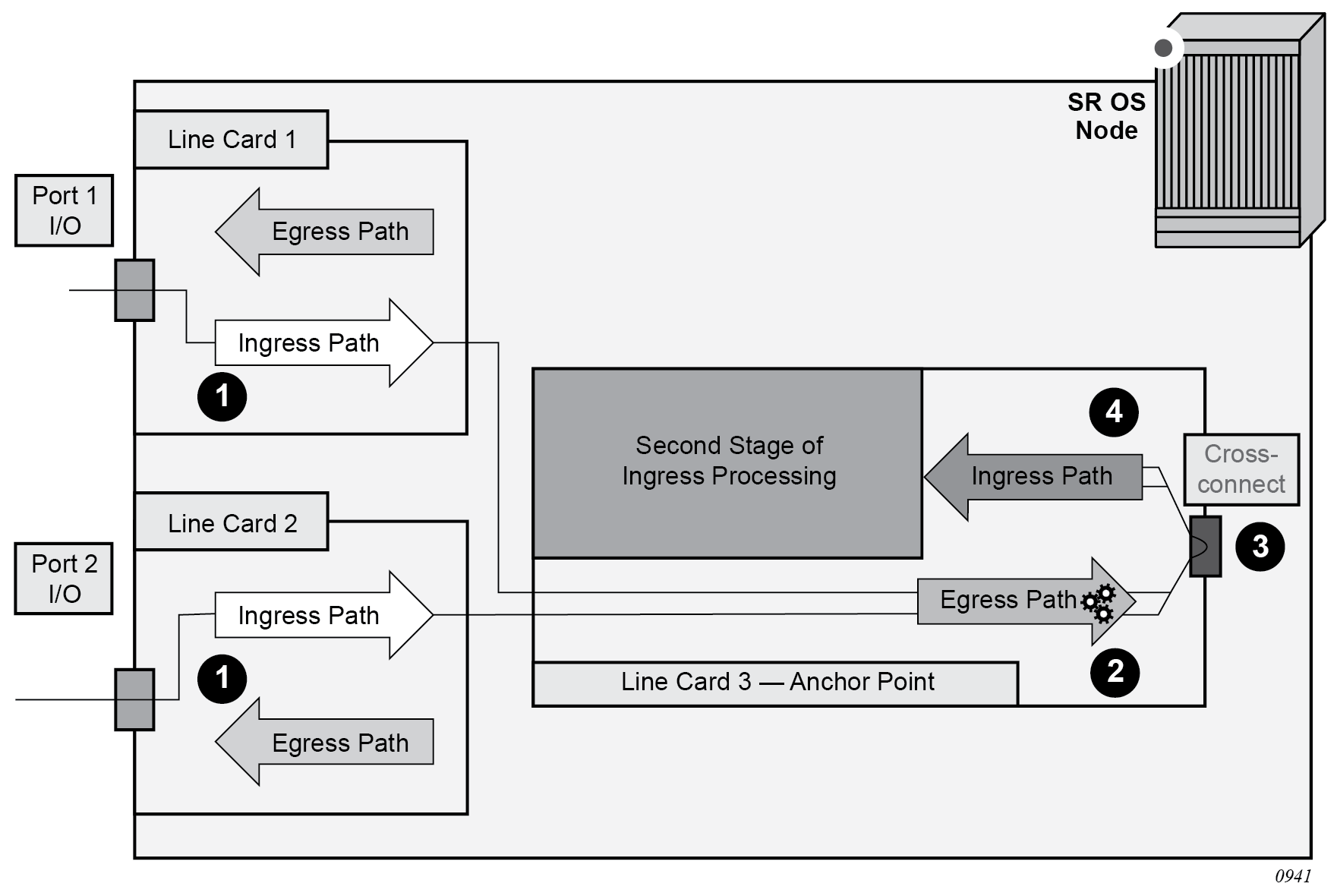

This section describes the Port Cross-Connect (PXC) feature implementation. PXC is a software concept representing a pair of logical ports interconnecting egress and ingress forwarding paths within the same forwarding complex (FC). With a cross-connect, an egress forwarding path is looped back to the ingress forwarding path on the same forwarding complex instead of leading out of the system. The FC is a chipset connected to a set of faceplate ports that processes traffic in the ingress direction (the ingress path) and the egress direction (the egress path). A line card can contain multiple FCs for increased throughput, but a single FC cannot be distributed over multiple line cards.

The most common use for a cross-connect configuration is to process traffic entering the node. In this case, traffic passes through the ingress path twice. The first ingress pass is always on the FC on which traffic enters the node (an ingress line card), while the second ingress pass, achieved through the cross-connect, can be on any forwarding complex. The user can choose to co-locate the ingress line card and the line card hosting the cross-connect. In this co-located case, traffic is looped through the same ingress forwarding path twice.

The reasons for dual-stage ingress processing are related to the manipulation of multilayer headers in the frame within the service termination context. In some instances, this operation is too complex to perform in a single stage. Traffic preprocessing using PXC shows how traffic is fed from the first ingress stage to the second through the cross-connect. A cross-connect can be created in two ways:

- using a faceplate (physical) port in a loopback mode
- using a loopback configuration in the MAC chip, which does not require a faceplate port

In both cases, the cross-connect is modeled in the system and in the CLI as a port, hence the name Port Cross-Connect (PXC).

Conceptually, PXC functionality is similar to the functionality provided by two externally interconnected faceplate ports where traffic exits the system through one port (the egress path) and is immediately looped back into another port (the ingress path) through a cable.

Traffic preprocessing using PXC shows the traffic flow from the first to the second stage through a cross-connect in a system with PXC:

- Traffic entering a node through a faceplate port is processed by the local ingress forwarding path (1) on line cards 1 and 2. Traffic is then directed toward the PXC (3) on line card 3.
- The PXC (3) loops the traffic from the local egress path (2) into the local ingress forwarding path (4), where it is further processed.

Port-based PXC

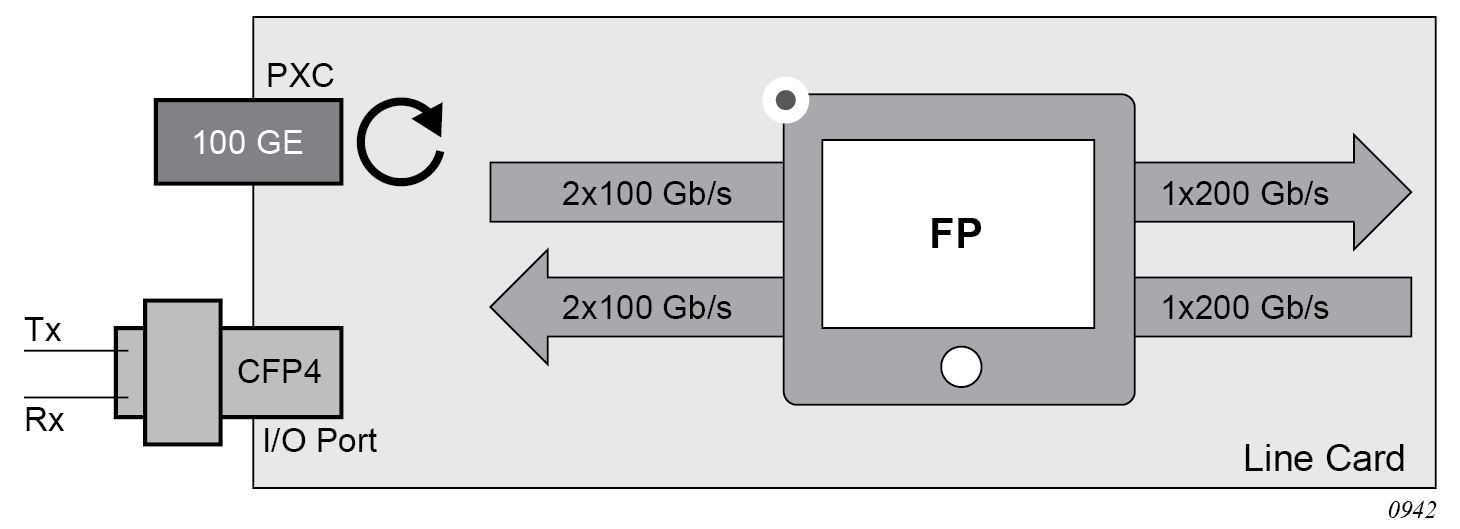

The concept of a port-based PXC (a PXC based on a faceplate port in loopback mode) is shown in Port-based PXC. This PXC does not require an optical transceiver.

Place the faceplate port into a cross-connect mode (MD-CLI)

```
[ex:/configure]
A:admin@node-2# info
    port-xc {
        pxc 1 {
            admin-state enable
            port-id 1/x1/1/c1/1
        }
    }
```

Place the faceplate port into a cross-connect mode (classic CLI)

```
A:node-2>config>port-xc# info
----------------------------------------------
        pxc 1 create
            port 1/x1/1/c1/1
            no shutdown
        exit
    exit
----------------------------------------------
```

Multiple PXCs on the same underlying cross-connect configuration (MD-CLI)

```
[ex:/configure]
A:admin@node-2# info
    port-xc {
        pxc 1 {
            admin-state enable
            port-id 1/x1/1/c1/1
        }
        pxc 2 {
            admin-state enable
            port-id 1/x1/1/c1/1
        }
        pxc 3 {
            admin-state enable
            port-id 1/x1/1/c1/1
        }
    }
```

Multiple PXCs on the same underlying cross-connect configuration (classic CLI)

```
A:node-2>config>port-xc# info
----------------------------------------------
        pxc 1 create
            port 1/x1/1/c1/1
            no shutdown
        exit
        pxc 2 create
            shutdown
            port 1/x1/1/c1/1
        exit
        pxc 3 create
            shutdown
            port 1/x1/1/c1/1
        exit
    exit
```

A faceplate port that has been placed in loopback mode for PXC use supports only the hybrid mode of operation and dot1q encapsulation. It is recommended to configure the MTU to the maximum value. dot1x tunneling is enabled and cannot be changed.

The preset dot1q Ethernet encapsulation on the faceplate port is irrelevant from the user’s perspective, and there is no need to change it. The relevant encapsulation, which carries the service tags, is defined on the PXC sub-ports and is configurable. For more information, see PXC sub-ports.

The following guidelines apply to a PXC configuration based on faceplate ports:

- Only unused faceplate ports (not associated with an interface or SAP) can be referenced within a PXC ID configuration.
- When the faceplate port is allocated to a PXC, it cannot be used outside of the PXC context. For example, an IP interface cannot use the faceplate port directly, nor can a SAP on such a port be associated with an Epipe or VPLS service.

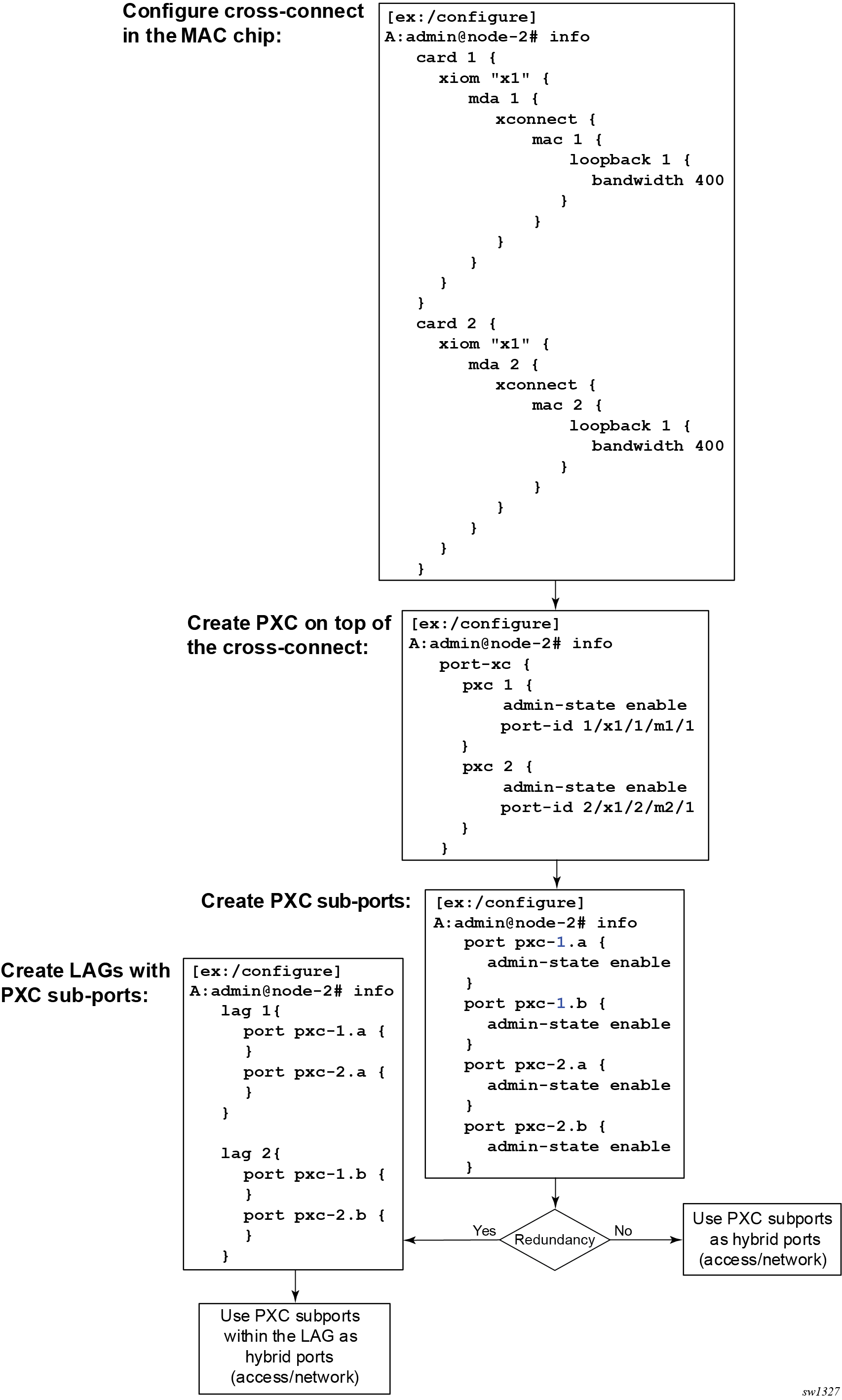

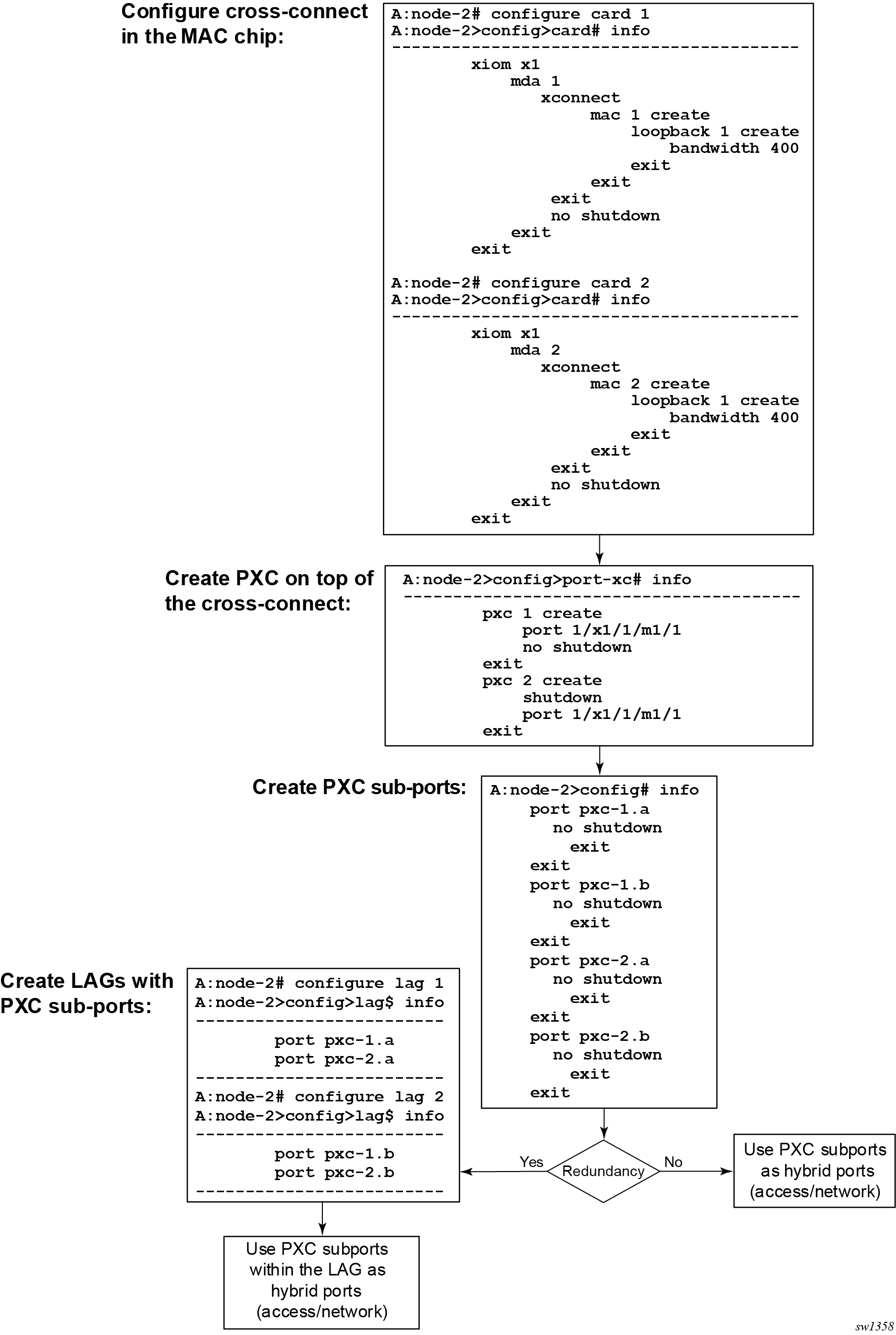

Internal PXC

With an internal (or MAC-based) PXC, the egress path is cross-connected to the ingress path in the MAC chip without consuming a faceplate port, as shown in Internal cross-connect (loopback) in a MAC chip. The number of MAC chips on a line card varies with the line card type. The show datapath command displays MAC-chip-related connectivity information in the datapath (forwarding complex). This information is essential for configuring the cross-connect correctly.

The following example shows the configuration of a cross-connect in the MAC chip, represented in the CLI as a loopback.

MAC chip cross-connect configuration (MD-CLI)

```
[ex:/configure card 1]
A:admin@node-2# info
    mda 1 {
        mda-type s36-100gb-qsfp28
        xconnect {
            mac 1 {
                description "test description"
                loopback 1 {
                    description "loopback description"
                    bandwidth 100
                }
            }
        }
    }
```

MAC chip cross-connect configuration (classic CLI)

```
A:node-2>config>card# info
----------------------------------------------
...
        mda 1
            mda-type s36-100gb-qsfp28
            xconnect
                mac 1 create
                    description "test description"
                    loopback 1 create
                        description "loopback description"
                        bandwidth 100
                    exit
                exit
            exit
            no shutdown
        exit
        no shutdown
----------------------------------------------
```

On cards with IOM-s modules, the configuration includes an additional xiom level:

Cross-connect configuration on IOM-s modules (MD-CLI)

```
[ex:/configure card 1]
A:admin@node-2# info
...
    xiom "x2" {
        level cr1600g+
        xiom-type iom-s-3.0t
        mda 1 {
            mda-type ms2-400gb-qsfpdd+2-100gb-qsfp28
            xconnect {
                mac 1 {
                    description "test description"
                    loopback 1 {
                        description "loopback description"
                    }
                }
            }
        }
    }
```

Cross-connect configuration on IOM-s modules (classic CLI)

```
A:node-2>config>card# info
----------------------------------------------
...
        xiom x2
            xiom-type iom-s-3.0t level cr1600g+
            mda 1
                mda-type ms2-400gb-qsfpdd+2-100gb-qsfp28
                xconnect
                    mac 1 create
                        description "test description"
                        loopback 1 create
                            description "loopback description"
                        exit
                    exit
                exit
                no shutdown
            exit
        exit
```

The loopback created on the MAC chip, at the location identified by the card, XIOM, MDA, and MAC chip, is assigned a bandwidth in discrete steps. This is a Layer 2 bandwidth. It includes the Ethernet Layer 2 header but excludes the 20 bytes per frame of Ethernet preamble and inter-packet gap.
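Because the loopback bandwidth is a Layer 2 figure, the share of a link's line rate that it represents depends on the frame size. The following Python sketch is only an illustration; the function names are hypothetical and are not SR OS commands. It assumes the 20 bytes of preamble and inter-packet gap per frame described above, and treats the frame size as the Layer 2 frame from header through FCS. With these assumptions, it converts between Layer 2 and line-rate bandwidth.

```python
# Hypothetical helpers illustrating Layer 2 vs. line-rate (Layer 1) bandwidth.
# Assumption: each Ethernet frame carries 20 bytes of per-frame overhead
# (preamble + inter-packet gap) that is NOT counted in Layer 2 bandwidth.

PER_FRAME_L1_OVERHEAD = 20  # bytes: preamble + inter-packet gap


def l2_to_line_rate(l2_gbps: float, frame_bytes: int) -> float:
    """Line-rate bandwidth consumed by l2_gbps of Layer 2 traffic."""
    return l2_gbps * (frame_bytes + PER_FRAME_L1_OVERHEAD) / frame_bytes


def line_rate_to_l2(line_gbps: float, frame_bytes: int) -> float:
    """Layer 2 bandwidth that fits into a given line rate."""
    return line_gbps * frame_bytes / (frame_bytes + PER_FRAME_L1_OVERHEAD)


# Layer 2 throughput of a 100 Gb/s line for a few frame sizes.
for size in (64, 512, 1500):
    print(size, round(line_rate_to_l2(100.0, size), 2))
# 64 76.19
# 512 96.24
# 1500 98.68
```

Small frames are the most affected: at 64 bytes, almost a quarter of the line rate goes to preamble and gap.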

The cross-connect cannot be used directly in this form. Instead, it must be represented as a port in the system so that other software components, such as services, can access it.

In classic CLI, the port associated with the loopback is automatically created.

In MD-CLI, use the configure port command to manually create the port. Use the following format for the cross-connect port ID.

slotNum/[xiom/]mdaNum/mMACchipNum/loopback-id

- slotNum: the slot number
- xiom: the XIOM slot (for example, x1); present only on cards with XIOM (IOM-s) modules
- mdaNum: the MDA number
- m: the keyword indicating that this is a cross-connect type port created in a MAC chip
- MACchipNum: the MAC chip number, the physical location of the loopback on a forwarding complex
- loopback-id: the loopback ID

For example, cross-connect (loopback) port 1 on card 1, XIOM x1, MDA 1, MAC chip 1 is created with the following commands:

Port configuration (MD-CLI only)

```
[ex:/configure]
A:admin@node-2# info
...
    port 1/x1/1/m1/1 {
        admin-state enable
    }
```

This port is ready to be used as a PXC, without having to reserve external ports. Multiple PXCs can be created on the same underlying cross-connect:

Port configured as a PXC (MD-CLI)

```
[ex:/configure]
A:admin@node-2# info
    port-xc {
        pxc 1 {
            admin-state enable
            port-id 1/x1/1/m1/1
        }
        pxc 2 {
            admin-state enable
            port-id 1/x1/1/m1/1
        }
        pxc 3 {
            admin-state enable
            port-id 1/x1/1/m1/1
        }
    }
```

Port configured as a PXC (classic CLI)

```
A:node-2>config>port-xc# info
----------------------------------------------
        pxc 1 create
            port 1/x1/1/m1/1
            no shutdown
        exit
        pxc 2 create
            shutdown
            port 1/x1/1/m1/1
        exit
        pxc 3 create
            shutdown
            port 1/x1/1/m1/1
        exit
    exit
```

The loopback created in the MAC chip does not have an administrative state, but the port created on top of it does have an administrative state.

PXC sub-ports

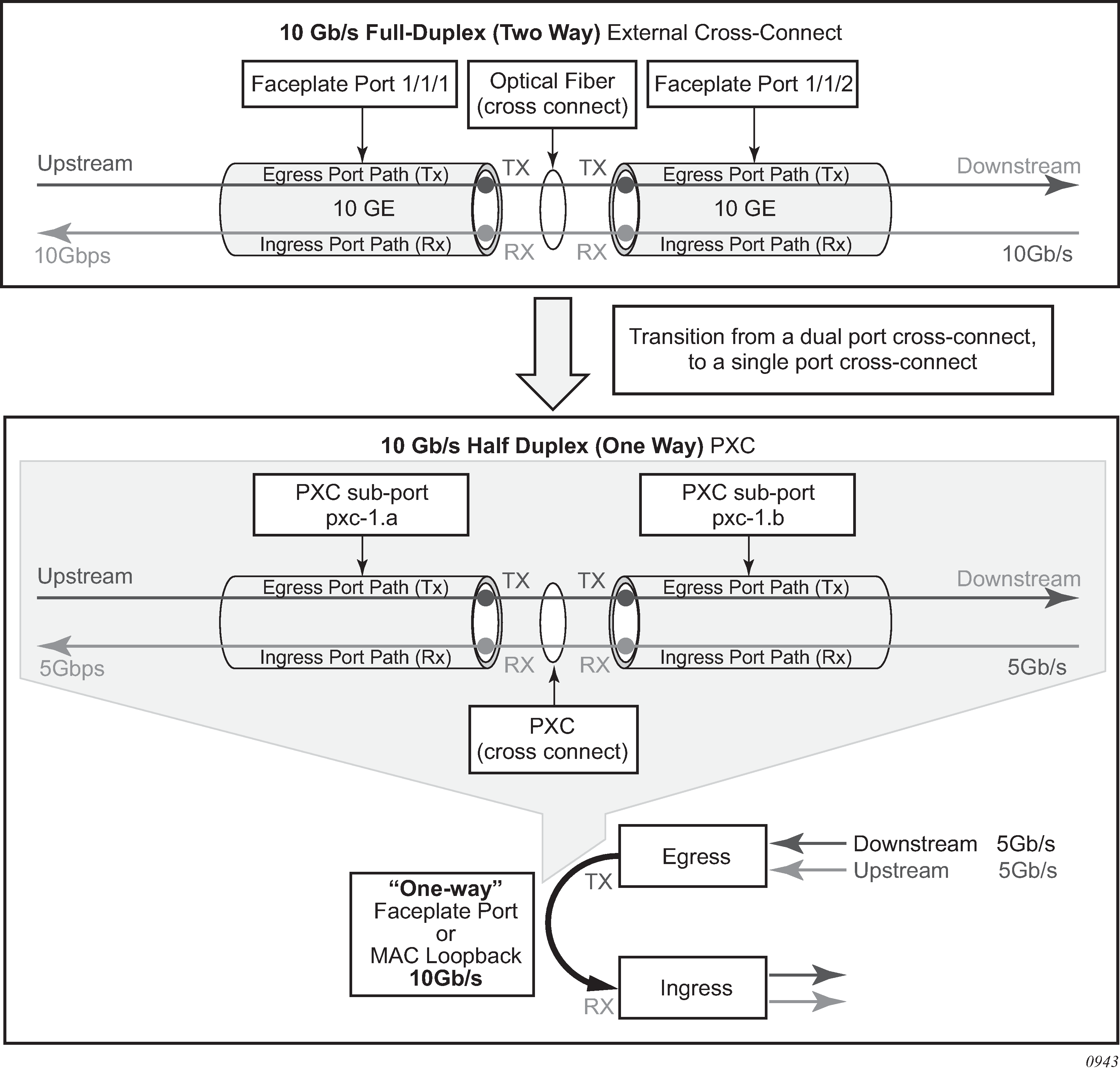

Two cross-connected external ports versus a single cross-connect illustrates the benefit of PXC sub-ports created on top of the cross-connect. The sub-ports are analogous to two distinct faceplate ports connected by a fiber cable.

Bidirectional connectivity provided by PXC requires two sub-ports, one in each direction. The router uses these PXC sub-ports as logical configurations to transmit traffic in both directions over a half-duplex (one-way) cross-connect created in the system. As a result, the total bandwidth capacity supported by the mated PXC sub-ports is limited by the bandwidth capacity of the underlying cross-connect (a single faceplate port or a MAC loopback).

For example, if a 10 Gb/s faceplate port is allocated for PXC functions, the sum of downstream and upstream traffic on the mated PXC sub-ports is always less than or equal to 10 Gb/s. The bandwidth distribution is flexible; it can be symmetric (5 Gb/s downstream and 5 Gb/s upstream), or asymmetric (9 Gb/s downstream and 1 Gb/s upstream, 8 Gb/s downstream and 2 Gb/s upstream, or any other downstream and upstream distribution combination). Therefore, the faceplate port speed from the PXC perspective is half-duplex.

Similar logic applies to MAC-based PXC, with two key differences:

- The bandwidth (for example, 100 Gb/s) is configured under the MAC loopback, and there is no need to allocate an additional faceplate port.
- PXC bandwidth is not reserved out of faceplate port bandwidth. This differs from port-based PXC, where a faceplate port is reserved exclusively for PXC traffic. Instead, PXC traffic is added to the traffic from the faceplate ports, even when all faceplate ports are 100% used, potentially oversubscribing the forwarding complex.
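The half-duplex constraint described above can be expressed as a simple check. In this hypothetical Python helper, the capacity is either the faceplate port speed (port-based PXC) or the configured MAC loopback bandwidth (MAC-based PXC). The example values reproduce the 10 Gb/s faceplate example and add one split that does not fit.

```python
# Hypothetical check of the half-duplex PXC constraint: both directions of the
# mated sub-ports share one cross-connect (a faceplate port or a MAC loopback).

def fits_cross_connect(capacity_gbps: float, downstream_gbps: float,
                       upstream_gbps: float) -> bool:
    """True if downstream + upstream traffic fits into the cross-connect capacity."""
    if min(capacity_gbps, downstream_gbps, upstream_gbps) < 0:
        raise ValueError("bandwidth values must be non-negative")
    return downstream_gbps + upstream_gbps <= capacity_gbps


# The 10 Gb/s faceplate port example, plus one split that exceeds the capacity.
for down, up in [(5, 5), (9, 1), (8, 2), (8, 5)]:
    print(f"{down} + {up} Gb/s on 10 Gb/s:", fits_cross_connect(10, down, up))
# 5 + 5 Gb/s on 10 Gb/s: True
# 9 + 1 Gb/s on 10 Gb/s: True
# 8 + 2 Gb/s on 10 Gb/s: True
# 8 + 5 Gb/s on 10 Gb/s: False
```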

After the faceplate port or the port based on a MAC loopback is associated with a PXC ID, SR OS automatically creates a pair of mated PXC sub-ports in classic CLI.

In MD-CLI, the user must create the sub-ports manually.

The sub-ports must be explicitly enabled. Use the following commands to enable the sub-ports:

- MD-CLI: admin-state enable
- classic CLI: no shutdown

The two PXC sub-ports are distinguishable by ‟.a” and ‟.b” suffixes. They transmit traffic toward each other, simulating two ports that are interconnected.

Although most PXC sub-port command options are configurable, some are fixed and cannot be changed. For example, PXC sub-ports are created in hybrid mode, and this cannot be modified.

Each PXC sub-port is internally (within the system) represented by an internal four-byte VLAN tag which is not visible to the user. Therefore, traffic carried over the PXC contains four extra bytes, which must be accounted for in the QoS configured on PXC sub-ports.

MD-CLI

```
[ex:/configure port-xc]
A:admin@node-2# info
    pxc 1 {
        admin-state enable
        port-id 1/1/1
    }
    pxc 2 {
        admin-state enable
        port-id 1/1/2
    }
```

classic CLI

```
A:node-2>config>port-xc# info
----------------------------------------------
        pxc 1 create
            port 1/1/1
            no shutdown
        exit
        pxc 2 create
            port 1/1/2
            no shutdown
        exit
----------------------------------------------
```

In classic CLI, the preceding configuration automatically creates the following PXC sub-ports. In MD-CLI, they must be created manually, as shown in the following example. The sub-ports are cross-connected as follows:

- pxc-1.a is cross-connected with pxc-1.b

- pxc-1.b is cross-connected with pxc-1.a

- pxc-2.a is cross-connected with pxc-2.b

- pxc-2.b is cross-connected with pxc-2.a

MD-CLI

```
[ex:/configure]
A:admin@node-2# info
...
    port pxc-1.a {
    }
    port pxc-1.b {
    }
    port pxc-2.a {
    }
    port pxc-2.b {
    }
```

classic CLI

```
A:node-2# admin display-config
...
#--------------------------------------------------
echo "Port Configuration"
#--------------------------------------------------
    port pxc-1.a
        exit
    exit
    port pxc-1.b
        exit
    exit
    port pxc-2.a
        exit
    exit
    port pxc-2.b
        exit
    exit
```

Bandwidth considerations and QoS

Bandwidth consumed by PXCs based on faceplate ports is bounded by the capacity of the faceplate port. Because each PXC allocates a faceplate port for exclusive use, the PXC capacity cannot exceed the card capacity that is already allocated for the faceplate ports. In other words, a PXC based on a faceplate port does not add any bandwidth to the forwarding complex. In contrast, with MAC-based PXCs, the bandwidth consumed on each PXC is added to the faceplate port capacity. If bandwidth is not carefully managed on cards with MAC-based PXCs, extended periods of oversubscription can occur, and such sustained congestion should be avoided. Therefore, a MAC-based PXC is practical in environments where faceplate port utilization is relatively low, so that the remaining bandwidth can be used for traffic flowing through the MAC-based PXC.
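The difference between the two PXC types can be illustrated with a rough load estimate. The following Python sketch assumes a hypothetical forwarding complex with a fixed processing capacity. A MAC-based PXC adds its traffic on top of the faceplate traffic. A port-based PXC would not appear as a separate term, because its traffic is already part of the faceplate capacity. All capacities and traffic values are invented for illustration.

```python
# Rough, hypothetical load estimate for a forwarding complex (FC).
# Assumption: the FC has a fixed total capacity; MAC-based PXC traffic is
# added on top of faceplate traffic, while port-based PXC traffic is already
# included in the faceplate figures (its port is reserved for PXC use).

def fc_load_ratio(fc_capacity_gbps: float, faceplate_gbps: list,
                  mac_pxc_gbps: list) -> float:
    """Offered load / FC capacity; a value above 1.0 means oversubscription."""
    offered = sum(faceplate_gbps) + sum(mac_pxc_gbps)
    return offered / fc_capacity_gbps


# Invented numbers for a 1200 Gb/s FC with one 400 Gb/s MAC-based PXC.
lightly_used = fc_load_ratio(1200, faceplate_gbps=[100, 150, 250], mac_pxc_gbps=[400])
heavily_used = fc_load_ratio(1200, faceplate_gbps=[400, 400, 300], mac_pxc_gbps=[400])
print(f"{lightly_used:.2f} {heavily_used:.2f}")  # 0.75 1.25
```

The second case shows the sustained oversubscription that the text warns about: the same MAC-based PXC fits on a lightly used card but not on a busy one.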

Bandwidth in the PXC environment is managed through existing QoS mechanisms. For MAC-based PXCs, it is also managed through careful selection of the MAC chip and, in some instances, the fabric tap. The user controls the selection of these entities.

Location selection for internal PXC

The following are general guidelines for MAC chip selection and loopback ID assignment:

- A suitable card candidate has lower actual traffic volumes through its faceplate ports, so the unused bandwidth in the forwarding complex can be used for PXC.
- A suitable MAC chip candidate is connected to faceplate ports with lower bandwidth utilization.
- On SR-s platforms with IOM-s cards (XIOM configuration), the loopback ID influences the selection of the fabric tap to which the PXC traffic is mapped. On these platforms, loopbacks should be distributed over the fabric taps in a way that avoids congestion. This is described in Internal PXC and source fabric taps.

Use the following command to show the connectivity layout between the MAC chips, faceplate ports, and fabric taps.

```
show datapath 1 detail
===============================================================================
Card [XIOM/]MDA  FP  TAP  MAC Chip Num  Connector  Port
-------------------------------------------------------------------------------
1    x1/1        1   1    1             c1         1/x1/1/c1/1
1    x1/1        1   1    1             c1         1/x1/1/c1/2
1    x1/1        1   1    1             c2         1/x1/1/c2/1
1    x1/1        1   1    1             c2         1/x1/1/c2/2
1    x1/1        1   1    1             c3         1/x1/1/c3/1
1    x1/1        1   1    1             c3         1/x1/1/c3/2
1    x1/1        1   N/A  2             c4
1    x1/1        1   N/A  2             c5
1    x1/1        1   N/A  2             c6
1    x1/1        1   1    1             N/A        1/x1/1/m1/1
1    x1/2        2   1    1             c1         1/x1/2/c1/1
1    x1/2        2   1    1             c1         1/x1/2/c1/2
1    x1/2        2   1    1             c1         1/x1/2/c1/3
1    x1/2        2   1    1             c1         1/x1/2/c1/4
1    x1/2        2   1    1             c1         1/x1/2/c1/5
1    x1/2        2   1    1             c1         1/x1/2/c1/6
1    x1/2        2   1    1             c1         1/x1/2/c1/7
1    x1/2        2   1    1             c1         1/x1/2/c1/8
1    x1/2        2   1    1             c1         1/x1/2/c1/9
1    x1/2        2   1    1             c1         1/x1/2/c1/10
1    x1/2        2   N/A  1             c2
1    x1/2        2   N/A  1             c3
1    x1/2        2   N/A  1             c4
1    x1/2        2   N/A  1             c5
1    x1/2        2   N/A  1             c6
1    x1/2        2   N/A  2             c7
1    x1/2        2   N/A  2             c8
1    x1/2        2   N/A  2             c9
1    x1/2        2   2    2             c10        1/x1/2/c10/1
1    x1/2        2   2    2             c10        1/x1/2/c10/2
1    x1/2        2   2    2             c10        1/x1/2/c10/3
1    x1/2        2   2    2             c10        1/x1/2/c10/4
1    x1/2        2   2    2             c10        1/x1/2/c10/5
1    x1/2        2   2    2             c10        1/x1/2/c10/6
1    x1/2        2   2    2             c10        1/x1/2/c10/7
1    x1/2        2   2    2             c10        1/x1/2/c10/8
1    x1/2        2   2    2             c10        1/x1/2/c10/9
1    x1/2        2   2    2             c10        1/x1/2/c10/10
1    x1/2        2   N/A  2             c11
1    x1/2        2   N/A  2             c12
1    x1/2        2   N/A  3             c13
1    x1/2        2   N/A  3             c14
1    x1/2        2   N/A  3             c15
1    x1/2        2   N/A  3             c16
1    x1/2        2   N/A  3             c17
1    x1/2        2   N/A  3             c18
```
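The following Python sketch shows one way to post-process a captured show datapath output. It groups the faceplate ports that share a MAC chip and fabric tap, then picks the MAC chip whose faceplate ports carry the least traffic. It assumes the whitespace-separated column layout shown above. The per-port utilization figures and all function names are hypothetical and not part of SR OS.

```python
from collections import defaultdict

# Hypothetical post-processing of captured "show datapath <card> detail" output.
# Assumed row layout (whitespace-separated, as shown above):
#   Card [XIOM/]MDA FP TAP MACChipNum Connector Port
# The header, separator lines, and rows without a Port value do not have
# exactly 7 fields and are skipped.
SAMPLE = """\
Card [XIOM/]MDA FP TAP MAC Chip Num Connector Port
-------------------------------------------------------------------------------
1 x1/1 1 1 1 c1 1/x1/1/c1/1
1 x1/1 1 1 1 c1 1/x1/1/c1/2
1 x1/1 1 N/A 2 c4
1 x1/1 1 1 1 N/A 1/x1/1/m1/1
1 x1/2 2 1 1 c1 1/x1/2/c1/1
1 x1/2 2 2 2 c10 1/x1/2/c10/1
"""


def ports_by_mac_chip(output: str) -> dict:
    """Group ports by (FP, TAP, [XIOM/]MDA, MAC chip number)."""
    groups = defaultdict(list)
    for line in output.splitlines():
        fields = line.split()
        if len(fields) != 7:
            continue
        _card, mda, fp, tap, mac, _connector, port = fields
        groups[(int(fp), tap, mda, int(mac))].append(port)
    return dict(groups)


def least_loaded_mac(groups: dict, utilization_gbps: dict) -> tuple:
    """Return the group key whose faceplate ports carry the least traffic.

    utilization_gbps holds hypothetical measured traffic per faceplate port;
    MAC-loopback ports (IDs containing "/m") are left out of the sum.
    """
    def faceplate_load(key):
        return sum(utilization_gbps.get(p, 0.0)
                   for p in groups[key] if "/m" not in p)
    return min(groups, key=faceplate_load)


groups = ports_by_mac_chip(SAMPLE)
util = {"1/x1/1/c1/1": 80.0, "1/x1/1/c1/2": 60.0,
        "1/x1/2/c1/1": 90.0, "1/x1/2/c10/1": 10.0}
print(least_loaded_mac(groups, util))  # (2, '2', 'x1/2', 2)
```

Only the faceplate load is considered here. The other guidelines, such as the fabric tap distribution on IOM-s cards, would still have to be checked separately.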

Internal PXC and source fabric taps

PXC traffic passing through the MAC loopback is mapped to a specific source fabric tap. The tap moves the traffic from the local source forwarding complex into the fabric and toward the destination forwarding complex. A fabric tap is a chip that connects a forwarding complex to the system fabric. Traffic on its way from an ingress port (any port, including a PXC port) to the destination port is always mapped to the same fabric tap (the source fabric tap) on the ingress forwarding complex. If the source forwarding complex has two fabric taps, fabric tap selection plays a role in optimal bandwidth distribution. Such forwarding complexes are found, for example, on IOM-s cards in SR-s platforms.

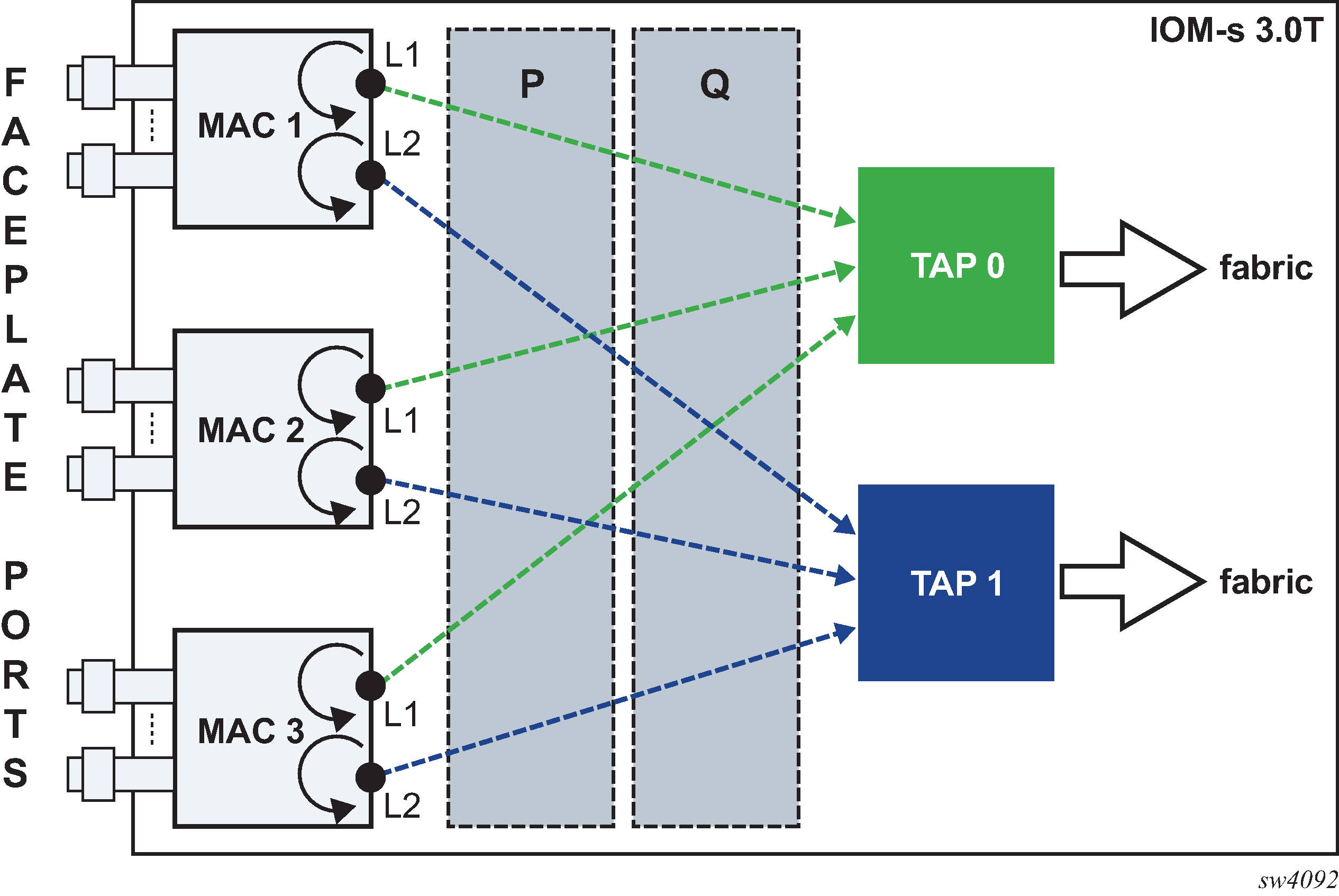

On IOM-s 3.0T, the source tap selection is based on the loopback ID. The mapping scheme is simple; loopbacks with even IDs are mapped to one source tap while loopbacks with odd IDs are mapped to the other. This is shown in Mapping of internal loopbacks to source taps. On IOM-s 1.5T, the mapping is based on the MDA number.
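The even/odd rule lends itself to a quick planning check. The following Python sketch is hypothetical and applies only to the IOM-s 3.0T rule described above; on IOM-s 1.5T, the mapping is based on the MDA number instead. The text does not say which physical tap receives even or odd IDs, so the tap labels are placeholders.

```python
from collections import Counter

# Sketch of the IOM-s 3.0T rule: loopbacks with even IDs map to one source
# fabric tap and loopbacks with odd IDs map to the other. "even-tap" and
# "odd-tap" are hypothetical labels, not physical tap numbers.

def source_tap_group(loopback_id: int) -> str:
    return "even-tap" if loopback_id % 2 == 0 else "odd-tap"


def tap_balance(loopback_bandwidth_gbps: dict) -> Counter:
    """Sum configured loopback bandwidth (Gb/s) per source-tap group."""
    totals = Counter()
    for loopback_id, gbps in loopback_bandwidth_gbps.items():
        totals[source_tap_group(loopback_id)] += gbps
    return totals


# Hypothetical loopbacks (ID -> configured bandwidth in Gb/s).
print(dict(tap_balance({1: 100, 3: 100, 5: 100})))         # {'odd-tap': 300}
print(dict(tap_balance({1: 100, 2: 100, 3: 50, 4: 50})))   # {'odd-tap': 150, 'even-tap': 150}
```

In the first assignment, all loopbacks land on the same tap. Mixing even and odd IDs spreads the load over both taps.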

QoS

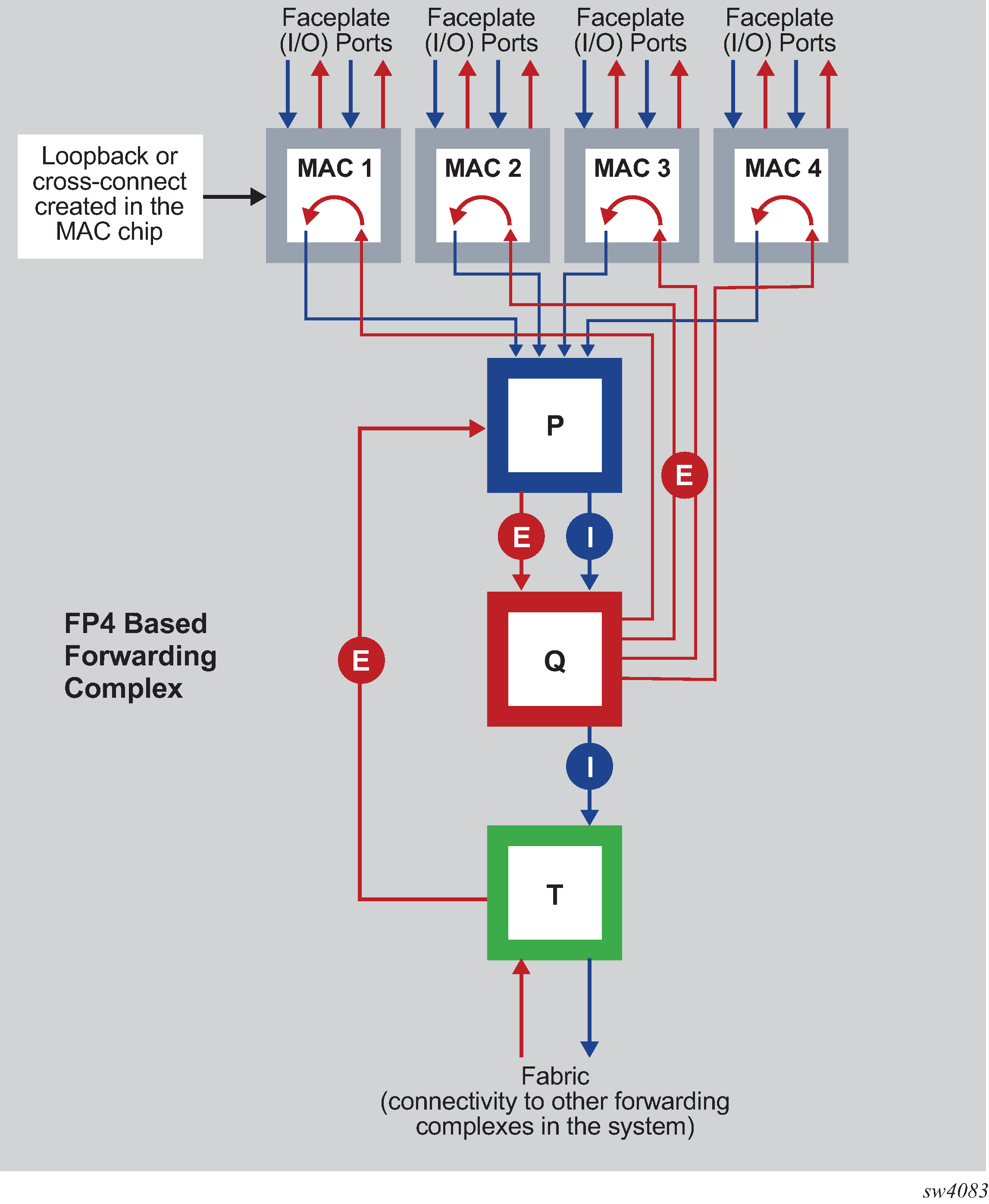

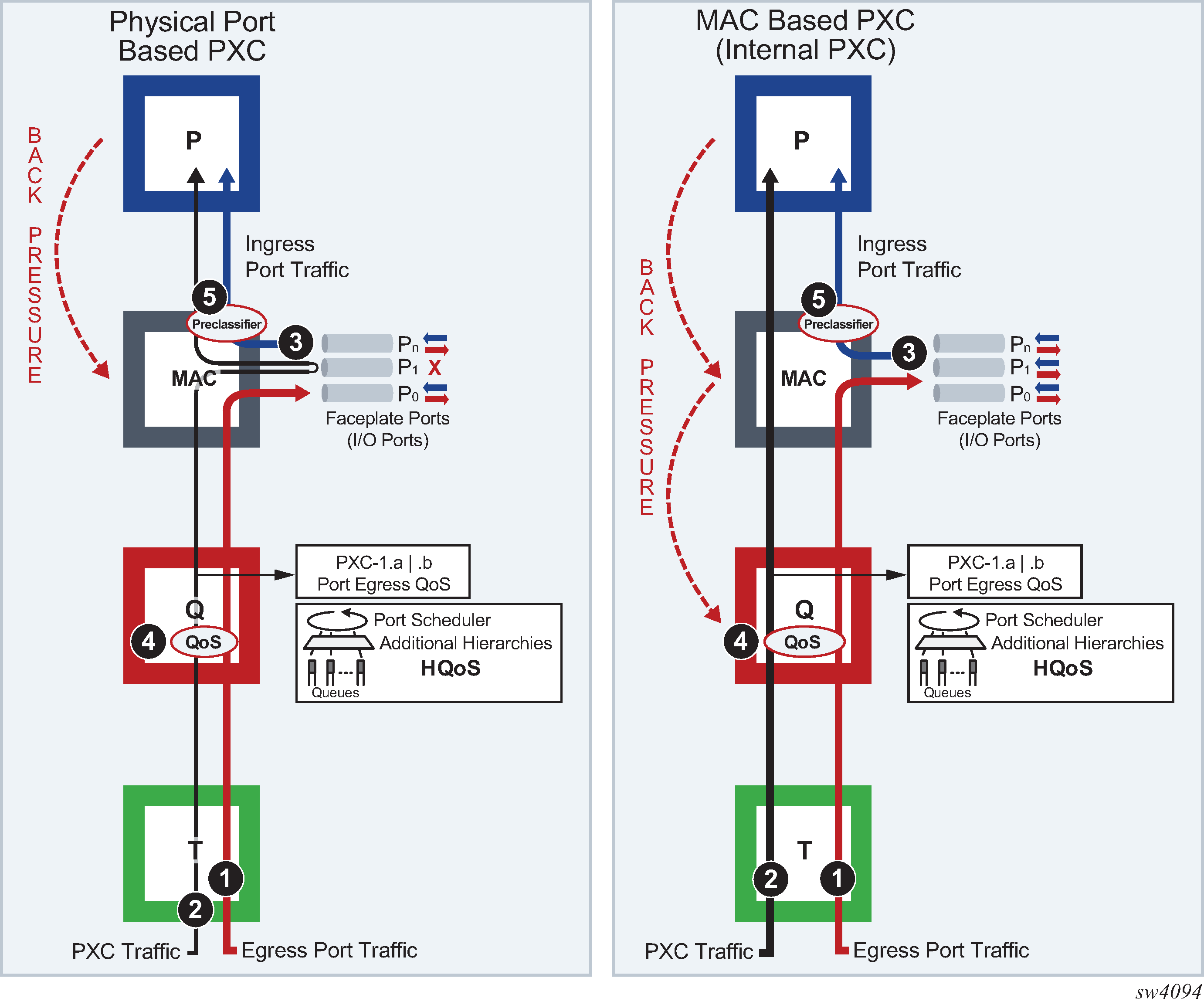

The interaction points between PXC and non-PXC traffic in the FC depend on the configured PXC type. Interaction between PXC and non-PXC traffic shows this interaction as the traffic enters the egress forwarding path from the fabric tap (T). This traffic consists of two streams. The first is non-PXC traffic (1) destined for the egress faceplate ports. The second is PXC traffic (2) that is sent (cross-connected) to the ingress forwarding path (P) within the same forwarding complex. Regular ingress traffic from the faceplate ports (3) is added to the stream and merged into the same ingress forwarding path as the PXC traffic.

The physical port-based PXC configuration on the left side of Interaction between PXC and non-PXC traffic shows the interaction of the three traffic streams on the forwarding complex with a PXC based on faceplate ports. To manage congestion, user configuration can be applied at points 4 and 5.

Point 4 represents regular egress QoS in the traffic manager (Q) applied to an egress port. In this setup, the faceplate port P1 is reserved for PXC traffic, which is represented by the two sub-ports (PXC sub-ports pxc-id.a and pxc-id.b). Egress QoS is applied to each PXC sub-port.

Point 5 represents a pre-classifier in the MAC chip. It manages ingress bandwidth when transient bursts occur in the ingress datapath (P), which then exerts back pressure toward the MAC. During congestion, the pre-classifier arbitrates between regular ingress traffic from the faceplate ports and the PXC traffic.

The MAC-based PXC configuration on the right side of Interaction between PXC and non-PXC traffic shows the traffic flow in the FC with the MAC-based PXC. As in the previous case, the existing egress QoS in the traffic manager (Q) at point 4 is applied to the egress ports. However, the egress port in the PXC case is not a faceplate port. Instead, it is represented by the configured loopback in the MAC chip. This loopback is configured with the maximum bandwidth using the following command:

configure card xiom mda xconnect mac loopback

The congestion management logic with internal PXC diverges from the previous scenario. The PXC traffic moves through the MAC chip straight to the ingress datapath (P), bypassing the egress faceplate ports. Therefore, the pre-classifier at point 5 has no effect on PXC traffic. Any congestion in the (P) and MAC is managed in the traffic manager (Q) at point 4, as a result of the back pressure from the (P) and MAC toward the (Q).

QoS on PXC sub-ports

Correct egress QoS configuration in the traffic manager (Q) requires an understanding of the PXC sub-port concept described in Port-based PXC.

The following summarizes key points for the PXC sub-ports:

- In the context of egress QoS, each sub-port (pxc-id.a and pxc-id.b) in a PXC is treated as a separate port with its own port scheduler policy.
- Both sub-ports are created on top of the same loopback configuration (port-based or MAC-based). For faceplate ports, this bandwidth is determined by the port capabilities (for example, a 100 Gb/s port versus a 400 Gb/s port). For the MAC loopback, this bandwidth is configurable.

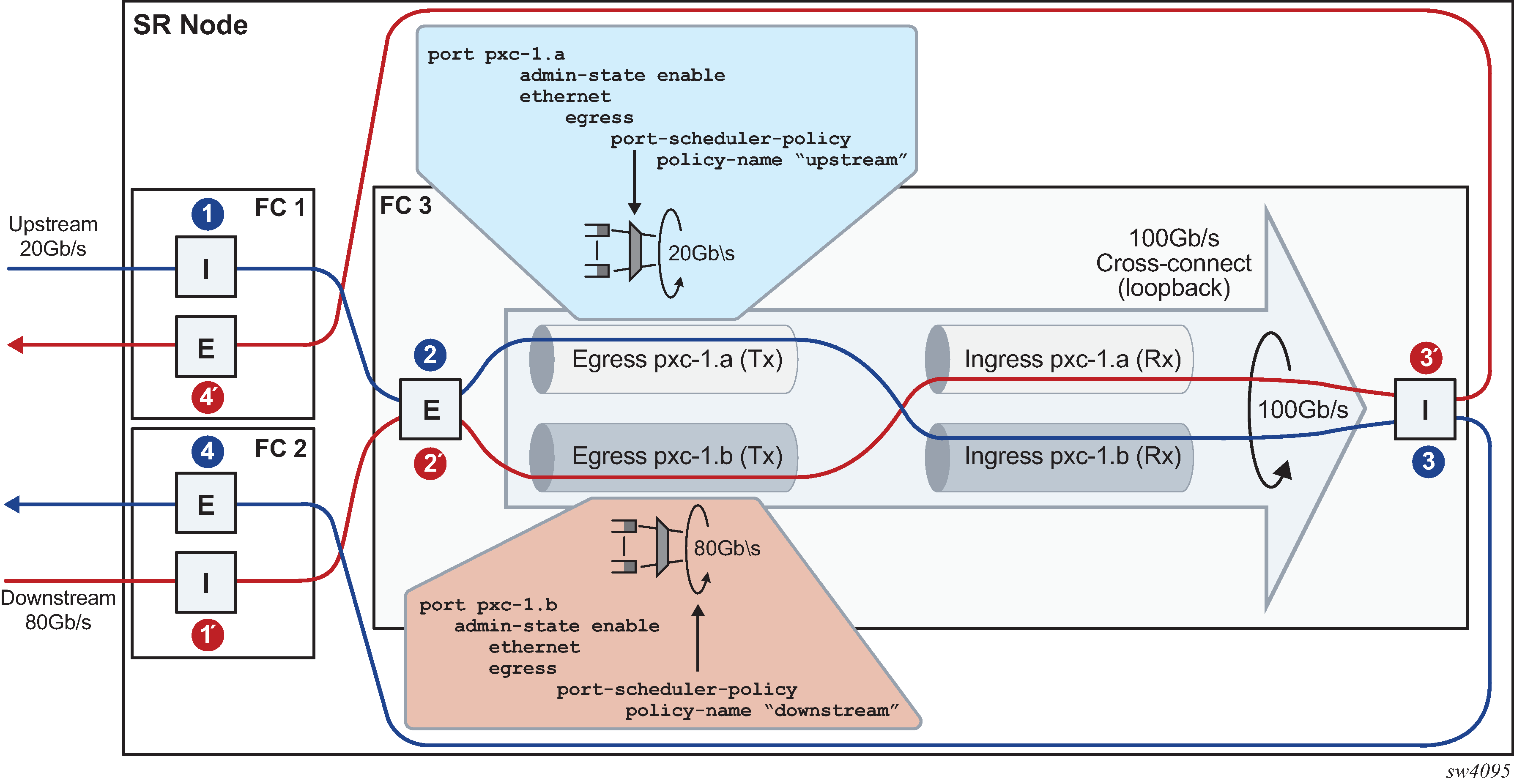

Funneling traffic from two PXC sub-ports through the same loopback requires separate bandwidth management for each PXC sub-port. The sum of the configured bandwidth caps for the Egress Port Scheduler (EPS) under the two PXC sub-ports should not exceed the bandwidth capacity of the underlying loopback. Bandwidth management on PXC sub-ports shows an example of this concept, where each PXC sub-port is divided into two parts: the Tx (egress) part and the Rx (ingress) part.

Bandwidth management on PXC sub-ports shows bidirectional traffic entering and exiting the SR node at forwarding complexes 1 and 2, with PXC processing on forwarding complex 3. In the upstream direction, traffic enters the SR node at ingress forwarding complex 1 at point (1). It is then redirected to the PXC for additional processing at points (2) and (3). From there, egress forwarding complex 2 sends the traffic out of the node at point (4).

Similar logic applies in the downstream (opposite) direction. Traffic enters ingress forwarding complex 2 at point (1’), is redirected to the same PXC on forwarding complex 3, and exits the node on forwarding complex 1 at point (4’).

In this example, the maximum loopback bandwidth is 100 Gb/s. The port schedulers under the PXC egress sub-ports must be configured to support the anticipated bandwidth in each direction (20 Gb/s upstream and 80 Gb/s downstream), for a total bandwidth of 100 Gb/s supported on the cross-connect.

Traffic traversing a PXC carries an overhead of 4 bytes per packet, attributable to the internal VLAN tag used for PXC sub-port identification within the SR node. These 4 bytes are not accounted for in the configured QoS rates. Therefore, take them into consideration when configuring rates on QoS objects under PXC ports.
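The following hypothetical Python check applies the EPS rule to the preceding example. It assumes that the configured EPS rates are Layer 2 rates that exclude the internal 4-byte tag. Under that assumption, the actual load on the loopback is inflated by (size + 4) / size for a given average packet size. The 500-byte average packet size and the function names are illustrative assumptions. Under these assumptions, caps that sum exactly to the loopback bandwidth leave no room for the tag overhead.

```python
# Hypothetical check of egress port scheduler (EPS) caps on the two sub-ports
# of one PXC. Assumptions: rates are Layer 2 rates in Gb/s that exclude the
# internal 4-byte PXC tag, and traffic has a known average packet size.
PXC_TAG_BYTES = 4


def loopback_load_gbps(eps_rates_gbps: list, avg_packet_bytes: int) -> float:
    """Estimated load on the loopback, including the per-packet internal tag."""
    inflation = (avg_packet_bytes + PXC_TAG_BYTES) / avg_packet_bytes
    return sum(eps_rates_gbps) * inflation


def eps_caps_fit(loopback_gbps: float, eps_rates_gbps: list,
                 avg_packet_bytes: int) -> bool:
    """True if the EPS caps plus tag overhead fit into the loopback bandwidth."""
    return loopback_load_gbps(eps_rates_gbps, avg_packet_bytes) <= loopback_gbps


# 20 Gb/s upstream + 80 Gb/s downstream on a 100 Gb/s loopback, 500-byte packets:
print(eps_caps_fit(100, [20, 80], 500))      # False: the tag adds about 0.8 Gb/s
print(eps_caps_fit(100, [19.8, 79.2], 500))  # True: caps leave room for the tag
```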

Queue allocation on PXC sub-ports

PXC sub-ports are auto-configured in hybrid mode and this cannot be changed by configuration. The PXC sub-ports each have a set of queues on the network egress side and a set of queues on the access egress and ingress (per SAP or ESM subscriber). Queues on network ingress are shared per FP or per MDA, as they are on non-PXC ports in hybrid mode.

Queue groups are allocated per PXC sub-port.

Pool allocations on PXC ports

Queue buffers are created in buffer pools and are used for traffic buffering when queues are congested. Buffer pools are allocated per forwarding complex or per cross-connect.

Each cross-connect has three associated buffer pools:

- access ingress
- access egress
- network egress

The network ingress pool is shared between all faceplate ports on a forwarding complex. The size of the buffer pools is automatically determined by the system based on the forwarding complex type and cross-connect configuration.

Operational states

A port under a PXC (for example, port 1/1/1 or port 1/x1/1/m1/1), the PXC itself (PXC ID represented by the cross-connect port configuration port-xc pxc 1), and PXC sub-ports (for example, port pxc-1.a and pxc-1.b) all have administrative and operational states.

For a port-based PXC, when all layers of a PXC (PXC port, PXC ID, and PXC sub-ports) are operationally up, the faceplate port status LED on the faceplate blinks amber. The port activity LED lights green in the presence of traffic on PXC ports and turns off in the absence of traffic on PXC ports. The presence of the optical transceiver on the PXC has no effect on its operational state. Traffic cannot be sent out through the transceiver or be received through the transceiver from the outside. However, the existing traps related to insertion or removal of a transceiver (SFF Inserted/Removed) are supported. The "Signal-Fail" alarm on the PXC is suppressed.

The operational state of the PXC ID is derived from its administrative state and the operational state of the sub-ports.

The operational state of the PXC sub-ports is dependent on the operational state of the underlying port (faceplate port or MAC loopback) and the administrative state of the corresponding PXC ID.
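The dependencies above can be sketched as a small state model. This Python sketch is a simplification with two assumptions that the text does not state. First, a sub-port also requires its own administrative state to be enabled, because sub-ports must be explicitly enabled. Second, the PXC ID is treated as operationally up only when both sub-ports are up. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PxcState:
    """Hypothetical model of the admin/oper dependencies of one PXC."""
    underlying_port_oper_up: bool  # faceplate port or MAC-loopback port
    pxc_admin_up: bool             # PXC ID (port-xc pxc <id>) admin state
    subport_a_admin_up: bool       # assumption: sub-ports have their own admin state
    subport_b_admin_up: bool

    def subport_oper_up(self, side: str) -> bool:
        if side not in ("a", "b"):
            raise ValueError("side must be 'a' or 'b'")
        admin = self.subport_a_admin_up if side == "a" else self.subport_b_admin_up
        # A sub-port depends on the underlying port and on the PXC ID admin state.
        return admin and self.underlying_port_oper_up and self.pxc_admin_up

    def pxc_oper_up(self) -> bool:
        # Assumption: the PXC ID is up only if it is enabled and both sub-ports are up.
        return (self.pxc_admin_up
                and self.subport_oper_up("a")
                and self.subport_oper_up("b"))


state = PxcState(underlying_port_oper_up=True, pxc_admin_up=True,
                 subport_a_admin_up=True, subport_b_admin_up=False)
print(state.subport_oper_up("a"), state.subport_oper_up("b"), state.pxc_oper_up())
# True False False
```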

PXC statistics

Two types of statistics can be collected on a regular, non-PXC Ethernet port:

- Low-level port statistics, which provide information about conditions on the data-link layer and physical port. Examples are the aggregate number of forwarded and dropped octets or bytes on the data-link layer (Layer 2 MAC), FCS errors, and the number of collisions. These statistics can be viewed with the show port command.
- Network-level statistics, which provide information about forwarded and dropped octets or packets on a per-queue level on network ports. These statistics can be viewed with the show port detail command.

Statistics collection ability on the PXC port depends on whether the PXC is port-based or MAC-based (internal).

Statistics on faceplate PXC ports

The statistics on the faceplate PXC ports are maintained only on the data-link layer (Layer 2 MAC). The internal Q-tag used for PXC sub-port identification within the router is included in the displayed octet count. The collected statistics represent the combined upstream and downstream traffic carried by the corresponding PXC sub-ports.

For example, in the port-level statistics output for a faceplate PXC port, the output count represents the upstream and downstream traffic flowing out of the faceplate port. The input count represents the same looped traffic returning into the same port.

```
show port 1/1/1 detail
...
===============================================================================
Traffic Statistics
===============================================================================
                          Input            Output
-------------------------------------------------------------------------------
Octets                290164703         290164703
Packets                 2712661           2712661
Errors                        0                 0
```

Statistics are cleared when a faceplate port is added to or removed from the PXC.

Statistics collection to a local file is not supported for faceplate PXC ports.

Queues are not instantiated on faceplate PXC ports. Therefore, network-level (queue) statistics are not maintained for them.

Statistics collection on internal (MAC-based) PXC

Internal ports created in the MAC chip (for example port 1/x1/1/m1/1) do not have Layer 2 MAC addresses, therefore, statistics based on the data-link layer (Layer 2 MAC) are not available.

Statistics collection on PXC sub-ports

PXC sub-ports provide only network-level statistics (queue statistics). MAC related statistics are not available on PXC sub-ports.

PXC LAG

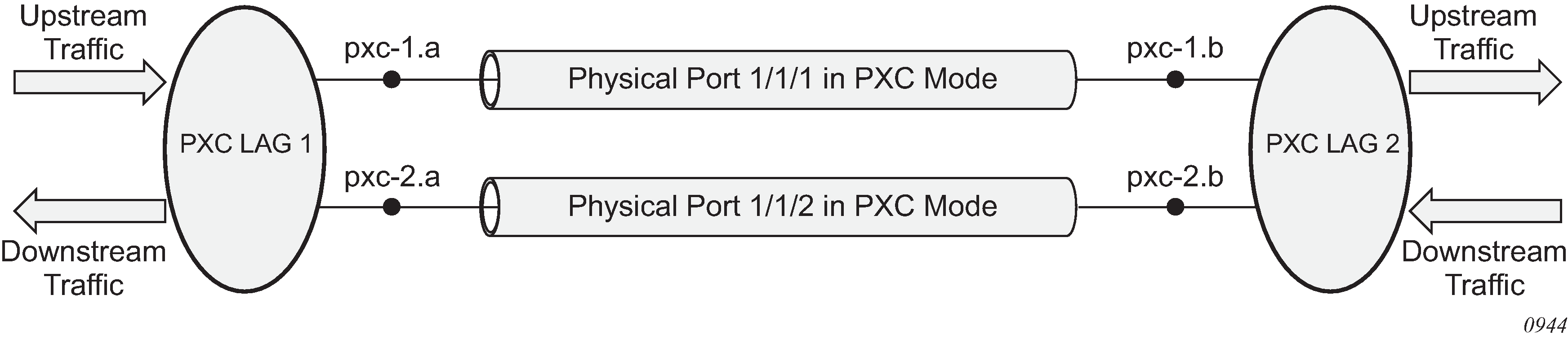

PXC sub-ports can be aggregated into a PXC LAG for increased capacity and card redundancy. A logical concept of a PXC LAG is shown in Logical concept of a LAG on PXC ports.

The configuration allows a mix of port-based and MAC-based PXCs in a LAG. However, such a mix should be used in a production network only during a short migration period, when transitioning from one PXC type to the other. Outside of a migration, all PXCs in a LAG should be of the same type; that is, a LAG should contain only port-based PXCs or only MAC-based PXCs, but not both.

The LAGs on PXC ports must be configured in pairs as shown in the following example.

MD-CLI

```
[ex:/configure]
A:admin@node-2# info
...
    lag "lag-1" {
        description "lag in the up direction"
        port pxc-1.a {
        }
        port pxc-2.a {
        }
    }
    lag "lag-2" {
        description "lag in the down direction"
        port pxc-1.b {
        }
        port pxc-2.b {
        }
    }
```

classic CLI

```
A:node-2# configure lag 1
A:node-2>config>lag$ info
----------------------------------------------
        description "lag in the up direction"
        port pxc-1.a
        port pxc-2.a
----------------------------------------------
A:node-2# configure lag 2
A:node-2>config>lag$ info
----------------------------------------------
        description "lag in the down direction"
        port pxc-1.b
        port pxc-2.b
        no shutdown
----------------------------------------------
```

Within the router, the two sides of the PXC LAG (LAG 1 and LAG 2 in the example configuration) are not aware of their interconnection. As a result, the operational state of one side of the PXC LAG is not influenced by the state of the PXC LAG on the other side.

PXC sub-ports in a LAG must have the same properties (such as the same speed). Mixing PXC sub-ports and non-PXC ports is not allowed. The first port added to a LAG determines the type of LAG (PXC or non-PXC).

Statistics in the output of the show lag statistics command represent combined traffic carried over the referenced LAG and its pair (lag 1 and lag 2 in the above example).
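The LAG rules above can be expressed as a configuration check. The following Python sketch is hypothetical and is not an SR OS validation routine. It assumes that configuring PXC LAGs in pairs means each sub-port in one LAG has its mated sub-port (.a and .b) in the paired LAG, as in the lag-1/lag-2 example.

```python
import re

# Hypothetical checks for the PXC LAG rules described above.
_SUBPORT_RE = re.compile(r"^pxc-(\d+)\.([ab])$")


def is_pxc_subport(port: str) -> bool:
    return _SUBPORT_RE.match(port) is not None


def mate(port: str) -> str:
    """Return the cross-connected sub-port (.a <-> .b)."""
    m = _SUBPORT_RE.match(port)
    if m is None:
        raise ValueError(f"not a PXC sub-port: {port!r}")
    pxc_id, side = m.groups()
    return f"pxc-{pxc_id}.{'b' if side == 'a' else 'a'}"


def lag_type(ports: list) -> str:
    """The first port decides the LAG type; PXC and non-PXC ports cannot be mixed."""
    if not ports:
        raise ValueError("empty LAG")
    first_is_pxc = is_pxc_subport(ports[0])
    for port in ports[1:]:
        if is_pxc_subport(port) != first_is_pxc:
            raise ValueError(f"{port} cannot be mixed into this LAG")
    return "PXC" if first_is_pxc else "non-PXC"


def lags_are_paired(lag_x: list, lag_y: list) -> bool:
    """Assumption: paired PXC LAGs hold the mates of each other's sub-ports."""
    if lag_type(lag_x) != "PXC" or lag_type(lag_y) != "PXC":
        return False
    return {mate(p) for p in lag_x} == set(lag_y)


lag_1 = ["pxc-1.a", "pxc-2.a"]  # "lag in the up direction"
lag_2 = ["pxc-1.b", "pxc-2.b"]  # "lag in the down direction"
print(lag_type(lag_1), lags_are_paired(lag_1, lag_2))  # PXC True
```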

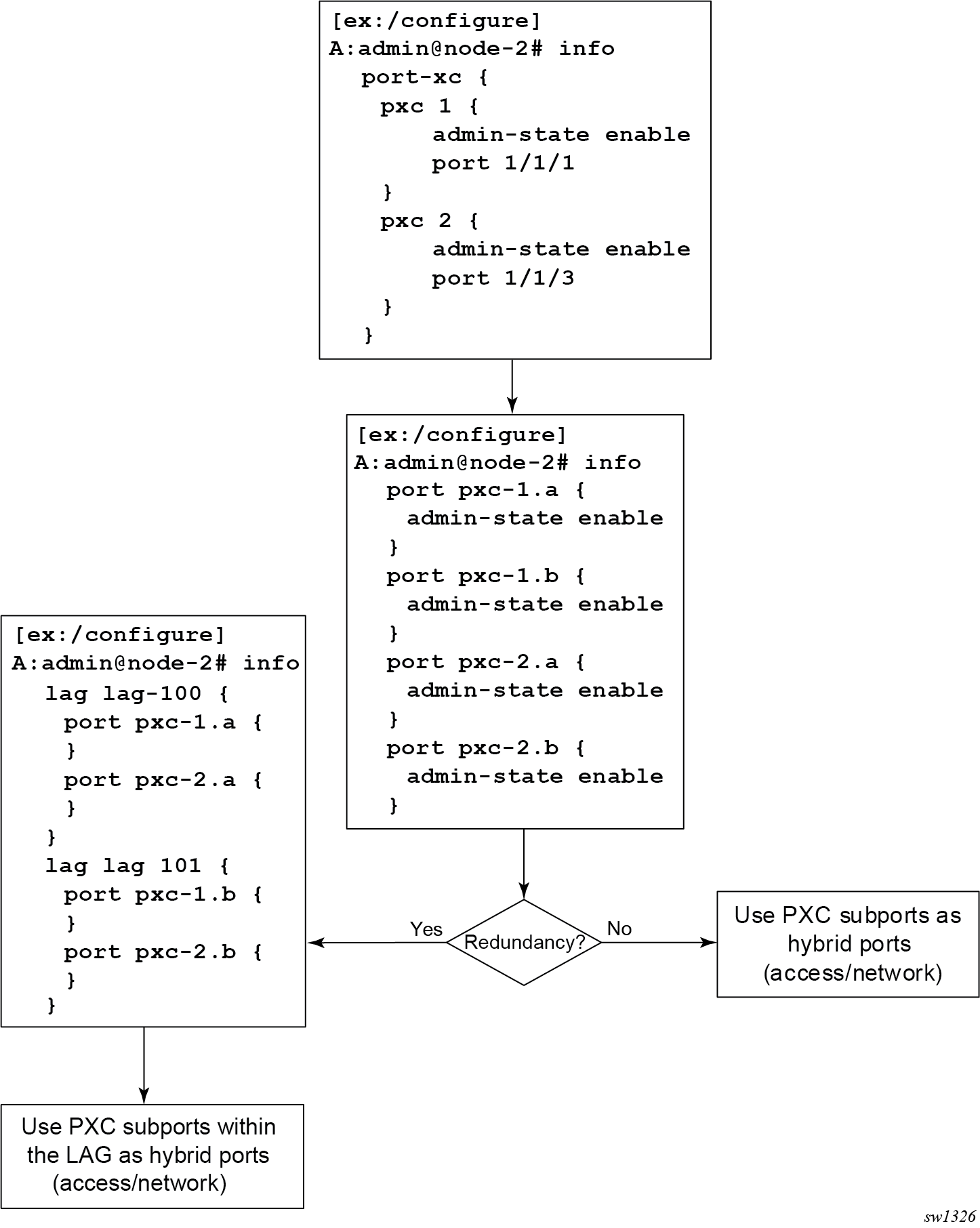

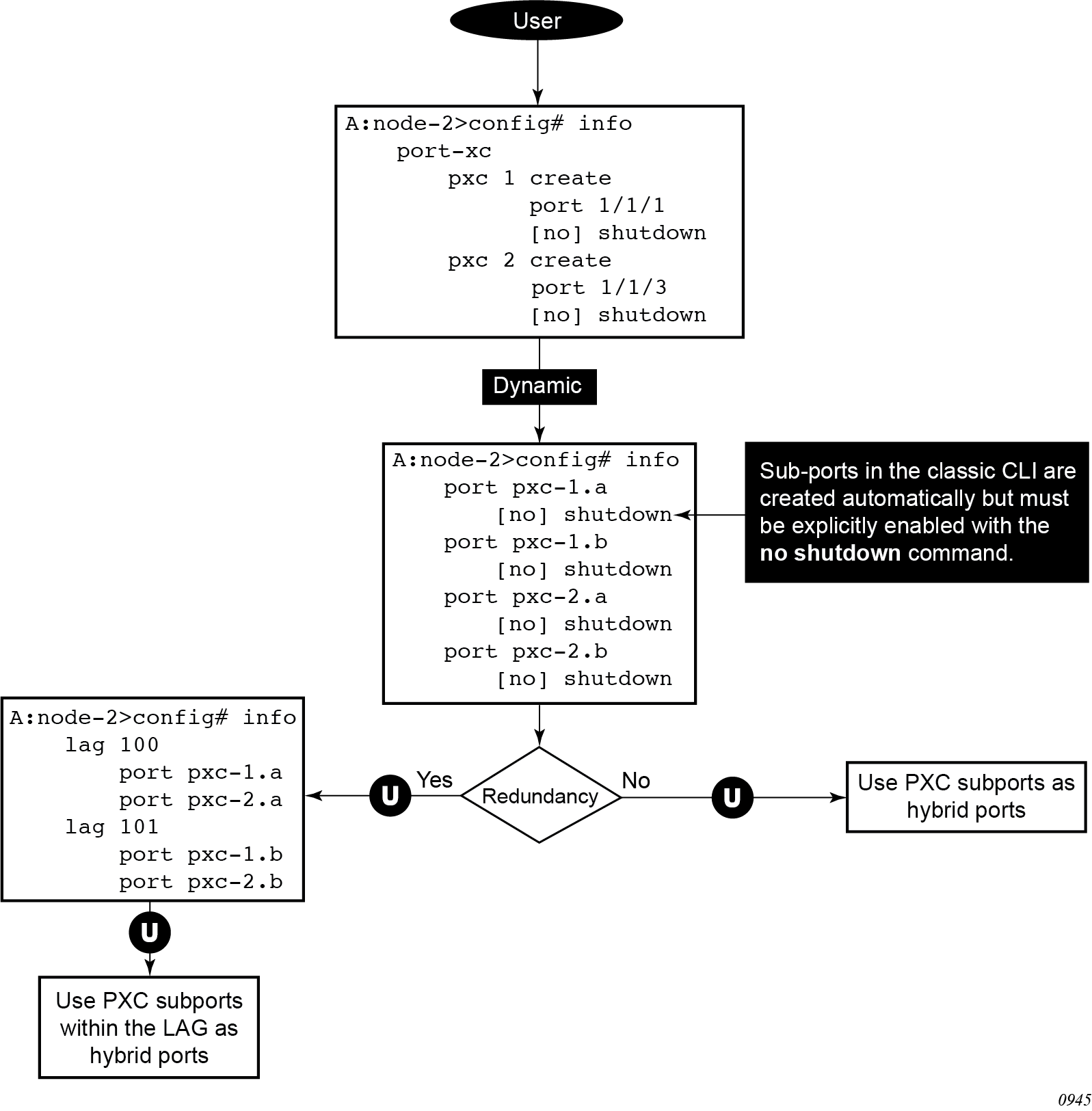

Basic PXC provisioning

The CLI configuration flow example shown in the following figure represents a PXC configuration based on the faceplate port. The oval marked ‟User” represents a configuration step that the user must perform. The block marked ‟Dynamic” represents a step that the system performs automatically without a user’s assistance.

PXC mirroring and LI

Traffic on a PXC sub-port can be mirrored or lawfully intercepted (LI). For example, subscriber ‟Annex1” traffic arriving on a PXC sub-port is mirrored if ‟Annex1” is configured as a mirror or LI source. A PXC sub-port can also be used to transmit mirror and LI traffic out of a mirror-destination service. For example, a mirror-dest SAP or SDP can egress out of a PXC sub-port, or a routable LI-encapsulated packet can be forwarded and transmitted out of a PXC sub-port.

A mirror destination can be configured to transmit mirrored and LI traffic out of a SAP on a PXC sub-port. That SAP is then cross-connected into a VPLS service, where a VXLAN encapsulation is added to the mirrored packets before transmission out of the node.

The internal Q-tag that represents the PXC sub-port within the system is included in the lawfully intercepted copy of the packet under two conditions: the traffic is intercepted (mirrored) on the ingress side of a PXC sub-port, and the associated mirror-dest service is of type ether (the default) with routable lawful interception encapsulation in the following context.

Use the following command to configure a mirror destination to transmit mirrored and LI traffic from a SAP on a PXC sub-port.

configure mirror mirror-dest encap

See the 7450 ESS, 7750 SR, 7950 XRS, and VSR OAM and Diagnostics Guide for information about LI.

Multichassis redundancy

Multichassis Synchronization (MCS) configuration is supported for entities using PXCs in the following context.

configure redundancy multi-chassis peer sync

However, MC-LAG is not supported directly on PXCs because PXCs are not directly connected to external equipment.

Health monitoring on the PXC

Health monitoring of the PXC sub-ports is based on EFM OAM, in which Information OAMPDUs are transmitted by each peer (PXC sub-port) at the configured interval. These OAMPDUs provide keepalive and critical event notification functions.
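The keepalive role of the Information OAMPDUs can be sketched as a simple timeout check. This is a hypothetical Python model, not the SR OS EFM OAM implementation: the `KeepaliveMonitor` class and its default `hold_multiplier` of 5 intervals are assumptions chosen only for illustration.

```python
from typing import Optional


class KeepaliveMonitor:
    """Hypothetical keepalive supervision for one PXC sub-port.

    Assumption: the peer is considered lost when no Information OAMPDU has
    arrived for `hold_multiplier` transmit intervals.
    """

    def __init__(self, interval_s: float, hold_multiplier: int = 5) -> None:
        self.interval_s = interval_s
        self.hold_multiplier = hold_multiplier
        self.last_rx: Optional[float] = None  # time the last OAMPDU arrived

    def on_oampdu(self, now: float) -> None:
        # Every received Information OAMPDU refreshes the keepalive.
        self.last_rx = now

    def peer_alive(self, now: float) -> bool:
        if self.last_rx is None:
            return False  # nothing heard from the peer yet
        return (now - self.last_rx) < self.interval_s * self.hold_multiplier


mon = KeepaliveMonitor(interval_s=1.0)
mon.on_oampdu(now=10.0)
print(mon.peer_alive(now=12.0), mon.peer_alive(now=16.0))  # True False
```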

For PXCs with underlying faceplate ports, status monitoring can be enabled for either or both of the faceplate ports:

-

CRC monitoring (link quality) on the RX side of the port, using the following command:

configure port ethernet crc-monitor

-

CRC monitoring (link quality) on the path from the IOM toward the MDA, using the following command:

configure port ethernet down-on-internal-error

The TX disable flag (disable remote laser on error) is not supported on PXC ports because PXC ports are looped. Use the following command to turn the flag off:

-

MD-CLI

configure port ethernet down-on-internal-error tx-laser off

-

classic CLI

configure port ethernet down-on-internal-error tx-disable

CRC monitoring on the RX side of the faceplate ports has the following characteristics:

monitor ingress error conditions

compare error counts against configurable thresholds

CRC errors are only recorded if frames are transmitted

crossing the signal degrade (SD) threshold raises a log event

crossing the signal failure (SF) threshold takes down the port’s operational state

error rate thresholds use the format m·10⁻ⁿ; both the threshold (n) and the multiplier (m) are configurable
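The m·10⁻ⁿ threshold format and the SD/SF comparison can be worked through numerically. This Python sketch is illustrative only: it assumes the error rate is measured as errored frames over frames received in one window, and the `classify` helper and the example thresholds (SD 1·10⁻⁵, SF 1·10⁻³) are hypothetical, not SR OS defaults.

```python
from typing import Tuple

Threshold = Tuple[int, int]  # (multiplier m, exponent n), meaning m * 10**-n


def threshold_rate(threshold: Threshold) -> float:
    m, n = threshold
    return m * 10.0 ** -n


def classify(errored: int, received: int, sd: Threshold, sf: Threshold) -> str:
    """Compare one window's error ratio against the SD and SF thresholds."""
    if received == 0:
        return "no-data"  # no frames, so no CRC errors can be recorded
    rate = errored / received
    if rate >= threshold_rate(sf):
        return "signal-failure"  # port taken operationally down
    if rate >= threshold_rate(sd):
        return "signal-degrade"  # log event raised
    return "ok"


SD, SF = (1, 5), (1, 3)  # hypothetical: 1e-5 and 1e-3
for errored in (5, 50, 5000):
    print(errored, classify(errored, 1_000_000, SD, SF))
# 5 ok
# 50 signal-degrade
# 5000 signal-failure
```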

Health monitoring on the faceplate ports level is disabled by default.

In addition to the explicitly configured health monitoring mechanisms described above, PXC operational state transitions are reported by default through port UP/DOWN traps, as in the following examples:

478 2015/10/22 14:08:15.86 UTC WARNING: SNMP #2004 Base pxc-1.b Interface pxc-1.b is not operational

478 2015/10/22 14:08:15.86 UTC WARNING: SNMP #2004 Base pxc-1.b Interface pxc-1.b is operational
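When these traps are collected from logs, the port and its new state can be extracted with a regular expression. The Python sketch below is a hypothetical helper that assumes the exact line layout of the two sample entries above; other log destinations or releases may format events differently.

```python
import re
from typing import Optional, Tuple

# Layout assumed from the two sample log entries; not a documented format.
TRAP_RE = re.compile(
    r"^(?P<seq>\d+) (?P<date>\S+) (?P<time>\S+) UTC (?P<severity>\w+): "
    r"SNMP #(?P<event>\d+) (?P<router>\S+) (?P<port>\S+) "
    r"Interface (?P=port) is (?P<state>not operational|operational)$"
)


def parse_port_trap(line: str) -> Optional[Tuple[str, bool]]:
    """Return (port, is_operational), or None if the line does not match."""
    match = TRAP_RE.match(line.strip())
    if match is None:
        return None
    return match["port"], match["state"] == "operational"


print(parse_port_trap(
    "478 2015/10/22 14:08:15.86 UTC WARNING: SNMP #2004 Base pxc-1.b "
    "Interface pxc-1.b is not operational"
))  # ('pxc-1.b', False)
```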

Configuration example

The following example is based on a PXC on a faceplate port. Subscriber traffic with QinQ encapsulation arriving on two different line cards (3 and 4) is terminated on the PXC LAG on line cards 1 and 2. With this method, if one of the ingress line cards (3 or 4) fails, the subscriber traffic remains unaffected (continues to be terminated on line cards 1 and 2) provided that the correct protection mechanism is implemented in the access part of the network. This protection mechanism in the access part of the network must ensure that traffic arriving on card 3 can be rerouted to card 4 if card 3 fails. The opposite must be true as well (the path to card 4 must be protected by a path to card 3).

The PXC can reside on any card, independent of the ingress ports.

Faceplate (physical) port configuration on cards 3 and 4 (MD-CLI)

[ex:/configure port 3/1/1]

A:admin@node-2# info

description "access I/O port on card 3; encap is null, which means that all VLAN tagged and untagged traffic will be accepted"

ethernet {

mode access

encap-type null

}

[ex:/configure port 4/1/1]

A:admin@node-2# info

description "access I/O port on card 4; encap is null, which means that all VLAN tagged and untagged traffic will be accepted"

ethernet {

mode access

encap-type null

}


Faceplate (physical) port configuration on cards 3 and 4 (classic CLI)

A:node-2>config# port 3/1/1

A:node-2>config>port# info

----------------------------------------------

description "access I/O port on card 3; encap is null, which means that all VLAN tagged and untagged traffic will be accepted"

ethernet

mode access

exit

A:node-2>config>port# info detail

----------------------------------------------

...

ethernet

...

encap-type null

...

A:node-2>config# port 4/1/1

A:node-2>config>port# info

----------------------------------------------

shutdown

description "access I/O port on card 4; encap is null, which means that all VLAN tagged and untagged traffic will be accepted"

ethernet

mode access

exit

A:node-2>config>port# info detail

----------------------------------------------

...

ethernet

...

encap-type null

...

PXC configuration on cards 1 and 2 (MD-CLI)

[ex:/configure port-xc]

A:admin@node-2# info

pxc 1 {

admin-state enable

description "PXC on card 1"

port-id 1/1/1

}

pxc 2 {

admin-state enable

description "PXC on card 2"

port-id 2/1/1

}

PXC configuration on cards 1 and 2 (classic CLI)

A:node-2>config>port-xc# info

----------------------------------------------

pxc 1 create

description "PXC on card 1"

port 1/1/1

no shutdown

exit

pxc 2 create

description "PXC on card 2"

port 2/1/1

no shutdown

exit

----------------------------------------------

The user must manually configure the sub-port encapsulation (the default is dot1q); this example uses qinq. PXC sub-ports transparently pass traffic with preserved QinQ tags from the .b side of the PXC to the .a side, where a *.* capture SAP is configured.
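The sub-port names and LAG membership used in this example follow a simple pattern: each PXC pxc-<id> contributes its .a sub-port to the terminating LAG (LAG 1) and its .b sub-port to the transit LAG (LAG 2). The Python sketch below only reproduces that naming for this example; the helper functions are hypothetical and do not query or configure the router.

```python
from typing import Dict, Iterable, List, Tuple


def pxc_subports(pxc_id: int) -> Tuple[str, str]:
    """Return the (.a, .b) sub-port names of PXC <pxc_id>."""
    return f"pxc-{pxc_id}.a", f"pxc-{pxc_id}.b"


def example_pxc_lags(pxc_ids: Iterable[int]) -> Dict[str, List[str]]:
    """Place .a sides in lag-1 (terminating) and .b sides in lag-2 (transit)."""
    lags: Dict[str, List[str]] = {"lag-1": [], "lag-2": []}
    for pxc_id in pxc_ids:
        a_side, b_side = pxc_subports(pxc_id)
        lags["lag-1"].append(a_side)
        lags["lag-2"].append(b_side)
    return lags


print(example_pxc_lags([1, 2]))
# {'lag-1': ['pxc-1.a', 'pxc-2.a'], 'lag-2': ['pxc-1.b', 'pxc-2.b']}
```

Because the two sub-ports of each PXC land in different LAGs, traffic transmitted on one LAG is looped back and received on the other.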

Configuration of the sub-port encapsulation (MD-CLI)

[ex:/configure]

A:admin@node-2# info

...

port pxc-1.a {

admin-state enable

description "termination PXC side; *.* capture SAP will be configured here"

ethernet {

encap-type qinq

}

}

port pxc-1.b {

admin-state enable

description "transit PXC side; all VLAN tags (*) will be transparently passed via this side"

ethernet {

encap-type qinq

}

}

port pxc-2.a {

admin-state enable

description "together with pxc-1.a, this sub-port is a member of LAG 1"

ethernet {

encap-type qinq

}

}

port pxc-2.b {

admin-state enable

description "together with pxc-1.b, this sub-port is a member of LAG 2"

ethernet {

encap-type qinq

}

}

Configuration of the sub-port encapsulation (classic CLI)

A:node-2# admin display-config

...

#--------------------------------------------------

echo "Port Configuration"

#--------------------------------------------------

...

port pxc-1.a

description "termination PXC side; *.* capture SAP will be configured here"

ethernet

encap-type qinq

exit

no shutdown

exit

port pxc-1.b

description "transit PXC side; all VLAN tags (*) will be transparently passed via this side"

ethernet

encap-type qinq

exit

no shutdown

exit

port pxc-2.a

description "together with pxc-1.a, this sub-port is a member of LAG 1"

ethernet

encap-type qinq

exit

no shutdown

exit

port pxc-2.b

description "together with pxc-1.b, this sub-port is a member of LAG 2"

ethernet

encap-type qinq

exit

no shutdown

exit

PXC LAG configuration (MD-CLI)

[ex:/configure]

A:admin@node-2# info

...

lag "lag-1" {

admin-state enable

description "terminating side of the cross-connect"

port pxc-1.a {

}

port pxc-2.a {

}

}

lag "lag-2" {

admin-state enable

description "transient side of the cross-connect"

port pxc-1.b {

}

port pxc-2.b {

}

}

PXC LAG configuration (classic CLI)

A:node-2# configure lag 1

A:node-2>config>lag$ info

----------------------------------------------

description "terminating side of the cross-connect"

port pxc-1.a

port pxc-2.a

no shutdown

----------------------------------------------

A:node-2# configure lag 2

A:node-2>config>lag$ info

----------------------------------------------

description "transient side of the cross-connect"

port pxc-1.b

port pxc-2.b

no shutdown

----------------------------------------------

A VPLS carries traffic from the access ingress ports (3/1/1 and 4/1/1) through the transit PXC sub-ports pxc-1.b and pxc-2.b to the termination side of the PXC.
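In the VPLS configured for this purpose, the access SAPs 3/1/1 and 4/1/1 both reference the split-horizon group "access", while the transit SAP lag-2:* has no group. Under the usual VPLS split-horizon rule, a frame received on a SAP is not forwarded to another SAP in the same split-horizon group, so flooded access traffic goes only toward the PXC. The Python sketch below models only that flooding decision; the `flood_targets` helper is hypothetical and ignores MAC learning.

```python
from typing import Dict, List, Optional


def flood_targets(ingress: str, saps: Dict[str, Optional[str]]) -> List[str]:
    """Return the SAPs that receive a flooded frame.

    `saps` maps each SAP to its split-horizon group (None if it has none).
    The ingress SAP and SAPs sharing its split-horizon group are skipped.
    """
    group = saps[ingress]
    return [
        sap
        for sap, sap_group in saps.items()
        if sap != ingress and (group is None or sap_group != group)
    ]


vpls_1 = {"3/1/1": "access", "4/1/1": "access", "lag-2:*": None}
print(flood_targets("3/1/1", vpls_1))    # ['lag-2:*']
print(flood_targets("lag-2:*", vpls_1))  # ['3/1/1', '4/1/1']
```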

Passing traffic through VPLS (MD-CLI)

[ex:/configure service]

A:admin@node-2# info

vpls "1" {

admin-state enable

description "stitching access side to the anchor"

customer "1"

split-horizon-group "access" {

}

sap 3/1/1 {

admin-state enable

description "I/O port"

split-horizon-group "access"

}

sap 4/1/1 {

admin-state enable

description "I/O port"

split-horizon-group "access"

}

sap lag-2:* {

admin-state enable

description "transit side of PXC"

}

}

Passing traffic through VPLS (classic CLI)

A:node-2>config>service# info

----------------------------------------------

...

vpls 1 name "1" customer 1 create

description "stitching access side to the anchor"

split-horizon-group "access" create

exit

sap 3/1/1 split-horizon-group "access" create

description "I/O port"

no shutdown

exit

sap 4/1/1 split-horizon-group "access" create

description "I/O port"

no shutdown

exit

sap lag-2:* create

description "transit side of PXC"

no shutdown

exit

no shutdown

exit

----------------------------------------------

Capture SAPs on the anchor (MD-CLI)

[ex:/configure service]

A:admin@node-2# info

vpls "3" {

admin-state enable

description "VPLS with capture SAPs"

customer "1"

capture-sap lag-1:10.* {

description "termination side of PXC; traffic with S-tag=10 will be extracted here"

trigger-packet {

dhcp true

dhcp6 true

pppoe true

}

}

capture-sap lag-1:11.* {

description "termination side of PXC; traffic with S-tag=11 will be extracted here"

}

}

Capture SAPs on the anchor (classic CLI)

A:node-2>config>service# info

----------------------------------------------

vpls 3 name "3" customer 1 create

description "VPLS with capture SAPs"

sap lag-1:10.* capture-sap create

description "termination side of PXC; traffic with S-tag=10 will be extracted here"

trigger-packet dhcp dhcp6 pppoe

no shutdown

exit

sap lag-1:11.* capture-sap create

description "termination side of PXC; traffic with S-tag=11 will be extracted here"

no shutdown

exit

no shutdown

exit

----------------------------------------------
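The two capture SAPs split the QinQ traffic arriving over the PXC by outer tag: frames with S-tag 10 are extracted on lag-1:10.* and frames with S-tag 11 on lag-1:11.*, with any C-tag. The Python sketch below illustrates that S-tag lookup only; the `match_capture_sap` helper is hypothetical, handles only <s-tag>.* SAP strings, and does not model trigger-packet processing.

```python
from typing import Optional, Sequence


def match_capture_sap(s_tag: int, capture_saps: Sequence[str]) -> Optional[str]:
    """Return the '<port>:<s-tag>.*' capture SAP whose S-tag equals s_tag."""
    for sap in capture_saps:
        _port, tags = sap.split(":", 1)
        outer, inner = tags.split(".", 1)
        # The inner tag is a wildcard, so only the outer tag is compared.
        if inner == "*" and outer.isdigit() and int(outer) == s_tag:
            return sap
    return None


saps = ["lag-1:10.*", "lag-1:11.*"]
print(match_capture_sap(10, saps))  # lag-1:10.*
print(match_capture_sap(12, saps))  # None
```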