Triple Play multicast

Introduction to multicast

IP multicast provides an effective method of many-to-many communication. Delivering unicast datagrams is simple: normally, IP packets are sent from a single source to a single recipient. The source inserts the address of the target host in the destination field of the IP header, and intermediate routers (if present) forward the datagram toward the target in accordance with their respective routing tables.

Some applications, such as audio or video streaming broadcasts, need individual IP packets to be delivered to multiple destinations. Multicast is a method of distributing datagrams sourced from one (or possibly more) hosts to a set of receivers that may be distributed over different (sub)networks. This makes delivery of multicast datagrams significantly more complex than unicast delivery.

Multicast sources can send a single copy of data using a single address for the entire group of recipients. The routers between the source and recipients route the data using the group address route. Multicast packets are delivered to a multicast group. A multicast group specifies a set of recipients who are interested in a data stream and is represented by an IP address from a specified range. Data addressed to that IP address is forwarded to the members of the group. A source host sends data to a multicast group by specifying the multicast group address as the destination IP address of the datagram. A source does not have to register to send data to a group, nor does it need to be a member of the group.
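As a small illustration of the group address idea, the following sketch uses Python's standard `ipaddress` module to test whether a destination address falls in the multicast range. The helper name `is_group_address` and the sample addresses are illustrative only and do not come from the router software.

```python
import ipaddress

def is_group_address(addr: str) -> bool:
    """Return True if addr is an IPv4 or IPv6 multicast (group) address."""
    return ipaddress.ip_address(addr).is_multicast

print(is_group_address("239.1.1.1"))   # IPv4 address inside 224.0.0.0/4
print(is_group_address("192.0.2.10"))  # ordinary unicast address
print(is_group_address("ff3e::1234"))  # IPv6 address inside ff00::/8
```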

Routers and Layer 3 switches use the Internet Group Management Protocol (IGMP) and Multicast Listener Discovery (MLD) to manage membership for a multicast session. When a host wants to receive one or more multicast sessions, it sends a join message for each multicast group it wants to join. When a host wants to leave a multicast group, it sends a leave message.

Multicast in the broadband service router

This section describes the multicast protocols employed when a Nokia router is used as a Broadband Service Router (BSR) in a Triple Play aggregation network.

The protocols used are:

Internet Group Management Protocol (IGMP)

Multicast Listener Discovery (MLD)

Source-specific multicast (SSM) groups

Protocol Independent Multicast Sparse Mode (PIM-SM)

Internet Group Management Protocol

Internet Group Management Protocol (IGMP) is used by IPv4 hosts and routers to report their IP multicast group memberships to neighboring multicast routers. A multicast router keeps a list of multicast group memberships for each attached network, and a timer for each membership.

Multicast group memberships include at least one member of a multicast group on a given attached network, not a list of all the members. With respect to each of its attached networks, a multicast router can assume one of two roles, querier or non-querier. There is normally only one querier per physical network.

A querier issues two types of queries, a general query and a group-specific query. General queries are issued to solicit membership information about any multicast group. Group-specific queries are issued when a router receives a leave message from the node it perceives as the last group member remaining on that network segment.

Hosts wanting to receive a multicast session issue a multicast group membership report. These reports must be sent to all multicast enabled routers.

IGMP versions and interoperability requirements

If routers run different versions of IGMP, they negotiate the lowest common version of IGMP that is supported on their subnet and operate in that version.

Version 1

Specified in RFC 1112, Host extensions for IP Multicasting, was the first widely deployed version and the first version to become an Internet standard.

Version 2

Specified in RFC 2236, Internet Group Management Protocol, Version 2, added support for low leave latency, that is, a reduction in the time it takes for a multicast router to learn that there are no longer any members of a group present on an attached network.

Version 3

Specified in RFC 3376, Internet Group Management Protocol, Version 3, adds support for source filtering, that is, the ability for a system to report interest in receiving packets sent to a multicast address only from specific source addresses, as required to support Source-Specific Multicast (see Source-specific multicast groups), or from all but specific source addresses.

IGMPv3 must keep state per group per attached network. This group state consists of a filter-mode, a list of sources, and various timers. For each attached network running IGMP, a multicast router records the necessary reception state for that network.

IGMP version transition

Nokia’s SRs are capable of interoperating with routers and hosts running IGMPv1, IGMPv2, or IGMPv3. Draft-ietf-magma-igmpv3-and-routing-0x.txt explores some of the interoperability issues and how they affect the various routing protocols.

IGMP version 3 specifies that if a router receives an older version query message on an interface at any point, it must immediately switch into a compatibility mode with that earlier version. None of the previous versions of IGMP are source aware. Therefore, if this occurs and the interface switches to Version 1 or 2 compatibility mode, any previously learned group memberships with specific sources (learned from the IGMPv3-specific INCLUDE or EXCLUDE mechanisms) must be converted to non-source-specific group memberships. The routing protocol then treats this as if there is no EXCLUDE definition present.
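The compatibility-mode conversion can be sketched as follows. This is a minimal model under stated assumptions, not the router implementation: the `GroupState` class and the `on_query` and `enter_compat_mode` helpers are hypothetical names, and a non-source-specific membership is modeled as EXCLUDE mode with an empty source list, which is how RFC 3376 represents "all sources".

```python
from dataclasses import dataclass, field

@dataclass
class GroupState:
    """Per-group reception state kept for one attached network."""
    filter_mode: str                           # "INCLUDE" or "EXCLUDE"
    sources: set = field(default_factory=set)  # source addresses in the filter

def enter_compat_mode(groups: dict) -> dict:
    """Convert every source-aware membership to a plain (*,G) membership."""
    return {g: GroupState("EXCLUDE", set()) for g in groups}

def on_query(interface_version: int, query_version: int, groups: dict):
    """Switch to the older version if an older-version query is heard."""
    if query_version < interface_version:
        return query_version, enter_compat_mode(groups)
    return interface_version, groups

groups = {"232.1.1.1": GroupState("INCLUDE", {"10.0.0.1"})}
version, groups_after = on_query(3, 2, groups)
print(version, groups_after["232.1.1.1"].filter_mode)
```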

Multicast Listener Discovery

Multicast Listener Discovery (MLD) is used by IPv6 hosts and routers to report their IP multicast group memberships to neighboring multicast routers. A multicast router keeps a list of multicast group memberships for each attached network, and a timer for each membership. Multicast group memberships include at least one member of a multicast group on a specific attached network, not a list of all the members. With respect to each of its attached networks, a multicast router can assume one of two roles, querier or non-querier. There is normally only one querier per physical network.

A querier issues two types of queries, a general query and a group-specific query. General queries are issued to solicit membership information about any multicast group. Group-specific queries are issued when a router receives a leave message from the node it perceives as the last group member remaining on that network segment.

Hosts wanting to receive a multicast session issue a multicast group membership report. These reports must be sent to all multicast enabled routers.

MLD versions and interoperability requirements

If routers run different versions of MLD, they negotiate the lowest common version of MLD that is supported on their subnet and operate in that version.

Version 1

Specified in RFC 2710, Multicast Listener Discovery (MLD) for IPv6, was the first deployed version and included low leave latency, that is, a reduction in the time it takes for a multicast router to learn that there are no longer any members of a group present on an attached network.

Version 2

Specified in RFC 3810, Multicast Listener Discovery Version 2 (MLDv2) for IPv6, adds support for source filtering, that is, the ability for a system to report interest in receiving packets only from specific source addresses, as required to support Source-Specific Multicast (SSM), or from all but specific source addresses, sent to a multicast address.

MLDv2 must keep state per group per attached network. This group state consists of a filter mode, a list of sources, and various timers. For each attached network running MLD, a multicast router records the wanted reception state for that network.

Source-specific multicast groups

IGMPv3 and MLDv2 allow a receiver to join a group and specify that it only wants to receive traffic for the group if that traffic comes from a specific source. If a receiver does this, and no other receiver on the LAN requires all the traffic for the group, then the Designated Router (DR) can omit performing a (*,G) join to set up the shared tree, and instead issue a source-specific (S,G) join only.

For IPv4, the range of multicast addresses from 232.0.0.0 to 232.255.255.255 is currently set aside for source-specific multicast. For groups in this range, receivers should only issue source specific IGMPv3 joins. If a PIM router receives a non-source-specific join for a group in this range, it should ignore it.

For IPv6, the multicast prefix FF3x::/32 is currently set aside for source-specific multicast. For groups in this range, receivers should only issue source specific MLDv2 joins. If a PIM router receives a non-source-specific join for a group in this range, it should ignore it.
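The two SSM ranges above can be checked with a few lines of Python. This is an illustrative sketch only: `is_ssm_group` and `accept_join` are hypothetical helper names, and the sketch treats FF3x::/32 as "the first 16 bits are ff3x, where x is any scope nibble, and the next 16 bits are zero".

```python
import ipaddress
from typing import Optional

SSM_V4 = ipaddress.ip_network("232.0.0.0/8")  # 232.0.0.0 to 232.255.255.255

def is_ssm_group(addr: str) -> bool:
    """Return True if addr lies in the default IPv4 or IPv6 SSM range."""
    ip = ipaddress.ip_address(addr)
    if ip.version == 4:
        return ip in SSM_V4
    top32 = int(ip) >> 96                      # leading 32 bits of the address
    return (top32 & 0xFFF0FFFF) == 0xFF300000  # mask out the scope nibble

def accept_join(group: str, source: Optional[str] = None) -> bool:
    """Ignore non-source-specific joins for groups in the SSM range."""
    return not (is_ssm_group(group) and source is None)

print(accept_join("232.1.1.1"))              # (*,G) join in the SSM range
print(accept_join("232.1.1.1", "10.0.0.1"))  # (S,G) join in the SSM range
print(accept_join("239.1.1.1"))              # (*,G) join outside the SSM range
```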

A Nokia PIM router must silently ignore a received (*,G) PIM join message where G is a multicast group address from the multicast address group range that has been explicitly configured for SSM. This occurrence should generate an event. If configured, the IGMPv2 (MLDv1 for IPv6) request can be translated into IGMPv3 (MLDv2 for IPv6). The SR allows for the conversion of an IGMPv2 (*,G) request into an IGMPv3 (S,G) request based on manual entries. A maximum of 32 SSM ranges is supported.

IGMPv3 and MLDv2 also allow a receiver to join a group and specify that it only wants to receive traffic for the group if that traffic does not come from a specific source or sources. In this case, the DR performs a (*,G) join as normal, but can combine this with a prune for each of the sources the receiver does not want to receive.
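The two DR behaviors described in this section can be summarized in a short sketch. It assumes a single receiver on the LAN, so no other receiver requires all the traffic for the group. The function name `dr_pim_actions` and the tuple format are hypothetical.

```python
def dr_pim_actions(group, filter_mode, sources):
    """Return the PIM joins and prunes a DR would originate for one report.

    INCLUDE mode: one source-specific (S,G) join per listed source.
    EXCLUDE mode: a (*,G) join plus a prune for each unwanted source.
    """
    if filter_mode == "INCLUDE":
        return [("join", s, group) for s in sorted(sources)]
    actions = [("join", "*", group)]
    actions += [("prune", s, group) for s in sorted(sources)]
    return actions

print(dr_pim_actions("232.1.1.1", "INCLUDE", {"10.0.0.1"}))
print(dr_pim_actions("239.1.1.1", "EXCLUDE", {"10.0.0.2"}))
```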

Protocol Independent Multicast Sparse Mode

Protocol Independent Multicast Sparse Mode (PIM-SM) leverages the unicast routing protocols that are used to create the unicast routing table: OSPF, IS-IS, BGP, and static routes. Because PIM uses this unicast routing information to perform the multicast forwarding function, it is effectively IP protocol independent. Unlike DVMRP, PIM does not send multicast routing table updates to its neighbors.

PIM-SM uses the unicast routing table to perform the Reverse Path Forwarding (RPF) check function instead of building up a completely independent multicast routing table.

PIM-SM only forwards data to network segments with active receivers that have explicitly requested the multicast group. PIM-SM in the ASM model initially uses a shared tree to distribute information about active sources. Depending on the configuration options, the traffic can remain on the shared tree or switch over to an optimized source distribution tree. As multicast traffic starts to flow down the shared tree, routers along the path determine if there is a better path to the source. If a more direct path exists, then the router closest to the receiver sends a join message toward the source and then reroutes the traffic along this path.

As stated above, PIM-SM relies on an underlying topology-gathering protocol to populate a routing table with routes. This routing table is called the Multicast Routing Information Base (MRIB). The routes in this table can be taken directly from the unicast routing table, or they can be different and provided by a separate routing protocol such as MBGP. Regardless of how it is created, the primary role of the MRIB in the PIM-SM protocol is to provide the next hop router along a multicast-capable path to each destination subnet. The MRIB is used to determine the next hop neighbor to which any PIM join/prune message is sent. Data flows along the reverse path of the join messages. Thus, in contrast to the unicast RIB, which specifies the next hop that a data packet would take to get to some subnet, the MRIB gives reverse-path information and indicates the path that a multicast data packet would take from its origin subnet to the router that has the MRIB.
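The role of the MRIB in the RPF check can be illustrated with a simple longest-prefix-match lookup. This is a sketch under assumptions: the MRIB is modeled as a plain dictionary, and the interface names, next hops, and function names are hypothetical.

```python
import ipaddress

def rpf_lookup(mrib, source):
    """Longest-prefix match for source; returns (interface, next_hop) or None."""
    src = ipaddress.ip_address(source)
    best = None
    for prefix, entry in mrib.items():
        net = ipaddress.ip_network(prefix)
        if src in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, entry)
    return best[1] if best else None

def rpf_check(mrib, source, arrival_iface):
    """Accept a packet only if it arrived on the interface toward its source."""
    entry = rpf_lookup(mrib, source)
    return entry is not None and entry[0] == arrival_iface

mrib = {
    "10.0.0.0/8": ("to-core-1", "192.0.2.1"),
    "10.1.0.0/16": ("to-core-2", "192.0.2.5"),
}
print(rpf_lookup(mrib, "10.1.2.3"))               # neighbor that receives the join
print(rpf_check(mrib, "10.1.2.3", "to-core-2"))   # arrived on the RPF interface
print(rpf_check(mrib, "10.1.2.3", "to-core-1"))   # arrived on another interface
```

The same lookup serves both purposes described above: the next hop is where a join or prune toward the source is sent, and the interface is where data from that source is expected to arrive.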

Ingress Multicast Path Management (IMPM) enhancements

See the Advanced Configuration Guide for more information about IMPM as well as detailed configuration examples.

IMPM allows the system to manage Layer 2 and Layer 3 IP multicast flows by sorting them into the available multicast paths through the switch fabric. The ingress multicast manager tracks the amount of available multicast bandwidth per path and the amount of bandwidth used per IP multicast stream. The following traffic is managed by IMPM when enabled:

IPv4 and IPv6 routed multicast traffic

VPLS IGMP snooping traffic

VPLS PIM snooping for IPv4 traffic

VPLS PIM snooping for IPv6 traffic (only when sg-based forwarding is configured)

Two policies are involved: the bandwidth policy defines how each path should be managed, and the multicast information policy defines how multicast channels compete for the available bandwidth.

Chassis multicast planes should not be confused with IOM/IMM multicast paths. The IOM/IMM uses multicast paths to reach multicast planes on the switch fabric. An IOM/IMM may have fewer or more multicast paths than the number of multicast planes available in the chassis.

Each IOM/IMM multicast path is either a primary or secondary path type. The path type indicates the multicast scheduling priority within the switch fabric. Multicast flows sent on primary paths are scheduled at multicast high priority while secondary paths are associated with multicast low priority.

The system determines the number of primary and secondary paths from each IOM/IMM forwarding plane and distributes them as equally as possible between the available switch fabric multicast planes. Each multicast plane may terminate multiple paths of both the primary and secondary types.

The system ingress multicast management module evaluates the ingress multicast flows from each ingress forwarding plane and determines the best multicast path for each flow. A specific path can be used until the terminating multicast plane is "maxed out" (based on the rate limit defined in the per-mcast-plane-capacity commands), at which time flows are either moved to other paths or potentially blackholed (flows with the lowest preference are dropped first). In this way, the system makes the best use of the available multicast capacity without congesting individual multicast planes.
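The general idea of sorting flows onto paths can be sketched with a simple greedy placement. This is not the IMPM algorithm itself, whose details are not given here. The sketch assumes one path per multicast plane, a single capacity value for every plane, and arbitrary bandwidth units. The function name `place_flows` and the sample flows are hypothetical.

```python
def place_flows(flows, plane_capacity, num_paths):
    """Place flows on paths, highest preference first.

    flows is a list of (name, bandwidth, preference) tuples. A flow that
    does not fit on any path is blackholed, so the flows with the lowest
    preference are the first to be dropped.
    """
    remaining = [plane_capacity] * num_paths
    placed, blackholed = {}, []
    for name, bw, pref in sorted(flows, key=lambda f: -f[2]):
        # Pick the path with the most unused capacity.
        idx = max(range(num_paths), key=lambda i: remaining[i])
        if remaining[idx] >= bw:
            remaining[idx] -= bw
            placed[name] = idx
        else:
            blackholed.append(name)
    return placed, blackholed

flows = [("news", 6, 7), ("sports", 6, 5), ("test", 6, 1)]
print(place_flows(flows, plane_capacity=10, num_paths=2))
```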

The switch fabric simultaneously handles both unicast and multicast flows. It uses a weighted scheduling scheme between multicast high, unicast high, multicast low, and unicast low when deciding which cell to forward to the egress forwarding plane next. The weighted mechanism allows some amount of unicast and lower priority multicast (secondary) to drain on the egress switch fabric links used by each multicast plane. The amount is variable, based on the number of switch fabric planes available and the amount of traffic attempting to use the fabric planes. The per-mcast-plane-capacity commands allow the amount of managed multicast traffic to be tuned to compensate for the expected available egress multicast bandwidth per multicast plane. In conditions where it is highly desirable to prevent multicast plane congestion, the per-mcast-plane-capacity commands should be used to compensate for the non-multicast or secondary multicast switch fabric traffic.

Multicast in the BSA

IP multicast is normally not a function of the Broadband Service Aggregator (BSA) in a Triple Play aggregation network, because the BSA is a Layer 2 device. However, the BSA does use IGMP snooping to optimize bandwidth utilization.

IGMP snooping

For most Layer 2 switches, multicast traffic is treated like an unknown MAC address or broadcast frame, which causes the incoming frame to be flooded out (broadcast) on every port within a VLAN. While this is acceptable behavior for unknowns and broadcasts, IP multicast hosts may join and be interested in only specific multicast groups, so all this flooded traffic results in wasted bandwidth on network segments and end stations.

IGMP snooping entails using information in Layer 3 protocol headers of multicast control messages to determine the processing at Layer 2. By doing so, an IGMP snooping switch conserves bandwidth on those segments of the network where no node has expressed interest in receiving packets addressed to the group address.

On the 7450 ESS and 7750 SR, IGMP snooping can be enabled in the context of VPLS services. The IGMP snooping feature allows for optimization of the multicast data flow for a group within a service to only those Service Access Points (SAPs) and service destination points (SDPs) that are members of the group. In fact, the 7450 ESS and 7750 SR implementation performs more than pure snooping of IGMP data, because it also summarizes upstream IGMP reports and responds to downstream queries.

The 7450 ESS and 7750 SR maintain several multicast databases:

A port database on each SAP and SDP lists the multicast groups that are active on this SAP or SDP.

All port databases are compiled into a central proxy database. Towards the multicast routers, summarized group membership reports are sent based on the information in the proxy database.

The information in the different port databases is also used to compile the multicast forwarding information base (MFIB). This contains the active SAPs and SDPs for every combination of source router and group address (S,G), and is used for the actual multicast replication and forwarding.

When the router receives a join report from a host for a multicast group, it adds the group to the port database and (if it is a new group) to the proxy database. It also adds the SAP or SDP to the existing (S,G) entry in the MFIB, or builds a new MFIB entry.

When the router receives a leave report from a host, it first checks if other devices on the SAP or SDP still want to receive the group (unless fast leave is enabled). Then it removes the group from the port database, and from the proxy database if it was the only receiver of the group. The router also deletes entries if it does not receive periodic membership confirmations from the hosts.

The fast leave feature finds its use in multicast TV delivery systems, for example. Fast Leave speeds up the membership leave process by terminating the multicast session immediately, instead of the standard procedure of issuing a group specific query to check if other group members are present on the SAP or SDP.

IGMP/MLD message processing

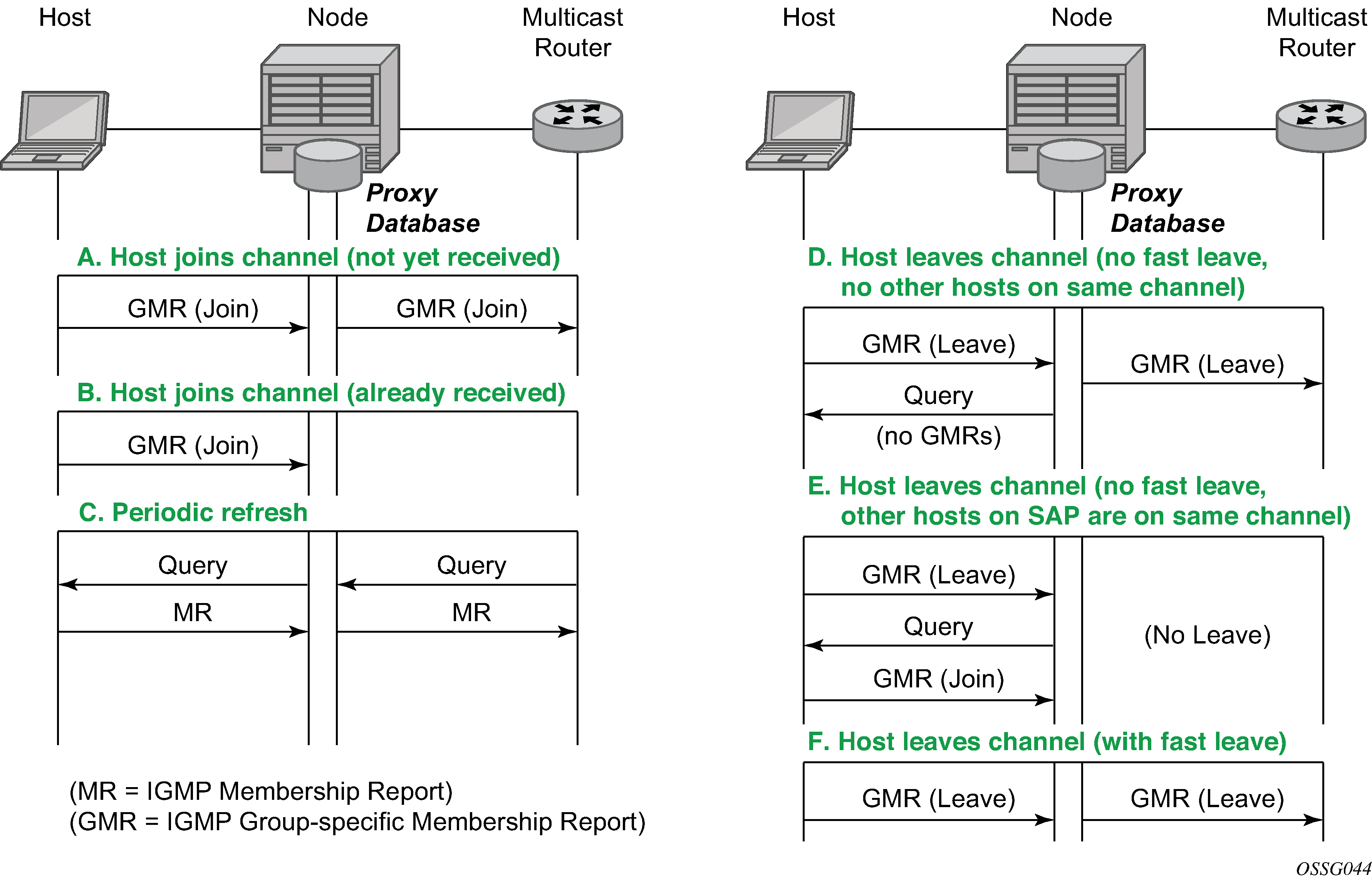

IGMP/MLD message processing illustrates the basic IGMP message processing in several situations.

IGMP message processing

Scenario A: A host joins a multicast group (TV channel) which is not yet being received by other hosts on the router, and therefore, is not yet present in the proxy database. The 7450 ESS or 7750 SR adds the group to the proxy database and sends a new IGMP Join group-specific membership report upstream to the multicast router.

Scenario B: A host joins a channel which is already being received by one or more hosts on the router, and is already present in the proxy database. No upstream IGMP report is generated by the router.

Scenario C: The multicast router periodically sends IGMP queries to the router, requesting it to respond with generic membership reports. Upon receiving such a query, the router compiles a report from its proxy database and sends it back to the multicast router.

In addition, the router floods the received IGMP query to all hosts (on SAPs and spoke SDPs), and updates its proxy database based on the membership reports received back.

Scenario D: A host leaves a channel by sending an IGMP leave message. If fast-leave is not enabled, the router first checks whether there are other hosts on the same SAP or spoke SDP by sending a query. If no other host responds, the 7450 ESS or 7750 SR removes the channel from the SAP. In addition, if there are no other SAPs or spoke SDPs with hosts subscribing to the same channel, the channel is removed from the proxy database and an IGMP leave report is sent to the upstream Multicast Router.

Scenario E: A host leaves a channel by sending an IGMP leave message. If fast-leave is not enabled, the router checks whether there are other hosts on the same SAP or spoke SDP by sending a query. Another device on the same SAP or spoke SDP still wants to receive the channel and responds with a membership report. The router does not remove the channel from the SAP.

Scenario F: A host leaves a channel by sending an IGMP leave report. Fast-leave is enabled, so the router does not check whether there are other hosts on the same SAP or spoke SDP but immediately removes the group from the SAP. In addition, if there are no other SAPs or spoke SDPs with hosts subscribing to the same group, the group is removed from the proxy database and an IGMP leave report is sent to the upstream multicast router.
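Scenarios A, B, D, E, and F can be modeled with a small sketch of the port and proxy databases. This is an illustration under assumptions, not the router implementation: the `SnoopingProxy` class is hypothetical, the proxy database is modeled as the union of the port databases, and the `others_respond` argument stands in for the result of the group-specific query.

```python
from collections import defaultdict

class SnoopingProxy:
    def __init__(self):
        self.port_db = defaultdict(set)  # SAP or SDP name -> active groups

    def proxy_db(self):
        """Compile all port databases into one set of groups."""
        groups = set()
        for port_groups in self.port_db.values():
            groups |= port_groups
        return groups

    def join(self, port, group):
        """Return True if an upstream report is sent (scenario A, not B)."""
        is_new = group not in self.proxy_db()
        self.port_db[port].add(group)
        return is_new

    def leave(self, port, group, fast_leave=False, others_respond=False):
        """Return True if an upstream leave is sent (scenarios D and F)."""
        if not fast_leave and others_respond:
            return False  # scenario E: another host on the port wants the group
        self.port_db[port].discard(group)
        return group not in self.proxy_db()

proxy = SnoopingProxy()
print(proxy.join("sap-1", "239.1.1.1"))   # scenario A
print(proxy.join("sap-2", "239.1.1.1"))   # scenario B
print(proxy.leave("sap-1", "239.1.1.1"))  # sap-2 still subscribes
print(proxy.leave("sap-2", "239.1.1.1", fast_leave=True))  # scenario F
```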

MLD message processing

MLD message processing differs from IGMP. An IPv6 host can have two WAN IPv6 addresses and an IPv6 prefix. The source addresses of MLD messages are link-local addresses, which makes it difficult to know whether the originating host is a WAN host or a PD host. By default, all requested IPv6 (s,g) are first associated with a WAN host. If this WAN host disconnects or ends its IPv6 session, the (s,g) is then associated with the remaining WAN host. If there are no more WAN hosts, the (s,g) is then associated with the remaining PD host. The (s,g) is always transferred to the remaining IPv6 host until there are no more report replies corresponding to the queries. Scenarios A through F do not differ for IPv6 hosts.

IGMP/MLD filtering

A provider may want to block receive or transmit permission to individual hosts or a range of hosts. To this end, the 7450 ESS and 7750 SR support IGMP/MLD filtering. Two types of filter can be defined:

Filter IGMP/MLD membership reports from a host or range of hosts. This is performed by importing an appropriately defined routing policy into the SAP or spoke SDP.

Filter to prevent a host from transmitting multicast streams into the network. The operator can define a data-plane filter (ACL) which drops all multicast traffic, and apply this filter to a SAP or spoke SDP.

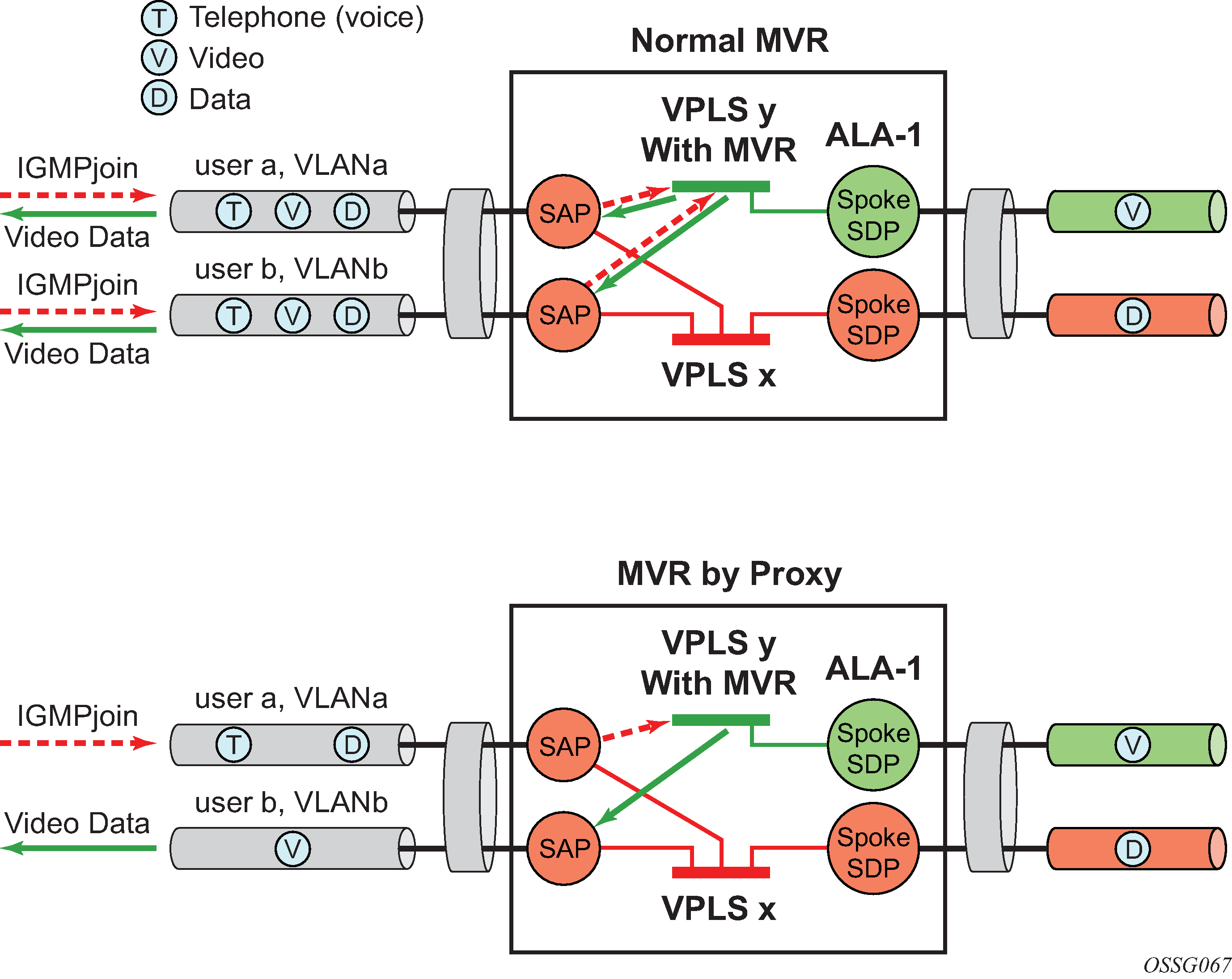

Multicast VPLS Registration (MVR)

Multicast VPLS Registration (MVR) is a bandwidth optimization method for multicast in a broadband services network. MVR allows a subscriber on a port to subscribe and unsubscribe to a multicast stream on one or more network-wide multicast VPLS instances.

MVR assumes that subscribers join and leave multicast streams by sending IGMP join and leave messages. The IGMP join and leave messages are sent inside the VPLS to which the subscriber port is assigned. The multicast VPLS is shared in the network while the subscribers remain in separate VPLS services. Using MVR, users on different VPLS services cannot exchange any information between them, but multicast services are still provided.

On the MVR VPLS, IGMP snooping must be enabled. On the user VPLS, IGMP snooping and MVR work independently. If IGMP snooping and MVR are both enabled, MVR reacts only to join and leave messages from multicast groups configured under MVR. Join and leave messages from all other multicast groups are managed by IGMP snooping in the local VPLS. This way, potentially several MVR VPLS instances could be configured, each with its own set of multicast channels.
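The split between MVR and local IGMP snooping can be sketched as a simple lookup. The VPLS names, the group sets, and the function name `select_vpls` are hypothetical and only illustrate the rule that MVR reacts to the groups configured under it while all other groups stay in the user VPLS.

```python
def select_vpls(group, user_vpls, mvr_instances):
    """Return the VPLS that handles a join or leave for this group.

    mvr_instances maps an MVR VPLS name to the set of groups configured
    under MVR for it. Any other group is left to IGMP snooping in the
    user's own VPLS.
    """
    for vpls_name, channels in mvr_instances.items():
        if group in channels:
            return vpls_name
    return user_vpls

mvr_instances = {
    "mvr-vpls-tv": {"239.1.1.1", "239.1.1.2"},
    "mvr-vpls-radio": {"239.2.1.1"},
}
print(select_vpls("239.1.1.2", "user-vpls-10", mvr_instances))
print(select_vpls("239.9.9.9", "user-vpls-10", mvr_instances))
```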

MVR by proxy — In some situations, the multicast traffic should not be copied from the MVR VPLS to the SAP on which the IGMP message was received (standard MVR behavior) but to another SAP. This is called MVR by proxy.

MVR and MVR by proxy shows an MVR and MVR by proxy configuration.

Layer 3 multicast load balancing

Layer 3 multicast load balancing establishes a more efficient distribution of Layer 3 multicast data over ECMP and LAG links. Operators have the option to redistribute multicast groups over ECMP or LAG links if the number of links changes either up or down.

There are several considerations when implementing this feature. When multicast load balancing is not configured, the distribution remains as is. Multicast load balancing is based on the number of (S,G) groups, which means that bandwidth is not taken into account. The multicast groups are distributed over the available links as joins are processed. When a link fails, the load on the failed channel is redistributed to the remaining channels so that multicast groups are evenly distributed over the remaining links. When a link is added (or a failed link returns), all multicast joins are allocated to the added links until a balance is achieved.

When multicast load balancing is configured, but the channels are not found in the multicast-info-policy, multicast load balancing is based on the number of (S,G) groups, which means that bandwidth is not taken into account. The multicast groups are distributed over the available links as joins are processed. When a link fails, the load on the failed channel is redistributed to the remaining channels so that the multicast groups are evenly distributed over the remaining links. When a link is added (or a failed link returns), all multicast joins are allocated to the added links until a balance is achieved. A manual redistribute command enables the operator to re-evaluate the current balance and, if required, move channels to different links to achieve a balance. A timed redistribute parameter allows the system to redistribute multicast groups over the available links automatically at regular intervals. If no links have been added to or removed from the ECMP/LAG interface, no redistribution is attempted.

When multicast load balancing is configured, multicast groups are distributed over the available links as joins are processed based on bandwidth configured for the specified group address. If the bandwidth is not configured for the multicast stream then the configured default value is used.

If a link fails, the load on the failed channel is redistributed to the remaining channels so that the required bandwidth is evenly distributed over the remaining links.
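A bandwidth-based distribution of this kind can be sketched with a greedy least-loaded assignment. This is an illustration under assumptions, not the system's actual algorithm: the function names, link names, group names, and bandwidth values (in arbitrary units) are hypothetical.

```python
def assign_links(groups, links, bandwidth, default_bw):
    """Place each group, in join order, on the link with the least load."""
    load = {link: 0 for link in links}
    placement = {}
    for g in groups:
        link = min(links, key=lambda l: load[l])
        load[link] += bandwidth.get(g, default_bw)  # fall back to the default
        placement[g] = link
    return placement, load

def fail_link(placement, links, failed, bandwidth, default_bw):
    """Move only the groups from the failed link onto the surviving links."""
    survivors = [l for l in links if l != failed]
    load = {l: 0 for l in survivors}
    for g, l in placement.items():
        if l != failed:
            load[l] += bandwidth.get(g, default_bw)
    new_placement = dict(placement)
    for g, l in placement.items():
        if l == failed:
            target = min(survivors, key=lambda s: load[s])
            load[target] += bandwidth.get(g, default_bw)
            new_placement[g] = target
    return new_placement, load

bw = {"g1": 8, "g2": 4, "g3": 4}  # g4 has no configured bandwidth
placement, load = assign_links(["g1", "g2", "g3", "g4"], ["a", "b", "c"], bw, 2)
print(placement, load)
print(fail_link(placement, ["a", "b", "c"], "a", bw, 2))
```

Groups on the surviving links are left where they are, which keeps the disruption limited to the streams that were on the failed link.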

When an additional link is available for a specific multicast stream, it is considered in all multicast stream additions applied to the interface. This multicast stream is included in the next scheduled automatic rebalance run. A rebalance run re-evaluates the current balance with regard to bandwidth utilization and, if required, moves multicast streams to different links to achieve a balance.

A rebalance, whether timed or started by executing the mc-ecmp-rebalance command, should be administered gradually to minimize the effect of the rebalancing process on the different multicast streams. If multicast rebalancing is disabled and subsequently enabled, the same gradual and least invasive method is used to minimize the effect of the changes on the customer.

By default multicast load balancing over ECMP links is enabled and set at 30 minutes.

The rebalance process can be executed as a low priority background task while control of the console is returned to the operator. When multicast load rebalancing is not enabled, ECMP changes are not optimized; however, when a link is added, an attempt is made to balance the number of multicast streams on the available ECMP links. This may not result in balanced utilization of the ECMP links.

Only a single mc-ecmp-rebalance command can be executed at any specific time. If a rebalance is in progress and the command is entered, it is rejected with a message saying that a rebalance is already in progress. A low priority event is generated when an actual change for a specific multicast stream occurs as a result of the rebalance process.

IGMP state reporter

The target application for this feature is linear TV delivery. In some countries, wholesale service providers are obligated by government regulation to provide information about channel viewership per subscriber to retailers.

A service provider (wholesaler or retailer) may use this information for:

billing purposes

market research and data mining to gain insight into the most frequently watched channels, the duration of channel viewing, the frequency of channel zapping by time of day, and so on

The information about channel viewership is based on the IGMP states maintained for each subscriber host. Each event related to IGMP state creation is recorded and formatted by the IGMP process. The formatted event is then sent to another task in the system (the Exporter), which allocates a TX buffer and starts a timer.

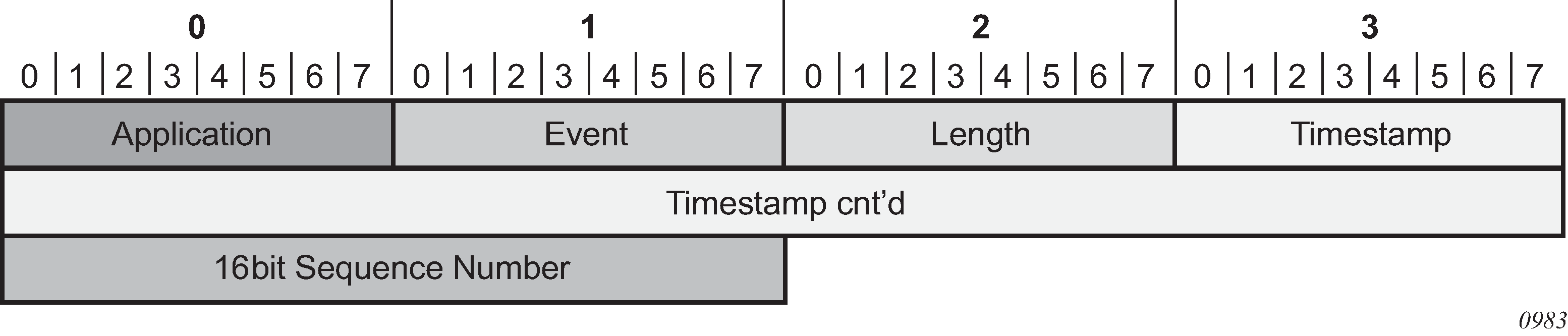

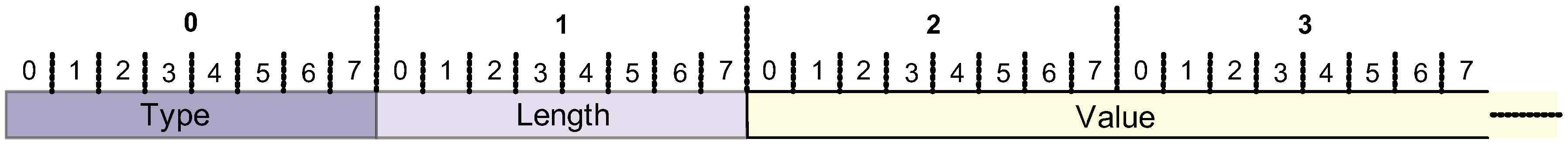

The event is then written by the Exporter into the buffer. The buffer in essence corresponds to the packet that contains a single event or a set of events. Those events are transported as data records over UDP to an external collector node. The packet itself has a header followed by a set of TLV type data structures, each describing a unique field within the IGMP event.

The packet is transmitted when it reaches a preconfigured size (1400 bytes), or when the timer expires, whichever comes first.

The receiving end (collector node) accepts the data on the destination UDP port. It must be aware of the data format so that it can interpret incoming data accordingly. The implementation details of the receiving node are outside of the scope of this description and are left to the network operator.

The IGMP state recording per subscriber host must be supported for hosts that are replicating multicast traffic directly as well as for those hosts that are only keeping track of IGMP states for HQoS adjustment purposes. The latter is implemented by redirection and not the Host Tracking (HT) feature as originally proposed. The IGMP reporting must differentiate events between direct replication and redirection.

It further distinguishes events that are related to denial of IGMP state creation (because of filters, MCAC failure, and so on) from the ones that are related to removal of an already existing IGMP state in the system.

IGMP data records

Each IGMP state change generates a data record that is formatted by the IGMP task and written into the buffer. IGMP state transitions configured statically through CLI are not reported.

To minimize the size of the records when transported over the network, most fields in the data record are HEX coded (as opposed to ASCII descriptive strings).

Each data record has a common header as shown in Common IGMP data record header:

Application:

0x01 - IGMP

0x02 - IGMP Host Tracking

Event:

Related to denial of a new state creation:

0x01 - Join

0x02 - (Join_Deny_Filter) Join denied because of filtering by an import policy

0x03 - (Join_Deny_CAC) Join denied because of MCAC

0x04 - (Join_Deny_MaxGrps) Join denied because of maximum groups per host limit reached

0x05 - (Join_Deny_MaxSrcs) Join denied because of maximum sources limit reached

0x06 - (Join_Deny_SysErr) Join denied because of an internal error (for example: out of memory)

Related to removal of an existing IGMP state:

0x07 - (Drop_Leave_Rx) IGMP state is removed because of the Leave message

0x08 - (Drop_Expiry) IGMP state is removed because of the time out (by default 2*query_interval + query_response_interval = 260 sec)

0x09 - (Drop_Filter) IGMP state is removed because of the filter (import policy) change

0x0A - (Drop_CfgChange) IGMP state is removed because of a configuration change (clear grp, intf shutdown, PPPoE session goes unexpectedly down)

0x0B - (Drop_CAC) an existing stream is stopped because of a configuration change in MCAC

Length: The length of the entire data record (including the header and TLVs) in octets.

16 bit Sequence Number

Because IGMP Reporting is based on connectionless transport (UDP), a 16 bit sequence number is used in each data record so that data loss in the network can be tracked.

The 16 bit sequence number is located after the time stamp field. The sequence number increases sequentially from 0 to 65535 and then rolls over back to 0.
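A collector could use the rollover behavior described above to estimate how many records were lost between two arrivals. The following is a minimal sketch, not part of the product; the function name is hypothetical, and it assumes records arrive in order and without duplicates.

```python
SEQ_MODULO = 1 << 16  # 16 bit sequence space: 0..65535


def lost_records(prev_seq: int, curr_seq: int) -> int:
    """Return how many records were skipped between two consecutive arrivals.

    Modular arithmetic handles the rollover from 65535 back to 0.
    Assumption: in-order delivery. A duplicate or reordered record
    would show up as a very large gap and needs separate handling.
    """
    return (curr_seq - prev_seq - 1) % SEQ_MODULO


if __name__ == "__main__":
    print(lost_records(10, 11))      # consecutive records, nothing lost
    print(lost_records(65535, 0))    # rollover, nothing lost
    print(lost_records(65534, 1))    # records 65535 and 0 are missing
```

Because the sequence space is only 16 bits wide, a gap of 65536 or more records cannot be distinguished from a smaller one.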

Timestamp: The timestamp is in UNIX format (32 bit integer in seconds since 01/01/1970) plus an extra 8 bits for 10msec resolution.
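On the collector side, the event codes of the common header can be kept in a small lookup structure. The sketch below is illustrative only: the enum and function names are hypothetical, and treating 0x01 (Join) as its own category, separate from the denial codes, is an assumption made for readability.

```python
from enum import IntEnum


class IgmpEvent(IntEnum):
    """Event codes as listed in the common data record header."""
    JOIN = 0x01
    JOIN_DENY_FILTER = 0x02
    JOIN_DENY_CAC = 0x03
    JOIN_DENY_MAXGRPS = 0x04
    JOIN_DENY_MAXSRCS = 0x05
    JOIN_DENY_SYSERR = 0x06
    DROP_LEAVE_RX = 0x07
    DROP_EXPIRY = 0x08
    DROP_FILTER = 0x09
    DROP_CFGCHANGE = 0x0A
    DROP_CAC = 0x0B


def event_category(code: int) -> str:
    """Classify an event code; raises ValueError for unknown codes."""
    event = IgmpEvent(code)
    if event is IgmpEvent.JOIN:
        return "join"
    if IgmpEvent.JOIN_DENY_FILTER <= event <= IgmpEvent.JOIN_DENY_SYSERR:
        return "denied"   # a new state was not created
    return "removed"      # an existing state was removed


if __name__ == "__main__":
    for code in (0x01, 0x03, 0x08):
        print(hex(code), IgmpEvent(code).name, event_category(code))
```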

The TLVs describing the IGMP state record have the structure shown in Data record field TLV structure. The data record fields are described in Data record field description:

| Type | Description | Encoding/length | Mandatory/optional |
|---|---|---|---|
| 0x02 | Subscriber ID | ASCII | M |
| 0x03 | Sub Host IP | 4 Bytes IPv4 | M |
| 0x04 | Mcast Group IP | 4 Bytes IPv4 | M |
| 0x05 | Mcast Source IP | 4 Bytes IPv4 | M |
| 0x06 | Host MAC | 6 Bytes | M |
| 0x07 | PPPoE Session-ID | 2 Bytes | M |
| 0x08 | Service ID | 4 Bytes | M |
| 0x09 | SAP ID | ASCII | M |
| 0x0A | Redirection vRtrId | 4 Bytes | M |
| 0x0B | Redirection ifIndex | 4 Bytes | M |

The redirection destination TLV is a mandatory TLV that is sent only in cases where redirection is enabled. It contains two 32 bit integer numbers. The first number identifies the VRF where IGMPs are redirected; the second number identifies the interface index.

Optional fields can be included in the data records according to the configuration.

In IGMPv3, if an IGMP message (Join or Leave) contains multiple multicast groups or a multicast group contains multiple IP sources, only a single event is generated per group-source combination. In other words, data records are transmitted with a single source IP address and multiple multicast group addresses or a single multicast group address with multiple source IP addresses, depending on the content of the IGMP message.

Transport mechanism

Data is transported by a UDP socket. The destination IP address, the destination port and the source IP address are configurable. The default UDP source and destination port number is 1037.

Upon the arrival of an IGMP event, the Exporter allocates a buffer for the packet (if not already allocated) and starts writing the events into the buffer (packet). Along with the initial buffer creation, a timer is started. The trigger for the transmission of the packet is either the TX buffer being filled up to 1400B (hard coded value), or the timer expiry, whichever comes first.
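The two transmission triggers described above (buffer fill level or timer expiry, whichever comes first) can be sketched as follows. This is only an illustration of the logic, not the Exporter implementation: the class name is hypothetical, the `sent` list stands in for the UDP socket, and the timer value is an assumption because the text does not state it.

```python
from typing import List, Optional

MAX_PACKET_BYTES = 1400  # hard coded fill threshold from the text
FLUSH_TIMEOUT_S = 1.0    # hypothetical value; the text does not give the timer


class EventBuffer:
    """Collects event records and transmits on fill or timer expiry."""

    def __init__(self) -> None:
        self.records: List[bytes] = []
        self.size = 0
        self.started_at: Optional[float] = None
        self.sent: List[bytes] = []  # stands in for the UDP socket

    def add(self, record: bytes, now: float) -> None:
        # Transmit first if this record would overflow the packet.
        if self.records and self.size + len(record) > MAX_PACKET_BYTES:
            self.flush()
        if not self.records:
            self.started_at = now  # timer starts with buffer creation
        self.records.append(record)
        self.size += len(record)
        if self.size >= MAX_PACKET_BYTES:
            self.flush()

    def tick(self, now: float) -> None:
        # Called periodically; transmits a partially filled buffer on expiry.
        if self.records and now - self.started_at >= FLUSH_TIMEOUT_S:
            self.flush()

    def flush(self) -> None:
        if self.records:
            self.sent.append(b"".join(self.records))
        self.records, self.size, self.started_at = [], 0, None


if __name__ == "__main__":
    buf = EventBuffer()
    buf.add(b"x" * 100, now=0.0)
    buf.tick(now=1.0)            # timer expiry sends the 100 byte packet
    print(len(buf.sent))
```

Passing the current time in as a parameter keeps the sketch deterministic and easy to test without real timers.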

The source IP address is configurable within the GRT (by default, the system IP address), and the destination IP address must be reachable through the GRT. The source IP address is modified in the system>security>source-address>application CLI hierarchy.

The receiving end (the collector node) collects the data and processes it according to the formatting rules defined in this document. The capturing and processing of the data on the collector node is outside of the context of this description.

The processing node should have sufficient resources to accept and process packets that contain information about every IGMP state change for every host from a set of network BRASes that are transporting data to this collector node.

Multicast Reporter traffic is marked as BE (all 6 DSCP bits are set to 0) when exiting the system.

HA compliance

IGMP events are synchronized between two CPMs before they are transported out of the system.

QoS awareness

IGMP Reporter is a client of sgt-qos so that DSCP or dot1p bits can be appropriately marked when egressing the system.

IGMP reporting restrictions

The following are not supported:

Regular (non-subscriber) interfaces

SAM support as the collector device

Multicast support over subscriber interfaces in Routed CO model

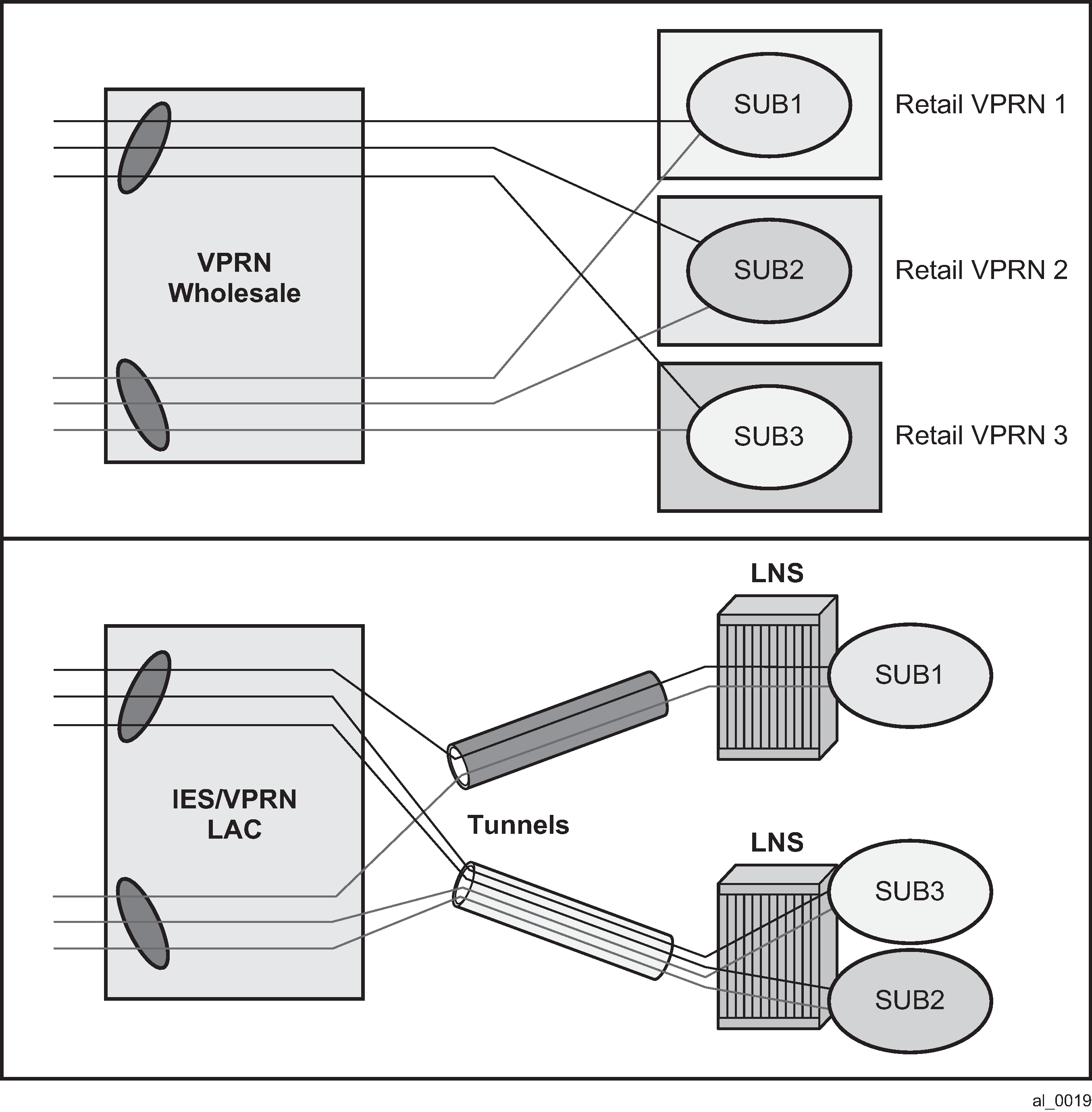

Applications for multicast over subscriber interfaces in Routed CO ESM model can be divided in two main categories:

Residential customers where the driver applications are:

IPTV in an environment with legacy non-multicasting DSLAMs

Internet multicast where users connect to a multicast stream sourced from the Internet.

For the business customers, the main drivers are enterprise multicast and Internet multicast applications.

On multicast-capable ANs, a single copy of each multicast stream is delivered over a separate regular IP interface. AN would then perform the replication. This is how multicast would be deployed in Routed CO environment with the 7750 SR and 7450 ESS.

On legacy, non-multicast ANs, or in environments with low volume multicast traffic where it is not worth setting up a separate multicast topology (from BNG to AN), multicast replication is performed with subscriber-interfaces in the 7750 SR and 7450 ESS. There are differences in replicating multicast traffic on IPoE and PPPoX, which are described in subsequent sections.

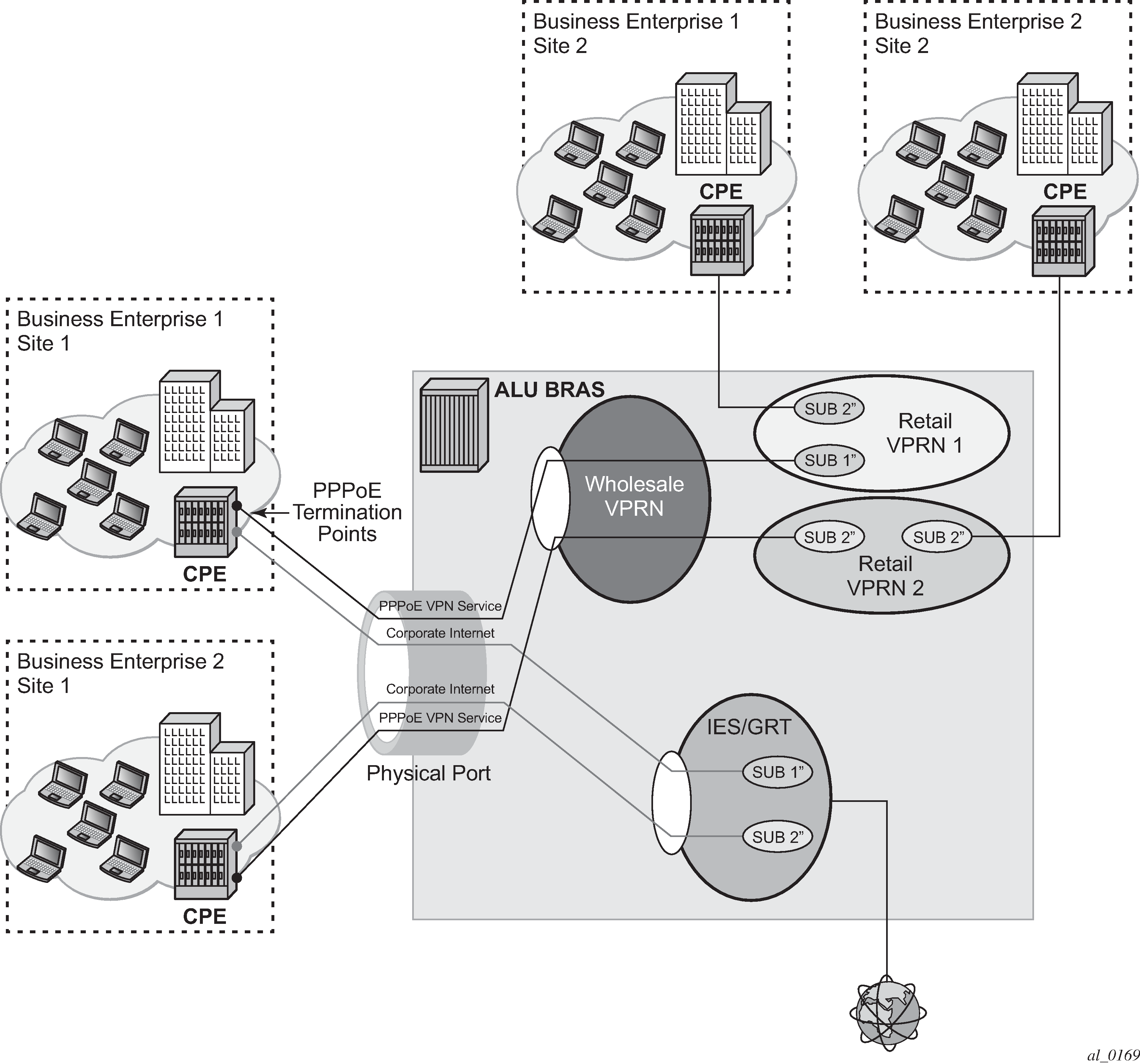

An example of a business connectivity model is shown in Typical business connectivity model.

In this example, HSI is terminated in a Global Routing Table (GRT) whereas VPRN services are terminated in Wholesale/Retail VPRN method, with each customer using a separate VPRN.

The actual connectivity model that is deployed depends on many operational aspects that are present in the customer environment.

Multicast over subscriber-interfaces in a Routed CO model is supported for both types of hosts, IPoE and PPPoE, which can be simultaneously enabled on a shared SAP.

There are some fundamental differences in multicast behavior between the two host types (IPoE and PPPoX). The differences are discussed further in the next sections.

Multicast over IPoE

There are several deployment scenarios for delivering multicast directly over subscriber hosts:

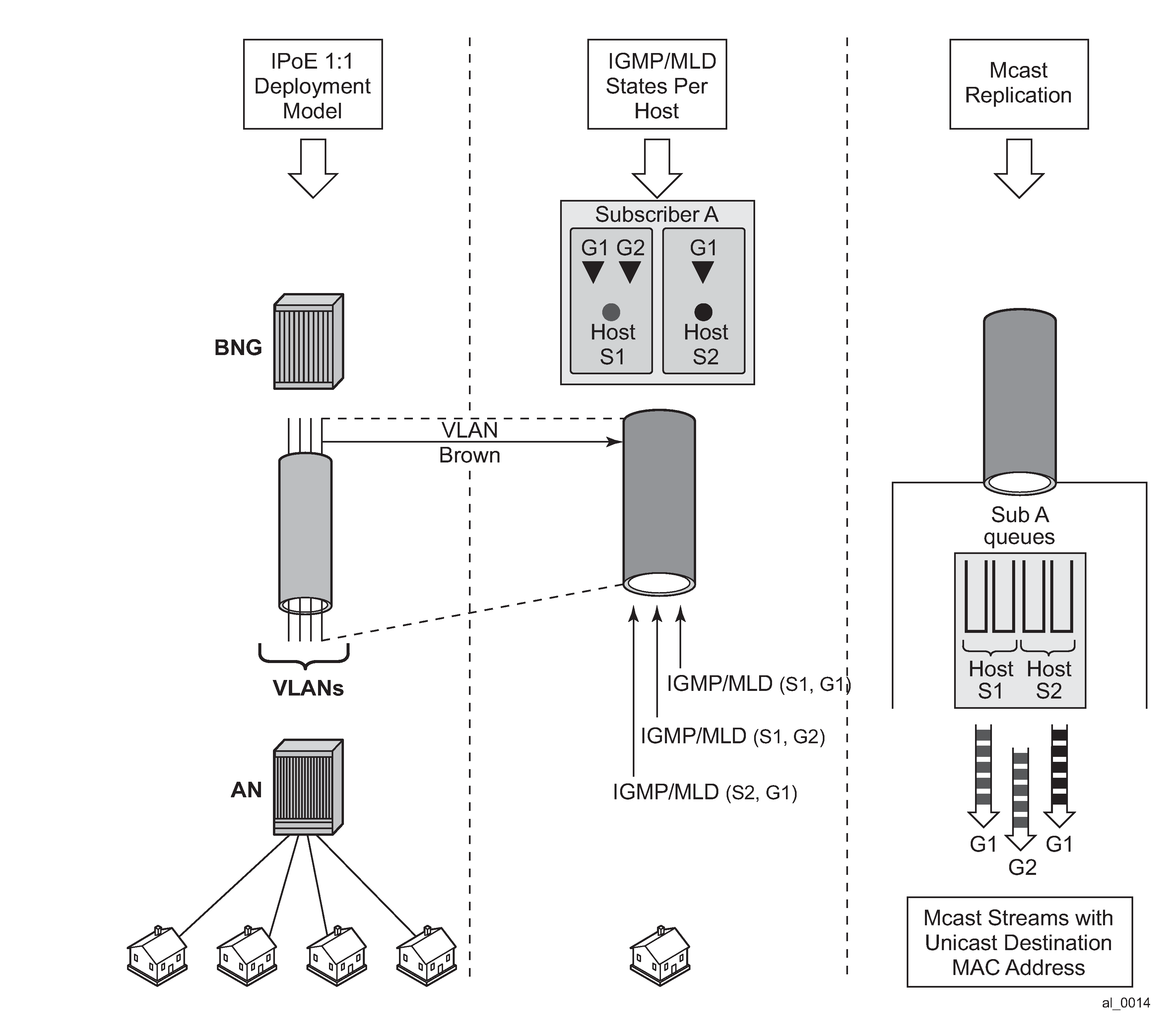

1:1 model (subscriber per VLAN/SAP) with the Access Node (AN) that is not IGMP/MLD aware.

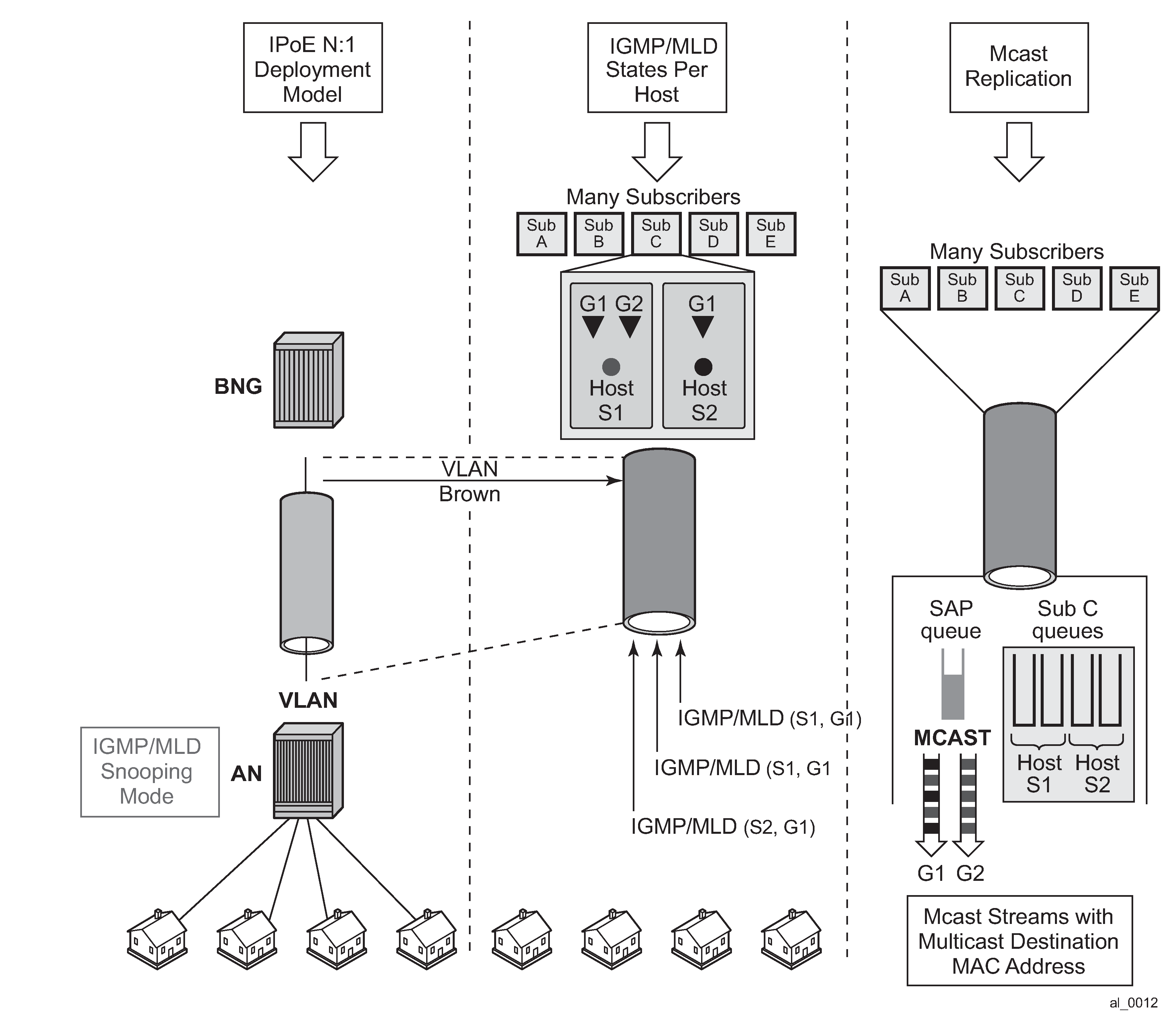

N:1 model (service per VLAN/SAP) with the AN in the Snooping mode.

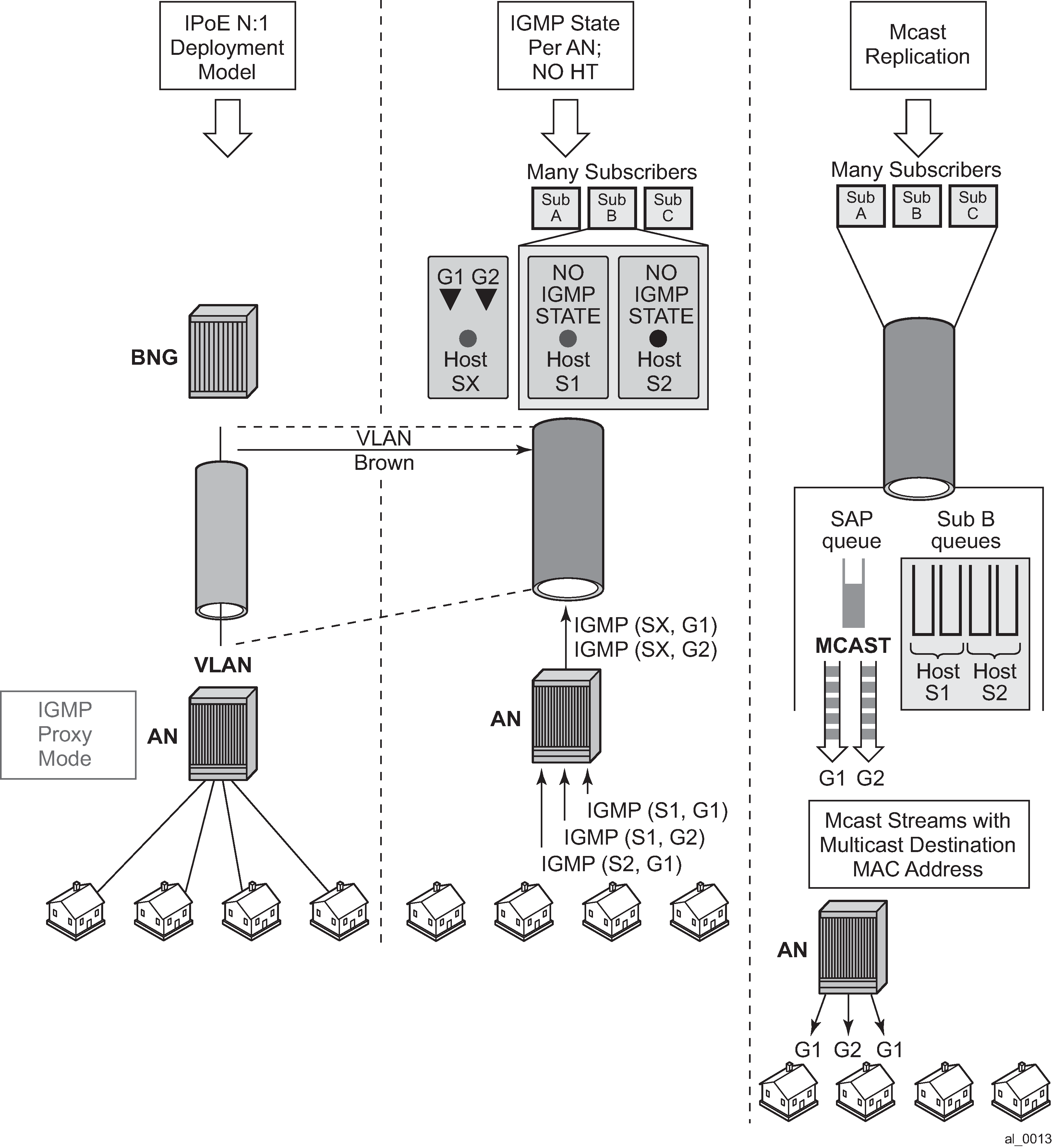

N:1 model with the AN in the Proxy mode.

N:1 model with the AN that is not IGMP/MLD aware.

There are two modes of operation for subscriber multicast that can be chosen to address the above mentioned deployment scenarios:

Per SAP replication — A single multicast stream per group is forwarded on any given SAP. Even if the SAP has a multicast group (channel) that is registered to multiple hosts, only a single copy of the multicast stream is forwarded over this SAP. The multicast stream has a multicast destination MAC address (as opposed to unicast). IGMP/MLD states are maintained per host. This is the default mode of operation.

Per subscriber host replication: in this mode of operation, multicast is replicated per subscriber host, even if this means that multiple copies of the same stream are forwarded over the same SAP. For example, if two hosts on the same SAP are registered to receive the same multicast group (channel), then this multicast channel is replicated twice on the same SAP. Each stream has a unique unicast destination MAC address (otherwise it would not make sense to replicate the streams twice).
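The difference between the two modes can be illustrated by counting the stream copies forwarded on one SAP. The following is a minimal sketch under stated assumptions: the function name and the host and group values are hypothetical, and each host is modeled simply as a set of group addresses.

```python
from typing import Dict, Set


def copies_on_sap(host_groups: Dict[str, Set[str]], per_host: bool) -> int:
    """Count multicast stream copies forwarded over a single SAP.

    per_host=False models per SAP replication: one copy per unique group.
    per_host=True models per subscriber host replication: one copy per
    (host, group) registration.
    """
    if per_host:
        return sum(len(groups) for groups in host_groups.values())
    return len(set().union(*host_groups.values()))


if __name__ == "__main__":
    hosts = {
        "host-1": {"239.1.1.1", "239.1.1.2"},
        "host-2": {"239.1.1.1"},
    }
    print(copies_on_sap(hosts, per_host=False))  # unique groups on the SAP
    print(copies_on_sap(hosts, per_host=True))   # one copy per registration
```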

In all deployment scenarios and modes of operation, the IGMP/MLD states are maintained per source IP address of the incoming IGMP/MLD message. This source IP address might represent a subscriber host or the AN (proxy mode).

For MLD, the source IP address is the host link-local address. Therefore, the MLD message is associated with all IP addresses and prefixes of the host.

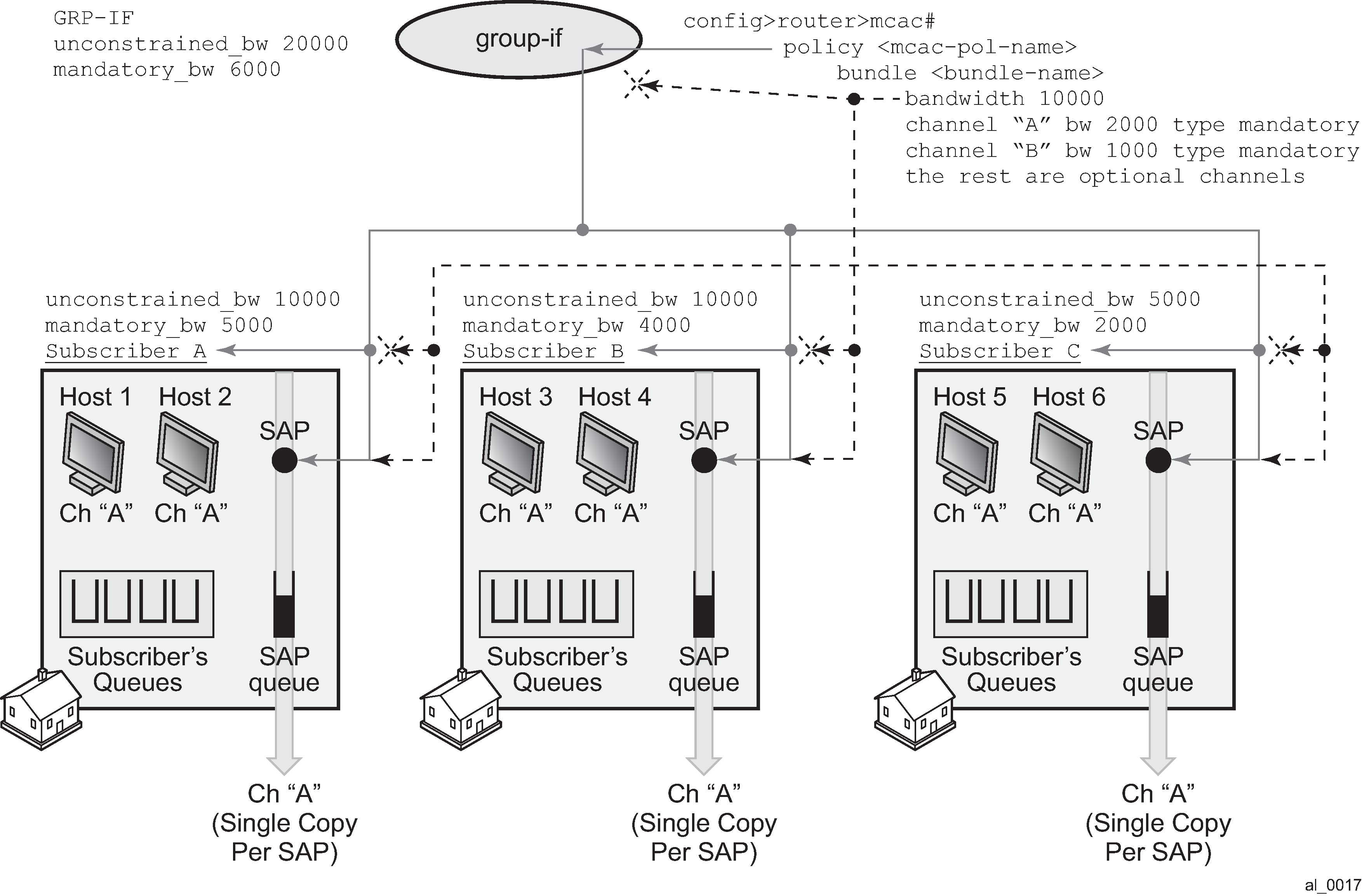

Per SAP replication mode

In the per SAP replication mode, a single copy of the multicast channel is forwarded per SAP. In other words, if a subscriber (in 1:1 mode) or a group of subscribers (in N:1 mode) has multiple hosts and all of them are subscribed to the same multicast group (watching the same channel), then only a single copy of the multicast stream for that group is sent. The destination MAC address is always a multicast MAC (there is no conversion to a unicast MAC address).

IGMP/MLD states are maintained per subscriber host and per SAP.

Per SAP queue

Multicast traffic over subscribers in a per-SAP replication mode flows through a SAP queue which is outside of the subscriber queues context. Sending the multicast traffic over the default SAP queue is characterized by:

the inability to classify multicast traffic into separate subscriber queues and therefore include it natively in the subscriber HQoS hierarchy; however, multicast traffic can be classified into specific (M-)SAP queues, assuming that such queues are enabled by (M-)SAP based QoS policy

redirection of multicast traffic by internal queues in case the SAP queue in subscriber environment is disabled (sub-sla-mgmt>single-sub-parameters>profiled-traffic-only); this is applicable only to 1:1 subscriber model

a possible necessity for HQoS Adjustment as multicast traffic is flowing outside of the subscriber queues

de-coupling of the multicast forwarding statistics from the overall subscriber forwarding statistics obtained by subscriber specific show commands

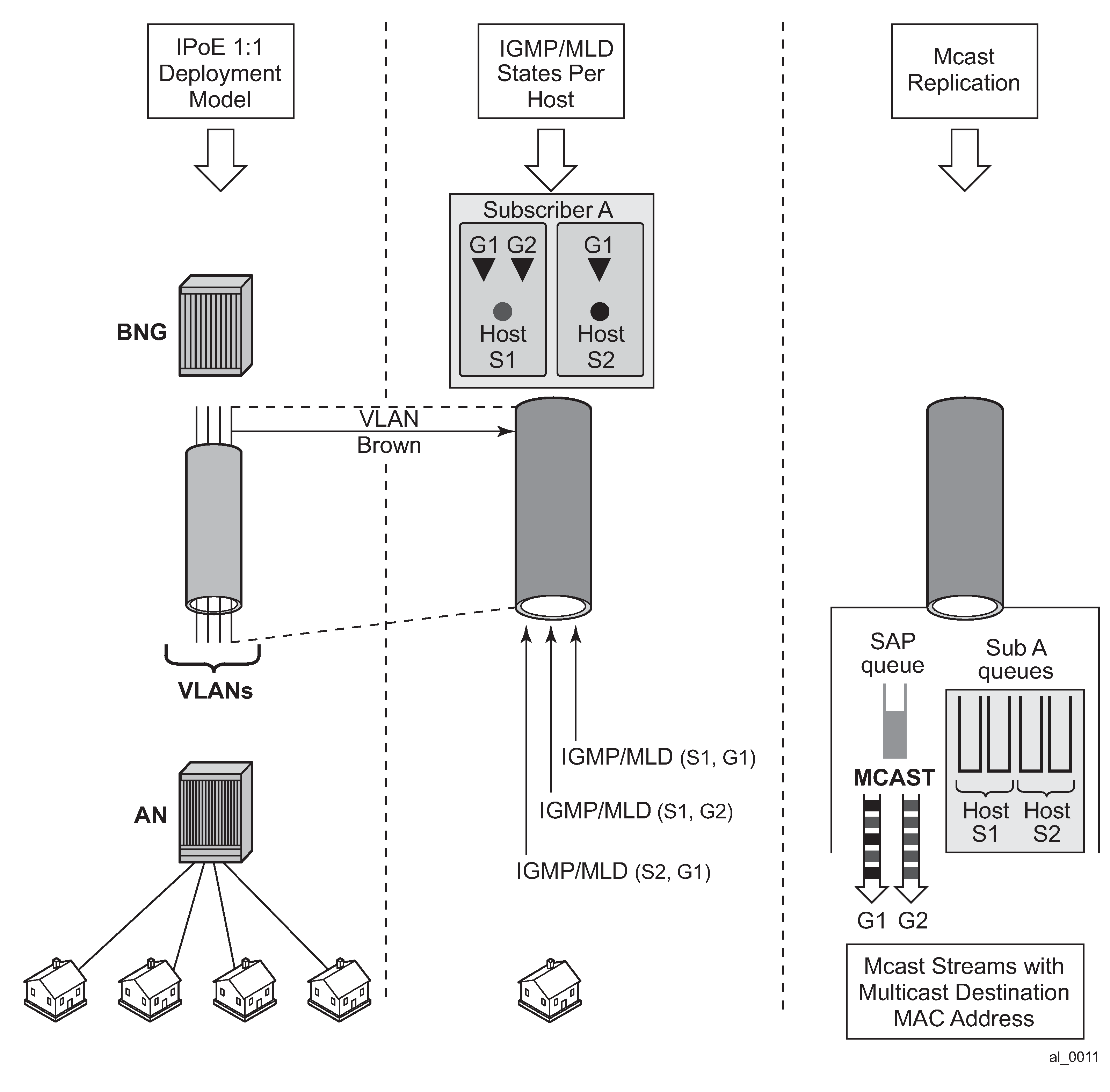

IPoE 1:1 model (subscriber per VLAN/SAP) — no IGMP/MLD in AN

The AN is not IGMP/MLD aware, so all replications are performed in the BNG. From the BNG perspective this deployment model has the following characteristics:

IGMP/MLD states are kept per hosts and SAPs. Each host can be registered to more than one group.

MLD uses the link-local address as the source address, which makes it difficult to associate a message with the originating host. MLD (S,G) states are always first associated with an IPv6 WAN host, if available. If this WAN host terminates its IPv6 session (by lease expiry, session termination, and so on), the (S,G) is then associated with any remaining WAN host. Only when there are no more WAN hosts available is the (S,G) associated with the PD host, if any.

IGMP/MLD Joins are accepted only from the active subscriber hosts as dictated by antispoofing.

IGMP/MLD statistics can be displayed per host or per group.

Multicast traffic for the subscriber is forwarded through the egress SAP queue. In case that the SAP queue is disabled (profiled-traffic-only command), multicast traffic flows through internal queues outside of the subscriber context.

A single copy of any multicast stream is generated per SAP. This can be viewed as replication per unique multicast group per SAP, instead of the replication per host. In other words, the number of multicast streams on this SAP is equal to the number of unique groups across all hosts on this SAP (subscriber).

Traffic statistics are kept per the SAP queue. Consequently multicast traffic stats are shown outside of the subscriber context.

HQoS adjustment may be necessary.

Traffic cannot be explicitly classified (forwarding classes and queue mappings) inside of the subscriber queues.

Redirection to the common multicast VLAN (or Layer 3 interface) is supported.

Multicast streams have multicast destination MAC.

MLD uses the link-local address as the source address, which makes it difficult to associate a message with the originating host. The MLD (S,G) is associated with the IPv6 host. Therefore, if any WAN host or PD host ends its IPv6 session (by lease expiry, and so on), the (S,G) is associated with the remaining host address or prefix. The (S,G) is delivered to the subscriber as long as an IPv6 address or prefix remains.
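The WAN-first association rule for MLD (S,G) states in the list above can be sketched as a simple selection function. This is an illustration only; the type, the field names, and the "wan"/"pd" labels are hypothetical and do not correspond to any system data structure.

```python
from typing import List, NamedTuple, Optional


class V6Host(NamedTuple):
    name: str
    kind: str  # "wan" or "pd" (hypothetical labels)


def pick_host_for_sg(active_hosts: List[V6Host]) -> Optional[V6Host]:
    """Pick the host an MLD (S,G) is associated with.

    A WAN host is preferred; a PD host is used only when no WAN host
    remains. Returns None when the subscriber has no IPv6 host left.
    """
    for kind in ("wan", "pd"):
        for host in active_hosts:
            if host.kind == kind:
                return host
    return None


if __name__ == "__main__":
    hosts = [V6Host("pd-1", "pd"), V6Host("wan-1", "wan"), V6Host("wan-2", "wan")]
    print(pick_host_for_sg(hosts).name)      # a WAN host is chosen first
    print(pick_host_for_sg(hosts[:1]).name)  # only the PD host remains
```

Re-running the selection whenever a host session ends reproduces the fallback sequence: first WAN host, then any remaining WAN host, then the PD host.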

This model is shown in 1:1 model.

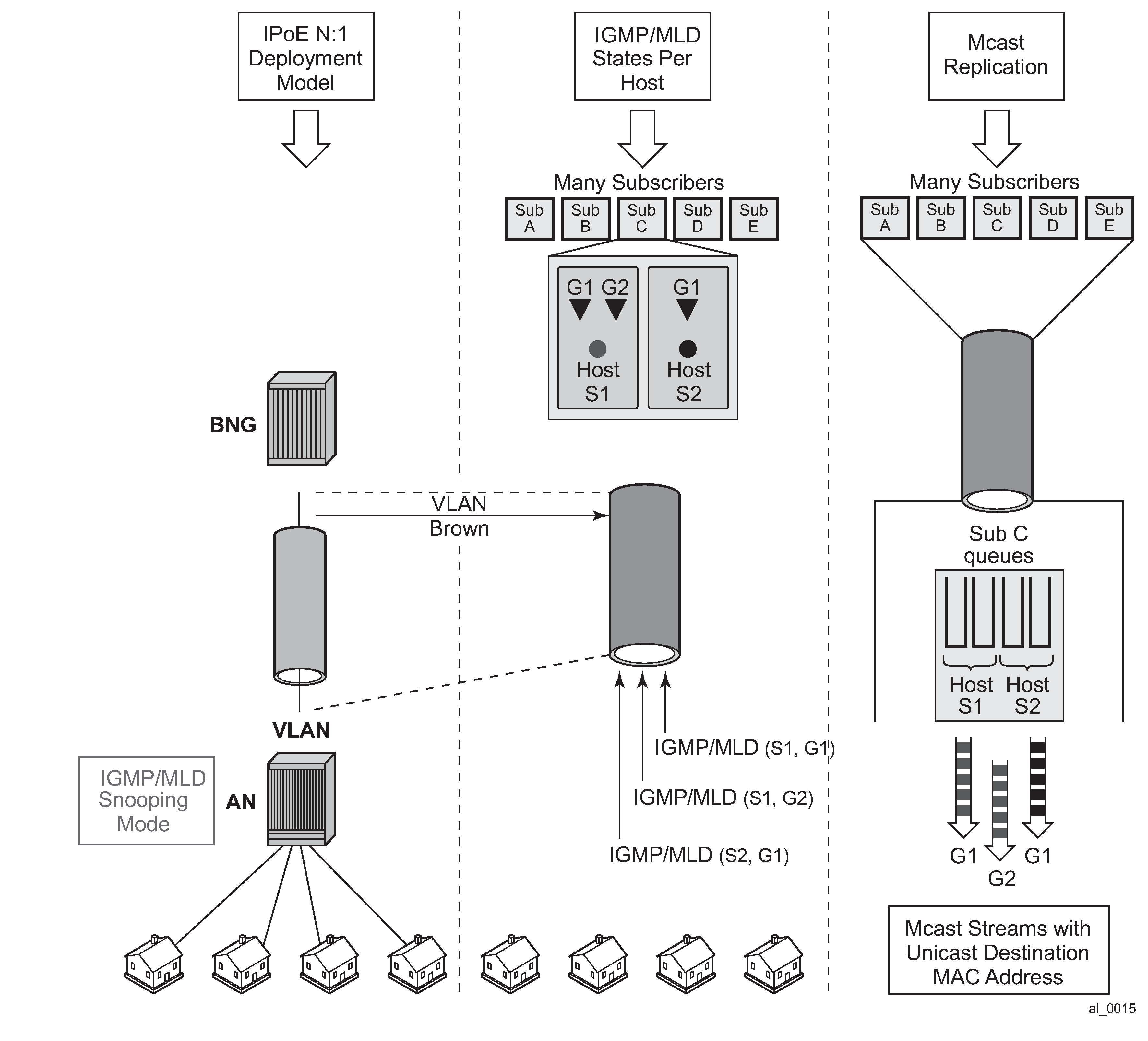

IPoE N:1 model (service per VLAN/SAP) — IGMP/MLD snooping in the AN

The AN is IGMP/MLD aware and is participating in multicast replication. From the BNG perspective this deployment model has the following characteristics:

IGMP/MLD states are kept per hosts and SAPs. Each host can be registered to more than one group.

IGMP/MLD Joins are accepted only from the active subscriber hosts as dictated by antispoofing.

IGMP/MLD statistics are displayed per host, per group or per subscriber.

Multicast traffic for all subscribers on this SAP is forwarded through the egress SAP queues.

A single copy of any multicast stream is generated per SAP. This can be viewed as the replication per unique multicast group per SAP, instead of the replication per host or subscriber. In other words, the number of multicast streams on this SAP is equal to the number of unique groups across all hosts and subscribers on this SAP.

The AN receives a single multicast stream and based on its own (AN) IGMP/MLD snooping information, it replicates the mcast stream to the appropriate subscribers.

Traffic statistics are kept per the SAP queue. Consequently multicast traffic stats are shown on a per SAP basis (aggregate of all subscribers on this SAP).

Traffic cannot be explicitly classified (forwarding classes and queue mappings) inside of the subscriber queues.

Redirection to the common multicast VLAN is supported.

Multicast streams have multicast destination MAC.

IGMP Joins are accepted (src IP address) only for the sub hosts that are already created in the system. IGMP Joins coming from the hosts that are nonexistent in the system are rejected, unless this functionality is explicitly enabled by the sub-hosts-only command under the IGMP group-int CLI hierarchy level.

MLD uses the link-local address as the source address, which makes it difficult to associate a message with the originating host. MLD (S,G) states are always first associated with an IPv6 WAN host, if available. If this WAN host terminates its IPv6 session (by lease expiry, session termination, and so on), the (S,G) is then associated with any remaining WAN host. Only when there are no more WAN hosts available is the (S,G) associated with the PD host, if any.

MLD Joins are only accepted if the source address matches the subscriber host link-local address. MLD Joins coming from hosts that are nonexistent in the system are rejected, unless this functionality is explicitly enabled by the sub-hosts-only command under the MLD group-int CLI hierarchy level.

This model is shown in N:1 model - AN in IGMP snooping mode.

Figure 7. N:1 model - AN in IGMP snooping mode

IPoE N:1 model (service per VLAN/SAP) — IGMP/MLD proxy in the AN

The AN is configured as IGMP/MLD Proxy node and is participating in downstream multicast replication.

For IPv4, IGMP messages from multiple sources (subscriber hosts) for the same multicast group are consolidated in the AN into a single IGMP message. This single IGMP message has the source IP address of the AN.

For IPv6, MLD messages from multiple sources (subscriber hosts) for the same multicast group are consolidated in the AN into a single MLD message. This single MLD message has the link-local IP address of the AN.

From the BNG perspective this deployment model has the following characteristics:

Subscriber IGMP/MLD states are maintained in the AN.

IGMP Joins are accepted from the source IP address that is different from any of the subscriber’s IP addresses already existing in the BNG. This is controlled by an IGMP filter on a per group-interface level assuming that the IGMP processing for subscriber hosts is disabled with the no sub-hosts-only command under the router/service vprn>igmp>group-interface CLI hierarchy. In this case all IGMP messages that cannot be related to existing hosts are treated in the context of the SAP while IGMP messages from the existing hosts are treated in the context of the subscriber hosts.

MLD Joins are only accepted if the link-local address matches the subscriber's link-local address. To allow processing of a foreign link-local address, such as the AN link-local address, the MLD processing for subscriber hosts should be disabled with the no sub-hosts-only command under the router/service vprn>mld>group-interface CLI hierarchy. In this case, all MLD messages that cannot be related to existing hosts are treated in the context of the SAP, while MLD messages from the existing hosts are treated in the context of the subscriber hosts.

IGMP/MLD statistics can be displayed per group-interface.

Multicast traffic for all subscribers on this SAP is forwarded through the egress SAP queue.

A single copy of any multicast stream is generated per SAP.

The AN receives a single multicast stream. Based on the IGMP/MLD proxy information, the AN replicates the mcast stream to the appropriate subscribers.

Traffic statistics are maintained per SAP queue.

HQoS Adjustment is not useful because the per host/subscriber IGMP/MLD granularity is lost. IGMP/MLD states are aggregated per AN.

Traffic can be explicitly classified into specific SAP queues by a QoS policy applied under the SAP.

Multicast streams have multicast destination MAC.

In the following example, IGMP messages from the source IP address <ip> are accepted even though there is no subscriber host with that IP address present in the system. An IGMP state is created under the SAP context (service per VLAN, or N:1 model) for the group pref-definition. All other IGMP messages originating from non-subscriber hosts are rejected. IGMP messages for subscriber hosts are processed according to the IGMP policy applied to each subscriber host.

configure

service vprn <id>

igmp

group-interface <ip-int-name>

import <policy-name>

configure

router

policy-options

begin

prefix-list <pref-name>

prefix <pref-definition>

policy-statement proxy-policy

entry 1

from

group-address <pref-name>

source-address <ip>

protocol igmp

exit

action accept

exit

exit

default-action reject

This functionality (accepting IGMP from non-subscriber hosts) can be disabled with the following flag.

configure

service vprn <id>

igmp

group-interface <ip-int-name>

sub-hosts-only

In this case, only per host IGMP processing is allowed.
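The acceptance logic described in this example can be summarized in a short decision function. The sketch below is an approximation for illustration, not the router's policy engine: the function name, the return labels, and the addresses are hypothetical, and the per-host IGMP policy that applies to known subscriber hosts is not modeled.

```python
import ipaddress
from typing import Set


def classify_igmp_join(src: str, group: str, subscriber_hosts: Set[str],
                       proxy_src: str, allowed_groups: str,
                       sub_hosts_only: bool) -> str:
    """Return the context in which a Join is processed, or "reject".

    "host": the source is a known subscriber host (its own IGMP policy
            would then apply; not modeled here).
    "sap":  the source is not a subscriber host but matches the import
            policy (source address and group prefix).
    """
    if src in subscriber_hosts:
        return "host"
    if sub_hosts_only:
        return "reject"  # only per host IGMP processing is allowed
    group_ok = ipaddress.ip_address(group) in ipaddress.ip_network(allowed_groups)
    if src == proxy_src and group_ok:
        return "sap"
    return "reject"      # default-action reject


if __name__ == "__main__":
    hosts = {"10.0.0.5"}
    print(classify_igmp_join("192.0.2.1", "239.1.1.1", hosts,
                             "192.0.2.1", "239.1.0.0/16", False))
    print(classify_igmp_join("192.0.2.1", "239.1.1.1", hosts,
                             "192.0.2.1", "239.1.0.0/16", True))
```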

In the following example, MLD messages with a foreign link-local address are accepted even though there is no subscriber host with that link-local address present in the system. An MLD state is created under the SAP context (service per VLAN, or N:1 model) for the group pref-definition. All other MLD messages originating from non-subscriber hosts are rejected. MLD messages for subscriber hosts are processed according to the MLD policy applied to each subscriber host.

configure

service vprn <id>

mld

group-interface <ip-int-name>

import <policy-name>

configure

router

policy-options

begin

prefix-list <pref-name>

prefix <pref-definition>

policy-statement proxy-policy

entry 1

from

group-address <pref-name>

source-address <ip>

protocol igmp

exit

action accept

exit

exit

default-action reject

This functionality (accepting MLD from non-subscriber hosts) can be disabled with the following flag.

configure

service vprn <id>

mld

group-interface <ip-int-name>

sub-hosts-only

In this case, only per host MLD processing is allowed.

This model is shown in N:1 model - AN in proxy mode.

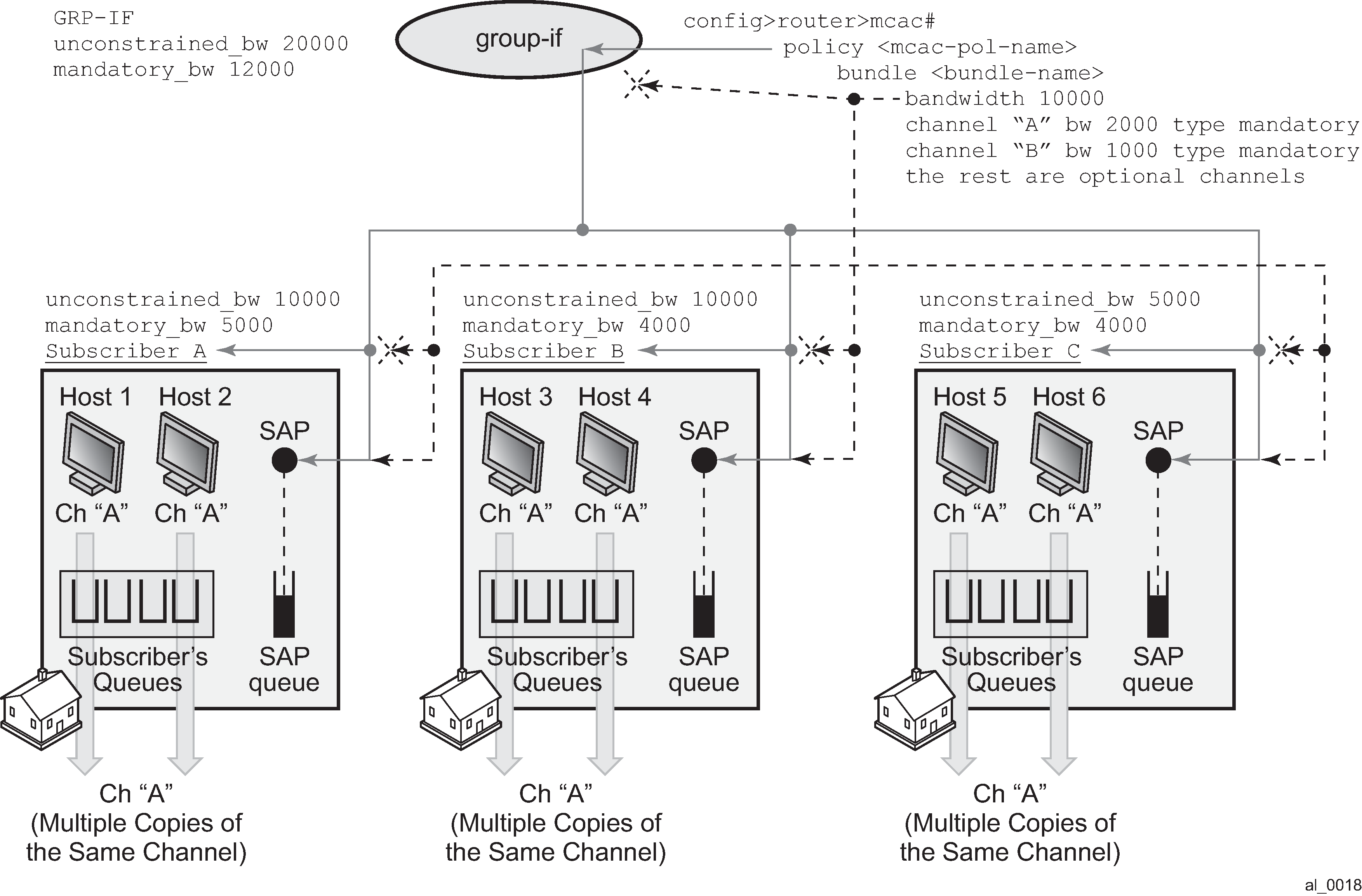

Per subscriber host replication mode

In this mode, a multicast stream is transmitted per subscriber host for each registered multicast group (channel). As a result, multiple copies of the same multicast stream destined for different destinations can be transmitted over the same SAP. In this case, traffic flows within the subscriber queues and is consequently accounted for in HQoS. As a result, HQoS Adjustment is not needed. Each copy of the same multicast stream has a unique unicast destination MAC address. The per host unicast destination MAC addresses are necessary to differentiate multiple copies between different receivers on the same SAP.

Per host replication mode can be enabled on a subscriber basis with the per-host-replication command in the config>subscr-mgmt>igmp-policy context.

For IPv6, the command is in the config>subscr-mgmt>mld-policy context.

IPoE 1:1 model (subscriber per VLAN/SAP) — no IGMP/MLD in AN

The AN is not IGMP/MLD aware and multicast replication is performed in the BNG. Multicast streams are sent directly to the hosts using their unicast MAC addresses. HQoS adjustment is not needed as multicast traffic is flowing through subscriber queues. From the BNG perspective this deployment model has the following characteristics:

IGMP/MLD states are kept per hosts. Each host can be registered to multiple IGMP/MLD groups.

MLD uses the link-local address as the source address, which makes it difficult to associate a message with the originating host. MLD (S,G) states are always first associated with an IPv6 WAN host, if available. If this WAN host terminates its IPv6 session (by lease expiry, session termination, and so on), the (S,G) is then associated with any remaining WAN host. Only when there are no more WAN hosts available is the (S,G) associated with the PD host, if any.

IGMP/MLD Joins are accepted only from the active subscriber hosts. In other words antispoofing is in effect for IGMP/MLD messages.

IGMP/MLD statistics can be displayed per host, per group or per subscriber.

Multicast traffic is forwarded through subscriber queues using unicast destination MAC address of the destination host.

Multiple copies of the same multicast stream can be generated per SAP. The number of copies depends on the number of hosts on the SAP that are registered to the same multicast group (channel). In other words, the number of multicast streams on the SAP is equal to the number of groups registered across all hosts on this SAP.

Traffic statistics are kept per the host queue. In case that multicast statistics need to be separated from unicast, the multicast traffic should be classified in a separate subscriber queue.

HQoS Adjustment is not needed as traffic is flowing within the subscriber queues and is automatically accounted in HQoS.

Multicast traffic can be explicitly classified into forwarding classes and consequently directed into needed queues.

MCAC is supported.

The profiled-traffic-only mode defined under sub-sla-mgmt is supported. This mode (profiled-traffic-only) is used to reduce the number of queues in the 1:1 model (sub-sla-mgmt-> no multisub-SAP) by preventing the creation of the SAP queues. Because multicast traffic is not using the SAP queue, enabling this feature does not have any effect on the multicast operation.

This model is shown in 1:1 model.

IPoE N:1 model (service per VLAN/SAP) — no IGMP/MLD in the AN

The AN is not IGMP/MLD aware and is not participating in multicast replication. From the BNG perspective this deployment model has the following characteristics:

IGMP/MLD states are kept per hosts. Each host can be registered to multiple multicast groups.

MLD uses the link-local address as the source address, which makes it difficult to associate a message with the originating host. MLD (S,G) states are always first associated with an IPv6 WAN host, if available. If this WAN host terminates its IPv6 session (by lease expiry, session termination, and so on), the (S,G) is then associated with any remaining WAN host. Only when there are no more WAN hosts available is the (S,G) associated with the PD host, if any.

IGMP/MLD Joins are accepted only from the active subscriber hosts, subject to antispoofing.

IGMP/MLD statistics can be displayed per host, per group or per subscriber.

Multicast traffic is forwarded through subscriber queues using unicast destination MAC address of the destination host.

Multiple copies of the same multicast stream can be generated per SAP. The number of copies depends on the number of hosts on the SAP that are registered to the same multicast group (channel). In other words, the number of multicast streams on the SAP is equal to the number of groups registered across all hosts on this SAP.

Traffic statistics are kept per the host queue. In case that multicast statistics need to be separated from unicast, the multicast traffic should be classified in a separate subscriber queue.

HQoS Adjustment is not needed as traffic is flowing within the subscriber queues and is automatically accounted in HQoS.

Multicast traffic can be explicitly classified into forwarding classes and consequently directed into needed queues.

MCAC is supported.

This model is shown in N:1 model — no IGMP/MLD in the AN.

Figure 10. N:1 model — no IGMP/MLD in the AN

Per-SLA profile instance replication mode

In the per-SLA Profile Instance (SPI) replication mode, a multicast stream is transmitted for the subscriber host SLA profile for each registered multicast group (channel). As a result, multiple copies of the same multicast stream are transmitted over the same SAP. The multicast packet replication depends on the number of SLA profile instances configured for the subscriber. For example, if the subscriber hosts use three SLA profiles, the (S,G) is replicated three times. But if the hosts use the same SLA profile, the (S,G) is replicated only once.
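The replication count in per-SPI mode can be illustrated by counting distinct (SLA profile, group) pairs for one subscriber. This is a minimal sketch; the function name, host names, and profile names are hypothetical.

```python
from typing import Dict, Set


def spi_copies(host_sla_profile: Dict[str, str],
               host_groups: Dict[str, Set[str]]) -> int:
    """Count stream copies in per-SPI replication mode.

    Each multicast group is replicated once per distinct SLA profile
    used by the hosts that are registered to it.
    """
    pairs = {
        (host_sla_profile[host], group)
        for host, groups in host_groups.items()
        for group in groups
    }
    return len(pairs)


if __name__ == "__main__":
    groups = {"h1": {"239.1.1.1"}, "h2": {"239.1.1.1"}, "h3": {"239.1.1.1"}}
    print(spi_copies({"h1": "sla-a", "h2": "sla-b", "h3": "sla-c"}, groups))
    print(spi_copies({"h1": "sla-a", "h2": "sla-a", "h3": "sla-a"}, groups))
```

With three different SLA profiles the group is replicated three times; with a shared SLA profile it is replicated once, matching the example in the text.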

MCAC is supported for per-SPI replication.

Multicast traffic flows on the SAP queue and each copy of the multicast stream uses the same multicast destination MAC address.

The per-SPI replication mode can be enabled per subscriber:

in classic CLI, using the per-spi-replication command in the following contexts:

config>subscriber-mgmt>igmp-policy

config>subscriber-mgmt>mld-policy (IPv6)

in MD-CLI, using the replication command in the following contexts:

configure subscriber-mgmt igmp-policy

configure subscriber-mgmt mld-policy

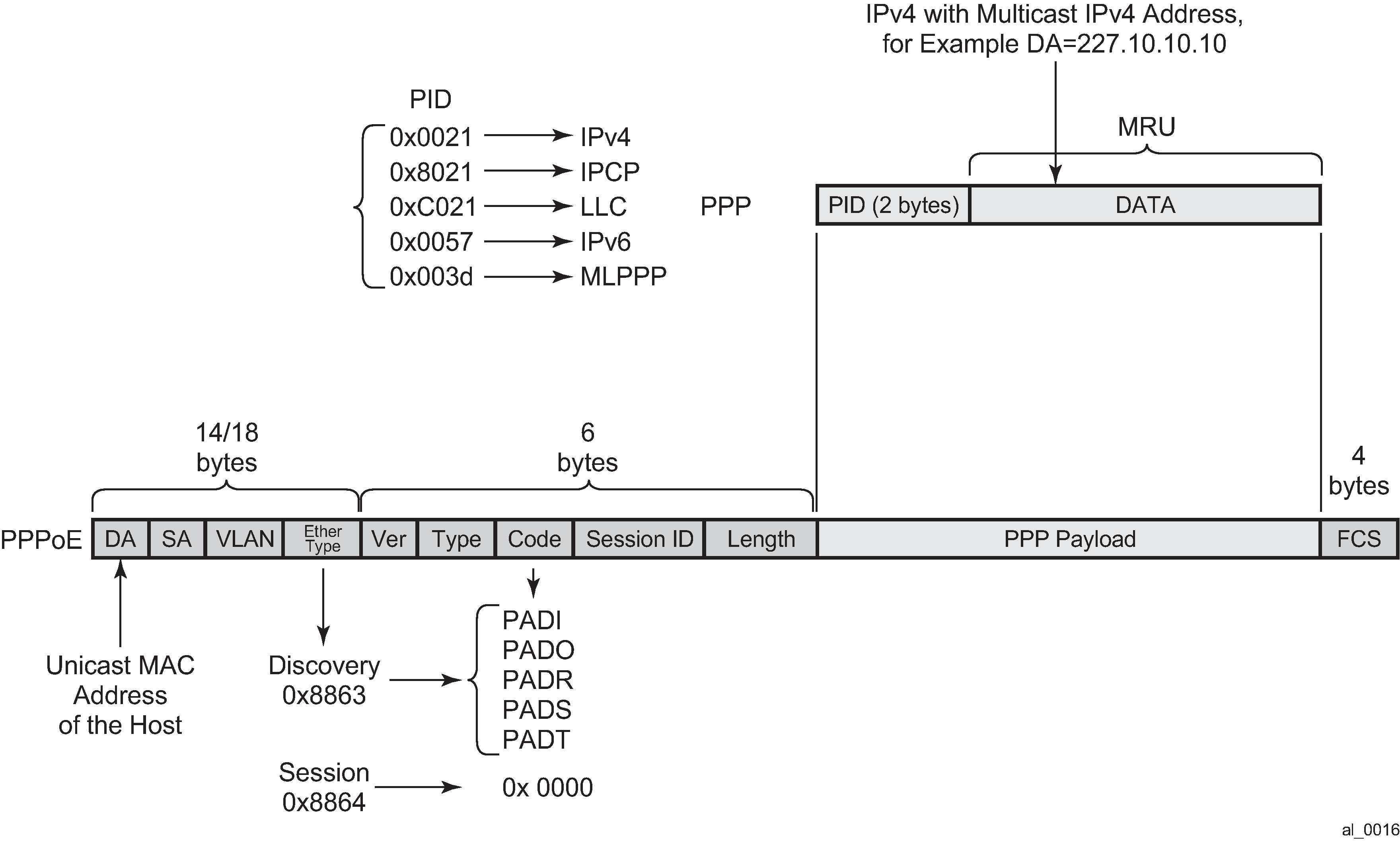

Multicast over PPPoE

In a PPPoE environment, multicast replication is performed per session (host) regardless of whether those sessions are shared per SAP or they reside on individual SAPs. This is because of the point-to-point nature of PPPoE sessions. HQoS adjustment is not needed as multicast is part of the PPPoE session traffic that is flowing through subscriber queues. Multicast packets are sent with unicast MAC address to each CPE. PPP protocol field is set to IP and the destination IP address is the multicast group address for each unique session ID.

This model is shown in Multicast IPv4 address and unicast MAC address in PPPoE subscriber multicast.

Note that in an SRRP setup, the multichassis active BNG (SRRP master state) uses the SRRP MAC address as the source MAC for both multicast and multicast control protocol packets. This is only applicable to PPPoE hosts.

IGMP flooding containment

The query function in IGMP can cause some unintended flooding in the N:1 IPoE deployment model with the AN in the IGMP snooping mode. By maintaining IGMP session states per host, it is assumed that the IGMP interaction between multicast receivers and the BNG is on a one-to-one basis. Upon arrival of an IGMP leave from a host for a specific multicast group, the IGMP querier would normally multicast a group-specific query (fast-leave). In the N:1 model with SAP replication mode enabled, the 7750 SR and 7450 ESS send a group-specific query (fast-leave) only when they receive the IGMP leave message for the last group shared amongst all subscribers on this SAP.
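One way to read the containment rule above is that a group-specific query is sent on a SAP only when the leaving host is the last one on that SAP still registered to the group. The sketch below models that reading; it is an interpretation for illustration, and the class and method names are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Set


class SapGroupState:
    """Tracks which hosts on one SAP are registered to each group."""

    def __init__(self) -> None:
        self.members: DefaultDict[str, Set[str]] = defaultdict(set)

    def join(self, host: str, group: str) -> None:
        self.members[group].add(host)

    def leave(self, host: str, group: str) -> bool:
        """Process a leave; return True if a group-specific query is sent."""
        hosts = self.members.get(group)
        if not hosts or host not in hosts:
            return False          # no state for this host and group
        hosts.discard(host)
        if hosts:
            return False          # other hosts on the SAP still want the group
        del self.members[group]
        return True               # last registration on the SAP is gone


if __name__ == "__main__":
    sap = SapGroupState()
    sap.join("host-1", "239.1.1.1")
    sap.join("host-2", "239.1.1.1")
    print(sap.leave("host-1", "239.1.1.1"))  # query suppressed
    print(sap.leave("host-2", "239.1.1.1"))  # query sent
```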

IGMP/MLD timers

IGMP/MLD timers are maintained under the following hierarchy:

IPv4:

config>router>igmp

config>service>vprn>igmp

IPv6:

config>router>mld

config>service>vprn>mld

The IGMP/MLD timers are controlled on a per routing instance (VRF or GRT) level.

The timer values are used to:

determine the interval at which queries are transmitted (query-interval)

determine the amount of time after which a join times out

However, the timers can differ between the hosts and the redirected interface when redirection between VRFs is enabled.

IGMP/MLD query intervals

IGMP/MLD query related intervals (query-interval, query-last-member-interval, query-response-interval, robust-count) are configured on a global router/vprn IGMP/MLD level. They are used to determine the IGMP/MLD timeout states and the rates at which queries are transmitted.
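As a worked example of how these intervals determine the timeout of a join, the sketch below reproduces the default value of 260 seconds quoted earlier for the Drop_Expiry event. Treating the multiplier 2 as the robust-count value follows the group membership interval formula of RFC 3376 and is an assumption here; the function name is hypothetical and the default values are the standard IGMP defaults.

```python
def membership_timeout(query_interval: int = 125,
                       query_response_interval: int = 10,
                       robust_count: int = 2) -> int:
    """Seconds after which a join times out if it is not refreshed.

    Formula (RFC 3376 group membership interval):
    robust_count * query_interval + query_response_interval
    """
    return robust_count * query_interval + query_response_interval


if __name__ == "__main__":
    print(membership_timeout())                   # default timers
    print(membership_timeout(query_interval=60))  # shorter query interval
```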

In case of redirection, the subscriber-host IGMP/MLD state determines the IGMP/MLD state on the redirected interface, assuming that IGMP/MLD messages are not directly received on the redirected interface (for example from the AN performing IGMP/MLD forking). For example, if the redirected interface is not receiving IGMP/MLD messages from the downstream node, then the IGMP/MLD state under the redirected interface is removed simultaneously with the removal of the IGMP/MLD state for the subscriber host (because of leave or a timeout).

If the redirected interface is receiving IGMP/MLD messages directly from the downstream node, the IGMP/MLD states on that redirected interface are driven by those direct IGMP/MLD messages.

For example, an IGMP/MLD host in VRF1 has an expiry time of 60 seconds and the expiry time defined under the VRF2 where multicast traffic is redirected is set to 90 seconds. The IGMP/MLD state times out for the host in VRF1 after 60s, and if no host has joined the same multicast group in VRF2 (where redirected interface resides), the IGMP state is removed there too.

If a join was received directly on the redirection interface in VRF2, the IGMP/MLD state for that group is maintained for 90s, regardless of the IGMP/MLD state for the same group in VRF1.

HQoS adjustment

HQoS Adjustment is required in the scenarios where subscriber multicast traffic flow is disassociated from subscriber queues. In other words, the unicast traffic for the subscriber is flowing through the subscriber queues while at the same time multicast traffic for the same subscriber is explicitly (through redirection) or implicitly (per-sap replication mode) redirected through a separate non-subscriber queue. In this case HQoS Adjustment can be deployed, where preconfigured multicast bandwidth per channel is artificially included in HQoS. This requires that the bandwidth consumption per multicast group be known in advance and configured within the 7450 ESS and 7750 SR. By keeping the IGMP state per host, the bandwidth for the multicast group (channel) to which the host is registered is known and is deducted as consumed from the aggregate subscriber bandwidth.

The multicast bandwidth per channel must be known (this is always an approximation) and provisioned in the BNG node in advance.
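The deduction described above amounts to simple arithmetic on the subscriber aggregate rate. The following sketch illustrates it with the same figures as the sample show output later in this section (an aggregate rate limit of 4000 and an adjustment of -2000). The function name and group addresses are hypothetical, and counting each joined channel once per subscriber and clamping the result at zero are assumptions of this sketch.

```python
from typing import Dict, Iterable


def oper_rate_limit(agg_rate_kbps: int,
                    channel_bw_kbps: Dict[str, int],
                    joined_groups: Iterable[str]) -> int:
    """Aggregate rate left for traffic in the subscriber queues.

    The preconfigured bandwidth of every channel the subscriber has
    joined is deducted from the configured aggregate rate.
    """
    adjustment = sum(channel_bw_kbps[g] for g in set(joined_groups))
    return max(agg_rate_kbps - adjustment, 0)  # clamp: sketch assumption


if __name__ == "__main__":
    channels = {"239.1.1.1": 2000, "239.1.1.2": 1000}
    print(oper_rate_limit(4000, channels, ["239.1.1.1"]))
```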

In PPPoE and in IPoE per-host replication environment, HQoS Adjustment is not needed as multicast traffic is unicast to each subscriber and therefore is flowing through subscriber queues.

For HQoS Adjustment, the channel bandwidth definition and association with an interface is the same as in the MCAC case. This is a departure from the legacy HT channel bandwidth definition which is done by a multicast-info-policy.

Example of HQoS adjustment:

Channel definition:

configure

router

mcac

policy <name>

<channel definition>

Channel bandwidth definition policy can be applied under:

group-interface

configure service vprn <id> igmp group-interface <ip-int-name> mcac policy <mcac-policy-name>

plain interface

configure router/service vprn igmp interface <ip-int-name> mcac policy <mcac-policy-name>

retailer group-interface

configure service vprn <id> igmp group-interface fwd-service <svc-id> <grp-if-name> mcac policy <mcac-policy-name>

Enabling HQoS adjustment:

configure

subscriber-management

igmp-policy <name>

egress-rate-modify [egress-aggregate-rate-limit | scheduler <name>]

Applying HQoS adjustment to the subscriber:

configure

subscriber-management

sub-profile <subscriber-profile-name>

igmp-policy <name>

To activate HQoS adjustment on the subscriber level, the sub-mcac-policy must be enabled under the subscriber with the following CLI:

configure

subscriber-management

sub-mcac-policy <pol-name>

no shutdown

configure

subscriber-management

sub-profile <subscriber-profile-name>

sub-mcac-policy <pol-name>

The adjusted bandwidth during operation can be verified with the following commands (depending on whether agg-rate-limit or scheduler-policy is used):

*B:BNG-1# show service active-subscribers subscriber "sub-1" detail

===============================================================================

Active Subscribers

===============================================================================

-------------------------------------------------------------------------------

Subscriber sub-1

-------------------------------------------------------------------------------

I. Sched. Policy : up-silver

E. Sched. Policy : N/A E. Agg Rate Limit: 4000

I. Policer Ctrl. : N/A

E. Policer Ctrl. : N/A

Q Frame-Based Ac*: Disabled

Acct. Policy : N/A Collect Stats : Enabled

Rad. Acct. Pol. : sub-1-acct

Dupl. Acct. Pol. : N/A

ANCP Pol. : N/A

HostTrk Pol. : N/A

IGMP Policy : sub-1-IGMP-Pol

Sub. MCAC Policy : sub-1-MCAC

NAT Policy : N/A

Def. Encap Offset: none Encap Offset Mode: none

Avg Frame Size : N/A

Preference : 5

Sub. ANCP-String : "sub-1"

Sub. Int Dest Id : ""

Igmp Rate Adj : -2000

RADIUS Rate-Limit: N/A

Oper-Rate-Limit : 2000

...

-------------------------------------------------------------------------------

*B:BNG-1#
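The relationship between the three rate fields in this output can be checked with simple arithmetic. The following is a minimal sketch, not the router implementation: the function name is hypothetical, and it assumes that the operational rate is the configured aggregate rate plus the signed IGMP adjustment, floored at zero. The floor is an assumption added for illustration.

```python
def oper_rate_limit(agg_rate_limit_kbps: int, igmp_rate_adj_kbps: int) -> int:
    """Return the operational egress rate after HQoS adjustment.

    Assumption: the adjustment is a signed delta (negative while channels
    are joined) added to the configured aggregate rate limit. The floor at
    zero is an illustrative safeguard, not documented behavior.
    """
    return max(agg_rate_limit_kbps + igmp_rate_adj_kbps, 0)


# Values from the "sub-1" output: E. Agg Rate Limit 4000, Igmp Rate Adj -2000.
print(oper_rate_limit(4000, -2000))  # 2000, matching Oper-Rate-Limit
```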

Consider a different example with a scheduler instead of agg-rate-limit:

*A:Dut-C>config>subscr-mgmt>sub-prof# info

----------------------------------------------

igmp-policy "pol1"

sub-mcac-policy "smp"

egress

scheduler-policy "h1"

scheduler "t2" rate 30000

exit

exit

----------------------------------------------

*A:Dut-C>config>subscr-mgmt>igmp-policy# info

----------------------------------------------

egress-rate-modify scheduler "t2"

redirection-policy "mc_redir1"

----------------------------------------------

Assume that the subscriber joins a new channel with a bandwidth of 1 Mb/s (1000 kb/s).

A:Dut-C>config>subscr-mgmt>sub-prof>egr>sched# show qos scheduler-hierarchy subscriber "sub_1" detail

===============================================================================

Scheduler Hierarchy - Subscriber sub_1

===============================================================================

Ingress Scheduler Policy:

Egress Scheduler Policy : h1

-------------------------------------------------------------------------------

Legend :

(*) real-time dynamic value

(w) Wire rates

B Bytes

-------------------------------------------------------------------------------

Root (Ing)

|

No Active Members Found on slot 1

Root (Egr)

| slot(1)

|--(S) : t1

| | AdminPIR:90000 AdminCIR:10000

| |

| |

| | [Within CIR Level 0 Weight 0]

| | Assigned:0 Offered:0

| | Consumed:0

| |

| | [Above CIR Level 0 Weight 0]

| | Assigned:0 Offered:0

| | Consumed:0

| | TotalConsumed:0

| | OperPIR:90000

| |

| | [As Parent]

| | Rate:90000

| | ConsumedByChildren:0

| |

| |

| |--(S) : t2

| | | AdminPIR:29000 AdminCIR:10000(sum) <==== bw 1000 from igmp subtracted

| | |

| | |

| | | [Within CIR Level 0 Weight 1]

| | | Assigned:10000 Offered:0

| | | Consumed:0

| | |

| | | [Above CIR Level 1 Weight 1]

| | | Assigned:29000 Offered:0 <==== bw 1000 from igmp subtracted

| | | Consumed:0

| | |

| | |

| | | TotalConsumed:0

| | | OperPIR:29000 <==== bw 1000 from igmp subtracted

| | |

| | | [As Parent]

| | | Rate:29000 <==== bw 1000 from igmp subtracted

| | | ConsumedByChildren:0

| | |

| | |

| | |--(S) : t3

| | | | AdminPIR:70000 AdminCIR:10000

| | | |

| | | |

| | | | [Within CIR Level 0 Weight 1]

| | | | Assigned:10000 Offered:0

| | | | Consumed:0

| | | |

| | | | [Above CIR Level 1 Weight 1]

| | | | Assigned:29000 Offered:0

| | | | Consumed:0

| | | |

| | | |

| | | | TotalConsumed:0

| | | | OperPIR:29000

| | | |

| | | | [As Parent]

| | | | Rate:29000

| | | | ConsumedByChildren:0

| | | |

*A:Dut-C>config>subscr-mgmt>igmp-policy# show service active-subscribers sub-mcac

===============================================================================

Active Subscribers Sub-MCAC

===============================================================================

Subscriber : sub_1

MCAC-policy : smp (inService)

In use mandatory bandwidth : 1000

In use optional bandwidth : 0

Available mandatory bandwidth : 1147482647

Available optional bandwidth : 1000000000

-------------------------------------------------------------------------------

Subscriber : sub_2

MCAC-policy : smp (inService)

In use mandatory bandwidth : 0

In use optional bandwidth : 0

Available mandatory bandwidth : 1147483647

Available optional bandwidth : 1000000000

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

Number of Subscribers : 2

===============================================================================

*A:Dut-C#
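The bandwidth figures in the sub-mcac output are consistent with a simple budget per subscriber, where the available bandwidth is the limit minus the bandwidth in use. The sketch below illustrates that bookkeeping under this assumption; the `McacBudget` class and its `admit` method are hypothetical names, and real MCAC distinguishes mandatory and optional channels in ways not modeled here.

```python
from dataclasses import dataclass


@dataclass
class McacBudget:
    """Hypothetical per-subscriber MCAC budget, in kb/s."""
    limit: int
    in_use: int = 0

    @property
    def available(self) -> int:
        # Assumption: available bandwidth is simply limit minus in-use.
        return self.limit - self.in_use

    def admit(self, channel_bw: int) -> bool:
        """Reserve bandwidth for a channel if it fits; return the decision."""
        if channel_bw > self.available:
            return False
        self.in_use += channel_bw
        return True


# 1147483647 is the available mandatory bandwidth shown for sub_2,
# which has nothing in use.
mandatory = McacBudget(limit=1147483647)
mandatory.admit(1000)  # sub_1 joins the 1000 kb/s channel
print(mandatory.in_use, mandatory.available)  # 1000 1147482647
```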

*A:Dut-C# show service active-subscribers subscriber "sub_1" detail

===============================================================================

Active Subscribers

===============================================================================

-------------------------------------------------------------------------------

Subscriber sub_1 (1)

-------------------------------------------------------------------------------

I. Sched. Policy : N/A

E. Sched. Policy : h1 E. Agg Rate Limit: Max

I. Policer Ctrl. : N/A

E. Policer Ctrl. : N/A

Q Frame-Based Ac*: Disabled

Acct. Policy : N/A Collect Stats : Disabled

Rad. Acct. Pol. : N/A

Dupl. Acct. Pol. : N/A

ANCP Pol. : N/A

HostTrk Pol. : N/A

IGMP Policy : pol1

Sub. MCAC Policy : smp

NAT Policy : N/A

Def. Encap Offset: none Encap Offset Mode: none

Avg Frame Size : N/A

Preference : 5

Sub. ANCP-String : "sub_1"

Sub. Int Dest Id : ""

Igmp Rate Adj : N/A

RADIUS Rate-Limit: N/A

Oper-Rate-Limit : Maximum

...

===============================================================================

*A:Dut-C#

*A:Dut-C# show subscriber-mgmt igmp-policy "pol1"

===============================================================================

IGMP Policy pol1

===============================================================================

Import Policy :

Admin Version : 3

Num Subscribers : 2

Host Max Group : No Limit

Host Max Sources : No Limit

Fast Leave : yes

Redirection Policy : mc_redir1

Per Host Replication : no

Egress Rate Modify : "t2"

Mcast Reporting Destination Name :

Mcast Reporting Admin State : Disabled

===============================================================================

*A:Dut-C#

Host Tracking (HT) considerations

HT is a lightweight version of the HQoS Adjustment feature. The use of HQoS Adjustment functionality in place of HT is strongly encouraged.

When HT is enabled, the access node (AN) forks off (duplicates) the IGMP messages on the common multicast SAP to the subscriber SAP. IGMP states are not fully maintained per sub-host in the BNG. Instead, they are only tracked (with less overhead) for bandwidth adjustment purposes.

Example of HT

Channel Definition:

configure

mcast-management

multicast-info-policy <policy-name>

<channel to b/w mapping definition>

Applying channel definition policy on a router/VPRN global level:

config>router>multicast-info-policy <policy-name>

config>service>vprn>multicast-info-policy <policy-name>

Defining the rate object on which HT is applied:

configure

subscriber-management

host-tracking-policy <policy-name>

egress-rate-modify [agg-rate-limit | scheduler <sch-name>]

Applying the HT to the subscriber:

configure

subscriber-management

sub-profile <subscriber-profile-name>

host-tracking-policy <policy-name> => mutually exclusive with igmp-policy

HQoS adjust per Vport

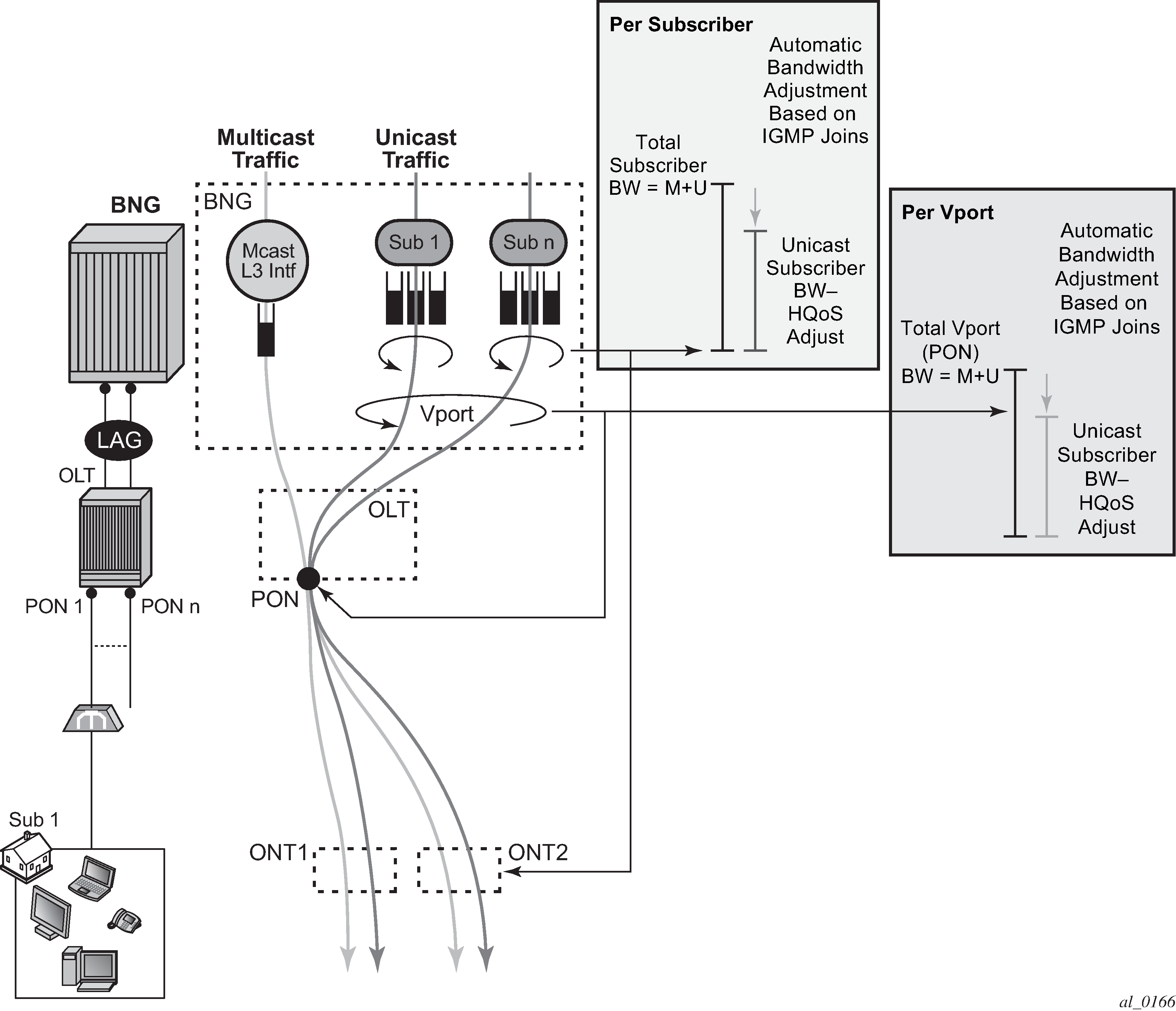

HQoS adjust per Vport can be used in environments where the Vport represents a physical medium over which traffic for multiple subscribers is shared. A typical example of this scenario is shown in HQoS adjustment per subscriber and Vport. Multicast traffic within the router takes a separate path from unicast traffic, and the two traffic flows merge later in the PON (represented by a Vport) and the ONT (represented by the subscriber in the 7450 ESS and 7750 SR).

A single copy of each channel is replicated on the PON as long as there is at least one subscriber on that PON interested in this channel (has joined the IGMP/MLD group).

The 7450 ESS and 7750 SR monitor IGMP/MLD Joins at the subscriber level. The channel bandwidth is subtracted from the current Vport rate limit only when the Join is the first one for that channel on the corresponding PON. Otherwise, the Vport bandwidth is not modified. Similarly, when the channel is removed from the last subscriber on the PON, the channel bandwidth is returned to the Vport.
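The first-join and last-leave behavior described above amounts to reference counting per channel on each Vport. The following sketch models it in a simplified way; the `VportRateAdjuster` class, its method names, and the 100000 kb/s configured rate are assumptions made for illustration and do not reflect the actual implementation.

```python
from collections import defaultdict


class VportRateAdjuster:
    """Hypothetical model of per-Vport HQoS adjustment (rates in kb/s)."""

    def __init__(self, configured_rate: int):
        self.configured_rate = configured_rate
        self.delta = 0  # signed adjustment, comparable to "Modify delta"
        self._members = defaultdict(set)  # channel -> subscribers joined

    @property
    def oper_rate(self) -> int:
        return self.configured_rate + self.delta

    def join(self, subscriber: str, channel: str, bw: int) -> None:
        first = not self._members[channel]
        self._members[channel].add(subscriber)
        if first:
            # First copy of this channel on the PON: take bandwidth away.
            self.delta -= bw

    def leave(self, subscriber: str, channel: str, bw: int) -> None:
        members = self._members.get(channel)
        if not members or subscriber not in members:
            return  # ignore leaves for channels the subscriber never joined
        members.remove(subscriber)
        if not members:
            # Last subscriber left: return the bandwidth to the Vport.
            self.delta += bw
            del self._members[channel]


vport = VportRateAdjuster(configured_rate=100_000)
vport.join("sub-1", "ch-A", 2000)
vport.join("sub-2", "ch-A", 2000)   # same channel, no further change
print(vport.oper_rate)               # 98000
vport.leave("sub-1", "ch-A", 2000)   # sub-2 still watching
print(vport.oper_rate)               # 98000
vport.leave("sub-2", "ch-A", 2000)   # last leave
print(vport.oper_rate)               # 100000
```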

The association between the Vport and the subscriber is established by an inter-destination-string or by the svlan during the subscriber setup phase. An inter-destination-string can be obtained from either RADIUS or the LUDB. If the association between the Vport and the subscriber is based on the svlan (as specified in sub-sla-mgmt under the SAP or MSAP), the destination string under the Vport must be a number matching the svlan.
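The matching rule above can be pictured as a lookup keyed on the Vport host-match string. The sketch below is illustrative only: `select_vport`, the `use_svlan` flag, and the sample strings are hypothetical, and the choice between the two methods is modeled as an explicit flag rather than derived from any real configuration.

```python
from typing import Dict, Optional


def select_vport(host_match: Dict[str, str],
                 inter_dest_id: Optional[str] = None,
                 svlan: Optional[int] = None,
                 use_svlan: bool = False) -> Optional[str]:
    """Return the Vport whose host-match string fits the subscriber.

    host_match maps a destination string to a Vport name. When use_svlan
    is set, the key is the SVLAN rendered as a decimal string, which is why
    the destination string under the Vport must be a matching number.
    """
    if use_svlan:
        if svlan is None:
            return None
        key = str(svlan)
    else:
        if not inter_dest_id:
            return None
        key = inter_dest_id
    return host_match.get(key)


# Hypothetical host-match entries: one string-based, one SVLAN-based.
host_match = {"pon-7": "isam1", "100": "isam2"}
print(select_vport(host_match, inter_dest_id="pon-7"))       # isam1
print(select_vport(host_match, svlan=100, use_svlan=True))   # isam2
```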

The mcac-policy (channel bandwidth definition) can be applied on the group interface under which the subscribers are instantiated or, in case of redirection, under the redirected interface.

In a LAG environment, the Vport is instantiated per LAG member link on the IOM. For accurate bandwidth control, it is a prerequisite for this feature that subscriber traffic hashing is performed per Vport.

The CLI structure is as follows.

configure

port <port-id>

ethernet

access

egress

vport <name>

egress-rate-modify

agg-rate

host-match <destination-string>

port-scheduler-policy <port-scheduler-policy-name>

configure

port <port-id>

sonet-sdh

path [<sonet-sdh-index>]

access

egress

vport <name>

egress-rate-modify

agg-rate

host-match <destination-string>

port-scheduler-policy <port-scheduler-policy-name>

The Vport rate that is affected by this functionality depends on the configuration:

When the agg-rate-limit within the Vport is configured, its value is modified based on the IGMP activity associated with the subscriber under this Vport.

When the port-scheduler-policy within the Vport is referenced, the max-rate defined in the corresponding port scheduler policy is modified based on the IGMP activity associated with the subscriber under this Vport.

The Vport rates can be displayed with the following two commands:

- show port port-id vport vport-name

- show qos scheduler-hierarchy port port-id vport vport-name

As an example:

*A:system-1# show port 1/1/7 vport

========================================================================

Port 1/1/7 Access Egress vport

========================================================================

VPort Name : isam1

Description : (Not Specified)

Sched Policy : 1

Rate Limit : Max

Rate Modify : enabled

Modify delta : -14000

In this case, the configured Vport aggregate-rate-limit max value has been reduced by 14 Mb/s.

Similarly, if the Vport had a port-scheduler-policy applied, the max-rate value configured in the port-scheduler-policy would have been modified by the amount shown in the Modify delta field in the preceding output.

Multi-chassis redundancy

Synchronization of the modified Vport rate in a multi-chassis environment relies on the synchronization of the subscriber IGMP/MLD states between the redundant nodes. Upon switchover, the Vport rate on the newly active node is adjusted according to the current IGMP/MLD state of the subscribers associated with the Vport.

Scalability considerations

It is assumed that the rate of the IGMP/MLD state change on the Vport level is substantially lower than on the subscriber level.

The reason is that IGMP/MLD Joins and Leaves are shared among subscribers on the same Vport (a PON, for example). The IGMP/MLD state on the Vport level therefore changes only on the first IGMP/MLD Join per channel and the last IGMP/MLD Leave per channel.
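To make the difference in state-change rates concrete, the following sketch counts subscriber-level and Vport-level changes for a sequence of Joins and Leaves. It is a simplified illustration; the `count_state_changes` function and the event tuple layout are assumptions, not part of the product.

```python
def count_state_changes(events):
    """Count subscriber-level and Vport-level IGMP/MLD state changes.

    events is an iterable of (action, subscriber, channel) tuples, where
    action is "join" or "leave". Returns (subscriber_changes, vport_changes).
    """
    members = {}  # channel -> set of subscribers currently joined
    sub_changes = vport_changes = 0
    for action, sub, channel in events:
        joined = members.setdefault(channel, set())
        if action == "join" and sub not in joined:
            joined.add(sub)
            sub_changes += 1
            if len(joined) == 1:  # first Join for this channel on the Vport
                vport_changes += 1
        elif action == "leave" and sub in joined:
            joined.remove(sub)
            sub_changes += 1
            if not joined:  # last Leave for this channel on the Vport
                vport_changes += 1
    return sub_changes, vport_changes


# Four subscribers on one Vport join and then leave the same channel.
events = [("join", f"sub-{i}", "ch-A") for i in range(1, 5)]
events += [("leave", f"sub-{i}", "ch-A") for i in range(1, 5)]
print(count_state_changes(events))  # (8, 2)
```

In this example, eight subscriber-level changes produce only two Vport-level changes.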

Redirection

Two levels of MCAC can be enabled simultaneously; this is referred to as Hierarchical MCAC (H-MCAC). When redirection is enabled, H-MCAC per subscriber and per redirected interface is supported, but MCAC per group-interface is not. In this case, the channel definition policy for the subscriber and the redirected interface is referenced under the igmp>interface (redirected interface) CLI context, or under mld>interface for IPv6.

If redirection is disabled, H-MCAC for both the subscriber and the group-interface is supported. In this case, the channel definition policy is configured under the config>router>igmp>group-interface context, or under config>router>mld>group-interface for IPv6.
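Conceptually, H-MCAC means that a channel must pass admission control at both levels before it is accepted. The sketch below shows one simplified way to express this, with bandwidth reserved on every level or on none. The `hmcac_admit` function, the dictionary layout, and the sample limits are hypothetical, and the distinction between mandatory and optional bandwidth is not modeled.

```python
def hmcac_admit(channel_bw: int, budgets: list) -> bool:
    """Admit a channel only if every level has enough free bandwidth.

    budgets is a list of dicts with "limit" and "in_use" keys (kb/s), for
    example the subscriber level followed by the interface level.
    Bandwidth is reserved on all levels or on none.
    """
    if any(b["limit"] - b["in_use"] < channel_bw for b in budgets):
        return False
    for b in budgets:
        b["in_use"] += channel_bw
    return True


subscriber = {"limit": 5000, "in_use": 0}
# Second level: the group-interface, or the redirected interface when
# redirection is enabled.
interface = {"limit": 6000, "in_use": 4000}

print(hmcac_admit(1000, [subscriber, interface]))  # True
print(hmcac_admit(2000, [subscriber, interface]))  # False, interface has 1000 left
print(subscriber["in_use"], interface["in_use"])   # 1000 5000
```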

There are two options in multicast redirection. The first option is to redirect all subscriber multicast traffic to a dedicated redirect interface.

Example:

Defining redirection action:

configure

router

policy-options

begin

policy-statement <name>

default-action accept

multicast-redirection [fwd-service <svc id>] <interface name>

exit

exit

exit

exit