Y.1564 service activation testhead OAM tool

The ITU-T Y.1564 standard defines the out-of-service test methodology and the parameters measured to test service SLA conformance during service turn-up. It defines two primary test phases: the service configuration test phase and the service performance test phase.

The service configuration test is the first phase and validates whether the service is configured correctly. This test is typically run for a short duration and measures the throughput, frame delay (FD), frame delay variation (FDV), and frame loss ratio (FLR) for each service. The service performance test is the second test phase and validates the quality of services delivered to the end customer. In these tests, which are typically run for a longer duration, traffic is generated up to the configured rate for all services simultaneously, and the service performance parameters are measured for each service.

The service activation testhead tool administers the service configuration test based on a user-configured rate and measures the FD, FDV, and FLR for the service. This tool supports bidirectional measurement.

The service activation testhead tool can generate a single stream per test. The tool validates the user-specified rate to generate traffic for the different test types configured and computes the throughput, FD, FDV, and FLR for the service streams.

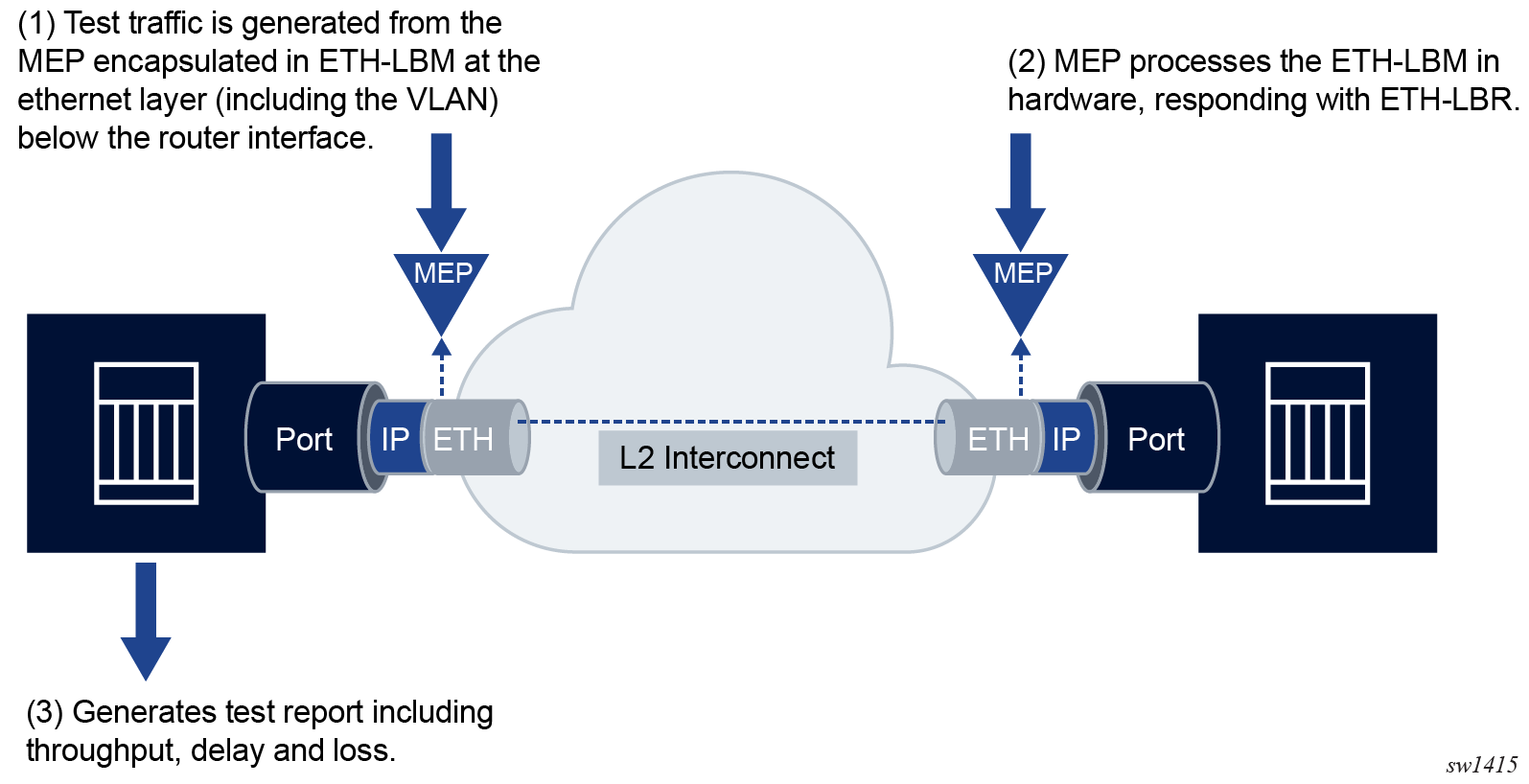

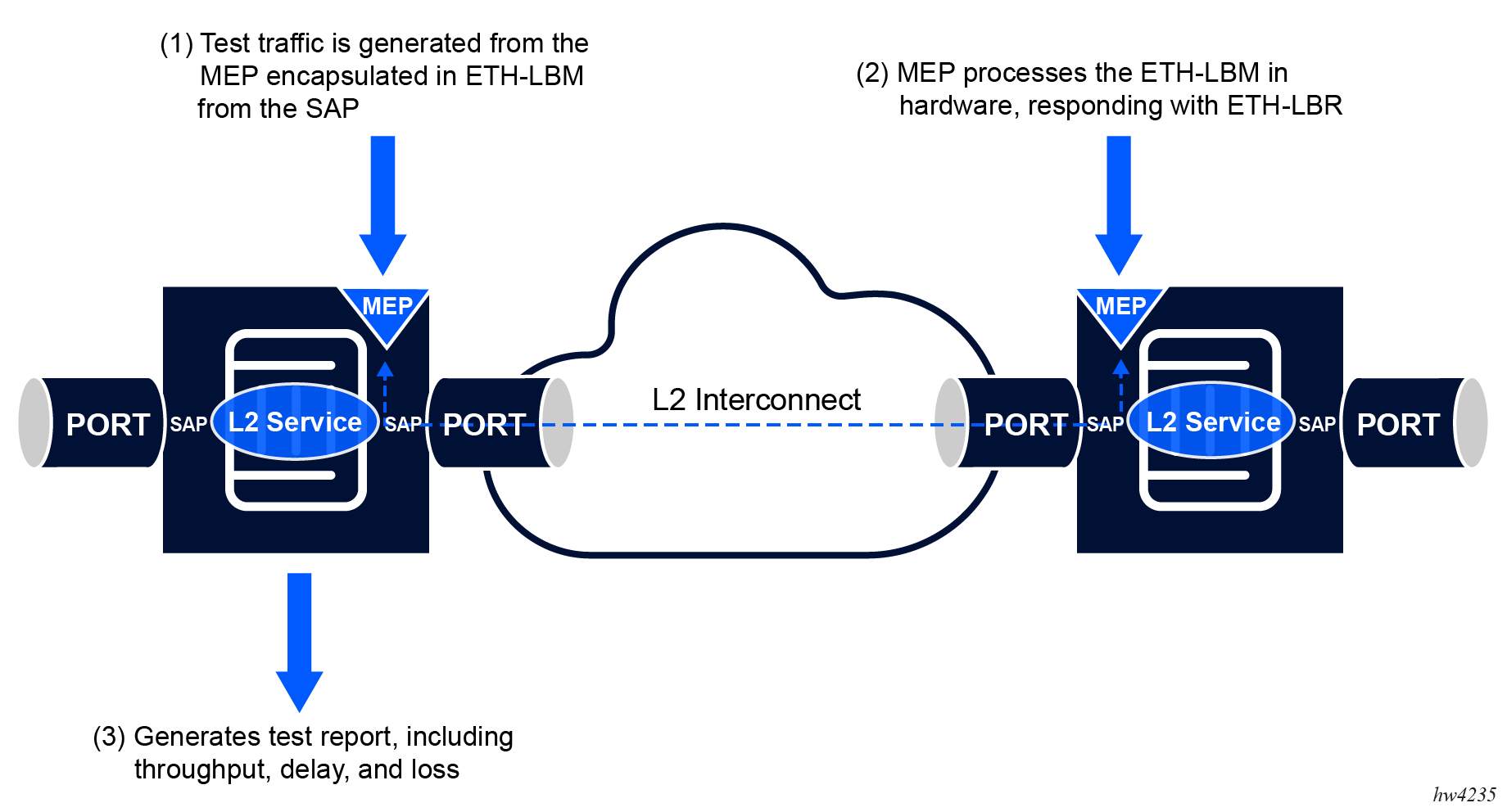

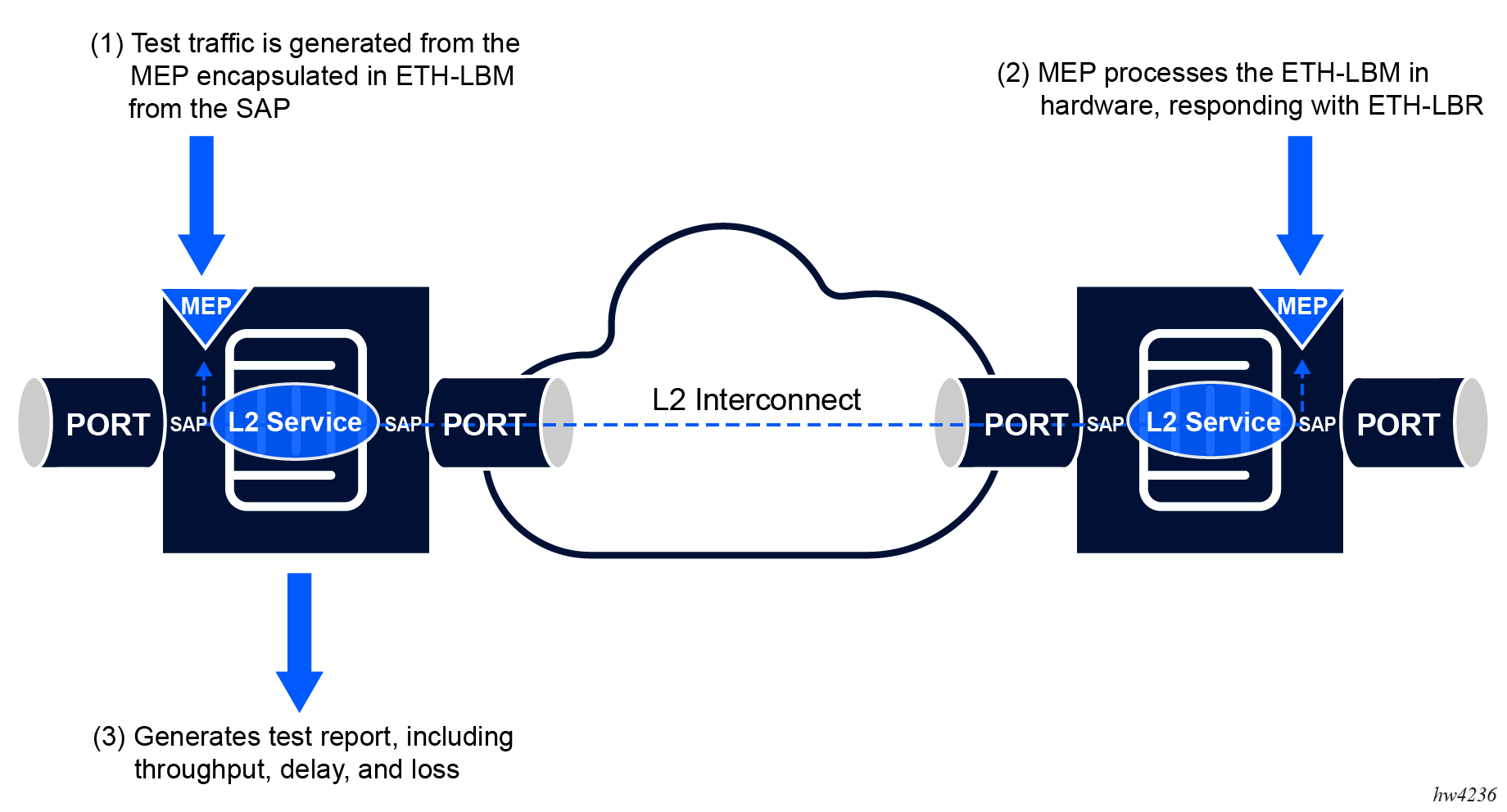

The Y.1564 Service Activation Test (SAT) encapsulates the frame format of the ETH-CFM loopback message (LBM). This implementation requires MEPs to be configured at specific service endpoints to process and reflect Y.1564 SAT streams.

To process ETH-LBM Y.1564 SAT streams, the remote node must properly handle inbound ETH-LBM protocol data units (PDUs) and respond with an appropriate ETH-LBR PDU in the data plane. The lbm-svc-act-responder command must be configured on the ETH-CFM LBM responder at the remote service endpoint to reflect the SAT streams.
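The reflection performed by the remote node can be illustrated with a minimal Python sketch of generic ETH-CFM loopback handling as defined for CFM (IEEE 802.1Q/802.1ag and ITU-T Y.1731). The responder swaps the MAC addresses and changes the opcode from LBM (3) to LBR (2), leaving the transaction ID and TLVs unchanged. This is not the SR OS lbm-svc-act-responder implementation. The helper names are hypothetical, and the sketch assumes untagged frames and a unicast LBM addressed to the responder.

```python
import struct

CFM_ETHERTYPE = 0x8902
OPCODE_LBR = 2
OPCODE_LBM = 3
ETH_HEADER_LEN = 14  # untagged: dst MAC (6) + src MAC (6) + EtherType (2)


def build_lbm(dst: bytes, src: bytes, md_level: int, transaction_id: int,
              tlvs: bytes = b"") -> bytes:
    """Build an untagged ETH-CFM LBM frame (hypothetical helper)."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    eth = struct.pack("!6s6sH", dst, src, CFM_ETHERTYPE)
    # Common CFM header: MD level (3 bits) + version 0 (5 bits), opcode,
    # flags, first TLV offset (4 for LBM/LBR), then the 4-byte transaction ID.
    cfm = struct.pack("!BBBBI", (md_level & 0x7) << 5, OPCODE_LBM, 0, 4,
                      transaction_id)
    return eth + cfm + tlvs + b"\x00"  # trailing End TLV (type 0)


def reflect_lbm(frame: bytes) -> bytes:
    """Turn a received unicast LBM into an LBR, as a loopback responder would."""
    dst, src, ethertype = struct.unpack_from("!6s6sH", frame, 0)
    if ethertype != CFM_ETHERTYPE:
        raise ValueError("not an untagged ETH-CFM frame")
    if frame[ETH_HEADER_LEN + 1] != OPCODE_LBM:
        raise ValueError("not an LBM")
    reply = bytearray(frame)
    reply[0:6] = src   # send the reply back to the originating MEP
    reply[6:12] = dst  # the unicast LBM destination is this responder's MAC
    reply[ETH_HEADER_LEN + 1] = OPCODE_LBR
    return bytes(reply)
```

With the MAC addresses used in the configuration example later in this section, reflecting an LBM sent from 00:00:00:00:00:01 to 00:00:00:00:00:02 yields an LBR addressed back to 00:00:00:00:00:01.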

The following figure shows the required remote loopback and the flow of the frame, which is generated by the testhead tool, through the network.

The user must configure a source MEP, which is associated with the SAP or router interface under test, and a destination MEP MAC address for the CFM LBM frame payload. The data pattern for the payload, and the dot1p and discard eligibility indicator (DEI) values for the VLAN tags, can also be specified. The VLAN tags are not user-configurable; they are derived from the SAP hosting the MEP.

The testhead OAM tool supports the following:

- network interfaces configured with null, dot1q, and QinQ encapsulation in the Base routing instance
- SAPs on Ethernet ports for Epipe and VPLS services with null, dot1q, and QinQ encapsulation
- two-way measurement of service performance metrics. The tests measure throughput, FD, FDV, and FLR.
- FD measurements computed by periodically injecting delay measurement message (DMM) frames. The DMM frames pick up the VLAN tags from the SAP or router interface hosting the MEP, and their dot1p and DEI values are marked based on the frame payload configuration. No user configuration is required to run DMM for FD measurements.
- configuration of a rate value and the option to measure performance metrics. The testhead OAM tool generates traffic up to the specified rate and measures service performance metrics such as delay, delay variation, and loss.
- configuration of a single frame size ranging from 64 bytes to 9212 bytes
- a test duration of up to 24 hours, 59 minutes, and 59 seconds. Test performance is measured after the specified rate is achieved. The user can probe the system at any time to check the current status and progress of the test.
- templates to define test configuration parameters. The user can start a test using a preconfigured default template. The acceptance criteria allow the user to configure thresholds that define the acceptable range for the service performance metrics. At the end of the test, the measured FD, FDV, and FLR values are compared against the configured thresholds to determine whether the test passes or fails, and a trap is generated to the management station.

  The ‟default” acceptance criteria template is attached to every service stream created in the service-test context for which no acceptance criteria template is explicitly configured. When the default acceptance criteria template is used, the test is always expected to pass.
- ITU-T Y.1564 specifies the following tests:
  - service configuration tests

    These short-duration tests verify that the service is configured correctly and can meet the requested SLA metrics, such as throughput, delay, and loss. The following tests are supported:
    - CIR configuration test
    - PIR configuration test
    - policing test

    Service configuration tests can be run by setting the rate value appropriately for the specific test. For a policing test, traffic is always run at 125% of the configured PIR.
  - service performance test

    This long-duration test is used to check the service performance metrics.
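The rate and duration rules in the list above can be expressed as a short Python sketch. It assumes rates in kbps (as in the configuration example later in this section), that the CIR configuration test runs at the CIR and the PIR configuration test at the PIR, and that durations use the "mm:ss" and "hh:mm:ss" forms seen in that example. The CIR ≤ PIR check and the 0 to 59 field ranges are assumptions, and the function names are hypothetical, not part of SR OS.

```python
MAX_TEST_DURATION_S = 24 * 3600 + 59 * 60 + 59  # documented maximum, 24:59:59


def config_test_rates_kbps(cir_kbps: int, pir_kbps: int) -> dict:
    """Transmit rate per service configuration test type (illustrative)."""
    if not 0 <= cir_kbps <= pir_kbps:
        raise ValueError("expected 0 <= CIR <= PIR")
    return {
        "cir": cir_kbps,                    # CIR configuration test: runs at the CIR
        "cir-pir": pir_kbps,                # PIR configuration test: runs at the PIR
        "policing": pir_kbps * 125 // 100,  # policing test: 125% of the PIR, rounded down
    }


def parse_duration_s(text: str) -> int:
    """Convert an "mm:ss" or "hh:mm:ss" string to seconds, enforcing the maximum."""
    fields = [int(field) for field in text.split(":")]
    if len(fields) == 2:
        hours, minutes, seconds = 0, fields[0], fields[1]
    elif len(fields) == 3:
        hours, minutes, seconds = fields
    else:
        raise ValueError(f"unrecognized duration {text!r}")
    if hours < 0 or not 0 <= minutes <= 59 or not 0 <= seconds <= 59:
        raise ValueError(f"field out of range in {text!r}")
    total = hours * 3600 + minutes * 60 + seconds
    if total > MAX_TEST_DURATION_S:
        raise ValueError(f"{text!r} exceeds the 24:59:59 maximum")
    return total
```

For the example stream (CIR 9,000,000 kbps, PIR 9,900,000 kbps), `config_test_rates_kbps` gives a policing rate of 12,375,000 kbps, and `parse_duration_s("01:00:00")` gives 3600 seconds for the performance test.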

Service activation testhead OAM tool

SR OS platforms based on FP4 and later support the service activation testhead OAM tool for out-of-service testing and reporting of performance information.

Use the service-stream configuration commands to group a set of streams under a single service test. The following configuration options are supported for each stream:

- The CIR and PIR, frame payload contents, and frame size can be configured for each stream.
- Different acceptance criteria can be configured per stream and used to determine the pass/fail result for the stream and to monitor streams that are in progress.
- For each stream, a single command can run the service configuration tests (CIR and PIR tests) and the service performance test in one run, instead of running each test individually.
- To allow a margin when determining the throughput pass/fail result, use the m-factor command to configure the amount by which the measured throughput can be off from the configured throughput.
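A minimal Python sketch of how an m-factor margin could be applied to the throughput check. It assumes, without confirmation from the text above, that the m-factor is a one-sided allowance in kbps below the throughput threshold. This is consistent with the compliance reports in the sample output later in this section, where the CIR test passes with 9,000,075 kbps measured against a 9,000,000 kbps threshold and an m-factor of 1000. The function name is hypothetical.

```python
def throughput_passes(measured_kbps: int, threshold_kbps: int,
                      m_factor_kbps: int) -> bool:
    """Throughput pass/fail with an m-factor margin (illustrative only).

    Assumption: the measured throughput passes if it is no more than
    m_factor_kbps below the configured threshold.
    """
    if m_factor_kbps < 0:
        raise ValueError("m-factor must be non-negative")
    return measured_kbps >= threshold_kbps - m_factor_kbps
```

Under this assumption, 9,000,075 kbps passes against a 9,000,000 kbps threshold, and 8,998,500 kbps fails because it is 1500 kbps short, which exceeds the 1000 kbps margin.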

The operator can store the test results in an accounting record in XML format. The XML file contains the keywords and MIB references listed in the following table.

| XML file keyword | Description | TIMETRA-OAM-SERV-ACTIV-TEST-MIB |
|---|---|---|
| acceptanceCriteriaName | The name of the acceptance criteria template policy used to evaluate the measured results | tmnxOamSathStsSvcStrmAcpCritTmpl |
| cirRate | The user-configured CIR rate | tmnxOamSathStsSvcStrmTxCIR |
| cTagDot1p | The dot1p value set for VLAN tag 2 (inner VLAN tag) in the frame payload generated by the tool | tmnxOamSathStsSvcStrmCustTagPrio |
| cTagDei | The DEI value set for VLAN tag 2 (inner VLAN tag) in the frame payload generated by the tool | tmnxOamSathStsSvcStrmCustTagDEI |
| cirTestDurMin | The duration, in minutes, of the CIR test | tmnxOamSathStsSvcTestDrCirMin |
| cirTestDurSec | The duration, in seconds, of the CIR test | tmnxOamSathStsSvcTestDrCirSec |
| cirThreshold | The CIR rate threshold to compare with the measured value | tmnxOamSathStsSvcStrmAcThldCir |
| dataPattern | The data pattern to include in the packet generated by the service testhead tool | tmnxOamSathStsSvcStrmPadPattern |
| description | The user-configured description for the service test and service stream | tmnxOamSathStsSvcTestDescription, tmnxOamSathStsSvcStrmDescription |
| dstMac | The destination MAC address to use in the packet generated by the tool | tmnxOamSathStsSvcStrmDestMacAddr |
| delayVarMax | The maximum delay variation measured by the tool | tmnxOamSathStsTestMeasDelayVrMax |
| delayVarMin | The minimum delay variation measured by the tool | tmnxOamSathStsTestMeasDelayVrMin |
| delayVarAvg | The average delay variation measured by the tool | tmnxOamSathStsTestMeasDelayVrAvg |
| delayVariationAcceptancePass | Indicates whether the measured delay variation is within the delay variation threshold configured in the acceptance criteria | tmnxOamSathStsTestOprStDelayVar |
| delayVariationThreshold | The delay variation threshold configured in the acceptance criteria | tmnxOamSathStsSvcStrmAcThldDlyVr |
| delayAcceptancePass | Indicates whether the measured delay is within the configured delay threshold | tmnxOamSathStsTestOprStDelay |
| delayAvg | The average of the delay values computed for the test stream | tmnxOamSathStsTestMeasDelayAvg |
| delayMax | The maximum delay measured by the tool | tmnxOamSathStsTestMeasDelayMax |
| delayMin | The minimum delay measured by the tool | tmnxOamSathStsTestMeasDelayMin |
| delayThreshold | The delay threshold configured in the acceptance criteria | tmnxOamSathStsSvcTestTimeEnd |
| endTime | The wall-clock time at which the test completed | tmnxOamSathStsSvcTestTimeEnd, tmnxOamSathStsTestTimeEnd |
| frameLossThreshold | The loss threshold configured in the acceptance criteria | tmnxOamSathStsSvcStrmAcThldFLR |
| frameLossThresholdPolicing | The loss threshold configured in the acceptance criteria for the policing test | tmnxOamSathStsSvcStrmAcThldFlrPl |
| frameSizeTemplateName | The frame size template name. The testhead tool generates packet sizes as specified in the frame size template, which specifies a mix of frames of different sizes to be generated by the tool. | tmnxOamSathStsSvcStrmFrmSizeTmpl |
| lossRatio | The measured frame loss ratio | tmnxOamSathStsTestMeasFLR |
| lossAcceptancePass | Indicates whether the measured loss ratio is within the configured loss threshold | tmnxOamSathStsTestOprStFLR |
| measuredThroughput | The measured throughput | tmnxOamSathStsTestMeasThroughput |
| mepId | The configured source MEP | tmnxOamSathStsSvcStrmSrcMepId |
| mepDomain | The configured Maintenance Domain (MD) for the MEP | tmnxOamSathStsSvcStrmSrcMdIndex |
| mepAssociation | The configured Maintenance Association (MA) for the MEP | tmnxOamSathStsSvcStrmSrcMaIndex |
| mfactor | The margin by which the observed throughput can be off from the configured throughput, used to determine whether a service test passes or fails | tmnxOamSathStsSvcStrmAcMFactor |
| perfTestDurHours | The duration, in hours, of the performance test | tmnxOamSathStsTestDrPerfHours |
| perfTestDurMin | The duration, in minutes, of the performance test | tmnxOamSathStsSvcTestDrPerfMin |
| perfTestDurSec | The duration, in seconds, of the performance test | tmnxOamSathStsTestDrPerfSec |
| pirRate | The user-configured PIR rate | tmnxOamSathStsSvcStrmTxPIR |
| pirTestDurMin | The duration, in minutes, of the PIR test | tmnxOamSathStsSvcTestDrCirPirMin |
| pirTestDurSec | The duration, in seconds, of the PIR test | tmnxOamSathStsSvcTestDrCirPirSec |
| pirThreshold | The PIR threshold configured in the acceptance criteria | tmnxOamSathStsSvcStrmAcThldPIR |
| pktCountRx | The received packet count | tmnxOamSathStsTestMeasFramesRx |
| pktCountTx | The transmitted packet count | tmnxOamSathStsTestMeasFramesTx |
| policeTestDurMin | The duration, in minutes, of the policing test | tmnxOamSathStsSvcTestDrPoliceMin |
| policeTestDurSec | The duration, in seconds, of the policing test | tmnxOamSathStsSvcTestDrPoliceSec |
| port | The source port used as the test launch point | tmnxOamSathStsSvcStrmSrcRtrPort |
| routerInstance | The routing instance of the interface launching the test | tmnxOamSathStsSvcStrmSrcRtrInst |
| routerInterface | The configured router interface launching the test | tmnxOamSathStsSvcStrmSrcRtrIf |
| runNumber | The run number of the test | tmnxOamSathStsSvcTestRun |
| runOperState | The operational status of the service test and service stream | tmnxOamSathStsSvcTestOprState, tmnxOamSathStsSvcStrmOprState, tmnxOamSathStsTestOprState |
| sap | The SAP used as the test endpoint | tmnxOamSathStsSvcStrmSrcSAP |
| sequence | The sequence of payload sizes specified in the frame-mix policy | tmnxOamSathStsSvcStrmFrmSizeSeq |
| sizeA | The frame size for the packet identified with the letter ‟a” in the frame sequence. The user can configure a frame sequence, specified using the letters ‟a” to ‟h” and ‟u”, to indicate the sequence of frame sizes generated by the tool. | tmnxOamSathStsSvcStrmFrmSizeA |
| sizeB | The frame size for the packet identified with the letter ‟b” in the frame sequence | tmnxOamSathStsSvcStrmFrmSizeB |
| sizeC | The frame size for the packet identified with the letter ‟c” in the frame sequence | tmnxOamSathStsSvcStrmFrmSizeC |
| sizeD | The frame size for the packet identified with the letter ‟d” in the frame sequence | tmnxOamSathStsSvcStrmFrmSizeD |
| sizeE | The frame size for the packet identified with the letter ‟e” in the frame sequence | tmnxOamSathStsSvcStrmFrmSizeE |
| sizeF | The frame size for the packet identified with the letter ‟f” in the frame sequence | tmnxOamSathStsSvcStrmFrmSizeF |
| sizeG | The frame size for the packet identified with the letter ‟g” in the frame sequence | tmnxOamSathStsSvcStrmFrmSizeG |
| sizeH | The frame size for the packet identified with the letter ‟h” in the frame sequence | tmnxOamSathStsSvcStrmFrmSizeH |
| sizeU | The frame size for the packet identified with the letter ‟u” in the frame sequence; sizeU is the user-defined packet size | tmnxOamSathStsSvcStrmFrmSizeU |
| srcMac | The source MAC address in the frame payload generated by the tool | tmnxOamSathStsSvcStrmSrcMacAddr |
| sTagDot1p | The dot1p value set for VLAN tag 1 (outermost VLAN tag) in the frame payload generated by the tool | tmnxOamSathStsSvcStrmSvcTagPrio |
| sTagDei | The DEI value set for VLAN tag 1 (outermost VLAN tag) in the frame payload generated by the tool | tmnxOamSathStsSvcStrmSvcTagDEI |
| startTime | The wall-clock time at which the test started | tmnxOamSathStsSvcTestTimeStart |
| streamId | The stream identifier | tmnxOamSathCfgSvcStrmNum |
| streamRunOrder | Indicates whether the streams configured for the service test were run one after another or in parallel, for each stream and test type | tmnxOamSathSvcTestStrRunOrder |
| testCir | The CIR configuration test | tmnxOamSathStsSvcStrmCIR |
| testCirPir | The CIR-PIR configuration test | tmnxOamSathStsStrmCirPir |
| testPerf | The performance test | tmnxOamSathStsSvcStrmPerformance |
| testPolicing | The policing test | tmnxOamSathStsSvcStrmPolicing |
| testname | The service test name | tmnxOamSathCfgSvcTestName |
| throughputAcceptancePass | Indicates whether the measured throughput matches the configured CIR or PIR rate | tmnxOamSathStsTestOprStThroughpt |
| trapNotification | Indicates whether a trap is sent after the test completes | tmnxOamSathStsSvcTestComplNotif, tmnxOamSathStsSvcStrmComplNotif |

Ethernet frame size specification

The service activation testhead OAM tool uses a frame sequence to specify the frame sizes in a test flow. The following table lists the frame sizes and designations defined by ITU-T Y.1564.

| Designation | a | b | c | d | e | f | g | h | u |
|---|---|---|---|---|---|---|---|---|---|
| Frame size (bytes) | 64 | 128 | 256 | 512 | 1024 | 1280 | 1518 | MTU | User defined |

The Ethernet frame size ranges from 64 bytes to 9212 bytes, and the designated letters are used to specify the frame size. The frame size is configured using the sequence command in the frame-mix context of a service stream. The frame mix supports only a single fixed frame size, which applies to all frames in the test flow.
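The mapping from a frame sequence to a frame size can be sketched in Python. The template dictionary mirrors the "frame-1" frame-size-template in the configuration example later in this section. The single-size rule from the paragraph above is enforced by rejecting any sequence that resolves to more than one size; this check, the function name, and the error messages are illustrative assumptions, not SR OS behavior.

```python
FRAME_SIZE_MIN = 64
FRAME_SIZE_MAX = 9212

# Sizes from the "frame-1" frame-size-template in the configuration example
# (size-h is set to 9212 and size-u to 2000 there).
FRAME_1_TEMPLATE = {
    "a": 64, "b": 128, "c": 256, "d": 512, "e": 1024,
    "f": 1280, "g": 1518, "h": 9212, "u": 2000,
}


def resolve_frame_size(sequence: str, template: dict[str, int]) -> int:
    """Map a frame sequence to the single frame size used for every frame."""
    sizes = set()
    for letter in sequence:
        if letter not in template:
            raise ValueError(f"letter {letter!r} not in template (use a to h, or u)")
        size = template[letter]
        if not FRAME_SIZE_MIN <= size <= FRAME_SIZE_MAX:
            raise ValueError(f"size {size} for {letter!r} is outside 64 to 9212 bytes")
        sizes.add(size)
    if len(sizes) != 1:
        raise ValueError("the sequence must resolve to exactly one frame size")
    return sizes.pop()
```

With the example stream's `sequence "u"`, `resolve_frame_size("u", FRAME_1_TEMPLATE)` returns 2000, the frame size shown in the sample results.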

Prerequisites

The user must consider the following prerequisites for using the testhead OAM tool:

- Only test traffic from the service activation testhead should be present on the entity under test.
- The lbm-svc-act-responder command must be configured on the responding MEP to loop back the Y.1564 SAT traffic.
- The testhead OAM tool does not check the state of the MEP parent (router interface) before initiating the tests.
- For base router interface testing, the test executes regardless of the state of the interface, because the test runs at Layer 2, where the MEP resides, and not at the IP layer. Only Layer 2 functions are tested.
- The service test configuration can be modified only when the testhead tool is shut down.
- Only unicast traffic flows should be tested with the testhead tool.
- Only out-of-service performance metrics can be measured using the testhead OAM tool. For in-service performance metrics, use OAM-PM-based tools.
- The CFM lbm-svc-act-responder configuration is required only on the remote MEP that is responsible for looping back the test stream.
- Any disruptive fault condition (such as a MEP going down, or an MDA or card reboot) stops the Y.1564 service test. Only a test type that has completed its run reports a pass or fail result based on the acceptance criteria thresholds. A test type halted because of a fault condition always reports ‟Failed”.
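The result rules above, together with the default-template behavior described earlier, can be sketched in Python. The sketch assumes that the average delay and delay variation are compared against the thresholds (as in the sample compliance reports later in this section), that a value equal to its threshold passes, and that an unset threshold never fails a test. Throughput is left out. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AcceptanceCriteria:
    """Thresholds from an acceptance criteria template; None means not configured."""
    delay_threshold_us: Optional[int] = None
    delay_var_threshold_us: Optional[int] = None
    loss_threshold_pct: Optional[float] = None


@dataclass
class Measured:
    delay_us: int       # average delay
    delay_var_us: int   # average delay variation
    loss_pct: float     # frame loss ratio, in percent


def evaluate_test_type(completed: bool, measured: Measured,
                       criteria: AcceptanceCriteria) -> str:
    """Return "passed" or "failed" for one test type (illustrative only)."""
    if not completed:
        # A test type halted by a fault condition always reports failed.
        return "failed"
    checks = (
        (measured.delay_us, criteria.delay_threshold_us),
        (measured.delay_var_us, criteria.delay_var_threshold_us),
        (measured.loss_pct, criteria.loss_threshold_pct),
    )
    for value, limit in checks:
        # An unset threshold (as in the "default" template) never fails the test.
        if limit is not None and value > limit:
            return "failed"
    return "passed"
```

Using the "accept-1" thresholds from the configuration example (100 us, 10 us, 0.05%) and the CIR measurements from the sample run (40 us, 3 us, 0%), the sketch returns "passed"; the same measurements return "failed" if the test type was halted.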

Configuration guidelines

This section lists the configuration guidelines for the testhead OAM tool.

- The following cannot be configured for testing with the testhead tool:
  - network interfaces configured on a LAG
  - SAPs configured on a LAG
  - SAPs configured using connection profiles
  - SAPs configured using PXC connections
- In a subscriber environment, if Layer 2 traffic is redirected through the subscriber queues (using a non-subscriber traffic configuration), the returning SAT throughput LBR frames are forwarded into the service and are not included in the SAT test result. To run SAT testing in a subscriber context, the single-sub-parameters configuration under the SAP must be deleted.
- Up MEPs on a VPLS SAP must configure the MEP MAC address on the SAP using the following command:

  `configure service vpls sap static-mac <ieee-address> [create]`

  Failure to configure the MEP MAC in this manner causes the service stream to use the multicast queues, which changes the dynamics of the test.
- Down MEPs must not use the static-mac ieee-address create command to install the MEP MAC address on the SAP. Doing so causes a forwarding miss, and the test does not receive any response packets.
- The testhead waits about 2 seconds at the end of each configured test before collecting statistics, so that all in-flight packets are received by the node and accounted for in the test measurements. For a router interface, the testhead waits about 5 seconds.
- The testhead works with the Layer 2 rate, that is, the rate after subtracting the Layer 1 overhead. The Layer 1 overhead comes from the interframe gap (IFG) and preamble added to every Ethernet frame, and is typically 20 bytes per frame (a 12-byte IFG and an 8-byte preamble).
- The testhead tool is not intended for measuring the throughput or other performance parameters of the network during a network event.
- Service activation testhead functionality requires one mirror resource per stream. If no mirror resources are available, the test fails to execute.
- For resources to be properly allocated, the service activation testhead requires that twice the configured test bandwidth be available. For the policing test type, an additional 12.5% is required because the policing test oversubscribes the configured rate.

  Note: Failure to ensure that the appropriate overhead is available leads to packet loss and resource contention.
- Use of mirror resources by the service activation testhead interrupts traffic mirroring or LI when there is a mirror destination conflict. When such a conflict occurs, a log event indicating the disruption is sent to the associated application log. When the service activation testhead completes all the tests in the template, the previously interrupted mirror or LI is reinstated, and a new application log event is issued.
- The testhead uses the QoS adaptation rule closest for traffic generation. The adaptation rule provides a constraint used when the exact rate is not available because of hardware implementation trade-offs, so throughput rates could be slightly higher or lower than the specified rate. If this occurs for a test stream near the port rate and the closest match is above the port rate, packet loss may occur because the stream is transmitting more than the port capacity. In this case, the reported throughput reflects the actual port rate.
- Depending on start and stop events, the test may run slightly longer or shorter than configured, typically by 0 to 20 ms. This difference affects the throughput calculation. Its impact depends on the test length and becomes negligible in long-duration tests.
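The arithmetic behind several of the guidelines above (Layer 1 overhead, the bandwidth to keep available, and the effect of a start/stop skew) can be worked through in a Python sketch. Two assumptions are labeled in the code: that the extra 12.5% for policing is applied on top of the doubled bandwidth, and that throughput is computed over the configured test duration. The function names are hypothetical.

```python
L1_OVERHEAD_BYTES = 20  # 12-byte IFG + 8-byte preamble added to every frame


def l1_rate_kbps(l2_rate_kbps: float, frame_bytes: int) -> float:
    """Line (Layer 1) rate consumed by a stream with the given Layer 2 rate."""
    return l2_rate_kbps * (frame_bytes + L1_OVERHEAD_BYTES) / frame_bytes


def max_l2_rate_kbps(port_rate_kbps: float, frame_bytes: int) -> float:
    """Highest Layer 2 rate that fits in a port of the given Layer 1 rate."""
    return port_rate_kbps * frame_bytes / (frame_bytes + L1_OVERHEAD_BYTES)


def required_bandwidth_kbps(test_rate_kbps: int, policing: bool = False) -> int:
    """Bandwidth to keep available: twice the test rate, plus 12.5% for policing.

    Assumption: the extra 12.5% is applied to the doubled figure.
    """
    required = 2 * test_rate_kbps
    if policing:
        required = required * 1125 // 1000
    return required


def duration_skew_kbps(rate_kbps: float, duration_s: float,
                       skew_ms: float = 20) -> float:
    """Throughput error if the test runs skew_ms longer than configured.

    Assumption: throughput is computed over the configured duration.
    """
    return rate_kbps * skew_ms / (duration_s * 1000)
```

For the 2000-byte frames and 9,900,000 kbps PIR in the configuration example, `l1_rate_kbps` returns 9,999,000 kbps of line rate, just under 10 Gb/s. With 64-byte frames, a 10 Gb/s port carries at most about 7,619,048 kbps of Layer 2 traffic. A 20-ms skew shifts the computed throughput by about 600 kbps on a 5-minute test at 9,000,000 kbps, but only by about 55 kbps on a 1-hour test at 9,900,000 kbps.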

Configuring service test OAM testhead tool parameters

The following example shows a service activation testhead configuration.

MD-CLI

[ex:/configure test-oam service-activation-testhead]

A:admin@node-2# info detail

## apply-groups

## apply-groups-exclude

acceptance-criteria-template "accept-1" {

## apply-groups

## apply-groups-exclude

## description

cir-threshold 9000000

pir-threshold 9900000

loss-threshold 0.05

loss-threshold-policing 25.0

delay-threshold 100

delay-var-threshold 10

m-factor 1000

}

acceptance-criteria-template "default" {

## apply-groups

## apply-groups-exclude

description "Test OAM TestHead default acceptance criteria template (not modifiable)"

## cir-threshold

## pir-threshold

## loss-threshold

## loss-threshold-policing

## delay-threshold

## delay-var-threshold

## m-factor

}

frame-size-template "default" {

## apply-groups

## apply-groups-exclude

size-a 64

size-b 128

size-c 256

size-d 512

size-e 1024

size-f 1280

size-g 1518

size-h 9212

size-u 2000

}

frame-size-template "frame-1" {

## apply-groups

## apply-groups-exclude

size-a 64

size-b 128

size-c 256

size-d 512

size-e 1024

size-f 1280

size-g 1518

size-h 9212

size-u 2000

}

service-test "sat-1-gen" {

## apply-groups

## apply-groups-exclude

admin-state enable

## description

## accounting-policy

service-test-completion-notification true

stream-run-type parallel

test-duration {

cir {

minutes-seconds "05:00"

}

cir-pir {

minutes-seconds "10:00"

}

policing {

minutes-seconds "10:00"

}

performance {

hours-minutes-seconds "01:00:00"

}

}

service-stream 1 {

## apply-groups

## apply-groups-exclude

admin-state enable

## description

acceptance-criteria-template "accept-1"

rate-cir 9000000

rate-pir 9900000

service-stream-completion-notification false

frame-mix {

frame-size-template "frame-1"

sequence "u"

}

test-types {

cir true

cir-pir true

policing false

performance true

}

frame-payload {

data-pattern {

repeat 0x00000000

}

ethernet {

dst-mac 00:00:00:00:00:02

eth-cfm {

source {

mep-id 1

md-admin-name "level-2"

ma-admin-name "L2-1564-testing"

}

}

s-tag {

dot1p 5

discard-eligible false

}

c-tag {

dot1p 7

discard-eligible false

}

}

}

}

}

classic CLI

A:node-2>config>test-oam# service-activation-testhead

A:node-2>config>test-oam>sath# info detail

----------------------------------------------

acceptance-criteria-template "default" create

no description

no cir-threshold

no delay-threshold

no delay-var-threshold

no loss-threshold

no loss-threshold-policing

no m-factor

no pir-threshold

exit

acceptance-criteria-template "accept-1" create

no description

cir-threshold 9000000

delay-threshold 100

delay-var-threshold 10

loss-threshold 0.0500

loss-threshold-policing 25.0000

m-factor 1000

pir-threshold 9900000

exit

frame-size-template "default" create

size-a 64

size-b 128

size-c 256

size-d 512

size-e 1024

size-f 1280

size-g 1518

size-h 9212

size-u 2000

exit

frame-size-template "frame-1" create

size-a 64

size-b 128

size-c 256

size-d 512

size-e 1024

size-f 1280

size-g 1518

size-h 9212

size-u 2000

exit

service-test "sat-1-gen" create

no description

no accounting-policy

service-test-completion-notification

stream-run-type parallel

service-stream 1 create

no description

acceptance-criteria-template "accept-1"

rate cir 9000000 pir 9900000

no service-stream-completion-notification

frame-mix

frame-size-template "frame-1"

sequence "u"

exit

frame-payload

data-pattern

repeat 0

exit

ethernet

dst-mac 00:00:00:00:00:02

c-tag

discard-eligible false

dot1p 7

exit

eth-cfm

source mep 1 domain 1000 association 1000

exit

s-tag

discard-eligible false

dot1p 5

exit

exit

exit

test-types

cir

cir-pir

performance

no policing

exit

no shutdown

exit

test-duration

cir

minutes 5

seconds 0

exit

cir-pir

minutes 10

seconds 0

exit

performance

hours 1

minutes 0

seconds 0

exit

policing

minutes 10

seconds 0

exit

exit

no shutdown

exit

----------------------------------------------

Use the following command to start a service test.

oam service-activation-testhead service-test "sat-1-gen" start

Use the following command to display the run instance summary of a service test.

show test-oam service-activation-testhead service-tests

===============================================================================

Y.1564 Service Activation Test Run Summary

===============================================================================

Service Test Name Run Completed (UTC)

-------------------------------------------------------------------------------

sat-1-gen 13 2003/07/14 12:44:41

sat-1-gen 14 2003/07/16 22:42:17

sat-1-gen 15 N/A

-------------------------------------------------------------------------------

No. of Y.1564 Service Activation Test Runs: 3

===============================================================================

Use the following command to display the service test results.

show test-oam service-activation-testhead service-test "sat-1-gen" run 13

-------------------------------------------------------------------------------

Y.1564 Results for Run 13

Service Test sat-1-gen

-------------------------------------------------------------------------------

Description : (Not Specified)

Oper State : passed

Start Time : 2003/07/14 11:29:21 UTC

End Time : 2003/07/14 12:44:41 UTC

Stream Run Type : parallel

Acct Policy : (Unspecified)

Acct Policy Status: none

Completion Notif'n: enabled

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

Y.1564 Results for Stream 1 Run 13

Service Test sat-1-gen

-------------------------------------------------------------------------------

Description : (Not Specified)

Oper State : passed

Source MEP : 1 MEP Domain : 1000

MEP Assoc. : 1000

Router Inst. : Base

Router Intf. : to-dut-2

Source Port : 1/1/c5/2

Source MAC : 00:00:00:00:00:01 Dest MAC : 00:00:00:00:00:02

Pattern (Dec): 0 Pattern (Hex): 0x0

C-Tag Dot1p : 7 C-Tag DEI : false

S-Tag Dot1p : 5 S-Tag DEI : false

Frm Size Tmpl: frame-1

Frm Sequence : u Frame Size a : 64 bytes

Frame Size b : 128 bytes Frame Size c : 256 bytes

Frame Size d : 512 bytes Frame Size e : 1024 bytes

Frame Size f : 1280 bytes Frame Size g : 1518 bytes

Frame Size h : 9212 bytes Frame Size u : 2000 bytes

Confg Tx CIR : 9000000 kbps Confg Tx PIR : 9900000 kbps

Acp Crit Tmpl: accept-1

Test Types : CIR CIR-PIR Performance

Complet Notif: disabled

-------------------------------------------------------------------------------

-------------------------------------------------------------------------------

Y.1564 Results for Stream 1 Run 13 Test Type CIR

Service Test sat-1-gen

-------------------------------------------------------------------------------

Oper State : passed

Test Duration: 05:00 (mm:ss) Time Left : 0 s

Start Time : 2003/07/14 11:29:23 UTC

End Time : 2003/07/14 11:34:23 UTC

Frms Injected: 169168256 Frms Received: 169168256

Min Delay : 32 us Min Delay Var: 0 us

Max Delay : 48 us Max Delay Var: 12 us

Avg Delay : 40 us Avg Delay Var: 3 us

-------------------------------------------------------------------------------

===============================================================================

Test Compliance Report

===============================================================================

Criteria Throughput(kbps) FLR (%) Delay (us) Delay Var (us)

-------------------------------------------------------------------------------

Acceptable 9000000 0.0500 100 10

Configured 9000000 0.0500 100 10

M-Factor 1000 N/A N/A N/A

Measured 9000075 0.0000 40 3

Result pass pass pass pass

===============================================================================

-------------------------------------------------------------------------------

Y.1564 Results for Stream 1 Run 13 Test Type CIR-PIR

Service Test sat-1-gen

-------------------------------------------------------------------------------

Oper State : passed

Test Duration: 10:00 (mm:ss) Time Left : 0 s

Start Time : 2003/07/14 11:34:30 UTC

End Time : 2003/07/14 11:44:30 UTC

Frms Injected: 371389928 Frms Received: 371389928

Min Delay : 36 us Min Delay Var: 0 us

Max Delay : 96 us Max Delay Var: 20 us

Avg Delay : 43 us Avg Delay Var: 2 us

-------------------------------------------------------------------------------

===============================================================================

Test Compliance Report

===============================================================================

Criteria Throughput(kbps) FLR (%) Delay (us) Delay Var (us)

-------------------------------------------------------------------------------

Acceptable 9900000 0.0500 100 10

Configured 9900000 0.0500 100 10

M-Factor 1000 N/A N/A N/A

Measured 9900070 0.0000 43 2

Result pass pass pass pass

===============================================================================

-------------------------------------------------------------------------------

Y.1564 Results for Stream 1 Run 13 Test Type Performance

Service Test sat-1-gen

-------------------------------------------------------------------------------

Oper State : passed

Test Duration: 01:00:00 (hh:mm:ss) Time Left : 0 s

Start Time : 2003/07/14 11:44:36 UTC

End Time : 2003/07/14 12:44:36 UTC

Frms Injected: 2227620145 Frms Received: 2227620145

Min Delay : 36 us Min Delay Var: 0 us

Max Delay : 96 us Max Delay Var: 20 us

Avg Delay : 43 us Avg Delay Var: 2 us

-------------------------------------------------------------------------------

===============================================================================

Test Compliance Report

===============================================================================

Criteria Throughput(kbps) FLR (%) Delay (us) Delay Var (us)

-------------------------------------------------------------------------------

Acceptable 9900000 0.0500 100 10

Configured 9900000 0.0500 100 10

M-Factor 1000 N/A N/A N/A

Measured 9900007 0.0000 43 2

Result pass pass pass pass

===============================================================================
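The FLR column of the compliance reports can be cross-checked from the frame counts in the sample output with a short Python sketch. It uses the conventional definition FLR = 100 × (frames injected − frames received) / frames injected. Clamping negative loss to zero and treating a loss equal to the threshold as a pass are design assumptions of this sketch. The function names are hypothetical.

```python
def frame_loss_ratio_pct(injected: int, received: int) -> float:
    """FLR as a percentage of injected frames: 100 * (Tx - Rx) / Tx."""
    if injected <= 0:
        raise ValueError("no frames were injected")
    lost = max(injected - received, 0)  # design choice: never report negative loss
    return 100.0 * lost / injected


def loss_passes(injected: int, received: int, threshold_pct: float) -> bool:
    """Assumption: a loss ratio equal to the threshold still passes."""
    return frame_loss_ratio_pct(injected, received) <= threshold_pct


# Frame counts copied from run 13 of service test "sat-1-gen" above.
RUN_13_FRAMES = {
    "CIR": (169_168_256, 169_168_256),
    "CIR-PIR": (371_389_928, 371_389_928),
    "Performance": (2_227_620_145, 2_227_620_145),
}

for test_type, (tx, rx) in RUN_13_FRAMES.items():
    flr = frame_loss_ratio_pct(tx, rx)
    print(f"{test_type:<12} FLR {flr:.4f}%  pass={loss_passes(tx, rx, 0.05)}")
```

For each test type in run 13, the sketch reports an FLR of 0.0000% and a pass against the 0.05% threshold of "accept-1", matching the Measured and Result rows above. A hypothetical run losing 600 of 1,000,000 frames (0.06%) would fail the same threshold.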