TCAM allocation on SR Linux devices

Ternary Content Addressable Memory (TCAM) on SR Linux devices can be allocated to system resources either statically or dynamically, based on configuration requirements.

The following sections describe static and dynamic allocation for TCAM banks (known as TCAM slices) and provide details on how SR Linux allocates TCAM on specific devices.

Static TCAM allocation

Static TCAM refers to TCAM slices that are allocated at initialization and remain reserved for their allocated purpose. Each static TCAM slice has a type, which refers to the type or types of entries that it stores. Examples of entry types are IPv4 ingress ACL and IPv6 ingress ACL. A static TCAM slice consumes resources even if it has no entries.

When the system is initialized (for example, after a restart), static TCAM slices are allocated first, before the configuration is evaluated and dynamic TCAM slices are created.

Dynamic TCAM allocation

Dynamic TCAM refers to TCAM slices that are taken from a free or unused pool of TCAM slices and released back to that pool when configuration determines that they are no longer needed.

Each dynamic TCAM slice has a type, which refers to the type or types of entries that it stores. When the configuration of the device has no entries of the type associated with a particular dynamic TCAM slice, there are no allocated slices of that type. The device does not hold onto empty TCAM banks to guarantee a minimum reservation.

The addition of the first entry associated with a particular dynamic TCAM slice type attempts to create the required group of slices (slice group). Depending on the entry type (and the resulting TCAM key length), the size of the slice group can be one TCAM slice (single-wide TCAM slice type), two TCAM slices (double-wide TCAM slice type), or three TCAM slices (triple-wide TCAM slice type). If a configuration change adds many entries of a new type, multiple slice groups may be needed.
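The relationship between entry count, slice group width, and slice consumption can be sketched as follows. This is a minimal illustration, not the SR Linux implementation: the function name `slices_needed` and the `entries_per_group` parameter are hypothetical, and the capacity of a slice group depends on the platform.

```python
import math

# Slices consumed by one slice group, keyed by the width names used in the text.
SLICES_PER_GROUP = {"single-wide": 1, "double-wide": 2, "triple-wide": 3}


def slices_needed(entries, entries_per_group, width):
    """Return (slice_groups, slices) required to hold `entries` entries.

    `entries_per_group` is an assumed, platform-specific capacity of one
    slice group of the given width.
    """
    if entries <= 0:
        # No entries of this type: no dynamic slices are held for it.
        return 0, 0
    groups = math.ceil(entries / entries_per_group)
    return groups, groups * SLICES_PER_GROUP[width]


if __name__ == "__main__":
    # Hypothetical case: 700 entries of a triple-wide type, 512 entries per group.
    print(slices_needed(700, 512, "triple-wide"))
```

In this hypothetical case, 700 entries do not fit in one group of 512, so two triple-wide slice groups (six slices) are required.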

Subsequent configuration changes may add more entries of a type that already has dynamic TCAM slices allocated. When the net number of new entries of that type (that is, additional entries minus deleted entries) exceeds the limit of the current allocation (after filling all empty holes in existing allocated slice groups), the system attempts to allocate new slice groups. There is no artificially imposed limit on the maximum number of slices that can be consumed for an entry type.

If a configuration change requires additional dynamic TCAM slices, and there are not enough free slices to accommodate the new slice groups, even if existing groups are moved to satisfy system constraints on the placement of groups, then the configuration commit is blocked.

If a configuration change requires additional dynamic TCAM slices, and there are enough free slices to accommodate the new slice groups only if existing groups are moved to satisfy system constraints on the placement of groups, then the commit is allowed and the TCAM slice relocation operations are initiated automatically. Moving a bank to a new location can take two or three minutes, so an operation that involves multiple banks could delay the programming of the new entries by 20 minutes or more.
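As a rough worked example of the relocation delay, the sketch below multiplies the number of banks to move by the two-to-three-minute figure quoted above. It assumes that banks are moved one after another, which the text does not state explicitly; the function name is hypothetical.

```python
def relocation_delay_minutes(banks_to_move, min_per_bank=2, max_per_bank=3):
    """Return a (low, high) estimate in minutes for moving `banks_to_move` banks.

    Assumes sequential moves at 2 to 3 minutes per bank (figures from the text).
    """
    if banks_to_move < 0:
        raise ValueError("banks_to_move must be non-negative")
    return banks_to_move * min_per_bank, banks_to_move * max_per_bank


if __name__ == "__main__":
    low, high = relocation_delay_minutes(7)
    print(f"Estimated delay: {low} to {high} minutes")
```

Under these assumptions, moving seven banks takes roughly 14 to 21 minutes, which is in line with the 20-minute figure mentioned above.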

The deletion of the last entry associated with a particular dynamic slice group deletes the group and makes the freed slices available to other slice types. If a single configuration commit deletes entries of one type and adds entries of another type, the deleted entries (and any reclamation of slices) are processed first, so that there is a greater chance of successfully allocating the additional slices.
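The benefit of processing deletions before additions can be illustrated with a simplified model. The sketch below assumes single-wide slices only, ignores placement constraints, and assumes that slices are reclaimed as soon as the remaining entries fit in fewer slices; `apply_commit` and its parameters are hypothetical names, not SR Linux APIs.

```python
import math


def slices_for(entries, per_slice):
    """Number of single-wide slices needed for `entries` entries."""
    return math.ceil(entries / per_slice) if entries > 0 else 0


def apply_commit(free, entries, deletes, adds, per_slice=512):
    """Apply one commit to a pool of `free` slices.

    `entries`, `deletes`, and `adds` map an entry type to a number of entries.
    Returns (free_slices, new_entries) or raises RuntimeError if blocked.
    """
    entries = dict(entries)  # work on a copy so a blocked commit changes nothing

    # Step 1: deletions first, so reclaimed slices return to the free pool.
    for etype, count in deletes.items():
        before = slices_for(entries.get(etype, 0), per_slice)
        entries[etype] = max(0, entries.get(etype, 0) - count)
        free += before - slices_for(entries[etype], per_slice)

    # Step 2: additions, which can now use the slices freed in step 1.
    for etype, count in adds.items():
        before = slices_for(entries.get(etype, 0), per_slice)
        entries[etype] = entries.get(etype, 0) + count
        extra = slices_for(entries[etype], per_slice) - before
        if extra > free:
            raise RuntimeError(f"commit blocked: {etype} needs {extra} more slice(s)")
        free -= extra

    return free, entries


if __name__ == "__main__":
    # No free slices at the start; the add succeeds only because the delete runs first.
    print(apply_commit(0, {"ipv4": 1024, "mac": 512},
                       deletes={"ipv4": 512}, adds={"mac": 512}))
```

In this model, the pool starts with no free slices. Deleting 512 IPv4 entries releases one slice, which the additional MAC entries then consume; processing the addition first would have blocked the commit.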

The deletion of some (but not all) entries of a specific type that already has dynamic TCAM slices allocated can leave empty entries in these TCAM slices. This can create a situation where there are N allocated slices for a specific entry type but, considering the empty entries in each of these N slices, fewer than N slices would be needed if the used entries were moved between slices to consolidate them. This process, called slice compaction, is done automatically during the processing of a configuration transaction that results in a net deletion of entries; it should not be service-impacting.
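The arithmetic behind slice compaction can be sketched as follows. This is an illustration only: it assumes single-wide slices of equal capacity and that any used entry can be moved to any slice of the same type; the function name is hypothetical.

```python
import math


def compaction_savings(used_per_slice, entries_per_slice):
    """Return how many slices could be freed by consolidating used entries.

    `used_per_slice` holds the number of used entries in each allocated slice.
    """
    used = sum(used_per_slice)
    needed = math.ceil(used / entries_per_slice)
    return len(used_per_slice) - needed


if __name__ == "__main__":
    # Three allocated slices that are each mostly empty.
    print(compaction_savings([100, 200, 150], 512))
```

With 450 used entries spread over three 512-entry slices, a single slice is sufficient, so two slices can be returned to the free pool.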

The maximum number of statistics resources available to dynamic TCAM entry types remains fixed, and does not scale to the maximum possible size for an entry type. If an ACL rule is configured to have per-entry statistics, but a statistics index resource cannot be allocated, this is indicated by statistics-resource-allocated = false in the YANG state for the entry.

Displaying static and dynamic TCAM usage

Use the info from state platform command to display the number of free static and dynamic TCAM entries available to each type of ACL. For example:

    --{ running }--[ ]--
    # info from state platform linecard 1 forwarding-complex 0 tcam resource *
    platform {
        linecard 1 {
            forwarding-complex 0 {
                tcam {
                    resource if-input-ipv4 {
                        free-static 6912
                        free-dynamic 0
                        reserved 0
                        programmed 0
                    }
                    resource if-input-ipv4-qos {
                        free-static 6904
                        free-dynamic 0
                        reserved 8
                        programmed 8
                    }
                    resource if-input-ipv6 {
                        free-static 2304
                        free-dynamic 0
                        reserved 0
                        programmed 0
                    }
                    resource if-input-ipv6-qos {
                        free-static 2262
                        free-dynamic 0
                        reserved 42
                        programmed 42
                    }
                    resource if-input-mac {
                        free-static 2304
                        free-dynamic 0
                        reserved 0
                        programmed 0
                    }
                    resource if-input-policer {
                        free-static 1536
                        free-dynamic 0
                        reserved 0
                        programmed 0
                    }
                    resource if-output-cpm-ipv4 {
                        free-static 1536
                        free-dynamic 0
                        reserved 0
                        programmed 0
                    }
                    resource if-output-cpm-ipv6 {
                        free-static 512
                        free-dynamic 0
                        reserved 0
                        programmed 0
                    }
                    resource if-output-cpm-mac {
                        free-static 1536
                        free-dynamic 0
                        reserved 0
                        programmed 0
                    }
                    resource system-capture-ipv4 {
                        free-static 768
                        free-dynamic 0
                        reserved 0
                        programmed 0
                    }
                    resource system-capture-ipv6 {
                        free-static 384
                        free-dynamic 0
                        reserved 0
                        programmed 0
                    }
                }
            }
        }
    }

The free-static statistic is the number of free entries, assuming the number of TCAM slices that are currently allocated to the type of entry remains constant.

The free-dynamic statistic is the number of free entries that are possible if all remaining unused TCAM slices are dynamically assigned to this one type of entry.
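For scripted monitoring, the text output of the command can be turned into a dictionary of counters. The following is a minimal sketch that assumes the brace-delimited layout shown in the example output; `parse_tcam_resources` and `SAMPLE` are hypothetical names, and the sample is a shortened copy of that output.

```python
import re

# Shortened copy of the example output, used only for illustration.
SAMPLE = """
platform {
    linecard 1 {
        forwarding-complex 0 {
            tcam {
                resource if-input-ipv4 {
                    free-static 6912
                    free-dynamic 0
                    reserved 0
                    programmed 0
                }
                resource if-input-ipv6-qos {
                    free-static 2262
                    free-dynamic 0
                    reserved 42
                    programmed 42
                }
            }
        }
    }
}
"""


def parse_tcam_resources(text):
    """Return {resource_name: {counter_name: int}} parsed from the CLI text."""
    resources = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        match = re.match(r"resource\s+(\S+)\s*\{$", line)
        if match:
            # Start of a new "resource <name> {" block.
            current = resources.setdefault(match.group(1), {})
            continue
        if line == "}":
            # Any closing brace ends the current resource block.
            current = None
            continue
        parts = line.split()
        if current is not None and len(parts) == 2 and parts[1].isdigit():
            current[parts[0]] = int(parts[1])
    return resources


if __name__ == "__main__":
    for name, counters in parse_tcam_resources(SAMPLE).items():
        print(name, counters["free-static"], counters["free-dynamic"])
```

The parser only reads counter lines inside a resource block, so the enclosing platform, linecard, and forwarding-complex levels are skipped.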

TCAM allocation on 7220 IXR-D1

On the 7220 IXR-D1, all TCAM slices are allocated statically. The 7220 IXR-D1 does not support dynamic TCAM allocation. The 7220 IXR-D1 has 3 groups of TCAM slices associated with 3 different stages of the forwarding pipeline. Each of these groups is a separate resource pool.

TCAM allocation details for each stage are summarized in the following table.

| Stage | TCAM allocation details |
|---|---|
| VFP | There are 4 VFP slices. Each VFP slice provides 512 entries. IPv4 packet capture filter entries require a single slice of single-width entries, providing a maximum of 512 entries per slice. One bank is statically allocated, providing scaling of 512 entries. IPv6 packet capture filter entries require a single slice of double-width entries, providing a maximum of 256 entries per slice. One bank is statically allocated, providing scaling of 256 entries. One bank is unused. |
| IFP | There are 18 IFP slices. Each IFP slice provides 512 entries. Ingress IPv4 filter entries require a single slice of single-width entries, providing a maximum of 512 entries per slice. Two banks are statically allocated, providing scaling of 1024 entries. Ingress IPv6 filter entries require a triple-wide slice, providing a maximum of 512 entries per triple-wide slice. Six banks are statically allocated, providing scaling of 1024 entries. Ingress MAC filter entries require a double-wide slice, providing a maximum of 512 entries per double-wide slice. Zero banks are statically allocated. Six banks are unused. |
| EFP | There are 4 EFP slices. Each EFP slice provides 256 entries. Egress IPv4 and IPv4 CPM filter entries require a single slice of single-width entries, providing a maximum of 256 entries per slice. One bank is statically allocated, providing scaling of 256 entries. Egress IPv6 and IPv6 CPM filter entries require a double-wide slice, providing a maximum of 256 entries per double-wide slice. Two banks are statically allocated, providing scaling of 256 entries. Egress MAC filter entries require a single-wide slice, providing a maximum of 256 entries per slice. Zero banks are statically allocated. One bank is unused. |

TCAM allocation on 7220 IXR-D2 and D3

The 7220 IXR-D2 and 7220 IXR-D3 have 3 groups of TCAM slices associated with 3 different stages of the forwarding pipeline. Each of these groups is a separate resource pool. A free slice in one pool is not available to an entry type associated with a different stage of the pipeline. For example, freeing an IFP bank does not provide one more available EFP bank.

The details of each stage, in terms of supported dynamic TCAM entry types, total number of TCAM slices, and number of pre-reserved static TCAM slices (with their associated entry types), are summarized in the following table.

| Stage | TCAM allocation details |
|---|---|
| VFP | There are 4 VFP slices. Each VFP slice provides 256 entries indexed by a 234-bit key or 128 entries indexed by a 468-bit key (intra-slice double-wide mode). |
| | 1 slice is allocated statically. Entries serve 2 purposes: |
| | 3 slices are available for dynamic allocation: |
| IFP | Lookup happens after QoS classification, tunnel decapsulation, and FIB lookup. Used for ingress interface ACLs, ingress subinterface policing, ingress MF QoS classification, VXLAN ES functionality, and CPM extraction (CPU QoS queue assignment). There are 12 IFP slices. Each IFP slice provides 768 entries indexed by a 160-bit key (intra-slice double-wide mode). |
| | 4 slices are allocated statically: |
| | 8 slices are available for dynamic allocation: |
| EFP | Lookup happens before final packet modification, after CoS rewrite. Used for out-mirror stats, egress interface ACLs, and CPM filter ACLs. There are 4 EFP slices. Each EFP slice provides 512 entries indexed by a 272-bit key. |
| | 1 slice is allocated statically. Entries serve 2 purposes: |
| | 3 slices are available for dynamic allocation: |
| | XGS has limitations expanding an EFP slice when the entries have policers; therefore, the following restriction is imposed. If a single-wide IPv4 slice has been created, and it has entries with policers (for example, CPM IPv4 filter entries) or entries with a drop and log action, it is not possible to expand the number of IPv4 slices beyond this single slice. Conversely, if the number of IPv4 slices has been extended to 2 or more, it is not possible to attach a policer or add a drop and log action to any entries in the expanded set of slices. |

TCAM allocation on 7220 IXR-D4 and D5

The 7220 IXR-D4 and 7220 IXR-D5 have 3 groups of TCAM slices associated with 3 different stages of the forwarding pipeline.

TCAM allocation details for each stage are summarized in the following table.

| Stage | TCAM allocation details |
|---|---|
| VFP | There are 4 VFP slices, providing a total of 4*256 = 1024 entries. All slices are allocated statically. |
| | 1 slice: |
| | 3 slices for packet capture and system filter entries: |
| IFP | There are 12 IFP slices on 7220 IXR-D4/D5, providing a total of 12*2K = 24K entries. |
| | On 7220 IXR-D5, all slices are allocated statically: |
| EFP | There are 4 EFP slices, providing a total of 4*512 = 2048 entries. |
| | 1 slice is allocated statically. Entries serve 2 purposes: |
| | 3 slices are available for dynamic allocation. |

TCAM allocation on 7250 IXR-6/10/6e/10e and 7250 IXR-X series

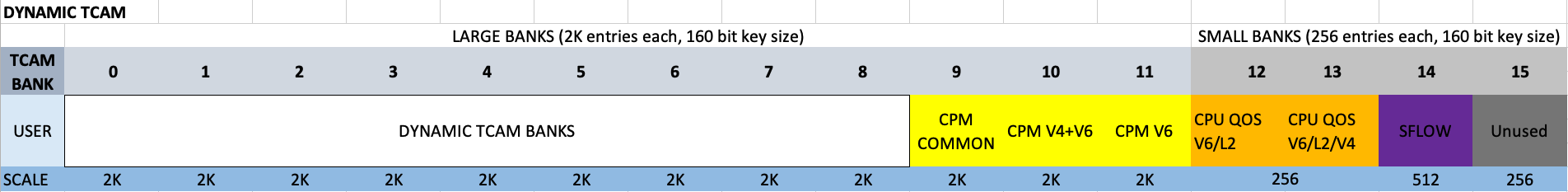

Each forwarding complex on a 7250 IXR-6/10/6e/10e and 7250 IXR-X series IMM has a TCAM with 12 large banks and 4 small banks. Each large bank supports 2K entries, addressable with a 160-bit key. Each small bank supports 256 entries, also with a 160-bit key. This TCAM bank allocation is shown in the figure "TCAM allocation on 7250 IXR-6/10/6e/10e and 7250 IXR-X platforms".

ACLs can be dynamically allocated to banks 0-8. Requirements for each ACL type are as follows:

- Ingress IPv4 ACL: entries require single-wide banks that can start at any bank number (and do not need to be contiguous)

- Egress IPv4 ACL: entries require single-wide banks that can start at any bank number (and do not need to be contiguous)

- Ingress IPv6 ACL: entries require double-wide (side-by-side) banks that must start at an even bank number

- Egress IPv6 ACL: entries require double-wide (side-by-side) banks that must start at an even bank number

- IPv4 policy-forwarding (PBF): entries require single-wide banks that can start at any bank number (and do not need to be contiguous)

Dynamic TCAM allocation works as follows:

- When a new bank needs to be allocated, the system looks for the first available space, progressing in ascending order from bank 0. If space for a double-wide bank cannot be found, the system attempts to make space by moving the smallest possible number of single-wide banks.

- When the number of entries required by a particular user drops to a level where a single-wide or double-wide bank can be freed up, the system selects the bank that can create the largest space of free banks, moving entries between banks as necessary.
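The allocation rule in the first bullet can be sketched as a first-fit search with an even-alignment constraint for double-wide banks. This is a simplified model, not the actual SR Linux algorithm: the function names are hypothetical, only single-wide banks are treated as movable, a double-wide pair is assumed to lie entirely within banks 0-8, and the freeing behavior in the second bullet is not modeled.

```python
NUM_BANKS = 9  # banks 0-8 are available for dynamic ACL allocation


def allocate_single(banks, owner):
    """First fit: claim the lowest-numbered free bank, or return None."""
    for bank in range(NUM_BANKS):
        if banks[bank] is None:
            banks[bank] = (owner, "single")
            return bank
    return None


def allocate_double(banks, owner):
    """Claim an even-aligned pair, moving as few single-wide banks as possible.

    Returns (start_bank, moves), where moves is a list of (from, to) bank
    relocations, or None if no pair can be made available.
    """
    best = None  # (number_of_moves, start_bank)
    for start in range(0, NUM_BANKS - 1, 2):  # candidate starts: 0, 2, 4, 6
        pair = (start, start + 1)
        occupied = [b for b in pair if banks[b] is not None]
        if any(banks[b][1] == "double" for b in occupied):
            continue  # assumption: existing double-wide banks are not moved
        spare = [b for b in range(NUM_BANKS) if banks[b] is None and b not in pair]
        if len(spare) < len(occupied):
            continue  # nowhere to relocate the occupants
        if best is None or len(occupied) < best[0]:
            best = (len(occupied), start)  # ties keep the lowest start bank
    if best is None:
        return None

    start = best[1]
    pair = (start, start + 1)
    spare = [b for b in range(NUM_BANKS) if banks[b] is None and b not in pair]
    moves = []
    for bank in pair:
        if banks[bank] is not None:
            dest = spare.pop(0)  # relocate to the lowest free bank outside the pair
            banks[dest], banks[bank] = banks[bank], None
            moves.append((bank, dest))
    banks[start] = banks[start + 1] = (owner, "double")
    return start, moves


if __name__ == "__main__":
    banks = [None] * NUM_BANKS
    for b in (0, 2, 4, 6):  # fragmented layout: no even-aligned pair is free
        banks[b] = ("ipv4-ingress", "single")
    print(allocate_double(banks, "ipv6-ingress"))
    print(banks)
```

In the fragmented layout used here, every even-aligned pair contains one single-wide bank, so the sketch relocates one bank and places the double-wide bank at the lowest candidate position.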