VSR Hypervisor Configuration

This chapter provides information about VSR hypervisor configuration.

Topics in this chapter include:

Applicability

The information and configuration in this chapter apply to all VSR releases.

Overview

Deployment of the Nokia Virtualized Service Router (VSR) virtual machine (VM) requires a highly tuned hypervisor. Configuration of a KVM hypervisor server requires changing the BIOS settings, the kernel parameters, and, finally, the XML file that defines the VSR VM. Running non-VSR VMs on the same hypervisor is only allowed if there is no resource overlap or oversubscription with the VSR VM. This includes dedicated memory and CPU pinning so that no other VM can use the VSR’s CPUs.

Configuration

The detailed configuration requirements are described in the VSR VNF Installation and Setup Guide. The following examples show VSR VM deployments on commonly used hypervisor hardware running a host OS such as RHEL 7 or CentOS 7, and must be adapted as needed. Some of the commands shown may not be installed by default.

The configuration consists of the following topics which apply across all server types:

- BIOS requirements

- CPU settings, such as NUMA and hyperthreading

- Host OS and Network Interface Card (NIC) requirements

Specific configuration examples are provided for the following servers:

- Nokia VSR Appliance (VSR-a) AirFrame server

- Dell server (with and without hyperthreading)

- HPE server (with and without hyperthreading)

A complete XML file example is also provided.

Required BIOS settings

- SR-IOV enabled (if used)

- Intel VT-x enabled

- Intel VT-d enabled

- x2APIC enabled

- NUMA enabled (if used)

- hardware prefetcher disabled

- I/O non posted prefetching disabled

- adjacent cache line prefetching disabled

- PCIe Active State Power Management (ASPM) support disabled

- Advanced Configuration and Power Interface (ACPI)

- P-state disabled

- C-state disabled

- NUMA node interleaving disabled (if NUMA enabled)

- turbo boost enabled

- power management set to maximum or high performance

- CPU frequency set to maximum supported without overclocking

- memory speed set to maximum supported without overclocking

CPU terminology

This chapter uses the following terminology to describe CPUs:

- socket: the physical socket on the motherboard that the CPU is inserted into, typically one or two per motherboard

- CPU: depending on the context, this is either the physical CPU package that is inserted into the socket or a logical processor that can be assigned to the VM in the XML file. (CPU can mean physical package, die, core, or logical processor.)

- core: the physical CPU die contains multiple CPU cores or processors that can be further logically divided into threads (two per core)

- processor: logical processor, CPU, or thread; either one per core if hyperthreading is disabled or two per core if hyperthreading is enabled

- pCPU: either one of the CPU cores if hyperthreading is disabled or one of the threads if hyperthreading is enabled

- vCPU: a virtual CPU assigned to the VM, running on one of the pCPUs.

Multiple-socket servers

To use multiple-socket servers with multiple NUMA nodes, NUMA must be enabled in the BIOS. When using multiple NUMA nodes on a server, ensure that all resources belonging to a single VSR do not span across more than one NUMA node. Consequently, to fully utilize a dual socket server, it is necessary to provision multiple VSR VMs or use non-VSR VMs on one of the NUMA nodes.

Hyperthreading

VSR CPU pinning configuration is more complex if hyperthreading is enabled. As hyperthreading does not always provide a performance benefit, hyperthreading can be disabled if it is not needed. Hyperthreading is recommended for CPU-intensive packet applications like Application Assurance (AA) and IPsec.

Host OS

Ensure that the host OS and kernel are supported by consulting the SR OS Software Release Notes for the specific VSR release.

To verify the host OS:

[admin@hyp56 ~]$ cat /etc/*release*

CentOS Linux release 7.9.2009 (Core)

Derived from Red Hat Enterprise Linux 7.9 (Source)

...

To verify the host kernel:

[admin@hyp56 ~]$ uname -a

Linux hyp56 3.10.0-1160.66.1.el7.x86_64 #1 SMP Wed May 18 16:02:34 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux

Host NICs

Ensure that the host NICs are supported by consulting the SR OS Software Release Notes for the specific VSR release. The firmware version must also be supported, as well as the drivers, if applicable (not applicable to PCI-PT for example). The lshw command output shows all host NICs and their PCI addresses:

[root@hyp56 ~]# lshw -c network -businfo

Bus info Device Class Description

========================================================

pci@0000:04:00.0 p6p1 network MT27700 Family [ConnectX-4]

pci@0000:04:00.1 p6p2 network MT27700 Family [ConnectX-4]

pci@0000:01:00.0 em1 network Ethernet Controller 10-Gigabit X540-AT2

pci@0000:01:00.1 em2 network Ethernet Controller 10-Gigabit X540-AT2

pci@0000:06:00.0 p5p1 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:06:00.1 p5p2 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:06:00.2 p5p3 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:06:00.3 p5p4 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:09:00.0 em3 network I350 Gigabit Network Connection

pci@0000:09:00.1 em4 network I350 Gigabit Network Connection

pci@0000:81:00.0 network Ethernet 10G 2P X520 Adapter

pci@0000:81:00.1 network Ethernet 10G 2P X520 Adapter

pci@0000:83:00.0 p4p1 network MT27700 Family [ConnectX-4]

pci@0000:83:00.1 p4p2 network MT27700 Family [ConnectX-4]

pci@0000:83:00.2 p4p1_0 network MT27700 Family [ConnectX-4 Virtual Function]

pci@0000:83:00.4 p4p2_0 network MT27700 Family [ConnectX-4 Virtual Function]

pci@0000:85:00.0 p3p1 network Ethernet 10G 2P X520 Adapter

pci@0000:85:00.1 p3p2 network Ethernet 10G 2P X520 Adapter

pci@0000:87:00.0 p2p1 network Ethernet 10G 2P X520 Adapter

pci@0000:87:00.1 p2p2 network Ethernet 10G 2P X520 Adapter

br-mgmt network Ethernet interface

virbr0-nic network Ethernet interface

virbr0 network Ethernet interface

vnet0 network Ethernet interface

This server has several types of NICs, including Mellanox ConnectX-4 and Intel X710. The PCI address format is domain:bus:device.function. Different ports on the same physical NIC have addresses that differ only by the function number; for example, in the preceding lshw output, the first two ports are on the same physical NIC. Virtual functions (VFs) may have a different device or function number than their physical NIC, and VF numbering schemes may vary. Another way to check the installed NICs is using the lspci command:

[admin@hyp62 ~]$ lspci -v | grep "Ethernet controller"

02:00.0 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5719 Gigabit Ethernet PCIe (rev 01)

02:00.1 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5719 Gigabit Ethernet PCIe (rev 01)

02:00.2 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5719 Gigabit Ethernet PCIe (rev 01)

02:00.3 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5719 Gigabit Ethernet PCIe (rev 01)

04:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

04:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

05:00.0 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 01)

05:00.1 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 01)

05:00.2 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 01)

05:00.3 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 01)

05:02.0 Ethernet controller: Intel Corporation Ethernet Virtual Function 700 Series (rev 01)

81:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

81:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)
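The domain:bus:device.function format described above can be handled programmatically. The following is a minimal Python sketch, not part of the VSR tooling; the function names parse_pci, group_by_nic, and hostdev_address are illustrative assumptions. It splits an address into its fields, groups ports that differ only by function number, and renders the address element used in a libvirt hostdev source. Because VFs can share the bus and device numbers of their physical NIC, the grouping does not distinguish VFs from physical ports.

```python
import re
from collections import defaultdict

# Matches "domain:bus:device.function", for example "0000:04:00.1".
# The domain is optional because lspci omits it when it is 0000.
PCI_RE = re.compile(
    r"^(?:(?P<domain>[0-9a-fA-F]{4}):)?"
    r"(?P<bus>[0-9a-fA-F]{2}):(?P<device>[0-9a-fA-F]{2})\.(?P<function>[0-7])$"
)


def parse_pci(address):
    """Split a PCI address string into its four hexadecimal fields."""
    match = PCI_RE.match(address.strip())
    if match is None:
        raise ValueError(f"not a PCI address: {address!r}")
    fields = match.groupdict()
    fields["domain"] = fields["domain"] or "0000"
    return {name: value.lower() for name, value in fields.items()}


def group_by_nic(addresses):
    """Group port addresses that differ only by function number."""
    nics = defaultdict(list)
    for address in addresses:
        f = parse_pci(address)
        nics[(f["domain"], f["bus"], f["device"])].append(f["function"])
    return dict(nics)


def hostdev_address(address):
    """Render the <address> element used inside a libvirt <hostdev> source."""
    f = parse_pci(address)
    return (
        f"<address domain='0x{f['domain']}' bus='0x{f['bus']}' "
        f"slot='0x{f['device']}' function='0x{f['function']}'/>"
    )
```

For example, hostdev_address("17:00.1") produces an address element with bus 0x17, slot 0x00, and function 0x1.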

Nokia AirFrame server (VSR-a)

The VSR-a hypervisor is an AirFrame server that has been customized by Nokia R&D.

Server model and motherboard

The server model can be identified with the dmidecode command:

[root@hyp70 admin]# dmidecode -t 2

# dmidecode 3.0

Getting SMBIOS data from sysfs.

SMBIOS 3.2 present.

# SMBIOS implementations newer than version 3.0 are not

# fully supported by this version of dmidecode.

Handle 0x0002, DMI type 2, 15 bytes

Base Board Information

Manufacturer: Nokia Solutions and Networks

Product Name: AR-D52BT-A/AF0310.01

...

The Product Name field describes the server type and motherboard; this example corresponds to a VSR-a SN8.

CPU

The lscpu command shows the number of sockets, cores, threads, and their numbering scheme:

[root@hyp70 admin]# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 48

On-line CPU(s) list: 0-47

Thread(s) per core: 2

Core(s) per socket: 24

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) Platinum 8160 CPU @ 2.10GHz

Stepping: 4

CPU MHz: 2100.000

BogoMIPS: 4200.00

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 1024K

L3 cache: 33792K

NUMA node0 CPU(s): 0-47

In this example, there is a single socket, the CPU has 24 cores, and hyperthreading is enabled, resulting in 48 vCPUs, numbered consecutively from 0 to 47.

When vCPUs are assigned to a VSR VM and hyperthreading is enabled in the BIOS, it is important to ensure that the first two vCPUs are siblings of the same pCPU core, the next two vCPUs are siblings of some other pCPU core, and so on. Verify the hyperthreading numbering scheme using the cat /proc/cpuinfo command:

[root@hyp70 admin]# cat /proc/cpuinfo | egrep "processor|physical id|core id"

processor : 0

physical id : 0

core id : 0

processor : 1

physical id : 0

core id : 1

processor : 2

physical id : 0

core id : 2

processor : 3

physical id : 0

core id : 3

…

processor : 24

physical id : 0

core id : 0

processor : 25

physical id : 0

core id : 1

processor : 26

physical id : 0

core id : 2

processor : 27

physical id : 0

core id : 3

…

This output is listed in processor order: by core if hyperthreading is disabled, and by thread if hyperthreading is enabled. The output is abbreviated here to show only the first four processors in each half of the numbering range. The physical id (CPU socket) is always 0 because there is only one socket, and the core id (pCPU number) increases with the processor (vCPU) number until vCPU 23, then wraps around and starts from zero again at vCPU 24.

This numbering scheme makes all vCPU numbers consecutive whether hyperthreading is turned on or off in the BIOS. Therefore, if the CPU pinning in the XML file assumes that hyperthreading is off, the XML file remains valid even if hyperthreading is turned on.

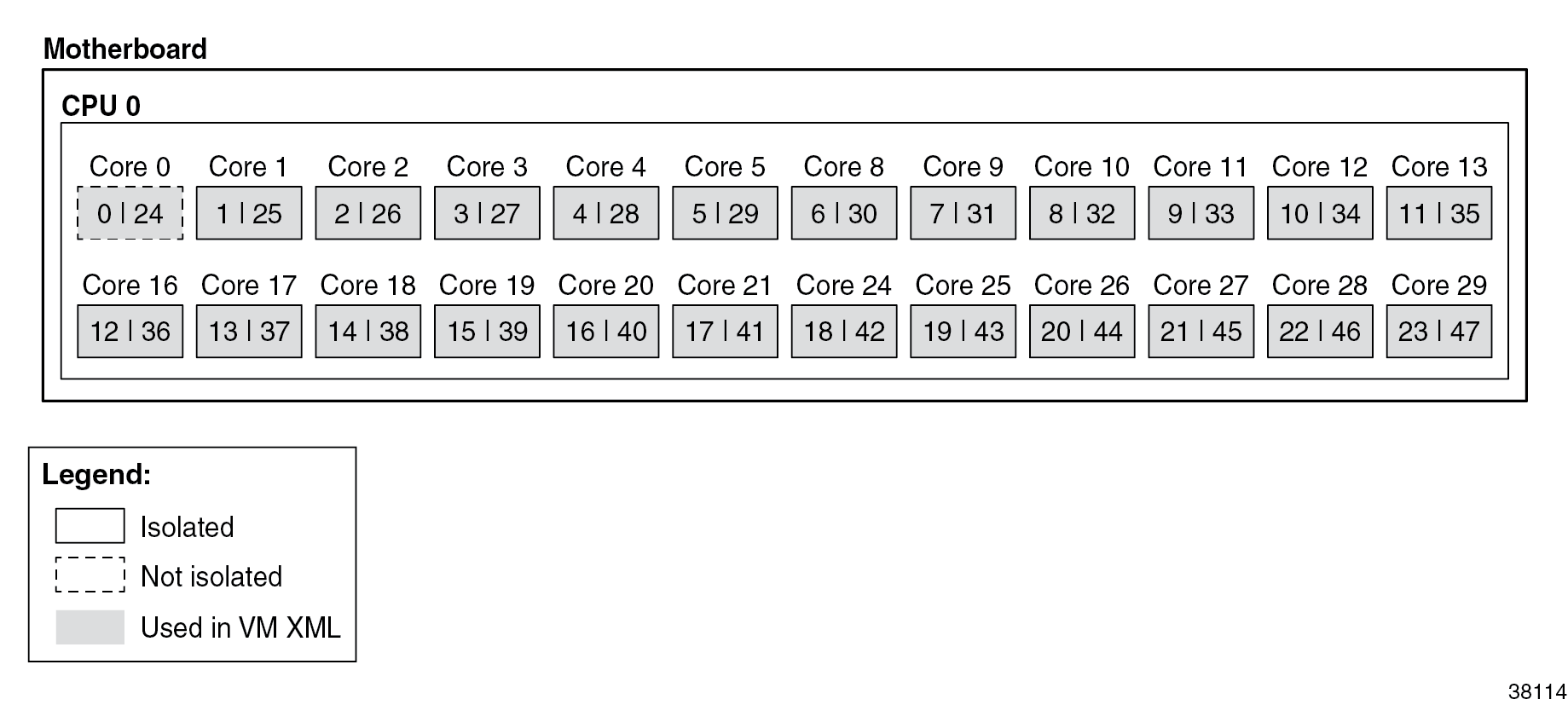

Numbering scheme Airframe with hyperthreading enabled shows the numbering scheme. All available CPUs are assigned to VM and emulator functions and core id numbers are not all consecutive, while processor numbers are.
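The sibling check described above can be automated. The following Python sketch is an illustration only; the function name sibling_groups is an assumption, and the parsing relies on the field names and ordering printed by /proc/cpuinfo on the hosts shown in this chapter. It groups processor numbers by physical id and core id so that the threads sharing a core are listed together.

```python
from collections import defaultdict


def sibling_groups(cpuinfo_text):
    """Map (physical id, core id) to the sorted processor numbers on that core."""
    groups = defaultdict(list)
    current = {}
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue
        key, value = key.strip(), value.strip()
        if key in ("processor", "physical id", "core id"):
            current[key] = int(value)
        # "core id" is the last of the three fields in each processor stanza,
        # so the stanza is complete once it has been read.
        if key == "core id":
            core = (current["physical id"], current["core id"])
            groups[core].append(current["processor"])
    return {core: sorted(cpus) for core, cpus in groups.items()}
```

On a live host, the input can be read with open("/proc/cpuinfo").read(). On the AirFrame server in this example, each core would be expected to list processors n and n + 24.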

Network ports

This server has the following network ports:

[root@hyp70 xml]# lspci -v | grep "Ethernet controller"

17:00.0 Ethernet controller: Mellanox Technologies MT27800 Family [ConnectX-5]

17:00.1 Ethernet controller: Mellanox Technologies MT27800 Family [ConnectX-5]

6a:00.0 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GbE SFP+ (rev 04)

6a:00.1 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GbE SFP+ (rev 04)

b3:00.0 Ethernet controller: Mellanox Technologies MT27800 Family [ConnectX-5]

b3:00.1 Ethernet controller: Mellanox Technologies MT27800 Family [ConnectX-5]

Kernel parameters

The following kernel parameters include all required and recommended parameters for CPU isolation based on the installed CPU’s numbering scheme and the number of hugepages corresponding to the maximum amount of memory usable by a VSR VM.

[root@hyp70 admin]# cat /proc/cmdline

BOOT_IMAGE=/vmlinuz-3.10.0-862.51.1.el7.x86_64 root=UUID=dae36453-3a6b-4d93-b6b8-80b74de3b823 ro hugepagesz=1G default_hugepagesz=1G hugepages=64 isolcpus=1-23,25-47 selinux=0 audit=0 kvm_intel.ple_gap=0 pci=realloc pcie_aspm=off intel_iommu=on iommu=pt nopat ixgbe.allow_unsupported_sfp=1,1,1,1,1,1,1,1,1,1,1,1 intremap=no_x2apic_optout console=tty0 console=ttyS0,115200 crashkernel=auto rd.md.uuid=c3dae94c:76b2416c:39008bea:d16a2541 rd.md.uuid=6d84171b:6588e600:01020790:6c9744e2 net.ifnames=0 rhgb quiet

On this server, all vCPUs are isolated except for the threads on the first pCPU, threads 0 and 24. These two threads will be used for the emulatorpin in the XML file.
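The isolcpus value follows directly from the list of processors reserved for the host and the emulator threads. The following Python sketch shows one way to derive it; the function names compress_cpu_list and isolcpus_value are illustrative assumptions, and the sketch only formats the string and does not modify the boot configuration.

```python
def compress_cpu_list(cpus):
    """Render CPU numbers as a kernel-style list such as '1-23,25-47'."""
    ordered = sorted(set(cpus))
    parts = []
    start = prev = None
    for cpu in ordered:
        if start is None:
            start = prev = cpu
        elif cpu == prev + 1:
            prev = cpu
        else:
            # The current run ended; emit it and start a new one.
            parts.append(str(start) if start == prev else f"{start}-{prev}")
            start = prev = cpu
    if start is not None:
        parts.append(str(start) if start == prev else f"{start}-{prev}")
    return ",".join(parts)


def isolcpus_value(all_cpus, housekeeping):
    """Isolate every CPU except those kept for the host and emulator threads."""
    return compress_cpu_list(set(all_cpus) - set(housekeeping))


# AirFrame example from this section: 48 processors, 0 and 24 left to the host.
airframe = isolcpus_value(range(48), [0, 24])  # "1-23,25-47"
```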

XML file

The following section configures the memory with 64GB of hugepages and ACPI:

<domain type='kvm'>

<name>VSR-A</name>

<uuid>afa0cce8-8ee0-496f-a6e0-d8c49764cba5</uuid>

<description>VSR-a SN8</description>

<memory unit='G'>64</memory>

<memoryBacking>

<hugepages>

<page size='1' unit='G' nodeset='0'/>

</hugepages>

<nosharepages/>

</memoryBacking>

<features>

<acpi/>

</features>

The next section configures CPU settings for a single thread per pCPU. This configuration still takes full advantage of all pCPUs and is valid even if hyperthreading is enabled in the BIOS:

<vcpu placement='static'>23</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='1'/>

<vcpupin vcpu='1' cpuset='2'/>

<vcpupin vcpu='2' cpuset='3'/>

<vcpupin vcpu='3' cpuset='4'/>

<vcpupin vcpu='4' cpuset='5'/>

<vcpupin vcpu='5' cpuset='6'/>

<vcpupin vcpu='6' cpuset='7'/>

<vcpupin vcpu='7' cpuset='8'/>

<vcpupin vcpu='8' cpuset='9'/>

<vcpupin vcpu='9' cpuset='10'/>

<vcpupin vcpu='10' cpuset='11'/>

<vcpupin vcpu='11' cpuset='12'/>

<vcpupin vcpu='12' cpuset='13'/>

<vcpupin vcpu='13' cpuset='14'/>

<vcpupin vcpu='14' cpuset='15'/>

<vcpupin vcpu='15' cpuset='16'/>

<vcpupin vcpu='16' cpuset='17'/>

<vcpupin vcpu='17' cpuset='18'/>

<vcpupin vcpu='18' cpuset='19'/>

<vcpupin vcpu='19' cpuset='20'/>

<vcpupin vcpu='20' cpuset='21'/>

<vcpupin vcpu='21' cpuset='22'/>

<vcpupin vcpu='22' cpuset='23'/>

<emulatorpin cpuset='0'/>

</cputune>

<cpu mode='host-model'>

<model fallback='allow'/>

</cpu>

Note the emulatorpin directive, which pins emulator threads onto a dedicated CPU that was not isolated in the kernel parameters.

The following is an alternate CPU configuration that is only valid with hyperthreading enabled in the BIOS and all threads assigned to the proper pCPUs for this system, based on the cat /proc/cpuinfo command:

<vcpu placement='static'>46</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='1'/>

<vcpupin vcpu='1' cpuset='25'/>

<vcpupin vcpu='2' cpuset='2'/>

<vcpupin vcpu='3' cpuset='26'/>

<vcpupin vcpu='4' cpuset='3'/>

<vcpupin vcpu='5' cpuset='27'/>

<vcpupin vcpu='6' cpuset='4'/>

<vcpupin vcpu='7' cpuset='28'/>

<vcpupin vcpu='8' cpuset='5'/>

<vcpupin vcpu='9' cpuset='29'/>

<vcpupin vcpu='10' cpuset='6'/>

<vcpupin vcpu='11' cpuset='30'/>

<vcpupin vcpu='12' cpuset='7'/>

<vcpupin vcpu='13' cpuset='31'/>

<vcpupin vcpu='14' cpuset='8'/>

<vcpupin vcpu='15' cpuset='32'/>

<vcpupin vcpu='16' cpuset='9'/>

<vcpupin vcpu='17' cpuset='33'/>

<vcpupin vcpu='18' cpuset='10'/>

<vcpupin vcpu='19' cpuset='34'/>

<vcpupin vcpu='20' cpuset='11'/>

<vcpupin vcpu='21' cpuset='35'/>

<vcpupin vcpu='22' cpuset='12'/>

<vcpupin vcpu='23' cpuset='36'/>

<vcpupin vcpu='24' cpuset='13'/>

<vcpupin vcpu='25' cpuset='37'/>

<vcpupin vcpu='26' cpuset='14'/>

<vcpupin vcpu='27' cpuset='38'/>

<vcpupin vcpu='28' cpuset='15'/>

<vcpupin vcpu='29' cpuset='39'/>

<vcpupin vcpu='30' cpuset='16'/>

<vcpupin vcpu='31' cpuset='40'/>

<vcpupin vcpu='32' cpuset='17'/>

<vcpupin vcpu='33' cpuset='41'/>

<vcpupin vcpu='34' cpuset='18'/>

<vcpupin vcpu='35' cpuset='42'/>

<vcpupin vcpu='36' cpuset='19'/>

<vcpupin vcpu='37' cpuset='43'/>

<vcpupin vcpu='38' cpuset='20'/>

<vcpupin vcpu='39' cpuset='44'/>

<vcpupin vcpu='40' cpuset='21'/>

<vcpupin vcpu='41' cpuset='45'/>

<vcpupin vcpu='42' cpuset='22'/>

<vcpupin vcpu='43' cpuset='46'/>

<vcpupin vcpu='44' cpuset='23'/>

<vcpupin vcpu='45' cpuset='47'/>

<emulatorpin cpuset="0,24"/>

</cputune>

<cpu mode='host-model'>

<model fallback='allow'/>

<topology sockets='1' cores='23' threads='2'/>

</cpu>
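Writing 46 vcpupin lines by hand is error-prone, so the hyperthreaded pinning above can also be generated. The following Python sketch is an illustration under the assumption, taken from the cat /proc/cpuinfo output in this section, that processors n and n + 24 share a core; the function name vcpupin_lines is hypothetical.

```python
def vcpupin_lines(sibling_pairs, emulator_cpus):
    """Build <cputune> content that keeps each pair of vCPUs on one physical core."""
    lines = ["<cputune>"]
    vcpu = 0
    for pair in sibling_pairs:
        # Consecutive vCPUs are pinned to the two threads of the same core.
        for host_cpu in pair:
            lines.append(f"<vcpupin vcpu='{vcpu}' cpuset='{host_cpu}'/>")
            vcpu += 1
    cpuset = ",".join(str(cpu) for cpu in emulator_cpus)
    lines.append(f"<emulatorpin cpuset='{cpuset}'/>")
    lines.append("</cputune>")
    return lines


# AirFrame numbering from this section: cores 1 to 23 go to the VM,
# core 0 (processors 0 and 24) is kept for the emulator threads.
pairs = [(n, n + 24) for n in range(1, 24)]
xml = vcpupin_lines(pairs, emulator_cpus=(0, 24))
```

The number of generated vcpupin lines must match the vcpu element, and half that number must match the cores attribute of the topology element.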

Next is the smbios section where many important configuration parameters are passed to the VSR VM. Several settings can be defined here in place of the bof.cfg file on cf3.

<sysinfo type='smbios'>

<system>

<entry name='product'>TIMOS: chassis=VSR-I slot=A card=cpm-v mda/1=m20-v mda/2=isa-ms-v static-route=172.16.0.0/8@172.16.36.1 address=172.16.37.71/23@active primary-config=cf3:/config.cfg license-file=cf3:/license.txt</entry>

</system>

</sysinfo>

Next are the standard OS and clock features:

<os>

<type arch='x86_64' machine='pc'>hvm</type>

<boot dev='hd'/>

<smbios mode='sysinfo'/>

</os>

<clock offset='utc'>

<timer name='pit' tickpolicy='delay'/>

<timer name='rtc' tickpolicy='catchup'/>

<timer name='hpet' present='no'/>

</clock>

The final section contains devices and starts with the disks, serial, and console ports:

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='none'/>

<source file='/var/lib/libvirt/images/vsr-a.qcow2'/>

<target dev='hda' bus='virtio'/>

</disk>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='none'/>

<source file='/var/lib/libvirt/images/cf1.qcow2'/>

<target dev='hdb' bus='virtio'/>

</disk>

<serial type='pty'>

<source path='/dev/pts/1'/>

<target port='0'/>

<alias name='serial0'/>

</serial>

<console type='pty' tty='/dev/pts/1'>

<source path='/dev/pts/1'/>

<target type='serial' port='0'/>

<alias name='serial0'/>

</console>

In this case, the configuration has a separate qcow2 file for cf1. This file can be used to back up SR OS files before an upgrade, as the cf3 qcow2 file gets replaced during the upgrade. The devices section also contains the network ports (PCI devices):

<interface type='bridge'>

<source bridge='br0'/>

<model type='virtio'/>

</interface>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x17' slot='0x00' function='0x0'/>

</source>

<rom bar='off'/>

</hostdev>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x17' slot='0x00' function='0x1'/>

</source>

<rom bar='off'/>

</hostdev>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0xb3' slot='0x00' function='0x0'/>

</source>

<rom bar='off'/>

</hostdev>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0xb3' slot='0x00' function='0x1'/>

</source>

<rom bar='off'/>

</hostdev>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x6a' slot='0x00' function='0x1'/>

</source>

<rom bar='off'/>

</hostdev>

The first network port is assigned to the SR OS management port, the next to port 1/1/1, then 1/1/2, and so on. The Mellanox ports are assigned to ports 1/1/1 to 1/1/3 using the PCI addresses obtained with the lshw command.

Dell server

Server model and motherboard

The server model can be identified with the dmidecode command:

[root@hyp56 ~]# dmidecode -t 2

# dmidecode 3.2

Getting SMBIOS data from sysfs.

SMBIOS 2.8 present.

Handle 0x0200, DMI type 2, 8 bytes

Base Board Information

Manufacturer: Dell Inc.

Product Name: 072T6D

Version: A06

Serial Number: .DJ2XHH2.CN7793173D00E3.

This product name corresponds to a Dell PowerEdge R730.

CPU

The lscpu command gives the number of sockets, cores, threads, and their numbering scheme.

With hyperthreading disabled:

[root@hyp56 ~]# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 44

On-line CPU(s) list: 0-43

Thread(s) per core: 1

Core(s) per socket: 22

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 79

Model name: Intel(R) Xeon(R) CPU E5-2699A v4 @ 2.40GHz

Stepping: 1

CPU MHz: 1200.000

CPU max MHz: 3000.0000

CPU min MHz: 1200.0000

BogoMIPS: 4799.90

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 56320K

NUMA node0 CPU(s): 0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38,40,42

NUMA node1 CPU(s): 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39,41,43

…

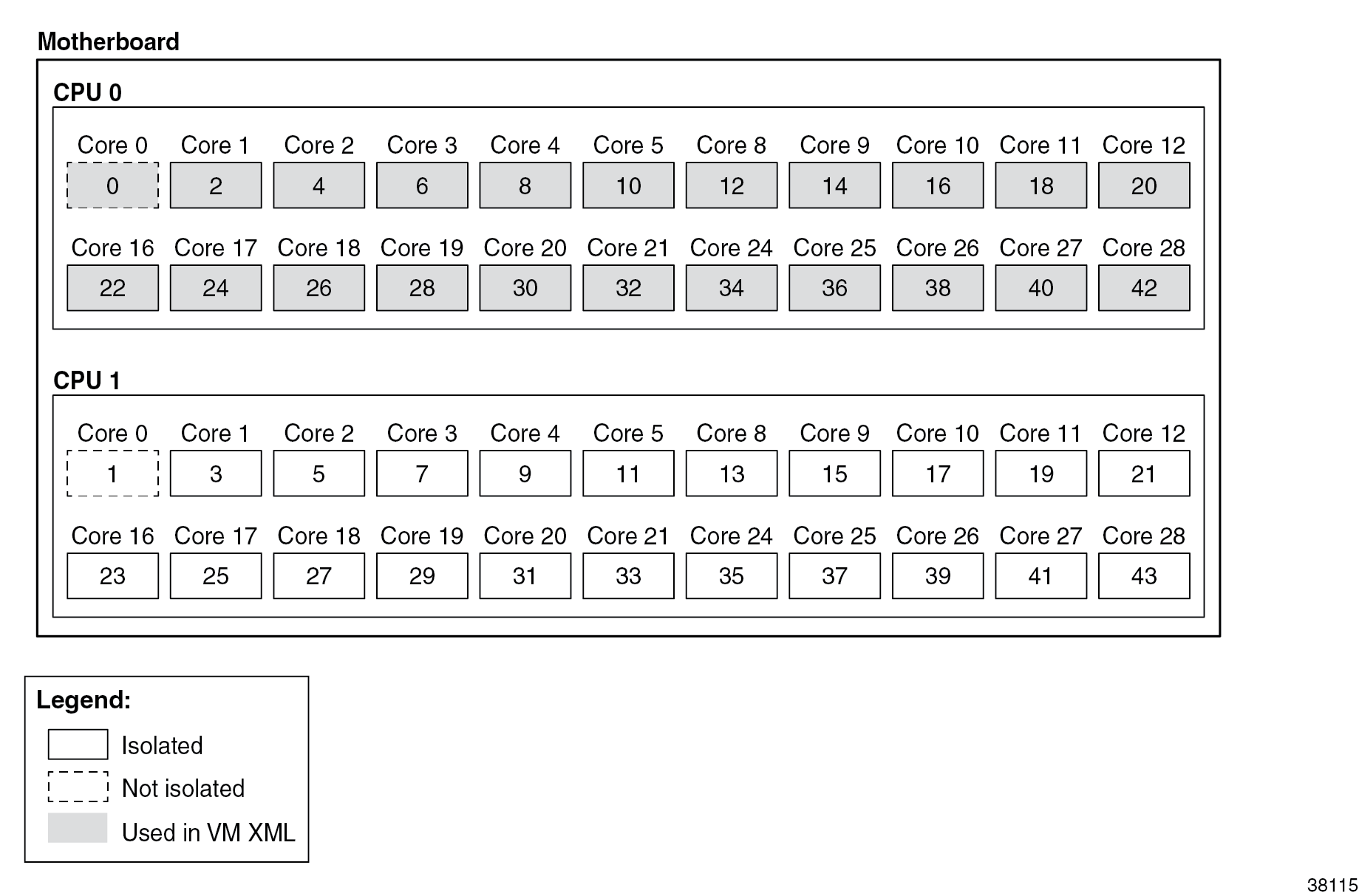

This system has two CPU sockets, each CPU has 22 cores, and hyperthreading is disabled, resulting in 44 vCPUs.

Use the cat /proc/cpuinfo command to confirm the CPU numbering scheme and see that all even number CPUs (listed as "processors" in the command output) are on NUMA node 0 (listed as "physical id" in the command output), while all odd number CPUs are on NUMA node 1. As a result, all even number CPUs can be assigned to one VSR VM, while all odd number CPUs must be assigned to a different VM.

[root@hyp56 ~]# cat /proc/cpuinfo | egrep "processor|physical id|core id"

processor : 0

physical id : 0

core id : 0

processor : 1

physical id : 1

core id : 0

processor : 2

physical id : 0

core id : 1

processor : 3

physical id : 1

core id : 1

processor : 4

physical id : 0

core id : 2

processor : 5

physical id : 1

core id : 2

processor : 6

physical id : 0

core id : 3

processor : 7

physical id : 1

core id : 3

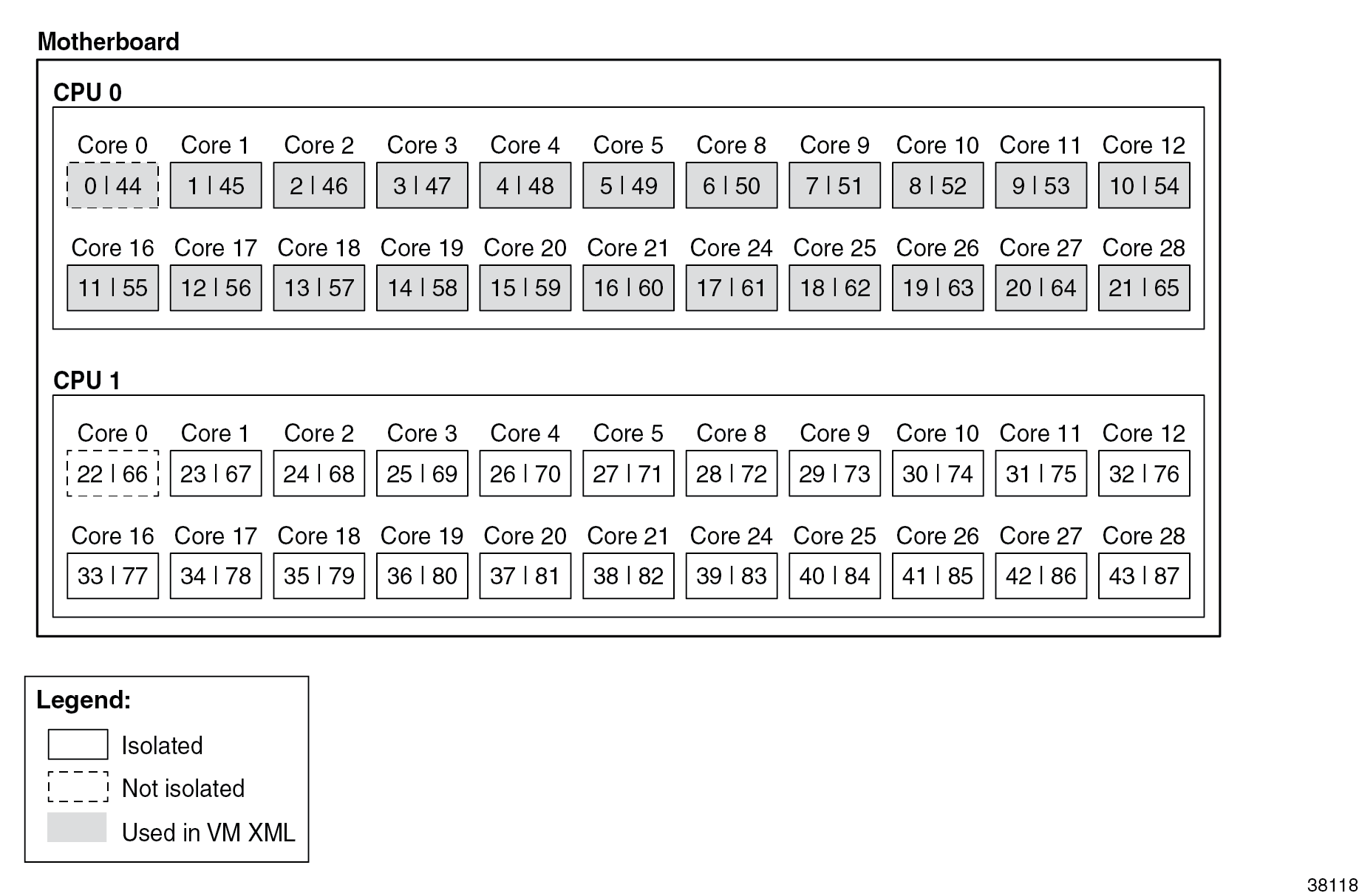

…Numbering scheme Dell server without hyperthreading shows the numbering scheme. Only NUMA node 0 (CPU 0) CPUs are used for VM functions in this example, but CPU isolation is also configured on NUMA node 1 for a possible identical future VSR VM:

With hyperthreading enabled:

[root@hyp56 admin]# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 88

On-line CPU(s) list: 0-87

Thread(s) per core: 2

Core(s) per socket: 22

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 79

Model name: Intel(R) Xeon(R) CPU E5-2699A v4 @ 2.40GHz

Stepping: 1

CPU MHz: 1199.853

CPU max MHz: 3000.0000

CPU min MHz: 1200.0000

BogoMIPS: 4800.22

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 56320K

NUMA node0 CPU(s): 0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38,40,42,44,46,48,50,52,54,56,58,60,62,64,66,68,70,72,74,76,78,80,82,84,86

NUMA node1 CPU(s): 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39,41,43,45,47,49,51,53,55,57,59,61,63,65,67,69,71,73,75,77,79,81,83,85,87

…

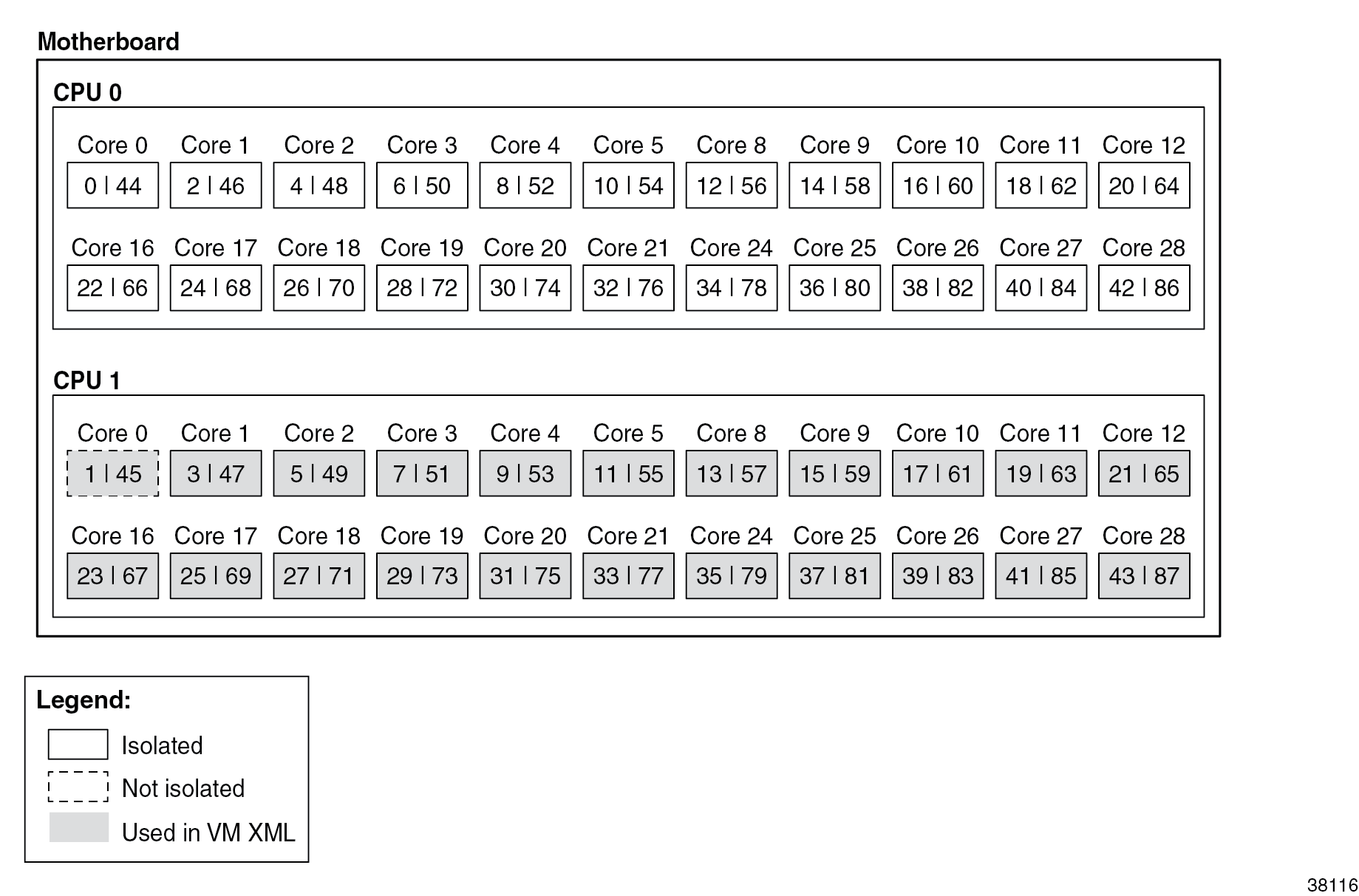

The system has 88 CPUs across two sockets, with all even-number CPUs belonging to NUMA node 0 and all odd-number CPUs belonging to NUMA node 1. The numbering scheme is such that the additional threads start at number 44 and continue with even-number CPUs on NUMA 0 and odd-number CPUs on NUMA 1:

[root@hyp56 admin]# cat /proc/cpuinfo | egrep "processor|physical id|core id"

processor : 0

physical id : 0

core id : 0

processor : 1

physical id : 1

core id : 0

…

processor : 44

physical id : 0

core id : 0

processor : 45

physical id : 1

core id : 0

…

processor : 86

physical id : 0

core id : 28

processor : 87

physical id : 1

core id : 28

Numbering scheme Dell server with hyperthreading shows this numbering scheme. Only NUMA node 1 CPUs are used for the example VM with hyperthreading enabled. NUMA node 0 CPUs are isolated for future use with another identical VSR VM.
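The numbering scheme on this Dell server can be expressed as two small rules: the NUMA node is the parity of the CPU number, and the second thread of a core is offset by 44. The following Python sketch encodes these rules as read from the lscpu and cat /proc/cpuinfo output above; the names are illustrative assumptions, and the rules must be rechecked on any other server.

```python
CORES_PER_SOCKET = 22
SOCKETS = 2
# 44: the number at which the second threads start on this server.
THREAD_OFFSET = CORES_PER_SOCKET * SOCKETS


def numa_node(cpu):
    """Even-numbered CPUs are on NUMA node 0, odd-numbered CPUs on node 1."""
    return cpu % SOCKETS


def sibling(cpu):
    """Return the other hyperthread on the same core."""
    return cpu + THREAD_OFFSET if cpu < THREAD_OFFSET else cpu - THREAD_OFFSET


def core_pairs(node):
    """List (first thread, second thread) for every core on one NUMA node."""
    return [(cpu, sibling(cpu)) for cpu in range(node, THREAD_OFFSET, SOCKETS)]
```

For NUMA node 1, core_pairs(1) starts with (1, 45), which is the pair left unisolated for the emulator threads, followed by (3, 47), (5, 49), and so on.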

Network ports

This server has the following network ports:

[root@hyp56 ~]# lshw -c network -businfo

Bus info Device Class Description

========================================================

pci@0000:04:00.0 p6p1 network MT27700 Family [ConnectX-4]

pci@0000:04:00.1 p6p2 network MT27700 Family [ConnectX-4]

pci@0000:01:00.0 em1 network Ethernet Controller 10-Gigabit X540-AT2

pci@0000:01:00.1 em2 network Ethernet Controller 10-Gigabit X540-AT2

pci@0000:06:00.0 p5p1 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:06:00.1 p5p2 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:06:00.2 p5p3 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:06:00.3 p5p4 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:09:00.0 em3 network I350 Gigabit Network Connection

pci@0000:09:00.1 em4 network I350 Gigabit Network Connection

pci@0000:81:00.0 network Ethernet 10G 2P X520 Adapter

pci@0000:81:00.1 network Ethernet 10G 2P X520 Adapter

pci@0000:83:00.0 p4p1 network MT27700 Family [ConnectX-4]

pci@0000:83:00.1 p4p2 network MT27700 Family [ConnectX-4]

pci@0000:83:00.2 p4p1_0 network MT27700 Family [ConnectX-4 Virtual Function]

pci@0000:83:00.4 p4p2_0 network MT27700 Family [ConnectX-4 Virtual Function]

pci@0000:85:00.0 p3p1 network Ethernet 10G 2P X520 Adapter

pci@0000:85:00.1 p3p2 network Ethernet 10G 2P X520 Adapter

pci@0000:87:00.0 p2p1 network Ethernet 10G 2P X520 Adapter

pci@0000:87:00.1 p2p2 network Ethernet 10G 2P X520 Adapter

br-mgmt network Ethernet interface

virbr0-nic network Ethernet interface

virbr0 network Ethernet interface

vnet0 network Ethernet interface

Because this server has two NUMA nodes, ensure that the NICs allocated to a VSR VM are on the same NUMA node as the VM’s CPUs. Carefully reviewing the Mellanox ConnectX-4 NIC 0000:04:00 ports shows that this NIC is on NUMA node 0:

[root@hyp56 ~]# lspci -v -s 04:00.0 | egrep "Ethernet|NUMA"

04:00.0 Ethernet controller: Mellanox Technologies MT27700 Family [ConnectX-4]

Flags: bus master, fast devsel, latency 0, IRQ 149, NUMA node 0

However, the Mellanox ConnectX-4 NIC 0000:83:00 is on NUMA node 1:

[root@hyp56 ~]# lspci -v -s 83:00.0 | egrep "Ethernet|NUMA"

83:00.0 Ethernet controller: Mellanox Technologies MT27700 Family [ConnectX-4]

Flags: bus master, fast devsel, latency 0, IRQ 456, NUMA node 1

The Mellanox NIC on NUMA node 1 has two VFs that can be used for SR-IOV.
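The NUMA locality check above can be scripted when many NICs are involved. The following Python sketch parses the text printed by lspci -v and flags NICs that are not on the VM's NUMA node; the function names are illustrative assumptions. On Linux hosts, the same value is typically also exposed in sysfs under /sys/bus/pci/devices/<address>/numa_node.

```python
import re


def numa_node_from_lspci(lspci_output):
    """Return the NUMA node reported on the 'Flags:' line, or None if absent."""
    match = re.search(r"NUMA node (\d+)", lspci_output)
    return int(match.group(1)) if match else None


def nics_on_wrong_node(nic_nodes, vm_node):
    """List the PCI addresses whose NUMA node differs from the VM's node."""
    return sorted(addr for addr, node in nic_nodes.items() if node != vm_node)


# Values copied from the lspci output in this section.
nic_nodes = {
    "04:00.0": numa_node_from_lspci(
        "Flags: bus master, fast devsel, latency 0, IRQ 149, NUMA node 0"
    ),
    "83:00.0": numa_node_from_lspci(
        "Flags: bus master, fast devsel, latency 0, IRQ 456, NUMA node 1"
    ),
}
mismatched = nics_on_wrong_node(nic_nodes, vm_node=0)  # ["83:00.0"]
```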

Kernel parameters

The following kernel parameters include those required for CPU isolation based on the installed CPU’s numbering scheme and a sufficient number of hugepages to accommodate the memory usage of all VSR VMs. With hyperthreading disabled:

[root@hyp56 ~]# cat /proc/cmdline

BOOT_IMAGE=/vmlinuz-3.10.0-1160.66.1.el7.x86_64 root=/dev/mapper/centos-root ro crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet pci=realloc pcie_aspm=off iommu=pt intel_iommu=on nopat hugepagesz=1G default_hugepagesz=1G hugepages=100 kvm_intel.ple_gap=0 isolcpus=2-43 selinux=0 audit=0 LANG=en_US.UTF-8

On this server, all CPUs are isolated except for the first CPU on each socket, CPUs 0 and 1. These two CPUs will be used for the emulatorpin in the XML file. Having 100 hugepages on a server with two NUMA nodes results in 50 hugepages per NUMA node and therefore 50 GB available to a VSR VM.
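The per-node hugepage arithmetic above can be sketched as follows. This Python example assumes that the kernel spreads the boot-time hugepages evenly across the NUMA nodes and that each page is 1 GB, as configured with hugepagesz=1G; the function names are illustrative assumptions.

```python
def hugepages_per_node(total_pages, numa_nodes):
    """Pages each NUMA node receives if the kernel spreads them evenly."""
    return total_pages // numa_nodes


def vm_fits(vm_memory_gb, total_pages, numa_nodes, page_size_gb=1):
    """Check whether a VM confined to one NUMA node fits in that node's hugepages."""
    available_gb = hugepages_per_node(total_pages, numa_nodes) * page_size_gb
    return vm_memory_gb <= available_gb


per_node = hugepages_per_node(100, 2)  # 50 pages, that is 50 GB per node
```

A check such as vm_fits(48, 100, 2) can be run before setting the memory element in the XML file, because a VM with strict NUMA placement cannot borrow hugepages from the other node.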

With hyperthreading enabled, the isolcpus parameter must be:

isolcpus=2-43,46-87

XML file for hyperthreading disabled and NUMA node 0

The XML file header contains the VM name:

<domain type='kvm'>

<name>vsr56-1</name>

The UUID is removed in order to generate a new UUID. The new UUID can be obtained with the virsh dumpxml command after running the VM and then pasted into the XML file to preserve it.

<!-- UUID: remove to auto-generate a new UUID -->

Configure hugepages and allocate 64 GB of RAM to the VSR VM. Here, the nodeset is always set to 0:

<memory unit="G">64</memory>

<memoryBacking>

<hugepages>

<page size="1" unit="G" nodeset="0"/>

</hugepages>

<nosharepages/>

</memoryBacking>

Configure the numatune setting to ensure that all the RAM is allocated from NUMA node 0, the NUMA node used for all resources on this VM:

<numatune>

<memory mode='strict' nodeset='0'/>

</numatune>

Configure the CPU features and CPU mode. The host-model with fallback=allow is recommended:

<features>

<acpi/>

</features>

<cpu mode='host-model'>

<model fallback='allow'/>

</cpu>

Configure the CPU pinning, with the emulatorpin set to a non-isolated CPU on the same NUMA node as the VM:

<vcpu placement='static'>21</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='2'/>

<vcpupin vcpu='1' cpuset='4'/>

<vcpupin vcpu='2' cpuset='6'/>

<vcpupin vcpu='3' cpuset='8'/>

<vcpupin vcpu='4' cpuset='10'/>

<vcpupin vcpu='5' cpuset='12'/>

<vcpupin vcpu='6' cpuset='14'/>

<vcpupin vcpu='7' cpuset='16'/>

<vcpupin vcpu='8' cpuset='18'/>

<vcpupin vcpu='9' cpuset='20'/>

<vcpupin vcpu='10' cpuset='22'/>

<vcpupin vcpu='11' cpuset='24'/>

<vcpupin vcpu='12' cpuset='26'/>

<vcpupin vcpu='13' cpuset='28'/>

<vcpupin vcpu='14' cpuset='30'/>

<vcpupin vcpu='15' cpuset='32'/>

<vcpupin vcpu='16' cpuset='34'/>

<vcpupin vcpu='17' cpuset='36'/>

<vcpupin vcpu='18' cpuset='38'/>

<vcpupin vcpu='19' cpuset='40'/>

<vcpupin vcpu='20' cpuset='42'/>

<emulatorpin cpuset='0'/>

</cputune>

Configure the OS features:

<os>

<type arch='x86_64' machine='pc'>hvm</type>

<boot dev='hd'/>

<smbios mode='sysinfo'/>

</os>

Configure SMBIOS. The control-cpu-cores and vsr-deployment-model=high-packet-touch parameters must only be enabled when required for the specific VSR deployment (see the VSR VNF Installation and Setup Guide and the SR OS Software Release Notes):

<sysinfo type='smbios'>

<system>

<entry name='product'>TiMOS:

address=172.16.224.192/24@active \

static-route=172.16.0.0/8@172.16.224.1 \

license-file=ftp://user:password@172.16.224.10/license/VSR_license.txt \

primary-config=ftp://user:pass@172.16.29.91/kvm/hyp56/vsr56-1.cfg \

chassis=vsr-i \

slot=A \

card=cpm-v \

slot=1 \

card=iom-v \

mda/1=m20-v \

mda/2=isa-ms-v \

system-base-mac=fa:ac:ff:ff:54:00 \

<!-- control-cpu-cores=2 \ -->

<!-- vsr-deployment-model=high-packet-touch \ -->

</entry>

</system>

</sysinfo>

Configure the clock:

<clock offset='utc'>

<timer name='pit' tickpolicy='delay'/>

<timer name='rtc' tickpolicy='catchup'/>

<timer name='hpet' present='no'/>

</clock>

The devices configuration section includes disks, network interfaces, and console ports. By default, a VSR is configured with cf3:

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='none'/>

<source file='/var/lib/libvirt/images/vsr56-1.qcow2'/>

<target dev='hda' bus='virtio'/>

</disk>

Configure network interfaces starting with a management port attached to a Linux bridge and two PCI-PT network interfaces on NUMA node 0:

<interface type='bridge'>

<source bridge='br-mgmt'/>

<model type='virtio'/>

<target dev='vsr1-mgmt'/>

</interface>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>

</source>

<rom bar='off'/>

</hostdev>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>

</source>

<rom bar='off'/>

</hostdev>

Configure the console port, which is accessible using the virsh console <vm> command:

<console type='pty' tty='/dev/pts/1'>

<source path='/dev/pts/1'/>

<target type='serial' port='0'/>

<alias name='serial0'/>

</console>

The end of the XML file includes the required seclabel configuration:

</devices>

<seclabel type='none'/>

</domain>

The VSR’s boot messages can be checked to confirm the correct CPU, memory, and NIC assignments; for example:

…

KVM based vcpu

Running in a KVM/QEMU virtual machine

ACPI: found 21 cores, 21 enabled

1 virtio net device is detected

2 MLX5 devices detected (2 pci passthrough, 0 SR-IOV virtual function)

…

The VSR automatically allocates CPUs between different task types:

A:vsr56-1-kvm# show card 1 virtual fp

===============================================================================

Card 1 Virtual Forwarding Plane Statistics

===============================================================================

Task vCPUs Average Maximum

Utilization Utilization

-------------------------------------------------------------------------------

NIC 1 0.00 % 0.00 %

Worker 17 0.03 % 0.03 %

Scheduler 1 0.00 % 0.00 %

===============================================================================

Verify the CPU pinning with the virsh vcpuinfo command and ensure that no other VMs are sharing the VSR’s resources:

[root@hyp56 ~]# virsh vcpuinfo vsr56-1

VCPU: 0

CPU: 2

State: running

CPU time: 30.6s

CPU Affinity: --y-----------------------------------------

VCPU: 1

CPU: 4

State: running

CPU Affinity: ----y---------------------------------------

VCPU: 2

CPU: 6

State: running

CPU Affinity: ------y-------------------------------------

…

VCPU: 20

CPU: 42

State: running

CPU Affinity: ------------------------------------------y-
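The pinning check above can also be scripted. The following is a minimal sketch, not part of the VSR tooling: the function names parse_vcpuinfo and pinning_errors are hypothetical, and the sample text is abbreviated from the output above. It inspects a single VM only; confirming that no other VM shares the same CPUs still requires running virsh vcpuinfo against every other domain on the hypervisor.

```python
def parse_vcpuinfo(text):
    """Parse `virsh vcpuinfo` output into one dict per vCPU."""
    vcpus = []
    for line in text.splitlines():
        if ":" not in line:
            continue  # skip blank lines and elisions
        key, value = (part.strip() for part in line.split(":", 1))
        if key == "VCPU":
            vcpus.append({})  # each "VCPU:" line starts a new record
        if vcpus:
            vcpus[-1][key] = value
    return vcpus


def pinning_errors(vcpus):
    """List vCPUs that are not pinned to exactly one host CPU of their own."""
    errors = []
    owner = {}  # host CPU -> vCPU already pinned to it
    for vcpu in vcpus:
        affinity = vcpu.get("CPU Affinity", "")
        allowed = [i for i, flag in enumerate(affinity) if flag == "y"]
        if len(allowed) != 1:
            errors.append(f"vCPU {vcpu['VCPU']} is allowed on {len(allowed)} host CPUs")
        elif allowed[0] in owner:
            errors.append(
                f"vCPU {vcpu['VCPU']} shares host CPU {allowed[0]} "
                f"with vCPU {owner[allowed[0]]}"
            )
        else:
            owner[allowed[0]] = vcpu["VCPU"]
    return errors


# Abbreviated sample taken from the output above
SAMPLE = """\
VCPU: 0
CPU: 2
State: running
CPU time: 30.6s
CPU Affinity: --y-----------------------------------------
VCPU: 1
CPU: 4
State: running
CPU Affinity: ----y---------------------------------------
"""

vcpus = parse_vcpuinfo(SAMPLE)
print(pinning_errors(vcpus))  # an empty list means every vCPU has its own host CPU
```

In practice, the text would come from the output of virsh vcpuinfo for the VM rather than from an embedded sample.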

XML file for hyperthreading enabled and NUMA node 1

The XML file header contains the VM name:

<domain type='kvm'>

<name>vsr56-1</name>

The UUID is removed in order to generate a new UUID. The new UUID can be obtained with the virsh dumpxml command after running the VM and then pasted into the XML file to preserve it.

<!-- UUID: remove to auto-generate a new UUID -->

Define hugepages and allocate 64 GB of RAM to the VSR VM. Here, the nodeset is always set to 0:

<memory unit="G">64</memory>

<memoryBacking>

<hugepages>

<page size="1" unit="G" nodeset="0"/>

</hugepages>

<nosharepages/>

</memoryBacking>

Configure the numatune setting to ensure that all the RAM is allocated from NUMA node 1, the NUMA node used for all resources on this VM:

<numatune>

<memory mode='strict' nodeset='1'/>

</numatune>

Configure the CPU features and CPU mode. The host-model with fallback=allow is recommended. Because hyperthreading is enabled, the following topology is required:

<features>

<acpi/>

</features>

<cpu mode='host-model'>

<model fallback='allow'/>

<!-- topology is required when hyperthreading is enabled -->

<topology sockets='1' cores='21' threads='2'/>

</cpu>

Configure the CPU pinning, with the emulatorpin set to non-isolated CPUs on the same NUMA node as the VM:

<vcpu placement='static'>42</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='3'/>

<vcpupin vcpu='1' cpuset='47'/>

<vcpupin vcpu='2' cpuset='5'/>

<vcpupin vcpu='3' cpuset='49'/>

<vcpupin vcpu='4' cpuset='7'/>

<vcpupin vcpu='5' cpuset='51'/>

<vcpupin vcpu='6' cpuset='9'/>

<vcpupin vcpu='7' cpuset='53'/>

<vcpupin vcpu='8' cpuset='11'/>

<vcpupin vcpu='9' cpuset='55'/>

<vcpupin vcpu='10' cpuset='13'/>

<vcpupin vcpu='11' cpuset='57'/>

<vcpupin vcpu='12' cpuset='15'/>

<vcpupin vcpu='13' cpuset='59'/>

<vcpupin vcpu='14' cpuset='17'/>

<vcpupin vcpu='15' cpuset='61'/>

<vcpupin vcpu='16' cpuset='19'/>

<vcpupin vcpu='17' cpuset='63'/>

<vcpupin vcpu='18' cpuset='21'/>

<vcpupin vcpu='19' cpuset='65'/>

<vcpupin vcpu='20' cpuset='23'/>

<vcpupin vcpu='21' cpuset='67'/>

<vcpupin vcpu='22' cpuset='25'/>

<vcpupin vcpu='23' cpuset='69'/>

<vcpupin vcpu='24' cpuset='27'/>

<vcpupin vcpu='25' cpuset='71'/>

<vcpupin vcpu='26' cpuset='29'/>

<vcpupin vcpu='27' cpuset='73'/>

<vcpupin vcpu='28' cpuset='31'/>

<vcpupin vcpu='29' cpuset='75'/>

<vcpupin vcpu='30' cpuset='33'/>

<vcpupin vcpu='31' cpuset='77'/>

<vcpupin vcpu='32' cpuset='35'/>

<vcpupin vcpu='33' cpuset='79'/>

<vcpupin vcpu='34' cpuset='37'/>

<vcpupin vcpu='35' cpuset='81'/>

<vcpupin vcpu='36' cpuset='39'/>

<vcpupin vcpu='37' cpuset='83'/>

<vcpupin vcpu='38' cpuset='41'/>

<vcpupin vcpu='39' cpuset='85'/>

<vcpupin vcpu='40' cpuset='43'/>

<vcpupin vcpu='41' cpuset='87'/>

<emulatorpin cpuset='1,45'/>

</cputune>

Configure the OS features:

<os>

<type arch='x86_64' machine='pc'>hvm</type>

<boot dev='hd'/>

<smbios mode='sysinfo'/>

</os>

Configure SMBIOS. Only enable the control-cpu-cores and vsr-deployment-model=high-packet-touch parameters when required for the specific VSR deployment (see the VSR VNF Installation and Setup Guide). In this example, vsr-deployment-model=high-packet-touch is configured, so additional Worker tasks are present to take advantage of the additional threads.

<sysinfo type='smbios'>

<system>

<entry name='product'>TiMOS:

address=172.16.224.192/24@active \

static-route=172.16.0.0/8@172.16.224.1 \

license-file=ftp://user:password@172.16.224.10/license/VSR_license.txt \

primary-config=ftp://user:pass@172.16.29.91/kvm/hyp56/vsr56-1.cfg \

chassis=vsr-i \

slot=A \

card=cpm-v \

slot=1 \

card=iom-v \

mda/1=m20-v \

mda/2=isa-ms-v \

system-base-mac=fa:ac:ff:ff:54:00 \

vsr-deployment-model=high-packet-touch \

</entry>

</system>

</sysinfo>

Configure the clock settings:

<clock offset='utc'>

<timer name='pit' tickpolicy='delay'/>

<timer name='rtc' tickpolicy='catchup'/>

<timer name='hpet' present='no'/>

</clock>

The devices configuration section includes disks, network interfaces, and console ports. By default, a VSR is configured with cf3:

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='none'/>

<source file='/var/lib/libvirt/images/vsr56-1.qcow2'/>

<target dev='hda' bus='virtio'/>

</disk>

Configure network interfaces starting with a management port attached to a Linux bridge, and two SR-IOV network interfaces on NUMA node 1. To configure VLAN IDs on SR-IOV interfaces, the tags are defined in the XML file and the SR OS outer tagging is set to null:

<interface type='bridge'>

<source bridge='br-mgmt'/>

<model type='virtio'/>

<target dev='vsr1-mgmt'/>

</interface>

<interface type='hostdev' managed='yes'>

<mac address="00:50:56:00:54:01"/>

<source>

<address type='pci' domain='0x0000' bus='0x83' slot='0x00' function='0x2'/>

</source>

<vlan>

<tag id='100'/>

</vlan>

<target dev='vsr1_port_1/1/1'/>

</interface>

<interface type='hostdev' managed='yes'>

<mac address="00:50:56:00:54:02"/>

<source>

<address type='pci' domain='0x0000' bus='0x83' slot='0x00' function='0x4'/>

</source>

<vlan>

<tag id='200'/>

</vlan>

<target dev='vsr1_port_1/1/2'/>

</interface>

Configure the console port, which is accessible using the virsh console <vm> command:

<console type='pty' tty='/dev/pts/1'>

<source path='/dev/pts/1'/>

<target type='serial' port='0'/>

<alias name='serial0'/>

</console>

The end of the XML file includes the required seclabel configuration:

</devices>

<seclabel type='none'/>

</domain>

The VSR’s boot messages can be checked to confirm the correct CPU, memory, and NIC assignments; for example:

…

KVM based vcpu

Running in a KVM/QEMU virtual machine

ACPI: found 42 cores, 42 enabled

1 virtio net device is detected

2 MLX5 devices detected (0 pci passthrough, 2 SR-IOV virtual function)

…

The VSR automatically allocates CPUs between different task types, with more tasks than in the non-hyperthreaded case when hyperthreading gives a performance advantage:

A:vsr56-1-kvm# show card 1 virtual fp

===============================================================================

Card 1 Virtual Forwarding Plane Statistics

===============================================================================

Task vCPUs Average Maximum

Utilization Utilization

-------------------------------------------------------------------------------

NIC 1 0.00 % 0.00 %

Worker 34 0.04 % 0.04 %

Scheduler 1 0.00 % 0.00 %

===============================================================================

A:vsr56-1-kvm#

Verify the CPU pinning with the virsh vcpuinfo command and ensure that no other VMs are sharing the VSR’s resources:

[root@hyp56 ~]# virsh vcpuinfo vsr56-1

VCPU: 0

CPU: 3

State: running

CPU time: 45.5s

CPU Affinity: ---y------------------------------------------------------------------------------------

VCPU: 1

CPU: 47

State: running

CPU time: 5.8s

CPU Affinity: -----------------------------------------------y----------------------------------------

VCPU: 2

CPU: 5

State: running

CPU time: 5.8s

CPU Affinity: -----y----------------------------------------------------------------------------------

VCPU: 3

CPU: 49

State: running

CPU time: 5.8s

CPU Affinity: -------------------------------------------------y--------------------------------------

…

VCPU: 40

CPU: 43

State: running

CPU time: 47.7s

CPU Affinity: -------------------------------------------y--------------------------------------------

VCPU: 41

CPU: 87

State: running

CPU time: 48.1s

CPU Affinity: ---------------------------------------------------------------------------------------y
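The interleaved pinning used in this XML file, where consecutive vCPUs are placed on the two threads of the same core, can be generated rather than typed by hand. The following is a minimal sketch under stated assumptions: the function name cputune_lines is hypothetical, and the sibling offset of 44 is inferred from the pairs used above (for example, CPUs 3 and 47) and must be confirmed on the actual server with lscpu or /proc/cpuinfo.

```python
def cputune_lines(first_threads, sibling_offset, emulator_cpus):
    """Build <vcpupin> lines that interleave the two threads of each core."""
    lines = []
    vcpu = 0
    for cpu in first_threads:
        # Pin two consecutive vCPUs to the two sibling threads of one core
        for host_cpu in (cpu, cpu + sibling_offset):
            lines.append(f"<vcpupin vcpu='{vcpu}' cpuset='{host_cpu}'/>")
            vcpu += 1
    cpuset = ",".join(str(cpu) for cpu in emulator_cpus)
    lines.append(f"<emulatorpin cpuset='{cpuset}'/>")
    return lines


# NUMA node 1 in this example: odd CPUs 3 through 43, sibling threads at +44,
# emulator threads on the non-isolated CPUs 1 and 45
lines = cputune_lines(range(3, 44, 2), 44, [1, 45])
print("\n".join(lines))
```

The generated lines are intended to be pasted between the cputune tags; the vcpu element must still be set to the matching vCPU count.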

HPE server

Server model and motherboard

The server model can be identified with the dmidecode command:

[root@hyp62 ~]# dmidecode -t 2

# dmidecode 3.2

Getting SMBIOS data from sysfs.

SMBIOS 2.8 present.

Handle 0x0028, DMI type 2, 17 bytes

Base Board Information

Manufacturer: HP

Product Name: ProLiant DL380 Gen9

Version: Not Specified

Serial Number: MXQ724142B

...

This server’s product name is HPE ProLiant DL380 Gen9.

CPU

The lscpu command gives the number of sockets, cores and threads and their numbering scheme. With hyperthreading disabled:

[root@hyp62 ~]# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 44

On-line CPU(s) list: 0-43

Thread(s) per core: 1

Core(s) per socket: 22

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 79

Model name: Intel(R) Xeon(R) CPU E5-2699A v4 @ 2.40GHz

Stepping: 1

CPU MHz: 1200.000

CPU max MHz: 2400.0000

CPU min MHz: 1200.0000

BogoMIPS: 4794.53

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 56320K

NUMA node0 CPU(s): 0-10,22-32

NUMA node1 CPU(s): 11-21,33-43

…

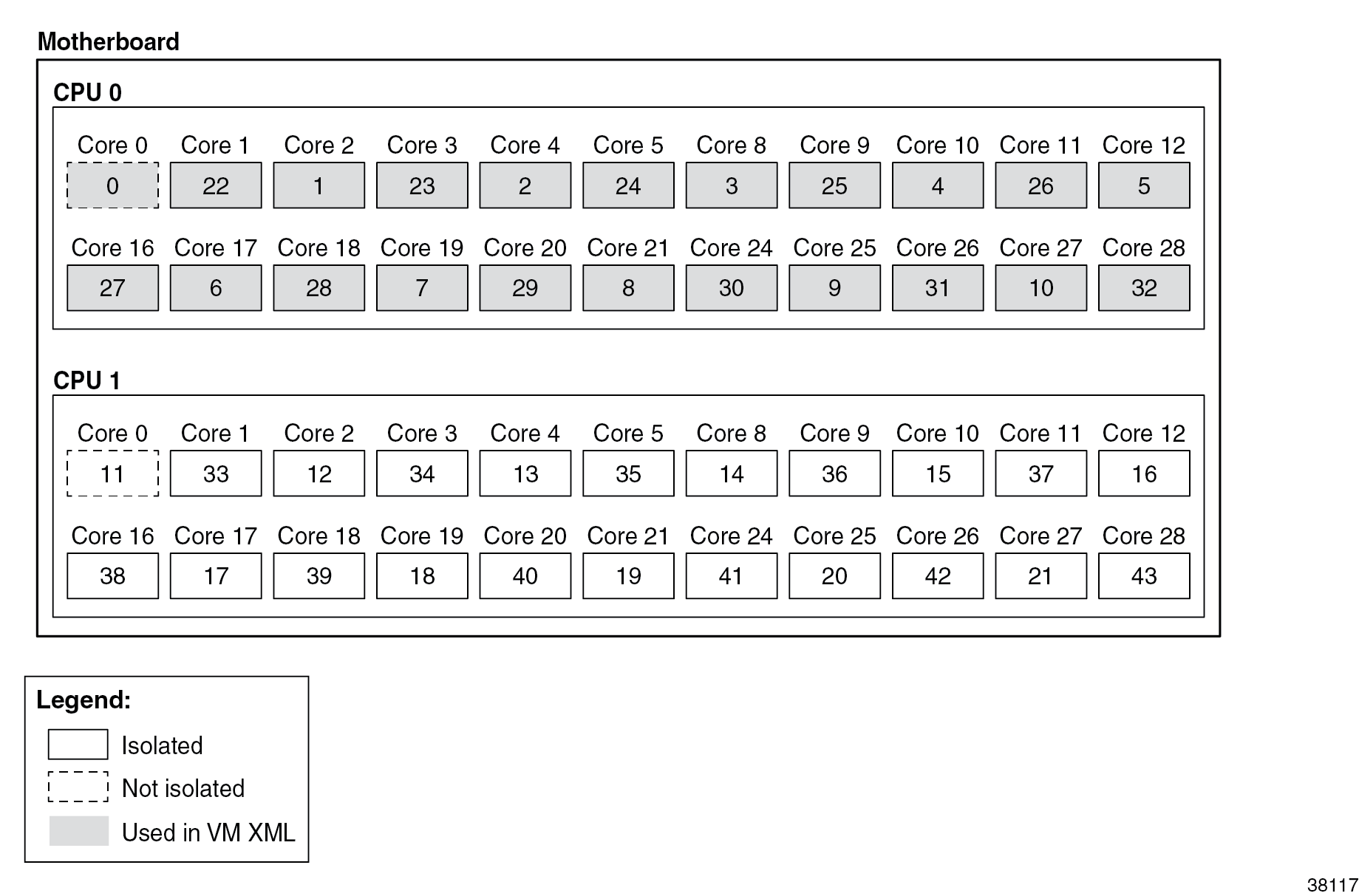

The server has 44 cores, with CPUs numbered 0 through 10 and 22 through 32 on NUMA node 0, and CPUs numbered 11 through 21 and 33 through 43 on NUMA node 1. Note the offset numbering scheme.

processor : 0

physical id : 0

core id : 0

processor : 1

physical id : 0

core id : 2

processor : 2

physical id : 0

core id : 4

processor : 3

physical id : 0

core id : 8

processor : 4

physical id : 0

core id : 10

…

processor : 22

physical id : 0

core id : 1

processor : 23

physical id : 0

core id : 3

…

The figure "Numbering scheme HPE server without hyperthreading" shows this numbering scheme. Only NUMA node 0 CPUs are used for VM functions in the example XML file with hyperthreading disabled. NUMA node 1 CPUs are also isolated for future use with an identical VM.

With hyperthreading enabled:

[admin@hyp62 ~]$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 88

On-line CPU(s) list: 0-87

Thread(s) per core: 2

Core(s) per socket: 22

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 79

Model name: Intel(R) Xeon(R) CPU E5-2699A v4 @ 2.40GHz

Stepping: 1

CPU MHz: 1200.000

CPU max MHz: 2400.0000

CPU min MHz: 1200.0000

BogoMIPS: 4794.48

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 56320K

NUMA node0 CPU(s): 0-21,44-65

NUMA node1 CPU(s): 22-43,66-87

…

The server has 88 logical CPUs (44 cores with two threads each), with CPUs numbered 0 through 21 and 44 through 65 on NUMA node 0, and CPUs numbered 22 through 43 and 66 through 87 on NUMA node 1.

[admin@hyp62 ~]$ cat /proc/cpuinfo | egrep "processor|physical id|core id"

processor : 0

physical id : 0

core id : 0

processor : 1

physical id : 0

core id : 1

…

processor : 44

physical id : 0

core id : 0

processor : 45

physical id : 0

core id : 1

…

The figure "Numbering scheme HPE server with hyperthreading" shows the numbering scheme. Only NUMA node 0 CPUs are used for VM functions in the hyperthreading example XML file. NUMA node 1 CPUs are isolated for future use with an identical VM.
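The sibling threads of each core can be derived from the filtered /proc/cpuinfo output shown above. The following is a minimal sketch; the function name thread_siblings is hypothetical, and the sample text contains only the four processors visible in the elided output. It assumes that the physical id line precedes the core id line for each processor, as in the output above.

```python
from collections import defaultdict


def thread_siblings(cpuinfo_text):
    """Map (physical id, core id) to the sorted logical CPUs on that core."""
    cores = defaultdict(list)
    current = {}
    for line in cpuinfo_text.splitlines():
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        if key == "processor":
            current = {"processor": int(value)}  # start of a new processor entry
        elif key == "physical id":
            current["physical id"] = int(value)
        elif key == "core id":
            # Assumes "physical id" was already seen for this processor
            cores[(current["physical id"], int(value))].append(current["processor"])
    return {core: sorted(cpus) for core, cpus in cores.items()}


# The four processors visible in the output above
SAMPLE = """\
processor : 0
physical id : 0
core id : 0
processor : 1
physical id : 0
core id : 1
processor : 44
physical id : 0
core id : 0
processor : 45
physical id : 0
core id : 1
"""

siblings = thread_siblings(SAMPLE)
for core, cpus in sorted(siblings.items()):
    print(core, cpus)
```

With this sample, processors 0 and 44 land on one core and processors 1 and 45 on another, which matches the pairs used for vcpupin in the hyperthreading example.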

Network ports

The server has the following network ports:

[root@hyp62 ~]# lshw -c network -businfo

Bus info Device Class Description

=============================================================

pci@0000:05:00.0 ens3f0 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:05:00.1 ens3f1 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:05:00.2 ens3f2 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:05:00.3 ens3f3 network Ethernet Controller X710 for 10GbE SFP+

pci@0000:05:02.0 network Ethernet Virtual Function 700 Series

pci@0000:04:00.0 eno49 network 82599ES 10-Gigabit SFI/SFP+ Network Connection

pci@0000:04:00.1 eno50 network 82599ES 10-Gigabit SFI/SFP+ Network Connection

pci@0000:02:00.0 eno1 network NetXtreme BCM5719 Gigabit Ethernet PCIe

pci@0000:02:00.1 eno2 network NetXtreme BCM5719 Gigabit Ethernet PCIe

pci@0000:02:00.2 eno3 network NetXtreme BCM5719 Gigabit Ethernet PCIe

pci@0000:02:00.3 eno4 network NetXtreme BCM5719 Gigabit Ethernet PCIe

pci@0000:81:00.0 ens6f0 network 82599ES 10-Gigabit SFI/SFP+ Network Connection

pci@0000:81:00.1 ens6f1 network 82599ES 10-Gigabit SFI/SFP+ Network Connection

Mgmt-br network Ethernet interface

svc-rl-tap1 network Ethernet interface

svc-rl-tap2 network Ethernet interface

br-pe2-pe3 network Ethernet interface

br-pe1-pe3 network Ethernet interface

br-pe5-int network Ethernet interface

br-pe5-ext network Ethernet interface

br-pe3-pe4 network Ethernet interface

br-ce1-pe1 network Ethernet interface

alubr0 network Ethernet interface

br-pe2-pe4 network Ethernet interface

ovs-system network Ethernet interface

eno49.9 network Ethernet interface

br-pe1-pe4 network Ethernet interface

SIM-Mgmt-bridge network Ethernet interface

br-pe4-pe5 network Ethernet interface

br-ce2-pe2 network Ethernet interface

br-pe3-pe5 network Ethernet interface

br-mgmt network Ethernet interface

br-unallocated network Ethernet interface

br-ce3-pe3 network Ethernet interface

virbr0-nic network Ethernet interface

virbr0 network Ethernet interface

vsr1-mgmt network Ethernet interface

svc-pat-tap network Ethernet interface

br-ce4-pe4 network Ethernet interface

br-ce2-int network Ethernet interface

br-pe1-pe2 network Ethernet interface

br-ce2-ext network Ethernet interface

Verify that all network ports assigned to the VSR VM belong to NUMA node 0:

[root@hyp62 ~]# lspci -v -s 05:00.0 | egrep "Ethernet|NUMA"

libkmod: kmod_config_parse: /etc/modprobe.d/blacklist.conf line 1: ignoring bad line starting with 'iavf'

05:00.0 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 01)

Subsystem: Intel Corporation Ethernet Converged Network Adapter X710-4

Flags: bus master, fast devsel, latency 0, IRQ 16, NUMA node 0

[admin@hyp62 ~]$ lspci -v -s 05:00.1 | egrep "Ethernet|NUMA"

libkmod: kmod_config_parse: /etc/modprobe.d/blacklist.conf line 1: ignoring bad line starting with 'iavf'

05:00.1 Ethernet controller: Intel Corporation Ethernet Controller X710 for 10GbE SFP+ (rev 01)

Subsystem: Intel Corporation Ethernet Converged Network Adapter X710

Flags: bus master, fast devsel, latency 0, IRQ 16, NUMA node 0

All ports on the same PCI slot belong to the same NUMA node.
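The same check can be made without parsing lspci output, because the Linux kernel exposes the NUMA node of each PCI device in sysfs. The following is a minimal sketch; the function names pci_numa_node and ports_off_node are hypothetical, and the sysfs_root parameter exists only so that the functions can be exercised against a test directory.

```python
from pathlib import Path


def pci_numa_node(address, sysfs_root="/sys"):
    """Return the NUMA node of a PCI device such as '0000:05:00.0'."""
    path = Path(sysfs_root) / "bus" / "pci" / "devices" / address / "numa_node"
    return int(path.read_text().strip())


def ports_off_node(addresses, expected_node, sysfs_root="/sys"):
    """Return the PCI addresses that are not on the expected NUMA node."""
    return [
        address
        for address in addresses
        if pci_numa_node(address, sysfs_root) != expected_node
    ]


if __name__ == "__main__":
    # PCI addresses of the two ports checked above
    try:
        print(ports_off_node(["0000:05:00.0", "0000:05:00.1"], expected_node=0))
    except OSError as error:
        print(f"cannot read sysfs entry: {error}")
```

An empty list means that all listed ports are on the expected node. A value of -1 in the numa_node file means that the kernel has no NUMA information for the device.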

Kernel parameters

The following kernel parameters include those required for CPU isolation based on the installed CPU’s numbering scheme and a sufficient number of hugepages to accommodate the memory usage of all VSR VMs. With hyperthreading disabled:

[root@hyp62 ~]# cat /proc/cmdline

BOOT_IMAGE=/vmlinuz-3.10.0-1160.71.1.el7.x86_64 root=/dev/mapper/centos-root ro crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet pci=realloc pcie_aspm=off iommu=pt nopat intel_iommu=on hugepagesz=1G hugepages=128 default_hugepagesz=1G selinux=0 audit=0 kvm_intel.ple_gap=0 isolcpus=1-10,12-43

On this server, all CPUs are isolated except for the first CPU on each socket, CPUs 0 and 11. These two CPUs are used for emulatorpin in the XML file. With 128 hugepages of 1 GB on a server with two NUMA nodes, 64 hugepages are allocated per NUMA node, so 64 GB is available to a VSR VM on each node.
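The hugepage arithmetic can be checked against the hugepagesz and hugepages values in the kernel command line shown above. The following is a minimal sketch; the function name hugepage_gib_per_node is hypothetical, and the even split across NUMA nodes is an assumption. The actual per-node count can be confirmed in /sys/devices/system/node/node0/hugepages/hugepages-1048576kB/nr_hugepages.

```python
import re

# Size of one page in GiB for each supported unit suffix
UNIT_GIB = {"M": 1 / 1024, "G": 1}


def hugepage_gib_per_node(cmdline, numa_nodes):
    """Estimate the GiB of hugepage memory per NUMA node from kernel parameters."""
    # \b keeps "default_hugepagesz=" from matching the "hugepagesz=" pattern
    size = re.search(r"\bhugepagesz=(\d+)([MG])", cmdline)
    count = re.search(r"\bhugepages=(\d+)", cmdline)
    if not size or not count:
        raise ValueError("hugepagesz= or hugepages= not found")
    page_gib = int(size.group(1)) * UNIT_GIB[size.group(2)]
    # Assumption: the kernel spreads the pages evenly across the NUMA nodes
    return int(count.group(1)) * page_gib / numa_nodes


# Relevant part of the kernel command line shown above
CMDLINE = "iommu=pt nopat intel_iommu=on hugepagesz=1G hugepages=128 default_hugepagesz=1G"

per_node = hugepage_gib_per_node(CMDLINE, numa_nodes=2)
print(per_node)
```

The result must be at least as large as the memory value in the XML file of each VM placed on that node.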

With hyperthreading enabled, the isolcpus parameter must be:

isolcpus=1-21,23-43,45-65,67-87
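Both isolcpus values follow from the NUMA node CPU lists reported by lscpu, minus the CPUs reserved for the host and emulatorpin. The following is a minimal sketch; the function names parse_cpu_list, format_cpu_list, and isolcpus are hypothetical, and the set of non-isolated CPUs is passed in explicitly rather than detected.

```python
def parse_cpu_list(text):
    """Expand a CPU list such as '0-10,22-32' into a set of integers."""
    cpus = set()
    for part in text.split(","):
        first, _, last = part.partition("-")
        cpus.update(range(int(first), int(last or first) + 1))
    return cpus


def format_cpu_list(cpus):
    """Collapse a set of integers into a compact list such as '1-10,12-43'."""
    ranges = []
    for cpu in sorted(cpus):
        if ranges and cpu == ranges[-1][1] + 1:
            ranges[-1][1] = cpu  # extend the current range
        else:
            ranges.append([cpu, cpu])  # start a new range
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


def isolcpus(numa_cpu_lists, housekeeping):
    """Build the isolcpus parameter from lscpu NUMA lists and non-isolated CPUs."""
    all_cpus = set().union(*(parse_cpu_list(text) for text in numa_cpu_lists))
    return "isolcpus=" + format_cpu_list(all_cpus - set(housekeeping))


# Hyperthreading disabled: CPUs 0 and 11 stay non-isolated
print(isolcpus(["0-10,22-32", "11-21,33-43"], [0, 11]))
# Hyperthreading enabled: both threads of the first core on each socket stay non-isolated
print(isolcpus(["0-21,44-65", "22-43,66-87"], [0, 22, 44, 66]))
```

The two printed values are isolcpus=1-10,12-43 and isolcpus=1-21,23-43,45-65,67-87, matching the parameters used on this server.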

XML file for hyperthreading disabled and NUMA node 0

The XML file header contains the VM name:

<domain type='kvm'>

<name>vsr62-1</name>

The UUID can be removed in order to generate a new UUID. The new UUID can then be obtained with the virsh dumpxml command after running the VM and pasted into the XML file to preserve it. Here, the UUID is set because a license is already tied to it:

<uuid>66fdf83e-fd6b-4ce7-9ee1-ca16080e073f</uuid>

Define the hugepages and allocate 64 GB of RAM to the VSR VM. Here, the nodeset is always set to 0:

<memory unit="G">64</memory>

<memoryBacking>

<hugepages>

<page size="1" unit="G" nodeset="0"/>

</hugepages>

<nosharepages/>

</memoryBacking>

Configure the numatune setting to ensure that all the RAM is allocated from NUMA node 0, the NUMA node used for all resources on this VM:

<numatune>

<memory mode='strict' nodeset='0'/>

</numatune>

Configure the CPU features and the CPU mode; host-model with fallback=allow is recommended:

<features>

<acpi/>

</features>

<cpu mode='host-model'>

<model fallback='allow'/>

</cpu>

Configure the CPU pinning, with the emulatorpin set to a non-isolated CPU on the same NUMA node as the VM:

<vcpu placement='static'>21</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='22'/>

<vcpupin vcpu='1' cpuset='1'/>

<vcpupin vcpu='2' cpuset='23'/>

<vcpupin vcpu='3' cpuset='2'/>

<vcpupin vcpu='4' cpuset='24'/>

<vcpupin vcpu='5' cpuset='3'/>

<vcpupin vcpu='6' cpuset='25'/>

<vcpupin vcpu='7' cpuset='4'/>

<vcpupin vcpu='8' cpuset='26'/>

<vcpupin vcpu='9' cpuset='5'/>

<vcpupin vcpu='10' cpuset='27'/>

<vcpupin vcpu='11' cpuset='6'/>

<vcpupin vcpu='12' cpuset='28'/>

<vcpupin vcpu='13' cpuset='7'/>

<vcpupin vcpu='14' cpuset='29'/>

<vcpupin vcpu='15' cpuset='8'/>

<vcpupin vcpu='16' cpuset='30'/>

<vcpupin vcpu='17' cpuset='9'/>

<vcpupin vcpu='18' cpuset='31'/>

<vcpupin vcpu='19' cpuset='10'/>

<vcpupin vcpu='20' cpuset='32'/>

<emulatorpin cpuset='0'/>

</cputune>

Configure the OS features:

<os>

<type arch='x86_64' machine='pc'>hvm</type>

<boot dev='hd'/>

<smbios mode='sysinfo'/>

</os>

Configure SMBIOS. The control-cpu-cores and vsr-deployment-model=high-packet-touch parameters must only be enabled when required for the specific VSR deployment (see the VSR VNF Installation and Setup Guide):

<sysinfo type='smbios'>

<system>

<entry name='product'>TiMOS:

address=138.120.224.187/24@active \

static-route=138.0.0.0/8@138.120.224.1 \

static-route=135.0.0.0/8@138.120.224.1 \

system-base-mac=ba:db:ee:f4:f3:3d \

license-file=ftp://ftpuser:3LSaccess@135.121.29.91/license/VSR-I_license_22.txt \

primary-config=ftp://anonymous:pass@135.121.29.91/kvm/hyp62/vsr62-1.cfg \

chassis=vsr-i \

slot=A \

card=cpm-v \

slot=1 \

card=iom-v \

mda/1=m20-v \

mda/2=isa-bb-v \

system-base-mac=fa:ac:ff:ff:10:00 \

<!-- control-cpu-cores=2 \ -->

<!-- vsr-deployment-model=high-packet-touch \ -->

</entry>

</system>

</sysinfo>

Configure the clock:

<clock offset='utc'>

<timer name='pit' tickpolicy='delay'/>

<timer name='rtc' tickpolicy='catchup'/>

<timer name='hpet' present='no'/>

</clock>

The devices configuration section includes disks, network interfaces, and console ports. By default, a VSR is configured with cf3:

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='none'/>

<source file='/var/lib/libvirt/images/vsr62-1.qcow2'/>

<target dev='hda' bus='virtio'/>

</disk>

Configure the network interfaces starting with a management port attached to a Linux bridge and two PCI-PT network interfaces on NUMA node 0:

<interface type='bridge'>

<source bridge='br-mgmt'/>

<model type='virtio'/>

<target dev='vsr1-mgmt'/>

</interface>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>

</source>

<rom bar='off'/>

</hostdev>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x05' slot='0x00' function='0x1'/>

</source>

<rom bar='off'/>

</hostdev>

The console port is accessible using the virsh console <vm> command:

<console type='pty' tty='/dev/pts/1'>

<source path='/dev/pts/1'/>

<target type='serial' port='0'/>

<alias name='serial0'/>

</console>

The end of the XML file includes the required seclabel configuration:

</devices>

<!-- Seclabel: required -->

<seclabel type='none'/>

</domain>

The VSR’s boot messages can be checked to confirm the correct CPU, memory, and NIC assignments; for example:

...

KVM based vcpu

Running in a KVM/QEMU virtual machine

ACPI: found 21 cores, 21 enabled

1 virtio net device is detected

2 i40e devices are detected

...

The VSR automatically allocates CPUs between different task types:

A:vsr62-1# show card 1 virtual fp

===============================================================================

Card 1 Virtual Forwarding Plane Statistics

===============================================================================

Task vCPUs Average Maximum

Utilization Utilization

-------------------------------------------------------------------------------

NIC 1 0.00 % 0.00 %

Worker 17 0.03 % 0.03 %

Scheduler 1 0.00 % 0.00 %

===============================================================================

Verify the CPU pinning with the virsh vcpuinfo command and ensure that no other VMs are sharing the VSR’s resources:

[root@hyp62 ~]# virsh vcpuinfo vsr62-1

VCPU: 0

CPU: 22

State: running

CPU time: 40.6s

CPU Affinity: ----------------------y---------------------

VCPU: 1

CPU: 1

State: running

CPU time: 9.8s

CPU Affinity: -y------------------------------------------

VCPU: 2

CPU: 23

State: running

CPU time: 145.2s

CPU Affinity: -----------------------y--------------------

VCPU: 3

CPU: 2

State: running

CPU time: 153.4s

CPU Affinity: --y-----------------------------------------

...
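The pinning can also be checked before the VM is started, by comparing the cputune section of the XML file against the CPU list of the intended NUMA node. The following is a minimal sketch using the Python standard library; the function names cpus_outside_node and unpinned_vcpus are hypothetical, the embedded XML is a shortened stand-in for the file above, and only comma-separated cpuset values (no ranges) are handled.

```python
import xml.etree.ElementTree as ET


def cpus_outside_node(domain_xml, node_cpus):
    """Return host CPUs used by vcpupin or emulatorpin that are not in node_cpus."""
    root = ET.fromstring(domain_xml)
    pinned = set()
    for tag in ("vcpupin", "emulatorpin"):
        for pin in root.findall(f"./cputune/{tag}"):
            # Only comma-separated single CPUs are handled, not ranges such as 1-4
            pinned.update(int(cpu) for cpu in pin.get("cpuset", "").split(",") if cpu)
    return sorted(pinned - set(node_cpus))


def unpinned_vcpus(domain_xml):
    """Return the vCPU numbers that have no vcpupin entry."""
    root = ET.fromstring(domain_xml)
    total = int(root.findtext("vcpu"))
    pinned = {int(pin.get("vcpu")) for pin in root.findall("./cputune/vcpupin")}
    return sorted(set(range(total)) - pinned)


# Shortened stand-in for the XML file above (three vCPUs instead of 21)
DOMAIN = """
<domain type='kvm'>
  <vcpu placement='static'>3</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='22'/>
    <vcpupin vcpu='1' cpuset='1'/>
    <vcpupin vcpu='2' cpuset='23'/>
    <emulatorpin cpuset='0'/>
  </cputune>
</domain>
"""

# NUMA node 0 on this server with hyperthreading disabled: CPUs 0-10 and 22-32
NODE0 = list(range(0, 11)) + list(range(22, 33))

print(cpus_outside_node(DOMAIN, NODE0))
print(unpinned_vcpus(DOMAIN))
```

Both lists are empty when every vCPU is pinned and every pinned CPU belongs to the chosen NUMA node.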

XML file for hyperthreading enabled and NUMA node 0

The XML file header contains the VM name:

<domain type='kvm'>

<name>vsr62-1</name>

The UUID can be removed in order to generate a new UUID. The new UUID can then be obtained with the virsh dumpxml command after running the VM and pasted into the XML file to preserve it. Here, the UUID is set because a license is already tied to it:

<uuid>66fdf83e-fd6b-4ce7-9ee1-ca16080e073f</uuid>

Define the hugepages and allocate 64 GB of RAM to the VSR VM. Here, the nodeset is always set to 0:

<memory unit="G">64</memory>

<memoryBacking>

<hugepages>

<page size="1" unit="G" nodeset="0"/>

</hugepages>

<nosharepages/>

</memoryBacking>

Configure the numatune setting to ensure that all the RAM is allocated from NUMA node 0, the NUMA node used for all resources on this VM:

<numatune>

<memory mode='strict' nodeset='0'/>

</numatune>

Configure the CPU features and CPU mode; host-model with fallback=allow is recommended. Because hyperthreading is enabled, the topology is required:

<features>

<acpi/>

</features>

<cpu mode='host-model'>

<model fallback='allow'/>

<topology sockets='1' cores='21' threads='2'/>

</cpu>

Configure the CPU pinning, with emulatorpin set to non-isolated CPUs on the same NUMA node as the VM:

<vcpu placement='static'>42</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='1'/>

<vcpupin vcpu='1' cpuset='45'/>

<vcpupin vcpu='2' cpuset='2'/>

<vcpupin vcpu='3' cpuset='46'/>

<vcpupin vcpu='4' cpuset='3'/>

<vcpupin vcpu='5' cpuset='47'/>

<vcpupin vcpu='6' cpuset='4'/>

<vcpupin vcpu='7' cpuset='48'/>

<vcpupin vcpu='8' cpuset='5'/>

<vcpupin vcpu='9' cpuset='49'/>

<vcpupin vcpu='10' cpuset='6'/>

<vcpupin vcpu='11' cpuset='50'/>

<vcpupin vcpu='12' cpuset='7'/>

<vcpupin vcpu='13' cpuset='51'/>

<vcpupin vcpu='14' cpuset='8'/>

<vcpupin vcpu='15' cpuset='52'/>

<vcpupin vcpu='16' cpuset='9'/>

<vcpupin vcpu='17' cpuset='53'/>

<vcpupin vcpu='18' cpuset='10'/>

<vcpupin vcpu='19' cpuset='54'/>

<vcpupin vcpu='20' cpuset='11'/>

<vcpupin vcpu='21' cpuset='55'/>

<vcpupin vcpu='22' cpuset='12'/>

<vcpupin vcpu='23' cpuset='56'/>

<vcpupin vcpu='24' cpuset='13'/>

<vcpupin vcpu='25' cpuset='57'/>

<vcpupin vcpu='26' cpuset='14'/>

<vcpupin vcpu='27' cpuset='58'/>

<vcpupin vcpu='28' cpuset='15'/>

<vcpupin vcpu='29' cpuset='59'/>

<vcpupin vcpu='30' cpuset='16'/>

<vcpupin vcpu='31' cpuset='60'/>

<vcpupin vcpu='32' cpuset='17'/>

<vcpupin vcpu='33' cpuset='61'/>

<vcpupin vcpu='34' cpuset='18'/>

<vcpupin vcpu='35' cpuset='62'/>

<vcpupin vcpu='36' cpuset='19'/>

<vcpupin vcpu='37' cpuset='63'/>

<vcpupin vcpu='38' cpuset='20'/>

<vcpupin vcpu='39' cpuset='64'/>

<vcpupin vcpu='40' cpuset='21'/>

<vcpupin vcpu='41' cpuset='65'/>

<emulatorpin cpuset='0,44'/>

</cputune>

Configure the OS features:

<os>

<type arch='x86_64' machine='pc'>hvm</type>

<boot dev='hd'/>

<smbios mode='sysinfo'/>

</os>

Configure SMBIOS. The control-cpu-cores and vsr-deployment-model=high-packet-touch parameters must only be enabled when required for the specific VSR deployment (see the VSR VNF Installation and Setup Guide and the SR OS Software Release Notes). In this example, vsr-deployment-model=high-packet-touch is configured, so additional Worker tasks are present to take advantage of the additional threads.

<sysinfo type='smbios'>

<system>

<entry name='product'>TiMOS:

address=138.120.224.187/24@active \

static-route=138.0.0.0/8@138.120.224.1 \

static-route=135.0.0.0/8@138.120.224.1 \

system-base-mac=ba:db:ee:f4:f3:3d \

license-file=ftp://ftpuser:3LSaccess@135.121.29.91/license/VSR-I_license_22.txt \

primary-config=ftp://anonymous:pass@135.121.29.91/kvm/hyp62/vsr62-1.cfg \

chassis=vsr-i \

slot=A \

card=cpm-v \

slot=1 \

card=iom-v \

mda/1=m20-v \

mda/2=isa-bb-v \

system-base-mac=fa:ac:ff:ff:10:00 \

<!-- control-cpu-cores=2 \ -->

vsr-deployment-model=high-packet-touch \

</entry>

</system>

</sysinfo>

Configure the clock settings:

<clock offset='utc'>

<timer name='pit' tickpolicy='delay'/>

<timer name='rtc' tickpolicy='catchup'/>

<timer name='hpet' present='no'/>

</clock>

The devices configuration section includes disks, network interfaces, and console ports. By default, a VSR is configured with cf3:

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='none'/>

<source file='/var/lib/libvirt/images/vsr62-1.qcow2'/>

<target dev='hda' bus='virtio'/>

</disk>

Configure network interfaces starting with a management port attached to a Linux bridge, and two PCI-PT network interfaces on NUMA node 0:

<interface type='bridge'>

<source bridge='br-mgmt'/>

<model type='virtio'/>

<target dev='vsr1-mgmt'/>

</interface>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>

</source>

<rom bar='off'/>

</hostdev>

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x05' slot='0x00' function='0x1'/>

</source>

<rom bar='off'/>

</hostdev>

Configure the console port, which is accessible using the virsh console <vm> command:

<console type='pty' tty='/dev/pts/1'>

<source path='/dev/pts/1'/>

<target type='serial' port='0'/>

<alias name='serial0'/>

</console>

The end of the XML file includes the required seclabel configuration:

</devices>

<!-- Seclabel: required -->

<seclabel type='none'/>

</domain>

The VSR’s boot messages can be checked to confirm the correct CPU, memory, and NIC assignments; for example:

...

KVM based vcpu

Running in a KVM/QEMU virtual machine

ACPI: found 42 cores, 42 enabled

1 virtio net device is detected

2 i40e devices are detected

...

The VSR automatically allocates CPUs between different task types:

A:vsr62-1# show card 1 virtual fp

===============================================================================

Card 1 Virtual Forwarding Plane Statistics

===============================================================================

Task vCPUs Average Maximum

Utilization Utilization

-------------------------------------------------------------------------------

NIC 1 0.00 % 0.00 %

Worker 34 0.03 % 0.04 %

Scheduler 1 0.00 % 0.00 %

===============================================================================

Verify the CPU pinning with the virsh vcpuinfo command and ensure that no other VMs are sharing the VSR’s resources:

[root@hyp62 ~]# virsh vcpuinfo vsr62-1

VCPU: 0

CPU: 1

State: running

CPU time: 40.5s

CPU Affinity: -y--------------------------------------------------------------------------------------

VCPU: 1

CPU: 45

State: running

CPU time: 8.3s

CPU Affinity: ---------------------------------------------y------------------------------------------

VCPU: 2

CPU: 2

State: running

CPU time: 8.3s

CPU Affinity: --y-------------------------------------------------------------------------------------

VCPU: 3

CPU: 46

State: running

CPU time: 8.5s

CPU Affinity: ----------------------------------------------y-----------------------------------------

...

Complete XML file example

The following example XML file is provided in a format that is easy to read and modify, with multiple mutually exclusive options that can be commented out.

<domain type='kvm'>

<!-- VM name -->

<name>vsr56-1</name>

<!-- UUID: remove to auto-generate a new UUID -->

<!-- Example UUID, do not use -->

<!-- <uuid>ab9711d2-f725-4e27-8a52-ffe1873c102f</uuid> -->

<!-- VM memory allocation depends on role and required scaling, see User Guide and Release Notes -->

<memory unit="G">64</memory>

<!-- Hugepages -->

<memoryBacking>

<hugepages>

<page size="1" unit="G" nodeset="0"/>

</hugepages>

<nosharepages/>

</memoryBacking>

<!-- Numatune: specifying NUMA node is required for systems with multiple NUMA nodes -->

<numatune>

<memory mode='strict' nodeset='0'/>

</numatune>

<!-- CPU features: ACPI is required for hyperthreading -->

<features>

<acpi/>

</features>

<!-- CPU mode: mode='host-model' with fallback='allow' is recommended -->

<cpu mode='host-model'>

<model fallback='allow'/>

<!-- topology is required when hyperthreading is enabled -->

<!-- <topology sockets='1' cores='21' threads='1'/> -->

<!-- <topology sockets='1' cores='21' threads='2'/> -->

</cpu>

<!-- CPU pinning: 'emulatorpin' is set to non-isolated CPUs that are not allocated to the VSR VM but are on the same CPU/NUMA node -->

<!-- NUMA 0 hyperthreading disabled -->

<vcpu placement='static'>21</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='2'/>

<vcpupin vcpu='1' cpuset='4'/>

<vcpupin vcpu='2' cpuset='6'/>

<vcpupin vcpu='3' cpuset='8'/>

<vcpupin vcpu='4' cpuset='10'/>

<vcpupin vcpu='5' cpuset='12'/>

<vcpupin vcpu='6' cpuset='14'/>

<vcpupin vcpu='7' cpuset='16'/>

<vcpupin vcpu='8' cpuset='18'/>

<vcpupin vcpu='9' cpuset='20'/>

<vcpupin vcpu='10' cpuset='22'/>

<vcpupin vcpu='11' cpuset='24'/>

<vcpupin vcpu='12' cpuset='26'/>

<vcpupin vcpu='13' cpuset='28'/>

<vcpupin vcpu='14' cpuset='30'/>

<vcpupin vcpu='15' cpuset='32'/>

<vcpupin vcpu='16' cpuset='34'/>

<vcpupin vcpu='17' cpuset='36'/>

<vcpupin vcpu='18' cpuset='38'/>

<vcpupin vcpu='19' cpuset='40'/>

<vcpupin vcpu='20' cpuset='42'/>

<emulatorpin cpuset='0'/>

</cputune>

<!-- NUMA 1 hyperthreading disabled

<vcpu placement='static'>21</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='3'/>

<vcpupin vcpu='1' cpuset='5'/>

<vcpupin vcpu='2' cpuset='7'/>

<vcpupin vcpu='3' cpuset='9'/>

<vcpupin vcpu='4' cpuset='11'/>

<vcpupin vcpu='5' cpuset='13'/>

<vcpupin vcpu='6' cpuset='15'/>

<vcpupin vcpu='7' cpuset='17'/>

<vcpupin vcpu='8' cpuset='19'/>

<vcpupin vcpu='9' cpuset='21'/>

<vcpupin vcpu='10' cpuset='23'/>

<vcpupin vcpu='11' cpuset='25'/>

<vcpupin vcpu='12' cpuset='27'/>

<vcpupin vcpu='13' cpuset='29'/>

<vcpupin vcpu='14' cpuset='31'/>

<vcpupin vcpu='15' cpuset='33'/>

<vcpupin vcpu='16' cpuset='35'/>

<vcpupin vcpu='17' cpuset='37'/>

<vcpupin vcpu='18' cpuset='39'/>

<vcpupin vcpu='19' cpuset='41'/>

<vcpupin vcpu='20' cpuset='43'/>

<emulatorpin cpuset='1'/>

</cputune>

-->

<!-- NUMA 0 hyperthreading enabled

<vcpu placement='static'>42</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='2'/>

<vcpupin vcpu='1' cpuset='46'/>

<vcpupin vcpu='2' cpuset='4'/>

<vcpupin vcpu='3' cpuset='48'/>

<vcpupin vcpu='4' cpuset='6'/>

<vcpupin vcpu='5' cpuset='50'/>

<vcpupin vcpu='6' cpuset='8'/>

<vcpupin vcpu='7' cpuset='52'/>

<vcpupin vcpu='8' cpuset='10'/>

<vcpupin vcpu='9' cpuset='54'/>

<vcpupin vcpu='10' cpuset='12'/>

<vcpupin vcpu='11' cpuset='56'/>

<vcpupin vcpu='12' cpuset='14'/>

<vcpupin vcpu='13' cpuset='58'/>

<vcpupin vcpu='14' cpuset='16'/>

<vcpupin vcpu='15' cpuset='60'/>

<vcpupin vcpu='16' cpuset='18'/>

<vcpupin vcpu='17' cpuset='62'/>

<vcpupin vcpu='18' cpuset='20'/>

<vcpupin vcpu='19' cpuset='64'/>

<vcpupin vcpu='20' cpuset='22'/>

<vcpupin vcpu='21' cpuset='66'/>

<vcpupin vcpu='22' cpuset='24'/>

<vcpupin vcpu='23' cpuset='68'/>

<vcpupin vcpu='24' cpuset='26'/>

<vcpupin vcpu='25' cpuset='70'/>

<vcpupin vcpu='26' cpuset='28'/>

<vcpupin vcpu='27' cpuset='72'/>

<vcpupin vcpu='28' cpuset='30'/>

<vcpupin vcpu='29' cpuset='74'/>

<vcpupin vcpu='30' cpuset='32'/>

<vcpupin vcpu='31' cpuset='76'/>

<vcpupin vcpu='32' cpuset='34'/>

<vcpupin vcpu='33' cpuset='78'/>

<vcpupin vcpu='34' cpuset='36'/>

<vcpupin vcpu='35' cpuset='80'/>

<vcpupin vcpu='36' cpuset='38'/>

<vcpupin vcpu='37' cpuset='82'/>

<vcpupin vcpu='38' cpuset='40'/>

<vcpupin vcpu='39' cpuset='84'/>

<vcpupin vcpu='40' cpuset='42'/>

<vcpupin vcpu='41' cpuset='86'/>

<emulatorpin cpuset='0,44'/>

</cputune>

-->

<!-- NUMA 1 hyperthreading enabled

<vcpu placement='static'>42</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='3'/>

<vcpupin vcpu='1' cpuset='47'/>

<vcpupin vcpu='2' cpuset='5'/>

<vcpupin vcpu='3' cpuset='49'/>

<vcpupin vcpu='4' cpuset='7'/>

<vcpupin vcpu='5' cpuset='51'/>

<vcpupin vcpu='6' cpuset='9'/>

<vcpupin vcpu='7' cpuset='53'/>

<vcpupin vcpu='8' cpuset='11'/>

<vcpupin vcpu='9' cpuset='55'/>

<vcpupin vcpu='10' cpuset='13'/>

<vcpupin vcpu='11' cpuset='57'/>

<vcpupin vcpu='12' cpuset='15'/>

<vcpupin vcpu='13' cpuset='59'/>

<vcpupin vcpu='14' cpuset='17'/>

<vcpupin vcpu='15' cpuset='61'/>

<vcpupin vcpu='16' cpuset='19'/>

<vcpupin vcpu='17' cpuset='63'/>

<vcpupin vcpu='18' cpuset='21'/>

<vcpupin vcpu='19' cpuset='65'/>

<vcpupin vcpu='20' cpuset='23'/>

<vcpupin vcpu='21' cpuset='67'/>

<vcpupin vcpu='22' cpuset='25'/>

<vcpupin vcpu='23' cpuset='69'/>

<vcpupin vcpu='24' cpuset='27'/>

<vcpupin vcpu='25' cpuset='71'/>

<vcpupin vcpu='26' cpuset='29'/>

<vcpupin vcpu='27' cpuset='73'/>

<vcpupin vcpu='28' cpuset='31'/>

<vcpupin vcpu='29' cpuset='75'/>

<vcpupin vcpu='30' cpuset='33'/>

<vcpupin vcpu='31' cpuset='77'/>

<vcpupin vcpu='32' cpuset='35'/>

<vcpupin vcpu='33' cpuset='79'/>

<vcpupin vcpu='34' cpuset='37'/>

<vcpupin vcpu='35' cpuset='81'/>

<vcpupin vcpu='36' cpuset='39'/>

<vcpupin vcpu='37' cpuset='83'/>

<vcpupin vcpu='38' cpuset='41'/>

<vcpupin vcpu='39' cpuset='85'/>

<vcpupin vcpu='40' cpuset='43'/>

<vcpupin vcpu='41' cpuset='87'/>

<emulatorpin cpuset='1,45'/>

</cputune>

-->

<!-- OS features -->

<os>

<type arch='x86_64' machine='pc'>hvm</type>

<boot dev='hd'/>

<smbios mode='sysinfo'/>

</os>

<!-- SMBIOS configuration -->

<sysinfo type='smbios'>

<system>

<entry name='product'>TiMOS:

address=138.120.224.192/24@active \

static-route=138.0.0.0/8@138.120.224.1 \

static-route=135.0.0.0/8@138.120.224.1 \

license-file=ftp://ftpuser:3LSaccess@172.16.29.91/license/VSR-I_license_22.txt \

primary-config=ftp://anonymous:pass@172.16.29.91/kvm/hyp56/vsr56-1.cfg \

chassis=vsr-i \

slot=A \

card=cpm-v \

slot=1 \

card=iom-v \

mda/1=m20-v \

mda/2=isa-ms-v \

system-base-mac=fa:ac:ff:ff:10:00 \

<!-- control-cpu-cores=2 \ -->

<!-- vsr-deployment-model=high-packet-touch \ -->

</entry>

</system>

</sysinfo>

<!-- Clock features -->

<clock offset='utc'>

<timer name='pit' tickpolicy='delay'/>

<timer name='rtc' tickpolicy='catchup'/>

<timer name='hpet' present='no'/>

</clock>

<!-- Devices -->

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<!-- CF3 QCOW2 -->

<disk type='file' device='disk'>

<driver name='qemu' type='qcow2' cache='none'/>

<source file='/var/lib/libvirt/images/vsr56-1.qcow2'/>

<target dev='hda' bus='virtio'/>

</disk>

<!-- Network interfaces -->

<!-- Management network: Linux bridge -->

<interface type='bridge'>

<source bridge='br-mgmt'/>

<model type='virtio'/>

<target dev='vsr1-mgmt'/>

</interface>

<!-- Linux bridge port 1

<interface type='bridge'>

<source bridge='br-mgmt'/>

<model type='virtio'/>

<target dev='vsr1-br1'/>

</interface>

-->

<!-- PCI Passthrough port 1 -->

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x04' slot='0x00' function='0x0'/>

</source>

<rom bar='off'/>

</hostdev>

<!-- SR-IOV port 1

<interface type='hostdev' managed='yes'>

<mac address="00:50:56:00:54:01"/>

<source>

<address type='pci' domain='0x0000' bus='0x83' slot='0x00' function='0x2'/>

</source>

<vlan>

<tag id='1001'/>

</vlan>

<target dev='vsr1_port_1/1/1'/>

</interface>

-->

<!-- PCI Passthrough port 2 -->

<hostdev mode='subsystem' type='pci' managed='yes'>

<source>

<address domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>

</source>

<rom bar='off'/>

</hostdev>

<!-- SR-IOV port 2

<interface type='hostdev' managed='yes'>

<mac address="00:50:56:00:54:02"/>

<source>

<address type='pci' domain='0x0000' bus='0x83' slot='0x00' function='0x4'/>

</source>

<vlan>

<tag id='200'/>

</vlan>

<target dev='vsr1_port_1/1/2'/>

</interface>

-->

<!-- Console redirected to virsh console -->

<console type='pty' tty='/dev/pts/1'>

<source path='/dev/pts/1'/>

<target type='serial' port='0'/>

<alias name='serial0'/>

</console>

<!-- Console port redirected to a TCP socket; the port number must be unique per hypervisor

<console type='tcp'>

<source mode='bind' host='0.0.0.0' service='2501'/>

<protocol type='telnet'/>

<target type='virtio' port='0'/>

</console>

-->

</devices>

<!-- Seclabel: required -->

<seclabel type='none'/>

</domain>

Conclusion

VSR VM configuration requires specific CPU pinning and the assignment of dedicated system resources to achieve high performance and stability. The examples in this chapter demonstrate various CPU numbering schemes and provisioning scenarios, and serve as a starting point when creating a new VSR VM.
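The vcpupin entries in the XML example follow a regular pattern, so they can be generated rather than typed by hand. The following Python sketch is one possible way to do this and is not part of the VSR documentation. The function name build_cputune and its parameters are hypothetical. The hyperthread sibling offset of 44 is inferred from the example (host CPU 2 is paired with host CPU 46) and may differ on other servers; verify the actual pairing on the host, for example with lscpu or in /sys/devices/system/cpu/cpuN/topology/thread_siblings_list, before using the output.

```python
def build_cputune(cores, sibling_offset=None, emulator_cores=(0,)):
    """Return libvirt <vcpu> and <cputune> XML lines for a set of host cores.

    cores: host CPU numbers of the physical cores dedicated to the VM.
    sibling_offset: distance from a core to its hyperthread sibling
        (assumed constant), or None when hyperthreading is disabled.
    emulator_cores: physical cores reserved for the emulator threads.
    """
    def with_siblings(core_list):
        # Place each core next to its hyperthread sibling, if any.
        cpus = []
        for core in core_list:
            cpus.append(core)
            if sibling_offset is not None:
                cpus.append(core + sibling_offset)
        return cpus

    host_cpus = with_siblings(cores)
    emulator_cpus = with_siblings(emulator_cores)

    lines = [f"<vcpu placement='static'>{len(host_cpus)}</vcpu>", "<cputune>"]
    for vcpu, cpu in enumerate(host_cpus):
        lines.append(f"<vcpupin vcpu='{vcpu}' cpuset='{cpu}'/>")
    emulator = ",".join(str(cpu) for cpu in emulator_cpus)
    lines.append(f"<emulatorpin cpuset='{emulator}'/>")
    lines.append("</cputune>")
    return lines


# NUMA 0 with hyperthreading enabled, using the numbering from the example:
# even-numbered cores 2 to 42 for the VM, core 0 for the emulator.
print("\n".join(build_cputune(range(2, 44, 2), sibling_offset=44)))
```

For NUMA 1, the same call with range(3, 44, 2) and emulator_cores=(1,) would produce the odd-numbered variant. Omitting sibling_offset gives the 21-vCPU layout for hosts with hyperthreading disabled.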