Virtual Private LAN Service (VPLS), defined in RFC 4762, Virtual Private LAN Service (VPLS) Using Label Distribution Protocol (LDP) Signaling, extends Ethernet LAN services across a routed, traffic-engineered MPLS backbone using MPLS tunneling, without running (M)STP across the backbone. As a result, VPLS has been deployed on a large scale in service provider networks.

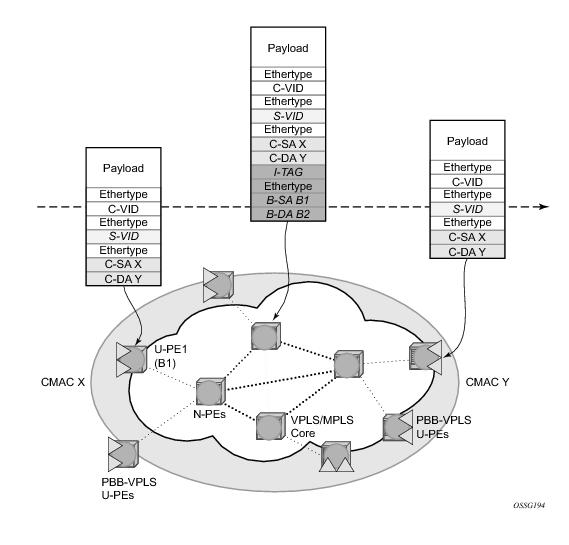

Another VPLS context (I-VPLS) can be used to provide the multipoint I-component functionality emulating the ELAN service (refer to the triangle marked “I” in Figure 54). Like B-VPLS, I-VPLS inherits the pseudowire (SDP binding) and native Ethernet (SAP) handoffs from regular VPLS, and so accommodates different types of access: for example, direct customer link, QinQ, or HVPLS.

The SROS model supports PBB Epipes and I-VPLS services on the B-VPLS. SPB is added to B-VPLS in place of other control planes (see Table 12). SPB runs in a separate instance of IS-IS. SPB is configured in a single B-VPLS service instance that controls the SPB behavior (via IS-IS parameters) for the SPB IS-IS session between nodes. Up to four independent instances of SPB can be configured, and each SPB instance requires a separate control B-VPLS service. A typical SPB deployment uses a single control VPLS with zero, one, or more user B-VPLS instances. SPB is multi-topology (MT) capable in the IS-IS LSP TLV definitions; however, logical instances offer nearly the same capability as MT. The SROS SPB implementation always uses MT topology instance zero. Area addresses are not used, and SPB is assumed to be a single area. SPB must be consistently configured on nodes in the system. SPB Regions information and IS-IS hello logic that detect mismatched configuration are not supported.

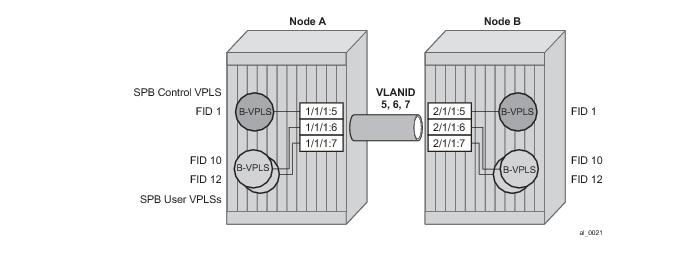

Figure 56 illustrates two switches where an SPB control B-VPLS is configured with FID 1 and uses SAP 1/1/1:5, and therefore VLAN tag 5, on the link. SAP 1/1/1:1 could also have been used, but in SROS the VID does not have to equal the FID. Alternatively, an MPLS PW (spoke SDP binding) could be used for some interfaces in place of the SAP.

Figure 56 illustrates a control VPLS and two user B-VPLS. The user B-VPLS must share the same topology and are required to have interfaces on SAPs/spoke SDPs on the same links or LAG groups as the control B-VPLS. To allow services on different B-VPLS to use different paths when multiple paths exist, a different ECT algorithm can be configured on a B-VPLS instance. In this case, the user B-VPLS still fate-share the same topology, but they may use different paths for data traffic; see Shortest Path and Single Tree.

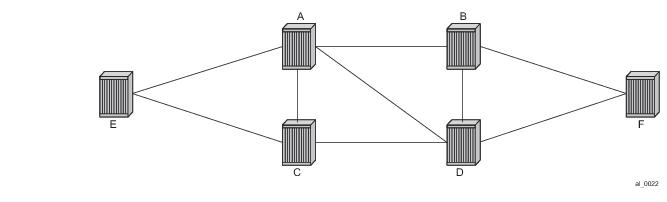

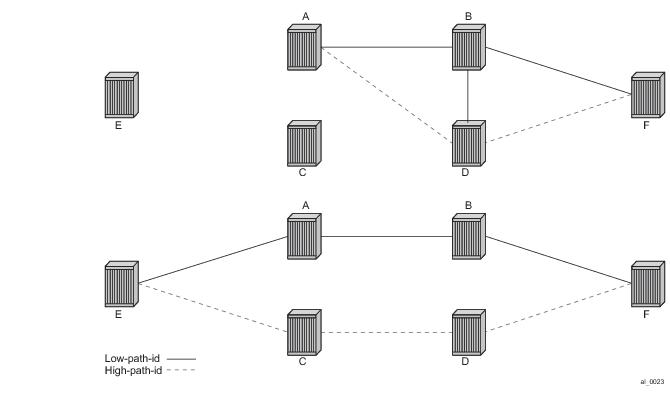

Assume that Node A has the lowest Bridge Identifier and is the multicast root node, and that all links have equal metrics. Also assume that Bridge Identifiers are ordered such that Node A has a numerically lower Bridge Identifier than Node B, Node B has a lower Bridge Identifier than Node C, and so on. Unicast paths are configured to use the shortest path tree (SPT). Figure 58 shows the shortest paths computed from Node A and Node E to Node F. There are only two shortest paths from A to F: the low-path-id algorithm uses Node B as the transit node, and the high-path-id algorithm uses Node D. The reverse paths from Node F to A are the same (all unicast paths are reverse path congruent). From Node E to Node F there are three paths: E-A-B-F, E-A-D-F, and E-C-D-F. The low-path-id algorithm uses path E-A-B-F and the high-path-id algorithm uses E-C-D-F. These paths are also disjoint and reverse path congruent. Note that any nodes that are directly connected in this network have only one path between them (not shown for simplicity).
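The tie-breaking described above can be sketched in Python. This is a simplified illustration, not the SROS implementation: the link list is inferred from the paths named in the text (the figure is not reproduced here), the integer Bridge Identifiers are hypothetical, and the path comparison is a reduced model of the ECT algorithms in which low-path-id prefers the lowest sorted list of Bridge Identifiers and high-path-id prefers the highest list when sorted in descending order.

```python
from collections import deque

# Topology inferred from the paths named in the text (an assumption).
# All links have equal metric, so hop count is the path cost.
LINKS = [("A", "B"), ("A", "D"), ("A", "E"), ("B", "F"),
         ("C", "D"), ("C", "E"), ("D", "F")]

# Hypothetical integer Bridge Identifiers, ordered A < B < ... < F.
BRIDGE_ID = {name: i for i, name in enumerate("ABCDEF", start=1)}


def shortest_paths(src, dst):
    """Return every minimum-hop path from src to dst (breadth-first search)."""
    neighbors = {}
    for a, b in LINKS:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)
    best, found = None, []
    queue = deque([[src]])
    while queue:
        path = queue.popleft()
        if best is not None and len(path) > best:
            break  # every remaining candidate is longer than the shortest
        if path[-1] == dst:
            best = len(path)
            found.append(path)
            continue
        for nxt in neighbors[path[-1]]:
            if nxt not in path:
                queue.append(path + [nxt])
    return found


def pick(paths, algorithm):
    """Select one of several equal-cost paths (simplified tie-break model)."""
    if algorithm == "low-path-id":
        return min(paths, key=lambda p: sorted(BRIDGE_ID[n] for n in p))
    return max(paths,
               key=lambda p: sorted((BRIDGE_ID[n] for n in p), reverse=True))


if __name__ == "__main__":
    for src, dst in (("A", "F"), ("E", "F")):
        candidates = shortest_paths(src, dst)
        print(src, dst, "low :", "-".join(pick(candidates, "low-path-id")))
        print(src, dst, "high:", "-".join(pick(candidates, "high-path-id")))
```

Because the selection key depends only on the set of nodes on a path, the same choice is made in both directions, which is one way to see why the unicast paths are reverse path congruent in this model.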

Figure 60 shows an example network of four nodes with SPB B-VPLS. The SPB instance is configured on B-VPLS 100001. B-VPLS 100001 uses FID 1 for SPB instance 1024. All BMACs and I-SIDs are learned in the context of B-VPLS 100001. B-VPLS 100001 has an I-VPLS service 10001, which also uses I-SID 10001. B-VPLS 100001 is configured to use VID 1 on SAPs 1/2/2 and 1/2/3. While the VID does not need to be the same as the FID, it does need to be the same on the other side of each link (Dut-B and Dut-C).

A user B-VPLS service 100002 is configured, and it uses control B-VPLS 100001 to provide forwarding. It fate-shares the control topology. In Figure 60, the control B-VPLS uses the low-path-id algorithm and the user B-VPLS uses the high-path-id algorithm. Note that any B-VPLS can use any algorithm. The difference is illustrated in the path between Dut-A and Dut-D. The short dashed line through Dut-B is the low-path-id algorithm and the long dashed line through Dut-C is the high-path-id algorithm.

Dut-A:

Control B-VPLS:

*A:Dut-A>config>service>vpls# pwc

-------------------------------------------------------------------------------

Present Working Context :

-------------------------------------------------------------------------------

<root>

configure

service

vpls "100001"

-------------------------------------------------------------------------------

*A:Dut-A>config>service>vpls# info

----------------------------------------------

pbb

source-bmac 00:10:00:01:00:01

exit

stp

shutdown

exit

spb 1024 fid 1 create

level 1

ect-algorithm fid-range 100-100 high-path-id

exit

no shutdown

exit

sap 1/2/2:1.1 create

spb create

no shutdown

exit

exit

sap 1/2/3:1.1 create

spb create

no shutdown

exit

exit

no shutdown

----------------------------------------------

User B-VPLS:

*A:Dut-A>config>service>vpls# pwc

-------------------------------------------------------------------------------

Present Working Context :

-------------------------------------------------------------------------------

<root>

configure

service

vpls "100002"

-------------------------------------------------------------------------------

*A:Dut-A>config>service>vpls# info

----------------------------------------------

pbb

source-bmac 00:10:00:02:00:01

exit

stp

shutdown

exit

spbm-control-vpls 100001 fid 100

sap 1/2/2:1.2 create

exit

sap 1/2/3:1.2 create

exit

no shutdown

----------------------------------------------

I-VPLS:

configure service

vpls 10001 customer 1 i-vpls create

service-mtu 1492

pbb

backbone-vpls 100001

exit

exit

stp

shutdown

exit

sap 1/2/1:1000.1 create

exit

no shutdown

exit

vpls 10002 customer 1 i-vpls create

service-mtu 1492

pbb

backbone-vpls 100002

exit

exit

stp

shutdown

exit

sap 1/2/1:1000.2 create

exit

no shutdown

exit

exit

The show base commands output a summary of the instance parameters under a control B-VPLS. The show command for a user B-VPLS indicates the control B-VPLS. Note that the base parameters, except for Bridge Priority and Bridge ID, must match on neighbor nodes.

*A:Dut-A# show service id 100001 spb base

===============================================================================

Service SPB Information

===============================================================================

Admin State : Up Oper State : Up

ISIS Instance : 1024 FID : 1

Bridge Priority : 8 Fwd Tree Top Ucast : spf

Fwd Tree Top Mcast : st

Bridge Id : 80:00.00:10:00:01:00:01

Mcast Desig Bridge : 80:00.00:10:00:01:00:01

===============================================================================

ISIS Interfaces

===============================================================================

Interface Level CircID Oper State L1/L2 Metric

-------------------------------------------------------------------------------

sap:1/2/2:1.1 L1 65536 Up 10/-

sap:1/2/3:1.1 L1 65537 Up 10/-

-------------------------------------------------------------------------------

Interfaces : 2

===============================================================================

FID ranges using ECT Algorithm

-------------------------------------------------------------------------------

1-99 low-path-id

100-100 high-path-id

101-4095 low-path-id

===============================================================================
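The "FID ranges using ECT Algorithm" table above can be reproduced from the single configured override (`ect-algorithm fid-range 100-100 high-path-id`). The following Python sketch is illustrative only: the function names are hypothetical, overrides are assumed not to overlap, and the low-path-id default and the 1 to 4095 FID span are taken from the show output rather than from a stated rule.

```python
def ect_table(overrides, default="low-path-id", first=1, last=4095):
    """Expand fid-range overrides into contiguous (start, end, algorithm) rows.

    overrides: iterable of (start, end, algorithm), assumed non-overlapping.
    """
    rows, cursor = [], first
    for start, end, algorithm in sorted(overrides):
        if start > cursor:
            rows.append((cursor, start - 1, default))  # gap keeps the default
        rows.append((start, end, algorithm))
        cursor = end + 1
    if cursor <= last:
        rows.append((cursor, last, default))
    return rows


def algorithm_for(fid, rows):
    """Look up the ECT algorithm that applies to one FID."""
    for start, end, algorithm in rows:
        if start <= fid <= end:
            return algorithm
    raise ValueError(f"FID {fid} is outside the table")


if __name__ == "__main__":
    table = ect_table([(100, 100, "high-path-id")])
    for start, end, algorithm in table:
        print(f"{start}-{end} {algorithm}")
```

With the override from the control B-VPLS configuration, FID 1 (the control B-VPLS) resolves to low-path-id and FID 100 (the user B-VPLS) resolves to high-path-id.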

The show adjacency command displays the system ID of each connected SPB B-VPLS neighbor and the interface used to reach that neighbor.

*A:Dut-A# show service id 100001 spb adjacency

===============================================================================

ISIS Adjacency

===============================================================================

System ID Usage State Hold Interface MT Enab

-------------------------------------------------------------------------------

Dut-B L1 Up 19 sap:1/2/2:1.1 No

Dut-C L1 Up 21 sap:1/2/3:1.1 No

-------------------------------------------------------------------------------

Adjacencies : 2

===============================================================================

Details about the topology can be displayed with the database command. There is a detail option that displays the contents of the LSPs.

*A:Dut-A# show service id 100001 spb database

===============================================================================

ISIS Database

===============================================================================

LSP ID Sequence Checksum Lifetime Attributes

-------------------------------------------------------------------------------

Displaying Level 1 database

-------------------------------------------------------------------------------

Dut-A.00-00 0xc 0xbaba 1103 L1

Dut-B.00-00 0x13 0xe780 1117 L1

Dut-C.00-00 0x13 0x85a 1117 L1

Dut-D.00-00 0xe 0x174a 1119 L1

Level (1) LSP Count : 4

===============================================================================

The show routes command displays the next-hop interface for both unicast and multicast MAC addresses. The path to Dut-D illustrates the effect of the ECT algorithm: for FID 1 (low-path-id, MAC 00:10:00:01:00:04) the neighbor is Dut-B, and for FID 100 (high-path-id, MAC 00:10:00:02:00:04) the neighbor is Dut-C. Because Dut-A is the root of the multicast single tree, the multicast forwarding is the same as the unicast forwarding on Dut-A. However, unicast and multicast routes will differ on most other nodes. The I-SIDs also exist on all of the nodes, so I-SID based multicast follows the multicast tree exactly. If the I-SID had not existed on Dut-B or Dut-D, there would be no entry for FID 1. Note that only designated nodes (root nodes) show metrics; non-designated nodes do not.

*A:Dut-A# show service id 100001 spb routes

================================================================

MAC Route Table

================================================================

Fid MAC Ver. Metric

NextHop If SysID

----------------------------------------------------------------

Fwd Tree: unicast

----------------------------------------------------------------

1 00:10:00:01:00:02 10 10

sap:1/2/2:1.1 Dut-B

1 00:10:00:01:00:03 10 10

sap:1/2/3:1.1 Dut-C

1 00:10:00:01:00:04 10 20

sap:1/2/2:1.1 Dut-B

100 00:10:00:02:00:02 10 10

sap:1/2/2:1.1 Dut-B

100 00:10:00:02:00:03 10 10

sap:1/2/3:1.1 Dut-C

100 00:10:00:02:00:04 10 20

sap:1/2/3:1.1 Dut-C

Fwd Tree: multicast

----------------------------------------------------------------

1 00:10:00:01:00:02 10 10

sap:1/2/2:1.1 Dut-B

1 00:10:00:01:00:03 10 10

sap:1/2/3:1.1 Dut-C

1 00:10:00:01:00:04 10 20

sap:1/2/2:1.1 Dut-B

100 00:10:00:02:00:02 10 10

sap:1/2/2:1.1 Dut-B

100 00:10:00:02:00:03 10 10

sap:1/2/3:1.1 Dut-C

100 00:10:00:02:00:04 10 20

sap:1/2/3:1.1 Dut-C

----------------------------------------------------------------

No. of MAC Routes: 12

================================================================

================================================================

ISID Route Table

================================================================

Fid ISID Ver.

NextHop If SysID

----------------------------------------------------------------

1 10001 10

sap:1/2/2:1.1 Dut-B

sap:1/2/3:1.1 Dut-C

100 10002 10

sap:1/2/2:1.1 Dut-B

sap:1/2/3:1.1 Dut-C

----------------------------------------------------------------

No. of ISID Routes: 2

================================================================

The show service spb fdb command shows the programmed unicast and multicast source MACs in an SPB-managed B-VPLS service.

*A:Dut-A# show service id 100001 spb fdb

==============================================================================

User service FDB information

==============================================================================

MacAddr UCast Source State MCast Source State

------------------------------------------------------------------------------

00:10:00:01:00:02 1/2/2:1.1 ok 1/2/2:1.1 ok

00:10:00:01:00:03 1/2/3:1.1 ok 1/2/3:1.1 ok

00:10:00:01:00:04 1/2/2:1.1 ok 1/2/2:1.1 ok

------------------------------------------------------------------------------

Entries found: 3

==============================================================================

*A:Dut-A# show service id 100002 spb fdb

==============================================================================

User service FDB information

==============================================================================

MacAddr UCast Source State MCast Source State

------------------------------------------------------------------------------

00:10:00:02:00:02 1/2/2:1.2 ok 1/2/2:1.2 ok

00:10:00:02:00:03 1/2/3:1.2 ok 1/2/3:1.2 ok

00:10:00:02:00:04 1/2/3:1.2 ok 1/2/3:1.2 ok

------------------------------------------------------------------------------

Entries found: 3

==============================================================================

The show service spb mfib command shows the programmed multicast I-SID PBB group MAC addresses in an SPB-managed B-VPLS service. Note that other types of *,G multicast traffic are sent over the multicast tree, and these MACs are not shown. For example, OAM traffic that uses multicast (such as vMEP CCMs) takes this path.

*A:Dut-A# show service id 100001 spb mfib

===============================================================================

User service MFIB information

===============================================================================

MacAddr ISID Status

-------------------------------------------------------------------------------

01:1E:83:00:27:11 10001 Ok

-------------------------------------------------------------------------------

Entries found: 1

===============================================================================

*A:Dut-A# show service id 100002 spb mfib

===============================================================================

User service MFIB information

===============================================================================

MacAddr ISID Status

-------------------------------------------------------------------------------

01:1E:83:00:27:12 10002 Ok

-------------------------------------------------------------------------------

Entries found: 1

===============================================================================
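The group MAC addresses in the MFIB output above follow the PBB convention of appending the 24-bit I-SID to the 01:1E:83 prefix. A minimal Python sketch of that mapping follows; the function name is hypothetical and the code is only meant to show the arithmetic.

```python
def isid_group_mac(isid: int) -> str:
    """Build the PBB group MAC for an I-SID: 01:1E:83 followed by the 24-bit I-SID."""
    if not 0 <= isid <= 0xFFFFFF:
        raise ValueError("an I-SID is a 24-bit value")
    octets = [0x01, 0x1E, 0x83,
              (isid >> 16) & 0xFF, (isid >> 8) & 0xFF, isid & 0xFF]
    return ":".join(f"{octet:02X}" for octet in octets)


if __name__ == "__main__":
    for isid in (10001, 10002):
        print(isid, isid_group_mac(isid))
```

I-SID 10001 is 0x002711 and I-SID 10002 is 0x002712, which matches the two MFIB entries shown for services 100001 and 100002.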

In a real life deployment, different customer VPNs do not share the same community of interest – for example, VPN instances may be located on different PBB PEs. The M:1 model depicted in Figure 62 requires a per VPN flood containment mechanism so that VPN traffic is distributed just to the B-VPLS locations that have customer VPN sites: for example, flooded traffic originated in the blue I-VPLS should be distributed just to the PBB PEs where blue I-VPLS instances are present – PBB PE B, E and F.

• SF/CPM switchover due to STP reconvergence

Figure 63 depicts the case of an inter-domain deployment where multiple metro domains (MANs) are interconnected through a wide area network (WAN). A BVPLS is configured across these domains running the PBB M:1 model to provide infrastructure for multiple IVPLS services. MMRP is enabled in the BVPLS to build per-IVPLS flooding trees. To limit the load on the core PEs or PBB BCBs, the local IVPLS instances must use MMRP and data plane resources only in the MAN regions where they have sites. A solution to the above requirements is depicted in Figure 64. The case of native PBB metro domains interconnected via an MPLS core is used in this example. Other technology combinations are possible.

*A:ALA-7>config>service>mrp# info

----------------------------------------------

mrp-policy "test" create

default-action block

entry 1 create

match

isid 100 to 150

exit

action allow

exit

exit

----------------------------------------------
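The effect of the MRP policy above can be sketched in Python as a first-match lookup over I-SID ranges with a fallback to the default action. This is an illustrative model only: the class and function names are hypothetical, and evaluation in entry order with first match winning is an assumption, not something the configuration listing states.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    """One policy entry: an inclusive I-SID range and the action to apply."""
    low: int
    high: int
    action: str


def evaluate(isid, entries, default_action):
    """Return the action of the first entry whose range contains the I-SID."""
    for entry in entries:
        if entry.low <= isid <= entry.high:
            return entry.action
    return default_action


# Mirrors mrp-policy "test": allow I-SIDs 100 to 150, block everything else.
TEST_POLICY = [Entry(100, 150, "allow")]

if __name__ == "__main__":
    for isid in (99, 100, 150, 151):
        print(isid, evaluate(isid, TEST_POLICY, default_action="block"))
```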

----------------------------------------------

A:ALA-7>config>filter# info

----------------------------------------------

mac-filter 90 create

description "filter-wan-man"

type isid

scope template

entry 1 create

description "drop-local-isids"

match

isid from 100 to 1000

exit

action drop

exit

----------------------------------------------

The secondary path requires a MEP to exchange the G.8031 APS PDUs. The following Ethernet CFM configuration in the eth-tunnel>path>eth-cfm>mep context can be used to enable the G.8031 protection without activating the Ethernet CCMs:

In Figure 67, the G.8031 Ethernet tunnels are used by the B-SAP(s) mapped to the green BVPLS entities supporting the ELINE services. A LAG-like loadsharing solution is provided for the Multipoint BVPLS (white circles) supporting the ELAN (IVPLS) services. The green G.8031 tunnels co-exist with LAG-emulating Ethernet tunnels (loadsharing mode) on both BEB-BCB and BCB-BCB physical links.

PBB Blackholing Issue — Assuming that the link between PE A1 and node 5 is active, the remote PEs participating in the orange VPN (for example, PE D) will learn the CMAC X associated with backbone MAC A1. Under failure of the link between node 5 and PE A1 and activation of link to PE A2, the remote PEs (for example, PE D) will black-hole the traffic destined for customer MAC X to BMAC A1 until the aging timer expires or a packet flows from X to Y through the PE A2. This may take a long time (default aging timer is 5 minutes) and may affect a large number of flows across multiple I-VPLSes.

For flush-all-but-mine indication (“positive flush”):

For flush-all-from-me indication (“negative flush”)

An example of processing steps involved in PBB MAC Flush is depicted in Figure 69 for the case when a Topology Change Notification (TCN) is received on PBB PE 2 from a QinQ access in the I-VPLS domain.

A PBB-TLV is added by PE2 to the regular LDP MAC flush-all-but-mine. BMAC2, the source BMAC associated with B-VPLS on PE2 is carried inside the PBB TLV to indicate who “mine” is. The ISID list identifying the I-VPLS affected by the TCN is also included if the number of affected I-VPLS is 100 or less. No ISID list is included in the PBB-TLV if more than 100 ISIDs are affected. If no ISID list is included, then the receiving PBB PE will flush all the local I-VPLS instances associated with the B-VPLS context identified by the FEC TLV in the LDP MAC withdraw message. This is done to speed up delivery and processing of the message.
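The ISID-list rule described above can be sketched in Python. The data structures and function names are hypothetical; the sketch covers only what the paragraph states: the sender includes the ISID list when 100 or fewer I-VPLS instances are affected, and a receiver that finds no list flushes every local I-VPLS associated with the B-VPLS. It deliberately does not model which individual MAC entries are flushed.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set, Tuple

MAX_ISIDS_IN_TLV = 100  # threshold stated in the text


@dataclass(frozen=True)
class PbbTlv:
    """Hypothetical in-memory view of the PBB TLV contents."""
    source_bmac: str                   # identifies who "mine" is
    isids: Optional[Tuple[int, ...]]   # None means the ISID list was omitted


def build_pbb_tlv(source_bmac: str, affected_isids: Iterable[int]) -> PbbTlv:
    """Sender side: include the ISID list only if it has 100 entries or fewer."""
    isids = tuple(sorted(set(affected_isids)))
    if len(isids) > MAX_ISIDS_IN_TLV:
        return PbbTlv(source_bmac, None)
    return PbbTlv(source_bmac, isids)


def ivpls_to_flush(tlv: PbbTlv, local_isids: Iterable[int]) -> Set[int]:
    """Receiver side: pick the local I-VPLS instances (by ISID) to flush.

    local_isids are the I-VPLS instances bound to the B-VPLS identified by
    the FEC TLV of the received message.
    """
    local = set(local_isids)
    if tlv.isids is None:
        return local                   # no list: flush all of them
    return local & set(tlv.isids)


if __name__ == "__main__":
    small = build_pbb_tlv("BMAC2", [10, 20, 30])
    large = build_pbb_tlv("BMAC2", range(1, 102))   # 101 affected ISIDs
    print(ivpls_to_flush(small, [20, 30, 40]))
    print(ivpls_to_flush(large, [20, 30, 40]))
```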

A PBB network using Native Ethernet core is depicted in Figure 70. MC-LAG is used to multi-home a number of edge switches running QinQ to PBB BEBs.

The topology depicted in Figure 71 describes the details of the solution for the I-VPLS use case. Although the native PBB use case is used, the solution works the same for any other PBB infrastructure: for example, G.8031 Ethernet tunnels, pseudowire/MPLS, or a combination.

Figure 72 depicts the case of access link failure.

Figure 76 depicts a specific use case. Keeping the same topology - an ingress PBB PE, a PBB core and an egress PBB PE - let us consider the generic use case where:

→ Case 1: SAP type = null/dot1q default (1/1/1 or 1/1/1.*) so there is no service delimiting tag used and stripped on the ingress side.

→ Case 2: SAP type = dot1q or qinq default (1/1/1.100 or 1/1/1.100.*) so there is a service delimiting tag used and stripped.

→ Case 3: SAP type = qinq (1/1/1.100.10) so there are two service delimiting tags used and stripped.

→ Case 1: SDP vc-type = Ethernet (force-vlan-vc-forwarding = not supported for I-PW) so there is no service delimiting tag stripped on the ingress side.

→ Case 2: SDP vc-type = Ethernet VLAN so there is a service delimiting tag stripped.

→ Case 1: SAP type = null/dot1q default (2/2/2 or 2/2/2.*) so there is no service delimiting tag added on the egress side.

→ Case 2: SAP type = dot1q/qinq default (3/1/1.300 or 3/1/1.300.*) so a service delimiting tag is added on egress.

→ Case 3: SAP type = qinq (3/1/1.300.30) so two service delimiting tags are added on egress.

− If the qinq-mark-top-only command under vpls>sap>egress is not enabled (default), the policy is applied to both service delimiting tags.

→ Case 1: I-SDP vc-type = Ethernet VLAN so there is a service delimiting tag added after PW encapsulation.

→ Case 2: I-SDP vc-type = Ethernet (force-vlan-vc-forwarding = not supported for I-SDPs) so there is no service delimiting tag added on the egress PW.
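The SAP cases above reduce to counting the explicit (non-wildcard) tags in the SAP identifier. The following Python sketch illustrates that rule using the dotted notation from the cases above; the function name is hypothetical and the parsing is deliberately minimal.

```python
def service_delimiting_tags(sap: str) -> int:
    """Count explicit tags in a SAP written as port[.tag[.tag]].

    A "*" stands for a default (wildcard) tag and is not service delimiting.
    """
    _port, *tags = sap.split(".")
    if len(tags) > 2:
        raise ValueError("at most two tags (qinq) are expected")
    return sum(1 for tag in tags if tag != "*")


if __name__ == "__main__":
    for sap in ("1/1/1", "1/1/1.*", "1/1/1.100", "1/1/1.100.*", "1/1/1.100.10"):
        print(sap, service_delimiting_tags(sap))
```

The same count applies in both directions: it is the number of tags stripped on ingress and the number of tags added on egress for a SAP of that type.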

The user adds or removes members to the encap-group, one at a time or as a range of contiguous values. However, when the qos-per-member option is enabled, members must be added or removed one at a time. These members are also referred to as ISID contexts.

[no] member encap-id [to encap-id]

The qos-per-member keyword allows the user to specify that a separate queue set instance and scheduler/agg-rate-limit instance will be created for each ISID value in the encap-group. By default, shared instances will be created for the entire encap-group.

• When qos-per-member is specified in the encap-group creation, the user must add or remove ISID members one at a time. The command fails if a range is entered.

• When qos-per-member is specified in the encap-group creation, the sap-egress QoS policy ID and the scheduler policy name cannot be changed unless the group membership is empty. However, the agg-rate-limit parameter value can be changed or the command removed (no agg-rate-limit).

• When qos-per-member is not specified in the encap-group creation, the user may add or remove ISID members as a singleton or as a range of contiguous values.

• When qos-per-member is not specified in the encap-group creation, the sap-egress QoS policy ID and the scheduler policy name or agg-rate-limit parameter value may be changed at any time. Note, however, that the user still cannot remove the SAP egress QoS policy (no qos) while there are members defined in the encap-group.

- The member command is all-or-nothing. No ISID in a range is added if one fails.
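The membership rules above can be sketched as a small Python model. It is illustrative only: the class is hypothetical, and "one fails" is modelled here as an ISID that is already a member of the group, which is an assumption since the text does not list the failure conditions.

```python
class EncapGroup:
    """Hypothetical model of an ISID encap-group's member command."""

    def __init__(self, name, qos_per_member=False):
        self.name = name
        self.qos_per_member = qos_per_member
        self.members = set()

    def add(self, first, last=None):
        """Add one ISID, or a contiguous range first..last, all-or-nothing."""
        if last is not None and self.qos_per_member:
            raise ValueError("qos-per-member groups accept one ISID at a time")
        last = first if last is None else last
        wanted = set(range(first, last + 1))
        # Assumed failure condition: any requested ISID is already present.
        clash = wanted & self.members
        if clash:
            raise ValueError(f"ISIDs already present: {sorted(clash)}")
        self.members |= wanted  # only reached if every ISID passed


if __name__ == "__main__":
    grouped = EncapGroup("type1-grouped")
    grouped.add(1, 10)
    try:
        grouped.add(8, 12)          # 8..10 clash, so 11 and 12 are not added
    except ValueError as err:
        print("rejected:", err)
    print(sorted(grouped.members))
```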

Figure 77 displays an example of egress queue scheduling.

The user type in Figure 77 maps to a specific encap-group defined for the B-SAP in CLI. The operator has the flexibility of scheduling many user types by assigning different scheduling parameters as follows:

The port scheduler max-rate and priority level rates and weights, if a Weighted Scheduler Group is used, are always “on-the-wire” rates and thus use the actual frame size. The same applies to the agg-rate-limit on a SAP, a subscriber, or a Multi-Service Site (MSS) when the queue is port-parented.

When the user enables frame-based-accounting in a scheduler policy or queue-frame-based-accounting with agg-rate-limit in a port scheduler policy, the queue rate is capped to a user-configured “on-the-wire” rate but the packet-byte-offset value is still in effect as explained above.

config

qos

port-scheduler-policy "bvpls-backbone-port-scheduler"

group scheduler-group1 create

rate 1000

level 3 rate 1000 group scheduler-group1 weight w1

level 4 rate 1000 group scheduler-group1 weight w4

level 5 rate 1000 cir-rate 100

level 7 rate 5000 cir-rate 5000

level 8 rate 500 cir-rate 500

exit

scheduler-policy "user-type1"

tier 1

scheduler root

port-parent level 8 rate pir1 weight w-pir1 cir-level 8 cir-rate cir1 cir-weight w-cir1

exit

tier 3

scheduler wfq

rate pir1

parent root

exit

exit

exit

scheduler-policy "user-type2"

tier 1

scheduler root

port-parent level 7 rate pir2 weight w-pir2 cir-level 7 cir-rate cir2 cir-weight w-cir2

exit

tier 3

scheduler wfq

rate pir2

parent root

exit

exit

exit

scheduler-policy "b-sap"

tier 1

scheduler root

port-parent level 5 rate pir5 weight w-pir5 cir-level 1 cir-rate cir5 cir-weight w-cir5

exit

tier 3

scheduler wfq

rate pir5

parent root

exit

exit

exit

sap-egress 100 // user type 1 QoS policy

queue 1

parent wfq weight x level 3 cir-weight x cir-level 3

packet-byte-offset subtract bytes 22

queue 2

packet-byte-offset subtract bytes 22

parent wfq weight y level 3 cir-weight y cir-level 3

queue 3

packet-byte-offset subtract bytes 22

parent wfq weight z level 3 cir-weight z cir-level 3

queue 4

parent root level 8 cir-level 8

packet-byte-offset subtract bytes 22

fc be queue 1

fc l2 queue 2

fc h2 queue 3

fc ef queue 4

exit

sap-egress 200 // user type 2 QoS policy

queue 1

parent wfq weight x level 3 cir-weight x cir-level 3

packet-byte-offset subtract bytes 26

queue 2

parent wfq weight y level 3 cir-weight y cir-level 3

packet-byte-offset subtract bytes 26

queue 3

parent wfq weight z level 3 cir-weight z cir-level 3

packet-byte-offset subtract bytes 26

queue 4

parent root level 8 cir-level 8

packet-byte-offset subtract bytes 26

fc be queue 1

fc l2 queue 2

fc h2 queue 3

fc ef queue 4

exit

sap-egress 300 // User type 3 QoS policy

queue 1

port-parent level 4 rate pir3 weight w-pir3 cir-level 4 cir-rate cir3 cir-weight w-cir3

packet-byte-offset subtract bytes 22

fc be queue 1

exit

sap-egress 400 // User type 4 QoS policy

queue 1

port-parent level 3 rate pir4 weight w-pir4 cir-level 3 cir-rate cir4 cir-weight w-cir4

packet-byte-offset subtract bytes 22

fc be queue 1

exit

sap-egress 500 // B-SAP default QoS policy

queue 1

parent wfq weight x level 3 cir-weight x cir-level 3

queue 2

parent wfq weight y level 3 cir-weight y cir-level 3

queue 3

parent wfq weight z level 3 cir-weight z cir-level 3

queue 4

parent root level 8 cir-level 8

fc be queue 1

fc l2 queue 2

fc h2 queue 3

fc ef queue 4

exit

exit

exit
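The packet-byte-offset values in the sap-egress policies above can be related to the PBB encapsulation with a little arithmetic. The sketch below assumes that the 22 bytes subtracted correspond to the PBB header (B-DA, B-SA, B-TAG, and I-TAG) and that 26 corresponds to one additional 4-byte tag; the configuration does not state either reading, so both are labelled as assumptions, and the function names are hypothetical.

```python
# Field sizes of the PBB (IEEE 802.1ah) encapsulation, in bytes.
PBB_HEADER = {"B-DA": 6, "B-SA": 6, "B-TAG": 4, "I-TAG": 6}


def pbb_overhead(extra_tags: int = 0) -> int:
    """PBB header size plus any additional 4-byte VLAN tags (assumption)."""
    return sum(PBB_HEADER.values()) + 4 * extra_tags


def accounted_bytes(frame_bytes: int, subtract: int) -> int:
    """Bytes charged to the queue after 'packet-byte-offset subtract bytes N'."""
    return max(frame_bytes - subtract, 0)


if __name__ == "__main__":
    print("overhead, no extra tag :", pbb_overhead())
    print("overhead, one extra tag:", pbb_overhead(1))
    print("accounted size of a 1022-byte frame:", accounted_bytes(1022, 22))
```

Under these assumptions, subtracting the offset makes the queue rate reflect the customer frame size, while the port scheduler rates remain on-the-wire rates.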

config

service

vpls 100 bvpls

sap 1/1/1:100

egress

encap-defined-qos

encap-group type1-grouped type isid

member 1 to 10

qos 100

scheduler-policy user-type1

exit

encap-group type1-separate type isid qos-per-member

member 16

qos 100

scheduler-policy user-type1

exit

encap-group type2-grouped type isid

member 21 to 30

qos 200

scheduler-policy user-type2

exit

encap-group type2-separate type isid qos-per-member

member 36

qos 200

scheduler-policy user-type2

exit

encap-group type3-grouped type isid

member 41 to 50

qos 300

exit

encap-group type4-grouped type isid

member 61 to 70

qos 400

exit

qos 500

scheduler-policy b-sap

exit

exit

exit

exit

exit

*A:7750_ALU>config>eth-tunnel 1

description "LAG-emulation to BCB1 ET1"

protection-type loadsharing

ethernet

mac 00:11:11:11:11:12

encap-type dot1q

exit

ccm-hold-time down 5 up 10 // 50 ms down, 1 sec up

lag-emulation

access adapt-qos distribute

path-threshold 1

exit

path 1

member 1/1/1

control-tag 0

eth-cfm

…

exit

no shutdown

exit

path 2

member 2/1/1

control-tag 0

eth-cfm

…

exit

no shutdown

exit

path 3

member 3/1/1

control-tag 0

eth-cfm

…

exit

no shutdown

exit

no shutdown

--------------------------------------------------

*A:7750_ALU>config>eth-tunnel 3

description "G.8031 tunnel ET3"

protection-type 8031_1to1

ethernet

mac 00:11:11:11:11:11

encap-type dot1q

exit

ccm-hold-time down 5 // 50 ms down, no up hold-down

path 1

member 1/1/1

control-tag 5

precedence primary

eth-cfm

mep 2 domain 1 association 1

ccm-enable

control-mep

no shutdown

exit

exit

no shutdown

exit

path 2

member 4/1/1

control-tag 5

eth-cfm

mep 2 domain 1 association 2

ccm-enable

control-mep

no shutdown

exit

exit

no shutdown

exit

no shutdown

--------------------------------------------------

# Service config

--------------------------------------------------

*A:7750_ALU>config>service vpls 1 customer 1 m-vpls b-vpls create

description "m-VPLS for multipoint traffic"

stp

mst-name "BVPLS"

mode p-mstp

mst-instance 10

mst-priority 4096

vlan-range 100-199

exit

mst-instance 20

mst-priority 8192

vlan-range 200-299

exit

no shutdown

exit

sap eth-tunnel-1 create // BSAP0 to BCB E

sap 4/1/1:0 create // physical link to BCB F (NOTE 0 or 0.*)

// indicate untagged for m-VPLS)

exit

no shutdown

---------------------------------------------------

# Service config: one of the same-fate SAP over

# loadsharing tunnel

---------------------------------------------------

A:7750_ALU>config service vpls 100 customer 1 b-vpls create

sap eth-tunnel-1:1 create //to BCB E

// must specify tags for each path for loadsharing

eth-tunnel

path 1 tag 100

path 2 tag 100

path 3 tag 100

exit

no shutdown …

sap 3/1/1:200 // to BCBF

…

A:7750_ALU>config service vpls 1000 customer 1 i-vpls create

pbb backbone-vpls 100 isid 1000

sap 4/1/1:200 // access SAP to QinQ

…

--------------------------------------------------

# Service config: one of epipes into b-VPLS protected tunnel

# as per R7.0 R4

--------------------------------------------------

A:7750_ALU>config service vpls 3 customer 1 b-vpls create

sap eth-tunnel-3 create

…

service epipe 2000

pbb-tunnel 100 backbone-dest-mac to-AS20 isid 2000

sap 3/1/1:400 create

*A:alcmtu1-R6>config>eth-cfm# info

----------------------------------------------

domain 1 name "ivpls" level 4

association 1 format string name "ivpls"

bridge-identifier 5000

mhf-creation explicit

exit

ccm-interval 1

remote-mepid 51

exit

exit

----------------------------------------------

*A:alcmtu1-R6>config>eth-cfm#

*A:alcmtu1-R6# configure service vpls 5000

*A:alcmtu1-R6>config>service>vpls# info

----------------------------------------------

send-flush-on-failure

send-flush-on-failure-into-bvpls

backbone-vpls 10000

exit

stp

shutdown

exit

sap lag-5:5 create

eth-cfm

mep 56 domain 1 association 1 direction up

ccm-enable

low-priority-defect allDef

mac-address 00:AF:AF:AF:AF:AF

no shutdown

exit

exit

exit

no shutdown

----------------------------------------------

*A:alcag1-R6>config>eth-cfm# info

----------------------------------------------

domain 1 name "ivpls" level 4

association 1 format string name "ivpls"

bridge-identifier 5000

mhf-creation explicit

exit

ccm-interval 1

remote-mepid 56

exit

exit

----------------------------------------------

*A:alcag1-R6>config>eth-cfm#

*A:alcag1-R6# configure service vpls 5000

*A:alcag1-R6>config>service>vpls# info

----------------------------------------------

backbone-vpls 10000

exit

stp

shutdown

exit

sap 1/2/9:5 create

eth-cfm

mep 51 domain 1 association 1 direction up

ccm-enable

low-priority-defect allDef

mac-address 00:AE:AE:AE:AE:AE

no shutdown

exit

exit

exit

no shutdown

----------------------------------------------