IEEE 802.1ah Provider Backbone Bridging

PBB overview

IEEE 802.1ah draft standard (IEEE802.1ah), also known as Provider Backbone Bridges (PBB), defines an architecture and bridge protocols for interconnection of multiple Provider Bridge Networks (PBNs - IEEE802.1ad QinQ networks). PBB is defined in IEEE as a connectionless technology based on multipoint VLAN tunnels. IEEE 802.1ah employs Provider MSTP as the core control plane for loop avoidance and load balancing. As a result, the coverage of the solution is limited by STP scale in the core of large service provider networks.

Virtual Private LAN Service (VPLS), RFC 4762, Virtual Private LAN Service (VPLS) Using Label Distribution Protocol (LDP) Signaling, provides a solution for extending Ethernet LAN services using MPLS tunneling capabilities through a routed, traffic-engineered MPLS backbone without running (M)STP across the backbone. As a result, VPLS has been deployed on a large scale in service provider networks.

The Nokia implementation fully supports a native PBB deployment and an integrated PBB-VPLS model where desirable PBB features such as MAC hiding, service aggregation and the service provider fit of the initial VPLS model are combined to provide the best of both worlds.

PBB features

This section provides information about PBB features.

Integrated PBB-VPLS solution

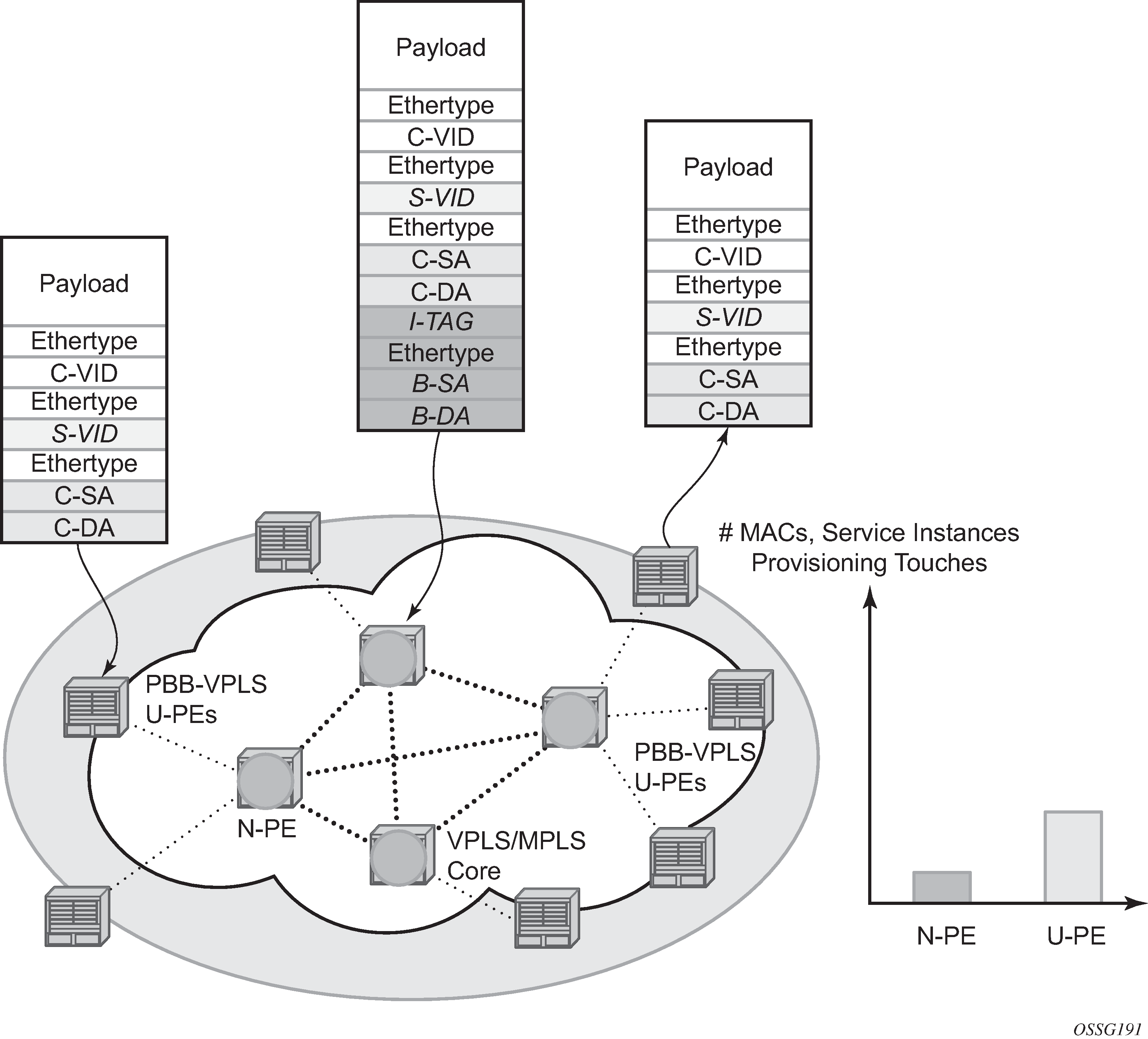

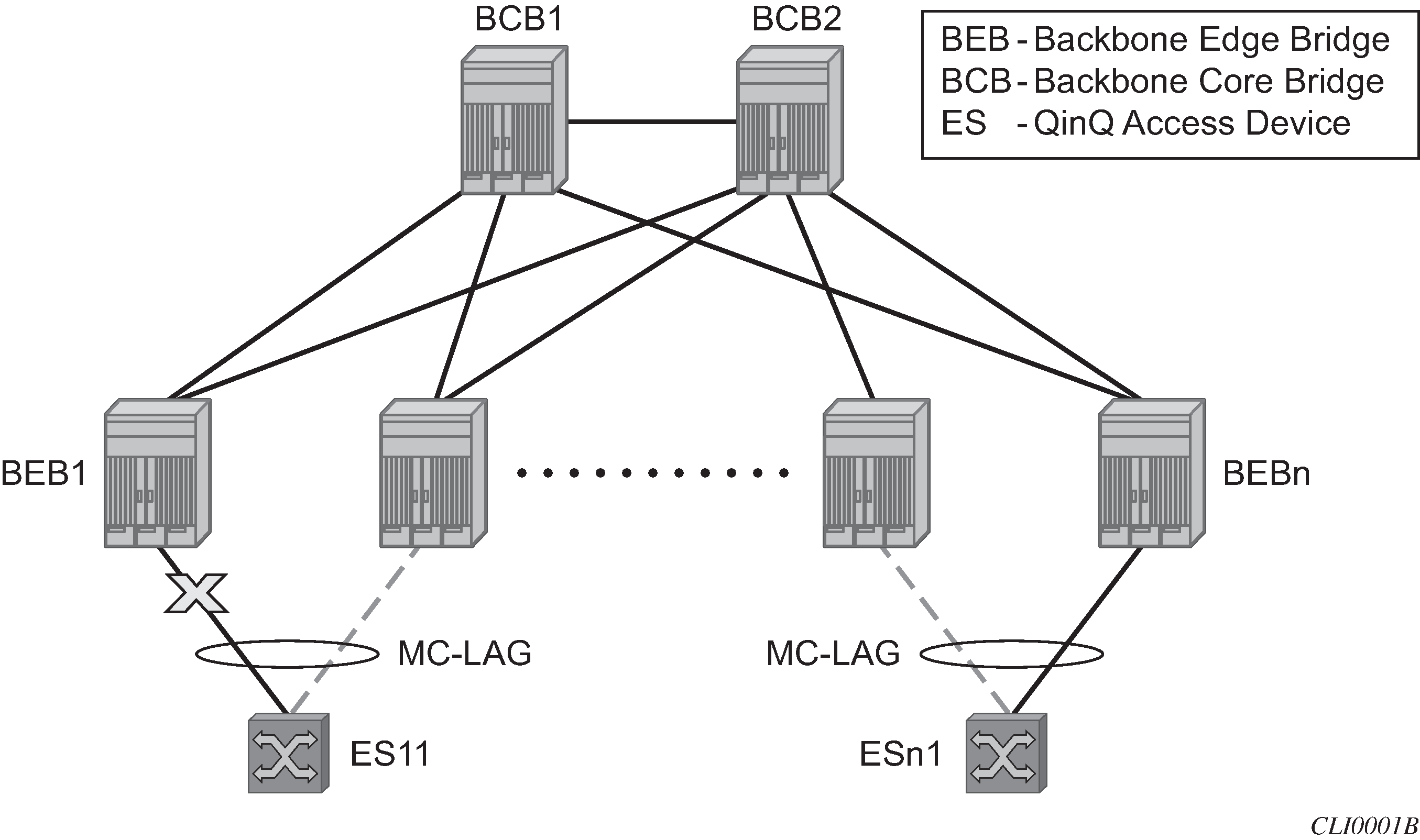

HVPLS introduced a service-aware device in a central core location to provide efficient replication and controlled interaction at domain boundaries. The core network facing provider edge (N-PE) devices have knowledge of all VPLS services and customer MAC addresses for local and related remote regions resulting in potential scalability issues as depicted in Large HVPLS deployment.

In a large VPLS deployment, it is important to improve the stability of the overall solution and to speed up service delivery. These goals are achieved by reducing the load on the N-PEs and by minimizing the number of provisioning touches on the N-PEs.

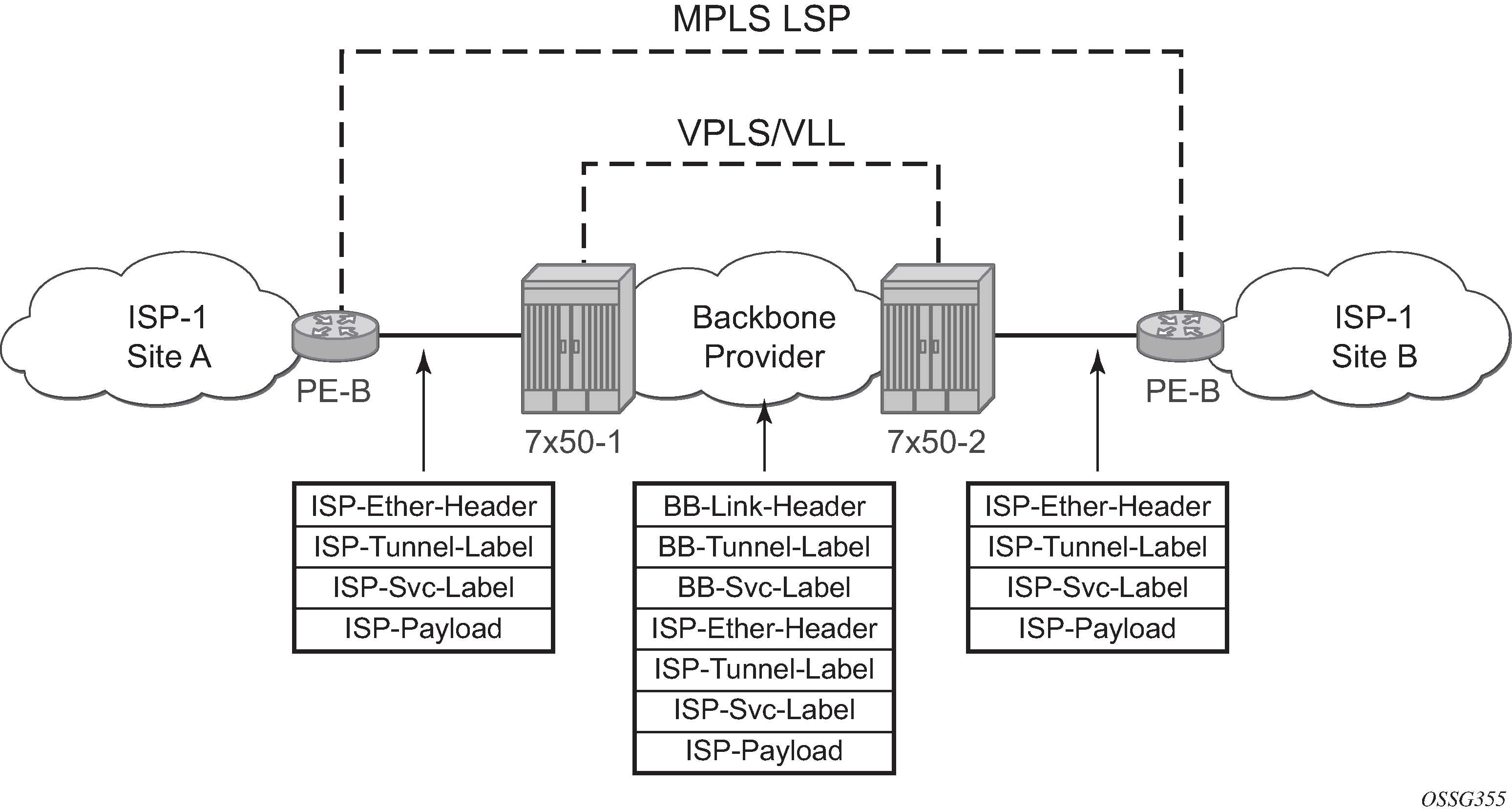

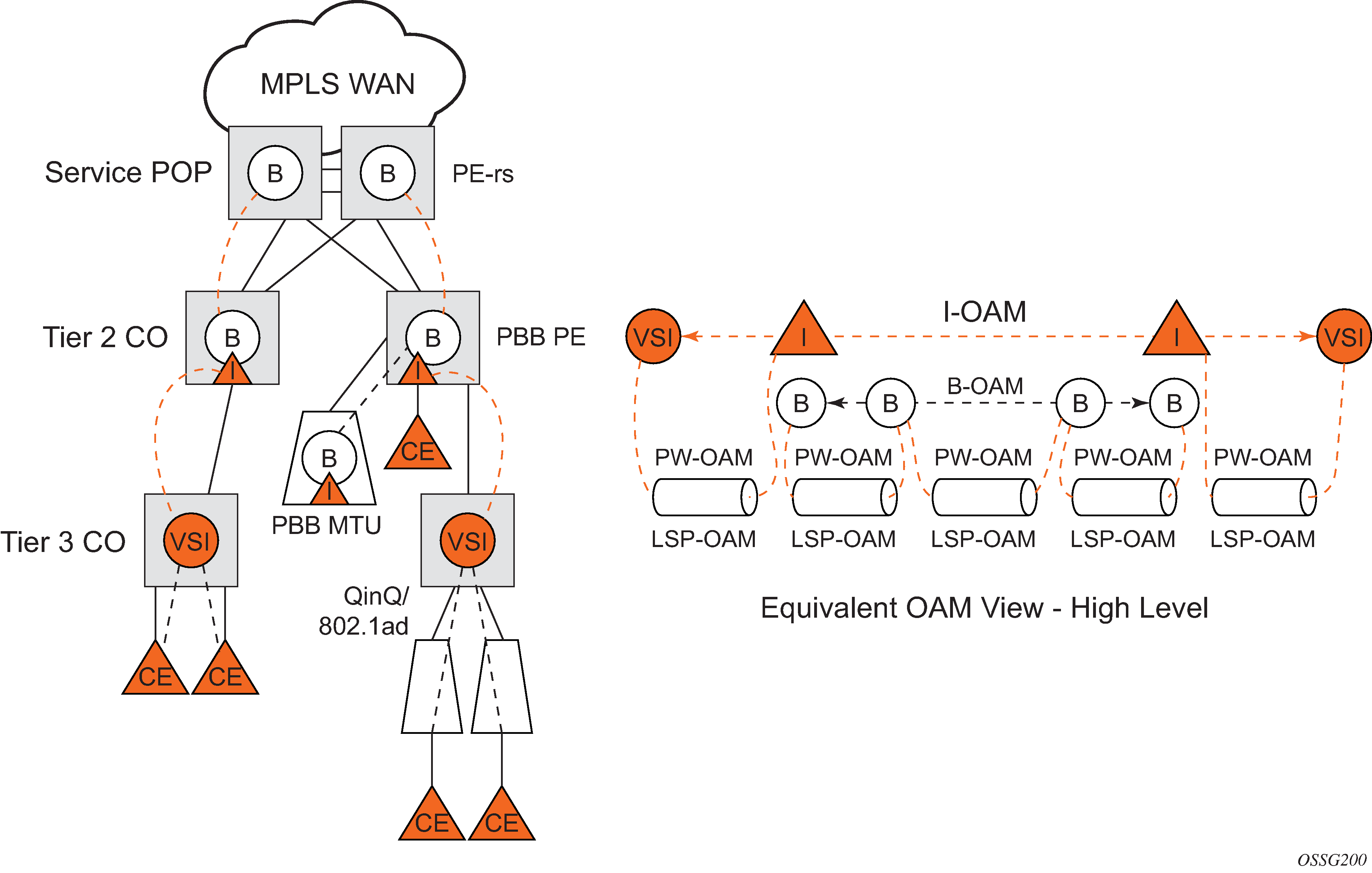

The integrated PBB-VPLS model introduces an additional PBB hierarchy in the VPLS network to address these goals as depicted in Large PBB-VPLS deployment.

PBB encapsulation is added at the user facing PE (U-PE) to hide the customer MAC addressing and topology from the N-PE devices. The core N-PEs need to only handle backbone MAC addressing and do not need to have visibility of each customer VPN. As a result, the integrated PBB-VPLS solution decreases the load in the N-PEs and improves the overall stability of the backbone.

The Nokia PBB-VPLS solution also provides automatic discovery of the customer VPNs through the implementation of IEEE 802.1ak MMRP minimizing the number of provisioning touches required at the N-PEs.

PBB technology

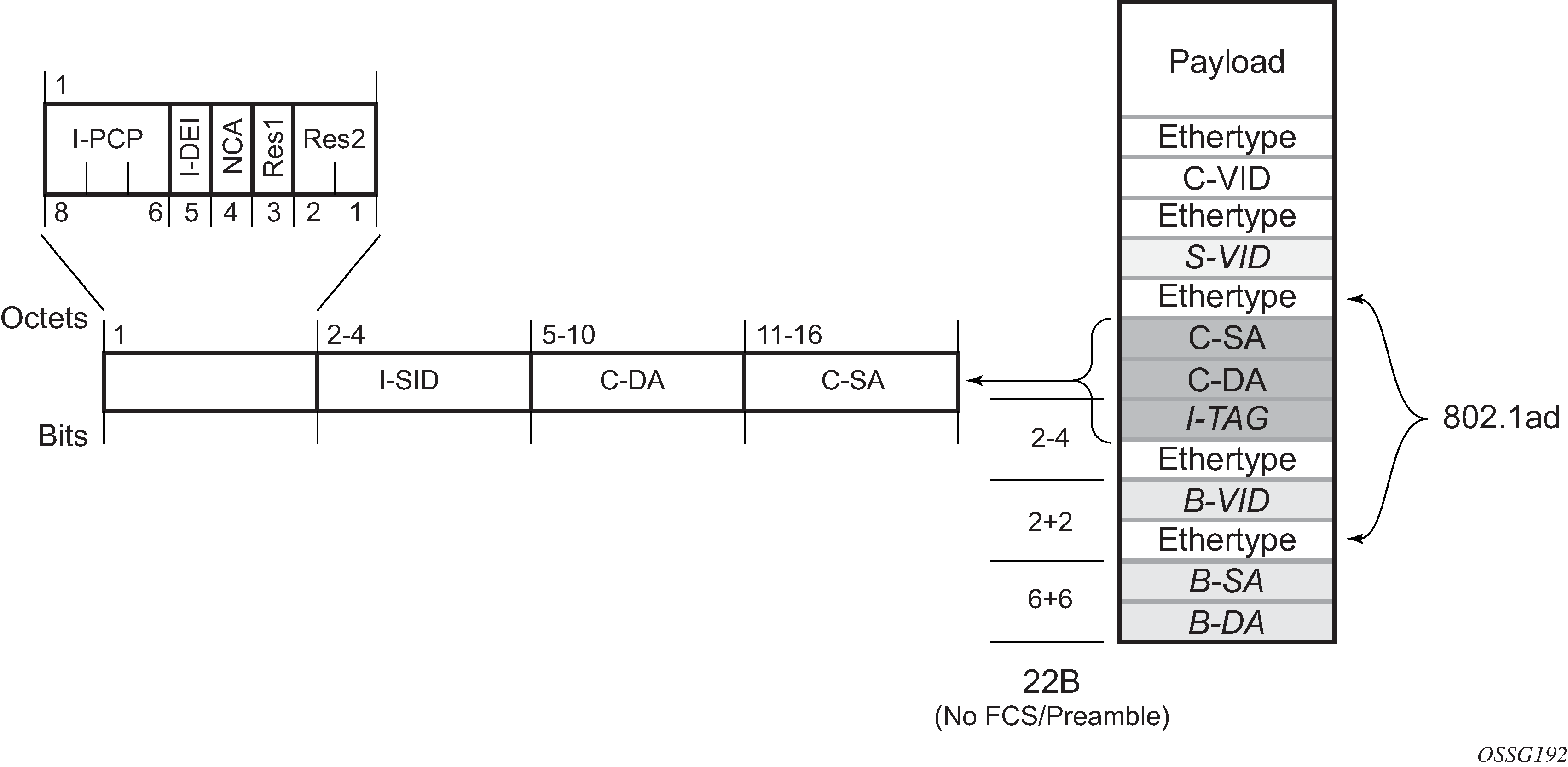

IEEE 802.1ah specification encapsulates the customer or QinQ payload in a provider header as shown in QinQ payload in provider header example.

PBB adds a regular Ethernet header where the B-DA and B-SA are the backbone destination and source MACs, respectively, of the edge U-PEs. The backbone MACs (B-MACs) are used by the core N-PE devices to switch the frame through the backbone.

A special group MAC is used for the backbone destination MAC (B-DA) when handling an unknown unicast, multicast or broadcast frame. This backbone group MAC is derived from the I-service instance identifier (ISID) using the rule: a standard group OUI (01-1E-83) followed by the 24 bit ISID coded in the last three bytes of the MAC address.
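The derivation rule above can be sketched in a few lines of Python. This is an illustration only: the function name `isid_group_mac` is hypothetical, and the only inputs taken from the text are the group OUI (01-1E-83) and the 24-bit ISID.

```python
# Group OUI quoted in the text; the ISID fills the last three bytes.
PBB_GROUP_OUI = bytes([0x01, 0x1E, 0x83])


def isid_group_mac(isid: int) -> str:
    """Return the backbone group MAC for a 24-bit ISID (hypothetical helper)."""
    if not 0 <= isid <= 0xFFFFFF:
        raise ValueError("ISID must fit in 24 bits")
    mac = PBB_GROUP_OUI + isid.to_bytes(3, "big")
    return "-".join(f"{b:02X}" for b in mac)


print(isid_group_mac(500))  # 01-1E-83-00-01-F4
```

For example, ISID 500 (0x0001F4) maps to the group B-DA 01-1E-83-00-01-F4.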

The BVID (backbone VLAN ID) field is a regular DOT1Q tag and controls the size of the backbone broadcast domain. When the PBB frame is sent over a VPLS pseudowire, this field may be omitted depending on the type of pseudowire used.

The following ITAG (standard Ether-type value of 0x88E7) has the role of identifying the customer VPN to which the frame is addressed through the 24 bit ISID. Support for service QoS is provided through the priority (3 bit I-PCP) and the DEI (1 bit) fields.
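As a rough illustration of the ITAG layout, the sketch below packs and unpacks the Ethertype plus a 32-bit tag field holding the 3-bit I-PCP, the 1-bit DEI, and the 24-bit ISID. The helper names are hypothetical, and the four bits between the DEI and the ISID are assumed to be reserved and left at zero; the text above does not describe them.

```python
import struct

ITAG_ETHERTYPE = 0x88E7  # standard Ethertype named in the text


def pack_itag(isid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build Ethertype + 32-bit tag field (hypothetical helper)."""
    if not 0 <= isid <= 0xFFFFFF:
        raise ValueError("ISID must fit in 24 bits")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("I-PCP is 3 bits, DEI is 1 bit")
    # Bits 27..24 are assumed reserved and stay zero in this sketch.
    tci = (pcp << 29) | (dei << 28) | isid
    return struct.pack("!HI", ITAG_ETHERTYPE, tci)


def unpack_itag(data: bytes) -> dict:
    """Parse the first six bytes of an ITAG back into its fields."""
    ethertype, tci = struct.unpack("!HI", data[:6])
    if ethertype != ITAG_ETHERTYPE:
        raise ValueError("not an ITAG")
    return {"pcp": tci >> 29, "dei": (tci >> 28) & 1, "isid": tci & 0xFFFFFF}


print(pack_itag(500, pcp=5, dei=1).hex())  # 88e7b00001f4
```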

PBB mapping to existing VPLS configurations

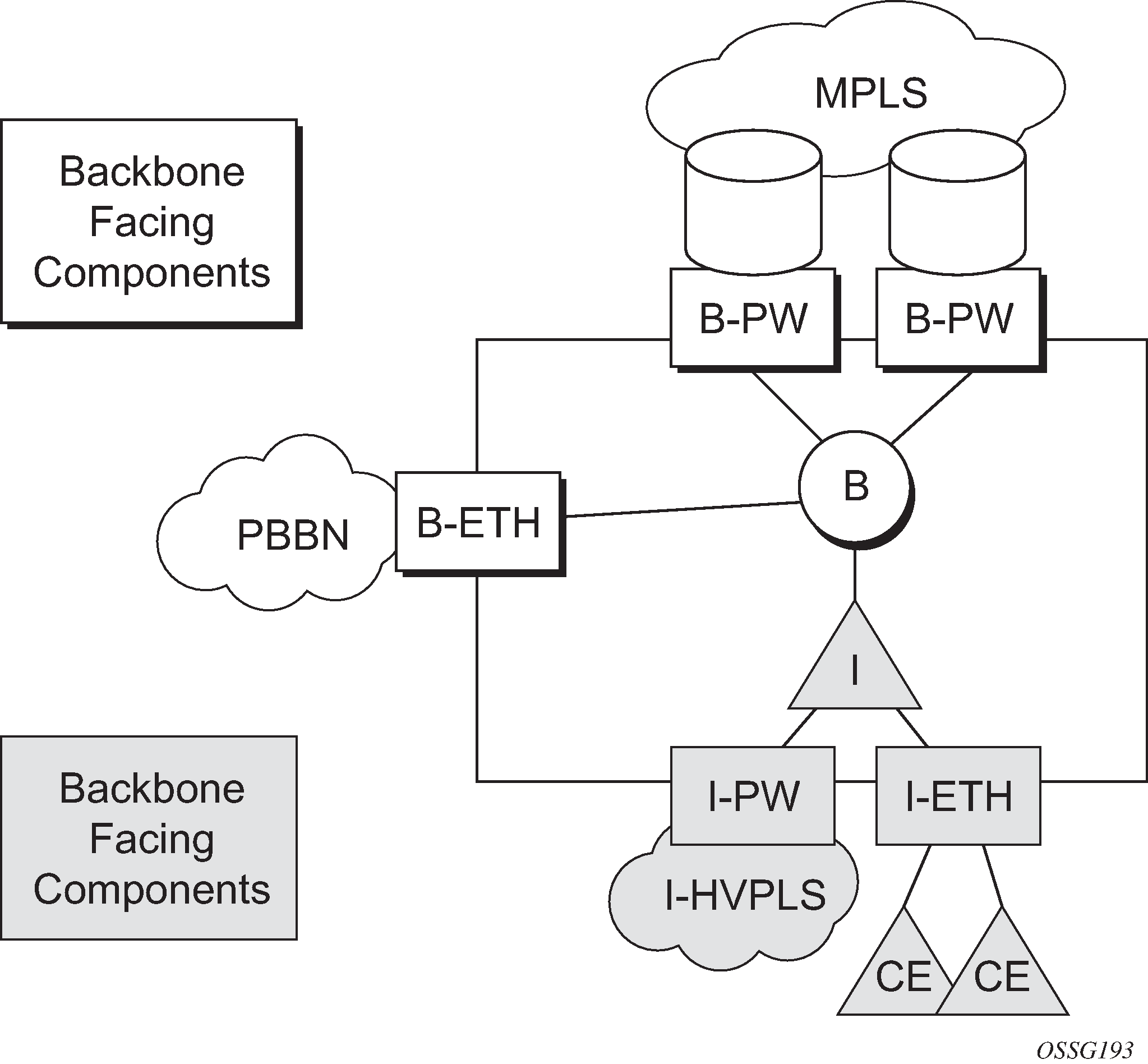

The IEEE model for PBB is organized around a B-component handling the provider backbone layer and an I-component concerned with the mapping of the customer/provider bridge (QinQ) domain (MACs, VLANs) to the provider backbone (B-MACs, B-VLANs): for example, the I-component contains the boundary between the customer and backbone MAC domains.

The Nokia implementation is extending the IEEE model for PBB to allow support for MPLS pseudowires using a chain of two VPLS context linked together as depicted in PBB mapping to VPLS configurations.

A VPLS context is used to provide the backbone switching component. The white circle marked B, referred to as backbone-VPLS (B-VPLS), operates on backbone MAC addresses providing a core multipoint infrastructure that may be used for one or multiple customer VPNs. The Nokia B-VPLS implementation allows the use of both native PBB and MPLS infrastructures.

Another VPLS context (I-VPLS) can be used to provide the multipoint I-component functionality emulating the E-LAN service (see the triangle marked ‟I” in PBB mapping to VPLS configurations). Similar to B-VPLS, I-VPLS inherits from the regular VPLS the pseudowire (SDP bindings) and native Ethernet (SAPs) handoffs accommodating this way different types of access: for example, direct customer link, QinQ or HVPLS.

To support PBB E-Line (point-to-point service), the use of an Epipe as I-component is allowed. All Ethernet SAPs supported by a regular Epipe are also supported in the PBB Epipe.

SAP and SDP support

This section provides information about SAP and SDP support.

PBB B-VPLS

The following describes SAP support for PBB B-VPLS:

Ethernet DOT1Q and QinQ are supported. This is applicable to most PBB use cases, for example, one backbone VLAN ID used for native Ethernet tunneling. In the case of QinQ, a single tag x is supported on a QinQ encapsulation port for example (1/1/1:x.* or 1/1/1:x.0).

Ethernet null is supported. This is supported for a direct connection between PBB PEs, for example, no BVID is required.

Default SAP types are blocked in the CLI for the B-VPLS SAP.

The following rules apply to the SAP processing of PBB frames:

For ‟transit frames” (not destined for a local B-MAC), there is no need to process the ITAG component of the PBB frames. Regular Ethernet SAP processing is applied to the backbone header (B-MACs and BVID).

If a local I-VPLS instance is associated with the B-VPLS, ‟local frames” originated/terminated on local I-VPLSs are PBB encapsulated/de-encapsulated using the pbb-etype provisioned under the related port or SDP component.

The following describes SDP support for PBB B-VPLS:

For MPLS, both mesh and spoke-SDPs with split horizon groups are supported.

Similar to regular pseudowire, the outgoing PBB frame on an SDP (for example, B-pseudowire) contains a BVID qtag only if the pseudowire type is Ethernet VLAN. If the pseudowire type is ‛Ethernet’, the BVID qtag is stripped before the frame goes out.

PBB I-VPLS

port level

All existing Ethernet encapsulation types are supported (for example, null, dot1q, qinq).


SAPs

The following describes SAP support for PBB I-VPLS:

The I-VPLS SAPs can coexist on the same port with SAPs for other business services, for example, VLL, VPLS SAPs.

All existing Ethernet encapsulation are supported: null, dot1q, qinq.

SDPs

GRE and MPLS SDP are spoke-sdp only. Mesh SDPs can just be emulated by using the same split horizon group everywhere.

Existing SAP processing rules still apply for the I-VPLS case; the SAP encapsulation definition on Ethernet ingress ports defines which VLAN tags are used to determine the service that the packet belongs to:

For null encapsulations defined on ingress, any VLAN tags are ignored and the packet goes to a default service for the SAP.

For dot1q encapsulations defined on ingress, only the first VLAN tag is considered.

For qinq encapsulations defined on ingress, both VLAN tags are considered; wildcard support is for the inner VLAN tag.

For dot1q/qinq encapsulations, traffic encapsulated with VLAN tags for which there is no definition is discarded.
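The service selection rules above can be modeled as a small lookup. This is a simplified sketch under stated assumptions: `select_service` and the `saps` dictionary are hypothetical, `"*"` stands for the inner-tag wildcard, and handling of untagged frames on tagged ports is not modeled (such frames are treated as having no matching definition).

```python
def select_service(encap, tags, saps):
    """Return the service for a frame, or None if it is discarded.

    encap: port encapsulation, one of "null", "dot1q", "qinq".
    tags:  VLAN tags on the frame, outermost first.
    saps:  maps a tuple of tag values to a service name (hypothetical model).
    """
    if encap == "null":
        # Any VLAN tags are ignored; the frame goes to the default service.
        return saps.get(())
    if encap == "dot1q":
        # Only the first VLAN tag is considered.
        return saps.get((tags[0],)) if tags else None
    if encap == "qinq":
        # Both tags are considered; the wildcard applies to the inner tag.
        if len(tags) < 2:
            return None
        outer, inner = tags[0], tags[1]
        return saps.get((outer, inner), saps.get((outer, "*")))
    raise ValueError(f"unknown encapsulation: {encap}")


qinq_saps = {(10, 20): "ivpls-a", (10, "*"): "ivpls-b"}
print(select_service("qinq", [10, 30], qinq_saps))  # ivpls-b
```

A frame tagged 11.20 on the same port matches no definition and is discarded (`None`).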

Any VLAN tag used for service selection on the I-SAP is stripped before the PBB encapsulation is added. Appropriate VLAN tags are added at the remote PBB PE when sending the packet out on the egress SAP.

I-VPLS services do not support the forwarding of PBB encapsulated frames received on SAPs or spoke SDPs through their associated B-VPLS service. PBB frames are identified based on the configured PBB Ethertype (0x88e7 by default).

PBB packet walkthrough

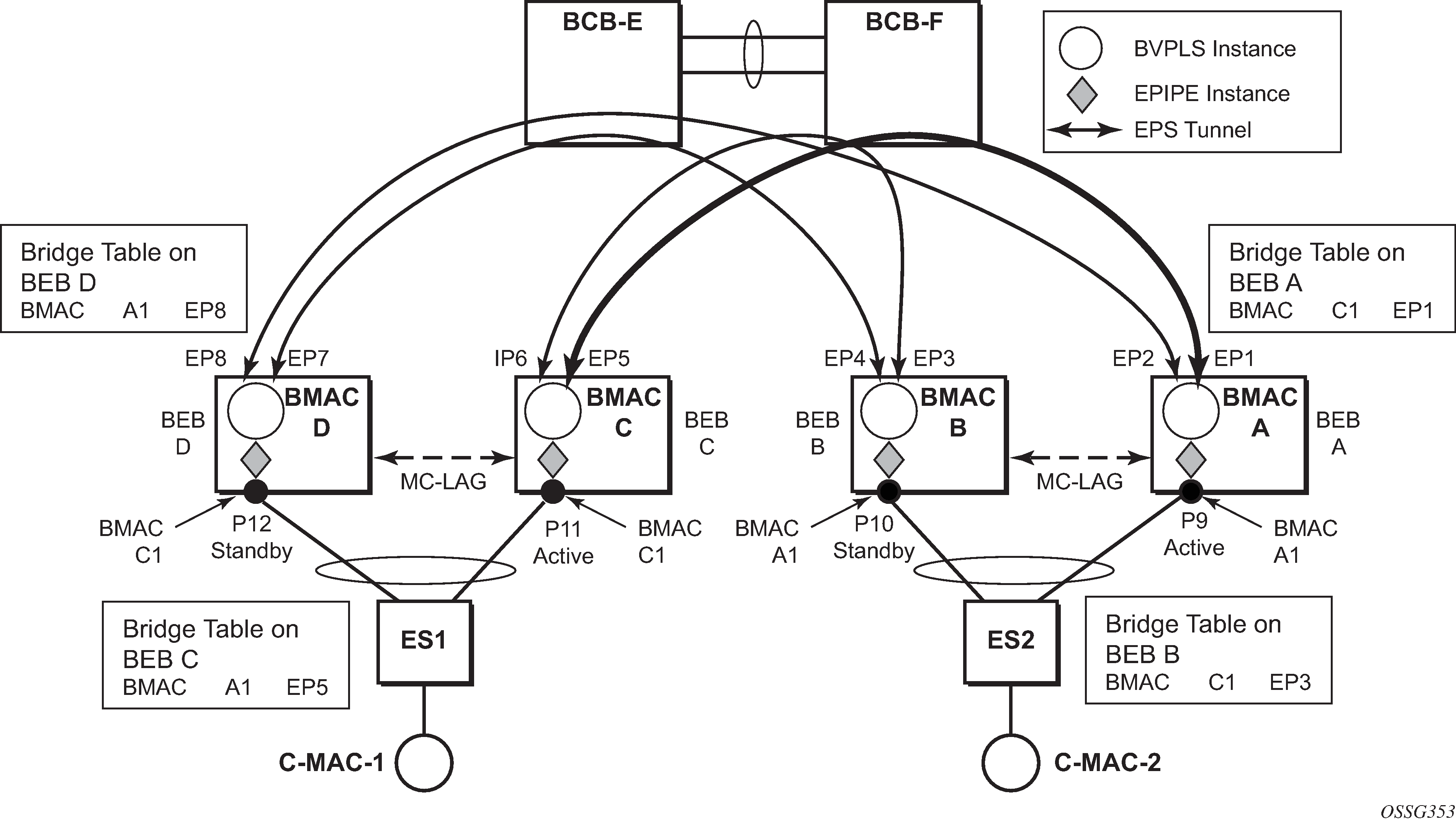

This section describes the walkthrough for a packet that traverses the B-VPLS and I-VPLS instances using the example of a unicast frame between two customer stations as depicted in the following network diagram PBB packet walkthrough.

The station with C-MAC (customer MAC) X wants to send a unicast frame to C-MAC Y through the PBB-VPLS network. A customer frame arriving at PBB-VPLS U-PE1 is encapsulated with the PBB header. The local I-VPLS FDB on U-PE1 is consulted to determine the destination B-MAC of the egress U-PE for C-MAC Y. In our example, B2 is assumed to be known as the B-DA for Y. If C-MAC Y is not present in the U-PE1 forwarding database, the PBB packet is sent in the B-VPLS using the standard group MAC address for the ISID associated with the customer VPN. If the up link to the N-PE is a spoke pseudowire, the related PWE3 encapsulation is added in front of the B-DA.

Next, only the Backbone Header in green is used to switch the frame through the green B-VPLS/VPLS instances in the N-PEs. At the receiving U-PE2, the C-MAC X is learned as being behind B-MAC B1; then the PBB encapsulation is removed and the lookup for C-MAC Y is performed. In the case where a pseudowire is used between N-PE and U-PE2, the pseudowire encapsulation is removed first.
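The walkthrough can be mimicked with a toy model of the two U-PEs. Everything here is illustrative: the `UPE` class, the dictionary used as a frame, and ISID 500 are assumptions, and the N-PE switching and pseudowire encapsulation steps are left out.

```python
from dataclasses import dataclass, field


def group_bmac(isid: int) -> str:
    """Group B-DA for unknown destinations: OUI 01-1E-83 plus the ISID."""
    raw = bytes([0x01, 0x1E, 0x83]) + isid.to_bytes(3, "big")
    return "-".join(f"{b:02X}" for b in raw)


@dataclass
class UPE:
    bmac: str                                # local backbone MAC
    isid: int                                # ISID of the customer VPN
    fdb: dict = field(default_factory=dict)  # C-MAC -> remote B-MAC

    def encapsulate(self, c_sa, c_da, payload=b""):
        # Known C-MAC: use the learned B-MAC; otherwise use the group MAC.
        b_da = self.fdb.get(c_da, group_bmac(self.isid))
        return {"b_da": b_da, "b_sa": self.bmac, "isid": self.isid,
                "c_sa": c_sa, "c_da": c_da, "payload": payload}

    def decapsulate(self, frame):
        # Learn the source C-MAC as being behind the source B-MAC.
        self.fdb[frame["c_sa"]] = frame["b_sa"]
        return frame["c_sa"], frame["c_da"], frame["payload"]


upe1, upe2 = UPE("B1", 500), UPE("B2", 500)
first = upe1.encapsulate("X", "Y")   # Y unknown: sent to the group MAC
upe2.decapsulate(first)              # U-PE2 learns X behind B1
reply = upe2.encapsulate("Y", "X")   # X is now known: unicast to B1
upe1.decapsulate(reply)              # U-PE1 learns Y behind B2
print(upe1.encapsulate("X", "Y")["b_da"])  # B2
```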

PBB control planes

PBB technology can be deployed in a number of environments. Natively, PBB is an Ethernet data plane technology that offers service scalability and multicast efficiency.

Environment:

MPLS (mesh and spoke-SDPs)

Ethernet SAPs

Within these environments, SR OS offers a number of optional control planes:

Shortest Path Bridging MAC (SPBM) (SAPs and spoke-SDPs); see SPBM

Rapid Spanning Tree Protocol (RSTP) optionally with MMRP (SAPs and spoke-SDPs); see MMRP support over B-VPLS SAPs and SDPs.

MSTP optionally with MMRP (SAPs and spoke-SDPs); see Multiple spanning tree.

Multiple MAC registration Protocol (MMRP) alone (SAPs, spoke and mesh SDPs); see IEEE 802.1ak MMRP for service aggregation and zero touch provisioning.

In general a control plane is required on Ethernet SAPs, or SDPs where there could be physical loops. Some network configurations of Mesh and Spoke SDPs can avoid physical loops and no control plane is required.

The choice of control plane is based on the requirement of the networks. SPBM for PBB offers a scalable link state control plane without B-MAC flooding and learning or MMRP. RSTP and MSTP offer Spanning tree options based on B-MAC flooding and learning. MMRP is used with flooding and learning to improve multicast.

SPBM

Shortest Path Bridging (SPB) enables a next generation control plane for PBB based on IS-IS that adds the stability and efficiency of link state to unicast and multicast services. Specifically this is an implementation of SPBM (SPB MAC mode). Current SR OS PBB B-VPLS offers point-to-point and multipoint to multipoint services with large scale. PBB B-VPLS is deployed in both Ethernet and MPLS networks supporting Ethernet VLL and VPLS services. SPB removes the flooding and learning mode from the PBB Backbone network and replaces MMRP for ISID Group MAC Registration providing flood containment. SR OS SPB provides true shortest path forwarding for unicast and efficient forwarding on a single tree for multicast. It supports selection of shortest path equal cost tie-breaking algorithms to enable diverse forwarding in an SPB network.

Flooding and learning versus link state

SPB brings a link state capability that improves the scalability and performance for large networks over the xSTP flooding and learning models. Flooding and learning has two consequences. First, a message invoking a flush must be propagated. Second, the data plane is allowed to flood and relearn while flushing is happening. Message-based operation over these data planes may experience congestion and packet loss.

| PBB B-VPLS Control plane | Flooding and learning | Multipath | Convergence time |
|---|---|---|---|
| xSTP | Yes | MSTP | xSTP + MMRP |
| G.8032 | Yes | Multiple ring instances; ring topologies only | Eth-OAM based + MMRP |
| SPB-M | No | Yes (ECT based) | IS-IS link state (incremental) |

Link state operates differently in that only the information that truly changes needs to be updated. Traffic that is not affected by a topology change does not have to be disturbed and does not experience congestion because there is no flooding. SPB is a link state mechanism that uses restoration to reestablish the paths affected by topology change. It is more deterministic and reliable than RSTP and MMRP mechanisms. SPB can handle any number of topology changes and as long as the network has some connectivity, SPB does not isolate any traffic.

SPB for B-VPLS

The SR OS model supports PBB Epipes and I-VPLS services on the B-VPLS. SPB is added to B-VPLS in place of other control planes (see B-VPLS control planes). SPB runs in a separate instance of IS-IS. SPB is configured in a single service instance of B-VPLS that controls the SPB behavior (via IS-IS parameters) for the SPB IS-IS session between nodes. Up to four independent instances of SPB can be configured. Each SPB instance requires a separate control B-VPLS service. A typical SPB deployment uses a single control VPLS with zero, one, or more user B-VPLS instances. SPB is multi-topology (MT) capable in the IS-IS LSP TLV definitions; however, logical instances offer nearly the same capability as MT. The SR OS SPB implementation always uses MT topology instance zero. Area addresses are not used and SPB is assumed to be a single area. SPB must be consistently configured on nodes in the system. SPB Regions information and IS-IS hello logic that detect mismatched configuration are not supported.

SPB Link State PDUs (LSPs) contain B-MACs, I-SIDs (for multicast services), and link and metric information for an IS-IS database. Epipe I-SIDs are not distributed in SR OS SPB, allowing high scalability of PBB Epipes. I-VPLS I-SIDs are distributed in SR OS SPB and the respective multicast group addresses are automatically populated in forwarding in a manner that provides automatic pruning of multicast to the subset of the multicast tree that supports I-VPLS with a common I-SID. This replaces the function of MMRP and is more efficient than MMRP so that in the future, SPB scales to a greater number of I-SIDs.

SPB on SR OS can leverage MPLS networks or Ethernet networks or combinations of both. SPB allows PBB to take advantage of multicast efficiency and at the same time leverage MPLS features such as resiliency.

Control B-VPLS and user B-VPLS

A control B-VPLS is required for the configuration of the SPB parameters and as a service to enable SPB. A control B-VPLS therefore must be configured everywhere SPB forwarding is expected to be active, even if there are no terminating services. SPB uses the logical instance and a Forwarding ID (FID) to identify SPB locally on the node. The FID is used in place of the SPB VLAN identifier (Base VID) in IS-IS LSPs, enabling a reference to exchange SPB topology and addresses. More specifically, SPB advertises B-MACs and I-SIDs in a B-VLAN context. Because the service model in SR OS separates the VLAN tag used on the port for encapsulation from the VLAN ID used in SPB, the SPB VLAN is a logical concept and is represented by configuring a FID. B-VPLS SAPs use VLAN tags (SAPs with Ethernet encapsulation) that are independent of the FID value. The encapsulation is local to the link in SR/ESS, so the SAP encapsulation must be configured the same between neighboring switches. The FID for a specified instance of SPB between two neighbor switches must be the same. The independence of VID encapsulation is inherent to SR OS PBB B-VPLS. This also allows spoke-SDP bindings to be used between neighboring SPB instances without any VID tags. The one exception is that mesh SDPs are not supported, although arbitrary mesh topologies are supported by SR OS SPB.

Control and user B-VPLS with FIDs shows two switches where an SPB control B-VPLS is configured with FID 1 and uses a SAP with 1/1/1:5, therefore using VLAN tag 5 on the link. The SAP 1/1/1:1 could also have been used, but in SR OS the VID does not have to equal the FID. Alternatively, an MPLS PW (spoke-SDP binding) could be used for some interfaces in place of the SAP. Control and user B-VPLS with FIDs shows a control VPLS and two user B-VPLS. The user B-VPLS must share the same topology and are required to have interfaces on SAPs/spoke-SDPs on the same links or LAG groups as the control B-VPLS. To allow services on different B-VPLS to use different paths when multiple paths exist, a different ECT algorithm can be configured on a B-VPLS instance. In this case, the user B-VPLS still fate share the same topology but may use different paths for data traffic; see Shortest path and single tree.

Each user B-VPLS offers the same service capability as a control B-VPLS and is configured to ‟follow” or fate share with a control B-VPLS. User B-VPLS must be configured as active on the whole topology where the control B-VPLS is configured and active. If there is a mismatch between the topology of a user B-VPLS and the control B-VPLS, only the user B-VPLS links and nodes that are in common with the control B-VPLS function. The services on any B-VPLS are independent of a particular user B-VPLS, so a misconfiguration of one of the user B-VPLS does not affect other B-VPLS. For example, if a SAP or spoke-SDP is missing in the user B-VPLS, any traffic from that user B-VPLS that would use that interface is missing forwarding information, and traffic is dropped only for that B-VPLS. The computation of paths is based only on the control B-VPLS topology.

User B-VPLS instances supporting only unicast services (PBB-Epipes) may share the FID with the other B-VPLS (control or user). This is a configuration short cut that reduces the LSP advertisement size for B-VPLS services but results in the same separation for forwarding between the B-VPLS services. In the case of PBB-Epipes only B-MACs are advertised per FID but B-MACs are populated per B-VPLS in the FDB. If I-VPLS services are to be supported on a B-VPLS that B-VPLS must have an independent FID.

Shortest path and single tree

The IEEE 802.1aq standard SPB uses a source-specific tree model. The standard model is more computationally intensive for multicast traffic because, in addition to the SPF algorithm for unicast and multicast from a single node, an all-pairs shortest path must be computed for the other nodes in the network. In addition, the computation must be repeated for each ECT algorithm. While the standard yields efficient shortest paths, this computation is overhead for systems where multicast traffic volume is low. Ethernet VLL and VPLS unicast services are popular in PBB networks and the SR OS SPB design is optimized for unicast delivery using shortest paths. Ethernet services supporting unicast and multicast are commonly deployed in Ethernet transport networks, and SR OS SPB single tree multicast (also called shared tree or *,G) operates similarly. The difference is that SPB multicast never floods unknown traffic.

The SR OS implementation of SPB with shortest path unicast and single tree multicast, requires only two SPF computations per topology change reducing the computation requirements. One computation is for unicast forwarding and the other computation is for multicast forwarding.

A single tree multicast requires selecting a root node much like RSTP. Bridge priority controls the choice of root node and alternate root nodes. The numerically lowest Bridge Priority is the criteria for choosing a root node. If multiple nodes have the same Bridge Priority, then the lowest Bridge Identifier (System Identifier) is the root.
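The root selection rule amounts to taking the minimum over (Bridge Priority, Bridge Identifier) pairs. The sketch below shows this with made-up node names, priorities, and identifiers; `elect_root` is a hypothetical helper, not an SR OS function.

```python
def elect_root(bridges):
    """Pick the multicast root from a {name: (priority, system_id)} mapping.

    The numerically lowest priority wins; the lowest system identifier
    breaks ties. Tuples compare in exactly that order.
    """
    return min(bridges, key=lambda name: bridges[name])


# Illustrative values only.
bridges = {
    "A": (4, 0x00000000000A),
    "B": (4, 0x00000000000B),
    "C": (8, 0x000000000001),
}
print(elect_root(bridges))  # A
```

Lowering the priority value of node C below 4 would make it the root regardless of its identifier.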

In SPB the source-bmac can override the chassis-mac, allowing independent control of tie breaking. The shortest path unicast forwarding does not require any special configuration other than selecting the ECT algorithm, by configuring a B-VPLS to use a FID with the low-path-id or high-path-id algorithm as the tiebreaker between equal cost paths. Bridge priority allows some adjustment of paths. Configuring link metrics adjusts the number of equal paths.

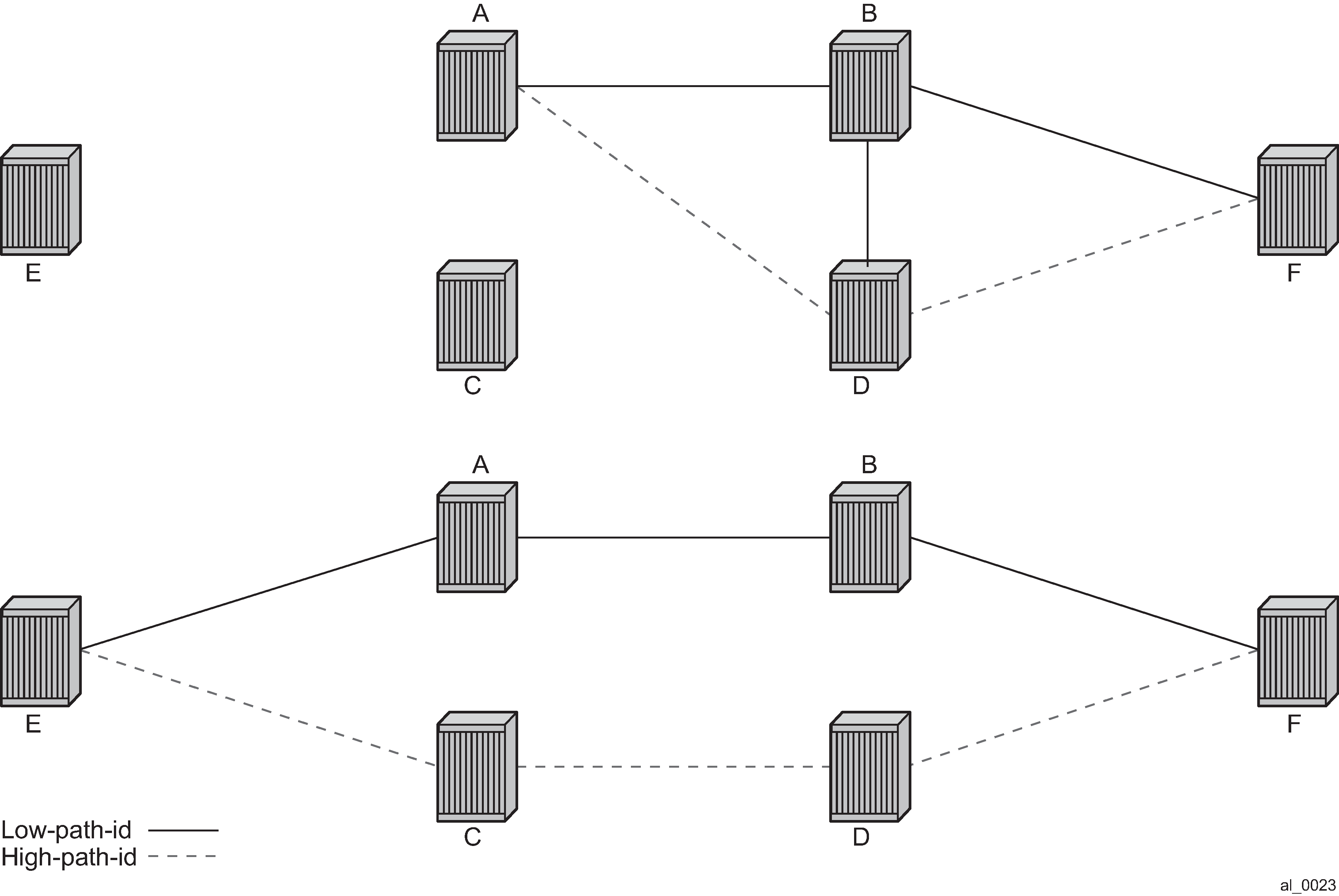

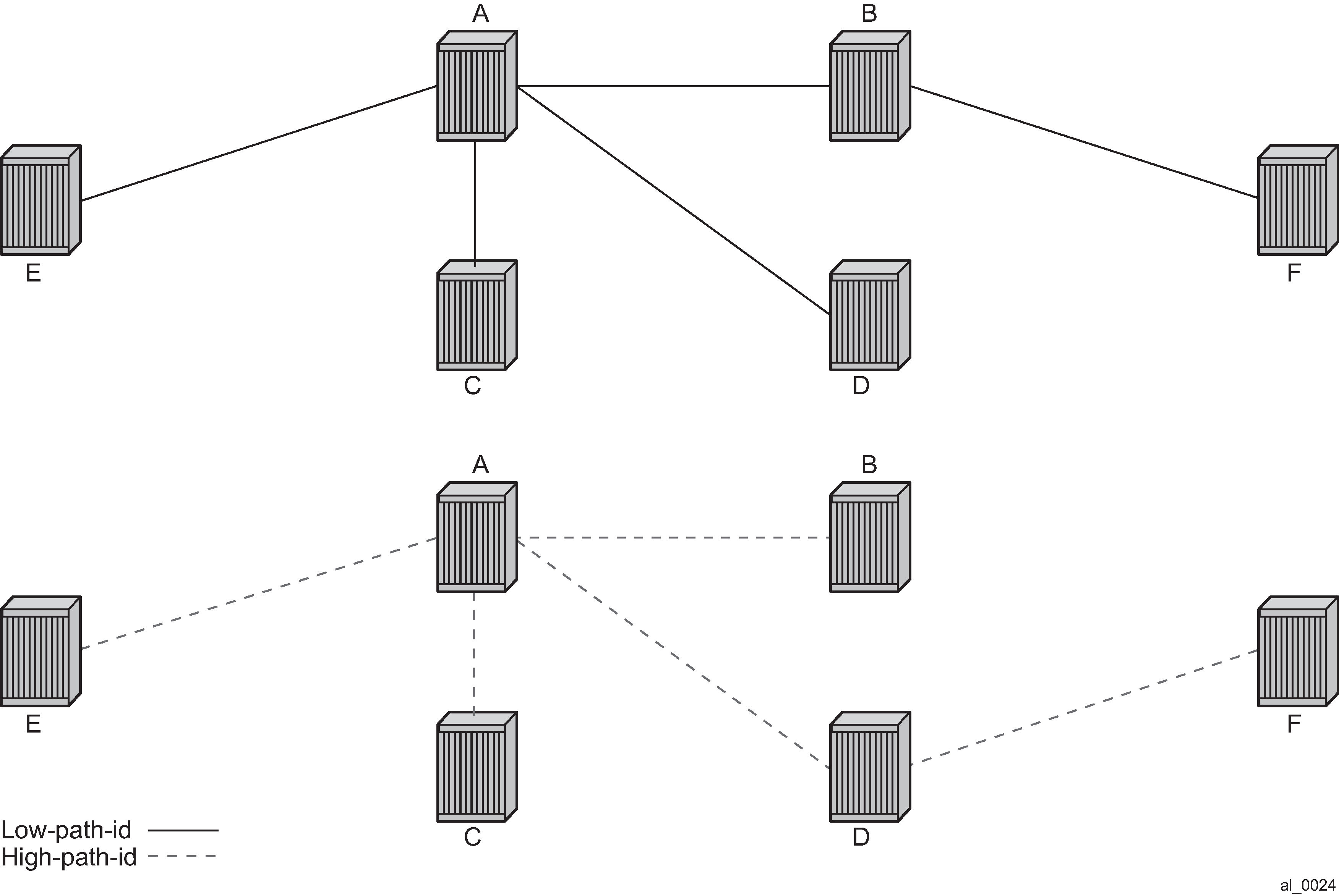

To illustrate the behavior of the path algorithms an example network is shown in Example partial mesh network.

Assume that Node A has the lowest Bridge Identifier and is the multicast root node, and that all links have equal metrics. Also, assume that Bridge Identifiers are ordered such that Node A has a numerically lower Bridge Identifier than Node B, Node B has a lower Bridge Identifier than Node C, and so on. Unicast paths are configured to use shortest path tree (SPT). Unicast paths for low-path-id and high-path-id shows the shortest paths computed from Node A and Node E to Node F. There are only two shortest paths from A to F. A choice of low-path-id algorithm uses Node B as transit node and a path using high-path-id algorithm uses Node D as transit node. The reverse paths from Node F to A are the same (all unicast paths are reverse path congruent). For Node E to Node F there are three paths: E-A-B-F, E-A-D-F, and E-C-D-F. The low-path-id algorithm uses path E-A-B-F and the high-path-id algorithm uses E-C-D-F. These paths are also disjoint and are reverse path congruent. Any nodes that are directly connected in this network have only one path between them (not shown for simplicity).
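The path selection in this example can be reproduced with a simplified model of the tie-breaking. The sketch makes several assumptions that are not stated in the text: the link list is inferred from the paths named in this section, Bridge Identifiers are the integers 1 to 6 for Nodes A to F, and a path identifier is modeled as the sorted list of Bridge Identifiers on the path after an XOR with a per-algorithm mask (0x00 for low-path-id, 0xFF for high-path-id), with the lowest identifier winning.

```python
from collections import deque

# Topology inferred from the paths named in the text (assumption).
LINKS = [("A", "B"), ("A", "C"), ("A", "D"), ("A", "E"),
         ("B", "F"), ("C", "D"), ("C", "E"), ("D", "F")]
BRIDGE_ID = {name: i for i, name in enumerate("ABCDEF", start=1)}

ADJ = {}
for u, v in LINKS:
    ADJ.setdefault(u, []).append(v)
    ADJ.setdefault(v, []).append(u)


def equal_cost_paths(src, dst):
    """Return all minimum-hop paths from src to dst (equal link metrics)."""
    dist, preds, queue = {src: 0}, {src: []}, deque([src])
    while queue:
        u = queue.popleft()
        for v in ADJ[u]:
            if v not in dist:
                dist[v], preds[v] = dist[u] + 1, [u]
                queue.append(v)
            elif dist[v] == dist[u] + 1:
                preds[v].append(u)

    def walk(node):
        if node == src:
            return [[src]]
        return [path + [node] for p in preds[node] for path in walk(p)]

    return walk(dst)


def pick_path(src, dst, algorithm):
    """Choose one equal-cost path using the masked path identifier."""
    mask = 0x00 if algorithm == "low-path-id" else 0xFF
    return min(equal_cost_paths(src, dst),
               key=lambda path: sorted(BRIDGE_ID[n] ^ mask for n in path))


print("-".join(pick_path("E", "F", "low-path-id")))   # E-A-B-F
print("-".join(pick_path("E", "F", "high-path-id")))  # E-C-D-F
```

Because the path identifier depends only on the set of nodes on the path, the choice is the same in both directions, which matches the reverse path congruence described above.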

For Multicast paths the algorithms used are the same low-path-id or high-path-id but the tree is always a single tree using the root selected as described earlier (in this case Node A). Multicast paths for low-path-id and high-path-id shows the multicast paths for low-path-id and high-path-id algorithm.

All nodes in this network use one of these trees. The path for multicast to/from Node A is the same as unicast traffic to/from Node A for both low-path-id and high-path-id. However, the multicast path for other nodes is now different from the unicast paths for some destinations. For example, Node E to Node F is now different for high-path-id because the path must transit the root Node A. In addition, the Node E multicast path to C is E-A-C even though E has a direct path to Node C. A rule of thumb is that the node chosen to be root should be a well-connected node and have available resources. In this example, Node A and Node D are the best choices for root nodes.

The distribution of I-SIDs allows efficient pruning of the multicast single tree on a per I-SID basis because only MFIB entries between nodes on the single tree are populated. For example, if Nodes A, B and F share an I-SID and they use the low-path–id algorithm only those three nodes would have multicast traffic for that I-SID. If the high-path-id algorithm is used traffic from Nodes A and B must go through D to get to Node F.
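The per I-SID pruning in this example can be sketched as the union of the tree paths from each member node to the root. The parent maps below are assumptions derived from the description (Node A is the root, and Node F attaches through B for low-path-id or through D for high-path-id); the sketch also relies on the root being a member of the I-SID, as it is here.

```python
# child -> parent on the single multicast tree rooted at Node A (assumed).
LOW_TREE = {"B": "A", "C": "A", "D": "A", "E": "A", "F": "B"}
HIGH_TREE = {**LOW_TREE, "F": "D"}


def nodes_on_pruned_tree(parent, members):
    """Return the nodes that carry multicast for an I-SID.

    Walks from every member toward the root and collects the nodes passed.
    Valid as written when the root itself is one of the members.
    """
    keep = set()
    for node in members:
        while node is not None and node not in keep:
            keep.add(node)
            node = parent.get(node)
    return keep


members = {"A", "B", "F"}
print(sorted(nodes_on_pruned_tree(LOW_TREE, members)))   # ['A', 'B', 'F']
print(sorted(nodes_on_pruned_tree(HIGH_TREE, members)))  # ['A', 'B', 'D', 'F']
```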

Data path and forwarding

The implementation of SPB on SR OS uses the PBB data plane. There is no flooding of B-MAC based traffic. If a B-MAC is not found in the FDB, traffic is dropped until the control plane populates that B-MAC. Unicast B-MAC addresses are populated in all FDBs regardless of I-SID membership. There is a unicast FDB per B-VPLS both control B-VPLS and user BVPLS. B-VPLS instances that do not have any I-VPLS, have only a default multicast tree and do not have any multicast MFIB entries.

The data plane supports an ingress check (reverse path forwarding check) for unicast and multicast frames on the respective trees. Ingress check is performed automatically. For unicast or multicast frames the B-MAC of the source must be in the FDB and the interface must be valid for that B-MAC or traffic is dropped. The PBB encapsulation (See PBB technology) is unchanged from current SR OS. Multicast frames use the PBB Multicast Frame format and SPBM distributes I-VPLS I-SIDs which allows SPB to populate forwarding only to the relevant branches of the multicast tree. Therefore, SPB replaces both spanning tree control and MMRP functionality in one protocol.
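A minimal sketch of the unicast forwarding decision, combining the no-flooding rule with the ingress check. The `fdb` dictionary, the interface names, and the function `forward_unicast` are hypothetical; the model assumes the FDB records, for each B-MAC, the single interface toward it, which is also the only valid ingress interface for frames sourced from that B-MAC.

```python
def forward_unicast(fdb, b_sa, b_da, ingress_if):
    """Return the egress interface, or None if the frame is dropped.

    fdb maps a B-MAC to the interface toward it (control-plane populated).
    """
    # Ingress check: the source B-MAC must be known on this interface.
    if fdb.get(b_sa) != ingress_if:
        return None
    # No flooding: an unknown destination B-MAC is dropped.
    return fdb.get(b_da)


fdb = {"B1": "sap-1/1/1:5", "B2": "sdp-10:100"}  # illustrative entries
print(forward_unicast(fdb, "B1", "B2", "sap-1/1/1:5"))  # sdp-10:100
print(forward_unicast(fdb, "B1", "B2", "sdp-10:100"))   # None (ingress check)
print(forward_unicast(fdb, "B1", "B9", "sap-1/1/1:5"))  # None (unknown B-DA)
```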

By using a single tree for multicast the amount of MFIB space used for multicast is reduced (per source shortest path trees for multicast are not currently offered on SR OS). In addition, a single tree reduces the amount of computation required when there is topology change.

SPB Ethernet OAM

Ethernet OAM works on Ethernet services and uses a combination of unicast with learning and multicast addresses. SPB on SR OS supports both unicast and multicast forwarding, but with no learning, and unicast and multicast may take different paths. In addition, the SR OS SPB control plane offers a wide variety of show commands. The SPB IS-IS control plane takes the place of many Ethernet OAM functions. SPB IS-IS frames (Hello, PDU, and so on) are multicast, but they are per SPB interface on the control B-VPLS interfaces and are not PBB encapsulated.

All Client Ethernet OAM is supported from I-VPLS interfaces and PBB Epipe interfaces across the SPB domain. Client OAM is the only true test of the PBB data plane. The only forms of Eth-OAM supported directly on SPB B-VPLS are Virtual MEPS (vMEPs). Only CCM is supported on these vMEPs; vMEPs use a S-TAG encapsulation and follow the SPB multicast tree for the specified B-VPLS. Each MEP has a unicast associated MAC to terminate various ETH-CFM tools. However, CCM messages always use a destination Layer 2 multicast using 01:80:C2:00:00:3x (where x = 0 to 7). vMEPs terminate CCM with the multicast address. Unicast CCM can be configured for point to point associations or hub and spoke configuration but this would not be typical (when unicast addresses are configured on vMEPs they are automatically distributed by SPB in IS-IS).

Up MEPs on services (I-VPLS and PBB Epipes) are also supported and these behave as any service OAM. These OAM use the PBB encapsulation and follow the PBB path to the destination.

Link OAM (IEEE 802.3ah EFM) is supported below SPB as standard. Together, SPB IS-IS and these OAM functions provide coverage of the SPB network.

| OAM origination | Data plane support | Comments |
|---|---|---|
| PBB-Epipe or Customer CFM on PBB Epipe. Up MEPs on PBB Epipe. | Fully Supported. Unicast PBB frames encapsulating unicast/multicast. | Transparent operation. Uses Encapsulated PBB with Unicast B-MAC address. |
| I-VPLS or Customer CFM on I-VPLS. Up MEPs on I-VPLS. | Fully Supported. Unicast/Multicast PBB frames determined by OAM type. | Transparent operation. Uses Encapsulated PBB frames with Multicast/Unicast B-MAC address. |
| vMEP on B-VPLS Service. | CCM only. S-Tagged Multicast Frames. | Ethernet CCM only. Follows the Multicast tree. Unicast addresses may be configured for peer operation. |

In summary, SPB offers an automated control plane and optional Eth-CFM/Eth-EFM to allow monitoring of Ethernet services using SPB. B-VPLS services, PBB Epipes, and I-VPLS services support the existing set of Ethernet capabilities.

SPB levels

Levels are part of IS-IS. SPB supports Level 1 within a control B-VPLS. Future enhancements may make use of levels.

SPBM to non-SPBM interworking

By using static definitions of B-MACs and ISIDs interworking of PBB Epipes and I-VPLS between SPBM networks and non-SPBM PBB networks can be achieved.

Static MACs and static ISIDs

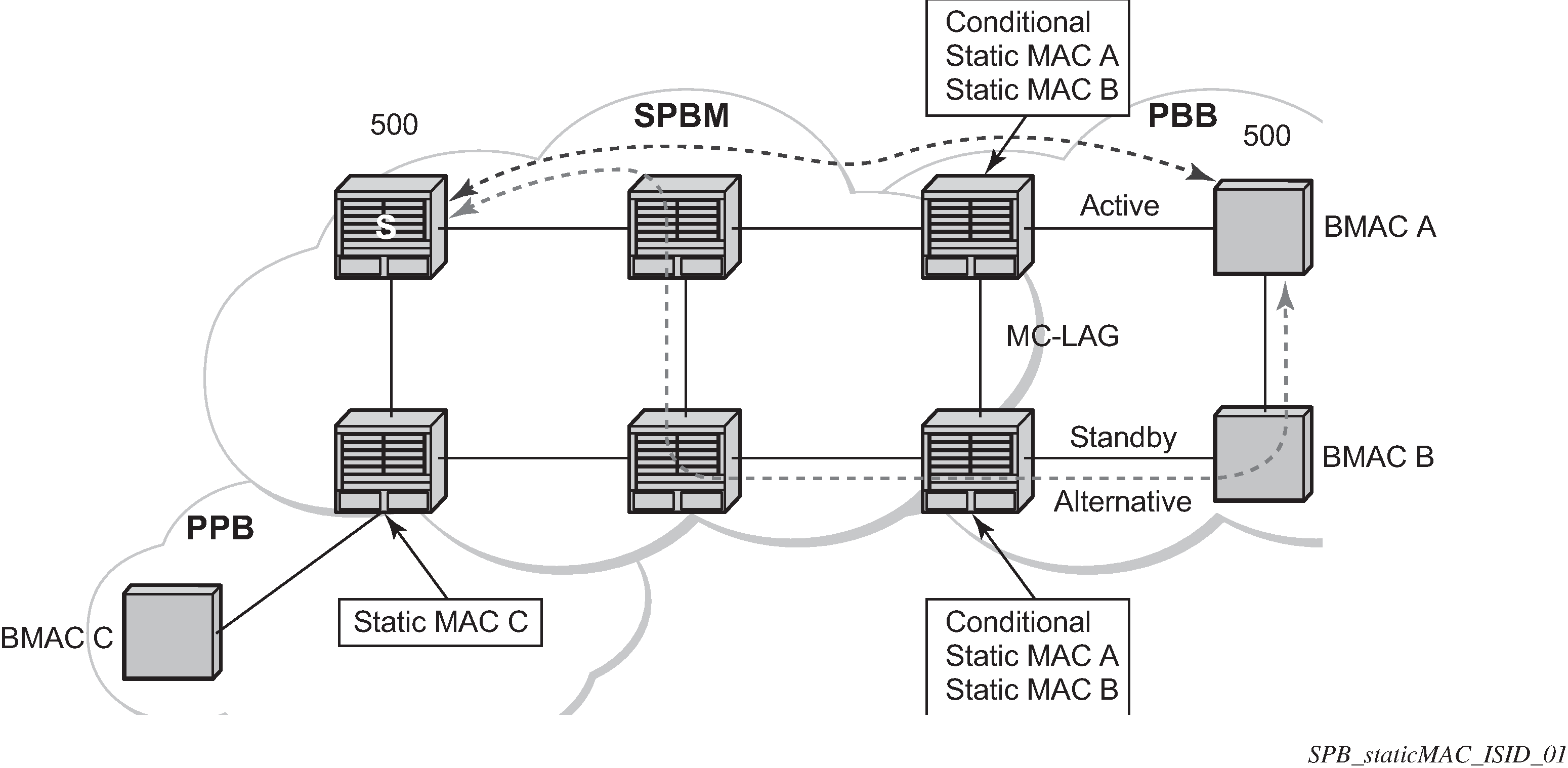

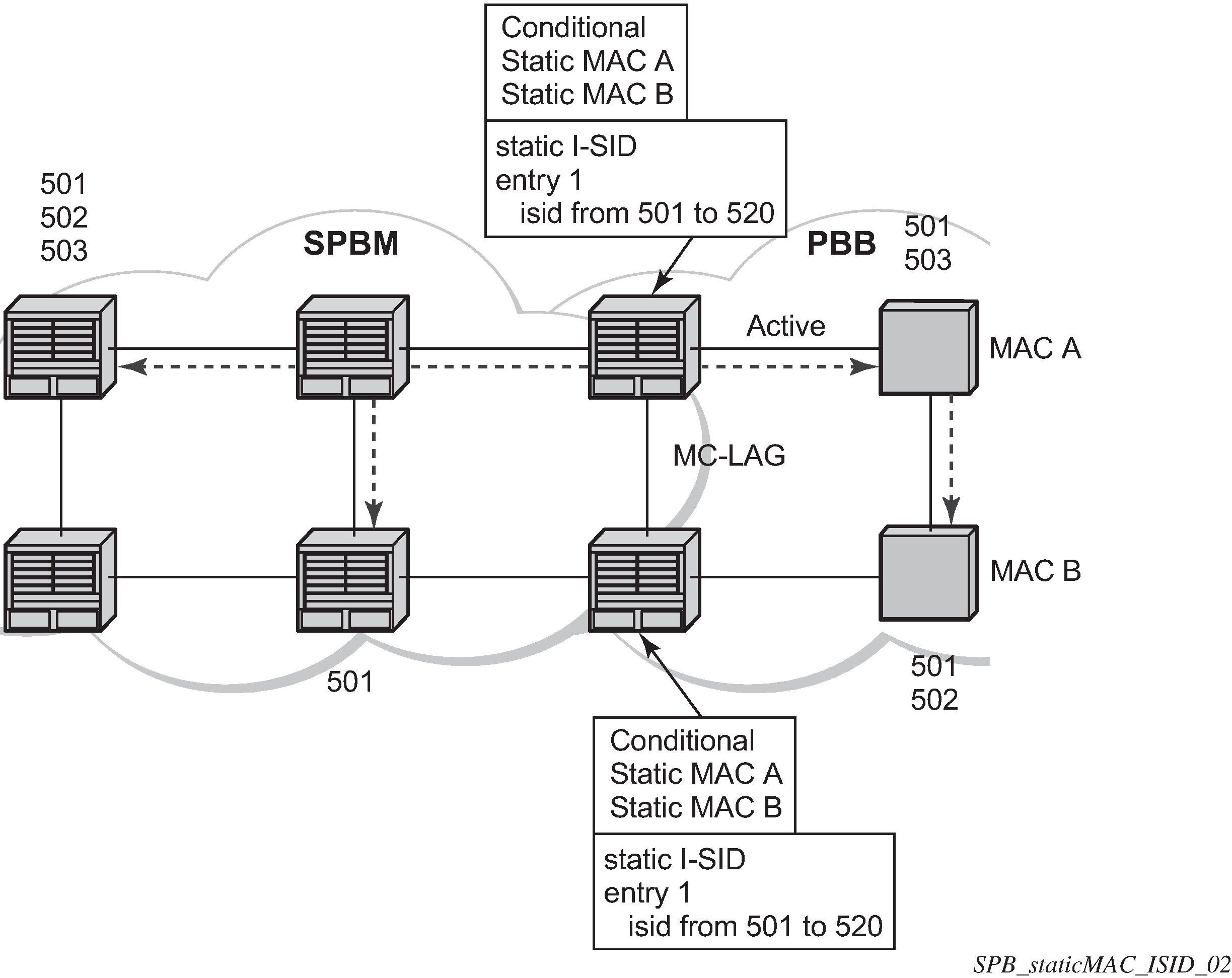

To extend SPBM networks to other PBB networks, static MACs and ISIDs can be defined under SPBM SAPs/SDPs. The declaration of a static MAC in an SPBM context allows a non-SPBM PBB system to receive frames from an SPBM system. These static MACs are conditional on the SAP/SDP operational state. Currently this is only supported for SPBM because SPBM can advertise these B-MACs and ISIDs without any requirement for flushing. The B-MAC (and B-MAC to ISID) must remain consistent when advertised in the IS-IS database.

The declaration of static-isids allows an efficient connection of ISID based services. The ISID is advertised as supported on the local nodal B-MAC, and the static B-MACs, which are the true destinations for the ISIDs, are also advertised. When the I-VPLS learns the remote B-MAC, it associates the ISID with the true destination B-MAC. Therefore, if redundancy is used, the B-MACs and ISIDs that are advertised must be the same on any redundant interfaces.

If the interface is an MC-LAG interface, the static MAC and ISIDs on the SAPs/SDPs using that interface are only active when the associated MC-LAG interface is active. If the interface is a spoke-SDP on an active/standby pseudowire (PW), the ISIDs and B-MACs are only active when the PW is active.

Epipe static configuration

For Epipes, only the B-MACs need to be advertised. There is no multicast for PBB Epipes. Unicast traffic follows the unicast path (shortest path or single tree). By configuring remote B-MACs, Epipes can be set up to non-SPBM systems. A special conditional static-mac is used for SPBM PBB B-VPLS SAPs/SDPs that are connected to a remote system. In the diagram, ISID 500 is used for the PBB Epipe but only conditional MACs A and B are configured on the MC-LAG ports. The B-VPLS advertises the static MAC either always or optionally based on a condition of the port forwarding.

I-VPLS static config

I-VPLS static config consists of two components: static-mac and static ISIDs that represent a remote B-MAC-ISID combination.

The static-MACs are configured as with Epipe: the special conditional static-mac is used for SPBM PBB B-VPLS SAPs/SDPs that are connected to a remote system. The B-VPLS advertises the static MAC either always or optionally based on a condition of the port forwarding.

The static-isids are created under the B-VPLS SAP/SDPs that are connected to a non-SPBM system. These ISIDs are typically advertised but may be controlled by ISID policy.

For I-VPLS, the ISIDs are advertised and multicast MACs are automatically created using the PBB OUI and the ISID. SPBM supports the pruned multicast single tree. Unicast traffic follows the unicast path (shortest path or single tree). Multicast and unknown unicast follow the pruned single tree for that ISID.

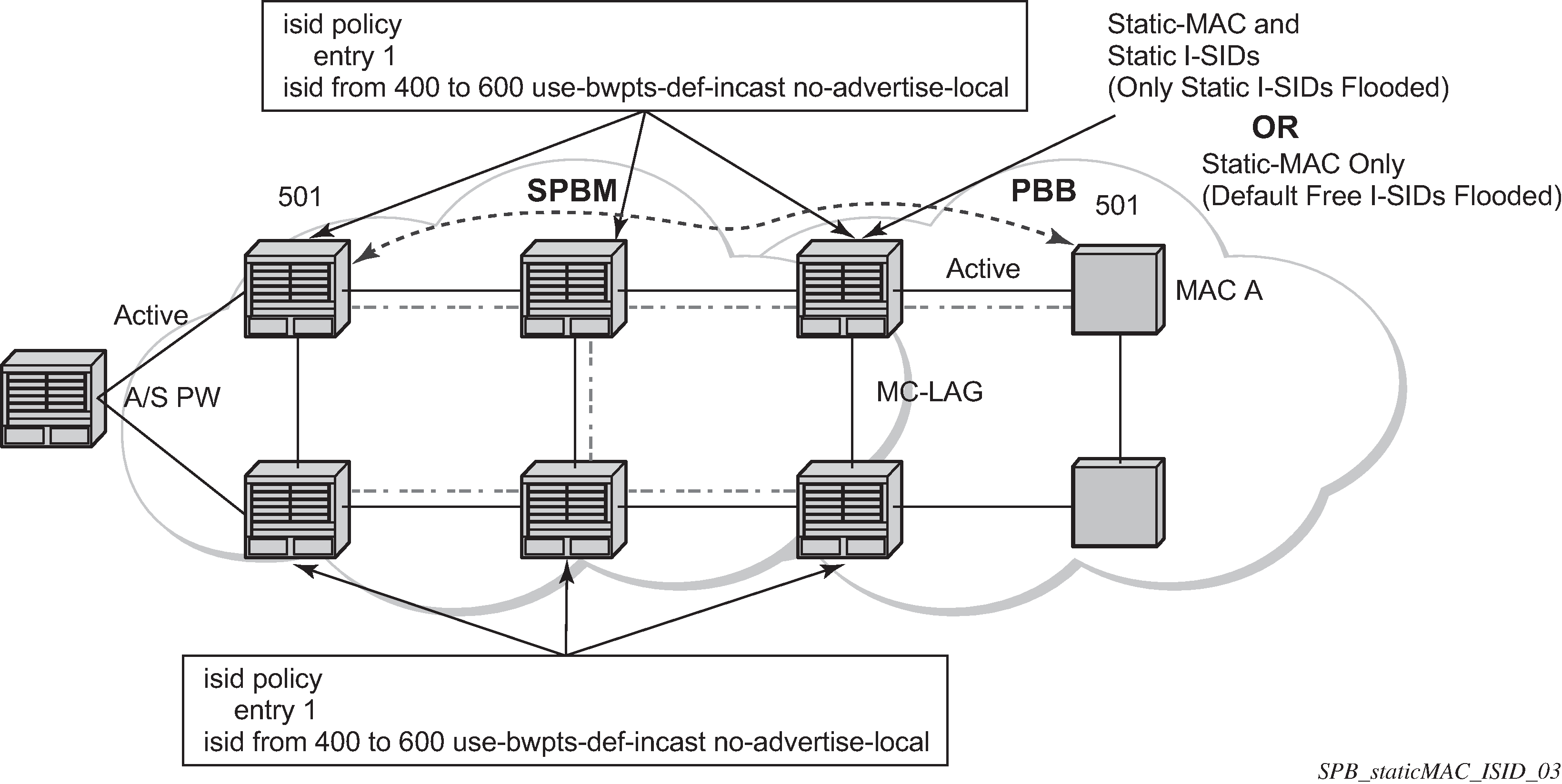

SPBM ISID policies

ISID policies are an optional aspect of SPBM which allow additional control of ISIDs for I-VPLS. PBB services using SPBM automatically populate multicast for I-VPLS and static-isids. Incorrect use of isid-policy can create black holes or additional flooding of multicast.

To enable more flexible multicast, ISID policies control the amount of MFIB space used by ISIDs by trading off the default Multicast tree and the per ISID multicast tree. Occasionally customers want services that use I-VPLS that have multiple sites but use primarily unicast. The ISID policy can be used on any node where an I-VPLS is defined or static ISIDs are defined.

The typical use is to suppress the installation of the ISID in the MFIB using use-def-mcast and the distribution of the ISID in SPBM by using no advertise-local.

The use-def-mcast policy instructs SPBM to use the default B-VPLS multicast forwarding for the ISID range. The ISID multicast frame remains unchanged by the policy (the standard format with the PBB OUI and the ISID as the multicast destination address) but no MFIB entry is allocated. This causes the forwarding to use the default BVID multicast tree which is not pruned. When this policy is in place it only governs the forwarding locally on the current B-VPLS.

ISID policies, including the advertise-local option, are applied to both static ISIDs and I-VPLS ISIDs. The policies define whether the ISIDs are advertised in SPBM and whether they use the local MFIB. When ISIDs are advertised, they use the MFIB in the remote nodes. Locally, the use of the MFIB is controlled by the use-def-mcast policy.

The types of interfaces are summarized in SPBM ISID policies table.

| Service type | ISID policy on B-VPLS | Notes |
|---|---|---|
| Epipe | No effect | PBB Epipe ISIDs are not advertised or in MFIB. |
| I-VPLS | None: Uses ISID Multicast tree. Advertised ISIDs of I-VPLS. | I-VPLS uses dedicated (pruned) multicast tree. ISIDs are advertised. |
| I-VPLS (for Unicast) | use-def-mcast no advertise-local | I-VPLS uses default Multicast. Policy only required where ISIDs are defined. ISIDs not advertised. Must be consistently defined on all nodes with same ISIDs. |
| I-VPLS (for Unicast) | use-def-mcast advertise-local | I-VPLS uses default Multicast. Policy only required where ISIDs are defined. ISIDs advertised and pruned tree used elsewhere. May be inconsistent for an ISID. |
| Static ISIDs for I-VPLS interworking | None: (recommended) Uses ISID Multicast tree | I-VPLS uses dedicated (pruned) multicast tree. ISIDs are advertised. |
| Static ISIDs for I-VPLS interworking (defined locally) | use-def-mcast | I-VPLS uses default Multicast. Policy only required where ISIDs are configured or where I-VPLS is located. |
| No MFIB for any ISIDs Policy defined on all nodes | use-def-mcast no advertise-local | Each B-VPLS with the policy does not install MFIB. Policy defined on all switches ISIDs are defined. ISIDs advertised and pruned tree used elsewhere. May be inconsistent for an ISID. |

ISID policy control

Static ISID advertisement

Static ISIDs are advertised using the SPBM Service Identifier and Unicast Address sub-TLV in IS-IS when there is no ISID policy. This TLV advertises the local B-MAC and one or more ISIDs. The B-MAC used is the source-bmac of the Control/User VPLS. Typically, remote B-MACs (the ultimate source-bmac) and the associated ISIDs are configured as static under the SPBM interface. This allows all remote B-MACs and all remote ISIDs to be configured one time per interface.

I-VPLS for unicast service

If the service uses unicast only, an I-VPLS still uses MFIB space and SPBM advertises the ISID. By using the default multicast tree locally, a node saves MFIB space. With no advertise-local, SPBM does not advertise the ISIDs covered by the policy. The actual PBB multicast frames are the same regardless of policy, and unicast traffic is not changed by the ISID policies.

The Static B-MAC configuration is allowed under Multi-Chassis LAG (MC-LAG) based SAPs and active/standby PW SDPs.

Unicast traffic follows the unicast shortest path or the single tree. With the ISID policy, multicast and unknown unicast traffic (BUM) follows the default B-VPLS tree in the SPBM domain. This should be used sparingly for any high volume of multicast services.

Default behaviors

When static ISIDs are defined the default is to advertise the static ISIDs when the interface parent (SAP or SDP) is up.

If the advertisement is not needed, an ISID policy can be created to prevent advertising the ISID.

- use-def-mcast

  If a policy is defined with use-def-mcast, the local MFIB does not contain a multicast MAC based on the PBB OUI + ISID, and the frame is flooded out the local tree. This applies to any node where the policy is defined. On other nodes, if the ISID is advertised, the ISID uses the MFIB for that ISID.

- no advertise-local

  If a policy of no advertise-local is defined, the ISIDs in the policy are not advertised. This combination should be used everywhere there is an I-VPLS with the ISID or where the static ISID is defined, to prevent black holes. If an ISID is to be moved from advertising to not advertising, it is advisable to first apply use-def-mcast on all the nodes for that ISID, so that the MFIB entry is not installed and each node with that policy starts using the default multicast tree. Then the no advertise-local option can be applied.

Each Policy may be used alone or in combination.
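The combined effect of the two options on a single node can be summarized as a small truth table. The following Python sketch is illustrative only: the class, function, and field names are hypothetical and do not correspond to any SR OS API. It only encodes the behavior described in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IsidPolicyEffect:
    """Local effect of an ISID policy on one node (illustrative model)."""
    installs_local_mfib: bool  # is a per-ISID group MAC installed locally?
    local_tree: str            # tree used locally for the ISID's multicast frames
    advertised_in_spbm: bool   # is the ISID advertised to other SPBM nodes?


def isid_policy_effect(use_def_mcast: bool = False,
                       advertise_local: bool = True) -> IsidPolicyEffect:
    """Model use-def-mcast and [no] advertise-local as described in the text.

    The defaults represent "no policy": the ISID is advertised and uses the
    pruned per-ISID tree.
    """
    return IsidPolicyEffect(
        installs_local_mfib=not use_def_mcast,
        local_tree="default B-VPLS tree" if use_def_mcast else "pruned per-ISID tree",
        advertised_in_spbm=advertise_local,
    )


# Typical "I-VPLS for unicast" policy: use-def-mcast plus no advertise-local.
unicast_only = isid_policy_effect(use_def_mcast=True, advertise_local=False)
print(unicast_only)
```

The model is deliberately per node: as noted above, a policy only governs the node where it is defined, so consistency across nodes has to be checked separately.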

Example network configuration

Example network shows four nodes with SPB B-VPLS. The SPB instance is configured on B-VPLS 100001, which uses FID 1 for SPB instance 1024. All B-MACs and I-SIDs are learned in the context of B-VPLS 100001. B-VPLS 100001 has an I-VPLS 10001 service, which also uses I-SID 10001. B-VPLS 100001 is configured to use VID 1 on SAPs 1/2/2 and 1/2/3. The VID does not need to be the same as the FID, but it must be the same on the other side of each link (Dut-B and Dut-C).

A user B-VPLS service 100002 is configured, and it uses B-VPLS 100001 to provide forwarding. It fate-shares the control topology. In Example network, the control B-VPLS uses the low-path-id algorithm and the user B-VPLS uses the high-path-id algorithm. Any B-VPLS can use any algorithm. The difference is illustrated in the path between Dut-A and Dut-D. The short dashed line through Dut-B is the low-path-id algorithm and the long dashed line through Dut-C is the high-path-id algorithm.
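The tie-break between the two equal-cost paths from Dut-A to Dut-D can be illustrated with a short Python sketch. This is a simplified model, not the IEEE 802.1aq ECT computation: node numbers 1 to 4 stand in for the bridge identifiers of Dut-A to Dut-D, all link metrics are assumed to be 10, and the path ID is assumed to be the sorted list of node numbers on the path. All names are hypothetical.

```python
from typing import Dict, List, Tuple

# Illustrative four-node topology; every link has metric 10 (assumption).
LINKS: Dict[Tuple[int, int], int] = {
    (1, 2): 10,  # Dut-A - Dut-B
    (1, 3): 10,  # Dut-A - Dut-C
    (2, 4): 10,  # Dut-B - Dut-D
    (3, 4): 10,  # Dut-C - Dut-D
}


def neighbors(node: int) -> List[Tuple[int, int]]:
    """Return (neighbor, metric) pairs from the undirected link table."""
    out = []
    for (a, b), metric in LINKS.items():
        if a == node:
            out.append((b, metric))
        elif b == node:
            out.append((a, metric))
    return out


def all_paths(src: int, dst: int, seen: Tuple[int, ...] = ()) -> List[Tuple[int, List[int]]]:
    """Enumerate loop-free paths as (cost, nodes); suitable for tiny graphs only."""
    if src == dst:
        return [(0, [dst])]
    paths = []
    for nxt, metric in neighbors(src):
        if nxt in seen:
            continue
        for cost, tail in all_paths(nxt, dst, seen + (src,)):
            paths.append((cost + metric, [src] + tail))
    return paths


def pick_path(src: int, dst: int, algorithm: str) -> List[int]:
    """Pick one of the equal-cost shortest paths using a simplified path-ID rule."""
    paths = all_paths(src, dst)
    best = min(cost for cost, _ in paths)
    equal_cost = [nodes for cost, nodes in paths if cost == best]
    chooser = min if algorithm == "low-path-id" else max
    return chooser(equal_cost, key=lambda nodes: tuple(sorted(nodes)))


print(pick_path(1, 4, "low-path-id"))   # path through Dut-B in this model
print(pick_path(1, 4, "high-path-id"))  # path through Dut-C in this model
```

Both paths have the same cost, so only the tie-break differs, which is why the control and user B-VPLS can take different paths over the same topology.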

Example configuration for Dut-A

Dut-A:

Control B-VPLS:

*A:Dut-A>config>service>vpls# pwc

-------------------------------------------------------------------------------

Present Working Context :

-------------------------------------------------------------------------------

<root>

configure

service

vpls "100001"

-------------------------------------------------------------------------------

*A:Dut-A>config>service>vpls# info

----------------------------------------------

pbb

source-bmac 00:10:00:01:00:01

exit

stp

shutdown

exit

spb 1024 fid 1 create

level 1

ect-algorithm fid-range 100-100 high-path-id

exit

no shutdown

exit

sap 1/2/2:1.1 create

spb create

no shutdown

exit

exit

sap 1/2/3:1.1 create

spb create

no shutdown

exit

exit

no shutdown

----------------------------------------------

User B-VPLS:

*A:Dut-A>config>service>vpls# pwc

-------------------------------------------------------------------------------

Present Working Context :

-------------------------------------------------------------------------------

<root>

configure

service

vpls "100002"

-------------------------------------------------------------------------------

*A:Dut-A>config>service>vpls# info

----------------------------------------------

pbb

source-bmac 00:10:00:02:00:01

exit

stp

shutdown

exit

spbm-control-vpls 100001 fid 100

sap 1/2/2:1.2 create

exit

sap 1/2/3:1.2 create

exit

no shutdown

----------------------------------------------

I-VPLS:

configure service

vpls 10001 customer 1 i-vpls create

service-mtu 1492

pbb

backbone-vpls 100001

exit

exit

stp

shutdown

exit

sap 1/2/1:1000.1 create

exit

no shutdown

exit

vpls 10002 customer 1 i-vpls create

service-mtu 1492

pbb

backbone-vpls 100002

exit

exit

stp

shutdown

exit

sap 1/2/1:1000.2 create

exit

no shutdown

exit

exit

Show commands outputs

The show base command outputs a summary of the instance parameters under a control B-VPLS. The show command for a user B-VPLS indicates the control B-VPLS. The base parameters except for Bridge Priority and Bridge ID must match on neighbor nodes.

*A:Dut-A# show service id 100001 spb base

===============================================================================

Service SPB Information

===============================================================================

Admin State : Up Oper State : Up

ISIS Instance : 1024 FID : 1

Bridge Priority : 8 Fwd Tree Top Ucast : spf

Fwd Tree Top Mcast : st

Bridge Id : 80:00.00:10:00:01:00:01

Mcast Desig Bridge : 80:00.00:10:00:01:00:01

===============================================================================

ISIS Interfaces

===============================================================================

Interface Level CircID Oper State L1/L2 Metric

-------------------------------------------------------------------------------

sap:1/2/2:1.1 L1 65536 Up 10/-

sap:1/2/3:1.1 L1 65537 Up 10/-

-------------------------------------------------------------------------------

Interfaces : 2

===============================================================================

FID ranges using ECT Algorithm

-------------------------------------------------------------------------------

1-99 low-path-id

100-100 high-path-id

101-4095 low-path-id

===============================================================================
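The "FID ranges using ECT Algorithm" table above can be reproduced from the single configured override (fid-range 100-100 high-path-id). The Python sketch below is an illustration with hypothetical names. It assumes that low-path-id is the default for every FID without an explicit override, which is inferred from the output rather than stated in the text.

```python
from typing import List, Tuple

DEFAULT_ALGORITHM = "low-path-id"  # assumed default, inferred from the output
MIN_FID, MAX_FID = 1, 4095

FidRange = Tuple[int, int, str]


def ect_table(overrides: List[FidRange]) -> List[FidRange]:
    """Expand non-overlapping fid-range overrides into a gap-free FID table."""
    table: List[FidRange] = []
    next_fid = MIN_FID
    for first, last, algorithm in sorted(overrides):
        if first > next_fid:
            # Fill the gap before this override with the default algorithm.
            table.append((next_fid, first - 1, DEFAULT_ALGORITHM))
        table.append((first, last, algorithm))
        next_fid = last + 1
    if next_fid <= MAX_FID:
        table.append((next_fid, MAX_FID, DEFAULT_ALGORITHM))
    return table


def algorithm_for_fid(table: List[FidRange], fid: int) -> str:
    """Look up the ECT algorithm that applies to one FID."""
    for first, last, algorithm in table:
        if first <= fid <= last:
            return algorithm
    raise ValueError(f"FID {fid} is outside {MIN_FID}-{MAX_FID}")


table = ect_table([(100, 100, "high-path-id")])
for first, last, algorithm in table:
    print(f"{first}-{last} {algorithm}")
```

With this table, FID 1 (the control B-VPLS) resolves to low-path-id and FID 100 (the user B-VPLS) resolves to high-path-id, matching the example.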

The show adjacency command displays the system ID of the connected SPB B-VPLS neighbors and the interfaces used to reach those neighbors.

*A:Dut-A# show service id 100001 spb adjacency

===============================================================================

ISIS Adjacency

===============================================================================

System ID Usage State Hold Interface MT Enab

-------------------------------------------------------------------------------

Dut-B L1 Up 19 sap:1/2/2:1.1 No

Dut-C L1 Up 21 sap:1/2/3:1.1 No

-------------------------------------------------------------------------------

Adjacencies : 2

===============================================================================

Details about the topology can be displayed with the database command. There is a detail option that displays the contents of the LSPs.

*A:Dut-A# show service id 100001 spb database

===============================================================================

ISIS Database

===============================================================================

LSP ID Sequence Checksum Lifetime Attributes

-------------------------------------------------------------------------------

Displaying Level 1 database

-------------------------------------------------------------------------------

Dut-A.00-00 0xc 0xbaba 1103 L1

Dut-B.00-00 0x13 0xe780 1117 L1

Dut-C.00-00 0x13 0x85a 1117 L1

Dut-D.00-00 0xe 0x174a 1119 L1

Level (1) LSP Count : 4

===============================================================================

The show routes command shows the next-hop interface for the MAC addresses, both unicast and multicast. The path to 00:10:00:01:00:04 (Dut-D) uses the low-path-id algorithm. For FID 1 the neighbor is Dut-B and for FID 100 the neighbor is Dut-C. Because Dut-A is the root of the multicast single tree, the multicast forwarding is the same as the unicast forwarding on Dut-A. However, unicast and multicast routes differ on most other nodes. The I-SIDs exist on all of the nodes, so I-SID-based multicast follows the multicast tree exactly. If the I-SID had not existed on Dut-B or Dut-D, there would be no entry for FID 1. Only designated nodes (root nodes) show metrics. Non-designated nodes do not show metrics.

*A:Dut-A# show service id 100001 spb routes

================================================================

MAC Route Table

================================================================

Fid MAC Ver. Metric

NextHop If SysID

----------------------------------------------------------------

Fwd Tree: unicast

----------------------------------------------------------------

1 00:10:00:01:00:02 10 10

sap:1/2/2:1.1 Dut-B

1 00:10:00:01:00:03 10 10

sap:1/2/3:1.1 Dut-C

1 00:10:00:01:00:04 10 20

sap:1/2/2:1.1 Dut-B

100 00:10:00:02:00:02 10 10

sap:1/2/2:1.1 Dut-B

100 00:10:00:02:00:03 10 10

sap:1/2/3:1.1 Dut-C

100 00:10:00:02:00:04 10 20

sap:1/2/3:1.1 Dut-C

Fwd Tree: multicast

----------------------------------------------------------------

1 00:10:00:01:00:02 10 10

sap:1/2/2:1.1 Dut-B

1 00:10:00:01:00:03 10 10

sap:1/2/3:1.1 Dut-C

1 00:10:00:01:00:04 10 20

sap:1/2/2:1.1 Dut-B

100 00:10:00:02:00:02 10 10

sap:1/2/2:1.1 Dut-B

100 00:10:00:02:00:03 10 10

sap:1/2/3:1.1 Dut-C

100 00:10:00:02:00:04 10 20

sap:1/2/3:1.1 Dut-C

----------------------------------------------------------------

No. of MAC Routes: 12

================================================================

================================================================

ISID Route Table

================================================================

Fid ISID Ver.

NextHop If SysID

----------------------------------------------------------------

1 10001 10

sap:1/2/2:1.1 Dut-B

sap:1/2/3:1.1 Dut-C

100 10002 10

sap:1/2/2:1.1 Dut-B

sap:1/2/3:1.1 Dut-C

----------------------------------------------------------------

No. of ISID Routes: 2

================================================================

The show service spb fdb command shows the programmed unicast and multicast source MACs in SPB-managed B-VPLS service.

*A:Dut-A# show service id 100001 spb fdb

==============================================================================

User service FDB information

==============================================================================

MacAddr UCast Source State MCast Source State

------------------------------------------------------------------------------

00:10:00:01:00:02 1/2/2:1.1 ok 1/2/2:1.1 ok

00:10:00:01:00:03 1/2/3:1.1 ok 1/2/3:1.1 ok

00:10:00:01:00:04 1/2/2:1.1 ok 1/2/2:1.1 ok

------------------------------------------------------------------------------

Entries found: 3

==============================================================================

*A:Dut-A# show service id 100002 spb fdb

==============================================================================

User service FDB information

==============================================================================

MacAddr UCast Source State MCast Source State

------------------------------------------------------------------------------

00:10:00:02:00:02 1/2/2:1.2 ok 1/2/2:1.2 ok

00:10:00:02:00:03 1/2/3:1.2 ok 1/2/3:1.2 ok

00:10:00:02:00:04 1/2/3:1.2 ok 1/2/3:1.2 ok

------------------------------------------------------------------------------

Entries found: 3

==============================================================================

The show service spb mfib command shows the programmed multicast ISID PBB group MAC addresses in an SPB-managed B-VPLS service. Other types of *,G multicast traffic are sent over the multicast tree and these MACs are not shown. For example, OAM traffic that uses multicast (such as vMEP CCM) takes this path.

*A:Dut-A# show service id 100001 spb mfib

===============================================================================

User service MFIB information

===============================================================================

MacAddr ISID Status

-------------------------------------------------------------------------------

01:1E:83:00:27:11 10001 Ok

-------------------------------------------------------------------------------

Entries found: 1

===============================================================================

*A:Dut-A# show service id 100002 spb mfib

===============================================================================

User service MFIB information

===============================================================================

MacAddr ISID Status

-------------------------------------------------------------------------------

01:1E:83:00:27:12 10002 Ok

-------------------------------------------------------------------------------

Entries found: 1

===============================================================================
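The group MAC addresses in the two MFIB outputs above follow directly from the ISID: the first three bytes are 01:1E:83 and the last three bytes carry the 24-bit ISID. The following Python sketch shows the derivation. The function name is hypothetical, and the prefix value is taken from the output shown in this section.

```python
PBB_GROUP_PREFIX = 0x011E83  # first three bytes seen in the MFIB output above


def group_bmac(isid: int) -> str:
    """Build the group B-MAC for an ISID: 3-byte prefix followed by the 24-bit ISID."""
    if not 0 <= isid <= 0xFFFFFF:
        raise ValueError("ISID must fit in 24 bits")
    value = (PBB_GROUP_PREFIX << 24) | isid
    return ":".join(f"{octet:02X}" for octet in value.to_bytes(6, "big"))


for isid in (10001, 10002):
    print(isid, group_bmac(isid))
```

ISID 10001 is 0x002711 in hexadecimal, which yields 01:1E:83:00:27:11, the entry shown for service 100001.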

Debug commands

Use the following commands to debug an SPB-managed B-VPLS service:

debug service id svc-id spb

debug service id svc-id spb adjacency [{sap sap-id | spoke-sdp sdp-id:vc-id | nbr-system-id}]

debug service id svc-id spb interface [{sap sap-id | spoke-sdp sdp-id:vc-id}]

debug service id svc-id spb l2db

debug service id svc-id spb lsdb [{system-id | lsp-id}]

debug service id svc-id spb packet [packet-type] [{sap sap-id | spoke-sdp sdp-id:vc-id}] [detail]

debug service id svc-id spb spf system-id

Tools commands

Use the following commands to troubleshoot an SPB-managed B-VPLS service:

tools perform service id svc-id spb run-manual-spf

tools dump service id svc-id spb

tools dump service id svc-id spb default-multicast-list

tools dump service id svc-id spb fid fid default-multicast-list

tools dump service id svc-id spb fid fid forwarding-path destination isis-system-id forwarding-tree {unicast | multicast}

Clear commands

Use the following commands to clear SPB-related data:

clear service id svc-id spb

clear service id svc-id spb adjacency system-id

clear service id svc-id spb database system-id

clear service id svc-id spb spf-log

clear service id svc-id spb statistics

IEEE 802.1ak MMRP for service aggregation and zero touch provisioning

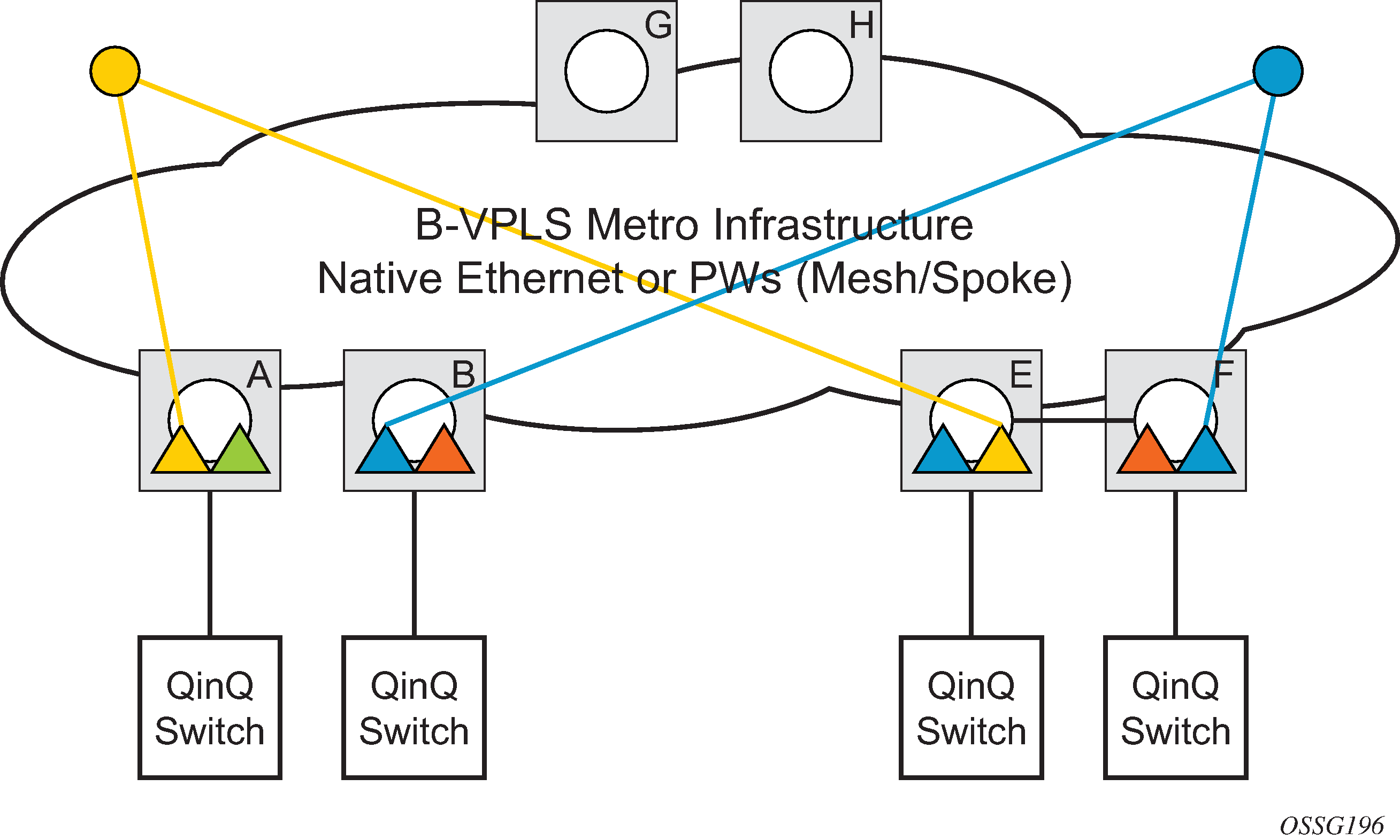

IEEE 802.1ah supports an M:1 model where multiple customer services, represented by ISIDs, are transported through a common infrastructure (B-component). The Nokia PBB implementation supports the M:1 model allowing for a service architecture where multiple customer services (I-VPLS or Epipe) can be transported through a common B-VPLS infrastructure as depicted in Customer services transported in 1 B-VPLS (M:1 model).

The B-VPLS infrastructure represented by the white circles is used to transport multiple customer services represented by the triangles of different colors. This service architecture minimizes the number of provisioning touches and reduces the load in the core PEs: for example, G and H use fewer VPLS instances and pseudowires.

In a real life deployment, different customer VPNs do not share the same community of interest – for example, VPN instances may be located on different PBB PEs. The M:1 model depicted in Flood containment requirement in M:1 model requires a per VPN flood containment mechanism so that VPN traffic is distributed just to the B-VPLS locations that have customer VPN sites: for example, flooded traffic originated in the blue I-VPLS should be distributed just to the PBB PEs where blue I-VPLS instances are present – PBB PE B, E and F.

Per customer VPN distribution trees need to be created dynamically throughout the BVPLS as new customer I-VPLS instances are added in the PBB PEs.

The Nokia PBB implementation employs the IEEE 802.1ak Multiple MAC Registration Protocol (MMRP) to dynamically build per I-VPLS distribution trees inside a specific B-VPLS infrastructure.

IEEE 802.1ak Multiple Registration Protocol (MRP) – Specifies changes to IEEE Std 802.1Q that provide a replacement for the GARP, GMRP and GVRP protocols. MMRP application of IEEE 802.1ak specifies the procedures that allow the registration/de-registration of MAC addresses over an Ethernet switched infrastructure.

In the PBB case, as I-VPLS instances are enabled in a specific PE, a group B-MAC address is by default instantiated using the standard based PBB Group OUI and the ISID value associated with the I-VPLS.

When a new I-VPLS instance is configured in a PE, the IEEE 802.1ak MMRP application is automatically invoked to advertise the presence of the related group B-MAC on all active B-VPLS SAPs and SDP bindings.

When at least two I-VPLS instances with the same ISID value are present in a B-VPLS, an optimal distribution tree is built by MMRP in the related B-VPLS infrastructure as depicted in Flood containment requirement in M:1 model.

MMRP support over B-VPLS SAPs and SDPs

MMRP is supported in B-VPLS instances over all the supported BVPLS SAPs and SDPs, including the primary and standby pseudowire scheme implemented for VPLS resiliency.

When a B-VPLS with MMRP enabled receives a packet destined for a specific group B-MAC, it checks its own MFIB entries and if the group B-MAC does not exist, it floods it everywhere. This should never happen as this kind of packet is generated at the I-VPLS/PBB PE when a registration was received for a local I-VPLS group B-MAC.

I-VPLS changes and related MMRP behavior

This section describes the MMRP behavior for different changes in IVPLS.

- When an ISID is set for a specific I-VPLS and a link to a related B-VPLS is activated (for example, through the configure service vpls backbone-vpls vpls id:isid command), the group B-MAC address is declared on all B-VPLS virtual ports (SAPs or SDPs).

- When the ISID is changed from one value to a new one, the old group B-MAC address is undeclared on all ports and the new group B-MAC address is declared on all ports in the B-VPLS.

- When the I-VPLS is disassociated from the B-VPLS, the old group B-MAC is no longer advertised as a local attribute in the B-VPLS if no other peer B-VPLS PEs have it declared.

- When an I-VPLS goes operationally down (all SAPs/SDPs are down) or the I-VPLS is shut down, the associated group B-MAC is undeclared on all ports in the B-VPLS.

- When the I-VPLS is deleted, the group B-MAC should already be undeclared on all ports in the B-VPLS because the I-VPLS has to be shut down to delete it.
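The rules above can be read as a function from local I-VPLS state to the set of ISIDs whose group B-MAC is declared locally in a B-VPLS. The Python sketch below is a simplified model with hypothetical names. It only covers local declarations and ignores registrations received from peer PEs.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass
class IVpls:
    name: str
    isid: Optional[int]           # None until an ISID is set
    backbone_vpls: Optional[int]  # None when not linked to a B-VPLS
    oper_up: bool                 # False when shut down or all SAPs/SDPs are down


def declared_isids(services: Iterable[IVpls], bvpls_id: int) -> Set[int]:
    """ISIDs whose group B-MAC this node declares in the given B-VPLS."""
    return {
        svc.isid
        for svc in services
        if svc.isid is not None and svc.backbone_vpls == bvpls_id and svc.oper_up
    }


# An ISID change shows up as one undeclare plus one declare.
before = declared_isids([IVpls("ivpls-a", 10001, 100001, True)], 100001)
after = declared_isids([IVpls("ivpls-a", 10005, 100001, True)], 100001)
print("undeclare:", before - after, "declare:", after - before)
```

Comparing the sets before and after a configuration change gives the attributes to undeclare and declare on all B-VPLS ports.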

Limiting the number of MMRP entries on a per B-VPLS basis

The MMRP exchanges create one entry per attribute (group B-MAC) in the B-VPLS where MMRP protocol is running. When the first registration is received for an attribute, an MFIB entry is created for it.

The Nokia implementation allows the user to control the number of MMRP attributes (group B-MACs) created on a per B-VPLS basis. Control over the number of related MFIB entries in the B-VPLS FDB is inherited from previous releases through the use of the configure service vpls mfib-table-size table-size command. This ensures that no B-VPLS takes up all the resources from the total pool.

Optimization for improved convergence time

Assuming that MMRP is used in a specific B-VPLS, under failure conditions the time it takes for the B-VPLS forwarding to resume may depend on the data plane and control plane convergence plus the time it takes for MMRP exchanges to settle down the flooding trees on a per ISID basis.

To minimize the convergence time, the Nokia PBB implementation offers the selection of a mode where B-VPLS forwarding reverts for a short time to flooding so that MMRP has enough time to converge. This mode can be selected through configuration using the configure service vpls b-vpls mrp flood-time value command where value represents the amount of time in seconds that flooding is enabled.

If this behavior is selected, the forwarding plane reverts to B-VPLS flooding for a configurable time period, for example, for a few seconds, then it reverts back to the MFIB entries installed by MMRP.

The following B-VPLS events initiate the switch from per I-VPLS (MMRP) MFIB entries to ‟B-VPLS flooding”:

- Reception or local triggering of a TCN

- B-SAP failure

- Failure of a B-SDP binding

- Pseudowire activation in a primary/standby HVPLS resiliency solution

- SF/CPM switchover because of the STP reconvergence
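The flood-time behavior can be pictured as a small timer-driven state machine. The Python sketch below is an illustration only: the class and method names are hypothetical, time is passed in explicitly to keep the example deterministic, and it assumes that a new event during the flooding period restarts the timer, which the text does not specify.

```python
from typing import Optional


class BvplsForwardingMode:
    """Toggle between MMRP-installed MFIB forwarding and temporary flooding."""

    def __init__(self, flood_time: float) -> None:
        self.flood_time = flood_time          # seconds, as in "mrp flood-time value"
        self._flood_until: Optional[float] = None

    def on_event(self, now: float) -> None:
        """Call for any triggering event (TCN, B-SAP failure, and so on)."""
        self._flood_until = now + self.flood_time  # assumption: timer restarts

    def mode(self, now: float) -> str:
        """Return "flood" during the flood-time window, otherwise "mfib"."""
        if self._flood_until is not None and now < self._flood_until:
            return "flood"
        return "mfib"


fwd = BvplsForwardingMode(flood_time=3)
print(fwd.mode(now=0))    # mfib
fwd.on_event(now=10)      # for example, a B-SAP failure at t=10
print(fwd.mode(now=11))   # flood
print(fwd.mode(now=13))   # mfib
```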

Controlling MRP scope using MRP policies

MMRP advertises the Group B-MACs associated with ISIDs throughout the whole BVPLS context regardless of whether a specific IVPLS is present in one or all the related PEs or BEBs. When evaluating the overall scalability the resource consumption in both the control and data plane must be considered:

control plane

The control plane is responsible for MMRP processing and the number of attributes advertised.

data plane

One tree is instantiated per ISID or Group B-MAC attribute.

In a multi-domain environment, for example multiple MANs interconnected through a WAN, the BVPLS and implicitly MMRP advertisement may span across domains. The MMRP attributes are flooded throughout the BVPLS context indiscriminately, regardless of the distribution of IVPLS sites.

The solution described in this section limits the scope of MMRP control plane advertisements to a specific network domain using MRP Policy. ISID-based filters are also provided as a safety measure for BVPLS data plane.

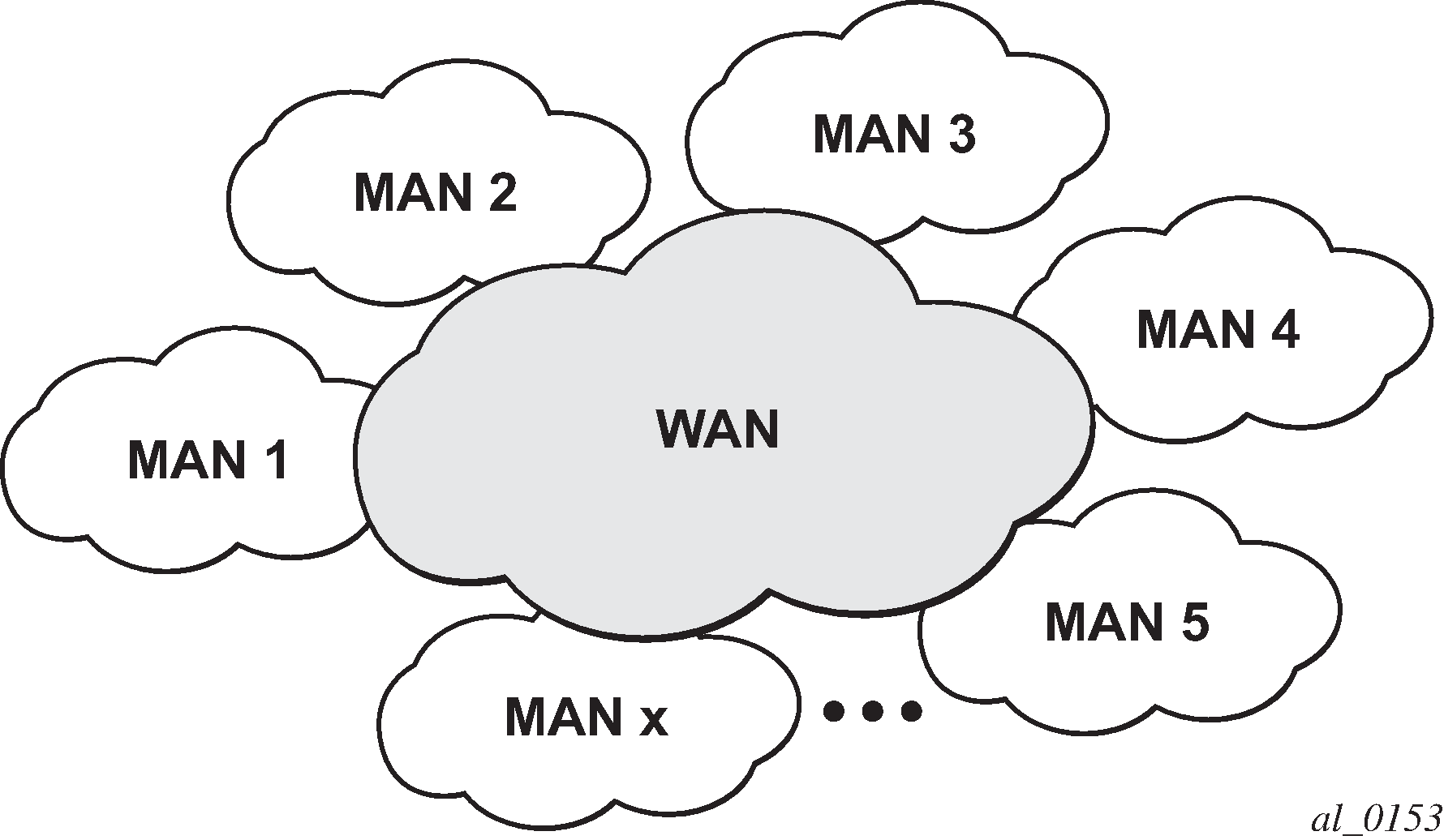

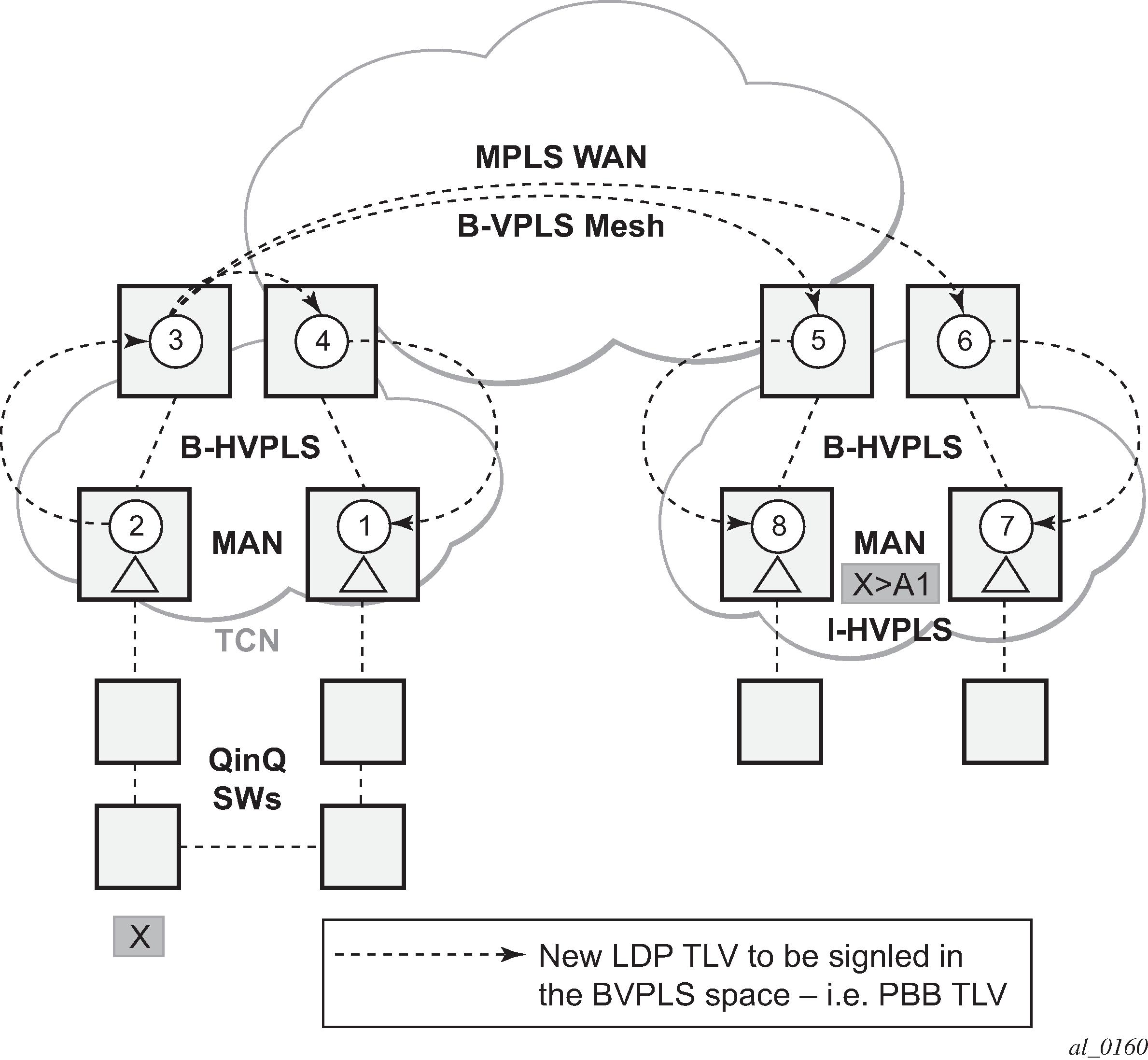

Inter-domain topology shows an inter-domain deployment where multiple metro domains (MANs) are interconnected through a wide area network (WAN). A BVPLS is configured across these domains running the PBB M:1 model to provide infrastructure for multiple IVPLS services. MMRP is enabled in the BVPLS to build per IVPLS flooding trees. To limit the load in the core PEs or PBB BCBs, the local IVPLS instances must use MMRP and data plane resources only in the MAN regions where they have sites. A solution to these requirements is depicted in Limiting the scope of MMRP advertisements. The case of native PBB metro domains interconnected through an MPLS core is used in this example. Other technology combinations are possible.

An MRP policy can be applied to the edge of the MAN1 domain to restrict the MMRP advertisements for local ISIDs from leaving the local domain. Alternatively, the MRP policy can specify the inter-domain ISIDs that are allowed to be advertised outside MAN1. The configuration of an MRP policy is similar to the configuration of a filter. It can be specified as a template or exclusively for a specific endpoint under the service mrp object. An ISID or a range of ISIDs can be used to specify one or multiple match criteria, which generate the list of Group MACs used as filters to control which MMRP attributes can be advertised. An example of a simple mrp-policy that allows the advertisement of Group B-MACs associated with ISID range 100-150 is provided below:

*A:ALA-7>config>service>mrp# info

----------------------------------------------

mrp-policy "test" create

default-action block

entry 1 create

match

isid 100 to 150

exit

action allow

exit

exit

----------------------------------------------
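The effect of the policy above on a specific ISID can be sketched in Python. This is a simplified model with hypothetical names. It assumes that entries are evaluated in ascending entry ID order and that the first match wins, as is usual for filter-style policies. That evaluation order is an assumption, not something stated in this section.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class MrpPolicyEntry:
    entry_id: int
    isid_from: int
    isid_to: int
    action: str  # "allow" or "block"


def evaluate(entries: List[MrpPolicyEntry], default_action: str, isid: int) -> str:
    """Return the action for the Group B-MAC of one ISID (first match wins)."""
    for entry in sorted(entries, key=lambda e: e.entry_id):
        if entry.isid_from <= isid <= entry.isid_to:
            return entry.action
    return default_action


# Mirrors mrp-policy "test": default-action block, entry 1 allows ISID 100 to 150.
test_policy = [MrpPolicyEntry(entry_id=1, isid_from=100, isid_to=150, action="allow")]

for isid in (99, 100, 150, 151):
    print(isid, evaluate(test_policy, "block", isid))
```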

A special action, end-station, is available under the mrp-policy entry object to allow the emulation of an MMRP end-station on a specific SAP/PW. This is usually required when the operator does not want to activate MRP in the WAN domain for interoperability reasons, or prefers to manually specify which ISID is interconnected over the WAN. In this case, the MRP transmission is shut down on that SAP/PW and the configured ISIDs are used the same way as an IVPLS connection into the BVPLS, emulating a static entry in the related BVPLS MFIB. Also, if MRP is active in the BVPLS context, MMRP declares the related GB-MACs continuously over all the other BVPLS SAP/PWs until the mrp-policy end-station action is removed from the mrp-policy assigned to that BVPLS context.

The MMRP usage of the mrp-policy automatically ensures that traffic using a GB-MAC is not flooded between domains. There can, however, be short transitory periods when traffic originated from a PBB BEB with a unicast B-MAC destination is flooded in the BVPLS context as unknown unicast, for both IVPLS and PBB Epipe. To restrict distribution of this traffic for local PBB services, an ISID match criterion is added to the existing mac-filters. The mac-filter configured with ISID match criteria can be applied to the same interconnect endpoints (BVPLS SAP or PW) as the mrp-policy to restrict the egress transmission of any type of frame that contains a local ISID. An example of this configuration option is as follows:

----------------------------------------------

A:ALA-7>config>filter# info

----------------------------------------------

mac-filter 90 create

description "filter-wan-man"

type isid

scope template

entry 1 create

description "drop-local-isids"

match

isid from 100 to 1000

exit

action drop

exit

----------------------------------------------

These filters are applied as required on a per B-SAP or B-PW basis, in the egress direction only. The ISID match criteria are mutually exclusive with any other criteria under mac-filter. The mac-filter type attribute controls the use of ISID match criteria and must be set to isid to allow them. The ISID tag is identified using the PBB ethertype provisioned under config>port>ethernet>pbb-etype.
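To make the ISID match concrete, the Python sketch below extracts the 24-bit ISID from a PBB frame and applies the drop range used in the example filter. It is an illustration with hypothetical names, not the implementation. It assumes the IEEE 802.1ah frame layout (B-DA, B-SA, an optional 4-byte B-TAG, then the I-TAG), the PBB Ethertype 0x88e7, and B-TAG TPIDs of 0x88a8 or 0x8100. It also assumes that frames that do not match the entry are forwarded.

```python
import struct
from typing import Optional

PBB_ETYPE = 0x88E7              # PBB Ethertype; configurable per port
BTAG_ETYPES = {0x88A8, 0x8100}  # assumed B-TAG TPIDs, deployment dependent


def extract_isid(frame: bytes, pbb_etype: int = PBB_ETYPE) -> Optional[int]:
    """Return the ISID from the I-TAG, or None if the frame is not PBB."""
    offset = 12  # skip B-DA (6 bytes) and B-SA (6 bytes)
    if len(frame) < offset + 2:
        return None
    (etype,) = struct.unpack_from("!H", frame, offset)
    if etype in BTAG_ETYPES:
        offset += 4  # skip B-TAG TPID and TCI
        if len(frame) < offset + 2:
            return None
        (etype,) = struct.unpack_from("!H", frame, offset)
    if etype != pbb_etype or len(frame) < offset + 6:
        return None
    (itci,) = struct.unpack_from("!I", frame, offset + 2)
    return itci & 0xFFFFFF  # low 24 bits of the I-TAG carry the ISID


def egress_drop(frame: bytes, first: int = 100, last: int = 1000) -> bool:
    """Mirror 'match isid from 100 to 1000 / action drop' for one frame."""
    isid = extract_isid(frame)
    return isid is not None and first <= isid <= last


def build_frame(isid: int) -> bytes:
    """Build a minimal illustrative PBB header with a B-TAG (VID 1)."""
    b_da = bytes.fromhex("001000010004")
    b_sa = bytes.fromhex("001000010001")
    return b_da + b_sa + struct.pack("!HH", 0x88A8, 1) + struct.pack("!HI", PBB_ETYPE, isid)


print(extract_isid(build_frame(500)), egress_drop(build_frame(500)))
print(extract_isid(build_frame(10001)), egress_drop(build_frame(10001)))
```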

PBB and BGP-AD

BGP auto-discovery is supported only in the BVPLS to automatically instantiate the BVPLS pseudowires and SDPs.

PBB E-Line service

E-Line service is defined in PBB (IEEE 802.1ah) as a point-to-point service over the B-component infrastructure. The Nokia implementation offers support for PBB E-Line through the mapping of multiple Epipe services to a backbone VPLS infrastructure.

The use of Epipe scales the E-Line services as no MAC switching, learning or replication is required to deliver the point-to-point service.

All packets that ingress the customer SAP/spoke SDP are PBB encapsulated and unicasted through the B-VPLS ‟tunnel” using the backbone destination MAC of the remote PBB PE. The Epipe service does not support the forwarding of PBB encapsulated frames received on SAPs or spoke SDPs through their associated B-VPLS service. PBB frames are identified based on the configured PBB Ethertype (0x88e7 by default).

All the packets that ingress the B-VPLS destined for the Epipe are PBB de-encapsulated and forwarded to the customer SAP/spoke SDP.

A PBB E-Line service supports the configuration of a SAP or non-redundant spoke SDP.

Non-redundant PBB Epipe spoke termination

This feature provides the capability to use non-redundant pseudowire connections on the access side of a PBB Epipe, where previously only SAPs could be configured.

PBB using G.8031 protected Ethernet tunnels

The IEEE 802.1ah Provider Backbone Bridging (PBB) specification employs provider MSTP (P-MSTP) to ensure loop avoidance in a resilient native Ethernet core. The usage of P-MSTP means failover times depend largely on the size and the connectivity model used in the network. The use of MPLS tunnels provides a way to scale the core while offering fast failover times using MPLS FRR. There are still service provider environments where Ethernet services are deployed using native Ethernet backbones. A solution based on a native Ethernet backbone is required to achieve the same fast failover times as in the MPLS FRR case.

The Nokia PBB implementation offers the capability to use core Ethernet tunnels compliant with the ITU-T G.8031 specification to achieve 50 ms resiliency for backbone failures. This is required to comply with the stringent SLAs provided by service providers in the current competitive environment. The implementation also allows a LAG-emulating Ethernet tunnel providing a complementary native Ethernet E-LAN capability. The LAG-emulating Ethernet tunnels and G.8031 protected Ethernet tunnels operate independently.

The next section describes an applicability example where an Ethernet service provider using native PBB offers a carrier of carrier backhaul service for mobile operators.

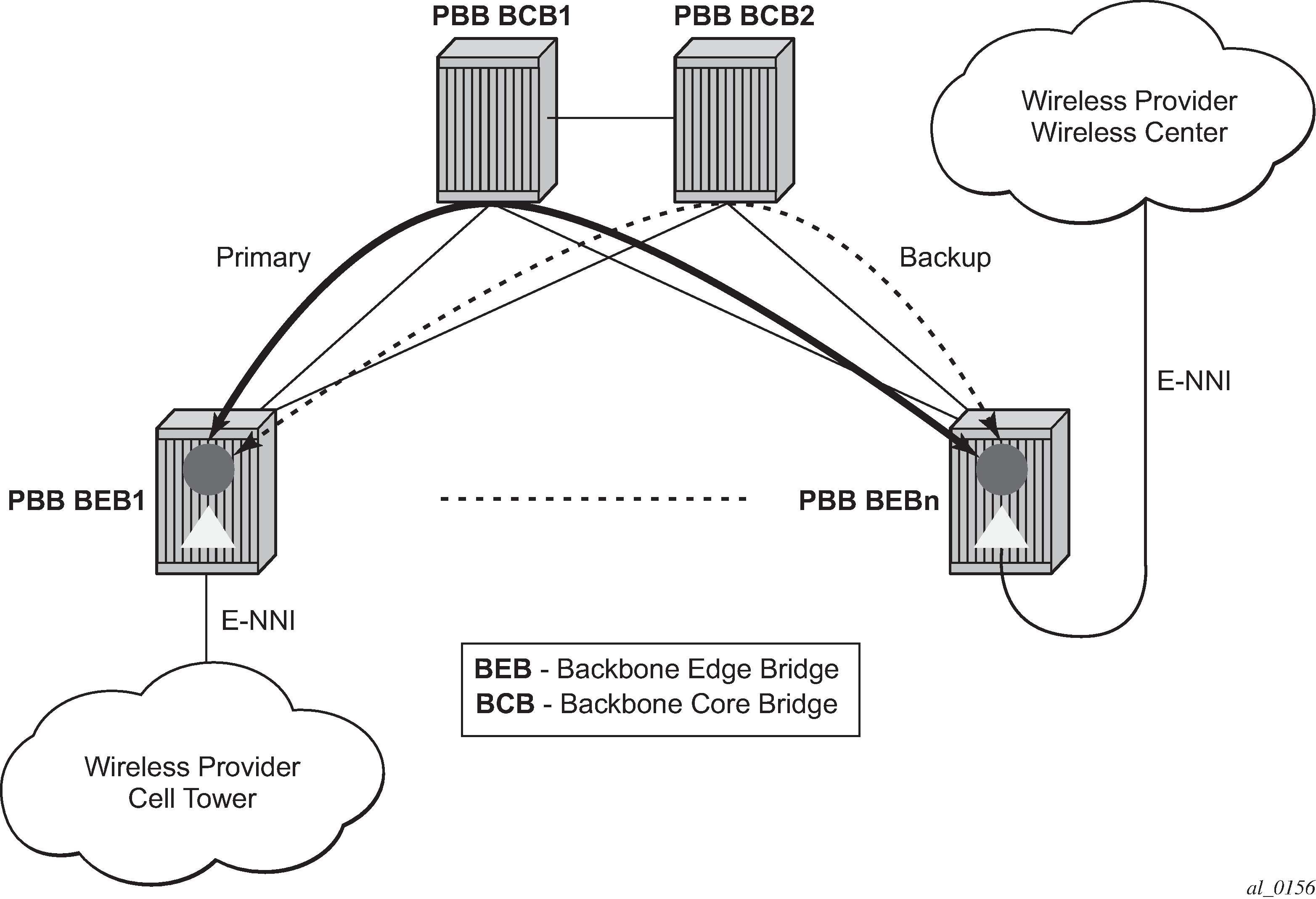

Solution overview

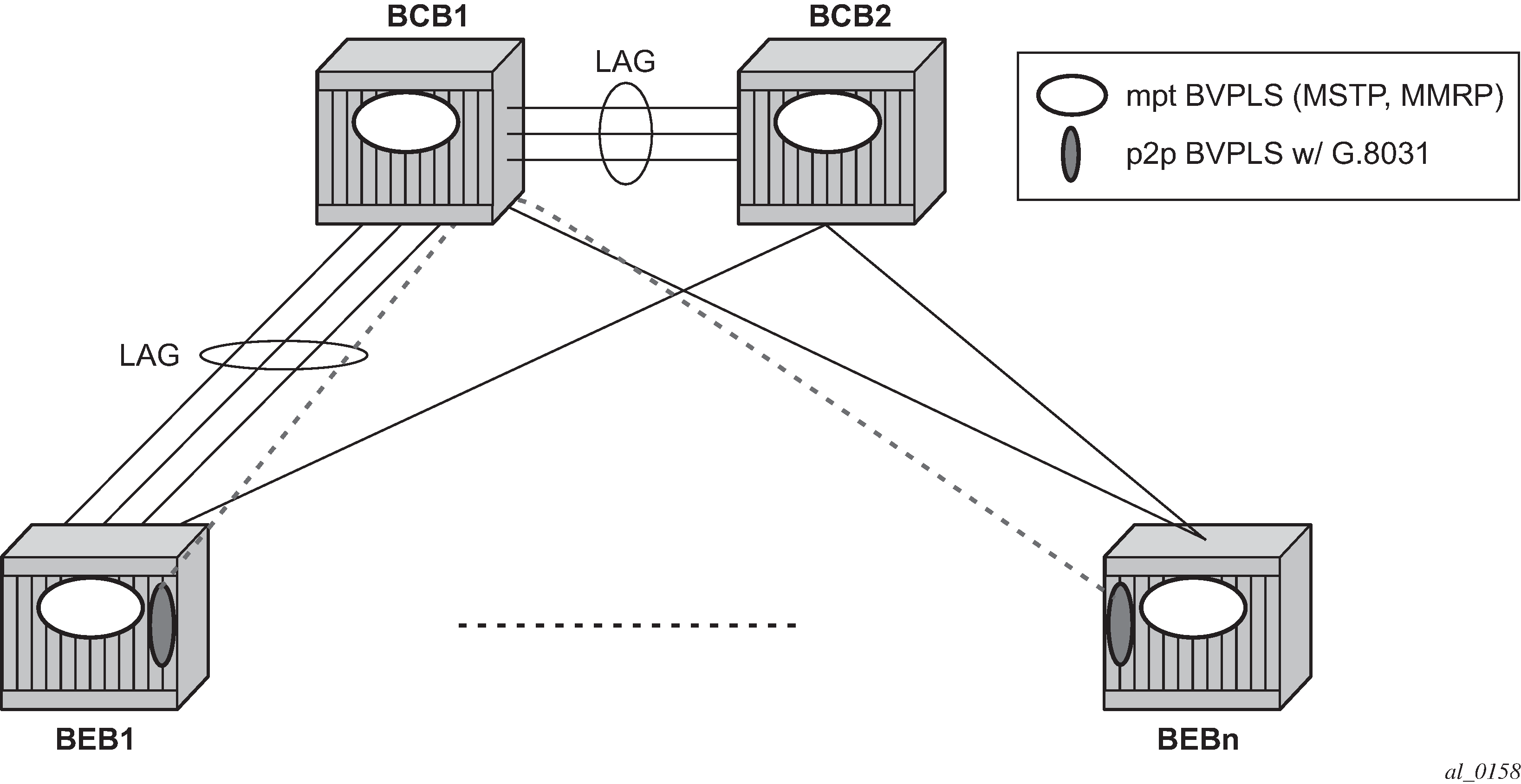

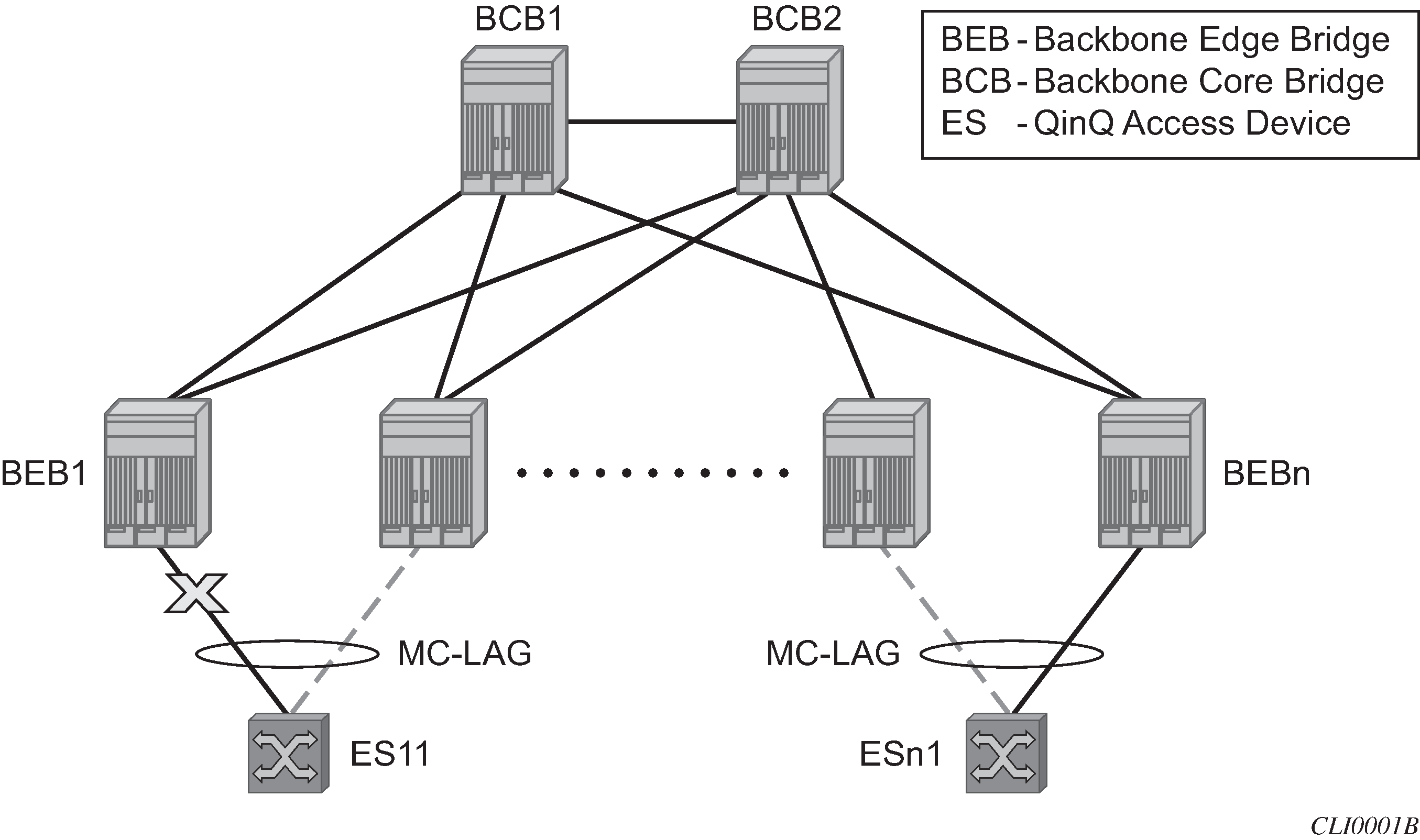

A simplified topology example for a PBB network offering a carrier of carrier service for wireless service providers is depicted in Mobile backhaul use case.

The wireless service provider in this example purchases an E-Line service between the ENNIs on PBB edge nodes, BEB1 and BEBn. PBB services employ a type of Ethernet tunneling (Eth-tunnels) between BEBs, where primary and backup member paths controlled by G.8031 1:1 protection are used to ensure faster backbone convergence. Ethernet CCMs based on the IEEE 802.1ag specification may be used to monitor the liveness of each individual member path.

The Ethernet paths span a native Ethernet backbone where the BCBs are performing simple Ethernet switching between BEBs using an Epipe or a VPLS service.

Although the network diagram shows just the Epipe case, both PBB E-Line and E-LAN services are supported.

Detailed solution description

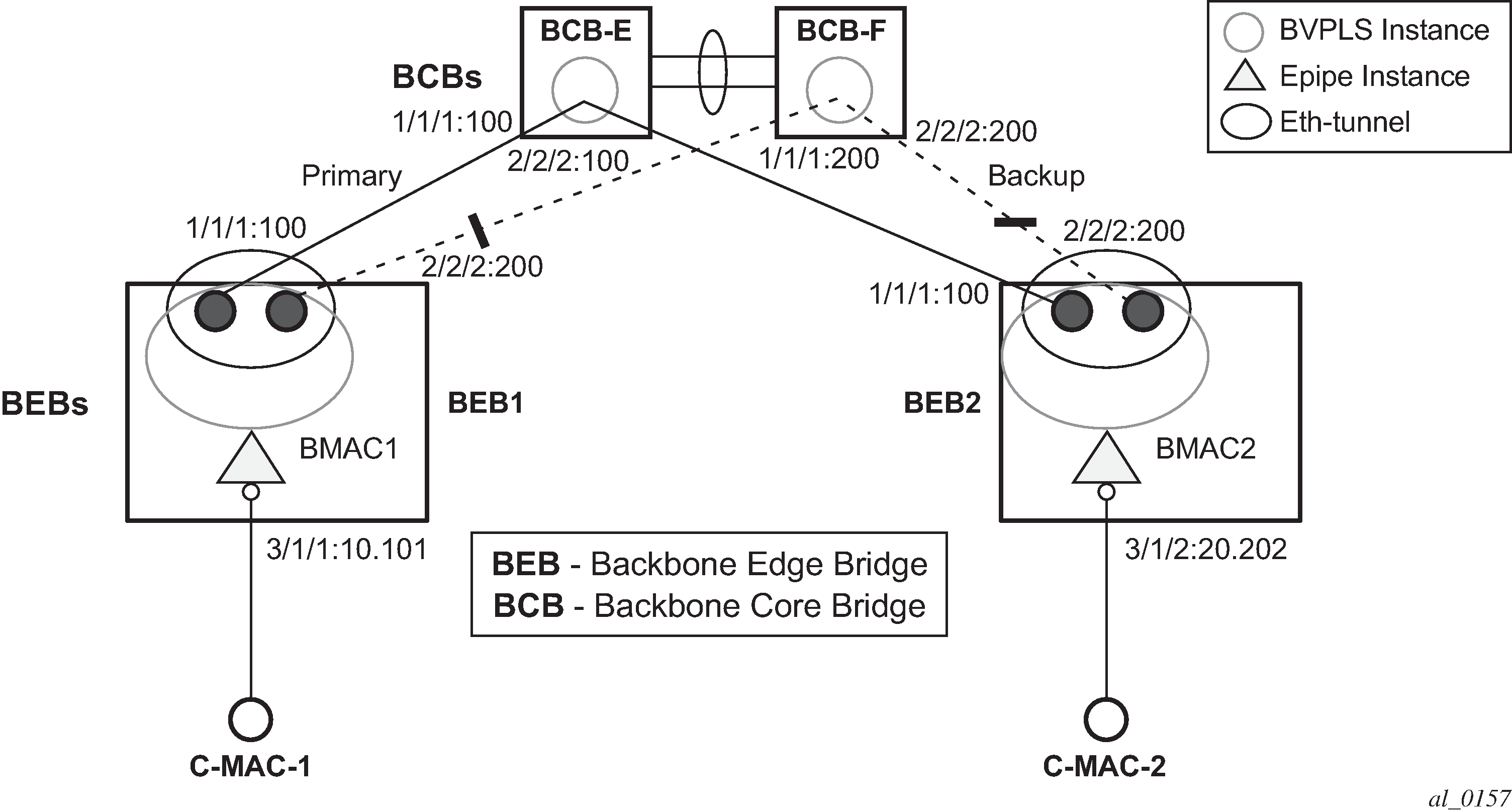

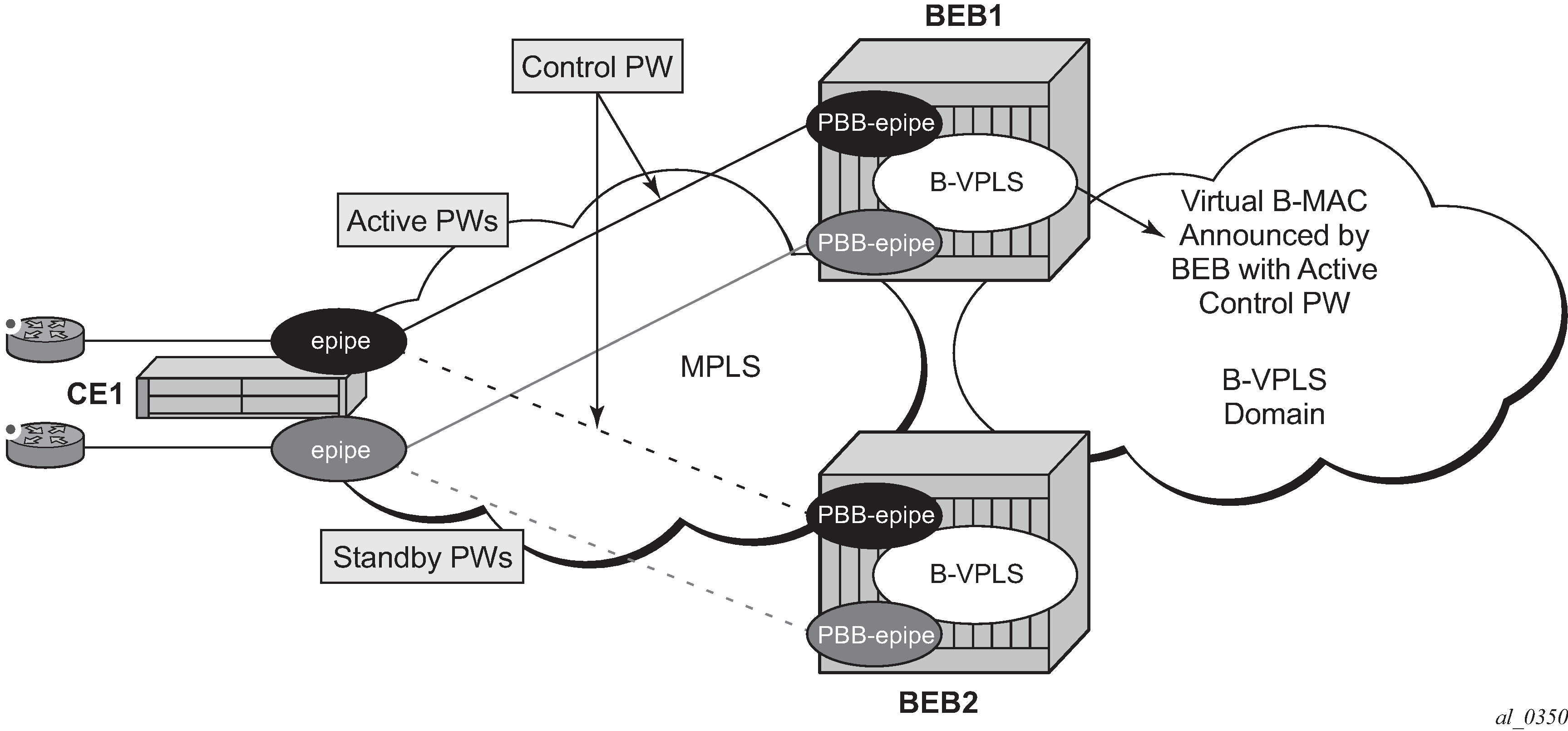

This section discusses the details of the Ethernet tunneling for PBB. The main solution components are depicted in PBB-Epipe with B-VPLS over Ethernet tunnel.

The PBB E-Line service is represented in the BEBs as a combination of an Epipe mapped to a BVPLS instance. An eth-tunnel object is used to group two possible paths, each defined by specifying a member port and a control tag. In this example, the blue circle representing the eth-tunnel associates the two paths, instantiated as (port, control-tag/BVID), in a protection group: a primary path of port 1/1/1, control-tag 100 and a secondary path of port 2/2/2, control-tag 200.

The BCB devices stitch each BVID between different BEB-BCB links using either a VPLS or an Epipe service. Epipe instances are recommended as the preferred option because of the increased tunnel scalability.

Fast failure detection on the primary and backup paths is provided using IEEE 802.1ag CCMs, which can be configured to transmit at 10 ms intervals. Alternatively, link layer fault detection mechanisms such as LoS/RDI or 802.3ah can be employed.

Path failover is controlled by an Ethernet protection module, based on standard G.8031 Ethernet Protection Switching. The Nokia implementation of Ethernet protection switching supports only the 1:1 model, which is common practice for packet-based services because it makes better use of available bandwidth. The following additional functions are provided by the protection module:

Synchronization between BEBs such that both send and receive on the same Ethernet path in stable state.

Revertive / non-revertive choices.

Compliant G.8031 control plane.
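The 1:1 path selection and the revertive / non-revertive choice can be illustrated with a minimal Python sketch. This is a simplified model and not the Nokia implementation: the names Path, EthTunnel, and revertive are hypothetical, the port and control-tag values come from the example above, and G.8031 APS signaling and timers such as wait-to-restore are deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One member path of the tunnel, identified as (port, control-tag)."""
    port: str
    control_tag: int
    up: bool = True


class EthTunnel:
    """Hypothetical 1:1 protection group with a primary and a secondary path."""

    def __init__(self, primary, secondary, revertive=True):
        self.primary = primary
        self.secondary = secondary
        self.revertive = revertive
        self.active = primary  # traffic starts on the primary path

    def update(self):
        """Re-evaluate the active path after a path state change."""
        standby = self.secondary if self.active is self.primary else self.primary
        if not self.active.up and standby.up:
            # Active path failed: switch to the other path if it is usable.
            self.active = standby
        elif self.revertive and self.active is self.secondary and self.primary.up:
            # Revertive mode: return to the primary once it has recovered.
            self.active = self.primary
        return self.active


tunnel = EthTunnel(Path("1/1/1", 100), Path("2/2/2", 200), revertive=True)
tunnel.primary.up = False
print(tunnel.update().port)  # secondary path takes over
tunnel.primary.up = True
print(tunnel.update().port)  # revertive mode returns to the primary
```

In non-revertive mode (revertive=False) the same sequence leaves traffic on the secondary path after the primary recovers, which avoids a second traffic hit at the cost of not returning to the preferred path.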

The secondary path requires a MEP to exchange the G.8031 APS PDUs. The following Ethernet CFM configuration in the eth-tunnel>path>eth-cfm>mep context can be used to enable the G.8031 protection without activating the Ethernet CCMs:

- Create the domain (MD) in CFM.

- Create the association (MA) in CFM and do not put remote MEPs.

- Create the MEP.

- Configure control-mep and no shutdown on the MEP.

- Use the no ccm-enable command to keep the CCM transmission disabled.

If a MEP is required for troubleshooting issues on the primary path, the configuration described above for the secondary path must be used to enable the use of Link Layer OAM on the primary path.
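The MEP settings listed above for APS-only operation can be summarized as a small checklist. The following Python sketch is only an illustration of those rules: the MepSettings structure and the aps_only_issues function are hypothetical names introduced here and do not correspond to any SR OS API or data model.

```python
from dataclasses import dataclass, field


@dataclass
class MepSettings:
    """Hypothetical summary of the MEP settings relevant to the steps above."""
    control_mep: bool
    admin_up: bool
    ccm_enabled: bool
    remote_mep_ids: list = field(default_factory=list)


def aps_only_issues(mep):
    """Return the deviations from the 'APS without CCM' configuration steps."""
    issues = []
    if not mep.control_mep:
        issues.append("control-mep is not configured")
    if not mep.admin_up:
        issues.append("MEP is shut down")
    if mep.ccm_enabled:
        issues.append("CCM transmission is enabled")
    if mep.remote_mep_ids:
        issues.append("remote MEPs are configured in the association")
    return issues


good = MepSettings(control_mep=True, admin_up=True, ccm_enabled=False)
print(aps_only_issues(good))  # an empty list means all steps are satisfied
```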

LAG loadsharing is offered to complement G.8031 protected Ethernet tunnels for situations where unprotected VLAN services are to be offered on some or all of the same native Ethernet links.

In G.8031 P2P tunnels and LAG-like loadsharing coexistence, the G.8031 Ethernet tunnels are used by the B-SAPs mapped to the green BVPLS entities supporting the E-Line services. A LAG-like loadsharing solution is provided for the Multipoint BVPLS (white circles) supporting the E-LAN (IVPLS) services. The green G.8031 tunnels coexist with LAG-emulating Ethernet tunnels (loadsharing mode) on both BEB-BCB and BCB-BCB physical links.

The G.8031-controlled Ethernet tunnels select an active tunnel based on G.8031 APS operation, while emulated-LAG Ethernet tunnels hash traffic within the configured links. Upon failure of one of the links, the emulated-LAG tunnels rehash traffic within the remaining links and fail the tunnel when the number of links falls below the minimum required. This happens independently of any G.8031-controlled Ethernet tunnels that share links with the emulated LAG.
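The hashing and rehashing behavior of an emulated-LAG tunnel can be sketched in a few lines of Python. The sketch rests on assumptions: the hash inputs and algorithm used by the actual implementation are not described here, so CRC32 over an opaque flow key stands in for them, and the function name select_member and the min_links parameter are hypothetical.

```python
import zlib


def select_member(flow_key, members, min_links=1):
    """Pick the member port for a flow, or None if the tunnel is down.

    flow_key  -- bytes identifying the flow (assumed hash input)
    members   -- dict mapping member port name to its operational state
    min_links -- minimum number of operational members required
    """
    active = sorted(port for port, up in members.items() if up)
    if len(active) < min_links:
        return None  # too few links left: the tunnel is failed
    # Hash the flow onto the operational members only, so a link failure
    # automatically redistributes (rehashes) flows over the remaining links.
    return active[zlib.crc32(flow_key) % len(active)]


members = {"1/1/1": True, "2/2/2": True}
flow = b"00:00:5e:00:53:01->00:00:5e:00:53:02"
print(select_member(flow, members, min_links=1))

members["1/1/1"] = False
print(select_member(flow, members, min_links=1))  # only 2/2/2 remains
print(select_member(flow, members, min_links=2))  # below threshold: None
```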

Detailed PBB emulated LAG solution description

This section discusses the details of the emulated LAG Ethernet tunnels for PBB. The main solution components are depicted in Ethernet tunnel overlay which overlays Ethernet Tunnels services on the network from PBB-Epipe with B-VPLS over Ethernet tunnel.

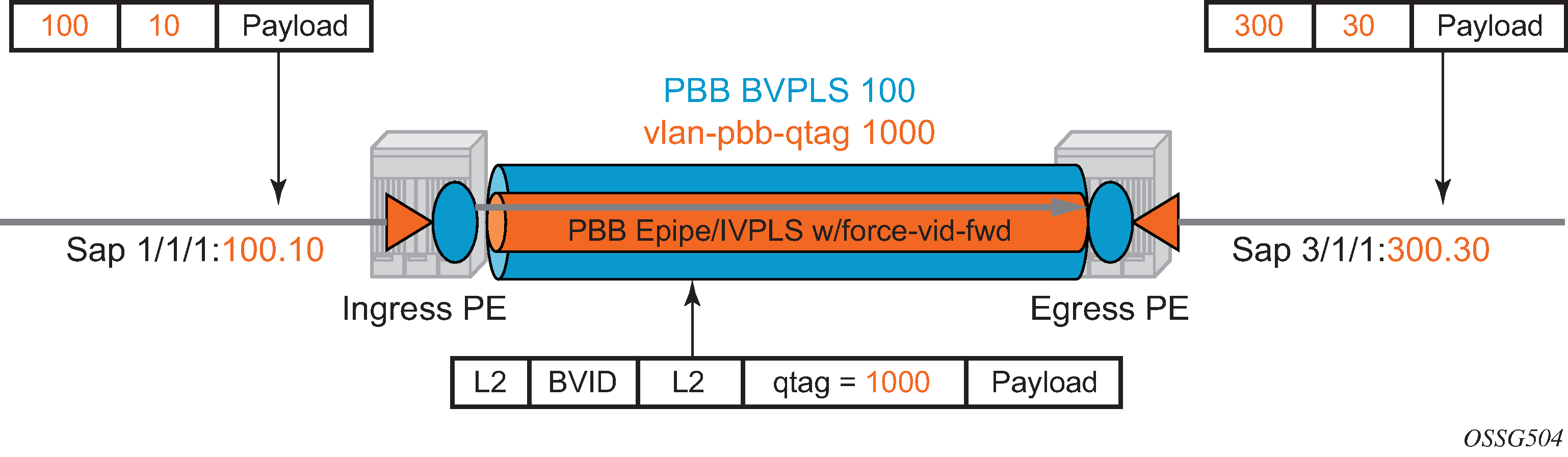

For a PBB Ethernet VLAN to make efficient use of an emulated LAG solution, a Management-VPLS (m-VPLS) is configured enabling Provider Multi-Instance Spanning Tree Protocol (P-MSTP). The m-VPLS is assigned to two SAPs; the eth-tunnels connecting BEB1 to BCB-E and BCB-F, respectively, reserving a range of VLANs for P-MSTP.

The PBB P-MSTP service is represented in the BEBs as a combination of an Epipe mapped to a BVPLS instance, as before, but the PBB service can now use the Ethernet tunnels under P-MSTP control and load share traffic on the emulated LAG. In our example, the blue circle representing the BVPLS is assigned to the SAPs, each of which defines two paths. All paths are specified with primary precedence to load share the traffic.

A Management VPLS (m-VPLS) is first configured with a VLAN range and assigned to the SAPs containing the paths to the BCBs. The load-shared eth-tunnel objects are defined by specifying member ports and a control tag of zero. Individual B-VPLS services can then be assigned to the member paths of the emulated LAGs, with the path encapsulation defined. Finally, individual services such as the IVPLS service can be assigned to the B-VPLS.

At the BCBs, the tunnels are terminated in the next BVPLS instance, which is controlled by P-MSTP on the BCBs, to forward the traffic.

In the event of link failure, the emulated LAG group automatically adjusts the number of paths. A threshold can be set whereby the LAG group is declared down. All emulated LAG operations are independent of any 8031-1to1 operation.

Support service and solution combinations

The following considerations apply when Ethernet tunnels are configured under a VPLS service:

Only ports in access or hybrid mode can be configured as eth-tunnel path members. The member ports can be located on the same or different IOMs, MDAs, XCMs, or XMAs.

Dot1q and QinQ ports are supported as eth-tunnel path members.

The same port cannot be used as member in both a LAG and an Ethernet-tunnel.

A mix of regular and multiple eth-tunnel SAPs and PWs can be configured in the same BVPLS.

Split horizon groups in BVPLS are supported on eth-tunnel SAPs. The use of split horizon groups allows the emulation of a VPLS model over the native Ethernet core, eliminating the need for P-MSTP.

STP and MMRP are not supported in a BVPLS using eth-tunnel SAPs.

Both PBB E-Line (Epipe) and E-LAN (IVPLS) services can be transported over a BVPLS using Ethernet-tunnel SAPs.

MC-LAG access multihoming into PBB services is supported in combination with Ethernet tunnels:

MC-LAG SAPs can be configured in IVPLS or Epipe instances mapped to a BVPLS that uses eth-tunnel SAPs

Blackhole Avoidance using native PBB MAC flush/MAC move solution is also supported

Support is also provided for BVPLS with P-MSTP and MMRP control plane running as ships-in-the-night on the same links with the Ethernet tunneling which is mapped by a SAP to a different BVPLS.

Epipes must be used in the BCBs to support scalable point-to-point tunneling between the eth-tunnel endpoints when management VPLS is used.

The following solutions or features are not supported in the current implementation for the 7450 ESS and 7750 SR and are blocked:

Capture SAP

Subscriber management

Application assurance

Eth-tunnels usage as a logical port in the config>redundancy>multi-chassis>peer>sync>port context

For more information, see the 7450 ESS, 7750 SR, 7950 XRS, and VSR Services Overview Guide.

Periodic MAC notification

Virtual B-MAC learning frames (for example, the frames sent with the source MAC set to the virtual B-MAC) can be sent periodically, allowing all BCBs/BEBs to keep the virtual B-MAC in their Layer 2 forwarding database.

This periodic mechanism is useful in the following cases:

A new BEB is added after the current mac-notification method has stopped sending learning frames.

A new combination of [MC-LAG:SAP|A/S PW]+[PBB-Epipe]+[associated B-VPLS]+[at least one B-SDP|B-SAP] becomes active. The current mechanism only sends learning frames when the first such combination becomes active.

A BEB containing the remote endpoint of a dual-homed PBB-Epipe is rebooted.

Traffic is not seen for the MAC aging timeout (assuming that the new periodic sending interval is less than the aging timeout).

There is unidirectional traffic.

In each of the above cases, all of the remote BEB/BCBs learn the virtual MAC in the worst case after the next learning frame is sent.
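The relationship between the periodic sending interval and the MAC aging timeout can be checked with a small simulation. This Python sketch is a simplified model under stated assumptions: time advances in whole seconds, no data traffic refreshes the entry, and the function name and the interval values are hypothetical (only the 5-minute default aging timer appears elsewhere in this document).

```python
def stays_learned(aging_timeout, notify_interval, duration):
    """Return True if the virtual B-MAC never ages out within `duration`.

    aging_timeout   -- FDB aging timeout in seconds
    notify_interval -- period of the learning frames in seconds (0 = none)
    duration        -- simulated time in seconds
    """
    last_seen = 0  # the entry is learned at time 0
    for now in range(1, duration + 1):
        if now - last_seen >= aging_timeout:
            return False  # entry aged out before it was refreshed
        if notify_interval and now % notify_interval == 0:
            last_seen = now  # periodic learning frame refreshes the entry
    return True


print(stays_learned(aging_timeout=300, notify_interval=120, duration=3600))
print(stays_learned(aging_timeout=300, notify_interval=600, duration=3600))
print(stays_learned(aging_timeout=300, notify_interval=0, duration=3600))
```

Only the first call keeps the entry in the forwarding database for the whole hour, because its sending interval is shorter than the aging timeout.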

In addition, this allows all of the above cases to be used in conjunction with discard-unknown in the B-VPLS. Currently, if discard-unknown is enabled in all related B-VPLSs (to avoid any traffic flooding), all of the above cases could experience an increased traffic interruption, or a permanent loss of traffic, because only traffic toward the dual-homed PBB-Epipe can restart bidirectional communication. For example, periodic MAC notification reduces the traffic outage when:

The PBB-Epipe virtual MAC is flushed on a remote BEB/BCB because of the failover of an MC-LAG or A/S pseudowires within the customer’s access network, for example, in between the dual homed PBB-Epipe peers and their remote tunnel endpoint.

There is a failure in the PBB core causing the path between the two BEBs to pass through a different BCB.

It should be noted that this does not help in the case where the remote tunnel endpoint BEB fails. In this case traffic is flooded when the remote B-MAC ages out if discard-unknown is disabled. If discard-unknown is enabled, then the traffic follows the path to the failed BEB but is eventually dropped on the source BEB when the remote B-MAC ages out on all systems.

To scale the implementation it is expected that the timescale for sending the periodic notification messages is much longer than that used for the current notification messages.

MAC flush

PBB resiliency for B-VPLS over pseudowire infrastructure

The following VPLS resiliency mechanisms are also supported in PBB VPLS:

Native Ethernet resiliency supported in both I-VPLS and B-VPLS contexts

Distributed LAG, MC-LAG, RSTP

MSTP in a management VPLS monitoring (B- or I-) SAPs and pseudowire

BVPLS service resiliency, loop avoidance solutions - Mesh, active/standby pseudowires and multi-chassis endpoint

IVPLS service resiliency, loop avoidance solutions - Mesh, active/standby pseudowires (PE-rs only role), BGP multihoming

To support these resiliency options, extensive support for blackhole avoidance mechanisms is required.

Porting existing VPLS LDP MAC flush in PBB VPLS

Both the I-VPLS and B-VPLS components inherit the LDP MAC flush capabilities of a regular VPLS to fast age the related FDB entries for each domain: C-MACs for I-VPLS and B-MACs for B-VPLS. Both types of LDP MAC flush are supported for I-VPLS and B-VPLS domains:

flush-all-but-mine

This refers to flushing on a positive event, for example:

pseudowire activation (VPLS resiliency using active/standby pseudowire)

reception of a STP TCN

flush-all-from-me

This refers to flushing on a negative event, for example:

SAP failure (link down or MC-LAG out-of-sync)

pseudowire or endpoint failure

In addition, only for the B-VPLS domain, changing the backbone source MAC of a B-VPLS triggers an LDP MAC flush-all-from-me to be sent in the related active topology. At the receiving PBB PE, a B-MAC flush automatically triggers a flushing of the C-MACs associated with the old source B-MAC of the B-VPLS.
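The difference between the two flush types can be made concrete with a short Python sketch. It models the FDB as a plain dictionary from MAC address to the peer it was learned from. The function names mirror the message names, but the data model and the example MAC and peer names are hypothetical simplifications, not the implementation.

```python
def flush_all_but_mine(fdb, sender):
    """Positive event: keep only the entries learned from the sender."""
    return {mac: peer for mac, peer in fdb.items() if peer == sender}


def flush_all_from_me(fdb, sender):
    """Negative event: remove only the entries learned from the sender."""
    return {mac: peer for mac, peer in fdb.items() if peer != sender}


# Hypothetical FDB on a receiving PE: MAC address -> peer it was learned from.
fdb = {"mac-1": "PE-A", "mac-2": "PE-B", "mac-3": "PE-A"}

print(flush_all_but_mine(fdb, "PE-A"))  # entries from PE-A survive
print(flush_all_from_me(fdb, "PE-A"))   # entries from PE-A are removed
```

A flush-all-from-me is the narrower operation: it touches only the entries that pointed at the failed element, so unrelated MAC addresses do not need to be relearned.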

PBB blackholing issue

In the PBB VPLS solution, a B-VPLS may be used as infrastructure for one or more I-VPLS instances. The B-VPLS control plane (LDP signaling or P-MSTP) replaces the I-VPLS control plane throughout the core. This raises an additional challenge related to blackhole avoidance in the I-VPLS domain, as described in this section.

To illustrate the PBB blackholing issue, assume that the link between PE A1 and node 5 is active. The remote PEs participating in the orange VPN (for example, PE D) learn the C-MAC X associated with backbone MAC A1. When the link between node 5 and PE A1 fails and the link to PE A2 is activated, the remote PEs (for example, PE D) blackhole the traffic destined for customer MAC X by sending it to B-MAC A1 until the aging timer expires or a packet flows from X to Y through PE A2. This may take a long time (the default aging timer is 5 minutes) and may affect a large number of flows across multiple I-VPLSs.

A similar issue occurs in the case where node 5 is connected to A1 and A2 I-VPLS using active/standby pseudowires. For example, when node 5 changes the active pseudowire, the remote PBB PE keeps sending to the old PBB PE.

Another case is when the QinQ access network dual-homed to a PBB PE uses RSTP or M-VPLS with MSTP to provide loop avoidance at the interconnection between the PBB PEs and the QinQ SWs. In the case where the access topology changes, a TCN event is generated and propagated throughout the access network. Similarly, this change needs to be propagated to the remote PBB PEs to avoid blackholing.

A solution is required to propagate the I-VPLS events through the backbone infrastructure (B-VPLS) to flush the customer MAC to B-MAC entries in the remote PBB PEs. As there are no IVPLS control plane exchanges across the PBB backbone, extensions to the B-VPLS control plane are required to propagate the I-VPLS MAC flush events across the B-VPLS.
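The blackholing scenario and the effect of a flush can be walked through with a minimal Python model of the customer MAC to B-MAC table on a remote PE such as PE D. The RemotePE class and its methods are hypothetical names used only for illustration. The 300-second aging value corresponds to the default of 5 minutes mentioned above, and a lookup miss is assumed to result in flooding within the B-VPLS (that is, discard-unknown is not enabled).

```python
AGING_SECONDS = 300  # default aging timer of 5 minutes


class RemotePE:
    """Hypothetical view of the C-MAC to B-MAC bindings on a remote PBB PE."""

    def __init__(self):
        self.bindings = {}  # C-MAC -> (B-MAC, time learned)

    def learn(self, cmac, bmac, now):
        self.bindings[cmac] = (bmac, now)

    def lookup(self, cmac, now):
        """Return the B-MAC to use, or None if the C-MAC is unknown (flood)."""
        entry = self.bindings.get(cmac)
        if entry is None or now - entry[1] >= AGING_SECONDS:
            return None
        return entry[0]

    def flush_bmac(self, bmac):
        """Remove every C-MAC binding that points at the given B-MAC."""
        self.bindings = {c: e for c, e in self.bindings.items() if e[0] != bmac}


pe_d = RemotePE()
pe_d.learn("X", "A1", now=0)

# The access link to A1 fails at t=10, but PE D still resolves X to A1,
# so traffic is blackholed until the entry ages out.
print(pe_d.lookup("X", now=10))

# With a propagated flush, the stale binding is removed immediately and
# traffic to X is flooded until X is relearned behind A2.
pe_d.flush_bmac("A1")
print(pe_d.lookup("X", now=10))
pe_d.learn("X", "A2", now=11)
print(pe_d.lookup("X", now=12))
```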

LDP MAC flush solution for PBB blackholing

In the case of an MPLS core, B-VPLS uses T-LDP signaling to set up the pseudowire forwarding. The following I-VPLS events must be propagated across the core B-VPLS using LDP MAC flush-all-but-mine or flush-all-from-me indications:

For flush-all-but-mine indication (“positive flush”):