MPLS forwarding policy

The MPLS forwarding policy provides an interface for adding user-defined label entries into the label FIB of the router and user-defined tunnel entries into the tunnel table.

The endpoint policy allows the user to forward unlabeled packets over a set of user-defined direct or indirect next hops with the option to push a label stack on each next hop. Routes are bound to an endpoint policy when their next hop matches the endpoint address of the policy.

The user defines an endpoint policy by configuring a set of next-hop groups, each consisting of a primary and a backup next hop, and binding an endpoint to it.

The label-binding policy provides the same capability for labeled packets. In this case, labeled packets matching the ILM of the policy binding label are forwarded over the set of next hops of the policy.

The user defines a label-binding policy by configuring a set of next-hop groups, each consisting of a primary and a backup next hop, and binding a label to it.

This feature is targeted for router programmability in SDN environments.

Introduction to MPLS forwarding policy

This section provides information about configuring and operating an MPLS forwarding policy using CLI.

There are two types of MPLS forwarding policy:

endpoint policy

label-binding policy

The endpoint policy allows the user to forward unlabeled packets over a set of user-defined direct or indirect next hops, with the option to push a label stack on each next hop. Routes are bound to an endpoint policy when their next hop matches the endpoint address of the policy.

The label-binding policy provides the same capability for labeled packets. In this case, labeled packets matching the ILM of the policy binding label are forwarded over the set of next hops of the policy.

The data model of a forwarding policy represents each pair of {primary next hop, backup next hop} as a group and models the ECMP set as the set of Next-Hop Groups (NHGs). When a primary next hop fails, the flows of prefixes forwarded over its NHG can be switched to the backup next hop of that NHG without disturbing the flows forwarded over the other NHGs of the policy. The same applies when reverting from a backup next hop to the restored primary next hop of the same NHG.
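The data model described above can be illustrated with a minimal Python sketch. The class and function names (`NextHop`, `NextHopGroup`, `ecmp_set`) and the addresses are hypothetical and chosen only for illustration; the sketch ignores the revert timer and shows only that a failure inside one NHG changes the active next hop of that NHG and leaves the others untouched.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class NextHop:
    address: str
    up: bool = True


@dataclass
class NextHopGroup:
    index: int
    primary: NextHop
    backup: Optional[NextHop] = None

    def active(self) -> Optional[NextHop]:
        """Prefer the primary, fall back to the backup; None means the NHG is down."""
        if self.primary.up:
            return self.primary
        if self.backup is not None and self.backup.up:
            return self.backup
        return None


def ecmp_set(nhgs: List[NextHopGroup]) -> Dict[int, str]:
    """Map each operationally up NHG index to the address of its active next hop."""
    result = {}
    for nhg in nhgs:
        nh = nhg.active()
        if nh is not None:
            result[nhg.index] = nh.address
    return result


nhgs = [
    NextHopGroup(1, NextHop("192.0.2.1"), NextHop("192.0.2.2")),
    NextHopGroup(2, NextHop("192.0.2.5"), NextHop("192.0.2.6")),
]
nhgs[0].primary.up = False  # the primary next hop of NHG 1 fails
print(ecmp_set(nhgs))       # NHG 1 now uses its backup; NHG 2 is unchanged
```

Keeping the primary and backup inside one group object mirrors the per-NHG switchover: only the flows mapped to the affected group see a different next hop.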

Feature validation and operation procedures

The MPLS forwarding policy follows a number of configuration and operation rules which are enforced for the lifetime of the policy.

There are two levels of validation:

The first level validation is performed at provisioning time. The user can bring up a policy (no shutdown command) after these validation rules are met. Afterwards, the policy is stored in the forwarding policy database.

The second level validation is performed when the database resolves the policy.

Policy parameters and validation procedure rules

The following policy parameters and validation rules apply to the MPLS forwarding policy and are enforced at configuration time:

A policy must have either the endpoint or the binding-label command configured to be valid; otherwise, the no shutdown command is not allowed. These commands are mutually exclusive per policy.

The endpoint command specifies that this policy is used for resolving the next hop of IPv4 or IPv6 packets, of BGP prefixes in GRT, of static routes in GRT, of VPRN IPv4 or IPv6 prefixes, or of service packets of EVPN prefixes. It is also used to resolve the next hop of BGP-LU routes.

The resolution of prefixes in these contexts matches the IPv4 or IPv6 next-hop address of the prefix against the address of the endpoint. The family of the primary and backup next hops of the NHGs within the policy is not relevant to the resolution of prefixes using the policy.

See Tunnel table handling of MPLS forwarding policy for information about CLI commands for binding these contexts to an endpoint policy.

The binding-label command allows the user to specify the label for binding to the policy such that labeled packets matching the ILM of the binding label can be forwarded over the NHG of the policy.

The ILM entry is created only when a label is configured. Only a provisioned binding label from a reserved label block is supported. The name of the reserved label block using the reserved-label-block command must be configured.

The payload of the packet forwarded using the ILM (payload underneath the swapped label) can be IPv4, IPv6, or MPLS. The family of the primary and backup next hops of the NHG within the policy is not relevant to the type of payload of the forwarded packets.

Changes to the values of the endpoint and binding-label parameters require a shutdown of the specific forwarding policy context.

A change to the name of the reserved-label-block requires a shutdown of the forwarding-policies context. The shutdown is not required if the user extends or shrinks the range of the reserved-label-block.

The preference parameter allows the user to configure multiple endpoint forwarding policies with the same endpoint address value or multiple label-binding policies with the same binding label, which provides the capability to achieve a 1:N backup strategy for the forwarding policy. Only the most preferred policy, that is, the one with the lowest numerical preference value, is activated in the data path as described in Policy resolution and operational procedures.

Changes to the value of the preference parameter require a shutdown of the specific forwarding-policy context.

A maximum of eight label-binding policies, with different preference values, are allowed for each unique value of the binding label.

Label-binding policies with exactly the same value of the tuple {binding label, preference} are duplicates and their configuration is not allowed.

The user cannot perform no shutdown on the duplicate policy.

A maximum of eight endpoint policies, with different preference values, are allowed for each unique value of the tuple {endpoint}.

Endpoint policies with exactly the same value of the tuple {endpoint, preference} are duplicates and their configuration is not allowed.

The user cannot perform no shutdown on the duplicate policy.

The metric parameter is supported with the endpoint policy only and is inherited by the routes which resolve their next hop to this policy.

The revert-timer command configures the time to wait before switching back the resolution from the backup next hop to the restored primary next hop within an NHG. By default, this timer is disabled, meaning that the NHG immediately reverts to the primary next hop when it is restored.

The revert timer is restarted each time the primary next hop flaps and comes back up again while the previous timer is still running. If the revert timer value is changed while the timer is running, it is restarted with the new value.

The MPLS forwarding policy feature allows for a maximum of 32 NHGs consisting of, at most, one primary next hop and one backup next hop.

The next-hop command allows the user to specify a direct next-hop address or an indirect next-hop address.

A maximum of ten labels can be specified for a primary or backup direct next hop using the pushed-labels command. The label stack is programmed using a super-NHLFE directly on the outgoing interface of the direct primary or backup next hop.

Note: This policy differs from the SR-TE LSP or SR policy implementation, which can push a total of 11 labels because it uses a hierarchical NHLFE (a super-NHLFE with a maximum of 10 labels pointing to the top SID NHLFE).

The resolution-type {direct | indirect} command allows a limited validation at configuration time of the NHGs within a policy. The no shutdown command fails if any of these rules are not satisfied. The following are the rules of this validation:

NHGs within the same policy must be of the same resolution type.

A forwarding policy can have a single NHG of resolution type indirect with a primary next hop only or with both primary and backup next hops. An NHG with a backup next hop only is not allowed.

A forwarding policy can have one or more NHGs of resolution type direct, each with a primary next hop only or with both primary and backup next hops. An NHG with a backup next hop only is not allowed.

A check is performed to ensure the address value of the primary and backup next hop, within the same NHG, are not duplicates. No check is performed for duplicate primary or backup next-hop addresses across NHGs.

A maximum of 64,000 forwarding policies of any combination of label binding and endpoint types can be configured on the system.

The IP address family of an endpoint policy is determined by the family of the endpoint parameter. It is populated in the TTMv4 or TTMv6 table accordingly. A label-binding policy does not have an IP address family associated with it and is programmed into the label (ILM) table.

The following are the IP type combinations for the primary and backup next hops of the NHGs of a policy:

A primary or a backup indirect next hop with no pushed labels (label-binding policy) can be IPv4 or IPv6. A mix of both IP types is allowed within the same NHG.

A primary or backup direct next hop with no pushed labels (label-binding policy) can be IP types IPv4 or IPv6. A mix of both families is allowed within the same NHG.

A primary or a backup direct next hop with pushed labels (both endpoint and label binding policies) can be IP types IPv4 or IPv6. A mix of both families is allowed within the same NHG.
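As an illustration of the configuration-time rules listed above, the following Python sketch checks a policy definition before it would be allowed to go to no shutdown. It is only a sketch under stated assumptions: the `Policy` and `Nhg` classes, their field names, and the error strings are hypothetical and do not correspond to any router API. Only the limits and rules themselves (one of endpoint or binding-label, at most 32 NHGs, at most ten pushed labels, a single indirect NHG, no backup-only NHG, no duplicate addresses within an NHG) are taken from the text.

```python
from dataclasses import dataclass, field
from typing import List, Optional

MAX_NHGS = 32            # maximum NHGs per policy (from the text)
MAX_PUSHED_LABELS = 10   # maximum labels per primary or backup next hop


@dataclass
class Nhg:
    resolution_type: str                 # "direct" or "indirect"
    primary: Optional[str] = None
    backup: Optional[str] = None
    primary_labels: List[int] = field(default_factory=list)
    backup_labels: List[int] = field(default_factory=list)


@dataclass
class Policy:
    endpoint: Optional[str] = None
    binding_label: Optional[int] = None
    nhgs: List[Nhg] = field(default_factory=list)


def provisioning_errors(policy: Policy) -> List[str]:
    """Return the list of violated rules; an empty list means the policy is valid."""
    errors = []
    # endpoint and binding-label are mutually exclusive, and one of them is required
    if (policy.endpoint is None) == (policy.binding_label is None):
        errors.append("exactly one of endpoint or binding-label is required")
    if len(policy.nhgs) > MAX_NHGS:
        errors.append("more than 32 NHGs")
    if len({g.resolution_type for g in policy.nhgs}) > 1:
        errors.append("NHGs must share one resolution type")
    if sum(g.resolution_type == "indirect" for g in policy.nhgs) > 1:
        errors.append("only a single indirect NHG is allowed")
    for i, g in enumerate(policy.nhgs, start=1):
        if g.primary is None and g.backup is not None:
            errors.append(f"NHG {i}: backup next hop without a primary")
        if g.primary is not None and g.primary == g.backup:
            errors.append(f"NHG {i}: primary and backup addresses are duplicates")
        if max(len(g.primary_labels), len(g.backup_labels)) > MAX_PUSHED_LABELS:
            errors.append(f"NHG {i}: more than 10 pushed labels")
    return errors
```

Consistent with the text, the sketch does not compare next-hop addresses across NHGs and does not inspect the label values themselves.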

Policy resolution and operational procedures

This section describes the validation of parameters performed at resolution time, as well as the details of the resolution and operational procedures.

The following parameter validation is performed by the forwarding policy database at resolution time, meaning each time the policy is re-evaluated:

If the NHG primary or backup next hop resolves to a route whose type does not match the configured value in resolution-type, that next hop is made operationally ‟down”.

A DOWN reason code shows in the state of the next hop.

The primary and backup next hops of an NHG are looked up in the routing table. The lookups can match a direct next hop in the case of the direct resolution type and therefore the next hop can be part of the outgoing interface primary or secondary subnet. They can also match a static, IGP, or BGP route for an indirect resolution type, but only the set of IP next hops of the route are selected. Tunnel next hops are not selected and if they are the only next hops for the route, the NHG is put in operationally ‟down” state.

The first 32, out of a maximum of 64, resolved IP next hops are selected for resolving the primary or backup next hop of an NHG of resolution-type indirect.

If the primary next hop is operationally ‟down”, the NHG uses the backup next hop if it is UP. If both are operationally DOWN, the NHG is DOWN. See Data path support for details of the active path determination and the failover behavior.

If the binding label is not available, meaning it is either outside the range of the configured reserved-label-block, or is used by another MPLS forwarding policy or by another application, the label-binding policy is put operationally ‟down” and a retry mechanism checks the label availability in the background.

A policy level DOWN reason code is added to alert users who may then choose to modify the binding label value.

No validation is performed for the pushed label stack of a primary or backup next hop within an NHG or across NHGs. Users are responsible for validating their configuration.

The forwarding policy database activates the best endpoint policy, among the named policies sharing the same value of the tuple {endpoint}, by selecting the lowest preference value policy. This policy is then programmed into the TTM and into the tunnel table in the data path.

If this policy goes DOWN, the forwarding policy database performs a re-evaluation and activates the named policy with the next lowest preference value for the same tuple {endpoint}.

If a more preferred policy comes back up, the forwarding policy database reverts to the more preferred policy and activates it.

The forwarding policy database similarly activates the best label-binding policy, among the named policies sharing the same binding label, by selecting the lowest preference value policy. This policy is then programmed into the label FIB table in the data path as detailed in Data path support.

If this policy goes DOWN, the forwarding policy database performs a re-evaluation and activates the named policy with the next lowest preference value for the same binding label value.

If a more preferred policy comes back up, the forwarding policy database reverts to the more preferred policy and activates it.

The active policy performs ECMP, weighted ECMP, or CBF over the active (primary or backup) next hops of the NHG entries.

When used in the PCEP application, each LSP in a label-binding policy is reported separately by PCEP using the same binding label. The forwarding behavior on the node is the same whether the binding label of the policy is advertised in PCEP or not.

A policy is considered UP when it is the best policy activated by the forwarding policy database and when at least one of its NHGs is operationally UP. An NHG of an active policy is considered UP when at least one of the primary or backup next hops is operationally UP.

When the config>router>mpls or config>router>mpls>forwarding-policies context is set to shutdown, all forwarding policies are set to DOWN in the forwarding policy database and deprogrammed from IOM and data path.

Prefixes which were being forwarded using the endpoint policies revert to the next preferred resolution type configured in the specific context (GRT, VPRN, or EVPN).

When an NHG is set to shutdown, it is deprogrammed from the IOM and data path. Flows of prefixes which were being forwarded to this NHG are re-allocated to other NHGs based on the ECMP, Weighted ECMP, or CBF rules.

When a policy is set to shutdown, it is deleted in the forwarding policy database and deprogrammed from the IOM and data path. Prefixes which were being forwarded using this policy are reverted to the next preferred resolution type configured in the specific context (GRT, VPRN, or EVPN).

The no forwarding-policies command deletes all policies from the forwarding policy database provided none of them are bound to any forwarding context (GRT, VPRN, or EVPN). Otherwise, the command fails.
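The preference-based activation described in this section can be sketched as a simple selection over the policies that share one endpoint or one binding label. The `PolicyInstance` class and the `select_active` function are hypothetical names used for illustration only; the sketch assumes that a policy is eligible when it is administratively enabled and at least one of its NHGs is operationally up.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PolicyInstance:
    name: str
    key: str                 # endpoint address or binding label shared by the group
    preference: int = 255
    admin_up: bool = True    # False models a policy set to shutdown
    nhg_up: bool = True      # True when at least one NHG is operationally up


def select_active(policies: List[PolicyInstance], key: str) -> Optional[PolicyInstance]:
    """Pick the eligible policy with the lowest numerical preference for this key."""
    eligible = [p for p in policies if p.key == key and p.admin_up and p.nhg_up]
    # duplicate {key, preference} pairs are rejected at configuration time,
    # so the minimum is unambiguous
    return min(eligible, key=lambda p: p.preference, default=None)


policies = [
    PolicyInstance("pol-a", "198.51.100.1", preference=100),
    PolicyInstance("pol-b", "198.51.100.1", preference=200),
]
print(select_active(policies, "198.51.100.1").name)   # pol-a
policies[0].nhg_up = False                            # the preferred policy goes down
print(select_active(policies, "198.51.100.1").name)   # pol-b
policies[0].nhg_up = True                             # it comes back up: revert
print(select_active(policies, "198.51.100.1").name)   # pol-a
```

Re-running the selection on every state change reproduces both the fallback to the next lowest preference value and the reversion when a more preferred policy comes back up.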

Tunnel table handling of MPLS forwarding policy

An endpoint forwarding policy validated as the most preferred policy for an endpoint address is added to the TTMv4 or TTMv6 according to the address family of the address of the endpoint parameter. A new owner of mpls-fwd-policy is used. A tunnel ID is allocated to each policy and is added into the TTM entry for the policy. For more information about the mpls-fwd-policy command, used to enable MPLS forwarding policy in different services, see the following guides:

7450 ESS, 7750 SR, 7950 XRS, and VSR Layer 2 Services and EVPN Guide

7450 ESS, 7750 SR, 7950 XRS, and VSR Layer 3 Services Guide: IES and VPRN

7450 ESS, 7750 SR, 7950 XRS, and VSR Router Configuration Guide

7450 ESS, 7750 SR, 7950 XRS, and VSR Unicast Routing Protocols Guide

The TTM preference value of a forwarding policy is configurable using the parameter tunnel-table-pref. The default value of this parameter is 4.

Each individual endpoint forwarding policy can also be assigned a preference value using the preference command with a default value of 255. When the forwarding policy database compares multiple forwarding policies with the same endpoint address, the policy with the lowest numerical preference value is activated and programmed into TTM. The TTM preference assigned to the policy is its own configured value in the tunnel-table-pref parameter.

If the TTM preference of an active forwarding policy has the same value as that of another tunnel type for the same destination in TTM, routes and services which are bound to both types of tunnels use the default TTM preference of the two tunnel types to select the tunnel to bind to, as shown in Route preferences.

| Route preference | Value | Release introduced |
|---|---|---|
| ROUTE_PREF_RIB_API | 3 | new in 16.0.R4 for RIB API IPv4 and IPv6 tunnel table entry |
| ROUTE_PREF_MPLS_FWD_POLICY | 4 | new in 16.0.R4 for MPLS forwarding policy of endpoint type |
| ROUTE_PREF_RSVP | 7 | - |
| ROUTE_PREF_SR_TE | 8 | new in 14.0 |
| ROUTE_PREF_LDP | 9 | - |
| ROUTE_PREF_OSPF_TTM | 10 | new in 13.0.R1 |
| ROUTE_PREF_ISIS_TTM | 11 | new in 13.0.R1 |
| ROUTE_PREF_BGP_TTM | 12 | modified in 13.0.R1 (pref was 10 in R12) |
| ROUTE_PREF_UDP | 254 | introduced with 15.0 MPLS-over-UDP tunnels |
| ROUTE_PREF_GRE | 255 | - |
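The tie-break described before the Route preferences table can be sketched as a two-level comparison: first the TTM preference in effect for each tunnel entry, then the default preference of the tunnel type. This is a simplified sketch; the short tunnel-type keys (for example `"mpls-fwd-policy"`) and the `pick_tunnel` function are hypothetical, and the actual selection also depends on which tunnel types the user allows in the relevant context.

```python
# Default TTM preference per tunnel type, taken from the Route preferences table.
DEFAULT_TTM_PREF = {
    "rib-api": 3,
    "mpls-fwd-policy": 4,
    "rsvp": 7,
    "sr-te": 8,
    "ldp": 9,
    "ospf": 10,
    "isis": 11,
    "bgp": 12,
    "udp": 254,
    "gre": 255,
}


def pick_tunnel(entries):
    """entries maps a tunnel type to the TTM preference in effect for one destination.

    The lowest preference wins; on a tie, the default preference of the
    tunnel type decides.
    """
    return min(entries, key=lambda t: (entries[t], DEFAULT_TTM_PREF[t]))


# A forwarding policy configured with tunnel-table-pref 7 ties with RSVP (7);
# the default preferences (4 versus 7) break the tie.
print(pick_tunnel({"mpls-fwd-policy": 7, "rsvp": 7}))   # mpls-fwd-policy
```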

An active endpoint forwarding policy populates the highest pushed label stack size among all its NHGs in the TTM. Each service and shortcut application on the router uses that value and performs a check of the resulting net label stack by counting all the additional labels required for forwarding the packet in that context.

This check is similar to the one performed for SR-TE LSP and SR policy features. If the check succeeds, the service is bound or the prefix is resolved to the forwarding policy. If the check fails, the service does not bind to this forwarding policy. Instead, it binds to a tunnel of a different type if the user configured the use of other tunnel types. Otherwise, the service goes down. Similarly, the prefix does not get resolved to the forwarding policy and is either resolved to another tunnel type or becomes unresolved.

For more information about the resolution-filter CLI commands for resolving the next hop of prefixes in GRT, VPRN, and EVPN MPLS into an endpoint forwarding policy, see the following guides:

7450 ESS, 7750 SR, 7950 XRS, and VSR Layer 2 Services and EVPN Guide

7450 ESS, 7750 SR, 7950 XRS, and VSR Layer 3 Services Guide: IES and VPRN

7450 ESS, 7750 SR, 7950 XRS, and VSR Router Configuration Guide

7450 ESS, 7750 SR, 7950 XRS, and VSR Unicast Routing Protocols Guide

BGP-LU routes can also have their next hop resolved to an endpoint forwarding policy.

Data path support

NHG of resolution type indirect

Each NHG is modeled as a single NHLFE. The following are the specifics of the data path operation:

Forwarding over the primary or backup next hop is modeled as a swap operation from the binding label to an implicit-null label over multiple outgoing interfaces (multiple NHLFEs) corresponding to the resolved next hops of the indirect route.

Packets of flows are sprayed over the resolved next hops of an NHG with resolution of type indirect as a one-level ECMP spraying. See Spraying of packets in a MPLS forwarding policy.

An NHG of resolution type indirect uses a single NHLFE and does not support uniform failover. CPM programs only the active indirect next hop, either the primary or the backup, at any point in time.

Within an NHG, the primary next hop is the preferred active path in the absence of any failure of the NHG of resolution type indirect.

The forwarding database tracks the primary or backup next hop in the routing table. A route delete of the primary indirect next hop causes CPM to program the backup indirect next hop in the data path.

A route modify of the indirect primary or backup next hop causes CPM to update its resolved next hops and to update the data path if it is the active indirect next hop.

When the primary indirect next hop is restored and is added back into the routing table, CPM waits for an amount of time equal to the user programmed revert-timer before updating the data path. However, if the backup indirect next hop fails while the timer is running, CPM updates the data path immediately.
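The revert behavior described above can be sketched as a small state machine driven by explicit timestamps. The `IndirectNhg` class and its method names are hypothetical; time is expressed in abstract units passed in by the caller, and the sketch omits restoration of the backup next hop for brevity.

```python
from typing import Optional


class IndirectNhg:
    """Tracks which indirect next hop CPM would program in the data path."""

    def __init__(self, revert_timer: float = 0.0):
        self.revert_timer = revert_timer      # 0 models a disabled timer
        self.primary_up = True
        self.backup_up = True
        self.active: Optional[str] = "primary"
        self._revert_at: Optional[float] = None

    def primary_down(self, now: float) -> None:
        self.primary_up = False
        self._revert_at = None                # any pending reversion is cancelled
        self.active = "backup" if self.backup_up else None

    def primary_restored(self, now: float) -> None:
        self.primary_up = True
        if self.active == "backup" and self.revert_timer > 0:
            self._revert_at = now + self.revert_timer   # start or restart the timer
        else:
            self._activate_primary()

    def backup_down(self, now: float) -> None:
        self.backup_up = False
        if self.active == "backup":
            if self.primary_up:
                self._activate_primary()      # do not wait for the timer
            else:
                self.active = None

    def tick(self, now: float) -> None:
        if self._revert_at is not None and now >= self._revert_at:
            self._activate_primary()

    def _activate_primary(self) -> None:
        self.active = "primary"
        self._revert_at = None


nhg = IndirectNhg(revert_timer=10)
nhg.primary_down(now=0)        # traffic moves to the backup
nhg.primary_restored(now=5)    # reversion is scheduled for t=15
nhg.tick(now=14)
print(nhg.active)              # backup
nhg.tick(now=15)
print(nhg.active)              # primary
```

Because `primary_down` cancels the pending reversion and `primary_restored` schedules a new one, a primary next hop that flaps while the timer is running restarts the wait, in line with the revert-timer description earlier in this document.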

NHG of resolution type direct

The following rules are used for an NHG with a resolution type of direct:

Each NHG is modeled as a pair of {primary, backup} NHLFEs. The following are the specifics of the label operation:

For a label-binding policy, forwarding over the primary or backup next hop is modeled as a swap operation from the binding label to the configured label stack or to an implicit-null label (if the pushed-labels command is not configured) over a single outgoing interface to the next hop.

For an endpoint policy, forwarding over the primary or backup next hop is modeled as a push operation of the configured label stack or of an implicit-null label (if the pushed-labels command is not configured) over a single outgoing interface to the next hop.

The labels, configured by the pushed-labels command, are not validated.

By default, packets of flows are sprayed over the set of NHGs with resolution of type direct as a one-level ECMP spraying. See Spraying of packets in a MPLS forwarding policy.

The user can enable weighted ECMP forwarding over the NHGs by configuring weight against all the NHGs of the policy. See Spraying of packets in a MPLS forwarding policy.

Within an NHG, the primary next hop is the preferred active path in the absence of any failure of the NHG of resolution type direct.

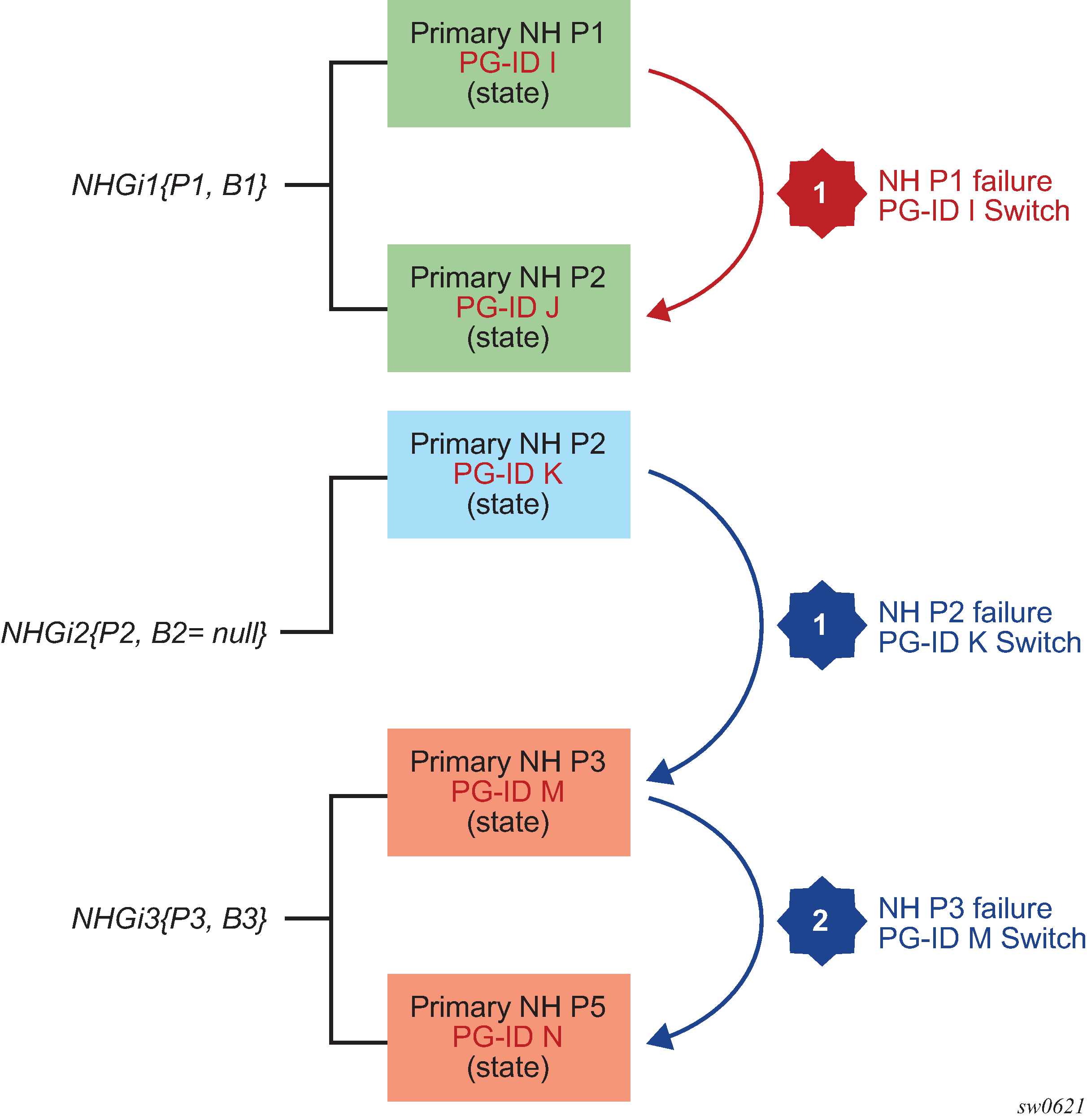

Note: The RIB API feature can change the active path away from the default. The gRPC client can issue a next-hop switch instruction to activate either the primary or the backup path at any time.

The NHG supports uniform failover. The forwarding policy database assigns a Protect-Group ID (PG-ID) to each of the primary next hop and the backup next hop and programs both of them in the data path. A failure of the active path switches traffic to the other path following the uniform failover procedures as described in Active path determination and failover in a NHG of resolution type direct.

The forwarding database tracks the primary or backup next hop in the routing table. A route delete of the primary or backup direct next hop causes CPM to send the corresponding PG-ID switch to the data path.

A route modify of the direct primary or backup next hop causes CPM to update the MPLS forwarding database and to update the data path because both next hops are programmed.

When the primary direct next hop is restored and is added back into the routing table, CPM waits for an amount of time equal to the user programmed revert-timer before activating it and updating the data path. However, if the backup direct next hop fails while the timer is running, CPM activates the restored primary next hop and updates the data path immediately. This failover to the restored primary next hop is performed using the uniform failover procedures as described in Active path determination and failover in a NHG of resolution type direct.

Note: RIB API does not support the revert timer. The gRPC client can issue a next-hop switch instruction to activate the restored primary next hop.

For each NHG, CPM keeps track of the active or inactive state of its primary and backup next hops and updates the IOM with that state following a failure event, a reversion to the primary next hop, or a successful next-hop switch request instruction (RIB API only).

Active path determination and failover in a NHG of resolution type direct

An NHG of resolution type direct supports uniform failover either within an NHG or across NHGs of the same policy. These uniform failover behaviors are mutually exclusive on a per-NHG basis depending on whether the NHG has a single primary next hop or both a primary and a backup next hop.

When an NHG has both a primary and a backup next hop, the forwarding policy database assigns a Protect-Group ID (PG-ID) to each and programs both in data path. The primary next hop is the preferred active path in the absence of any failure of the NHG.

During a failure affecting the active next hop, either the primary or the backup next hop, CPM signals the corresponding PG-ID switch to the data path, which then immediately begins using the NHLFE of the other next hop for flow packets mapped to NHGs of all forwarding policies which share the failed next hop.

An interface down event sent by CPM to the data path causes the data path to switch the PG-ID of all next hops associated with this interface and perform the uniform failover procedure for NHGs of all policies which share these PG-IDs.

Any subsequent network event causing a failure of the newly active next hop while the originally active next hop is still down blackholes traffic of this NHG until CPM updates the policy to redirect the affected flows to the remaining NHGs of the forwarding policy.

When the NHG has only a primary next hop and it fails, CPM signals the corresponding PG-ID switch to the data path which then uses the uniform failover procedure to immediately re-assign the affected flows to the other NHGs of the policy.

A subsequent failure of the active next hop of an NHG to which the affected flow was re-assigned in the first failure event causes the data path to use the uniform failover procedure to immediately switch the flow to the other next hop within the same NHG.

NHG failover based on PG-ID switch illustrates the failover behavior for the flow packets assigned to an NHG with both a primary and backup next hop and to an NHG with a single primary next hop.

The notation NHGi{Pi,Bi} refers to NHG "i" which consists of a primary next hop (Pi) and a backup next hop (Bi). When an NHG does not have a backup next hop, it is referred to as NHGi{Pi,Bi=null}.
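Using the NHGi{Pi,Bi} notation, the failover outcomes described in this section can be sketched as a lookup over a list of (primary, backup) pairs. The function name `forwarding_next_hop` is hypothetical, and the order in which a flow is re-assigned to another NHG (the next NHG in index order) is an assumption made for illustration, because the text does not specify it. The sketch returns the converged result and does not model the transient blackholing that occurs before CPM updates the policy.

```python
from typing import List, Optional, Set, Tuple

Nhg = Tuple[str, Optional[str]]   # (Pi, Bi), with Bi=None when there is no backup


def active_next_hop(nhg: Nhg, failed: Set[str]) -> Optional[str]:
    """Primary if it is up, otherwise the backup if present and up, otherwise None."""
    primary, backup = nhg
    if primary not in failed:
        return primary
    if backup is not None and backup not in failed:
        return backup
    return None


def forwarding_next_hop(nhgs: List[Nhg], flow_hash: int, failed: Set[str]) -> Optional[str]:
    """Next hop used by a flow once failover within or across NHGs has completed."""
    first = flow_hash % len(nhgs)
    # try the NHG the flow hashes to, then the remaining NHGs (assumed order)
    for offset in range(len(nhgs)):
        nh = active_next_hop(nhgs[(first + offset) % len(nhgs)], failed)
        if nh is not None:
            return nh
    return None


nhgs = [("P1", "B1"), ("P2", None)]                     # NHG1{P1,B1}, NHG2{P2,B2=null}
print(forwarding_next_hop(nhgs, 0, set()))              # P1
print(forwarding_next_hop(nhgs, 0, {"P1"}))             # B1: failover within NHG1
print(forwarding_next_hop(nhgs, 1, {"P2"}))             # P1: flow re-assigned to NHG1
print(forwarding_next_hop(nhgs, 1, {"P2", "P1"}))       # B1: second failure, same NHG
```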

Spraying of packets in a MPLS forwarding policy

When the node operates as an LER and forwards unlabeled packets over an endpoint policy, the spraying of packets over the multiple NHGs of type direct or over the resolved next hops of a single NHG of type indirect follows prior implementation. See the 7450 ESS, 7750 SR, 7950 XRS, and VSR Interface Configuration Guide.

When the node operates as an LSR, it forwards labeled packets matching the ILM of the binding label over the label-binding policy. An MPLS packet, including an MPLS-over-GRE packet, received over any network IP interface with a binding label in the label stack, is forwarded over the primary or backup next hop of either the single NHG of type indirect or of a selected NHG among multiple NHGs of type direct.

The router performs the following procedures when spraying labeled packets over the resolved next hops of an NHG of resolution type indirect or over multiple NHGs of type direct.

The router performs the GRE header processing as described in MPLS-over-GRE termination if the packet is MPLS-over-GRE encapsulated. See the 7450 ESS, 7750 SR, 7950 XRS, and VSR Router Configuration Guide.

The router then pops one or more labels and if there is a match with the ILM of a binding label, the router swaps the label to implicit-null label and forwards the packet to the outgoing interface. The outgoing interface is selected from the set of primary or backup next hops of the active policy based on the LSR hash on the headers of the received MPLS packet.

The hash calculation follows the method in the user configuration of the command lsr-load-balancing {lbl-only | lbl-ip | ip-only} if the packet is MPLS-only encapsulated.

The hash calculation follows the method described in LSR Hashing of MPLS-over-GRE Encapsulated Packet if the packet is MPLS-over-GRE encapsulated. See the 7450 ESS, 7750 SR, 7950 XRS, and VSR Interface Configuration Guide.

Outgoing packet Ethertype setting and TTL handling in label binding policy

The following rules determine how the router sets the Ethertype field value of the outgoing packet:

If the swapped label is not the Bottom-of-Stack label, the Ethertype is set to the MPLS value.

If the swapped label is the Bottom-of-Stack label and the outgoing label is not implicit-null, the Ethertype is set to the MPLS value.

If the swapped label is the Bottom-of-Stack label and the outgoing label is implicit-null, the Ethertype is set to the IPv4 or IPv6 value when the first nibble of the exposed IP packet is 4 or 6 respectively.

The router sets the TTL of the outgoing packet as follows:

The TTL of a forwarded IP packet is set to MIN(MPLS_TTL-1, IP_TTL), where MPLS_TTL refers to the TTL in the outermost label in the popped stack and IP_TTL refers to the TTL in the exposed IP header.

The TTL of a forwarded MPLS packet is set to MIN(MPLS_TTL-1, INNER_MPLS_TTL), where MPLS_TTL refers to the TTL in the outermost label in the popped stack and INNER_MPLS_TTL refers to the TTL in the exposed label.
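The Ethertype and TTL rules for a label-binding policy can be condensed into two small functions. This is a sketch for illustration: the function names are hypothetical, while the Ethertype constants are the standard values for MPLS unicast (0x8847), IPv4 (0x0800), and IPv6 (0x86DD).

```python
ETHERTYPE_MPLS = 0x8847
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPV6 = 0x86DD


def outgoing_ethertype(swapped_is_bos: bool,
                       out_label_is_implicit_null: bool,
                       payload_first_byte: int) -> int:
    """Ethertype of the outgoing packet after the binding label is swapped."""
    # Any label remaining on the packet means the frame still carries MPLS.
    if not swapped_is_bos or not out_label_is_implicit_null:
        return ETHERTYPE_MPLS
    # The IP header is exposed: its first nibble carries the IP version.
    version = payload_first_byte >> 4
    if version == 4:
        return ETHERTYPE_IPV4
    if version == 6:
        return ETHERTYPE_IPV6
    raise ValueError("exposed payload is neither IPv4 nor IPv6")


def outgoing_ttl(mpls_ttl: int, exposed_ttl: int) -> int:
    """MIN(MPLS_TTL-1, exposed TTL), where exposed TTL is IP_TTL or INNER_MPLS_TTL."""
    return min(mpls_ttl - 1, exposed_ttl)


print(hex(outgoing_ethertype(True, True, 0x45)))   # 0x800, IPv4 header exposed
print(outgoing_ttl(mpls_ttl=64, exposed_ttl=10))   # 10
print(outgoing_ttl(mpls_ttl=5, exposed_ttl=200))   # 4
```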

Ethertype setting and TTL handling in endpoint policy

The router sets the Ethertype field value of the outgoing packet to the MPLS value.

The router checks and decrements the TTL field of the received IPv4 or IPv6 header and sets the TTL of all labels of the label stack specified in the pushed-labels command according to the following rules:

The router propagates the decremented TTL of the received IPv4 or IPv6 packet into all labels of the pushed label stack for a prefix in GRT.

The router then follows the configuration of the TTL propagation in the case of an IPv4 or IPv6 prefix forwarded in a VPRN context:

— config>router>ttl-propagate>vprn-local {none | vc-only | all}

— config>router>ttl-propagate>vprn-transit {none | vc-only | all}

— config>service>vprn>ttl-propagate>local {inherit | none | vc-only | all}

— config>service>vprn>ttl-propagate>transit {inherit | none | vc-only | all}

When an IPv6 packet in GRT is forwarded using an endpoint policy with an IPv4 endpoint, the IPv6 explicit null label is pushed first before the label stack specified in the pushed-labels command.

Weighted ECMP enabling and validation rules

Weighted ECMP is supported within an endpoint or a label-binding policy when the NHGs are of resolution type direct. Weighted ECMP is not supported with an NHG of type indirect.

Weighted ECMP is performed on labeled or unlabeled packets forwarded over the set of NHGs in a forwarding policy when all NHG entries have a load-balancing-weight configured. If one or more NHGs have no load-balancing-weight configured, the spraying of packets over the set of NHGs reverts to plain ECMP.

Also, the weighted-ecmp command in GRT (config>router>weighted-ecmp) or in a VPRN instance (config>service>vprn>weighted-ecmp) is not required to enable weighted ECMP forwarding in an MPLS forwarding policy. These commands are used when forwarding over multiple tunnels or LSPs. Weighted ECMP forwarding over the NHGs of a forwarding policy is strictly governed by the explicit configuration of a weight against each NHG.

The weighted ECMP normalized weight calculated for an NHG index causes the data path to program this index as many times as the normalized weight dictates for the purpose of spraying the packets.
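The effect of the normalized weight can be illustrated with a short sketch that builds the list of NHG indexes over which packets are sprayed. The normalization method is not specified in the text; dividing all weights by their greatest common divisor is an assumption made here for illustration, as are the function name `spray_table` and the requirement that configured weights are positive integers.

```python
from functools import reduce
from math import gcd
from typing import Dict, List, Optional


def spray_table(weights: Dict[int, Optional[int]]) -> List[int]:
    """weights maps an NHG index to its load-balancing-weight, or None if unset."""
    # One NHG without a weight makes the whole policy revert to plain ECMP.
    if any(w is None for w in weights.values()):
        return sorted(weights)
    # Assumed normalization: divide every weight by the common divisor.
    divisor = reduce(gcd, weights.values())
    table: List[int] = []
    for index in sorted(weights):
        table.extend([index] * (weights[index] // divisor))
    return table


print(spray_table({1: 20, 2: 10, 3: 10}))   # [1, 1, 2, 3]
print(spray_table({1: 20, 2: None}))        # [1, 2]
```

A flow hash taken modulo the length of the table then lands on NHG 1 twice as often as on NHG 2 or NHG 3 in the first example.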

Statistics

Ingress statistics

The ingress statistics feature is associated with the binding label, that is the ILM of the forwarding policy, and provides aggregate packet and octet counters for packets matching the binding label.

The per-ILM statistic index for the MPLS forwarding policy feature is assigned at the time the first instance of the policy is programmed in the data path. All instances of the same policy, for example, policies with the same binding-label, regardless of the preference parameter value, share the same statistic index.

The statistic index remains assigned as long as the policy exists and the ingress-statistics context is not shut down. If the last instance of the policy is removed from the forwarding policy database, the CPM frees the statistic index and returns it to the pool.

If ingress statistics are not configured or are shut down in a specific instance of the forwarding policy, identified by a unique value of the pair {binding-label, preference}, an assigned statistic index is not incremented if that instance of the policy is activated.

If a statistic index is not available at allocation time, the allocation fails and a retry mechanism checks the statistic index availability in the background.

Egress statistics

Egress statistics are supported for both binding-label and endpoint MPLS forwarding policies; however, egress statistics are only supported when the next hops configured within these policies are of resolution type direct. The counters are attached to the NHLFE of each next hop. Counters are effectively allocated by the system at the time the instance is programmed in the data path. Counters are maintained even if an instance is deprogrammed and values are not reset. If an instance is reprogrammed, traffic counting resumes at the point where it last stopped. Traffic counters are released, and therefore traffic statistics are lost, when the instance is removed from the database, when the egress-statistics context is deleted, or when egress statistics are disabled (egress-statistics shutdown).

No retry mechanism is available for egress statistics. The system maintains a state per next hop and per instance that records whether the allocation of statistic indexes was successful. If the system cannot allocate all the needed indexes on a specified instance because of a lack of resources, the user should disable egress statistics on that instance, free the required number of statistic indexes, and re-enable egress statistics on the needed entry. The selection of which other construct to release statistic indexes from is beyond the scope of this document.
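
The counter life cycle described above can be modeled with a short Python sketch. It is a simplified illustration under stated assumptions: the class, the `free_indexes` argument (a hypothetical count of indexes left in the system), and the choice to keep an earlier failed allocation on reprogramming are not taken from the router implementation.

```python
class EgressStatsInstance:
    """Toy model of egress counters for one forwarding-policy instance."""

    def __init__(self, next_hops):
        self.next_hops = list(next_hops)
        self.counters = {}     # next hop -> [packets, octets], one per NHLFE
        self.alloc_ok = {}     # next hop -> whether an index was obtained
        self.programmed = False

    def program(self, free_indexes):
        """Program the instance; returns the number of indexes still free."""
        for nh in self.next_hops:
            if nh in self.alloc_ok:
                continue               # assumed: no retry, earlier result stands
            ok = free_indexes > 0
            self.alloc_ok[nh] = ok
            if ok:
                free_indexes -= 1
                self.counters[nh] = [0, 0]
        self.programmed = True
        return free_indexes

    def deprogram(self):
        self.programmed = False        # counters are kept and not reset

    def count(self, nh, octets):
        """Count one packet on a next hop, if the instance is programmed."""
        if self.programmed and nh in self.counters:
            self.counters[nh][0] += 1
            self.counters[nh][1] += octets

    def release(self):
        """Instance removed, or egress statistics deleted or shut down."""
        freed = len(self.counters)
        self.counters.clear()
        self.alloc_ok.clear()
        return freed                   # number of indexes handed back
```

Calling `release()` followed by `program()` corresponds to the manual disable and re-enable sequence that the text recommends after a failed allocation.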

Configuring static label routes using MPLS forwarding policy

Steering flows to an indirect next hop

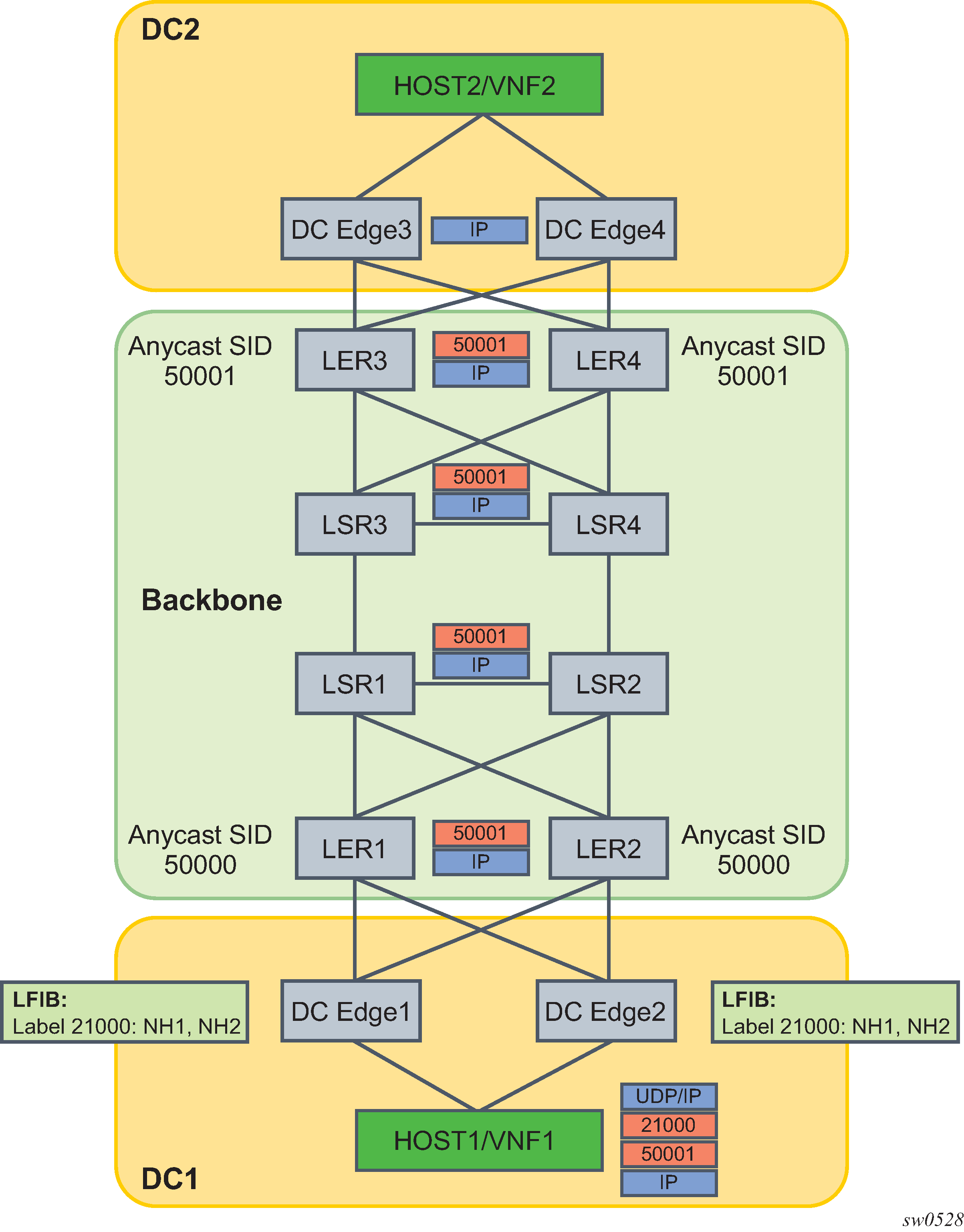

Traffic steering to an indirect next hop using a static label route illustrates the traffic forwarding from a Virtual Network Function (VNF1) residing in a host in a Data Center (DC1) to VNF2 residing in a host in DC2 over the segment routing capable backbone network. DC1 and DC2 do not support segment routing or MPLS, while the DC Edge routers do not support segment routing. Hence, MPLS packets of VNF1 flows are tunneled over a UDP/IP or GRE/IP tunnel, and a static label route is configured on DC Edge1/2 to steer the decapsulated packets to the remote DC Edge3/4.

The following are the data path manipulations of a packet across this network:

The host in DC1 pushes an MPLS-over-UDP (or MPLS-over-GRE) header with the outer IP destination address matching its local DC Edge1/2. It also pushes a static label 21000, which corresponds to the binding label of the MPLS forwarding policy configured in DC Edge1/2 to reach the remote DC Edge3/4 (anycast address). The bottom of the label stack is the anycast SID for the remote LER3/4.

The label 21000 is configured on both DC Edge1 and DC Edge2 using a label-binding policy with an indirect next hop pointing to the static route to the destination prefix of DC Edge3/4. The backup next hop points to the static route to reach some DC Edge5/6 in another remote DC (not shown).

There is EBGP peering between DC Edge1/2 and LER1/2 and between DC Edge3/4 and LER3/4.

DC Edge1/2 removes the UDP/IP header (or GRE/IP header), swaps label 21000 to implicit-null, and forwards the packet, using ECMP spraying, to all resolved next hops of the static route of the primary or backup next hop of the label-binding policy.

LER1/2 forwards based on the anycast SID to remote LER3/4.

LER3/4 removes the anycast SID label and forwards the inner IP packet to DC Edge3/4, which then forwards it to Host2 in DC2.
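
The data path steps above can be traced with a minimal Python sketch that models a packet as a list of headers, outermost first. The anycast SID value 30034 and the function names are hypothetical, chosen only for illustration. LER1/2 is omitted because, in this simplified model, it forwards on the anycast SID without changing the stack.

```python
ANYCAST_SID = 30034      # hypothetical label value for the LER3/4 anycast SID
BINDING_LABEL = 21000    # binding label used in the text


def host_encapsulate(inner_ip_dst):
    """Host in DC1: label stack plus MPLS-over-UDP (or GRE) outer header."""
    return [
        ("ip-tunnel", "DC-Edge1/2"),   # outer IP header to the local DC Edge
        ("mpls", BINDING_LABEL),       # top label: binding label of the policy
        ("mpls", ANYCAST_SID),         # bottom label: anycast SID of LER3/4
        ("ip", inner_ip_dst),          # inner IP packet
    ]


def dc_edge_forward(packet):
    """DC Edge1/2: strip the tunnel header, swap binding label to implicit-null."""
    if packet[0][0] != "ip-tunnel" or packet[1] != ("mpls", BINDING_LABEL):
        raise ValueError("packet does not match the label-binding policy")
    # A swap to implicit-null leaves no outgoing label, so the top label is removed.
    return packet[2:]


def ler_egress_forward(packet):
    """LER3/4: remove the anycast SID and forward the inner IP packet."""
    if packet[0] != ("mpls", ANYCAST_SID):
        raise ValueError("top label is not the anycast SID")
    return packet[1:]


pkt = host_encapsulate("Host2")
at_ler1 = dc_edge_forward(pkt)             # what LER1/2 receives
at_dc_edge3 = ler_egress_forward(at_ler1)  # what DC Edge3/4 receives
```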

The following CLI commands configure the static label route to achieve this use case. They create a label-binding policy with a single NHG that points to the first route as its primary indirect next hop and to the second route as its backup indirect next hop. The primary static route corresponds to a prefix of the remote DC Edge3/4 router, and the backup static route corresponds to the prefix of a pair of edge routers in a different remote DC. The policy is applied to routers DC Edge1/2 in DC1.

    config>router
        static-route-entry fd84:a32e:1761:1888::1/128
            next-hop 3ffe::e0e:e05
                no shutdown
            next-hop 3ffe::f0f:f01
                no shutdown
        static-route-entry fd22:9501:806c:2387::2/128
            next-hop 3ffe::1010:1002
                no shutdown
            next-hop 3ffe::1010:1005
                no shutdown

    config>router>mpls-labels
        reserved-label-block static-label-route-lbl-block
            start-label 20000 end-label 25000

    config>router>mpls
        forwarding-policies
            reserved-label-block static-label-route-lbl-block
            forwarding-policy static-label-route-indirect
                binding-label 21000
                revert-timer 5
                next-hop-group 1 resolution-type indirect
                    primary-next-hop
                        next-hop fd84:a32e:1761:1888::1
                    backup-next-hop
                        next-hop fd22:9501:806c:2387::2
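
A quick consistency check on such a configuration can be sketched in Python. The sketch assumes, as the example values suggest, that a binding label is expected to fall within the reserved label block and that the block range is inclusive; neither rule is stated in this section. The `CONFIG` string and the `check_binding_labels` helper are hypothetical.

```python
import re

# Excerpt of the configuration above, reduced to the lines the check needs.
CONFIG = """
reserved-label-block static-label-route-lbl-block
    start-label 20000 end-label 25000
forwarding-policy static-label-route-indirect
    binding-label 21000
"""


def check_binding_labels(config_text):
    """Return the binding labels that fall outside the reserved block."""
    block = re.search(r"start-label (\d+) end-label (\d+)", config_text)
    if block is None:
        raise ValueError("no start-label/end-label line found")
    start, end = int(block.group(1)), int(block.group(2))
    labels = [int(m) for m in re.findall(r"binding-label (\d+)", config_text)]
    # Assumed rule: the block range is inclusive at both ends.
    return [label for label in labels if not start <= label <= end]


out_of_range = check_binding_labels(CONFIG)
```

An empty result means that every binding label in the text lies inside the block.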

Steering flows to a direct next hop

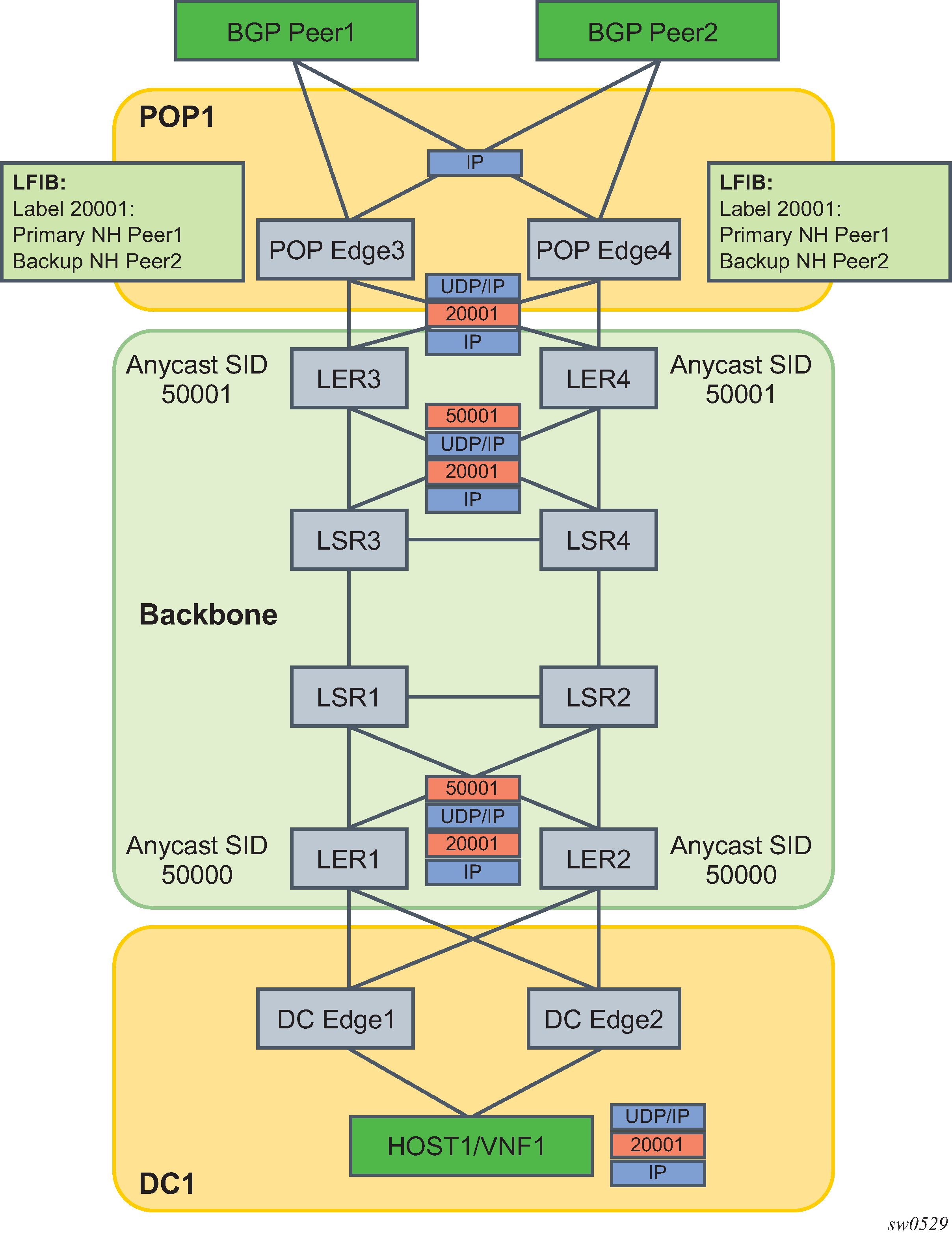

Traffic steering to a direct next hop using a static label route illustrates the traffic forwarding from a Virtual Network Function (VNF1) residing in a host in a Data Center (DC1) to a destination outside the customer network via the remote peering Point Of Presence (POP1).

The traffic is forwarded over a segment routing capable backbone. DC1 and POP1 do not support segment routing or MPLS, while the DC Edge routers do not support segment routing. Hence, MPLS packets of VNF1 flows are tunneled over a UDP/IP or GRE/IP tunnel, and a static label route is configured on POP Edge3/4 to steer the decapsulated packets to the needed external BGP peer.

The intent is to override the BGP routing table at the peering routers (POP Edge3 and Edge4) and force packets of a flow originating in VNF1 to exit the network using a primary external BGP peer, Peer1, or a backup external BGP peer, Peer2, if Peer1 is down. This application is also referred to as Egress Peer Engineering (EPE).

The following are the data path manipulations of a packet across this network:

DC Edge1/2 receives an MPLS-over-UDP (or an MPLS-over-GRE) encapsulated packet from the host in the DC with the outer IP destination address set to the remote POP Edge3/4 routers in peering POP1 (anycast address). The host also pushes the static label 20001 for the remote external BGP peer, Peer1, that it wants to send to.

This label 20001 is configured on POP Edge3/4 using the MPLS forwarding policy feature with primary next hop of Peer1 and backup next hop of Peer2.

There is EBGP peering between DC Edge1/2 and LER1/2, between POP Edge3/4 and LER3/4, and between POP Edge3/4 and Peer1/2.

LER1/LER2 pushes the anycast SID of remote LER3/4 as part of the BGP route resolution to an SR-ISIS tunnel or SR-TE policy.

LER3/4 removes the anycast SID and forwards the UDP/IP (or GRE/IP) encapsulated packet to POP Edge3/4.

POP Edge3/4 removes the UDP/IP (or GRE/IP) header, swaps the static label 20001 to implicit-null, and forwards the packet to Peer1 (primary next hop) or to Peer2 (backup next hop).

The following CLI commands configure the static label route to achieve this use case. They create a label-binding policy with a single NHG containing a primary and a backup direct next hop. The policy is applied to peering routers POP Edge3/4.

    config>router>mpls-labels
        reserved-label-block static-label-route-lbl-block
            start-label 20000 end-label 25000

    config>router>mpls
        forwarding-policies
            forwarding-policy static-label-route-direct
                binding-label 20001
                revert-timer 10
                next-hop-group 1 resolution-type direct
                    primary-next-hop
                        next-hop fd84:a32e:1761:1888::1
                    backup-next-hop
                        next-hop fd22:9501:806c:2387::2
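
The primary and backup behavior of the NHG in this use case can be sketched in Python. The sketch assumes that the revert-timer value is the number of seconds the NHG stays on the backup next hop after the primary is restored; this section does not define the timer, so treat that semantic, along with the class and method names, as hypothetical.

```python
class NextHopGroup:
    """Toy model of one NHG with a primary and a backup next hop."""

    def __init__(self, primary, backup, revert_timer):
        self.primary = primary
        self.backup = backup
        self.revert_timer = revert_timer   # seconds (assumed meaning)
        self.primary_up = True
        self.backup_up = True
        self.restored_at = None            # time the primary came back, if any

    def primary_down(self):
        self.primary_up = False
        self.restored_at = None

    def primary_restored(self, now):
        self.primary_up = True
        self.restored_at = now             # start the assumed revert delay

    def active(self, now):
        """Return the next hop used for forwarding at time `now` (seconds)."""
        if self.primary_up:
            waiting = (self.restored_at is not None
                       and now - self.restored_at < self.revert_timer)
            # Stay on the backup during the revert delay only if it is usable.
            if not (waiting and self.backup_up):
                return self.primary
        return self.backup if self.backup_up else None


nhg = NextHopGroup("Peer1", "Peer2", revert_timer=10)
```

With these assumptions, traffic moves to Peer2 as soon as Peer1 fails and returns to Peer1 once the timer has elapsed after Peer1 is restored.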