Network Address Translation

Terminology

-

BNG subscriber

This is a broader term than ESM subscriber and is independent of the platform on which the subscriber is instantiated. It includes ESM subscribers on the 7750 SR as well as subscribers instantiated on third-party BNGs. Some NAT functions, such as Subscriber Aware Large Scale NAT44 utilizing standard RADIUS attributes, work with subscribers independently of the platform on which they are instantiated.

-

deterministic NAT

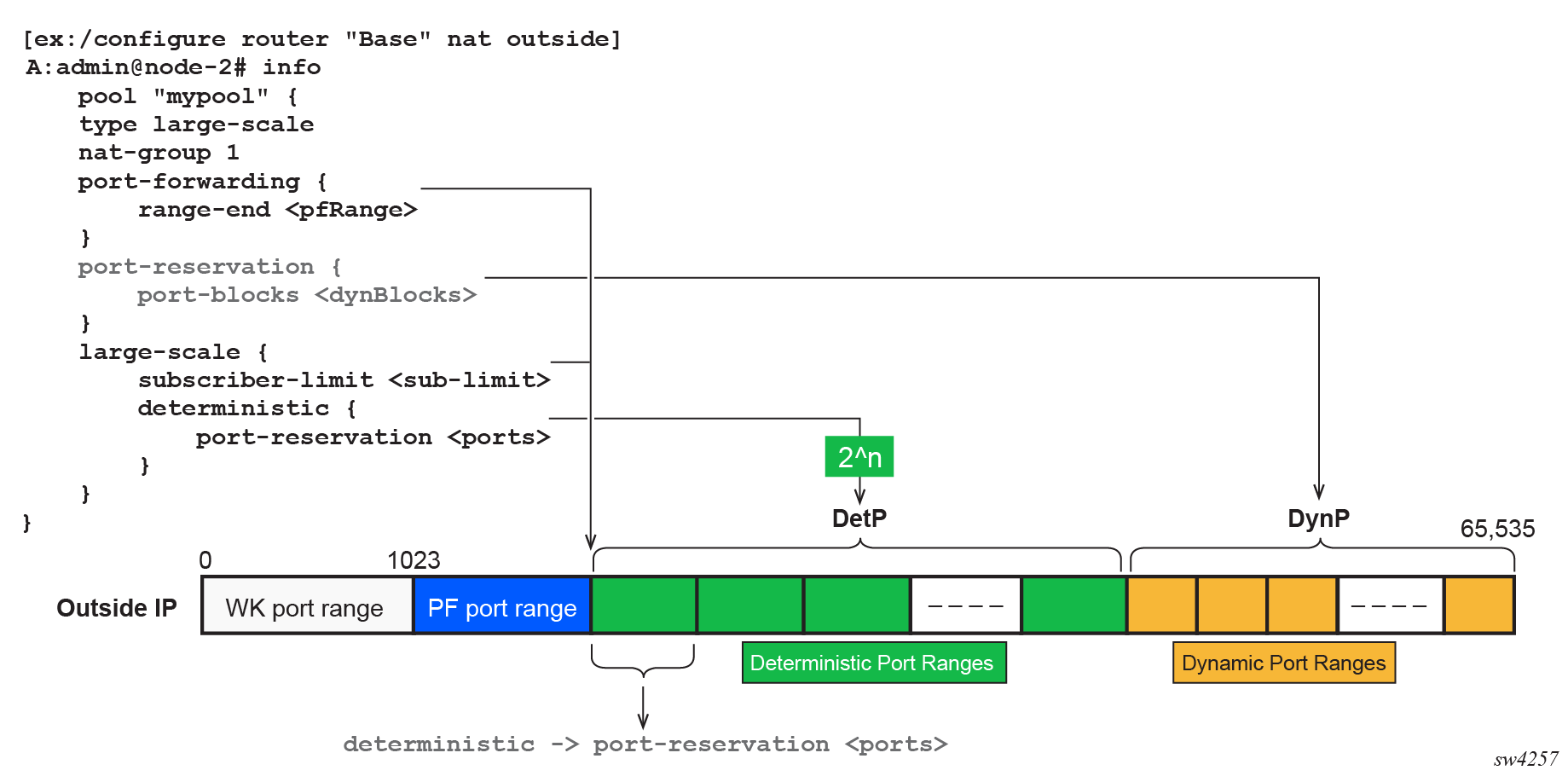

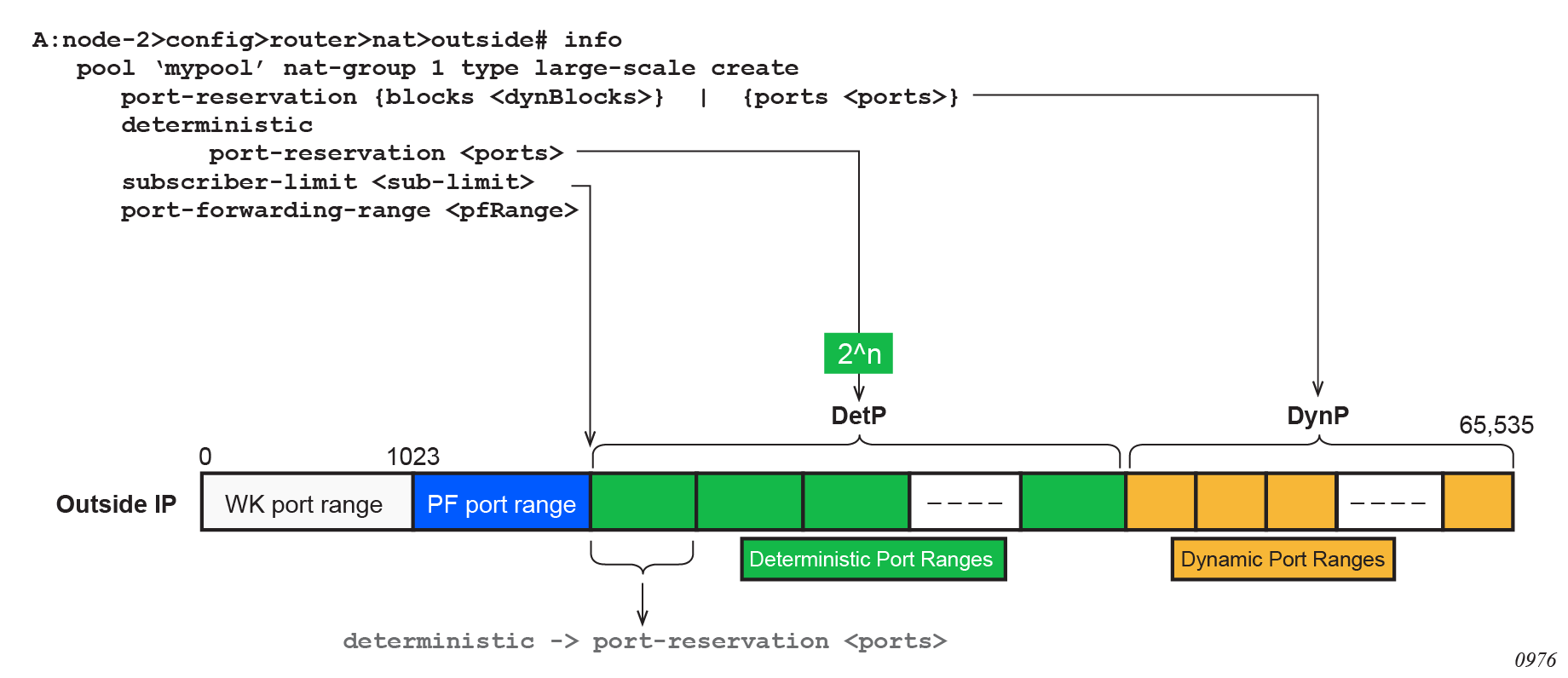

This is a mode of operation where mappings between the NAT subscriber and the outside IP address and port-range are allocated at the time of configuration. Each subscriber is permanently mapped to an outside IP address and a dedicated port block, referred to as the deterministic port block. Logging is not needed because the reverse mapping can be obtained using a known formula. If all ports in the deterministic port block are exhausted, the subscriber's ports can be expanded by allocating a dynamic port block. In that case, logging of the dynamic port block allocation and de-allocation is required.

-

Enhanced Subscriber Management (ESM) subscriber

This is a host or a collection of hosts instantiated in the 7750 SR Broadband Network Gateway (BNG). The ESM subscriber represents a household or a business entity for which various services with committed Service Level Agreements (SLA) can be delivered. The NAT function is not part of basic ESM functionality.

-

Layer 2–aware NAT

In the context of the 7750 SR platform, Layer 2–aware NAT combines the Enhanced Subscriber Management (ESM) subscriber-id and the inside IP address to perform translation into a unique outside IP address and outside port. This is in contrast with the classical NAT technique, where only the inside IP address is considered for address translation. Because the subscriber-id alone is sufficient to make the address translation unique, Layer 2–aware NAT allows many ESM subscribers to share the same inside IP address. The scalability, performance, and reliability requirements are the same as in LSN.

-

Large Scale NAT (LSN)

This refers to a collection of network address translation techniques used in service provider networks and implemented on highly scalable, high-performance hardware that facilitates various intra-node and inter-node redundancy mechanisms. The purpose of the LSN term is to delineate the high-scale, high-performance NAT functions found in service provider networks from enterprise NAT, which usually serves a much smaller customer base at lower speeds. The following NAT techniques can be grouped under the LSN name:

-

Large Scale NAT44 or Carrier Grade NAT (CGN)

-

DS-Lite

-

NAT64

Each distinct NAT technique is referred to by its corresponding name (Large Scale NAT44 [or CGN], DS-Lite, and NAT64) with the understanding that, in the context of the 7750 SR platform, they are all part of LSN (and not enterprise-based NAT).

The term Large Scale NAT44 can be used interchangeably with the term Carrier Grade NAT (CGN), which by its name implies high reliability, high scale, and high performance. These are typical requirements in service provider (carrier) networks.

-

-

NAT RADIUS accounting

This is the reporting (or logging) of address translation related events (port block allocation and de-allocation) through the RADIUS accounting facility. NAT RADIUS accounting uses regular RADIUS accounting messages (start, interim-update, and stop) as defined in RFC 2866, RADIUS Accounting, with NAT-specific VSAs.

-

NAT RADIUS logging

This can be used interchangeably with the term NAT RADIUS accounting.

-

NAT subscriber

In NAT terminology, a NAT subscriber is an inside entity whose true identity is hidden from the outside. There are several types of NAT implementation in the 7750 SR, and the subscriber for each implementation is defined as follows:

-

Large Scale NAT44 (or CGN)

The subscriber is an inside IPv4 address.

-

Layer 2–aware NAT

The subscriber is an ESM subscriber, which can have multiple inside IPv4 addresses.

-

DS-Lite

The subscriber in DS-Lite can be identified by the CPE’s IPv6 address (B4 element) or an IPv6 prefix. The selection of address or prefix as the representation of a DS-Lite subscriber is configuration dependent.

-

NAT64

The subscriber is an IPv6 prefix.

-

-

non-deterministic NAT

This is a mode of operation where all outside IP address and port block allocations are made dynamically at the time of subscriber instantiation. Logging is required in this case.

-

port block

This is a collection of ports that is assigned to a subscriber. A deterministic LSN subscriber can have only one deterministic port block, which can be extended by multiple dynamic port blocks. A non-deterministic LSN subscriber can be assigned only dynamic port blocks. All port blocks for an LSN subscriber must be allocated from a single outside IP address.

-

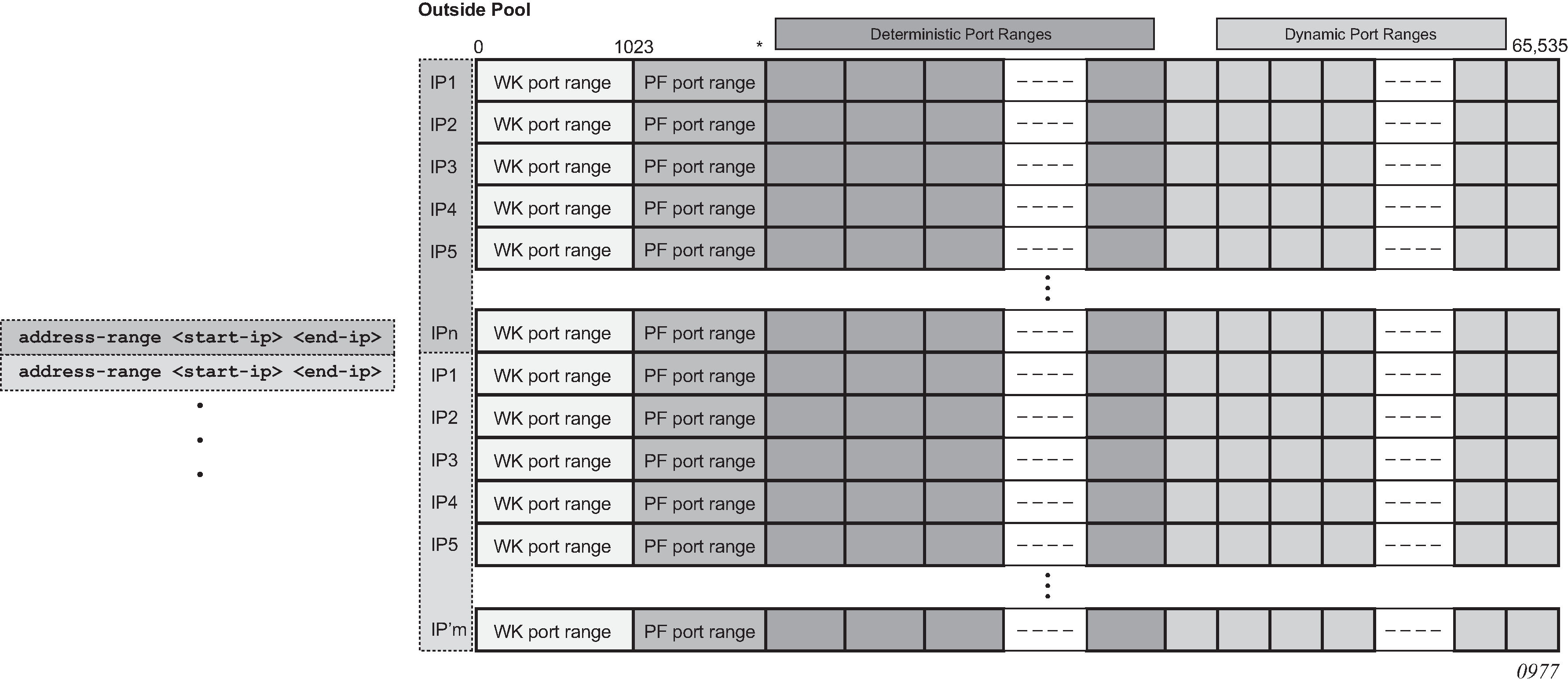

port-range

This is a collection of ports that can span multiple port blocks of the same type. For example, the deterministic port-range includes all ports that are reserved for deterministic consumption. Similarly, the dynamic port-range is the total collection of ports that can be allocated in the form of dynamic port blocks. Other types of port-ranges are well-known ports and static port forwards.
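The deterministic NAT definition above says that the reverse mapping can be obtained using a known formula. The following Python sketch illustrates the idea with a deliberately simple formula; it is not the SR OS algorithm. The function names, the sequential subscriber index, and the example plan (100 blocks of 256 ports per outside IP address) are all assumptions made for illustration.

```python
import ipaddress


def deterministic_mapping(sub_index, outside_start, first_port, block_size, blocks_per_ip):
    """Return (outside IP, first port, last port) for the N-th inside subscriber."""
    ip_offset, block_index = divmod(sub_index, blocks_per_ip)
    outside_ip = ipaddress.IPv4Address(outside_start) + ip_offset
    start = first_port + block_index * block_size
    return str(outside_ip), start, start + block_size - 1


def reverse_mapping(outside_ip, outside_port, outside_start, first_port, block_size, blocks_per_ip):
    """Recover the subscriber index from an outside IP and port, with no log lookup."""
    ip_offset = int(ipaddress.IPv4Address(outside_ip)) - int(ipaddress.IPv4Address(outside_start))
    block_index = (outside_port - first_port) // block_size
    if ip_offset < 0 or not 0 <= block_index < blocks_per_ip:
        raise ValueError("address or port is outside the deterministic port-range")
    return ip_offset * blocks_per_ip + block_index


# Hypothetical plan: 100 blocks of 256 ports per outside IP, starting at port 1024.
plan = dict(outside_start="192.0.2.1", first_port=1024, block_size=256, blocks_per_ip=100)
print(deterministic_mapping(205, **plan))
print(reverse_mapping("192.0.2.3", 2400, **plan))
```

Because both directions are pure arithmetic on the configuration, nothing needs to be logged for the deterministic port block. Only dynamic port blocks added on top of it would require log entries.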

Network Address Translation (NAT) overview

The 7750 SR supports Network Address (and port) Translation (NAPT) to provide continuity of legacy IPv4 services during the migration to native IPv6. By equipping the multiservice ISA (MS ISA) in an IOM4-e, IOM4-e-B, IOM4-e-HS, or in a 7750 SR-1e, 7750 SR-2e, or 7750 SR-3e (IOM-e), the 7750 SR can operate in two different modes, known as:

Large Scale NAT

Layer 2-Aware NAT

These two modes both perform source address and port translation as commonly deployed for shared Internet access. The 7750 SR with NAT is used to provide consumer broadband or business Internet customers with access to IPv4 Internet resources through a shared pool of IPv4 addresses, such as may be needed around the forecast IPv4 exhaustion. During this time, it is expected that native IPv6 services are still growing and that a significant amount of Internet content remains IPv4.

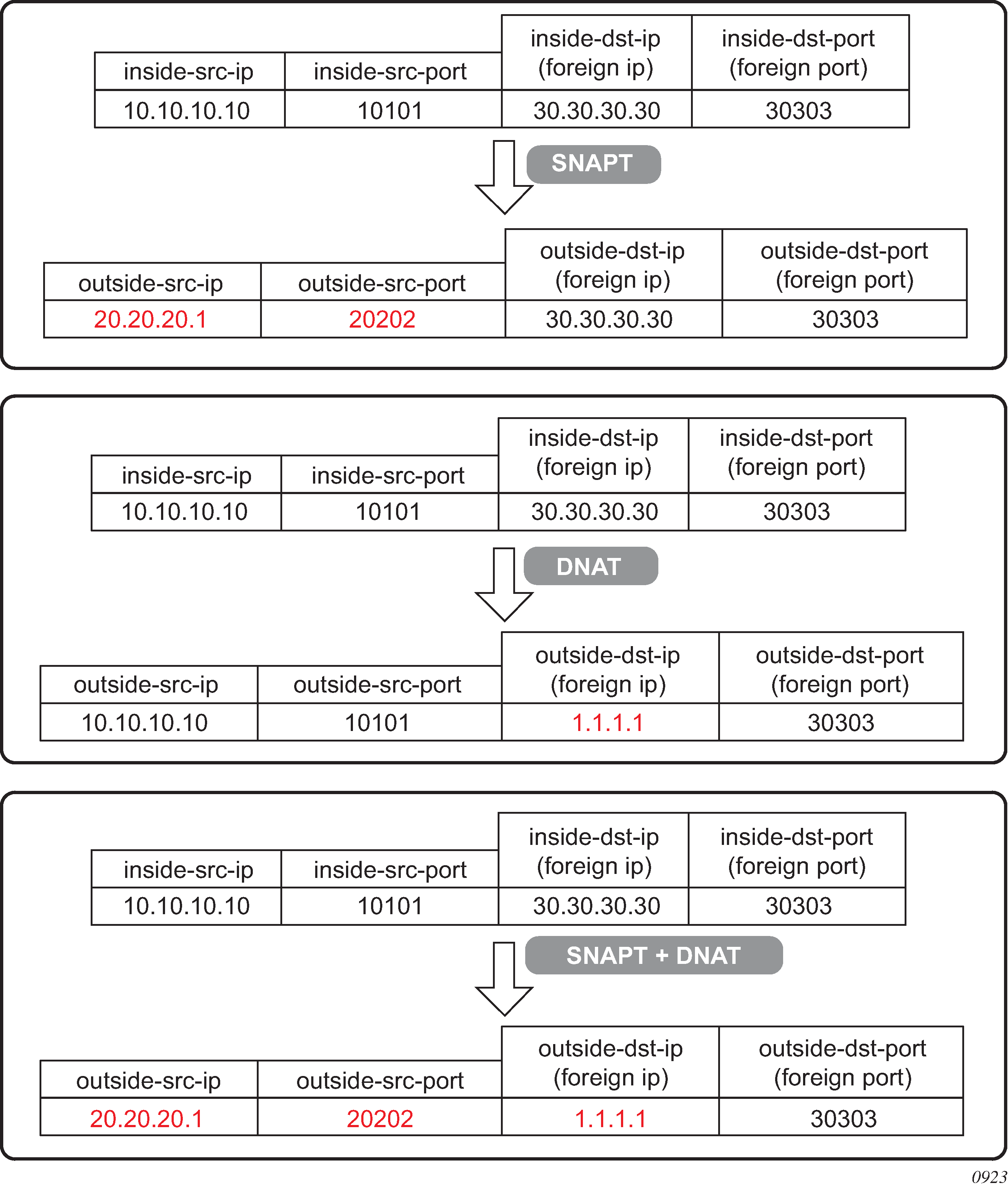

Principles of NAT

Network Address Translation devices modify the IP headers of packets between a host and server, changing some or all of the source address, destination address, source port (TCP/UDP), destination port (TCP/UDP), or ICMP query ID (for ping). The 7750 SR in both NAT modes performs Source Network Address and Port Translation (S-NAPT). S-NAPT devices are commonly deployed in residential gateways and enterprise firewalls to allow multiple hosts to share one or more public IPv4 addresses to access the Internet. The common terms inside and outside, in the context of NAT, refer to devices inside the NAT (that is, behind or masqueraded by the NAT) and devices outside the NAT, on the public Internet.

TCP/UDP connections use ports for multiplexing, with 65536 ports available for every IP address. Whenever many hosts are trying to share a single public IP address there is a chance of port collision where two different hosts may use the same source port for a connection. The resultant collision is avoided in S-NAPT devices by translating the source port and tracking this in a stateful manner. All S-NAPT devices are stateful in nature and must monitor connection establishment and traffic to maintain translation mappings. The 7750 SR NAT implementation does not use the well-known port range (1 to 1023).

In most circumstances, S-NAPT requires the inside host to establish a connection to the public Internet host or server before a mapping and translation occurs. With the initial outbound IP packet, the S-NAPT knows the inside IP, inside port, remote IP, remote port and protocol. With this information the S-NAPT device can select an IP and port combination (referred to as outside IP and outside port) from its pool of addresses and create a unique mapping for this flow of data.

Any traffic returned from the server uses the outside IP and outside port in the destination IP/port fields – matching the unique NAT mapping. The mapping then provides the inside IP and inside port for translation.

The requirement to create a mapping with inside port and IP, outside port and IP, and protocol generally prevents new connections from being established from the outside to the inside, as may be needed when an inside host acts as a server.
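The mapping behavior described above can be sketched as a small stateful table. This is a minimal illustration, not the 7750 SR implementation: the class and method names are hypothetical, a single outside address is used, the mapping ignores the remote endpoint, and one port space is shared across protocols for simplicity.

```python
import random


class SNapt:
    """Toy S-NAPT: one outside IP, mappings created only by outbound traffic."""

    def __init__(self, outside_ip, ports=range(1024, 65536), seed=None):
        self.outside_ip = outside_ip
        self.free_ports = list(ports)   # well-known ports (1 to 1023) are never used
        self.rng = random.Random(seed)
        self.out_map = {}               # (proto, inside_ip, inside_port) -> outside_port
        self.in_map = {}                # (proto, outside_port) -> (inside_ip, inside_port)

    def outbound(self, proto, inside_ip, inside_port):
        """Translate an outbound packet, creating the mapping on first use."""
        key = (proto, inside_ip, inside_port)
        if key not in self.out_map:
            port = self.free_ports.pop(self.rng.randrange(len(self.free_ports)))
            self.out_map[key] = port
            self.in_map[(proto, port)] = (inside_ip, inside_port)
        return self.outside_ip, self.out_map[key]

    def inbound(self, proto, outside_port):
        """Translate return traffic; None means no mapping exists and the packet is dropped."""
        return self.in_map.get((proto, outside_port))


nat = SNapt("203.0.113.1", seed=1)
a = nat.outbound("tcp", "10.0.0.1", 5000)
b = nat.outbound("tcp", "10.0.0.2", 5000)   # same source port, different host
```

Two inside hosts using the same source port receive different outside ports, which avoids the collision. An inbound packet to a port with no mapping returns `None`, which is why an inside server is unreachable until a mapping exists.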

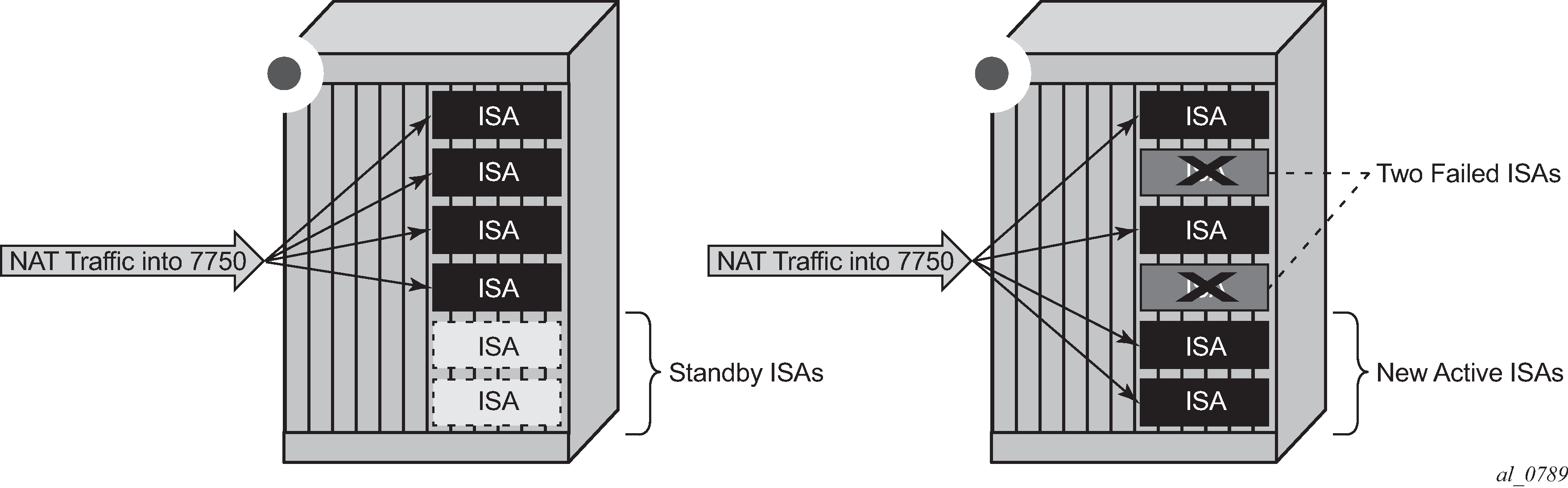

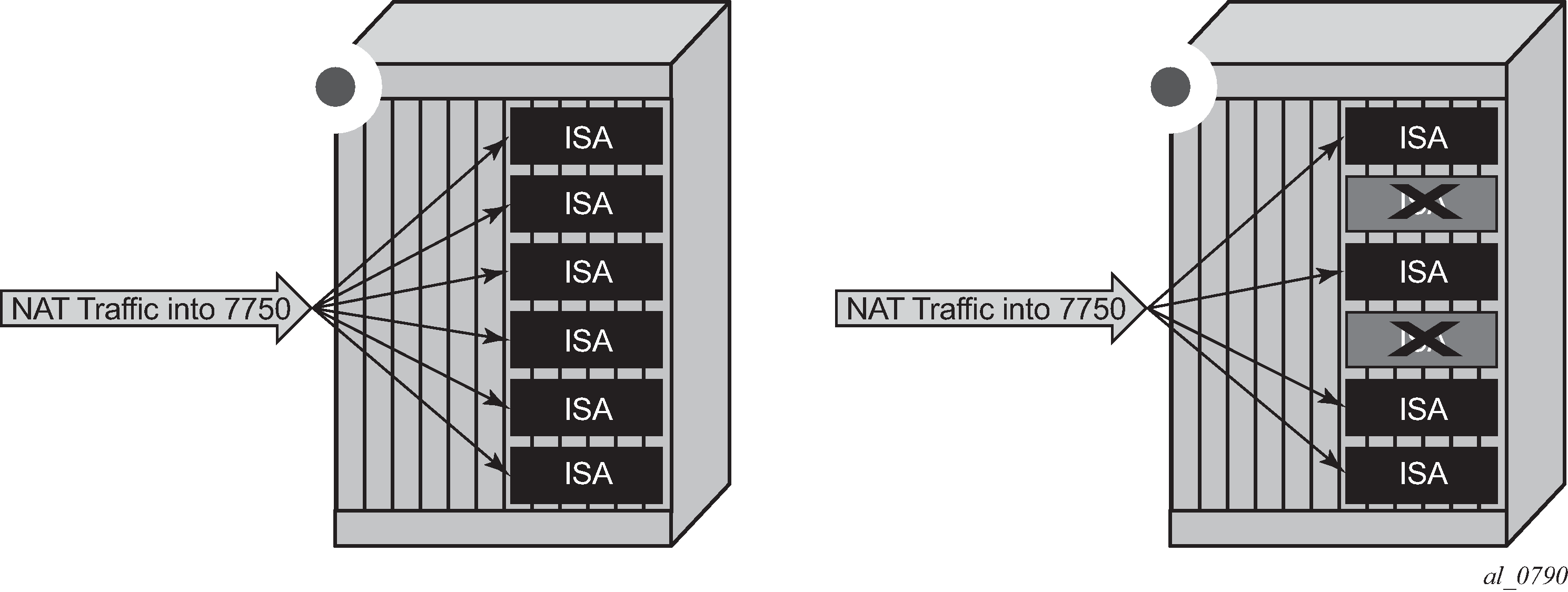

Traffic load balancing

NAT traffic in SR OS is distributed over ISAs and ESAs within each NAT group. As a result, NAT capacity grows incrementally by adding more ISAs and ESAs to the system while each ISA or ESA participates equally in load sharing.

SR OS load balancing mechanisms in CGN (LSN44, DS-Lite, and NAT64) differ in the upstream and downstream directions. The two mechanisms are independent and unaware of each other.

In the upstream direction, traffic is load balanced based on source IPv4 or IPv6 addresses or subnets.

In the downstream direction, outside IP address ranges (NAT pool address ranges) are micronetted (divided into smaller subnets), and these micronets are assigned to individual ISAs or ESAs in a balanced way. Downstream traffic is assigned to each ISA or ESA based on the micronets.

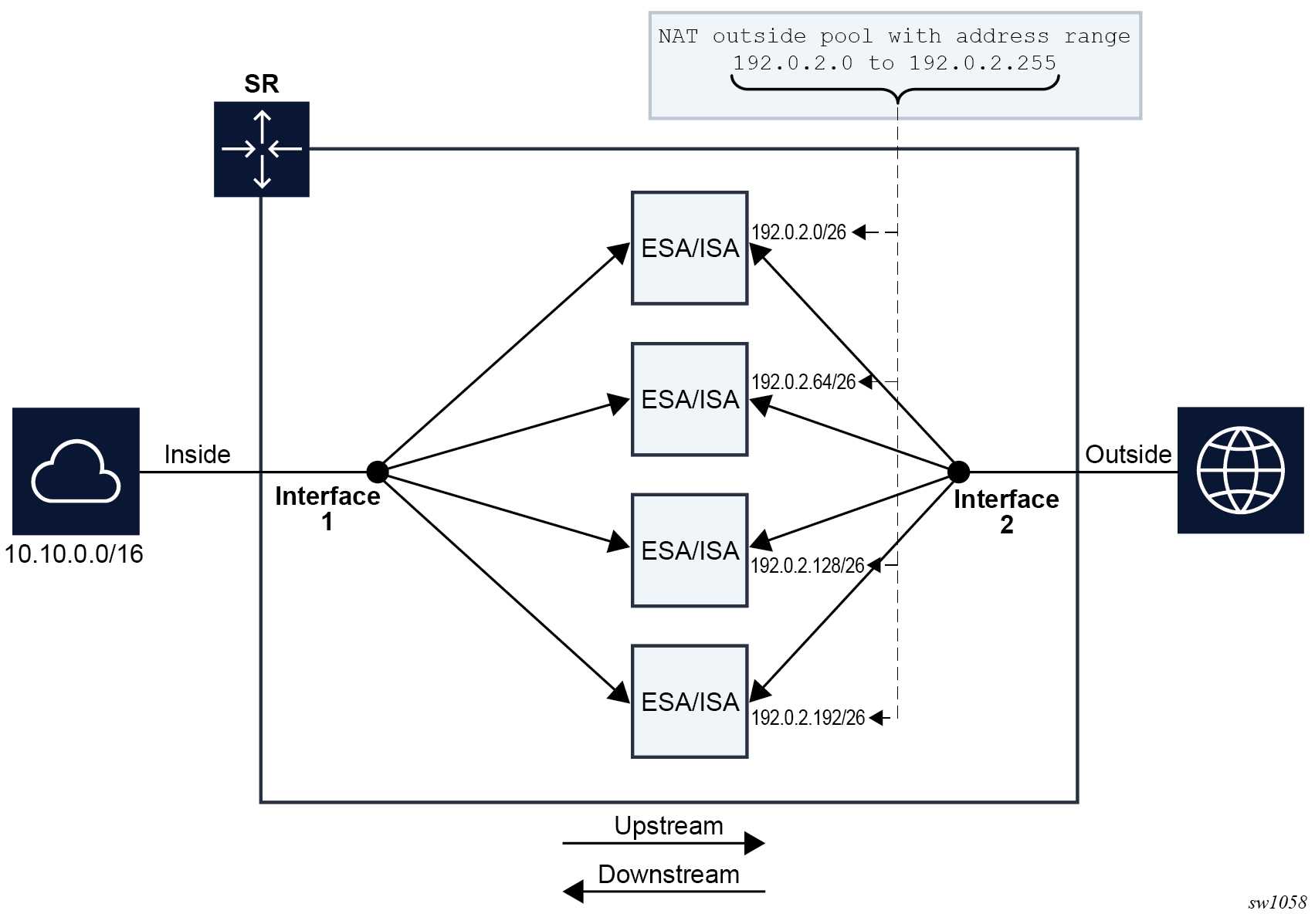

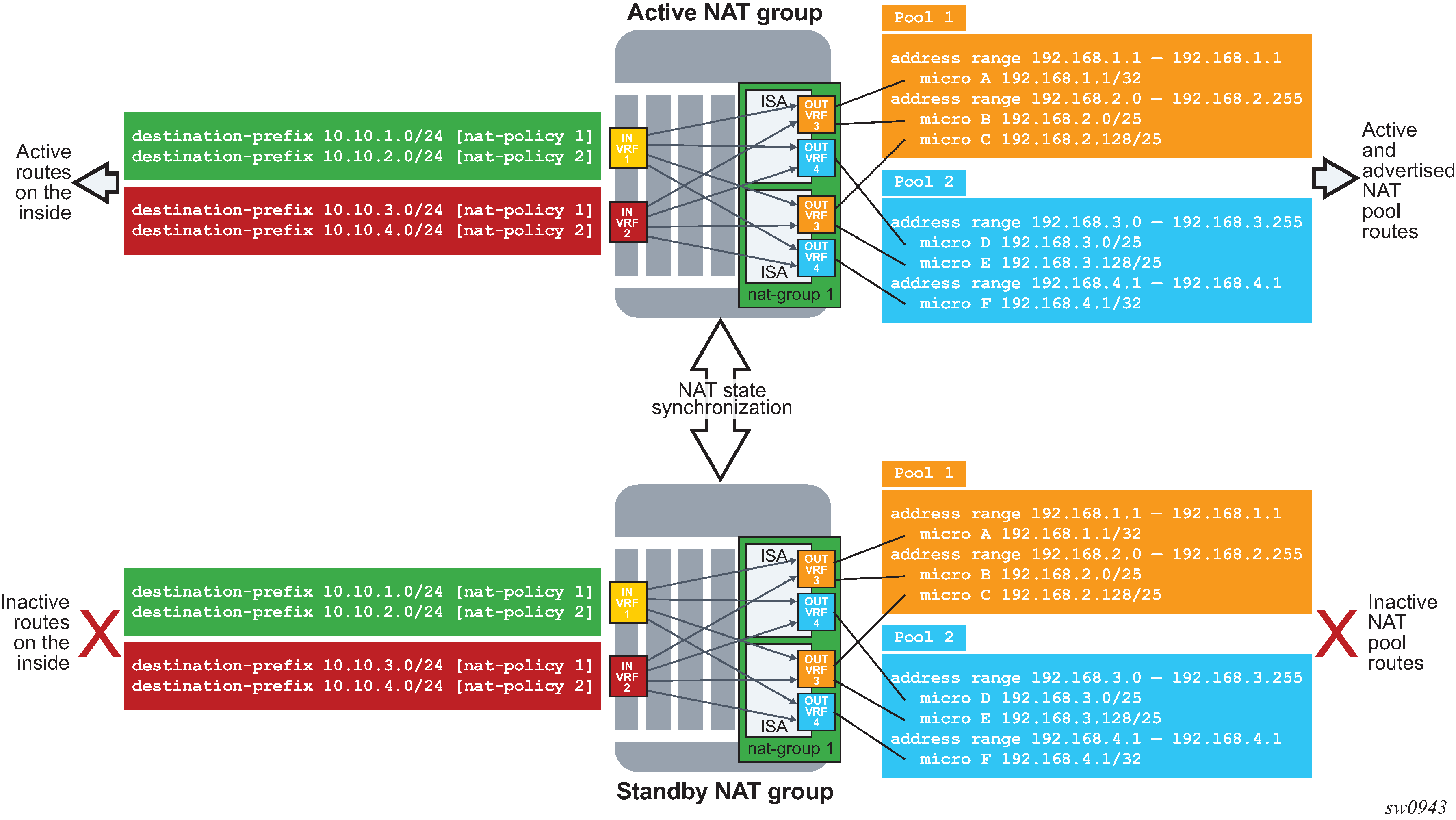

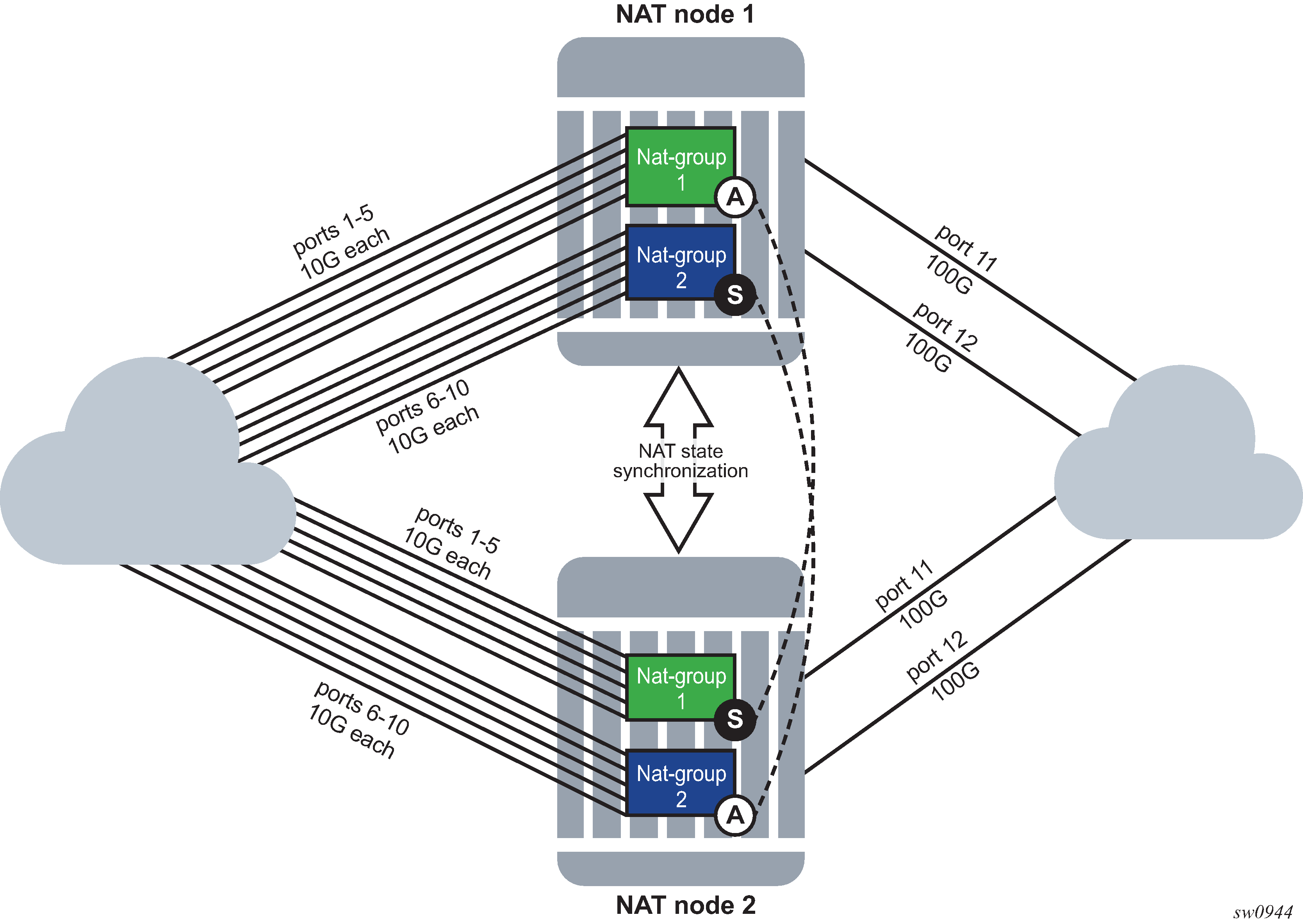

Load balancing over ISAs and ESAs shows traffic load balancing within SR OS. In the upstream direction, traffic is hashed based on the source IP addresses or subnets from the 10.10.0.0/16 range. A sample of 64000 source IP addresses guarantees equal load distribution.

In this example, in the downstream direction, a pool of 256 public addresses is divided into four equal subnets and each subnet is assigned to one ISA or ESA, so that each ISA or ESA serves 64 public IP addresses.
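The example above can be reproduced with a short Python sketch. The pool prefix 198.51.100.0/24 is a hypothetical stand-in for the 256 public addresses, and the modulo operation in `upstream_isa` is only a placeholder for the real hash, which the text does not specify.

```python
import ipaddress


def micronets(pool_cidr, num_isas):
    """Split the outside pool into one equal subnet per ISA/ESA (power of two only)."""
    if num_isas < 1 or num_isas & (num_isas - 1):
        raise ValueError("this sketch only handles a power-of-two number of ISAs/ESAs")
    pool = ipaddress.ip_network(pool_cidr)
    return list(pool.subnets(prefixlen_diff=num_isas.bit_length() - 1))


def downstream_isa(subnets, outside_ip):
    """Downstream: pick the ISA/ESA whose micronet contains the outside address."""
    addr = ipaddress.ip_address(outside_ip)
    for index, subnet in enumerate(subnets):
        if addr in subnet:
            return index
    return None


def upstream_isa(inside_ip, num_isas):
    """Upstream: placeholder hash on the source address (assumption, not the SR OS hash)."""
    return int(ipaddress.ip_address(inside_ip)) % num_isas


subnets = micronets("198.51.100.0/24", 4)
load = [0] * 4
for host in ipaddress.ip_network("10.10.0.0/16"):
    load[upstream_isa(host, 4)] += 1
print([str(s) for s in subnets], load)
```

The two functions never consult each other, which mirrors the statement that the upstream and downstream mechanisms are independent.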

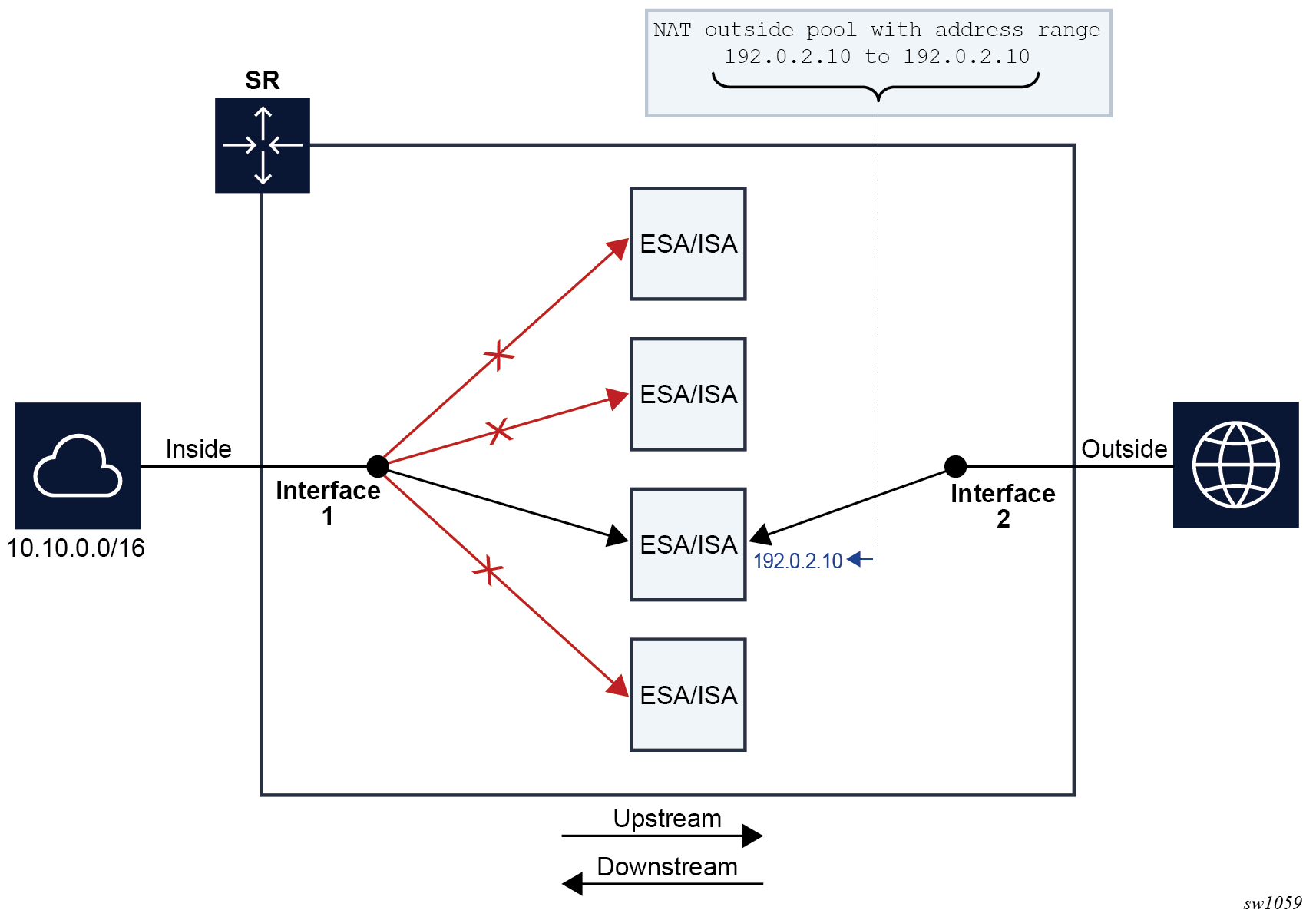

If there are not enough IP addresses on the inside and outside in relation to the number of ISAs and ESAs, unequal load balancing and, in extreme cases, traffic blackholing can occur. Traffic blackholing shows an example of an extreme case, where a single IP address is assigned to a pool in a NAT group with four ISAs or ESAs. This single outside IP address can be assigned to a single ISA or ESA that serves downstream traffic. Upstream traffic is unaware of the downstream load distribution, so it sends traffic to all four ISAs and ESAs, and as a result this traffic is dropped at ISAs or ESAs that do not have the public IP address assigned.
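The extreme case above can be illustrated with a few lines of Python. This is a sketch under stated assumptions: the single outside address is given to the lowest-numbered ISA or ESA, and the upstream distribution uses a simple modulo as a placeholder for the real hash. Both function names are hypothetical.

```python
import ipaddress


def isas_without_address(num_outside_ips, num_isas):
    """ISAs/ESAs left with no outside address when there are too few addresses."""
    return list(range(min(num_outside_ips, num_isas), num_isas))


def blackholed(inside_ips, num_outside_ips, num_isas):
    """Inside sources whose upstream ISA/ESA has no outside address to translate to."""
    empty = set(isas_without_address(num_outside_ips, num_isas))
    return [ip for ip in inside_ips
            if int(ipaddress.ip_address(ip)) % num_isas in empty]


sources = ["10.10.0.0", "10.10.0.1", "10.10.0.2", "10.10.0.3"]
print(blackholed(sources, num_outside_ips=1, num_isas=4))
```

With one outside address and four ISAs or ESAs, three of the four sources land on a member that cannot translate their traffic. With at least four outside addresses, the list is empty.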

The operator is notified when the number of outside IP addresses in a pool is smaller than the number of ISAs or ESAs in the NAT group. The notification is sent in the form of a log, as shown in the example below.

3 2020/04/03 18:48:42.010 CEST MINOR: NAT #2015 Base Resource problem

"The address configuration for pool 'test.' causes one or more ISAs not getting an IP address"

4 2020/04/03 18:48:42.010 CEST MINOR: NAT #2014 Base Resource alarm raised

"The status of the NAT resource problem indication changed to true."

In classic CLI, this configuration is permitted by the CLI, but a message is displayed directly in response to a pool activation.

configure router nat outside pool "test" no shutdown

INFO: BB #1221 The address configuration for this pool causes one or more members not getting an IP address - Router 'Base', pool 'test'

The load balancing mechanism in Layer 2–aware NAT relies on a different algorithm than CGN. In Layer 2–aware NAT, on the inside, traffic is distributed across ISAs and ESAs based on the resource utilization of each ISA or ESA. This load balancing mechanism is control plane driven, in contrast to CGN, which is forwarding plane driven (hashing is based purely on source IP addresses or subnets). In Layer 2–aware NAT, an ESM subscriber is directed to the ISA or ESA hosting the fewest subscribers, hosts, and port blocks, as an aggregate. In Layer 2–aware NAT, traffic is not blackholed when the number of outside IP addresses is smaller than the number of ISAs and ESAs in the pool within a single NAT group. Instead, the outside IP addresses are assigned to some of the ISAs or ESAs and the ESM hosts are directed to those. ISAs and ESAs without assigned outside IP addresses remain unused.

Application compatibility

Applications which operate as servers (such as HTTP, SMTP, and so on) or peer-to-peer applications can have difficulty when operating behind an S-NAPT because traffic from the Internet cannot reach the NAT without a mapping in place.

Different methods can be employed to overcome this, including:

Port forwarding

STUN support

Application Layer Gateways (ALG)

The 7750 SR supports all three methods, following the best-practice RFCs for TCP (RFC 5382, NAT Behavioral Requirements for TCP) and UDP (RFC 4787, Network Address Translation (NAT) Behavioral Requirements for Unicast UDP). Port forwarding is supported on the 7750 SR to allow servers that operate on well-known ports <1024 (such as HTTP and SMTP) to request the appropriate outside port for permanent allocation.

STUN is facilitated by the support of Endpoint-Independent Filtering and Endpoint-Independent Mapping (RFC 4787) in the NAT device, allowing STUN-capable applications to detect the NAT and allow inbound P2P connections for that specific application. Many new SIP clients and IM chat applications are STUN capable.

Application Layer Gateways (ALG) allow the NAT to monitor the application running over TCP or UDP and make appropriate changes in the NAT translations to suit. The 7750 SR has an FTP ALG enabled following the recommendation of the IETF BEHAVE RFC for NAT (RFC 5382).

Even with these three mechanisms, some applications still experience difficulty operating behind a NAT. Because this is an industry-wide issue, forums like UPnP, the IETF, and operator and vendor communities are seeking technical alternatives for application developers to traverse NAT (including STUN support). In many cases, the alternative of an IPv6-capable application gives better long-term support without the cost or complexity associated with NAT.

Large-Scale NAT

Large-Scale NAT (LSN) represents the most common deployment of S-NAPT in carrier networks today. It is already employed by mobile operators around the world for handset access to the Internet.

An LSN is typically deployed in a central network location with two interfaces, the inside toward the customers, and the outside toward the Internet. A Large Scale NAT functions as an IP router and is located between two routed network segments (the ISP network and the Internet).

Traffic can be sent to the LSN function on the 7750 SR using IP filters (ACL) applied to SAPs or by installing static routes with a next-hop of the NAT application. These two methods allow for increased flexibility in deploying the LSN, especially in environments where IP MPLS VPNs are used. In that case, the NAT function can be deployed on a single PE and perform NAT for any number of other PEs by simply exporting the default route.

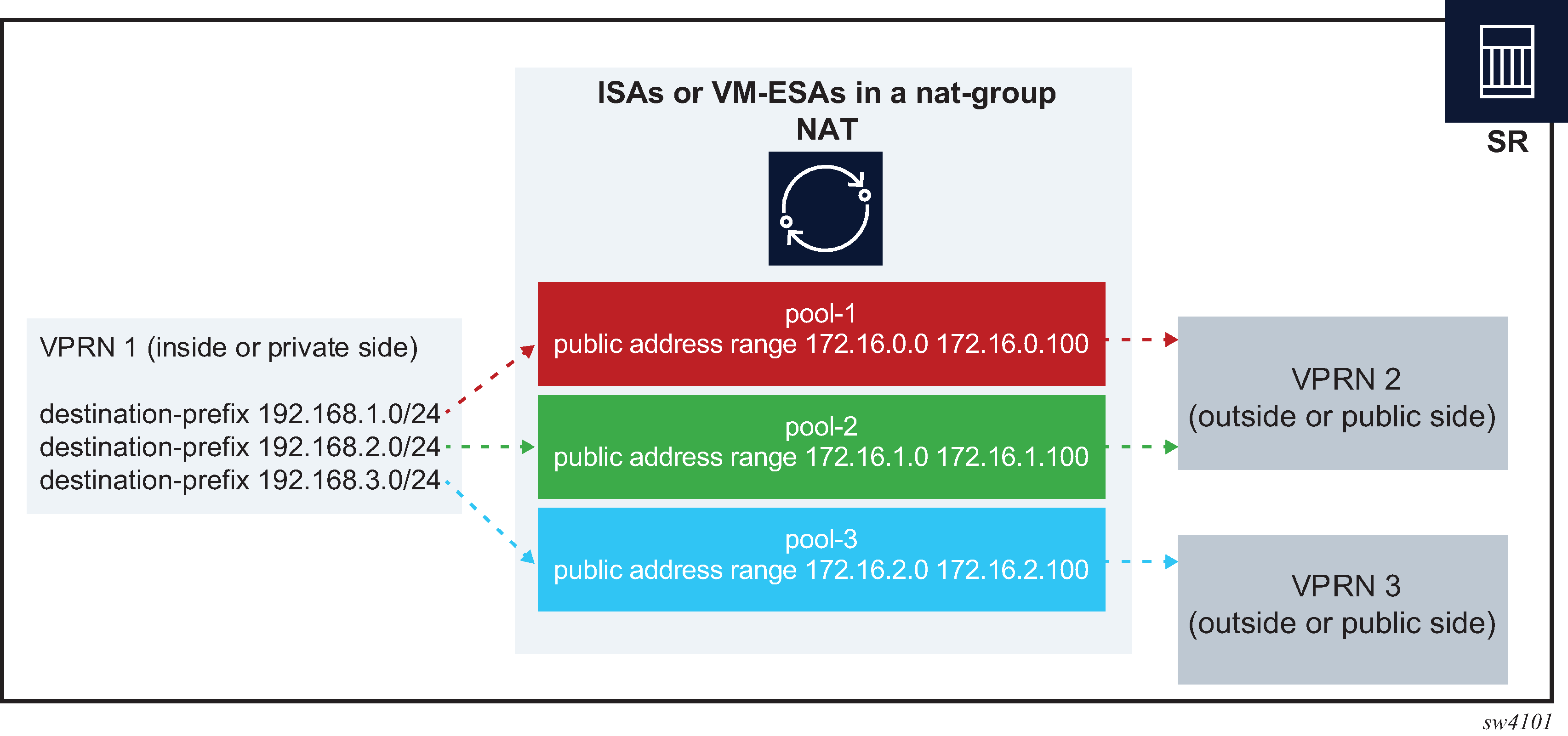

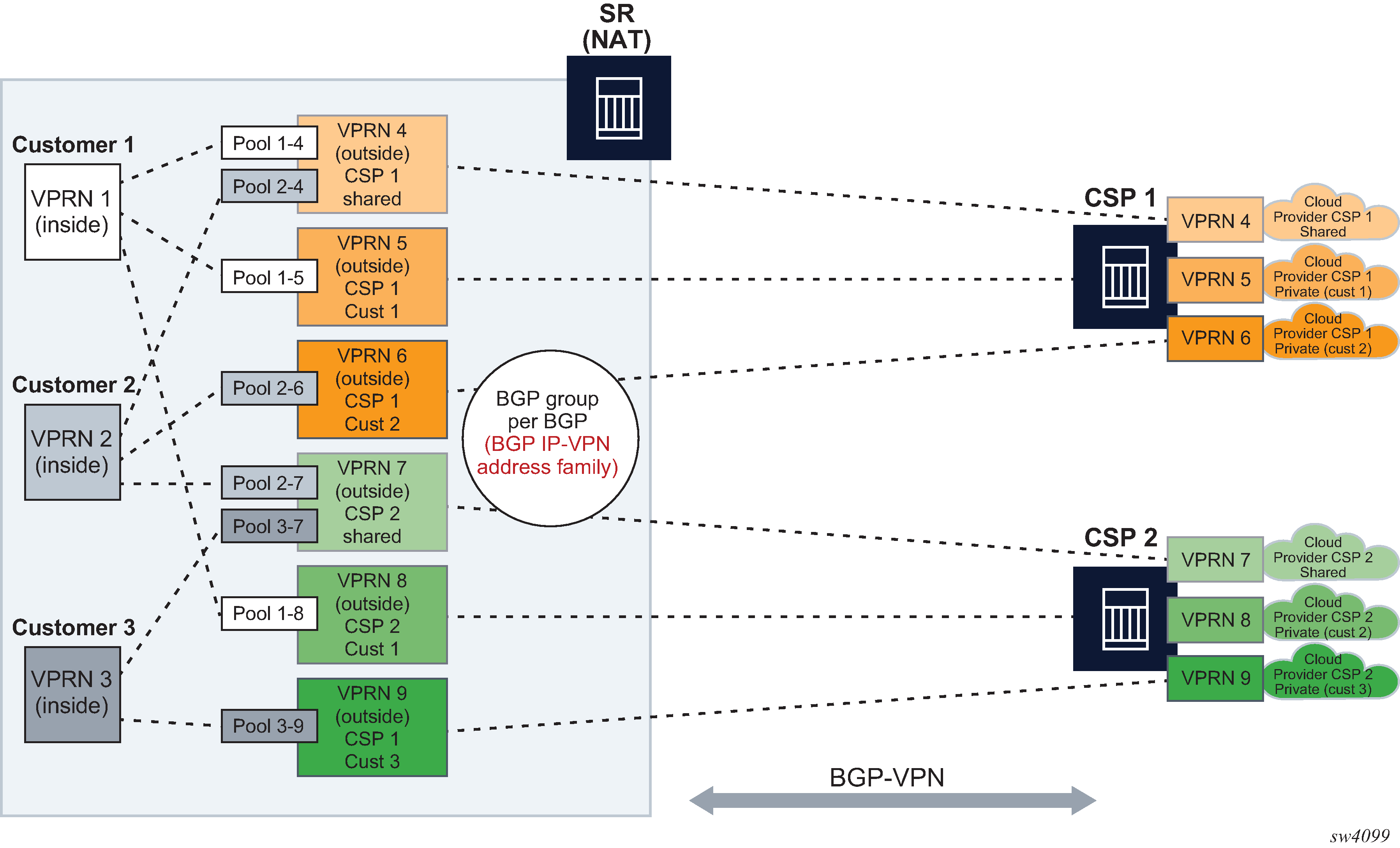

The 7750 SR NAT implementation supports NAT in the base routing instance and in VPRNs. Through NAT, traffic may originate in one VPRN (the inside) and leave through another VPRN or the base routing instance (the outside). This technique can be employed to provide customers of IP MPLS VPNs with Internet access by introducing a default static route in the customer VPRN and NATing the traffic into the Internet routing instance.

Because LSN is deployed between two routed segments, the IP addresses allocated to hosts on the inside must be unique to each host within the VPRN. While RFC 1918 private addresses have typically been used for this in enterprise or mobile environments, challenges can occur in fixed residential environments where a subscriber has an existing S-NAPT in their residential gateway. In these cases, the RFC 1918 private address space in the home network may conflict with the address space assigned to the residential gateway WAN interface. Should a conflict occur, many residential gateways fail to forward IP traffic.

Port range blocks

The S-NAPT service on the 7750 SR BNG incorporates a port range block feature to address the scalability of a NAT mapping solution. With a single BNG capable of hundreds of thousands of NAT mappings every second, logging each mapping as it is created and destroyed for later retrieval (as may be required by law enforcement) could quickly overwhelm the fastest of databases and messaging protocols. Port range blocks address the issue of logging and customer location functions by allocating a block of contiguous outside ports to a single subscriber. Instead of logging each NAT mapping, a single log entry is created when the first mapping is created for a subscriber and a final log entry when the last mapping is destroyed. This can reduce the number of log entries by 5000x or more. An added benefit is that, because the range is allocated on the first mapping, external applications or customer location functions can be populated with this data to identify subscribers in real time, instead of having to query the NAT for the subscriber identity and possibly delay applications.

Port range blocks are configurable as part of outside pool configuration, allowing the operator to specify the number of ports allocated to each subscriber when a mapping is created. When a range is allocated to the subscriber, these ports are used for all outbound dynamic mappings and are assigned in a random manner to minimize the predictability of port allocations (draft-ietf-tsvwg-port-randomization-05).

Port range blocks also serve another useful function in a Large Scale NAT environment, and that is to manage the fair allocation of the shared IP resources among different subscribers.

When a subscriber exhausts all ports in their block, further mappings are prohibited. As with any enforcement system, some exceptions are allowed: the NAT application can be configured with reserved ports so that high-priority applications retain access to outside port resources even when the rest of the block has been exhausted by low-priority applications.
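The port block behavior described in this section can be sketched as follows. This is a simplified model with hypothetical names: it uses one outside IP address, hands out blocks sequentially, picks ports at random inside a block, and does not recycle released blocks.

```python
import random


class PortBlockNat:
    """One outside IP whose ports are handed out in per-subscriber blocks."""

    def __init__(self, outside_ip, first_port, block_size, seed=None):
        self.outside_ip = outside_ip
        self.block_size = block_size
        self.next_start = first_port
        self.rng = random.Random(seed)
        self.blocks = {}   # subscriber -> {"start", "free", "used"}
        self.log = []      # one entry per block allocation or release

    def map(self, subscriber):
        """Create a mapping; return the outside port, or None if the block is exhausted."""
        block = self.blocks.get(subscriber)
        if block is None:
            start = self.next_start
            end = start + self.block_size - 1
            if end > 65535:
                raise RuntimeError("no port blocks left on this outside IP")
            self.next_start += self.block_size
            block = {"start": start, "free": list(range(start, end + 1)), "used": set()}
            self.blocks[subscriber] = block
            self.log.append(("alloc", subscriber, self.outside_ip, start, end))
        if not block["free"]:
            return None
        port = block["free"].pop(self.rng.randrange(len(block["free"])))
        block["used"].add(port)
        return port

    def unmap(self, subscriber, port):
        """Destroy a mapping; release and log the block when it was the last one."""
        block = self.blocks[subscriber]
        block["used"].remove(port)
        block["free"].append(port)
        if not block["used"]:
            del self.blocks[subscriber]
            self.log.append(("free", subscriber, self.outside_ip,
                             block["start"], block["start"] + self.block_size - 1))


nat = PortBlockNat("203.0.113.1", first_port=1024, block_size=4, seed=0)
ports = [nat.map("sub-a") for _ in range(4)]
```

However many mappings the subscriber creates inside the block, the log receives only one allocation entry and one release entry, and a lookup of any outside port in the block identifies the subscriber.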

Reserved ports and priority sessions

Reserved ports allow an operator to configure a small number of ports to be reserved for designated applications should a port range block be exhausted. Such a scenario may occur when a subscriber is unwittingly subjected to a virus or engaged in extreme cases of P2P file transfers. In these situations, instead of blocking all new mappings indiscriminately, the 7750 SR NAT application allows operators to nominate a number of reserved ports and then assign a 7750 SR forwarding class as containing high-priority traffic for the NAT application. Whenever traffic that matches a priority session forwarding class reaches the NAT application, reserved ports are consumed to improve the chances of success. Priority sessions could be used by the operator for services such as DNS, web portal, e-mail, VoIP, and so on, to allow these applications even when a subscriber has exhausted their ports.
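As a rough sketch of the admission decision, assume that the reserved ports are carved out of the subscriber's port block, so that ordinary sessions can use only the non-reserved part while priority sessions can use the whole block. That carving, and the function name `admit`, are assumptions for illustration; the text does not state how the reserved ports are accounted.

```python
def admit(used_ports, block_size, reserved_ports, priority):
    """Return True if a new mapping may be created in the subscriber's block."""
    limit = block_size if priority else block_size - reserved_ports
    return used_ports < limit


# Hypothetical block of 128 ports with 8 reserved for priority sessions.
print(admit(120, 128, 8, priority=False))  # ordinary session once 120 ports are in use
print(admit(120, 128, 8, priority=True))   # priority session, for example DNS
```

In this model, a subscriber whose ordinary traffic has filled the non-reserved part of the block can still open up to eight priority sessions.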

Preventing port block starvation

Dynamic port block starvation in LSN

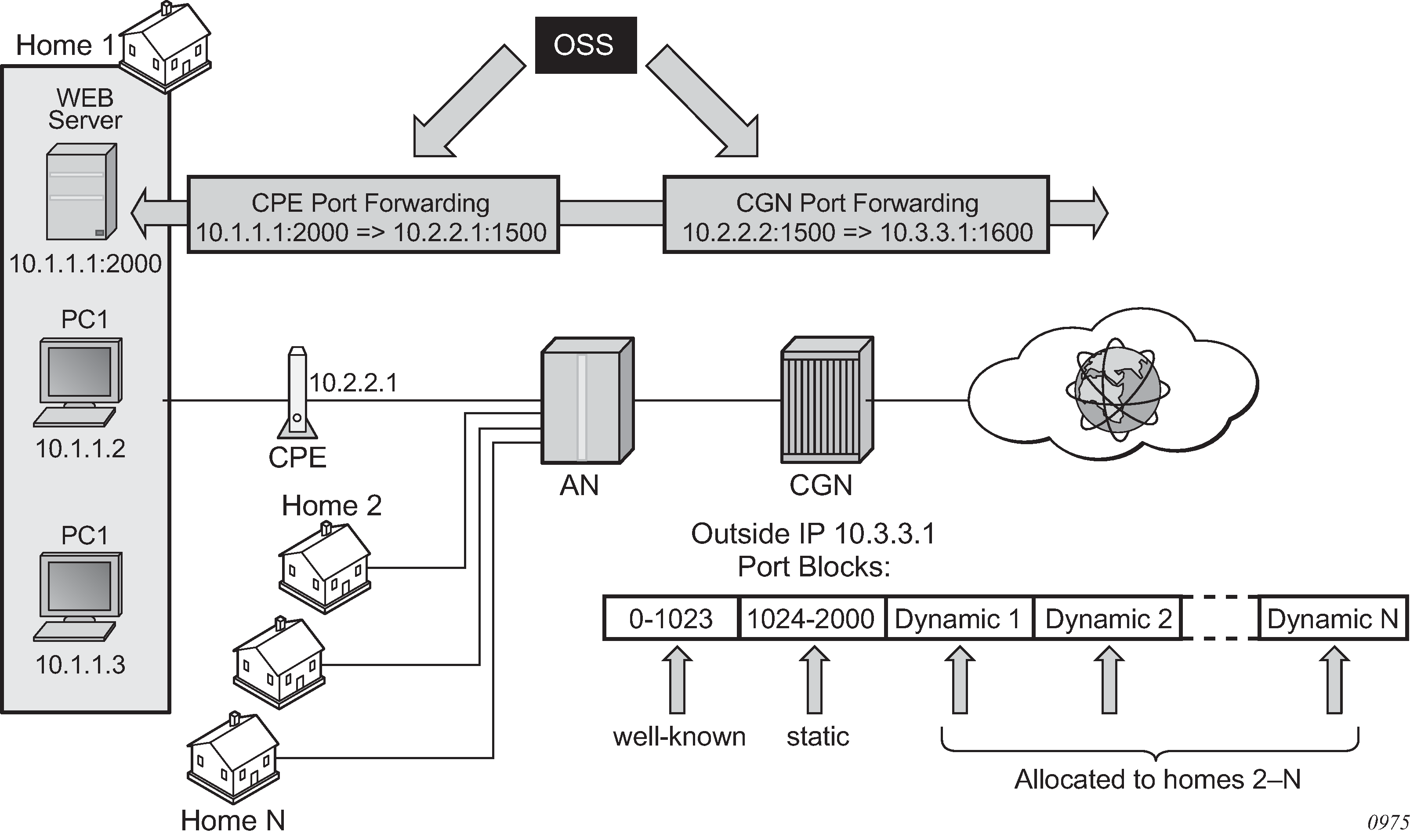

The outside IP address is always shared for the subscriber with a port forward (static or via PCP) and the dynamically allocated port block, insofar as the port from the port forward is in the range >1023. This behavior can lead to starvation of dynamic port blocks for the subscriber. An example for this scenario is shown in Dynamic port block starvation in LSN.

A static port forward for the WEB server in Home 1 is allocated in the CPE and the CGN. At the time of static port forward creation, no other dynamic port blocks for Home 1 exist (PCs are powered off).

Assume that the outside IP address for the newly created static port forward in the CGN is 10.3.3.1.

Over time, dynamic port blocks are allocated for a number of other homes that share the same outside IP address, 10.3.3.1. Eventually those dynamic port block allocations exhaust the entire dynamic port block range for the address 10.3.3.1.

After the dynamic port blocks are exhausted for outside IP address 10.3.3.1, a new outside IP address (for example, 10.3.3.2) is allocated for additional homes.

Eventually the PCs in Home 1 come to life and they try to connect to the Internet. Because of the dynamic port block exhaustion for the IP address 10.3.3.1 (that is mandated by static port forward – Web Server), the dynamic port block allocation fails and consequently, the PCs are not able to access the Internet. There is no additional attempt within CGN to allocate another outside IP address. In the CGN there is no distinction between the PCs in Home 1 and the Web Server when it comes to source IP address. They both share the same source IP address 10.2.2.1 on the CPE.

The solution for this is to reserve a port block (or blocks) during the static port forward creation for the specific subscriber.

Dynamic port block reservation

To prevent starvation of dynamic port blocks for subscribers that use port forwards, a dynamic port block (or blocks) is reserved during the lifetime of the port forward. The reserved dynamic port blocks are associated with the same subscriber that created the port forward. However, a log is not generated until the dynamic port block is actually used and mappings within that block are created.

At the time of the port forward creation, the dynamic port block is reserved in the following fashion:

If the dynamic port block for the subscriber does not exist, then a dynamic port block for the subscriber is reserved. No log for the reserved dynamic port block is generated until the dynamic port block starts being used (mapping created because of the traffic flow).

If the corresponding dynamic port block already exists, it remains reserved even after the last mapping within the last port block has expired.

The reserved dynamic port block (even without any mapping) continues to be associated with the subscriber as long as the port forward for the subscriber is present. The log (syslog or RADIUS) is generated only when there is no active mapping within the dynamic port block and all port forwards for the subscriber are deleted.

Additional considerations with dynamic port block reservation:

The port block reservation should be triggered only by the first port forward for the subscriber. The subsequent port forwards do not trigger additional dynamic port block reservation.

Only a single dynamic port block for the subscriber is reserved (that is, no multiple port-block reservations for the subscriber are possible).
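The starvation scenario and the effect of the reservation can be modeled in a few lines. This sketch tracks only block counts on a single outside IP address; the class, its methods, and the two-block capacity are hypothetical and chosen to keep the example small.

```python
class OutsideIp:
    """Counts dynamic port blocks on one outside IP address."""

    def __init__(self, dynamic_blocks, reserve_on_port_forward):
        self.free_blocks = dynamic_blocks
        self.reserve_on_port_forward = reserve_on_port_forward
        self.reserved = set()        # subscribers holding a reserved, unused block
        self.active = set()          # subscribers holding a block in use
        self.port_forwards = set()

    def add_port_forward(self, subscriber):
        """Only the first port forward of a subscriber triggers a single reservation."""
        first = subscriber not in self.port_forwards
        self.port_forwards.add(subscriber)
        has_block = subscriber in self.active or subscriber in self.reserved
        if self.reserve_on_port_forward and first and not has_block and self.free_blocks > 0:
            self.free_blocks -= 1
            self.reserved.add(subscriber)

    def allocate_dynamic_block(self, subscriber):
        """Return True if the subscriber has, or can obtain, a dynamic port block."""
        if subscriber in self.active:
            return True
        if subscriber in self.reserved:
            self.reserved.remove(subscriber)
            self.active.add(subscriber)
            return True
        if self.free_blocks > 0:
            self.free_blocks -= 1
            self.active.add(subscriber)
            return True
        return False


def home1_gets_block(reserve):
    ip = OutsideIp(dynamic_blocks=2, reserve_on_port_forward=reserve)
    ip.add_port_forward("home-1")          # web server, PCs still powered off
    ip.allocate_dynamic_block("home-2")
    ip.allocate_dynamic_block("home-3")    # fails on this IP when a block is reserved
    return ip.allocate_dynamic_block("home-1")


print(home1_gets_block(False), home1_gets_block(True))
```

Without the reservation, the other homes consume every dynamic block and Home 1 is starved. With it, the block set aside at port forward creation is still available when the PCs in Home 1 come online.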

This feature is enabled with the following commands:

- MD-CLI

configure router nat outside pool port-forwarding dynamic-block-reservation

configure service vprn nat outside pool port-forwarding dynamic-block-reservation

- classic CLI

configure router nat outside pool port-forwarding-dyn-block-reservation

configure service vprn nat outside pool port-forwarding-dyn-block-reservation

These commands can be enabled only if the maximum number of configured port blocks per outside IP address is greater than or equal to the maximum configured number of subscribers per outside IP address. This guarantees that all subscribers (up to the maximum number per outside IP address) configured with port forwards can reserve a dynamic port block.

If the port-reservation is enabled while the outside pool is operational and subscribers traffic is already present, the following two cases must be considered:

The configured number of subscribers per outside IP address is less than or equal to the configured number of port blocks per outside IP address (this is permitted), but all dynamic port blocks per outside IP address are occupied at the moment when port reservation is enabled. This leaves existing subscribers with port forwards that do not have any dynamic port blocks allocated (orphaned subscribers) unable to reserve dynamic port blocks. In this case, the orphaned subscribers must wait until dynamic port blocks allocated to subscribers without port forwards are freed.

The configured number of subscribers per outside IP address is greater than the configured number of port blocks per outside IP address. In addition, all dynamic port blocks per outside IP address are allocated. Before port reservation can be enabled, the subscriber-limit per outside IP address must be lowered (by configuration) so that it is less than or equal to the configured number of port blocks per outside IP address. This action causes random deletion of subscribers that do not have any port forwards. Such subscribers are deleted until the number of subscribers falls to or below the newly configured subscriber limit. Subscribers with static port forwards are not deleted, regardless of the configured subscriber-limit. When the number of subscribers is within the newly configured subscriber-limit, the port reservation can take place, provided that dynamic port blocks are available. If specific subscribers with port forwards have more than one dynamic port block allocated, the orphaned subscribers must wait for those additional dynamic port blocks to expire and consequently be released.

This feature is supported on the following applications: CGN, DS-Lite and NAT64.

Pools with flexible port allocations

Pools with flexible port allocations are specialized pools that allow subscribers to configure per-port allocations instead of per port-block allocations for specific use cases. Logging of port allocations and deallocations within such pools is not facilitated. These pools are compatible with ESA-VM and vISA (VSR) and are not applicable to ISA2. These pools cater to users that have a dedicated private pool. Pools with flexible port allocations ensure that the external IP addresses of the pools are associated with a single user entity or a tenant even before the pool provisioning phase, which eliminates the need for logging.

When pools with flexible port allocations are configured, static port forwards are interspersed with dynamically allocated ports. These pools can only be linked via a NAT policy to a source prefix or to a static port forward. This allows NAT processing of traffic originating solely from the configured source prefix or address. Neither of these two mechanisms inherently steers traffic to the ESA-VM/vISA modules. Therefore, traffic for NAT processing is directed to pools with flexible port allocations based on either a destination prefix or filter criteria. In this case, an explicitly configured NAT policy is not allowed within a destination prefix or a filter. After the traffic is in the ESA-VM/vISA, the pool is selected based on the source prefix or an existing flow created under the static port forward, which does not have a NAT policy explicitly configured.

Execute the following command to configure pools with flexible port allocations:

- MD-CLI

configure router nat outside pool applications flexible-port-allocation true

- classic CLI

configure router nat outside pool flexible-port-allocation

The traffic is steered to the ESA-VM/vISA based on a destination prefix or filter criteria.

- MD-CLI

configure service vprn nat inside large-scale nat-policy

- classic CLI

configure service vprn nat inside nat-policy

The following examples show configured NAT pools with flexible port allocations.

Destination prefix (MD-CLI)

[ex:/configure service vprn nat inside large-scale]

A:admin@node-2# info

traffic-identification {

source-prefix-only true

}

nat44 {

destination-prefix 0.0.0.0/0 {

}

source-prefix 10.10.10.0/24 {

nat-policy "nat-pol-1"

}

source-prefix 10.10.11.0/24 {

nat-policy "nat-pol-1"

}

source-prefix 10.10.12.0/25 {

nat-policy "nat-pol-2"

}

source-prefix 10.10.12.128/25 {

nat-policy "nat-pol-3"

}

}

Destination prefix (classic CLI)

A:node-2>config>service>vprn>nat>inside# info

----------------------------------------------

destination-prefix 0.0.0.0/0

traffic-identification

source-prefix-only

exit

source-prefix 10.10.10.0/24 nat-policy "nat-pol-1"

source-prefix 10.10.11.0/24 nat-policy "nat-pol-1"

source-prefix 10.10.12.0/25 nat-policy "nat-pol-2"

source-prefix 10.10.12.128/25 nat-policy "nat-pol-3"

----------------------------------------------

Filter definition (MD-CLI)

[ex:/configure filter ip-filter "demo-nat" entry 10]

A:admin@node-2# info

match {

protocol 6

dst-ip {

address 0.0.0.0/1

}

dst-port {

eq 2000

}

}

action {

nat {

}

}

Filter definition (classic CLI)

A:node-2>config>filter>ip-filter>entry# info

----------------------------------------------

match protocol 6

dst-ip 0.0.0.0/1

dst-port eq 2000

exit

action

nat

exit

----------------------------------------------

Applying a filter to the ingress interface (MD-CLI)

[ex:/configure service vprn "nat"]

A:admin@node-2# info

admin-state enable

service-id 20

customer "1"

nat {

inside {

large-scale {

traffic-identification {

source-prefix-only true

}

nat44 {

destination-prefix 0.0.0.0/0 {

}

source-prefix 10.10.10.0/24 {

nat-policy "nat-pol-1"

}

source-prefix 10.10.11.0/24 {

nat-policy "nat-pol-1"

}

source-prefix 10.10.12.0/25 {

nat-policy "nat-pol-2"

}

source-prefix 10.10.12.128/25 {

nat-policy "nat-pol-3"

}

}

}

}

}

interface "access" {

admin-state enable

ipv4 {

primary {

address 172.16.102.1

prefix-length 24

}

}

sap lag-4:2 {

ingress {

filter {

ip "demo-nat"

}

}

}

}

Applying a filter to the ingress interface (classic CLI)

A:node-2>config>service>vprn# info

----------------------------------------------

interface "access" create

address 172.16.102.1/24

sap lag-4:2 create

ingress

filter ip 1

exit

exit

exit

nat

inside

destination-prefix 0.0.0.0/0

traffic-identification

source-prefix-only

exit

source-prefix 10.10.10.0/24 nat-policy "nat-pol-1"

source-prefix 10.10.11.0/24 nat-policy "nat-pol-1"

source-prefix 10.10.12.0/25 nat-policy "nat-pol-2"

source-prefix 10.10.12.128/25 nat-policy "nat-pol-3"

exit

exit

no shutdown

----------------------------------------------

Static port forwards in a pool with flexible port allocations

Static Port Forwards (SPF) are supported in pools with flexible port allocations.

Typically, the SPF command requires that the NAT subscriber be mapped to the same pool to which its source prefix is mapped. For instance, a subscriber with the IP address 10.10.12.1, targeted in an SPF command, needs to correspond with the pool related to its source prefix 10.10.12.0/25. This mapping is indirectly configured via the nat-policy parameter in the following command.

Use the following command to configure an SPF.

tools perform nat port-forwarding-action lsn create router "20" ip 10.10.12.1 protocol tcp port 2000 lifetime 3000 outside-ip 192.168.255.160 outside-port 2000 nat-policy "nat-policy-2"

Source prefix configuration MD-CLI

[ex:/configure service vprn "1" nat]

A:admin@node-2# info

inside {

large-scale {

traffic-identification {

source-prefix-only true

}

nat44 {

destination-prefix 0.0.0.0/0 {

}

source-prefix 10.10.11.0/24 {

nat-policy "nat-policy-1"

}

source-prefix 10.10.12.0/25 {

nat-policy "nat-policy-2"

}

source-prefix 10.10.12.128/25 {

nat-policy "nat-policy-3"

}

}

}

}

Source prefix configuration classic CLI

A:node-2>config>service>vprn>nat>inside$ info

----------------------------------------------

destination-prefix 0.0.0.0/0

traffic-identification

source-prefix-only

exit

source-prefix 10.10.11.0/24 nat-policy "nat-policy-1"

source-prefix 10.10.12.0/25 nat-policy "nat-policy-2"

source-prefix 10.10.12.128/25 nat-policy "nat-policy-3"

----------------------------------------------

Failure to match the SPF request with a corresponding NAT policy and source prefix results in SPF creation failure. To circumvent this and enable SPF allocation from a different NAT pool than that of the corresponding source prefix, the NAT policy for SPF must be predeclared within the inside routing context. For example, if SPF is allocated from a different pool the NAT policy must be declared as follows.

tools perform nat port-forwarding-action lsn create router "20" ip 10.10.12.1 protocol tcp port 2000 lifetime 3000 outside-ip 192.168.255.160 outside-port 2000 nat-policy "nat-policy-1"

Use the following command to declare a NAT policy:

- MD-CLI

configure service vprn "esm" nat inside large-scale static-port-forwards spf-nat-policy "nat-policy-1"

- classic CLI

configure service vprn nat inside spf-policy nat-policy "nat-policy-1"

This enables the creation of an SPF for the subscriber with the IP address 10.10.12.1 in a pool different from that associated with the corresponding source prefix. Specifically, the SPF is allocated in the pool indicated by nat-policy-1, diverging from the pool associated with nat-policy-2, which is linked to the source prefix.

Multiple NAT policies can be declared within this configuration hierarchy.
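The matching rule described above can be illustrated with a small Python sketch. It is only a model of the documented behavior, not SR OS code: the function names `policy_for_source` and `spf_allowed` are hypothetical, and the use of longest-prefix matching to pick the source prefix is an assumption.

```python
import ipaddress

# Source-prefix to NAT policy mapping, copied from the example configuration.
SOURCE_PREFIXES = {
    "10.10.11.0/24": "nat-policy-1",
    "10.10.12.0/25": "nat-policy-2",
    "10.10.12.128/25": "nat-policy-3",
}


def policy_for_source(ip):
    """Return the NAT policy of the longest source prefix containing ip, or None."""
    addr = ipaddress.ip_address(ip)
    best = None
    for prefix, policy in SOURCE_PREFIXES.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, policy)
    return best[1] if best else None


def spf_allowed(ip, requested_policy, declared_spf_policies=()):
    """Hypothetical check: the SPF request succeeds if its NAT policy matches
    the policy of the subscriber's source prefix, or if the policy was
    predeclared for SPF use in the inside routing context."""
    return (requested_policy == policy_for_source(ip)
            or requested_policy in declared_spf_policies)


print(spf_allowed("10.10.12.1", "nat-policy-2"))                      # matches the source prefix
print(spf_allowed("10.10.12.1", "nat-policy-1"))                      # not declared
print(spf_allowed("10.10.12.1", "nat-policy-1", ("nat-policy-1",)))   # predeclared for SPF
```

In this model, the request for 10.10.12.1 with nat-policy-1 is accepted only after nat-policy-1 is added to the declared SPF policies.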


Free port limit

The free port limit feature allows the user to configure a limit on free ports per protocol for each external IP address. This avoids rapid port depletion for new subscribers in paired pooling mode, or unnecessary toggling between external IP addresses for existing subscribers in arbitrary pooling mode.

Such port limits ensure that only IP addresses with sufficient free ports, in accordance with the configured limit, are considered for selection and added to the eligible IP address list.
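The selection rule can be sketched in Python as follows. This is an illustrative model only: the function name `eligible_addresses`, the data layout, and the assumption that an address qualifies when its free port count for the protocol is greater than or equal to the limit are not taken from SR OS.

```python
# Hypothetical per-protocol limits; an operator would configure these per pool.
FREE_PORT_LIMIT = {"tcp": 1000, "udp": 1000, "icmp": 1000}


def eligible_addresses(free_ports, protocol, limits=FREE_PORT_LIMIT):
    """Return the outside IPs whose free port count for the given protocol
    meets the configured limit (assumed comparison: free >= limit)."""
    return [ip for ip, free in free_ports.items()
            if free.get(protocol, 0) >= limits[protocol]]


# Free ports currently available on two outside IP addresses (made-up values).
free = {
    "192.0.2.1": {"tcp": 1500, "udp": 200, "icmp": 1000},
    "192.0.2.2": {"tcp": 900, "udp": 5000, "icmp": 1000},
}
print(eligible_addresses(free, "tcp"))
print(eligible_addresses(free, "udp"))
```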

The following example displays configured free port limits in pools with flexible port allocations.

MD-CLI

[ex:/configure router "Base" nat outside pool "demo" large-scale]

A:admin@node-2# info

flexible-port-allocation {

free-port-limit {

tcp 1000

udp 1000

icmp 1000

}

}

classic CLI

A:node-2>config>router>nat>outside>pool# info

----------------------------------------------

...

flexible-port-allocation

free-port-limit tcp 1000 udp 1000 icmp 1000

exit

...

----------------------------------------------

Restrictions

The following functionalities are not supported for pools with flexible port allocations and are blocked in the CLI:

- referencing this pool in the destination prefix or filter

- destination-based NAT (dNAT)

- PCP

- deterministic NAT

- Layer 2–aware NAT

- 1:1 NAT

- maximum number of subscribers per-IP address

- reservation of ports based on QoS settings (port priorities)

- Stateful Inter-Chassis NAT Redundancy (SICR)

- WLAN-GW or L2 aware firewall-specific functionality (dormant pool or V6 translations)

- scaling profile 1 and scaling profile 3

Association between NAT subscribers and IP addresses in a NAT pool

A NAT subscriber can allocate ports on a single outside IP address or multiple IP addresses in a NAT pool. Nokia recommends that NAT subscribers allocate ports from a single outside IP address. If this IP address runs out of ports, the NAT subscriber runs out of ports. In other words, there is no attempt for a new port to be allocated from a different outside IP address. This method of address allocation to a NAT subscriber is referred to as Paired Address Pooling and is the default behavior in SR OS.

In the alternative method of port allocation, when ports are exhausted on the originally allocated IP address, an attempt is made to allocate ports from another IP address that has free ports available. This results in a NAT subscriber being associated with multiple outside IP addresses. This method is referred to as Arbitrary Address Pooling and can be optionally enabled in SR OS. See RFC 7857, Section 4 for more information.

Arbitrary address pooling may offer more efficient allocation of port blocks across outside IP addresses in a NAT pool, but it may negatively affect some applications. For example, an application may require two channels for communication, a control channel and a data channel, each on a different source port from the client perspective on the inside of the NAT. The control channel may be established on the outside address IP1 and outside port X. If port X is the last free port on IP1, SR OS attempts to allocate the next port Y for the data channel from a different outside address, IP2. If the application is robust enough to accept communication from the same client on two different IP addresses, there are no issues. However, some applications may not support this scenario and the communication fails.
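The difference between the two pooling modes can be sketched as follows. This is a simplified model under stated assumptions: it allocates individual ports rather than port blocks, and the function `allocate_port` and its data layout are hypothetical.

```python
def allocate_port(free_ports, current_ip, arbitrary=False):
    """Pick an outside (ip, port) pair for a new flow of one subscriber.

    free_ports maps an outside IP to its list of free ports (hypothetical
    model). Returns None when no port can be allocated.
    """
    if free_ports.get(current_ip):
        return current_ip, free_ports[current_ip].pop(0)
    if not arbitrary:
        # Paired address pooling: never move to another outside IP.
        return None
    # Arbitrary address pooling: fall back to any IP with free ports.
    for ip, ports in free_ports.items():
        if ports:
            return ip, ports.pop(0)
    return None


pool = {"IP1": [5000], "IP2": [6000, 6001]}
print(allocate_port(pool, "IP1"))                  # control channel uses the last port on IP1
print(allocate_port(pool, "IP1"))                  # paired pooling: allocation fails
print(allocate_port(pool, "IP1", arbitrary=True))  # arbitrary pooling: data channel lands on IP2
```

The last call shows the situation described above, where the data channel of the same client appears on a different outside IP address than the control channel.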

Arbitrary address pooling implies the following:

-

The subscriber limit per outside IP address loses its meaning because the subscriber can now be associated with multiple IP addresses. Hence, the following command cannot be set:

- MD-CLI

configure router nat outside pool large-scale subscriber-limit

- classic CLI

configure router nat outside pool subscriber-limit

For more information about the subscriber-limit command in paired address pooling, see Managing port block space.

-

The number of outside IP addresses in a pool must be at least double the number of ESA-VMs, ISA2s, or vISAs in the NAT group hosting the subscriber. Each subscriber is hashed to a single ESA-VM, ISA2, or vISA; therefore, at least two outside IP addresses must be available per ESA-VM, ISA2, or vISA for the subscriber to use more than one outside IP address.

-

The number of port blocks configured in a NAT policy using the following command is the aggregate limit that a NAT subscriber can be allocated across multiple outside IP addresses.

configure service nat nat-policy block-limit

-

Reserving a port block by SPF configuration (when an SPF is configured before any port blocks are allocated to the subscriber) is not supported. In other words, the following commands are not supported:

- MD-CLI

configure router nat outside pool port-forwarding dynamic-block-reservation

- classic CLI

configure router nat outside pool port-forwarding-dyn-block-reservation

-

Arbitrary address pooling is not supported in Layer 2–aware NAT.

Use the following command to show NAT LSN information for the subscriber.

show service nat lsn-subscribers subscriber

The asterisk (*) next to the IP address field in the output indicates that additional outside IP addresses are associated with this subscriber in this pool.

===============================================================================

NAT LSN subscribers

===============================================================================

Subscriber : [LSN-Host@192.168.1.1]

NAT policy : nat-policy-lsn-deterministic

Subscriber ID : 276824064

-------------------------------------------------------------------------------

Type : classic-lsn-sub

Inside router : "Base"

Inside IP address prefix : 192.168.1.1/32

ISA NAT group : 1

ISA NAT group member : 1

Outside router : 4

Outside IP address : 192.0.0.1*

Use the detailed version of the command to see additional outside IP addresses and port blocks.

Timeouts

Creating a NAT mapping is only one half of the problem – removing a NAT mapping at the appropriate time maximizes the shared port resource. Having ports mapped when an application is no longer active reduces solution scale and may impact the customer experience should they exhaust their port range block. The NAT application provides timeout configuration for TCP, UDP and ICMP.

TCP state is tracked for all TCP connections, supporting both three-way handshake and simultaneous TCP SYN connections. Separate and configurable timeouts exist for TCP SYN, TCP transition (between SYN and Open), established and time-wait state. Time-wait assassination is supported and enabled by default to quickly remove TCP mappings in the TIME WAIT state.

UDP does not have the concept of connection state and is subject to a simple inactivity timer. Company-sponsored research into applications and NAT behavior suggested that some applications, like the BitTorrent Distributed Hash Table (DHT), can make a large number of outbound UDP connections that are unsuccessful. Instead of waiting the default five (5) minutes to time these out, the 7750 SR NAT application supports a udp-initial timeout, which defaults to 15 seconds. When the first outbound UDP packet is sent, the 15-second timer starts; it is only after subsequent packets (inbound or outbound) that the default UDP timer becomes active, greatly reducing the number of UDP mappings.
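The two-stage UDP timer can be modeled with a short sketch. The class `UdpMapping` is hypothetical and only mirrors the behavior described above, using the default values stated in the text; it does not reflect how the NAT application is implemented.

```python
UDP_INITIAL_TIMEOUT = 15   # seconds, default udp-initial timeout from the text
UDP_TIMEOUT = 5 * 60       # seconds, default UDP inactivity timeout from the text


class UdpMapping:
    """Hypothetical model of one UDP NAT mapping and its inactivity timers."""

    def __init__(self, now):
        self.packets = 1          # the first outbound packet created the mapping
        self.last_seen = now

    def on_packet(self, now):
        """Record a subsequent packet (inbound or outbound)."""
        self.packets += 1
        self.last_seen = now

    def expired(self, now):
        # Only the first packet was seen: the short initial timeout applies.
        timeout = UDP_INITIAL_TIMEOUT if self.packets == 1 else UDP_TIMEOUT
        return now - self.last_seen >= timeout


unanswered = UdpMapping(now=0)
print(unanswered.expired(15))   # True: no reply within the initial timeout

active = UdpMapping(now=0)
active.on_packet(now=10)
print(active.expired(100))      # False: the regular UDP timeout now applies
```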

Layer 2–aware NAT

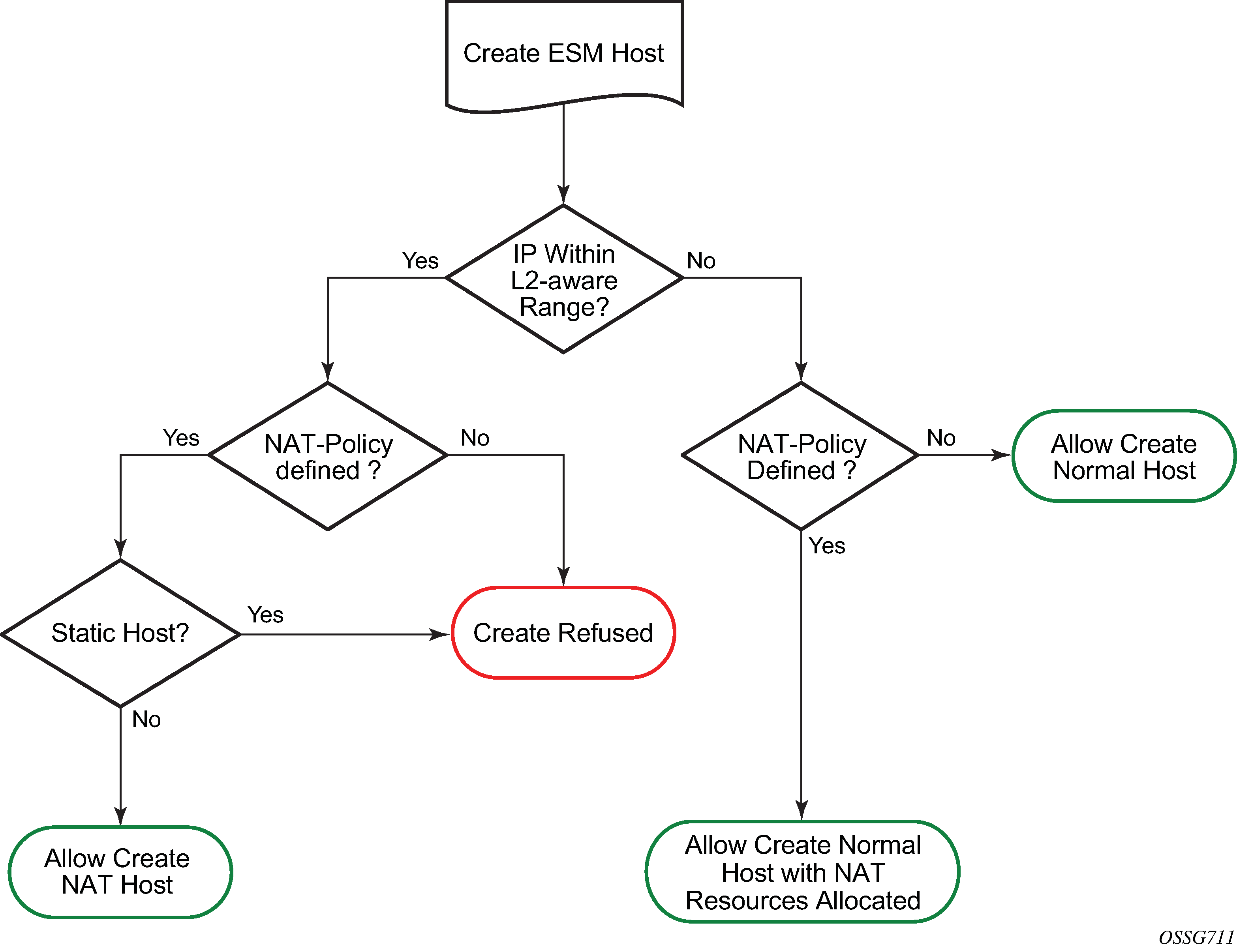

L2-Aware tree shows the L2-Aware tree.

NAT is supported on DHCP, PPPoE and L2TP. Static and ARP hosts are not supported.

Layer 2–aware (or subscriber-aware) NAT is combined with Enhanced Subscriber Management on the 7750 SR BNG to overcome the issues of colliding address space between home networks and the inside routed network between the customer and Large Scale NAT.

Layer 2–aware NAT allows every broadband subscriber to be allocated the exact same IPv4 address on their residential gateway WAN link and then translates this address into a public IP address through the NAT application.

Layer 2–aware NAT is supported on any of the ESM access technologies, including PPPoE, IPoE (DHCP), and L2TP LNS. For IPoE, both n:1 (VLAN per service) and 1:1 (VLAN per subscriber) models are supported. A subscriber device operating with Layer 2–aware NAT needs no modification or enhancement; existing address mechanisms (DHCP or PPP/IPCP) are identical to a public IP service. The 7750 SR BNG simply translates all IPv4 traffic into a pool of IPv4 addresses, allowing many Layer 2–aware NAT subscribers to share the same IPv4 address.

More information about Layer 2–aware NAT can be found in draft-miles-behave-l2nat-00.

Port block extensions

Similarly to LSN, a Layer 2–aware NAT subscriber is assigned a single outside IP address per NAT pool, with one or more port blocks tied to the IP address. The outside IP address is shared by multiple subscribers, each with its own unique set of port blocks.

To ensure that a predetermined number of subscribers receive NAT service, an outside IP address and at least one port block on that IP address must be guaranteed. For this reason, the port blocks space in a pool is divided into two partitions:

-

port block space reserved for new Layer 2–aware NAT subscribers

Each new subscriber is guaranteed to receive at least one port block, referred to as the initial port block.

-

port block space reserved for the extended port-blocks of existing NAT subscribers

This port partition can be used by subscribers who exhaust their ports in the initial port block and need additional ports. Depending on availability and configuration, they are assigned additional port blocks.

Without this type of port space partitioning, the outside IP addresses and the NAT pool may become overtaken by users with heavier port consumption. This denies access to NAT services to a majority of users with lower port consumption.

This division of port space is controlled by limiting the number of subscribers per outside IP address and configuring the size of the initial port block.

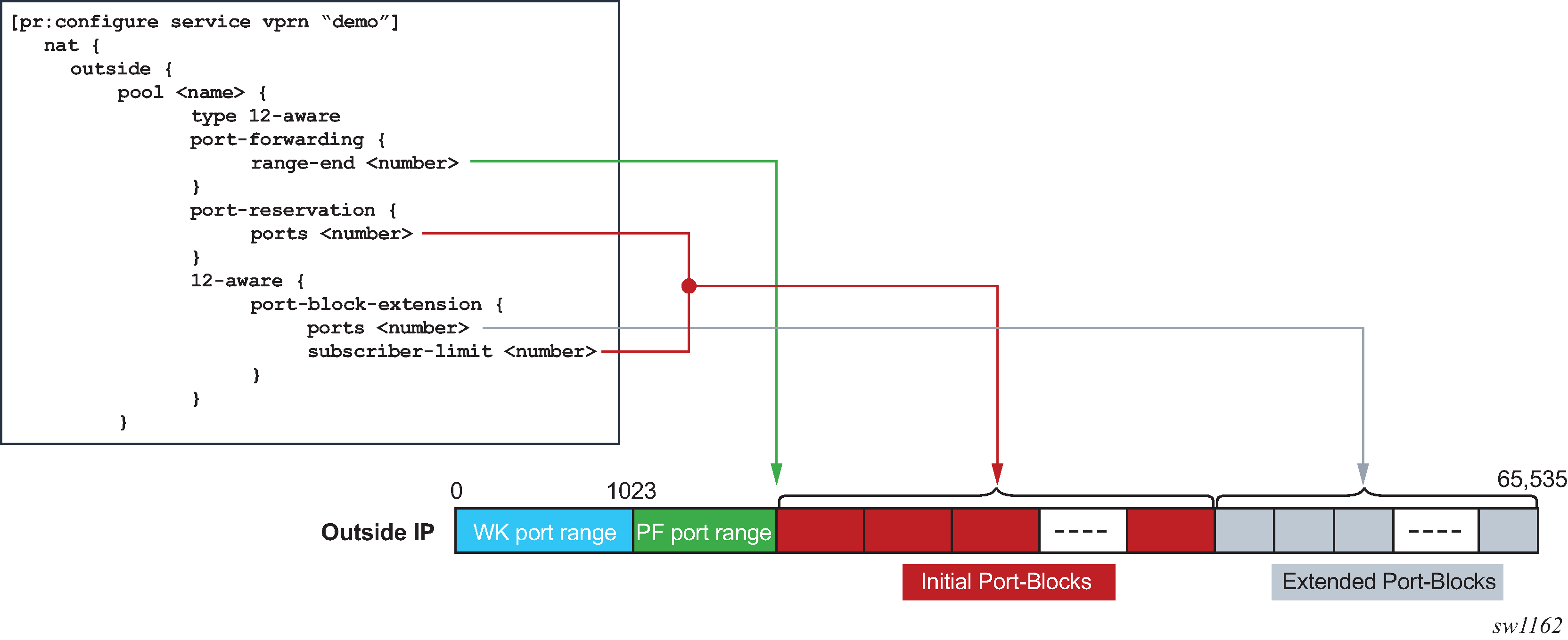

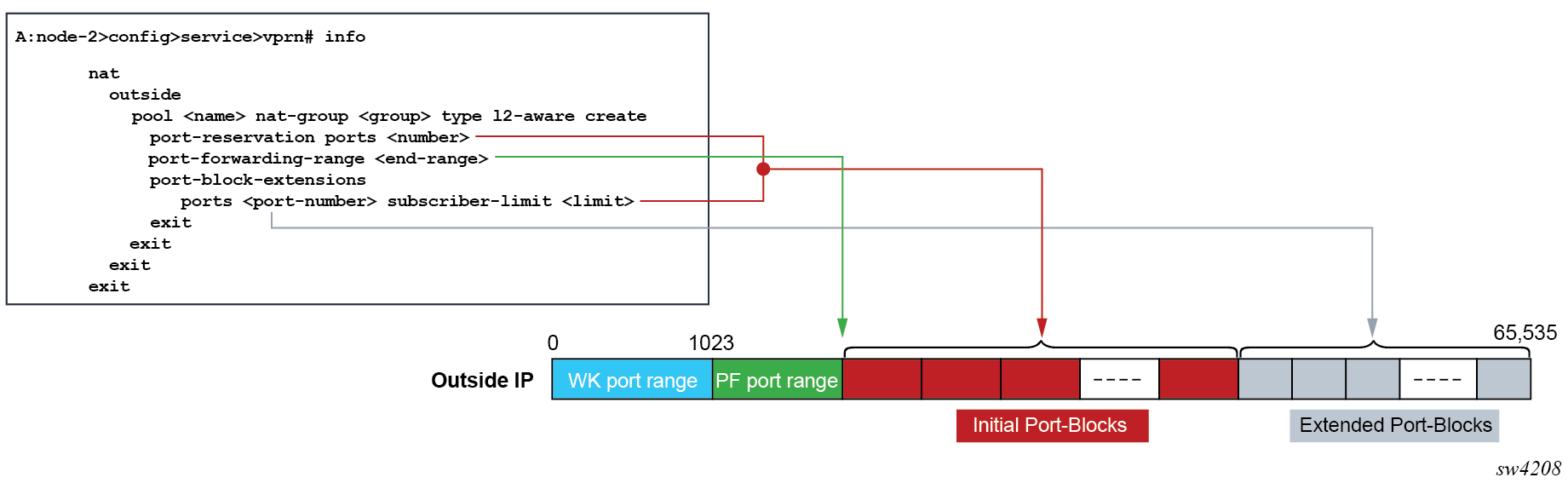

The following shows configuration information relevant to port-block allocation in Layer 2–aware NAT.

Use the following commands to configure the initial port block size for new subscribers:

- MD-CLI

configure service vprn nat outside pool port-reservation port-blocks

configure service vprn nat outside pool port-reservation ports

- classic CLI

configure service vprn nat outside pool port-reservation blocks

configure service vprn nat outside pool port-reservation ports

Note: Only one of the above commands can be specified at a time. The pool type must be l2-aware.

The port-reservation blocks value can be configured only if port-block-extension is not enabled.

The following command configures the extended port block size for existing subscribers and the maximum number of subscribers per outside IP address. The size of the initial port blocks and extended port block may differ.

- MD-CLI

configure service vprn nat outside pool l2-aware port-block-extension ports subscriber-limit

- classic CLI

configure service vprn nat outside pool port-block-extensions ports subscriber-limit

Note: The pool type must be l2-aware.

Use the following command to configure the upper boundary for static port forwarding:

- MD-CLI

configure service vprn nat outside pool port-forwarding range-end

- classic CLI

configure service vprn nat outside pool port-forwarding-range

Port space partitioning for an outside IP address (MD-CLI) and Port space partitioning for an outside IP address (classic CLI) show the effects of the commands.

The maximum number of port blocks that can be allocated per subscriber is configured in the NAT policy by the following command.

configure service nat nat-policy block-limit

Managing port block space

Both port partitions, initial and extended, are served on a first-come-first-served basis. The initial port partition guarantees at least one port block for each of the preconfigured number of subscribers per outside IP address. Use the following command to configure subscriber limits:

- MD-CLI

configure router nat outside pool l2-aware port-block-extension subscriber-limit

- classic CLI

configure router nat outside pool port-block-extension ports subscriber-limit

If there are more subscribers in the network than the preconfigured number of NAT subscribers, then this space becomes oversubscribed.

Extended port partitioning, however, does not guarantee that each existing NAT subscriber receives additional port blocks. Each subscriber can allocate additional free port blocks only if they are available, up to the maximum combined limit (initial and extended) set in the NAT policy using the following command.

configure service nat nat-policy block-limit

For optimized NAT pool management and correct capacity planning, understanding the following command options in the operator’s network is essential:

-

IP address compression ratio (the number of subscribers who share one outside IP address)

-

subscriber oversubscription ratio (the number of NAT subscribers who are active simultaneously)

-

statistical port usage for subscribers (the percentage of subscribers who are heavy, medium, and light port users)

-

port block sizes

Based on the previous command options, an average port block per subscriber can be determined and the following command options in NAT can be set:

-

the subscriber limit per outside IP address configured in the NAT pool

-

the size of the initial and extended port blocks configured in the NAT pool

-

the maximum number of port blocks per subscriber configured in NAT policy

-

the outside IP address range configured in the NAT pool

The following are reasonable guidelines with an example that can serve as an initial configuration for operators who are unsure of their current traffic patterns in terms of port usage for their subscribers.

-

An operator has 10,000 subscribers that require NAT, but only 8,000 of them are active simultaneously. This means that the operator can allow oversubscription of outside (NAT) IP address.

-

Average port usage:

-

60% of the subscribers are light port users with less than 1000 ports.

-

30% of the subscribers are medium port users with less than 2000 ports.

-

10% of the subscribers are heavy port users with less than 4000 ports.

-

These assumptions lead to the following calculations:

-

8,000 active subscribers x (0.6 x 1000 + 0.3 x 2,000 + 0.1 x 4,000) = 12,800,000 total ports.

-

Consider that one outside IP address can accommodate ~50,000 ports (64K ports less the static port forwards and well-known ports). Therefore, 12,800,000 / 50,000 = 256 outside IP addresses (a /24) are needed in the pool.

-

The compression ratio is 8,000 / 256 = 31.25, rounded up to 32 (32 subscribers share one outside IP address); therefore, the subscriber limit equals 32.

Based on this calculation, a reasonable size for the initial port block size is 1000 ports and the extended port block size is 335 ports.

-

The maximum number of port blocks per subscriber is set to 10 to accommodate heavy users with 4,000 ports (1000 + 9 x 335 = 4015).

Setting the subscriber limit in a pool to 32, the initial and extended port block sizes to 1000 and 335 respectively, the maximum number of port blocks per subscriber to 10, and configuring a /24 address range in a pool would produce the needed results. This assumes that the subscribers are properly load-balanced over ISAs or ESAs. The following is an example configuration.

MD-CLI

[ex:/configure service vprn "demo" nat outside pool "demo pool"]

A:admin@node-2# info

type l2-aware

nat-group 1

port-forwarding {

range-end 4119

}

port-reservation {

ports 1000

}

l2-aware {

port-block-extension {

ports 335

subscriber-limit 32

}

}

[ex:/configure service nat nat-policy "demo-policy"]

A:admin@node-2# info

block-limit 10

classic CLI

A:node-2>config>service>vprn>nat>outside$ info

----------------------------------------------

pool "demo pool" nat-group 1 type l2-aware create

shutdown

port-reservation ports 1000

port-forwarding-range 4119

port-block-extensions

ports 335 subscriber-limit 32

exit

exit

---------------------------------------------

A:node-2>config>service>nat>nat-policy$ info

----------------------------------------------

block-limit 10

----------------------------------------------
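The sizing arithmetic from the example above can be reproduced with a short script, which makes it easier to repeat the exercise with different traffic assumptions. The variable names are illustrative; the input values are the ones used in the example.

```python
import math

active_subscribers = 8_000
# (percentage of subscribers, ports per subscriber) for light, medium, heavy users
usage_profile = [(60, 1000), (30, 2000), (10, 4000)]
usable_ports_per_ip = 50_000   # 64K less static port forwards and well-known ports

# Weighted average port usage per active subscriber (integer arithmetic).
avg_ports = sum(pct * ports for pct, ports in usage_profile) // 100
total_ports = active_subscribers * avg_ports
outside_ips = math.ceil(total_ports / usable_ports_per_ip)
subscriber_limit = math.ceil(active_subscribers / outside_ips)

# Port block settings chosen in the example.
initial_block, extended_block, block_limit = 1000, 335, 10
max_ports_per_subscriber = initial_block + (block_limit - 1) * extended_block

print(total_ports, outside_ips, subscriber_limit, max_ports_per_subscriber)
# prints: 12800000 256 32 4015
```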

Layer 2–aware NAT bypass

Layer 2–aware NAT bypass refers to the functionality where all or part of the traffic from a Layer 2–aware NAT-enabled ESM subscriber circumvents the local NAT function. There are three types of bypass supported for Layer 2–aware NAT in SR OS:

-

full ESM host bypass

-

selective ESM host bypass based on an IP filter match

-

entire ESM subscriber bypass because of ISA/ESA failure. This type of bypass is described in NAT redundancy.

Full ESM host bypass

In this type of bypass, a subscriber host is implicitly excluded from Layer 2–aware NAT if its IP address falls outside of the configured subnet in the inside NAT CLI hierarchy under the L2-Aware CLI node.

In the following example, the address under the L2-Aware CLI node (address 10.10.1.254/24) represents the default gateway and a L2-Aware subnet. Hosts with IP addresses within the configured L2-Aware subnet (in this example 10.10.1.0/24) are subjected to Layer 2–aware NAT (the exception is the default gateway address 10.10.1.254). Hosts outside of this IP range bypass NAT. In this way, a mix of hosts under the same L2-Aware enabled ESM subscriber can coexist, some of which are subject to NAT, and some of which are bypassing NAT.

MD-CLI

A:admin@node-2# configure router nat inside l2-aware subscribers 10.10.1.254/24

classic CLI

A:node-2>configure router nat inside l2-aware address 10.10.1.254/24
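The subnet test behind full ESM host bypass can be expressed with Python's standard ipaddress module. This is a sketch of the rule as described in this section, not of the SR OS implementation; the function name `host_is_natted` is hypothetical.

```python
import ipaddress

# The address configured under the L2-Aware node: default gateway plus subnet.
L2_AWARE = ipaddress.ip_interface("10.10.1.254/24")


def host_is_natted(host_ip):
    """Return True if the host falls inside the L2-Aware subnet (and is not
    the default gateway address itself); otherwise the host bypasses NAT."""
    addr = ipaddress.ip_address(host_ip)
    return addr in L2_AWARE.network and addr != L2_AWARE.ip


print(host_is_natted("10.10.1.10"))   # True: inside 10.10.1.0/24
print(host_is_natted("10.10.2.10"))   # False: outside the subnet, bypasses NAT
```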

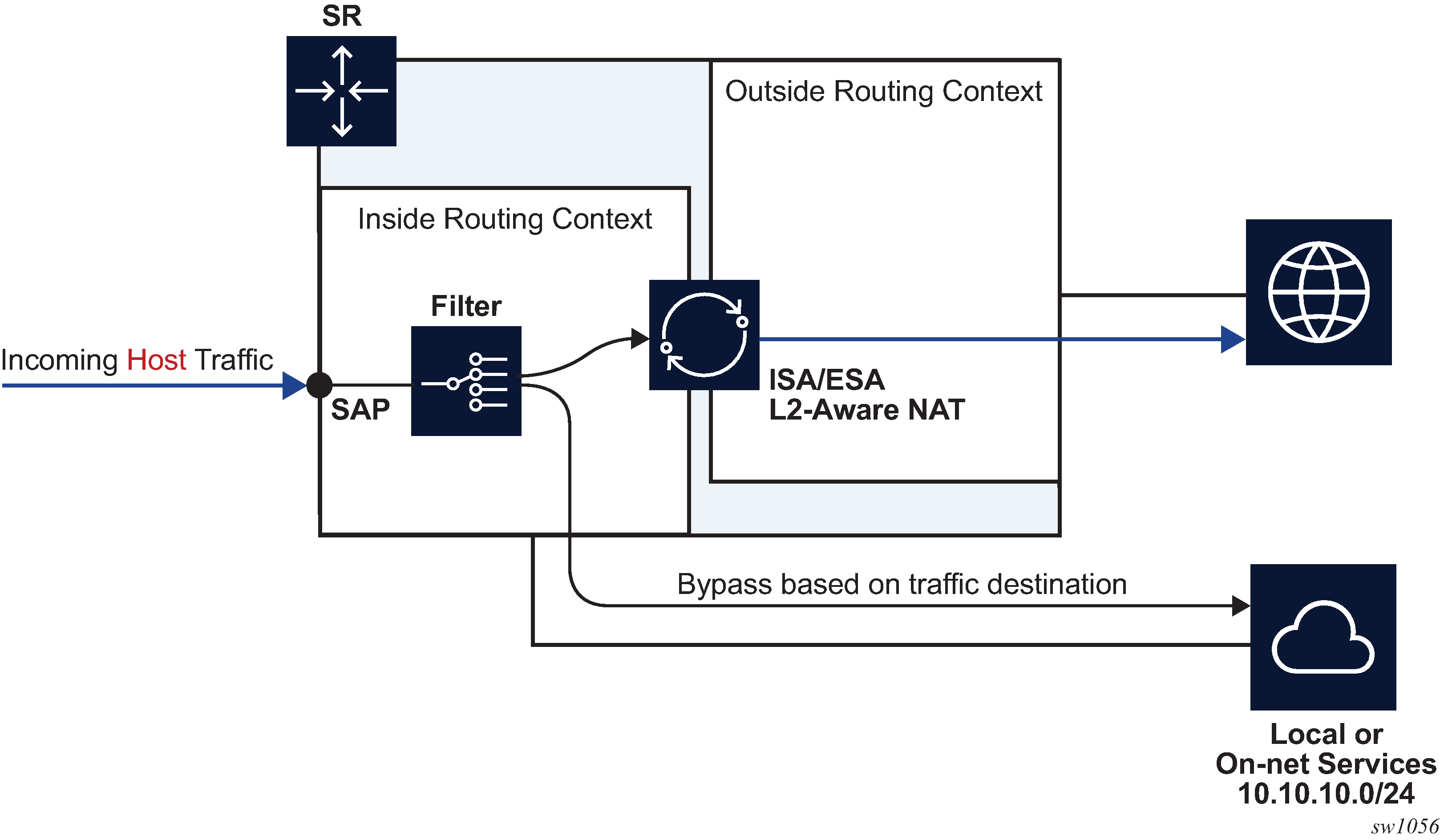

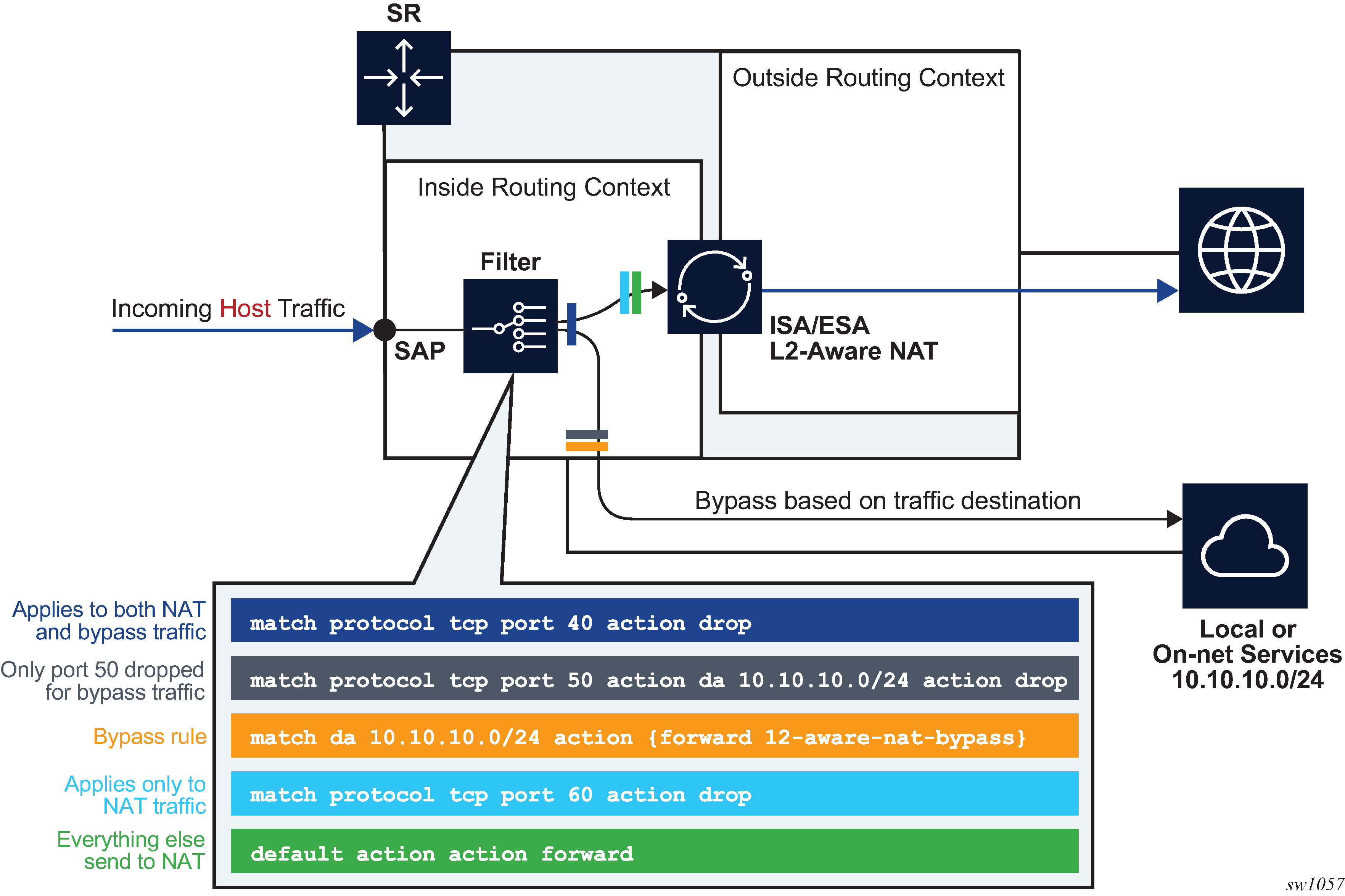

Selective Layer 2–aware NAT bypass

In selective Layer 2–aware NAT bypass, a decision whether to perform NAT is made based on the traffic classifiers (match conditions) defined in an IP filter applied to an ESM host.

A typical use case for selective Layer 2–aware NAT bypass is based on destinations, where on-net services must be accessed without NAT, while other off-net destinations require NAT. Traffic to those on-net services is identified based on the destination IP addresses (L2-Aware bypass based on traffic destination).

Layer 2–aware NAT subscribers that are candidates for selective bypass in SR OS must first be identified and enabled using the following command:

- MD-CLI

configure subscriber-mgmt sub-profile nat allow-bypass

- classic CLI

configure subscriber-mgmt sub-profile nat-allow-bypass

After the selective Layer 2–aware NAT bypass is enabled, the determination of whether specific traffic from a host bypasses NAT comes via an IP filter with the following action.

configure filter ip-filter entry action l2-aware-nat-bypass

This action must be configured in addition to the existing action below:

- MD-CLI

configure filter ip-filter entry action accept

- classic CLI

configure filter ip-filter entry action forward

This defined set of actions diverts identified traffic away from NAT.

Although most typical use cases require traffic identification based on destination IP addresses, generic match statements in IP filters allow identification of traffic based on any Layer 3 fields.

The filter entries are executed in top-to-bottom order as shown in Filtering example for Layer 2–aware NAT bypass.

Configuration options for selective Layer 2–aware NAT bypass describes the behavior in relation to the three configuration options that directly influence selective Layer 2–aware NAT bypass.

| Layer 2–aware NAT-enabled host | Selective bypass enabled | IP filter action l2-aware-nat-bypass with accept (MD-CLI) or forward (classic CLI) | Behavior |
|---|---|---|---|
| Yes | Yes | Yes | Selective bypass is in effect. |
| Yes | Yes | No | The host is enabled for bypass, but without the corresponding IP filter action. Bypass is not in effect and all traffic from the host is NAT’d. After the bypass action is provided via the IP filter, traffic identified in the IP filter is bypassed. |
| Yes | No | Yes | The host is not enabled for bypass, but the IP filter is configured for bypass. This is an incorrect condition where host traffic is bypassed in the upstream direction but not in the downstream direction. As a result, downstream traffic is dropped. |
| Yes | No | No | The host is not enabled for bypass. All host traffic is NAT’d. |
| No | Yes | Yes | The host is not a Layer 2–aware NAT host. This is a full bypass case. |
| No | Yes | No | The host is not a Layer 2–aware NAT host. This is a full bypass case. |
| No | No | Yes | The host is not a Layer 2–aware NAT host. This is a full bypass case. |
| No | No | No | The host is not a Layer 2–aware NAT host. This is a full bypass case. |
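The combinations in the table can be condensed into a small decision function, which may help when checking a planned configuration. The function name `bypass_behavior` and the returned strings are illustrative; the logic follows the table rows only.

```python
def bypass_behavior(l2_aware_host, bypass_enabled, filter_bypass_action):
    """Map the three configuration options to the behavior listed in the table."""
    if not l2_aware_host:
        # Not a Layer 2-aware NAT host: the other two options are irrelevant.
        return "full bypass"
    if bypass_enabled and filter_bypass_action:
        return "selective bypass"
    if filter_bypass_action:
        # Filter asks for bypass but the host is not enabled for it.
        return "misconfiguration: downstream traffic dropped"
    # Either bypass is not enabled, or no filter entry requests it.
    return "all traffic NAT'd"


for row in [(True, True, True), (True, True, False),
            (True, False, True), (True, False, False),
            (False, True, True)]:
    print(row, "->", bypass_behavior(*row))
```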

The following are configuration considerations:

-

An ESM host is Layer 2–aware NAT-enabled if the following two conditions are met:

-

the subscriber’s sub-profile contains a NAT policy

-

The host IP address belongs to the subnet configured under one of the following contexts:

- MD-CLI

configure router nat inside l2-aware subscribers

configure service vprn nat inside l2-aware subscribers

- classic CLI

configure router nat inside l2-aware address

configure service vprn nat inside l2-aware address

-

Selective bypass is enabled if the following command is configured under the sub-profile:

- MD-CLI

configure subscriber-mgmt sub-profile nat allow-bypass

- classic CLI

configure subscriber-mgmt sub-profile nat-allow-bypass

-

All configuration options are allowed in the CLI and it is up to the operator to consult Configuration options for selective Layer 2–aware NAT bypass for expected results.

On-line change of the selective NAT bypass

While traffic is flowing, it is possible to change its path from going through NAT to bypassing NAT. This kind of transition while traffic is flowing is referred to as on-line change.

NAT bypass for the Layer 2-aware NAT subscriber can be influenced using NAT access mode configuration command options. See Configuration options for selective Layer 2–aware NAT bypass for information about possible (valid and invalid) combinations of the two.

Use the following command to enable and disable NAT bypass, which is supported by changing the subscriber profile for the ESM subscriber with RADIUS/Gx. Changing the subscriber profile configuration while the profile is in use is not supported.

- MD-CLI

configure subscriber-mgmt sub-profile nat access-mode

- classic CLI

configure subscriber-mgmt sub-profile nat-access-mode

Nokia recommends changing the IP filter action using the following command, which overrides the existing IP filter using RADIUS or Gx.

configure filter ip-filter entry action l2-aware-nat-bypass

NAT bypass verification

Use the following commands to verify that NAT bypass is in effect.

This command shows that the ESM subscriber is a Layer 2–aware NAT subscriber for which bypass is enabled.

show service active-subscribers detail

===============================================================================

Active Subscribers

===============================================================================

-------------------------------------------------------------------------------

Subscriber AL_x0ffx6x0x0x2

(sub_l2-dhcp1)

-------------------------------------------------------------------------------

NAT Policy : pol-B-1

Outside IP : 130.0.0.201

Ports : 1536-1570

NAT Policy : pol-o-1

Outside IP : 19.0.0.87 (vprn101)

Ports : 1024-1055

NAT Policy : pol-o1-1

Outside IP : 130.0.0.68 (vprn601)

Ports : 1152-1349

NAT Policy : pol-o2-1

Outside IP : 130.0.0.222 (vprn602)

Ports : 1152-1349

-------------------------------------------------------------------------------

I. Sched. Policy : N/A

E. Sched. Policy : N/A E. Agg Rate Limit: Max

E. Min Resv Bw : 1

I. Policer Ctrl. : N/A

E. Policer Ctrl. : N/A

I. vport-hashing : Disabled

I. sec-sh-hashing: Disabled

Q Frame-Based Ac*: Disabled

Acct. Policy : N/A Collect Stats : Disabled

ANCP Pol. : N/A

Accu-stats-pol : (Not Specified)

HostTrk Pol. : N/A

IGMP Policy : N/A

MLD Policy : N/A

PIM Policy : N/A

Sub. MCAC Policy : N/A

NAT Policy : pol-o-1

Firewall Policy : N/A

UPnP Policy : N/A

NAT Prefix List : npl-4

Allow NAT bypass : Yes

The following command provides insight into whether the NAT bypass is in effect.

show filter ip

===============================================================================

IP Filter

===============================================================================

Filter Id : 10 Applied : Yes

Scope : Template Def. Action : Drop

Type : Normal

System filter : Unchained

Radius Ins Pt : n/a

CrCtl. Ins Pt : n/a

RadSh. Ins Pt : n/a

PccRl. Ins Pt : n/a

Entries : 1

Description : (Not Specified)

Filter Name : 10

-------------------------------------------------------------------------------

Filter Match Criteria : IP

-------------------------------------------------------------------------------

Entry : 1

Description : (Not Specified)

Log Id : n/a

Src. IP : 0.0.0.0/0

Src. Port : n/a

Dest. IP : 0.0.0.0/0

Dest. Port : n/a

Protocol : Undefined Dscp : Undefined

ICMP Type : Undefined ICMP Code : Undefined

Fragment : Off Src Route Opt : Off

Sampling : Off Int. Sampling : On

IP-Option : 0/0 Multiple Option: Off

Tcp-flag : (Not Specified)

Option-pres : Off

Egress PBR : Disabled

Primary Action : Forward

L2 Aware NAT Bypass : Enabled

Ing. Matches : 0 pkts

Egr. Matches : 0 pkts

===============================================================================

Layer 2–aware NAT destination-based multiple NAT policies

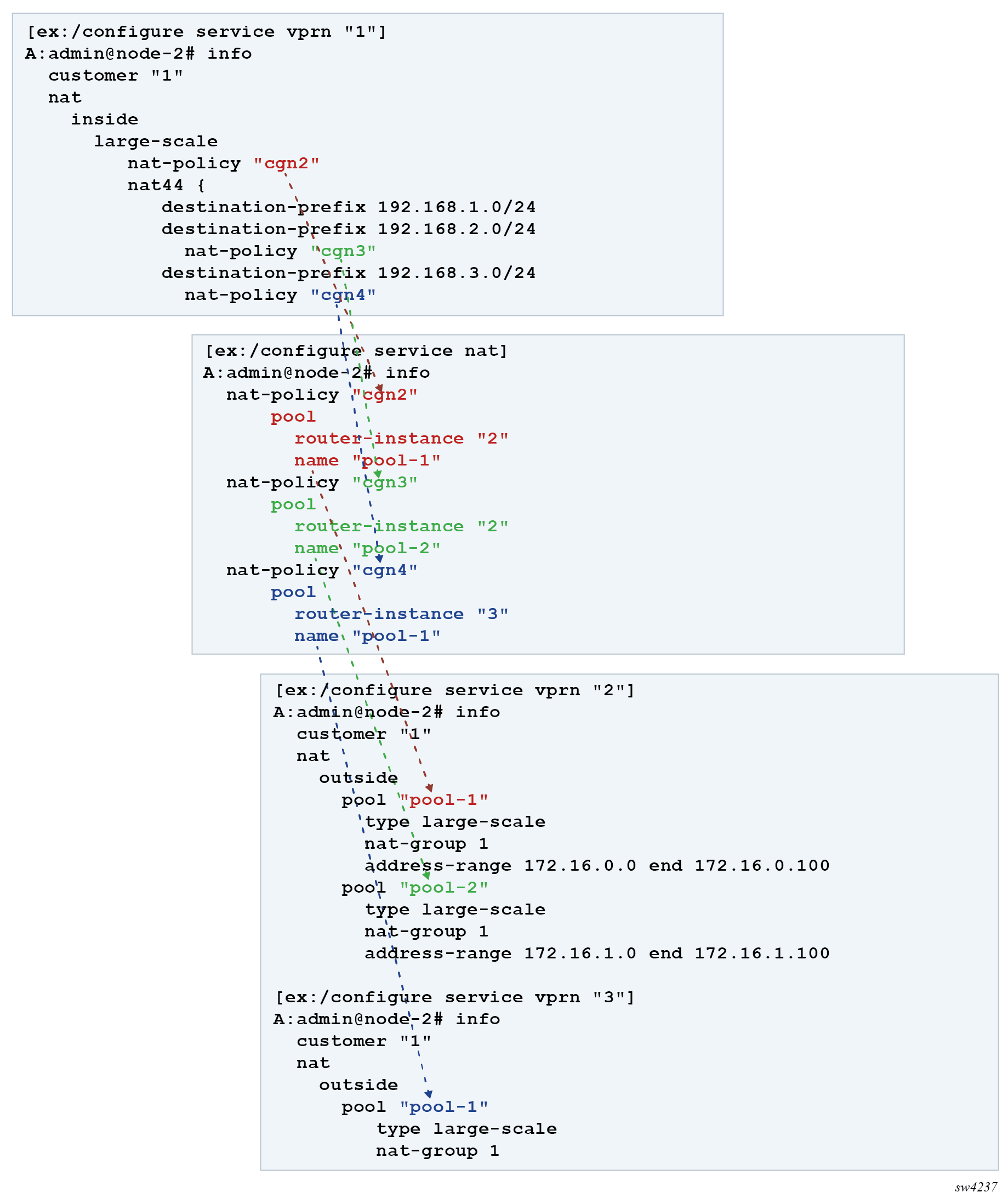

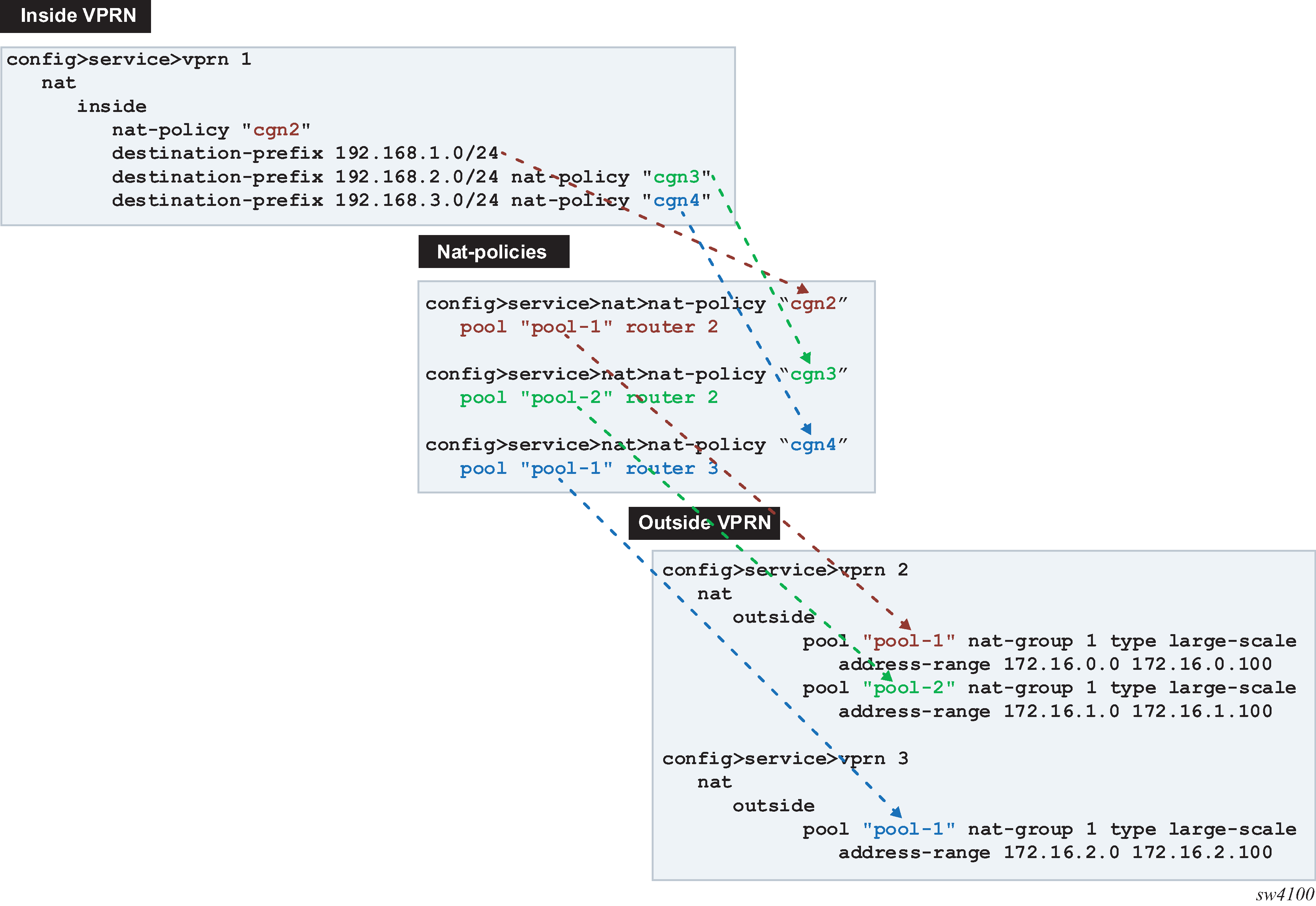

Multiple NAT policies for a L2-Aware subscriber can be selected based on the destination IP address of the packet. This allows the operator to assign different NAT pools and outside routing contexts based on the traffic destinations.

The mapping between the destination IP prefix and the NAT policy is defined in a NAT prefix list. This NAT prefix list is applied to the L2-Aware subscriber through a subscriber profile. After the subscriber traffic arrives at the MS-ISA where NAT is performed, an additional lookup based on the destination IP address of the packet is executed to select the specific NAT policy (and consequently the outside NAT pool). If no specific NAT policy is found in this destination-based lookup, the default NAT policy referenced in the subscriber profile is selected.

MD-CLI

[ex:/configure service]

A:admin@node-2# info

nat {

prefix-list "prefixlist1" {

prefix 192.168.0.0/30 {

nat-policy "l2aw nat policy"

}

prefix 192.168.0.64/30 {

nat-policy "l2aw nat policy"

}

prefix 192.168.0.128/30 {

nat-policy "l2aw nat policy"

}

prefix 192.168.1.0/30 {

nat-policy "another-l2aw-nat-policy"

}

prefix 192.168.1.64/30 {

nat-policy "another-l2aw-nat-policy"

}

prefix 192.168.1.128/30 {

nat-policy "another-l2aw-nat-policy"

}

}

nat-policy "another-l2aw-nat-policy" {

pool {

router-instance "Base"

name "another-l2-aw-nat-pool"

}

}

nat-policy "default-nat-policy" {

pool {

router-instance "Base"

name "default-nat-pool"

}

}

nat-policy "l2aw nat policy" {

pool {

router-instance "Base"

name "l2-aw-nat-pool"

}

}

}

[ex:/configure subscriber-mgmt]

A:admin@node-2# info

sub-profile "sub_profile" {

nat {

policy "default-nat-policy"

prefix-list "prefixlist1"

}

}

classic CLI

A:node-2>config>service# info

----------------------------------------------

service

nat

nat-policy "l2aw-nat-policy" create

pool "l2aw-nat-pool" router 1

exit

nat-policy "another-l2aw-nat-policy" create

pool "another-l2aw-nat-pool" router 2

exit

nat-policy "default-nat-policy" create

pool "default-nat-pool" router Base

exit

nat-prefix-list "prefixlist1" application l2-aware-dest-to-policy create

prefix 192.168.0.0/30 nat-policy "l2aw-nat-policy"

prefix 192.168.0.64/30 nat-policy "l2aw-nat-policy"

prefix 192.168.0.128/30 nat-policy "l2aw-nat-policy"

prefix 192.168.1.0/30 nat-policy "another-l2aw-nat-policy"

prefix 192.168.1.64/30 nat-policy "another-l2aw-nat-policy"

prefix 192.168.1.128/30 nat-policy "another-l2aw-nat-policy"

exit

exit

----------------------------------------------

A:node-2>config>subscr-mgmt# info

----------------------------------------------

subscriber-mgmt

sub-profile "sub_profile" create

nat-policy "default-nat-policy"

nat-prefix-list "prefixlist1"

exit

----------------------------------------------

As displayed in the example above, multiple IP prefixes can be mapped to the same NAT policy.

The NAT prefix list cannot reference the default NAT policy. The default NAT policy is the one that is referenced directly under the subscriber profile.
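The destination-based policy selection can be sketched as a lookup with a fallback. This is a model under stated assumptions: the function `select_policy` is hypothetical, the prefix and policy names come from the classic CLI example, and longest-prefix matching among overlapping prefixes is an assumption rather than documented behavior.

```python
import ipaddress

# Destination prefix to NAT policy mapping, as in the classic CLI example.
PREFIX_LIST = {
    "192.168.0.0/30": "l2aw-nat-policy",
    "192.168.0.64/30": "l2aw-nat-policy",
    "192.168.0.128/30": "l2aw-nat-policy",
    "192.168.1.0/30": "another-l2aw-nat-policy",
    "192.168.1.64/30": "another-l2aw-nat-policy",
    "192.168.1.128/30": "another-l2aw-nat-policy",
}
DEFAULT_POLICY = "default-nat-policy"   # referenced directly in the sub-profile


def select_policy(dest_ip):
    """Return the NAT policy for a destination IP, or the default policy
    when no prefix in the list matches."""
    addr = ipaddress.ip_address(dest_ip)
    matches = []
    for prefix, policy in PREFIX_LIST.items():
        net = ipaddress.ip_network(prefix)
        if addr in net:
            matches.append((net.prefixlen, policy))
    if not matches:
        return DEFAULT_POLICY
    return max(matches)[1]   # assumed: the longest matching prefix wins


print(select_policy("192.168.0.1"))    # l2aw-nat-policy
print(select_policy("192.168.1.65"))   # another-l2aw-nat-policy
print(select_policy("198.51.100.1"))   # default-nat-policy
```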

Logging

In Layer 2–aware NAT with multiple nat-policies, the NAT resources are allocated in each pool associated with the subscriber. This NAT resource allocation is performed at the time when the ESM subscriber is instantiated. Each NAT resource allocation is followed by log generation.

For example, if RADIUS logging is enabled, one Alc-Nat-Port-Range VSA per NAT policy is included in the acct START/STOP message.

Alc-Nat-Port-Range = "192.168.20.1 1024-1055 router base nat-pol-1"

Alc-Nat-Port-Range = "193.168.20.1 1024-1055 router base nat-pol-2"

Alc-Nat-Port-Range = "194.168.20.1 1024-1055 router base nat-pol-3"
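A logging back end that consumes these attributes typically needs to split the value into its fields. The following parser is a minimal sketch that assumes the value always has the five space-separated fields seen in the example above (outside IP, port range, the keyword router, router name, NAT policy name); the attribute may take other forms, and a policy name containing spaces would not parse with this approach. The names `NatPortRange` and `parse_nat_port_range` are hypothetical.

```python
from typing import NamedTuple


class NatPortRange(NamedTuple):
    outside_ip: str
    first_port: int
    last_port: int
    router: str
    nat_policy: str


def parse_nat_port_range(value):
    """Parse a value shaped like '192.168.20.1 1024-1055 router base nat-pol-1'."""
    ip, ports, keyword, router, policy = value.split()
    if keyword != "router":
        raise ValueError("unexpected attribute format: " + value)
    first, last = (int(p) for p in ports.split("-"))
    return NatPortRange(ip, first, last, router, policy)


entry = parse_nat_port_range("192.168.20.1 1024-1055 router base nat-pol-1")
print(entry.outside_ip, entry.first_port, entry.last_port, entry.nat_policy)
```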

RADIUS logging and NAT-policy change via CoA

NAT policy change for Layer 2–aware NAT is supported through a sub-profile change triggered in CoA. However, change of sub-profile alone through CoA does not trigger generation of a new RADIUS accounting message and therefore NAT events related to NAT policy changes are not promptly logged. For this reason, each CoA initiating the sub-profile change in a NAT environment must do one of the following:

-

Change the SLA profile.

-

Include the Alc-Trigger-Acct-Interim VSA in the CoA messages.

Note that the SLA profile has to be changed and not just refreshed. In other words, replacing the existing SLA profile with the same one does not trigger a new accounting message.

Both of these events trigger an accounting update at the time CoA is processed. This keeps NAT logging current. The information about NAT resources for logging purposes is conveyed in the following RADIUS attributes:

-

Alc-Nat-Port-Range-Freed VSA → NAT resources released because of CoA.

-

Alc-Nat-Port-Range VSA → NAT resources in use. These can be the existing NAT resources which were not affected by CoA or they can be new NAT resource allocated because of CoA.

NAT logging behavior because of CoA depends on the deployed accounting mode of operation. This is described in NAT-policy change and CoA in L2-Aware NAT. The interim-update keyword must be configured for host or session accounting for Interim-Update messages to be triggered.

configure subscriber-mgmt radius-accounting-policy session-accounting interim-update

configure subscriber-mgmt radius-accounting-policy host-accounting interim-update

| | Host or session accounting | Queue-instance accounting | Comments |
|---|---|---|---|
| CoA Sub-profile change + ATAI VSA | Single I-U with: | Single I-U with: | Single I-U message is triggered by CoA. |
| CoA Sub-profile change + SLA profile change | First I-U:<br>Second I-U: | Acct Stop:<br>Acct Start: | Two accounting messages are triggered in succession. |
| CoA Sub-profile change | None | None | No accounting messages are triggered by CoA. The next regular I-U messages contain: |
| CoA Sub-profile change + Flame-proofing + ATAI VSA | First I-U:<br>Second I-U: | Acct Stop:<br>Acct Start: | Two accounting messages are triggered in succession. |

Table Legend:

-

AATR (Alc-Acct-Triggered-Reason) VSA: This VSA is optionally carried in Interim-Update messages that are triggered by CoA.

-

ATAI (Alc-Trigger-Acct-Interim) VSA: This VSA can be carried in CoA to trigger an Interim-Update message. The string carried in this VSA is reflected in the triggered Interim-Update message.

-

I-U (Interim-Update Message)
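The first three rows of the table can be condensed into a small decision function. This is only a sketch of the documented outcomes, not SR OS code. The function name, the mode labels `"host-session"` and `"queue-instance"`, and the message labels are hypothetical, and the sketch assumes that the interim-update keyword is configured for host or session accounting.

```python
from typing import List


def accounting_messages(sla_profile_changed: bool, atai_present: bool,
                        mode: str) -> List[str]:
    """Return the accounting messages a sub-profile CoA triggers immediately.

    mode is "host-session" or "queue-instance" (labels chosen for this sketch).
    """
    if mode not in ("host-session", "queue-instance"):
        raise ValueError(f"unknown accounting mode: {mode!r}")
    if sla_profile_changed:
        # Two messages in succession; their type depends on the accounting mode.
        if mode == "queue-instance":
            return ["Accounting-Stop", "Accounting-Start"]
        return ["Interim-Update", "Interim-Update"]
    if atai_present:
        # The ATAI VSA triggers a single Interim-Update in both modes.
        return ["Interim-Update"]
    # Sub-profile change alone: nothing until the next regular Interim-Update.
    return []
```

The SLA profile must actually change for the first branch to apply; replacing the SLA profile with the same one corresponds to `sla_profile_changed=False`.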

For example, the second row of the table describes the outcome triggered by a CoA carrying new sub and SLA profiles. In host/session accounting mode, this creates two Interim-Update messages. The first Interim-Update message carries information about:

-

the released NAT resources at the time when CoA is activated

-

existing NAT resources that are not affected by CoA

-

new NAT resources allocated at the time when CoA is activated

The second Interim-Update message carries information about the NAT resources that are in use (existing and new) when CoA is activated.

From this, the operator can infer which NAT resources are released by CoA and which NAT resources continue to be in use when CoA is activated.
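The inference described above amounts to a set comparison between the NAT resources reported before and after the CoA. The sketch below illustrates this with plain strings in the Alc-Nat-Port-Range format. The function name and the sample values are illustrative assumptions, not logs taken from a real system.

```python
from typing import Dict, Iterable, Set


def classify_nat_resources(before: Iterable[str],
                           after: Iterable[str]) -> Dict[str, Set[str]]:
    """Compare the in-use NAT resources reported before and after a CoA."""
    before_set, after_set = set(before), set(after)
    return {
        "released": before_set - after_set,   # expected in Alc-Nat-Port-Range-Freed
        "unchanged": before_set & after_set,  # not affected by the CoA
        "new": after_set - before_set,        # allocated because of the CoA
    }


# Hypothetical sample: nat-pol-2 is replaced by nat-pol-3, nat-pol-1 is kept.
before = ["192.168.20.1 1024-1055 router base nat-pol-1",
          "193.168.20.1 1024-1055 router base nat-pol-2"]
after = ["192.168.20.1 1024-1055 router base nat-pol-1",
         "194.168.20.1 1024-1055 router base nat-pol-3"]
result = classify_nat_resources(before, after)
```

A collector could cross-check the `released` set against the Alc-Nat-Port-Range-Freed values carried in the first message.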

Delay between the NAT resource allocation and logging during CoA

A NAT policy change induced by CoA triggers immediate log generation (for example, acct STOP or INTERIM-UPDATE) indicating that the NAT resources have been released. However, the NAT resources (outside IP addresses and port blocks) in SR OS are not released for another five seconds. This delay is needed to allow proper termination of the traffic flow between the NAT user and the outside server during the NAT policy transition. A typical example of this scenario is the following:

-

HTTP traffic is redirected to a WEB portal for authentication. Only when the user is authenticated, access to the Internet is granted along with a new NAT policy that provides more NAT resources (larger port-ranges, and so on).

-

After the user is authenticated, CoA is used to change the user forwarding properties (HTTP-redirect is removed and the NAT policy is changed). However, CoA must be sent before the authentication acknowledgment (ACK) message is sent; otherwise, the next new HTTP request would be redirected again.

-

Authentication acknowledgment is sent to the NAT user following the CoA which removed the HTTP redirect and instantiated a new NAT policy. Because the original communication between the WEB portal and the NAT user was relying on the original NAT policy, the NAT resources associated with the original NAT policy must be preserved to terminate this communication gracefully. This is the reason for the delay of five seconds before the NAT resources are freed.

Similar to other stale dynamic mappings, stale port forwards are released after five seconds. Note that static port forwards are kept on the CPM. New CoAs related to NAT are rejected (NAK’d) while the previous change is still in progress, that is, during the five-second interval until the stale mappings are purged.
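The rejection window can be modeled as a simple time gate, which may help when designing an AAA server that retries NAT-related CoAs. The sketch below is an illustrative model only. The class name, the `ACK`/`NAK` return values, and the injectable clock are assumptions made for this example, and the behavior at exactly five seconds is not specified by the text.

```python
import time
from typing import Callable, Optional


class NatCoaGate:
    """Models the window in which a new NAT-related CoA is rejected."""

    PURGE_DELAY = 5.0  # seconds until stale mappings are purged

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._busy_until: Optional[float] = None

    def handle_coa(self) -> str:
        """Return "ACK" if the CoA is accepted, "NAK" if a change is pending."""
        now = self._clock()
        if self._busy_until is not None and now < self._busy_until:
            return "NAK"  # previous NAT policy change still in progress
        self._busy_until = now + self.PURGE_DELAY
        return "ACK"


# Usage with a fake clock so the example does not need to sleep.
fake_time = [0.0]
gate = NatCoaGate(clock=lambda: fake_time[0])
first = gate.handle_coa()    # accepted, window opens
fake_time[0] = 3.0
second = gate.handle_coa()   # inside the five-second window
fake_time[0] = 6.0
third = gate.handle_coa()    # window has elapsed
```

A client that receives a NAK in this situation can wait for the purge interval to elapse before resending the CoA.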

Static port forwards

Unless the specific NAT policy is provided during Static Port Forward (SPF) creation, the port forward is created in the pool referenced in the default NAT policy. A NAT policy can be part of the command used to modify or delete SPF. If the NAT policy is not provided, then the behavior is:

-

If there is only one match, the port forward is modified or deleted.

-

If there is more than one match, the modify or delete command must specify a NAT policy. Otherwise, the modify or delete action fails.

A port forward is considered a match when at least the following command options from the modify or delete command are matched (these are the mandatory command options in the tools perform nat port-forwarding-action lsn command):

-

subscriber identification string

-

inside IP address

-

inside port

-

protocol
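The matching rules above can be sketched as a lookup over the existing port forwards. This is a model of the described behavior, not the SR OS implementation. The `PortForward` type, the `select_port_forward` function, and the sample entries are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PortForward:
    subscriber: str
    inside_ip: str
    inside_port: int
    protocol: str
    nat_policy: str


def select_port_forward(entries: List[PortForward], subscriber: str,
                        inside_ip: str, inside_port: int, protocol: str,
                        nat_policy: Optional[str] = None) -> PortForward:
    """Find the single port forward that a modify or delete action targets."""
    key = (subscriber, inside_ip, inside_port, protocol)
    matches = [e for e in entries
               if (e.subscriber, e.inside_ip, e.inside_port, e.protocol) == key]
    if nat_policy is not None:
        matches = [e for e in matches if e.nat_policy == nat_policy]
    if len(matches) == 1:
        return matches[0]
    if not matches:
        raise LookupError("no matching port forward")
    # More than one match and no NAT policy given: the action fails.
    raise LookupError("ambiguous match: a NAT policy must be specified")


entries = [
    PortForward("sub-1", "10.0.0.1", 8080, "tcp", "nat-pol-1"),
    PortForward("sub-1", "10.0.0.1", 8080, "tcp", "nat-pol-2"),
]
chosen = select_port_forward(entries, "sub-1", "10.0.0.1", 8080, "tcp",
                             nat_policy="nat-pol-2")
```

Calling the function without `nat_policy` on these sample entries raises `LookupError`, which mirrors the failed modify or delete action.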

For a Layer 2-Aware NAT, an alternative AAA interface can be used to specify SPF. An alternative AAA interface and CLI-based port forwards are mutually exclusive. See the 7450 ESS, 7750 SR, and VSR RADIUS Attributes Reference Guide for more details.

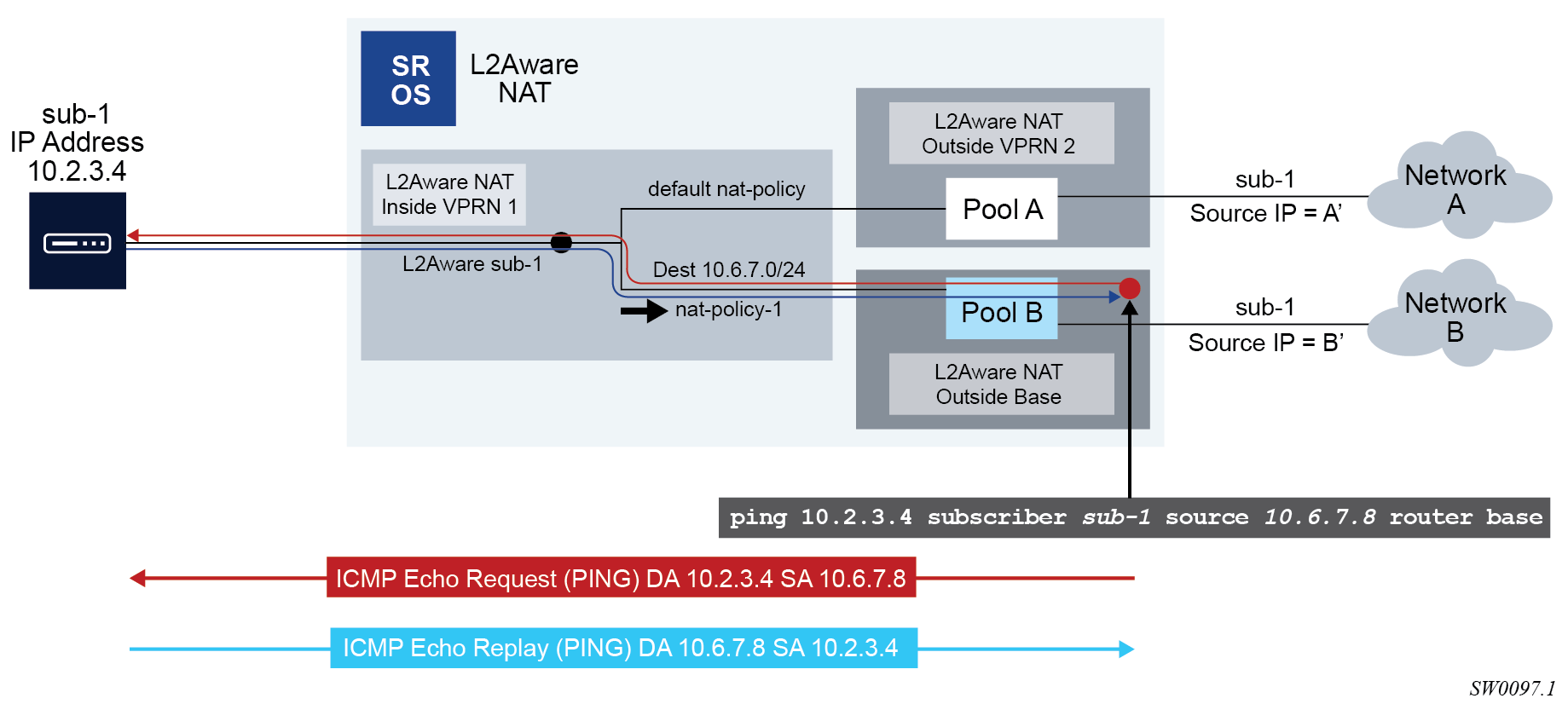

L2-Aware ping

Similar to the non-L2-Aware ping command, understanding how the ICMP Echo Request packets are sourced in L2-Aware ping is crucial for the correct execution of this command and the interpretation of its results. The ICMP Echo Reply packets must be able to reach the source IP address that was used in ICMP Echo Request packets on the SR OS node on which the L2-Aware ping command was executed. See L2-Aware ping.

The return packet (the ICMP Echo Reply sent by the targeted host) is subject to L2-Aware NAT routing executed in the MS-ISA. The Layer 2–aware NAT routing process looks at the destination IP address of the upstream packet and then directs the packet to the correct outside routing context. The result of this lookup is a NAT policy that references the NAT pool in an outside routing context. This outside routing context must be the same as the one from which the L2-Aware ping command was sourced. Otherwise, the L2-Aware ping command fails.

The L2-Aware ping command can be run in two modes:

-

basic mode – the subscriber ID is a required field to differentiate subscriber hosts that are assigned the same IP address (although each host has its own instantiation of this IP address)

- MD-CLI

ping subscriber

- classic CLI

ping subscriber-id

-

extended mode – additional command options can be selected. The two most important command options are the source IP address (source) and the routing context (router).

- MD-CLI

ping subscriber source-address router-instance

- classic CLI

ping subscriber-id source router

The following example shows the traffic flow for an L2-Aware ping command targeting the subscriber’s IP address 10.2.3.4, sourced from the Base routing context using an arbitrary source IP address of 10.6.7.8 (it is not required that this IP address belong to the L2-Aware ping originating node).

When the host 10.2.3.4 replies, the incoming packets with the destination IP address of 10.6.7.8 are matched against the destination-prefix 10.6.7.0/24, which references nat-policy-1. This NAT policy references Pool B, which resides in the Base routing context. Hence, the loop is closed and the execution of the L2-Aware ping command is successful.

MD-CLI

[ex:/configure service nat]

A:admin@node-2# info

prefix-list "prefixlist1" {

prefix 10.6.7.0/24 {

nat-policy "nat-policy-1"

}

}

nat-policy "default nat-policy" {

pool {

router-instance 2

name "Pool A"

}

}

nat-policy "nat-policy-1" {

pool {

router-instance "Base"

name "Pool B"

}

}

[ex:/configure subscriber-mgmt]

A:admin@node-2# info

sub-profile "sub profile" {

nat {

policy "default nat-policy"

prefix-list "prefixlist1"

}

}

classic CLI

A:node-2>config>service>nat# info

----------------------------------------------

nat-policy "default nat-policy" create

pool "Pool A" router 2

exit

nat-policy "nat-policy-1" create

pool "Pool B" router Base

exit

nat-prefix-list "prefixlist1" application l2-aware-dest-to-policy create

prefix 10.6.7.0/24 nat-policy "nat-policy-1"

exit

----------------------------------------------

A:node-2>config>subscr-mgmt# info

----------------------------------------------

sub-profile "sub profile" create

nat-policy "default nat-policy"

nat-prefix-list "prefixlist1"

exit

----------------------------------------------

L2-Aware ping is always sourced from the outside routing context, never from the inside routing context. If the router is not explicitly specified as an option in the L2-Aware ping command, the Base routing context is selected by default. If the Base routing context is not one of the outside routing contexts for the subscriber, the L2-Aware ping command execution fails with the following error message:

“MINOR: OAM #2160 router ID is not an outside router for this subscriber.”
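The two conditions for a successful L2-Aware ping can be sketched as a lookup against the example configuration shown earlier. This is a simplified model, not the MS-ISA implementation. The function names are hypothetical, and the use of longest-prefix matching when several prefixes match is an assumption of this sketch.

```python
import ipaddress

# Values taken from the example configuration: NAT policy -> outside router.
DEFAULT_POLICY = "default nat-policy"
POLICY_ROUTER = {"default nat-policy": "2", "nat-policy-1": "Base"}
# Destination prefix -> NAT policy (prefix list "prefixlist1").
PREFIX_LIST = {ipaddress.ip_network("10.6.7.0/24"): "nat-policy-1"}


def reply_router(source_ip: str) -> str:
    """Outside routing context chosen for the Echo Reply sent to source_ip."""
    addr = ipaddress.ip_address(source_ip)
    matches = [net for net in PREFIX_LIST if addr in net]
    if matches:
        best = max(matches, key=lambda net: net.prefixlen)  # assumed tie-break
        return POLICY_ROUTER[PREFIX_LIST[best]]
    return POLICY_ROUTER[DEFAULT_POLICY]


def l2_aware_ping_ok(source_ip: str, router: str = "Base") -> bool:
    """True if the reply returns to the context the ping was sourced from."""
    if router not in POLICY_ROUTER.values():
        return False  # router is not an outside router for this subscriber
    return reply_router(source_ip) == router


ok_example = l2_aware_ping_ok("10.6.7.8")       # prefix match, Pool B in Base
fails_default = l2_aware_ping_ok("10.9.9.9")    # default policy points to router 2
```

In this model, a source address outside 10.6.7.0/24 only succeeds when the ping is sourced from routing context 2, because the default NAT policy references Pool A in that context.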

UPnP

UPnP uses the default NAT policy.

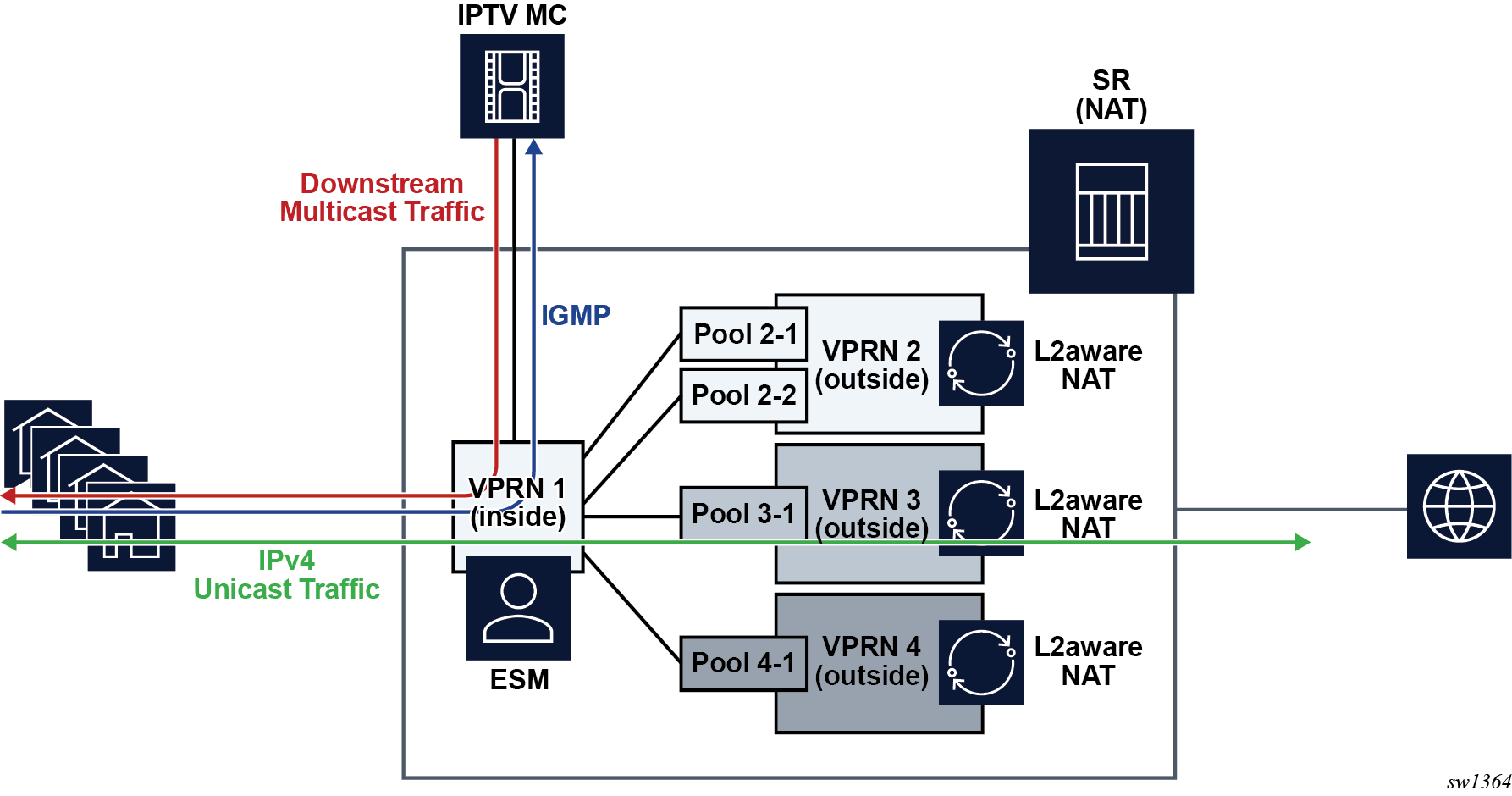

Layer 2–aware NAT and multicast

Multicast traffic through NAT is not supported. However, if the downstream multicast traffic is received in the inside routing context, without going through NAT, the traffic can be forwarded to an L2-Aware host.

To enable this type of traffic, the following entities must be configured:

-

A NAT policy in the sub-profile context. This configuration enables L2-Aware subscribers.

-

An IGMP policy in the sub-profile context. This enables multicast traffic that is originated on the inside.

-

The following command must be enabled in the inside routing context. This configuration enforces the uniqueness of IPv4 addresses of L2-Aware subscribers. Without this configuration, Layer 2–aware NAT supports overlapping subscriber IPv4 addresses in the inside routing context.

configure service vprn nat inside l2-aware force-unique-ip-addresses

configure router nat inside l2-aware force-unique-ip-addresses

The following examples show the configuration of Layer 2–aware NAT and multicast.

MD-CLI

[ex:/configure subscriber-mgmt]

A:admin@node-2# info

sub-profile "demo-profile" {

igmp-policy "demo-mcast"

nat {

policy "demo-nat-pol"

}

}

[ex:/configure router "Base" nat inside]

A:admin@node-2# info

l2-aware {

force-unique-ip-addresses true

subscribers 192.168.100.200/32 { }

}

[ex:/configure service vprn "demo-vprn" nat inside]

A:admin@node-2# info

l2-aware {

force-unique-ip-addresses true

}

classic CLI

A:node-2>config>subscr-mgmt# info

----------------------------------------------

sub-profile "demo-profile" create

nat-policy "demo-nat-pol"

igmp-policy "demo-mcast"

exit

----------------------------------------------

A:node-2>config>service# info

----------------------------------------------

vprn 105 name "demo-vprn" customer 1 create

nat

inside

l2-aware

address 192.168.100.200/32

exit

exit

exit

no shutdown

exit

----------------------------------------------

A:node-2>config>router>nat>inside>l2-aware# info

----------------------------------------------

force-unique-ip-addresses

----------------------------------------------

A:node-2>config>service>vprn>nat>inside>l2-aware$ info

----------------------------------------------

force-unique-ip-addresses

----------------------------------------------

NAT pool addresses and ICMP Echo Request/Reply (ping)

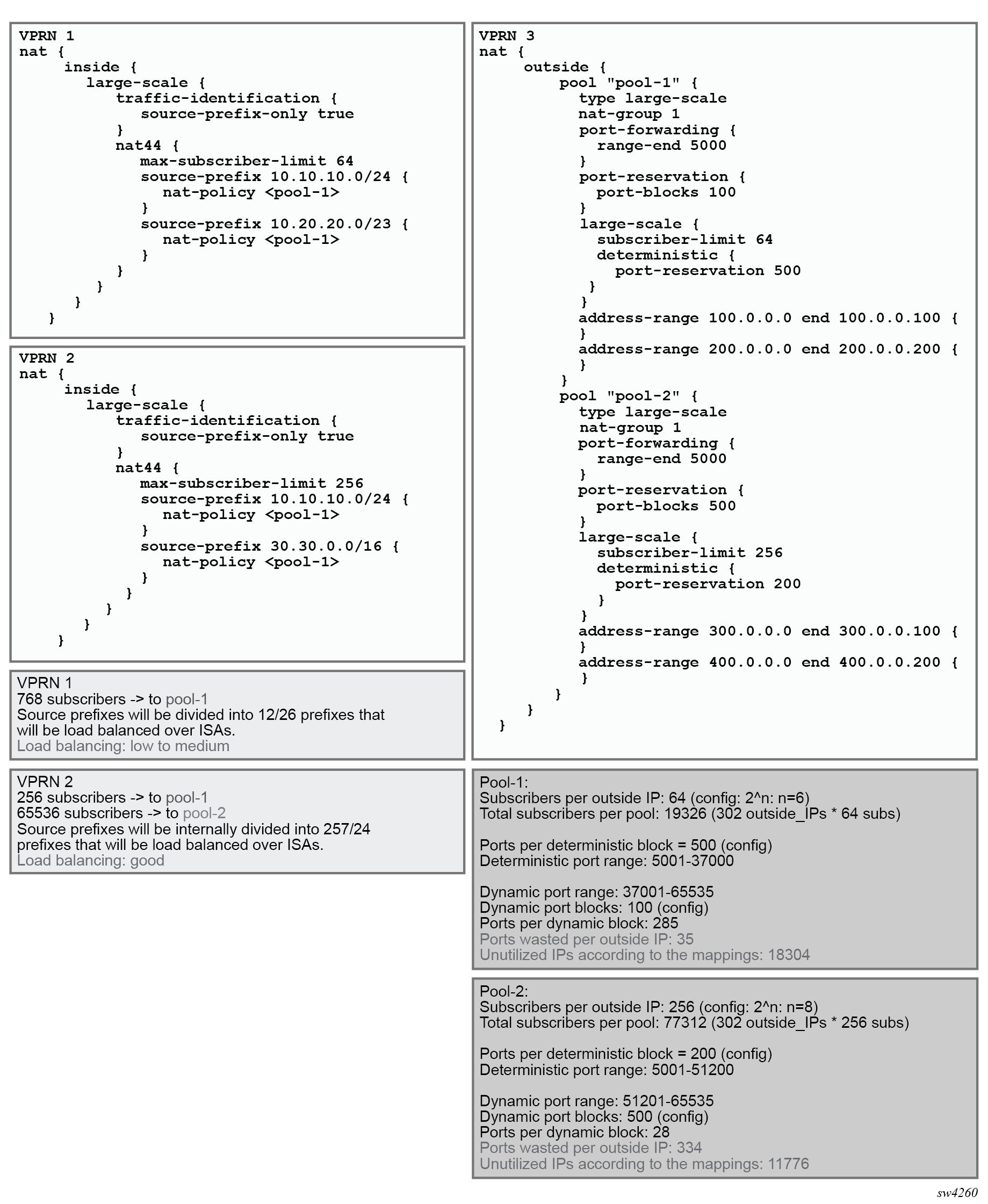

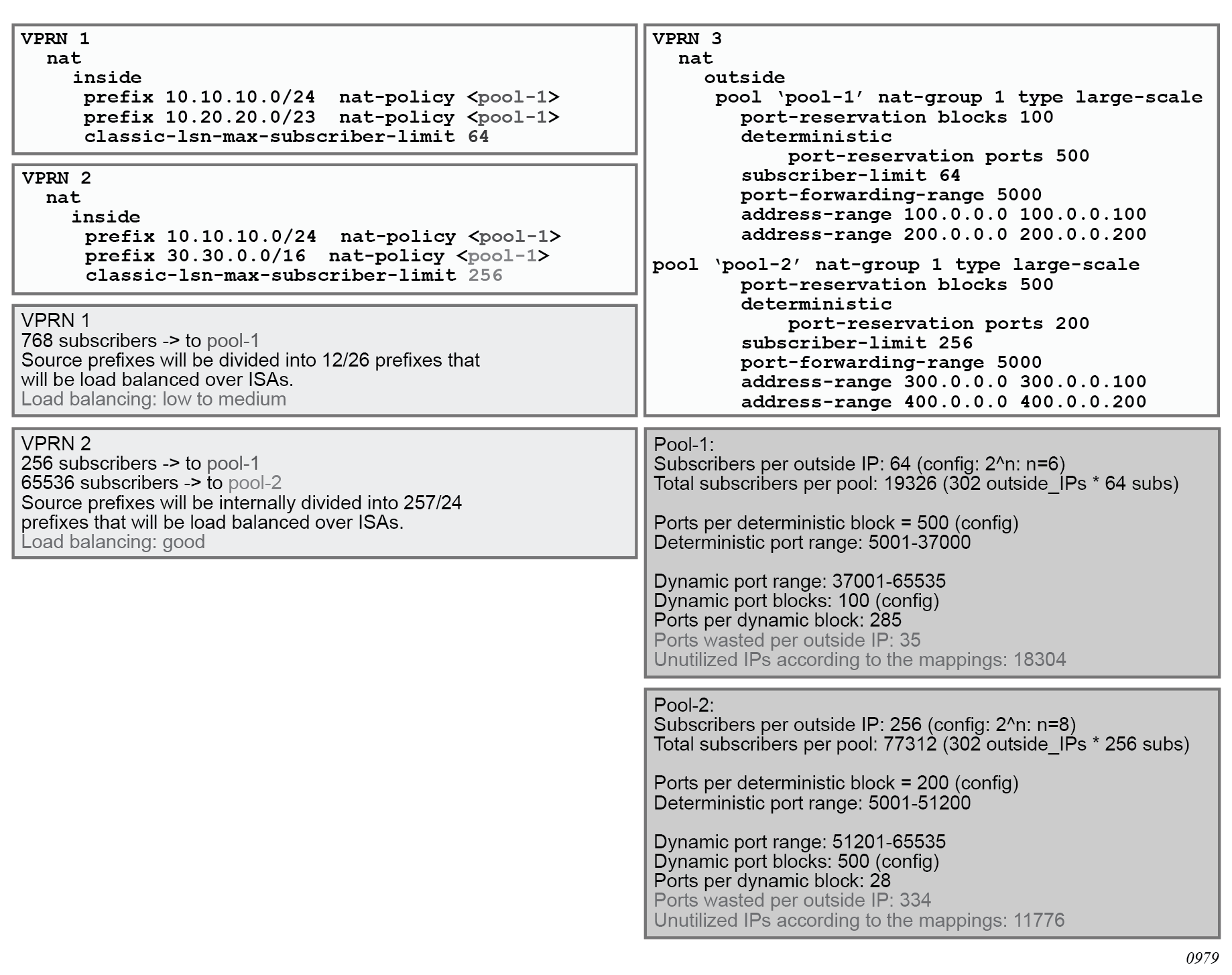

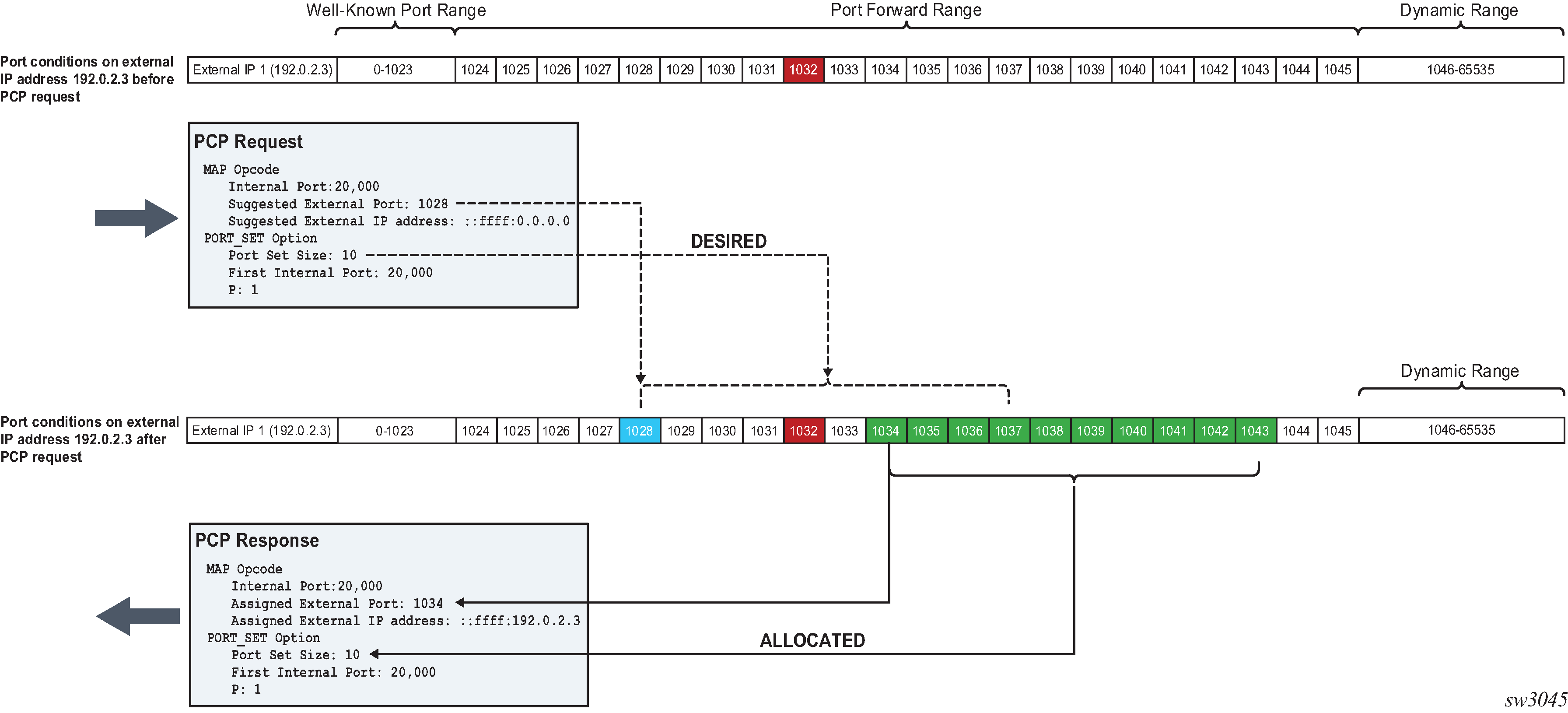

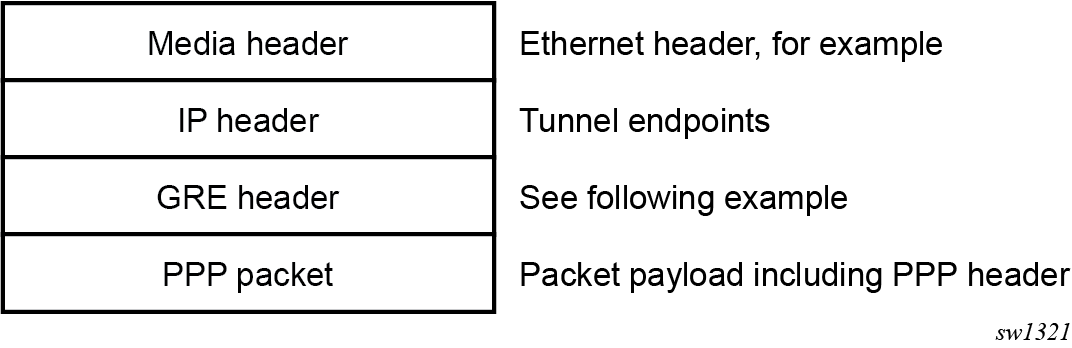

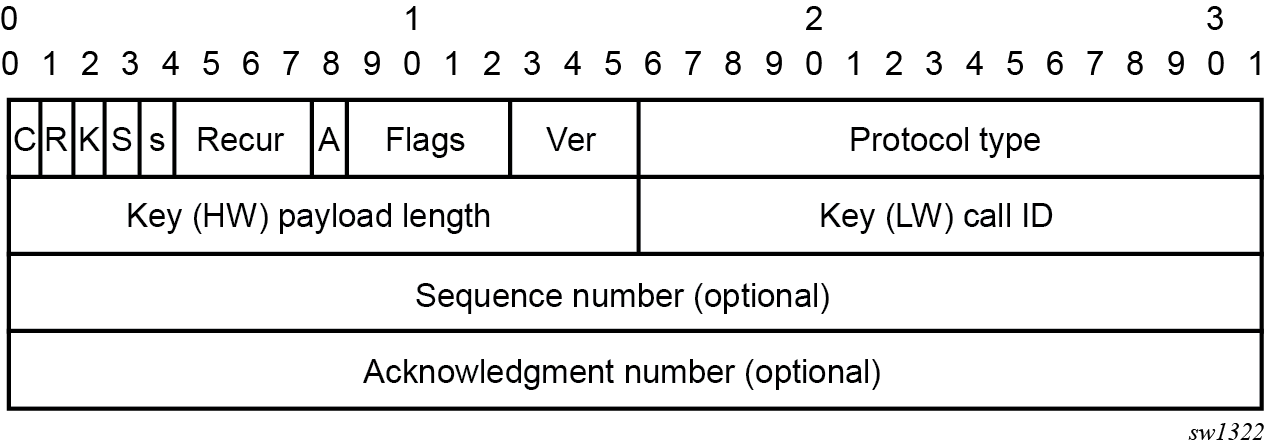

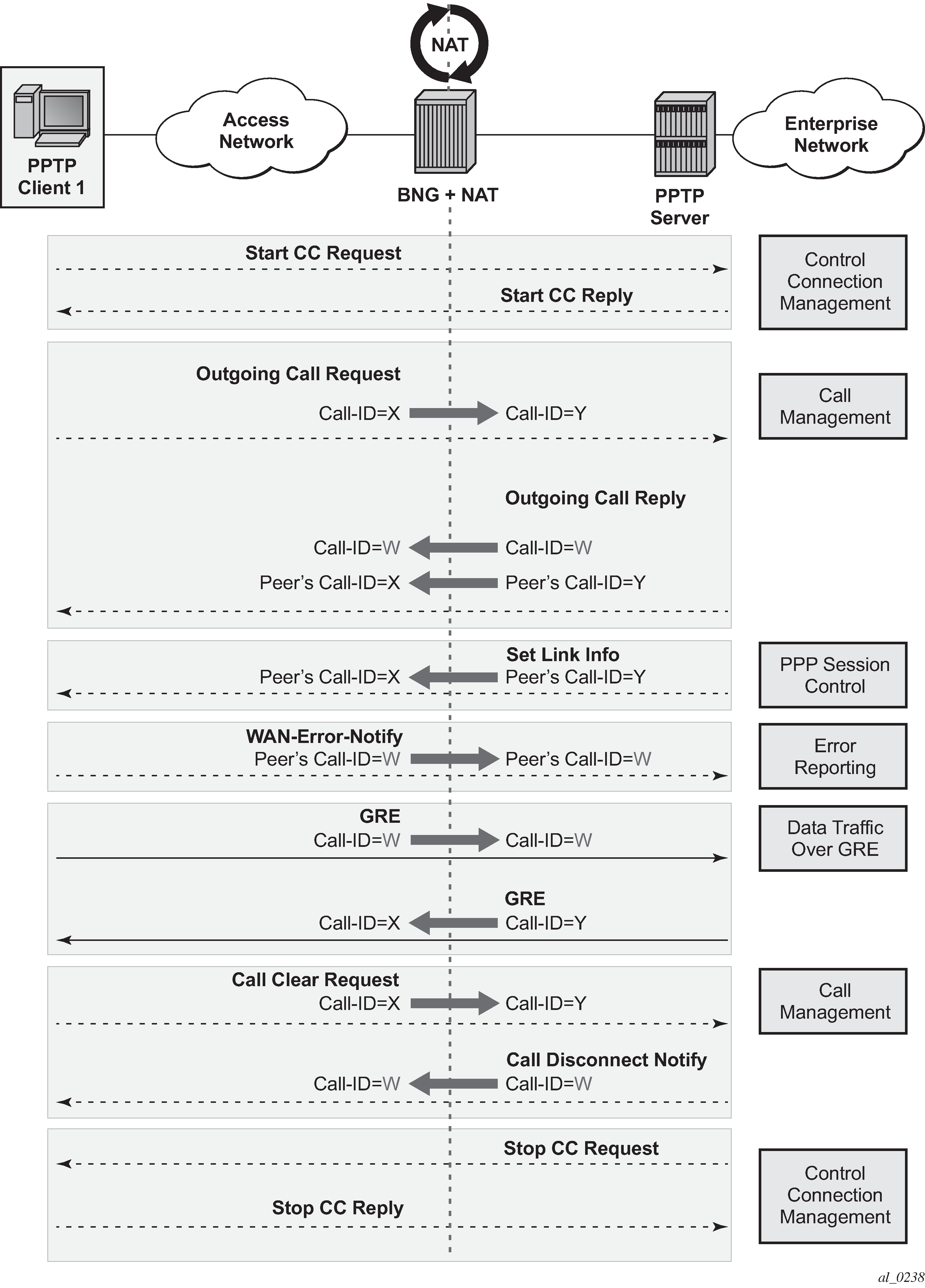

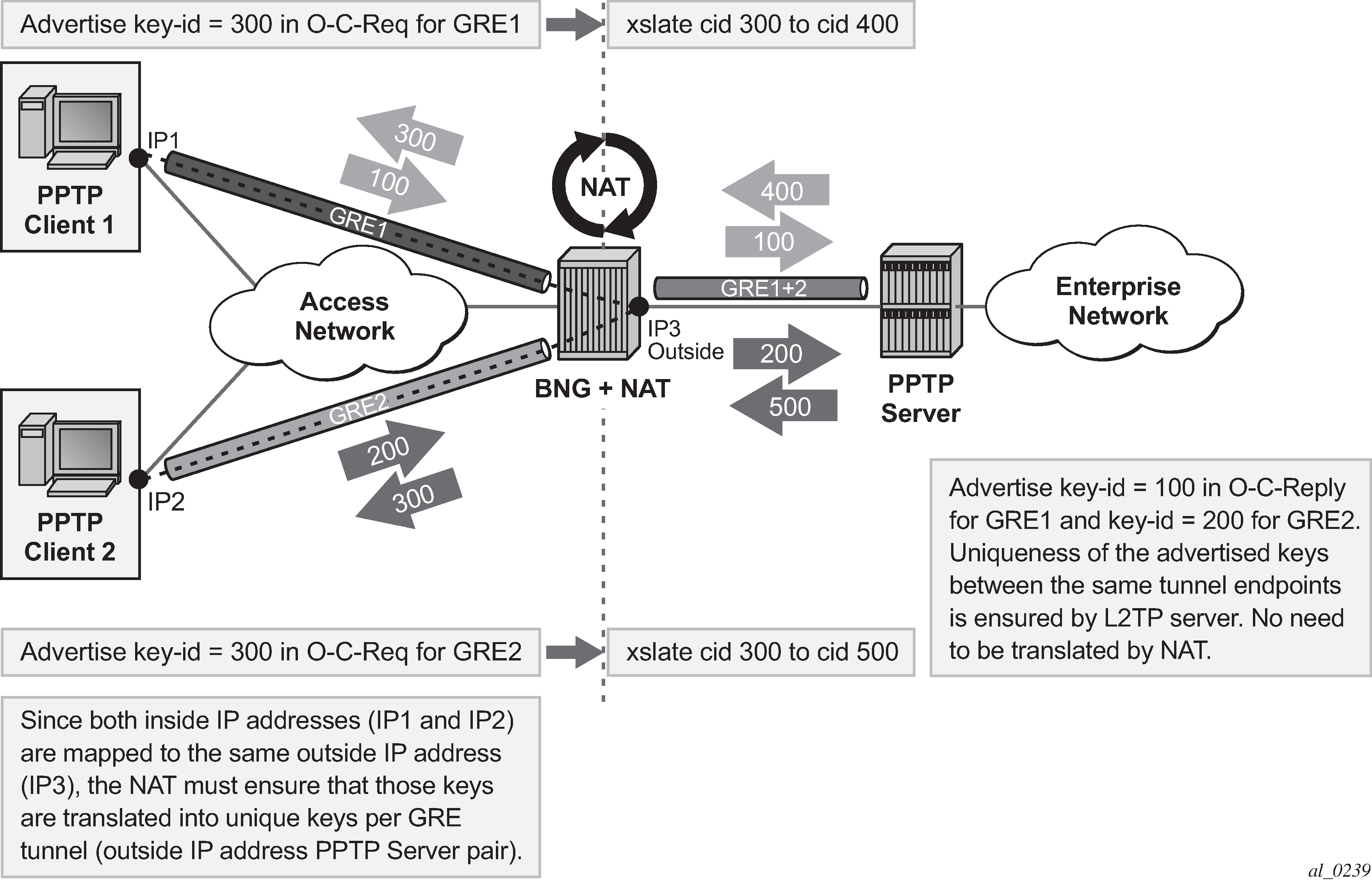

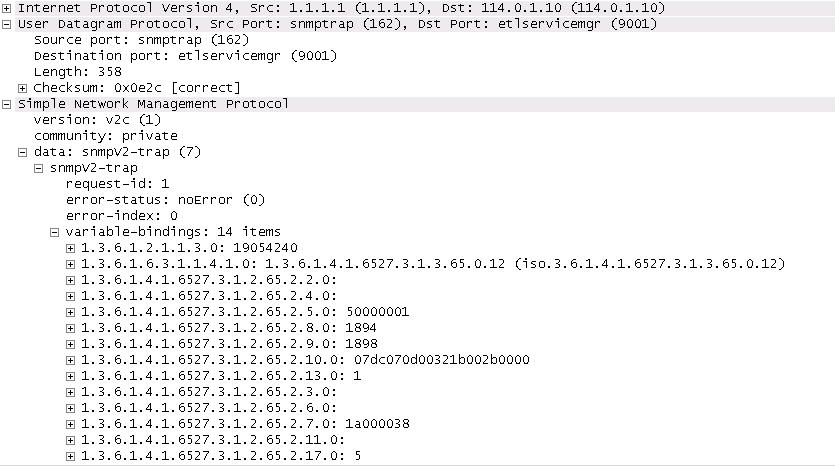

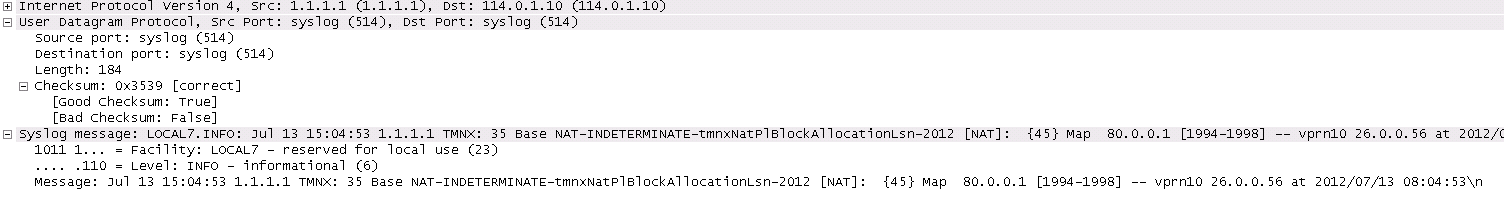

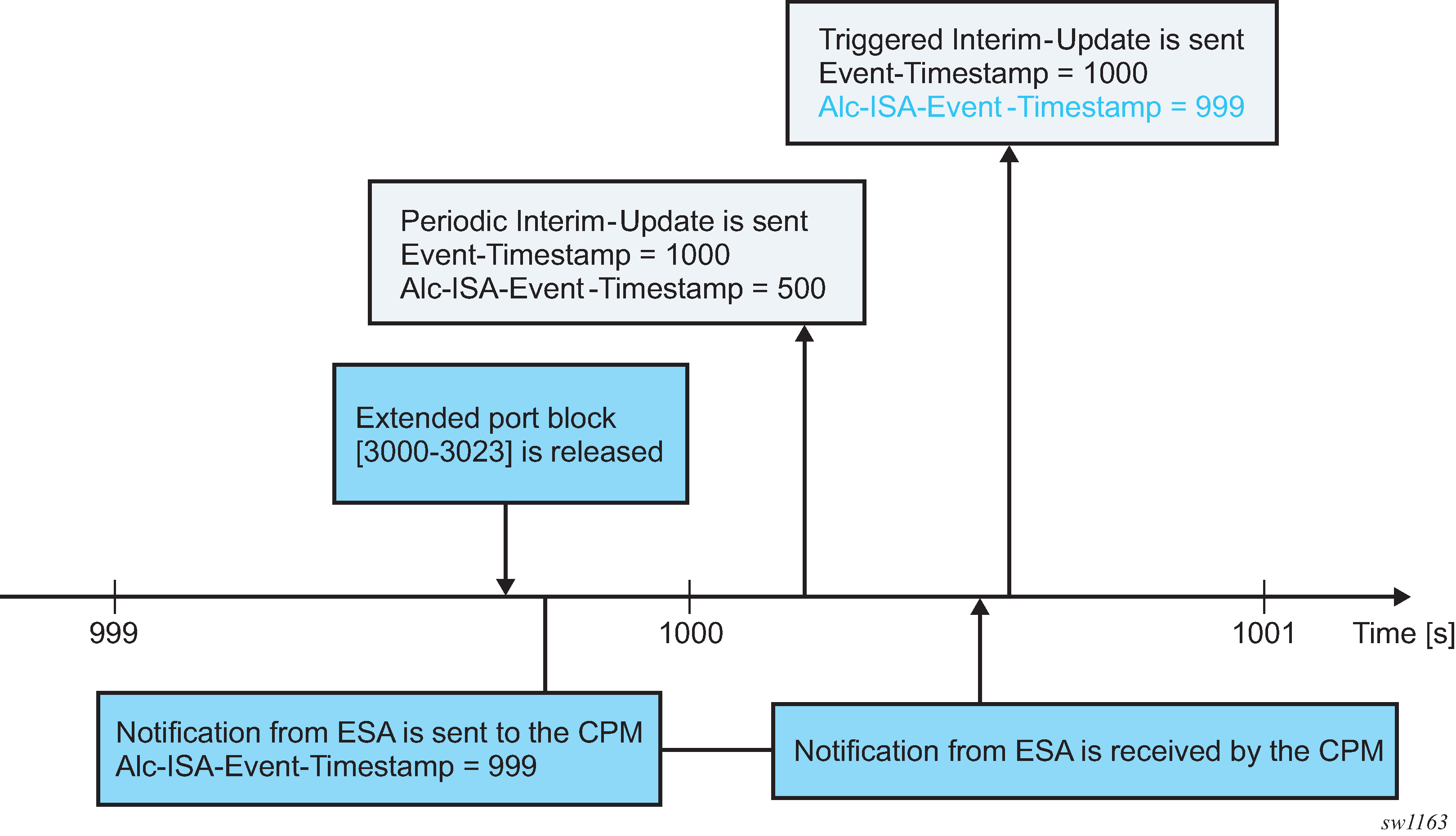

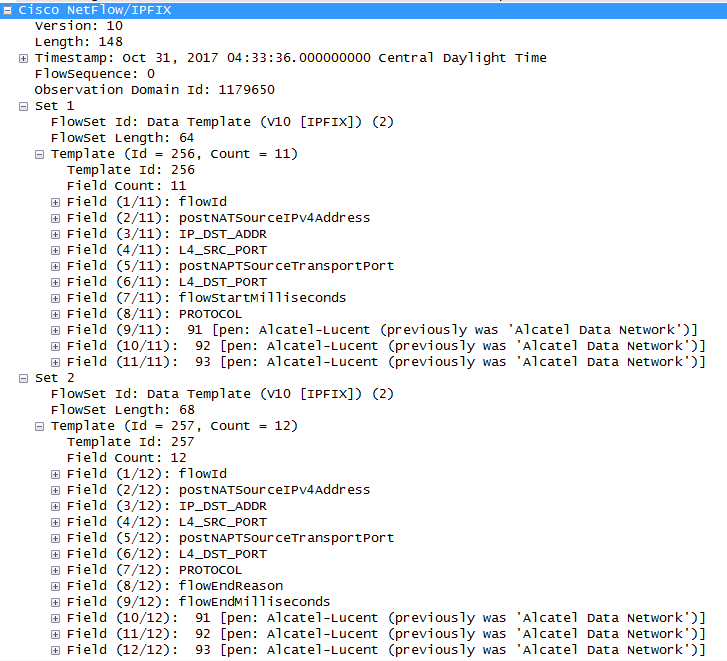

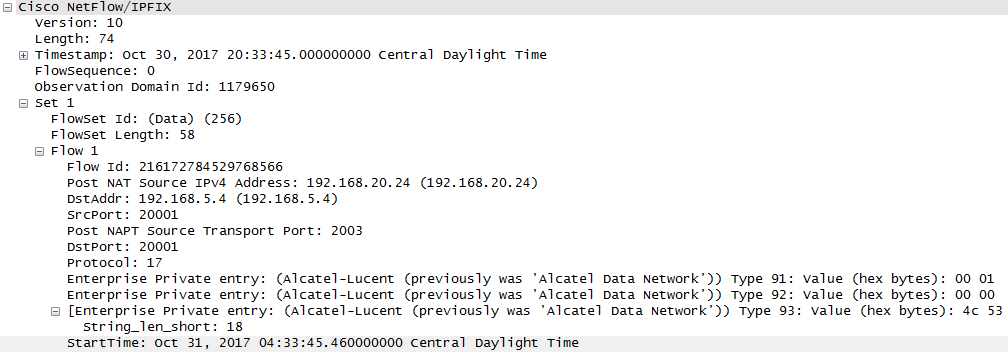

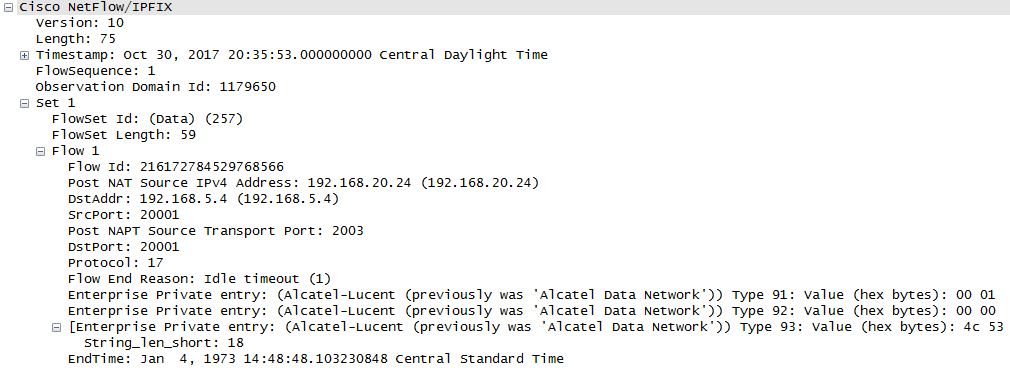

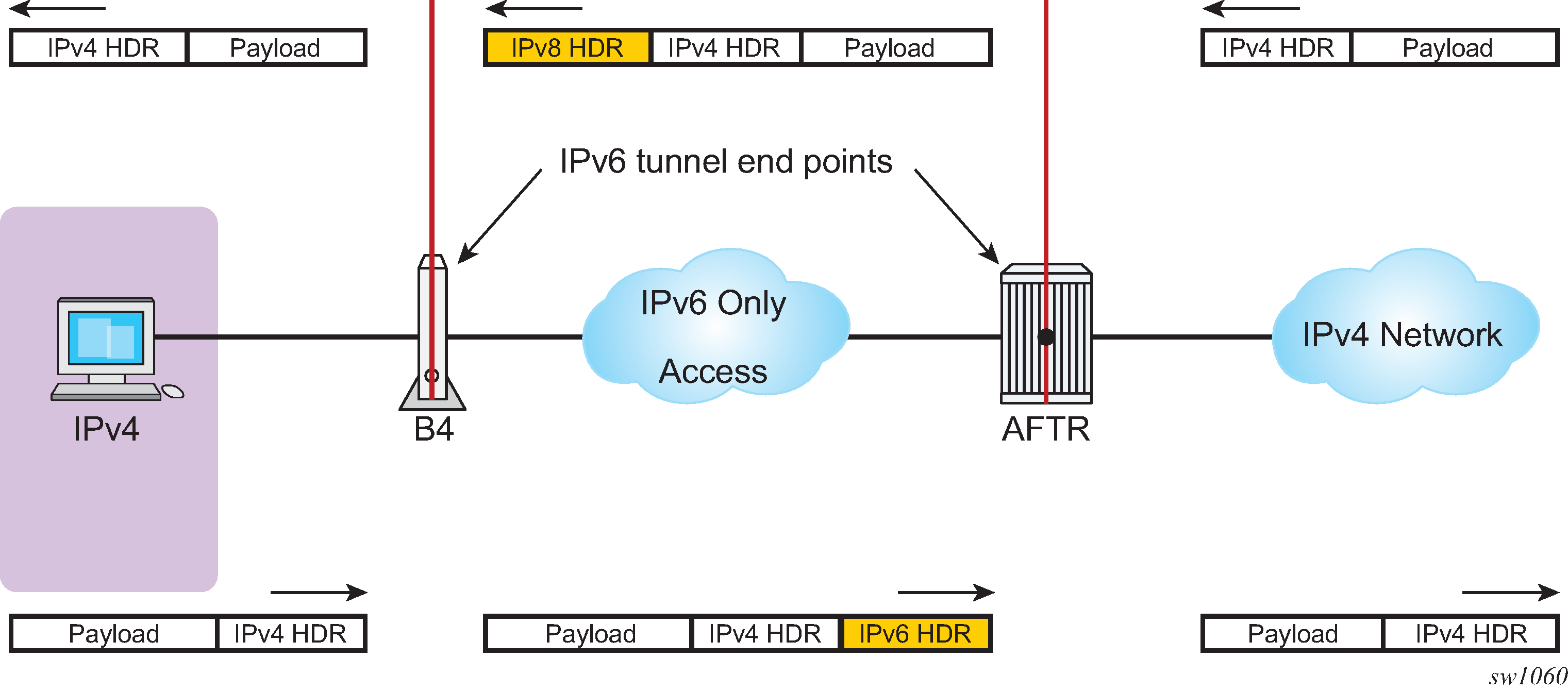

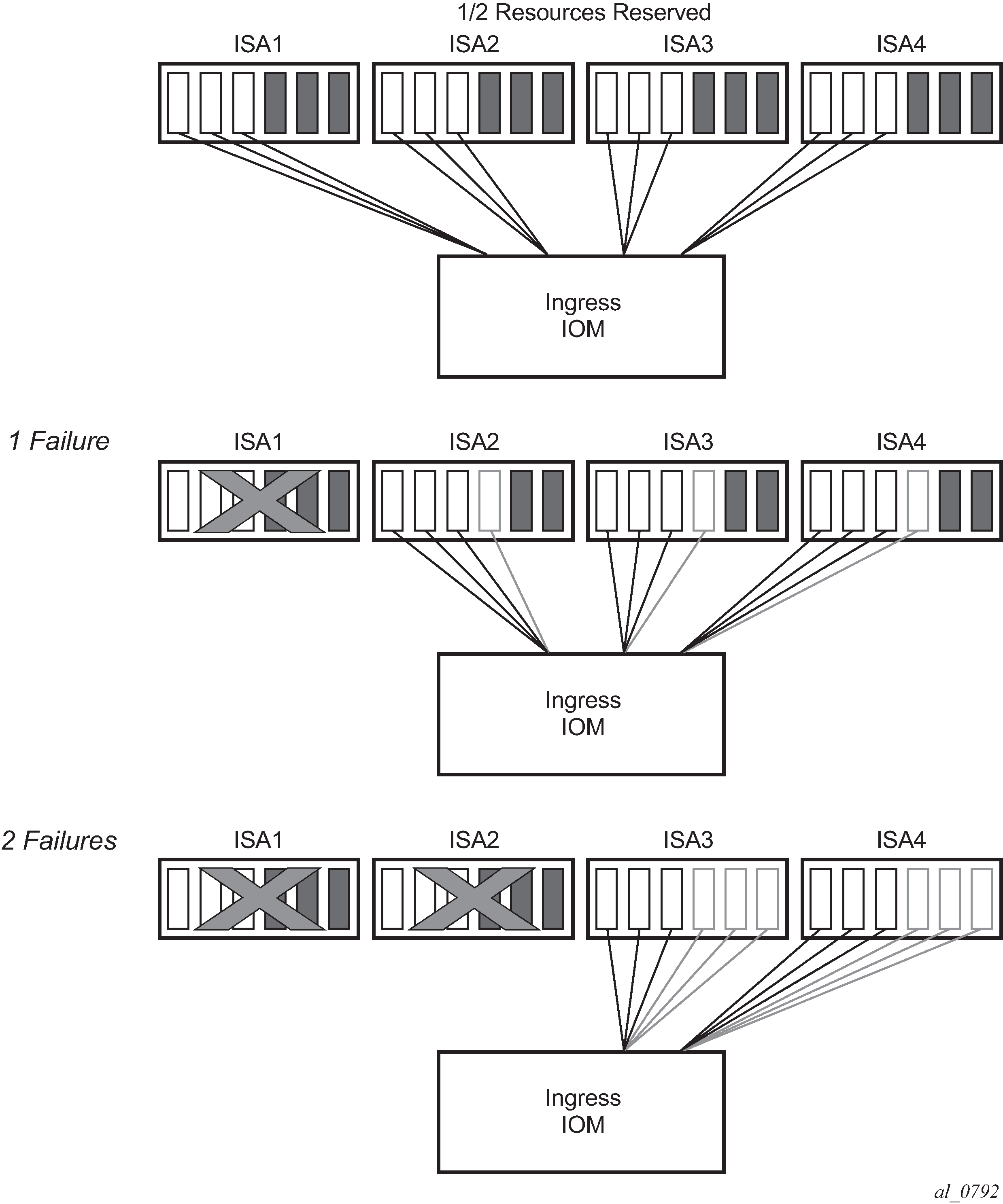

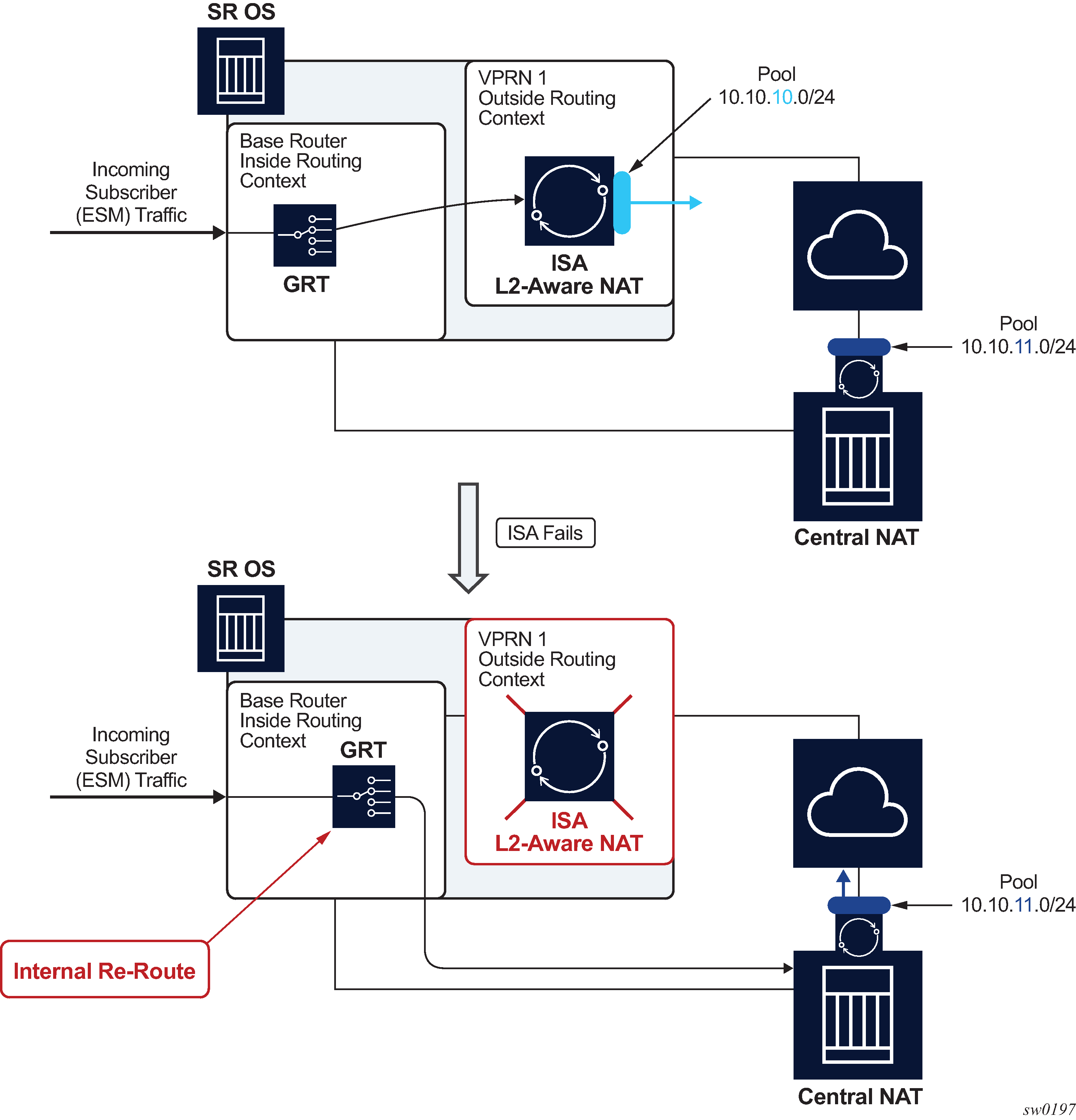

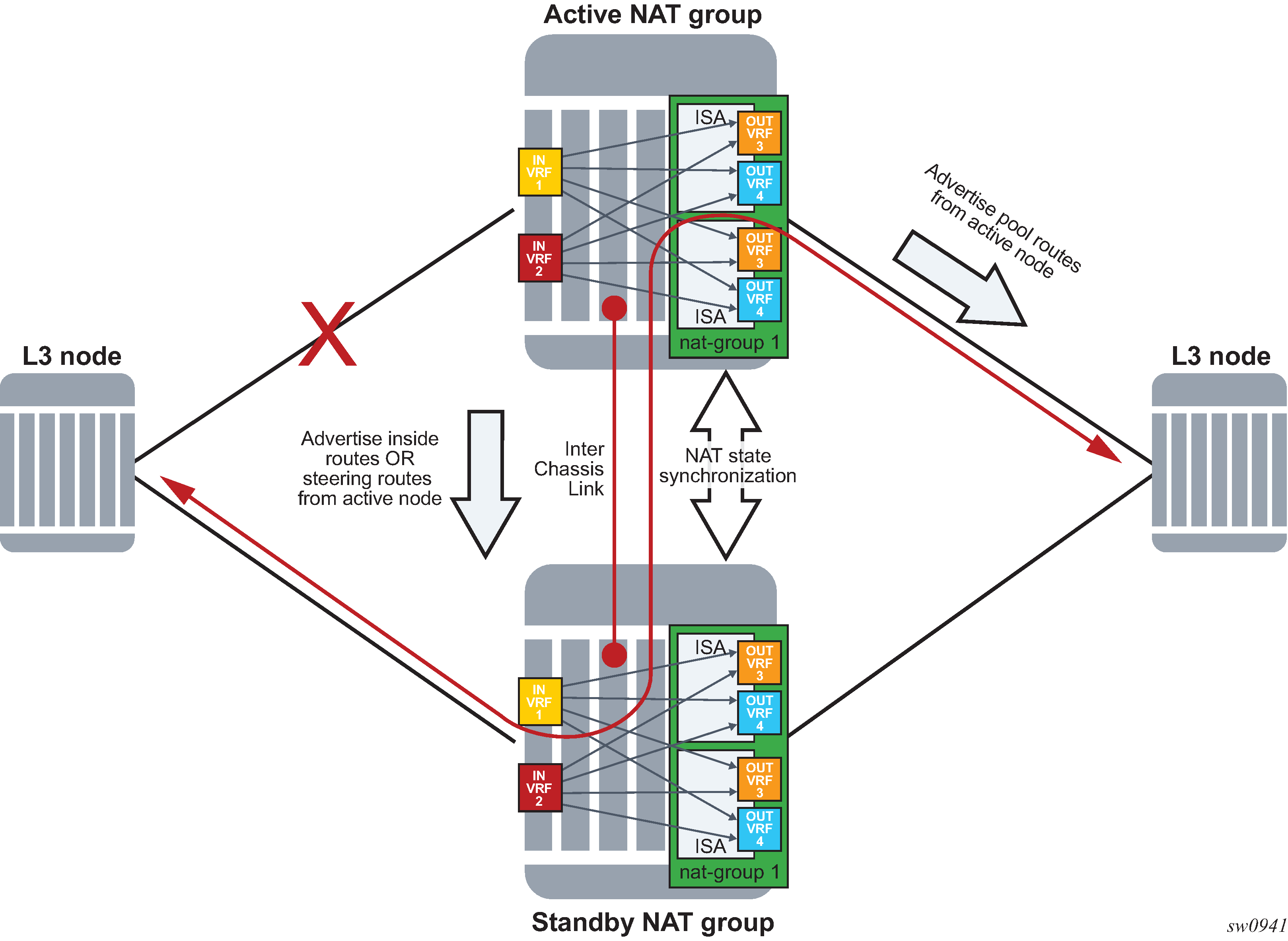

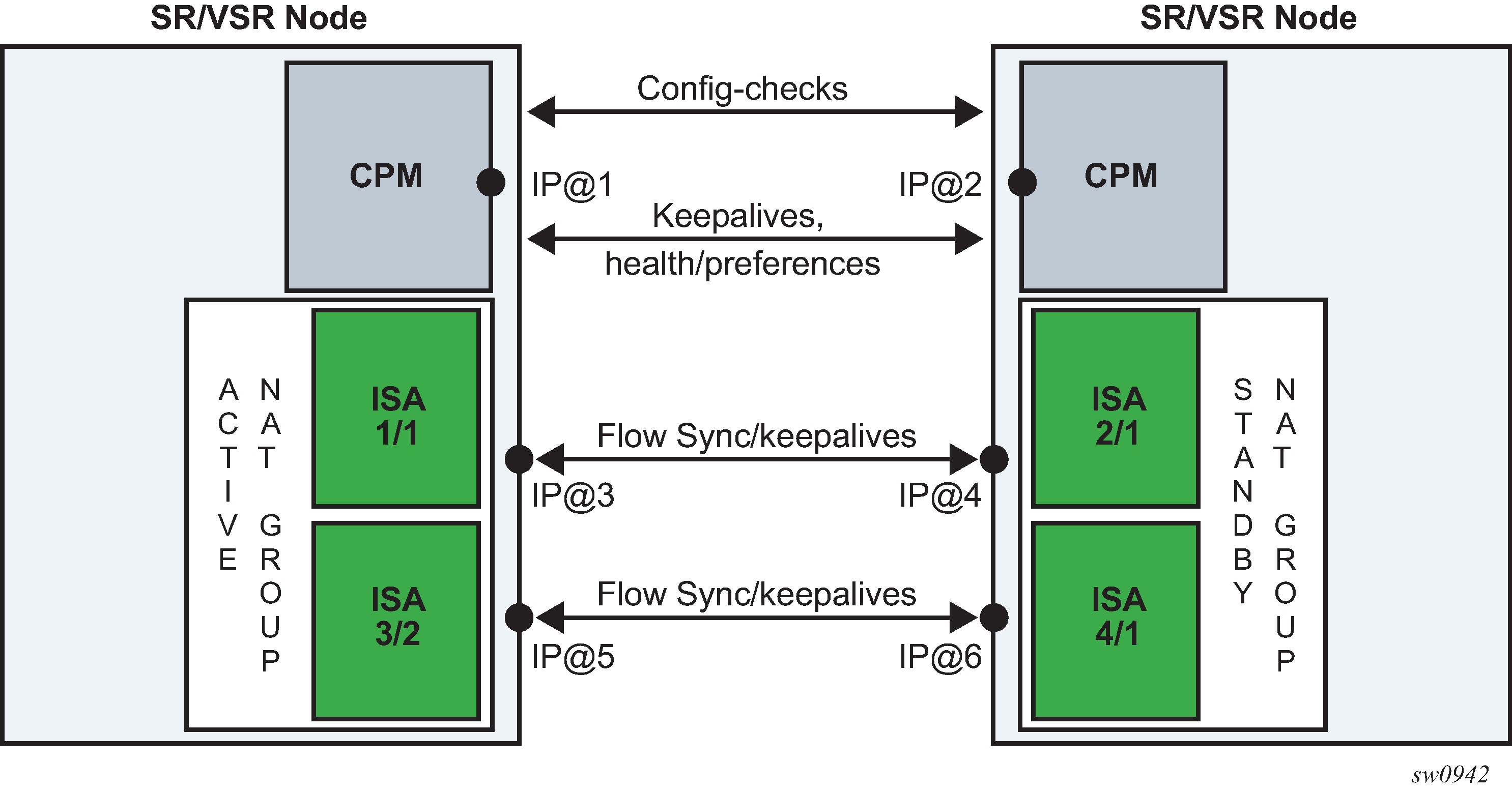

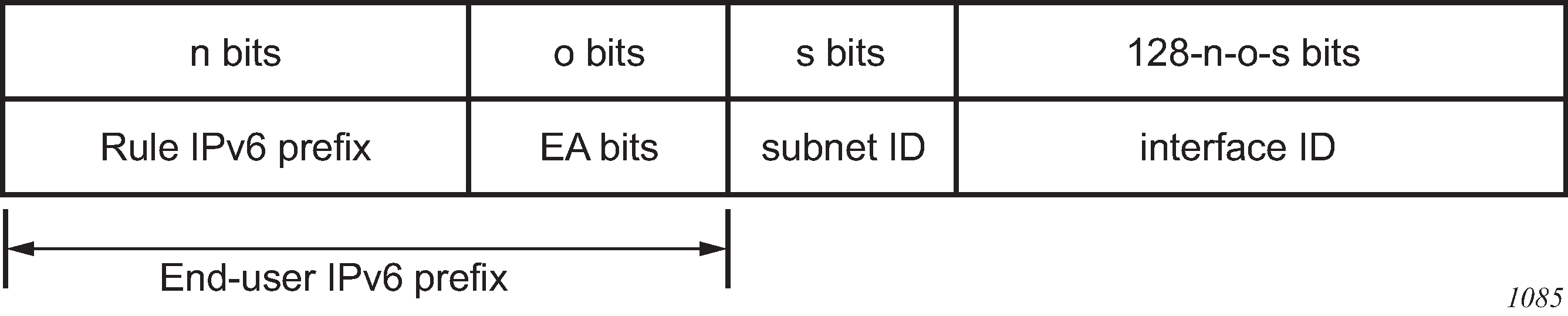

The outside IPv4 addresses in a NAT pool can be configured to answer pings. ICMPv4 Echo Requests are answered with ICMPv4 Echo Replies.