Event and accounting logs

This chapter provides information about configuring event and accounting logs on the SR OS.

Logging overview

The two primary types of logging supported in the SR OS are event logging and accounting logs.

Event logging controls the generation, dissemination, and recording of system events for monitoring status and troubleshooting faults within the system. The SR OS groups events into four major categories or event sources:

security events

Events that pertain to attempts to breach system security.

change events

Events that pertain to the configuration and operation of the node.

main events

Events that pertain to applications that are not assigned to other event categories or sources.

debug events

Events that pertain to trace or other debugging information.

Events within the SR OS have the following characteristics:

timestamp in UTC or local time

generating application

unique event ID within the application

router name (also called a vrtr-name) identifying the associated routing context (for example, Base or vprn1000)

subject identifying the affected object for the event (for example, interface name or port identifier)

short text description

Event control assigns the severity for each application event and determines whether the event is generated or suppressed. The severity numbers and severity names supported in the SR OS conform to ITU standards M.3100, X.733, and X.21 and are listed in the following table.

| Severity number | Severity name |
|---|---|
| 1 | cleared |
| 2 | indeterminate (info) |
| 3 | critical |
| 4 | major |
| 5 | minor |
| 6 | warning |

Events that are suppressed by event control will not generate any event log entries. Event control maintains a count of the number of events generated (logged) and dropped (suppressed) for each application event. The severity of an application event can be configured in event control.

An event log within the SR OS associates event sources with logging destinations. Logging destinations include the following:

console session

Telnet or SSH session

memory logs

file destinations

SNMP trap groups

syslog destinations

A log filter policy can be associated with the event log to control which events are logged in the event log based on combinations of application, severity, event ID range, router name (vrtr-name), and the subject of the event.

The SR OS accounting logs collect comprehensive accounting statistics to support a variety of billing models. The routers collect accounting data on services and network ports on a per-service class basis. In addition to gathering information critical for service billing, accounting records can be analyzed to provide insight about customer service trends for potential service revenue opportunities. Accounting statistics on network ports can be used to track link utilization and network traffic pattern trends. This information is valuable for traffic engineering and capacity planning within the network core.

Accounting statistics are collected according to the options defined within the context of an accounting policy. Accounting policies are applied to access objects (such as access ports and SAPs) or network objects (such as network ports and network IP interfaces). Accounting statistics are collected by counters for individual service meters defined on the customer SAP or by the counters within forwarding class (FC) queues defined on the network ports.

The type of record defined within the accounting policy determines where a policy is applied, what statistics are collected, and the time interval at which statistics are collected.

The supported destination for an accounting log is a compact flash system device. Accounting data is stored within a standard directory structure on the device in compressed XML format. On platforms that support multiple storage devices, Nokia recommends that accounting logs be configured on the cf1: or cf2: devices only. Accounting log files are not recommended on the cf3: device if other devices are available (Nokia recommends that cf3: be used primarily for software images and configuration-related files).

Log destinations

Both event logs and accounting logs use a common mechanism for referencing a log destination.

The SR OS supports the following log destinations:

- console

- session

- CLI logs

- memory logs

- log files

- SNMP trap group

- syslog

- NETCONF

Only a single log destination can be associated with an event log or accounting log. An event log can be associated with multiple event sources, but it can only have a single log destination. An accounting log can only have a file destination.

Console

Sending events to a console destination means that the message is sent to the system console. The console device can be used as an event log destination.

Session

A session destination is a temporary log destination which directs entries to the active Telnet or SSH session for the duration of the session. When the session is terminated, for example, when the user logs out, the ‟to session” configuration is removed. Event logs configured with a session destination are stored in the configuration file but the ‟to session” part is not stored. Event logs can direct log entries to the session destination.

CLI logs

A CLI log is a log that outputs log events to a CLI session. The events are sent to the CLI session for the duration of that CLI session (or until an unsubscribe-from command is issued).

Use the following command to subscribe to a CLI log from within a CLI session.

tools perform log subscribe-to log-id

Memory logs

A memory log is a circular buffer. When the log is full, the oldest entry in the log is replaced with the new entry. When a memory log is created, the specific number of entries it can hold can be specified, otherwise it assumes a default size. An event log can send entries to a memory log destination.
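A memory log behaves like a bounded ring buffer. The following Python sketch models that behavior with `collections.deque(maxlen=...)`, which discards the oldest item when a full buffer receives a new one. The `MemoryLog` class is hypothetical and for illustration only; its default of 500 entries is borrowed from the log 99 example in this chapter and is not a documented default.

```python
from collections import deque
from typing import List


class MemoryLog:
    """Hypothetical model of a memory log destination (a circular buffer)."""

    def __init__(self, max_entries: int = 500) -> None:
        # 500 mirrors the log 99 example; it is not a documented default
        self._entries = deque(maxlen=max_entries)

    def write(self, entry: str) -> None:
        # When the deque is full, append() silently drops the oldest entry
        self._entries.append(entry)

    def entries(self) -> List[str]:
        # Oldest retained entry first
        return list(self._entries)


log = MemoryLog(max_entries=3)
for i in range(1, 6):
    log.write(f"event {i}")
print(log.entries())  # ['event 3', 'event 4', 'event 5']
```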

Log and accounting files

Log files can be used by both event logs and accounting logs and are stored on the compact flash devices in the file system.

A log file policy is identified using a numerical ID in classic interfaces and a string name in MD interfaces, but a log file policy is generally associated with a number of individual files in the file system. A log file policy is configured with a rollover parameter, expressed in minutes, which represents the period of time an individual log file is written to before a new file is created for the relevant log file policy. The rollover time is checked only when an update to the log is performed. Therefore, whether a file rolls over on time depends on the rate of the incoming data being logged. For example, if the rate is very low, the actual rollover time may be longer than the configured value.

The retention time for a log file policy specifies the period of time an individual log file is retained on the system based on the creation date and time of the file. The system continuously checks for log files with expired retention periods once every hour and deletes as many files as possible during a 10-second interval.
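The interaction between write-triggered rollover and the hourly retention sweep can be sketched in Python as follows. `LogFilePolicy`, `write()`, and `sweep()` are hypothetical names, and the rule that the file currently being written is never deleted by the sweep is an assumption made for this sketch, not something the guide states.

```python
import time
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class LogFilePolicy:
    """Hypothetical model of the rollover and retention rules of a log file policy."""

    rollover: timedelta
    retention: timedelta
    active_created: Optional[datetime] = None             # creation time of the file being written
    files: List[datetime] = field(default_factory=list)   # creation times of all files kept

    def write(self, now: datetime) -> bool:
        """Record one log update; return True if a new file was started.

        Rollover is evaluated only here, on a write, so at low event rates a
        file can stay open longer than the configured rollover period.
        """
        if self.active_created is None or now - self.active_created >= self.rollover:
            self.active_created = now
            self.files.append(now)
            return True
        return False

    def sweep(self, now: datetime, budget_s: float = 10.0) -> int:
        """Hourly retention check: delete expired files within a time budget."""
        deadline = time.monotonic() + budget_s
        removed = 0
        for created in list(self.files):
            if time.monotonic() >= deadline:
                break  # remaining expired files wait for the next hourly check
            # Assumption: the file currently being written is never deleted here
            if created != self.active_created and now - created >= self.retention:
                self.files.remove(created)
                removed += 1
        return removed


policy = LogFilePolicy(rollover=timedelta(minutes=60), retention=timedelta(hours=12))
t0 = datetime(2019, 12, 3, 4, 0, 0)
policy.write(t0)                          # True: first file
policy.write(t0 + timedelta(minutes=59))  # False: still inside the rollover period
policy.write(t0 + timedelta(hours=3))     # True: rollover happens at the first write after 60 min
```

The third write shows the effect described above: with no updates between minute 59 and hour 3, the first file stays open for three hours even though the rollover is 60 minutes.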

When a log file policy is created, only the compact flash device for the log files is specified. Log files are created in specific subdirectories with standardized names depending on the type of information stored in the log file.

Event log files are always created in the \log directory on the specified compact flash device. The naming convention for event log files is:

logeeff-timestamp

where

ee is the event log ID

ff is the log file destination ID

timestamp is the timestamp when the file is created in the form of:

yyyymmdd-hhmmss

where

- yyyy is the four-digit year (for example, 2019)

- mm is the two-digit number representing the month (for example, 12 for December)

- dd is the two-digit number representing the day of the month (for example, 03 for the 3rd of the month)

- hh is the two-digit hour in a 24-hour clock (for example, 04 for 4 a.m.)

- mm is the two-digit minute (for example, 30 for 30 minutes past the hour)

- ss is the two-digit second (for example, 14 for 14 seconds)

Accounting log files are created in the \act-collect directory on a compact flash device (specifically cf1 or cf2). The naming convention for accounting log files is nearly the same as for log files except the prefix act is used instead of the prefix log. The naming convention for accounting logs is:

actaaff-timestamp.xml.gz

where

aa is the accounting policy ID

ff is the log file destination ID

timestamp is the timestamp when the file is created in the form of:

yyyymmdd-hhmmss

where

- yyyy is the four-digit year (for example, 2019)

- mm is the two-digit number representing the month (for example, 12 for December)

- dd is the two-digit number representing the day of the month (for example, 03 for the 3rd of the month)

- hh is the two-digit hour in a 24-hour clock (for example, 04 for 4 a.m.)

- mm is the two-digit minute (for example, 30 for 30 minutes past the hour)

- ss is the two-digit second (for example, 14 for 14 seconds)

Accounting logs are XML files created in a compressed format and have a .gz extension.

Active accounting logs are written to the \act-collect directory. When an accounting log is rolled over, the active file is closed and archived in the \act directory before a new active accounting log file is created in \act-collect.
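The two naming conventions can be checked mechanically, for example when an offline collector sorts files retrieved from \log or \act. The following Python sketch parses both forms; `parse_log_file_name` and `LogFileName` are hypothetical names, and the sketch assumes that the prefix is followed directly by exactly two-digit IDs and that only accounting files carry the .xml.gz suffix.

```python
import re
from datetime import datetime
from typing import NamedTuple, Optional

# Assumptions: the prefix is followed directly by two-digit IDs (no separator),
# and only accounting files carry the .xml.gz suffix.
_NAME_RE = re.compile(
    r"(?P<kind>log|act)(?P<src>\d{2})(?P<dest>\d{2})-(?P<ts>\d{8}-\d{6})(?P<ext>\.xml\.gz)?"
)


class LogFileName(NamedTuple):
    kind: str            # "log" (event log) or "act" (accounting log)
    source_id: int       # event log ID (ee) or accounting policy ID (aa)
    destination_id: int  # log file destination ID (ff)
    created: datetime    # file creation time from the yyyymmdd-hhmmss stamp


def parse_log_file_name(name: str) -> Optional[LogFileName]:
    m = _NAME_RE.fullmatch(name)
    if m is None:
        return None
    # Reject event log names with .xml.gz and accounting names without it
    if (m["kind"] == "act") != (m["ext"] is not None):
        return None
    created = datetime.strptime(m["ts"], "%Y%m%d-%H%M%S")
    return LogFileName(m["kind"], int(m["src"]), int(m["dest"]), created)


print(parse_log_file_name("act0502-20191203-043014.xml.gz"))
```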

When creating a new event log file on a compact flash disk card, the system checks the amount of free disk space and that amount must be greater than or equal to the lesser of 5.2 MB or 10% of the compact flash disk capacity.
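As a worked example of this rule, the following Python sketch evaluates the free-space check. The function name is hypothetical, and treating "MB" as 1,000,000 bytes is an assumption, because the guide does not say whether decimal or binary megabytes are meant.

```python
MB = 1_000_000  # assumption: decimal megabytes; the guide does not specify


def can_create_event_log_file(free_bytes: int, capacity_bytes: int) -> bool:
    """True if free space >= the lesser of 5.2 MB or 10% of the device capacity.

    free >= min(a, b) is equivalent to (free >= a) or (free >= b); both sides
    are multiplied by 10 to keep the arithmetic in exact integers.
    """
    return free_bytes * 10 >= 52 * MB or free_bytes * 10 >= capacity_bytes


print(can_create_event_log_file(6_000_000, 16_000_000_000))  # True: 10% is 1.6 GB, so the 5.2 MB bound applies
print(can_create_event_log_file(4_000_000, 32_000_000))      # True: 10% of 32 MB is 3.2 MB, the lower bound
```

On any device larger than 52 MB the 5.2 MB figure is the binding limit; only on very small devices does the 10% figure take over.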

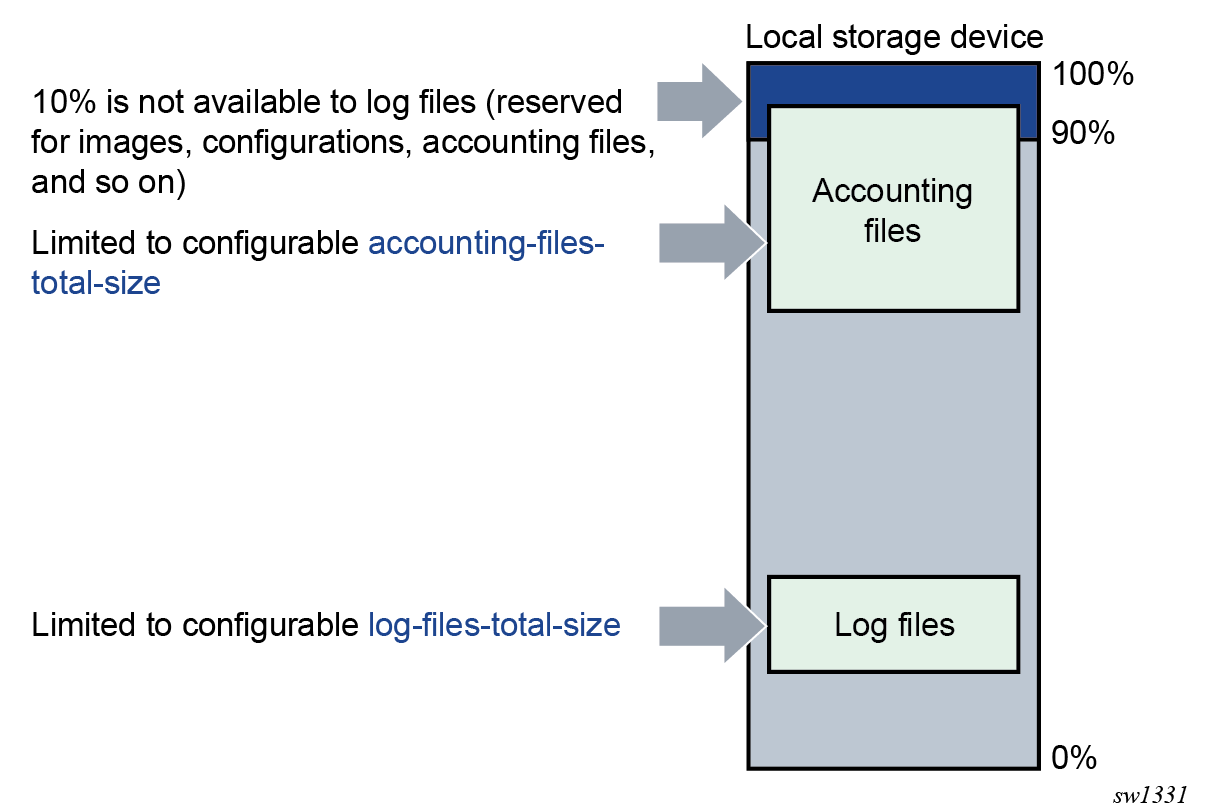

In addition to the 10% free space limit for event log files described in the preceding paragraph, configurable limits for the total size of all system-generated log files and all accounting files on each storage device are available using the following commands.

configure log file-storage-control accounting-files-total-size

configure log file-storage-control log-files-total-size

The space on each storage device (cf1, cf2, and so on) is independently limited to the same configured value.

The following figure illustrates the file space limits.

The system calculates the total size of all accounting files and log files on each storage device on the active CPM every hour. The storage space used on the standby CPM is not actively managed. If a user manually adds or deletes accounting or log files in the \act or \log directories, the change in total size is taken into account during the next hourly calculation cycle. Files added by the system (for example, a new log file after a rollover period ends) or removed by the system (for example, a file determined to be past its retention time during the hourly checks) are accounted for in the total size immediately.

If the configured limit is reached, the system attempts a cleanup to generate free space, as follows:

- Completed files beyond their retention time are removed.

- If the total size of all log files is still above the configured limit for a specific storage device, the oldest completed log files are removed until the total log size is below the limit. Accounting files that have not reached their retention time are not removed.
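The two cleanup steps can be sketched in Python as follows, for one storage device and one file type at a time. `StoredFile` and `cleanup()` are hypothetical names; the hourly versus immediate size accounting described earlier is not modeled, and the function is assumed to be called only after the configured limit has been reached.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class StoredFile:
    name: str
    kind: str             # "log" or "act"
    size: int             # bytes
    created: datetime
    retention: timedelta
    completed: bool       # False for the file that is still being written


def cleanup(files: List[StoredFile], kind: str, limit: int, now: datetime) -> List[StoredFile]:
    """Return the files of one kind on one storage device that survive a cleanup."""
    # Step 1: completed files beyond their retention time are removed
    kept = [f for f in files
            if f.kind == kind and not (f.completed and now - f.created >= f.retention)]
    # Step 2 (log files only): remove the oldest completed files until under the limit
    if kind == "log":
        total = sum(f.size for f in kept)
        for f in sorted((f for f in kept if f.completed), key=lambda f: f.created):
            if total < limit:
                break
            kept.remove(f)
            total -= f.size
    # Accounting files within their retention time are kept even above the limit
    return kept
```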

Whether the configurable total size limits are configured or not, log and accounting files never overwrite other types of files, such as images, configurations, persistency, and so on.

Log file encryption

The log files saved in local storage can be encrypted using the AES-256-CTR cipher algorithm.

Use the following command to configure the log file encryption key and enable log file encryption. The encryption key is used for all local log files in the system.

configure log encryption-key

- When an encrypted log file is opened in a text editor, editing or viewing the file contents is not possible, as the entire file is encrypted.

- The encrypted log files can be decrypted offline using the appropriate OpenSSL command.

openssl enc -aes-256-ctr -pbkdf2 -d -in <log file encrypted> -out <output log file> -p -pass pass:<passphrase>
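For reference, `openssl enc -pbkdf2` expects an 8-byte `Salted__` header followed by an 8-byte salt, and derives the key and IV from the passphrase with PBKDF2 (HMAC-SHA256 and 10,000 iterations by default in OpenSSL 1.1.1 and later). The following Python sketch reproduces only that key-derivation step with the standard library, which can help when checking the salt, key, and IV values that the `-p` option prints. It assumes that the encrypted log file uses this standard OpenSSL container, which the command above implies but the guide does not state. The function name is hypothetical, and the AES-256-CTR decryption itself needs a cryptographic library and is not shown.

```python
import hashlib

MAGIC = b"Salted__"  # OpenSSL salted-format header


def derive_key_iv(encrypted: bytes, passphrase: str, iterations: int = 10000):
    """Return (key, iv, ciphertext) as `openssl enc -aes-256-ctr -pbkdf2` derives them.

    Assumes OpenSSL's defaults for -pbkdf2: PBKDF2-HMAC-SHA256, 10000 iterations.
    """
    if encrypted[:8] != MAGIC:
        raise ValueError("missing OpenSSL 'Salted__' header")
    salt, ciphertext = encrypted[8:16], encrypted[16:]
    # OpenSSL derives the 32-byte key and the 16-byte IV in a single PBKDF2 call
    material = hashlib.pbkdf2_hmac("sha256", passphrase.encode(), salt, iterations, dklen=48)
    return material[:32], material[32:], ciphertext
```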

SNMP trap group

An event log can be configured to send events to SNMP trap receivers by specifying an SNMP trap group destination.

An SNMP trap group can have multiple trap targets. Each trap target can have different operational values.

A trap destination has the following properties:

IP address of the trap receiver

UDP port used to send the SNMP trap

SNMP version (v1, v2c, or v3) used to format the SNMP notification

SNMP community name for SNMPv1 and SNMPv2c receivers

Security name and level for SNMPv3 trap receivers

For SNMP traps that are sent out-of-band through the Management Ethernet port on the SF/CPM, the source IP address of the trap is the IP interface address defined on the Management Ethernet port. For SNMP traps that are sent in-band, the source IP address of the trap is the system IP address of the router.

Each trap target destination of a trap group receives the identical sequence of events as defined by the log ID and the associated sources and log filter applied. For the list of options that can be sent in SNMP notifications, see the SR OS MIBs (and RFC 3416, section 4.2.6).

Syslog

Syslog implementation overview

An event log can be configured to send events to one syslog destination. Syslog destinations have the following properties:

- Syslog server IP address

- UDP port or TLS profile used to send the Syslog message

- Syslog Facility Code (0 to 23) (default 23, local7)

- Syslog Severity Threshold (0 to 7); events exceeding the configured level are sent

Because syslog uses eight severity levels whereas the SR OS uses six internal severity levels, the SR OS severity levels are mapped to syslog severities. The following table describes the severity level mappings.

| SR OS event severity | Syslog severity numerical code | Syslog severity name | Syslog severity definition |
|---|---|---|---|
| (none) | 0 | emergency | System is unusable |
| critical | 1 | alert | Action must be taken immediately |
| major | 2 | critical | Critical conditions |
| minor | 3 | error | Error conditions |
| warning | 4 | warning | Warning conditions |
| (none) | 5 | notice | Normal but significant condition |
| cleared or indeterminate | 6 | info | Informational messages |
| (none) | 7 | debug | Debug-level messages |
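To make the table concrete, the following Python sketch encodes the severity mapping and combines it with the RFC 3164 PRI calculation (Facility * 8 + Severity) that is part of the message format described in this section. The dictionary and function names are illustrative, not part of SR OS. With the default facility 23, the results match the PRI values in the example messages in this section.

```python
# SR OS event severity -> syslog severity code, following the mapping table above
SROS_TO_SYSLOG_SEVERITY = {
    "critical": 1,       # alert
    "major": 2,          # critical
    "minor": 3,          # error
    "warning": 4,        # warning
    "cleared": 6,        # info
    "indeterminate": 6,  # info
}


def syslog_pri(facility: int, sros_severity: str) -> int:
    """PRI value per RFC 3164: Facility * 8 + Severity."""
    if not 0 <= facility <= 23:
        raise ValueError("syslog facility must be in the range 0 to 23")
    return facility * 8 + SROS_TO_SYSLOG_SEVERITY[sros_severity]


# Default facility 23 (local7): a WARNING event gives <188>, a MAJOR event <186>
print(syslog_pri(23, "warning"), syslog_pri(23, "major"))  # 188 186
```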

The general format of an SR OS Syslog message is the following, as defined in RFC 3164, The BSD Syslog Protocol:

<PRI><HEADER> <MSG>

where:

- <PRI> is a number that is calculated from the message Facility and Severity codes as follows:

  Facility * 8 + Severity

  The calculated PRI value is enclosed in "<" and ">" angle brackets in the transmitted Syslog message.

- <HEADER> is composed of the following:

  <TIMESTAMP> <HOSTNAME>

  - <TIMESTAMP> immediately follows the trailing ">" from the PRI part, without a space between. Depending on the configuration of the configure log syslog timestamp-format command, the format is either:

    MMM DD HH:MM:SS

    or

    MMM DD HH:MM:SS.sss

    There are always two characters for the day (DD). Single-digit days are preceded with a space character. Either UTC or local time is used, depending on the configuration of the time-format command for the event log.

  - <HOSTNAME> follows the <TIMESTAMP> with a space between. It is an IP address by default, or can be configured to use other values using the following commands:

    configure log syslog hostname

    configure service vprn log syslog hostname

- <MSG> is composed of the following:

  <log-prefix>: <seq> <vrtr-name> <application>-<severity>-<Event Name>-<Event ID> [<subject>]: <message>\n

  - <log-prefix> is optional text of up to 32 characters (default 'TMNX'), as configured using the log-prefix command.

  - <seq> is the log event sequence number (always preceded by a colon and a space character).

  - <vrtr-name> is vprn1, vprn2, … | Base | management | vpls-management

  - <subject> may be empty, resulting in []:

  - \n is the standard ASCII newline character (0x0A).

Examples (from different nodes)

default log-prefix (TMNX):

<188>Jan  2 18:43:23 10.221.38.108 TMNX: 17 Base SYSTEM-WARNING-tmnxStateChange-2009 [CHASSIS]: Status of Card 1 changed administrative state: inService, operational state: outOfService\n

<186>Jan  2 18:43:23 10.221.38.108 TMNX: 18 Base CHASSIS-MAJOR-tmnxEqCardRemoved-2003 [Card 1]: Class IO Module : removed\n

no log-prefix:

<188>Jan 11 18:48:12 10.221.38.108 : 32 Base SYSTEM-WARNING-tmnxStateChange-2009 [CHASSIS]: Status of Card 1 changed administrative state: inService, operational state: outOfService\n

<186>Jan 11 18:48:12 10.221.38.108 : 33 Base CHASSIS-MAJOR-tmnxEqCardRemoved-2003 [Card 1]: Class IO Module : removed\n

log-prefix "test":

<186>Jan 11 18:51:22 10.221.38.108 test: 47 Base CHASSIS-MAJOR-tmnxEqCardRemoved-2003 [Card 1]: Class IO Module : removed\n

<188>Jan 11 18:51:22 10.221.38.108 test: 48 Base SYSTEM-WARNING-tmnxStateChange-2009 [CHASSIS]: Status of Card 1 changed administrative state: inService, operational state: outOfService\n
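On the receiving side, a collector can split these messages back into their fields. The following Python sketch is a minimal parser for the format described above, tested against the example messages. The function name and returned keys are hypothetical, and the regular expression is an assumption built only from the documented format (for example, it expects application names made of uppercase letters, digits, and underscores, and it tolerates one or two spaces before a single-digit day).

```python
import re
from typing import Optional

_SYSLOG_RE = re.compile(
    r"<(?P<pri>\d{1,3})>"
    r"(?P<timestamp>[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}(?:\.\d{3})?) "
    r"(?P<hostname>\S+) "
    r"(?P<log_prefix>[^:]*): "                       # empty when no log-prefix is set
    r"(?P<seq>\d+) (?P<vrtr_name>\S+) "
    r"(?P<application>[A-Z0-9_]+)-(?P<severity>[A-Z]+)-(?P<event_name>\S+?)-(?P<event_id>\d+) "
    r"\[(?P<subject>[^\]]*)\]: (?P<message>.*)\n?"
)


def parse_sros_syslog(line: str) -> Optional[dict]:
    """Split an SR OS syslog message into its fields, or return None."""
    m = _SYSLOG_RE.fullmatch(line)
    if m is None:
        return None
    fields = m.groupdict()
    # PRI = Facility * 8 + Severity, so divmod recovers both codes
    fields["facility"], fields["syslog_severity"] = divmod(int(fields.pop("pri")), 8)
    fields["seq"] = int(fields["seq"])
    fields["event_id"] = int(fields["event_id"])
    return fields


msg = ("<186>Jan 11 18:48:12 10.221.38.108 : 33 Base "
       "CHASSIS-MAJOR-tmnxEqCardRemoved-2003 [Card 1]: Class IO Module : removed\n")
print(parse_sros_syslog(msg)["event_name"])  # tmnxEqCardRemoved
```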

Syslog IP header source address

The source IP address field of the IP header on Syslog message packets depends on a number of factors including which interface the message is transmitted on and a few configuration commands.

When a syslog packet is transmitted out-of-band (out a CPM Ethernet port in the management router instance), the source IP address contains the address of the management interface as configured in the BOF.

When a syslog packet is transmitted in-band (for example, out a port on an IMM) in the Base router instance, the order of precedence for how the source IP address is populated is the following:

MD-CLI

- source address, configured using the following commands:

  configure system security source-address ipv4 syslog

  configure system security source-address ipv6 syslog

- system address, configured using the following commands:

  configure router interface "system" ipv4 primary address

  configure router interface "system" ipv6 address

- IP address of the outgoing interface

classic CLI

- source address, configured using the following commands:

  configure system security source-address application syslog

  configure system security source-address application6 syslog

- system address, configured using the following commands:

  configure router interface "system" address

  configure router interface "system" ipv6 address

- IP address of the outgoing interface

When a syslog packet is transmitted out a VPRN interface, the source IP address is populated with the IP address of the outgoing interface.
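The source address selection rules can be summarized as a short fallback chain. The following Python sketch is illustrative only: the function and parameter names are hypothetical, and the IPv4/IPv6 split and the MD-CLI versus classic CLI command names are not modeled.

```python
from typing import Optional


def syslog_source_address(*, out_of_band: bool, vprn: bool, bof_mgmt_address: str,
                          configured_source: Optional[str], system_address: Optional[str],
                          outgoing_interface_address: str) -> str:
    """Hypothetical helper: choose the IP source address of a syslog packet."""
    if out_of_band:
        # Out-of-band (CPM Ethernet port, management router): BOF management address
        return bof_mgmt_address
    if vprn:
        # VPRN: always the outgoing interface address
        return outgoing_interface_address
    # In-band, Base router: configured source address, then system address,
    # then the outgoing interface address
    for candidate in (configured_source, system_address):
        if candidate:
            return candidate
    return outgoing_interface_address
```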

Syslog HOSTNAME

The HOSTNAME field of Syslog messages can be populated with an IP address, the system name, or a number of other options.

configure log syslog hostname

configure service vprn log hostname

If the hostname command is not configured, SR OS populates the syslog HOSTNAME field with an IP address as follows.

When a syslog packet is transmitted out-of-band (out a CPM Ethernet port in the management router instance), the HOSTNAME field contains the address of the management interface as configured in the BOF.

When a syslog packet is transmitted in-band (for example, out a port on an IMM) in the Base router instance, the order of precedence for how the HOSTNAME field is populated is the following:

MD-CLI

- source address, configured using the following commands:

  configure system security source-address ipv4 syslog

  configure system security source-address ipv6 syslog

- system address, configured using the following commands:

  configure router interface "system" ipv4 primary address

  configure router interface "system" ipv6 address

- lowest loopback address

- lowest exit address

classic CLI

- source address, configured using the following commands:

  configure system security source-address application syslog

  configure system security source-address application6 syslog

- system address, configured using the following commands:

  configure router interface "system" address

  configure router interface "system" ipv6 address

- lowest loopback address

- lowest exit address

When a syslog packet is transmitted out a VPRN interface, the HOSTNAME is populated with the VPRN loopback address. When more than one loopback exists, the HOSTNAME contains the lowest loopback IP address. If no loopback interface is configured, the HOSTNAME contains the physical exit interface IP address. When no loopback interface is configured and more than one physical exit interface exists, the hostname contains the lowest physical exit interface IP address.
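The HOSTNAME rules can be sketched in Python as follows. All names are hypothetical. "Lowest" is interpreted here as numerically lowest using the standard `ipaddress` module (so 10.0.0.9 is lower than 10.0.0.10, unlike a plain string comparison), which is an assumption; the sketch also assumes a single address family per list, because Python cannot order IPv4 and IPv6 addresses against each other.

```python
import ipaddress
from typing import Optional, Sequence


def lowest_address(addresses: Sequence[str]) -> Optional[str]:
    """Numerically lowest address, or None for an empty list (single address family assumed)."""
    if not addresses:
        return None
    return str(min(ipaddress.ip_address(a) for a in addresses))


def syslog_hostname(*, configured: Optional[str], out_of_band: bool, vprn: bool,
                    bof_mgmt_address: str, source_address: Optional[str],
                    system_address: Optional[str], loopbacks: Sequence[str],
                    exit_addresses: Sequence[str]) -> str:
    """Hypothetical helper: value placed in the syslog HOSTNAME field."""
    if configured:
        return configured  # a configured hostname overrides the IP address defaults
    if out_of_band:
        return bof_mgmt_address
    # The Base router checks the source and system addresses first; VPRNs skip them
    candidates = [] if vprn else [source_address, system_address]
    candidates += [lowest_address(loopbacks), lowest_address(exit_addresses)]
    for candidate in candidates:
        if candidate:
            return candidate
    raise ValueError("no address available for the HOSTNAME field")
```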

Syslog over TLS for log events

Syslog messages containing log events can be optionally sent over TLS instead of UDP. TLS support for log event Syslog messages is based on RFC 5425, which provides security for syslog through the use of encryption and authentication. Use the following command to enable TLS for syslog log events by configuring a TLS profile against the syslog profile.

configure log syslog tls-client-profile

Syslog over TLS packets are sent with a fixed TCP source port of 6514.

TLS is supported for the following log event syslogs:

- system syslogs (configure log syslog), which can send Syslog messages as follows:

  - in-band (for example, out a port on an IMM)

  - out-of-band (out a CPM Ethernet port in the management router instance)

  The configure log route-preference command determines where the TLS connection is established for the base system syslogs.

- service VPRN syslogs, using the following command:

  configure service vprn log syslog

NETCONF

A NETCONF log is a log that outputs log events to a NETCONF session as notifications. A NETCONF client can subscribe to a NETCONF log using the configured netconf-stream stream-name for the log in a subscription request. See NETCONF notifications for more details.

Event logs

Event logs are the means of recording system-generated events for later analysis. Events are messages generated by the system by applications or processes within the router.

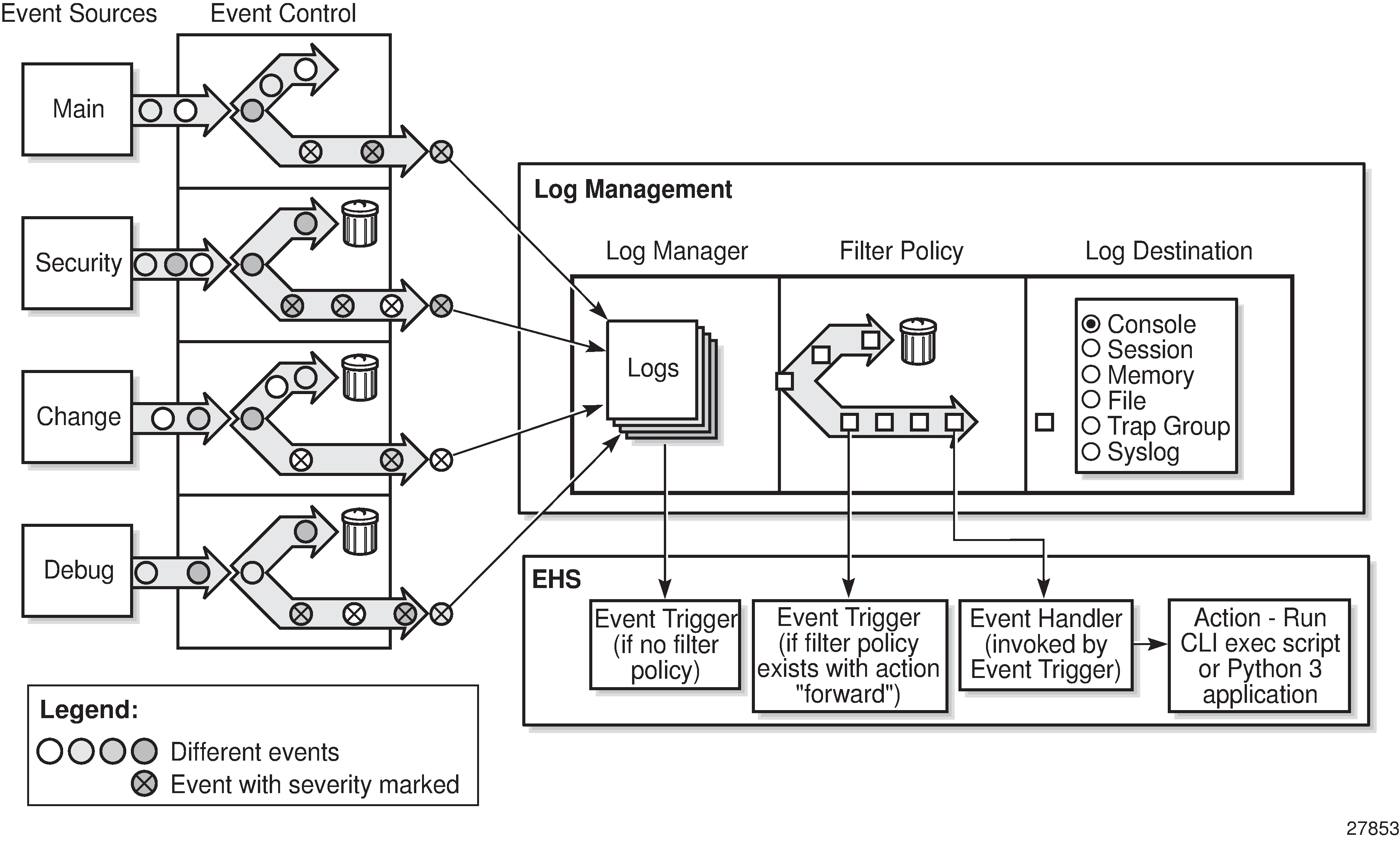

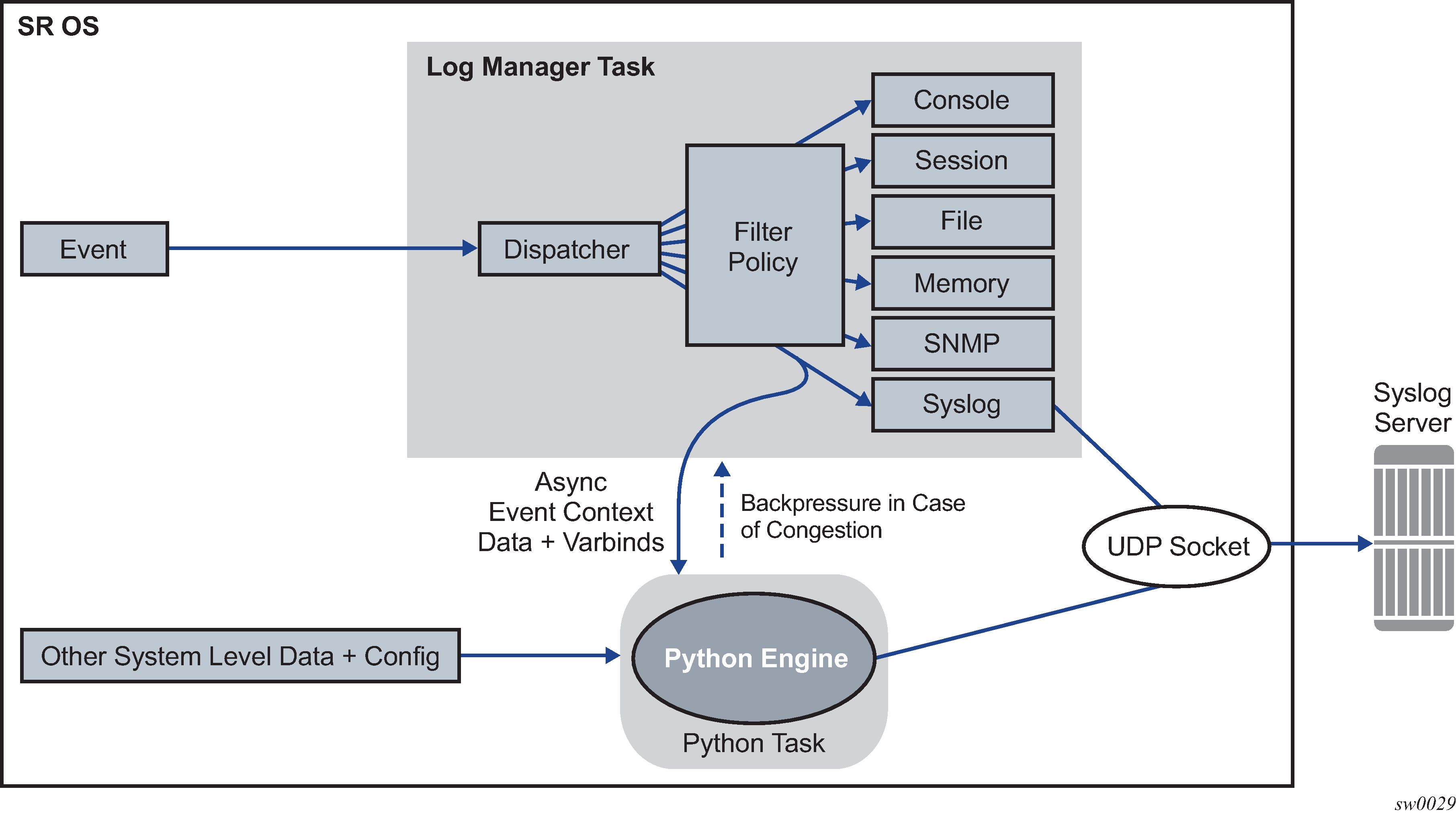

The following figure shows a function block diagram of event logging.

Event sources

In the Event logging block diagram, the event sources are the main categories of events that feed the log manager:

security

The security event source comprises all events that pertain to attempts to breach system security, such as failed login attempts, attempts to access MIB tables to which the user is not granted access, or attempts to enter a branch of the CLI to which access has not been granted. Security events are generated by the SECURITY application, as well as by several other applications (for example, TLS).

change

The change activity event source receives all events that directly affect the configuration or operation of the node. Change events are generated by the USER application. The Change event stream also includes the tmnxConfigModify (#2006), tmnxConfigCreate (#2007), tmnxConfigDelete (#2008), and tmnxStateChange (#2009) change events from the SYSTEM application, as well as the various xxxConfigChange events from the MGMT_CORE application.

debug

The debug event source is the debugging configuration that has been enabled on the system. Debug events are generated when debug is enabled for various protocols under the debug branch of the CLI (for example, debug system ntp).

li

The li event source generates lawful intercept events from the LI application.

main

The main event source receives events from all other applications within the router.

The event source for a particular log event is displayed in the output of the following command.

show log event-control detail

The event source can also be found in the source-stream element in the state log log-events context.

Use the following command to show the list of event log applications.

show log applications

The following example shows the show log applications command output. Examples of event log applications include IP, MPLS, OSPF, CLI, services, and so on.

==================================

Log Event Application Names

==================================

Application Name

----------------------------------

...

BGP

CCAG

CFLOWD

CHASSIS

...

MPLS

MSDP

NTP

...

USER

VRRP

VRTR

==================================

Event control

Event control pre-processes the events generated by applications before the event is passed into the main event stream. Event control assigns a severity to application events and can either forward the event to the main event source or suppress the event. Suppressed events are counted in event control, but these events will not generate log entries as they never reach the log manager.

Simple event throttling is another method of event control and is configured similarly to the generation and suppression options. See Simple logger event throttling.

Events are assigned a default severity level in the system, but the application event severities can be changed by the user.

Application events contain an event number and description that describes why the event is generated. The event number is unique within an application, but the number can be duplicated in other applications.
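The per-event bookkeeping that event control performs can be sketched in Python as follows. The class and method names are hypothetical. The sketch keys entries by (application, event ID) because event IDs are only unique within an application, and it maintains the Logged and Dropped counters shown in the show log event-control output.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class EventControlEntry:
    severity: str          # for example "warning" or "major"
    generate: bool = True  # False means the event is suppressed
    logged: int = 0
    dropped: int = 0


class EventControl:
    """Hypothetical model: per-event severity assignment and generate/suppress."""

    def __init__(self) -> None:
        # Keyed by (application, event ID): IDs repeat across applications
        self.entries: Dict[Tuple[str, int], EventControlEntry] = {}

    def configure(self, app: str, event_id: int, severity: str, generate: bool = True) -> None:
        self.entries[(app, event_id)] = EventControlEntry(severity, generate)

    def process(self, app: str, event_id: int) -> Optional[str]:
        """Return the assigned severity if forwarded, or None if suppressed."""
        entry = self.entries[(app, event_id)]
        if not entry.generate:
            entry.dropped += 1  # counted, but never reaches the log manager
            return None
        entry.logged += 1
        return entry.severity
```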

Use the following command to display log event information.

show log event-control

The following example, generated by querying event control for application-generated events, shows a partial list of event numbers and names.

=======================================================================

Log Events

=======================================================================

Application

ID# Event Name P g/s Logged Dropped

-----------------------------------------------------------------------


BGP:

2001 bgpEstablished MI gen 1 0

2002 bgpBackwardTransition WA gen 7 0

2003 tBgpMaxPrefix90 WA gen 0 0

...

CCAG:

CFLOWD:

2001 cflowdCreated MI gen 1 0

2002 cflowdCreateFailure MA gen 0 0

2003 cflowdDeleted MI gen 0 0

...

CHASSIS:

2001 cardFailure MA gen 0 0

2002 cardInserted MI gen 4 0

2003 cardRemoved MI gen 0 0

...

...

DEBUG:

L 2001 traceEvent MI gen 0 0

DOT1X:

FILTER:

2001 filterPBRPacketsDropped MI gen 0 0

IGMP:

2001 vRtrIgmpIfRxQueryVerMismatch WA gen 0 0

2002 vRtrIgmpIfCModeRxQueryMismatch WA gen 0 0

IGMP_SNOOPING:

IP:

L 2001 clearRTMError MI gen 0 0

L 2002 ipEtherBroadcast MI gen 0 0

L 2003 ipDuplicateAddress MI gen 0 0

...

ISIS:

2001 vRtrIsisDatabaseOverload WA gen 0 0

Log manager and event logs

Events that are forwarded by event control are sent to the log manager. The log manager manages the event logs in the system and the relationships between the log sources, event logs and log destinations, and log filter policies.

An event log has the following properties:

A unique log ID that is a short, numeric identifier for the event log. A maximum of ten logs can be configured at one time.

One or more log source streams that can be sent to specific log destinations. The source must be identified before the destination can be specified. The events can be from the main event stream, the security event stream, or the user activity stream.

A single destination. The destination for the log ID can be console, session, syslog, snmp-trap-group, memory, or a file on the local file system.

An optional event filter policy that defines whether to forward or drop an event or trap based on match criteria.

Event filter policies

The log manager uses event filter policies to allow fine control over which events are forwarded or dropped based on various criteria. Like other policies in the SR OS, filter policies have a default action. The default actions are either:

Forward

Drop

Filter policies also include a number of filter policy entries that are identified with an entry ID and define specific match criteria and a forward or drop action for the match criteria.

Each entry contains a combination of matching criteria that define the application, event number, router, severity, and subject conditions. The action for the entry determines how events that meet the match criteria are treated.

Entries are evaluated in order from the lowest to the highest entry ID. The first entry that matches an event determines whether that event is forwarded or dropped; if no entry matches, the default action of the policy applies.

Valid operators are described in the following table:

| Operator | Description |
|---|---|
| eq | equal to |
| neq | not equal to |
| lt | less than |
| lte | less than or equal to |
| gt | greater than |
| gte | greater than or equal to |

A match criteria entry can include combinations of:

Equal to or not equal to a specific system application.

Equal to or not equal to an event message string or regular expression match.

Equal to, not equal to, less than, less than or equal to, greater than, or greater than or equal to an event number within the application.

Equal to, not equal to, less than, less than or equal to, greater than, or greater than or equal to a severity level.

Equal to or not equal to a router name string or regular expression match.

Equal to or not equal to an event subject string or regular expression match.
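The evaluation order and match logic described above can be sketched in Python as follows. All names are hypothetical, and two details are assumptions rather than documented behavior: regular expressions are represented as compiled patterns that support only eq (matches) and neq (does not match), and severities are ordered from least to most severe (cleared, indeterminate, warning, minor, major, critical) when lt, lte, gt, or gte is used.

```python
import operator
import re
from dataclasses import dataclass, field
from typing import List, Tuple

# The six operators from the operator table
OPS = {"eq": operator.eq, "neq": operator.ne, "lt": operator.lt,
       "lte": operator.le, "gt": operator.gt, "gte": operator.ge}

# Assumption: severities compare by how severe they are; the text does not define this order
SEVERITY_RANK = {"cleared": 0, "indeterminate": 1, "warning": 2,
                 "minor": 3, "major": 4, "critical": 5}


@dataclass
class Event:
    application: str
    number: int
    severity: str
    router: str
    subject: str


@dataclass
class FilterEntry:
    entry_id: int
    action: str  # "forward" or "drop"
    # Each criterion is (event field, operator, value); value may be a compiled regex
    criteria: List[Tuple[str, str, object]] = field(default_factory=list)

    def matches(self, event: Event) -> bool:
        for name, op, value in self.criteria:
            actual = getattr(event, name)
            if isinstance(value, re.Pattern):
                # Regular expressions support only eq (matches) and neq (does not match)
                hit = value.search(actual) is not None
                ok = hit if op == "eq" else not hit
            elif name == "severity":
                ok = OPS[op](SEVERITY_RANK[actual], SEVERITY_RANK[value])
            else:
                ok = OPS[op](actual, value)
            if not ok:
                return False  # every criterion in the entry must match
        return True


def evaluate(entries: List[FilterEntry], default_action: str, event: Event) -> str:
    """Return "forward" or "drop" for one event."""
    for entry in sorted(entries, key=lambda e: e.entry_id):  # lowest entry ID first
        if entry.matches(event):
            return entry.action  # the first matching entry decides
    return default_action
```

Sorting by entry ID at evaluation time means the order in which entries were created does not matter, which mirrors the lowest-to-highest rule.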

Event log entries

Log entries that are forwarded to a destination are formatted in a way appropriate for the specific destination, whether it is recorded to a file or sent as an SNMP trap, but log event entries have common elements or properties. All application-generated events have the following properties:

timestamp in UTC or local time

generating application

unique event ID within the application

router name identifying the router instance that generated the event

subject identifying the affected object

short text description

The general format for an event in an event log with either a memory, console, or file destination is as follows.

nnnn <time> TZONE <severity>: <application> #<event-id> <vrtr-name> <subject>

<message>

The following is an example of an event log entry:

252 2013/05/07 16:21:00.761 UTC WARNING: SNMP #2005 Base my-interface-abc

"Interface my-interface-abc is operational"

The specific elements that comprise the general format are described in the following table.

| Label | Description |
|---|---|
| nnnn | The log entry sequence number. |
| <time> | YYYY/MM/DD HH:MM:SS.SSS |
| YYYY/MM/DD | The UTC date stamp for the log entry. YYYY: year, MM: month, DD: date |
| HH:MM:SS.SSS | The UTC timestamp for the event. HH: hours (24-hour format), MM: minutes, SS.SSS: seconds |
| TZONE | The time zone (for example, UTC, EDT) as configured by the following command. |
| <severity> | The severity level of the event (see the severity table at the start of this chapter). |
| <application> | The application generating the log message. |
| <event-id> | The application event ID number for the event. |
| <vrtr-name> | The router name in a special format used by the logging system (for example, Base or vprn101, where 101 represents the service-id of the VPRN service), representing the router instance that generated the event. |
| <subject> | The subject or affected object for the event. |
| <message> | A text description of the event. |
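Because the memory, console, and file format is fixed, entries can be parsed offline, for example from a log file copied off the node. The following Python sketch parses one entry into the fields in the preceding table. The function name and dictionary keys are hypothetical; stripping the surrounding double quotes from the message follows the example entry and is an assumption about other entries.

```python
import re
from datetime import datetime
from typing import Optional

_ENTRY_RE = re.compile(
    r"(?P<seq>\d+) (?P<time>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) (?P<tzone>\S+) "
    r"(?P<severity>[A-Z]+): (?P<application>\S+) #(?P<event_id>\d+) "
    r"(?P<vrtr_name>\S+)(?: (?P<subject>[^\n]*))?\n(?P<message>.*)",
    re.DOTALL,  # the message may span several lines
)


def parse_event_log_entry(text: str) -> Optional[dict]:
    """Parse 'nnnn <time> TZONE <severity>: <application> #<id> <vrtr> <subject>' plus message."""
    m = _ENTRY_RE.fullmatch(text.strip())
    if m is None:
        return None
    entry = m.groupdict()
    entry["seq"] = int(entry["seq"])
    entry["event_id"] = int(entry["event_id"])
    entry["time"] = datetime.strptime(entry["time"], "%Y/%m/%d %H:%M:%S.%f")
    entry["subject"] = entry["subject"] or ""
    entry["message"] = entry["message"].strip().strip('"')
    return entry


sample = ('252 2013/05/07 16:21:00.761 UTC WARNING: SNMP #2005 Base my-interface-abc\n'
          '"Interface my-interface-abc is operational"')
print(parse_event_log_entry(sample)["subject"])  # my-interface-abc
```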

Simple logger event throttling

Simple event throttling provides a mechanism to protect event receivers from being overloaded when a scenario causes many events to be generated in a very short period of time. A throttling rate, # events/# seconds, can be configured. Specific event types can be configured to be throttled. When the throttling event limit is exceeded in a throttling interval, any further events of that type cause the dropped events counter to be incremented.

Use the following command to display dropped event counts.

show log event-control

Events are dropped before being sent to one of the logger event collector tasks. There is no record of the details of the dropped events and therefore no way to retrieve event history data lost by this throttling method.

A particular event type can be generated by multiple managed objects within the system. At the point where this throttling method is applied, the logger application has no information about the managed object that generated the event and cannot distinguish between events generated by object ‟A” and events generated by object ‟B”. If the events have the same event-id, they are throttled regardless of the managed object that generated them. The logger also cannot distinguish events that may eventually be logged to destination log-id <n> from events that are logged to destination log-id <m>.

Throttle rate applies commonly to all event types. It is not configurable for a specific event-type.

A timer task checks for events dropped by throttling when the throttle interval expires. If any events have been dropped, a TIMETRA-SYSTEM-MIB::tmnxTrapDropped notification is sent.
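To make the throttling behavior above concrete, the following Python sketch models it as a per-event-type counter with one shared limit, cleared by a timer at the end of each interval. It is a hypothetical model, not SR OS code: the class name, the (application, event-id) key, and calling interval_expired() from an external timer are assumptions. The key deliberately ignores the subject, mirroring the fact that the logger cannot tell which managed object raised the event, and a True return from interval_expired() stands in for the tmnxTrapDropped notification.

```python
from collections import defaultdict


class SimpleThrottle:
    """Hypothetical model: at most `limit` events of each throttled type per interval."""

    def __init__(self, limit, throttled_types):
        self.limit = limit                        # one rate shared by all throttled types
        self.throttled = set(throttled_types)     # event types enabled for throttling
        self.counts = defaultdict(int)            # events passed in the current interval
        self.dropped = defaultdict(int)           # cumulative dropped-events counters
        self._dropped_this_interval = 0

    def accept(self, event_type):
        """Return True if the event is passed to the logger, False if it is dropped.

        event_type is an (application, event_id) pair; the subject is not part of
        the key, so events from different managed objects share one count.
        """
        if event_type not in self.throttled:
            return True
        if self.counts[event_type] < self.limit:
            self.counts[event_type] += 1
            return True
        self.dropped[event_type] += 1             # dropped without keeping any details
        self._dropped_this_interval += 1
        return False

    def interval_expired(self):
        """Timer callback at the end of each interval; True means a drop notification is due."""
        notify = self._dropped_this_interval > 0
        self.counts.clear()
        self._dropped_this_interval = 0
        return notify
```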

Default system log

Log 99 is a pre-configured memory-based log which logs events from the main event source (not security, debug, and so on). Log 99 exists by default.

The following example displays the log 99 configuration.

MD-CLI

```
[ex:/configure log]
A:admin@node-2# info
    log-id "99" {
        admin-state enable
        description "Default system log"
        source {
            main true
        }
        destination {
            memory {
                max-entries 500
            }
        }
    }
    snmp-trap-group "7" {
    }
```

classic CLI

```
A:node-2>config>log# info detail
#------------------------------------------
echo "Log Configuration "
#------------------------------------------
...
        snmp-trap-group 7
        exit
...
        log-id 99
            description "Default system log"
            no filter
            from main
            to memory 500
            no shutdown
        exit
----------------------------------------------
```

Event handling system

See "Event Handling System" in the 7450 ESS, 7750 SR, and 7950 XRS System Management Advanced Configuration Guide for MD CLI for information about advanced configurations.

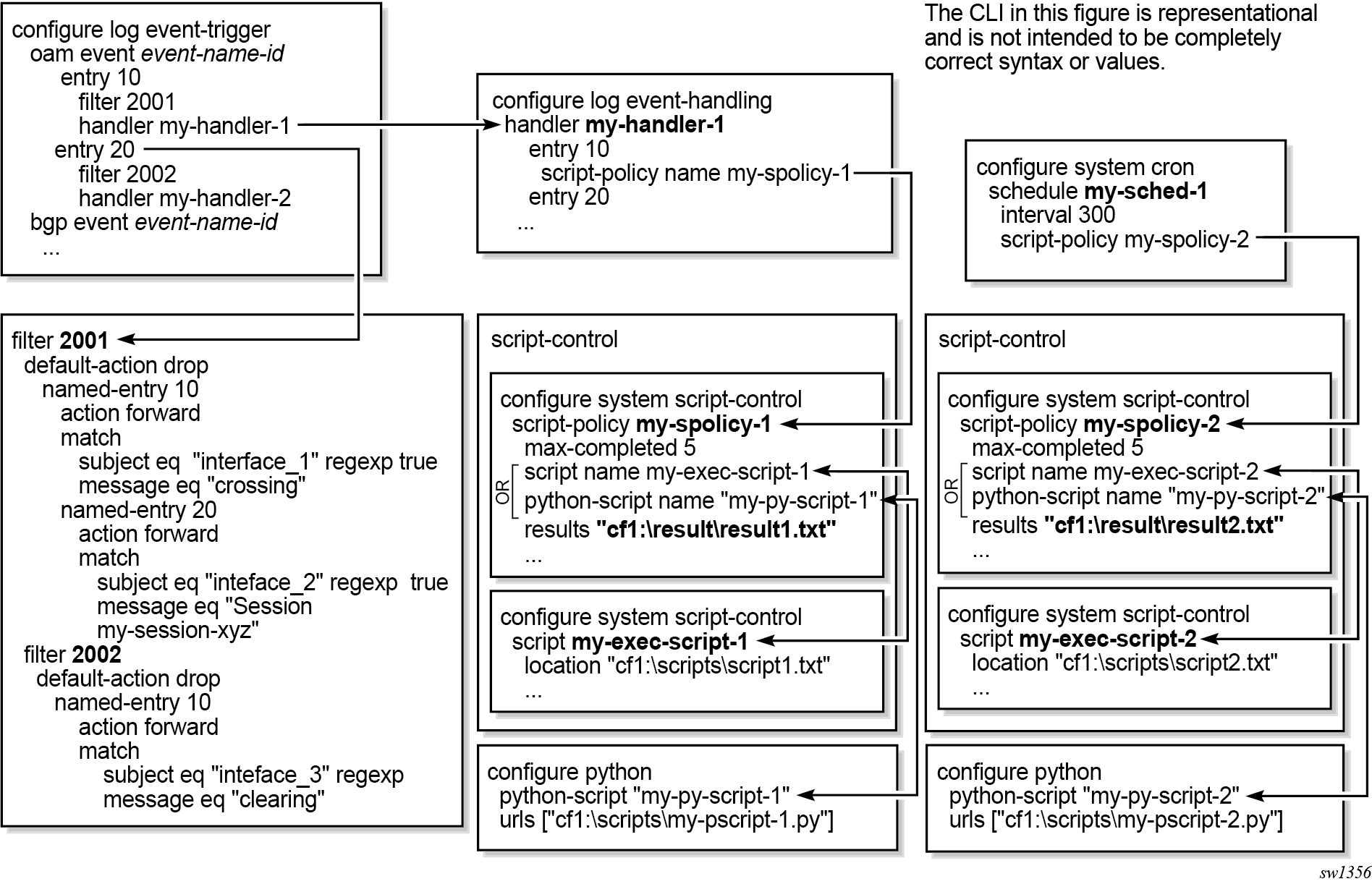

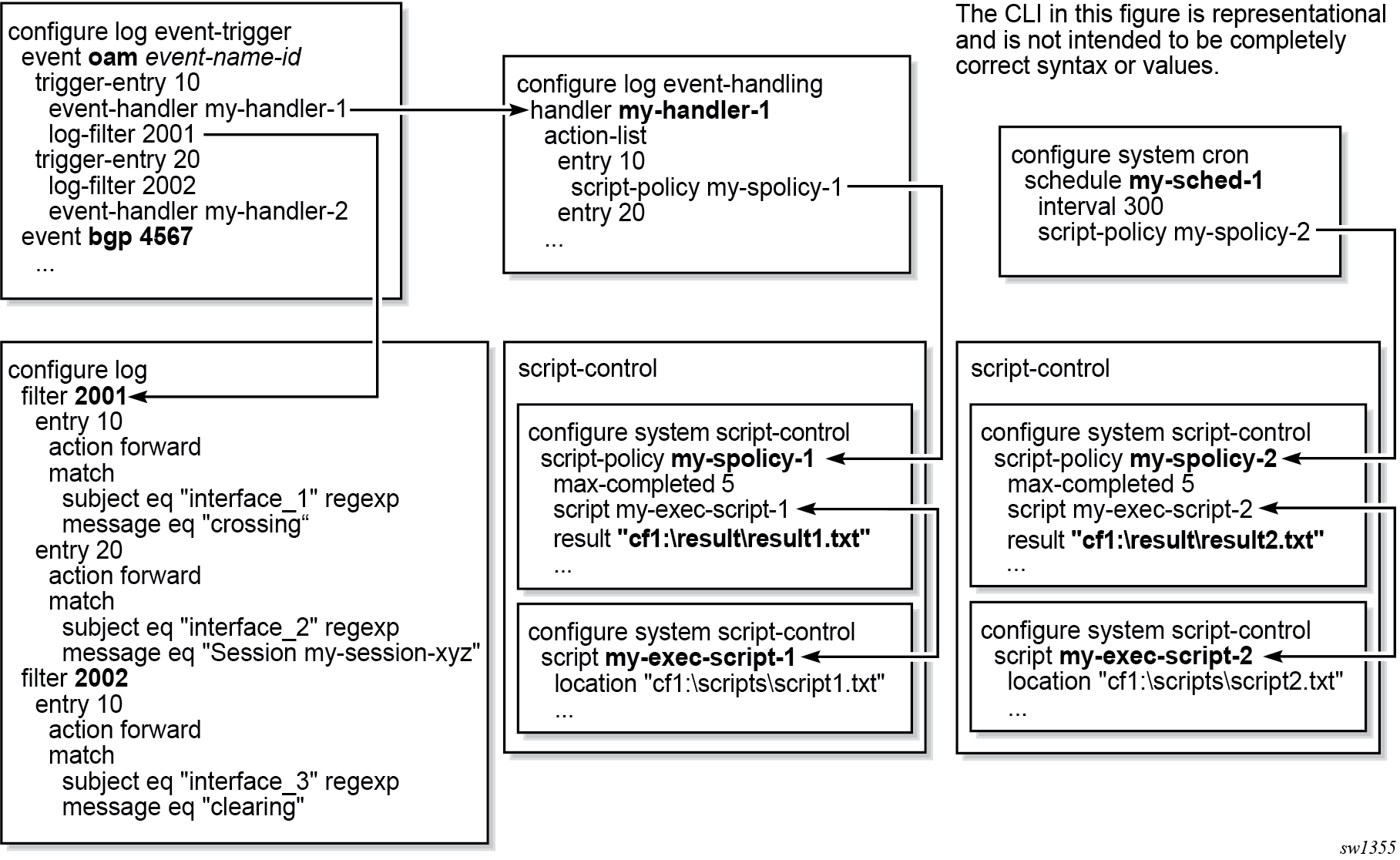

The Event Handling System (EHS) is a framework that allows operator-defined behavior to be configured on the router. EHS adds user-controlled programmatic exception handling by allowing the execution of either a CLI script or a Python 3 application when a log event (the ‟trigger”) is detected. Various fields in the log event provide regexp style expression matching, which allows flexibility for the trigger definition.

EHS handler objects are used to tie together the following:

trigger events (typically log events that match configurable criteria)

a set of actions to perform (enabled using CLI scripts and Python applications)

EHS, along with CRON, may execute SR OS CLI scripts or Python 3 applications to perform operator-defined functions as a result of receiving a trigger event. The Python programming language provides an extensive framework for automation activities for triggered or scheduled events, including model-driven transactional configuration and state manipulation. See the Python chapter for more information.

The use of Python applications from EHS is supported only in model-driven configuration mode.

The following figure shows the relationships among the different configurable objects used by EHS (and CRON).

EHS configuration and variables

You can configure complex rules to match log events as the trigger for EHS. Use the commands in the following context to configure discard using suppression and throttling:

- MD-CLI

  configure log log-events

- classic CLI

  configure log event-control

When a log event is generated in SR OS, it is subject to discard using the configured suppression and throttling before it is evaluated as a trigger for EHS, according to the following:

EHS does not trigger on log events that are suppressed through the configuration.

EHS does not trigger on log events that are throttled by the logger.

EHS is triggered on log events that are dropped by user-configured log filters assigned to individual logs.

Use the following command to assign log filters:

configure log filter

The EHS event trigger logic occurs before the distribution of log event streams into individual logs.
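The ordering rules above can be summarized as a small pipeline. The following Python sketch is a hypothetical model (the LogEvent type, the dispatch() function, and its callable arguments are illustrative, not an SR OS API); it only shows why suppression and throttling prevent an EHS trigger while a per-log filter drop does not.

```python
from dataclasses import dataclass


@dataclass
class LogEvent:
    app: str
    event_id: int
    subject: str = ""


def dispatch(event, suppressed, throttle_ok, ehs_triggers, log_filters):
    """Return (ehs_fired, log_ids) for one event, in the order described above.

    suppressed   -- set of (app, event_id) pairs suppressed by event control
    throttle_ok  -- callable(event) -> bool; False means the logger throttled it
    ehs_triggers -- iterable of callables(event) -> bool (EHS trigger criteria)
    log_filters  -- dict of log_id -> callable(event) -> bool (True = keep in that log)
    """
    if (event.app, event.event_id) in suppressed:
        return False, []            # suppressed: no EHS trigger, no log entry
    if not throttle_ok(event):
        return False, []            # throttled: no EHS trigger, no log entry
    # EHS is evaluated before the event stream is split into individual logs...
    ehs_fired = any(match(event) for match in ehs_triggers)
    # ...so a log filter that drops the event cannot stop EHS from firing.
    log_ids = [log_id for log_id, keep in log_filters.items() if keep(event)]
    return ehs_fired, log_ids
```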

The parameters from the log event are passed into the triggered EHS CLI script or Python application. For CLI scripts, the parameters are passed as individual dynamic variables (for example, $eventid). For Python applications, see the details in the following sections. The parameters are composed of:

- common event parameters

- event-specific parameters

The common event parameters are:

- appid

- eventid

- severity

- gentime (in UTC)

- timestamp (in seconds, available within a Python application only)

The event specific parameters depend on the log event. Use the following command to obtain information for a particular log event.

show log event-parameters

Alternatively, in the MD-CLI use the following command for information.

state log log-events

Triggering a CLI script from EHS

When using the classic CLI, an EHS script can define local (static) variables and use basic .if or .set syntax inside the script. Using variables with .if or .set commands within an EHS script adds more logic to EHS scripting and allows a single EHS script to be reused for more than one trigger or action.

Both passed-in and local variables can be used within an EHS script, either as part of the CLI commands or as part of the .if or .set commands.

The following applies to both CLI commands and .if or .set commands (where X represents a variable):

Using $X, without single or double quotes, replaces the variable X with its string or integer value.

Using "X", with double quotes, means the actual string X.

Using "$X", with double quotes, replaces the variable X with its string or integer value.

Using '$X', with single quotes, does not replace the variable X with its value but means the actual string $X.

The following interpretation of single and double quotes applies:

All characters within single quotes are interpreted as string characters.

All characters within double quotes are interpreted as string characters except for $, which replaces the variable with its value (similar to variable expansion inside a double-quoted string in a shell).
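The following Python sketch models the quoting rules above for a single token, as a way to check expectations before writing a script. It is an approximation under stated assumptions: the expand() function is hypothetical, variable names are assumed to match [A-Za-z_][A-Za-z0-9_]*, and an unknown variable simply raises KeyError. The real EHS parser may behave differently in edge cases.

```python
import re

# Assumed variable-name syntax; the EHS documentation does not define it here.
_VAR = re.compile(r"\$([A-Za-z_][A-Za-z0-9_]*)")


def expand(token, variables):
    """Resolve one token according to the single/double quote rules (sketch)."""
    if len(token) >= 2 and token[0] == token[-1] == "'":
        return token[1:-1]                 # single quotes: everything is literal
    if len(token) >= 2 and token[0] == token[-1] == '"':
        body = token[1:-1]                 # double quotes: literal except $variable
    else:
        body = token                       # unquoted: $variable is substituted
    return _VAR.sub(lambda m: str(variables[m.group(1)]), body)
```

For example, with variables {"X": "eth1"}, the token "$X" (double-quoted) expands to eth1, while '$X' (single-quoted) stays as the literal string $X.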

Examples of EHS syntax supported in the classic CLI

This section describes the supported EHS syntax for the classic CLI.

```
.if $string_variable==string_value_or_string_variable {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif

.if ($string_variable==string_value_or_string_variable) {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif

.if $integer_variable==integer_value_or_integer_variable {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif

.if ($integer_variable==integer_value_or_integer_variable) {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif

.if $string_variable!=string_value_or_string_variable {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif

.if ($string_variable!=string_value_or_string_variable) {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif

.if $integer_variable!=integer_value_or_integer_variable {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif

.if ($integer_variable!=integer_value_or_integer_variable) {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif

.set $string_variable = string_value_or_string_variable
.set ($string_variable = string_value_or_string_variable)
.set $integer_variable = integer_value_or_integer_variable
.set ($integer_variable = integer_value_or_integer_variable)
```

where:

CLI_commands_set1 is a set of one or more CLI commands

CLI_commands_set2 is a set of one or more CLI commands

string_variable is a local (static) string variable

string_value_or_string_variable is a string value/variable

integer_variable is a local (static) integer variable

integer_value_or_integer_variable is an integer value/variable

A limit of 100 local (static) variables per EHS script is imposed. Exceeding this limit may result in an error and partial execution of the script.

When a .set statement assigns a string_value to a string_variable, the string_value can be either any non-integer value without quotes or a value enclosed in single or double quotes.

A directive preceded by "." (for example, .if or .set) must always start a new line.

An opening brace ({) must always be followed by an end of line.

A CLI command is always expected to start a new line.

Passed-in (dynamic) variables are always read-only inside an EHS script and cannot be overwritten using a set statement.

.if commands support == and != operators only.

.if and .set commands support the addition, subtraction, multiplication, and division of integers.

.if and .set commands support the addition of strings, which means concatenation of strings.
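The type rules above can be checked with a small Python model of a single binary expression. This is a sketch, not the EHS interpreter: ehs_eval() is hypothetical, and integer division is assumed to discard the remainder (modeled here with floor division), which the rules above do not specify.

```python
import operator

# Integer arithmetic supported by .if and .set (division behavior is an assumption).
_ARITH = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.floordiv}


def ehs_eval(left, op, right):
    """Evaluate one binary .if/.set expression under the rules listed above (sketch)."""
    if type(left) is not type(right):
        raise TypeError("variable types do not match")
    if op in ("==", "!="):                 # the only comparisons .if supports
        return left == right if op == "==" else left != right
    if isinstance(left, str):
        if op != "+":
            raise TypeError("strings support only + (concatenation)")
        return left + right
    if op not in _ARITH:
        raise ValueError("unsupported operator: " + op)
    return _ARITH[op](left, right)
```

Under this model, mixing a string and an integer in any expression raises an error, which matches the invalid examples later in this section.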

Valid examples for EHS syntax in the classic CLI

This section provides valid examples of EHS syntax in the classic CLI:

configure service epipe $serviceID

where $serviceID is either a local (static) integer variable or passed-in (dynamic) integer variable

echo srcAddr is $srcAddr

where $srcAddr is a passed-in (dynamic) string variable

.set $ipAddr = "10.0.0.1"

where $ipAddr is a local (static) string variable

.set $ipAddr = $srcAddr

where $srcAddr is a passed-in (dynamic) string variable

$ipAddr is a local (static) string variable.

.set ($customerID = 50)

where $customerID is a local (static) integer variable

.set ($totalPackets = $numIngrPackets + $numEgrPackets)

where $totalPackets, $numIngrPackets, $numEgrPackets are local (static) integer variables

.set ($portDescription = $portName + $portLocation)

where $portDescription, $portName, $portLocation are local (static) string variables

```
.if ($srcAddr == "CONSOLE") {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif
```

where $srcAddr is a passed-in (dynamic) string variable

CLI_commands_set1 is a set of one or more CLI commands

CLI_commands_set2 is a set of one or more CLI commands

```
.if ($customerID == 10) {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif
```

where $customerID is a passed-in (dynamic) integer variable

CLI_commands_set1 is a set of one or more CLI commands

CLI_commands_set2 is a set of one or more CLI commands

```
.if ($numIngrPackets == $numEgrPackets) {
CLI_commands_set1
.} else {
CLI_commands_set2
.} endif
```

where $numIngrPackets and $numEgrPackets are local (static) integer variables

CLI_commands_set1 is a set of one or more CLI commands

CLI_commands_set2 is a set of one or more CLI commands

Invalid examples for EHS syntax in the classic CLI

This section provides a list of invalid variable use in EHS syntax in the classic CLI:

.set $srcAddr = "10.0.0.1"

where $srcAddr is a passed-in (dynamic) string variable

Reason: passed-in variables are read only inside an EHS script.

.set ($ipAddr = $numIngrPackets + $numEgrPackets)

where $ipAddr is a local (static) string variable

$numIngrPackets and $numEgrPackets are local (static) integer variables

Reason: variable types do not match; an integer value cannot be assigned to a string variable.

.set ($numIngrPackets = $ipAddr + $numEgrPackets)

where $ipAddr is a local (static) string variable

$numIngrPackets and $numEgrPackets are local (static) integer variables

Reason: variable types do not match; a string cannot be concatenated with an integer.

.set $ipAddr = "10.0.0.1"100

where $ipAddr is a local (static) string variable

Reason: when double quotes are used, they have to surround the entire string.

```
.if ($totalPackets == "10.1.1.1") {
.} endif
```

where $totalPackets is a local (static) integer variable

Reason: cannot compare an integer variable to a string value.

```
.if ($ipAddr == 10) {
.} endif
```

where $ipAddr is a local (static) string variable

Reason: cannot compare a string variable to an integer value.

```
.if ($totalPackets == $ipAddr) {
.} endif
```

where $totalPackets is a local (static) integer variable

$ipAddr is a local (static) string variable

Reason: cannot compare an integer variable to a string variable.

Triggering a Python application from EHS

When using model-driven configuration mode and the MD-CLI, EHS can trigger a Python application that is executed inside a Python interpreter running on SR OS. See the Python chapter for more information.

Python applications are not supported in classic configuration mode or mixed configuration mode.

When developing an EHS Python application, the event attributes are passed to the application using the get_event function in the pysros.ehs module.

To import this module, the Python application developer must add the following statement to the application.

from pysros.ehs import get_event

Use the get_event function to obtain the event that triggered the Python application. The following example retrieves the event and stores it as a Python object in the event variable.

event = get_event()

When using an EHS Python application, the operator can use the Python programming language to create applications, as required. See the Python chapter for information about displaying model-driven state or configuration information, performing transactional configuration of SR OS, or executing CLI commands in Python.

Common event parameters (group one) are available in Python from the object created using the get_event function, as shown in the following table (the examples assume that the EHS event object is called event).

| Function call | Description | Example output | Python return type |
|---|---|---|---|
| event.appid | The name of the application that generated the event | SYSTEM | String |
| event.eventid | The event ID number of the application | 2068 | Integer |
| event.severity | The severity level of the event | minor | String |
| event.subject | The subject or affected object of the event | EHS script | String |
| event.gentime | The formatted time the event was generated in UTC | The timestamp in ISO 8601 format (consistent with state date/time leaves) that the event was generated. For example, 2021-03-08T11:52:06.0-05:00 | String |
| event.timestamp | The timestamp that the event was generated (in seconds) | 1632165026.921208 | Float |

The variable parameters (group two) are available in Python in the eventparameters attribute of the event object, as shown in the following table. They are presented as a Python dictionary (unordered).

| Function call | Description | Example output | Python return type |
|---|---|---|---|
| event.eventparameters | The event specific variable parameters | <EventParams> When calling keys() on this object the example output is: ('tmnxEhsHandlerName', 'tmnxEhsHEntryId', 'tmnxEhsHEntryScriptPlcyOwner', 'tmnxEhsHEntryScriptPlcyName', 'smLaunchOwner', 'smLaunchName', 'smLaunchScriptOwner', 'smLaunchScriptName', 'smLaunchError', 'tmnxSmLaunchExtAuthType', 'smRunIndex', 'tmnxSmRunExtAuthType', 'tmnxSmRunExtUserName') | Dict |

In addition to the variables, the format_msg() function is provided to output the formatted log string from the event as it would appear in the output of the show log command.

format_msg() usage

print(event.format_msg())

Output of the format_msg() function

Launch of none operation failed with a error: Python script's operational status is not 'inService'. The script policy "test_ehs" created by the owner "TiMOS CLI" was executed with cli-user account "not-specified"
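Putting the pieces above together, a minimal EHS Python application might look like the following sketch. The summarize() helper and the line it prints are hypothetical; only attributes and methods listed in the preceding tables are used. The guarded import is an assumption that lets the module load off-box (where pysros.ehs is normally not available) so the helper can be exercised with a stand-in object.

```python
try:
    from pysros.ehs import get_event   # provided by the pySROS libraries on SR OS
except ImportError:                    # off-box: keep the helper importable for testing
    get_event = None


def summarize(event):
    """Return a one-line summary built from the common and event-specific parameters."""
    param_names = sorted(event.eventparameters.keys())
    return "{}-{} ({}) subject={} params={}".format(
        event.appid, event.eventid, event.severity, event.subject, param_names)


def main():
    event = get_event()          # the event that triggered this EHS handler
    print(summarize(event))
    print(event.format_msg())    # same text as it appears in show log output


if __name__ == "__main__" and get_event is not None:
    main()
```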

EHS debounce

EHS debounce (also called dampening) is the ability to trigger an action (for example, an EHS script) only when an event occurs N times within a specific time window of S seconds, where:

N = [2..15]

S = [1..604800]

Triggering occurs with the Nth event, not at the end of S.

There is no sliding window (that is, no trigger at the Nth event, then at the (N+1)th event, and so on) because the event count is reset after a trigger and counting restarts.

When EHS debouncing or dampening is used, the varbinds passed into an EHS script at script triggering time are from the Nth event occurrence (the Nth triggering event).

If S is not specified, the SR OS continues to trigger every Nth event.

For example, when linkDown occurs N times in S seconds, an EHS script is triggered to shut down the port.
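The debounce rules can be modeled as a counter that resets after each trigger. The following Python sketch is hypothetical. In particular, the text above does not say when the window S starts, so the sketch assumes it opens at the first counted event and that an event arriving after the window has elapsed starts a new count. The handler would receive the parameters of the event for which on_event() returns True (the Nth event).

```python
class Debounce:
    """Trigger on the Nth occurrence within S seconds; counting restarts after each trigger.

    window=None models "S not specified": trigger on every Nth event.
    """

    def __init__(self, n, window=None):
        if not 2 <= n <= 15:
            raise ValueError("N must be in the range 2..15")
        if window is not None and not 1 <= window <= 604800:
            raise ValueError("S must be in the range 1..604800 seconds")
        self.n = n
        self.window = window
        self.count = 0
        self.start = None

    def on_event(self, now):
        """Return True when this event (the Nth) triggers the handler."""
        if self.count and self.window is not None and now - self.start > self.window:
            self.count = 0                 # assumed: window expired, start counting afresh
        if self.count == 0:
            self.start = now               # assumed: window opens at the first counted event
        self.count += 1
        if self.count == self.n:
            self.count = 0                 # no sliding window: reset after a trigger
            return True
        return False
```

For example, Debounce(3, window=10) fed events at t = 0, 1, 2, 3, 4, 5 triggers on the third and sixth events only.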

Executing EHS or CRON CLI scripts or Python applications

The execution of EHS or CRON scripts depends on the CLI engine associated with the configuration mode. The EHS or CRON script execution engine is based on the configured primary CLI engine. Use the following command to configure the primary CLI engine.

configure system management-interface cli cli-engine

For example, if cli-engine is configured to classic-cli, the script executes in the classic CLI infrastructure and disregards the configuration mode, even if it is model-driven.

The following is the default behavior of the EHS or CRON scripts, depending on the configuration mode:

- model-driven configuration mode

  EHS or CRON scripts execute in the MD-CLI environment and an error occurs if any classic CLI commands exist. Python applications are fully supported and use the SR OS model-driven interfaces and the pySROS libraries to obtain and manipulate state and configuration data, as well as pySROS API calls to execute MD-CLI commands.

- classic configuration mode

  EHS or CRON scripts execute in the classic CLI environment and an error occurs if any MD-CLI commands exist. Python applications are not supported and the system returns an error.

- mixed configuration mode

  EHS or CRON scripts execute in the classic CLI environment and an error occurs if any MD-CLI commands exist. Python applications are not supported and the system returns an error.

EHS or CRON scripts that contain MD-CLI commands can be used in the MD-CLI as follows:

- scripts can be configured

- scripts can be created and edited, and their results read, through FTP

- scripts can be triggered and executed

- scripts generate an error if they contain any non-MD-CLI commands or any .if or .set syntax

Use the following commands to specify the CLI user account whose authorization is applied to EHS or CRON scripts and Python applications:

- MD-CLI and classic CLI

  configure system security cli-script authorization event-handler cli-user

  configure system security cli-script authorization cron cli-user

- MD-CLI only

  configure system security python-script authorization event-handler cli-user

  configure system security python-script authorization cron cli-user

When a user is not specified, EHS or CRON scripts and EHS or CRON Python applications bypass authorization and can execute all commands.

In all configuration modes, a script policy can be disabled using the following command even if history exists:

- MD-CLI

  configure system script-control script-policy admin-state disable

- classic CLI

  configure system script-control script-policy shutdown

When the script policy is disabled, the following applies:

- Newly triggered EHS or CRON scripts or Python applications are not allowed to execute or queue.

- In-progress EHS or CRON scripts or Python applications are allowed to continue.

- Already queued EHS or CRON scripts or Python applications are allowed to execute.

By default, a script policy is configured to allow an EHS or CRON script to override datastore locks from any model-driven interface (MD-CLI, NETCONF, and so on) in mixed and model-driven modes. Use the following command to configure a script policy to prevent EHS or CRON scripts from overriding datastore locks:

- MD-CLI

  configure system script-control script-policy lock-override false

- classic CLI

  configure system script-control script-policy no lock-override

Managing logging in VPRNs

Log events can be sent from within a VPRN instead of from the base router instance or the CPM management router instance. For example, a syslog collector may be reachable through a VPRN interface.

To deploy VPRN logs, configure an event log in the following context.

configure service vprn log

By default, the event source streams for VPRN event logs contain only events that are associated with the specific VPRN. To include the entire system-wide set of log events (VPRN and non-VPRN) in a VPRN event log, use the following command. This can be useful, for example, when a VPRN is used as a management VPRN.

configure log services-all-events

Custom log events

The SR OS supports six custom log events, each with a different default severity that is modifiable like any other log event. The event names and associated default severity of the events are described in the following table.

| Event name | Default severity |
|---|---|
| tmnxCustomEvent1 | critical |
| tmnxCustomEvent2 | major |
| tmnxCustomEvent3 | minor |
| tmnxCustomEvent4 | warning |
| tmnxCustomEvent5 | cleared |
| tmnxCustomEvent6 | indeterminate |

A custom event can be raised by a user or client using the perform log custom-event command in the MD-CLI, the equivalent YANG-modeled operation over NETCONF, or a pySROS script. The subject, message text, and multiple output parameters of the log event can be populated with custom strings.

The custom log events can be used as triggers for Event Handling System (EHS) handlers, with all parameters passed into the associated EHS scripts. The events can also be sent to any standard log destination type, for example syslog or SNMP notifications.

Custom generic log event raised in MD-CLI

The following is an example of a custom generic log event raised in MD-CLI.

```
[/]
A:admin@node-2# perform log custom-event 4 subject "test" message-string "Port 1/1/1 is in the Down state" parameter1 "1/1/1" parameter2 "Down" parameter3 "1977-05-04"
```

The resulting log event is shown in the show log log-id 99 command.

```
[/]
A:admin@node-2# show log log-id 99
===============================================================================
Event Log 99 log-name 99
===============================================================================
Description : Default System Log
Memory Log contents [size=500 next event=45 (not wrapped)]

44 2024/05/02 12:21:12.423 UTC WARNING: LOGGER #2023 Base test
"Port 1/1/1 is in the Down state"
```

If the log event in the preceding example is sent as an SNMP notification, or if the log event is used as a trigger for the EHS, the following event-specific parameters are passed:

- logCustomEventSubject = “test”
- logCustomEventMessageString = “Port 1/1/1 is in the Down state”
- logCustomEventParameter1 = “1/1/1”
- logCustomEventParameter2 = “Down”
- logCustomEventParameter3 = “1977-05-04”

The combined length of the message-string and all parameter strings (parameter1 to parameter8) must be 2400 characters or fewer.

Embedded double quotes are supported in the message-string and parameter inputs by using the backslash character (\) followed immediately by the double quote character (").
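When custom events are raised from an external tool, a helper can apply the quoting and length rules above before sending the command. The following Python sketch builds the MD-CLI perform log custom-event line. The custom_event_command() and quote() helpers are hypothetical; the 2400-character check is applied to the unescaped strings, and escaping the subject the same way as the message-string is an assumption.

```python
MAX_TOTAL = 2400   # message-string plus parameter1..parameter8


def quote(value):
    """Wrap a value in double quotes, escaping embedded double quotes as \\"."""
    return '"' + value.replace('"', '\\"') + '"'


def custom_event_command(number, subject, message, params=()):
    """Build a 'perform log custom-event' MD-CLI line (hypothetical helper)."""
    if not 1 <= number <= 6:
        raise ValueError("custom events are numbered 1..6")
    if len(params) > 8:
        raise ValueError("at most eight parameters (parameter1..parameter8)")
    if len(message) + sum(len(p) for p in params) > MAX_TOTAL:
        raise ValueError("message-string plus parameters exceed 2400 characters")
    parts = ["perform log custom-event", str(number),
             "subject", quote(subject), "message-string", quote(message)]
    for i, p in enumerate(params, start=1):
        parts += ["parameter%d" % i, quote(p)]
    return " ".join(parts)
```

Called as custom_event_command(4, "test", "Port 1/1/1 is in the Down state", ["1/1/1", "Down", "1977-05-04"]), the helper produces the same command line as the MD-CLI example in the preceding section.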

Custom log event with double quotes

The following example raises a custom log event whose message string contains embedded double quotes.

```
[/]
A:admin@node-2# perform log custom-event 1 subject "test" message-string "{
\"nokia-conf:connect-retry\": 90, \"nokia-conf:local-preference\": 250,
\"nokia-conf:add-paths\": { \"ipv4\": { \"receive\": true } }}"
```

The following string is the output of the message field of the resulting log event.

```
"{ "nokia-conf:connect-retry": 90, "nokia-conf:local-preference": 250,
"nokia-conf:add-paths": { "ipv4": { "receive": true } }}"
```

Custom test events

The SR OS provides a specific test log event. The text for this test log event can be customized. The test log event can be raised with the perform log test-event command. The custom-text command in this context replaces the default message of the event.

The total length of the custom-text must be equal to or less than 800 characters. Embedded double quotes are not supported in the custom-text string. There is no special treatment for \n or \r sequences. For example, \n in the custom-text string is output as the backslash character (\) and “n” (the equivalent of ASCII 0x5C and 0x6e).

Test log event configured with custom text

```
[/]
A:admin@node-2# perform log test-event custom-text "Starting maintenance window 7728\n\r Now"
```

The following test log event message is the output for the preceding command.

```
35 2023/05/24 00:41:00.191 UTC INDETERMINATE: LOGGER #2011 Base Event Test
"Starting maintenance window 7728\n\r Now"
```

Customizing Syslog messages using Python

Any log event in SR OS can be customized using a Python script before it is sent to a syslog server. If a log filter drops the event, no further processing occurs and the message is not sent. The following figure shows the interaction between the logger and the Python engine.

Python engine for syslog

This section describes the syslog-specific aspects of Python processing. For an introduction to Python, see the 7450 ESS, 7750 SR, and VSR Triple Play Service Delivery Architecture Guide, "Python script support for ESM".

When an event is dispatched to the log manager in SR OS, the log manager asynchronously passes the event context data and variables (varbinds in Python 2 and event parameters in Python 3) to the Python engine; that is, the logger task does not wait for feedback from Python.

Varbinds or event parameters are variable bindings that represent the variable number of values that are included in the event. Each varbind in Python 2 consists of a triplet (OID, type, value).

Using these values along with other system-level variables, the Python engine constructs a Syslog message and sends it to the syslog destination when the Python engine successfully concludes. During this process, the operator can modify the format of the Syslog message or leave it intact, as if it were generated by the syslog process within the log manager.

The tasks of the Python engine in a syslog context are as follows:

assemble custom Syslog messages (including PRI, HEADER and MSG fields) based on the received event context data, varbinds and event parameters specific to the event, system-level data, and the configuration parameters (syslog server IP address, syslog facility, log-prefix, and the destination UDP port)

reformat timestamps in a Syslog message

modify attributes in the message and reformat the message

send the original or modified message to the syslog server

drop the message

Python 2 syslog APIs

Python APIs are used to assemble a Syslog message which, in SR OS, has the format described in the Syslog section.

The following table describes Python information that can be used to manipulate Syslog messages.

| Imported Nokia (ALC) modules | Access rights | Comments |
|---|---|---|
| event (from alc import event) | — | Method used to retrieve generic event information |
| syslog (from alc import syslog) | — | Method used to retrieve syslog-specific parameters |
| system (from alc import system) | — | Method used to retrieve system-specific information. Currently, the only parameter retrieved is the system name. |
| Events use the following format as they are written into memory, file, console, and system: `nnnn <time> <severity>:<application> # <event_id> <router-name> <subject> <message>`. The event-related information received in the context data from the log manager is retrieved via the following Python methods: | | |
| event.sequence | RO | Sequence number of the event (nnnn) |
| event.timestamp | RO | Event timestamp in the format: (YYYY/MM/DD HH:MM:SS.SS) |
| event.routerName | RO | Router name, for example, BASE, VPRN1, and so on |
| event.application | RO | Application generating the event, for example, NA |
| event.severity | RO | Event severity configurable in SR OS (CLEARED [1], INFO [2], CRITICAL [3], MAJOR [4], MINOR [5], WARNING [6]). |
| event.eventId | RO | Event ID; for example, 2012 |
| event.eventName | RO | Event Name; for example, tmnxNatPlBlockAllocationLsn |
| event.subject | RO | Optional field; for example, [NAT] |
| event.message | RO | Event-specific message; for example, "{2} Map 192.168.20.29 [2001-2005] MDA 1/2 -- 276824064 classic-lsn-sub %3 vprn1 10.10.10.101 at 2015/08/31 09:20:15" |
| Syslog methods | | |
| syslog.hostName | RO | IP address of the SR OS node sending the Syslog message. This is used in the Syslog HEADER. |
| syslog.logPrefix | RO | Log prefix which is configurable and optional; for example, TMNX: |
| syslog.severityToPRI(event.severity) | — | Python method used to derive the PRI field in the syslog header based on the event severity and a configurable syslog facility |
| syslog.severityToName(event.severity) | — | Converts the SR OS event severity to the syslog severity name. For more information, see the Syslog section. |
| syslog.timestampToUnix(timestamp) | — | Python method that takes a timestamp in the YYYY/MM/DD HH:MM:SS format and converts it into a UNIX-based format (seconds from Jan 01 1970 – UTC) |
| syslog.set(newSyslogPdu) | — | Python method used to send the Syslog message in newSyslogPdu. This variable must be constructed manually via string manipulation. If this method is not called, SR OS assembles the default Syslog message (as if Python was not configured) and sends it to the syslog server, unless the message is explicitly dropped. |
| syslog.drop() | — | Python method used to drop a Syslog message. This method must be called before the syslog.set(newSyslogPdu) method. |
| System methods | | |
| system.name | RO | Python method used to retrieve the system name |

For example, assume that the syslog format is:

```
<PRI><timestamp> <hostname> <log-prefix>: <sequence> <router-name> <appid>-<severity>-<name>-<eventid> [<subject>]: <text>
```

Then the syslogPdu is constructed via Python as shown in the following example:

```python
from alc import event
from alc import syslog

# Assemble the PDU field by field, following the format shown above
syslogPdu = "<" + syslog.severityToPRI(event.severity) + ">" \
    + event.timestamp + " " \
    + syslog.hostName + " " + syslog.logPrefix + ": " \
    + event.sequence + " " + event.routerName + " " \
    + event.application + "-" \
    + syslog.severityToName(event.severity) + "-" \
    + event.eventName + "-" + event.eventId + " [" \
    + event.subject + "]: " + event.message

# Hand the assembled message to the logger for transmission
syslog.set(syslogPdu)
```
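For reference, the PRI value follows the standard syslog rule PRI = facility * 8 + severity code. The following Python 3 sketch lays out the same fields as the Python 2 example, using plain values instead of the alc objects so that it can run anywhere. The SEVERITY_TO_SYSLOG mapping is an assumption for illustration only; the authoritative mapping is the one documented in the Syslog section, which is what syslog.severityToPRI() and syslog.severityToName() apply on the node.

```python
# Assumed SR OS-to-syslog severity mapping; confirm against the Syslog section.
SEVERITY_TO_SYSLOG = {
    "critical": (2, "crit"),
    "major": (3, "err"),
    "minor": (4, "warning"),
    "warning": (5, "notice"),
    "cleared": (6, "info"),
    "indeterminate": (6, "info"),
}


def pri(facility, severity):
    """PRI as defined for syslog: facility * 8 + syslog severity code."""
    return facility * 8 + SEVERITY_TO_SYSLOG[severity][0]


def build_pdu(ev, facility, hostname, log_prefix):
    """Lay out the fields in the example format above; `ev` is a plain dict stand-in."""
    sev_name = SEVERITY_TO_SYSLOG[ev["severity"]][1]
    return "<{}>{} {} {}: {} {} {}-{}-{}-{} [{}]: {}".format(
        pri(facility, ev["severity"]), ev["timestamp"], hostname, log_prefix,
        ev["sequence"], ev["router"], ev["app"], sev_name, ev["name"],
        ev["event_id"], ev["subject"], ev["message"])
```

For example, a minor event with facility 23 (local7) yields PRI 23 * 8 + 4 = 188 under this assumed mapping.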

Python 3 syslog APIs

Python APIs are used to modify and assemble a Syslog message which, in SR OS, has the format described in the Syslog section.

The syslog module for Python 3 is included in the pySROS libraries preinstalled on the SR OS device. The get_event function must be imported from the pysros.syslog module at the beginning of each Python 3 application by including the following:

from pysros.syslog import get_event

The specific event that the syslog handler is processing can be returned in a variable using the following example Python 3 code:

my_event = get_event()

In the preceding example, my_event is an object of type Event. The Event class provides a number of parameters and functions as described in the following table:

| Key name | Python type | Read-only | Description |
|---|---|---|---|
| name | String | N | Event name |
| appid | String | N | Name of application that generated the log message |
| eventid | Integer | N | Event ID number of the application |
| severity | String | N | Severity level of the event (lowercase). The accepted values in SR OS are: |
| sequence | Integer | N | Sequence number of the event in the syslog collector |
| subject | String | N | Subject or affected object for the event |
| router_name | String | N | Name of the SR OS router-instance (for example, Base) in which the event is triggered |
| gentime | String | Y | Timestamp in ISO 8601 format for the generated event. Example: 2021-03-08T11:52:06.0-0500. Changes to the timestamp field are reflected in this field. |
| timestamp | Float | N | Timestamp, in seconds |
| hostname | String | N | Hostname field of the Syslog message. This can be an IP address, a fully-qualified domain name, or a hostname. |
| log_prefix | String | N | Optional log prefix, for example, TMNX |
| facility | Integer | N | Syslog facility [0-31] |
| text | String | N | String representation of the text portion of the message only. By default, this is generated from the eventparameters attribute. |
| eventparameters | Dict | Y | Python class that behaves similarly to a Python dictionary of all key, value pairs for all log event specific information that does not fall into the standard fields. |
| format_msg() | String | n/a | Formatted version of the full log message as it appears in show log. Note: format_msg() is a function itself and must be called to generate the formatted message. |
| format_syslog_msg() | String | n/a | Formatted version of the Syslog message as it would be sent to the syslog server. Note: format_syslog_msg() is a function itself and must be called to generate the formatted message. |
| override_payload(payload) | | n/a | Provide a custom syslog message as it would appear in the packet, including the header information (facility, timestamp, and so on) and body data (the actual message). Attributes from this Event are used to construct a completely new message format. Any prior changes to the values of these attributes are used. |
| drop() | | n/a | Drop the message from the pipeline. The Syslog message is not sent out (regardless of any subsequent changes in the Python script). The script continues normally. |

The parameter values for the specific event are provided in the Event class. At the end of the Python application execution, the resultant values are returned to the syslog system to transmit the Syslog message. Any changes made to the read-write parameters are used in the Syslog message unless the drop() method is called.
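The following is a minimal sketch of a Python syslog policy script built on the read-write attributes and the drop() method described in the table. It assumes the pySROS `get_event()` entry point of `pysros.syslog` returns the current Event; check this against the pySROS API documentation. The filtering rule (forward only events raised in the Base router instance) and the text rewrite are hypothetical. The handler takes the event as a parameter, so the same logic can be exercised off-box with a stub object.

```python
def handle_event(event):
    """Apply a hypothetical policy to one syslog event.

    event: an object exposing the Event attributes router_name, subject,
    and text, plus the drop() method, as listed in the table above.
    """
    # Hypothetical rule: only events raised in the Base router instance are sent.
    if event.router_name != "Base":
        event.drop()  # the Syslog message is not sent; the script continues normally
        return
    # text is read-write, so the changed value is used in the transmitted message.
    if event.subject:
        event.text = "[{}] {}".format(event.subject, event.text)


try:
    from pysros.syslog import get_event  # available only on an SR OS node
except ImportError:
    get_event = None

if get_event is not None:
    current_event = get_event()
    if current_event is not None:
        handle_event(current_event)
```

The rule is kept in its own function so it stays independent of the pipeline plumbing. After the script finishes, any attributes it changed are returned to the syslog system, unless drop() was called.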

More information about the pysros.syslog module can be found in the API documentation for pySROS delivered with the pySROS libraries.

Timestamp format manipulation in Python 2

Certain logging environments require customized formatting of the timestamp. Nokia provides a timestamp conversion method in the alc.syslog Python module. It converts a timestamp from the format YYYY/MM/DD hh:mm:ss into a UNIX timestamp (seconds since 00:00:00 UTC on January 1, 1970).

For example, an operator can use the following Python method to convert a timestamp from the YYYY/MM/DD hh:mm:ss.ss or YYYY/MM/DD hh:mm:ss (no centiseconds) format into either the UNIX timestamp format or the MMM DD hh:mm:ss format.

from alc import event   # supplies event.timestamp, the usual input value
from alc import syslog  # supplies timestampToUnix()

# Input format:    YYYY/MM/DD hh:mm:ss.ss or YYYY/MM/DD hh:mm:ss
# Output format 1: MMM DD hh:mm:ss
# Output format 2: UNIX timestamp (seconds since Jan 01 1970, UTC)

MONTHS = ('Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun',
          'Jul', 'Aug', 'Sep', 'Oct', 'Nov', 'Dec')

def timeFormatConversion(timestamp, format):
    if format not in (1, 2):
        raise ValueError('Unexpected format, expected 1 or 2, got: ' + str(format))
    try:
        dat, tim = timestamp.split(' ')
        YYYY, MM, DD = dat.split('/')
        hh, mm, ss = tim.split(':')
        ss = ss.split('.')[0]  # drop the centiseconds if the input is hh:mm:ss.ss
        year, month, day = int(YYYY), int(MM), int(DD)
        hour, minute, second = int(hh), int(mm), int(ss)
    except ValueError:
        raise ValueError('Unexpected timestamp format, expected: '
                         'YYYY/MM/DD hh:mm:ss got: ' + timestamp)
    if not (1970 <= year < 2100 and 1 <= month <= 12 and 1 <= day <= 31 and
            0 <= hour <= 23 and 0 <= minute <= 59 and 0 <= second <= 60):
        raise ValueError('Timestamp values out of range, expected: '
                         'YYYY/MM/DD hh:mm:ss got: ' + timestamp)
    if format == 1:
        return MONTHS[month - 1] + ' ' + DD + ' ' + hh + ':' + mm + ':' + ss
    # format == 2: pass the timestamp, without centiseconds, to the Nokia helper
    return syslog.timestampToUnix(dat + ' ' + hh + ':' + mm + ':' + ss)

# Example call: timeFormatConversion(event.timestamp, 1)

The timeFormatConversion method accepts the event.timestamp value in the format YYYY/MM/DD HH:MM:SS.SS. It returns a new timestamp in the format selected by the format parameter:

1: MMM DD HH:MM:SS

2: UNIX timestamp (seconds since the epoch)

The method accepts the input in either of two forms, YYYY/MM/DD HH:MM:SS.SS or YYYY/MM/DD HH:MM:SS, and ignores the centiseconds in the first form.

Timestamp format manipulation in Python 3

Certain logging environments require customized formatting of the timestamp. The Python 3 interpreter provided with SR OS also provides the utime and datetime modules for format manipulation.
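The following is a minimal Python 3 sketch of such a manipulation using only the datetime module. It assumes the SR OS datetime module supports the standard CPython calls used here (`datetime.fromtimestamp`, `timezone`, `timedelta`, and `datetime.timestamp`); utime is not used.

It provides two hypothetical helpers:

- `gentime_to_unix` converts an ISO 8601 gentime value, such as the documented example 2021-03-08T11:52:06.0-0500, into seconds since the epoch.
- `unix_to_bsd` formats seconds since the epoch (for example, the Event timestamp attribute) as MMM DD hh:mm:ss in UTC.

The sketch assumes that gentime always ends with a +hhmm or -hhmm offset, or with Z.

```python
import datetime

MONTHS = ("Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec")


def unix_to_bsd(seconds):
    """Format seconds since the epoch as 'MMM DD hh:mm:ss' in UTC.

    The day is space-padded, as in a BSD-style (RFC 3164) syslog header.
    """
    dt = datetime.datetime.fromtimestamp(seconds, datetime.timezone.utc)
    return "{} {:2d} {:02d}:{:02d}:{:02d}".format(
        MONTHS[dt.month - 1], dt.day, dt.hour, dt.minute, dt.second)


def gentime_to_unix(gentime):
    """Convert an ISO 8601 gentime such as '2021-03-08T11:52:06.0-0500' to seconds."""
    date_part, clock = gentime.split("T")
    if clock.endswith("Z"):
        clock, offset = clock[:-1], datetime.timedelta(0)
    else:
        # Split off the trailing UTC offset, for example "-0500".
        clock, sign, hhmm = clock[:-5], clock[-5], clock[-4:]
        offset = datetime.timedelta(hours=int(hhmm[:2]), minutes=int(hhmm[2:]))
        if sign == "-":
            offset = -offset
    year, month, day = (int(part) for part in date_part.split("-"))
    hh, mm, ss = clock.split(":")
    whole, _, frac = ss.partition(".")
    micro = int((frac + "000000")[:6]) if frac else 0  # fractional seconds
    dt = datetime.datetime(year, month, day, int(hh), int(mm), int(whole), micro,
                           tzinfo=datetime.timezone(offset))
    return dt.timestamp()
```

For the documented example, gentime_to_unix("2021-03-08T11:52:06.0-0500") returns 1615222326.0, and unix_to_bsd of that value gives "Mar  8 16:52:06".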

Python processing efficiency

Python retrieves event-related variables from the log manager, rather than retrieving pre-assembled Syslog messages. Scripts therefore do not need to parse the Syslog message string to manipulate its constituent parts, which increases the speed of Python processing.

To further improve processing performance, Nokia recommends performing string manipulation with native Python string methods whenever possible.
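The following is a minimal sketch of that recommendation. It renders a mapping of event fields as key=value pairs using only native str methods (format, replace, join) and no regular expressions. The helper name and the sample field names are hypothetical. It assumes the fields are passed as a plain dict, for example a copy of eventparameters; whether eventparameters itself supports every dict operation is not confirmed here.

```python
def format_fields(fields, sep=" "):
    """Render a mapping as 'key=value' pairs using only native str methods."""
    parts = []
    for key in sorted(fields):
        # str.replace instead of re.sub: keep each value a single token.
        value = str(fields[key]).replace(" ", "_")
        parts.append("{}={}".format(key, value))
    # A single str.join avoids building the line by repeated concatenation.
    return sep.join(parts)
```

For example, format_fields({"port": "1/1/1", "reason": "link down"}) returns "port=1/1/1 reason=link_down".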

Python backpressure

A Python task assembles Syslog messages from the context information received from the logger and sends them to the syslog server independently of the logger. If the Python task becomes congested because of a high volume of received data, backpressure should be sent to the ISA so that the ISA stops allocating NAT resources. This matches the existing behavior, in which NAT resource allocation is blocked when the logger is congested.

Selecting events for Python processing

Events destined for Python processing are configured through a log ID that references a Python policy. Event selection is performed using a filter associated with the log ID. The remainder of the events destined for the same syslog server can bypass Python processing by redirecting them to a different log ID.

- Use the commands in the following contexts to create the Python policy and log ID:

MD-CLI

configure python python-policy PyForLogEvents
configure python python-policy syslog

classic CLI

configure python python-policy PyForLogEvents create
configure python python-policy syslog

- Use log filters to identify the events that are subject to Python processing.

MD-CLI

[ex:/configure log]
A:admin@node-2# info
    filter "6" {
        default-action drop
        named-entry "1" {
            action forward
            match {
                application {
                    eq nat
                }
                event {
                    eq 2012
                }
            }
        }
    }
    filter "7" {
        default-action forward
        named-entry "1" {
            action drop
            match {
                application {
                    eq nat
                }
                event {
                    eq 2012
                }
            }
        }
    }

classic CLI

A:node-2>config>log# info
----------------------------------------------
        filter 6
            default-action drop
            entry 1
                action forward
                match
                    application eq "nat"
                    number eq 2012
                exit
            exit
        exit
        filter 7
            default-action forward
            entry 1
                action drop
                match
                    application eq "nat"
                    number eq 2012
                exit
            exit
        exit

- Specify the syslog destination.

MD-CLI

[ex:/configure log]
A:admin@node-2# info
    syslog "1" {
        address 192.168.1.1
    }

classic CLI

A:node-2>config>log# info
----------------------------------------------
        syslog 1
            address 192.168.1.1
        exit

- Apply the Python syslog policy to selected events using the specified filters.

In the following example, only event 2012 from application "nat" is sent to log-id 33, which applies the Python policy. All other events are forwarded, without modification, to the same syslog destination using log-id 34. As a result, all events (modified using log-id 33 and unmodified using log-id 34) are sent to the syslog 1 destination.

This configuration may cause Syslog messages to be reordered at the syslog 1 destination, because messages processed by Python are slightly delayed.

MD-CLI

[ex:/configure log]
A:admin@node-2# info
    log-id "33" {
        admin-state enable
        python-policy "PyForLogEvents"
        filter "6"
        source {
            main true
        }
        destination {
            syslog "1"
        }
    }
    log-id "34" {
        admin-state enable
        filter "7"
        source {
            main true
        }
        destination {
            syslog "1"
        }
    }

classic CLI

A:node-2>config>log# info
----------------------------------------------
        log-id 33
            filter 6
            from main
            to syslog 1
            python-policy "PyForLogEvents"
            no shutdown
        exit
        log-id 34
            filter 7
            from main
            to syslog 1
            no shutdown
        exit
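The following Python model (not SR OS code) mirrors the match logic of filters 6 and 7. It shows why every event reaches syslog 1 exactly once: the two filters are complements of each other. The function names are hypothetical, and application and event values are modeled as plain strings and integers.

```python
def filter_6_forwards(application, event_id):
    """filter 6: default-action drop; entry 1 forwards nat event 2012."""
    return application == "nat" and event_id == 2012


def filter_7_forwards(application, event_id):
    """filter 7: default-action forward; entry 1 drops nat event 2012."""
    return not (application == "nat" and event_id == 2012)


def log_ids_for(application, event_id):
    """Return the log IDs that accept an event; both log IDs send to syslog 1."""
    accepted = []
    if filter_6_forwards(application, event_id):
        accepted.append(33)  # processed by python-policy "PyForLogEvents"
    if filter_7_forwards(application, event_id):
        accepted.append(34)  # forwarded without modification
    return accepted
```

Because the entry in filter 7 matches exactly what the entry in filter 6 forwards, no event is duplicated or lost. For example, log_ids_for("nat", 2012) returns [33], and any other event returns [34].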

Accounting logs

Before an accounting policy can be created, a target log file policy must be created to collect the accounting records. The files are stored in system memory on compact flash (cf1: or cf2:) in a compressed (tar) XML format and can be retrieved using FTP or SCP.

A file policy can only be assigned to either one event log or one accounting log.

Accounting records

An accounting policy must define a record name and collection interval. Only one record name can be configured per accounting policy. Also, a record name can only be used in one accounting policy.
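The following is a minimal planning sketch, not an SR OS feature. It checks a planned set of accounting policies against the constraints stated above:

- Each accounting policy must define a record name and a collection interval.
- A record name can be used in only one accounting policy.
- A file policy can be assigned to only one event log or one accounting log.

The data model is hypothetical: a dict from policy ID to (record name, interval in minutes, file-policy ID). That tuple shape enforces the one-record-per-policy rule by construction.

```python
from collections import Counter


def check_accounting_plan(policies, event_log_files=()):
    """Return the constraint violations in a planned set of accounting policies.

    policies: dict mapping a policy ID to (record_name, interval_minutes, file_policy_id)
    event_log_files: file-policy IDs that are already assigned to event logs
    """
    problems = []
    # Each accounting policy must define a record name and a collection interval.
    for pid, (record, interval, _) in sorted(policies.items()):
        if not record or not interval:
            problems.append(
                "policy {}: a record name and a collection interval are required".format(pid))
    # A record name can be used in only one accounting policy.
    record_use = Counter(rec for rec, _, _ in policies.values() if rec)
    for rec, count in sorted(record_use.items()):
        if count > 1:
            problems.append(
                "record {} is used by {} accounting policies".format(rec, count))
    # A file policy can be assigned to only one event log or one accounting log.
    file_use = Counter(f for _, _, f in policies.values() if f is not None)
    file_use.update(event_log_files)
    for fid, count in sorted(file_use.items()):
        if count > 1:
            problems.append("file policy {} is assigned to {} logs".format(fid, count))
    return problems
```

An empty result means the plan respects all three constraints.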

The record name, sub-record types, and default collection period for some service and network accounting policies are shown in Accounting record name and collection periods. Policer stats field descriptions, Queue group record types, and Queue group record type fields provide field descriptions.

| Record name | Sub-record types | Accounting object | Platform | Default collection period (minutes) |

|---|---|---|---|---|

| service-ingress-octets | sio | SAP | All | 5 |
| service-egress-octets | seo | SAP | All | 5 |
| service-ingress-packets | sip | SAP | All | 5 |
| service-egress-packets | sep | SAP | All | 5 |
| network-ingress-octets | nio | Network port | All | 15 |
| network-egress-octets | neo | Network port | All | 15 |
| network-egress-packets | nep | Network port | All | 15 |
| network-ingress-packets | nip | Network port | All | 15 |
| compact-service-ingress-octets | ctSio | SAP | All | 5 |
| combined-service-ingress | cmSipo | SAP | All | 5 |
| combined-network-ing-egr-octets | cmNio & cmNeo | Network port | All | 15 |
| combined-service-ing-egr-octets | cmSio & cmSeo | SAP | All | 5 |
| complete-network-ing-egr | cpNipo & cpNepo | Network port | All | 15 |
| complete-service-ingress-egress | cpSipo & cpSepo | SAP | All | 5 |
| combined-sdp-ingress-egress | cmSdpipo and cmSdpepo | SDP and SDP binding | All | 5 |
| complete-sdp-ingress-egress | cmSdpipo, cmSdpepo, cpSdpipo and cpSdpepo | SDP and SDP binding | All | 5 |
| complete-subscriber-ingress-egress | cpSBipo & cpSBepo | Subscriber profile | 7750 SR | 5 |
| aa-protocol | aaProt | AA ISA Group | 7750 SR | 15 |
| aa-application | aaApp | AA ISA Group | 7750 SR | 15 |

|

aa-app-group |

aaAppGrp |