Configuring LDP

This chapter provides information about configuring Label Distribution Protocol (LDP) on SR Linux for both IPv4 and IPv6.

Enabling LDP

You must enable LDP for the protocol to be active. This procedure applies for both IPv4 and IPv6 LDP.

Enable LDP

The following example administratively enables LDP for the default network instance.

--{ * candidate shared default }--[ ]--

# info network-instance default protocols ldp admin-state

network-instance default {

protocols {

ldp {

admin-state enable

}

}

}

Configuring LDP neighbor discovery

You can configure LDP neighbor discovery, which allows SR Linux to discover and connect to IPv4 and IPv6 LDP peers without manually specifying the peers. SR Linux supports basic LDP discovery for discovering LDP peers, using multicast UDP hello messages.

Configure LDP neighbor discovery

The following example configures LDP neighbor discovery for a network instance and enables it on a subinterface for IPv4 and IPv6. The hello-interval parameter specifies the number of seconds between LDP link hello messages. The hello-holdtime parameter specifies how long the LDP link hello adjacency is maintained in the absence of link hello messages from the LDP neighbor.

--{ * candidate shared default }--[ ]--

# info network-instance default protocols ldp discovery

network-instance default {

protocols {

ldp {

discovery {

interfaces {

hello-holdtime 30

hello-interval 10

interface ethernet-1/1.1 {

ipv4 {

admin-state enable

}

ipv6 {

admin-state enable

}

}

}

}

}

}

}
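To show how the two Hello timers interact, the following Python sketch models a single link Hello adjacency using the values from the example above. It assumes the RFC 5036 rule that the hold time in use is the smaller of the two values the peers propose. The function names are hypothetical and are not part of SR Linux.

```python
# Sketch of LDP link Hello adjacency timing (hypothetical helper names).
HELLO_INTERVAL = 10   # hello-interval from the example, in seconds
HELLO_HOLDTIME = 30   # hello-holdtime from the example, in seconds


def negotiated_holdtime(local: int, remote: int) -> int:
    """Per RFC 5036, the hold time used is the smaller of the two proposed values."""
    return min(local, remote)


def adjacency_up(last_hello_rx: float, now: float, holdtime: float) -> bool:
    """The adjacency stays up while the last Hello arrived within the holdtime."""
    return now - last_hello_rx <= holdtime


def hellos_tolerated(interval: int, holdtime: int) -> int:
    """Roughly how many consecutive Hellos can be lost before the adjacency expires."""
    return holdtime // interval - 1


hold = negotiated_holdtime(HELLO_HOLDTIME, 45)
print(hold, hellos_tolerated(HELLO_INTERVAL, hold))          # 30 2
print(adjacency_up(0, 25, hold), adjacency_up(0, 31, hold))  # True False
```

Keeping the holdtime at a small multiple of the interval, as in the example, lets an adjacency survive an occasional lost Hello without delaying failure detection for long.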

Configuring LDP peers

You can configure settings that apply to connections between SR Linux and IPv4 and IPv6 LDP peers, including session keepalive parameters. For individual LDP peers, you can configure the maximum number of FEC-label bindings that can be accepted from the peer.

If LDP receives a FEC-label binding from a peer that puts the number of received FECs from this peer at the configured FEC limit, the peer is put into overload. If the peer advertised the Nokia-overload capability (as another SR Linux router or an SR OS device does), the overload TLV is transmitted to the peer and the peer stops sending any further FEC-label bindings. If the peer did not advertise the Nokia-overload capability, no overload TLV is sent to the peer. In either case, the received FEC-label binding is deleted.
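The following Python sketch models the per-peer FEC limit. It follows the statement later in this section that bindings beyond the limit are deleted. The exact boundary at which overload is triggered, and all class and attribute names, are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LdpPeerState:
    """Hypothetical per-peer bookkeeping for the fec-limit behavior."""
    lsr_id: str
    fec_limit: int
    overload_capable: bool          # peer advertised the Nokia-overload capability
    fecs: set = field(default_factory=set)
    overloaded: bool = False
    overload_tlv_sent: bool = False

    def receive_binding(self, fec: str) -> bool:
        """Return True if the FEC-label binding is kept, False if it is deleted."""
        if fec in self.fecs:
            return True             # refresh of a binding that is already counted
        if len(self.fecs) >= self.fec_limit:
            self.overloaded = True
            if self.overload_capable:
                self.overload_tlv_sent = True   # ask the peer to stop sending bindings
            return False            # the binding is deleted in either case
        self.fecs.add(fec)
        return True


peer = LdpPeerState("10.1.1.1", fec_limit=2, overload_capable=True)
results = [peer.receive_binding(f) for f in ("10.0.0.1/32", "10.0.0.2/32", "10.0.0.3/32")]
print(results, peer.overloaded, peer.overload_tlv_sent)  # [True, True, False] True True
```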

Configure LDP peers

The following example configures settings for IPv4 and IPv6 LDP peers:

--{ * candidate shared default }--[ ]--

# info network-instance default protocols ldp peers

network-instance default {

protocols {

ldp {

peers {

session-keepalive-holdtime 240

session-keepalive-interval 90

peer 10.1.1.1 label-space-id 0 {

fec-limit 1024

}

peer 2001:db8::0a01:0101 label-space-id 0 {

fec-limit 1024

}

}

}

}

}

In this example, the session-keepalive-holdtime parameter specifies the number of seconds an LDP session can remain inactive (no LDP packets received from the peer) before the LDP session is terminated and the corresponding TCP session closed. The session-keepalive-interval parameter specifies the number of seconds between LDP keepalive messages. SR Linux sends LDP keepalive messages at this interval only when no other LDP packets are transmitted over the LDP session.
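The keepalive logic described above can be sketched in Python as follows, using the values from the example. The function names are hypothetical. Whether expiry happens exactly at the holdtime boundary is an assumption of this sketch.

```python
# Sketch of LDP session keepalive timing, using the values from the example.
KEEPALIVE_INTERVAL = 90    # session-keepalive-interval, seconds
KEEPALIVE_HOLDTIME = 240   # session-keepalive-holdtime, seconds


def keepalive_due(last_tx: float, now: float, interval: float = KEEPALIVE_INTERVAL) -> bool:
    """A keepalive is sent only when no other LDP packet was sent for a full interval."""
    return now - last_tx >= interval


def session_expired(last_rx: float, now: float, holdtime: float = KEEPALIVE_HOLDTIME) -> bool:
    """The session and its TCP connection close after holdtime seconds with no LDP packet."""
    return now - last_rx > holdtime


# Another LDP message sent at t=50 resets the keepalive clock, so none is due at t=100.
print(keepalive_due(last_tx=50, now=100))   # False
print(keepalive_due(last_tx=50, now=140))   # True
print(session_expired(last_rx=0, now=240), session_expired(last_rx=0, now=241))  # False True
```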

For an individual LDP peer, indicated by its LSR ID and label space ID, a FEC limit is specified. SR Linux deletes FEC-label bindings received from this peer beyond this limit.

Configuring a label block for LDP

To configure LDP, you must specify a reference to a predefined range of labels, called a label block. A label block configuration includes a start-label value and an end-label value. LDP uses labels in the range between the start-label and end-label in the label block.

A label block can be static or dynamic. See Static and dynamic label blocks for information about each type of label block and how to configure them. LDP requires a dynamic, non-shared label block.
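The following Python sketch makes the start-label and end-label semantics concrete by allocating labels for FECs from a dynamic block. The block name d1 comes from the example later in this section. The label values, the class, and the first-free allocation order are hypothetical. The range check reflects that MPLS labels are 20-bit values and that labels 0 through 15 are reserved (RFC 3032).

```python
from collections import deque


class DynamicLabelBlock:
    """Hypothetical allocator for labels in [start_label, end_label]."""

    def __init__(self, name: str, start_label: int, end_label: int):
        if not 16 <= start_label <= end_label <= 2**20 - 1:
            raise ValueError("expected 16 <= start-label <= end-label <= 1048575")
        self.name = name
        self._free = deque(range(start_label, end_label + 1))
        self._by_fec: dict = {}

    def allocate(self, fec: str) -> int:
        """Return the label bound to the FEC, allocating a free one if needed."""
        if fec in self._by_fec:
            return self._by_fec[fec]
        if not self._free:
            raise RuntimeError(f"label block {self.name} is exhausted")
        label = self._free.popleft()
        self._by_fec[fec] = label
        return label

    def release(self, fec: str) -> None:
        """Return the FEC's label to the end of the free list."""
        self._free.append(self._by_fec.pop(fec))


block = DynamicLabelBlock("d1", start_label=100000, end_label=100001)
print(block.allocate("10.1.1.1/32"), block.allocate("10.1.1.2/32"))  # 100000 100001
```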

Configure dynamic LDP label block

The following example configures LDP to use a dynamic label block named d1. The dynamic label block is configured with a start-label value and an end-label value. See Configuring label blocks for an example of a dynamic label block configuration.

--{ * candidate shared default }--[ ]--

# info network-instance default protocols ldp dynamic-label-block

network-instance default {

protocols {

ldp {

dynamic-label-block d1

}

}

}

Configuring longest-prefix match for IPv4 and IPv6 FEC resolution

By default, SR Linux supports /32 IPv4 and /128 IPv6 FEC resolution using IGP routes. You can optionally enable longest-prefix match for IPv4 and IPv6 FEC resolution. When this is enabled, IPv4 and IPv6 prefix FECs can be resolved by less-specific routes in the route table, as long as the prefix bits of the route match the prefix bits of the FEC. The IP route with the longest prefix match is the route that is used to resolve the FEC.
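The difference between the default exact-match behavior and longest-prefix matching can be sketched in Python with the standard ipaddress module. The route table and next-hop names are hypothetical, and the sketch ignores IGP preference and all other route attributes.

```python
from __future__ import annotations

import ipaddress


def resolve_fec(fec: str, routes: dict[str, str], longest_prefix: bool = False) -> str | None:
    """Return the next hop used to resolve a prefix FEC, or None if it is unresolved.

    Without longest-prefix, only a route with exactly the FEC's prefix is used.
    With longest-prefix, any route that covers the FEC qualifies and the most
    specific one wins.
    """
    fec_net = ipaddress.ip_network(fec)
    best = None
    for prefix, next_hop in routes.items():
        route = ipaddress.ip_network(prefix)
        if route.version != fec_net.version:
            continue  # IPv4 FECs resolve over IPv4 routes, IPv6 FECs over IPv6 routes
        covers = fec_net.subnet_of(route) if longest_prefix else route == fec_net
        if covers and (best is None or route.prefixlen > best[0].prefixlen):
            best = (route, next_hop)
    return best[1] if best else None


rib = {"10.0.0.0/8": "nh-a", "10.1.0.0/16": "nh-b", "2001:db8::/32": "nh-c"}
print(resolve_fec("10.1.1.2/32", rib))                       # None
print(resolve_fec("10.1.1.2/32", rib, longest_prefix=True))  # nh-b
```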

Configure longest-prefix match for IPv4 and IPv6 FEC resolution

The following example enables longest-prefix match for IPv4 and IPv6 FEC resolution.

--{ * candidate shared default }--[ ]--

# info network-instance default protocols ldp fec-resolution

network-instance default {

protocols {

ldp {

fec-resolution {

longest-prefix true

}

}

}

}

Configuring load balancing over equal-cost paths

ECMP support for LDP on SR Linux performs load balancing for LDP-based tunnels by using multiple outgoing next-hops for an IP prefix on ingress and transit LSRs. You can specify the maximum number of next-hops (up to 64) to be used for load balancing toward a specific FEC.
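The max-paths cap can be sketched in a few lines of Python. The lower bound of 1 and the rule for choosing which next hops to keep when there are more candidates than max-paths are assumptions. The function name is hypothetical.

```python
from __future__ import annotations

MAX_SUPPORTED_PATHS = 64  # upper bound for max-paths stated in this section


def install_next_hops(valid_next_hops: list[str], max_paths: int) -> list[str]:
    """Keep at most max_paths of the valid LDP next hops for a FEC.

    Which next hops survive when there are more candidates than max_paths is
    an assumption here (the first ones in the given order).
    """
    if not 1 <= max_paths <= MAX_SUPPORTED_PATHS:
        raise ValueError(f"max-paths must be between 1 and {MAX_SUPPORTED_PATHS}")
    return valid_next_hops[:max_paths]


candidates = [f"nh-{i}" for i in range(1, 5)]
print(install_next_hops(candidates, max_paths=2))  # ['nh-1', 'nh-2']
```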

Configure load balancing for LDP

The following example configures the maximum number of next-hops that SR Linux can use for load balancing toward an IPv4 or IPv6 FEC.

--{ * candidate shared default }--[ ]--

# info network-instance default protocols ldp multipath

network-instance default {

protocols {

ldp {

multipath {

max-paths 64

}

}

}

}

Configuring graceful restart helper capability

Graceful restart allows a router that has restarted its control plane but maintained its forwarding state to restore LDP with minimal disruption.

To do this, the router relies on LDP peers, which have also been configured for graceful restart, to maintain forwarding state while the router restarts. LDP peers configured in this way are known as graceful restart helpers.

You can configure SR Linux to operate as a graceful restart helper for LDP. When the graceful restart helper capability is enabled, SR Linux advertises this capability to its LDP peers by including the fault tolerant (FT) session TLV in the LDP initialization message, which helps a restarting LDP peer preserve its LDP forwarding state across the restart.

Configure graceful restart

The following example enables the graceful restart helper capability for LDP for both IPv4 and IPv6 peers.

--{ * candidate shared default }--[ ]--

# info network-instance default protocols ldp graceful-restart

network-instance default {

protocols {

ldp {

graceful-restart {

helper-enable true

max-reconnect-time 180

max-recovery-time 240

}

}

}

}

In this example, the max-reconnect-time parameter specifies the number of seconds SR Linux waits for the remote LDP peer to reconnect after an LDP communication failure. The max-recovery-time parameter specifies the number of seconds the SR Linux router preserves its MPLS forwarding state after receiving the LDP initialization message from the restarted LDP peer.
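The interaction between the two timers can be sketched in Python from the point of view of SR Linux acting as helper. The sketch uses only the locally configured values. How they combine with any timer values the restarting peer advertises is not described in this section and is left out. All names are hypothetical.

```python
from __future__ import annotations

MAX_RECONNECT_TIME = 180   # seconds to wait for the restarting peer to reconnect
MAX_RECOVERY_TIME = 240    # seconds to keep state after the peer's initialization message


def helper_keeps_state(failure_at: float, now: float, init_rx_at: float | None = None,
                       max_reconnect: float = MAX_RECONNECT_TIME,
                       max_recovery: float = MAX_RECOVERY_TIME) -> bool:
    """Return True while the helper still preserves the peer's MPLS forwarding state."""
    reconnect_deadline = failure_at + max_reconnect
    if init_rx_at is None or init_rx_at > reconnect_deadline:
        # The peer has not reconnected in time: state survives only until the deadline.
        return now <= reconnect_deadline
    # The peer reconnected in time: state is kept for max_recovery after its init message.
    return now <= init_rx_at + max_recovery
```

For example, after a failure at t=0 with the peer reconnecting at t=60, the helper keeps the stale state until t=300; if the peer never reconnects, the state is flushed after t=180.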

Configuring LDP-IGP synchronization

You can configure synchronization between LDP and IPv4 or IPv6 interior gateway protocols (IGPs). LDP-IGP synchronization is supported for IS-IS.

When LDP-IGP synchronization is configured, LDP notifies the IGP to advertise the maximum cost for a link in the following scenarios: when the LDP hello adjacency goes down, when the LDP session goes down, or when LDP is not configured on an interface.

The following apply when LDP-IGP synchronization is configured:

-

If a session goes down, the IGP increases the metric of the corresponding interface to max-metric.

-

When the LDP adjacency is reestablished, the IGP starts a hold-down timer (default 60 seconds). When this timer expires, the IGP restores the normal metric, if it has not been restored already.

-

When LDP informs the IGP that all label-FEC mappings have been received from the peer, the IGP can be configured to immediately restore the normal metric, even if time remains on the hold-down timer.

LDP-IGP synchronization does not take place on LAN interfaces unless the IGP has a point-to-point connection over the LAN, and does not take place on IGP passive interfaces.

Configure LDP-IGP synchronization

The following example configures LDP-IGP synchronization for an IS-IS instance.

--{ * candidate shared default }--[ ]--

# info network-instance default protocols isis instance i1 ldp-synchronization

network-instance default {

protocols {

isis {

instance i1 {

ldp-synchronization {

hold-down-timer 120

end-of-lib true

}

}

}

}

}

In this example, if the LDP adjacency goes down, the IGP increases the metric of the corresponding interface to max-metric. If the adjacency is subsequently reestablished, the IGP waits for the amount of time configured with the hold-down-timer parameter before restoring the normal metric.

When the end-of-lib parameter is set to true, it causes the IGP to restore the normal metric when all label-FEC mappings have been received from the peer, even if time remains in the hold-down timer.
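The metric behavior described in this section can be modeled as a small state machine. This Python sketch uses the hold-down-timer and end-of-lib values from the example. The class and method names are hypothetical. MAX_METRIC is a placeholder: 0xFFFFFE is the maximum link metric that RFC 5443 specifies for IS-IS, but the exact value SR Linux advertises is not stated in this section.

```python
MAX_METRIC = 0xFFFFFE  # placeholder maximum link metric (assumption, see lead-in)


class LdpIgpSync:
    """Hypothetical per-interface LDP-IGP synchronization state."""

    def __init__(self, normal_metric: int, hold_down_timer: int = 120, end_of_lib: bool = True):
        self.normal_metric = normal_metric
        self.hold_down_timer = hold_down_timer
        self.end_of_lib = end_of_lib
        self.metric = normal_metric
        self.hold_down_expires = None  # pending restore time, if any

    def ldp_down(self) -> None:
        """Adjacency or session lost: advertise the maximum metric."""
        self.metric = MAX_METRIC
        self.hold_down_expires = None

    def ldp_up(self, now: float) -> None:
        """Adjacency reestablished: start the hold-down timer."""
        self.hold_down_expires = now + self.hold_down_timer

    def end_of_lib_received(self) -> None:
        """All label-FEC mappings received: restore early if end-of-lib is enabled."""
        if self.end_of_lib and self.hold_down_expires is not None:
            self._restore()

    def tick(self, now: float) -> None:
        """Restore the normal metric once the hold-down timer expires."""
        if self.hold_down_expires is not None and now >= self.hold_down_expires:
            self._restore()

    def _restore(self) -> None:
        self.metric = self.normal_metric
        self.hold_down_expires = None
```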

Configuring static FEC (FEC originate)

Static FEC (also known as FEC originate) triggers the advertisement of a label for a prefix without requiring LDP to be enabled on a subinterface or a label to be received from a neighbor. Network security requirements may dictate that prefixes advertised using LDP must not be directly associated with a subinterface or system IP address. Static FEC allows the router to advertise these prefixes and their labels to peers as LDP FECs, providing reachability to the desired IP addresses or subnets without explicitly using a subinterface address. The router can advertise a FEC with a pop action.

A FEC can be added to the LDP IP prefix database with a specific label operation on the node. The only permitted operation is pop (the swap parameter must be set to false).

A route-table entry is required for a FEC to be advertised. Static FEC is supported for both IPv4 and IPv6 FECs.
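The advertisement conditions for a static FEC can be summarized in a short Python sketch. Whether the route-table entry must match the FEC prefix exactly is an assumption, and the function name is hypothetical.

```python
import ipaddress


def static_fec_label_action(prefix: str, route_table: list, swap: bool = False):
    """Return the label action for a static FEC, or None if it cannot be advertised."""
    if swap:
        raise ValueError("only the pop operation is permitted; set swap to false")
    fec = ipaddress.ip_network(prefix)
    # A matching route-table entry is required (an exact prefix match is assumed here).
    if any(ipaddress.ip_network(p) == fec for p in route_table):
        return "pop"
    return None


print(static_fec_label_action("10.10.10.2/32", ["10.10.10.2/32"]))  # pop
```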

Configure static FEC

--{ + candidate shared default }--[ ]--

# info network-instance default protocols ldp static-fec 10.10.10.2/32

network-instance default {

protocols {

ldp {

static-fec 10.10.10.2/32 {

swap false

}

}

}

}

Overriding the LSR ID on an interface

For security reasons, it can be useful to override the default LSR ID with a different LSR ID for link or targeted LDP sessions. The following LSR IDs can be overridden:

- IPv4 l-LDP: local subinterface IPv4 address

- IPv6 l-LDP: local subinterface IPv4 or IPv6 address, in one of the following combinations:

  - the subinterface IPv4 address as the 32-bit LSR-ID and the subinterface IPv6 address as the transport connection address

  - the subinterface IPv6 address as both the 128-bit LSR-ID and the transport connection address

- IPv4 T-LDP: IPv4 loopback or any IPv4 LDP subinterface

- IPv6 T-LDP: IPv4 loopback, IPv6 loopback, IPv4 LDP subinterface, or IPv6 LDP subinterface

Note that a loopback interface cannot be used with the 32-bit format.

To change the value of the LSR ID on an interface to the local interface IP address, use the override-lsr-id option. When override-lsr-id is enabled, the transport address for the LDP session and the source IP address of the Hello messages are updated to the interface IP address.

In IPv6 networks, either the IPv4 or IPv6 interface address can override the IPv6 LSR ID.
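For link LDP, the combinations listed in this section can be expressed as a small lookup in Python that returns the resulting LSR ID and transport address. The sketch covers only link (l-LDP) sessions, uses documentation addresses, and the function name is hypothetical.

```python
from __future__ import annotations

ALLOWED_SOURCES = {
    "ipv4": {"ipv4"},          # IPv4 link LDP: subinterface IPv4 address only
    "ipv6": {"ipv4", "ipv6"},  # IPv6 link LDP: subinterface IPv4 or IPv6 address
}


def link_override(family: str, source: str, sub_ipv4: str, sub_ipv6: str | None = None):
    """Return (lsr_id, transport_address) for a link LDP session with override-lsr-id."""
    if source not in ALLOWED_SOURCES[family]:
        raise ValueError(f"{family} link LDP cannot use a subinterface {source} address")
    if family == "ipv4":
        return sub_ipv4, sub_ipv4
    if source == "ipv4":
        # 32-bit LSR ID from IPv4, with the IPv6 address as the transport connection address.
        return sub_ipv4, sub_ipv6
    return sub_ipv6, sub_ipv6  # 128-bit LSR ID and transport address from IPv6


print(link_override("ipv6", "ipv4", "192.0.2.1", "2001:db8::1"))  # ('192.0.2.1', '2001:db8::1')
```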

To override the value of the LSR ID on an interface, use the following commands under the network-instance context:

-

IPv4:

protocols ldp discovery interfaces interface <name> ipv4 override-lsr-id local-subinterface ipv4

-

IPv6:

protocols ldp discovery interfaces interface <name> ipv6 override-lsr-id local-subinterface [ipv4 | ipv6]

Configure the LSR ID

--{ * candidate shared default }--[ ]--

# info network-instance default protocols ldp discovery interfaces interface ethernet-1/1.1

network-instance default {

protocols {

ldp {

discovery {

interfaces {

interface ethernet-1/1.1 {

ipv4 {

override-lsr-id {

local-subinterface ipv4

}

}

ipv6 {

override-lsr-id {

local-subinterface ipv6

}

}

}

}

}

}

}

}

LDP FEC import and export policies

SR Linux supports FEC prefix import and export policies for LDP, which provide filtering of both inbound and outbound LDP label bindings.

FEC prefix export policy

A FEC prefix export policy controls the set of LDP prefixes and associated LDP label bindings that a router advertises to its LDP peers. By default, the router advertises local label bindings for only the system address, but propagates all FECs that are received from neighbors. The export policy can also be configured to advertise local interface FECs.

The export policy can accept or reject label bindings for advertisement to the LDP peers.

When applied globally, LDP export policies apply to FECs advertised to all neighbors. To control the propagation of FECs to a specific LDP neighbor, you can apply an LDP export prefix policy to the specified peer.

FEC prefix import policy

A FEC prefix import policy controls the set of LDP prefixes and associated LDP label bindings received from other LDP peers that a router accepts. The router redistributes all accepted LDP prefixes that it receives from its neighbors to other LDP peers (unless rejected by the FEC prefix export policy).

The import policy can accept or reject label bindings received from LDP peers. By default, the router imports all FEC prefixes from its LDP peers.

When applied globally, LDP import policies apply to FECs received from all neighbors. To control the import of FECs from a specific LDP neighbor, you can apply an LDP import prefix policy to the specified peer.

Routing policies

The filtering of label bindings in LDP FEC import and export policies is based on prefix lists that are defined using routing policies.
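This filtering amounts to evaluating policy statements against prefix sets. The following Python sketch transcribes the prefix sets and policies from the global example in this section. It assumes that statements are evaluated in order, that the first matching statement decides, and that the default-action applies otherwise. The function names are hypothetical.

```python
import ipaddress

# Prefix sets and policies transcribed from the global example in this section.
PREFIX_SETS = {
    "export-prefix-set-test": ["10.1.1.2/32", "10.1.1.3/32"],
    "import-prefix-set-test": ["10.1.1.1/32", "10.1.1.2/32", "10.1.1.3/32"],
}
POLICIES = {
    # policy name: (ordered [(prefix-set, policy-result)], default-action result)
    "export-fec-test": ([("export-prefix-set-test", "reject")], "accept"),
    "import-fec-test": ([("import-prefix-set-test", "accept")], "accept"),
}


def matches_exact(fec: str, prefix_set: str) -> bool:
    """mask-length-range exact: the FEC must equal one of the listed prefixes."""
    fec_net = ipaddress.ip_network(fec)
    return any(ipaddress.ip_network(p) == fec_net for p in PREFIX_SETS[prefix_set])


def evaluate(policy: str, fec: str) -> str:
    """Return 'accept' or 'reject' for a FEC under the named policy."""
    statements, default_action = POLICIES[policy]
    for prefix_set, result in statements:
        if matches_exact(fec, prefix_set):
            return result  # the first matching statement decides
    return default_action


print(evaluate("export-fec-test", "10.1.1.2/32"))  # reject
```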

Configuring routing policies for LDP FEC import and export policies

To configure routing policies for LDP FEC import and export policies, use the routing-policy command.

Configure routing policy for global LDP FEC import and export

The following shows an example configuration of global LDP FEC import and export policies, defining match rules to accept an import prefix set, and reject an export prefix set.

--{ * candidate shared default }--[ ]--

# info routing-policy

routing-policy {

prefix-set export-prefix-set-test {

prefix 10.1.1.2/32 mask-length-range exact {

}

prefix 10.1.1.3/32 mask-length-range exact {

}

}

prefix-set import-prefix-set-test {

prefix 10.1.1.1/32 mask-length-range exact {

}

prefix 10.1.1.2/32 mask-length-range exact {

}

prefix 10.1.1.3/32 mask-length-range exact {

}

}

policy export-fec-test {

default-action {

policy-result accept

}

statement export-statement-test {

match {

prefix-set export-prefix-set-test

}

action {

policy-result reject

}

}

}

policy import-fec-test {

default-action {

policy-result accept

}

statement import-statement-test {

match {

prefix-set import-prefix-set-test

}

action {

policy-result accept

}

}

}

}

Configure routing policy for peer LDP FEC import and export

The following shows an example configuration of peer LDP FEC import and export policies, defining match rules to reject an import prefix set and an export prefix set for the specified peer.

--{ * candidate shared default }--[ ]--

# info routing-policy

routing-policy {

prefix-set peer-export-prefix-test {

prefix 10.1.1.4/32 mask-length-range exact {

}

}

prefix-set peer-import-prefix-test {

prefix 10.1.1.5/32 mask-length-range exact {

}

}

policy peer-export-test {

statement peer-export-statement-test {

match {

prefix-set peer-export-prefix-test

}

action {

policy-result reject

}

}

}

policy peer-import-test {

statement peer-import-statement-test {

match {

prefix-set peer-import-prefix-test

}

action {

policy-result reject

}

}

}

}

Applying global LDP FEC import and export policies

Use the ldp import-prefix-policy and ldp export-prefix-policy commands to apply global LDP FEC import and export policies.

The following example applies global export and import policies to LDP FECs.

Apply global LDP import and export policies

--{ +* candidate shared default }--[ ]--

# info network-instance default protocols ldp

network-instance default {

protocols {

ldp {

export-prefix-policy export-fec-test

import-prefix-policy import-fec-test

}

}

}

Applying per-peer LDP FEC import and export policies

Use the import-prefix-policy and export-prefix-policy commands under the ldp peers peer context to apply per-peer LDP FEC import and export policies.

The following example applies LDP FEC export and import policies to LDP peer 10.10.10.1.

Apply per-peer LDP import and export policies

--{ +* candidate shared default }--[ ]--

# info network-instance default protocols ldp peers peer 10.10.10.1 label-space-id 1

network-instance default {

protocols {

ldp {

peers {

peer 10.10.10.1 label-space-id 1 {

export-prefix-policy peer-export-test

import-prefix-policy peer-import-test

}

}

}

}

}

LDP ECMP

LDP ECMP performs load balancing for LDP-based LSPs by using multiple outgoing next hops for an IP prefix on ingress and transit LSRs.

An LSR that has multiple equal cost paths to a specific IP prefix can receive an LDP label mapping for this prefix from each of the downstream next-hop peers. Because the LDP implementation uses the liberal label retention mode, it retains all the labels for an IP prefix received from multiple next-hop peers.

Without LDP ECMP support, only one of these next-hop peers is selected and installed in the forwarding plane. The algorithm used to select the next-hop peer looks up the route information obtained from the RTM for this prefix and finds the first valid LDP next-hop peer (for example, the first neighbor in the RTM entry from which a label mapping was received). If the outgoing label to the installed next hop is no longer valid (for example, the session to the peer is lost or the peer withdraws the label), a new valid LDP next-hop peer is selected out of the existing next-hop peers, and LDP reprograms the forwarding plane to use the label sent by this peer.

With ECMP support, all valid LDP next-hop peers (peers that sent a label mapping for the IP prefix) are installed in the forwarding plane. On both ingress LERs and transit LSRs, an ingress label is mapped to the next hops that are in the RTM and from which a valid label mapping has been received. The forwarding plane then uses an internal hashing algorithm to determine how traffic is distributed among these next hops, assigning each flow to a specific next hop.
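The difference between single-next-hop selection and ECMP can be sketched in Python. SHA-256 over the flow tuple is only a deterministic stand-in for the forwarding plane's internal hash, which this section does not describe. The label-only and header-based hashing options are not modeled. Names and values are hypothetical.

```python
from __future__ import annotations

import hashlib


def valid_next_hops(rtm_next_hops: list[str], label_mappings: dict[str, int]) -> list[str]:
    """RTM next hops from which a label mapping for the prefix was received."""
    return [nh for nh in rtm_next_hops if nh in label_mappings]


def pick_next_hop(rtm_next_hops: list[str], label_mappings: dict[str, int],
                  flow: tuple, ecmp: bool = True) -> str | None:
    valid = valid_next_hops(rtm_next_hops, label_mappings)
    if not valid:
        return None
    if not ecmp:
        return valid[0]  # without ECMP: the first valid LDP next hop
    # With ECMP: hash the flow so that all packets of a flow use the same next hop.
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return valid[int.from_bytes(digest[:4], "big") % len(valid)]


rtm = ["nh-1", "nh-2", "nh-3"]
labels = {"nh-2": 1001, "nh-3": 1002}  # nh-1 sent no label mapping
print(pick_next_hop(rtm, labels, ("flow-a",), ecmp=False))  # nh-2
```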

SR Linux supports two options to perform ECMP packet hashing:

- LSR label-only hash (default)

- LSR label hash with Ethernet or IP headers (with L4 and TEID)

See MPLS LSR ECMP (7250 IXR platforms) for information about these options.

Supported platforms

LDP ECMP is supported on 7730 SXR platforms only.

Targeted LDP

The 7730 SXR platforms support targeted LDP (T-LDP) for both IPv4 and IPv6, using IS-IS or OSPF/OSPFv3 as the IGP. While LDP sessions are typically established between two directly connected peers, T-LDP allows LDP sessions to be established between non-adjacent peers using LDP extended discovery. The source and destination addresses of targeted Hello packets are the LSR-IDs of the remote LDP peers.

In SR Linux, T-LDP sessions use the system IP address as the LSR-ID by default. You can optionally configure the override-lsr-id parameter on the targeted session to change the value of the LSR-ID to either an LDP-enabled local subinterface or to a loopback subinterface. The transport address for the LDP session and the source IP address of the targeted Hello message are then updated to the new LSR-ID value.

With IPv4 T-LDP, the override-lsr-id parameter can update the LSR-ID to an IPv4 subinterface or loopback subinterface. With IPv6 T-LDP, the override-lsr-id parameter can update the LSR-ID to an IPv4 or IPv6 subinterface or loopback subinterface.

The 7730 SXR supports multiple ways of establishing a targeted Hello adjacency to a peer LSR:

-

Configuration of the peer with targeted session parameters either explicitly configured for the peer or inherited from the global LDP targeted session. The explicit peer settings override the top level parameters shared by all targeted peers. Some parameters only exist in the global context and their value is always inherited by all targeted peers regardless of which event triggered the targeted adjacency.

-

Configuration of a pseudowire (FEC 128) using a pw-tunnel to the peer LSR in a virtual private wire service (VPWS) or MAC-VRF network instance. The targeted session parameter values are taken from the global context.

Because the preceding triggering events can occur simultaneously or in any order, LDP implements a priority-handling mechanism to determine which event overrides the active targeted session parameters. The overriding trigger becomes the owner of the targeted adjacency to a specific peer and is displayed by the show network-instance default protocols ldp neighbor command.

The following table summarizes the triggering events and the associated priority.

| Triggering event | Automatic creation of targeted Hello adjacency | Active targeted adjacency parameter override priority |
|---|---|---|
| Manual configuration of peer parameters (creator=manual) | Yes | 1 |
| Pseudowire and pw-tunnel configuration in a network instance (creator=pw manager) | Yes | 3 |
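The ownership rule can be sketched in Python as a lookup over the currently active triggers. The sketch assumes that a lower number in the priority column means higher priority, which the table does not state explicitly. The function name is hypothetical.

```python
from __future__ import annotations

# Priorities from the table; a lower number is assumed to win.
TRIGGER_PRIORITY = {"manual": 1, "pw manager": 3}


def adjacency_owner(active_triggers: set[str]) -> str | None:
    """Return the trigger that owns the targeted Hello adjacency to a peer."""
    if not active_triggers:
        return None
    return min(active_triggers, key=lambda t: TRIGGER_PRIORITY[t])


print(adjacency_owner({"pw manager", "manual"}))  # manual
```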

Any parameter value change to an active targeted Hello adjacency caused by any of the triggering events in the preceding table is performed immediately by having LDP send a Hello message with the new parameters to the peer without waiting for the next scheduled time for the Hello message. This functionality allows the peer to adjust its local state machine immediately and maintains both the Hello adjacency and the LDP session in the up state. The only exceptions are the following:

-

The triggering event caused a change to the override-lsr-id parameter value. The Hello adjacency is brought down and causes the LDP session to be brought down if this is the last Hello adjacency associated with the session. A new Hello adjacency and LDP session is established to the peer using the new value of the local LSR ID.

-

The triggering event caused the targeted peer admin-state option to be set to disable. The Hello adjacency is brought down and causes the LDP session to be brought down if this is the last Hello adjacency associated with the session.
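The handling of parameter changes, including the two exceptions, can be sketched in Python as follows. Parameters are plain strings, "admin-state" stands for the admin-state exception in the list, and the returned action strings are descriptive placeholders, not SR Linux log messages.

```python
from __future__ import annotations


def handle_parameter_change(param: str, last_adjacency_for_session: bool) -> list[str]:
    """Return the actions LDP takes when a trigger changes a targeted adjacency parameter."""
    if param in ("override-lsr-id", "admin-state"):
        actions = ["bring down Hello adjacency"]
        if last_adjacency_for_session:
            actions.append("bring down LDP session")
        if param == "override-lsr-id":
            actions.append("re-establish adjacency and session with new local LSR ID")
        return actions
    # Any other change is signaled at once, keeping the adjacency and session up.
    return ["send Hello with new parameters immediately"]
```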

The value of any LDP parameter that is specific to the peer LDP/TCP session is inherited from the network-instance protocols ldp peers context.

7730 SXR platforms support the following T-LDP hello timers:

- hello holdtime

- hello interval

7730 SXR platforms also support BFD tracking of T-LDP peers for fast failure detection.

Configuring targeted LDP

To configure an IPv4 or IPv6 targeted LDP session, use the network-instance protocols ldp discovery targeted command.

Configure targeted LDP

The following example shows an IPv4 targeted LDP session with BFD and advertise FEC enabled, custom hello holdtime and interval values defined, and the LSR ID set to the IPv4 address for subinterface ethernet-1/10.1.

--{ * candidate shared default }--[ ]--

# info network-instance default protocols ldp discovery targeted

network-instance default {

protocols {

ldp {

discovery {

targeted {

ipv4 {

target 10.0.0.1 {

admin-state enable

enable-bfd true

advertise-fec true

hello-holdtime 30

hello-interval 10

override-lsr-id {

subinterface-ipv4 ethernet-1/10.1

}

}

}

}

}

}

}

}

Manual LDP RLFA

LDP remote LFA (RLFA) builds on the existing segment routing (SR) RLFA capability to compute repair paths to a remote LFA node (or PQ node). After a link failure, RLFA puts packets back onto the shortest path without looping them back to the node that forwarded them. See the SR Linux Segment Routing Guide for more information about RLFA computation.

Unlike automatic LDP RLFA, manual LDP RLFA requires the targeted sessions (between source node and PQ node) to be manually configured before a link failure. RLFA repair tunnels can be LDP tunnels only.

Supported platforms

Manual LDP RLFA is supported on 7730 SXR-series platforms only.

General principles of LDP RLFA

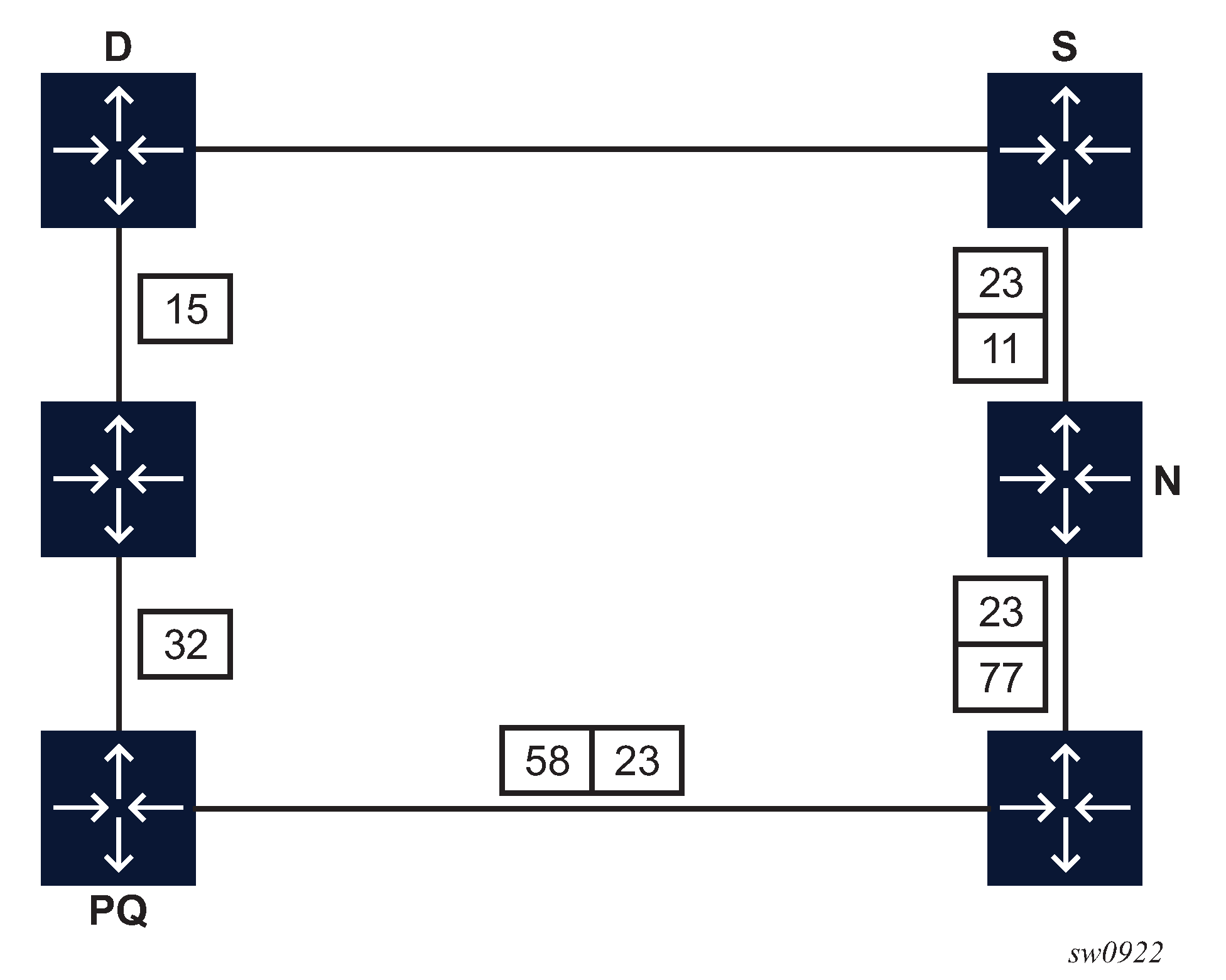

The following figure shows the general principles of LDP RLFA operation using an LDP-in-LDP repair tunnel.

In this figure, S is the source node and D is the destination node. The primary path is the direct link between nodes S and D, and the RLFA algorithm on node S determines the PQ node for destination D. In the event of a failure between nodes S and D, to prevent traffic from looping back to node S, the traffic must be sent to the PQ node. A targeted LDP (T-LDP) session is required between nodes PQ and S. Over that T-LDP session, the PQ node advertises label 23 for FEC D. All other labels are link LDP bindings, which allow traffic to reach the PQ node. On node S, LDP creates an NHLFE that has two labels, where label 23 is the inner label. Label 23 is tunneled to the PQ node, which then forwards traffic on the shortest path to node D.
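The two-label NHLFE that node S builds can be sketched in Python. Label 23 is the PQ node's binding for FEC D from the description above. The outer label 1001, the next-hop name N1, and the function name are hypothetical.

```python
from __future__ import annotations


def rlfa_repair_stack(fec: str, pq_node: str, repair_next_hop: str,
                      tldp_labels: dict, link_labels: dict) -> list[int] | None:
    """Return [outer, inner] labels for the LDP-in-LDP repair path, or None."""
    inner = tldp_labels.get((pq_node, fec))              # learned from the PQ node over T-LDP
    outer = link_labels.get((repair_next_hop, pq_node))  # link LDP label toward the PQ node
    if inner is None or outer is None:
        return None  # no T-LDP binding or no link LDP path: no repair tunnel
    return [outer, inner]  # top of stack first


tldp = {("PQ", "D"): 23}
link = {("N1", "PQ"): 1001}
print(rlfa_repair_stack("D", "PQ", "N1", tldp, link))  # [1001, 23]
```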

As prerequisites for RLFA to operate, the LFA algorithm must be run (loopfree-alternate remote-lfa), and the PQ node information must also be attached to LDP route entries (loopfree-alternate augment-route-table).

Feature considerations

Be aware of the following feature considerations:

- LDP RLFA applies to IPv4 FECs with IS-IS only.

- Manual LDP RLFA requires the targeted sessions (between source node and PQ node) to be manually configured before a link failure. The system does not automatically set up T-LDP sessions toward the PQ nodes that the RLFA algorithm has identified. These targeted sessions must be set up with router IDs that match the IDs the RLFA algorithm uses.

- LDP RLFA is designed to operate in LDP-only environments.

- The lsp-trace OAM command is not supported over the repair tunnels.

Configuring manual LDP RLFA

In IS-IS:

-

Enable remote LFA computation using the following command:

network-instance protocols isis instance loopfree-alternate remote-lfa admin-state enable

-

Enable attaching RLFA information to RTM entries using the following command:

network-instance protocols isis instance loopfree-alternate augment-route-table

This second command attaches RLFA-specific information to route entries that are necessary for LDP to program repair tunnels toward the PQ node using a specific neighbor.

- To enable manual LDP RLFA on the source node and PQ node, configure targeted LDP sessions using the network-instance protocols ldp discovery targeted target command.

- On the PQ node, enable FEC advertisement using the network-instance protocols ldp discovery targeted [ipv4 | ipv6] target advertise-fec command.

Configure manual LDP RLFA on a source node

The following example configures manual LDP RLFA on a source node, with no advertise-fec configuration.

--{ candidate shared default }--[ ]--

# info network-instance default protocols ldp discovery targeted

network-instance default {

protocols {

ldp {

discovery {

targeted {

ipv4 {

target 10.1.1.10 {

admin-state enable

hello-holdtime 60

hello-interval 30

}

}

}

}

}

}

}

Configure manual LDP RLFA on a PQ node

The following example configures manual LDP RLFA on a PQ node, with advertise-fec set to true.

--{ candidate shared default }--[ ]--

# info network-instance default protocols ldp discovery targeted

network-instance default {

protocols {

ldp {

discovery {

targeted {

ipv4 {

target 10.1.1.15 {

admin-state enable

advertise-fec true

hello-holdtime 60

hello-interval 30

}

}

}

}

}

}

}

Automatic LDP RLFA

Unlike manual LDP RLFA, automatic LDP RLFA establishes targeted LDP sessions without the need to specify a list of peers that require targeted sessions. On the source node, specifying which peer serves as the PQ node is not required. Instead, with automatic RLFA, the source node automatically identifies and establishes targeted LDP sessions with the PQ node. The PQ node then provides an LDP-in-LDP repair path to the destination following a failure. The automatic LDP RLFA feature minimizes overall configuration, providing a more dynamic and flexible environment.

Supported platforms

Automatic LDP RLFA is supported on 7730 SXR-series platforms only.

Simple scenario: one PQ node and one S node

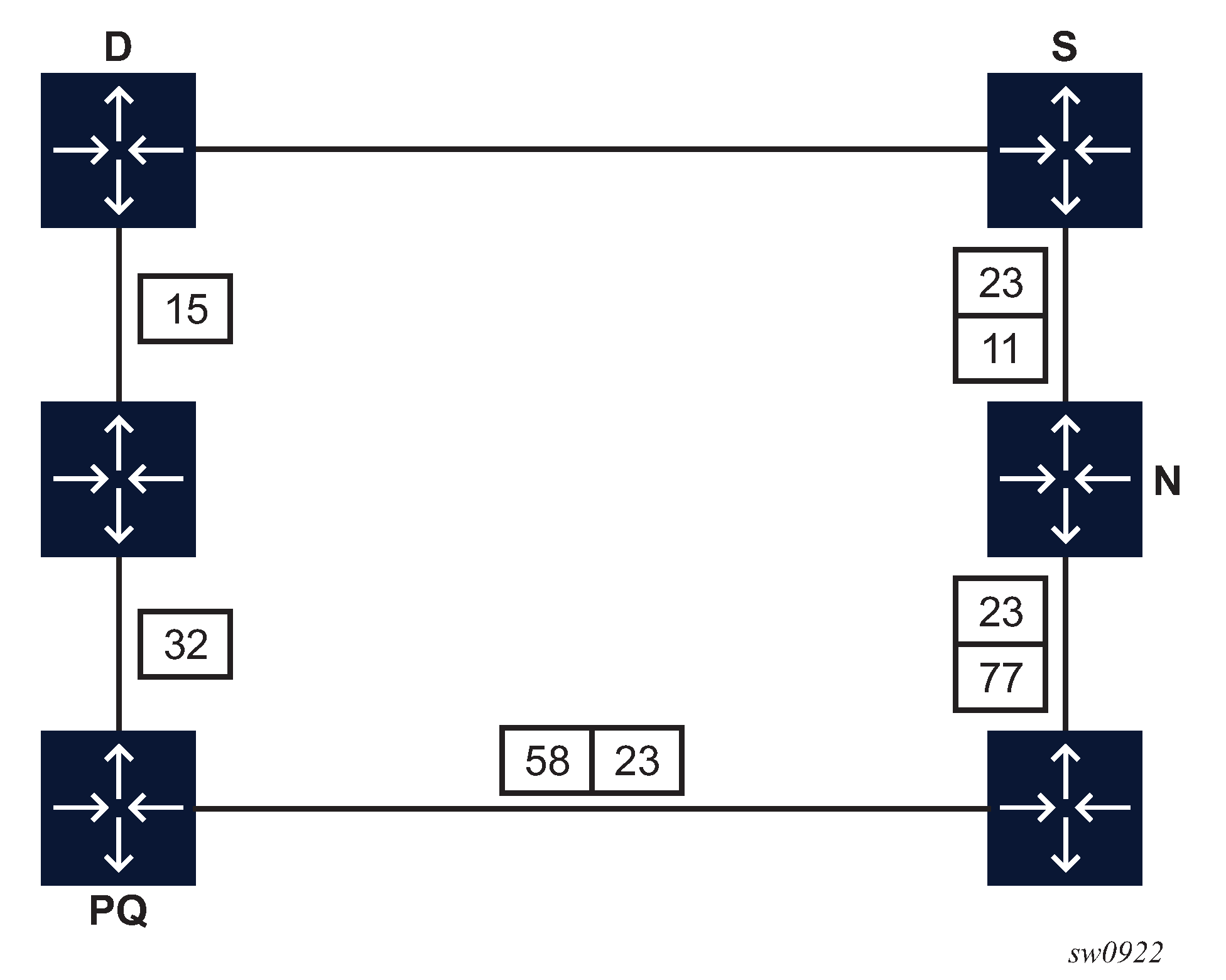

The basic principles of operation for the automatic LDP RLFA capability are the same as in manual LDP RLFA. The following figure again shows the general principles of LDP RLFA operation.

In this example, to address a failure on the shortest path between nodes S and D, node S needs a targeted LDP session toward the PQ node to learn the label-binding information on the PQ node for FEC D. To enable automatic RLFA, the same LFA algorithm used for manual RLFA must be run (loopfree-alternate remote-lfa), and the PQ node information must also be attached to LDP route entries (loopfree-alternate augment-route-table).

Because node S is the node that requires the T-LDP session, it is configured as the transmitting node (auto-tx) to initiate the T-LDP session. The PQ node is configured as the receiving node (auto-rx) of the targeted hello request for that session. And similar to manual LDP RLFA, the PQ node requires FEC advertisement to be enabled (auto-rx advertise-fec enable) so that the PQ node can send to the S node the label it has bound to FEC D (label 23).

With automatic RLFA enabled, node S uses the PQ node information attached to the route entries to automatically start sending targeted hellos to the PQ node. The PQ node accepts those hellos and the T-LDP session is established.

If the network topology changes and the PQ node of S for FEC D changes, S automatically brings down the T-LDP session to the previous PQ node and attempts to establish a new one towards the new PQ node.

Typical scenario: nodes are both PQ node and S node

In the previous simple example of one S node and one PQ node, auto-rx and FEC advertisement can remain disabled on the S node. However, in typical network deployments, each node is potentially the source node and also the PQ node for a given destination FEC. Therefore, all nodes can have both auto-tx and auto-rx enabled. These nodes can also have other configurations defined for specific LDP peers. These multiple configurations (either explicit or implicit) have implications for the operation of LDP RLFA.

The first implication relates to the fact that LDP operates using precedence levels. When a targeted session is established with a peer, LDP uses the session parameters with the highest precedence. The order of precedence is as follows (in descending order of priority):

-

peer

-

auto-tx

-

auto-rx

In the following discussion, node A is the source node and node B is the PQ node.

If source node A has both a per-peer configuration for node B and auto-tx enabled, node A chooses the parameters defined in the per-peer configuration rather than those defined under auto-tx to establish the session. A similar precedence also applies on PQ node B. However, when node B uses the per-peer configuration it has for node A rather than the auto-rx options (including advertise-fec enable), LDP RLFA cannot operate unless the per-peer configuration also enables advertise-fec.

Similar logic applies between the two automatic modes, because the auto-tx configuration takes precedence over auto-rx. In a typical scenario, both modes are enabled on a node that needs to act as both source and PQ node. However, if the node uses the auto-tx configuration for the T-LDP session (because it has the higher precedence), advertise-fec must also be enabled under auto-tx for LDP RLFA to function.
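The precedence rule and its consequence for advertise-fec can be sketched in Python, with each configuration context modeled as a dictionary. The dictionary layout and function names are hypothetical.

```python
from __future__ import annotations

PRECEDENCE = ("peer", "auto-tx", "auto-rx")  # highest to lowest


def effective_source(configs: dict[str, dict]) -> str | None:
    """Return the configuration context whose parameters LDP uses for the session."""
    for source in PRECEDENCE:
        if source in configs:
            return source
    return None


def pq_advertises_fec(configs: dict[str, dict]) -> bool:
    """RLFA works only if the winning context also enables advertise-fec."""
    source = effective_source(configs)
    return source is not None and bool(configs[source].get("advertise-fec", False))


# Node B has a per-peer entry for node A without advertise-fec, so RLFA cannot operate.
node_b = {"peer": {}, "auto-rx": {"advertise-fec": True}}
print(effective_source(node_b), pq_advertises_fec(node_b))  # peer False
```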

The second implication of multiple LDP configurations is that redundant T-LDP sessions may remain up after a topology change when they are no longer required. In this case, a tools command allows you to delete these redundant T-LDP sessions.

Configuration considerations

The following configuration considerations apply:

- automatic LDP RLFA works with IS-IS only

- automatic LDP RLFA only supports IPv4 FECs

- override-lsr-id configuration is not supported

- lsp-trace on the backup path is not supported

Configuring automatic LDP RLFA

In IS-IS:

- Enable remote LFA computation using the following command:

network-instance protocols isis instance loopfree-alternate remote-lfa admin-state enable

- Enable attaching RLFA information to RTM entries using the following command:

network-instance protocols isis instance loopfree-alternate augment-route-table

In LDP:

- On all potential source nodes, enable auto-tx.

- On all potential PQ nodes, enable auto-rx.

- On PQ-only nodes, enable the advertise-fec option under auto-rx.

- On PQ nodes that can also operate as source nodes, enable the advertise-fec option under both auto-rx and auto-tx.
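The mapping from node roles to LDP settings can be summarized in code. The following Python sketch is a hypothetical helper, not an SR Linux API. It assumes, as described earlier in this chapter, that source nodes initiate T-LDP sessions with auto-tx, PQ nodes accept them with auto-rx, and a source-only node can leave FEC advertisement disabled. The enabled and advertise_fec keys are illustrative stand-ins for the admin-state and advertise-fec leaves.

```python
from typing import Dict


def required_ldp_settings(is_source: bool, is_pq: bool) -> Dict[str, Dict[str, bool]]:
    """Return the auto-tx/auto-rx settings a node needs for automatic LDP RLFA.

    Hypothetical model: "enabled" stands in for admin-state enable and
    "advertise_fec" for advertise-fec true under ldp discovery targeted ipv4.
    """
    settings: Dict[str, Dict[str, bool]] = {}
    if is_source:
        # Source nodes initiate T-LDP sessions toward their PQ nodes.
        settings["auto-tx"] = {"enabled": True, "advertise_fec": False}
    if is_pq:
        # PQ nodes accept T-LDP sessions and must advertise FEC-label bindings.
        settings["auto-rx"] = {"enabled": True, "advertise_fec": True}
    if is_source and is_pq:
        # auto-tx outranks auto-rx, so advertise-fec is also needed under auto-tx.
        settings["auto-tx"]["advertise_fec"] = True
    return settings


for roles in ((True, False), (False, True), (True, True)):
    print(roles, required_ldp_settings(*roles))
```

For a node that is both a source and a PQ node, the helper enables advertise-fec in both contexts, which matches the configuration example that follows.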

Configure automatic LDP RLFA on a node that is both a source node and a PQ node

The following example configures automatic LDP RLFA on a node that is both a source node and a PQ node, with advertise-fec set to true under both auto-rx and auto-tx.

--{ candidate shared default }--[ ]--

# info network-instance default protocols ldp discovery targeted ipv4

network-instance default {
    protocols {
        ldp {
            discovery {
                targeted {
                    ipv4 {
                        auto-rx {
                            admin-state enable
                            advertise-fec true
                        }
                        auto-tx {
                            admin-state enable
                            advertise-fec true
                        }
                    }
                }
            }
        }
    }
}

Displaying source context for automatic T-LDP session parameters

It is not possible to configure LDP options specifically for automatic T-LDP sessions except for advertise-fec. The session inherits the settings defined for the IPv4 and IPv6 families (under ldp discovery targeted) or the system default values. This applies to the following configurations:

- network-instance default protocols ldp discovery targeted hello-holdtime

- network-instance default protocols ldp discovery targeted hello-interval

To identify the context from which the active T-LDP session configuration options are taken, use the info from state command.
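The inheritance behavior described above can be sketched as a small resolver. This Python example is hypothetical. The function and argument names are invented for illustration. The system default values are passed in by the caller because this section does not list them, and the placeholder numbers in the usage lines are not SR Linux's actual defaults.

```python
from typing import Dict

# Options an automatic T-LDP session inherits instead of configuring directly.
INHERITED_OPTIONS = ("hello-holdtime", "hello-interval")


def effective_session_options(
    oper_type: str,
    targeted: Dict[str, int],
    auto_contexts: Dict[str, Dict[str, bool]],
    system_defaults: Dict[str, int],
) -> Dict[str, object]:
    """Resolve the options an automatic T-LDP session runs with (hypothetical model).

    oper_type: "auto-tx" or "auto-rx", as reported by info from state.
    targeted: values set under ldp discovery targeted (may be partial).
    auto_contexts: advertise-fec value configured under auto-tx and auto-rx.
    system_defaults: default values, supplied by the caller.
    """
    options: Dict[str, object] = {}
    for name in INHERITED_OPTIONS:
        # Use the ldp discovery targeted value if set, else the system default.
        options[name] = targeted.get(name, system_defaults[name])
    # advertise-fec is the only option taken from the auto-tx/auto-rx context itself.
    options["advertise-fec"] = auto_contexts[oper_type]["advertise-fec"]
    return options


placeholder_defaults = {"hello-holdtime": 45, "hello-interval": 15}  # hypothetical values
print(effective_session_options(
    "auto-tx",
    targeted={"hello-interval": 10},
    auto_contexts={"auto-tx": {"advertise-fec": True}, "auto-rx": {"advertise-fec": True}},
    system_defaults=placeholder_defaults,
))
```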

Display source context for automatic T-LDP session parameters

--{ candidate shared default }--[ ]--

# info from state network-instance default protocols ldp discovery targeted ipv4 target 10.1.1.6 oper-type

network-instance default {
    protocols {
        ldp {
            discovery {
                targeted {
                    ipv4 {
                        target 10.1.1.6 {
                            oper-type auto-tx
                        }
                    }
                }
            }
        }
    }
}

Deleting redundant T-LDP sessions

To delete any redundant T-LDP sessions, use the tools network-instance default protocols ldp targeted-auto-rx hold-time command.

You must run the command during a specific time window on all nodes that have auto-rx enabled. The hold-time value must be greater than the hello-timer value plus the time required to run the tools command on all applicable nodes. Ensure that the configured value is long enough to meet these requirements. A system check verifies that a non-zero value is configured, but no other checks are enforced.
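The hold-time requirement is simple arithmetic. The following Python sketch separates the check that SR Linux enforces from the check you must verify yourself. The function names are hypothetical, and all values are assumed to be in seconds.

```python
def passes_system_check(hold_time: int) -> bool:
    """Mirror of the only check SR Linux enforces: hold-time must be non-zero."""
    return hold_time != 0


def hold_time_is_long_enough(hold_time: int, hello_timer: int, rollout_time: int) -> bool:
    """Check from the text: hold-time must exceed hello-timer plus rollout time.

    rollout_time is the time needed to run the tools command on every node
    with auto-rx enabled. The comparison is strict ("greater than").
    """
    return hold_time > hello_timer + rollout_time


# Hypothetical values: 45 s hello timer, 60 s to run the command on all nodes.
print(passes_system_check(120), hold_time_is_long_enough(120, 45, 60))
```

A value such as 105 passes the system check but fails the second check, because 105 is not greater than 45 + 60.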

Delete redundant T-LDP sessions

The following example runs the targeted-auto-rx hold-time tools command to delete any redundant T-LDP sessions.

--{ candidate shared default }--[ ]--

# tools network-instance default protocols ldp targeted-auto-rx hold-time 120

Display remaining timeout value

--{ candidate shared default }--[ ]--

# info from state network-instance default protocols ldp discovery targeted ipv4 target 10.10.10.15 hello-adjacencies adjacency 10.10.10.10 label-space-id 10 hello-holdtime remaining

LSP ping and trace for LDP tunnels

SR Linux provides the following OAM commands for LDP tunnels:

- tools oam lsp-ping ldp fec <prefix>

- tools oam lsp-trace ldp fec <prefix>

Supported parameters include destination-ip, source-ip, timeout, ecmp-next-hop-select, and traffic-class. Only the fec parameter is mandatory.

Results from the lsp-ping and lsp-trace operations are displayed using info from state commands.

For more information, see the SR Linux OAM and Diagnostics Guide.