Segment routing with MPLS data plane (SR-MPLS)

This section describes:

- Segment Routing (SR) in shortest path forwarding

- SR with Traffic Engineering (SR-TE)

- SR policies

Segment routing in shortest path forwarding

Segment routing adds to the IS-IS and OSPF routing protocols the ability to perform shortest path routing and source routing using the concept of an abstract segment. A segment can represent a local prefix of a node, a specific adjacency of the node (interface or next hop), a service context, or a specific explicit path over the network. For each segment, the IGP advertises a Segment ID (SID).

When segment routing is used together with MPLS data plane, the SID is a standard MPLS label. A router forwarding a packet using segment routing pushes one or more MPLS labels. This is the scope of the features described in this section.

Segment routing using MPLS labels can be used in both shortest path routing applications and in traffic engineering applications. This section focuses on the shortest path forwarding applications.

When a received IPv4 or IPv6 prefix SID is resolved, the Segment Routing module programs the Incoming Label Map (ILM) with a swap operation and also the LTN with a push operation both pointing to the primary or LFA NHLFE. An IPv4 or IPv6 SR tunnel to the prefix destination is also added to the TTM and is available for use by shortcut applications and Layer 2 and Layer 3 services.

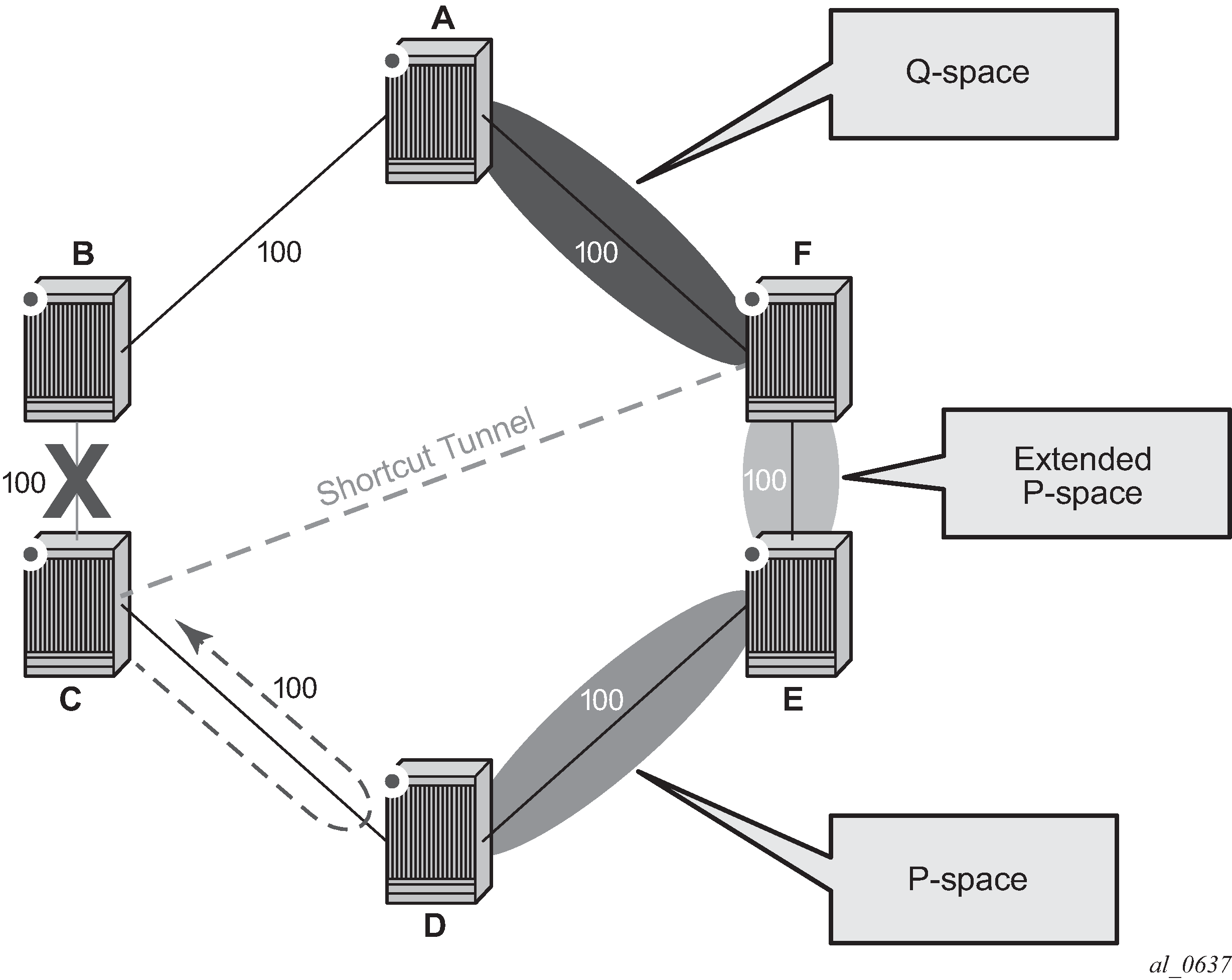

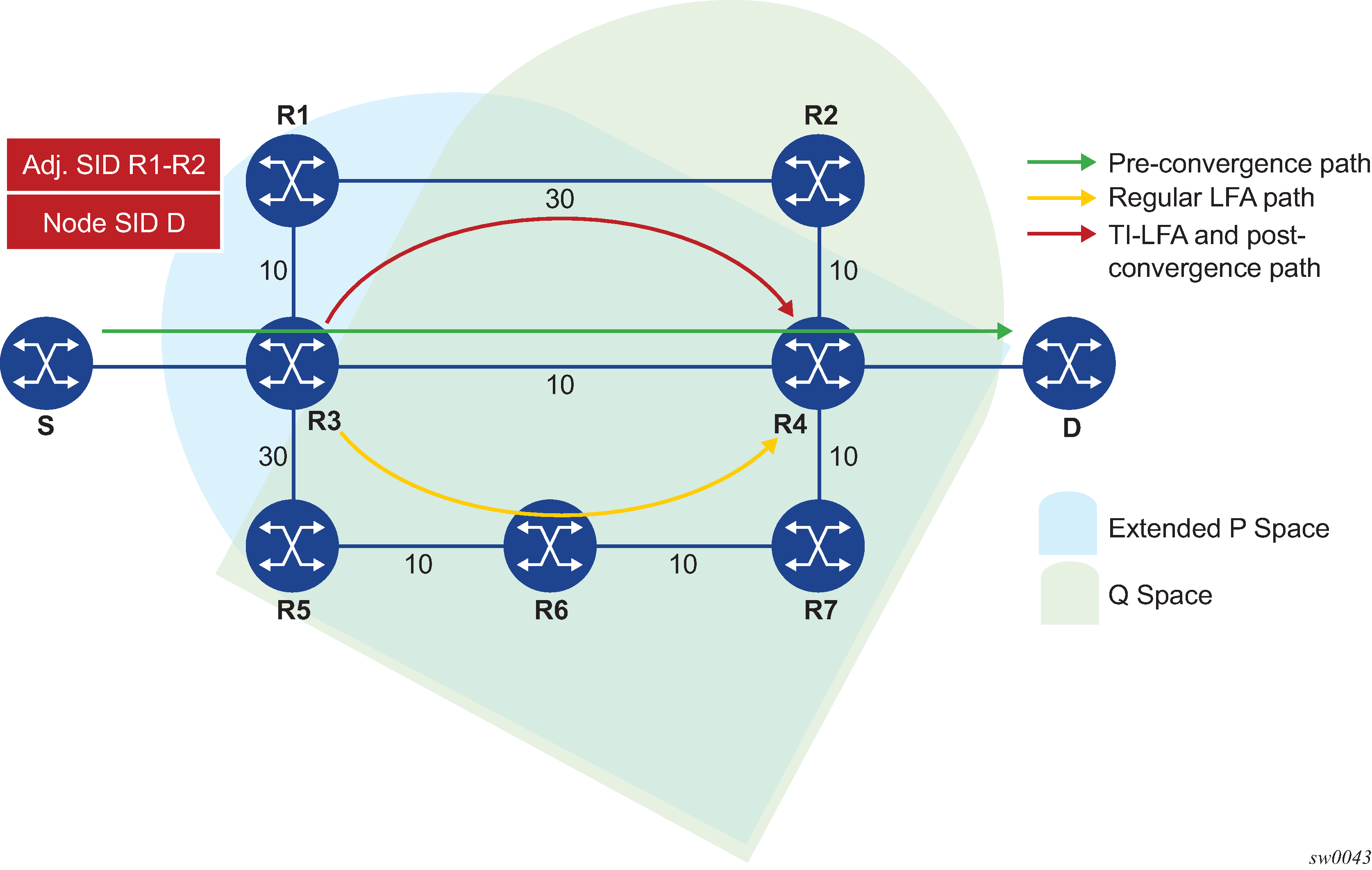

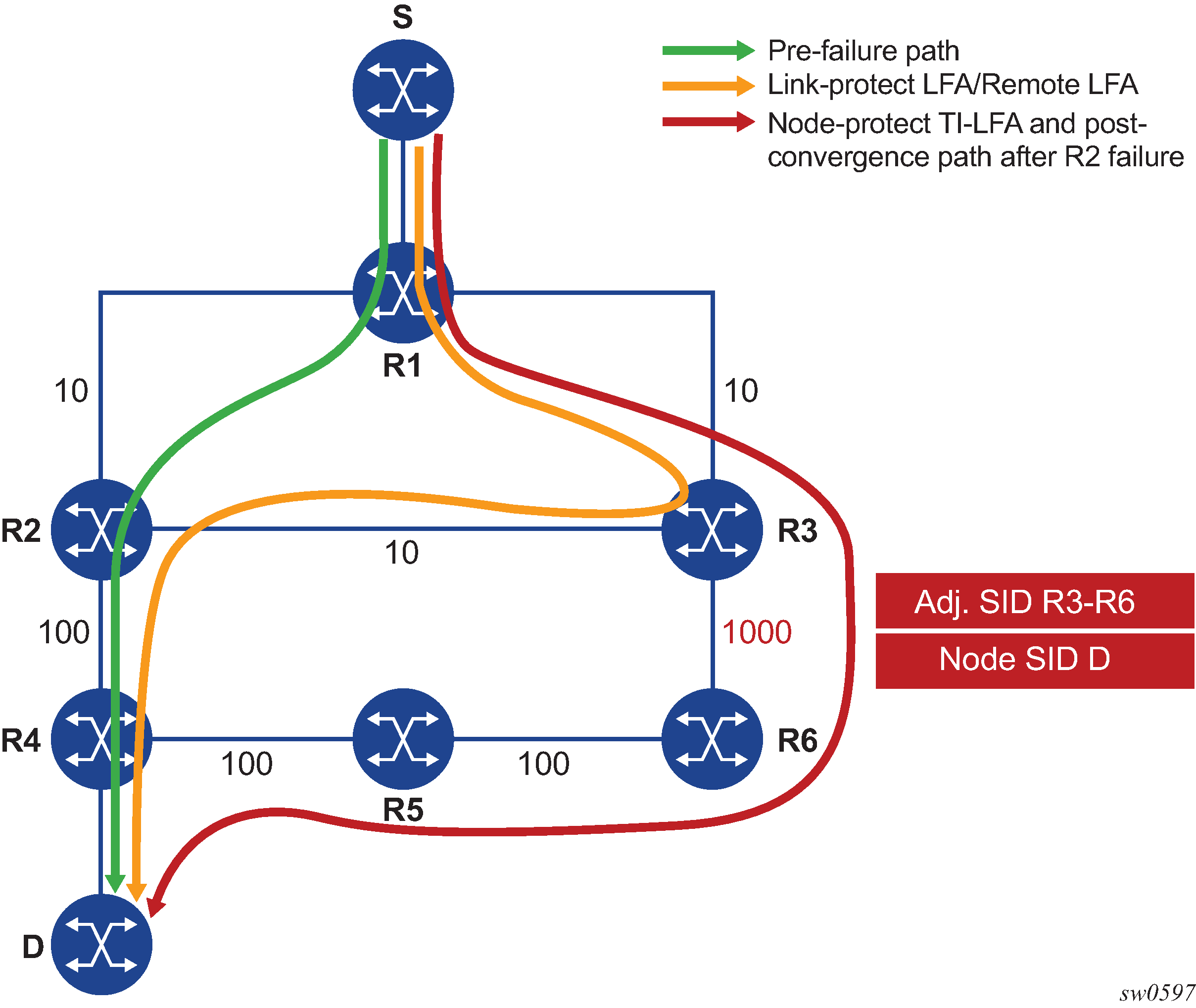

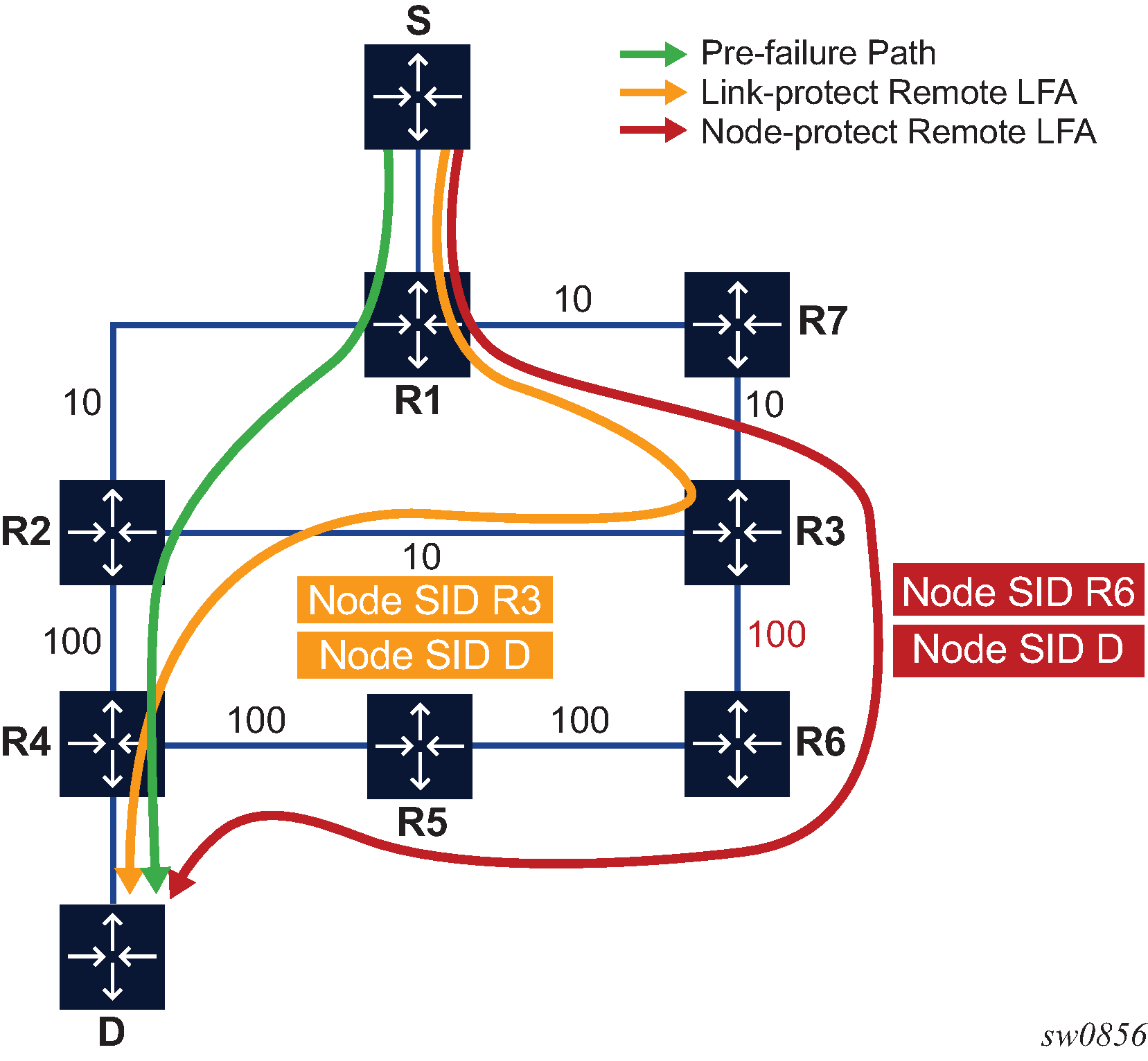

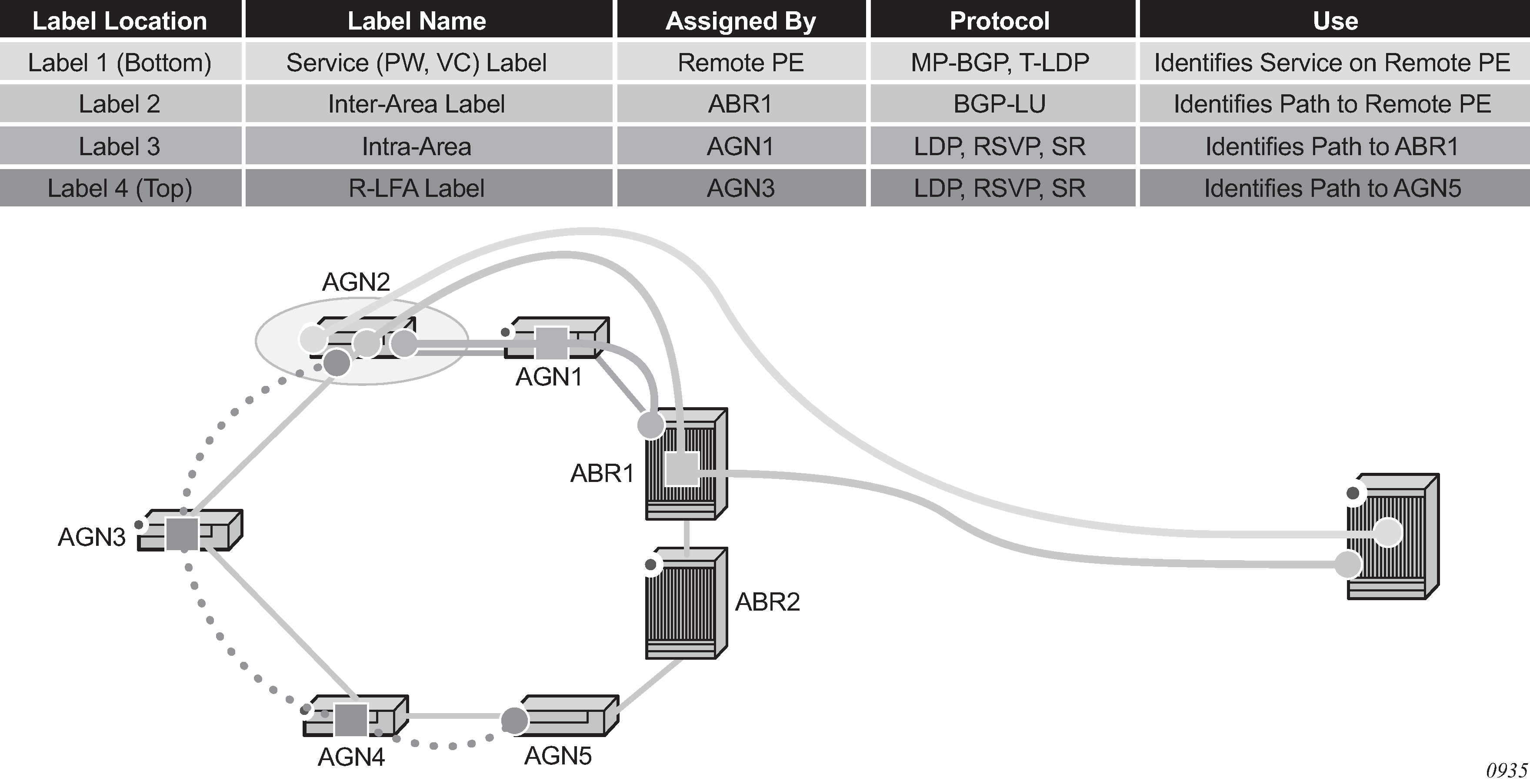

Segment routing introduces the remote LFA feature which expands the coverage of the LFA by computing and automatically programming SR tunnels which are used as backup next-hops. The SR shortcut tunnels terminate on a remote alternate node which provides loop-free forwarding for packets of the resolved prefixes. When the loopfree-alternates option is enabled in an IS-IS or OSPF instance, SR tunnels are protected with an LFA backup next hop. If the prefix of a specific SR tunnel is not protected by the base LFA, the remote LFA automatically computes a backup next hop using an SR tunnel if the remote-lfa option is also enabled in the IGP instance.

Configuring segment routing in shortest path

The user enables segment routing in an IGP routing instance using the following sequence of commands.

First, the user configures the global label block, known as Segment Routing Global Block (SRGB), which is reserved for assigning labels to segment routing prefix SIDs originated by this router. This range is derived from the system dynamic label range and is not instantiated by default:

config>router>mpls-labels>sr-labels start start-value end end-value

Next, the user enables the context to configure segment routing parameters within an IGP instance:

config>router>isis>segment-routing

config>router>ospf>segment-routing

The key parameter is the configuration of the prefix SID index range and the offset label value that this IGP instance uses. Because each prefix SID represents a network global IP address, the SID index for a prefix must be unique network-wide. Thus, all routers in the network are expected to configure and advertise the same prefix SID index range for an IGP instance. However, the label value used by each router to represent this prefix, that is, the label programmed in the ILM, can be local to that router by the use of an offset label, referred to as a start label:

Local Label (Prefix SID) = start-label + {SID index}

The label operation in the network is similar to LDP when operating in the independent label distribution mode (RFC 5036) with the difference that the label value used to forward a packet to each downstream router is computed by the upstream router based on advertised prefix SID index using the above formula.

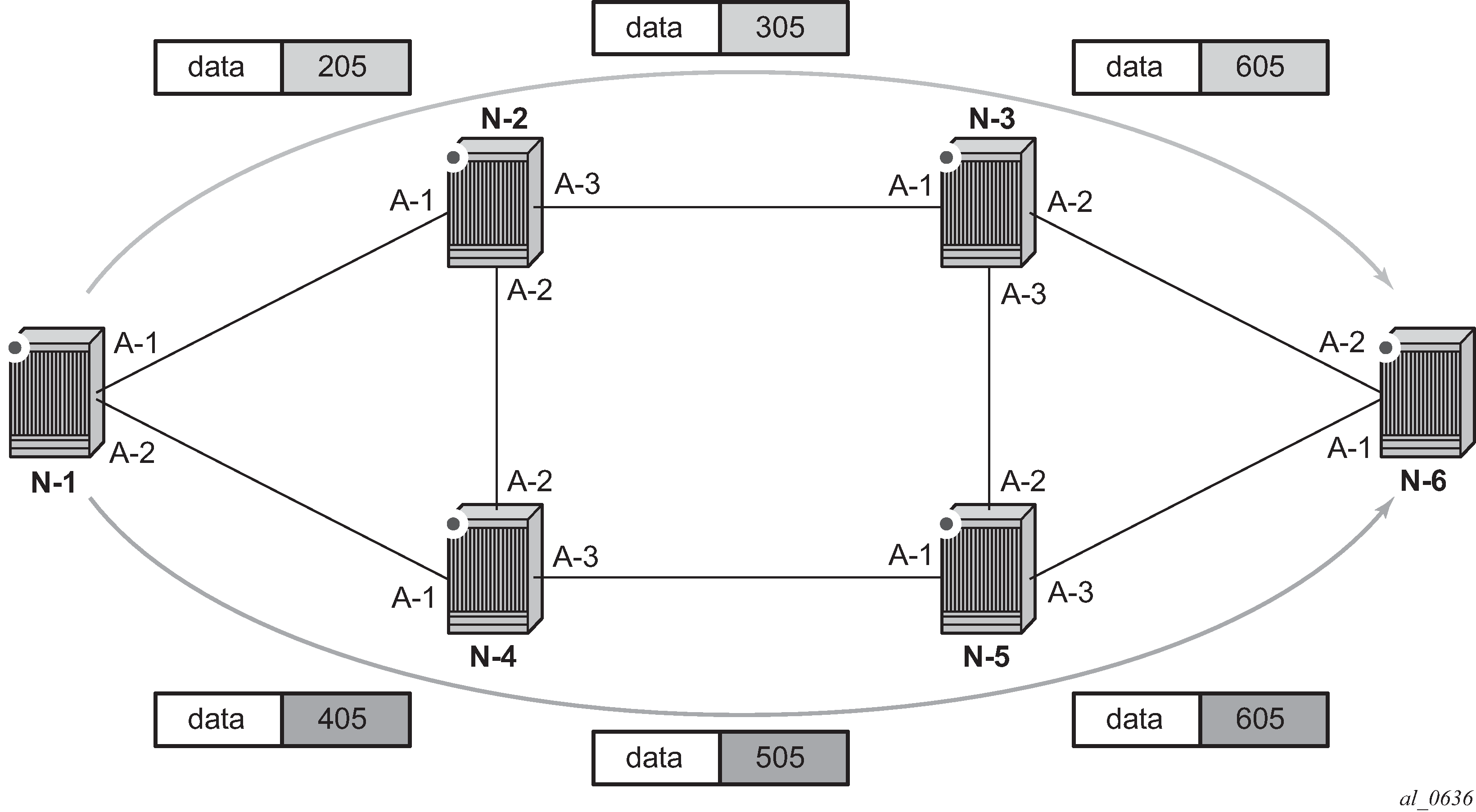

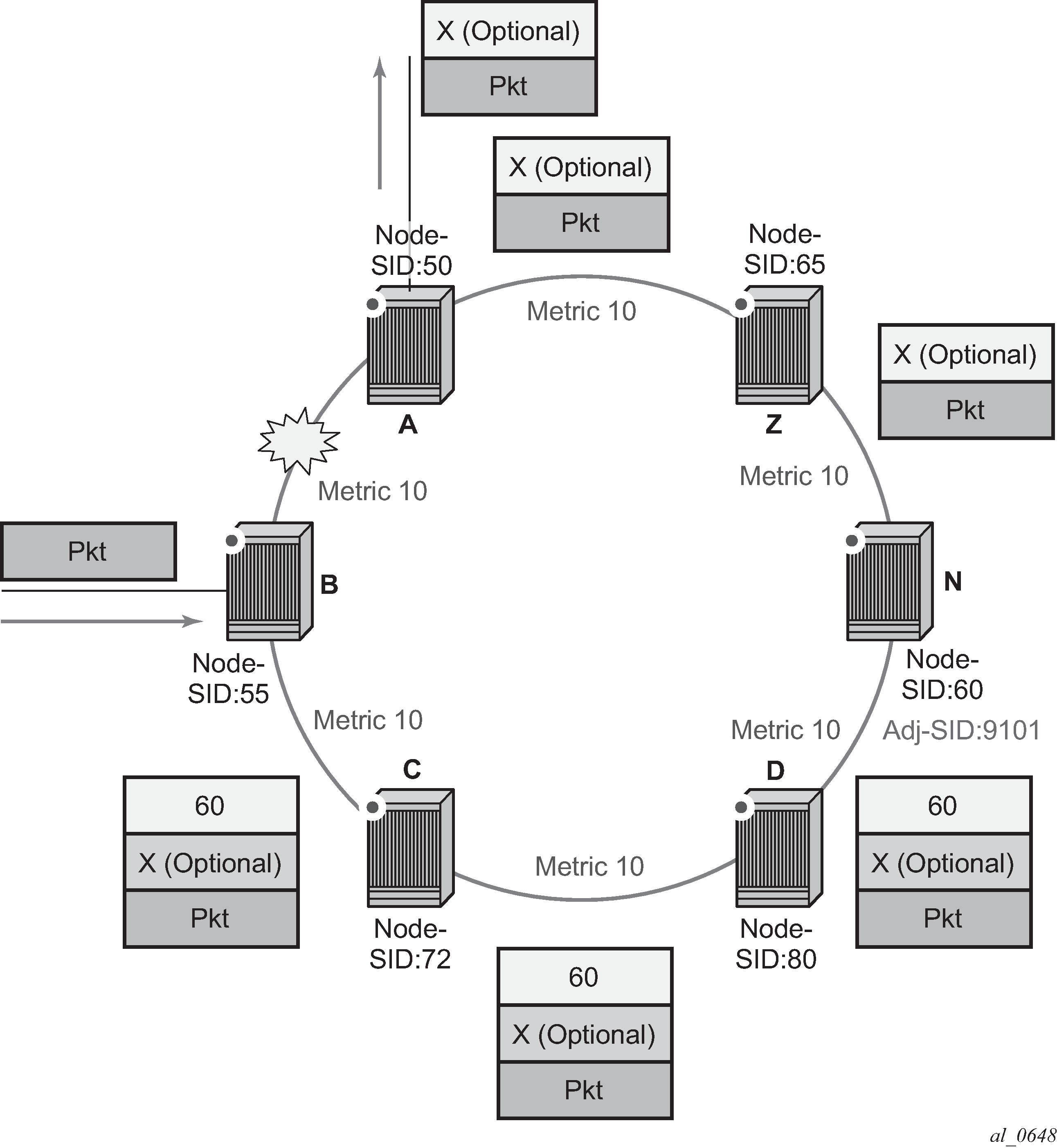

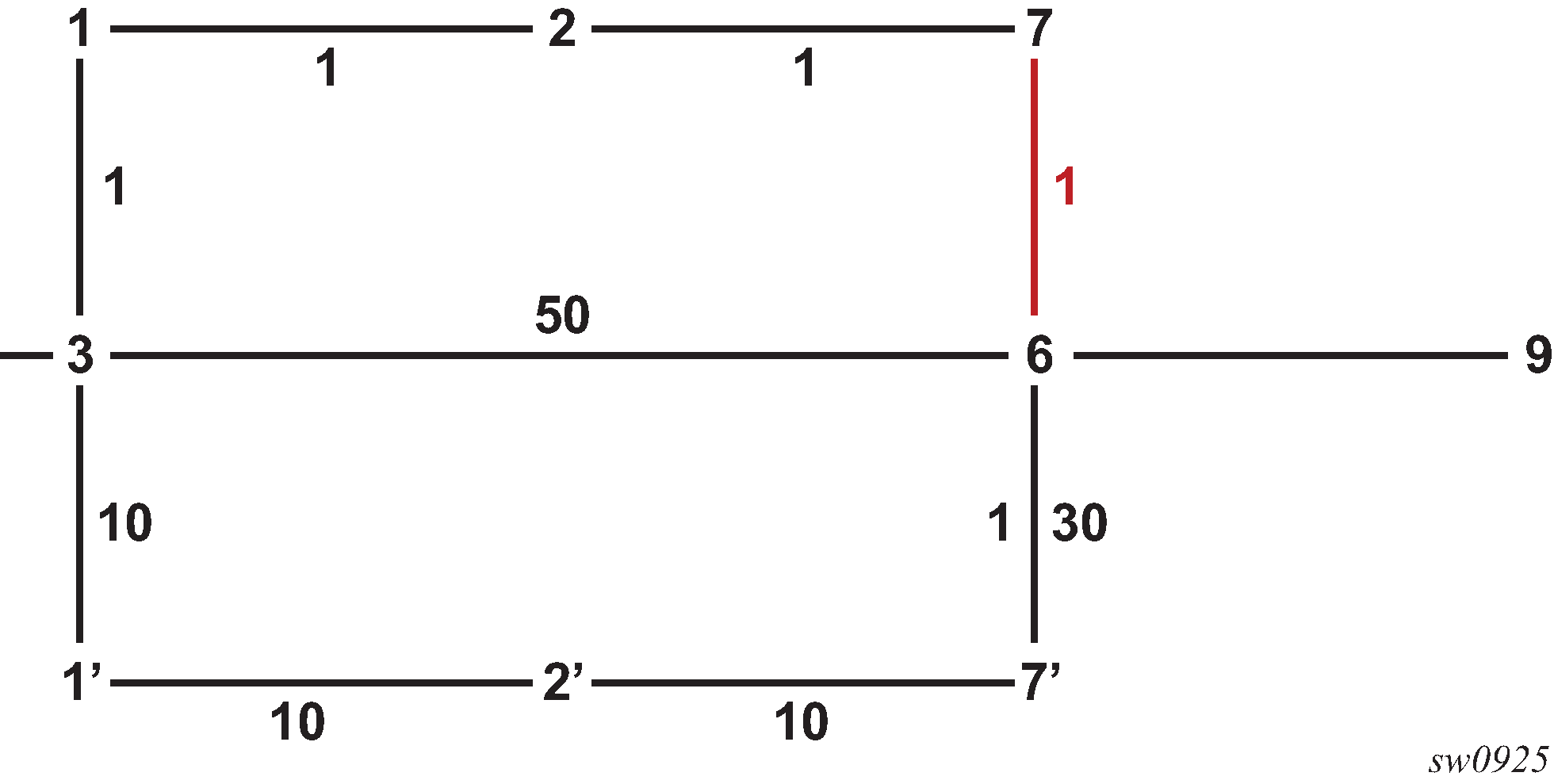

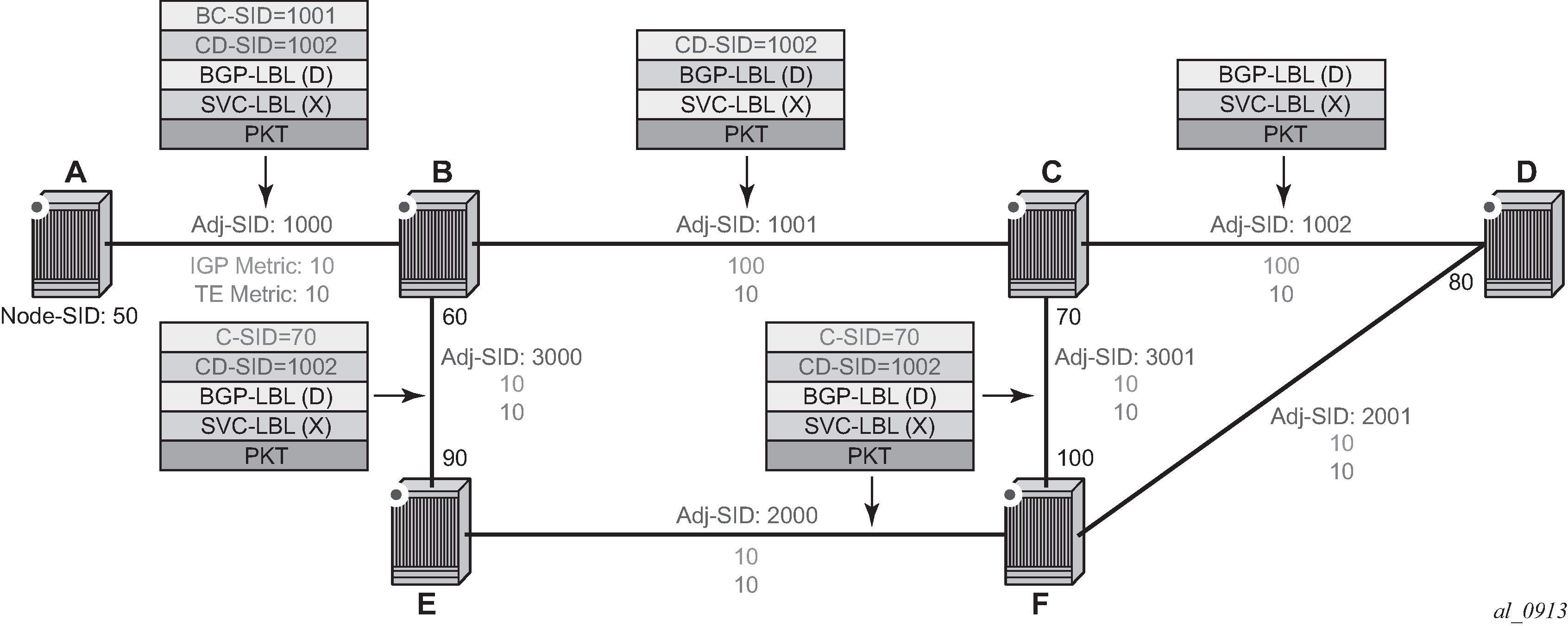

Packet label encapsulation using segment routing tunnel shows an example of a router advertising its loopback address and the resulting packet label encapsulation throughout the network.

Router N-6 advertises loopback 10.10.10.1/32 with a prefix index of 5. Routers N-1 to N-6 are configured with the same SID index range of [1,100] and with start labels of 100 to 600, respectively. The following are the actual label values programmed by each router for the prefix of PE2.

N-6 has a start label value of 600 and programs an ILM with label 605.

N-3 has a start label of 300 and swaps incoming label 305 to label 605.

N-2 has a start label of 200 and swaps incoming label 205 to label 305.

Similar operations are performed by N-4 and N-5 for the alternate path.

N-1 has an SR tunnel to N-6 with two ECMP paths. It pushes label 205 when forwarding an IP or service packet to N-6 via downstream next-hop N-2 and pushes label 405 when forwarding via downstream next-hop N-4.
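To make the label arithmetic concrete, the following Python sketch reproduces the labels in this example from the formula Local Label = start-label + SID index. The router names, start labels, and index come from the example; the dictionary and function names are hypothetical, and the sketch assumes each upstream router already knows the start label of every downstream neighbor.

```python
# Minimal sketch of the prefix SID label arithmetic in the example above.
# Names (START_LABEL, local_label, outgoing_label) are hypothetical.

# N-1..N-6 share SID index range [1, 100]; start labels are 100..600.
START_LABEL = {f"N-{i}": 100 * i for i in range(1, 7)}
SID_INDEX_RANGE = range(1, 101)  # indexes 1..100 inclusive


def local_label(router: str, sid_index: int) -> int:
    """Label a router programs in its ILM: start-label + SID index."""
    if sid_index not in SID_INDEX_RANGE:
        raise ValueError(f"SID index {sid_index} is outside the configured range")
    return START_LABEL[router] + sid_index


def outgoing_label(downstream: str, sid_index: int) -> int:
    """Label an upstream router uses toward a downstream router.

    It is computed from the downstream router's start label, so it equals
    the label in the downstream router's ILM.
    """
    return local_label(downstream, sid_index)


if __name__ == "__main__":
    PE2_INDEX = 5  # N-6 advertises 10.10.10.1/32 with prefix index 5
    print("N-6 ILM label:", local_label("N-6", PE2_INDEX))
    print("N-3 swaps", local_label("N-3", PE2_INDEX), "to", outgoing_label("N-6", PE2_INDEX))
    print("N-2 swaps", local_label("N-2", PE2_INDEX), "to", outgoing_label("N-3", PE2_INDEX))
    for nh in ("N-2", "N-4"):  # N-1 has two ECMP next hops toward N-6
        print(f"N-1 pushes {outgoing_label(nh, PE2_INDEX)} via {nh}")
```

Running it prints the same values as the walk-through: 605 at N-6, 305 swapped to 605 at N-3, 205 swapped to 305 at N-2, and 205 or 405 pushed by N-1.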

The CLI commands for configuring the prefix SID index range and offset label value for an IGP instance are as follows:

config>router>isis>segment-routing>prefix-sid-range {global | start-label label-value max-index index-value}

config>router>ospf>segment-routing>prefix-sid-range {global | start-label label-value max-index index-value}

There are two mutually exclusive modes of operation for the prefix SID range on the router. In the global mode of operation, the user configures the global value, and this IGP instance takes the lowest label value in the SRGB as the start label and uses a prefix SID index range size equal to the range size of the SRGB. After one IGP instance has selected the global option for the prefix SID range, all IGP instances on the system are restricted to doing the same.

To change the SRGB, the user must shut down the segment routing context and delete the prefix-sid-range command in all IGP instances. After the SRGB is changed, the user must re-enter the prefix-sid-range command. The SRGB range change fails if an already allocated SID index or label goes out of range.

In the per-instance mode of operation, the user partitions the SRGB into non-overlapping subranges among the IGP instances. The user configures a subset of the SRGB by specifying the start label value and the prefix SID index range size. All resulting net label values (start-label + index) must be within the SRGB, or the configuration fails. Furthermore, the router checks the resulting net label value ranges of all IGP instances for overlaps and strictly enforces that these ranges do not overlap.
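The two per-instance checks (net labels inside the SRGB, no overlap between instances) can be sketched as follows. This is an illustration only: the function name and arguments are hypothetical, and it assumes SID index 0 is the first usable index, so an instance with start label S and max index M uses labels S through S + M.

```python
# Minimal sketch of the per-instance prefix-sid-range checks described above.
# Function and argument names are hypothetical; index 0 is assumed to be the
# first usable SID index, so an instance uses labels start..start+max_index.
from typing import Dict, Tuple


def validate_per_instance_ranges(
    srgb: Tuple[int, int], instances: Dict[str, Tuple[int, int]]
) -> None:
    """Raise ValueError if a range leaves the SRGB or overlaps another range.

    srgb: (lowest label, highest label) of the SRGB, inclusive.
    instances: IGP instance name -> (start_label, max_index).
    """
    srgb_low, srgb_high = srgb
    spans = []
    for name, (start_label, max_index) in instances.items():
        first, last = start_label, start_label + max_index
        if first < srgb_low or last > srgb_high:
            raise ValueError(
                f"{name}: labels {first}-{last} fall outside SRGB {srgb_low}-{srgb_high}"
            )
        spans.append((first, last, name))
    # After sorting by first label, any overlap implies an overlap
    # between two adjacent entries, so checking neighbors is sufficient.
    spans.sort()
    for (f1, l1, n1), (f2, l2, n2) in zip(spans, spans[1:]):
        if f2 <= l1:
            raise ValueError(f"{n1} ({f1}-{l1}) overlaps {n2} ({f2}-{l2})")


if __name__ == "__main__":
    # Hypothetical values: a 2000-label SRGB split between two IGP instances.
    validate_per_instance_ranges(
        (20000, 21999), {"isis 0": (20000, 999), "ospf 0": (21000, 999)}
    )
    print("ranges are valid")
```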

The user must shut down the segment routing context of an IGP instance to change the SID index or label range of that IGP instance using the prefix-sid-range command. In addition, any range change fails if an already allocated SID index or label goes out of range.

The user can, however, change the SRGB at any time as long as the change does not reduce the current per-IGP instance SID index or label range defined with the prefix-sid-range command. Otherwise, the user must shut down the segment routing context of the IGP instance and delete and reconfigure the prefix-sid-range command.

Finally, the user brings up segment routing on that IGP instance by performing a no shutdown in the context:

config>router>isis>segment-routing>no shutdown

config>router>ospf>segment-routing>no shutdown

This command fails if the user has not previously enabled the router-capability option in the IGP instance. Segment routing is a new capability and must be advertised to all routers in a domain so that routers that support the capability program the node SID in the data path only toward neighbors that also support it.

config>router>isis>advertise-router-capability {area | as}

config>router>ospf>advertise-router-capability {link | area | as}

The IGP segment routing extensions are area-scoped. As a consequence, the user must configure the flooding scope to area in OSPF and to area or as in IS-IS; otherwise, performing a no shutdown of the segment-routing node fails.
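The preconditions for the no shutdown command can be summarized as a small check. The sketch below is hypothetical and not an SR OS API; it only encodes the two rules stated above: router capability advertisement must be enabled, and its flooding scope must be area for OSPF, or area or as for IS-IS.

```python
# Hypothetical pre-check mirroring the rules above; not an SR OS API.
from typing import Optional

# Flooding scopes under which "no shutdown" of segment-routing is accepted.
ALLOWED_SCOPES = {
    "isis": {"area", "as"},
    "ospf": {"area"},
}


def can_enable_segment_routing(protocol: str, router_capability_scope: Optional[str]) -> bool:
    """Return True if segment routing could be brought up in this IGP instance.

    router_capability_scope is the advertise-router-capability value,
    or None if router capability advertisement is not enabled.
    """
    if router_capability_scope is None:
        return False  # the router capability must be advertised first
    return router_capability_scope in ALLOWED_SCOPES[protocol]


if __name__ == "__main__":
    for proto, scope in (("isis", "as"), ("ospf", "link"), ("ospf", "area")):
        print(proto, scope, can_enable_segment_routing(proto, scope))
```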

Next, the user assigns a node SID index or label to the prefix representing the primary address of a network interface of type system or loopback using one of the following commands. A separate SID value can be configured for each IPv4 and IPv6 primary address of the interface.

config>router>isis>interface>ipv4-node-sid index value

config>router>ospf>area>interface>node-sid index value

config>router>ospf3>area>interface>node-sid index value

config>router>isis>interface>ipv4-node-sid label value

config>router>ospf>area>interface>node-sid label value

config>router>ospf3>area>interface>node-sid label value

config>router>isis>interface>ipv6-node-sid index value

config>router>isis>interface>ipv6-node-sid label value

The secondary address of an IPv4 interface cannot be assigned a node SID index and does not inherit the SID of the primary IPv4 address. The same applies to the non-primary IPv6 addresses of an interface.

In IS-IS, an interface inherits the configured IPv4 or IPv6 node SID value in any level the interface participates in: Level 1, Level 2, or both.

In OSPFv2 and OSPFv3, the node SID is configured in the primary area but is inherited in any other area in which the interface is added as secondary.

The preceding commands fail if the network interface is not of type system or loopback, or if the interface is defined in an IES or a VPRN context. Assigning the same SID index or label value to the same interface in two different IGP instances is not allowed within the same node.

For OSPF, the protocol version number and the instance number dictate whether the node SID index or label is for an IPv4 or IPv6 address of the interface. Specifically, the support of address families in OSPF is as follows:

- OSPFv2: always IPv4 only

- OSPFv3, instances 0 to 31: IPv6 only

- OSPFv3, instances 64 to 95: IPv4 only
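As a quick reference, the address-family rules above can be written as a lookup function. The function name is hypothetical; it returns None for OSPFv3 instance numbers outside the two listed ranges because the text does not describe them.

```python
# Minimal sketch of the OSPF address-family rules above; the function name is hypothetical.
from typing import Optional


def ospf_node_sid_family(version: int, instance: int = 0) -> Optional[str]:
    """Return "ipv4" or "ipv6" for a node SID, or None if the instance
    number falls outside the ranges listed above."""
    if version == 2:
        return "ipv4"  # OSPFv2 always carries IPv4 only
    if version == 3:
        if 0 <= instance <= 31:
            return "ipv6"
        if 64 <= instance <= 95:
            return "ipv4"
        return None
    raise ValueError(f"unsupported OSPF version: {version}")


if __name__ == "__main__":
    for v, inst in ((2, 0), (3, 0), (3, 64), (3, 40)):
        print(f"OSPFv{v} instance {inst}: {ospf_node_sid_family(v, inst)}")
```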

The value of the label or index SID is taken from the range configured for this IGP instance. When using the global mode of operation, the segment routing module checks that the same index or label value is not assigned to more than one loopback interface address. When using the per-instance mode of operation, this check is not required because the index and label ranges of the various IGP instances cannot overlap.

For an individual adjacency, values for the label may be provisioned for an IS-IS or OSPF interface. If they are not provisioned, they are dynamically allocated by the system from the dynamic label range. The following CLI commands are used:

config>router>isis>interface

[no] ipv4-adjacency-sid label value

[no] ipv6-adjacency-sid label value

config>router>ospf>area>interface

[no] adjacency-sid label value

The value must correspond to a label in a reserved label block in provisioned mode referred to by the srlb command (see Segment routing local block for more details about SRLBs).

A static label value for an adjacency SID is persistent. Therefore, the P-bit of the Flags field in the Adjacency-SID TLV advertised in the IGP is set to 1.

By default, a dynamic adjacency SID is advertised for an interface. However, if a static adjacency SID value is configured, the dynamic adjacency SID is deleted and only the static adjacency SID is used. Changing an adjacency SID from dynamic (for example, no adjacency-sid) to static, or the other way around, may result in traffic being dropped while the ILM is reprogrammed.

For a provisioned adjacency SID of an interface, a backup is calculated similar to a regular adjacency SID when sid-protection is enabled for that interface.

Provisioned adjacency SIDs are only supported on point-to-point interfaces.

Configuring single shared loopback SR SID

Segment routing shortest path forwarding with IS-IS

This section describes the segment routing shortest path forwarding with IS-IS.

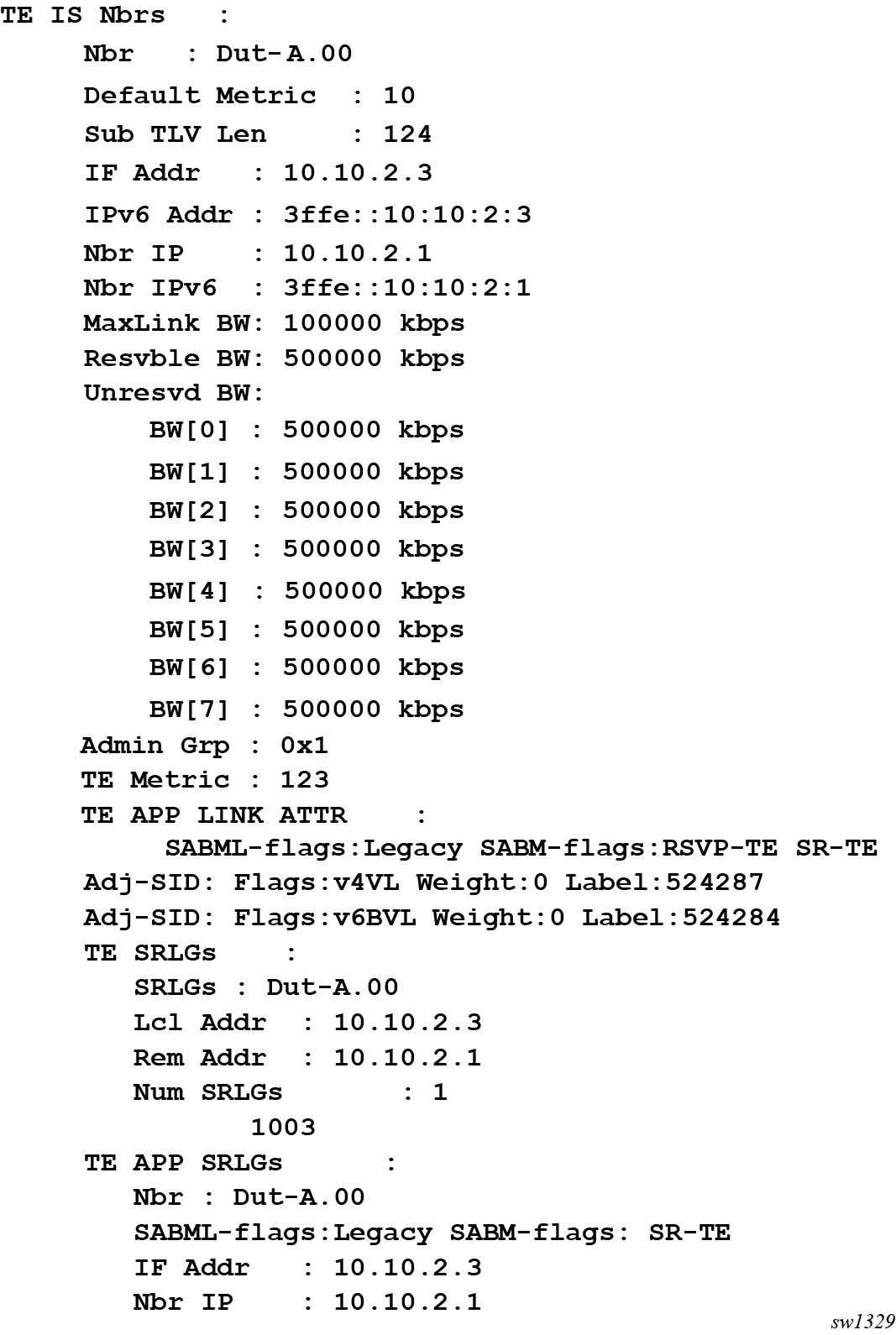

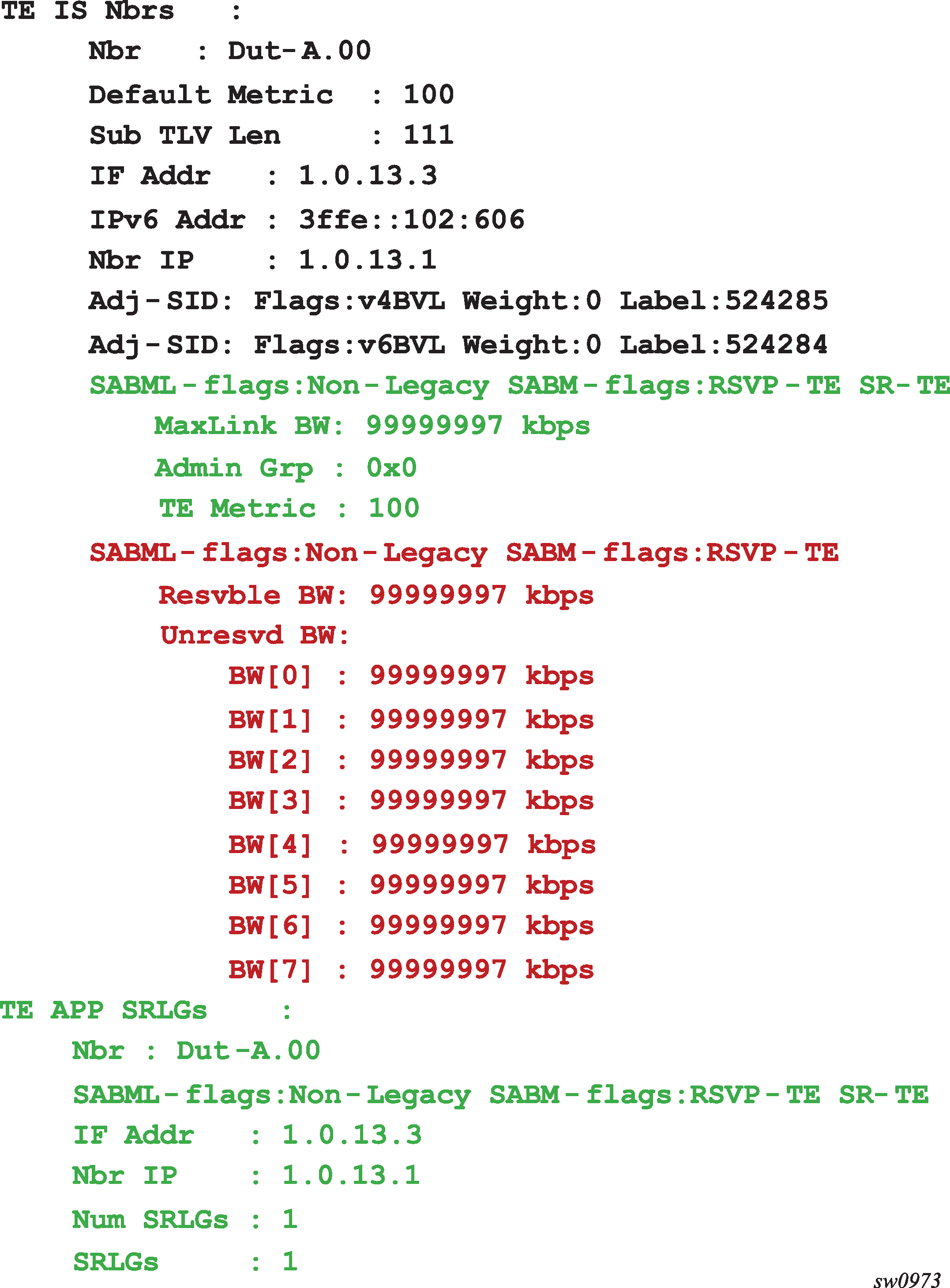

IS-IS control protocol changes

New TLVs and sub-TLVs are defined in draft-ietf-isis-segment-routing-extensions and are supported in the implementation of segment routing in IS-IS. Specifically:

- prefix SID sub-TLV

- adjacency SID sub-TLV

- SID/Label Binding TLV

- SR-Capabilities sub-TLV

- SR-Algorithm sub-TLV

This section describes the behaviors and limitations of the IS-IS support of segment routing TLV and sub-TLVs.

SR OS supports advertising the IS router capability TLV (RFC 4971) only for topology MT=0. As a result, the segment routing capability sub-TLV can only be advertised in MT=0 which restricts the segment routing feature to MT=0.

Similarly, if prefix SID sub-TLVs for the same prefix are received in different MT numbers of the same IS-IS instance, then only the one in MT=0 is resolved. When the prefix SID index is also duplicated, an error is logged and a trap is generated, as described in Error and resource exhaustion handling.

I and V flags are both set to 1 when originating the SR capability sub-TLV to indicate support for processing both SR MPLS encapsulated IPv4 and IPv6 packets on its network interfaces. These flags are not checked when the sub-TLV is received. Only the SRGB range is processed.

The algorithm field is set to 0, meaning Shortest Path First (SPF) algorithm based on link metric, when originating the SR-Algorithm capability sub-TLV but is not checked when the sub-TLV is received.

Both IPv4 and IPv6 prefix and adjacency SID sub-TLVs originate within MT=0.

SR OS originates a single prefix SID sub-TLV per IS-IS IP-reachability TLV and processes the first prefix SID sub-TLV only if multiple are received within the same IS-IS IP-reachability TLV.

SR OS encodes the 32-bit index in the prefix SID sub-TLV. The 24-bit label is not supported.

SR OS originates a prefix SID sub-TLV with the following encoding of the flags and the following processing rules:

The R-flag is set if the prefix SID sub-TLV, along with its corresponding IP-reachability TLV, is propagated between levels. See below for more details about prefix propagation.

The N-flag is always set because SR OS supports a prefix SID type that is node SID only.

The P-flag (no-PHP flag) is always set, meaning that the penultimate-hop router does not pop the label for the prefix SID when forwarding to this router. An SR OS PHP router, however, processes a received prefix SID with the P-flag set to zero and uses implicit-null for the outgoing label toward the router that advertised it, as long as the E-flag is also set to zero.

The E-flag (Explicit-Null flag) is always set to zero. An SR OS PHP router, however, processes a received prefix SID with the E-flag set to 1 and, when the P-flag is also set to 1, it pushes explicit-null for the outgoing label toward the router which advertised it.

The V-flag is always set to 0 to indicate an index value for the SID.

The L-flag is always set to 0 to indicate that the SID index value is not locally significant.

The algorithm field is always set to zero to indicate Shortest Path First (SPF) algorithm based on link metric and is not checked on a received prefix SID sub-TLV.
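The P-flag and E-flag handling above determines which label a penultimate-hop router uses toward the SID originator. The following Python sketch summarizes that decision. The function name is hypothetical; the reserved label values (implicit-null 3, IPv4 explicit-null 0, IPv6 explicit-null 2) are the standard MPLS values, and the treatment of P=0 with E=1 is an assumption based on RFC 8667 rather than something this section describes.

```python
# Sketch of how a penultimate-hop router picks the outgoing label toward the
# router that advertised a prefix SID, based on the P- and E-flags.
# Names are hypothetical. Reserved label values are from RFC 3032.
IMPLICIT_NULL = 3
EXPLICIT_NULL = {"ipv4": 0, "ipv6": 2}


def php_outgoing_label(p_flag: bool, e_flag: bool, family: str, sid_label: int) -> int:
    """sid_label is the prefix SID label computed from the originator's start label."""
    if e_flag:
        # E=1: push explicit-null. The text above covers the P=1, E=1 case;
        # treating P=0, E=1 the same way is an assumption based on RFC 8667.
        return EXPLICIT_NULL[family]
    if not p_flag:
        # P=0, E=0: penultimate-hop popping, signaled as implicit-null.
        return IMPLICIT_NULL
    # P=1, E=0 (what SR OS originates): keep the prefix SID label.
    return sid_label


if __name__ == "__main__":
    print(php_outgoing_label(True, False, "ipv4", 605))   # SR OS default flags
    print(php_outgoing_label(False, False, "ipv4", 605))  # implicit-null
    print(php_outgoing_label(True, True, "ipv6", 605))    # IPv6 explicit-null
```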

SR OS resolves a prefix SID sub-TLV received without the N-flag set but with a prefix length equal to 32. IS-IS, however, raises a trap.

SR OS does not resolve a prefix SID sub-TLV received with the N-flag set and a prefix length other than 32. IS-IS raises a trap.

SR OS resolves a prefix SID received within an IP-reachability TLV based on the following route preference:

- SID received via Level 1 in a prefix SID sub-TLV part of IP-reachability TLV

- SID received via Level 2 in a prefix SID sub-TLV part of IP-reachability TLV

A prefix received in an IP-reachability TLV is propagated by default, along with the prefix SID sub-TLV, from Level 1 to Level 2 by a Level 1/Level 2 router. A router in Level 2 sets up an SR tunnel to the Level 1 router via the Level 1/Level 2 router, which acts as an LSR.

A prefix received in an IP-reachability TLV is not propagated by default, along with the prefix SID sub-TLV, from Level 2 to Level 1 by a Level 1/Level 2 router. If the user adds a policy to propagate the received prefix, a router in Level 1 sets up an SR tunnel to the Level 2 router via the Level 1/Level 2 router, which acts as an LSR.

If a prefix is summarized by an ABR, the prefix SID sub-TLV is not propagated with the summarized route between levels. To propagate the node SID for a /32 prefix, route summarization must be disabled.

SR OS propagates the prefix SID sub-TLV when exporting the prefix to another IS-IS instance; however, it does not propagate it if the prefix is exported from a different protocol. Thus, when the corresponding prefix is redistributed from another protocol such as OSPF, the prefix SID is removed.

SR OS originates an adjacency SID sub-TLV with the following encoding of the flags:

The F-flag is set to zero for the IPv4 family and to 1 for the IPv6 family for the adjacency encapsulation.

The B-Flag is set to zero and is not processed on receipt.

The V-flag is always set to 1.

The L-flag is always set to 1.

The S-flag is set to zero because assigning an adjacency SID to parallel links between neighbors is not supported. A received adjacency SID with the S-flag set is not processed.

The weight octet is not supported and is set to all zeros.

SR OS can originate the SID/Label Binding TLV as part of the Mapping Server feature (see Segment routing mapping server function for IPv4 prefixes for more information). The following rules and limitations should be considered:

Only the mapping server prefix-SID sub-TLV within the TLV is processed, and the ILMs are installed if the prefixes in the provided range are resolved.

The range and FEC prefix fields are processed. Each FEC prefix is resolved, similar to the prefix SID sub-TLV, meaning there must be an IP-reachability TLV received for the exact matching prefix.

If the same prefix is advertised with both a prefix SID sub-TLV and a mapping server prefix-SID sub-TLV, resolution follows this route preference:

- SID received via Level 1 in a prefix SID sub-TLV part of IP-reachability TLV

- SID received via Level 2 in a prefix SID sub-TLV part of IP-reachability TLV

- SID received via Level 1 in a mapping server Prefix-SID sub-TLV

- SID received via Level 2 in a mapping server Prefix-SID sub-TLV
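The four-step preference above can be expressed as a ranking. The following Python sketch is hypothetical (the class, table, and function names are illustrative) and models only the level and source of each advertisement. It does not model the "first reachable mapping server" rule for multiple binding TLVs.

```python
# Minimal sketch of the route preference above for competing SID advertisements.
# All names are hypothetical.
from typing import List, NamedTuple, Optional


class SidAdvert(NamedTuple):
    level: int      # IS-IS level the SID was received in: 1 or 2
    source: str     # "prefix-sid" (IP-reachability TLV) or "mapping-server"
    sid_index: int


# Lower rank is preferred, following the order of the list above.
RANK = {
    (1, "prefix-sid"): 0,
    (2, "prefix-sid"): 1,
    (1, "mapping-server"): 2,
    (2, "mapping-server"): 3,
}


def preferred_sid(adverts: List[SidAdvert]) -> Optional[SidAdvert]:
    """Return the most preferred advertisement, or None if there are none."""
    if not adverts:
        return None
    return min(adverts, key=lambda a: RANK[(a.level, a.source)])


if __name__ == "__main__":
    candidates = [SidAdvert(1, "mapping-server", 20), SidAdvert(2, "prefix-sid", 10)]
    print(preferred_sid(candidates))  # the Level 2 prefix SID wins
```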

The entire TLV can be propagated between levels based on the setting of the S-flag. The TLV cannot be propagated between IS-IS instances (see Segment routing mapping server function for IPv4 prefixes for more information). Finally, a Level 1/Level 2 router does not propagate the prefix-SID sub-TLV from the SID/label binding TLV (received from a mapping server) into the IP-reachability TLV if the latter is propagated between levels.

The mapping server which advertised the SID/label binding TLV does not need to be in the shortest path for the FEC prefix.

If the same FEC prefix is advertised in multiple binding TLVs by different routers, the SID in the binding TLV of the first router that is reachable is used. If that router becomes unreachable, the next reachable one is used.

No check is performed to determine whether the contents of the binding TLVs from different mapping servers are consistent.

Any other sub-TLV, for example, the SID/label sub-TLV, ERO metric and unnumbered interface ID ERO, is ignored but the user can view the octets of the received-but-not-supported sub-TLVs using the IGP show command.

Announcing ELC, MSD-ERLD, and MSD-BMI with IS-IS

IS-IS can announce node Entropy Label Capability (ELC), the Maximum Segment Depth (MSD) for node Entropy Readable Label Depth (ERLD) and the MSD for node Base MPLS Imposition (BMI). If needed, exporting the IS-IS extensions into BGP-LS requires no additional configuration. These extensions are standardized through draft-ietf-isis-mpls-elc-10, Signaling Entropy Label Capability and Entropy Readable Label Depth Using IS-IS, and RFC 8491, Signaling Maximum SID Depth (MSD) Using IS-IS.

When entropy and segment routing are enabled on a router, it automatically announces the ELC, ERLD, and BMI IS-IS values when IS-IS prefix attributes and router capabilities are announced. The following configuration logic is used.

The router automatically announces ELC for host prefixes associated with an IPv4 or IPv6 node SID when segment-routing, segment-routing entropy-label, and prefix-attributes-tlv are enabled for IS-IS. Although the ELC capability is a node property, it is assigned to prefixes to allow inter-area or inter-AS signaling. Consequently, the prefix-attribute TLV must be enabled accordingly within IS-IS.

The router announces the maximum node ERLD for IS-IS when segment-routing and segment-routing entropy-label are enabled together with advertise-router-capability.

The router announces the maximum node MSD-BMI for IS-IS when segment-routing and advertise-router-capability are enabled.
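The three rules above can be condensed into a truth-table style sketch. The function is hypothetical and not an SR OS API; its parameter names mirror the CLI options only for readability, and it ignores the override-bmi and override-erld values.

```python
# Hypothetical model of which IS-IS extensions are announced for a given
# combination of enabled options; parameter names mirror the CLI options
# but the function is not an SR OS API.
from typing import Set


def isis_announcements(
    segment_routing: bool,
    entropy_label: bool,
    prefix_attributes_tlv: bool,
    advertise_router_capability: bool,
) -> Set[str]:
    announced: Set[str] = set()
    if segment_routing and entropy_label and prefix_attributes_tlv:
        announced.add("ELC")  # on host prefixes that have an IPv4 or IPv6 node SID
    if segment_routing and entropy_label and advertise_router_capability:
        announced.add("MSD-ERLD")
    if segment_routing and advertise_router_capability:
        announced.add("MSD-BMI")
    return announced


if __name__ == "__main__":
    print(sorted(isis_announcements(True, True, True, True)))
    print(sorted(isis_announcements(True, False, False, True)))
```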

Exporting ELC, MSD-ERLD, and MSD-BMI IS-IS extensions into BGP-LS encoding is enabled automatically when database-export for BGP-LS is configured.

The announced value for maximum node MSD-ERLD and MSD-BMI can be modified to a smaller number using the override-bmi and override-erld commands. This can be useful when services (such as EVPN) or more complex link protocols (such as Q-in-Q) are deployed. Provisioning correct ERLD and BMI values helps controllers and local Constrained Shortest Path First (CSPF) to construct valid segment routing label stacks to be deployed in the network.

Segment routing parameters are configured in the following contexts:

configure>router>isis>segment-routing>maximum-sid-depth

configure>router>isis>segment-routing>maximum-sid-depth>override-bmi value

configure>router>isis>segment-routing>maximum-sid-depth>override-erld value

Entropy label for IS-IS segment routing

The router supports the MPLS entropy label, as specified in RFC 6790, on IS-IS segment-routed tunnels. LSR nodes in a network can load-balance labeled packets in a more granular way than by hashing on the standard label stack. See the MPLS Guide for more information.

Announcing Entropy Label Capability (ELC) is supported; however, processing of ELC signaling is not supported for IS-IS segment-routed tunnels. Instead, ELC is configured at the head-end LER using the configure router isis entropy-label override-tunnel-elc command. This command causes the router to ignore any ELC advertisements that may be received from the network and instead assume that the whole domain supports entropy labels.

IPv6 segment routing using MPLS encapsulation

This feature supports SR IPv6 tunnels in IS-IS MT=0. The user can configure a node SID for the primary IPv6 global address of a loopback interface, which is then advertised in IS-IS. IS-IS automatically assigns and advertises an adjacency SID for each adjacency with an IPv6 neighbor. After the node SID is resolved, it is used to install an IPv6 SR-ISIS tunnel in the TTM for use by the services.

IS-IS MT=0 extensions

The IS-IS MT=0 extensions support the advertising and resolution of the prefix SID sub-TLV within the IP Reachability TLV-236 (IPv6), as defined in RFC 5308. The adjacency SID is still advertised as a sub-TLV of the Extended IS Reachability TLV 22, as defined in RFC 5305, IS-IS Extensions for Traffic Engineering, as in the case of an IPv4 adjacency. The router sets the V-flag and I-flag in the SR-capabilities sub-TLV to indicate that it can process SR MPLS-encapsulated IPv4 and IPv6 packets on its network interfaces.

Service and forwarding contexts supported

The service and forwarding contexts supported with the SR-ISIS IPv6 tunnels are:

- SDP of type sr-isis with far-end option using an IPv6 address

- VLL, VPLS, IES/VPRN spoke-interface, and R-VPLS

- support of PW redundancy within Epipe/Ipipe VLL, Epipe spoke termination on VPLS and R-VPLS, and Epipe/Ipipe spoke termination on IES/VPRN

- IPv6 static route resolution to indirect next hop using Segment Routing IPv6 tunnel

- remote mirroring and Layer 3 encapsulated lawful intercept

Services using SDP with an SR IPv6 tunnel

The MPLS SDP of type sr-isis with a far-end option using an IPv6 address is supported. Note that the IPv6 far-end address of the SDP, which the control plane uses for the T-LDP session, must be the same as the prefix of the node SID of the SR IPv6 tunnel.

configure

— service

— [no] sdp sdp-id mpls

— [no] far-end ipv6-address

— sr-isis

— no sr-isis

The bgp-tunnel, lsp, sr-te lsp, sr-ospf, and mixed-lsp-mode commands are blocked within the SDP configuration context when the far end is an IPv6 address.

SDP admin groups are not supported with an SDP using an SR IPv6 tunnel, or with SR-OSPF for IPv6 tunnels, and the attempt to assign them is blocked in the CLI.

Services that use LDP control plane such as T-LDP VPLS and R-VPLS, VLL, and IES/VPRN spoke interface have the spoke SDP (PW) signaled with an IPv6 T-LDP session because the far-end option is configured to an IPv6 address. The spoke SDP for these services binds to an SDP that uses an SR IPv6 tunnel where the prefix matches the far-end address. SR OS also supports the following:

- the IPv6 PW control word with both data plane packets and VCCV OAM packets

- hash label and entropy label, with the above services

- network domains in VPLS

The PW switching feature is not supported with LDP IPv6 control planes. As a result, the CLI does not allow the user to enable the vc-switching option whenever one or both spoke SDPs uses an SDP that has the far-end configured as an IPv6 address.

L2 services that use BGP control plane such as dynamic MS-PW, BGP-AD VPLS, BGP-VPLS, BGP-VPWS, and EVPN MPLS cannot bind to an SR IPv6 tunnel because a BGP session to a BGP IPv6 peer does not support advertising an IPv6 next hop for the L2 NLRI. As a result, these services do not auto-generate SDPs using an SR IPv6 tunnel. In addition, they skip any provisioned SDPs with far-end configured to an IPv6 address when the use-provisioned-sdp option is enabled.

SR OS also supports multihoming with T-LDP active/standby FEC 128 spoke SDPs using an SR IPv6 tunnel to a VPLS/B-VPLS instance. BGP multihoming is not supported because BGP IPv6 does not support signaling an IPv6 next hop for the L2 NLRI.

The Shortest Path Bridging (SPB) feature works with spoke SDPs bound to an SDP that uses an SR IPv6 tunnel.

Segment routing mapping server function for IPv4 prefixes

The mapping server feature supports the configuration and advertisement, in IS-IS, of the node SID index for prefixes of routers in the LDP domain. This is performed in the router acting as a mapping server and using a prefix-SID sub-TLV within the SID/label binding TLV in IS-IS.

Use the following command syntax to configure the SR mapping database in IS-IS:

configure

— router

— [no] isis

— segment-routing

— no segment-routing

— mapping-server

— sid-map node-sid {index 0..4294967295 [range 0..65535]} prefix {{ip-address/mask} | {ip-address}{netmask}} [set-flags {s}] [level {1 | 2 | 1/2}]

— no sid-map node-sid index 0..4294967295

The user enters the node SID index, for one prefix or a range of prefixes, by specifying the first index value and, optionally, a range value. The default value for the range option is 1. Only the first prefix in a consecutive range of prefixes must be entered. If the user enters the first prefix with a mask lower than 32, the SID/label binding TLV is advertised, but a router that receives it does not resolve the prefix SID and instead generates a trap.
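A sid-map entry with a range expands into consecutive host prefixes with consecutive SID indexes. The following sketch shows that expansion for IPv4 /32 prefixes. The function name is hypothetical, the prefixes are documentation addresses, and raising an error for a mask shorter than 32 stands in for the receiver-side behavior described above (the TLV is advertised but the SID is not resolved).

```python
# Minimal sketch: expand one sid-map entry (first prefix, start index, range)
# into per-prefix node SID indexes. The function name is hypothetical.
import ipaddress
from typing import Dict


def expand_sid_map(index: int, prefix: str, range_size: int = 1) -> Dict[str, int]:
    """Only the first prefix of a consecutive block is configured; the k-th
    following /32 prefix is assigned SID index index + k."""
    first = ipaddress.IPv4Network(prefix)
    if first.prefixlen != 32:
        # Advertised by the mapping server, but receivers do not resolve it.
        raise ValueError("receivers resolve mapping server SIDs only for /32 prefixes")
    base = int(first.network_address)
    return {
        f"{ipaddress.IPv4Address(base + k)}/32": index + k
        for k in range(range_size)
    }


if __name__ == "__main__":
    # Hypothetical entry: sid-map node-sid index 100 range 3 prefix 192.0.2.1/32
    for pfx, sid in expand_sid_map(100, "192.0.2.1/32", 3).items():
        print(pfx, sid)
```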

The no form of the sid-map command deletes the range of node SIDs beginning with the specified index value. The no form of the mapping-server command deletes all node SID entries in the IS-IS instance.

The S-flag indicates to the IS-IS routers in the network that the flooding scope of the SID/label binding TLV is the entire domain. In that case, a router receiving the TLV advertisement leaks it between IS-IS levels. If the TLV is leaked from Level 2 to Level 1, the D-flag must be set; this prevents the TLV from being leaked back into Level 2. Otherwise, the S-flag is clear by default, and routers receiving the mapping server advertisement do not leak the TLV.

SR OS does not leak this TLV between IS-IS instances and does not support the multi-topology SID/label binding TLV format. In addition, the user can specify the flooding scope of the mapping server for the generated SID/label binding TLV using the level option. This option allows further narrowing of the flooding scope configured under the router IS-IS level-capability for one or more SID/label binding TLVs if required. The default flooding scope of the mapping server is L1 or L2, which can be narrowed by what is configured under the router IS-IS level-capability.

The A-flag indicates that a prefix for which the mapping server prefix SID is advertised is directly attached. The M-flag advertises a SID for a mirroring context to provide protection against the failure of a service node. None of these flags are supported on the mapping server; the mapping client ignores them.

Each time a prefix or a range of prefixes is configured in the SR mapping database in any routing instance, the router issues for this prefix, or range of prefixes, a prefix-SID sub-TLV within an IS-IS SID/label binding TLV in that instance. The flooding scope of the TLV from the mapping server is determined as previously described. No further check of the reachability of that prefix in the mapping server route table is performed. No check of the SID index is performed to determine whether the SID index is a duplicate of an existing prefix in the local IGP instance database or if the SID index is out of range with the local SRGB.

IP prefix resolution for segment routing mapping server

The following processing rules apply for IP prefix resolution.

- SPF calculates the next hops, up to max-ecmp, to reach a destination node.

- Each prefix inherits the next hops of one or more destination nodes advertising it.

- A prefix advertised by multiple nodes, all reachable with the same cost, inherits up to max-ecmp next hops from the advertising nodes.

- Up to max-ecmp next hops are selected by sorting the next hops by:

  - lowest next-hop router ID

  - lowest interface index, for parallel links to the same router ID

  Each next hop keeps a reference to the destination nodes from which it was inherited.
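The next-hop selection rules above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the caller has already kept only the equal-cost next hops, router IDs are compared numerically as IPv4 addresses, and all names are hypothetical.

```python
# Minimal sketch of next-hop selection for a prefix advertised by several nodes.
# Assumes the caller already filtered to equal-cost next hops; names are hypothetical.
import ipaddress
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Hop = Tuple[str, int]  # (next-hop router ID, local interface index)


def select_next_hops(
    inherited: Iterable[Tuple[str, int, str]], max_ecmp: int
) -> List[Tuple[Hop, Set[str]]]:
    """inherited: (router_id, if_index, destination_node) triples."""
    refs: Dict[Hop, Set[str]] = defaultdict(set)
    for router_id, if_index, dest in inherited:
        # Keep a reference to every destination node the next hop came from.
        refs[(router_id, if_index)].add(dest)
    # Sort by lowest router ID, then lowest interface index for parallel links.
    ordered = sorted(
        refs, key=lambda hop: (int(ipaddress.IPv4Address(hop[0])), hop[1])
    )
    return [(hop, refs[hop]) for hop in ordered[:max_ecmp]]


if __name__ == "__main__":
    hops = [
        ("10.0.0.3", 5, "PE1"),
        ("10.0.0.2", 7, "PE1"),
        ("10.0.0.2", 4, "PE2"),
        ("10.0.0.2", 4, "PE1"),
    ]
    for hop, origins in select_next_hops(hops, max_ecmp=2):
        print(hop, sorted(origins))
```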

Prefix SID resolution for segment routing mapping server

The following processing rules apply for prefix SID resolution.

- For a specific prefix, IGP selects the SID value among multiple advertised values in the following order:

  - local intra-area SID owned by this router

  - prefix SID sub-TLV advertised within an IP Reachability TLV

    If multiple SIDs exist, select the SID corresponding to the destination router or the ABR with the lowest system ID that is reachable using the first next hop of the prefix.

  - IS-IS SID and label binding TLV from the mapping server

    If multiple SIDs exist, select one using the preference rules in draft-ietf-spring-conflict-resolution-05 [sid-conflict-resolution] as applied to the SRMS entries of the conflicting SIDs. These rules are applied in the following order:

    - smallest range

    - smallest starting address

    - smallest algorithm

    - smallest starting SID

    Note: If an L1L2 router acts as a mapping server and also re-advertises the mapping server prefix SID from other mapping servers, other routers resolving the prefix prefer the redistributed mapping server prefix SID, which may result in a mapping server being selected that these rules would not select.

- The selected SID is used with all ECMP next hops from step 1 toward all destination nodes or ABR nodes that advertised the prefix.

- If duplicate prefix SIDs exist for different prefixes after the above steps, the first SID that is processed is programmed for its corresponding prefix. Subsequent SIDs cause a duplicate SID trap message and are not programmed. The corresponding prefixes are still resolved and programmed normally using IP next hops.
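The tie-break among conflicting mapping server (SRMS) entries can be modeled as a sort key built from the four rules in order. The sketch below is illustrative: the class and function names are hypothetical, it handles IPv4 entries only, and it compares the starting address without considering the prefix length.

```python
# Minimal sketch of the SRMS conflict tie-break listed above:
# smallest range, then smallest starting address, then smallest algorithm,
# then smallest starting SID. Names are hypothetical; IPv4 only.
import ipaddress
from typing import List, NamedTuple, Tuple


class SrmsEntry(NamedTuple):
    start_prefix: str  # first prefix of the range, for example "192.0.2.1/32"
    range_size: int
    algorithm: int
    start_sid: int


def conflict_key(entry: SrmsEntry) -> Tuple[int, int, int, int]:
    address = int(ipaddress.IPv4Network(entry.start_prefix).network_address)
    return (entry.range_size, address, entry.algorithm, entry.start_sid)


def pick_srms_entry(conflicting: List[SrmsEntry]) -> SrmsEntry:
    """Return the entry preferred by the ordered rules."""
    return min(conflicting, key=conflict_key)


if __name__ == "__main__":
    entries = [
        SrmsEntry("192.0.2.1/32", 10, 0, 100),
        SrmsEntry("192.0.2.5/32", 1, 0, 500),
        SrmsEntry("192.0.2.1/32", 1, 0, 600),
    ]
    print(pick_srms_entry(entries))  # smallest range, then lowest address
```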

SR tunnel programming for segment routing mapping server

The following processing rules apply for SR tunnel programming.

- If the prefix SID is resolved from a prefix SID sub-TLV advertised within an IP Reachability TLV, one of the following applies:

  - The SR ILM label is swapped to an SR NHLFE label, as in SR tunnel resolution when the next hop of the IS-IS prefix is SR-enabled.

  - The SR ILM label is stitched to an LDP FEC of the same prefix when either the next hop of the IS-IS prefix is not SR-enabled (no SR NHLFE) or an import policy rejects the prefix (SR NHLFE is deprogrammed).

    The LDP FEC can also be resolved by using the same or a different IGP instance as that of the prefix SID sub-TLV, or by using a static route.

- If the prefix SID is resolved from a mapping server advertisement, one of the following applies:

  - The SR ILM label is stitched to an LDP FEC of the same prefix, if one exists. The stitching is performed even if an import policy rejects the prefix in the local IS-IS instance.

    The LDP FEC can also be resolved by using a static route, a route within an IS-IS instance, or a route within an OSPF instance. The IS-IS or OSPF instances can be the same as, or different from, the IGP instance that advertised the mapping server prefix SID sub-TLV.

  - The SR ILM label is swapped to an SR NHLFE label. This is only possible if a route is exported from another IGP instance into the local IGP instance without propagating the prefix SID sub-TLV with the route. Otherwise, the SR ILM label is swapped to an SR NHLFE label toward the stitching node.
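The programming rules above can be summarized as a decision function. This is a hedged sketch, not SR OS behavior in every corner case: `ilm_action` and `SidSource` are hypothetical names, LDP stitching is assumed to be tried first for mapping-server SIDs (the order in which the options are listed), and the "not programmed" outcome is an assumption for the case where neither option is available.

```python
from enum import Enum


class SidSource(Enum):
    PREFIX_SID_SUBTLV = "prefix SID sub-TLV in an IP Reachability TLV"
    MAPPING_SERVER = "mapping server advertisement"


def ilm_action(source, next_hop_sr_enabled, policy_rejects, ldp_fec_exists):
    """Return a description of how the SR ILM label is programmed (hypothetical)."""
    if source is SidSource.PREFIX_SID_SUBTLV:
        if next_hop_sr_enabled and not policy_rejects:
            return "swap to SR NHLFE"
        # No SR NHLFE: next hop not SR-enabled, or NHLFE deprogrammed by policy.
        return "stitch to LDP FEC" if ldp_fec_exists else "not programmed"
    # Mapping-server SID: LDP stitching ignores the local import policy.
    if ldp_fec_exists:
        return "stitch to LDP FEC"
    return "swap to SR NHLFE" if next_hop_sr_enabled else "not programmed"


print(ilm_action(SidSource.MAPPING_SERVER, True, True, True))
```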

Segment routing shortest path forwarding with OSPF

This section describes the segment routing shortest path forwarding with OSPF.

OSPFv2 control protocol changes

New TLVs and sub-TLVs are defined in draft-ietf-ospf-segment-routing-extensions-04 and are required for the implementation of segment routing in OSPF. Specifically:

- prefix SID sub-TLV part of the OSPFv2 Extended Prefix TLV

- prefix SID sub-TLV part of the OSPFv2 Extended Prefix Range TLV

- adjacency SID sub-TLV part of the OSPFv2 Extended Link TLV

- SID/Label Range capability TLV

- SR-Algorithm capability TLV

This section describes the behaviors and limitations of OSPF support of segment routing TLV and sub-TLVs.

SR OS originates a single prefix SID sub-TLV per OSPFv2 Extended Prefix TLV and processes the first one only if multiple prefix SID sub-TLVs are received within the same OSPFv2 Extended Prefix TLV.

SR OS encodes the 32-bit index in the prefix SID sub-TLV. The 24-bit label or variable IPv6 SID is not supported.

SR OS originates a prefix SID sub-TLV with the following encoding of the flags:

- The NP-flag is always set: the label for the prefix SID is pushed by the PHP router when forwarding to this router. SR OS PHP routers process a received prefix SID with the NP-flag set to zero and use implicit-null for the outgoing label toward the router that advertised it.

- The M-flag is always unset because SR OS does not support originating a mapping server prefix-SID sub-TLV.

- The E-flag is always set to zero. SR OS PHP routers process a received prefix SID with the E-flag set to 1 and, when the NP-flag is also set to 1, push explicit-null for the outgoing label toward the router that advertised it.

- The V-flag is always set to 0 to indicate an index value for the SID.

- The L-flag is always set to 0 to indicate that the SID index value is not locally significant.

- The algorithm field is set either to zero, to indicate the Shortest Path First (SPF) algorithm based on the link IGP metric, or to the flexible algorithm number.

SR OS resolves a prefix SID received within an Extended Prefix TLV based on the following route preference:

- SID received via an intra-area route in a prefix SID sub-TLV part of an Extended Prefix TLV

- SID received via an inter-area route in a prefix SID sub-TLV part of an Extended Prefix TLV

SR OS originates an adjacency SID sub-TLV with the following encoding of the flags:

- The B-flag is set to zero and is not processed on receipt.

- The V-flag is always set.

- The L-flag is always set.

- The G-flag is not supported.

- The weight octet is not supported and is set to all zeros.

An adjacency SID is assigned to next hops over both the primary and secondary interfaces.
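The flag settings above can be made concrete with bit masks. The sketch below assumes the flag-bit positions published in RFC 8665 (the RFC that draft-ietf-ospf-segment-routing-extensions became) and an IPv4 prefix. The function names are hypothetical, and the sketch models only the outgoing label that a penultimate-hop router uses toward the router that originated the prefix SID.

```python
# Flag bits of the OSPFv2 Prefix-SID and Adj-SID sub-TLVs, assuming the RFC 8665
# layout (bit 0 is the most significant bit of the flags octet).
NP_FLAG, M_FLAG, E_FLAG, V_FLAG, L_FLAG = 0x40, 0x20, 0x10, 0x08, 0x04
ADJ_B_FLAG, ADJ_V_FLAG, ADJ_L_FLAG, ADJ_G_FLAG = 0x80, 0x40, 0x20, 0x10

IMPLICIT_NULL = 3
IPV4_EXPLICIT_NULL = 0  # IPv6 explicit-null would be 2


def sros_prefix_sid_flags():
    # NP set; M, E, V and L clear (index value, not locally significant).
    return NP_FLAG


def sros_adj_sid_flags():
    # B clear, V and L set, G not used.
    return ADJ_V_FLAG | ADJ_L_FLAG


def label_toward_originator(flags, sid_index, neighbor_srgb_base):
    """Outgoing label pushed by the penultimate hop toward the SID originator."""
    if not flags & NP_FLAG:
        return IMPLICIT_NULL                # PHP allowed: the label is popped
    if flags & E_FLAG:
        return IPV4_EXPLICIT_NULL           # NP and E set: explicit-null
    return neighbor_srgb_base + sid_index   # NP set: keep the prefix SID label


print(hex(sros_prefix_sid_flags()), hex(sros_adj_sid_flags()))
print(label_toward_originator(sros_prefix_sid_flags(), 5, 16000))
```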

SR OS can originate the OSPFv2 Extended Prefix Range TLV as part of the Mapping Server feature and can process it properly, if received. Consider the following rules and limitations:

- Only the prefix SID sub-TLV within the TLV is processed, and the ILMs are installed if the prefixes are resolved.

- The range and address prefix fields are processed. Each prefix is resolved separately.

- If the same prefix is advertised with both a prefix SID sub-TLV in an IP-reachability TLV and a mapping server prefix-SID sub-TLV, resolution uses the following route preference:

  - the SID received via an intra-area route in a prefix SID sub-TLV part of an Extended Prefix TLV

  - the SID received via an inter-area route in a prefix SID sub-TLV part of an Extended Prefix TLV

  - the SID received via an intra-area route in a prefix SID sub-TLV part of an OSPFv2 Extended Prefix Range TLV

  - the SID received via an inter-area route in a prefix SID sub-TLV part of an OSPFv2 Extended Prefix Range TLV

- Leaking does not occur within the TLV between areas. Also, an ABR does not propagate the prefix-SID sub-TLV from the Extended Prefix Range TLV (received from a mapping server) into an Extended Prefix TLV if the latter is propagated between areas.

- The mapping server that advertised the OSPFv2 Extended Prefix Range TLV does not need to be in the shortest path for the FEC prefix.

- If the same FEC prefix is advertised in multiple OSPFv2 Extended Prefix Range TLVs by different routers, the SID in the TLV of the first router that is reachable is used. If that router becomes unreachable, the next reachable one is used.

- There is no check to determine whether the contents of the OSPFv2 Extended Prefix Range TLVs received from different mapping servers are consistent.

- Any other sub-TLV, for example, the ERO metric and unnumbered interface ID ERO, is ignored, but the user can get a dump of the octets of the received-but-not-supported sub-TLVs using the existing IGP show command.
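The four-level route preference above amounts to a ranking. In this minimal sketch, `RANK` and `resolve_sid` are hypothetical names and candidate SIDs are plain tuples; it does not model reachability of the advertising router.

```python
# Lower rank wins; mirrors the four-level preference listed above.
RANK = {
    ("extended-prefix", "intra-area"): 0,
    ("extended-prefix", "inter-area"): 1,
    ("extended-prefix-range", "intra-area"): 2,
    ("extended-prefix-range", "inter-area"): 3,
}


def resolve_sid(candidates):
    """candidates: iterable of (tlv_kind, route_type, sid_index) tuples."""
    return min(candidates, key=lambda c: RANK[(c[0], c[1])])[2]


print(resolve_sid([
    ("extended-prefix-range", "intra-area", 200),
    ("extended-prefix", "inter-area", 100),
]))
```

Even an inter-area SID from an Extended Prefix TLV outranks an intra-area SID received from a mapping server.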

SR OS supports propagation on the ABR of external prefix LSAs into other areas with routeType set to 3 as per draft-ietf-ospf-segment-routing-extensions-04.

SR OS supports propagation on the ABR of external prefix LSAs with route type 7 from NSSA area into other areas with route type set to 5 as per draft-ietf-ospf-segment-routing-extensions-04. SR OS does not support propagating the prefix SID sub-TLV between OSPF instances.

When the user configures an OSPF import policy, the outcome of the policy applies both to prefixes resolved in RTM and to the corresponding tunnels in TTM. A prefix removed by the policy therefore appears neither as a route in RTM nor as an SR tunnel in TTM.

OSPFv3 control protocol changes

The OSPFv3 extensions support the following TLVs:

- a prefix SID that is a sub-TLV of the OSPFv3 prefix TLV

  The OSPFv3 prefix TLV is a new top-level TLV of the extended prefix LSA introduced in draft-ietf-ospf-ospfv3-lsa-extend. The OSPFv3 instance can operate in either LSA sparse mode or extended LSA mode. The config>router>extended-lsa only command advertises the prefix SID sub-TLV in the extended LSA format in both cases.

- an adjacency SID that is a sub-TLV of the OSPFv3 router-link TLV

  The OSPFv3 router-link TLV is a new top-level TLV in the extended router LSA introduced in draft-ietf-ospf-ospfv3-lsa-extend. The OSPFv3 instance can operate in either LSA sparse mode or extended LSA mode. The config>router>extended-lsa only command advertises the adjacency SID sub-TLV in the extended LSA format in both cases.

- the SR-Algorithm TLV and the SID/Label range TLV

  Both of these TLVs are part of the TLV-based OSPFv3 Router Information Opaque LSA defined in RFC 7770.

Announcing ELC, MSD-ERLD and MSD-BMI with OSPF

OSPF has the ability to announce node Entropy Label Capability (ELC), the Maximum Segment Depth (MSD) for node Entropy Readable Label Depth (ERLD), and the Maximum Segment Depth (MSD) for node Base MPLS Imposition (BMI). If needed, exporting these OSPF extensions into BGP-LS requires no additional configuration. These extensions are standardized through draft-ietf-ospf-mpls-elc-12, Signaling Entropy Label Capability and Entropy Readable Label-stack Depth Using OSPF, and RFC 8476, Signaling Maximum SID Depth (MSD) Using OSPF.

The ELC, ERLD, and BMI OSPF values are announced automatically when entropy label and segment routing are enabled on the router. The following configuration logic is used:

- ELC is automatically announced for host prefixes associated with a node SID when segment-routing and segment-routing entropy-label are enabled for OSPF.

- The router maximum node ERLD is announced for OSPF when segment-routing and segment-routing entropy-label are enabled together with advertise-router-capability.

- The router maximum node MSD-BMI for OSPF is announced when segment-routing and advertise-router-capability are enabled.

Exporting the ELC, MSD-ERLD, and MSD-BMI OSPF extensions into BGP-LS encoding occurs automatically when database-export for BGP-LS is configured.

The announced value for maximum node MSD-ERLD and MSD-BMI can be modified to a smaller number using the override-bmi and override-erld commands. This can be useful when services (such as EVPN) or more complex link protocols (such as Q-in-Q) are deployed. Provisioning correct ERLD and BMI values helps controllers and local-cspf to construct valid segment routing label stacks to be deployed in the network.
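The announcement logic and the override behavior can be sketched as follows. This is a hypothetical model: `OspfSrSettings` and `announced_values` are illustrative names, not SR OS syntax, and an override larger than the node maximum is assumed to leave the node maximum in place, because the override can only lower the announced value.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class OspfSrSettings:
    # Hypothetical model of the relevant OSPF settings, not SR OS syntax.
    segment_routing: bool = False
    entropy_label: bool = False
    advertise_router_capability: bool = False
    override_erld: Optional[int] = None
    override_bmi: Optional[int] = None


def _effective(node_max, override):
    # An override can only lower the announced value (assumed clamping).
    return node_max if override is None else min(node_max, override)


def announced_values(cfg, node_max_erld, node_max_bmi):
    out = {}
    if cfg.segment_routing and cfg.entropy_label:
        out["elc"] = True  # for host prefixes associated with a node SID
        if cfg.advertise_router_capability:
            out["msd_erld"] = _effective(node_max_erld, cfg.override_erld)
    if cfg.segment_routing and cfg.advertise_router_capability:
        out["msd_bmi"] = _effective(node_max_bmi, cfg.override_bmi)
    return out


cfg = OspfSrSettings(True, True, True, override_bmi=6)
print(announced_values(cfg, node_max_erld=15, node_max_bmi=12))
```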

Segment routing parameters are configured in the following contexts:

configure>router>ospf>segment-routing

configure>router>ospf>segment-routing>override-bmi value

configure>router>ospf>segment-routing>override-erld value

Entropy label for OSPF segment routing

The router supports the MPLS entropy label, as specified in RFC 6790, on OSPF segment-routed tunnels. LSR nodes in a network can load-balance labeled packets in a more granular way than by hashing on the standard label stack. See the MPLS Guide for more information.

Announcing Entropy Label Capability (ELC) is supported; however, processing of received ELC signaling is not supported for OSPF segment-routed tunnels. Instead, ELC is configured at the head-end LER using the configure router ospf entropy-label override-tunnel-elc command. This command causes the router to ignore any ELC advertisements that may be received from the network and to assume that the whole domain supports entropy labels.
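To illustrate what the entropy label adds to a packet, the following sketch places an Entropy Label Indicator (ELI) and entropy label (EL) pair, as defined in RFC 6790, below the SR transport label. The CRC32 flow hash, the exact position of the pair, and the function names are assumptions for illustration only. In practice, the position is constrained by the ERLD of the routers along the path, and the hash is implementation-specific.

```python
import zlib

ELI = 7                      # Entropy Label Indicator (RFC 6790)
MAX_LABEL = (1 << 20) - 1    # largest 20-bit MPLS label value


def entropy_label(flow_key: bytes) -> int:
    # Hash the flow fields into the unreserved label space 16..MAX_LABEL,
    # so all packets of one flow carry the same entropy label.
    return 16 + zlib.crc32(flow_key) % (MAX_LABEL - 15)


def push_with_entropy(sr_labels, service_labels, flow_key):
    """Label stack (top first) with an ELI/EL pair below the SR labels."""
    return list(sr_labels) + [ELI, entropy_label(flow_key)] + list(service_labels)


stack = push_with_entropy([16005], [24001], b"10.0.0.1|10.0.0.2|6|40000|443")
print(stack)
```

Transit LSRs can then hash on the EL value instead of parsing deeper into the packet.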

IPv6 segment routing using MPLS encapsulation in OSPFv3

This feature supports SR IPv6 tunnels in OSPFv3 instances 0 to 31. The user can configure a node SID for the primary IPv6 global address of a loopback interface, which is then advertised in OSPFv3. OSPFv3 automatically assigns and advertises an adjacency SID for each adjacency with an IPv6 neighbor. After the node SID is resolved, it is used to install an IPv6 SR-OSPF3 tunnel in the TTMv6 for use by routes and services.

Segment routing mapping server for IPv4 prefixes

The mapping server feature configures and advertises, in OSPF, the node SID index for prefixes of routers that are in the LDP domain. This is performed on the router acting as the mapping server, using a prefix-SID sub-TLV within an OSPF Extended Prefix Range TLV.

Use the following command syntax to configure the SR mapping database in OSPF:

configure

— router

— [no]ospf

— segment-routing

— no segment-routing

— mapping-server

— sid-map node-sid {index value 0 to 4294967295 [range value 1 to 65535]} prefix {{ip-address/mask}|{netmask}}[scope {area area-id | as}]

— no sid-map node-sid index value

The user enters the node SID index, for one prefix or a range of prefixes, by specifying the first index value and, optionally, a range value. The default value for the range option is 1. Only the first prefix in a consecutive range of prefixes must be entered. If the user enters the first prefix with a mask lower than 32, the OSPF Extended Prefix Range TLV is advertised but a router that receives the OSPF Extended Prefix Range TLV does not resolve the SID and instead generates a trap.
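The index and range semantics can be sketched as follows: each prefix in the consecutive range receives the starting index plus its offset. `expand_sid_map` is a hypothetical helper that models the receiving router. Its validation bounds come from the sid-map syntax above, and returning `None` stands in for "SID not resolved, trap generated".

```python
from ipaddress import IPv4Network


def expand_sid_map(first_prefix, start_index, range_size=1):
    """Expand a mapping-server entry into per-prefix SID indexes.

    Returns None when the first prefix is not a /32, mirroring the receiver
    behavior described above (SID not resolved, trap generated).
    """
    if not 0 <= start_index <= 4294967295 or not 1 <= range_size <= 65535:
        raise ValueError("index or range outside the configurable values")
    net = IPv4Network(first_prefix)
    if net.prefixlen < 32:
        return None
    base = net.network_address
    return {f"{base + i}/32": start_index + i for i in range(range_size)}


print(expand_sid_map("10.20.1.1/32", 100, range_size=3))
```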

The no form of the sid-map command deletes the range of node SIDs beginning with the specified index value. The no form of the mapping-server command deletes all node SID entries in the OSPF instance.

Use the scope option to specify the flooding scope of the mapping server for the generated OSPF Extended Prefix Range TLV. There is no default value. If the scope is a specific area, the TLV is flooded only in that area.

An ABR that propagates an intra-area OSPF Extended Prefix Range TLV flooded by the mapping server in that area into other areas sets the inter-area flag (IA-flag). The ABR also propagates the TLV if it is received with the inter-area flag set from other ABR nodes but only from the backbone to leaf areas and not leaf areas to the backbone. However, if the identical TLV was advertised as an intra-area TLV in a leaf area, the ABR does not flood the inter-area TLV into that leaf area.
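The ABR flooding rules can be sketched as a predicate. The sketch assumes that area 0 is the backbone, that every propagated copy carries the IA-flag, and that the "identical intra-area TLV" check applies to leaf target areas only, as the text states. `abr_propagates` is a hypothetical name.

```python
BACKBONE = 0  # area 0


def abr_propagates(from_area, to_area, ia_flag, intra_copy_in_to_area):
    """Return True if the ABR floods the TLV received in from_area into to_area.

    A propagated copy is assumed to always carry the IA-flag.
    """
    if from_area == to_area:
        return False
    if to_area != BACKBONE and intra_copy_in_to_area:
        # The identical TLV is already flooded as intra-area in that leaf area.
        return False
    if not ia_flag:
        return True  # intra-area TLV flooded by the mapping server in its area
    # Inter-area TLV from another ABR: only from the backbone to leaf areas.
    return from_area == BACKBONE and to_area != BACKBONE


print(abr_propagates(2, BACKBONE, ia_flag=True, intra_copy_in_to_area=False))
```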

Each time a prefix or a range of prefixes is configured in the SR mapping database in any routing instance, the router originates, in that instance, a prefix-SID sub-TLV for this prefix or range of prefixes within an OSPF Extended Prefix Range TLV. The flooding scope of the TLV from the mapping server is determined as previously described. The reachability of that prefix in the mapping server route table is not checked. Additionally, SR OS does not check whether the SID index is a duplicate of an existing prefix in the local IGP instance database or whether the SID index is out of range with respect to the local SRGB.

IP prefix resolution for segment routing mapping server

The following processing rules apply for IP prefix resolution.

- SPF calculates the next hops, up to max-ecmp, to reach a destination node.

- Each prefix inherits the next hops of one or more destination nodes advertising it.

- A prefix advertised by multiple nodes, all reachable with the same cost, inherits up to max-ecmp next hops from the advertising nodes.

- Next-hop selection, up to max-ecmp, is based on sorting the next hops by:

  - lowest next-hop router ID

  - lowest interface index, for parallel links to the same router ID

  Each next hop keeps a reference to the destination nodes from which it was inherited.
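The inheritance step can be sketched as follows: only advertisers at the best cost contribute next hops, and a next hop shared by several advertisers keeps a reference to each of them. `inherit_next_hops` and the shape of its `spf` argument are hypothetical, and router IDs are assumed to be compared as IPv4 addresses.

```python
from ipaddress import IPv4Address


def inherit_next_hops(advertisers, spf, max_ecmp):
    """Return {next_hop: set_of_destination_nodes} for one prefix.

    advertisers: nodes advertising the prefix.
    spf: node -> (cost, [(router_id, if_index), ...]) from the SPF run.
    """
    reachable = [n for n in advertisers if n in spf]
    if not reachable:
        return {}
    best_cost = min(spf[n][0] for n in reachable)
    refs = {}
    for node in reachable:
        cost, hops = spf[node]
        if cost != best_cost:
            continue  # only equal-cost (best) advertisers contribute next hops
        for hop in hops:
            refs.setdefault(hop, set()).add(node)
    # Lowest router ID first, then lowest interface index; keep max_ecmp.
    return {hop: refs[hop] for hop in sorted(refs)[:max_ecmp]}


spf = {
    "D": (20, [(IPv4Address("10.0.0.2"), 1), (IPv4Address("10.0.0.3"), 2)]),
    "E": (20, [(IPv4Address("10.0.0.3"), 2)]),
    "F": (30, [(IPv4Address("10.0.0.4"), 3)]),
}
for hop, dests in inherit_next_hops(["D", "E", "F"], spf, max_ecmp=2).items():
    print(hop[0], hop[1], sorted(dests))
```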

Prefix SID resolution for segment routing mapping server

The following processing rules apply for prefix SID resolution.

- For a specific prefix, IGP selects the SID value among multiple advertised values in the following order:

  - local intra-area SID owned by this router

  - prefix SID sub-TLV advertised within an OSPF Extended Prefix TLV

    If multiple SIDs exist, select the SID corresponding to the destination router or ABR with the lowest OSPF router ID that is reachable using the first next hop of the prefix.

  - OSPF Extended Prefix Range TLV from the mapping server

    If multiple SIDs exist, select one using the preference rules in draft-ietf-spring-conflict-resolution-05, applied to the SRMS entries of the conflicting SIDs. The rules are applied in the following order:

    - smallest range

    - smallest starting address

    - smallest algorithm

    - smallest starting SID

- The selected SID is used with all ECMP next hops from step 1 toward all destination nodes or ABR nodes that advertised the prefix.

- If duplicate prefix SIDs exist for different prefixes after the above steps, the first SID that is processed is programmed for its corresponding prefix. Subsequent SIDs cause a duplicate SID trap message and are not programmed. The corresponding prefixes are still resolved normally using IP next hops.
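The overall selection order can be sketched as a short function. `select_prefix_sid` and its arguments are hypothetical. The sketch assumes that the caller has already limited the Extended Prefix TLV candidates to advertisers reachable through the prefix's first next hop, and that the mapping-server SID has already been chosen by the SRMS rules.

```python
from ipaddress import IPv4Address


def select_prefix_sid(local_sid, extended_prefix_sids, srms_sid):
    """Pick the SID for one prefix in the order described above.

    local_sid: intra-area SID owned by this router, or None.
    extended_prefix_sids: list of (advertiser_router_id, sid) pairs, already
        limited to advertisers reachable via the prefix's first next hop.
    srms_sid: SID from the winning mapping-server entry, or None.
    """
    if local_sid is not None:
        return local_sid
    if extended_prefix_sids:
        return min(extended_prefix_sids)[1]  # lowest OSPF router ID wins
    return srms_sid


candidates = [(IPv4Address("10.0.0.9"), 30), (IPv4Address("10.0.0.4"), 40)]
print(select_prefix_sid(None, candidates, 50))
```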

SR tunnel programming for segment routing mapping server

The following processing rules apply for SR tunnel programming.

- If the prefix SID is resolved from a prefix SID sub-TLV advertised within an OSPF Extended Prefix TLV, one of the following applies:

  - The SR ILM label is swapped to an SR NHLFE label, as in SR tunnel resolution when the next hop of the OSPF prefix is SR-enabled.

  - The SR ILM label is stitched to an LDP FEC of the same prefix when either the next hop of the OSPF prefix is not SR-enabled (no SR NHLFE) or an import policy rejects the prefix (SR NHLFE is deprogrammed).

    The LDP FEC can also be resolved using the same or a different IGP instance as that of the prefix SID sub-TLV, or using a static route.

- If the prefix SID is resolved from a mapping server advertisement, one of the following applies:

  - The SR ILM label is stitched to an LDP FEC of the same prefix, if one exists. The stitching is performed even if an import policy rejects the prefix in the local OSPF instance.

    The LDP FEC can also be resolved using a static route, a route within an IS-IS instance, or a route within an OSPF instance. The latter two can be the same as, or different from, the IGP instance that advertised the mapping server prefix SID sub-TLV.

  - The SR ILM label is swapped to an SR NHLFE label toward the stitching node.

Segment routing with BGP

Segment routing allows a router, potentially by action of an SDN controller, to source route a packet by prepending a segment routing header containing an ordered list of SIDs. Each SID can be viewed as a topological or service-based instruction. A SID can have a local impact on one particular node, or it can have a global impact within the SR domain, such as the instruction to forward the packet on the ECMP-aware shortest path to reach a prefix P. With SR-MPLS, each SID is an MPLS label and the complete SID list is a stack of labels in the MPLS header.

For all the routers in a network domain to have a common interpretation of a topology SID, the association of the SID with an IP prefix must be propagated by a routing protocol. Traditionally, this is done by an IGP; however, in some cases the effect of a SID may need to be carried across network boundaries that extend beyond IGP boundaries. For these cases, BGP can carry the association of an SR-MPLS SID with an IP prefix by attaching a prefix-SID BGP path attribute to an IP route belonging to a labeled-unicast address family. The prefix SID attribute attached to a labeled-unicast route for prefix P advertises a SID corresponding to the network-wide instruction to forward the packet along the ECMP-aware BGP-computed best path or paths to reach P. The prefix-SID attribute is an optional, transitive BGP path attribute with type code 40. This attribute encodes a 32-bit label-index (into the SRGB space) and also provides details about the SRGB space of the originating router. The encoding of this BGP path attribute and its semantics are further described in draft-ietf-idr-bgp-prefix-sid.
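The attribute layout can be illustrated with a byte-level encoder. The sketch assumes the TLV layout published in RFC 8669 (the RFC that draft-ietf-idr-bgp-prefix-sid became): a Label-Index TLV (type 1) and an Originator SRGB TLV (type 3). It only supports the one-octet attribute length form, and the function names are hypothetical.

```python
import struct

ATTR_FLAGS_OPTIONAL_TRANSITIVE = 0xC0   # Optional bit + Transitive bit
ATTR_TYPE_PREFIX_SID = 40
TLV_LABEL_INDEX = 1
TLV_ORIGINATOR_SRGB = 3


def label_index_tlv(label_index):
    # Type (1 octet), Length (2 octets, = 7), Reserved (1), Flags (2), Label Index (4)
    return struct.pack("!BHBHI", TLV_LABEL_INDEX, 7, 0, 0, label_index)


def originator_srgb_tlv(srgbs):
    # Flags (2 octets), then for each SRGB a 3-octet first label and a 3-octet size.
    value = struct.pack("!H", 0)
    for first_label, size in srgbs:
        value += first_label.to_bytes(3, "big") + size.to_bytes(3, "big")
    return struct.pack("!BH", TLV_ORIGINATOR_SRGB, len(value)) + value


def prefix_sid_attribute(label_index, srgbs):
    value = label_index_tlv(label_index) + originator_srgb_tlv(srgbs)
    if len(value) > 255:
        raise ValueError("sketch only supports the one-octet attribute length")
    header = struct.pack("!BBB", ATTR_FLAGS_OPTIONAL_TRANSITIVE,
                         ATTR_TYPE_PREFIX_SID, len(value))
    return header + value


attr = prefix_sid_attribute(5, [(16000, 8000)])
print(attr.hex())
```

A receiver that uses the label-index derives its label from its own SRGB. The Originator SRGB TLV only describes the SRGB of the originating router.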

An SR OS router with upgraded software that processes the prefix SID attribute can prevent it from propagating outside the segment routing domain where it is applicable, using the block-prefix-sid command. This BGP command removes the prefix SID attribute from all routes sent and received to and from the IBGP and EBGP peers included in the scope of the command. By default, the attribute propagates without restriction.

SR OS attaches a meaning to a prefix SID attribute only when it is attached to routes belonging to the labeled-unicast IPv4 and labeled-unicast IPv6 address families. When attached to routes of unsupported address families, the prefix SID attribute is ignored but still propagated, as with any other optional transitive attribute.

Segment routing must be administratively enabled under BGP, using the config>router>bgp>segment-routing>no shutdown command, for any of the following behaviors to be possible:

- For BGP to redistribute a static or IGP route for a /32 IPv4 prefix as a label-ipv4 route, or a /128 IPv6 prefix as a label-ipv6 route, with a prefix SID attribute, a route-table-import policy with an sr-label-index action is required.

- For BGP to add or modify the prefix SID attribute in a received label-ipv4 or label-ipv6 route, a BGP import policy with an sr-label-index action is required.

- For BGP to advertise a label-ipv4 or label-ipv6 route with an incoming datapath label based on the attached prefix SID attribute.

When BGP segment routing is disabled, new label values assigned to label-ipv4 or label-ipv6 routes come from the dynamic label range of the router and have no network-wide impact.

To enable BGP segment routing, the base router BGP instance must be associated with a prefix-sid-range. This command specifies which SRGB label block to use (for example, to allocate labels). This command also specifies which SRGB label block to advertise in the Originator SRGB TLV of the prefix SID attribute. The global parameter value indicates that BGP should use the SRGB as configured under config>router>mpls-labels>sr-labels. The start-label and max-index parameters are used to restrict the BGP prefix SID label range to a subset of the global SRGB.

This is useful when the SRGB must be partitioned into non-overlapping subranges dedicated to different IGP/BGP protocol instances. Segment routing under BGP must be shut down before any changes can be made to the prefix-sid-range command.
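The label derivation can be sketched as simple arithmetic on the SRGB. `bgp_prefix_sid_label` is a hypothetical helper. It assumes that max-index is inclusive and that a label falling outside the global SRGB cannot be used. Leaving `start_label` unset models the global option.

```python
def bgp_prefix_sid_label(index, srgb_start, srgb_end, start_label=None, max_index=None):
    """Return the label for a BGP label-index, or None if it cannot be used.

    start_label/max_index model a restricted prefix-sid-range; leaving
    start_label as None models the global option (the whole SRGB).
    """
    if start_label is None:
        base, highest_index = srgb_start, srgb_end - srgb_start
    else:
        if max_index is None:
            raise ValueError("max_index is required with start_label")
        base, highest_index = start_label, max_index
    if not 0 <= index <= highest_index:
        return None
    label = base + index
    return label if srgb_start <= label <= srgb_end else None


print(bgp_prefix_sid_label(5, 20000, 27999))
print(bgp_prefix_sid_label(5, 20000, 27999, start_label=26000, max_index=999))
```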

A unique label-index value is assigned to each unique IPv4 or IPv6 prefix that is advertised with a BGP prefix SID. If label-index N1 is assigned to a BGP-advertised prefix P1, and N1 plus the SRGB start-label creates a label value that conflicts with another SR programmed LFIB entry, then the conflict situation is addressed according to the following rules:

- If the conflict is with another BGP route for prefix P2 that was advertised with a prefix SID attribute, all the conflicting BGP routes (for P1 and P2) are advertised with a normal BGP-LU label from the dynamic label range.

- If the conflict is with an IGP route, and BGP is not attempting to redistribute that IGP route as a label-ipv4 or label-ipv6 route with a route-table-import policy action that uses the prefer-igp keyword in the sr-label-index command, the IGP route takes priority and the BGP route is advertised with a normal BGP-LU label from the dynamic label range.

- If the conflict is with an IGP route, and BGP is trying to redistribute that IGP route as a label-ipv4 or label-ipv6 route with a route-table-import policy action that uses the prefer-igp keyword in the sr-label-index command, this is not considered a conflict and BGP uses the IGP-signaled label-index to derive its advertised label. This has the effect of stitching the BGP segment routing tunnel to the IGP segment routing tunnel.

Any /32 label-ipv4 or /128 label-ipv6 BGP routes containing a prefix SID attribute can be resolved and used in the same way as /32 label-ipv4 or /128 label-ipv6 routes without a prefix SID attribute. That is, these routes are installed in the route table and tunnel table (unless disable-route-table-install or selective-label-ipv4-install are in effect), and they can have ECMP next hops or FRR backup next hops and be used as transport tunnels for any service that supports BGP-LU transport.

Receiving a /32 label-ipv4 or /128 label-ipv6 route with a prefix-SID attribute does not create a tunnel in the segment-routing database; it only creates a label swap entry when the route is re-advertised with a new next hop. It is recommended that the first SID in any SID list of an SR policy not be based on a BGP prefix SID; if this recommendation is not followed, the SID list may appear to be valid but the datapath is not programmed correctly. However, it is acceptable to use a BGP prefix SID for any SID other than the first SID in an SR policy.

Segment routing operational procedures

Prefix advertisement and resolution

After segment routing is successfully enabled in the IS-IS or OSPF instance, the router performs the following operations. See IS-IS control protocol changes, OSPFv2 control protocol changes, and OSPFv3 control protocol changes for information about all TLVs and sub-TLVs for both IS-IS and OSPF protocols.

- Advertise the Segment Routing Capability sub-TLV to routers in all areas or levels of this IGP instance. However, only neighbors with which the router has established an adjacency interpret the SID/label range information and use it to calculate the label to swap to or push for a specific resolved prefix SID.

- Advertise the assigned index for each configured node SID in the new prefix SID sub-TLV with the N-flag (node-SID flag) set. The segment routing module programs the ILM with a pop operation for each local node SID in the data path.

- Assign and advertise an adjacency SID label for each formed adjacency over a network IP interface in the new Adjacency SID sub-TLV. The following points should be considered:

  - An adjacency SID is advertised for both numbered and unnumbered network IP interfaces.

  - An adjacency SID is not advertised for an IES interface because access interfaces do not support MPLS.

  - An adjacency SID must be unique per instance and per adjacency. IS-IS MT 0 can establish an adjacency for both IPv4 and IPv6 address families over the same link. In this case, a different adjacency SID is assigned to each next hop. However, the existing IS-IS implementation assigns a single Protect-Group ID (PG-ID) to the adjacency; therefore, when the state machine of a BFD session tracking the IPv4 or IPv6 next hop times out, an action is triggered for the prefixes of both address families over that adjacency.

  The segment routing module programs the ILM with a swap to an implicit null label operation for each advertised adjacency SID.

- Resolve received prefixes. If a prefix SID sub-TLV exists, the segment routing module programs the ILM with a swap operation and an LTN with a push operation, both pointing to the primary/LFA NHLFE. An SR tunnel is also added to the TTM. If a node SID resolves over an IES interface, the data path is not programmed and a trap message is generated. Thus, only next hops of an ECMP set corresponding to network IP interfaces are programmed in the data path; next hops corresponding to IES interfaces are not programmed. However, if the user configures the interface as network on one side and IES on the other side, MPLS packets for the SR tunnel received on the access side are dropped.

- LSA filtering causes SIDs not to be sent in one direction, which means some node SIDs are not resolved in parts of the network upstream of the advertisement suppression.

When the user enables segment routing in an IGP instance, the main SPF and LFA SPF are computed normally and the primary next hop and LFA backup next hop for a received prefix are added to RTM without the label information advertised in the prefix SID sub-TLV. In all cases, the segment routing (SR) tunnel is not added into RTM.

Error and resource exhaustion handling

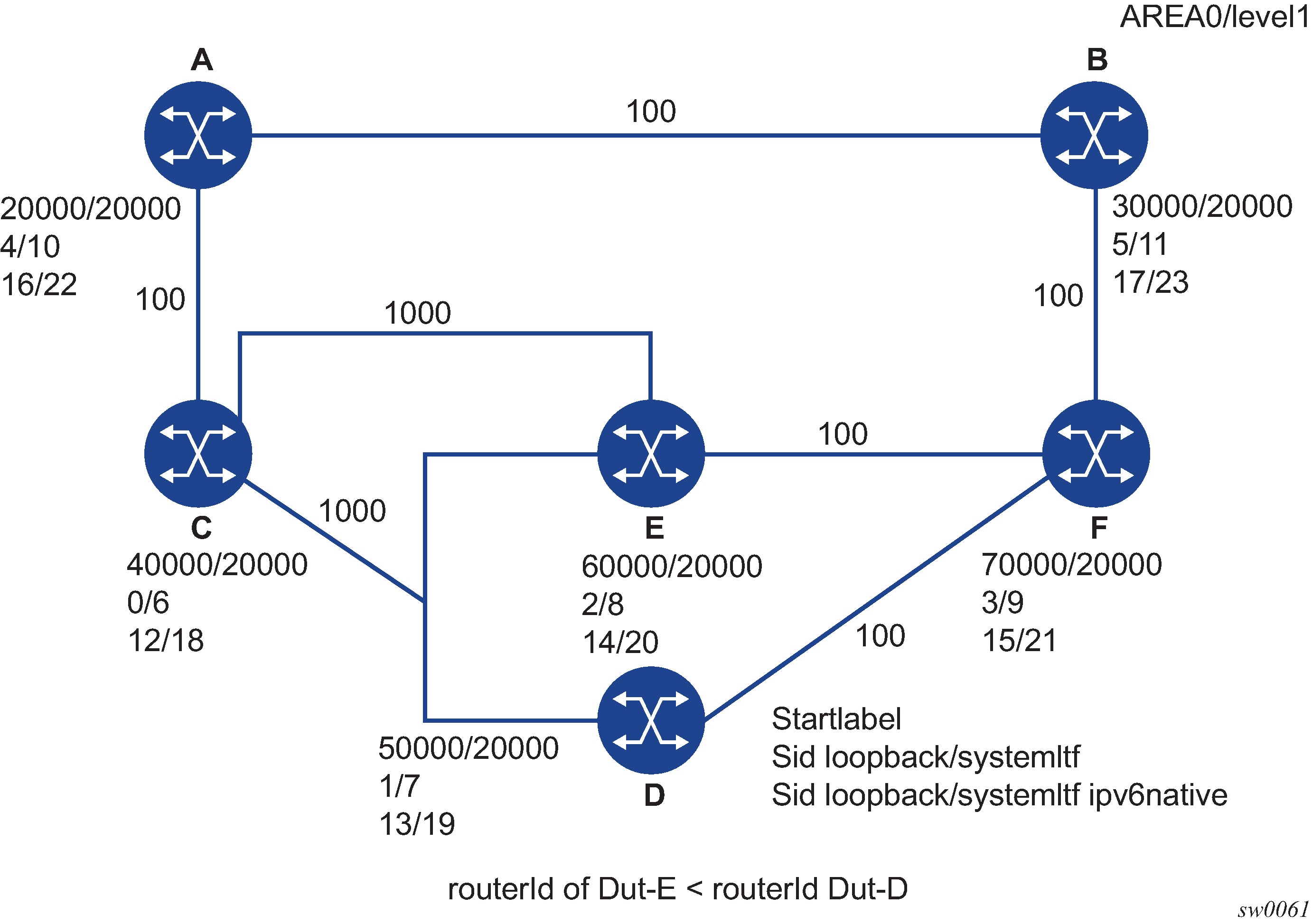

Supporting multiple topologies for the same destination prefix

The SR OS supports assigning different prefix-SID indexes and labels to the same prefix in different IGP instances. While other routers that receive these prefix SIDs program a single route into RTM, based on the winning instance ID as per RTM route type preference, the SR OS adds two tunnels to this destination prefix in TTM. This supports multiple topologies for the same destination prefix.

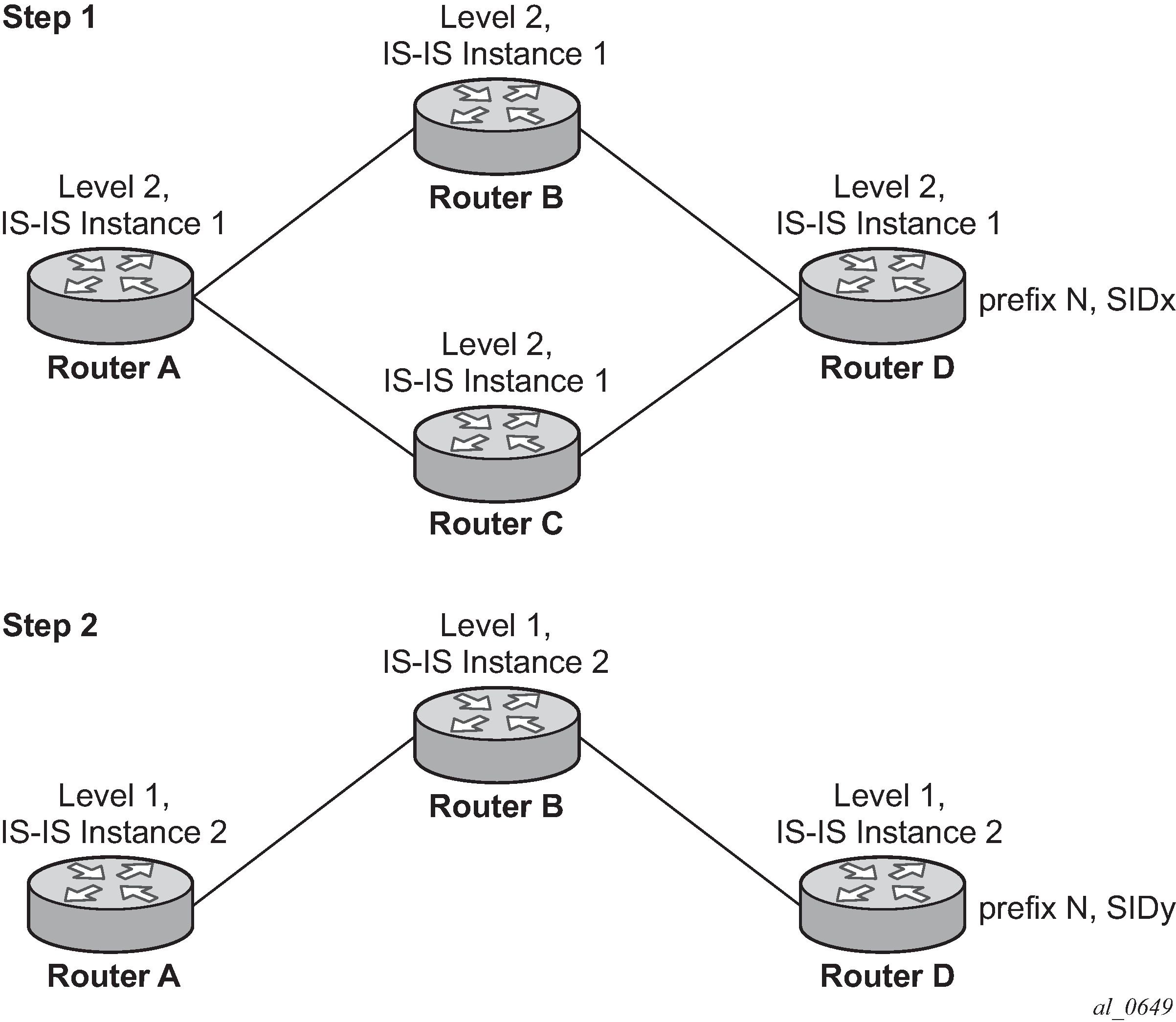

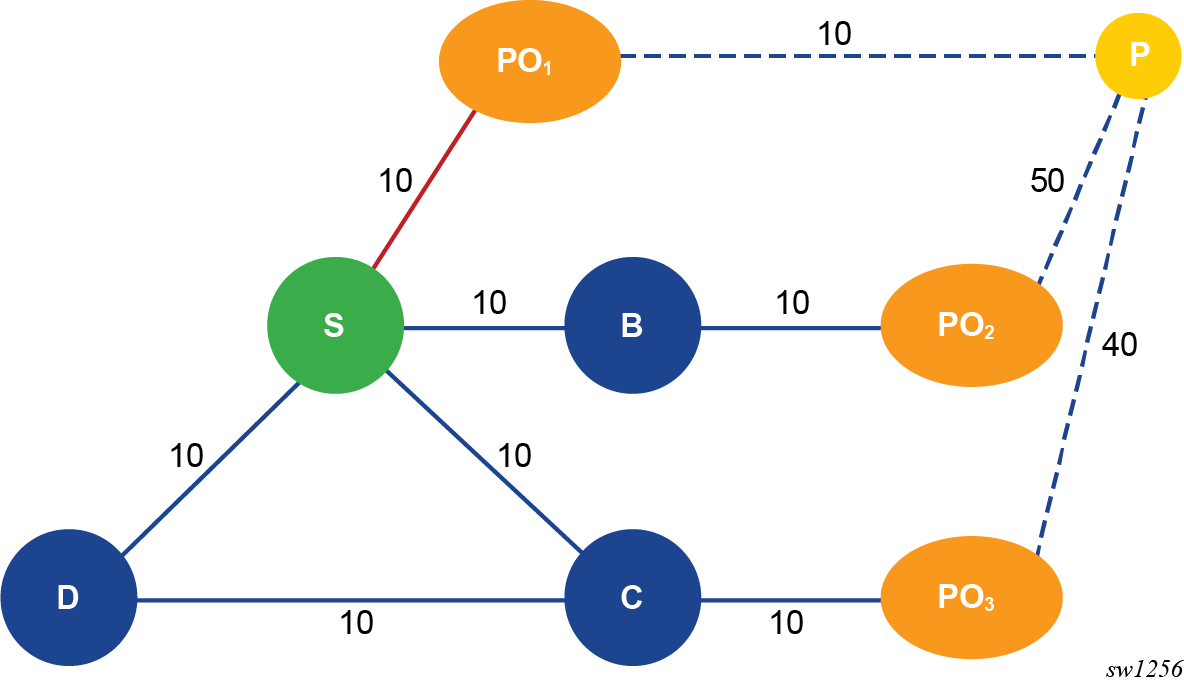

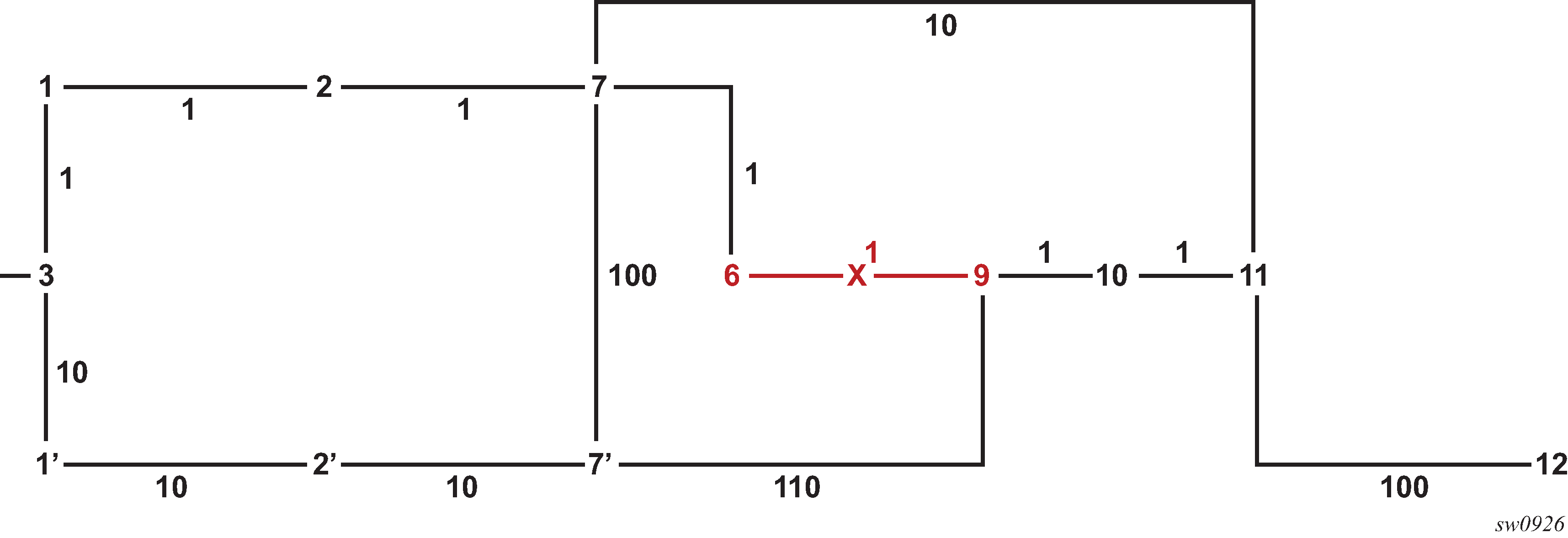

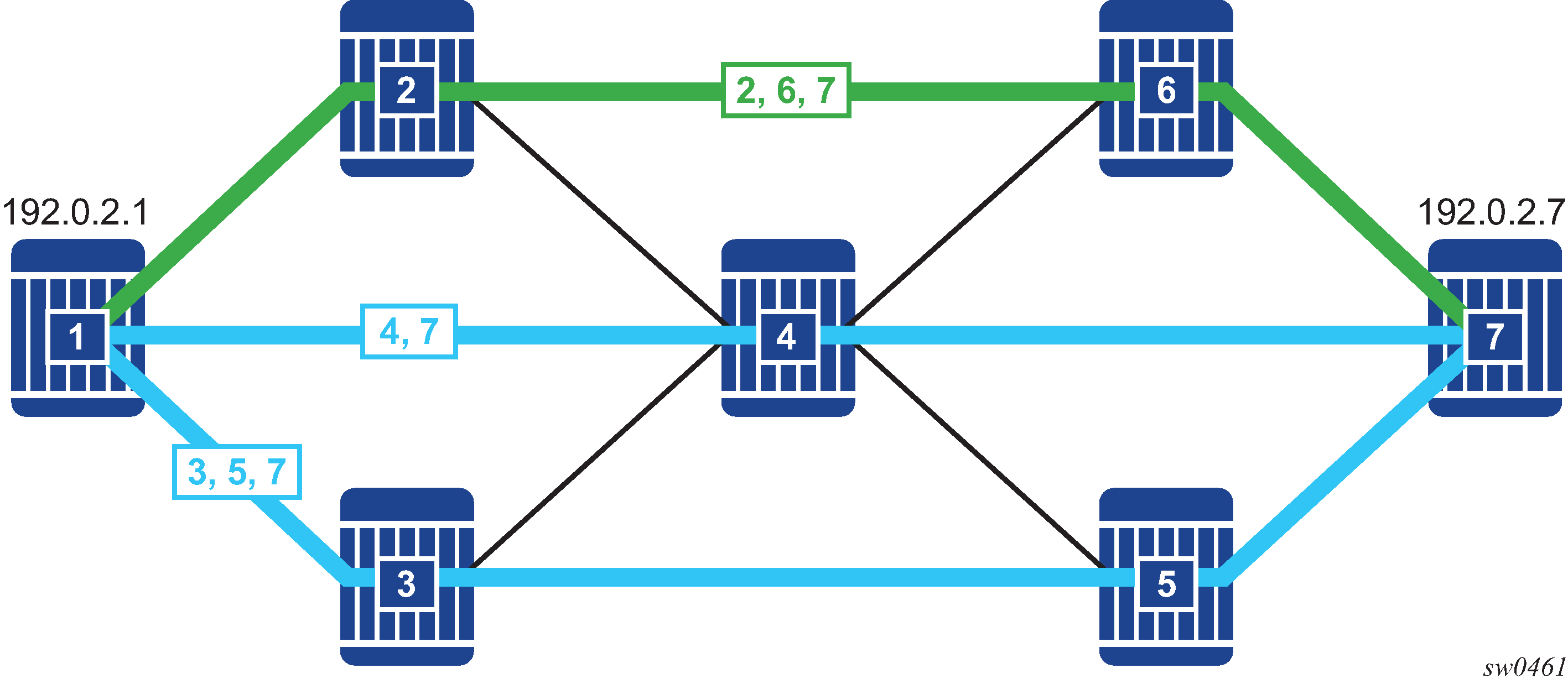

For example, in two instances (L2, IS-IS instance 1 and L1, IS-IS instance 2; see Programming multiple tunnels to the same destination), Router D has the same destination prefix (N) with different SIDs (SIDx and SIDy).

Assume the following route-type preference in RTM and tunnel-type preference in TTM are configured:

- ROUTE_PREF_ISIS_L1_INTER (RTM) 15

- ROUTE_PREF_ISIS_L2_INTER (RTM) 18

- ROUTE_PREF_ISIS_TTM 10

- Router A performs the following resolution within the single IS-IS instance 1, level 2. All metrics are the same, and ECMP = 2.

  - For prefix N, the RTM entry is:

    - prefix N

    - nhop1 = B

    - nhop2 = C

    - preference 18

  - For prefix N, the SR tunnel TTM entry is:

    - tunnel-id 1: prefix N-SIDx

    - nhop1 = B

    - nhop2 = C

    - tunl-pref 10

- Add IS-IS instance 2 (Level 1) in the same configuration, but in routers A, B, and C only.

  - For prefix N, the RTM entry is:

    - prefix N

    - nhop1 = B

    - preference 15

    RTM prefers the L1 route over the L2 route.

  - For prefix N, there are two SR tunnel entries in TTM:

    SR entry for L2:

    - tunnel-id 1: prefix N-SIDx

    - nhop1 = B

    - nhop2 = C

    - tunl-pref 10

    SR entry for L1 is tunnel-id 2: prefix N-SIDy.
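The contrast between RTM and TTM in this example can be modeled in a few lines: RTM keeps one active route chosen by route-type preference, while TTM keeps one SR tunnel per IGP instance. The data structures and function names are hypothetical. The next hops of the L1 tunnel are assumed to match the L1 route, because the example does not list them.

```python
# Routes to prefix N learned in the two IS-IS instances of the example above.
routes = [
    {"instance": 1, "level": "L2", "pref": 18, "nhops": ["B", "C"], "sid": "SIDx"},
    {"instance": 2, "level": "L1", "pref": 15, "nhops": ["B"], "sid": "SIDy"},
]
TUNNEL_PREF = 10  # ROUTE_PREF_ISIS_TTM in the example


def rtm_active_route(routes):
    # RTM installs a single route: the lowest route-type preference wins.
    return min(routes, key=lambda r: r["pref"])


def ttm_sr_tunnels(routes):
    # TTM keeps one SR tunnel per IGP instance, each with its own SID.
    ordered = sorted(routes, key=lambda r: r["instance"])
    return [
        {"tunnel_id": i, "prefix": "N", "sid": r["sid"],
         "nhops": r["nhops"], "tunl_pref": TUNNEL_PREF}
        for i, r in enumerate(ordered, start=1)
    ]


print(rtm_active_route(routes)["level"])
for tunnel in ttm_sr_tunnels(routes):
    print(tunnel)
```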

Resolving received SID indexes or labels to different routes of the same prefix within the same IGP instance

The router can perform the following variations of this procedure:

- When SR OS does not allow assigning the same SID index or label to different routes of the same prefix within the same IGP instance, the router resolves only one of the duplicate SIDs received from another segment routing implementation, selecting it based on the RTM active route selection.

- When SR OS does not allow assigning different SID indexes or labels to different routes of the same prefix within the same IGP instance, the router resolves only one of the duplicate SIDs received from another segment routing implementation, selecting it based on the RTM active route selection.

The selected SID is used for ECMP resolution to all neighbors. If the route is inter-area and the conflicting SIDs are advertised by different ABRs, ECMP toward all ABRs uses the selected SID.

Checking for SID errors before programming the ILM and NHLFE

If any of the following conditions are true, the router logs a trap, generates a syslog error message, and does not program the ILM and NHLFE for the prefix SID.

- The received prefix SID index falls outside of the locally configured SID range.

- One or more resolved ECMP next hops for a received prefix SID did not advertise the SR Capability sub-TLV.

- The received prefix SID index falls outside the advertised SID range of one or more resolved ECMP next hops.

Programming ILM/NHLFE for duplicate prefix-SID indexes/labels for different prefixes

The router can perform the following variations of this procedure:

- For received duplicate prefix-SID indexes or labels for different prefixes within the same IGP instance, the router:

  - programs the ILM/NHLFE for the first prefix-SID index or label

  - logs a trap and generates a syslog error message

  - does not program the subsequent prefix-SID index or label in the datapath

- For received duplicate prefix-SID indexes or labels for different prefixes across IGP instances, there are two options:

  - In the global SID index range mode of operation, the resulting ILM label value is the same across the IGP instances. The router:

    - programs ILM/NHLFE for the prefix of the winning IGP instance based on the RTM route-type preference

    - logs a trap and generates a syslog error message

    - does not program the subsequent prefix SIDs in the datapath

  - In the per-instance SID index range mode of operation, the resulting ILM label has different values across the IGP instances. The router programs ILM/NHLFE for each prefix as expected.
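The duplicate-handling rules can be sketched by keying programmed entries on the resulting ILM label. Per-instance ranges produce distinct labels, so they never collide, while a global range makes collisions across instances visible. `program_prefix_sids` is a hypothetical helper. The sketch assumes that a lower RTM preference value wins (15 beats 18, as in the examples in this section) and that each rejected entry corresponds to a trap and a syslog message.

```python
def program_prefix_sids(advertisements, rtm_pref):
    """advertisements: (igp_instance, prefix, ilm_label) tuples in processing order.
    rtm_pref: igp_instance -> RTM route-type preference (lower value wins).
    Returns (programmed, rejected); programmed maps label -> (instance, prefix).
    """
    programmed = {}
    rejected = []  # each entry would raise a trap and a syslog error message
    for instance, prefix, label in advertisements:
        current = programmed.get(label)
        if current is None:
            programmed[label] = (instance, prefix)
        elif current[0] != instance and rtm_pref[instance] < rtm_pref[current[0]]:
            # Across instances: the winning instance by RTM preference takes the label.
            rejected.append(current)
            programmed[label] = (instance, prefix)
        else:
            # Same instance, or a losing instance: the first entry keeps the label.
            rejected.append((instance, prefix))
    return programmed, rejected


advs = [
    ("isis-1", "10.0.0.1/32", 20010),
    ("isis-1", "10.0.0.2/32", 20010),
    ("isis-2", "10.0.0.3/32", 20010),
]
print(program_prefix_sids(advs, {"isis-1": 18, "isis-2": 15}))
```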

Programming ILM/NHLFE for the same prefix across IGP instances

In global SID index range mode of operation, the resulting ILM label value is the same across the IGP instances. The router programs ILM/NHLFE for the prefix of the winning IGP instance based on the RTM route-type preference. The router logs a trap and generates a syslog error message, and does not program the other prefix SIDs in the datapath.

In the per-instance SID index range mode of operation, the resulting ILM label has different values across the IGP instances. The router programs ILM/NHLFE for each prefix as expected.
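The difference between the two modes comes down to label arithmetic. In the following worked example, the SRGB start values and the index are hypothetical and chosen only for illustration. In global mode, both instances map index 10 to the same ILM label, so only the winning instance is programmed. Per-instance ranges yield distinct labels.

```latex
\begin{aligned}
\ell_i &= B_i + x_i && \text{label in IGP instance } i \text{ (SRGB start } B_i\text{, SID index } x_i\text{)} \\
\text{global mode: } B_1 = B_2 = 20000,\ x_1 = x_2 = 10 &\;\Rightarrow\; \ell_1 = \ell_2 = 20010 \\
\text{per-instance mode: } B_1 = 20000,\ B_2 = 21000,\ x_1 = x_2 = 10 &\;\Rightarrow\; \ell_1 = 20010,\ \ell_2 = 21010
\end{aligned}
```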

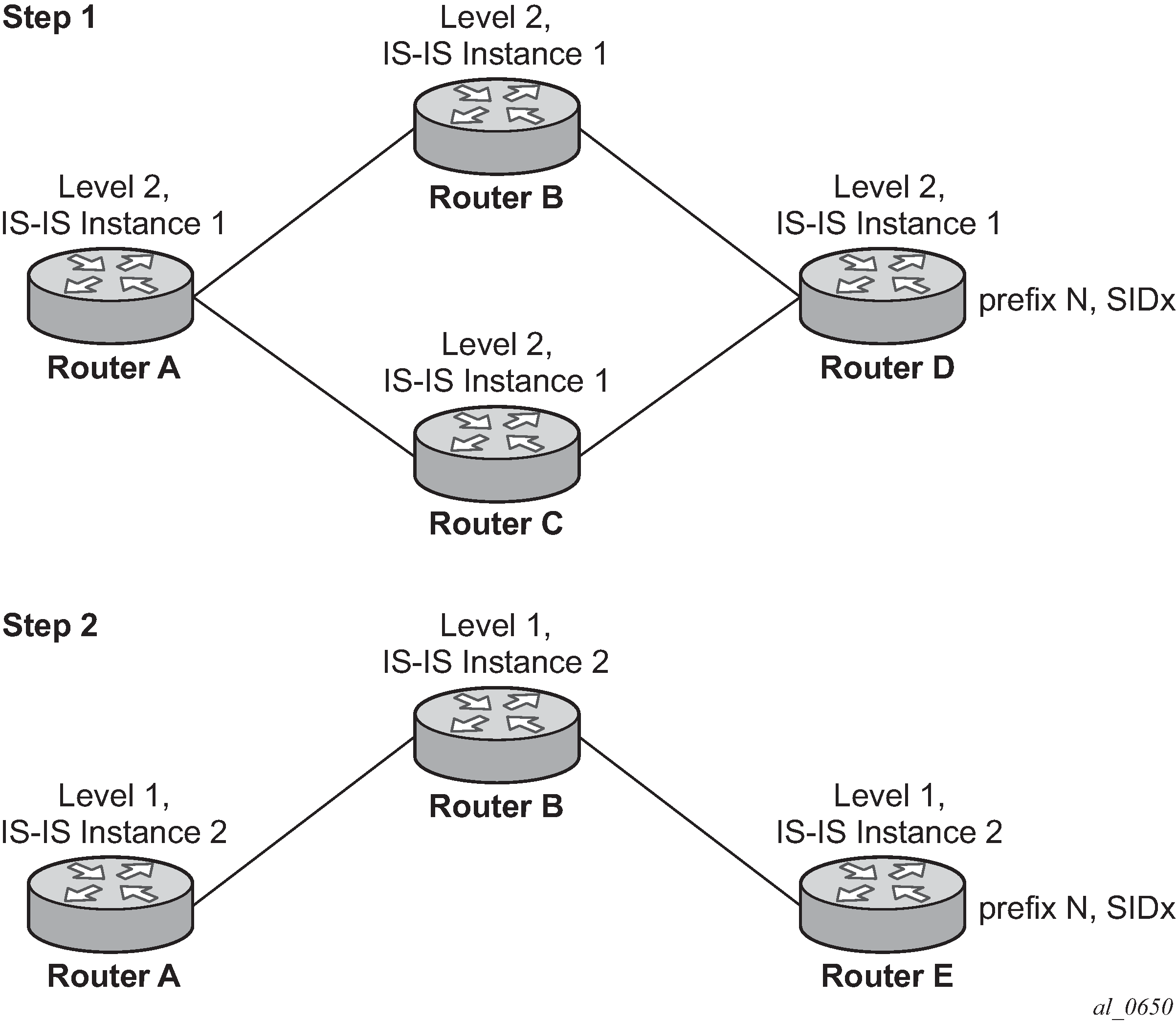

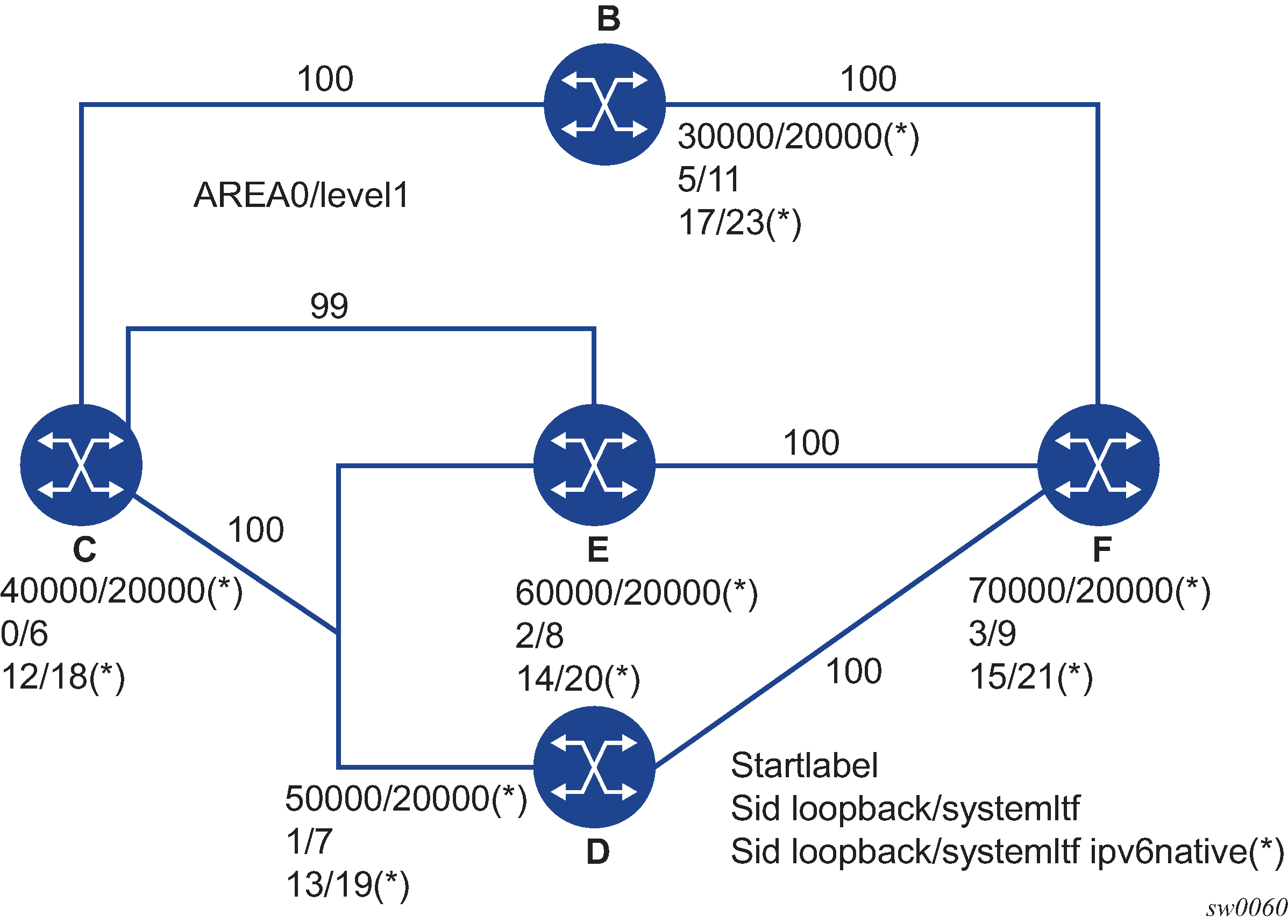

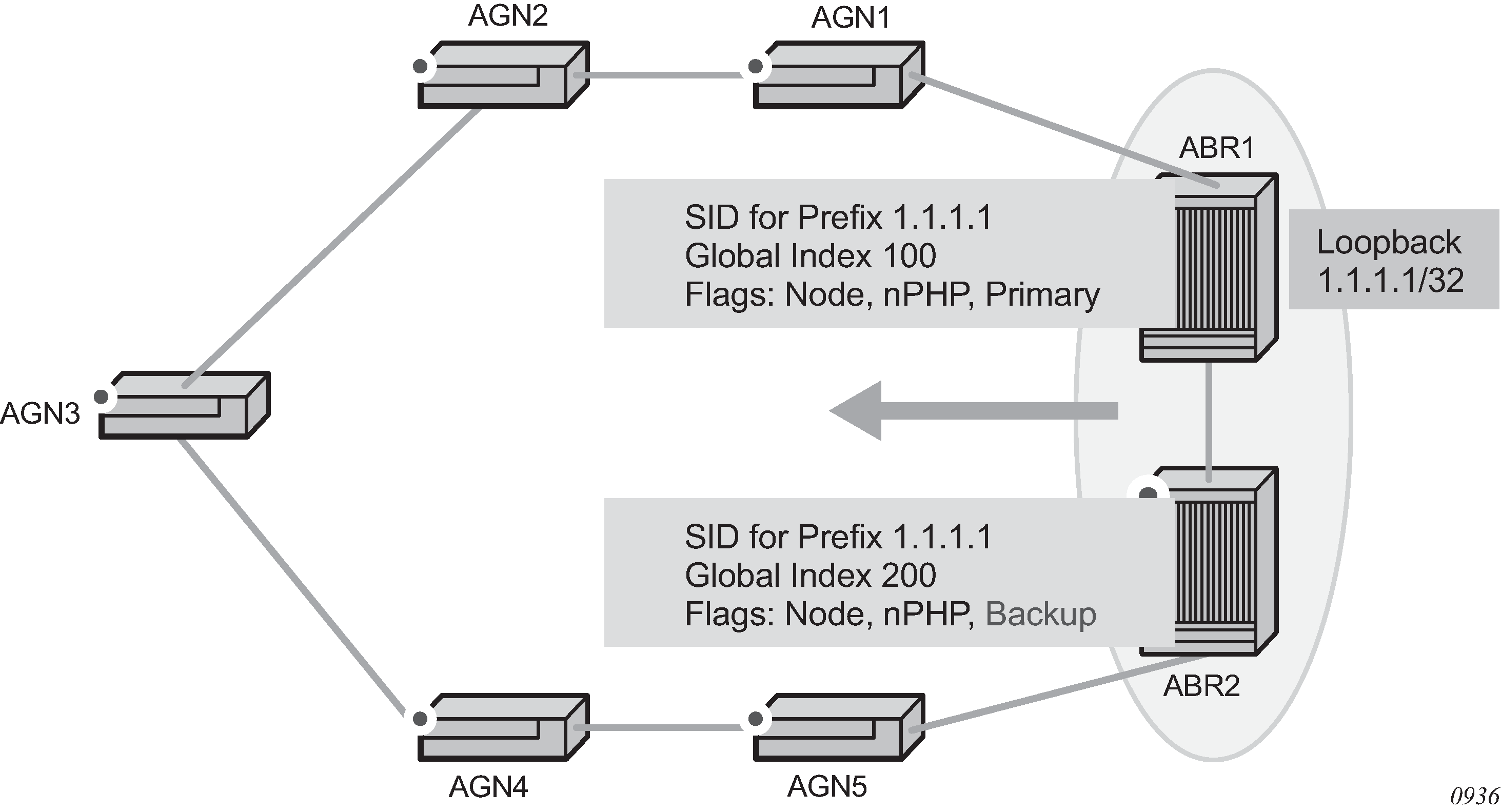

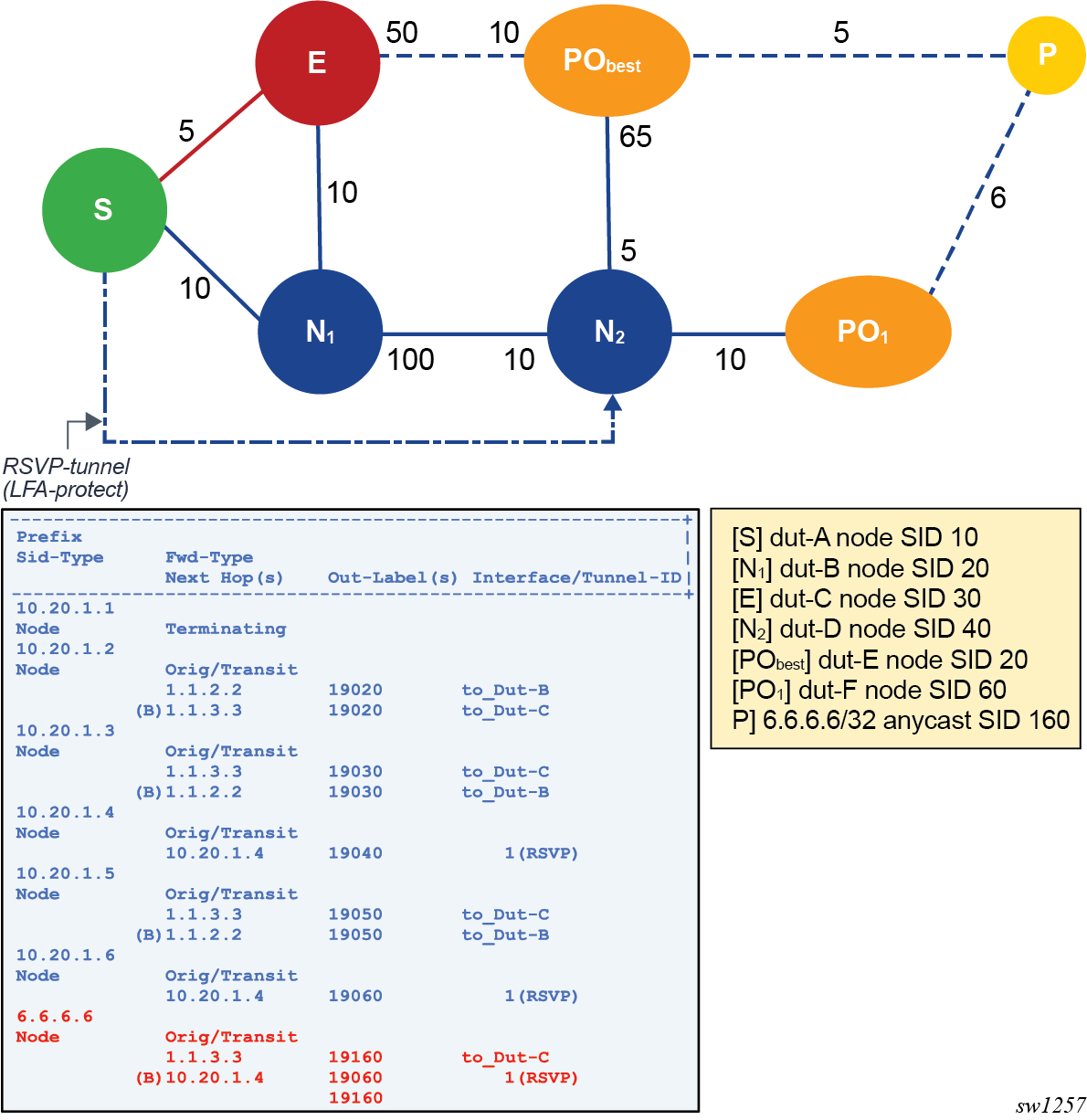

The following figure shows an IS-IS example of how the same prefix is handled across IGP instances when a global SID index range is used.

Assume the following route-type preferences in RTM and tunnel-type preference in TTM are configured:

- ROUTE_PREF_ISIS_L1_INTER (RTM) 15
- ROUTE_PREF_ISIS_L2_INTER (RTM) 18
- ROUTE_PREF_ISIS_TTM 10

- Router A performs the following resolution within the single Level 2 IS-IS instance 1. All metrics are the same and ECMP = 2.
  - For prefix N, the RTM entry is:
    - prefix N
    - nhop1 = B
    - nhop2 = C
    - preference 18
  - For prefix N, the SR tunnel TTM entry is:
    - tunnel-id 1: prefix N-SIDx
    - nhop1 = B
    - nhop2 = C
    - tunl-pref 10

- Add Level 1 IS-IS instance 2 to the same configuration, but in routers A, B, and E only.
  - For prefix N, the RTM entry is as follows (the RTM prefers this L1 route over the L2 route):
    - prefix N
    - nhop1 = B
    - preference 15
  - For prefix N, there is one SR tunnel entry for L2 in TTM:
    - tunnel-id 1: prefix N-SIDx
    - nhop1 = B
    - nhop2 = C
    - tunl-pref 10

Handling ILM resource exhaustion while assigning a SID index/label

If the system exhausts an ILM resource while assigning a SID index/label to a local loopback interface, the index allocation fails and an error is displayed in the CLI. The router also logs a trap and generates a syslog error message.

Handling ILM, NHLFE, or other IOM or CPM resource exhaustion while resolving or programming a SID index/label

If the system exhausts an ILM, NHLFE, or any other IOM or CPM resource while resolving and programming a received prefix SID or programming a local adjacency SID, the following occurs:

- The IGP instance goes into overload, and a trap and syslog error message are generated.
- The segment routing module deletes the tunnel.

The user must manually clear the IGP overload condition after freeing resources. After the IGP is brought back up, it attempts, at the next SPF, to program all tunnels that previously failed to be programmed.
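The overload-and-retry sequence can be pictured with the following Python model. It is a hypothetical sketch, not SR OS internals: IgpInstanceModel and its methods are invented names, a single capacity counter stands in for all ILM, NHLFE, IOM, and CPM resources, and a list of strings stands in for traps and syslog messages.

```python
class IgpInstanceModel:
    """Hypothetical model of SR programming under resource exhaustion."""

    def __init__(self, capacity):
        self.capacity = capacity  # stand-in for free ILM/NHLFE/IOM/CPM resources
        self.tunnels = set()      # SR tunnels currently programmed
        self.failed = set()       # tunnels to retry at the next SPF
        self.overload = False
        self.log = []             # stand-in for traps and syslog messages

    def program(self, tunnel):
        if tunnel in self.tunnels:
            return True
        if len(self.tunnels) >= self.capacity:
            # Resource exhausted: enter overload and delete the tunnel.
            self.overload = True
            self.log.append(f"overload: cannot program {tunnel}")
            self.tunnels.discard(tunnel)
            self.failed.add(tunnel)
            return False
        self.tunnels.add(tunnel)
        return True

    def clear_overload(self, freed):
        """The user frees resources, then manually clears the overload."""
        self.capacity += freed
        self.overload = False

    def run_spf(self):
        # Failed tunnels are retried only after the overload is cleared.
        if self.overload:
            return
        for tunnel in sorted(self.failed):
            if self.program(tunnel):
                self.failed.discard(tunnel)
```

In this model, calling run_spf() while the instance is still in overload changes nothing; only clear_overload() followed by run_spf() reprograms the failed tunnels.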

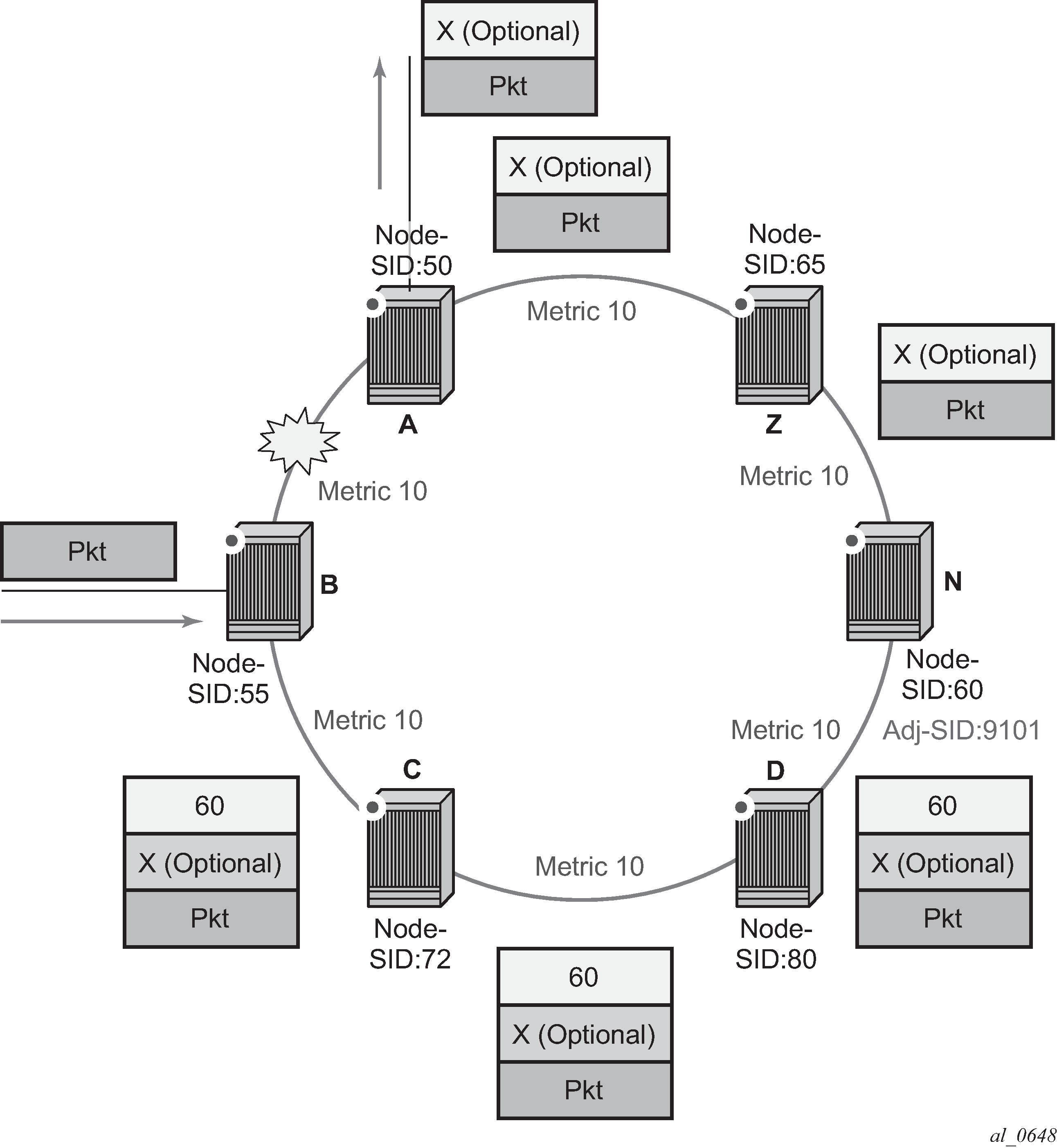

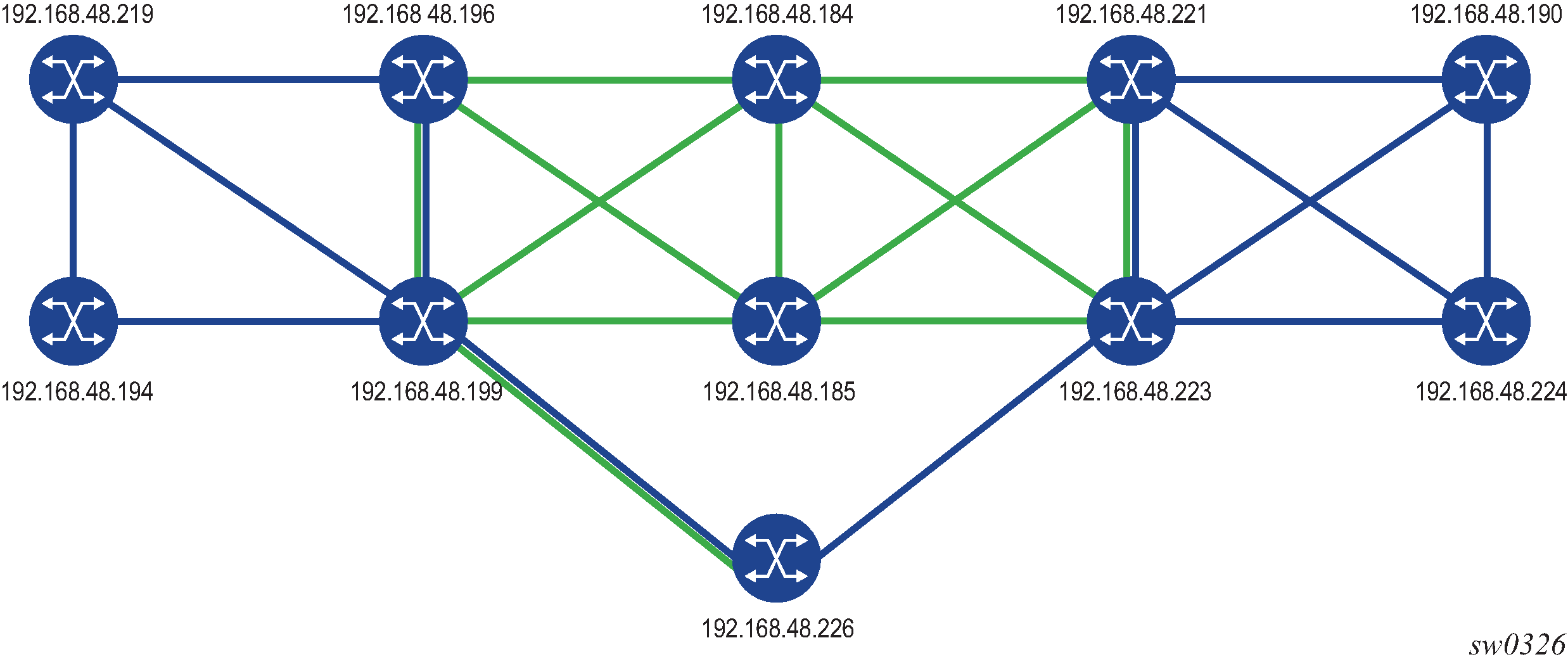

Segment routing tunnel management

The segment routing module adds a shortest path SR tunnel entry to TTM for each resolved remote node SID prefix and programs the data path with the corresponding LTN with the push operation pointing to the primary and LFA backup NHLFEs. The LFA backup next hop for a prefix which was advertised with a node SID is only computed if the loopfree-alternates option is enabled in the IS-IS or OSPF instance. The resulting SR tunnel that is populated in TTM is automatically protected with FRR when an LFA backup next hop exists for the prefix of the node SID.

With ECMP, a maximum of 32 primary next-hops (NHLFEs) are programmed for the same tunnel destination for each IGP instance. ECMP and LFA next-hops are mutually exclusive.

The default TTM preference for shortest path SR tunnels makes them less preferred than LDP tunnels but more preferred than BGP tunnels (a lower preference value is more preferred). This allows customers to migrate in a controlled way, without disrupting their current deployment when they enable segment routing. The default preferences below cover both types of shortest path SR tunnels (SR-ISIS and SR-OSPF).

The global default TTM preference for the tunnel types is as follows:

- ROUTE_PREF_RSVP 7
- ROUTE_PREF_SR_TE 8
- ROUTE_PREF_LDP 9
- ROUTE_PREF_OSPF_TTM 10
- ROUTE_PREF_ISIS_TTM 11
- ROUTE_PREF_BGP_TTM 12
- ROUTE_PREF_GRE 255

All IS-IS instances share the same default SR-ISIS preference, and all OSPF instances share the same default SR-OSPF preference, even if more than one instance programmed a tunnel for the same prefix. In that case, the SR tunnel is selected based on the lowest IGP instance ID.

The TTM preference is used for BGP shortcuts, VPRN auto-bind, or BGP transport tunnels when the tunnel binding commands are configured with the any value, which parses the TTM for tunnels in protocol preference order. The user can either use the global TTM preference or explicitly list the tunnel types to be used. When the tunnel types are listed explicitly, the TTM preference is still used to select one type over another. In both cases, if the selected tunnel type fails, the system falls back to the next preferred tunnel type. When a more preferred tunnel type becomes available, the system reverts to that tunnel type. See BGP shortcut using segment routing tunnel, BGP label route resolution using segment routing tunnel, and Service packet forwarding with segment routing for the detailed service and shortcut binding CLI.
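The selection logic can be sketched in Python as follows. This is an illustration under stated assumptions, not the TTM implementation: the short tunnel-type names are labels chosen for the sketch (not CLI keywords), select_tunnel() is a hypothetical function, and per-instance tunnel-table-pref overrides are not modeled. Re-evaluating the function whenever a tunnel goes up or down yields the fallback and revert behavior described above.

```python
# Global default TTM preferences from the list above (lower value wins).
# The short type names are labels chosen for this sketch, not CLI keywords.
DEFAULT_TTM_PREF = {
    "rsvp": 7, "sr-te": 8, "ldp": 9, "sr-ospf": 10,
    "sr-isis": 11, "bgp": 12, "gre": 255,
}


def select_tunnel(available, allowed=None, pref=DEFAULT_TTM_PREF):
    """Pick a tunnel to a destination.

    available: (tunnel_type, igp_instance_id) pairs that are currently up;
               the instance ID is None for non-IGP tunnel types.
    allowed:   explicit list of tunnel types, or None for the "any" value.
    Returns the selected pair, or None if nothing usable is up.
    """
    candidates = [t for t in available if allowed is None or t[0] in allowed]
    if not candidates:
        return None
    # Lowest TTM preference first; among SR tunnels of the same type,
    # the lowest IGP instance ID.
    return min(
        candidates,
        key=lambda t: (pref[t[0]], t[1] if t[1] is not None else -1),
    )
```

For example, with LDP and two SR-ISIS instances up, LDP is selected; if the LDP tunnel goes down, the next evaluation falls back to the SR-ISIS tunnel of the lower instance ID.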

For SR-ISIS and SR-OSPF, the user can change the preference of each IGP instance away from the default values. The preference value is from 1 to 255.

config>router>isis>segment-routing>tunnel-table-pref preference

config>router>ospf>segment-routing>tunnel-table-pref preference

Tunnel MTU determination

The MTU of an SR tunnel populated into TTM is determined in the same way as for other IGP-based tunnels, such as an LDP LSP: it is based on the outgoing interface MTU minus the label stack size. Segment routing, however, also supports remote LFA and TI-LFA, which can program an LFA repair tunnel by adding one or more labels, so the SR tunnel MTU must also account for these labels.

To configure the MTU of all SR tunnels within each IGP instance:

config>router>isis>segment-routing>tunnel-mtu bytes bytes

config>router>ospf>segment-routing>tunnel-mtu bytes bytes

There is no default value for this command. If the user does not configure an SR tunnel MTU, the MTU is determined by the IGP as follows:

SR_Tunnel_MTU = MIN {Cfg_SR_MTU, IGP_Tunnel_MTU - (1 + frr-overhead) * 4}

Where:

- Cfg_SR_MTU is the MTU configured by the user for all SR tunnels within an IGP instance using the CLI shown above. If no value was configured by the user, the SR tunnel MTU is determined by the IGP interface calculation.
- IGP_Tunnel_MTU is the minimum of the IS-IS or OSPF interface MTU among all the ECMP paths, or among the primary and LFA backup paths, of this SR tunnel.
- frr-overhead is set as follows:
  - the value of ti-lfa [max-sr-frr-labels labels] if loopfree-alternates and ti-lfa are enabled in this IGP instance
  - 1 if loopfree-alternates and remote-lfa are enabled but ti-lfa is disabled in this IGP instance
  - otherwise, 0

The SR tunnel MTU is dynamically updated any time one of the parameters used in its calculation changes. This includes changes to the set of tunnel next hops and user changes to the configured SR MTU or interface MTU value.
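The formula can be checked with the following Python sketch. The function and parameter names are hypothetical; the arithmetic follows the formula above, where the 4 is the size in bytes of one MPLS label stack entry and, as read here, the 1 accounts for the SR tunnel's own label. The sketch requires max_sr_frr_labels to be passed explicitly because this section does not state its default.

```python
MPLS_LABEL_BYTES = 4  # each MPLS label stack entry is 4 bytes


def sr_tunnel_mtu(path_mtus, cfg_sr_mtu=None, loopfree_alternates=False,
                  remote_lfa=False, ti_lfa=False, max_sr_frr_labels=None):
    """SR_Tunnel_MTU = MIN{Cfg_SR_MTU, IGP_Tunnel_MTU - (1 + frr-overhead) * 4}.

    path_mtus:  IS-IS/OSPF interface MTUs of all ECMP paths, or of the
                primary and LFA backup paths, of the tunnel.
    cfg_sr_mtu: the configured tunnel-mtu, or None if not configured.
    """
    igp_tunnel_mtu = min(path_mtus)
    if loopfree_alternates and ti_lfa:
        if max_sr_frr_labels is None:
            raise ValueError("ti-lfa needs a max-sr-frr-labels value")
        frr_overhead = max_sr_frr_labels
    elif loopfree_alternates and remote_lfa:
        frr_overhead = 1
    else:
        frr_overhead = 0
    computed = igp_tunnel_mtu - (1 + frr_overhead) * MPLS_LABEL_BYTES
    # Without a configured value, the IGP calculation alone applies.
    return computed if cfg_sr_mtu is None else min(cfg_sr_mtu, computed)
```

For example, with a 1500-byte interface MTU and remote LFA enabled (frr-overhead = 1), the SR tunnel MTU is 1500 - 2 * 4 = 1492 bytes.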

Segment routing local block

Some labels that are provisioned through CLI or a management interface must be allocated from the Segment Routing Local Block (SRLB). The SRLB is a reserved label block configured under config>router>mpls-labels. See the 7450 ESS, 7750 SR, 7950 XRS, and VSR MPLS Guide for more information about reserved label blocks.

The label block to use is specified by the srlb command under IS-IS or OSPF:

config>router>isis>segment-routing

[no] srlb reserved-label-block-name

config>router>ospf>segment-routing

[no] srlb reserved-label-block-name

Provisioned labels for adjacency SIDs and adjacency SID sets must be allocated from the configured SRLB. If no SRLB is specified, the requested label does not fall within the SRLB, or the label is already allocated, the request is rejected.
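The three rejection conditions can be expressed as a short Python check. Srlb, SrlbError, and allocate_provisioned_label() are hypothetical names for this sketch, and the label range used in any example is illustrative, not a default SRLB.

```python
class SrlbError(ValueError):
    """Raised when a provisioned label request is rejected."""


class Srlb:
    """Hypothetical model of a reserved label block used as the SRLB."""

    def __init__(self, name, start_label, end_label):
        self.name = name
        self.start_label = start_label
        self.end_label = end_label
        self.allocated = set()  # labels already handed out


def allocate_provisioned_label(srlb, label):
    """Allocate a provisioned adjacency SID or adjacency-set SID label."""
    if srlb is None:
        raise SrlbError("no SRLB is specified")
    if not srlb.start_label <= label <= srlb.end_label:
        raise SrlbError(f"label {label} is outside SRLB {srlb.name}")
    if label in srlb.allocated:
        raise SrlbError(f"label {label} is already allocated")
    srlb.allocated.add(label)
    return label
```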

Bundling adjacencies in adjacency sets

An adjacency set is a bundle of adjacencies, represented by a common adjacency SID for the bundled set. It enables, for example, a path for an SR-TE LSP through a network to be specified while allowing the local node to spray packets across the set of links identified by a single adjacency SID.

SR OS supports both parallel adjacency sets (that is, sets whose adjacencies originate on one node and terminate on a second, common node) and adjacency sets that associate multiple interfaces on a specified node, irrespective of whether the far ends of the links of those interfaces terminate on the same node.

An adjacency set is created under IS-IS or OSPF using the following CLI commands:

config

router

isis | ospf

segment-routing

[no] adjacency-set id

family [ipv4 | ipv6]

parallel [no-advertise]

no parallel

exit

...

exit

exit

config

router

ospf

segment-routing

[no] adjacency-set id

parallel [no-advertise]

no parallel

exit

...


exit

The adjacency-set id command specifies an adjacency set, where id is an unsigned integer from 0 to 4294967295.

In IS-IS, each adjacency set is assigned an address family, IPv4 or IPv6. The family command for IS-IS indicates the address family of the adjacency set. For OSPF, the address family of the adjacency set is implied by the OSPF version and the instance.

The parallel command indicates that all members of the adjacency set must terminate on the same neighboring node. When the parallel command is configured, the system generates a trap message if a user attempts to add an adjacency that terminates on a neighboring node different from that of the existing members of the adjacency set. In this case, the system also stops advertising the adjacency set and deprograms it from TTM. The parallel command is enabled by default. See Associating an interface with an adjacency set for details about how to add interfaces to an adjacency set.

By default, parallel adjacency sets are advertised in the IGP. The no-advertise option prevents a parallel adjacency set from being advertised in the IGP. Only adjacency sets with the parallel command configured are advertised. If a non-parallel adjacency set is used, an external controller may be needed to coordinate the label sets for SIDs at all downstream nodes to prevent issues with ECMP, because the label stack below the adjacency set label must be valid at any downstream node that exposes it, even though packets are sprayed over multiple downstream next hops. As a result, non-parallel adjacency sets are not advertised in the IGP.

Parallel adjacency sets are programmed in TTM (unless there is an erroneous configuration of a non-parallel adjacency). Non-parallel adjacency sets are not added to TTM or RTM, meaning they cannot be used as a hop at the originating node. Parallel adjacency sets that are advertised are included in the link-state database and TE database, but non-parallel adjacency sets are not included because they are not advertised.

An adjacency set with only one next hop is also advertised as an individual adjacency SID with the S flag set. However, the system does not calculate a backup for an adjacency set even if it has only one next hop.
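The advertisement and TTM rules above can be summarized with the following Python model. It is a hypothetical sketch: the AdjacencySet class and its properties are invented names, far-end nodes are plain strings, and the trap list stands in for the system trap. Single-next-hop advertisement with the S flag is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class AdjacencySet:
    """Hypothetical model of the adjacency-set rules described above."""
    set_id: int
    parallel: bool = True        # the parallel command is enabled by default
    no_advertise: bool = False   # parallel no-advertise
    neighbors: list = field(default_factory=list)  # far-end node per member
    traps: list = field(default_factory=list)      # stand-in for traps

    def add_member(self, neighbor):
        if self.parallel and self.neighbors and neighbor not in self.neighbors:
            self.traps.append(
                f"adjacency-set {self.set_id}: {neighbor} is not a common neighbor")
        self.neighbors.append(neighbor)

    @property
    def misconfigured(self):
        """A parallel set whose members terminate on different neighbors."""
        return self.parallel and len(set(self.neighbors)) > 1

    @property
    def in_ttm(self):
        # Only correctly configured parallel sets are programmed in TTM.
        return self.parallel and not self.misconfigured

    @property
    def advertised(self):
        # Non-parallel sets are never advertised; parallel ones are
        # advertised unless no-advertise is configured.
        return self.in_ttm and not self.no_advertise
```

Modeling advertised as depending on in_ttm captures that a parallel set with a mismatched member is both withdrawn from the IGP and deprogrammed from TTM.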

Associating an interface with an adjacency set

IS-IS or OSPF interfaces are associated with one or more adjacency sets using the following CLI commands. Both numbered and unnumbered interfaces can be assigned to the same adjacency set.

config

router

isis

interface

[no] adjacency-set id

[no] adjacency-set id

[no] adjacency-set id

config

router

ospf

area

interface

[no] adjacency-set id

[no] adjacency-set id

[no] adjacency-set id

If an interface is assigned to an adjacency set, a common adjacency SID value is advertised for every interface in the set, in addition to the adjacency SID corresponding to the IPv4 or IPv6 adjacency (or both) for the interface. Each IS-IS or OSPF advertisement therefore contains two adjacency SID TLVs for an address family:

- an adjacency SID for the interface (a locally unique value)
- an adjacency SID TLV for the adjacency set. This TLV is distinguished by having the S-bit (IS-IS) or G-bit (OSPF) in the flags field set to 1. Its value is the same for all adjacencies in the set at that node.
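As a simplified sketch of what one member interface advertises per address family, the following Python code builds the two adjacency SID entries. AdjSidTlv and adjacency_sid_tlvs() are hypothetical names; only the set flag (S for IS-IS, G for OSPF) is modeled, and all other flags and the on-the-wire bit positions are deliberately omitted.

```python
from dataclasses import dataclass

# Flag that marks an adjacency SID as belonging to an adjacency set.
SET_FLAG = {"isis": "S", "ospf": "G"}


@dataclass(frozen=True)
class AdjSidTlv:
    sid: int
    flags: frozenset  # symbolic flag names only, not encoded bits


def adjacency_sid_tlvs(protocol, interface_sid, set_sid=None):
    """Adjacency SID TLVs advertised for one interface and address family."""
    tlvs = [AdjSidTlv(interface_sid, frozenset())]  # per-interface SID
    if set_sid is not None:
        # Same set SID on every member interface, with the S-bit or G-bit set.
        tlvs.append(AdjSidTlv(set_sid, frozenset({SET_FLAG[protocol]})))
    return tlvs
```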

By default, both the adjacency SID for an interface and the adjacency SID for a set are dynamically allocated by the system. However, it is possible for the user to configure an alternate, static value for the SID; see Provisioning adjacency SID values for an adjacency set for more information.

A maximum of 32 interfaces can be bound to a common adjacency set. Configuring more than 32 interfaces is blocked by the system and a CLI error is generated.

Only point-to-point interfaces can be assigned to an adjacency set.

If a user attempts to assign an IES interface to an adjacency set, the system generates a CLI warning and segment routing does not program the association.

The IGP blocks the configuration of an adjacency set under an interface when the adjacency set has not yet been created under segment-routing.

In IS-IS, it is possible to add Level 1, Level 2, or a mix of Level 1 and Level 2 adjacencies to the same adjacency set.
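The association constraints can be collected into one validation routine, sketched in Python below. Interface, AdjSetConfigError, and associate() are hypothetical names. The order of the checks and the treatment of a non-point-to-point interface as a blocking error are assumptions, because this section only says that such interfaces cannot be assigned.

```python
from dataclasses import dataclass

MAX_INTERFACES_PER_SET = 32


class AdjSetConfigError(Exception):
    """Stand-in for a CLI error that blocks the configuration."""


@dataclass(frozen=True)
class Interface:
    name: str
    point_to_point: bool
    ies: bool = False


def associate(iface, set_id, created_sets, members):
    """Associate an IGP interface with an adjacency set.

    created_sets: IDs of adjacency sets created under segment-routing.
    members:      {set_id: [interface names programmed in the set]}.
    Returns a warning string for an IES interface, otherwise None.
    """
    if set_id not in created_sets:
        raise AdjSetConfigError(f"adjacency-set {set_id} does not exist")
    if not iface.point_to_point:
        raise AdjSetConfigError(f"{iface.name} is not point-to-point")
    current = members.setdefault(set_id, [])
    if iface.name in current:
        return None  # already a member
    if len(current) >= MAX_INTERFACES_PER_SET:
        raise AdjSetConfigError(f"adjacency-set {set_id} already has 32 interfaces")
    if iface.ies:
        # Accepted with a CLI warning, but segment routing does not program it.
        return f"warning: {iface.name} is an IES interface; not programmed"
    current.append(iface.name)
    return None
```

No check is made on the IS-IS level of the adjacency, because Level 1 and Level 2 adjacencies may share a set.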

Provisioning adjacency SID values for an adjacency set

For an adjacency set, static values are configured using the sid CLI command, as follows:

config>router>isis>segment-routing

[no] adjacency-set id

family [ipv4 | ipv6]

[no] sid label value

parallel [no-advertise]

no parallel

exit

[no] adjacency-set id

family [ipv4 | ipv6]

[no] sid label value

parallel [no-advertise]

no parallel

exit

...

config>router>ospf>segment-routing

[no] adjacency-set id

[no] sid label value

parallel [no-advertise]