EVPN for Layer 2 ELINE services

SR Linux supports EVPN-VPWS services in VPWS network instances. In an EVPN-VPWS service, the network instance uses a single subinterface together with BGP-EVPN.

Two connection point objects and at least one bridged subinterface must be configured. Each subinterface configured must be associated with a different connection point.

When one subinterface and BGP-EVPN are configured, they must be associated with different connection points. The local-attachment-circuit and remote-attachment-circuit parameters must be associated with the same connection point.
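The connection point rules above can be expressed as a small validation routine. The following is a minimal Python sketch, not SR Linux code. `VpwsInstance`, `validate`, and all field names are hypothetical. Reading "two connection point objects" as exactly two is an assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class VpwsInstance:
    # Hypothetical model; field names are illustrative, not SR Linux YANG paths.
    connection_points: List[str]
    # subinterface name -> connection point it is associated with
    subinterfaces: Dict[str, str] = field(default_factory=dict)
    # connection point of the local/remote attachment circuits (None = no BGP-EVPN)
    local_ac_cp: Optional[str] = None
    remote_ac_cp: Optional[str] = None


def validate(ni: VpwsInstance) -> List[str]:
    """Return a list of rule violations (an empty list means the config is valid)."""
    errors: List[str] = []
    if len(ni.connection_points) != 2:
        errors.append("two connection points are required")
    if not ni.subinterfaces:
        errors.append("at least one subinterface is required")

    used = list(ni.subinterfaces.values())
    if len(used) != len(set(used)):
        errors.append("each subinterface needs its own connection point")
    if any(cp not in ni.connection_points for cp in used):
        errors.append("subinterface references an unknown connection point")

    uses_evpn = ni.local_ac_cp is not None or ni.remote_ac_cp is not None
    if uses_evpn:
        if len(ni.subinterfaces) > 1:
            errors.append("EVPN-VPWS allows a single subinterface")
        if ni.local_ac_cp != ni.remote_ac_cp:
            errors.append("local and remote attachment circuits must share a connection point")
        if ni.local_ac_cp in used:
            errors.append("subinterface and BGP-EVPN must use different connection points")
    return errors


# Mirrors the VPWS-4 example: subinterface on A, both attachment circuits on B.
print(validate(VpwsInstance(["A", "B"], {"ethernet-1/1.4": "A"}, "B", "B")))  # []
```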

The vpws-attachment-circuits container holds the EVPN-VPWS-specific configuration, including the configuration and state for the local and remote Ethernet tags. The vpws-attachment-circuits container can only be configured when encapsulation-type mpls is configured.

EVPN multihoming (single-active and all-active) is supported in VPWS network instances on SR Linux. The configuration of the Ethernet Segments (ESs), supported features, and association with interfaces follows what is described in EVPN Layer 2 multihoming. The following considerations apply:

- In single-active mode, VPWS services support a backup PE, which is the next best PE after the elected DF. Remote nodes forward VPWS traffic to the primary PE (which signals flag P=1) and immediately switch over to the backup PE (which signals flag B=1) upon a failure on the primary PE.

- For MAC-VRF services, the primary/backup concept is not implemented. Upon a failure on the primary PE, the backup PE does not wait for the activation timer to activate the interface.

- VPWS services do not use the ESI label, because traffic from a PE can never be forwarded back to the ES peer PE.

- All-active multihoming for VPWS services does not run DF election; however, the router still shows only four candidates in the ES state.
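The single-active forwarding choice made by a remote node can be sketched as follows. This is a minimal Python model, not SR Linux code. `AdPerEviRoute`, `select_next_hop`, and the `failed` set (which stands in for withdrawn or unreachable PEs) are hypothetical names. Real failure detection through route withdrawal or next-hop tracking is not modeled.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class AdPerEviRoute:
    pe: str        # PE that advertised the AD per EVI route
    primary: bool  # P flag
    backup: bool   # B flag


def select_next_hop(routes: Iterable[AdPerEviRoute], failed: Set[str]) -> Optional[str]:
    """Prefer the PE signaling P=1; fall back to the PE signaling B=1."""
    live = [r for r in routes if r.pe not in failed]
    for flag in ("primary", "backup"):
        for r in live:
            if getattr(r, flag):
                return r.pe
    return None  # no usable PE: no EVPN destination


routes = [AdPerEviRoute("PE1", primary=True, backup=False),
          AdPerEviRoute("PE2", primary=False, backup=True)]
print(select_next_hop(routes, failed=set()))    # PE1
print(select_next_hop(routes, failed={"PE1"}))  # PE2
```

Because the backup PE is already known from its B=1 route, the switchover needs no new route exchange. This is what makes the immediate failover described above possible.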

The use of control-word is supported.

EVPN-VPWS services require the following configuration in the bgp-evpn bgp-instance:

- encapsulation-type mpls

- mpls next-hop-resolution allowed-tunnel-types [X], where X is at least one of the tunnel types supported by SR Linux

When EVPN routes are resolved to tunnels that support (underlay) ECMP, EVPN packets are sprayed over multiple paths for the tunnel.

Configuring EVPN-VPWS services

```
--{ * candidate shared default }--[ ]--
A:srl2# info network-instance VPWS-4
    network-instance VPWS-4 {
        type vpws
        description "VPWS-4 EVPN-MPLS single-homing"
        interface ethernet-1/1.4 {
            connection-point A
        }
        protocols {
            bgp-evpn {
                bgp-instance 1 {
                    admin-state enable
                    encapsulation-type mpls
                    evi 4
                    ecmp 4
                    vpws-attachment-circuits {
                        local {
                            local-attachment-circuit ac-4 {
                                ethernet-tag 4
                                connection-point B
                            }
                        }
                        remote {
                            remote-attachment-circuit ac-4 {
                                ethernet-tag 4
                                connection-point B
                            }
                        }
                    }
                    mpls {
                        next-hop-resolution {
                            allowed-tunnel-types [
                                ldp
                            ]
                        }
                    }
                }
            }
            bgp-vpn {
                bgp-instance 1 {
                }
            }
        }
        connection-point A {
        }
        connection-point B {
        }
    }
```

Similar to MAC-VRF network instances, VPWS network instances use an oper-vpws-mtu value. This value is derived from the l2-mtu of the attached subinterface with the lowest Layer 2 MTU, excluding the size of the service-delimiting tags. If there is no subinterface in the VPWS network instance, the oper-vpws-mtu is set to the system mtu default-l2-mtu value.

This oper-vpws-mtu value is advertised in the Layer 2 attributes extended community along with the AD per EVI route for the service. On reception, the received Layer 2 MTU value must match the local oper-vpws-mtu value. If the values do not match, the EVPN destination is not created. A received value of zero is ignored, and the destination is created as long as the rest of the attributes are valid.
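The MTU derivation and the receive-side check can be sketched in a few lines of Python. This is a hedged illustration, not the SR Linux implementation. `oper_vpws_mtu` and `accept_remote_mtu` are hypothetical names. Counting 4 bytes per service-delimiting VLAN tag with no other adjustment is an assumption.

```python
from typing import List, Tuple

TAG_BYTES = 4  # assumption: each service-delimiting 802.1Q tag counts as 4 bytes


def oper_vpws_mtu(subifs: List[Tuple[int, int]], default_l2_mtu: int) -> int:
    """subifs holds (l2_mtu, number of service-delimiting tags) per subinterface."""
    if not subifs:
        # No subinterface: fall back to system mtu default-l2-mtu.
        return default_l2_mtu
    # Take the subinterface with the lowest l2-mtu and remove its tag overhead.
    l2_mtu, tags = min(subifs, key=lambda s: s[0])
    return l2_mtu - tags * TAG_BYTES


def accept_remote_mtu(local_mtu: int, received_mtu: int) -> bool:
    """A received MTU of zero is ignored; otherwise it must match exactly."""
    return received_mtu == 0 or received_mtu == local_mtu


local = oper_vpws_mtu([(9000, 1), (1514, 1)], default_l2_mtu=9232)
print(local)                           # 1510
print(accept_remote_mtu(local, 1500))  # False: EVPN destination not created
```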

Operational state in VPWS network instances

In a VPWS network instance, the following objects have their own operational state:

- network-instance

- bgp-evpn bgp-instance

- connection-point

- subinterface

- network-instance interface

The network instance is operationally up as long as admin-state enable is configured. The network instance remains up regardless of the state of the connection-points, bgp-evpn bgp-instance or the subinterfaces.

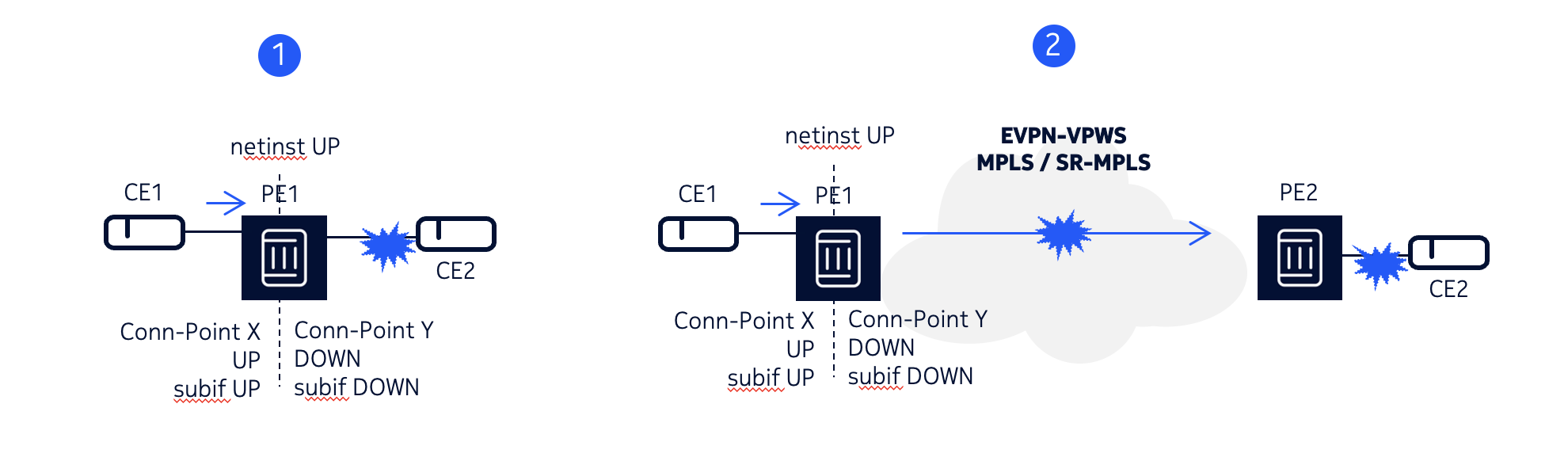

In case 1, PE1 is attached to a VPWS service with two subinterfaces. If the interface or subinterface connected to CE2 goes down:

- Connection point Y goes operationally down.

- Connection point X and subinterface CE1 remain operationally up and the network instance remains operationally up.

In case 2, PE1 is attached to an EVPN-VPWS service with a subinterface and an EVPN destination. If the interface or subinterface connected to CE2 goes down:

- The EVPN destination on PE1 is removed, and connection point Y goes operationally down.

- bgp-evpn bgp-instance remains operationally up.

- Connection point X and subinterface CE1 remain operationally up and the network instance remains operationally up. This changes when link-loss-forwarding true is enabled. For more information, see Interface/LAG link loss forwarding.

In a third example (not shown in the figure), both connection points can go operationally down while the network instance remains operationally up.
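The state dependencies in case 2 can be summarized in a small Python model. This is a sketch under stated assumptions, not SR Linux behavior verified beyond the text above. `vpws_oper_state` is a hypothetical function. A connection point is treated as up only if its association is up and the network instance is admin-enabled. Link loss forwarding is not modeled.

```python
from typing import Dict


def vpws_oper_state(admin_enabled: bool, ce1_subif_up: bool,
                    evpn_destination_present: bool) -> Dict[str, bool]:
    """Case 2: subinterface to CE1 on connection point X, EVPN destination on Y.

    Assumptions: a connection point is up only if its association is up and the
    network instance is admin-enabled; link loss forwarding is not modeled.
    """
    return {
        # Depends only on admin-state, not on connection points or subinterfaces.
        "network-instance": admin_enabled,
        "connection-point X": admin_enabled and ce1_subif_up,
        "connection-point Y": admin_enabled and evpn_destination_present,
    }


# CE2 side fails: the remote AD per EVI route is withdrawn, so no EVPN destination.
print(vpws_oper_state(True, True, False))
```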

Layer 2 attribute mismatch

A Layer 2 attribute mismatch occurs when an AD per EVI route is imported into a VPWS service and the signaled layer 2 attributes (Layer 2 MTU, control word, or flow label) do not match the local settings.

In general, a Layer 2 attribute mismatch causes the evpn_mgr to discard the AD per EVI route and remove the EVPN destination from the PE that advertised the route.

The received Layer 2 MTU must match the oper-vpws-mtu so that the EVPN destination can be created. If the received MTU value is zero, the value is ignored and the destination is created if the rest of the attributes are valid.

A mismatch on the C flag (the flag that indicates the capability to use control-word) removes the EVPN destination. Even if the received C flag matches the local setting, if packets are received without the control-word and the control-word is configured, the data path discards the packets. Packets are also dropped if the control-word is received but control-word false is configured.

A mismatch on the F flag (flow label) also removes the EVPN destination.
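The control-plane and data-path checks described above can be condensed into two Python predicates. This is a minimal sketch, not the evpn_mgr logic. `L2Attrs`, `create_destination`, and `datapath_accepts` are hypothetical names. The data-path check simplifies things by assuming the presence of a control word in a received packet is known.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class L2Attrs:
    mtu: int            # Layer 2 MTU (0 means "not signaled")
    control_word: bool  # C flag
    flow_label: bool    # F flag


def create_destination(local: L2Attrs, received: L2Attrs) -> bool:
    """Control plane: create the EVPN destination only if all attributes agree."""
    mtu_ok = received.mtu == 0 or received.mtu == local.mtu
    return (mtu_ok
            and received.control_word == local.control_word
            and received.flow_label == local.flow_label)


def datapath_accepts(cw_configured: bool, packet_has_cw: bool) -> bool:
    """Data path: drop packets whose control word presence differs from config."""
    return cw_configured == packet_has_cw


local = L2Attrs(mtu=1510, control_word=True, flow_label=False)
print(create_destination(local, L2Attrs(0, True, False)))  # True: MTU 0 ignored
print(create_destination(local, L2Attrs(1510, True, True)))  # False: F flag mismatch
```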

Flow-aware transport label for EVPN-VPWS

Flow-aware transport (FAT) labels (also known as hash labels or flow labels) add entropy to divisible flows and create ECMP load balancing in a network. A FAT label can be used by EVPN services as per draft-ietf-bess-rfc7432bis.
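A minimal sketch shows how a per-flow label adds entropy for ECMP. The ingress hashes flow fields into a label value, and a transit node hashes the label onto one of its paths. The choice of CRC32 as the hash and the flow-key fields are assumptions for illustration, and `flow_label` and `pick_path` are hypothetical names. The only fixed constraints used are that MPLS labels are 20 bits and that values 0 to 15 are reserved.

```python
import zlib
from typing import Tuple

MIN_LABEL = 16             # label values 0-15 are reserved
MAX_LABEL = (1 << 20) - 1  # MPLS labels are 20 bits wide


def flow_label(flow: Tuple) -> int:
    """Map a flow key to a label in the unreserved range (CRC32 is an assumption)."""
    key = "|".join(map(str, flow)).encode()
    return MIN_LABEL + zlib.crc32(key) % (MAX_LABEL - MIN_LABEL + 1)


def pick_path(label: int, n_paths: int) -> int:
    """Illustrative transit-node choice: same label always yields the same path."""
    return label % n_paths


flow = ("00:00:00:00:00:01", "00:00:00:00:00:02", "10.0.0.1", "10.0.0.2", 6, 1234, 80)
label = flow_label(flow)
print(label, pick_path(label, 4))
```

Packets of one flow always get the same label, which preserves ordering within the flow, while different flows spread across the available paths.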

SR Linux supports FAT labels for EVPN-VPWS services. When configured, FAT label support is always signaled as a new flag in the Layer 2 attributes extended community (F flag).

When flow-label is configured to be true, the EVPN AD per EVI route for the VPWS service is signaled with the F flag set, and the local node expects the peer route to be received with F=1. If there is a mismatch in the F flag value between peers, the EVPN destination is removed.

FAT label configuration

```
--{ * candidate shared default }--[ network-instance mgmt ]--
A:srl2# info
    protocols {
        bgp-evpn {
            bgp-instance 1 {
                mpls {
                    flow-label true
                }
            }
        }
    }
```

Interface/LAG link loss forwarding

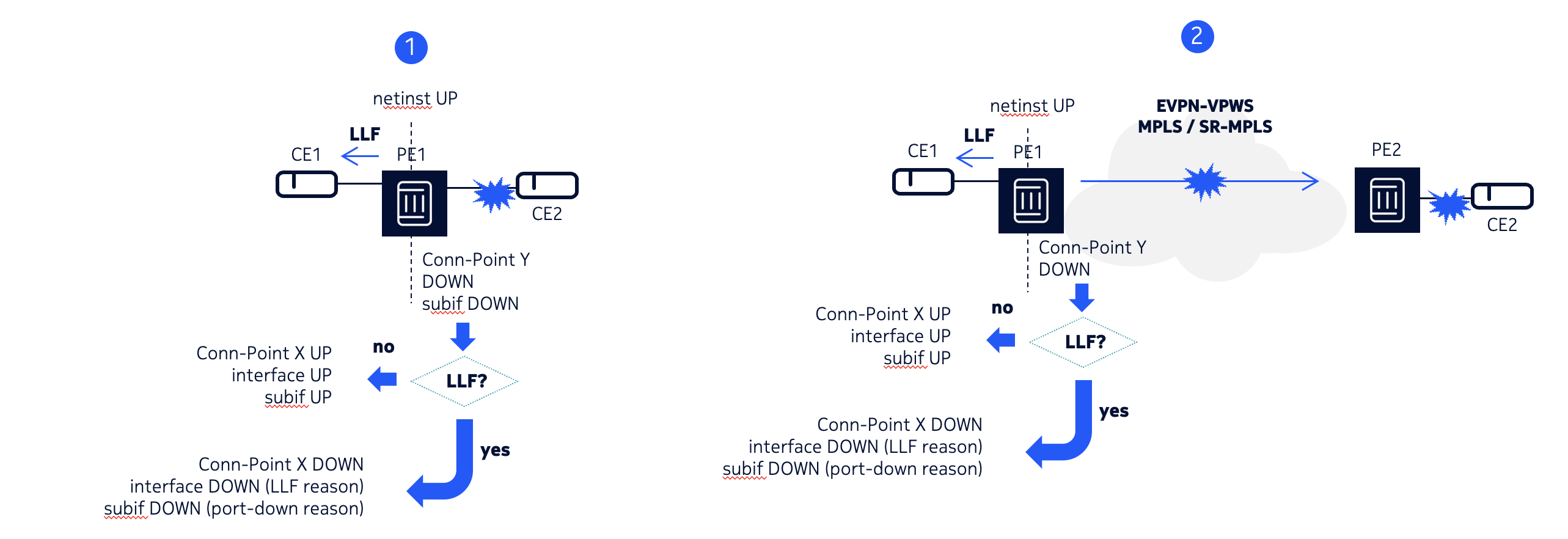

Interface/LAG link loss forwarding (LLF) covers the propagation of a fault on an EVPN-VPWS service. The removal of all the AD per EVI routes from a remote PE triggers a fault propagation event from the local PE to the local CE. That fault propagation can use power-off or LACP out-of-sync signaling.

In cases 1 and 2 in the preceding figure, upon a failure that causes connection point Y to go operationally down, the system checks the configuration of the interface on connection point X. If interface ethernet link-loss-forwarding true is configured on the interface of connection point X, the interface ethernet standby-signaling action is triggered. Otherwise, connection point X and its subinterface remain operationally up.

In case 2, where BGP-EVPN is configured, when the subinterface connected to CE1 is operationally down due to active LLF, the EVPN AD per EVI route is still advertised by PE1.

LLF does not introduce any new oper-down-reason at the connection point or subinterface levels. At the interface level, LLF introduces the oper-down-reason link-loss-forwarding. When LLF is triggered:

- Connection point X goes operationally down with the oper-down-reason associations-oper-down.

- The subinterface to CE1 goes operationally down with the oper-down-reason port-down.

- The interface goes operationally down with the oper-down-reason link-loss-forwarding.
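The LLF decision and the resulting oper-down-reason values can be modeled as a small Python function. This is a hedged sketch, not SR Linux code. `llf_reaction` is a hypothetical name, and `None` stands for "operationally up". Rejecting an LLF configuration without standby-signaling is an assumption based on the requirement in the following subsection.

```python
from typing import Dict, Optional


def llf_reaction(cp_y_up: bool, llf_enabled: bool,
                 standby_signaling: Optional[str]) -> Dict[str, Optional[str]]:
    """Return the oper-down-reason per object on the connection point X side."""
    if cp_y_up or not llf_enabled:
        # No fault to propagate, or LLF not configured: X side stays up.
        return {"interface": None, "subinterface": None,
                "connection-point X": None, "signaling": None}
    if standby_signaling not in ("power-off", "lacp"):
        # Assumption: LLF needs a standby-signaling mode to act on.
        raise ValueError("standby-signaling must be power-off or lacp")
    # The AD per EVI route is still advertised by the local PE (not modeled here).
    return {
        "interface": "link-loss-forwarding",
        "subinterface": "port-down",
        "connection-point X": "associations-oper-down",
        "signaling": standby_signaling,
    }


print(llf_reaction(cp_y_up=False, llf_enabled=True, standby_signaling="lacp"))
```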

Configuring link loss forwarding

LLF is enabled by the interface ethernet link-loss-forwarding true command on the interface connected to the CE over which the fault signaling is propagated. In addition, the interface ethernet standby-signaling command, set to power-off or lacp, must be configured on the same interface so that ch_mgr can trigger the relevant standby signaling to the CE.

```
--{ * candidate shared default }--[ interface ethernet-1/1 ]--
A:srl# info
    ethernet {
        standby-signaling power-off
        link-loss-forwarding true
    }
```