Egress buffer management

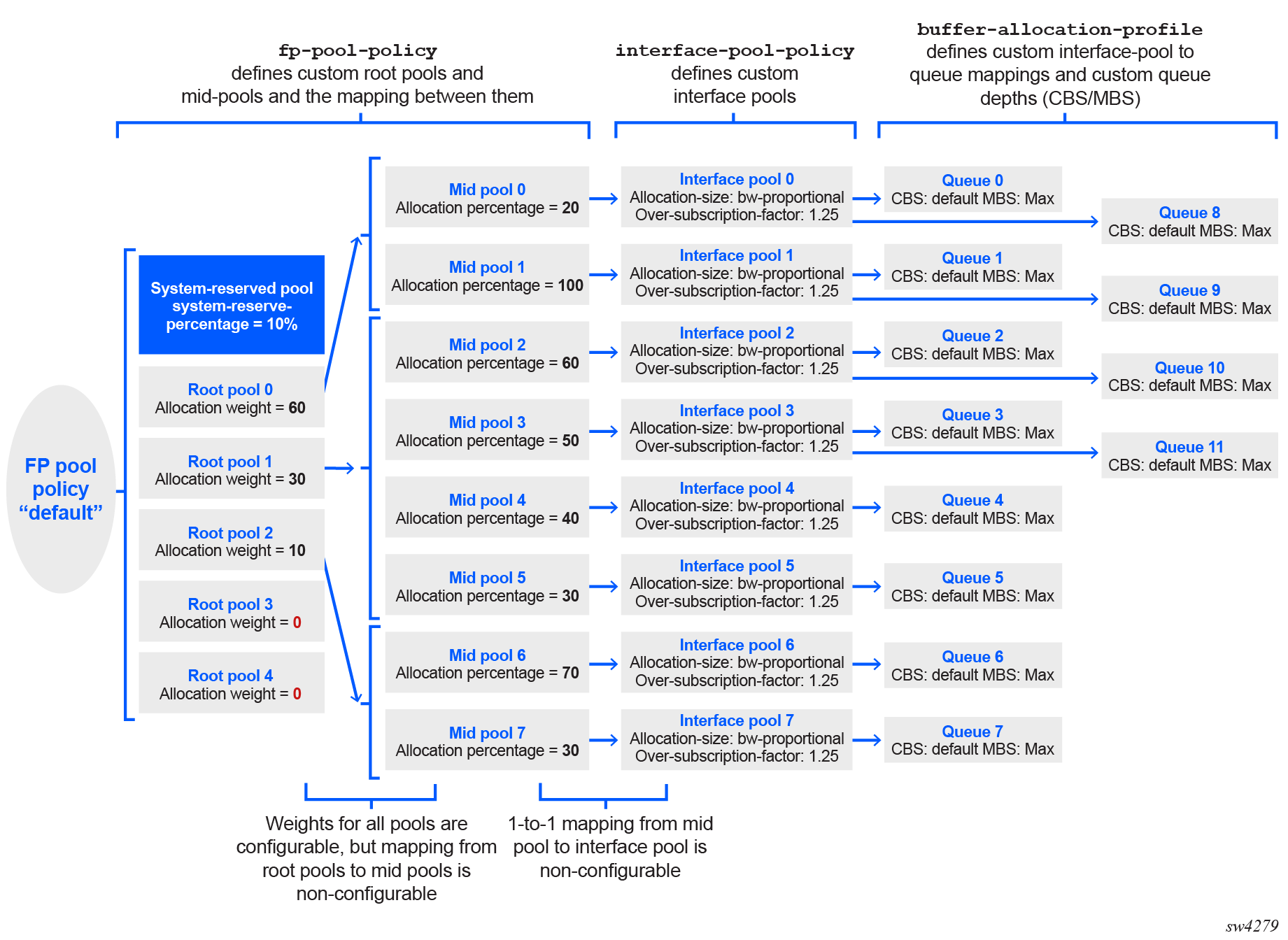

7730 SXR platforms support customized buffer allocation for egress queues beyond the default buffer pool behavior. The total system buffer allocation is divided into a system-reserved portion and a configurable portion. Within the configurable portion, the buffer hierarchy consists of the following levels:

- root pools

- mid-pools

- interface pools

- queues

The following diagram shows an overview of the default pools and default queue buffer allocations.

Buffer management configuration elements

Buffer management on 7730 SXR platforms consists of the following four configurable elements:

- FP pool policy (for root pool and mid-pool)

The upper-level FP pool policy defines the buffer allocation for the root and mid-pools. On a linecard with two forwarding complexes, a different custom FP pool policy can be applied to each one of the forwarding complexes, as required.

- Interface pool policy

The interface pool policy defines the interface-level buffer allocations.

The mapping of interface pools to FP mid-pools is one-to-one and not configurable. The interface pool policy defines the interface buffer pool size as a percentage or proportion of the mid-pool size. All interfaces on an FP that are assigned to a particular interface pool share the buffer space of the associated mid-pool.

- Buffer allocation profile

The buffer allocation profile associates the interface pool policy with individual queues. It also defines the queue depth parameters including maximum burst size (MBS), committed burst size (CBS) and the related adaptation rule parameter. The buffer allocation profile is applied to interfaces or subinterfaces as required.

Twelve queues are always available on every interface at egress (queue names and parameters are configurable). On a subinterface, by default, all subinterface traffic uses the same interface-level queues. Local queues are created on a subinterface only after an output class map is assigned to the subinterface.

- WRED slope policy

A WRED slope policy can be applied to an FP pool policy, to an interface pool policy, or directly to queues to handle congestion when queue space is depleted.

FP pool policy for root pool and mid-pool

FP pool policies define the parameters for the system buffer root pools and mid-pools. All queues draw buffers from these pools as defined by the policies.

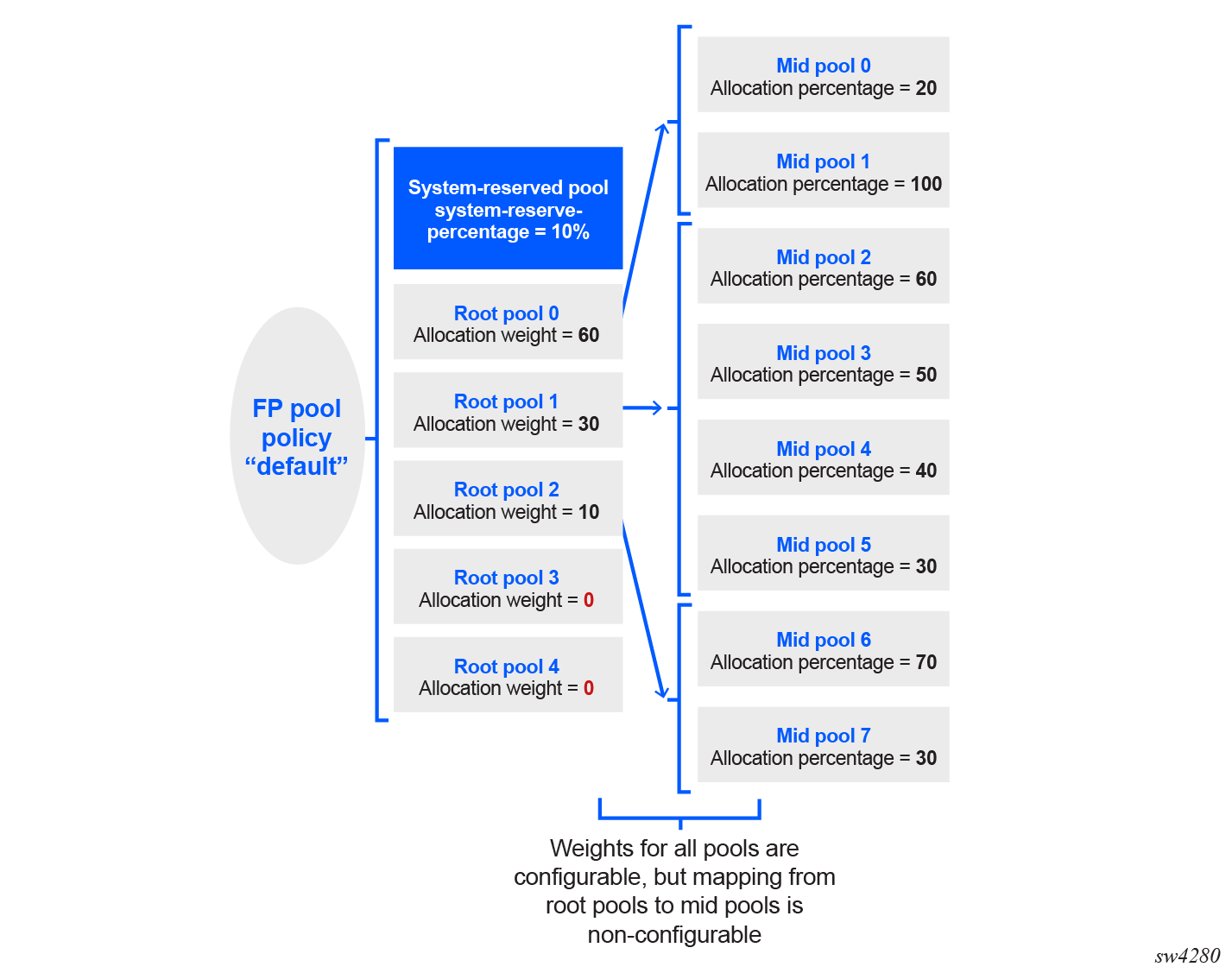

The following figure shows the default FP pool policy.

In addition to the default FP pool policy shown, two custom FP pool policies can be defined, to support up to two forwarding complexes. Within all three policies, the individual pool allocation weights are configurable; however, the mapping of root pools to mid-pools is static and non-configurable.

Root pools

The FP pool policy divides the FP buffer space into six root pools:

- One system-reserved pool for system-generated traffic. The default value is 10% of total available buffer space.

- Five root pools, which divide the non-system-reserved FP buffer space using allocation weights relative to the total buffer space remaining.

The system-reserved pool is reserved for all system-generated traffic and for all traffic using queues created by the system (for example, failover queues).

A root pool can be used by different applications such as data, video, voice, or any other network application. The whole FP buffer space cannot be oversubscribed. Within each FP pool policy, all root pools (excluding the system-reserved pool) are assigned an allocation-weight, which determines the proportion of buffer space assigned to the pool in relation to the sum of all assigned pool weights in the policy.
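The weight-based split described above can be illustrated with a short Python sketch. It is only an arithmetic illustration, not the platform's implementation: the function name, the total buffer size of 100000 units, and the example weights are assumptions chosen for readability.

```python
def root_pool_sizes(total_buffer, system_reserve_percentage, allocation_weights):
    """Split the non-reserved buffer space between root pools by weight.

    total_buffer is a hypothetical size in arbitrary units; the real
    hardware size is not stated in this document.
    """
    reserved = total_buffer * system_reserve_percentage / 100
    remaining = total_buffer - reserved
    weight_sum = sum(allocation_weights.values())
    sizes = {
        pool: (remaining * weight / weight_sum if weight_sum else 0.0)
        for pool, weight in allocation_weights.items()
    }
    return reserved, sizes


# Example values: 10% system reserve, five root pools weighted 60/30/10/0/0.
reserved, sizes = root_pool_sizes(100_000, 10, {0: 60, 1: 30, 2: 10, 3: 0, 4: 0})
print(reserved)  # 10000.0
print(sizes)     # {0: 54000.0, 1: 27000.0, 2: 9000.0, 3: 0.0, 4: 0.0}
```

Because each share is computed against the sum of the weights, the root pool sizes always add up to the non-reserved space, which matches the statement that the whole FP buffer space cannot be oversubscribed.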

Mid-pools

Mid-pools can represent different service classes within a single root pool.

Each forwarding complex supports a total of eight mid-pools (0 to 7), which are mapped to root pools 0, 1, and 2. The mid-pools can oversubscribe individual root pools, if required. If a mid-pool is not defined in the configuration, no buffer space is allocated to it. The mid-pool allocation percentages are configurable; however, the mapping of mid-pools to root pools is static and non-configurable.
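As a rough illustration of mid-pool oversubscription, the following Python sketch computes nominal mid-pool sizes. It assumes that each mid-pool percentage is taken relative to the size of its parent root pool; the function name and the root pool size of 27000 units are hypothetical.

```python
def mid_pool_sizes(root_pool_size, members, percentages):
    """Return nominal mid-pool sizes and the resulting oversubscription ratio.

    members     -- mid-pool IDs statically mapped to this root pool
    percentages -- configured allocation percentage per mid-pool; a mid-pool
                   missing from this dict is treated as not defined and
                   receives no buffer space
    """
    sizes = {m: root_pool_size * percentages.get(m, 0) / 100 for m in members}
    oversubscription = sum(sizes.values()) / root_pool_size
    return sizes, oversubscription


# Hypothetical root pool of 27000 units with mid-pool 5 left undefined.
sizes, ratio = mid_pool_sizes(27_000, [2, 3, 4, 5], {2: 60, 3: 50, 4: 40})
print(sizes)  # {2: 16200.0, 3: 13500.0, 4: 10800.0, 5: 0.0}
print(ratio)  # 1.5
```

A ratio above 1.0 means the mid-pools together can claim more than the root pool holds, so they compete for the shared root pool space under load.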

Default FP pool policy configuration

The following output shows the configuration for FP pool policy default, which is assigned to all forwarding complexes in the system by default.

--{ candidate shared default }--[ ]--

# info from state qos buffer-management fp-pool-policy default

qos {

buffer-management {

fp-pool-policy default {

system-reserve-percentage 10

root-tier {

root-pool 0 {

allocation-weight 60

mid-pool-members {

mid-pool-member 0 {

}

mid-pool-member 1 {

}

}

}

root-pool 1 {

allocation-weight 30

mid-pool-members {

mid-pool-member 2 {

}

mid-pool-member 3 {

}

mid-pool-member 4 {

}

mid-pool-member 5 {

}

}

}

root-pool 2 {

allocation-weight 10

mid-pool-members {

mid-pool-member 6 {

}

mid-pool-member 7 {

}

}

}

root-pool 3 {

allocation-weight 0

}

root-pool 4 {

allocation-weight 0

}

}

mid-tier {

mid-pool 0 {

allocation-percentage-size 20

}

mid-pool 1 {

allocation-percentage-size 100

}

mid-pool 2 {

allocation-percentage-size 60

}

mid-pool 3 {

allocation-percentage-size 50

}

mid-pool 4 {

allocation-percentage-size 40

}

mid-pool 5 {

allocation-percentage-size 30

}

mid-pool 6 {

allocation-percentage-size 70

}

mid-pool 7 {

allocation-percentage-size 30

}

}

}

}

}

Default settings for new FP pool policy

When a new custom-defined FP pool policy is created, the following default settings apply:

- system-reserve-percentage = 10

- Mapping of the mid-pools to root pools is the same as in the default FP pool policy, and is non-configurable.

- All mid-pool and root pool parameters are initially set the same as in the default FP pool policy.

Interface pool policy

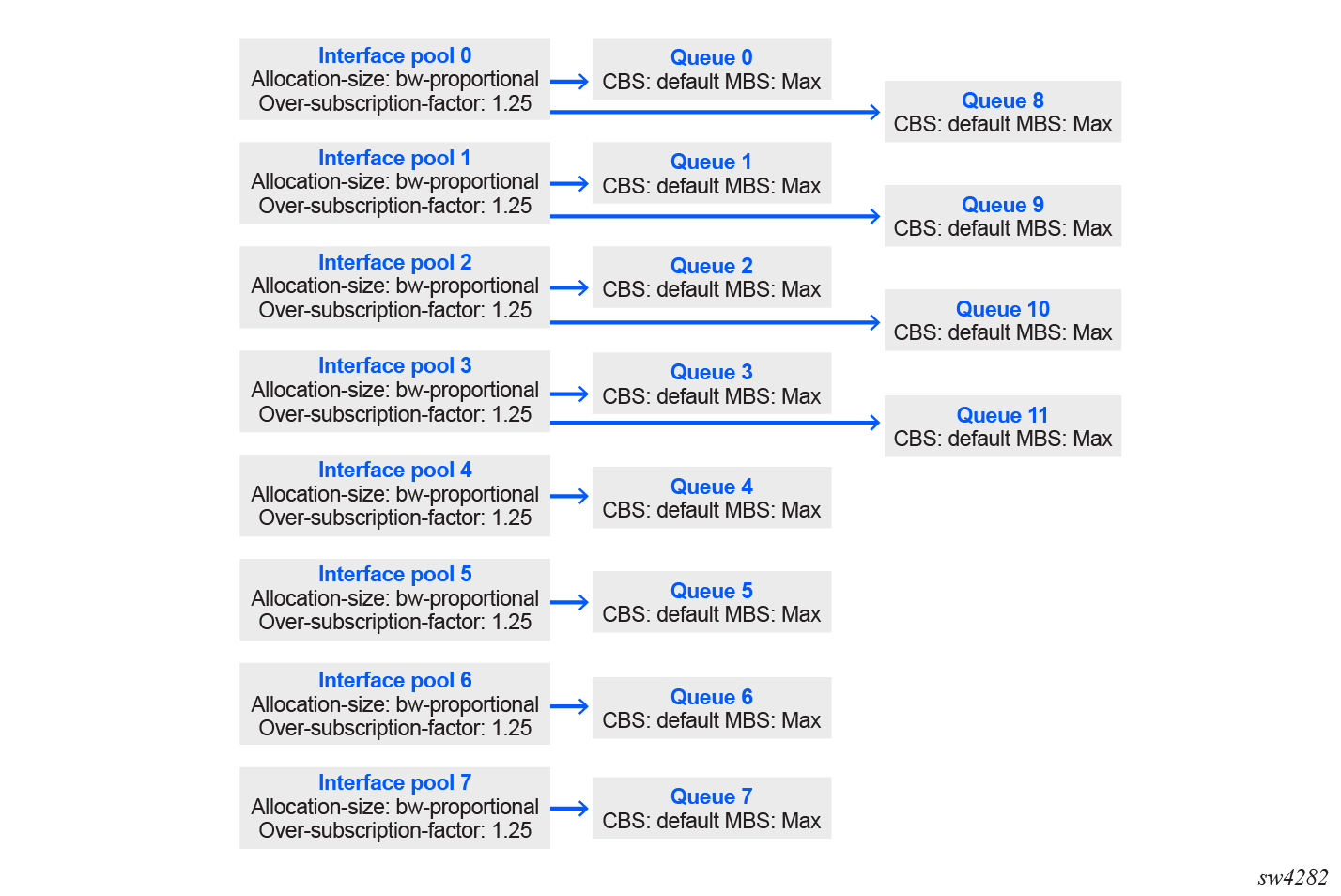

Interface pools allow for a more precise allocation of the interface-level buffer space. Each interface can support up to eight interface pools, and the mapping from interface pools to FP-level mid-pools is one-to-one and not configurable. In other words, interface-pool 0 maps to mid-pool 0 and so on.

The default interface pool policy is assigned to every interface by default. A custom-defined interface pool policy can be attached to any interface at any time, although this is expected to be needed only in exceptional cases.

Individual queues must still be mapped to corresponding interface pools using buffer allocation profiles, which are then assigned to interfaces and subinterfaces.
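The fixed pool hierarchy can be expressed as a simple lookup. The Python sketch below is illustrative only: the dictionary reproduces the mid-pool membership listed in the default FP pool policy, and the function name is hypothetical.

```python
# Static mid-pool to root-pool mapping, as listed in the default FP pool policy.
MID_TO_ROOT = {0: 0, 1: 0, 2: 1, 3: 1, 4: 1, 5: 1, 6: 2, 7: 2}


def resolve_pools(interface_pool):
    """Return the (mid-pool, root pool) pair that backs an interface pool."""
    if interface_pool not in MID_TO_ROOT:
        raise ValueError(f"interface pool must be 0 to 7, got {interface_pool}")
    mid_pool = interface_pool  # the interface pool to mid-pool mapping is one-to-one
    return mid_pool, MID_TO_ROOT[mid_pool]


print(resolve_pools(3))  # (3, 1)
print(resolve_pools(7))  # (7, 2)
```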

All interfaces that are assigned to a particular interface pool share the buffer space of the associated mid-pool. The size of the individual interface pools can be defined in one of two ways using the following parameters:

- explicit-percentage

Sets the interface pool size as a percentage of the associated mid-pool size. The sum of participating interface pool percentages can be more than 100% (for oversubscription).

- bw-proportional and over-subscription-factor

The bw-proportional parameter divides the mid-pool size between participating interface pools based on their respective administrative interface rate (the nominal share). The over-subscription-factor defines how much the interface pool share is increased relative to its nominal share.

By default, the mid-pool buffer space is divided between interface pools using the bandwidth proportional approach, with an oversubscription factor of 1.25.
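The two sizing methods can be compared with a small Python sketch. It rests on assumptions that this document does not confirm: the over-subscription factor is treated as a plain multiplier on the nominal share, no cap is applied, and the function names, interface rates, and mid-pool size are hypothetical.

```python
def explicit_size(mid_pool_size, explicit_percentage):
    """Interface pool size as a fixed percentage of the mid-pool size."""
    return mid_pool_size * explicit_percentage / 100


def bw_proportional_sizes(mid_pool_size, interface_rates, over_subscription_factor=1.25):
    """Interface pool sizes proportional to each interface's administrative rate.

    The nominal share is the rate-weighted fraction of the mid-pool; the
    over-subscription factor then scales that share (assumed multiplicative).
    """
    total_rate = sum(interface_rates.values())
    sizes = {}
    for name, rate in interface_rates.items():
        nominal = mid_pool_size * rate / total_rate
        sizes[name] = nominal * over_subscription_factor
    return sizes


# Hypothetical mid-pool of 8000 units shared by three interfaces (rates in Gb/s).
rates = {"ethernet-1/1": 100, "ethernet-1/2": 100, "ethernet-1/3": 200}
pool_sizes = bw_proportional_sizes(8000, rates)
print(pool_sizes)               # {'ethernet-1/1': 2500.0, 'ethernet-1/2': 2500.0, 'ethernet-1/3': 5000.0}
print(explicit_size(8000, 50))  # 4000.0
```

With the factor of 1.25, the three pools in this example sum to 10000 units against a mid-pool of 8000, which is the intended oversubscription.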

Default interface pool policy

The following figure illustrates the default interface pool policy and its mapping to the mid-pools.

The following output shows the default interface pool policy configuration.

qos {

buffer-management {

interface-pool-policy {

interface-pool 0 {

allocation-size {

bw-proportional {

over-subscription-factor 1.25

}

}

}

interface-pool 1 {

allocation-size {

bw-proportional {

over-subscription-factor 1.25

}

}

}

interface-pool 2 {

allocation-size {

bw-proportional {

over-subscription-factor 1.25

}

}

}

interface-pool 3 {

allocation-size {

bw-proportional {

over-subscription-factor 1.25

}

}

}

interface-pool 4 {

allocation-size {

bw-proportional {

over-subscription-factor 1.25

}

}

}

interface-pool 5 {

allocation-size {

bw-proportional {

over-subscription-factor 1.25

}

}

}

interface-pool 6 {

allocation-size {

bw-proportional {

over-subscription-factor 1.25

}

}

}

interface-pool 7 {

allocation-size {

bw-proportional {

over-subscription-factor 1.25

}

}

}

}

}

}

Buffer allocation profile

A buffer allocation profile defines the following for each queue:

- The interface pool policy to associate with the queue

- The queue depth parameters, including:

  - Maximum burst size (MBS)

  - Committed burst size (CBS)

  - MBS and CBS adaptation rules, which define how the user-configured MBS and CBS values are adjusted to the available hardware values (closest [default], higher, or lower)

The buffer allocation profile is applied to interfaces and subinterfaces under the qos interfaces interface output context. It defines queue parameters for the interface or subinterface, depending on the context used.

The system provides default pools for queues that are not explicitly mapped to a pool in a buffer allocation profile.
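The three adaptation rules listed above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the list of hardware values is invented, ties under closest resolve to the lower value, and a request outside the supported range is clamped to the nearest end. None of these details are confirmed by this document.

```python
def adapt(configured, hardware_values, rule="closest"):
    """Map a configured burst size to one of the supported hardware values."""
    values = sorted(hardware_values)
    if rule == "lower":
        candidates = [v for v in values if v <= configured]
        return candidates[-1] if candidates else values[0]  # clamp (assumption)
    if rule == "higher":
        candidates = [v for v in values if v >= configured]
        return candidates[0] if candidates else values[-1]  # clamp (assumption)
    if rule == "closest":
        # Smallest distance wins; on a tie the lower value is chosen (assumption).
        return min(values, key=lambda v: (abs(v - configured), v))
    raise ValueError(f"unknown adaptation rule: {rule}")


hardware = [64, 128, 256, 512]  # hypothetical hardware steps
print(adapt(200, hardware, "closest"))  # 256
print(adapt(200, hardware, "lower"))    # 128
print(adapt(200, hardware, "higher"))   # 256
```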

Default buffer allocation profile

The following figure illustrates the default buffer allocation profile.

The following output shows the default buffer allocation profile configuration.

--{ candidate shared default }--[ ]--

# info from state qos buffer-management buffer-allocation-profile default

qos {

buffer-management {

buffer-allocation-profile default {

queues {

queue queue-0 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 0

}

queue queue-1 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 1

}

queue queue-10 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 2

}

queue queue-11 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 3

}

queue queue-2 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 2

}

queue queue-3 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 3

}

queue queue-4 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 4

}

queue queue-5 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 5

}

queue queue-6 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 6

}

queue queue-7 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 7

}

queue queue-8 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 0

}

queue queue-9 {

maximum-burst-size 0

mbs-adaptation-rule closest

interface-pool 1

}

}

}

}

}

Committed burst size table

7730 SXR platforms support only four configurable values for the committed burst size. A single global table defines the four possible values for the system. At the individual queue level, the explicit committed burst size is defined and the adaptation rule parameter defines how this value is rounded to one of the four possible values. After being adapted to one of the four committed burst size table values, the configured CBS values are then adapted to the available hardware values. This latter adaptation always uses the closest algorithm.

The actual CBS value can be viewed in state using the info from state qos interfaces interface <id> output queues queue <name> command for the subinterface.
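The two-stage CBS adaptation can be sketched in Python as shown below. The table entries and hardware values are invented for illustration, the helper names are hypothetical, and the tie-breaking and clamping behavior are assumptions.

```python
def pick(value, candidates, rule):
    """Choose one candidate according to the adaptation rule."""
    ordered = sorted(candidates)
    if rule == "higher":
        return next((c for c in ordered if c >= value), ordered[-1])
    if rule == "lower":
        return next((c for c in reversed(ordered) if c <= value), ordered[0])
    # closest: smallest distance, lower value on a tie (assumption)
    return min(ordered, key=lambda c: (abs(c - value), c))


def effective_cbs(configured, cbs_table, hardware_values, rule="closest"):
    """Stage 1: adapt to the global table using the queue's adaptation rule.
    Stage 2: adapt the table value to hardware, always using closest."""
    table_value = pick(configured, cbs_table, rule)
    return pick(table_value, hardware_values, "closest")


cbs_table = [0, 100, 500, 1000]  # hypothetical alt-0 to alt-3 values
hardware = [0, 96, 512, 1024]    # hypothetical hardware steps
print(effective_cbs(300, cbs_table, hardware, "higher"))  # 512
print(effective_cbs(300, cbs_table, hardware, "lower"))   # 96
```

The sketch shows why two queues with the same configured CBS can end up with different operational values when their adaptation rules differ.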

The committed burst size table is defined under the qos buffer-management context. Below is the default configuration for this table.

You can modify the table settings at any time. The corresponding values are changed accordingly for all active queues.

Default committed burst size table

qos {

buffer-management {

committed-burst-size-table {

alt-0 0

alt-1 0

alt-2 0

alt-3 0

}

}

}

The 7730 SXR platforms also support disabling of CBS altogether by omitting the committed-burst-size parameter from the buffer allocation profile. If CBS is disabled, the datapath does not perform a lookup in the committed burst size table.

WRED slope policy

To implement WRED behavior, a slope policy must be attached to an object. Three slopes are defined in a slope policy, for in, out, and exceed profiles. Packets matching the in-plus profile are not subject to the WRED slope.

Without a WRED slope policy applied, when a queue reaches its maximum fill size, the queue discards any packets arriving at the queue (known as tail drop).

The WRED slope policy can be applied to root pools, mid-pools, and interface pools. Mid-pools and interface pools can each have their own slope policy attached. However, for root pools, only a default slope policy can be defined.

In addition, the WRED slope policy can be applied to a specified forwarding class for all associated interface or subinterface queues.

A WRED slope policy can also emulate RED behavior by defining all profiles (in, out, and exceed) with the same values, or by mapping all packets to a single profile.
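The effect of a slope can be illustrated with the conventional WRED drop curve. The Python sketch below assumes the usual model (no drops below the minimum threshold, a linear ramp up to the maximum probability, and all packets dropped at or above the maximum threshold); the exact hardware behavior is not described in this document, and the threshold values are examples.

```python
def wred_drop_probability(fill_percent, min_threshold, max_threshold, max_probability):
    """Return the drop probability (in percent) for a given fill level."""
    if fill_percent < min_threshold:
        return 0.0    # below the slope: nothing is dropped
    if fill_percent >= max_threshold:
        return 100.0  # past the slope: everything is dropped
    span = max_threshold - min_threshold
    return max_probability * (fill_percent - min_threshold) / span


# Example slopes as (min-threshold-percent, max-threshold-percent, max-probability).
slopes = {"in": (75, 90, 80), "out": (50, 75, 80), "exceed": (30, 55, 80)}
for profile, (lo, hi, prob) in slopes.items():
    print(profile, wred_drop_probability(60, lo, hi, prob))
# in 0.0
# out 32.0
# exceed 100.0
```

At 60% fill, in-profile packets are untouched while exceed-profile packets are already fully dropped. Giving all three slopes identical values removes this differentiation, which corresponds to the RED emulation described above.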

Default WRED slope policy

The default WRED slope policy is as follows.

qos {

buffer-management {

slope-policy "default" {

wred-slope in {

slope-enabled true

min-threshold-percent 75

max-threshold-percent 90

max-probability 80

}

wred-slope out {

slope-enabled true

min-threshold-percent 50

max-threshold-percent 75

max-probability 80

}

wred-slope exceed {

slope-enabled true

min-threshold-percent 30

max-threshold-percent 55

max-probability 80

}

}

}

}

Default settings for new WRED slope policy

When a new custom-defined WRED slope policy is created, the following parameters are applied by default.

qos {

buffer-management {

slope-policy "slope-policy-name" {

wred-slope in {

slope-enabled false

min-threshold-percent 85

max-threshold-percent 100

max-probability 80

}

wred-slope out {

slope-enabled false

min-threshold-percent 85

max-threshold-percent 100

max-probability 80

}

wred-slope exceed {

slope-enabled false

min-threshold-percent 85

max-threshold-percent 100

max-probability 80

}

}

}

}

Buffer usage monitoring

For every non-reserved pool, the system maintains the operational size and actual usage of the pool. (For system reserved pools, only the operational size is maintained.) The platform collects these values at regular intervals and publishes them in the internal database, similar to other resource usage.

To display buffer usage monitoring, use the info from state platform linecard forwarding-complex buffer-memory command.

Display buffer usage monitoring for root pool 1

--{ candidate shared default }--[ ]--

# info from state platform linecard 1 forwarding-complex 1 buffer-memory root-pool 1

platform {

linecard 1 {

forwarding-complex 1 {

buffer-memory {

root-pool 1 {

operational-size 8704

used 0

mid-pool 2 {

operational-size 5248

used 0

}

mid-pool 3 {

operational-size 4352

used 0

}

mid-pool 4 {

operational-size 3504

used 0

}

mid-pool 5 {

operational-size 2624

used 0

}

}

}

}

}

}
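For offline analysis, output of this shape can be turned into per-pool utilization figures. The Python sketch below is a hypothetical helper, not a supported tool: it assumes the brace-delimited text format shown above and only extracts the operational-size and used leaves.

```python
def parse_pools(text):
    """Return {pool_path: {"operational-size": int, "used": int}}."""
    pools = {}
    stack = []  # names of the currently open containers
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.endswith("{"):
            stack.append(line[:-1].strip())
        elif line == "}":
            stack.pop()
        else:
            key, _, value = line.partition(" ")
            if key in ("operational-size", "used"):
                # Keep only the pool containers in the path.
                path = "/".join(s for s in stack if "pool" in s)
                pools.setdefault(path, {})[key] = int(value)
    return pools


def utilization(pool):
    """Used share of the operational size, in percent."""
    size = pool.get("operational-size", 0)
    return 100.0 * pool.get("used", 0) / size if size else 0.0


sample = """
root-pool 1 {
    operational-size 8704
    used 0
    mid-pool 2 {
        operational-size 5248
        used 0
    }
}
"""
pools = parse_pools(sample)
print(pools["root-pool 1/mid-pool 2"]["operational-size"])  # 5248
print(utilization(pools["root-pool 1"]))                    # 0.0
```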

Display buffer usage monitoring for the system-reserved pool

--{ candidate shared default }--[ ]--

# info from state platform linecard 1 forwarding-complex 1 buffer-memory system-reserved-pool

platform {

linecard 1 {

forwarding-complex 1 {

buffer-memory {

system-reserved-pool {

operational-size 1661952

}

}

}

}

}

Configuring FP pool policies for root pool and mid-pool

To configure FP pool policies for root pool and mid-pool buffers, use the qos buffer-management fp-pool-policy command. The individual allocation weights are configurable for all pools; however, the default mapping of root pools to mid-pools is not configurable.

Configure FP pool policy

--{ candidate shared default }--[ ]--

# info qos buffer-management fp-pool-policy fp-pool-policy-name

qos {

buffer-management {

fp-pool-policy fp-pool-policy-name {

system-reserve-percentage 15

root-tier {

default-slope-policy default-slope-policy-name

root-pool 0 {

allocation-weight 65

mid-pool-members {

mid-pool-member 0 {

}

mid-pool-member 1 {

}

}

}

root-pool 1 {

allocation-weight 35

mid-pool-members {

mid-pool-member 2 {

}

mid-pool-member 3 {

}

mid-pool-member 4 {

}

mid-pool-member 5 {

}

}

}

}

mid-tier {

mid-pool 0 {

allocation-percentage-size 45

slope-policy slope-policy-name

}

mid-pool 1 {

allocation-percentage-size 30

}

mid-pool 2 {

allocation-percentage-size 25

}

mid-pool 3 {

allocation-percentage-size 20

}

mid-pool 4 {

allocation-percentage-size 70

}

mid-pool 5 {

allocation-percentage-size 30

}

}

}

}

}

Applying an FP pool policy to a forwarding complex

You can assign one FP pool policy per forwarding complex. For linecards with two forwarding complexes, you can assign different custom policies on each forwarding complex.

To apply an FP pool policy to a forwarding complex, use the qos linecard <slot> forwarding-complex <name> output fp-pool-policy <value> command.

Apply an FP pool policy to a forwarding complex

--{ + candidate shared default }--[ ]--

A:sxr1x44s# info qos linecard 1

qos {

linecard 1 {

forwarding-complex 0 {

output {

fp-pool-policy fp-pool-policy-name

}

}

}

}

Configuring interface pool policies

To configure interface pool policies, use the qos buffer-management interface-pool-policy <name> command.

Configure interface pool policy

--{ candidate shared default }--[ ]--

# info qos buffer-management interface-pool-policy interface-pool-policy-name

qos {

buffer-management {

interface-pool-policy interface-pool-policy-name {

interface-pool 0 {

allocation-size {

bw-proportional {

over-subscription-factor 1.30

}

}

}

interface-pool 1 {

allocation-size {

bw-proportional {

over-subscription-factor 1.20

}

}

}

interface-pool 2 {

allocation-size {

bw-proportional {

over-subscription-factor 1.10

}

}

}

}

}

}

Applying interface pool policies to an interface

To apply interface pool policies to an interface, use the interface-pool-policy command in the qos interfaces interface <name> output context. Each interface can support up to eight interface pools.

An interface pool policy can be applied only to a QoS interface that references an interface, not a subinterface.

Apply interface pool policies to an interface

--{ +* candidate shared default }--[ ]--

# info qos interfaces interface eth-1/2 output

qos {

interfaces {

interface eth-1/2 {

interface-ref {

interface ethernet-1/2

}

output {

interface-pool-policy interface-pool-policy-name

}

}

}

}

Configuring buffer allocation profiles

To configure buffer allocation profiles, use the qos buffer-management buffer-allocation-profile command.

Configure buffer allocation profile

--{ * candidate shared default }--[ ]--

# info qos buffer-management

qos {

buffer-management {

buffer-allocation-profile buffer-allocation-profile-name {

queues {

queue queue-0 {

maximum-burst-size 80

mbs-adaptation-rule closest

interface-pool 0

}

queue queue-1 {

maximum-burst-size 85

mbs-adaptation-rule closest

interface-pool 0

}

queue queue-2 {

maximum-burst-size 90

mbs-adaptation-rule closest

interface-pool 1

}

}

}

}

}

Applying buffer allocation profiles to an interface or subinterface

To apply a buffer allocation profile to an interface or a subinterface, use the qos interfaces interface output buffer-allocation-profile command.

Apply buffer allocation profile to a subinterface

--{ * candidate shared default }--[ ]--

# info qos interfaces interface ethernet-1/2.1

qos {

interfaces {

interface ethernet-1/2.1 {

interface-ref {

interface ethernet-1/2

subinterface 1

}

output {

buffer-allocation-profile test-buffer-profile

}

}

}

}

Configuring WRED slope policies

To configure WRED slope policies, use the qos buffer-management slope-policy command.

Configure WRED slope policy

--{ * candidate shared default }--[ ]--

# info qos buffer-management

qos {

buffer-management {

slope-policy slope-policy-name {

wred-slope in {

slope-enabled true

min-threshold-percent 80

max-threshold-percent 95

max-probability 75

}

wred-slope out {

slope-enabled true

min-threshold-percent 55

max-threshold-percent 80

max-probability 85

}

wred-slope exceed {

slope-enabled true

min-threshold-percent 35

max-threshold-percent 60

max-probability 95

}

}

}

}

The configured WRED slope policy can be attached to the following objects:

- Interface forwarding-class under qos forwarding-classes forwarding-class.

In this case the slope policy applies to all interface-level queues associated with the forwarding class.

- Subinterface forwarding-class under qos output-class-map forwarding-class.

In this case the slope policy applies to all subinterface-level queues associated with the forwarding class.

- Root pools under qos buffer-management fp-pool-policy root-tier.

In this case a single common slope policy applies to all root pools.

- Mid-pools under qos buffer-management fp-pool-policy mid-tier mid-pool.

In this case a unique slope policy can be applied to each mid-pool.

- Interface pool under qos buffer-management interface-pool-policy interface-pool.

In this case a unique slope policy can be applied to each interface pool.

Applying default WRED slope policies to root pools

To apply a WRED slope policy to serve as the default policy for all root pools, use the following command.

qos buffer-management fp-pool-policy <name> root-tier default-slope-policy <name>

Apply a default WRED slope policy to a root pool

--{ +* candidate shared default }--[ ]--

# info qos buffer-management fp-pool-policy fp-pool-policy-name root-tier default-slope-policy

qos {

buffer-management {

fp-pool-policy fp-pool-policy-name {

root-tier {

default-slope-policy default-slope-policy-name

}

}

}

}

Applying WRED slope policies to mid-pools

To apply a WRED slope policy to a mid-pool, use the following command.

qos buffer-management fp-pool-policy <name> mid-tier mid-pool <value> slope-policy <name>

Apply a WRED slope policy to mid-pool 0

--{ +* candidate shared default }--[ ]--

# info qos buffer-management fp-pool-policy fp-pool-policy-name mid-tier mid-pool 0

qos {

buffer-management {

fp-pool-policy fp-pool-policy-name {

mid-tier {

mid-pool 0 {

slope-policy slope-policy-name

}

}

}

}

}

Applying WRED slope policies to interface pools

To apply a WRED slope policy to an interface pool, use the following command.

qos buffer-management interface-pool-policy <name> interface-pool <value> slope-policy <name>

Apply a WRED slope policy to interface pool 0

--{ +* candidate shared default }--[ ]--

# info qos buffer-management interface-pool-policy interface-pool-name interface-pool 0 slope-policy

qos {

buffer-management {

interface-pool-policy interface-pool-name {

interface-pool 0 {

slope-policy slope-policy-name

}

}

}

}

Applying WRED slope policies to interface-level forwarding classes

To apply a WRED slope policy to a forwarding class for interface-level queues, use the slope-policy command under the following context.

qos forwarding-classes forwarding-class <name> output

Apply WRED slope policy to interface-level forwarding classes

The following example applies a WRED slope policy to all interface-level queues associated with the specified forwarding class.

--{ * candidate shared default }--[ ]--

# info qos forwarding-classes forwarding-class fc-name

qos {

forwarding-classes {

forwarding-class fc-name {

output {

slope-policy slope-policy-name

}

}

}

}

Applying WRED slope policies to subinterface-level forwarding classes

To apply a WRED slope policy to a forwarding class for subinterface-level queues, use the slope-policy command under the following context.

qos output-class-map <name> forwarding-class <name>

Apply WRED slope policy to subinterface-level forwarding classes

The following example applies a WRED slope policy to all subinterface-level queues associated with the specified forwarding class.

--{ * candidate shared default }--[ ]--

# info qos output-class-map output-class-map-name

qos {

output-class-map output-class-map-name {

forwarding-class fc-name {

slope-policy slope-policy-name

}

}

}