BGP Multi-Homing for VPLS Networks

This chapter describes BGP Multi-Homing (BGP-MH) for VPLS network configurations.

Topics in this chapter include:

Applicability

Initially, the information in this chapter was based on SR OS Release 8.0.R5, with additions for SR OS Release 9.0.R1. The MD-CLI in the current edition corresponds to SR OS Release 20.10.R2.

Overview

SR OS supports the use of Border Gateway Protocol Multi-Homing for VPLS (hereafter called BGP-MH). BGP-MH is described in draft-ietf-bess-vpls-multihoming, BGP based Multi-homing in Virtual Private LAN Service. It provides a network-based resiliency mechanism (with no interaction from the Provider Edge routers (PEs) to the Multi-Tenant Units/Customer Equipment (MTU/CE)) that can be applied to access topologies (service access points, SAPs) or network topologies (pseudowires). The BGP-MH procedures run between the PEs and provide a loop-free topology from the network perspective: only one logical active path is provided per VPLS among all the objects (SAPs or pseudowires) that are part of the same multi-homing site.

Each multi-homing site connected to two or more peers is represented by a site ID (2 bytes long), which is encoded in the BGP MH Network Layer Reachability Information (NLRI). The BGP peer holding the active path for a particular multi-homing site is called the Designated Forwarder (DF). The other BGP peers participating in the BGP MH process for that site are non-DF and block the traffic (in both directions) for all the objects belonging to that multi-homing site.

BGP MH uses the following rules to determine which PE is the DF for a particular multi-homing site:

1. A BGP MH NLRI with D flag = 0 (multi-homing object up) always takes precedence over a BGP MH NLRI with D flag = 1 (multi-homing object down). If there is a tie, then:
2. The BGP MH NLRI with the highest BGP Local Preference (LP) wins. If there is a tie, then:
3. The BGP MH NLRI issued from the PE with the lowest PE ID (system address) wins.
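Because the three rules are applied strictly in order, they can be expressed as a single sort key. The following Python sketch is illustrative only and is not how SR OS implements the election: it assumes that each NLRI for one site is reduced to its D flag, LP, and originating PE ID, and that PE IDs are compared numerically as IPv4 addresses. The names `MhNlri`, `election_key`, and `elect_df` are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address


@dataclass(frozen=True)
class MhNlri:
    """Hypothetical, simplified view of one BGP MH NLRI for a single site."""
    pe_id: str             # originating PE ID (system address)
    d_flag: int            # 0 = multi-homing object up, 1 = object down
    local_pref: int = 100  # BGP Local Preference (default 100)


def election_key(nlri: MhNlri):
    # Smallest key wins: D=0 before D=1, then higher LP, then lower PE ID.
    return (nlri.d_flag, -nlri.local_pref, IPv4Address(nlri.pe_id))


def elect_df(nlris):
    """Return the PE ID of the Designated Forwarder among the site's NLRIs."""
    return min(nlris, key=election_key).pe_id


if __name__ == "__main__":
    # MH-site-2, default LP on PE-1 and PE-2: the lowest PE ID wins.
    print(elect_df([MhNlri("192.0.2.1", 0), MhNlri("192.0.2.2", 0)]))
    # PE-2 advertises LP 150: the higher LP beats the lower PE ID.
    print(elect_df([MhNlri("192.0.2.1", 0), MhNlri("192.0.2.2", 0, 150)]))
    # PE-1's site object is down (D=1): an "up" NLRI always wins, whatever the LP.
    print(elect_df([MhNlri("192.0.2.1", 1, 200), MhNlri("192.0.2.2", 0)]))
```

Run as a script, it prints 192.0.2.1 and then 192.0.2.2 twice. Using `IPv4Address` rather than plain strings avoids a lexical comparison in which, for example, "192.0.2.10" would sort before "192.0.2.9".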

The main advantages of using BGP-MH as opposed to other resiliency mechanisms for VPLS are:

- Flexibility: BGP-MH uses a common mechanism for access and core resiliency. The designer has the flexibility of using BGP-MH to control the active/standby status of SAPs, spoke SDPs, Split Horizon Groups (SHGs), or even mesh SDP bindings.
- Standard protocol: BGP-MH is based on BGP, a standard, scalable, and well-known protocol.
- Specific benefits at the access:
    - It is network-based and independent of the customer CE; therefore, it does not need any customer interaction to determine the active path. Consequently, the operator spends less effort on provisioning and minimizes both operation costs and security risks (in particular, this removes the requirement for spanning tree interaction between the PE and CE).
    - Easy load balancing per service (no service fate-sharing) on physical links.
- Specific benefits in the core:
    - It is a network-based mechanism, independent of the MTU resiliency capabilities, and it does not need MTU interaction. This brings operational advantages: less provisioning is required and the risk of loops is minimal. In addition, simpler MTUs can be used.
    - Easy load balancing per service (no service fate-sharing) on physical links.
    - Less control plane overhead: there is no need for an additional protocol to run the pseudowire redundancy when BGP is already used in the core of the network. BGP-MH just adds a separate NLRI in the L2-VPN family (AFI=25, SAFI=65).

This chapter describes how to configure and troubleshoot BGP-MH for VPLS.

Knowledge of the LDP/BGP VPLS (RFC 4762, Virtual Private LAN Service (VPLS) Using Label Distribution Protocol (LDP) Signaling, and RFC 4761, Virtual Private LAN Service (VPLS) Using BGP for Auto-Discovery and Signaling) architecture and functionality is assumed throughout this chapter. For further information, see the relevant Nokia documentation.

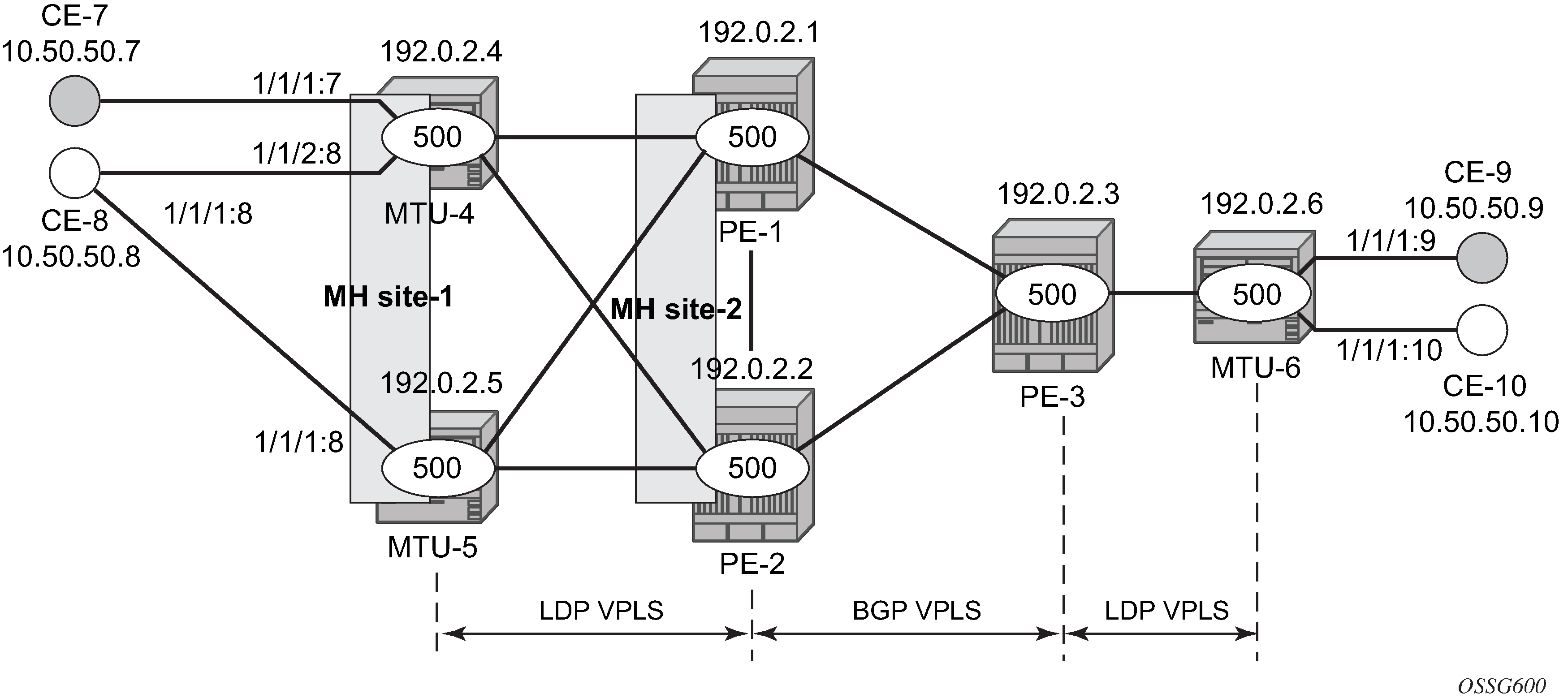

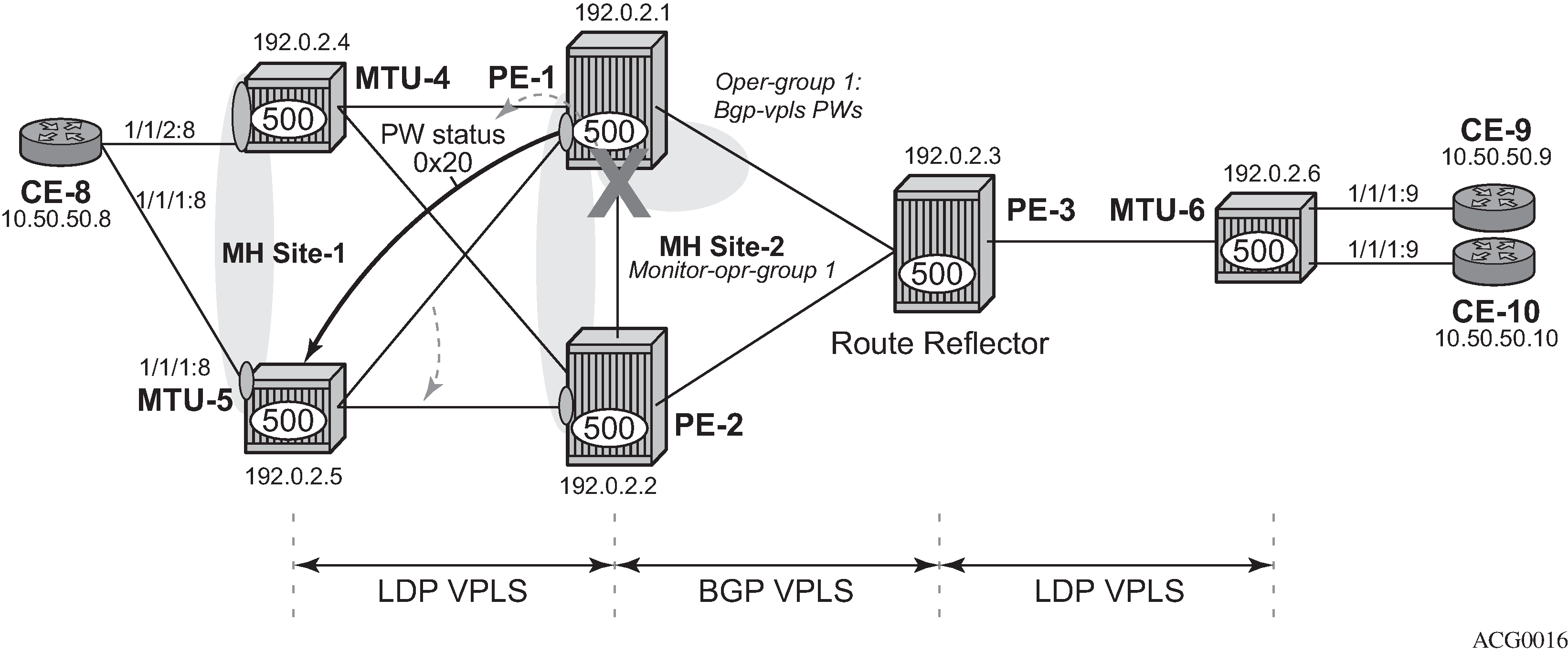

Example topology shows the topology that is used throughout the rest of the chapter.

The initial configuration includes:

- IGP: IS-IS, Level 2 on all routers; area 49.0001
- RSVP-TE for transport tunnels
- Fast reroute (FRR) protection in the core; no FRR protection at the access

The topology consists of three core nodes (PE-1, PE-2, and PE-3) and three MTUs connected to the core.

The VPLS service VPLS-500 is configured on the six nodes with the following characteristics:

- The core VPLS instances are connected by a full mesh of BGP-signaled pseudowires (that is, the pseudowires among PE-1, PE-2, and PE-3 are signaled by BGP VPLS).
- As shown in Example topology, the MTUs are connected to the BGP VPLS core by T-LDP pseudowires. MTU-6 is connected to PE-3 by a single pseudowire, whereas MTU-4 and MTU-5 are dual-homed to PE-1 and PE-2. The following resiliency mechanisms are used on the dual-homed MTUs:
    - MTU-4 is dual-connected to PE-1 and PE-2 by an active/standby pseudowire (A/S pseudowire hereafter).
    - MTU-5 is dual-connected to PE-1 and PE-2 by two active pseudowires, one of which is blocked by BGP MH running between PE-1 and PE-2. The PE-1 and PE-2 pseudowires set up from MTU-5 are part of the BGP MH site MH-site-2.
    - MTU-4 and MTU-5 run BGP MH: SHG site-1 on MTU-4 and SAP 1/1/1:8 on MTU-5 are part of the same BGP MH site, MH-site-1.
- The CEs are connected to the network in the following way:
    - CE-7, CE-9, and CE-10 are single-connected to the network.
    - CE-8 is dual-connected to MTU-4 and MTU-5.
    - CE-7 and CE-8 are part of the split-horizon group (SHG) site-1 (SAPs 1/1/1:7 and 1/1/2:8 on MTU-4). Assume that CE-7 and CE-8 have a backdoor link between them so that CE-7 does not get isolated when MTU-5 is elected as DF. This configuration highlights the use of an SHG within a site configuration.
- For each BGP MH site, MH-site-1 and MH-site-2, the BGP MH process elects a DF, and the non-DF nodes block the site objects. Based on the specific configuration described throughout the chapter:
    - For MH-site-1, MTU-4 is elected as the DF. The non-DF, MTU-5, blocks SAP 1/1/1:8.
    - For MH-site-2, PE-1 is elected as the DF. The non-DF, PE-2, blocks the spoke SDP to MTU-5.

Configuration

This section describes all the relevant configuration tasks for the setup shown in Example topology. The associated IP/MPLS configuration is out of the scope of this chapter. In this example, the following protocols are configured beforehand:

- IS-IS TE as the IGP, with all the interfaces being Level 2 (OSPF-TE could have been used instead).
- RSVP-TE as the MPLS protocol to signal the transport tunnels (LDP could have been used instead).
- LSPs between core PEs are FRR protected (facility bypass tunnels), whereas LSP tunnels between MTUs and PEs are not protected.

Note: The designer can choose whether to protect against access link failures by means of MPLS FRR, A/S pseudowire, or BGP MH. Whereas FRR provides faster convergence (around 50 ms) and stability (it does not impact the service layer; therefore, link failures do not trigger MAC flush and flooding), it can introduce some interim inefficiencies compared to A/S pseudowire or BGP MH.

When the IP/MPLS infrastructure is up and running, the specific service configuration including the support for BGP MH can begin.

Global BGP configuration

BGP is used in this configuration guide for these purposes:

1. Exchange of multi-homing site NLRIs and redundancy handling from MTU-5 to the core.
2. Auto-discovery and signaling of the pseudowires in the core, as per RFC 4761.
3. Exchange of multi-homing site NLRIs and redundancy handling at the access for CE-7/CE-8.

A BGP route reflector (RR), PE-3, is used for the reflection of BGP updates corresponding to the preceding uses 1 and 2.

A direct peering is established between MTU-4 and MTU-5 for use 3. The same RR could have been used for all three cases; however, as in this example, the designer may choose to have a direct BGP peering between access devices, for the following reasons:

- With a direct BGP peering between MTU-4 and MTU-5, the BGP updates do not have to travel back and forth through the RR.
- On MTU-4 and MTU-5, BGP is used exclusively for multi-homing; therefore, neither MTU has any other BGP peers, and an RR adds nothing in terms of control plane scalability.

On all nodes, the autonomous system number must be configured, as follows:

# on all nodes:
configure {
    router "Base" {
        autonomous-system 65000
    }
}

The following CLI output shows the global BGP configuration required on MTU-4. On the other nodes, the neighbor address 192.0.2.5 is replaced by the corresponding peer address: MTU-4 (192.0.2.4) on MTU-5, and the RR system address (192.0.2.3) on PE-1 and PE-2.

# on MTU-4:
configure {
    router "Base" {
        autonomous-system 65000
        bgp {
            router-id 192.0.2.4
            rapid-withdrawal true
            family {
                ipv4 false
                l2-vpn true
            }
            rapid-update {
                l2-vpn true
            }
            group "Multi-Homing" {
            }
            neighbor "192.0.2.5" {
                group "Multi-Homing"
                peer-as 65000
            }
        }
    }
}

In this example, PE-3 is the BGP RR with clients PE-1 and PE-2, as follows:

# on PE-3:
configure {
    router "Base" {
        autonomous-system 65000
        bgp {
            router-id 192.0.2.3
            rapid-withdrawal true
            family {
                ipv4 false
                l2-vpn true
            }
            rapid-update {
                l2-vpn true
            }
            group "internal" {
                cluster {
                    cluster-id 1.1.1.1
                }
            }
            neighbor "192.0.2.1" {
                group "internal"
                peer-as 65000
            }
            neighbor "192.0.2.2" {
                group "internal"
                peer-as 65000
            }
        }
    }
}

Some considerations about the relevant BGP commands for BGP-MH:

- Family l2-vpn must be specified in the BGP configuration. This statement allows the BGP peers to agree on the support for the family AFI=25 (Layer 2 VPN), SAFI=65 (VPLS). This family is used for BGP VPLS as well as for BGP MH and BGP AD.
- The rapid-update l2-vpn statement allows BGP MH to send BGP updates immediately after detecting link failures, without having to wait for the Minimum Route Advertisement Interval (MRAI) to send the updates in batches. This statement is required to guarantee fast convergence for BGP MH.
- Optionally, rapid-withdrawal can also be added. In the context of BGP MH, this command is only useful if a particular multi-homing site is cleared; in that case, a BGP withdrawal is sent immediately without waiting for the MRAI. A multi-homing site is cleared when the BGP MH site, or the entire VPLS service, is removed.
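To show why rapid-update l2-vpn matters for convergence, the following Python sketch models, in a deliberately simplified way, when a triggered update leaves the router. It assumes that once an update has been sent to a peer, later updates to that peer are held and sent together when the MRAI expires, and that rapid-update removes this hold; real BGP implementations add detail (per-peer timers, jitter) that is not modeled. The function name `update_send_times` is hypothetical.

```python
def update_send_times(event_times, mrai, rapid_update=False):
    """Return the time at which each triggered BGP update is sent (simplified).

    event_times  -- sorted times (s) of BGP MH state changes toward one peer
    mrai         -- Minimum Route Advertisement Interval (s)
    rapid_update -- True models "rapid-update l2-vpn true": no MRAI hold
    """
    sent = []
    last_sent = None   # time of the last transmission to the peer
    pending = None     # release time of a batch currently held by the MRAI
    for t in event_times:
        if rapid_update:
            sent.append(t)
            continue
        if pending is not None and t >= pending:
            last_sent, pending = pending, None  # the held batch has gone out
        if last_sent is None or t >= last_sent + mrai:
            last_sent = t                       # MRAI elapsed: send at once
            sent.append(t)
        else:
            pending = last_sent + mrai          # join the batch sent at MRAI expiry
            sent.append(pending)
    return sent


print(update_send_times([0, 5, 40], mrai=30))                     # [0, 30, 60]
print(update_send_times([0, 5, 40], mrai=30, rapid_update=True))  # [0, 5, 40]
```

In this model, with the default 30-second MRAI a failure that follows shortly after a previous update can wait up to 30 seconds before being advertised, whereas with rapid-update it is advertised immediately.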

Service level configuration

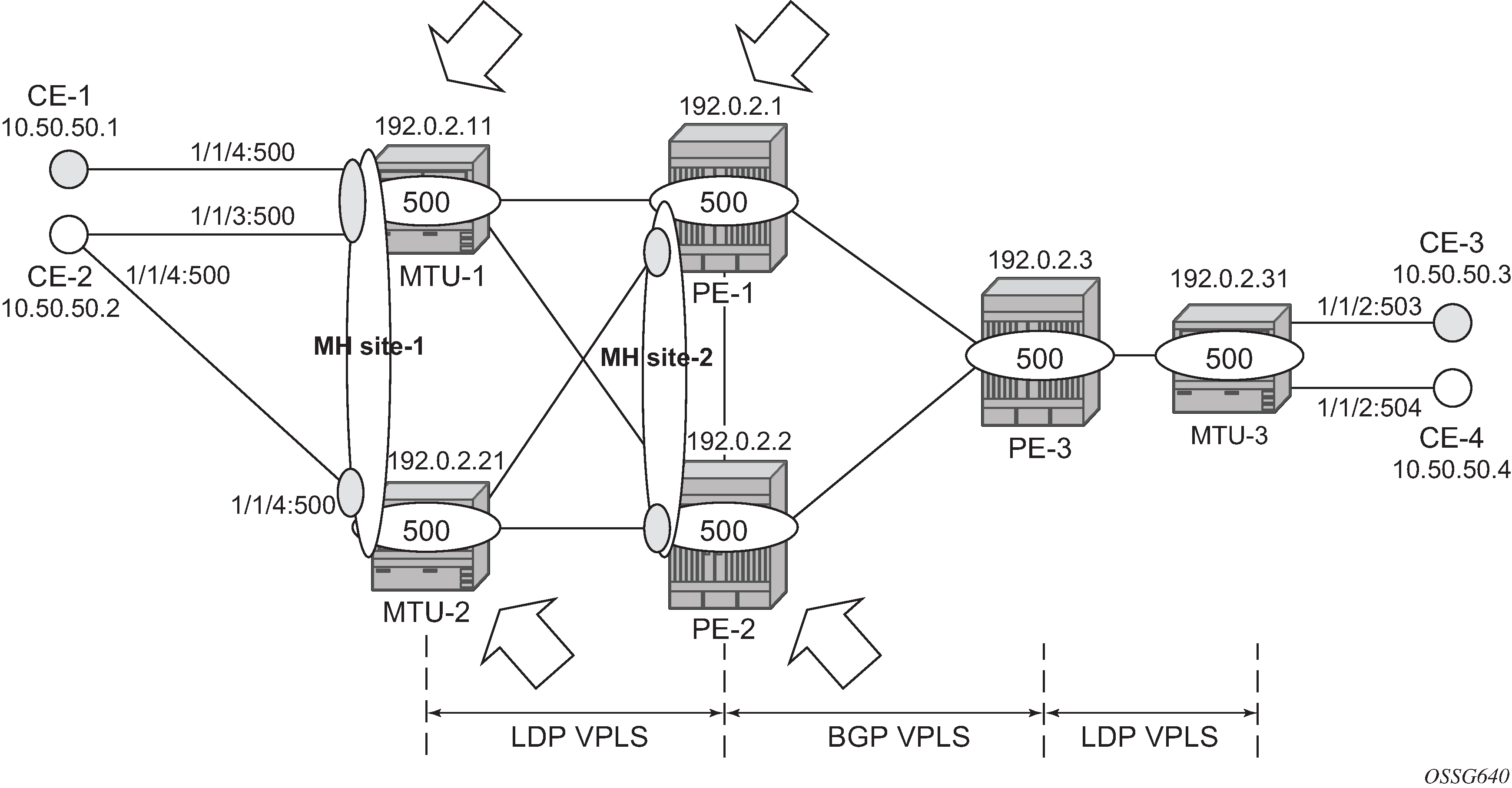

After the IP/MPLS infrastructure, including BGP, is configured, this section shows the configuration required at service level (VPLS-500). The focus is on the nodes involved in BGP MH, that is, MTU-4, MTU-5, PE-1, and PE-2. These nodes are highlighted in Nodes involved in BGP MH.

Core PE service configuration

The following CLI excerpt shows the service level configuration on PE-1. The import/export policies configured on the PE nodes are identical:

# on PE-1:
configure {
    policy-options {
        community "comm_core" {
            member "target:65000:500" { }
        }
        policy-statement "vsi500_export" {
            entry 10 {
                action {
                    action-type accept
                    community {
                        add ["comm_core"]
                    }
                }
            }
        }
        policy-statement "vsi500_import" {
            entry 10 {
                from {
                    family [l2-vpn]
                    community {
                        name "comm_core"
                    }
                }
                action {
                    action-type accept
                }
            }
            default-action {
                action-type reject
            }
        }
    }
}

The configuration of the SDPs, PW template, and VPLS on PE-1 is as follows:

# on PE-1:
configure {
    service {
        pw-template "PW500" {
            pw-template-id 500
            provisioned-sdp use
        }
        sdp 12 {
            admin-state enable
            description "SDP to transport BGP-signaled PWs"
            delivery-type mpls
            path-mtu 8000
            signaling bgp
            far-end {
                ip-address 192.0.2.2
            }
            lsp "LSP-PE-1-PE-2" { }
        }
        sdp 13 {
            admin-state enable
            description "SDP to transport BGP-signaled PWs"
            delivery-type mpls
            path-mtu 8000
            signaling bgp
            far-end {
                ip-address 192.0.2.3
            }
            lsp "LSP-PE-1-PE-3" { }
        }
        sdp 14 {
            admin-state enable
            delivery-type mpls
            path-mtu 8000
            far-end {
                ip-address 192.0.2.4
            }
            lsp "LSP-PE-1-MTU-4" { }
        }
        sdp 15 {
            admin-state enable
            delivery-type mpls
            path-mtu 8000
            far-end {
                ip-address 192.0.2.5
            }
            lsp "LSP-PE-1-MTU-5" { }
        }
        vpls "VPLS-500" {
            admin-state enable
            service-id 500
            customer "1"
            bgp 1 {
                route-distinguisher "65000:501"
                vsi-import ["vsi500_import"]
                vsi-export ["vsi500_export"]
                pw-template-binding "PW500" {
                    split-horizon-group "CORE"
                }
            }
            bgp-vpls {
                admin-state enable
                maximum-ve-id 65535
                ve {
                    name "501"
                    id 501
                }
            }
            spoke-sdp 14:500 {
            }
            spoke-sdp 15:500 {
            }
            bgp-mh-site "MH-site-2" {
                admin-state enable
                id 2
                spoke-sdp 15:500
            }
        }
    }
}

The following are general comments about the configuration of VPLS-500:

- As seen in the preceding CLI output for PE-1, there are four provisioned SDPs that the service VPLS-500 uses in this example. SDP 14 and SDP 15 are the tunnels over which the T-LDP FEC128 pseudowires for VPLS-500 are carried (according to RFC 4762), whereas SDP 12 and SDP 13 are the tunnels for the core BGP pseudowires (based on RFC 4761).
- The bgp context provides the general service BGP configuration used by BGP VPLS and BGP MH:
    - Route distinguisher (the notation chosen is <AS_number>:<500 + node_id>).
    - VSI export policies are used to add the export route targets included in all the BGP updates sent to the BGP peers.
    - VSI import policies are used to control the NLRIs accepted in the RIB, normally based on the route targets.
    - Both VSI export and VSI import policies can be used to modify attributes such as the Local Preference (LP), which influences the BGP MH Designated Forwarder (DF) election (LP is the second rule in the BGP MH election process, as previously discussed). The use of these policies is described later in the chapter.
- The pw-template-binding command maps the previously defined pw-template PW500 to the SHG ‟CORE”, so all the BGP-signaled pseudowires are part of this SHG. Although not shown in this example, the pw-template-binding command can also be used to instantiate pseudowires within different SHGs, based on different import route targets:

[ex:configure service vpls "VPLS-500" bgp 1]
A:admin@PE-1# pw-template-binding "PW500" ?
 pw-template-binding
 apply-groups         - Apply a configuration group at this level
 apply-groups-exclude - Exclude a configuration group at this level
 bfd-liveness         - Enable BFD
 bfd-template         - BFD template name for PW-Template binding
 import-rt            - Import route-target communities
 split-horizon-group  - Split horizon group

 Choice: oper-group-association
  monitor-oper-group  :- Operational group to monitor
  oper-group          :- Operational group

Note: Detailed BGP-VPLS configuration is out of the scope of this chapter. For more information, see chapter BGP VPLS.
- The BGP-signaled pseudowires (from PE-1 to PE-2 and PE-3) are set up according to the configuration in the bgp context. Besides those pseudowires, VPLS-500 also has two more pseudowires signaled by T-LDP: spoke-SDP 14:500 (to MTU-4) and spoke-SDP 15:500 (to MTU-5).
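The route distinguisher notation used in this chapter is plain arithmetic on the node number. The following Python sketch assumes that the node number is the numeric suffix of each node name (PE-1 is 1, MTU-4 is 4, and so on) and checks the rule against the route-distinguisher values configured in VPLS-500 on PE-1, PE-2, MTU-4, and MTU-5. The helper name `route_distinguisher` is hypothetical.

```python
def route_distinguisher(as_number: int, node_id: int, base: int = 500) -> str:
    """Build an RD with this chapter's notation: <AS_number>:<500 + node_id>."""
    return f"{as_number}:{base + node_id}"


# RDs as configured in VPLS-500 in this chapter.
CONFIGURED = {"PE-1": "65000:501", "PE-2": "65000:502",
              "MTU-4": "65000:504", "MTU-5": "65000:505"}

for node, rd in CONFIGURED.items():
    node_id = int(node.split("-")[1])   # assumption: numeric suffix is the node ID
    assert route_distinguisher(65000, node_id) == rd, node
print("all RDs follow <AS_number>:<500 + node_id>")
```

The same 500 + node_id value is also reused for the VE name and ID on PE-1 (501) and PE-2 (502).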

The MH site name is defined by a string of up to 32 characters:

[ex:configure service vpls "VPLS-500"]
A:admin@PE-1# bgp-mh-site ?
 [site-name] <string>
 <string> - <1..32 characters>
 Name for the specific site

The general BGP MH configuration parameters for a particular multi-homing site are as follows:

[ex:configure service vpls "VPLS-500"]
A:admin@PE-1# bgp-mh-site "MH-site-2" ?
 bgp-mh-site
 activation-timer     - Time that the local sites are in standby status, waiting for
                        BGP updates
 admin-state          - Administrative state of the VPLS BGP multi-homing site
 apply-groups         - Apply a configuration group at this level
 apply-groups-exclude - Exclude a configuration group at this level
 boot-timer           - Time that system waits after node reboot and before it runs
                        DF election algorithm
 failed-threshold     - Threshold for the site to be declared down
 id                   - ID for the site
 min-down-timer       - Minimum downtime for BGP multi-homing site after transition
                        from up to down
 monitor-oper-group   - Operational group to monitor

 Choice: site-object
  mesh-sdp-binds      :- Specify if a mesh-sdp-binding is associated with this site
  sap                 :- SAP to be associated with this site
  shg-name            :- Split horizon group to be associated with this site
  spoke-sdp           :- SDP to be associated with this site

Where:

- The site name is defined by a string of up to 32 characters.
- The id is an integer that identifies the multi-homing site and is encoded in the BGP MH NLRI. This ID must match the ID used on the peer node to which the same multi-homing site is connected. That is, MH-site-2 must use the same site ID on PE-1 and PE-2 (value 2 in this example).
- A site can use only one of the four potential site objects at a time: spoke SDP, SAP, SHG, or mesh SDP binding. To add more than one SAP or spoke SDP to the same site, an SHG composed of those SAP or spoke SDP objects must be used as the site object. When a new object is configured in a site, it replaces the previous object in that site.
- The failed-threshold command defines how many objects must be down for the site to be declared down. This command is only relevant for multi-object sites (SHGs and mesh SDP bindings). By default, all the objects in a site must be down for the site to be declared operationally down.

[ex:configure service vpls "VPLS-500" bgp-mh-site "MH-site-2"]
A:admin@PE-1# failed-threshold ?
 failed-threshold (<number> | <keyword>)
 <number>  - <1..1000>
 <keyword> - all
 Default   - all
 Threshold for the site to be declared down
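The site rules above (a single object per site, and a failed-threshold counted in down objects) can be summarized with the following Python sketch. It is a simplified model for illustration, not SR OS code: `MhSite`, `set_object`, and `is_oper_up` are hypothetical names, the object kinds mirror the site-object choices in the CLI help output, and the threshold is applied literally as "declare the site down once at least this many members are down".

```python
from dataclasses import dataclass
from typing import Optional, Tuple, Union

SITE_OBJECT_KINDS = ("spoke-sdp", "sap", "shg-name", "mesh-sdp-binds")


@dataclass
class MhSite:
    """Hypothetical model of a bgp-mh-site: one site object plus a failed-threshold."""
    name: str
    site_id: int
    failed_threshold: Union[int, str] = "all"  # 1..1000 or "all" (the default)
    site_object: Optional[Tuple[str, str]] = None

    def set_object(self, kind: str, value: str) -> None:
        # Only one object per site: configuring a new one replaces the previous one.
        if kind not in SITE_OBJECT_KINDS:
            raise ValueError(f"unknown site object: {kind}")
        self.site_object = (kind, value)

    def is_oper_up(self, members_up) -> bool:
        """members_up: up/down state of each member of the site object."""
        down = sum(1 for up in members_up if not up)
        if self.failed_threshold == "all":
            return down < len(members_up)
        # The site is declared down once at least failed_threshold members are down.
        return down < self.failed_threshold


site = MhSite("MH-site-1", 1)
site.set_object("shg-name", "site-1")   # SHG with SAPs 1/1/1:7 and 1/1/2:8
print(site.is_oper_up([True, False]))   # True: only one of two SAPs is down
print(site.is_oper_up([False, False]))  # False: all SAPs are down
site.failed_threshold = 1
print(site.is_oper_up([True, False]))   # False: one failed SAP is now enough
```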

The boot-timer specifies for how long the service manager waits after a node reboot before running the MH procedures. The boot-timer value should be configured to allow for the BGP sessions to come up and for the NLRI information to be refreshed/exchanged. In environments with the default BGP MRAI (30 seconds), it is highly recommended to increase this value (for instance, 120 seconds for a normal configuration). The boot-timer is only important when a node comes back up and would become the DF. Default value: 10 seconds.

[ex:configure service vpls "VPLS-500" bgp-mh-site "MH-site-2"]
A:admin@PE-1# boot-timer ?
 boot-timer <number>
 <number> - <0..600> - seconds
 Time that system waits after node reboot and before it runs DF election algorithm

The activation-timer command defines the amount of time the service manager keeps the local objects in standby (in the absence of BGP updates from remote PEs) before running the DF election algorithm to decide whether the site should be unblocked. Provided that the site is operationally up, the timer is started when one of the following events occurs:

- Manual site activation, by enabling the admin-state at the site level or at the member object level (SAPs or pseudowires)
- Site activation after a failure

The BGP MH election procedures are resumed upon expiration of this timer or upon the arrival of a BGP MH update for the multi-homing site. Default value: 2 seconds.

[ex:configure service vpls "VPLS-500" bgp-mh-site "MH-site-2"]
A:admin@PE-1# activation-timer ?
 activation-timer <number>
 <number> - <0..100> - seconds
 Time that the local sites are in standby status, waiting for BGP updates
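The activation-timer behavior can be restated as a function of time. The following Python sketch is a simplified model assuming a single site, one activation event, and times in seconds; `election_run_time` is a hypothetical name, while the 0..100 range and the 2-second default come from the description and help output above.

```python
from typing import Optional


def election_run_time(activated_at: float,
                      site_oper_up: bool = True,
                      activation_timer: float = 2.0,
                      first_update_at: Optional[float] = None) -> Optional[float]:
    """Return when the DF election runs after a site activation (simplified).

    activated_at     -- time of manual activation or recovery after a failure
    activation_timer -- seconds, 0..100 (default 2)
    first_update_at  -- arrival time of the first BGP MH update for the site, if any
    Returns None when the site is not operationally up (the timer is not started).
    """
    if not 0 <= activation_timer <= 100:
        raise ValueError("activation-timer must be 0..100 seconds")
    if not site_oper_up:
        return None
    expiry = activated_at + activation_timer
    # Objects stay in standby until the timer expires or an update arrives.
    if first_update_at is not None and activated_at <= first_update_at < expiry:
        return first_update_at
    return expiry


print(election_run_time(100.0))                         # 102.0: timer expiry
print(election_run_time(100.0, first_update_at=100.5))  # 100.5: update arrived first
print(election_run_time(100.0, site_oper_up=False))     # None: timer not started
```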


When a BGP MH site goes down, it may be preferable for it to stay down for a minimum time. This is configured with the min-down-timer command. When set to zero, this timer is disabled.

[ex:configure service vpls "VPLS-500" bgp-mh-site "MH-site-2"]
A:admin@PE-1# min-down-timer ?
 min-down-timer <number>
 <number> - <0..100> - seconds
 Minimum downtime for BGP multi-homing site after transition from up to down
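As a simplified illustration, the following Python sketch applies a min-down-timer to a sequence of operational changes of a site's objects. It assumes that the hold is measured from the up-to-down transition and that a new failure during the hold cancels any pending recovery; `apply_min_down` is a hypothetical name and not an SR OS function.

```python
def apply_min_down(events, min_down_timer):
    """Apply a min-down-timer to a site's operational changes (simplified).

    events -- time-sorted (time, objects_up) pairs for the site objects
    Returns the (time, site_up) transitions; min_down_timer=0 disables the hold.
    """
    transitions = []
    site_up = True
    hold_until = 0.0
    pending_up = None  # time at which a held recovery takes effect
    for t, objects_up in events:
        if pending_up is not None and t >= pending_up:
            transitions.append((pending_up, True))  # held recovery took effect
            site_up, pending_up = True, None
        if not objects_up:
            if site_up:
                site_up = False
                hold_until = t + min_down_timer
                transitions.append((t, False))
            pending_up = None                       # failed again: cancel recovery
        elif not site_up:
            pending_up = max(t, hold_until)         # stay down until the hold ends
    if pending_up is not None:
        transitions.append((pending_up, True))
    return transitions


flap = [(0, False), (1, True), (2, False), (3, True)]
print(apply_min_down(flap, min_down_timer=5))  # [(0, False), (5, True)]
print(apply_min_down(flap, min_down_timer=0))  # [(0, False), (1, True), (2, False), (3, True)]
```

With a 5-second timer, the flapping objects produce a single down/up cycle toward BGP MH instead of two, which is the dampening effect the timer is meant to provide.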

The boot-timer, activation-timer, and min-down-timer commands can be provisioned at service level or at global level. The service level settings have precedence and override the global configuration. When no timer values are provisioned at global level, the default values apply; when no timer values are provisioned at service level, the timers inherit the global values.

[ex:configure redundancy bgp-mh]
A:admin@PE-1# site ?
 site
 activation-timer - Time to keep local sites in standby status before running DF election algorithm
 boot-timer       - Time that system waits after node reboot and before it runs DF election algorithm
 min-down-timer   - Minimum downtime for BGP multi-homing site after transition from up to down
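The precedence rule is a three-step lookup: service level, then global level, then default. The following Python sketch is illustrative only; `effective_timer` is a hypothetical helper, the defaults are the boot-timer and activation-timer values given earlier in this section (no min-down-timer default is stated there, so none is assumed), and the 120-second global value mirrors the boot-timer recommendation above.

```python
# Defaults stated in this chapter; no min-down-timer default is given here.
DEFAULT_TIMERS = {"boot-timer": 10, "activation-timer": 2}


def effective_timer(name, service_value=None, global_value=None):
    """Resolve a BGP MH timer: service level, then global level, then default."""
    if service_value is not None:
        return service_value          # service-level setting overrides global
    if global_value is not None:
        return global_value           # inherited from configure redundancy bgp-mh
    return DEFAULT_TIMERS[name]       # KeyError if no default is known here


print(effective_timer("boot-timer"))                                      # 10
print(effective_timer("boot-timer", global_value=120))                    # 120
print(effective_timer("boot-timer", service_value=60, global_value=120))  # 60
print(effective_timer("activation-timer"))                                # 2
```

Checking for `None` rather than truthiness matters here, because 0 is a valid configured value for these timers.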

Each site has three possible states:

- Admin state: controlled by the admin-state command.
- Operational state: controlled by the operational status of the individual site objects.
- Designated Forwarder (DF) state: controlled by the BGP MH election algorithm.

The following CLI output shows the three states for BGP MH site ‟MH-site-1” on MTU-5:

[]
A:admin@MTU-5# show service id 500 site MH-site-1
===============================================================================
Site Information
===============================================================================
Site Name            : MH-site-1
-------------------------------------------------------------------------------
Site Id              : 1
Dest                 : sap:1/1/1:8         Mesh-SDP Bind      : no
Admin Status         : Enabled             Oper Status        : up
Designated Fwdr      : No
DF UpTime            : 0d 00:00:00         DF Chg Cnt         : 0
Boot Timer           : default             Timer Remaining    : 0d 00:00:00
Site Activation Timer: default             Timer Remaining    : 0d 00:00:00
Min Down Timer       : default             Timer Remaining    : 0d 00:00:00
Failed Threshold     : default(all)
Monitor Oper Grp     : (none)
===============================================================================
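When many sites must be checked, the three states can be extracted from this show output with a short script. The following Python sketch assumes that the output text has already been captured (for example, from a CLI session log) and relies only on the field labels shown above; `parse_site` and `is_active_df` are hypothetical helpers, not SR OS tools.

```python
import re

SAMPLE = """\
Site Name            : MH-site-1
Site Id              : 1
Dest                 : sap:1/1/1:8         Mesh-SDP Bind      : no
Admin Status         : Enabled             Oper Status        : up
Designated Fwdr      : No
DF UpTime            : 0d 00:00:00         DF Chg Cnt         : 0
"""

FIELDS = {
    "admin": r"Admin Status\s*:\s*(\S+)",
    "oper": r"Oper Status\s*:\s*(\S+)",
    "df": r"Designated Fwdr\s*:\s*(\S+)",
    "df_changes": r"DF Chg Cnt\s*:\s*(\d+)",
}


def parse_site(text):
    """Extract the three site states (and the DF change count) from the output."""
    info = {}
    for key, pattern in FIELDS.items():
        match = re.search(pattern, text)
        info[key] = match.group(1) if match else None
    return info


def is_active_df(info):
    """True when the output shows the site enabled, operationally up, and DF."""
    return info["admin"] == "Enabled" and info["oper"] == "up" and info["df"] == "Yes"


info = parse_site(SAMPLE)
print(info)               # {'admin': 'Enabled', 'oper': 'up', 'df': 'No', 'df_changes': '0'}
print(is_active_df(info))  # False: MTU-5 is the non-DF for MH-site-1
```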

On PE-1, MH-site ‟MH-site-2” is configured with site ID 2 and object spoke-SDP 15:500 (the pseudowire established from PE-1 to MTU-5).

The following CLI output shows the service configuration for PE-2. The site ID is 2, that is, the same value configured on PE-1. The object defined in PE-2’s site is spoke-SDP 25:500 (the pseudowire established from PE-2 to MTU-5).

# on PE-2:
configure {
    service {
        pw-template "PW500" {
            pw-template-id 500
            provisioned-sdp use
        }
        sdp 21 {
            admin-state enable
            description "SDP to transport BGP-signaled PWs"
            delivery-type mpls
            path-mtu 8000
            signaling bgp
            far-end {
                ip-address 192.0.2.1
            }
            lsp "LSP-PE-2-PE-1" { }
        }
        sdp 23 {
            admin-state enable
            description "SDP to transport BGP-signaled PWs"
            delivery-type mpls
            path-mtu 8000
            signaling bgp
            far-end {
                ip-address 192.0.2.3
            }
            lsp "LSP-PE-2-PE-3" { }
        }
        sdp 24 {
            admin-state enable
            delivery-type mpls
            path-mtu 8000
            far-end {
                ip-address 192.0.2.4
            }
            lsp "LSP-PE-2-MTU-4" { }
        }
        sdp 25 {
            admin-state enable
            delivery-type mpls
            path-mtu 8000
            far-end {
                ip-address 192.0.2.5
            }
            lsp "LSP-PE-2-MTU-5" { }
        }
        vpls "VPLS-500" {
            admin-state enable
            service-id 500
            customer "1"
            bgp 1 {
                route-distinguisher "65000:502"
                vsi-import ["vsi500_import"]
                vsi-export ["vsi500_export"]
                pw-template-binding "PW500" {
                    split-horizon-group "CORE"
                }
            }
            bgp-vpls {
                admin-state enable
                maximum-ve-id 65535
                ve {
                    name "502"
                    id 502
                }
            }
            spoke-sdp 24:500 {
            }
            spoke-sdp 25:500 {
            }
            bgp-mh-site "MH-site-2" {
                admin-state enable
                id 2
                spoke-sdp 25:500
            }
        }
    }
}

MTU service configuration

The following CLI output shows the service level configuration on MTU-4.

# on MTU-4:
configure {
    service {
        sdp 41 {
            admin-state enable
            delivery-type mpls
            path-mtu 8000
            far-end {
                ip-address 192.0.2.1
            }
            lsp "LSP-MTU-4-PE-1" { }
        }
        sdp 42 {
            admin-state enable
            delivery-type mpls
            path-mtu 8000
            far-end {
                ip-address 192.0.2.2
            }
            lsp "LSP-MTU-4-PE-2" { }
        }
        vpls "VPLS-500" {
            admin-state enable
            service-id 500
            customer "1"
            bgp 1 {
                route-distinguisher "65000:504"
                route-target {
                    export "target:65000:500"
                    import "target:65000:500"
                }
            }
            endpoint "CORE" {
                suppress-standby-signaling false
            }
            split-horizon-group "site-1" {
            }
            spoke-sdp 41:500 {
                endpoint {
                    name "CORE"
                    precedence primary
                }
                stp {
                    admin-state disable
                }
            }
            spoke-sdp 42:500 {
                endpoint {
                    name "CORE"
                }
                stp {
                    admin-state disable
                }
            }
            bgp-mh-site "MH-site-1" {
                admin-state enable
                id 1
                shg-name "site-1"
            }
            sap 1/1/1:7 {
                split-horizon-group "site-1"
            }
            sap 1/1/2:8 {
                split-horizon-group "site-1"
                eth-cfm {
                    mep md-admin-name "domain-1" ma-admin-name "assoc-1" mep-id 48 {
                        admin-state enable
                        direction down
                        fault-propagation use-if-status-tlv
                        ccm true
                    }
                }
            }
        }
    }
}

MTU-4 is configured with the following characteristics:

- The bgp context provides the general BGP parameters for service 500 on MTU-4. Here, the route-target command is used instead of the vsi-import and vsi-export commands, because the intent in this example is only to configure the export and import route targets; there is no need to modify any other attribute. If the local preference is to be modified (to influence the DF election), VSI policies (vsi-import and vsi-export) must be configured instead.
- An A/S pseudowire configuration is used to control the pseudowire redundancy towards the core.
- The multi-homing site MH-site-1 has site ID 1 and an SHG as its object. SHG site-1 is composed of SAP 1/1/1:7 and SAP 1/1/2:8. As previously discussed, the site is not declared operationally down until both SAPs belonging to the site are down. This behavior can be changed with the failed-threshold command (for instance, to bring the site down when only one object has failed, even though the second SAP is still up).
- As an example, a Y.1731 MEP with fault propagation has been defined on SAP 1/1/2:8. As discussed later in the chapter, this MEP signals the status of the SAP (as a result of the BGP MH process) to CE-8.

The service configuration in MTU-5 is as follows:

# on MTU-5:
configure {
    service {
        sdp 51 {
            admin-state enable
            delivery-type mpls
            path-mtu 8000
            far-end {
                ip-address 192.0.2.1
            }
            lsp "LSP-MTU-5-PE-1" { }
        }
        sdp 52 {
            admin-state enable
            delivery-type mpls
            path-mtu 8000
            far-end {
                ip-address 192.0.2.2
            }
            lsp "LSP-MTU-5-PE-2" { }
        }
        vpls "VPLS-500" {
            admin-state enable
            service-id 500
            customer "1"
            bgp 1 {
                route-distinguisher "65000:505"
                route-target {
                    export "target:65000:500"
                    import "target:65000:500"
                }
            }
            spoke-sdp 51:500 {
            }
            spoke-sdp 52:500 {
            }
            bgp-mh-site "MH-site-1" {
                admin-state enable
                id 1
                sap 1/1/1:8
            }
            sap 1/1/1:8 {
            }
        }
    }
}

Influencing the DF election

As previously described, assuming that the sites on the two nodes taking part in the same multi-homing site are both up, the two tie-breakers for electing the DF are (in this order):

1. Highest LP
2. Lowest PE ID

The LP is 100 by default on all the routers. Under normal circumstances, if the LP is not changed on any router, MTU-4 is elected the DF for MH-site-1, whereas PE-1 is the DF for MH-site-2. Assume in this section that this behavior is changed for MH-site-2 to make PE-2 the DF. Because changing the system address (to make PE-2’s ID the lower of the two IDs) is usually not easy, the vsi-export policy on PE-2 is modified so that PE-2 announces its MH-site-2 NLRI with an LP of 150. Because LP 150 is greater than the default LP of 100 used by PE-1, PE-2 is elected as the DF for MH-site-2. The vsi-import policy remains unchanged and the vsi-export policy is modified as follows:

# on PE-2:
configure {
    policy-options {
        community "comm_core" {
            member "target:65000:500" { }
        }
        policy-statement "vsi500_export" {
            entry 10 {
                action {
                    action-type accept
                    local-preference 150
                    community {
                        add ["comm_core"]
                    }
                }
            }
        }
    }
}

On PE-1, the import and export policies are not modified. On PE-2, the policies were already applied in the bgp context of VPLS-500, so no service-level change is required:

# on PE-2:
configure {
    service {
        vpls "VPLS-500" {
            admin-state enable
            service-id 500
            customer "1"
            bgp 1 {
                route-distinguisher "65000:502"
                vsi-import ["vsi500_import"]
                vsi-export ["vsi500_export"]
                pw-template-binding "PW500" {
                    split-horizon-group "CORE"
                }
            }
---snip---

The DF state of PE-2 can be verified as follows:

[]
A:admin@PE-2# show service id 500 site MH-site-2
===============================================================================
Site Information
===============================================================================
Site Name            : MH-site-2
-------------------------------------------------------------------------------
Site Id              : 2
Dest                 : sdp:25:500          Mesh-SDP Bind      : no
Admin Status         : Enabled             Oper Status        : up
Designated Fwdr      : Yes
DF UpTime            : 0d 00:00:10         DF Chg Cnt         : 2
Boot Timer           : default             Timer Remaining    : 0d 00:00:00
Site Activation Timer: default             Timer Remaining    : 0d 00:00:00
Min Down Timer       : default             Timer Remaining    : 0d 00:00:00
Failed Threshold     : default(all)
Monitor Oper Grp     : (none)
===============================================================================

The import and export policies are applied at service 500 level, which means that the LP changes for all the potential multi-homing sites configured under service 500. Therefore, load balancing can be achieved on a per-service basis, but not within the same service.

These policies are applied on VPLS-500 for all the potential BGP applications: BGP VPLS, BGP MH, and BGP AD. In the example, the LP of the PE-2 BGP updates for both BGP MH and BGP VPLS is set to 150. However, this has no impact on BGP VPLS: a PE cannot receive two BGP VPLS NLRIs with the same VE-ID (each PE within the same VPLS requires a different VE-ID), so there are never competing NLRIs for the LP to decide between.

The vsi-export policy is restored to its original settings on PE-2, as follows:

# on PE-2:
configure {
    policy-options {
        community "comm_core" {
            member "target:65000:500" { }
        }
        policy-statement "vsi500_export" {
            entry 10 {
                action {
                    action-type accept
                    delete local-preference
                    community {
                        add ["comm_core"]
                    }
                }
            }
        }
    }
}

In all the PE nodes, the import and export policies applied in the bgp context of VPLS-500 have identical settings again, and PE-1 is the DF.

Black-hole avoidance

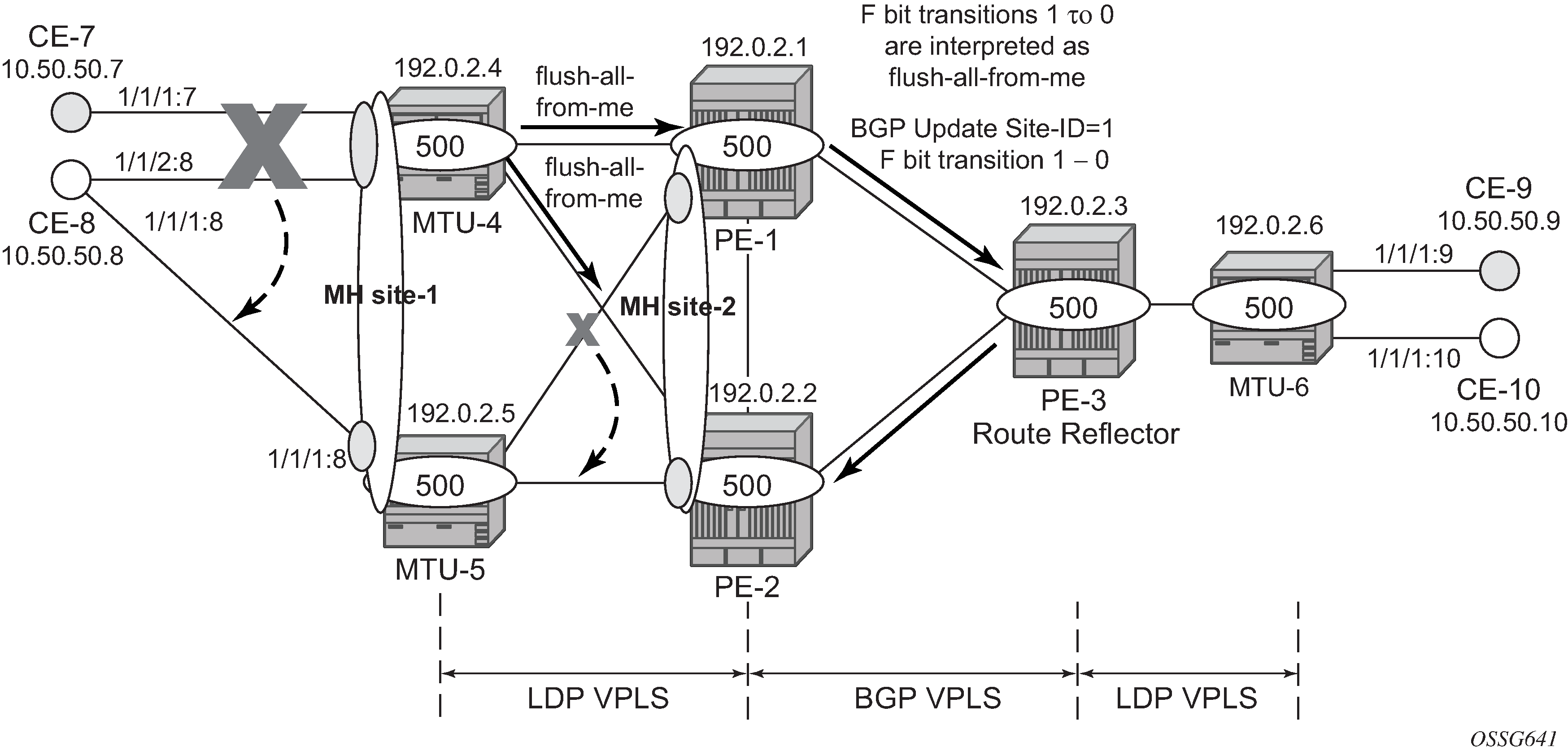

SR OS supports the appropriate MAC flush mechanisms for BGP MH, regardless of the protocol being used for the pseudowire signaling:

- LDP VPLS: The PE that contains the old DF site (the site that just experienced a DF to non-DF transition) always sends an LDP MAC flush-all-from-me message to all LDP pseudowires in the VPLS, including the LDP pseudowires associated with the new DF site. No specific configuration is required.
- BGP VPLS: The remote BGP VPLS PEs interpret an F bit transition from 1 to 0 as an implicit MAC flush-all-from-me indication. If a BGP update with F=0 is received from the previous DF PE, the remote PEs perform a MAC flush-all-from-me, flushing all the MACs associated with the pseudowire to the old DF PE. No specific configuration is required.

Double flushing does not happen because, between any pair of PEs, only one type of pseudowire (either BGP or LDP) is expected to exist, but not both.
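For the BGP VPLS case, the remote PE's reaction to the F bit can be sketched in Python as follows. This is a simplified model assuming that the last F bit is tracked per remote PE and that learned MACs are stored per pseudowire; `RemotePeFlush` is a hypothetical class, not an SR OS data structure, and the MAC address is illustrative.

```python
from collections import defaultdict


class RemotePeFlush:
    """Hypothetical model of the implicit BGP VPLS MAC flush (F bit 1 -> 0)."""

    def __init__(self):
        self.last_f_bit = {}            # remote PE -> last F bit received
        self.fdb = defaultdict(set)     # remote PE -> MACs learned on the PW to it

    def learn(self, pe, mac):
        self.fdb[pe].add(mac)

    def on_update(self, pe, f_bit):
        """Process an update from `pe` and return the MACs that were flushed."""
        previous = self.last_f_bit.get(pe)
        self.last_f_bit[pe] = f_bit
        if previous == 1 and f_bit == 0:
            # Implicit flush-all-from-me: drop every MAC learned on the PW to `pe`.
            return self.fdb.pop(pe, set())
        return set()


pe3 = RemotePeFlush()                    # viewpoint of PE-3
pe3.on_update("PE-1", 1)                 # PE-1 is the DF for MH-site-2
pe3.learn("PE-1", "00:00:00:00:00:08")
print(pe3.on_update("PE-1", 0))          # {'00:00:00:00:00:08'}: flushed
print(pe3.on_update("PE-1", 0))          # set(): no 1 -> 0 transition
```

Only the pseudowire to the old DF is flushed; MACs learned from other PEs are untouched, which keeps the flooding that follows a DF change limited.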

In the example, assuming MTU-4 and PE-1 are the DF nodes:

- When MH-site-1 is brought operationally down on MTU-4 (by default, this requires both SAPs to go down, unless the failed-threshold parameter is changed so that the site goes down when only one SAP is down), MTU-4 issues a flush-all-from-me message.
- When MH-site-2 is brought operationally down on PE-1, PE-1 issues a BGP update with F=0 and D=1. PE-2 and PE-3 receive the update and flush the MAC addresses learned on the pseudowire to PE-1.

Figure 3. MAC flush for BGP MH

Node failures implicitly trigger a MAC flush on the remote nodes, because the TLDP/BGP session to the failed node goes down.

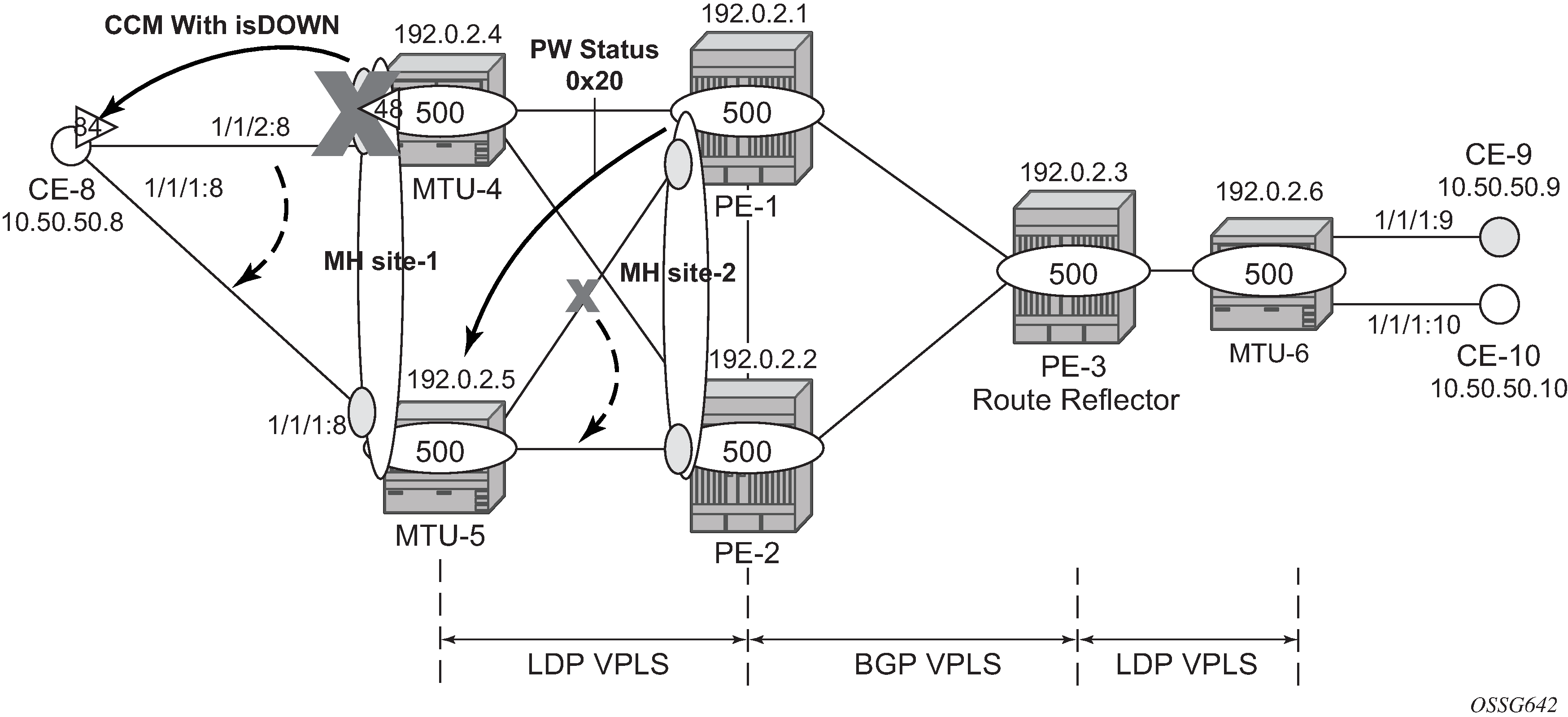

Access CE/PE signaling

BGP MH works at service level; therefore, no physical ports are torn down on the non-DF. Instead, the site objects are brought down operationally, while the physical port stays up and remains in use by any other services on that port. For this reason, the standby status of an object must be signaled to the remote PE or CE.

-

Access PEs that run BGP MH on spoke SDPs and are elected non-DF signal pseudowire standby status (0x20) to the other end. If the remote MTU does not support pseudowire status, a label withdrawal is performed instead. If there is more than one spoke SDP on the site (part of the same SHG), the signaling is sent on all the pseudowires of the site.

Note: The configure service vpls x spoke-sdp y:z pw-status signaling false parameter makes the PE send a TLDP label withdrawal instead of pseudowire status bits, even when the peer supports pseudowire status.

-

Multi-homed CEs connected through SAPs to the PEs running BGP MH are signaled by the PEs using Y.1731 CFM. The PEs either stop transmitting CCMs or send CCMs with isDown (interface status down encoding in the interface status TLV).
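The choice of standby signaling described in the two items above can be summarized as a decision function. The sketch below is a hypothetical Python model, not an SR OS API. Its parameter names mirror the options mentioned in this chapter (pw-status signaling, fault-propagation), but the function itself is illustrative only. Spoke SDP 16:500 in the usage lines is an assumed identifier.

```python
from enum import Enum


class Standby(Enum):
    PW_STATUS = "pseudowire status 0x20 (pwFwdingStandby)"
    LABEL_WITHDRAWAL = "TLDP label withdrawal"
    CCM_IS_DOWN = "CCMs with interface status TLV isDown"
    SUSPEND_CCM = "CCM transmission stopped"


def standby_signal(obj_type, peer_supports_pw_status=True,
                   pw_status_signaling=True,
                   fault_propagation="use-if-status-tlv"):
    """Return how a non-DF PE signals standby for one object of a BGP MH site."""
    if obj_type == "spoke-sdp":
        if peer_supports_pw_status and pw_status_signaling:
            return Standby.PW_STATUS
        # Remote MTU lacks PW status support, or pw-status signaling is false
        return Standby.LABEL_WITHDRAWAL
    if obj_type == "sap":
        # Down MEP on the SAP; behavior follows its fault-propagation option
        if fault_propagation == "use-if-status-tlv":
            return Standby.CCM_IS_DOWN
        if fault_propagation == "suspend-ccm":
            return Standby.SUSPEND_CCM
        raise ValueError(f"unknown fault-propagation option: {fault_propagation}")
    raise ValueError(f"unknown object type: {obj_type}")


def signal_site(objects):
    """Apply standby_signal to every object of a site, e.g. all spoke SDPs in one SHG."""
    return {name: standby_signal(obj_type, **options)
            for name, obj_type, options in objects}


# Hypothetical site with two spoke SDPs; the second has pw-status signaling false
site_actions = signal_site([
    ("spoke-sdp 15:500", "spoke-sdp", {}),
    ("spoke-sdp 16:500", "spoke-sdp", {"pw_status_signaling": False}),
])
```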

In this example, down MEPs are configured on MTU-4 SAP 1/1/2:8 and CE-8 SAP 1/1/2:8. Other MEPs can be configured in a similar way on MTU-4 SAP 1/1/1:7, MTU-5 SAP 1/1/1:8, and CE-8 SAPs 1/1/1:7 and 1/1/1:8. Access PE/CE signaling shows the MEPs on MTU-4 SAP 1/1/2:8 and CE-8. When MH-site-1 fails on MTU-4, MEP 48 starts sending CCMs with interface status down.

The CFM configuration required for SAP 1/1/2:8 on MTU-4 is as follows. Down MEPs are configured in the same way on the CE-8 and MTU-5 SAPs, but in a different association. The fault-propagation use-if-status-tlv option must be added. If the CE does not understand the CCM interface status TLV, the fault-propagation suspend-ccm option can be enabled instead, which stops the transmission of CCMs upon site failure. Detailed configuration guidelines for Y.1731 are beyond the scope of this chapter.

# on MTU-4:
configure {
    eth-cfm {
        domain "domain-1" {
            level 3
            name "domain-1"
            md-index 1
            association "assoc-1" {
                icc-based "Association48"
                ma-index 1
                ccm-interval 1s
                bridge-identifier "VPLS-500" {
                }
                remote-mep 84 {
                }
            }
        }
    }
}

# on MTU-4:
configure {
    service {
        vpls "VPLS-500" {
            sap 1/1/2:8 {
                split-horizon-group "site-1"
                eth-cfm {
                    mep md-admin-name "domain-1" ma-admin-name "assoc-1" mep-id 48 {
                        admin-state enable
                        direction down
                        fault-propagation use-if-status-tlv
                        ccm true
                    }
                }
            }
        }
    }
}

If CE-8 is a service router, upon receiving a CCM with isDown, an alarm will be triggered and the SAP will be brought down:

# on CE-8:

71 2021/01/20 16:09:02.701 CET WARNING: OSPF #2047 vprn8 VR: 2 OSPFv2 (0)
"LCL_RTR_ID 10.50.50.8: Interface int-CE-8-MTU-4 state changed to down (event IF_DOWN)"

70 2021/01/20 16:09:02.701 CET WARNING: SNMP #2004 vprn8 int-CE-8-MTU-4
"Interface int-CE-8-MTU-4 is not operational"

69 2021/01/20 16:09:02.700 CET MINOR: SVCMGR #2203 vprn8
"Status of SAP 1/1/2:8 in service 8 (customer 1) changed to admin=up oper=down flags=OamDownMEPFault "

68 2021/01/20 16:09:02.700 CET MINOR: SVCMGR #2108 vprn8
"Status of interface int-CE-8-MTU-4 in service 8 (customer 1) changed to admin=up oper=down"

67 2021/01/20 16:09:02.700 CET MINOR: ETH_CFM #2001 Base
"MEP 1/1/84 highest defect is now defRemoteCCM"

On CE-8, the status of the SAP can be verified as follows:

[]
A:admin@CE-8# show service id 8 sap 1/1/2:8
===============================================================================
Service Access Points(SAP)
===============================================================================
Service Id : 8
SAP : 1/1/2:8 Encap : q-tag
Description : (Not Specified)
Admin State : Up Oper State : Down
Flags : OamDownMEPFault
Multi Svc Site : None
Last Status Change : 01/20/2021 16:09:03
Last Mgmt Change : 01/20/2021 16:05:49
===============================================================================

As also depicted in Access PE/CE signaling, PE-1 signals pseudowire status standby (code 0x20) when it goes to the non-DF state for MH-site-2. MTU-5 receives that signaling and, based on the ignore-standby-signaling parameter, decides whether to send broadcast, unknown unicast, and multicast (BUM) traffic to PE-1. If MTU-5 is configured with ignore-standby-signaling, it ignores the pseudowire status bits and sends BUM traffic on both pseudowires at the same time, which is not normally desired. The following output shows the MTU-5 spoke SDP receiving the pseudowire status signaling. Although the spoke SDP stays operationally up, the Peer Pw Bits field shows pwFwdingStandby. MTU-5 does not send any traffic on this spoke SDP while the ignore-standby-signaling parameter is disabled.

[]
A:admin@MTU-5# show service id 500 sdp 51:500 detail
===============================================================================
Service Destination Point (Sdp Id : 51:500) Details
===============================================================================
-------------------------------------------------------------------------------
Sdp Id 51:500 -(192.0.2.1)
-------------------------------------------------------------------------------
Description : (Not Specified)
SDP Id : 51:500 Type : Spoke
Spoke Descr : (Not Specified)
Split Horiz Grp : (Not Specified)
Etree Root Leaf Tag: Disabled Etree Leaf AC : Disabled
VC Type : Ether VC Tag : n/a
Admin Path MTU : 8000 Oper Path MTU : 8000
Delivery : MPLS
Far End : 192.0.2.1 Tunnel Far End : n/a
Oper Tunnel Far End: 192.0.2.1
LSP Types : RSVP
---snip---
Admin State : Up Oper State : Up
---snip---
Endpoint : N/A Precedence : 4
PW Status Sig : Enabled
Force Vlan-Vc : Disabled Force Qinq-Vc : none
Class Fwding State : Down
Flags : None
Time to RetryReset : never Retries Left : 3
Mac Move : Blockable Blockable Level : Tertiary
Local Pw Bits : None
Peer Pw Bits : pwFwdingStandby
---snip---
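The effect of ignore-standby-signaling on MTU-5 can be sketched as a filter over its spoke SDPs. In this hypothetical Python model, each spoke SDP maps to the pseudowire status bits received from its peer, and the function returns the spoke SDPs that MTU-5 would forward on. Spoke SDP 52:500 toward PE-2 is an assumed identifier used only for illustration.

```python
PW_FWDING_STANDBY = 0x20  # pwFwdingStandby bit signaled by the non-DF PE


def forwarding_spokes(peer_pw_bits, ignore_standby_signaling=False):
    """Return the spoke SDPs MTU-5 would use for traffic, including BUM flooding."""
    return sorted(
        sdp for sdp, bits in peer_pw_bits.items()
        # Skip peers signaling standby, unless standby signaling is ignored
        if ignore_standby_signaling or not bits & PW_FWDING_STANDBY
    )


# 51:500 goes to PE-1 (non-DF, signals standby); 52:500 to PE-2 is hypothetical
peer_bits = {"51:500": PW_FWDING_STANDBY, "52:500": 0}
print(forwarding_spokes(peer_bits))                                 # ['52:500']
print(forwarding_spokes(peer_bits, ignore_standby_signaling=True))  # ['51:500', '52:500']
```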

Operational groups for BGP-MH

Operational groups (oper-group) make it possible to group objects into a generic group object and to associate its status with other service endpoints (pseudowires, SAPs, IP interfaces) located in the same or in different service instances. The operational group status is derived from the status of the individual components, using rules specific to the application that uses the concept. A number of other service entities, the monitoring objects, can be configured to monitor the operational group status and to drive their own status from it. In other words, if the operational group goes down, the monitoring objects are brought down. When one of the objects included in the operational group comes up, the entire group comes up, and therefore so do the monitoring objects.

This concept can be used to enhance the BGP-MH solution by avoiding black holes on the PE selected as the DF if the rest of the VPLS endpoints fail (spoke or mesh pseudowires and/or SAPs). Oper-groups and BGP-MH illustrates the use of operational groups together with BGP-MH. On PE-1 (and PE-2), all the BGP-VPLS pseudowires in the core are configured under the same oper-group group-1, and MH-site-2 is configured as a monitoring object. When the two BGP-VPLS pseudowires go down, oper-group group-1 is brought down, so MH-site-2 on PE-1 goes down as well (PE-2 becomes DF and PE-1 signals standby to MTU-5).

In the preceding example, this feature provides a solution to avoid a black-hole when PE-1 loses its connectivity to the core.

Operational groups are configured in two steps:

-

Identify a set of objects whose forwarding state should be considered as a whole, then group them under an operational group (in this case, oper-group group-1, which is configured in the bgp pw-template-binding context).

-

Associate other existing objects (clients) with the oper-group using the monitor-oper-group command (configured, in this case, in the site MH-site-2).

The following CLI excerpt shows the commands required (oper-group, monitor-oper-group).

# on PE-1:
configure {
    service {
        oper-group "group-1" {
        }
        vpls "VPLS-500" {
            bgp 1 {
                pw-template-binding "PW500" {
                    split-horizon-group "CORE"
                    oper-group "group-1"
                }
            }
            bgp-mh-site "MH-site-2" {
                monitor-oper-group "group-1"
            }
        }
    }
}

When all the BGP-VPLS pseudowires go down, oper-group group-1 goes down. Therefore, the monitoring object, site MH-site-2, also goes down, and PE-2 is elected as DF. Log 99 shows this sequence of events after SDPs 12 and 13 are disabled on PE-1:

# on PE-1:
configure {
    service {
        sdp 12 {
            admin-state disable
        }
        sdp 13 {
            admin-state disable
        }
    }
}

175 2021/01/20 16:15:32.377 CET WARNING: SVCMGR #2531 Base BGP-MH
"Service-id 500 site MH-site-2 is not the designated-forwarder"

174 2021/01/20 16:15:32.377 CET MAJOR: SVCMGR #2316 Base
"Processing of a SDP state change event is finished and the status of all affected SDP Bindings on SDP 12 has been updated."

173 2021/01/20 16:15:32.377 CET MAJOR: SVCMGR #2316 Base
"Processing of a SDP state change event is finished and the status of all affected SDP Bindings on SDP 13 has been updated."

172 2021/01/20 16:15:32.377 CET MINOR: SVCMGR #2306 Base
"Status of SDP Bind 15:500 in service 500 (customer 1) changed to admin=up oper=down flags="

171 2021/01/20 16:15:32.376 CET MINOR: SVCMGR #2326 Base
"Status of SDP Bind 15:500 in service 500 (customer 1) local PW status bits changed to pwFwdingStandby "

170 2021/01/20 16:15:32.376 CET MINOR: SVCMGR #2542 Base
"Oper-group group-1 changed status to down"

169 2021/01/20 16:15:32.376 CET MINOR: SVCMGR #2303 Base
"Status of SDP 13 changed to admin=down oper=down"

168 2021/01/20 16:15:32.376 CET MINOR: SVCMGR #2303 Base
"Status of SDP 12 changed to admin=down oper=down"

PE-1 is no longer the DF, as follows:

[]
A:admin@PE-1# show service id 500 site
===============================================================================
VPLS Sites
===============================================================================
Site Site-Id Dest Mesh-SDP Admin Oper Fwdr
-------------------------------------------------------------------------------
MH-site-2 2 sdp:15:500 no Enabled down No
-------------------------------------------------------------------------------
Number of Sites : 1
-------------------------------------------------------------------------------
===============================================================================

PE-2 becomes the DF.

[]
A:admin@PE-2# show service id 500 site
===============================================================================
VPLS Sites
===============================================================================
Site Site-Id Dest Mesh-SDP Admin Oper Fwdr
-------------------------------------------------------------------------------
MH-site-2 2 sdp:25:500 no Enabled up Yes
-------------------------------------------------------------------------------
Number of Sites : 1
-------------------------------------------------------------------------------
===============================================================================

The process reverts when at least one BGP-VPLS pseudowire comes back up.
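The oper-group behavior walked through above can be modeled in a few lines. This is a hypothetical Python sketch, not SR OS code. The group is up while at least one member is up, and the monitoring site can be up only while the group is up. The member names reuse the BGP VPLS SDP bindings listed in the show service oper-group output in this chapter. The hold-up and hold-down timers are not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class OperGroup:
    """Hypothetical model of an operational group used with BGP-MH."""

    name: str
    members: dict = field(default_factory=dict)  # member object -> operationally up?

    def set_member(self, member: str, up: bool) -> None:
        self.members[member] = up

    @property
    def up(self) -> bool:
        # Down only when every member is down; up as soon as one member comes up
        return any(self.members.values())


def site_oper_up(site_objects_up: bool, monitored: OperGroup) -> bool:
    """A monitoring BGP MH site is brought down whenever its oper-group is down."""
    return site_objects_up and monitored.up


group_1 = OperGroup("group-1")
group_1.set_member("12:4294967295", True)  # BGP VPLS PW to PE-2
group_1.set_member("13:4294967294", True)  # BGP VPLS PW to PE-3

states = [site_oper_up(True, group_1)]      # both PWs up: MH-site-2 can stay up
group_1.set_member("12:4294967295", False)
group_1.set_member("13:4294967294", False)
states.append(site_oper_up(True, group_1))  # both PWs down: MH-site-2 goes down
group_1.set_member("13:4294967294", True)
states.append(site_oper_up(True, group_1))  # one PW back up: the process reverts
print(states)  # [True, False, True]
```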

Show commands and debugging options

The main command to find out the status of a site is the show service id x site command.

[]
A:admin@MTU-5# show service id 500 site
===============================================================================
VPLS Sites
===============================================================================
Site Site-Id Dest Mesh-SDP Admin Oper Fwdr
-------------------------------------------------------------------------------
MH-site-1 1 sap:1/1/1:8 no Enabled up No
-------------------------------------------------------------------------------
Number of Sites : 1
-------------------------------------------------------------------------------
===============================================================================

A detail modifier is available:

[]
A:admin@MTU-5# show service id 500 site detail
===============================================================================
Site Information
===============================================================================
Site Name : MH-site-1
-------------------------------------------------------------------------------
Site Id : 1
Dest : sap:1/1/1:8 Mesh-SDP Bind : no
Admin Status : Enabled Oper Status : up
Designated Fwdr : No
DF UpTime : 0d 00:00:00 DF Chg Cnt : 0
Boot Timer : default Timer Remaining : 0d 00:00:00
Site Activation Timer: default Timer Remaining : 0d 00:00:00
Min Down Timer : default Timer Remaining : 0d 00:00:00
Failed Threshold : default(all)
Monitor Oper Grp : (none)
-------------------------------------------------------------------------------
Number of Sites : 1
===============================================================================

The detail view of the command displays information about the BGP MH timers. Specific timer values are shown only when the global values are overridden at the service level, in which case they are tagged with Ovr; otherwise, default is displayed. The Timer Remaining fields show the countdown of the boot timer and the site activation timer until the router tries to become DF again. This countdown is also shown only when the global timers have been overridden at the service level.

The objects on the non-DF site will be brought down operationally and flagged with StandByForMHProtocol, for example, for SAP 1/1/1:8 on non-DF MTU-5:

[]
A:admin@MTU-5# show service id 500 sap 1/1/1:8
===============================================================================
Service Access Points(SAP)
===============================================================================
Service Id : 500
SAP : 1/1/1:8 Encap : q-tag
Description : (Not Specified)
Admin State : Up Oper State : Down
Flags : StandByForMHProtocol
Multi Svc Site : None
Last Status Change : 01/20/2021 15:11:14
Last Mgmt Change : 01/20/2021 15:44:01
===============================================================================

For spoke SDP 25:500 on non-DF PE-2:

[]
A:admin@PE-2# show service id 500 sdp 25:500 detail
===============================================================================
Service Destination Point (Sdp Id : 25:500) Details
===============================================================================
-------------------------------------------------------------------------------
Sdp Id 25:500 -(192.0.2.5)
-------------------------------------------------------------------------------
Description : (Not Specified)
SDP Id : 25:500 Type : Spoke
---snip---
Admin State : Up Oper State : Down
---snip---
Flags : StandbyForMHProtocol
---snip---

The BGP MH routes in the RIB, RIB-In, and RIB-Out can be shown with the corresponding show router bgp routes l2-vpn and show router bgp neighbor x.x.x.x filter1 received-routes|advertised-routes family l2-vpn commands. The BGP MH routes are shown only when the l2-vpn family modifier is used. To display only the BGP MH routes among the l2-vpn routes, add the l2vpn-type multi-homing filter to the show router bgp routes command.

[]
A:admin@PE-3# show router bgp routes l2-vpn
===============================================================================
BGP Router ID:192.0.2.3 AS:65000 Local AS:65000
===============================================================================
Legend -
Status codes : u - used, s - suppressed, h - history, d - decayed, * - valid
l - leaked, x - stale, > - best, b - backup, p - purge
Origin codes : i - IGP, e - EGP, ? - incomplete
===============================================================================
BGP L2VPN Routes
===============================================================================
Flag RouteType Prefix MED
RD SiteId Label
Nexthop VeId BlockSize LocalPref
As-Path BaseOffset vplsLabelBase
-------------------------------------------------------------------------------
u*>i VPLS - - 0
65000:501 - -
192.0.2.1 501 8 100
No As-Path 497 524271

u*>i MultiHome - - 0
65000:501 2 -
192.0.2.1 - - 100
No As-Path - -

u*>i VPLS - - 0
65000:502 - -
192.0.2.2 502 8 100
No As-Path 497 524271

u*>i MultiHome - - 0
65000:502 2 -
192.0.2.2 - - 100
No As-Path - -
-------------------------------------------------------------------------------
Routes : 4
===============================================================================

The following output shows the L2-VPN BGP-MH routes from site 2 (PE-1 and PE-2) in detail:

[]
A:admin@PE-3# show router bgp routes l2-vpn l2vpn-type multi-homing siteid 2 hunt
===============================================================================
BGP Router ID:192.0.2.3 AS:65000 Local AS:65000
===============================================================================
Legend -
Status codes : u - used, s - suppressed, h - history, d - decayed, * - valid
l - leaked, x - stale, > - best, b - backup, p - purge
Origin codes : i - IGP, e - EGP, ? - incomplete
===============================================================================
BGP L2VPN-MULTIHOME Routes
===============================================================================
-------------------------------------------------------------------------------
RIB In Entries
-------------------------------------------------------------------------------
Route Type : MultiHome
Route Dist. : 65000:501
Site Id : 2
Nexthop : 192.0.2.1
From : 192.0.2.1
Res. Nexthop : n/a
Local Pref. : 100 Interface Name : NotAvailable
Aggregator AS : None Aggregator : None
Atomic Aggr. : Not Atomic MED : 0
AIGP Metric : None IGP Cost : n/a
Connector : None
Community : target:65000:500
l2-vpn/vrf-imp:Encap=19: Flags=-DF: MTU=0: PREF=0
Cluster : No Cluster Members
Originator Id : None Peer Router Id : 192.0.2.1
Flags : Used Valid Best IGP
Route Source : Internal
AS-Path : No As-Path
Route Tag : 0
Neighbor-AS : n/a
Orig Validation: N/A
Source Class : 0 Dest Class : 0
Add Paths Send : Default
Last Modified : 00h07m03s

Route Type : MultiHome
Route Dist. : 65000:502
Site Id : 2
Nexthop : 192.0.2.2
From : 192.0.2.2
Res. Nexthop : n/a
Local Pref. : 100 Interface Name : NotAvailable
Aggregator AS : None Aggregator : None
Atomic Aggr. : Not Atomic MED : 0
AIGP Metric : None IGP Cost : n/a
Connector : None
Community : target:65000:500
l2-vpn/vrf-imp:Encap=19: Flags=none: MTU=0: PREF=0
Cluster : No Cluster Members
Originator Id : None Peer Router Id : 192.0.2.2
Flags : Used Valid Best IGP
Route Source : Internal
AS-Path : No As-Path
Route Tag : 0
Neighbor-AS : n/a
Orig Validation: N/A
Source Class : 0 Dest Class : 0
Add Paths Send : Default
Last Modified : 00h07m03s
---snip---

The following shows the Layer 2 BGP routes on PE-1:

[]
A:admin@PE-1# show service l2-route-table ?
l2-route-table [detail] [bgp-ad] [multi-homing] [bgp-vpls] [bgp-vpws] [all-routes]
all-routes - <keyword>
bgp-ad - <keyword>
bgp-vpls - <keyword>
bgp-vpws - <keyword>
detail - keyword - display detailed information
multi-homing - <keyword>

[]
A:admin@PE-1# show service l2-route-table multi-homing
===============================================================================
Services: L2 Multi-Homing Route Information - Summary
===============================================================================
Svc Id L2-Routes (RD-Prefix) Next Hop SiteId State DF
-------------------------------------------------------------------------------
500 65000:502 192.0.2.2 2 up(0) clear
-------------------------------------------------------------------------------
No. of L2 Multi-Homing Route Entries: 1
===============================================================================

If PE-3 were the RR for MTU-4 and MTU-5 as well as for PE-1 and PE-2, PE-1 would have two more multi-homing L2 routes in this table, as follows:

[]
A:admin@PE-1# show service l2-route-table multi-homing
===============================================================================
Services: L2 Multi-Homing Route Information - Summary
===============================================================================
Svc Id L2-Routes (RD-Prefix) Next Hop SiteId State DF
-------------------------------------------------------------------------------
500 65000:504 192.0.2.4 1 up(0) set
500 65000:505 192.0.2.5 1 up(0) clear
500 65000:502 192.0.2.2 2 up(0) clear
-------------------------------------------------------------------------------
No. of L2 Multi-Homing Route Entries: 3
===============================================================================
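The DF column can be cross-checked against the DF election rules listed at the beginning of this chapter: the D flag first, then the highest Local Preference, then the lowest PE ID. The following hypothetical Python sketch applies those three rules, in order, to the multi-homing routes of one site. The route fields are assumptions chosen to match the show output. Local Preference defaults to 100, the value seen in the BGP route output.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address


@dataclass(frozen=True)
class MhRoute:
    pe_id: str            # originating PE system address
    down: bool = False    # D flag set (multi-homing object down)
    local_pref: int = 100


def elect_df(routes):
    """Return the PE ID of the DF for one multi-homing site."""
    # Lowest key wins: D=0 before D=1, then highest LP, then numerically lowest PE ID
    return min(
        routes,
        key=lambda r: (r.down, -r.local_pref, IPv4Address(r.pe_id)),
    ).pe_id


site_1 = [MhRoute("192.0.2.4"), MhRoute("192.0.2.5")]  # MTU-4, MTU-5
site_2 = [MhRoute("192.0.2.1"), MhRoute("192.0.2.2")]  # PE-1, PE-2
site_2_pe1_down = [MhRoute("192.0.2.1", down=True), MhRoute("192.0.2.2")]

print(elect_df(site_1))           # 192.0.2.4
print(elect_df(site_2))           # 192.0.2.1
print(elect_df(site_2_pe1_down))  # 192.0.2.2
```

Comparing PE IDs as `IPv4Address` objects rather than strings keeps the tie-break numeric, so, for example, 192.0.2.9 correctly wins over 192.0.2.10.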

When operational groups are configured (as previously shown), the following show command helps to find the operational dependencies between monitoring objects and group objects.

[]
A:admin@PE-1# show service oper-group "group-1" detail
===============================================================================
Service Oper Group Information
===============================================================================
Oper Group : group-1
Creation Origin : manual Oper Status: up
Hold DownTime : 0 secs Hold UpTime: 4 secs
Members : 2 Monitoring : 1
===============================================================================

=======================================================================
Member SDP-Binds for OperGroup: group-1
=======================================================================
SdpId SvcId Type IP address Adm Opr
-----------------------------------------------------------------------
12:4294967295 500 BgpVpls 192.0.2.2 Up Up
13:4294967294 500 BgpVpls 192.0.2.3 Up Up
-----------------------------------------------------------------------
SDP Entries found: 2
=======================================================================

===============================================================================
Monitoring Sites for OperGroup: group-1
===============================================================================
SvcId Site Site-Id Dest Admin Oper Fwdr
-------------------------------------------------------------------------------
500 MH-site-2 2 sdp:15:500 Enabled up Yes
-------------------------------------------------------------------------------
Site Entries found: 1
===============================================================================

For debugging, the following CLI sources can be used:

-

log-id 99 — Provides information about the site object changes and DF changes.

-

debug router bgp update (in classic CLI) — Shows the BGP updates for BGP MH, including the sent and received BGP MH NLRIs and flags.

# on MTU-4 (classic CLI): debug router "Base" bgp update

-

debug router ldp commands (in classic CLI) — Provides information about the pseudowire status bits being signaled as well as the MAC flush messages.

# on MTU-4 (classic CLI): debug router "Base" ldp peer 192.0.2.1 packet init detail label detail

As an example, log 99 shows the following events after MH-site-1 is disabled on MTU-4 by disabling both of its SAPs:

# on MTU-4:
configure {
    service {
        vpls "VPLS-500" {
            sap 1/1/1:7 {
                admin-state disable
            }
            sap 1/1/2:8 {
                admin-state disable
            }
        }
    }
}

120 2021/01/20 16:38:54.685 CET WARNING: SVCMGR #2531 Base BGP-MH
"Service-id 500 site MH-site-1 is not the designated-forwarder"

119 2021/01/20 16:38:54.685 CET MINOR: SVCMGR #2203 Base
"Status of SAP 1/1/2:8 in service 500 (customer 1) changed to admin=down oper=down flags=SapAdminDown MhStandby"

---snip---

On MTU-4, debugging is enabled for BGP updates and the following BGP-MH updates are logged:

4 2021/01/20 16:38:54.692 CET MINOR: DEBUG #2001 Base Peer 1: 192.0.2.3
"Peer 1: 192.0.2.3: UPDATE
Peer 1: 192.0.2.3 - Received BGP UPDATE:
Withdrawn Length = 0
Total Path Attr Length = 86
Flag: 0x90 Type: 14 Len: 28 Multiprotocol Reachable NLRI:
Address Family L2VPN
NextHop len 4 NextHop 192.0.2.5
[MH] site-id: 1, RD 65000:505
Flag: 0x40 Type: 1 Len: 1 Origin: 0
Flag: 0x40 Type: 2 Len: 0 AS Path:
Flag: 0x80 Type: 4 Len: 4 MED: 0
Flag: 0x40 Type: 5 Len: 4 Local Preference: 100
Flag: 0x80 Type: 9 Len: 4 Originator ID: 192.0.2.5
Flag: 0x80 Type: 10 Len: 4 Cluster ID:
1.1.1.1
Flag: 0xc0 Type: 16 Len: 16 Extended Community:
target:65000:500
l2-vpn/vrf-imp:Encap=19: Flags=-DF: MTU=0: PREF=0
"

---snip---

2 2021/01/20 16:38:54.686 CET MINOR: DEBUG #2001 Base Peer 1: 192.0.2.3
"Peer 1: 192.0.2.3: UPDATE
Peer 1: 192.0.2.3 - Send BGP UPDATE:
Withdrawn Length = 0
Total Path Attr Length = 72
Flag: 0x90 Type: 14 Len: 28 Multiprotocol Reachable NLRI:
Address Family L2VPN
NextHop len 4 NextHop 192.0.2.4
[MH] site-id: 1, RD 65000:504
Flag: 0x40 Type: 1 Len: 1 Origin: 0
Flag: 0x40 Type: 2 Len: 0 AS Path:
Flag: 0x80 Type: 4 Len: 4 MED: 0
Flag: 0x40 Type: 5 Len: 4 Local Preference: 100
Flag: 0xc0 Type: 16 Len: 16 Extended Community:
target:65000:500
l2-vpn/vrf-imp:Encap=19: Flags=D: MTU=0: PREF=0
"

As described earlier, debugging is enabled on MTU-4 for LDP messages between MTU-4 and PE-1. The following MAC flush-all-from-me message is sent by MTU-4 to PE-1.

1 2021/01/20 16:38:54.686 CET MINOR: DEBUG #2001 Base LDP
"LDP: LDP
Send Address Withdraw packet (msgId 383) to 192.0.2.1:0
Protocol version = 1
MAC Flush (All MACs learned from me)
Service FEC PWE3: ENET(5)/500 Group ID = 0 cBit = 0
"

With all the recommended tools enabled, a DF to non-DF transition can be observed, together with the corresponding MAC flush messages and the related BGP processing.

On PE-1, MH-site-2 is brought down by disabling the spoke SDP 15:500 object. A BGP-MH update is sent when the MH site goes down. When all objects on the VPLS are disabled, as in the following configuration, a BGP VPLS update is sent as well.

# on PE-1:
configure {
    service {
        vpls "VPLS-500" {
            spoke-sdp 14:500 {
                admin-state disable
            }
            spoke-sdp 15:500 {
                admin-state disable
            }
        }
    }
}

When MH-site-2 is torn down on PE-1, the debug router bgp update command shows two BGP updates from PE-1:

-

A BGP MH update for site ID 2 with flag D set (because the site is down).

-

A BGP VPLS update for veid 501 with flag D set, because no active objects other than the BGP pseudowires remain in the VPLS.

4 2021/01/20 16:43:15.326 CET MINOR: DEBUG #2001 Base Peer 1: 192.0.2.3
"Peer 1: 192.0.2.3: UPDATE
Peer 1: 192.0.2.3 - Send BGP UPDATE:
Withdrawn Length = 0
Total Path Attr Length = 72
Flag: 0x90 Type: 14 Len: 28 Multiprotocol Reachable NLRI:
Address Family L2VPN
NextHop len 4 NextHop 192.0.2.1
[VPLS/VPWS] preflen 17, veid: 501, vbo: 497, vbs: 8, label-base: 524271, RD 65000:501
Flag: 0x40 Type: 1 Len: 1 Origin: 0
Flag: 0x40 Type: 2 Len: 0 AS Path:
Flag: 0x80 Type: 4 Len: 4 MED: 0
Flag: 0x40 Type: 5 Len: 4 Local Preference: 100
Flag: 0xc0 Type: 16 Len: 16 Extended Community:
target:65000:500
l2-vpn/vrf-imp:Encap=19: Flags=D: MTU=1514: PREF=0
"

3 2021/01/20 16:43:15.326 CET MINOR: DEBUG #2001 Base Peer 1: 192.0.2.3
"Peer 1: 192.0.2.3: UPDATE
Peer 1: 192.0.2.3 - Send BGP UPDATE:
Withdrawn Length = 0
Total Path Attr Length = 72
Flag: 0x90 Type: 14 Len: 28 Multiprotocol Reachable NLRI:
Address Family L2VPN
NextHop len 4 NextHop 192.0.2.1
[MH] site-id: 2, RD 65000:501
Flag: 0x40 Type: 1 Len: 1 Origin: 0
Flag: 0x40 Type: 2 Len: 0 AS Path:
Flag: 0x80 Type: 4 Len: 4 MED: 0
Flag: 0x40 Type: 5 Len: 4 Local Preference: 100
Flag: 0xc0 Type: 16 Len: 16 Extended Community:
target:65000:500
l2-vpn/vrf-imp:Encap=19: Flags=D: MTU=0: PREF=0
"
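When many updates are captured, it can help to summarize them. The following hypothetical Python helper is not part of SR OS. It extracts the direction, NLRI type, site ID or VE ID, RD, next hop, and the Flags value from debug router bgp update text in the layout shown in this chapter. The regular expressions assume that exact layout and may need adjusting for other releases.

```python
import re

# Patterns assume the debug layout shown in this chapter (hypothetical helper)
UPDATE_SPLIT = re.compile(r"(Send|Received) BGP UPDATE:")
MH_NLRI = re.compile(r"\[MH\] site-id: (\d+), RD (\S+)")
VPLS_NLRI = re.compile(r"\[VPLS/VPWS\].*?veid: (\d+),.*?RD (\S+)")
NEXT_HOP = re.compile(r"NextHop len \d+ NextHop (\S+)")
L2_FLAGS = re.compile(r"Flags=([^:]*):")


def summarize_updates(debug_text):
    """Return one dict per BGP MH or BGP VPLS NLRI found in the debug text."""
    # split() keeps the captured direction: [prefix, dir1, body1, dir2, body2, ...]
    parts = UPDATE_SPLIT.split(debug_text)
    summary = []
    for direction, body in zip(parts[1::2], parts[2::2]):
        entry = {"direction": direction}
        if m := MH_NLRI.search(body):
            entry.update(nlri="MH", site_id=int(m.group(1)), rd=m.group(2))
        elif m := VPLS_NLRI.search(body):
            entry.update(nlri="VPLS", ve_id=int(m.group(1)), rd=m.group(2))
        else:
            continue  # not an L2VPN NLRI of interest
        if m := NEXT_HOP.search(body):
            entry["next_hop"] = m.group(1)
        if m := L2_FLAGS.search(body):
            entry["flags"] = m.group(1).strip()
        summary.append(entry)
    return summary
```

Applied to the two PE-1 updates above, the helper should return one VPLS entry (veid 501) and one MH entry (site ID 2), both from next hop 192.0.2.1 and both with flags D.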

The D flag, sent along with the BGP VPLS update for veid 501, is seen on the remote core PEs as a pseudowire status fault, although no TLDP runs in the core:

[]
A:admin@PE-2# show service id 500 all | match Flag
Flags : PWPeerFaultStatusBits
Flags : None
Flags : None
Flags : None

Conclusion

SR OS supports a wide range of service resiliency options, as well as system-level HA and MPLS mechanisms for the access and the core. BGP MH for VPLS completes the service resiliency tool set by adding a mechanism with several advantages over alternative solutions:

-

BGP MH provides a common resiliency mechanism for attachment circuits (SAPs), pseudowires (spoke SDPs), split horizon groups and mesh bindings

-

BGP MH is a network-based technique that does not require any interaction with the CE or MTU to which it provides redundancy.

The examples used in this chapter illustrate the configuration of BGP MH for access CEs and MTUs. Show and debug commands have also been suggested so that the operator can verify and troubleshoot the BGP MH procedures.