Operational Groups in EVPN Services

This chapter provides information about Operational Groups in EVPN Services.


Applicability

The information and configuration in this chapter are based on SR OS Release 21.10.R1. EVPN operational groups are supported in EVPN-VXLAN and EVPN-MPLS VPLS and R-VPLS services in SR OS Release 19.10.R2 and later, and in EVPN-MPLS Epipes in SR OS Release 19.5.R1 and later.

Overview

An operational group groups service objects and uses their status to drive the status of service endpoints (such as pseudowires, SAPs, and IP interfaces) located in the same or in different service instances. The operational group status is derived from the status of its individual member objects. Other service objects can monitor the operational group, in which case the operational group status determines the status of these monitoring objects.

If the operational group goes down, the monitoring objects are also brought operationally down. When one of the objects included in the operational group comes up, the entire operational group comes up, as well as the monitoring objects.
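The up/down rule above can be sketched in Python. This is a hypothetical model, not an SR OS API: `OperGroup` and `monitoring_object_up` are illustrative names, the hold-time timers are ignored, and the sketch assumes that a monitoring object also needs its own administrative and operational state to be up.

```python
from dataclasses import dataclass, field


@dataclass
class OperGroup:
    """Hypothetical operational group: up if at least one member is up."""
    name: str
    members: dict = field(default_factory=dict)  # member name -> operationally up?

    @property
    def is_up(self) -> bool:
        # A group with no member up (or with no members at all) is down.
        return any(self.members.values())


def monitoring_object_up(admin_up: bool, own_oper_up: bool, group: OperGroup) -> bool:
    """A monitoring object (for example, a SAP) is up only if its own state
    allows it AND the monitored operational group is up (assumption)."""
    return admin_up and own_oper_up and group.is_up


group = OperGroup("vpls-1_45", {"bgp-evpn mpls 1": True})
print(group.is_up, monitoring_object_up(True, True, group))  # True True

group.members["bgp-evpn mpls 1"] = False  # the last member goes down
print(group.is_up, monitoring_object_up(True, True, group))  # False False
```

Because the group uses `any()`, a single member coming back up is enough to bring the group, and every object monitoring it, back up.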

Operational groups for EVPN destinations

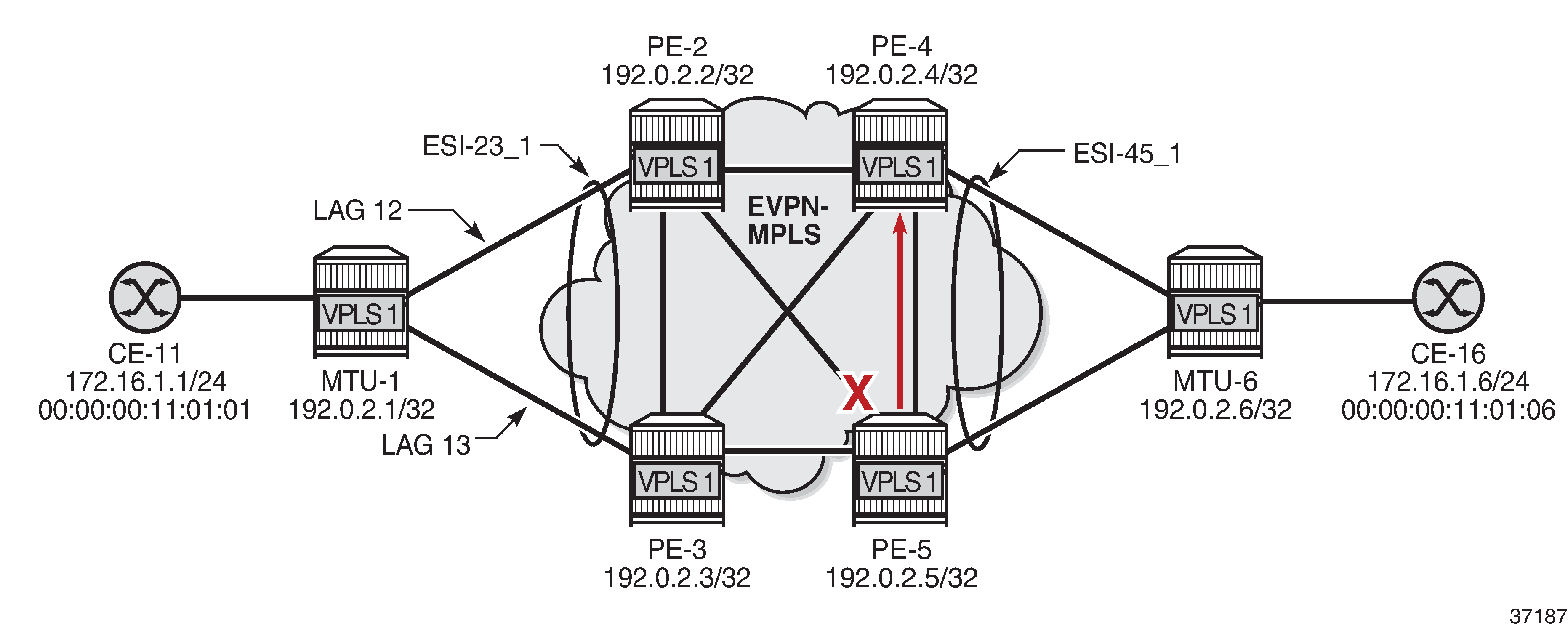

The figure "EVPN mesh going down triggers DF switchover from PE-5 to PE-4" shows a sample topology with VPLS 1 configured on all nodes. PE-4 and PE-5 share single-active Ethernet Segment (ES) "ESI-45_1", in which PE-5 is the Designated Forwarder (DF).

When the EVPN-VPLS service becomes isolated from the rest of the EVPN network (for example, when all EVPN destinations are removed on DF PE-5), an operational group for EVPN destinations is required to trigger a DF switchover and bring the monitoring access SAP (or spoke SDP) down. EVPN single-active multi-homing PEs that are elected as non-DF (NDF) must notify their attached access nodes to prevent them from sending traffic to the NDF. In this example, Ethernet Connectivity Fault Management (ETH-CFM) is used for this purpose: a down Maintenance Endpoint (MEP) with fault-propagation suspend-ccm is configured on the SAP, so when the SAP goes down, PE-5 stops sending Continuity Check Messages (CCMs). After the remote MEP on MTU-6 detects the failure, MTU-6 redirects its traffic to PE-4. This avoids blackholing traffic when PE-5 is disconnected from the EVPN core.

On PE-5, operational group "vpls-1_45" is assigned to the EVPN-MPLS instance of VPLS 1, and SAP 1/1/2:1 monitors this operational group. An operational group configured under a BGP-EVPN instance cannot also be configured under any other object, such as SAPs or SDP bindings.

# on PE-5:
configure {
    service {
        oper-group "vpls-1_45" {
            hold-time {
                down 0
                up 0
            }
        }
        vpls "VPLS 1" {
            admin-state enable
            service-id 1
            customer "1"
            bgp 1 {
            }
            bgp-evpn {
                evi 1
                routes {
                    mac-ip {
                        cfm-mac true
                    }
                }
                mpls 1 {
                    admin-state enable
                    oper-group "vpls-1_45"
                    auto-bind-tunnel {
                        resolution any
                    }
                }
            }
            sap 1/1/2:1 {
                description "to MTU-6"
                monitor-oper-group "vpls-1_45"
                eth-cfm {
                    mep md-admin-name "domain-1" ma-admin-name "association-11" mep-id 56 {
                        admin-state enable
                        mac-address 00:00:00:00:56:05
                        fault-propagation suspend-ccm
                        ccm true
                    }
                }
            }
        }
    }
}

Using an operational group in the EVPN service, the PE can detect whether it is isolated from the EVPN network and, if it is, trigger a DF switchover. The operational group associated with the EVPN-MPLS instance goes down in the following cases:

- bgp-evpn mpls is disabled
- VPLS is disabled
- all EVPN destinations associated with the instance are removed, for example, when:
  - no tunnels are available for auto-bind-tunnel resolution
  - the network ports facing the remote EVPN PEs are down
  - the BGP sessions to the route reflector or PEs are down
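The cases above reduce to a single condition, sketched here under the assumption that the relevant inputs are known. The `EvpnMplsInstance` class and `evpn_oper_group_up` function are hypothetical names; the destination set stands for the entries listed by show service id 1 evpn-mpls.

```python
from dataclasses import dataclass, field


@dataclass
class EvpnMplsInstance:
    """Hypothetical snapshot of the inputs that drive the oper-group of a
    bgp-evpn mpls instance in a VPLS."""
    mpls_admin_enabled: bool                          # bgp-evpn mpls admin-state
    vpls_admin_enabled: bool                          # VPLS admin-state
    destinations: set = field(default_factory=set)    # remote TEP addresses


def evpn_oper_group_up(inst: EvpnMplsInstance) -> bool:
    # Down if bgp-evpn mpls is disabled, the VPLS is disabled, or all EVPN
    # destinations are gone (no tunnels, core ports down, BGP sessions down).
    return inst.mpls_admin_enabled and inst.vpls_admin_enabled and bool(inst.destinations)


pe5 = EvpnMplsInstance(True, True, {"192.0.2.2", "192.0.2.3", "192.0.2.4"})
print(evpn_oper_group_up(pe5))  # True

pe5.destinations.clear()  # for example, LDP disabled: no tunnels for auto-bind-tunnel
print(evpn_oper_group_up(pe5))  # False
```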

Operational groups for Ethernet Segments (Port-active multi-homing)

Operational groups can be configured on single-active ESs that need to function as port-active multi-homing ESs. Port-active is a special single-active mode in which the PE is either DF or NDF for all the services attached to the ES. The configuration of a port-active ES is as follows:

# on PE-2:
configure {
    service {
        oper-group "vpls-1_23" {
            hold-time {
                down 0
                up 0
            }
        }
        system {
            bgp {
                evpn {
                    ethernet-segment "ESI-23_1" {
                        admin-state enable
                        esi 01:23:00:00:00:00:01:00:00:00
                        multi-homing-mode single-active
                        oper-group "vpls-1_23"
                        ac-df-capability exclude
                        df-election {
                            es-activation-timer 3
                            service-carving-mode manual
                            manual {
                                preference {
                                    value 150 # on PE-3: value 100
                                }
                            }
                        }
                        association {
                            lag "lag-12" { # on PE-3: lag "lag-13"
                            }
                        }
                    }
                }
            }
        }
    }
}

The LAG associated with the ES monitors ES operational group "vpls-1_23":

# on PE-2:
configure {
    lag "lag-12" {
        admin-state enable
        description "to MTU-1"
        encap-type dot1q
        mode access
        standby-signaling lacp # default value
        monitor-oper-group "vpls-1_23"
        max-ports 64
        lacp {
            mode active
            system-id 00:00:00:01:02:01
            system-priority 1
            administrative-key 1
        }
        port 1/1/2 {
        }
    }
}

When the operational group is configured on the ES and monitored on the associated LAG, the following applies:

- The status of the ES operational group is driven by the ES DF status: when a node becomes NDF, the ES operational group goes down and all the SAPs in the ES go down.
- Conversely, when all the SAPs in the ES go down, the ES operational group goes down and the node becomes NDF.
- The monitoring LAG goes down when the ES operational group is down, and the LAG signals its standby state to the access node. LAG standby signaling can be configured as lacp or power-off:

*[ex:/configure lag "lag-12"]
A:admin@PE-2# standby-signaling ?

 standby-signaling <keyword>

 <keyword> - (lacp|power-off)
 Default   - lacp

 Way of signaling a member port to the remote side

- standby-signaling lacp signals LACP out-of-sync to the CE when the application layer instructs the LAG to become standby.
- standby-signaling power-off brings the LAG members down and, consequently, the access SAPs.

The ES route and the AD routes for the ES are not withdrawn, because the router recognizes that the LAG is in standby as a result of the ES operational group.
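The chain from DF status to the access node can be summarized as a small state function. This is a sketch that restates the behavior described above; `PortActiveState`, `port_active_state`, and the status strings are illustrative, and the sketch assumes LACP runs on the LAG, as in the examples in this chapter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortActiveState:
    oper_group_up: bool
    lag_oper_up: bool
    access_node_sees: str       # what the attached access node observes
    es_routes_withdrawn: bool


def port_active_state(is_df: bool, standby_signaling: str = "lacp") -> PortActiveState:
    """Hypothetical model of a port-active ES whose LAG monitors the ES oper-group."""
    if standby_signaling not in ("lacp", "power-off"):
        raise ValueError(f"unsupported standby-signaling: {standby_signaling}")
    if is_df:
        # DF: oper-group up, LAG up (assumes LACP runs on the LAG).
        return PortActiveState(True, True, "LACP in-sync", False)
    if standby_signaling == "lacp":
        seen = "LACP out-of-sync"
    else:
        seen = "member links powered off"
    # NDF: oper-group and LAG down, but the ES and AD routes stay advertised.
    return PortActiveState(False, False, seen, False)


print(port_active_state(False, "lacp").access_node_sees)       # LACP out-of-sync
print(port_active_state(False, "power-off").access_node_sees)  # member links powered off
```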

The following restrictions apply:

- Multi-chassis LAG and ES are mutually exclusive; an MC-LAG cannot monitor an operational group:

*[ex:/configure redundancy multi-chassis peer 192.0.2.2 mc-lag lag "lag-13"]
A:admin@PE-3# commit
MINOR: MGMT_CORE #4001: configure lag "lag-13" - invalid combination mc-lag <-> monitor-oper-group

- LAG sub-groups are blocked on a LAG that monitors an operational group:

*[ex:/configure lag "lag-13" port 1/1/1]
A:admin@PE-3# sub-group 2
*[ex:/configure lag "lag-13" port 1/1/1]
A:admin@PE-3# commit
MINOR: MGMT_CORE #4001: configure lag "lag-13" port 1/1/1 - invalid combination port sub-group <-> monitor-oper-group - configure lag "lag-13" monitor-oper-group

- Only LAGs in access mode can monitor operational groups:

*[ex:/configure lag "lag-3"]
A:admin@PE-3# commit
MINOR: MGMT_CORE #3001: configure lag "lag-3" mode - monitor-oper-group not allowed when lag is not access

- Operational groups cannot be assigned to virtual ESs:

*[ex:/configure service system bgp evpn ethernet-segment "vESI-23_1" association lag "lag-5" virtual-ranges dot1q q-tag 1]
A:admin@PE-3# commit
MINOR: SVCMGR #12: configure service system bgp evpn ethernet-segment "vESI-23_1" oper-group - Inconsistent Value error - ethernet-segment oper-group not supported with virtual ethernet-segment

- Operational groups cannot be assigned to all-active ESs:

*[ex:/configure service system bgp evpn]
A:admin@PE-3# commit
MINOR: SVCMGR #12: configure service system bgp evpn ethernet-segment "AA_ESI-23_1" oper-group - Inconsistent Value error - all-active multi-homing not supported with ethernet-segment oper-group

- Operational groups cannot be assigned to ESs with service-carving-mode auto:

*[ex:/configure service system bgp evpn]
A:admin@PE-3# commit
MINOR: SVCMGR #12: configure service system bgp evpn ethernet-segment "ESI-23_auto" oper-group - Inconsistent Value error - ethernet-segment oper-group not supported with service-carving-mode auto
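For pre-checking a planned design, the restrictions above could be encoded as a simple validator. The `EsOperGroupConfig` fields and `restriction_violations` function are hypothetical summaries of the configuration, not SR OS objects; the router itself enforces these rules at commit time with the errors shown above.

```python
from dataclasses import dataclass


@dataclass
class EsOperGroupConfig:
    """Hypothetical summary of an ES with an oper-group and its monitoring LAG."""
    multi_homing_mode: str      # "single-active" or "all-active"
    service_carving_mode: str   # for example, "auto" or "manual"
    virtual_es: bool
    lag_mode: str               # for example, "access" or "network"
    lag_is_mc_lag: bool
    lag_has_sub_groups: bool


def restriction_violations(cfg: EsOperGroupConfig) -> list:
    """Return the restrictions listed above that this configuration breaks."""
    violations = []
    if cfg.lag_is_mc_lag:
        violations.append("mc-lag <-> monitor-oper-group")
    if cfg.lag_has_sub_groups:
        violations.append("port sub-group <-> monitor-oper-group")
    if cfg.lag_mode != "access":
        violations.append("monitor-oper-group requires an access LAG")
    if cfg.virtual_es:
        violations.append("oper-group not supported on a virtual ES")
    if cfg.multi_homing_mode == "all-active":
        violations.append("oper-group not supported on an all-active ES")
    if cfg.service_carving_mode == "auto":
        violations.append("oper-group not supported with service-carving-mode auto")
    return violations


pe2_es = EsOperGroupConfig("single-active", "manual", False, "access", False, False)
print(restriction_violations(pe2_es))  # []
```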

Link Loss Forwarding in EVPN-VPWS

Fault propagation in EVPN-VPWS services is supported using ETH-CFM. However, not all access nodes support ETH-CFM and, in that case, LAG standby-signaling lacp or power-off can be used instead.

Configuration

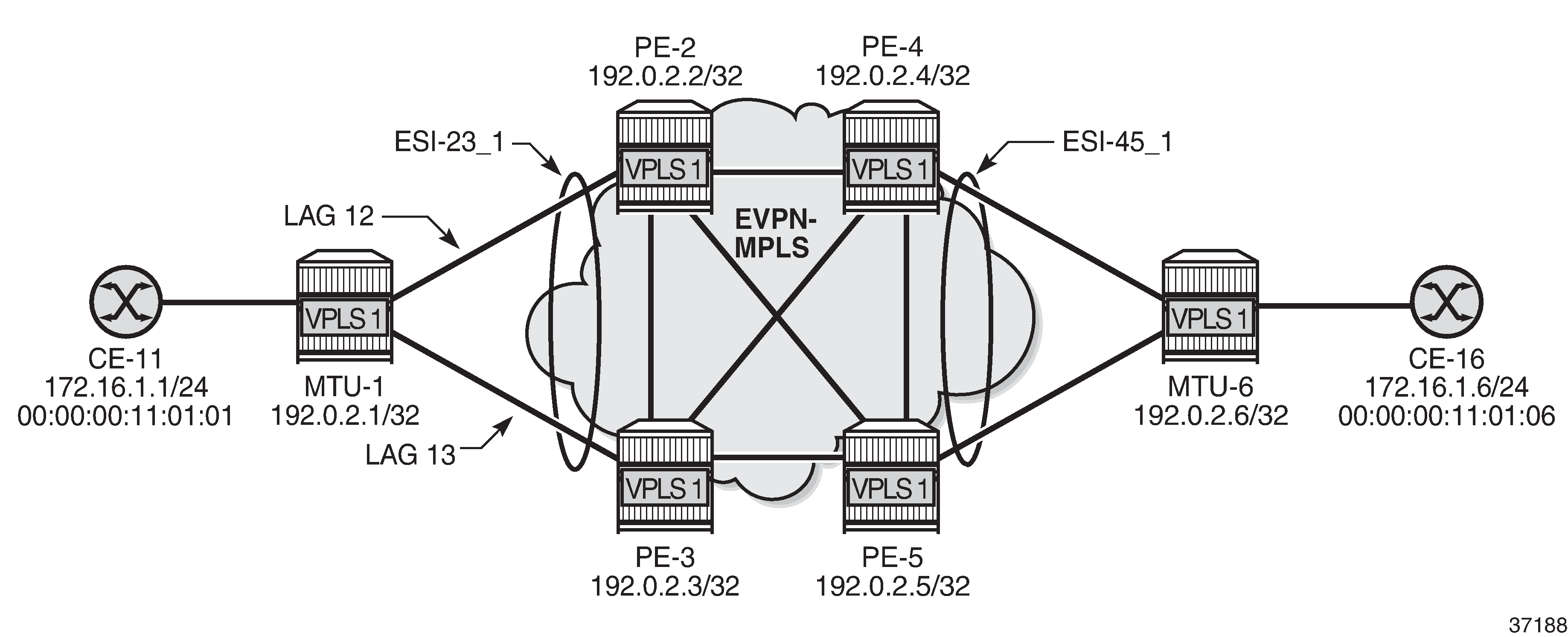

The figure "Sample topology with VPLS 1" shows the sample topology, with VPLS 1 configured on all nodes.

The initial configuration includes:

- Cards, MDAs, and ports
- LAG 12 between MTU-1 and PE-2; LAG 13 between MTU-1 and PE-3
- Router interfaces between PE-2, PE-3, PE-4, and PE-5
- IS-IS on all router interfaces
- LDP between PE-2, PE-3, PE-4, and PE-5
- BGP between PE-2, PE-3, PE-4, and PE-5

PE-2 acts as BGP route reflector; its BGP configuration is as follows:

# on PE-2:
configure {
    router "Base" {
        autonomous-system 64500
        bgp {
            vpn-apply-export true
            vpn-apply-import true
            rapid-withdrawal true
            peer-ip-tracking true
            split-horizon true
            rapid-update {
                evpn true
            }
            group "internal" {
                peer-as 64500
                family {
                    evpn true
                }
                cluster {
                    cluster-id 192.0.2.2
                }
            }
            neighbor "192.0.2.3" {
                group "internal"
            }
            neighbor "192.0.2.4" {
                group "internal"
            }
            neighbor "192.0.2.5" {
                group "internal"
            }
        }
    }
}

Operational groups for EVPN destinations

On PE-4, single-active ES "ESI-45_1" is configured with service carving auto. Operational group "vpls-1_45" is associated with the EVPN-MPLS instance of VPLS 1, and SAP 1/1/1:1 monitors that operational group. A down MEP is configured on the SAP so that ETH-CFM can detect SAP failures. The service configuration is as follows:

# on PE-4:
configure {
    service {
        oper-group "vpls-1_45" {
            hold-time {
                down 0
                up 0
            }
        }
        system {
            bgp {
                evpn {
                    ethernet-segment "ESI-45_1" {
                        admin-state enable
                        esi 01:45:00:00:00:00:01:00:00:00
                        multi-homing-mode single-active
                        df-election {
                            es-activation-timer 3
                        }
                        association {
                            port 1/1/1 {
                            }
                        }
                    }
                }
            }
        }
        vpls "VPLS 1" {
            admin-state enable
            service-id 1
            customer "1"
            bgp 1 {
            }
            bgp-evpn {
                evi 1
                routes {
                    mac-ip {
                        cfm-mac true
                    }
                }
                mpls 1 {
                    admin-state enable
                    oper-group "vpls-1_45"
                    auto-bind-tunnel {
                        resolution any
                    }
                }
            }
            sap 1/1/1:1 {
                description "to MTU-6"
                monitor-oper-group "vpls-1_45"
                eth-cfm {
                    mep md-admin-name "domain-1" ma-admin-name "association-10" mep-id 46 {
                        admin-state enable
                        mac-address 00:00:00:00:46:04
                        ccm true
                    }
                }
            }
        }
    }
}

The configuration on PE-5 is similar.

On MTU-6, VPLS 1 is configured with three SAPs: SAP 1/1/2:1 toward PE-4, SAP 1/1/1:1 toward PE-5, and SAP 1/2/1:1 toward CE-16. ETH-CFM MEPs are configured on SAP 1/1/1:1 and SAP 1/1/2:1. The service configuration is as follows:

# on MTU-6:
configure {
    service {
        vpls "VPLS 1" {
            admin-state enable
            service-id 1
            customer "1"
            sap 1/1/1:1 {
                description "to PE-5"
                eth-cfm {
                    mep md-admin-name "domain-1" ma-admin-name "association-11" mep-id 65 {
                        admin-state enable
                        mac-address 00:00:00:00:65:06
                        ccm true
                    }
                }
            }
            sap 1/1/2:1 {
                description "to PE-4"
                eth-cfm {
                    mep md-admin-name "domain-1" ma-admin-name "association-10" mep-id 64 {
                        admin-state enable
                        mac-address 00:00:00:00:64:06
                        ccm true
                    }
                }
            }
            sap 1/2/1:1 {
                description "to CE-16"
            }
        }
    }
}

Initial situation without failure

On MTU-6, ETH-CFM MEP 65 receives Continuity Check (CC) messages from its remote peer 56 on PE-5:

[/]
A:admin@MTU-6# show eth-cfm mep 65 domain 1 association 11 all-remote-mepids
=============================================================================
Eth-CFM Remote-Mep Table
=============================================================================
R-mepId AD  Rx CC RxRdi Port-Tlv If-Tlv Peer Mac Addr      CCM status since
-----------------------------------------------------------------------------
56          True  False Absent   Absent 00:00:00:00:56:05  12/23/2021 16:59:01
=============================================================================
Entries marked with a 'T' under the 'AD' column have been auto-discovered.

The following command shows that PE-5 is DF for VPLS 1:

[/]
A:admin@PE-5# show service id 1 ethernet-segment
===============================================================================
SAP Ethernet-Segment Information
===============================================================================
SAP                                  Eth-Seg                          Status
-------------------------------------------------------------------------------
1/1/2:1                              ESI-45_1                         DF
===============================================================================
No sdp entries
No vxlan instance entries

PE-5 has a full mesh of EVPN destinations in VPLS 1:

[/]
A:admin@PE-5# show service id 1 evpn-mpls
===============================================================================
BGP EVPN-MPLS Dest
===============================================================================
TEP Address     Egr Label     Num.   Mcast           Last Change
                Transport:Tnl MACs   Sup BCast Domain
-------------------------------------------------------------------------------
192.0.2.2       524283        0      bum             12/23/2021 16:58:51
                ldp:65539            No
192.0.2.3       524283        0      bum             12/23/2021 16:58:51
                ldp:65538            No
192.0.2.4       524283        0      bum             12/23/2021 16:58:51
                ldp:65537            No
-------------------------------------------------------------------------------
Number of entries : 3
-------------------------------------------------------------------------------
===============================================================================
===============================================================================
BGP EVPN-MPLS Ethernet Segment Dest
===============================================================================
Eth SegId                          Num. Macs        Last Change
-------------------------------------------------------------------------------
01:23:00:00:00:00:01:00:00:00      1                12/23/2021 16:59:37
-------------------------------------------------------------------------------
Number of entries: 1
-------------------------------------------------------------------------------
===============================================================================

Avoiding blackholes when EVPN destinations are removed

On PE-5, a failure is simulated by disabling LDP:

# on PE-5:
configure exclusive
    router "Base" {
        ldp {
            admin-state disable
        }
    }
commit

With LDP disabled, PE-5 has no tunnels available for auto-bind-tunnel in VPLS 1 and all EVPN destinations are removed, as follows:

[/]
A:admin@PE-5# show service id 1 evpn-mpls
===============================================================================
BGP EVPN-MPLS Dest
===============================================================================
TEP Address     Egr Label     Num.   Mcast           Last Change
                Transport:Tnl MACs   Sup BCast Domain
-------------------------------------------------------------------------------
No Matching Entries
===============================================================================
===============================================================================
BGP EVPN-MPLS Ethernet Segment Dest
===============================================================================
Eth SegId                          Num. Macs        Last Change
-------------------------------------------------------------------------------
No Matching Entries
===============================================================================

Log 99 on PE-5 shows that operational group "vpls-1_45" goes down and PE-5 becomes NDF in "ESI-45_1":

79 2021/12/23 17:01:15.697 CET MINOR: SVCMGR #2094 Base
"Ethernet Segment:ESI-45_1, EVI:1, Designated Forwarding state changed to:false"

78 2021/12/23 17:01:15.696 CET MINOR: SVCMGR #2542 Base
"Oper-group vpls-1_45 changed status to down"

The following command on PE-5 shows that operational group "vpls-1_45" is operationally down, its member EVPN-MPLS instance is inactive, and the monitoring SAP 1/1/2:1 is down:

[/]
A:admin@PE-5# show service oper-group "vpls-1_45" detail
===============================================================================
Service Oper Group Information
===============================================================================
Oper Group                : vpls-1_45
Creation Origin           : manual            Oper Status: down
Hold DownTime             : 0 secs            Hold UpTime: 0 secs
Members                   : 1                 Monitoring : 1
===============================================================================
===============================================================================
Member BGP-EVPN for OperGroup: vpls-1_45
===============================================================================
SvcId:Instance (Type)                                     Status
-------------------------------------------------------------------------------
1:1 (mpls)                                                Inactive
-------------------------------------------------------------------------------
BGP-EVPN Entries found: 1
===============================================================================
===============================================================================
Monitoring SAPs for OperGroup: vpls-1_45
===============================================================================
PortId                          SvcId      Ing.  Ing.    Egr.  Egr.   Adm  Opr
                                           QoS   Fltr    QoS   Fltr
-------------------------------------------------------------------------------
1/1/2:1                         1          1     none    1     none   Up   Down
-------------------------------------------------------------------------------
SAP Entries found: 1
===============================================================================

The following command shows that SAP 1/1/2:1 is operationally down with flags StandByForMHProtocol and OperGroupDown:

[/]
A:admin@PE-5# show service id 1 sap 1/1/2:1
===============================================================================
Service Access Points(SAP)
===============================================================================
Service Id         : 1
SAP                : 1/1/2:1                  Encap             : q-tag
Description        : to MTU-6
Admin State        : Up                       Oper State        : Down
Flags              : StandByForMHProtocol
                     OperGroupDown
Multi Svc Site     : None
Last Status Change : 12/23/2021 17:01:16
Last Mgmt Change   : 12/23/2021 16:58:49
===============================================================================

With ETH-CFM enabled, log 99 on MTU-6 shows that local MEP 65 no longer receives Continuity Check Messages (CCMs) from remote MEP 56 on PE-5:

56 2021/12/23 17:01:19.288 CET MINOR: ETH_CFM #2001 Base
"MEP 1/11/65 highest defect is now defRemoteCCM"
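ETH-CFM declares a loss of continuity when no CCM is received from a remote MEP for 3.5 times the CCM interval. The CCM interval of association 11 is not shown in this chapter, so the sketch below only compares the gap between PE-5 log entry 78 and MTU-6 log entry 56 with the timeout for an assumed 1-second interval; it also assumes that the clocks of PE-5 and MTU-6 are synchronized.

```python
from datetime import datetime

LOG_FORMAT = "%Y/%m/%d %H:%M:%S.%f"  # timestamp format of the log 99 entries


def ccm_loss_timeout(ccm_interval_s: float) -> float:
    """Loss of continuity is declared after 3.5 CCM intervals without a CCM."""
    return 3.5 * ccm_interval_s


# PE-5 log entry 78 (oper-group down) and MTU-6 log entry 56 (defRemoteCCM).
oper_group_down = datetime.strptime("2021/12/23 17:01:15.696", LOG_FORMAT)
def_remote_ccm = datetime.strptime("2021/12/23 17:01:19.288", LOG_FORMAT)

observed = (def_remote_ccm - oper_group_down).total_seconds()
print(f"observed gap: {observed:.3f} s")                         # observed gap: 3.592 s
print(f"timeout for a 1 s interval: {ccm_loss_timeout(1.0)} s")  # timeout for a 1 s interval: 3.5 s
```

An observed gap of about 3.6 seconds is consistent with a 1-second CCM interval, but this is an inference from the timestamps, not something the configuration shown here confirms.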

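PE-4 receives the following BGP-EVPN withdrawals of the ES route, the AD route, and the MAC routes originated by PE-5, reflected by route reflector PE-2 (192.0.2.2):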

33 2021/12/23 17:01:15.700 CET MINOR: DEBUG #2001 Base Peer 1: 192.0.2.2
"Peer 1: 192.0.2.2: UPDATE
Peer 1: 192.0.2.2 - Received BGP UPDATE:
    Withdrawn Length = 0
    Total Path Attr Length = 129
    Flag: 0x90 Type: 15 Len: 125 Multiprotocol Unreachable NLRI:
        Address Family EVPN
        Type: EVPN-ETH-SEG Len: 23 RD: 192.0.2.5:0
              ESI: 01:45:00:00:00:00:01:00:00:00, IP-Len: 4 Orig-IP-Addr: 192.0.2.5
        Type: EVPN-AD Len: 25 RD: 192.0.2.5:1 ESI: 01:45:00:00:00:00:01:00:00:00,
              tag: 0 Label: 0 (Raw Label: 0x0) PathId:
        Type: EVPN-MAC Len: 33 RD: 192.0.2.5:1 ESI: ESI-0, tag: 0, mac len: 48
              mac: 00:00:00:11:01:06, IP len: 0, IP: NULL, label1: 0
        Type: EVPN-MAC Len: 33 RD: 192.0.2.5:1 ESI: ESI-0, tag: 0, mac len: 48
              mac: 00:00:00:00:65:06, IP len: 0, IP: NULL, label1: 0
"

The following command on PE-4 shows that PE-4 is the DF and the only DF candidate in "ESI-45_1" for VPLS 1:

[/]
A:admin@PE-4# show service system bgp-evpn ethernet-segment name "ESI-45_1"
evi evi-1 1
===============================================================================
EVI DF and Candidate List
===============================================================================
EVI           SvcId         Actv Timer Rem     DF  DF Last Change
-------------------------------------------------------------------------------
1             1             0                  yes 12/23/2021 17:01:19
===============================================================================
===============================================================================
DF Candidates                   Time Added            Oper Pref  Do Not
                                                      Value      Preempt
-------------------------------------------------------------------------------
192.0.2.4                       12/23/2021 16:58:45   0          Disabl*
-------------------------------------------------------------------------------
Number of entries: 1
===============================================================================
* indicates that the corresponding row element may have been truncated.

Finally, the failure is cleared by re-enabling LDP on PE-5:

# on PE-5:
configure exclusive
    router "Base" {
        ldp {
            admin-state enable
        }
    }
commit

Operational groups for ES (Port-Active Multi-Homing)

On PE-2 and PE-3, operational group "vpls-1_23" is configured and associated with ES "ESI-23_1", but it is not configured on or monitored by any object in VPLS 1. The service configuration on PE-3 is as follows:

# on PE-3:
configure {
    service {
        oper-group "vpls-1_23" {
            hold-time {
                down 0
                up 0
            }
        }
        system {
            bgp {
                evpn {
                    ethernet-segment "ESI-23_1" {
                        admin-state enable
                        esi 01:23:00:00:00:00:01:00:00:00
                        multi-homing-mode single-active
                        oper-group "vpls-1_23"
                        ac-df-capability exclude
                        df-election {
                            es-activation-timer 3
                            service-carving-mode manual
                            manual {
                                preference {
                                    value 100 # on PE-2: value 150
                                }
                            }
                        }
                        association {
                            lag "lag-13" {
                            }
                        }
                    }
                }
            }
        }
        vpls "VPLS 1" {
            admin-state enable
            service-id 1
            customer "1"
            bgp 1 {
            }
            bgp-evpn {
                evi 1
                mpls 1 {
                    admin-state enable
                    auto-bind-tunnel {
                        resolution any
                    }
                }
            }
            sap lag-13:1 { # on PE-2: sap lag-12:1
                description "to MTU-1"
            }
        }
    }
}

LAG 12 on PE-2 and LAG 13 on PE-3 monitor operational group "vpls-1_23". The monitor-oper-group command is configured under the LAG, as follows on PE-3:

# on PE-3:
configure {
    lag "lag-13" {
        admin-state enable
        description "to MTU-1"
        encap-type dot1q
        mode access
        standby-signaling lacp # default value
        monitor-oper-group "vpls-1_23"
        max-ports 64
        lacp {
            mode active
            system-id 00:00:00:01:03:01
            system-priority 1
            administrative-key 1
        }
        port 1/1/1 {
        }
    }
}

In this example, MTU-1 is connected to PE-2 and PE-3 through two different LAGs; however, port-active multi-homing also supports the use of a single LAG on MTU-1. If a single LAG were used on MTU-1, the LAG ports on PE-2 and PE-3 must be configured with the same LACP parameters (administrative-key, system-id, and system-priority), so that PE-2 and PE-3 present themselves to MTU-1 as a single system.
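The single-LAG condition can be expressed as an equality check on the LACP identity of both PEs. `LacpParams` and `can_share_single_access_lag` are hypothetical names; the values are taken from the lag-12 (PE-2) and lag-13 (PE-3) configurations in this chapter, which differ because MTU-1 uses two LAGs here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LacpParams:
    """LACP identity that a PE's LAG presents to the access node."""
    system_id: str
    system_priority: int
    administrative_key: int


def can_share_single_access_lag(pe_a: LacpParams, pe_b: LacpParams) -> bool:
    # The access node only aggregates the links into one LAG if both PEs
    # present the same system-id, system-priority, and administrative-key.
    return pe_a == pe_b


pe2 = LacpParams("00:00:00:01:02:01", 1, 1)  # lag-12 on PE-2
pe3 = LacpParams("00:00:00:01:03:01", 1, 1)  # lag-13 on PE-3
print(can_share_single_access_lag(pe2, pe3))  # False: this example uses two LAGs on MTU-1
```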

EVPN single-active multi-homing PEs that are elected as NDF must notify their attached access nodes to prevent them from sending traffic to the NDF. In this port-active multi-homing mode, ETH-CFM is not used, so another notification mechanism is needed, such as LAG standby signaling (lacp or power-off). When the EVPN application layer instructs the LAG to become standby because the PE is NDF, the behavior is as follows:

- the lacp option signals LACP out-of-sync to MTU-1
- the power-off option brings down the LAG ports connected to MTU-1

MTU-1 is connected to PE-2 and PE-3 using two different access LAGs with encapsulation dot1q and at least one port in each LAG. Any encapsulation type is supported in the LAGs. The LAG configuration is as follows:

# on MTU-1:
configure {
    lag "lag-12" {
        admin-state enable
        description "to PE-2"
        encap-type dot1q
        mode access
        max-ports 64
        lacp {
            mode active
            administrative-key 32768
        }
        port 1/1/1 {
        }
    }
    lag "lag-13" {
        admin-state enable
        description "to PE-3"
        encap-type dot1q
        mode access
        max-ports 64
        lacp {
            mode active
            administrative-key 32769
        }
        port 1/1/2 {
        }
    }
}

On MTU-1, VPLS 1 is configured as follows:

# on MTU-1:
configure {
    service {
        vpls "VPLS 1" {
            admin-state enable
            service-id 1
            customer "1"
            sap 1/2/1:1 {
                description "to CE-11"
            }
            sap lag-12:1 {
                description "to PE-2"
            }
            sap lag-13:1 {
                description "to PE-3"
            }
        }
    }
}

Initial situation without failures

PE-2 is the DF and PE-3 is the NDF for VPLS 1:

[/]
A:admin@PE-2# show service id 1 ethernet-segment
===============================================================================
SAP Ethernet-Segment Information
===============================================================================
SAP                                  Eth-Seg                          Status
-------------------------------------------------------------------------------
lag-12:1                             ESI-23_1                         DF
===============================================================================
No sdp entries
No vxlan instance entries

[/]
A:admin@PE-3# show service id 1 ethernet-segment
===============================================================================
SAP Ethernet-Segment Information
===============================================================================
SAP                                  Eth-Seg                          Status
-------------------------------------------------------------------------------
lag-13:1                             ESI-23_1                         NDF
===============================================================================
No sdp entries
No vxlan instance entries

On NDF PE-3, operational group "vpls-1_23" is operationally down, which also brings the monitoring LAG 13 operationally down:

[/]
A:admin@PE-3# show service oper-group "vpls-1_23" detail
===============================================================================
Service Oper Group Information
===============================================================================
Oper Group                : vpls-1_23
Creation Origin           : manual            Oper Status: down
Hold DownTime             : 0 secs            Hold UpTime: 0 secs
Members                   : 1                 Monitoring : 1
===============================================================================
===============================================================================
Member Ethernet-Segment for OperGroup: vpls-1_23
===============================================================================
Ethernet-Segment                                         Status
-------------------------------------------------------------------------------
ESI-23_1                                                 Inactive
-------------------------------------------------------------------------------
Ethernet-Segment Entries found: 1
===============================================================================
===============================================================================
Monitoring LAG for OperGroup: vpls-1_23
===============================================================================
Lag-id       Adm   Opr   Weighted  Threshold  Up-Count  Act/Stdby
name
-------------------------------------------------------------------------------
13           up    down  No        0          0         N/A
lag-13
-------------------------------------------------------------------------------
LAG Entries found: 1
===============================================================================

The following command shows that SAP lag-13:1 is operationally down on PE-3 with flags PortOperDown and StandByForMHProtocol:

[/]
A:admin@PE-3# show service id 1 sap lag-13:1
===============================================================================
Service Access Points(SAP)
===============================================================================
Service Id         : 1
SAP                : lag-13:1                 Encap             : q-tag
Description        : to MTU-1
Admin State        : Up                       Oper State        : Down
Flags              : PortOperDown StandByForMHProtocol
Multi Svc Site     : None
Last Status Change : 12/23/2021 16:58:33
Last Mgmt Change   : 12/23/2021 16:58:33
===============================================================================

The following command on PE-3 shows that LAG 13 uses LACP standby signaling toward MTU-1. LAG 13 is operationally down because the operational group is down; in the LACP table, the actor (PE-3) has the Syn bit cleared, which signals out-of-sync to MTU-1.

[/]
A:admin@PE-3# show lag 13 detail
===============================================================================
LAG Details
===============================================================================
Description        : N/A
-------------------------------------------------------------------------------
Details
-------------------------------------------------------------------------------
Lag-id             : 13                     Mode                 : access
Lag-name           : lag-13
Adm                : up                     Opr                  : down
---snip---
Standby Signaling  : lacp
---snip---
Monitor oper group : vpls-1_23
Oper group status  : down
Adaptive loadbal.  : disabled               Tolerance            : N/A
-------------------------------------------------------------------------------
Port-id        Adm     Act/Stdby Opr     Primary   Sub-group     Forced  Prio
-------------------------------------------------------------------------------
1/1/1          up      active    down    yes       1             -       32768
-------------------------------------------------------------------------------
Port-id        Role      Exp   Def   Dist  Col   Syn   Aggr  Timeout  Activity
-------------------------------------------------------------------------------
1/1/1          actor     No    No    No    No    No    Yes   Yes      Yes
1/1/1          partner   No    No    No    No    Yes   Yes   Yes      Yes
===============================================================================
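The Yes/No columns of the LACP table correspond to the eight bits of the LACP actor and partner state octet defined in IEEE 802.1AX (Activity is bit 0, Expired is bit 7). The sketch below, which assumes the column names shown in the output above, rebuilds both octets; the cleared Synchronization bit in the actor octet is what MTU-1 receives as out-of-sync.

```python
# Bit masks of the LACP actor/partner state octet (IEEE 802.1AX),
# keyed by the column names used in "show lag detail".
LACP_STATE_BITS = {
    "Activity": 0x01,
    "Timeout": 0x02,
    "Aggr": 0x04,
    "Syn": 0x08,
    "Col": 0x10,
    "Dist": 0x20,
    "Def": 0x40,
    "Exp": 0x80,
}


def lacp_state_octet(row: dict) -> int:
    """Build the state octet from a row of Yes/No flags."""
    octet = 0
    for column, mask in LACP_STATE_BITS.items():
        if row[column] == "Yes":
            octet |= mask
    return octet


actor = {"Exp": "No", "Def": "No", "Dist": "No", "Col": "No",
         "Syn": "No", "Aggr": "Yes", "Timeout": "Yes", "Activity": "Yes"}
partner = dict(actor, Syn="Yes")

print(hex(lacp_state_octet(actor)))    # 0x7
print(hex(lacp_state_octet(partner)))  # 0xf
print(bool(lacp_state_octet(actor) & LACP_STATE_BITS["Syn"]))  # False: out-of-sync
```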

DF switchover

To trigger a DF switchover, the preference value is modified on PE-2, as follows:

# on PE-2:
configure exclusive
    service {
        system {
            bgp {
                evpn {
                    ethernet-segment "ESI-23_1" {
                        df-election {
                            es-activation-timer 3
                            service-carving-mode manual
                            manual {
                                preference {
                                    value 50
                                }
                            }
                        }
                    }
                }
            }
        }
    }
commit

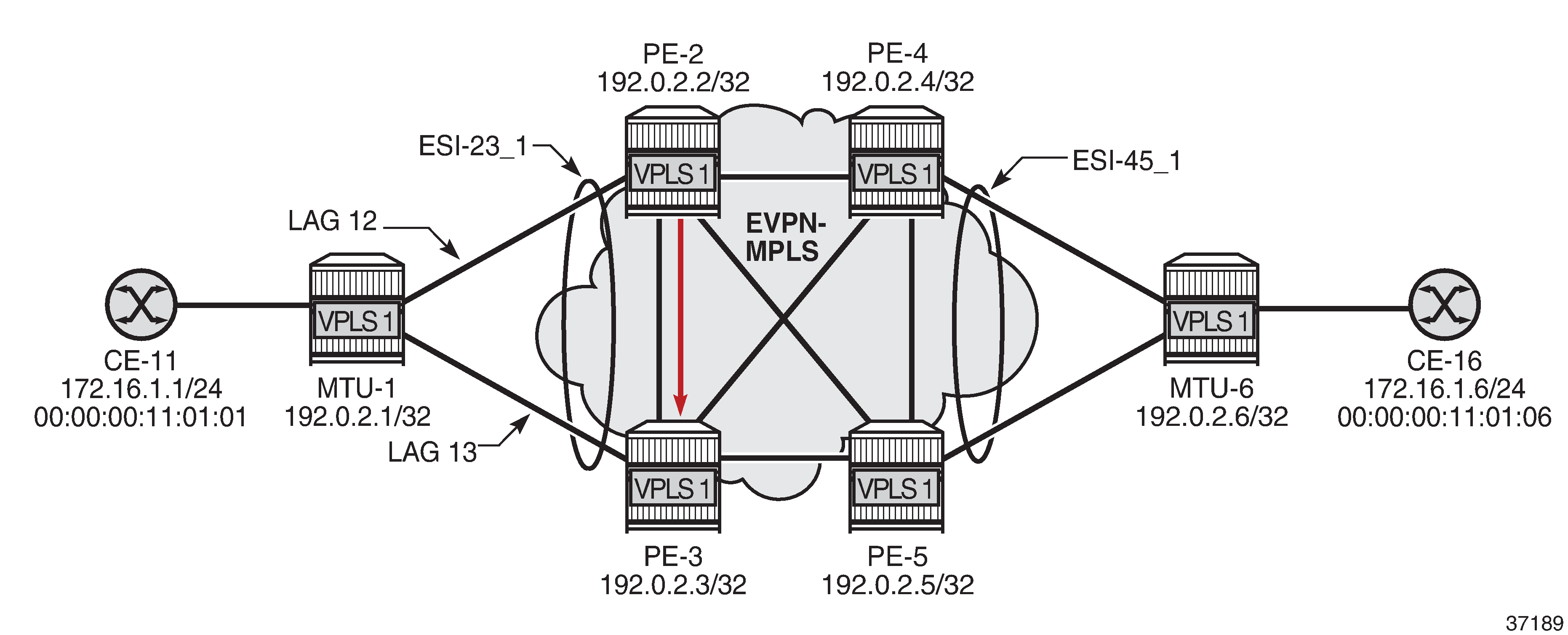

The figure "DF switchover in single-active ESI-23_1" shows the DF switchover from PE-2 to PE-3: with its preference lowered to 50, below the value 100 on PE-3, PE-2 becomes the NDF and LAG 12 goes into standby.
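With manual service carving, the DF is chosen by preference. A minimal sketch, assuming the highest preference value wins and that ties are broken by the lowest originator IP address; the tie-breaker and the Do Not Preempt option are simplified away here and are not covered in this chapter, and `elect_df` is a hypothetical name.

```python
from ipaddress import IPv4Address


def elect_df(preferences: dict) -> str:
    """Hypothetical highest-preference DF election for one ES.

    preferences maps the originator IP address of each DF candidate to its
    configured preference value. The highest preference wins; ties go to
    the lowest IP address (an assumption of this sketch)."""
    return min(preferences, key=lambda ip: (-preferences[ip], IPv4Address(ip)))


print(elect_df({"192.0.2.2": 150, "192.0.2.3": 100}))  # 192.0.2.2 (PE-2)
print(elect_df({"192.0.2.2": 50, "192.0.2.3": 100}))   # 192.0.2.3 (PE-3)
```

With PE-2 (192.0.2.2) at 150 and PE-3 (192.0.2.3) at 100, PE-2 is DF; lowering PE-2 to 50 makes PE-3 the DF, which matches the switchover described above.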

Log 99 on PE-2 (newest entries first) shows that the ES operational group goes down and PE-2 becomes NDF, after which LACP is reinitialized on LAG 12, port 1/1/2 and SAP lag-12:1 go down, LAG 12 goes operationally down, and LACP out-of-sync is signaled to MTU-1:

110 2021/12/23 17:15:27.781 CET WARNING: LAG #2007 Base LAG
"LAG lag-12 : partner oper state bits changed on member 1/1/2 : [sync FALSE -> TRUE] [expired TRUE -> FALSE] [defaulted TRUE -> FALSE]"

109 2021/12/23 17:15:27.781 CET WARNING: LAG #2007 Base LAG
"LAG lag-12 : LACP RX state machine entered current state on member 1/1/2"

108 2021/12/23 17:15:27.777 CET MAJOR: SVCMGR #2210 Base
"Processing of an access port state change event is finished and the status of all affected SAPs on port lag-12 has been updated."

107 2021/12/23 17:15:27.777 CET WARNING: SNMP #2004 Base lag-12
"Interface lag-12 is not operational"

106 2021/12/23 17:15:27.777 CET MINOR: SVCMGR #2203 Base
"Status of SAP lag-12:1 in service 1 (customer 1) changed to admin=up oper=down flags=MhStandby"

105 2021/12/23 17:15:27.777 CET WARNING: SNMP #2004 Base 1/1/2
"Interface 1/1/2 is not operational"

104 2021/12/23 17:15:27.777 CET WARNING: LAG #2006 Base LAG
"LAG lag-12 : initializing LACP, all members will be brought down"

103 2021/12/23 17:15:27.777 CET MINOR: SVCMGR #2094 Base
"Ethernet Segment:ESI-23_1, EVI:1, Designated Forwarding state changed to:false"

102 2021/12/23 17:15:27.777 CET MINOR: SVCMGR #2542 Base
"Oper-group vpls-1_23 changed status to down"

On PE-3, log 99 shows that PE-3 becomes DF for "ESI-23_1" and that operational group "vpls-1_23", port 1/1/1, and LAG 13 come up:

112 2021/12/23 17:15:31.753 CET WARNING: LAG #2007 Base LAG
"LAG lag-13 : partner oper state bits changed on member 1/1/1 : [collecting FALSE -> TRUE]"

111 2021/12/23 17:15:31.734 CET MAJOR: SVCMGR #2210 Base
"Processing of an access port state change event is finished and the status of all affected SAPs on port lag-13 has been updated."

110 2021/12/23 17:15:31.733 CET WARNING: SNMP #2005 Base lag-13
"Interface lag-13 is operational"

109 2021/12/23 17:15:31.733 CET WARNING: SNMP #2005 Base 1/1/1
"Interface 1/1/1 is operational"

108 2021/12/23 17:15:30.831 CET MAJOR: SVCMGR #2210 Base
"Processing of an access port state change event is finished and the status of all affected SAPs on port lag-13 has been updated."

107 2021/12/23 17:15:30.811 CET MINOR: SVCMGR #2094 Base
"Ethernet Segment:ESI-23_1, EVI:1, Designated Forwarding state changed to:true"

106 2021/12/23 17:15:30.811 CET MINOR: SVCMGR #2542 Base
"Oper-group vpls-1_23 changed status to up"
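The timestamps are consistent with the es-activation-timer of 3 seconds configured on the ES. The sketch below computes the gap between PE-2 becoming NDF (log entry 103) and PE-3 becoming DF (log entry 107); it assumes the clocks of both routers are synchronized, and the gap also includes BGP propagation, so it is only consistent with the timer, not proof of it. `seconds_between` is a hypothetical helper.

```python
from datetime import datetime

LOG_FORMAT = "%Y/%m/%d %H:%M:%S.%f"  # timestamp format of the log 99 entries


def seconds_between(earlier: str, later: str) -> float:
    """Elapsed seconds between two log 99 timestamps."""
    delta = datetime.strptime(later, LOG_FORMAT) - datetime.strptime(earlier, LOG_FORMAT)
    return delta.total_seconds()


pe2_becomes_ndf = "2021/12/23 17:15:27.777"  # PE-2 log entry 103
pe3_becomes_df = "2021/12/23 17:15:30.811"   # PE-3 log entry 107
es_activation_timer = 3                      # seconds, from the ES configuration

gap = seconds_between(pe2_becomes_ndf, pe3_becomes_df)
print(f"{gap:.3f} s")              # 3.034 s
print(gap >= es_activation_timer)  # True
```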

Link Loss Forwarding in EVPN-VPWS

Fault propagation in EVPN-VPWS services is supported using ETH-CFM, but also using LAG standby-signaling lacp or power-off.

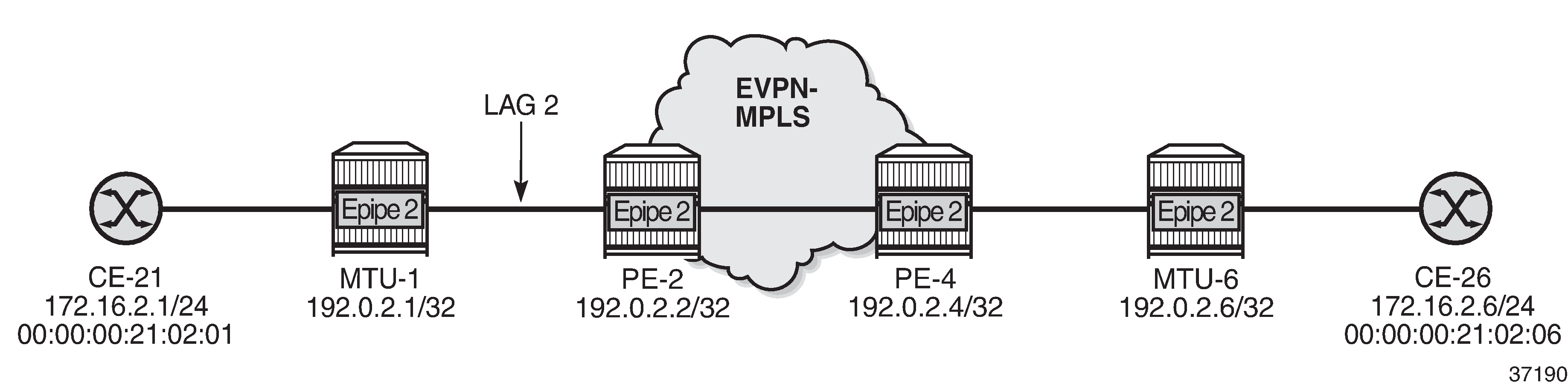

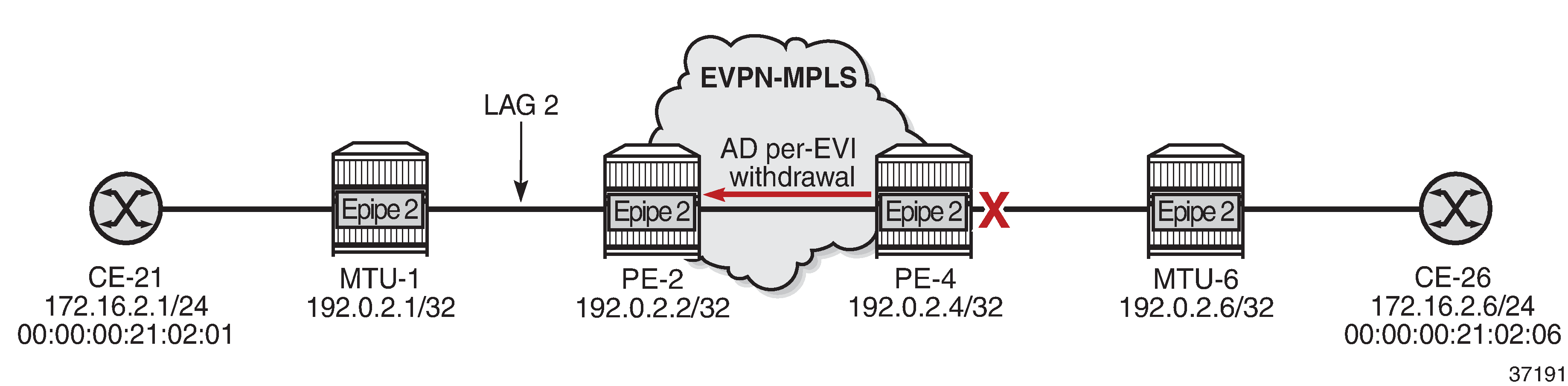

The figure "Sample topology with Epipe 2" shows the sample topology with Epipe 2.

The configuration on MTU-1 is as follows:

# on MTU-1:
configure {
    lag "lag-2" {
        admin-state enable
        encap-type dot1q
        mode access
        max-ports 64
        lacp {
            administrative-key 32770
        }
        port 1/1/5 {
        }
    }
    service {
        epipe "Epipe 2" {
            admin-state enable
            service-id 2
            customer "1"
            sap 1/2/1:2 {
            }
            sap lag-2:2 {
            }
        }
    }
}

On PE-2, operational group "llf-1" is configured and associated with the EVPN-MPLS instance (bgp-evpn mpls 1) of Epipe 2. LAG 2, configured with standby-signaling lacp, monitors this operational group.

# on PE-2:
configure {
    lag "lag-2" {
        admin-state enable
        encap-type dot1q
        mode access
        standby-signaling lacp
        monitor-oper-group "llf-1"
        max-ports 64
        lacp {
            mode active
            system-id 00:00:00:00:12:01
            system-priority 1
            administrative-key 2
        }
        port 1/1/5 {
        }
    }
    service {
        oper-group "llf-1" {
            hold-time {
                down 0
                up 0
            }
        }
        epipe "Epipe 2" {
            admin-state enable
            service-id 2
            customer "1"
            bgp 1 {
            }
            sap lag-2:2 {
            }
            bgp-evpn {
                evi 2
                local-attachment-circuit "ac-1_2" {
                    eth-tag 12
                }
                remote-attachment-circuit "ac-6_2" {
                    eth-tag 62
                }
                mpls 1 {
                    admin-state enable
                    oper-group "llf-1"
                    auto-bind-tunnel {
                        resolution any
                    }
                }
            }
        }
    }
}
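The relationship configured on PE-2 can be pictured with a small Python model. This is a conceptual sketch, not SR OS code: the class and member names are hypothetical, and it assumes the rule from the overview that an operational group is up while at least one of its members is up, and that the monitoring objects take the status of the group. Both hold times are 0 in this configuration, so the sketch ignores them.

```python
from dataclasses import dataclass, field


@dataclass
class OperGroup:
    """Hypothetical model of an operational group; not an SR OS API."""

    name: str
    # Member object -> operational state (True means up).
    members: dict[str, bool] = field(default_factory=dict)
    # Objects that monitor the group, such as LAG 2 on PE-2.
    monitors: list[str] = field(default_factory=list)

    @property
    def is_up(self) -> bool:
        # Up while at least one member is up. A group without members is
        # treated as down in this sketch (an assumption).
        return any(self.members.values())

    def monitor_states(self) -> dict[str, bool]:
        # Every monitoring object takes the status of the group.
        return {monitor: self.is_up for monitor in self.monitors}


# PE-2: the EVPN-MPLS instance of Epipe 2 is the only member of "llf-1".
llf_1 = OperGroup("llf-1", members={"epipe-2/bgp-evpn/mpls-1": True}, monitors=["lag-2"])
print(llf_1.is_up, llf_1.monitor_states())  # True {'lag-2': True}

llf_1.members["epipe-2/bgp-evpn/mpls-1"] = False
print(llf_1.is_up, llf_1.monitor_states())  # False {'lag-2': False}
```

With a single member, as in this example, the operational group and LAG 2 simply follow the state of the EVPN-MPLS instance.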

The configuration on PE-4 is as follows:

# on PE-4:
configure {
    service {
        epipe "Epipe 2" {
            admin-state enable
            service-id 2
            customer "1"
            bgp 1 {
            }
            sap 1/1/5:2 {
            }
            bgp-evpn {
                evi 2
                local-attachment-circuit "ac-6_2" {
                    eth-tag 62
                }
                remote-attachment-circuit "ac-1_2" {
                    eth-tag 12
                }
                mpls 1 {
                    admin-state enable
                    auto-bind-tunnel {
                        resolution any
                    }
                }
            }
        }
    }
}

LLF in Epipe 2 - PE-4 failure shows what happens when a failure occurs on PE-4.

The failure is simulated on PE-4 by disabling port 1/1/5 toward MTU-6.

# on PE-4:
configure exclusive
    port 1/1/5 {
        admin-state disable
    }
    commit

When the link between PE-4 and MTU-6 fails, PE-4 withdraws its AD per-EVI route for Epipe 2 (Ethernet tag 62, local attachment circuit "ac-6_2"). PE-2 receives the following AD per-EVI route withdrawal from PE-4:

155 2021/12/23 17:18:37.217 CET MINOR: DEBUG #2001 Base Peer 1: 192.0.2.4
"Peer 1: 192.0.2.4: UPDATE
Peer 1: 192.0.2.4 - Received BGP UPDATE:
    Withdrawn Length = 0
    Total Path Attr Length = 34
    Flag: 0x90 Type: 15 Len: 30 Multiprotocol Unreachable NLRI:
        Address Family EVPN
        Type: EVPN-AD Len: 25 RD: 192.0.2.4:2 ESI: ESI-0, tag: 62
        Label: 0 (Raw Label: 0x0) PathId:
"

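The lengths in this debug output can be cross-checked against the encodings in RFC 4271 (path attribute flags), RFC 4760 (MP_UNREACH_NLRI, type code 15) and RFC 7432 (EVPN route type 1, Ethernet A-D). The following Python sketch rebuilds the withdrawn route from the values shown in the log (RD 192.0.2.4:2, ESI 0, Ethernet tag 62, label 0). It assumes no ADD-PATH path identifier, because the PathId field is empty, and it only illustrates the wire format; it is not a BGP implementation.

```python
import struct

# Path attribute flag bits (RFC 4271, section 4.3)
OPTIONAL = 0x80
EXTENDED_LENGTH = 0x10

MP_UNREACH_NLRI = 15          # attribute type code (RFC 4760)
AFI_L2VPN, SAFI_EVPN = 25, 70  # AFI/SAFI used by EVPN (RFC 7432)
ETHERNET_AD_ROUTE = 1          # EVPN route type 1


def type1_rd(ip: str, number: int) -> bytes:
    """Type 1 RD: 2-byte type, 4-byte IPv4 address, 2-byte assigned number."""
    return struct.pack("!H4sH", 1, bytes(int(o) for o in ip.split(".")), number)


def ethernet_ad_nlri(rd: bytes, esi: bytes, eth_tag: int, raw_label: int) -> bytes:
    """Route type 1 NLRI: RD (8) + ESI (10) + Ethernet tag (4) + label field (3)."""
    body = rd + esi + struct.pack("!I", eth_tag) + raw_label.to_bytes(3, "big")
    return struct.pack("!BB", ETHERNET_AD_ROUTE, len(body)) + body


def mp_unreach(nlri: bytes) -> bytes:
    """MP_UNREACH_NLRI attribute with the Extended Length bit set, as in the log."""
    value = struct.pack("!HB", AFI_L2VPN, SAFI_EVPN) + nlri
    flags = OPTIONAL | EXTENDED_LENGTH  # 0x90
    return struct.pack("!BBH", flags, MP_UNREACH_NLRI, len(value)) + value


nlri = ethernet_ad_nlri(type1_rd("192.0.2.4", 2), bytes(10), eth_tag=62, raw_label=0)
attr = mp_unreach(nlri)
print(hex(attr[0]), attr[1], len(attr) - 4, nlri[1], len(attr))  # 0x90 15 30 25 34
```

The route-specific part is 8 + 10 + 4 + 3 = 25 bytes ("Len: 25"). Adding the route type and length bytes plus AFI and SAFI gives the 30-byte attribute value ("Len: 30"). Because the Extended Length bit is set in flag 0x90, the attribute header is 4 bytes, which gives the Total Path Attr Length of 34.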
Upon receiving this AD per-EVI route withdrawal, Epipe 2 goes operationally down on PE-2, because the remote attachment circuit "ac-6_2" is no longer advertised:

[/]
A:admin@PE-2# show service id 2 base | match "Oper State"
Admin State : Up Oper State : Down
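A simplified way to picture this behavior: PE-2 keeps Epipe 2 up only while an AD per-EVI route for the configured remote Ethernet tag (62, from remote-attachment-circuit "ac-6_2") is present. The Python sketch below is a hypothetical model with invented class and method names. It ignores other factors, such as the state of the local SAP.

```python
class EvpnVpwsView:
    """Hypothetical model of the AD per-EVI routes PE-2 holds for Epipe 2."""

    def __init__(self, remote_eth_tag: int) -> None:
        self.remote_eth_tag = remote_eth_tag
        self.routes: set[tuple[str, int]] = set()  # (RD, Ethernet tag)

    def receive_update(self, rd: str, eth_tag: int) -> None:
        self.routes.add((rd, eth_tag))

    def receive_withdrawal(self, rd: str, eth_tag: int) -> None:
        self.routes.discard((rd, eth_tag))

    @property
    def epipe_oper_up(self) -> bool:
        # Up only while a route for the remote attachment circuit's tag exists.
        return any(tag == self.remote_eth_tag for _, tag in self.routes)


pe2 = EvpnVpwsView(remote_eth_tag=62)
pe2.receive_update("192.0.2.4:2", 62)
print(pe2.epipe_oper_up)  # True
pe2.receive_withdrawal("192.0.2.4:2", 62)  # the withdrawal in the debug output
print(pe2.epipe_oper_up)  # False
```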

Because its only member, the EVPN-MPLS instance of Epipe 2, is down, operational group "llf-1" also goes down, as shown in the LAG 2 details:

[/]
A:admin@PE-2# show lag 2 detail | match "per group"
Monitor oper group : llf-1
Oper group status : down

On PE-2, the detailed information for operational group "llf-1" shows that the operational group and the monitoring LAG are down.

[/]
A:admin@PE-2# show service oper-group "llf-1" detail
===============================================================================
Service Oper Group Information
===============================================================================
Oper Group : llf-1
Creation Origin : manual Oper Status: down
Hold DownTime : 0 secs Hold UpTime: 0 secs
Members : 1 Monitoring : 1
===============================================================================
===============================================================================
Member BGP-EVPN for OperGroup: llf-1
===============================================================================
SvcId:Instance (Type) Status
-------------------------------------------------------------------------------
2:1 (mpls) Inactive
-------------------------------------------------------------------------------
BGP-EVPN Entries found: 1
===============================================================================
===============================================================================
Monitoring LAG for OperGroup: llf-1
===============================================================================
Lag-id Adm Opr Weighted Threshold Up-Count Act/Stdby
name
-------------------------------------------------------------------------------
2 up down No 0 0 N/A
lag-2
-------------------------------------------------------------------------------
LAG Entries found: 1
===============================================================================

PE-2 signals the fault to MTU-1 according to the standby signaling configured on LAG 2:

- If the LAG standby signaling is power-off, PE-2 brings down the ports in the LAG.
- If the LAG standby signaling is lacp, as in this example, PE-2 signals LACP out-of-sync on the LAG ports.

In either case, MTU-1 stops forwarding traffic to PE-2.
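The two standby-signaling options can be summarized with a small Python sketch. The enum, function, and return strings are hypothetical; they paraphrase the behavior listed above, from the point of view of MTU-1, and are not SR OS output.

```python
from enum import Enum


class StandbySignaling(Enum):
    LACP = "lacp"
    POWER_OFF = "power-off"


def access_view(oper_group_up: bool, mode: StandbySignaling) -> str:
    """What the access node sees on the LAG toward PE-2 (simplified)."""
    if oper_group_up:
        return "LAG up, forwarding to PE-2"
    if mode is StandbySignaling.POWER_OFF:
        # PE-2 brings the LAG ports down; MTU-1 detects the link failure.
        return "ports down"
    # PE-2 signals LACP out-of-sync on the LAG ports.
    return "LACP out-of-sync"


for mode in StandbySignaling:
    print(mode.value, "->", access_view(False, mode))
# lacp -> LACP out-of-sync
# power-off -> ports down
```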

The following debug message in log 99 on MTU-1 shows that MTU-1 received an LACP out-of-sync message for port 1/1/5 of LAG 2:

154 2021/12/23 17:18:37.216 CET WARNING: LAG #2007 Base LAG
"LAG lag-2 : partner oper state bits changed on member 1/1/5 : [sync TRUE -> FALSE] [collecting TRUE -> FALSE]"

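The bracketed fields in this log message refer to bits of the LACP partner state octet defined in IEEE 802.1AX (bit 0 Activity, then Timeout, Aggregation, Synchronization, Collecting, Distributing, Defaulted, Expired). The Python sketch below shows how such a diff can be derived. The two state octets are hypothetical values chosen to reproduce the log line, and the bit labels other than "sync" and "collecting" are names chosen for this sketch.

```python
# LACP state octet bits as defined in IEEE 802.1AX, bit 0 first.
# Only "sync" and "collecting" appear in the log; the other labels are
# names chosen for this sketch.
LACP_STATE_BITS = [
    "activity", "timeout", "aggregation", "sync",
    "collecting", "distributing", "defaulted", "expired",
]


def state_changes(old: int, new: int) -> str:
    """List the LACP state bits that differ between two state octets."""
    changes = []
    for bit, name in enumerate(LACP_STATE_BITS):
        before = bool((old >> bit) & 1)
        after = bool((new >> bit) & 1)
        if before != after:
            changes.append(f"[{name} {str(before).upper()} -> {str(after).upper()}]")
    return " ".join(changes)


# Hypothetical partner state octets before and after PE-2 signals out-of-sync.
old_state = 0x1D  # activity, aggregation, sync, collecting
new_state = 0x05  # activity, aggregation
print(state_changes(old_state, new_state))
# [sync TRUE -> FALSE] [collecting TRUE -> FALSE]
```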
The following debug messages in log 99 on MTU-1 show that LAG 2 and interface 1/1/5 are not operational:

156 2021/12/23 17:18:37.217 CET WARNING: SNMP #2004 Base lag-2
"Interface lag-2 is not operational"

155 2021/12/23 17:18:37.216 CET WARNING: SNMP #2004 Base 1/1/5
"Interface 1/1/5 is not operational"

On MTU-1, LAG 2 is operationally down:

[/]
A:admin@MTU-1# show lag 2
===============================================================================
Lag Data
===============================================================================
Lag-id Adm Opr Weighted Threshold Up-Count MC Act/Stdby
name
-------------------------------------------------------------------------------
2 up down No 0 0 N/A
lag-2
===============================================================================

Conclusion

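Operational groups help avoid blackholes in EVPN services when a PE is disconnected from the EVPN core. When the EVPN instance that is a member of the operational group goes down, the operational group and its monitoring objects go down as well. The PEs can then propagate the failure to the access nodes using either ETH-CFM or LAG standby signaling (lacp or power-off).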